Detecting Fine-Grained Emotions in Literature

Abstract

1. Introduction

1.1. Motivation

- Coarse-grained emotions that stem from relying on “basic” or “top level” categories in psychological models of emotion;

- A single emotion label per sentence;

- The small size of the dataset by current machine learning standards.

1.2. Overview

1.3. Related Work

- Our work introduces a semi-supervised approach for annotating training data instead of relying on crowdsourced workers;

- We introduce a dataset with more fine-grained emotions (38 labels) compared to previous datasets (11, 27, or 8 labels);

- Our work introduces the first multi-label or fine-grained emotion dataset for literature.

- We use NLI for binary ranking of candidates instead of directly providing labels;

- The final labels of our datasets are provided by a binary classifier for each emotion rather than by directly using NLI, allowing us to use only the highest NLI ranked examples for each emotion.

1.4. Contribution

- A novel semi-supervised approach capable of creating fine-grained multi-label emotion classification datasets;

- A large, balanced dataset with 38 fine-grained emotion labels, surpassing existing datasets;

- A more comprehensive taxonomy and definitions for emotion detection from text;

- Analysis of emotion correlation and sentiment within the dataset, informing future work in emotion detection;

- Publicly available, easy-to-use trained models for researchers.

2. Materials and Methods

2.1. Data

- Strip away template text added to the book (https://github.com/c-w/gutenberg/ (accessed on 22 June 2023));

- Check the language of the book based on the characters in the interval [1000:20,000];

- Split the book into sentences based on the newline character first and then using a sentence tokenizer;

- Discard any sentences in all uppercase (most likely headings or template text);

- Discard any sentences that do not start with a character of the English alphabet;

- Discard any sentences with fewer than 6 tokens or more than 40 (whitespace delimited);

- Discard any sentences that are not identified as English or any sentences that contain 10-token segments that are not identified as English;

- We randomly shuffled the sentences and limited their total number to 10 M to reduce the amount of compute required in the following sections.
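The rule-based filters in the list above can be sketched as follows. This is a minimal illustration, not the authors' code: the function names `keep_sentence` and `filter_and_sample` are hypothetical, the input is assumed to be already split into sentences, and the language-identification steps are omitted because they depend on an external model.

```python
import random
import string

MIN_TOKENS = 6   # lower bound from the list above
MAX_TOKENS = 40  # upper bound from the list above


def keep_sentence(sentence: str) -> bool:
    """Apply the casing, first-character, and length filters (hypothetical helper)."""
    text = sentence.strip()
    if not text:
        return False
    # All-uppercase sentences are most likely headings or template text.
    if text.isupper():
        return False
    # The sentence must start with a letter of the English alphabet.
    if text[0] not in string.ascii_letters:
        return False
    # Token counts are whitespace delimited.
    n_tokens = len(text.split())
    return MIN_TOKENS <= n_tokens <= MAX_TOKENS


def filter_and_sample(sentences, limit, seed=0):
    """Filter sentences, shuffle them, and cap the total number kept."""
    kept = [s.strip() for s in sentences if keep_sentence(s)]
    random.Random(seed).shuffle(kept)  # fixed seed is an assumption, for reproducibility
    return kept[:limit]
```

With `limit=10_000_000` the last step corresponds to the 10 M cap mentioned above.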

2.2. Deduplication

2.3. Emotion Taxonomy

2.4. Weak-Labeling

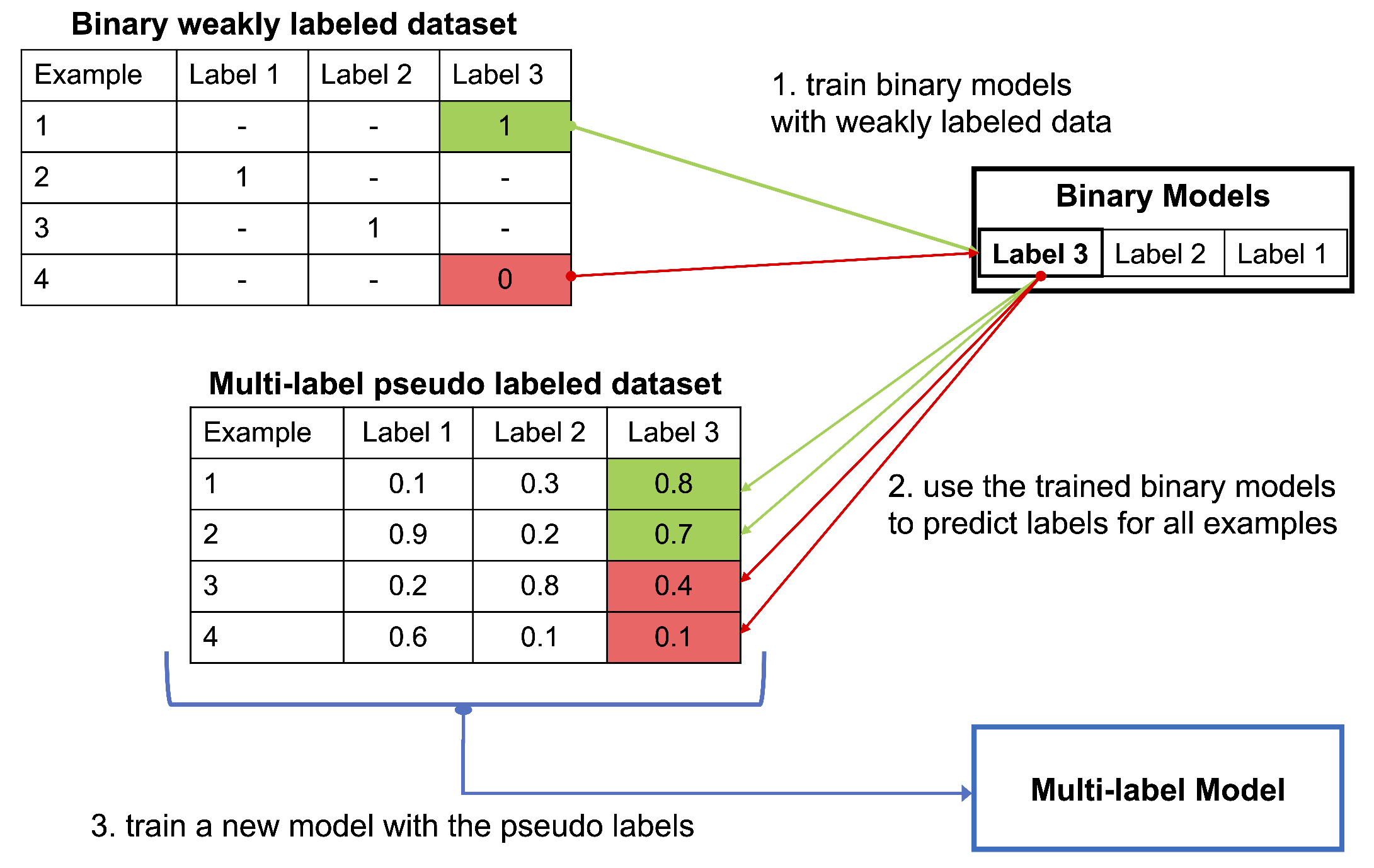

2.5. Pseudo-Labeling

2.6. Supervised Classification

2.7. Evaluation Metrics

- True Positives (TP): For a specific label, TP represents the instances where the model correctly predicts the presence of that label, and the ground truth also indicates the presence of that label. It is the count of instances that are correctly identified as positive for a particular label;

- False Positives (FP): For a specific label, FP occurs when the model predicts the presence of that label, but the ground truth indicates the absence of that label. It is the count of instances that are incorrectly classified as positive for a particular label;

- True Negatives (TN): For a specific label, TN represents the instances where the model correctly predicts the absence of that label, and the ground truth also indicates the absence of that label. It is the count of instances that are correctly identified as negative for a particular label;

- False Negatives (FN): For a specific label, FN happens when the model predicts the absence of that label, but the ground truth indicates the presence of that label. It is the count of instances that are incorrectly classified as negative for a particular label.

- Precision measures the proportion of true positive predictions out of the total positive predictions (Equation (1)). It focuses on the correctness of positive predictions. A high precision indicates a low rate of false positives;

- Recall measures the proportion of true positive predictions out of the total positive instances in the dataset (Equation (2)). It focuses on capturing all positive instances without missing any. A high recall indicates a low rate of false negatives;

- F1 score is the harmonic mean of precision and recall. It provides a balanced measure that combines both precision and recall into a single metric (Equation (3)). It is commonly used when both precision and recall are equally important, providing an overall measure of the model’s performance.
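As a worked illustration of the definitions above, the following sketch counts TP, FP, TN, and FN for one label of a multi-label problem and derives precision, recall, and F1 from them. The function names are hypothetical, labels are assumed to be 0/1 indicator rows, and returning 0.0 when a denominator is zero is a convention chosen here, not something stated in the text. The macro average is the unweighted mean of the per-label F1 scores.

```python
def per_label_counts(y_true, y_pred, label_index):
    """Count TP, FP, TN, FN for one label over 0/1 indicator rows."""
    tp = fp = tn = fn = 0
    for true_row, pred_row in zip(y_true, y_pred):
        truth, pred = true_row[label_index], pred_row[label_index]
        if pred == 1 and truth == 1:
            tp += 1
        elif pred == 1 and truth == 0:
            fp += 1
        elif pred == 0 and truth == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn


def precision_recall_f1(tp, fp, fn):
    """Precision = TP / (TP + FP), recall = TP / (TP + FN), F1 = harmonic mean."""
    precision = tp / (tp + fp) if tp + fp else 0.0  # assumed zero-division convention
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-label F1 scores."""
    n_labels = len(y_true[0])
    scores = []
    for index in range(n_labels):
        tp, fp, _tn, fn = per_label_counts(y_true, y_pred, index)
        scores.append(precision_recall_f1(tp, fp, fn)[2])
    return sum(scores) / n_labels
```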

3. Data Analysis

3.1. Label Distribution

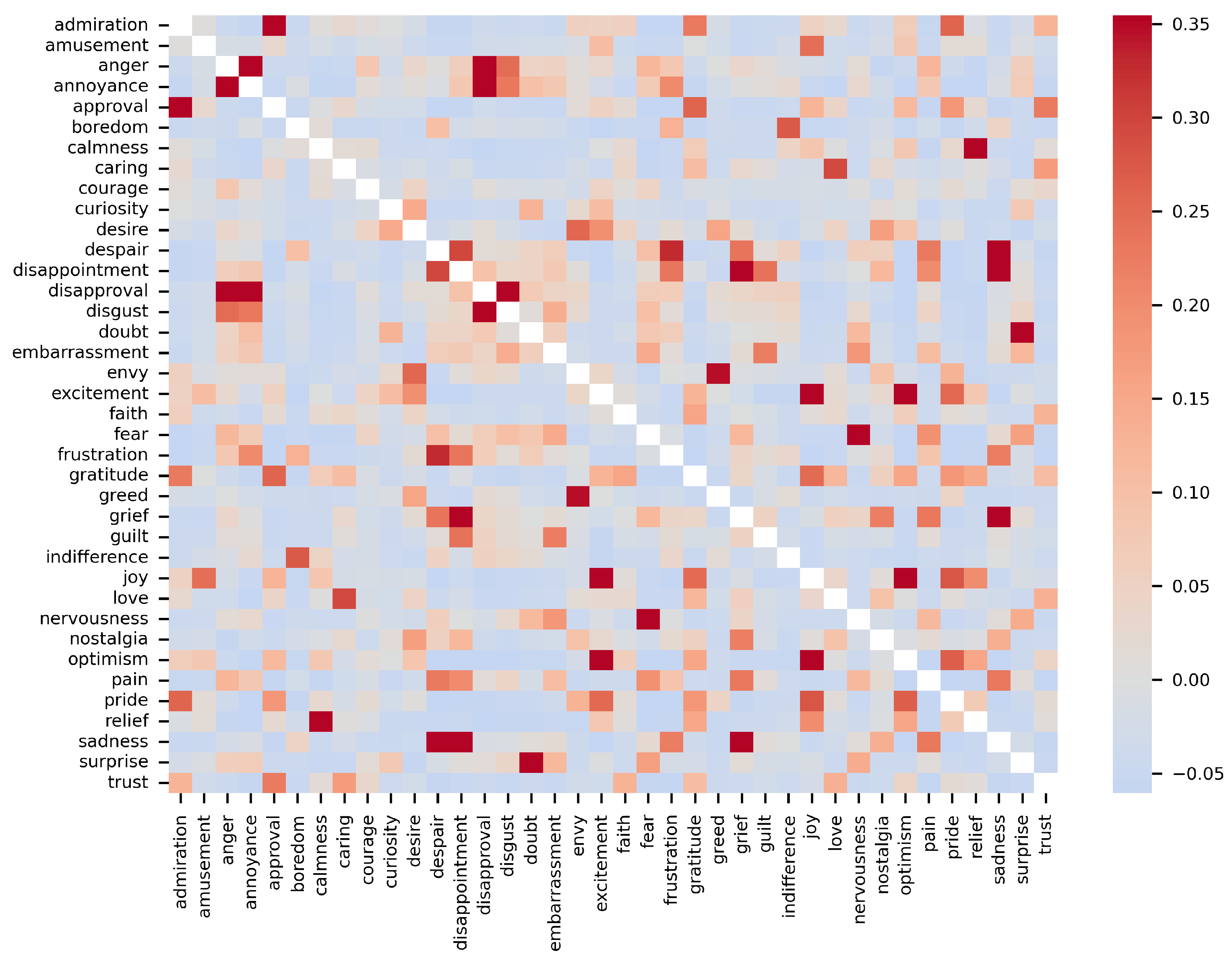

3.2. Label Correlation

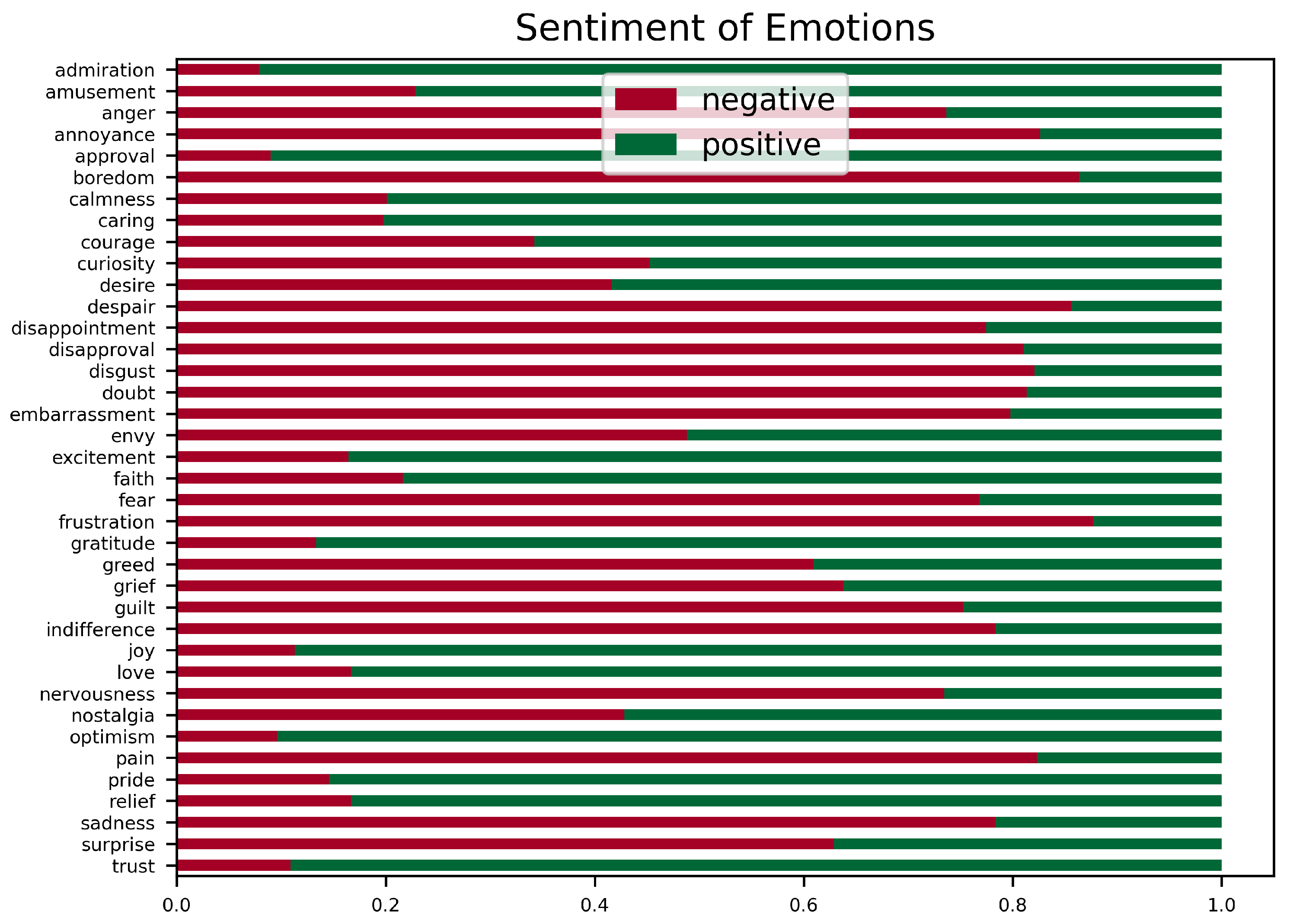

3.3. Label Sentiment

4. Experimental Results

4.1. Evaluation Data

4.2. Supervised Evaluation

4.3. Zero-Shot Transfer

4.4. Few-Shot Transfer

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Label Maps

| Tales | EmoLit |
|---|---|
| angry-disgusted | anger, annoyance, disapproval, disgust |
| happy | excitement, amusement, joy, relief, gratitude, optimism |
| fearful | fear, nervousness |
| sad | disappointment, despair, sadness, grief |
| surprised | surprise |

| ISEAR | EmoLit |
|---|---|
| fear | fear, nervousness |
| shame | embarrassment |
| guilt | guilt |
| disgust | disgust |
| anger | anger, annoyance, frustration |
| joy | approval, relief, gratitude, joy, optimism |
| sadness | grief, sadness |

| EMOINT | EmoLit |
|---|---|
| anger | anger, annoyance |
| joy | joy |
| fear | fear, nervousness |
| sadness | boredom, despair, sadness |
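A label map of this kind can be applied by projecting per-emotion EmoLit scores onto the labels of the target dataset. The sketch below uses the Tales map from this appendix; the function names are hypothetical, and scoring each target label by the maximum score of its mapped EmoLit labels is an assumption made for illustration, since the aggregation rule is not given here.

```python
TALES_MAP = {
    "angry-disgusted": ["anger", "annoyance", "disapproval", "disgust"],
    "happy": ["excitement", "amusement", "joy", "relief", "gratitude", "optimism"],
    "fearful": ["fear", "nervousness"],
    "sad": ["disappointment", "despair", "sadness", "grief"],
    "surprised": ["surprise"],
}


def project_scores(emolit_scores, label_map):
    """Score each target label as the max score of its mapped EmoLit labels.

    Taking the maximum is an illustrative assumption; missing labels count as 0.0.
    """
    return {
        target: max(emolit_scores.get(source, 0.0) for source in sources)
        for target, sources in label_map.items()
    }


def predict_target(emolit_scores, label_map):
    """Pick the single highest-scoring target label."""
    projected = project_scores(emolit_scores, label_map)
    return max(projected, key=projected.get)
```

For example, scores of 0.7 for nervousness and 0.4 for joy project to 0.7 for fearful and 0.4 for happy, so the predicted Tales label is fearful.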

Appendix B. NLI Hypothesis Comparison

| Threshold | Hypothesis | Fear P | Fear R | Fear F1 | Joy P | Joy R | Joy F1 | Sadness P | Sadness R | Sadness F1 |
|---|---|---|---|---|---|---|---|---|---|---|
| 0.5 | Short | 0.21 | 0.98 | 0.35 | 0.81 | 0.96 | 0.88 | 0.33 | 0.99 | 0.50 |
| 0.5 | Long | 0.16 | 1.00 | 0.27 | 0.39 | 1.00 | 0.56 | 0.39 | 1.00 | 0.56 |
| 0.6 | Short | 0.22 | 0.97 | 0.36 | 0.83 | 0.94 | 0.88 | 0.35 | 0.99 | 0.51 |
| 0.6 | Long | 0.16 | 1.00 | 0.28 | 0.39 | 1.00 | 0.56 | 0.32 | 1.00 | 0.49 |
| 0.7 | Short | 0.24 | 0.96 | 0.38 | 0.84 | 0.92 | 0.88 | 0.36 | 0.99 | 0.53 |
| 0.7 | Long | 0.17 | 1.00 | 0.28 | 0.40 | 1.00 | 0.57 | 0.33 | 0.99 | 0.50 |
| 0.8 | Short | 0.27 | 0.93 | 0.42 | 0.86 | 0.87 | 0.87 | 0.39 | 0.98 | 0.55 |
| 0.8 | Long | 0.17 | 1.00 | 0.29 | 0.42 | 1.00 | 0.59 | 0.35 | 0.99 | 0.52 |
| 0.9 | Short | 0.36 | 0.89 | 0.52 | 0.87 | 0.76 | 0.81 | 0.45 | 0.92 | 0.60 |
| 0.9 | Long | 0.18 | 1.00 | 0.31 | 0.46 | 0.99 | 0.63 | 0.38 | 0.98 | 0.55 |
| 0.95 | Short | 0.51 | 0.75 | 0.60 | 0.88 | 0.64 | 0.74 | 0.53 | 0.88 | 0.66 |
| 0.95 | Long | 0.19 | 1.00 | 0.32 | 0.53 | 0.99 | 0.69 | 0.42 | 0.95 | 0.58 |

Appendix C. Nostalgia

References

- Picard, R.W. Affective Computing; MIT Press: Cambridge, MA, USA, 2000.

- Johansen, J.D. Feelings in literature. Integr. Psychol. Behav. Sci. 2010, 44, 185–196.

- Oatley, K. Emotions and the story worlds of fiction. In Narrative Impact; Psychology Press: Mahwah, NJ, USA, 2003; pp. 39–69.

- Hogan, P.C. What Literature Teaches Us about Emotion; Cambridge University Press: Cambridge, UK, 2011.

- Frevert, U. Emotions in History—Lost and Found; Central European University Press: Budapest, Hungary, 2011.

- Massri, M.B.; Novalija, I.; Mladenić, D.; Brank, J.; Graça da Silva, S.; Marrouch, N.; Murteira, C.; Hürriyetoğlu, A.; Šircelj, B. Harvesting Context and Mining Emotions Related to Olfactory Cultural Heritage. Multimodal Technol. Interact. 2022, 6, 57.

- Alm, C.O.; Roth, D.; Sproat, R. Emotions from text: Machine learning for text-based emotion prediction. In Proceedings of the Human Language Technology Conference and Conference on Empirical Methods in Natural Language Processing, Vancouver, BC, Canada, 6–8 October 2005; pp. 579–586.

- Ekman, P. An argument for basic emotions. Cogn. Emot. 1992, 6, 169–200.

- Williams, L.; Arribas-Ayllon, M.; Artemiou, A.; Spasić, I. Comparing the utility of different classification schemes for emotive language analysis. J. Classif. 2019, 36, 619–648.

- Öhman, E. Emotion Annotation: Rethinking Emotion Categorization. In Proceedings of the DHN Post-Proceedings, Riga, Latvia, 21–23 October 2020; pp. 134–144.

- Bostan, L.A.M.; Klinger, R. An Analysis of Annotated Corpora for Emotion Classification in Text. In Proceedings of the 27th International Conference on Computational Linguistics. Association for Computational Linguistics, Santa Fe, NM, USA, 20–26 August 2018; pp. 2104–2119.

- Mohammad, S.; Bravo-Marquez, F.; Salameh, M.; Kiritchenko, S. SemEval-2018 Task 1: Affect in Tweets. In Proceedings of the 12th International Workshop on Semantic Evaluation, New Orleans, LA, USA, 5–6 June 2018; pp. 1–17.

- Demszky, D.; Movshovitz-Attias, D.; Ko, J.; Cowen, A.; Nemade, G.; Ravi, S. GoEmotions: A Dataset of Fine-Grained Emotions. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 4040–4054.

- Kim, E.; Klinger, R. Who feels what and why? annotation of a literature corpus with semantic roles of emotions. In Proceedings of the 27th International Conference on Computational Linguistics, Santa Fe, NM, USA, 20–26 August 2018; pp. 1345–1359.

- Menini, S.; Paccosi, T.; Tonelli, S.; Van Erp, M.; Leemans, I.; Lisena, P.; Troncy, R.; Tullett, W.; Hürriyetoğlu, A.; Dijkstra, G.; et al. A multilingual benchmark to capture olfactory situations over time. In Proceedings of the 3rd Workshop on Computational Approaches to Historical Language Change, Dublin, Ireland, 26–27 May 2022; pp. 1–10.

- Rei, L.; Mladenic, D.; Dorozynski, M.; Rottensteiner, F.; Schleider, T.; Troncy, R.; Lozano, J.S.; Salvatella, M.G. Multimodal metadata assignment for cultural heritage artifacts. Multimed. Syst. 2023, 29, 847–869.

- Pita Costa, J.; Rei, L.; Stopar, L.; Fuart, F.; Grobelnik, M.; Mladenić, D.; Novalija, I.; Staines, A.; Pääkkönen, J.; Konttila, J.; et al. NewsMeSH: A new classifier designed to annotate health news with MeSH headings. Artif. Intell. Med. 2021, 114, 102053.

- Dagan, I.; Glickman, O.; Magnini, B. The pascal recognising textual entailment challenge. In Proceedings of the Machine Learning Challenges. Evaluating Predictive Uncertainty, Visual Object Classification, and Recognising Textual Entailment: First PASCAL Machine Learning Challenges Workshop, MLCW 2005, Southampton, UK, 11–13 April 2005; pp. 177–190.

- Yin, W.; Hay, J.; Roth, D. Benchmarking Zero-shot Text Classification: Datasets, Evaluation and Entailment Approach. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 3914–3923.

- Andreevskaia, A.; Bergler, S. CLaC and CLaC-NB: Knowledge-based and corpus-based approaches to sentiment tagging. In Proceedings of the Fourth International Workshop on Semantic Evaluations (SemEval-2007), Prague, Czech Republic, 23–24 June 2007; pp. 117–120.

- Bermingham, A.; Smeaton, A.F. A study of inter-annotator agreement for opinion retrieval. In Proceedings of the 32nd International ACM SIGIR Conference on Research and Development in Information Retrieval, Boston, MA, USA, 19–23 July 2009; pp. 784–785.

- Russo, I.; Caselli, T.; Rubino, F.; Boldrini, E.; Martínez-Barco, P. EMOCause: An Easy-adaptable Approach to Extract Emotion Cause Contexts. In Proceedings of the 2nd Workshop on Computational Approaches to Subjectivity and Sentiment Analysis (WASSA 2.011), Portland, OR, USA, 24 June 2011; pp. 153–160.

- Mohammad, S. A practical guide to sentiment annotation: Challenges and solutions. In Proceedings of the 7th Workshop on Computational Approaches to Subjectivity, Sentiment and Social Media Analysis, San Diego, CA, USA, 16 June 2016; pp. 174–179.

- Plutchik, R. A general psychoevolutionary theory of emotion. In Theories of Emotion; Elsevier: Amsterdam, The Netherlands, 1980; pp. 3–33.

- Scherer, K.R.; Wallbott, H.G. Evidence for universality and cultural variation of differential emotion response patterning. J. Personal. Soc. Psychol. 1994, 66, 310.

- Öhman, E.; Pàmies, M.; Kajava, K.; Tiedemann, J. XED: A Multilingual Dataset for Sentiment Analysis and Emotion Detection. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 6542–6552.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All you Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 7 June 2019; pp. 4171–4186.

- Kocoń, J.; Cichecki, I.; Kaszyca, O.; Kochanek, M.; Szydło, D.; Baran, J.; Bielaniewicz, J.; Gruza, M.; Janz, A.; Kanclerz, K.; et al. ChatGPT: Jack of all trades, master of none. Inf. Fusion 2023, 99, 101861.

- Ameer, I.; Bölücü, N.; Siddiqui, M.H.F.; Can, B.; Sidorov, G.; Gelbukh, A. Multi-label emotion classification in texts using transfer learning. Expert Syst. Appl. 2023, 213, 118534.

- Alhuzali, H.; Ananiadou, S. SpanEmo: Casting Multi-label Emotion Classification as Span-prediction. In Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume, Online, 19–23 April 2021; pp. 1573–1584.

- Basile, A.; Pérez-Torró, G.; Franco-Salvador, M. Probabilistic Ensembles of Zero- and Few-Shot Learning Models for Emotion Classification. In Proceedings of the International Conference on Recent Advances in Natural Language Processing (RANLP 2021), Online, 1–3 September 2021; pp. 128–137.

- Plaza-del Arco, F.M.; Martín-Valdivia, M.T.; Klinger, R. Natural Language Inference Prompts for Zero-shot Emotion Classification in Text across Corpora. In Proceedings of the 29th International Conference on Computational Linguistics, Gyeongju, Republic of Korea, 12–17 October 2022; pp. 6805–6817.

- Tesfagergish, S.G.; Kapočiūtė-Dzikienė, J.; Damaševičius, R. Zero-Shot Emotion Detection for Semi-Supervised Sentiment Analysis Using Sentence Transformers and Ensemble Learning. Appl. Sci. 2022, 12, 8662.

- Gera, A.; Halfon, A.; Shnarch, E.; Perlitz, Y.; Ein-Dor, L.; Slonim, N. Zero-Shot Text Classification with Self-Training. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, Abu Dhabi, United Arab Emirates, 7–11 December 2022; pp. 1107–1119.

- Peterson, J.C.; Battleday, R.M.; Griffiths, T.L.; Russakovsky, O. Human uncertainty makes classification more robust. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9617–9626.

- Van Engelen, J.E.; Hoos, H.H. A survey on semi-supervised learning. Mach. Learn. 2020, 109, 373–440.

- El Gayar, N.; Schwenker, F.; Palm, G. A study of the robustness of KNN classifiers trained using soft labels. In Proceedings of the Artificial Neural Networks in Pattern Recognition: Second IAPR Workshop, ANNPR 2006, Ulm, Germany, 31 August–2 September 2006; pp. 67–80.

- Thiel, C. Classification on soft labels is robust against label noise. In Proceedings of the Knowledge-Based Intelligent Information and Engineering Systems: 12th International Conference, KES 2008, Zagreb, Croatia, 3–5 September 2008; pp. 65–73.

- Galstyan, A.; Cohen, P.R. Empirical comparison of “hard” and “soft” label propagation for relational classification. In Proceedings of the International Conference on Inductive Logic Programming, Corvallis, OR, USA, 25–27 October 2007; pp. 98–111.

- Zhao, Z.; Wu, S.; Yang, M.; Chen, K.; Zhao, T. Robust machine reading comprehension by learning soft labels. In Proceedings of the 28th International Conference on Computational Linguistics, Online, 8–13 December 2020; pp. 2754–2759.

- Bird, S.; Klein, E.; Loper, E. Natural Language Processing with Python: Analyzing Text with the Natural Language Toolkit; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2009.

- Kiss, T.; Strunk, J. Unsupervised multilingual sentence boundary detection. Comput. Linguist. 2006, 32, 485–525.

- Joulin, A.; Grave, E.; Bojanowski, P.; Mikolov, T. Bag of Tricks for Efficient Text Classification. arXiv 2016, arXiv:1607.01759.

- Joulin, A.; Grave, E.; Bojanowski, P.; Douze, M.; Jégou, H.; Mikolov, T. FastText.zip: Compressing text classification models. arXiv 2016, arXiv:1612.03651.

- Broder, A.Z. On the resemblance and containment of documents. In Proceedings of the Compression and Complexity of SEQUENCES 1997 (Cat. No. 97TB100171), Positano, Italy, 11–13 June 1997; pp. 21–29.

- Lewis, M.; Liu, Y.; Goyal, N.; Ghazvininejad, M.; Mohamed, A.; Levy, O.; Stoyanov, V.; Zettlemoyer, L. BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 7871–7880.

- Williams, A.; Nangia, N.; Bowman, S. A Broad-Coverage Challenge Corpus for Sentence Understanding through Inference. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long Papers), New Orleans, LA, USA, 1–6 June 2018; pp. 1112–1122.

- Wang, W.; Wei, F.; Dong, L.; Bao, H.; Yang, N.; Zhou, M. Minilm: Deep self-attention distillation for task-agnostic compression of pre-trained transformers. Adv. Neural Inf. Process. Syst. 2020, 33, 5776–5788.

- Reimers, N.; Gurevych, I. Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing, Hong Kong, China, 3–7 November 2019.

- Porter, M.F. An algorithm for suffix stripping. Program 1980, 14, 130–137.

- Lee, D.H. Pseudo-label: The simple and efficient semi-supervised learning method for deep neural networks. In Proceedings of the Workshop on Challenges in Representation Learning, ICML, Atlanta, GA, USA, 16–21 June 2013; Volume 3, p. 896.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Sechidis, K.; Tsoumakas, G.; Vlahavas, I. On the Stratification of Multi-label Data. In Proceedings of the Machine Learning and Knowledge Discovery in Databases, Athens, Greece, 5–9 September 2011; Gunopulos, D., Hofmann, T., Malerba, D., Vazirgiannis, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 145–158.

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. Roberta: A robustly optimized bert pretraining approach. arXiv 2019, arXiv:1907.11692.

- Sennrich, R.; Haddow, B.; Birch, A. Neural Machine Translation of Rare Words with Subword Units. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Berlin, Germany, 7–12 August 2016; pp. 1715–1725.

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language Models Are Unsupervised Multitask Learners; Technical Report; OpenAI: San Francisco, CA, USA, 2019.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Sanh, V.; Debut, L.; Chaumond, J.; Wolf, T. DistilBERT, a distilled version of BERT: Smaller, faster, cheaper and lighter. arXiv 2019, arXiv:1910.01108.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Schutze, H.; Manning, C.D.; Raghavan, P. Introduction to Information Retrieval; Cambridge University Press: Cambridge, UK, 2008; p. 281.

- Mohammad, S.; Bravo-Marquez, F. Emotion Intensities in Tweets. In Proceedings of the 6th Joint Conference on Lexical and Computational Semantics (*SEM 2017), Vancouver, BC, Canada, 3–4 August 2017; pp. 65–77.

| Emotion | Definition |

|---|---|

| admiration | finds something admirable, impressive or worthy of respect |

| amusement | finds something funny, entertaining or amusing |

| anger | is angry, furious, or strongly displeased; displays ire, rage, or wrath |

| annoyance | is annoyed or irritated |

| approval | expresses a favorable opinion, approves, endorses or agrees with something or someone |

| boredom | feels bored, uninterested, monotony, tedium |

| calmness | is calm, serene, free from agitation or disturbance, experiences emotional tranquility |

| caring | cares about the well-being of someone else, feels sympathy, compassion, affectionate concern towards someone, displays kindness or generosity |

| courage | feels courage or the ability to do something that frightens one, displays fearlessness or bravery |

| curiosity | is interested, curious, or has strong desire to learn something |

| desire | has a desire or ambition, wants something, wishes for something to happen |

| despair | feels despair, helpless, powerless, loss or absence of hope, desperation, despondency |

| disappointment | feels sadness or displeasure caused by the non-fulfillment of hopes or expectations, being let down, expresses regret due to the unfavorable outcome of a decision |

| disapproval | expresses an unfavorable opinion, disagrees or disapproves of something or someone |

| disgust | feels disgust, revulsion, finds something or someone unpleasant, offensive or hateful |

| doubt | has doubt or is uncertain about something, bewildered, confused, or shows lack of understanding |

| embarrassment | feels embarrassed, awkward, self-conscious, shame, or humiliation |

| envy | is covetous, feels envy or jealousy; begrudges or resents someone for their achievements, possessions, or qualities |

| excitement | feels excitement or great enthusiasm and eagerness |

| faith | expresses religious faith, has a strong belief in the doctrines of a religion, or trust in god |

| fear | is afraid or scared due to a threat, danger, or harm |

| frustration | feels frustrated: upset or annoyed because of inability to change or achieve something |

| gratitude | is thankful or grateful for something |

| greed | is greedy, rapacious, avaricious, or has selfish desire to acquire or possess more than what one needs |

| grief | feels grief or intense sorrow, or grieves for someone who has died |

| guilt | feels guilt, remorse, or regret to have committed wrong or failed in an obligation |

| indifference | is uncaring, unsympathetic, uncharitable, or callous, shows indifference, lack of concern, coldness towards someone |

| joy | is happy, feels joy, great pleasure, elation, satisfaction, contentment, or delight |

| love | feels love, strong affection, passion, or deep romantic attachment for someone |

| nervousness | feels nervous, anxious, worried, uneasy, apprehensive, stressed, troubled or tense |

| nostalgia | feels nostalgia, longing or wistful affection for the past, something lost, or for a period in one’s life, feels homesickness, a longing for one’s home, city, or country while being away; longing for a familiar place |

| optimism | feels optimism or hope, is hopeful or confident about the future, that something good may happen, or the success of something |

| pain | feels physical pain or experiences physical suffering |

| pride | is proud, feels pride from one’s own achievements, self-fulfillment, or from the achievements of those with whom one is closely associated, or from qualities or possessions that are widely admired |

| relief | feels relaxed, relief from tension or anxiety |

| sadness | feels sadness, sorrow, unhappiness, depression, dejection |

| surprise | is surprised, astonished or shocked by something unexpected |

| trust | trusts or has confidence in someone, or believes that someone is good, honest, or reliable |

| Type | Hypothesis |

|---|---|

| Short (Ranking) | This expresses the emotion fear. |

| Long (Re-ranking) | Speaker or someone is afraid or scared due to a threat, danger, or harm. |
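The two hypothesis forms in the table can be generated from an emotion name and its definition, and then used to rank candidate sentences by entailment score. The sketch below is illustrative only: the function names are hypothetical, extending the fear templates to other emotions is an assumption, and `entailment_score` stands in for an NLI model that returns the probability that a sentence entails a hypothesis.

```python
from typing import Callable, Dict, List

# Two definitions copied from the emotion definition table, for illustration.
DEFINITIONS: Dict[str, str] = {
    "fear": "is afraid or scared due to a threat, danger, or harm",
    "gratitude": "is thankful or grateful for something",
}


def short_hypothesis(emotion: str) -> str:
    """Short form, used for ranking."""
    return f"This expresses the emotion {emotion}."


def long_hypothesis(emotion: str, definitions: Dict[str, str]) -> str:
    """Long form built from the definition, used for re-ranking."""
    return f"Speaker or someone {definitions[emotion]}."


def top_k_candidates(
    sentences: List[str],
    hypothesis: str,
    entailment_score: Callable[[str, str], float],  # stand-in for an NLI model
    k: int,
) -> List[str]:
    """Keep the k sentences with the highest entailment score for the hypothesis."""
    scored = [(entailment_score(sentence, hypothesis), sentence) for sentence in sentences]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [sentence for _score, sentence in scored[:k]]
```

Keeping only the top-ranked sentences per emotion matches the idea of using NLI for ranking candidates rather than for assigning final labels.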

| Hyperparameter | Value |

|---|---|

| Batch Size | 32 |

| Learning Rate | |

| Max Epochs | 2 |

| Smoothing | 0.2 |

| Emotion | Train | Validation | Test | Gold |

|---|---|---|---|---|

| admiration | 7700 | 963 | 962 | 110 |

| amusement | 7427 | 928 | 928 | 52 |

| anger | 8556 | 1070 | 1070 | 72 |

| annoyance | 10,730 | 1341 | 1341 | 57 |

| approval | 8531 | 1066 | 1066 | 98 |

| boredom | 8113 | 1014 | 1014 | 53 |

| calmness | 8573 | 1072 | 1072 | 45 |

| caring | 8972 | 1122 | 1121 | 64 |

| courage | 8484 | 1061 | 1060 | 42 |

| curiosity | 7738 | 967 | 967 | 67 |

| desire | 10,160 | 1270 | 1270 | 81 |

| despair | 10,009 | 1251 | 1251 | 44 |

| disappointment | 12,133 | 1517 | 1517 | 39 |

| disapproval | 11,130 | 1391 | 1391 | 111 |

| disgust | 8987 | 1123 | 1123 | 72 |

| doubt | 9012 | 1127 | 1127 | 43 |

| embarrassment | 9642 | 1205 | 1205 | 22 |

| envy | 9942 | 1243 | 1243 | 14 |

| excitement | 10,794 | 1349 | 1349 | 38 |

| faith | 8442 | 1055 | 1055 | 13 |

| fear | 11,556 | 1445 | 1445 | 39 |

| frustration | 11,162 | 1395 | 1395 | 54 |

| gratitude | 11,279 | 1410 | 1410 | 14 |

| greed | 7423 | 928 | 928 | 25 |

| grief | 10,972 | 1372 | 1371 | 14 |

| guilt | 8660 | 1082 | 1082 | 13 |

| indifference | 8549 | 1069 | 1069 | 37 |

| joy | 9404 | 1175 | 1175 | 61 |

| love | 8838 | 1105 | 1105 | 50 |

| nervousness | 7747 | 968 | 968 | 24 |

| nostalgia | 14,805 | 1851 | 1851 | 29 |

| optimism | 9560 | 1195 | 1195 | 37 |

| pain | 10,014 | 1252 | 1252 | 22 |

| pride | 10,744 | 1343 | 1343 | 27 |

| relief | 9317 | 1165 | 1165 | 25 |

| sadness | 9589 | 1199 | 1199 | 52 |

| surprise | 9818 | 1227 | 1227 | 36 |

| trust | 8606 | 1076 | 1076 | 43 |

| neutral | 22,803 | 2890 | 2919 | 15 |

| Sentences | 160,000 | 20,000 | 20,000 | 727 |

| No. of Emotions | Examples (%) |

|---|---|

| 0 | 14.3 |

| 1 | 27.4 |

| 2 | 20.8 |

| 3 | 14.4 |

| 4 | 10.0 |

| 5 | 6.6 |

| 6 | 4.3 |

| 7 | 2.3 |
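A distribution like the one in this table can be computed directly from multi-label indicator rows. The following is a minimal sketch with a hypothetical function name, assuming each example is a list of 0/1 values with one entry per emotion.

```python
from collections import Counter


def label_count_distribution(label_rows):
    """Map the number of active labels per example to the percentage of examples."""
    total = len(label_rows)
    if total == 0:
        return {}
    counts = Counter(sum(row) for row in label_rows)
    return {n_labels: 100.0 * counts[n_labels] / total for n_labels in sorted(counts)}
```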

| Highest Correlation (Emotion A) | Highest Correlation (Emotion B) | Lowest Correlation (Emotion A) | Lowest Correlation (Emotion B) |

|---|---|---|---|

| despair | sadness | optimism | pain |

| calmness | relief | annoyance | optimism |

| fear | nervousness | approval | frustration |

| anger | annoyance | frustration | gratitude |

| excitement | joy | disappointment | optimism |
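Pairwise label correlations of this kind can be computed from the label indicator matrix. The sketch below uses the Pearson correlation between 0/1 label columns, which is an assumption since the correlation measure is not named here; the function names are hypothetical, and a constant column is given a correlation of 0.0 by convention.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # assumed convention for a constant column
    return cov / math.sqrt(var_x * var_y)


def label_correlations(label_rows, label_names):
    """Correlation for every unordered pair of labels, keyed by (name_a, name_b)."""
    columns = list(zip(*label_rows))
    result = {}
    for i in range(len(label_names)):
        for j in range(i + 1, len(label_names)):
            result[(label_names[i], label_names[j])] = pearson(columns[i], columns[j])
    return result
```

Sorting the resulting dictionary by value gives the most and least correlated pairs.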

| Most Positive Emotion | Positive (%) | Most Negative Emotion | Negative (%) |

|---|---|---|---|

| admiration | 92 | frustration | 88 |

| approval | 91 | boredom | 86 |

| optimism | 90 | despair | 86 |

| trust | 89 | annoyance | 83 |

| joy | 89 | pain | 82 |

| Emotion | Precision (Hard Labels) | Recall (Hard Labels) | F1 (Hard Labels) | Precision (Soft Labels) | Recall (Soft Labels) | F1 (Soft Labels) |

|---|---|---|---|---|---|---|

| admiration | 0.74 | 0.31 | 0.45 | 0.72 | 0.31 | 0.43 |

| amusement | 0.73 | 0.87 | 0.79 | 0.75 | 0.87 | 0.8 |

| anger | 0.70 | 0.65 | 0.68 | 0.71 | 0.68 | 0.69 |

| annoyance | 0.51 | 0.74 | 0.60 | 0.5 | 0.72 | 0.59 |

| approval | 0.83 | 0.51 | 0.63 | 0.8 | 0.49 | 0.61 |

| boredom | 0.67 | 0.94 | 0.78 | 0.64 | 0.92 | 0.75 |

| calmness | 0.65 | 0.82 | 0.73 | 0.63 | 0.82 | 0.71 |

| caring | 0.73 | 0.83 | 0.77 | 0.69 | 0.8 | 0.74 |

| courage | 0.47 | 0.67 | 0.55 | 0.46 | 0.67 | 0.54 |

| curiosity | 0.76 | 0.82 | 0.79 | 0.76 | 0.84 | 0.79 |

| desire | 0.82 | 0.79 | 0.81 | 0.81 | 0.77 | 0.78 |

| despair | 0.72 | 0.70 | 0.71 | 0.7 | 0.7 | 0.7 |

| disappointment | 0.44 | 0.46 | 0.45 | 0.4 | 0.44 | 0.42 |

| disapproval | 0.47 | 0.23 | 0.31 | 0.48 | 0.25 | 0.33 |

| disgust | 0.84 | 0.38 | 0.52 | 0.79 | 0.36 | 0.5 |

| doubt | 0.72 | 0.49 | 0.58 | 0.61 | 0.44 | 0.51 |

| embarrassment | 0.58 | 0.64 | 0.61 | 0.5 | 0.73 | 0.59 |

| envy | 0.28 | 0.86 | 0.42 | 0.28 | 0.93 | 0.43 |

| excitement | 0.55 | 0.68 | 0.61 | 0.57 | 0.68 | 0.62 |

| faith | 0.39 | 0.85 | 0.54 | 0.45 | 0.77 | 0.57 |

| fear | 0.48 | 0.41 | 0.44 | 0.42 | 0.41 | 0.42 |

| frustration | 0.54 | 0.57 | 0.56 | 0.53 | 0.61 | 0.57 |

| gratitude | 0.28 | 0.79 | 0.42 | 0.26 | 0.71 | 0.38 |

| greed | 0.57 | 0.64 | 0.60 | 0.55 | 0.68 | 0.61 |

| grief | 0.27 | 0.86 | 0.41 | 0.31 | 0.93 | 0.46 |

| guilt | 0.43 | 0.69 | 0.53 | 0.45 | 0.77 | 0.57 |

| indifference | 0.66 | 0.89 | 0.76 | 0.65 | 0.84 | 0.73 |

| joy | 0.84 | 0.43 | 0.57 | 0.77 | 0.44 | 0.56 |

| love | 0.69 | 0.66 | 0.67 | 0.69 | 0.72 | 0.71 |

| nervousness | 0.54 | 0.54 | 0.54 | 0.55 | 0.46 | 0.5 |

| nostalgia | 0.29 | 0.97 | 0.44 | 0.27 | 0.97 | 0.42 |

| optimism | 0.52 | 0.43 | 0.47 | 0.5 | 0.38 | 0.43 |

| pain | 0.33 | 0.55 | 0.41 | 0.42 | 0.73 | 0.53 |

| pride | 0.46 | 0.59 | 0.52 | 0.48 | 0.59 | 0.53 |

| relief | 0.54 | 0.84 | 0.66 | 0.51 | 0.84 | 0.64 |

| sadness | 0.70 | 0.60 | 0.65 | 0.67 | 0.62 | 0.64 |

| surprise | 0.68 | 0.69 | 0.68 | 0.71 | 0.69 | 0.70 |

| trust | 0.71 | 0.63 | 0.67 | 0.76 | 0.65 | 0.70 |

| macro-average | 0.58 | 0.66 | 0.59 | 0.58 | 0.66 | 0.59 |

| std | 0.17 | 0.18 | 0.13 | 0.16 | 0.18 | 0.13 |

| Hyperparameter | Value |

|---|---|

| Batch Size | 16 |

| Learning Rate | |

| Max Epochs | 10 |

| Encoder Name | Encoder Architecture | Encoder Parameters | F1 (Macro) |

|---|---|---|---|

| RoBERTa-large | L = 24, H = 1024, A = 16 | 355 M | 0.59 |

| BERT-large | L = 24, H = 1024, A = 16 | 340 M | 0.59 |

| RoBERTa-base | L = 12, H = 768, A = 12 | 125 M | 0.59 |

| BERT-base | L = 12, H = 768, A = 12 | 110 M | 0.58 |

| DistilRoBERTa-base | L = 6, H = 768, A = 12 | 82 M | 0.58 |

| DistilBERT-base | L = 6, H = 768, A = 12 | 66 M | 0.56 |

| Dataset | Emotions | Model | F1 (Macro) |

|---|---|---|---|

| EmoLit (ours) | 38 | RoBERTa | 0.59 |

| EmoLit (ours) | 38 | BERT | 0.58 |

| SemEval-2018 Task-1C | 11 | RoBERTa | 0.60 [30] |

| XED | 8 | BERT | 0.54 [26] |

| GoEmotions | 27 | BERT | 0.46 [13] |

| Hyperparameter | Value |

|---|---|

| Batch Size | 8 |

| Learning Rate | |

| Epochs | 3 |

| Dataset | Tales | ISEAR | EMOINT | GoEmotions26 |

|---|---|---|---|---|

| Self | 0.83 | 0.76 | 0.82 | 0.52 1 |

| Transfer | ||||

| GoEmotions | 0.74 (89%) | 0.52 (68%) | 0.50 (61%) | NA 2 |

| EmoLit (Hard Labels) | 0.74 (89%) | 0.53 (70%) | 0.57 (70%) | 0.28 (54%) |

| EmoLit (Soft Labels) | 0.77 (93%) | 0.56 (74%) | 0.60 (73%) | 0.28 (54%) |

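| | Tales (Literature) 50 | Tales (Literature) 100 | Tales (Literature) 150 | Tales (Literature) 200 | ISEAR (Self-Reporting) 50 | ISEAR (Self-Reporting) 100 | ISEAR (Self-Reporting) 150 | ISEAR (Self-Reporting) 200 | EMOINT (Tweets) 50 | EMOINT (Tweets) 100 | EMOINT (Tweets) 150 | EMOINT (Tweets) 200 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|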

| Baseline | 0.2 | 0.57 | 0.64 | 0.82 | 0.17 | 0.43 | 0.25 | 0.62 | 0.17 | 0.21 | 0.47 | 0.72 |

| GoEmotions | 0.58 | 0.75 | 0.80 | 0.81 | 0.47 | 0.58 | 0.62 | 0.63 | 0.53 | 0.62 | 0.67 | 0.68 |

| EmoLit (Hard Labels) | 0.60 | 0.72 | 0.79 | 0.81 | 0.36 | 0.52 | 0.55 | 0.60 | 0.56 | 0.65 | 0.69 | 0.71 |

| EmoLit (Soft Labels) | 0.61 | 0.74 | 0.79 | 0.81 | 0.36 | 0.54 | 0.55 | 0.62 | 0.59 | 0.67 | 0.70 | 0.72 |

| Hyperparameter | Value |

|---|---|

| Batch Size | 8 |

| Learning Rate | |

| Epochs | 8 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Rei, L.; Mladenić, D. Detecting Fine-Grained Emotions in Literature. Appl. Sci. 2023, 13, 7502. https://doi.org/10.3390/app13137502