1. Introduction

Effective feature representation is key to image processing [1,2,3] and video understanding [4,5,6]. Spatio-temporal features [7,8], subspace features [9,10], and label information [11,12] have been investigated for action recognition. Nevertheless, as Figure 1 shows, video understanding has evolved significantly through new datasets and approaches. Activity scenarios have moved on from simple sports, isolated movies, and normal surveillance to cluttered home sequences, egocentric interactions in kitchens, real-world anomalous events, part-level action parsing, dark environments, and complex surveillance videos. Given the varied views, illumination, poses, and outdoor conditions of activities, and given that the data distribution of the feature space remains uncertain, two questions arise: how do we discover the underlying embedded subspace for different types of features, and what are the boundaries of action clips?

On the other hand, large-scale videos are constantly emerging; thus, many segments need automatic labeling, because manual annotation requires human labor. Normal behaviors greatly outnumber anomalous events. It is important to measure data similarity by sample matching with distance metric learning. Notably, some segments in untrimmed videos may fall outside the specific categories [13], or new sequences in dark environments may have no annotations [14]. Therefore, to solve the point-matching problem in a semi-supervised manner, we discuss how to convert the video-set matching problem into a data distance measurement problem on the manifold subspace.

Correlations between multiple features may provide distinctive information; hence, feature correlation mining has been explored to improve recognition results when labeled data are scarce [10,15]. However, these approaches may have limitations in learning discriminant features. First, although existing algorithms evaluate the common shared structures among different actions, they do not take inter-class separability into account. Second, current semi-supervised approaches solve the non-convex optimization problem through elaborate derivation, but the alternating least-squares (ALS) iterative method is not guaranteed to reach the global optimum.

Figure 1. Sample frames from (a) home activities (Charades [16]), (b) real-world anomalies (UCF-Crime [13]), (c) dark environments (ARID [14]), (d) egocentric interactions (EPIC-KITCHENS-100 [17]), (e) part-level actions (Kinetics-TPS [18]), and (f) fight scenarios (CSCD [19]). The datasets differ widely in both their target scenarios and their diversity. (a) Charades depicts cluttered home actions from multimedia, (b) UCF-Crime shows real-world events containing anomalous and normal segments in untrimmed videos, (c) ARID aims to recognize actions in low illumination through semi-supervised methods, (d) EPIC-KITCHENS-100 consists of daily activities in the kitchen from first-person videos, (e) Kinetics-TPS develops a large-scale kinetics-temporal part state for encoding the composition of body parts, and (f) CSCD collects fight and no-fight scenarios from surveillance cameras.


2. Motivation and Contributions

To overcome the limitations of using multiple features for training, we propose modeling intra-class compactness and inter-class separability simultaneously, then capturing high-level semantic patterns via multiple-feature analysis. For the optimization process, we introduce the projected Barzilai-Borwein (PBB) algorithm because of its effectiveness in obtaining an optimal solution [20]. The PBB method is a non-monotone line-search technique for minimizing differentiable functions on closed convex sets [21].

Inspired by research using multiple features [11,15], we extend our framework in a multiple-feature-based manner to improve recognition. We characterize high-level semantic patterns through low-level action features using multiple-feature analysis. Multiple features are extracted from different views of labeled and unlabeled action videos. Based on the constructed graph model, pseudo-information for unlabeled videos can be generated by label propagation and feature correlations. For each type of feature, nearby samples preserve consistency separately, while labels for the unlabeled training data are predicted through the joint global consistency of multiple features. Thus, an adaptive semi-supervised action classifier is trained. The main contributions can be summarized as follows:

(1) This work is the first to consider manifold learning and Grassmannian kernels simultaneously in semi-supervised action recognition, as we assume that action video samples may be found in a Grassmannian manifold space. By modeling an embedding manifold subspace, both inter-class separability and intra-class compactness are considered.

(2) To solve the unconstrained minimization problem, we incorporate the PBB method to avoid matrix inversion, and apply a globalization strategy with adaptive step sizes that allows the objective function to be non-monotonic, leading to improved convergence and accuracy.

(3) Extensive experiments verify that our method outperforms other approaches on three benchmarks in a semi-supervised setting. We believe that this study presents valuable insights into adaptive feature analysis for semi-supervised action recognition.

3. Related Work

We review the related research on semi-supervised action recognition, multiple-feature analysis, and embedded subspace representation in this section.

3.1. Semi-Supervised Action Recognition

Unlabeled samples are valuable for learning data correlations in a semi-supervised manner [9,10,12,22]. Although semi-supervised approaches tend to achieve remarkable performance with limited labeled data, many issues remain, such as inherent multi-modal attributes leading to local optima, or unconvincing pseudo-labels leading to inaccurate predictions [23,24].

Si et al. [25] tackle the challenge of semi-supervised 3D action recognition by effectively learning motion representations from unlabeled data. Singh et al. [6] maximize the similarity of the same video at two different speeds, and recognize actions by training a two-pathway temporal contrastive model. Kumar and Rawat [26] develop a spatio-temporal consistency-based approach with two regularization constraints, temporal coherency and gradient smoothness, which can detect video actions in an end-to-end semi-supervised manner.

3.2. Multiple-Feature Analysis

Because an object can be described by different features that provide different discriminative information, multiple-feature analysis has gained increasing interest in many applications. Alongside early and late fusion strategies, multi-stage fusion schemes have recently been investigated [10,27,28,29]. However, most late-fusion approaches do not consider the correlations of each feature type.

Wang et al. [10] apply shared structural analysis to characterize discriminative information and preserve data distribution information from each type of feature. Chang and Yang [15] discover shared knowledge from related multi-tasks, take various correlations into account, then select features in a batch mode. Huynh-The et al. [30] capture multiple high-level features from an image-based representation by fine-tuning a pre-trained network, transfer the skeleton pose to encoded information, and depict an action through spatial joint correlations and temporal pose dynamics.

3.3. Embedded Subspace Representation

Previous studies have shown that manifold subspace learning can mine geometric structure information by considering the space of probabilities as a manifold [31,32,33]. Recent research focuses on graph-embedded subspace or distance metric learning to measure activity similarity [34,35,36,37,38].

Rahimi et al. [39] build neighborhood graphs with geodesic distance instead of Euclidean distance, and project high-dimensional actions to a low-dimensional space by kernelized Grassmann manifold learning. Yu et al. [40] propose an action-matching network to recognize open-set actions, construct an action dictionary, and classify an action via the distance metric. Peng et al. [41] use a flexible graph deconvolution technique to alleviate the over-smoothing issue of graph representations when multiple GCN layers are stacked.

The two aforementioned studies [9,10] are similar to ours. They assume that the visual words in different actions share a common structure in a specific subspace. A transformation matrix is introduced to characterize the shared structures. They solve the constrained non-convex optimization problem through an ALS-like iterative approach and matrix derivation. Nevertheless, the deduced inverse matrix may be poorly scaled or close to singular during optimization, which may lead to inaccurate results.

To address these problems, we hypothesize that manifold mapping can preserve the local geometry and maximize discriminatory power, as shown in Figure 2. However, we do not aim to mine shared structures. Therefore, we omit the shared-structure regularization and model the manifold by creating two graphs. As the optimization solution in [9,10] may be mathematically imprecise, Karush–Kuhn–Tucker (KKT) conditions and PBB are introduced to improve algorithm convergence and avoid matrix inversion.

In contrast to another related study [12], this work makes two major modifications: multiple-feature analysis with combined Grassmannian kernels, and a non-monotone line-search strategy with adaptive step sizes.

4. Proposed Approach

Our approach incorporates several techniques, including semi-supervised action recognition, multiple-feature analysis, PBB, KKT, manifold learning, and Grassmannian kernels. It is named Kernel Grassmann manifold analysis (KGMA).

4.1. Formulation

To leverage the multiple-feature correlation, n training sample points are defined from the underlying Grassmannian manifold, where . We aim to uncover a new manifold while preserving the local geometry of data points, that is, . Since we should demonstrate data distribution on the manifold, a predicted label matrix is defined, where the predicted vector of the i-th datum is .

We assume that a similarity measurement of data points in the manifold subspace is available through a Grassmannian kernel [

31]

. By confining the solution to a linear function, that is,

, we define the prediction function

f as

. By denoting

and

, it can be shown that

, and thus,

, where

and

. As mentioned in [

42], the performance of the least-square loss function is comparable to hinge loss or logistic loss. This is associated with its diagonal matrix

, where

is the label matrix. We employ least-squares regression to solve the following optimization problem, then obtain the projection matrix

:

where

is the regularization parameter.

denotes the Frobenius norm.

controls the model complexity to prevent overfitting.
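The regularized least-squares step described above can be illustrated with a small sketch. It assumes, purely for illustration, that predictions take the form F = K A for a kernel matrix K and a projection matrix A, and that the objective is the Frobenius-norm loss plus a Frobenius-norm penalty weighted by a parameter `mu`; the names `fit_kernel_least_squares`, `K`, `A`, `Y`, and `mu` are hypothetical and are not the paper's notation. The closed form is shown only for this simplified subproblem; the full method in the paper uses PBB specifically to avoid matrix inversion.

```python
import numpy as np

def fit_kernel_least_squares(K, Y, mu):
    """Minimize ||K A - Y||_F^2 + mu * ||A||_F^2 over A (illustrative form only)."""
    n = K.shape[0]
    # Setting the gradient to zero gives (K^T K + mu I) A = K^T Y.
    return np.linalg.solve(K.T @ K + mu * np.eye(n), K.T @ Y)

rng = np.random.default_rng(0)
X = rng.normal(size=(6, 4))
K = X @ X.T                          # toy linear kernel standing in for a Grassmannian kernel
Y = np.eye(3)[[0, 0, 1, 1, 2, 2]]    # one-hot label matrix, 6 samples and 3 classes
mu = 1e-2
A = fit_kernel_least_squares(K, Y, mu)
F = K @ A                            # predicted label matrix
```

A larger `mu` shrinks `A` and smooths the predictions, which is the sense in which the regularization term controls model complexity.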

4.2. Manifold Learning

In contrast to [

10], which utilizes a graph model to estimate data distribution on the manifold, we model the local geometrical structure by generating between-class similarity graph

and within-class similarity graph

, where

, if

or

; otherwise,

.

applies the same method, although it selects

or

, where

contains neighbors with different labels, and

is the set of neighbors

sharing the same label as

. Notably, the intra-class and inter-class distances can be mapped on a manifold by similarity graphs [

33].

Inspired by manifold learning [

12,

31,

33], we maximize inter-class separability and minimize intra-class compactness simultaneously. An ideal transform pushes the connected points of

to the extent possible while moving the connected points of

closer. The discriminative information can be represented as follows:

where

is a regularization parameter, which controls the trade-off between inter-class separability and intra-class compactness.

denotes the trace operator, and

denotes the Laplacian matrix. Furthermore,

is a diagonal matrix with

, and

is a diagonal matrix with

.
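As a rough illustration of the two similarity graphs and their Laplacians, the sketch below builds binary within-class and between-class k-nearest-neighbor graphs from a precomputed distance matrix. The binary weights, the symmetric "either is a neighbor of the other" rule, the form L = D - G, and the score tr(F^T (L_w - beta L_b) F) are assumptions chosen to match the verbal description above; the function names and the Euclidean toy distances are hypothetical.

```python
import numpy as np

def similarity_graphs(dist, labels, k):
    """Binary within-class (Gw) and between-class (Gb) k-NN graphs."""
    n = len(labels)
    Gw = np.zeros((n, n))
    Gb = np.zeros((n, n))
    for i in range(n):
        order = np.argsort(dist[i])
        order = order[order != i]                      # drop the point itself
        same = [j for j in order if labels[j] == labels[i]][:k]
        diff = [j for j in order if labels[j] != labels[i]][:k]
        # Connect i and j if either one is in the other's neighbor set.
        Gw[i, same] = Gw[same, i] = 1.0
        Gb[i, diff] = Gb[diff, i] = 1.0
    return Gw, Gb

def laplacian(G):
    """Graph Laplacian L = D - G with D the diagonal degree matrix."""
    return np.diag(G.sum(axis=1)) - G

def discriminant_score(F, Lw, Lb, beta):
    """Small when within-class neighbors are close and between-class neighbors are far."""
    return np.trace(F.T @ (Lw - beta * Lb) @ F)

rng = np.random.default_rng(0)
pts = np.vstack([rng.normal(0, 1, (5, 2)), rng.normal(5, 1, (5, 2))])
labels = np.array([0] * 5 + [1] * 5)
dist = np.linalg.norm(pts[:, None] - pts[None, :], axis=-1)  # toy stand-in for a manifold distance
Gw, Gb = similarity_graphs(dist, labels, k=2)
Lw, Lb = laplacian(Gw), laplacian(Gb)
```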

4.3. Multiple-Feature Analysis

Multiple features imply combining kernelized embedding features, data-point manifold subspace learning (1st term in Equation (4)), and label propagation (2nd term in Equation (4)) with low-level feature correlations (3rd term in Equation (4)) for labeled and unlabeled data.

We modify the aforementioned function to leverage both labeled and unlabeled samples. First, the training dataset is redefined as

, where

is the labeled data subset, and

is the unlabeled data subset. The label matrix

, where

. The unlabeled matrix

. According to [

9,

43], diagonal label matrix

and the similarity graphs

should be consistent with the label prediction matrix

. We generalize the graph-embedded label consistency as follows:

In contrast to previous shared-structure learning algorithms, we do not consider shared-structure learning within a semi-supervised learning framework. Alternatively, we propose a novel joint framework that incorporates the multiple-feature analyses of multiple manifolds. As discussed in the problem formulation section, by employing the Frobenius norm regularized loss function, we can reformulate the objective:

where

,

and

are regularization parameters.

Equation (4) is an unconstrained convex optimization problem; hence, we can obtain the global optimum by performing ALS or the projected gradient method. Although the correlation matrix is singular only under specific circumstances, the projected gradient method avoids matrix inversion altogether [20], and therefore leads to a better optimum than ALS. Notably, the convergence conditions in [9,10] merely depend on a monotone decrease, which may result in mathematically improper convergence; therefore, KKT conditions are used to address this problem.

4.4. Grassmannian Kernels

The similarity between two action sample points

and

can be measured by projective kernel combination:

One attempt to solve the point-matching problem is the notion of principal angles [

31]. Given

and

, we can define the canonical correlation kernel as

subject to

and

.

We create a combined Grassmannian kernel through existing Grassmannian kernels [

31].

where

. Notably,

defines a new kernel based on the theory of reproducing the kernel Hilbert space, as described in [

31].
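For readers who want a concrete picture of the kernels discussed in this subsection, here is a minimal sketch using the standard definitions for subspaces represented by orthonormal basis matrices: the projection kernel as the squared Frobenius norm of X^T Y, and the canonical correlation kernel as the largest singular value of X^T Y (the cosine of the smallest principal angle). The function names and the mixing-coefficient names `alpha` and `beta` are hypothetical; the weighted sum with non-negative coefficients is one common way to combine kernels and is assumed here.

```python
import numpy as np

def orthonormal_basis(M):
    """Orthonormal basis of the column space of M (a point on the Grassmannian)."""
    Q, _ = np.linalg.qr(M)
    return Q

def projection_kernel(X, Y):
    """k_proj(X, Y) = ||X^T Y||_F^2."""
    return np.linalg.norm(X.T @ Y, "fro") ** 2

def canonical_correlation_kernel(X, Y):
    """max a^T X^T Y b with unit-norm a and b, i.e. the top singular value of X^T Y."""
    return np.linalg.svd(X.T @ Y, compute_uv=False)[0]

def combined_kernel(X, Y, alpha=1.0, beta=1.0):
    """Non-negative combination of the two kernels (coefficients are assumptions)."""
    return alpha * projection_kernel(X, Y) + beta * canonical_correlation_kernel(X, Y)

rng = np.random.default_rng(0)
X = orthonormal_basis(rng.normal(size=(10, 3)))   # 3-dimensional subspace of R^10
E1 = np.eye(10)[:, :3]                            # span of the first three axes
E2 = np.eye(10)[:, 3:6]                           # an orthogonal subspace
```

With both coefficients set to one, a subspace compared with itself scores its dimension plus one, while mutually orthogonal subspaces score zero.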

4.5. Optimization

According to [

20,

21], a general unconstrained minimization problem can be solved by the trace operator and the PBB method. Hence, a new objective function

instead of Equation (

4) is defined:

If

is an approximate stationary point in Equation (

8), it must satisfy the KKT conditions in Equation (

8). Then, we have an iteration-stopping criterion

where

is a non-negative small constant.

4.6. Projected Barzilai-Borwein

Similar to [

20], a sequence of feasible points

is generated by the gradient method:

where

is another step size, and

denotes the non-monotone line-search step size that is determined through an appropriate selection rule. Following [

21], we have two choices for step size:

where

The characteristic of the adaptive step sizes (

11) can render the objective functions non-monotonic; hence,

may increase in some iterations. Alternatively, using (

11) is better than merely using one of them [

21]; the step size is expressed by

To guarantee the convergence of

, a globalization strategy based on the non-monotone line-search technique is described as [

20]

where

are the parameters of the Armijo line-search method [

21]. Following [

20], in order to overcome some drawbacks of non-monotone techniques, the traditional largest function value is converted by the weighted-average function value:
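The ingredients described in this subsection (a projected gradient direction, the two Barzilai-Borwein step sizes, and a non-monotone Armijo line search whose reference value is a weighted average of past function values) can be sketched as follows. This is an illustrative sketch under stated assumptions, not the paper's Algorithm 1: the alternation of the two step sizes by iteration parity, the parameter names and defaults (`sigma`, `shrink`, `eta`, `alpha_min`, `alpha_max`), and the non-negative least-squares test problem are all hypothetical choices.

```python
import numpy as np

def pbb_minimize(f, grad, project, x0, max_iter=500, tol=1e-8,
                 sigma=1e-4, shrink=0.5, eta=0.85,
                 alpha_min=1e-10, alpha_max=1e10):
    """Projected Barzilai-Borwein method with a non-monotone Armijo line search."""
    x = project(x0)
    g = grad(x)
    alpha = 1.0
    C, Q = f(x), 1.0                 # weighted-average reference value and its weight
    for k in range(max_iter):
        d = project(x - alpha * g) - x            # projected gradient direction
        if np.linalg.norm(d) <= tol:
            break
        lam, gd = 1.0, float(g @ d)
        # Accept the step once f drops below the reference C, not necessarily below f(x).
        while f(x + lam * d) > C + sigma * lam * gd:
            lam *= shrink
        x_new = x + lam * d
        g_new = grad(x_new)
        s, y = x_new - x, g_new - g
        sy = float(s @ y)
        if sy <= 0:
            alpha = alpha_max
        else:
            bb1 = float(s @ s) / sy               # first BB step size
            bb2 = sy / float(y @ y)               # second BB step size
            alpha = bb1 if k % 2 == 0 else bb2    # alternate the two (assumption)
            alpha = min(alpha_max, max(alpha_min, alpha))
        x, g = x_new, g_new
        Q_new = eta * Q + 1.0
        C = (eta * Q * C + f(x)) / Q_new          # update the weighted average
        Q = Q_new
    return x

# Toy problem: non-negative least squares, min 0.5 * ||A x - b||^2 subject to x >= 0.
rng = np.random.default_rng(0)
A = rng.normal(size=(20, 5))
b = rng.normal(size=20)
f = lambda x: 0.5 * np.sum((A @ x - b) ** 2)
grad = lambda x: A.T @ (A @ x - b)
project = lambda x: np.maximum(x, 0.0)
x_star = pbb_minimize(f, grad, project, np.zeros(5))
```

Because the acceptance test compares against the running average `C` rather than the current value, individual iterations may increase the objective, which is the non-monotonic behavior described above. No linear system is solved at any point.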

5. Experiments

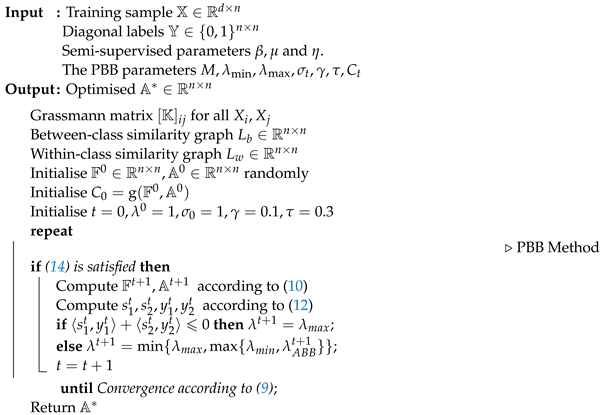

The proposed method, called KGMA, is summarized in Algorithm 1. The conventional methods that use SPG [12] and ALS instead of PBB, called kernel spectral projected gradient analysis (KSPG) and kernel alternating least-squares analysis (KALS), respectively, were also adopted to solve the objective function (8) for comparison in our experiments.

Algorithm 1: Kernel Grassmann Manifold Analysis (KGMA).

5.1. Features

For handcrafted features, we follow [12] to extract improved dense trajectories (IDTs) and Fisher vectors (FV), as shown in Figure 3.

For deep-learned features, we retrained the temporal segment network (TSN) [7] models of 15 × c, and then extracted the global pool features of 15 × c using a pre-trained TSN model, concatenating RGB + flow into 2048 dimensions with power L2-normalization, as listed in Table 1.

We verified the proposed algorithm using three kernels: the projection kernel, the canonical correlation kernel, and the combined kernel. In some cases, the projection kernel is better than the canonical correlation kernel, or vice versa, suggesting that a combination of kernels adapts better to different data distributions. For the combined kernel, both mixing coefficients were fixed at one. We obtain better results by combining the two kernels.

5.2. Datasets

Three datasets were used in the experiments: JHMDB [44], HMDB51 [45], and UCF101 [46]. The JHMDB dataset has 21 action categories; we report the average recognition accuracy over three training–test splits. The HMDB51 dataset contains 51 action categories; we report the mean average precision (MAP) over three training–test splits. The UCF101 dataset includes 101 action categories and contains 13,320 video clips; we report the average accuracy on the first split.

For the JHMDB dataset, we followed the standard data partitioning (three splits) provided by the authors. For other datasets, we used the first split provided by the authors, and applied the original testing sets for fair comparison. Because the semi-supervised training set contained unlabeled data, we performed the following procedure to reform the training set for each individual dataset. We denote the class number of each dataset by c (c = 21, 51, and 101 for JHMDB, HMDB51, and UCF101, respectively).

Using JHMDB as an example, we first randomly selected 30 training samples per category to form a training set (30 × c samples) in our experiment. From this training set, we randomly sampled m videos (m = 3, 5, 10, and 15) per category as labeled samples. Therefore, for a given m, m × c labeled samples are available, leaving (30 − m) × c videos as unlabeled samples for the semi-supervised training setting. We used three splits of the testing set on JHMDB and HMDB51 but only the first testing split on UCF101 because of limited GPU memory. Because the training samples were randomly selected, the experiments were repeated 10 times to avoid bias.
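The sampling procedure above can be sketched in a few lines. The function name `semi_supervised_split`, its parameters, and the toy label pool (21 classes with 40 clips each) are hypothetical and serve only to make the counts concrete; the actual experiments draw from the datasets' official training splits.

```python
import numpy as np

def semi_supervised_split(labels, per_class=30, m=10, seed=0):
    """Pick `per_class` clips per category, then mark `m` of them as labeled."""
    rng = np.random.default_rng(seed)
    labeled, unlabeled = [], []
    for c in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == c))[:per_class]
        labeled.extend(idx[:m])        # m labeled clips for this category
        unlabeled.extend(idx[m:])      # the remaining per_class - m stay unlabeled
    return np.array(labeled), np.array(unlabeled)

labels = np.repeat(np.arange(21), 40)  # toy pool: c = 21 classes, 40 clips each
lab, unl = semi_supervised_split(labels, per_class=30, m=10)
```

With c = 21 and m = 10 this yields 210 labeled and 420 unlabeled clips; repeating the call with different `seed` values mirrors the repeated random trials.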

5.3. Experimental Setup

To demonstrate the superiority of our approach (KGMA), we adopted the following methods for comparison: SVM, SFUS [47], SFCM [9], MFCU [10], KSPG, and KALS. Notably, SFUS, SFCM, MFCU, KSPG, and KALS are semi-supervised action recognition approaches. Using the available codes facilitates a fair comparison.

For the semi-supervised parameters

for SFUS, SFCM, MFCU, KSPG, KALS, and KGMA, we follow the same settings utilized in [

9,

10], ranging from {

}. Because our algorithm was not sensitive to the PBB parameters, we initialized them as in [20], as indicated in Algorithm 1. Notably, since KGMA applies PBB to optimize the objective function (8), its convergence is non-monotonic, with oscillating objective function values, as shown in Figure 4. Thus, the absolute error alone made it difficult to determine when to stop iterating; the relative error of the objective function values was a better criterion than the absolute error, which may indicate mathematically improper convergence. We chose constant

as the iteration-stopping criterion in (

9).

5.4. Mathematical Comparisons

The recognition results with handcrafted features on three datasets are demonstrated in Figure 3. We compared our method with deep-learned features in Table 1.

Regarding the presented objective function (8), Figure 4 summarizes the computational results of the three optimization methods. When we used the 2048-dimensional deep-learned features by TSN on the JHMDB dataset, the model was trained with only 15 labeled samples and 15 unlabeled samples per class. With the same semi-supervised parameter setup,

, the performance differences during the solving of the same objective function could be compared in terms of running time, number of iterations, absolute error, relative error, and objective function value.

Figure 4 shows the convergence curves of the three optimization methods. Since both SPG and PBB are non-monotonic optimization methods with relatively large fluctuations in objective function values, we omitted the first 29 iterations of SPG and PBB in Figure 4, and only displayed the data starting from the 30th iteration so as to better illustrate the monotonic convergence process of ALS.

As shown in Figure 3, for a randomly selected video data sample, ALS required the fewest iterations and the shortest computation time, 0.1220 s, after the deep features were extracted by TSN. In contrast, PBB required the most iterations and the longest computation time, 0.4212 s, while SPG's performance was intermediate between ALS and PBB. Considering Figure 4 and Table 2, it is evident that despite the slower PBB optimization method, our KGMA algorithm still achieves the highest accuracy on the kernelized Grassmann manifold space. Nevertheless, Equation (9) using SPG results in marginal improvement over ALS, which is likely attributable to our novel kernelized Grassmann manifold space.

5.5. Performance on Action Recognition

A linear SVM was utilized as the baseline. Based on the comparisons, we observe the following: (1) KGMA achieved the best performance, and our semi-supervised algorithm was better than linear SVM, which is a widely used supervised classifier; (2) all methods achieved better performances using more labeled training data, as shown in

Figure 3, or enlarging the semi-supervised parameter (i.e.,

) range such as

Figure 5; (3) we averaged an accuracy of

,

,

, and

cases, and the recognition accuracy of KGMA on JHMDB, HMDB51, and UCF101 improved by 2.97%, 2.59%, and 2.40%, respectively. When using TSN features, the recognition accuracy of KGMA on these datasets improved by 2.21%, 3.77%, and 2.23%, respectively. Evidently, our semi-supervised method improves recognition by leveraging unlabeled data, compared to a linear SVM trained with labeled data only.

Figure 3 illustrates that our algorithm benefits from the multiple-feature analysis, the kernelized Grassmann space, and the iterative scheme of the PBB method.

These results can be attributed to several factors. First, our method not only leverages semi-supervised learning, but also accounts for intra-class action variation and inter-class action ambiguity simultaneously. Therefore, it gains more over other approaches when there are few labeled samples. Second, we uncover the action feature subspace on the Grassmannian manifold by incorporating Grassmannian kernels, and solve the objective function optimization with the adaptive line-search strategy and the PBB method. Hence, the proposed algorithm works well when few samples are labeled.

5.6. Convergence Study

According to the objective function (

4), we conducted experiments with the TSN feature, fixed the semi-supervised parameters

, and then executed both the ALS and PBB methods 10 times. The results of the study are listed in

Table 2. Although the convergence of ALS shows no oscillation and requires fewer iterations, the PBB method can outperform ALS for three reasons. First, the PBB method uses a non-monotone line-search strategy to globalize the process [21], which can obtain the global optimal objective function value rather than being trapped in local optima as with the monotone ALS method. Second, adaptive step sizes are an essential characteristic that determines efficiency in the projected gradient methodology [21], whereas ALS does not consider the iteration step size. Finally, the efficient convergence properties of the projected gradient method have been demonstrated because the PBB is well defined [21].

5.7. Computation Complexity

In the training stage, we computed the Laplacian matrix L, the complexity of which was . To optimize the objective function, we computed the projected gradient and trace operators of several matrices. Therefore, the complexity of these operations was .

5.8. Parameter Sensitivity Study

We verified that KGMA benefits from the intra-class and inter-class by manifold discriminant analysis, as shown in

Figure 5. We analyze the impact of manifold learning on JHMDB and HMDB51, set

and

at optimal values over split2, for

-labeled training data. As

varied from

to

, the accuracy oscillated significantly and reached a peak value when

. Since

controls the proportion of the intra-class local geometric structure and the inter-class global manifold structure, as shown in

Figure 5, when the intra-class local geometric structure is treated as a constant 1,

can be considered such that the inter-class global manifold structure has a larger proportion in the objective function and vice versa. When

, no inter-class structure is utilized; thus, if

, no intra-class structure is present. When the Grassmann manifold space leverages an adequate balance of intra-class action variation and inter-class action ambiguity, the proposed algorithm can further enhance the discriminatory power of the transformation matrix.

6. Conclusions

This study proposed a new approach to categorize human action videos. With Grassmannian kernel combinations and multiple-feature analysis on multiple manifolds, our method can improve recognition by uncovering the intrinsic relationships among features. We evaluated the presented approach on three benchmark datasets, and the experimental results show that it outperformed all competing methods, particularly when there are few labeled samples.

Author Contributions

Conceptualization, Z.X.; methodology, Z.X., X.L., J.L. and H.C.; software, Z.X., X.L. and J.L.; validation, Z.X.; formal analysis, Z.X. and X.L.; investigation, Z.X. and H.C.; resources, Z.X. and R.H.; data curation, Z.X.; writing—original draft, Z.X., X.L., J.L. and H.C.; writing—review and editing, X.L. and J.L.; visualization, Z.X.; supervision, R.H.; project administration, Z.X.; funding acquisition, Z.X. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (61862015, 11961010, 12261026), the Science and Technology Project of Guangxi (AD21220114), the Guangxi Key Laboratory of Automatic Detecting Technology and Instruments (YQ23103), the Outstanding Youth Science and Technology Innovation Team Project of Colleges and Universities in Hubei Province (T201923), the Key Science and Technology Project of Jingmen (2021ZDYF024), the Guangxi Key Research and Development Program (AB17195025).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

Many thanks to all the authors who took the time out of their busy schedules to review the paper and provide references.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Sun, H.; Li, B.; Dan, Z.; Hu, W.; Du, B.; Yang, W.; Wan, J. Multi-level Feature Interaction and Efficient Non-Local Information Enhanced Channel Attention for image dehazing. Neural Netw. 2023, 163, 10–27. [Google Scholar] [CrossRef]

- Sun, H.; Zhang, Y.; Chen, P.; Dan, Z.; Sun, S.; Wan, J.; Li, W. Scale-free heterogeneous cycleGAN for defogging from a single image for autonomous driving in fog. Neural Comput. Appl. 2021, 35, 3737–3751. [Google Scholar] [CrossRef]

- Wan, J.; Liu, J.; Zhou, J.; Lai, Z.; Shen, L.; Sun, H.; Xiong, P.; Min, W. Precise Facial Landmark Detection by Reference Heatmap Transformer. IEEE Trans. Image Process. 2023, 32, 1966–1977. [Google Scholar] [CrossRef]

- Wang, H.; Dan, O.; Verbeek, J.; Schmid, C. A Robust and Efficient Video Representation for Action Recognition. Int. J. Comput. Vis. 2016, 119, 219–238. [Google Scholar] [CrossRef] [Green Version]

- Xu, Z.; Hu, R.; Chen, J.; Chen, H.; Li, H. Global Contrast Based Salient Region Boundary Sampling for Action Recognition. In Proceedings of the 22nd International Conference on MultiMedia Modeling, Miami, FL, USA, 4–6 January 2016; pp. 187–198. [Google Scholar]

- Singh, A.; Chakraborty, O.; Varshney, A.; Panda, R.; Feris, R.; Saenko, K.; Das, A. Semi-supervised action recognition with temporal contrastive learning. In Proceedings of the 2021 IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 10384–10394. [Google Scholar]

- Wang, L.; Xiong, Y.; Wang, Z.; Qiao, Y.; Lin, D.; Tang, X.; Van Gool, L. Temporal segment networks: Towards good practices for deep action recognition. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 20–36. [Google Scholar]

- Xu, Z.; Hu, R.; Chen, J.; Chen, C.; Chen, H.; Li, H.; Sun, Q. Action recognition by saliency-based dense sampling. Neurocomputing 2017, 236, 82–92. [Google Scholar] [CrossRef]

- Wang, S.; Yang, Y.; Ma, Z.; Li, X.; Pang, C.; Hauptmann, A.G. Action recognition by exploring data distribution and feature correlation. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; IEEE: New York, NY, USA, 2012; pp. 1370–1377. [Google Scholar]

- Wang, S.; Ma, Z.; Yang, Y.; Li, X.; Pang, C.; Hauptmann, A.G. Semi-supervised multiple feature analysis for action recognition. IEEE Trans. Multimed. 2014, 16, 289–298. [Google Scholar] [CrossRef]

- Luo, M.; Chang, X.; Nie, L.; Yang, Y.; Hauptmann, A.G.; Zheng, Q. An Adaptive Semisupervised Feature Analysis for Video Semantic Recognition. IEEE Trans. Cybern. 2018, 48, 648–660. [Google Scholar] [CrossRef] [PubMed]

- Xu, Z.; Hu, R.; Chen, J.; Chen, C.; Jiang, J.; Li, J.; Li, H. Semisupervised discriminant multimanifold analysis for action recognition. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 2951–2962. [Google Scholar] [CrossRef]

- Sultani, W.; Chen, C.; Shah, M. Real-world anomaly detection in surveillance videos. In Proceedings of the 2018 IEEE conference on computer vision and pattern recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6479–6488. [Google Scholar]

- Xu, Y.; Yang, J.; Cao, H.; Mao, K.; Yin, J.; See, S. Arid: A new dataset for recognizing action in the dark. In Proceedings of the International Workshop on Deep Learning for Human Activity Recognition, Kyoto, Japan, 8 January 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 70–84. [Google Scholar]

- Chang, X.; Yang, Y. Semisupervised Feature Analysis by Mining Correlations Among Multiple Tasks. IEEE Trans. Neural Netw. Learn. Syst. 2017, 28, 2294–2305.

- Sigurdsson, G.A.; Russakovsky, O.; Gupta, A. What Actions are Needed for Understanding Human Actions in Videos? In Proceedings of the 2017 IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2156–2165.

- Wang, X.; Zhu, L.; Wang, H.; Yang, Y. Interactive Prototype Learning for Egocentric Action Recognition. In Proceedings of the 2021 IEEE International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 8148–8157.

- Ma, Y.; Wang, Y.; Wu, Y.; Lyu, Z.; Chen, S.; Li, X.; Qiao, Y. Visual Knowledge Graph for Human Action Reasoning in Videos. In Proceedings of the 30th ACM International Conference on Multimedia, Lisbon, Portugal, 10–14 October 2022; pp. 4132–4141.

- Aktı, Ş.; Tataroğlu, G.A.; Ekenel, H.K. Vision-based fight detection from surveillance cameras. In Proceedings of the 2019 Ninth International Conference on Image Processing Theory, Tools and Applications, Istanbul, Turkey, 6–9 November 2019; IEEE: New York, NY, USA, 2019; pp. 1–6.

- Liu, H.; Li, X. Modified subspace Barzilai-Borwein gradient method for non-negative matrix factorization. Comput. Optim. Appl. 2013, 55, 173–196.

- Barzilai, J.; Borwein, J.M. Two-point step size gradient methods. IMA J. Numer. Anal. 1988, 8, 141–148.

- Harandi, M.T.; Sanderson, C.; Shirazi, S.; Lovell, B.C. Kernel analysis on Grassmann manifolds for action recognition. Pattern Recognit. Lett. 2013, 34, 1906–1915.

- Xiao, J.; Jing, L.; Zhang, L.; He, J.; She, Q.; Zhou, Z.; Yuille, A.; Li, Y. Learning from Temporal Gradient for Semi-supervised Action Recognition. In Proceedings of the 2022 IEEE Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 3242–3252.

- Xu, Y.; Wei, F.; Sun, X.; Yang, C.; Shen, Y.; Dai, B.; Zhou, B.; Lin, S. Cross-model pseudo-labeling for semi-supervised action recognition. In Proceedings of the 2022 IEEE Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 2959–2968.

- Si, C.; Nie, X.; Wang, W.; Wang, L.; Tan, T.; Feng, J. Adversarial self-supervised learning for semi-supervised 3D action recognition. In Proceedings of the 16th European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020.

- Kumar, A.; Rawat, Y.S. End-to-end semi-supervised learning for video action detection. In Proceedings of the 2022 IEEE Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 14700–14710.

- Bi, Y.; Bai, X.; Jin, T.; Guo, S. Multiple feature analysis for infrared small target detection. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1333–1337.

- Shahroudy, A.; Ng, T.T.; Gong, Y.; Wang, G. Deep multimodal feature analysis for action recognition in RGB+D videos. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 1045–1058.

- Khaire, U.M.; Dhanalakshmi, R. Stability of feature selection algorithm: A review. J. King Saud Univ. Comput. Inf. Sci. 2022, 34, 1060–1073.

- Huynh-The, T.; Hua, C.H.; Ngo, T.T.; Kim, D.S. Image representation of pose-transition feature for 3D skeleton-based action recognition. Inf. Sci. 2020, 513, 112–126.

- Harandi, M.T.; Sanderson, C.; Shirazi, S.; Lovell, B.C. Graph embedding discriminant analysis on Grassmannian manifolds for improved image set matching. In Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; pp. 2705–2712.

- Yan, Y.; Ricci, E.; Subramanian, R.; Liu, G.; Sebe, N. Multitask linear discriminant analysis for view invariant action recognition. IEEE Trans. Image Process. 2014, 23, 5599–5611.

- Jiang, J.; Hu, R.; Wang, Z.; Cai, Z. CDMMA: Coupled discriminant multi-manifold analysis for matching low-resolution face images. Signal Process. 2016, 124, 162–172.

- Markovitz, A.; Sharir, G.; Friedman, I.; Zelnik-Manor, L.; Avidan, S. Graph embedded pose clustering for anomaly detection. In Proceedings of the 2020 IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10536–10544.

- Manessi, F.; Rozza, A.; Manzo, M. Dynamic graph convolutional networks. Pattern Recognit. 2020, 97, 107000.

- Cai, J.; Fan, J.; Guo, W.; Wang, S.; Zhang, Y.; Zhang, Z. Efficient deep embedded subspace clustering. In Proceedings of the 2022 IEEE Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 1–10.

- Islam, A.; Radke, R. Weakly supervised temporal action localization using deep metric learning. In Proceedings of the 2020 IEEE Winter Conference on Applications of Computer Vision, Snowmass, CO, USA, 1–5 March 2020; pp. 547–556.

- Ruan, Y.; Xiao, Y.; Hao, Z.; Liu, B. A nearest-neighbor search model for distance metric learning. Inf. Sci. 2021, 552, 261–277.

- Rahimi, S.; Aghagolzadeh, A.; Ezoji, M. Human action recognition based on the Grassmann multi-graph embedding. Signal Image Video Process. 2019, 13, 271–279.

- Yu, J.; Kim, D.Y.; Yoon, Y.; Jeon, M. Action matching network: Open-set action recognition using spatio-temporal representation matching. Vis. Comput. 2020, 36, 1457–1471.

- Peng, W.; Shi, J.; Zhao, G. Spatial temporal graph deconvolutional network for skeleton-based human action recognition. IEEE Signal Process. Lett. 2021, 28, 244–248.

- Fung, G.M.; Mangasarian, O.L. Multicategory Proximal Support Vector Machine Classifiers. Mach. Learn. 2005, 59, 77–97.

- Yang, Y.; Wu, F.; Nie, F.; Shen, H.T.; Zhuang, Y.; Hauptmann, A.G. Web and Personal Image Annotation by Mining Label Correlation With Relaxed Visual Graph Embedding. IEEE Trans. Image Process. 2012, 21, 1339–1351.

- Jhuang, H.; Gall, J.; Zuffi, S.; Schmid, C.; Black, M.J. Towards Understanding Action Recognition. In Proceedings of the 2013 IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 3192–3199.

- Kuehne, H.; Jhuang, H.; Garrote, E.; Poggio, T.; Serre, T. HMDB: A Large Video Database for Human Motion Recognition. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2556–2563.

- Soomro, K.; Zamir, A.R.; Shah, M. UCF101: A Dataset of 101 Human Actions Classes From Videos in The Wild. arXiv 2012, arXiv:1212.0402.

- Ma, Z.; Nie, F.; Yang, Y.; Uijlings, J.R.R.; Sebe, N. Web Image Annotation Via Subspace-Sparsity Collaborated Feature Selection. IEEE Trans. Multimed. 2012, 14, 1021–1030.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).