Abstract

Three-dimensional object detection plays a crucial role in achieving accurate and reliable autonomous driving systems. However, the current state-of-the-art two-stage detectors lack flexibility and have limited feature extraction capabilities to effectively handle the disorder and irregularity of point clouds. In this paper, we propose a novel network called FANet, which combines the strengths of PV-RCNN and PAConv (position adaptive convolution). The goal of FANet is to address the irregularity and disorder present in point clouds. In our network, the convolution operation constructs convolutional kernels using a basic weight matrix, and the coefficients of these kernels are adaptively learned by LearnNet from relative points. This approach allows for the flexible modeling of complex spatial variations and geometric structures in 3D point clouds, leading to the improved extraction of point cloud features and generation of high-quality 3D proposal boxes. Compared to other methods, extensive experiments on the KITTI dataset have demonstrated that the FANet exhibits superior 3D object detection accuracy, showcasing a significant improvement in our approach.

1. Introduction

Point cloud data constitute a significant modality of three-dimensional (3D) scene information, encompassing a richer set of attributes, including but not limited to dimensions (length, width, and height), velocity, and reflection angles, when compared to images. The utilization of point cloud detection holds immense potential across diverse domains, including autonomous driving, robot navigation, and virtual reality [,]. Presently, the development of three-dimensional object detection algorithms that leverage point cloud data has emerged as a focal point within the research community. Nevertheless, the accurate identification of three-dimensional objects continues to pose a substantial challenge owing to the inherent irregularity and disorderliness inherent in point cloud data.

Researchers have proposed a number of solutions for 3D object detection, which can be broadly classified into two categories [], namely voxel-based [] approaches and point-based [] approaches. The voxel-based approach converts irregular point cloud data into a regular voxel network, encodes the data points falling within the voxel and extracts features through deep learning, and uses 3D convolutional neural networks or voxel-based deep learning to detect objects. This approach can achieve excellent detection accuracy, but inevitably results in causing a loss of information and reduced fine-grained localization accuracy, which leads to reduced accuracy in object recognition. Point-based methods can easily achieve larger perceptual fields through point set abstraction, but are computationally more expensive. In addition, some researchers have recently designed convolutional kernels that deal directly with point clouds, which can be used for feature extraction and help in the subsequent generation of 3D proposal boxes. However, in practice, the lack of flexibility of the convolution kernel has led to a lack of accuracy.

In this paper, we present FANet, a highly accurate 3D object detector that incorporates key elements from PAConv and PV-RCNN for enhanced performance. The convolution operation of our network constructs kernels based on a basic weight matrix, with adaptive combination coefficients learned by the LearnNet from relative points. This enables FANet to capture multi-scale feature information including voxel semantics and original point locations. Consequently, our network excels in handling irregular and disordered point clouds, boasting robust feature extraction capabilities and the ability to accurately generate 3D proposal boxes.

2. Related Work

In terms of three-dimensional object detection with voxel-based methods [,,,,,,], VoxelNet [] divides point clouds into a certain number of voxels according to the ratio of length × width × height and divides points in point cloud space into corresponding voxels of location. It uses several individual feature coding layers for local feature extraction for each non-empty voxel. However, ignoring the sparse distribution of point cloud data in space wastes a lot of computation. Yan [] introduced new angular loss regression by improving the sparse convolution method, which reduces the disadvantages of voxel-based network models in terms of irregularity and disorder. Based on SECOND, SA-SSD [] to preserve structure information is proposed. Dense voxel fusion [] is a sequential fusion method that generates multi-scale dense voxel feature representations, improving the expressiveness in low-point-density regions. CenterPoint [] uses key point detectors and point feature regression to represent, detect, and track 3D objects. Based on a framework of dynamic graphs, DGCNN [] uses key point detectors on point clouds and point feature regression to represent and detect 3D objects and proposes an ensemble-to-set knowledge distillation method applicable to 3D detection. This method produces more efficient 3D proposal boxes; however, important geometric information may be lost due to quantization [].

In terms of three-dimensional object detection with point-based approach [,,], Qi designed early work called PointNet [] based on learning point cloud representations, which is simple and effective. Many subsequent point-based 3D object detection networks have been developed based on PointNet. Transform operations and maximum pooling in PointNet can effectively extract the global features of points, but local features cannot be obtained. PointNet++ [] proposed sampling and grouping to improve local feature extraction for PointNet. In order to further reduce the inference time, 3D-SSD [] uses only the backbone for downsampling feature extraction to complete the detection task. SE-SSD [] includes a pair of teacher and student SSDs, contains an efficient IoU-based matching strategy to filter the teacher’s soft targets, and formulates a consistency loss to align the student’s predictions with it. Pointformer [] uses local and global transformer modules to model dependencies between points. 3D-CenterNet [] can preferentially estimate the center of an object from a point cloud. This approach provides flexible receptive fields for point cloud feature learning [], however, which makes it difficult to express the complex variability in the point cloud space [].

PV-RCNN [], which is a two-stage 3D point cloud object detection method, mainly adopts a two-step strategy of point–voxel feature aggregation for accurate 3D object detection. This combines the advantages of both point-based and voxel-based methods to obtain multi-scale feature information, including multi-scale voxel semantic information and the location information of points. The voxel set abstraction module in PV-RCNN draws upon the concept in PointNet++, which is theoretically successful, but in practice has also traded off its design flexibility for effectiveness [], resulting in poor object detection accuracy [].

PAConv [] builds a convolution kernel from a basic weight matrix, whose combined coefficients are adaptively learned by LearnNet from relative points. PAConv is flexible enough to accurately model complex spatial variations and geometric structures in 3D point clouds. However, PAConv itself does not have object detection capability. Therefore, this paper forms a new 3D object detector by embedding PAConv into the PointNet++ module. The detector offers not only point-based and voxel-based advantages, such as its provision of flexible acceptance domains and efficient 3D proposal boxes, but also has good object detection accuracy.

3. Methods

Currently, the convolution used by 3D object detection in feature extraction is static []. In practical applications, this method often reduces the model accuracy and expressiveness ability due to insufficient computational resources []. This paper combines dynamic convolution to process irregular and disordered point cloud data through higher flexibility, which enables higher accuracy of 3D object detectors.

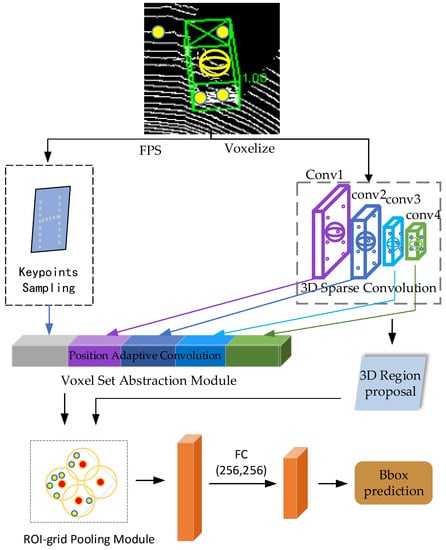

Considering the existence of the above problems, this paper proposes a high-accuracy 3D object detector that can solve the above problems through dynamic convolution. In the 3D space of the point cloud, the relationship between points is complex. PAC can adapt the selection of the convolution kernel to the changes around it, and improve the accuracy of the 3D object detector. The overall framework of FANet is shown in Figure 1. This network incorporates PAConv into the Voxel Set Abstraction module, which aggregates features from 3D sparse convolutional neural networks and keypoints collected through furthest point sampling, and encodes them together. This, combined with the high-quality proposals generated through voxel generation, results in the generation of highly accurate 3D proposal boxes.

Figure 1.

The overall framework of the FANet.

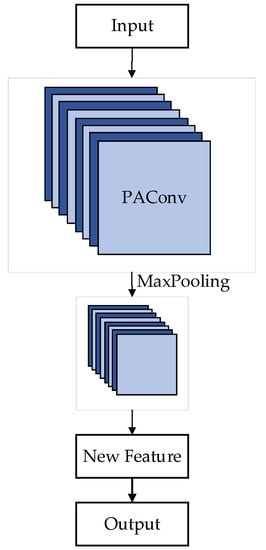

The voxel set abstraction module of PV-RCNN is capable of learning features directly from point cloud keypoints and voxels generated by the 3D sparse neural network, exhibiting a multi-level feature extraction structure. In this paper, we embed PAConv within the voxel set abstraction module to generate a dynamic network, as shown in Figure 2. In the dynamic network, dynamic network encoding is performed on each group of local neighborhoods, where the sampled and grouped points are assembled into dynamic kernels as inputs to PAConv, thereby increasing the feature dimensionality for each point to capture more informative features. Subsequently, the most significant features are selected as the new feature output through max pooling. In this process, the coordinate space dimension does not change, but the feature space dimension becomes higher, in other words, the number of feature channels increases, allowing more feature information to be obtained and facilitating subsequent accurate feature extraction.

Figure 2.

Dynamic network.

On the one hand, this network generates the gridded features from the original point cloud with 3D sparse convolution, and projects the downsampled 8× feature map to birds-eye view (bew), generating 3D prediction frames, two prediction frames for each pixel and each class, 0° and 90°, respectively. The keypoints are sampled uniformly from the surrounding area of the proposal via sectorized proposal-centric keypoint sampling (spc). On the other hand, this network determines the nearest neighbors under one radius of each grid point, and then a PointNet++ module embedded in PAConv is used to integrate the features into the features of the grid points. This particular PointNet++ module has more flexibility to better handle irregular and disordered point cloud data. After the point features of all grid points are obtained by means of multi-scale feature fusion, a 256-dimensional proposal feature is obtained through the use of a two-layer multi-layer perceptron (MLP). The above feature information is fused with multi-scale features by the voxel set abstraction module to obtain new multi-scale features. The new features are refined to derive a more accurate 3D prediction box.

Position Adaptive Convolution Embedded Network

In the 3D space, the relationship between points is very different from the relationship between points in the 2D plane. In the 2D plane space, the features learned by using the convolutional neural network can reflect the correlation between points well, but in the 3D space, due to the disorder and irregularity of points, using the convolutional kernel operator to learn features in the 2D space will make the correlation poor, leading to inaccurate detection.

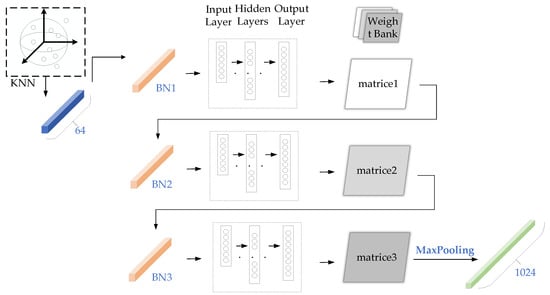

Therefore, we redesigned the convolutional kernel function to enable it to learn point features dynamically. As shown in Figure 3, we first defined a weight library consisting of several weight matrices. Next, we designed a vector of LearnNet learning coefficients to combine the weight matrices based on the location of the points. Finally, we generated dynamic kernels by combining the weight matrices and their associated positional adaptation coefficients. In this way, the kernel is constructed in a data-driven manner, giving our approach greater flexibility than 2D convolution to better handle irregular and disordered point cloud data. In addition, we make the learning process less complex by combining weight matrices rather than forcing the kernels to be predicted from the location of the points.

Figure 3.

The framework of position adaptive convolution.

Firstly, a weight bank consisting of several weight matrices is defined. Then, the LearnNet is designed. The weighting matrix is combined according to the location of the points. Finally, a dynamic kernel is generated by combining the weighting matrix and its related location adaptive coefficients. Where the weight bank W is defined as Formula (1), is the weight matrix, CP is the input channel, and Cq is the output channel. T is the number of weight matrices controlling the weights stored in the weight repository W. The larger the value of T, the more diverse the weight matrix assembled by the kernel. However, too many weight matrices can cause redundancy and heavy memory and computing overheads. Experiments [] have shown that optimal accuracy can be achieved when T is 8. The next step is to create a mapping of the discrete kernel of the weight matrix to the continuous 3D space. We use LearnNet to learn coefficients based on the location relationships of the points to combine the weight matrix and produce a dynamic kernel that fits the point cloud. The input to LearnNet is a specific positional relationship between the center point and the neighboring point of a local region in the point cloud; LearnNet predicts the location adaptive coefficient of each weight matrix WT, and the expression of the point location relationship P is as in Formula (2).

Expression of the output vectors Ai,j of LearnNet:

In Formula (3), δ is a non-linear function implemented for use with a multi-layer sensor; Relu is the activation function; Softmax is a normalization function; and the score of the Softmax output is in the (0,1) range. This normalization ensures that each weight matrix is selected with probability, with higher scores indicating stronger relationships between the position input and the weight matrix. The higher the score, the stronger the relationship between the location input and the weight matrix. PAConv’s dynamic kernel K combines the weight matrix in W with the input characteristic Fp, and then multiplies it by the corresponding coefficient Ai,J of the LearnNet prediction to obtain the dynamic kernel K, which is seen in Formula (5):

With the dynamic kernel, the generated adaptive dynamic convolution can learn features more flexibly; so, the larger the size of the weight bank, the greater the flexibility and availability of the weight matrix. This kernel assembly strategy allows for the flexible modeling of irregular geometries of point clouds.

Weight matrices are randomly initialized and may converge to similar matrices, which does not guarantee diversity in the weight matrices. Therefore, to avoid this situation, this paper penalizes the correlation between different matrices by a weight regularization function, which ensures diversity in weights, making the generated kernels also diverse, which is defined as follows:

This approach allows the network to be more flexible in learning features from point clouds, to represent the complex spatial variation in point clouds with relative accuracy, and to have sufficient point cloud geometry information to enable more accurate object detection, resulting in a significant improvement in object detection accuracy over the original method.

4. Experimental Results and Analysis

This experiment was completed using the Ubuntu 20.04 operating system. The experimental environment consisted of an IntelI CoITM) i7-11700H for the central processor (CPU), NVIDIA GeForce RTX 3070 laptop, PyTorch version 1.8.1, and Python version 3.9.

4.1. Dataset

Currently, the KITTI [] dataset, Waymo [] dataset, and nuScenes dataset are commonly used for 3D object detection. The KITTI dataset is one of the most important test sets in the field of auto-driving. It plays an important role in 3D object detection. Therefore, this paper chose the KITTI 3D point cloud dataset as the training and test set for the experiment.

The KITTI dataset contains 7481 training samples and 7518 test samples. The dataset consists of four parts: Calib, Image, Label, and Velodyne. Calib is the camera calibration file; Image is a two-dimensional image data file; and Label is a data label file (there is no label in the test set). There are three types of objects labeled by the data: car, cyclist, and pedestrian. Velodyne is a point cloud file. For efficiency reasons, Velodyne scans are stored as floating-point binary files, with each point stored in its (x, y, z) coordinates and additional reflectance value R.

This network model uses, in order, 80% of the training sample data to train the model and the remaining 20% for validation [].

4.2. Evaluation Metrics

In this paper, the above-mentioned optimized network was accurately compared by analyzing Precision, Recall, AP (average precision), etc. The formulae are as follows.

where TP, TF, and FN denote true positive, false positive, and false negative, respectively. AP and mAP are commonly used as evaluation metrics in the field of object detection, reflecting the overall performance of the model. The value of AP is calculated from the area formed between the Precision, Recall, and the horizontal and vertical axes. The value of mAP represents the average of all of the AP values, which is shown in the formula.

4.3. Data and Analysis

To verify the effectiveness of the algorithm, the PV-RCNN before and after optimization will run in the same environment. Compare mean average precision with other advanced methods.

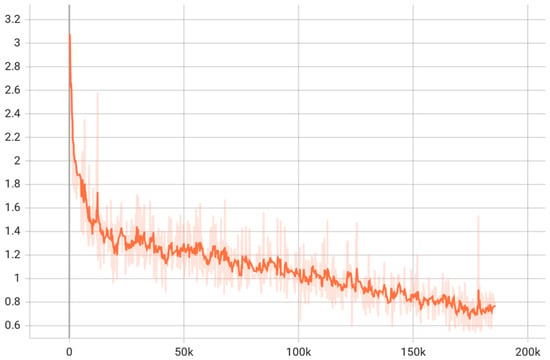

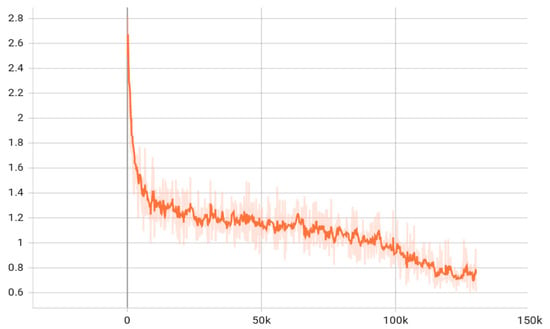

According to Figure 4 and Figure 5, FANet has faster network convergence than PV-RCNN. FANet only needs a limited number of iterations to achieve good results, while PV-RCNN needs more iterations to achieve the same effect as FANet.

Figure 4.

Loss of PC-RCNN.

Figure 5.

Loss of FANet.

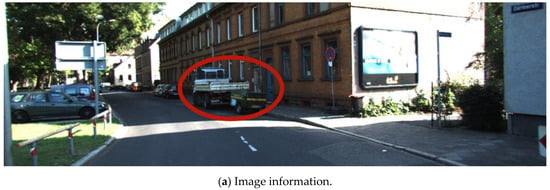

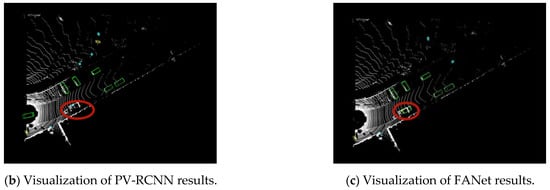

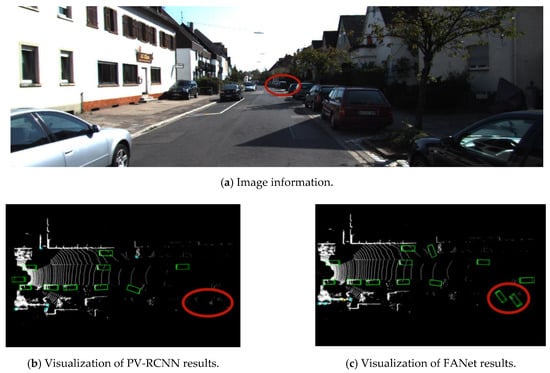

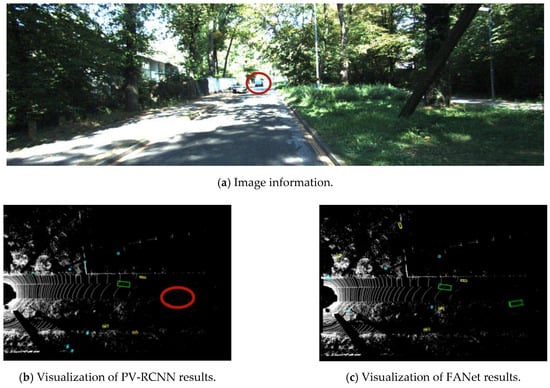

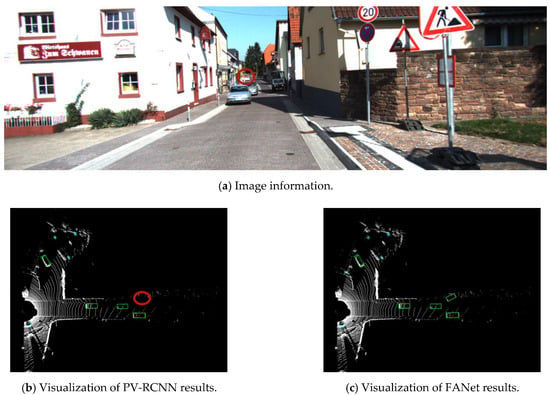

In this paper, to show that our method has higher accuracy than PV-RCNN, we selected five random sets of images for comparison. The (a) of each set of images is the camera photo, the (b) of each set of images is the result of the point cloud information identified using PV-RCNN, and the (c) of each set of images is the result of the point cloud information identified using FANet. We used the image information to determine which method was better and more accurate for object detection.

As shown in Figure 6, according to the image information, FANet can recognize the truck on the front right, while PV-RCNN cannot accurately recognize it.

Figure 6.

Scene 1.

As shown in Figure 7, according to the image information, FANet can recognize the car in front, while PV-RCNN cannot accurately recognize it.

Figure 7.

Scene 2.

As shown in Figure 8, according to the image information, FANet can recognize the distant car on the front right, while PV-RCNN cannot accurately recognize it.

Figure 8.

Scene 3.

As shown in Figure 9, according to the image information, FANet can recognize the distant car in front, while PV-RCNN cannot accurately recognize it.

Figure 9.

Scene 4.

As shown in Figure 10, according to the image information, FANet can recognize the distant car in front, while PV-RCNN cannot accurately recognize it.

Figure 10.

Scene 5.

In the above five sets of scenes, the image information provided strong evidence that FANet outperforms PV-RCNN in terms of object detection accuracy. Specifically, FANet is able to identify objects in a variety of scenes, demonstrating its superior ability to detect objects in complex and distant scenes. In contrast, PV-RCNN was unable to accurately identify objects in these distant scenes. When we consider both the camera photos and the point cloud data, it becomes even more apparent that FANet is a more accurate 3D object detector than PV-RCNN. The point cloud data allow our method to extract key features from the scene, and our approach enables us to effectively deal with point cloud irregularity and disorder. These features, combined with the ability to accurately recognize objects in complex scenes, lead to significantly improved accuracy compared to PV-RCNN.

In this paper, we recorded the average accuracy of each type of object recognition with an IoU threshold of 0.7 (car) and 0.5 (pedestrian and cyclist). Table 1, Table 2 and Table 3 show the comparison between the object detection effect of the PV-RCNN network model before and after optimization for car, pedestrian, and cyclist. Table 4 shows how our method compares with state-of-the-art methods with respect to mAP.

Table 1.

List of symbols.

Table 2.

Comparison between car class recognition accuracy of PV-RCNN and FANet.

Table 3.

Comparison between pedestrian class recognition accuracy of PV-RCNN and FANet.

Table 4.

Comparison between cyclist class recognition accuracy of PV-RCNN and FANet.

Table 2, Table 3 and Table 4 display the experimental results of the FANet model on the KITTI validation dataset. The accuracy improvements achieved using FANet are noteworthy, with almost all of the projects surpassing the state-of-the-art PV-RCNN method. In particular, the average accuracy of the car class improved by 3.05%, demonstrating the effectiveness of the proposed new network in improving the target detection accuracy. The pedestrian class showed the highest increase, with a maximum improvement of 1.76% in terms of average precision. Similarly, the average precision of the cyclist class increased by up to 3.07%. Although PV-RCNN has a slight advantage in pedestrian and cyclist BEV detection, the proposed FANet model demonstrates superior overall performance in terms of accuracy improvement. These results validate the effectiveness of the proposed PAConv module in enhancing the performance of 3D object detection models.

Table 5 illustrates the evaluation of our proposed method on the KITTI benchmark dataset, providing a comprehensive comparison with state-of-the-art techniques, including PV-RCNN++ [], a novel extension of PV-RCNN introduced by Shi. The results highlight the exceptional performance of our method across all three difficulty levels: easy, moderate, and hard. Our method consistently outperformed PV-RCNN, showcasing a notable accuracy improvement of up to 2.49%. Furthermore, when compared to PV-RCNN++, our method still exhibited superior performance, underscoring its distinct advantage in achieving high precision and recall rates for 3D object detection. Additionally, our method secured first place in the easy and moderate levels, as well as a commendable second place in the hard level among the evaluated methods. It is worth noting that the performance gap between our method and the first-place approach in the hard level was a mere 0.64%, indicating a negligible difference. These comparative findings reinforce the exceptional competitiveness of our proposed approach. The comprehensive evaluation and comparison with existing techniques demonstrate that our approach operates at a level on par with or even surpassing the current state-of-the-art methods. In conclusion, the evaluation results provide strong evidence of the effectiveness of our proposed method, validating its credibility and establishing its superiority over PV-RCNN.

Table 5.

mAP comparison between the accuracy of different algorithms on the KITTI dataset. Results are evaluated according to average accuracy and 40 recall positions.

5. Conclusions

In this paper, we propose a high-precision 3D object detector, FANet, which combines the advantages of both PV-RCNN and PAConv.

FANet is an advanced 3D object detection method that improves accuracy by dynamically adjusting the weights of points according to their relative positions in a point cloud. FANet combines an adaptive learning mechanism with a region proposal network and a point–voxel feature encoding module. An experimental evaluation of the KITTI dataset shows that FANet outperforms PV-RCNN, with an average accuracy improvement of 3.07% for cyclists, 3.05% for cars, and 1.76% for pedestrians. In addition, FANet outperforms other state-of-the-art methods, affirming its competitiveness and effectiveness in 3D object detection. FANet significantly improves accuracy and demonstrates the potential to integrate multiple existing methods to further advance the field.

While the FANet method shows a significant improvement in 3D object detection accuracy, it still has limitations in certain challenging scenarios, such as adverse weather conditions like rain and fog. To address this issue, we plan to optimize the network for such scenarios in future work.

Author Contributions

Conceptualization, F.Z. and J.Y.; methodology, F.Z. and J.Y.; software, J.Y. and Y.Q.; validation, F.Z. and J.Y.; formal analysis, F.Z. and J.Y.; investigation, Y.Q.; data curation, F.Z.; funding acquisition, F.Z. and J.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Xia, X.; Meng, Z.; Han, X.; Li, H.; Tsukiji, T.; Xu, R.; Zheng, Z.; Ma, J.J. An automated driving systems data acquisition and analytics platform. Transp. Res. Part C Emerg. Technol. 2023, 151, 104120. [Google Scholar] [CrossRef]

- Meng, Z.; Xia, X.; Xu, R.; Liu, W.; Ma, J.J. HYDRO-3D: Hybrid Object Detection and Tracking for Cooperative Perception Using 3D LiDAR. IEEE Trans. Intell. Veh. 2023, 1–13. [Google Scholar] [CrossRef]

- Shi, S.; Jiang, L.; Deng, J.; Wang, Z.; Guo, C.; Shi, J.; Wang, X.; Li, H.J. PV-RCNN++: Point-voxel feature set abstraction with local vector representation for 3D object detection. Vis. Comput. 2021, 131, 531–551. [Google Scholar] [CrossRef]

- Li, B. 3D Fully Convolutional Network for Vehicle Detection in Point Cloud. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 1513–1518. [Google Scholar]

- Qi, C.R.; Su, H.; Mo, K.C.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the 30th IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 77–85. [Google Scholar]

- Engelcke, M.; Rao, D.; Wang, D.Z.; Tong, C.H.; Posner, I. Vote3deep: Fast object detection in 3d point clouds using efficient convolutional neural networks. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 1355–1361. [Google Scholar]

- Ye, Y.Y.; Chen, H.J.; Zhang, C.; Hao, X.L.; Zhang, Z.X. SARPNET: Shape attention regional proposal network for liDAR-based 3D object detection. Neurocomputing 2020, 379, 53–63. [Google Scholar] [CrossRef]

- Deng, J.; Shi, S.; Li, P.; Zhou, W.; Zhang, Y.; Li, H. Voxel r-cnn: Towards high performance voxel-based 3d object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 2–9 February 2021; pp. 1201–1209. [Google Scholar]

- Yang, Z.T.; Sun, Y.A.; Liu, S.; Shen, X.Y.; Jia, J.Y. STD: Sparse-to-Dense 3D Object Detector for Point Cloud. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1951–1960. [Google Scholar]

- Mahmoud, A.; Hu, J.S.; Waslander, S.L. Dense voxel fusion for 3D object detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 4–6 January 2023; pp. 663–672. [Google Scholar]

- Li, Y.; Chen, Y.; Qi, X.; Li, Z.; Sun, J.; Jia, J.J. Unifying voxel-based representation with transformer for 3d object detection. arXiv 2022, arXiv:2206.00630. [Google Scholar] [CrossRef]

- Zhou, Y.; Tuzel, O. VoxelNet: End-to-End Learning for Point Cloud Based 3D Object Detection. In Proceedings of the 31st IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 4490–4499. [Google Scholar]

- Yan, Y.; Mao, Y.X.; Li, B. SECOND: Sparsely Embedded Convolutional Detection. Sensors 2018, 18, 3337. [Google Scholar] [CrossRef] [PubMed]

- Structure Aware Single-Stage 3D Object Detection from Point Cloud. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–18 June 2020; pp. 11870–11879. [CrossRef]

- Yin, T.; Zhou, X.; Krahenbuhl, P. Center-based 3d object detection and tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 11784–11793. [Google Scholar]

- Wang, Y.; Solomon, J.M. Object dgcnn: 3d object detection using dynamic graphs. Adv. Neural Inf. Process. Syst. 2021, 34, 20745–20758. [Google Scholar]

- Mao, J.; Xue, Y.; Niu, M.; Bai, H.; Feng, J.; Liang, X.; Xu, H.; Xu, C. Voxel Transformer for 3D Object Detection. In Proceedings of the 18th IEEE/CVF International Conference on Computer Vision (ICCV), Electric Network, Montreal, BC, Canada, 11–17 October 2021; pp. 3144–3153. [Google Scholar]

- Chen, C.; Chen, Z.; Zhang, J.; Tao, D. SASA: Semantics-Augmented Set Abstraction for Point-based 3D Object Detection. Proc. Conf. AAAI Artif. Intell. 2022, 36, 221–229. [Google Scholar] [CrossRef]

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet plus plus: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. In Proceedings of the 31st Annual Conference on Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017. [Google Scholar]

- Yang, Z.; Sun, Y.; Liu, S.; Jia, J. 3dssd: Point-based 3d single stage object detector. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11040–11048. [Google Scholar]

- Zheng, W.; Tang, W.; Jiang, L.; Fu, C.-W. SE-SSD: Self-Ensembling Single-Stage Object Detector from Point Cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Electric Network, Nashville, TN, USA, 19–25 June 2021; pp. 14489–14498. [Google Scholar]

- Pan, X.; Xia, Z.; Song, S.; Li, L.E.; Huang, G. 3d object detection with pointformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7463–7472. [Google Scholar]

- Wang, Q.; Chen, J.; Deng, J.; Zhang, X.J. 3D-CenterNet: 3D object detection network for point clouds with center estimation priority. Pattern Recognit. 2021, 115, 107884. [Google Scholar] [CrossRef]

- Qi, C.R.; Liu, W.; Wu, C.; Su, H.; Guibas, L.J. Frustum pointnets for 3d object detection from rgb-d data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 918–927. [Google Scholar]

- Sheng, H.L.; Cai, S.J.; Liu, Y.; Deng, B.; Huang, J.Q.; Hua, X.S.; Zhao, M.J. Improving 3D Object Detection with Channel-wise Transformer. In Proceedings of the 18th IEEE/CVF International Conference on Computer Vision (ICCV), Electric Network, Montreal, BC, Canada, 11–17 October 2021; pp. 2723–2732. [Google Scholar]

- Shi, S.; Guo, C.; Jiang, L.; Wang, Z.; Shi, J.; Wang, X.; Li, H. Pv-rcnn: Point-voxel feature set abstraction for 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10529–10538. [Google Scholar]

- Shi, S.; Wang, Z.; Shi, J.; Wang, X.; Li, H. From Points to Parts: 3D Object Detection from Point Cloud with Part-Aware and Part-Aggregation Network. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 2647–2664. [Google Scholar] [CrossRef] [PubMed]

- Bhattacharyya, P.; Czarnecki, K.J. Deformable PV-RCNN: Improving 3D object detection with learned deformations. Int. J. Comput. Vis. 2022, 131, 531–551. [Google Scholar] [CrossRef]

- Xu, M.T.; Ding, R.Y.; Zhao, H.S.; Qi, X.J. PAConv: Position Adaptive Convolution with Dynamic Kernel Assembling on Point Clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Electric Network, Nashville, TN, USA, 19–25 June 2021; pp. 3172–3181. [Google Scholar]

- Thomas, H.; Qi, C.R.; Deschaud, J.-E.; Marcotegui, B.; Goulette, F.; Guibas, L.J. KPConv: Flexible and Deformable Convolution for Point Clouds. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6420–6429. [Google Scholar]

- Chen, Y.; Dai, X.; Liu, M.; Chen, D.; Yuan, L.; Liu, Z. Dynamic convolution: Attention over convolution kernels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11030–11039. [Google Scholar]

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R.J. Vision meets robotics: The kitti dataset. Int. J. Robot. Res. 2013, 32, 1231–1237. [Google Scholar] [CrossRef]

- Sun, P.; Kretzschmar, H.; Dotiwalla, X.; Chouard, A.; Patnaik, V.; Tsui, P.; Guo, J.; Zhou, Y.; Chai, Y.; Caine, B. Scalability in perception for autonomous driving: Waymo open dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2446–2454. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).