A 3D Estimation Method Using an Omnidirectional Camera and a Spherical Mirror

Abstract

1. Introduction

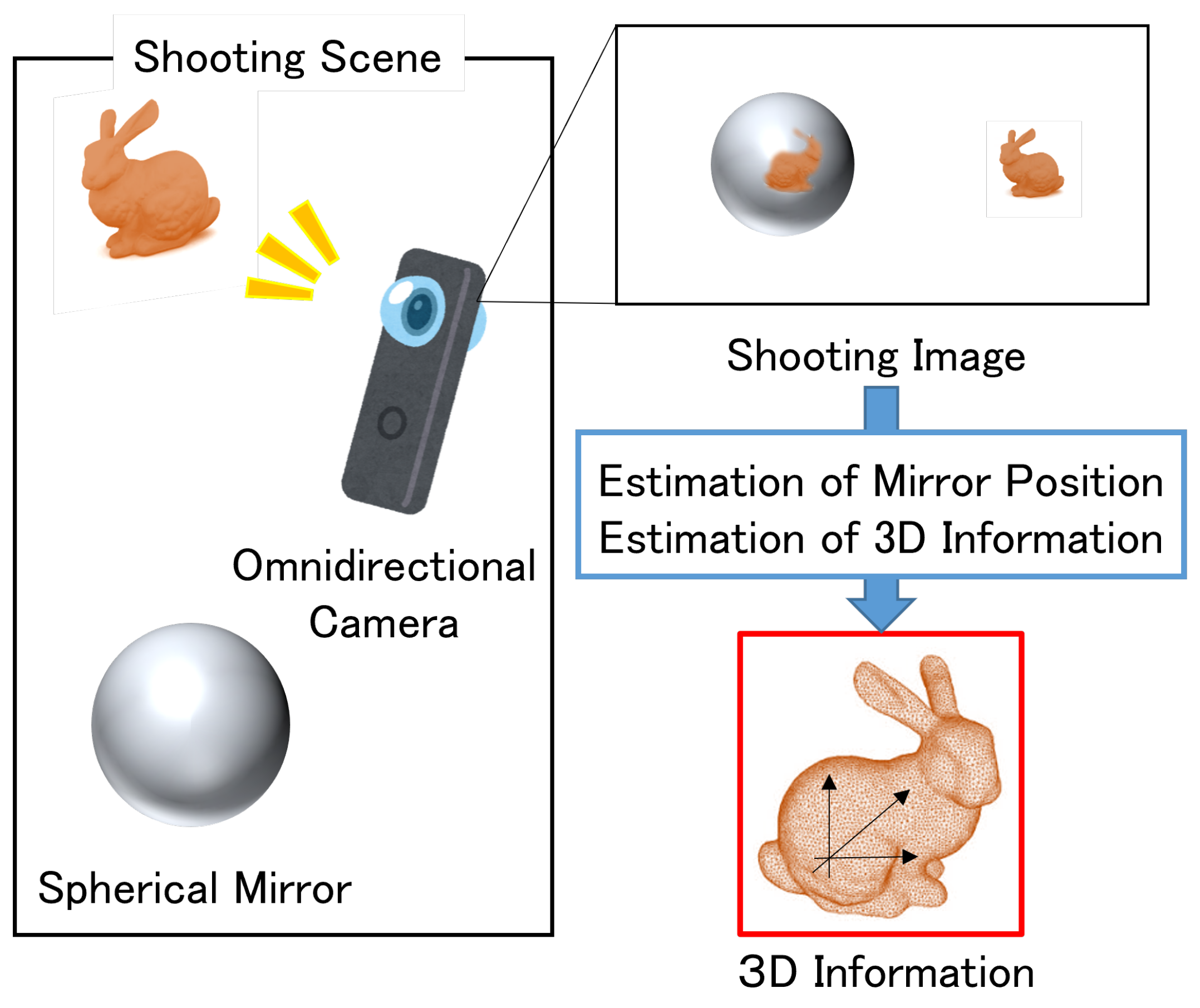

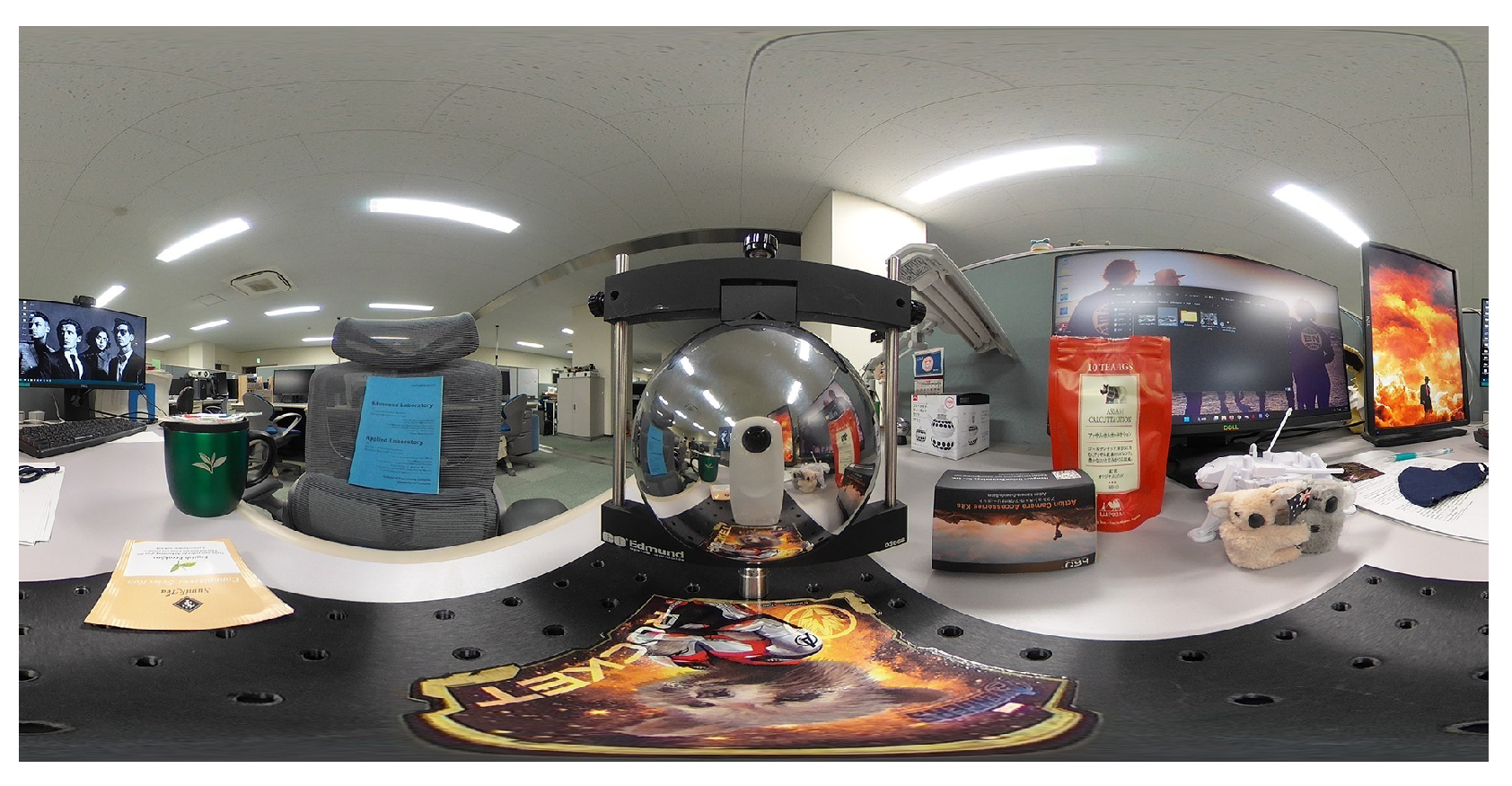

- A catadioptric imaging system that combines an omnidirectional camera equipped with a fisheye lens with a single spherical mirror.

- A compact 3D information estimation method for the scene shown in Figure 1 that works from a single shot and requires no prior knowledge of the scene.
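To make the geometry behind these two contributions concrete, the following is a minimal sketch and not the authors' implementation. It assumes the mirror's center and radius are already known in the camera frame, and that the same scene point is seen once directly and once via the mirror. The function names `reflect_off_sphere` and `triangulate_midpoint` are hypothetical helpers introduced only for illustration; the sketch uses nothing beyond the law of reflection and a standard closest-point triangulation of two rays.

```python
import math

def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def add(a, b): return tuple(x + y for x, y in zip(a, b))
def scale(a, s): return tuple(x * s for x in a)
def dot(a, b): return sum(x * y for x, y in zip(a, b))
def normalize(a): return scale(a, 1.0 / math.sqrt(dot(a, a)))

def reflect_off_sphere(origin, direction, center, radius):
    """Intersect a ray with a sphere and reflect it at the hit point.

    Returns (hit_point, reflected_direction), or None if the ray misses.
    Assumption: the ray origin lies outside the sphere.
    """
    d = normalize(direction)
    oc = sub(origin, center)
    b = dot(oc, d)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None                      # ray misses the mirror
    t = -b - math.sqrt(disc)             # nearest intersection along the ray
    if t <= 0.0:
        return None                      # mirror is behind the ray origin
    hit = add(origin, scale(d, t))
    n = scale(sub(hit, center), 1.0 / radius)    # outward unit normal
    r = sub(d, scale(n, 2.0 * dot(d, n)))        # law of reflection
    return hit, r

def triangulate_midpoint(p1, d1, p2, d2):
    """Midpoint of the shortest segment between two lines p + s*d.

    Returns None when the lines are (nearly) parallel.
    """
    d1, d2 = normalize(d1), normalize(d2)
    w = sub(p1, p2)
    b = dot(d1, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = 1.0 - b * b
    if denom < 1e-12:
        return None
    s = (b * e - d) / denom
    t = (e - b * d) / denom
    q1 = add(p1, scale(d1, s))
    q2 = add(p2, scale(d2, t))
    return scale(add(q1, q2), 0.5)

if __name__ == "__main__":
    camera = (0.0, 0.0, 0.0)
    mirror_center, mirror_radius = (0.0, 0.0, 5.0), 1.0   # assumed known
    hit, refl = reflect_off_sphere(camera, (0.1, 0.0, 1.0),
                                   mirror_center, mirror_radius)
    scene_point = add(hit, scale(refl, 3.0))              # synthetic target
    estimate = triangulate_midpoint(camera, scene_point, hit, refl)
    print(estimate, scene_point)
```

The direct ray and the mirror-reflected ray play the role of the two views in a stereo pair, so a single image can in principle yield depth. In practice the two rays rarely intersect exactly, which is why the sketch returns the midpoint of their closest approach.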

2. Related Works

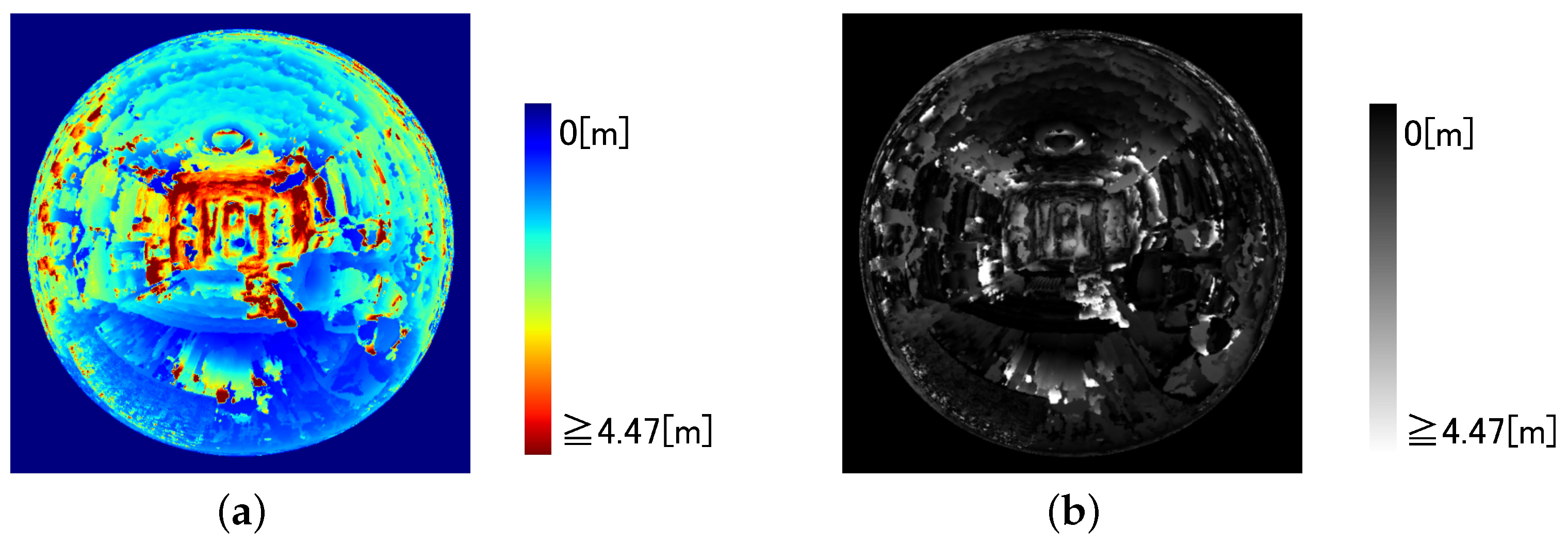

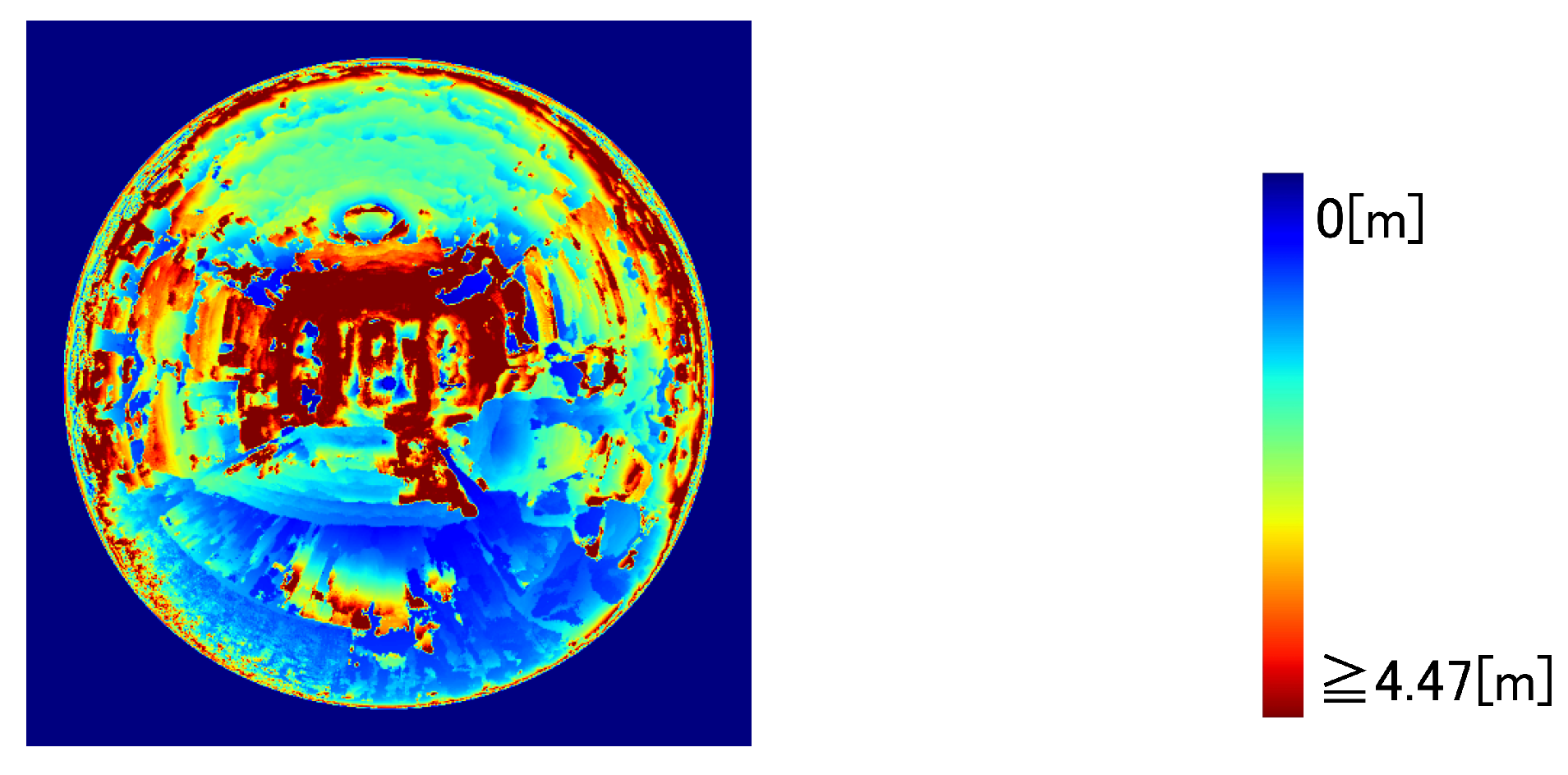

2.1. Projection Models for the Catadioptric Imaging Systems

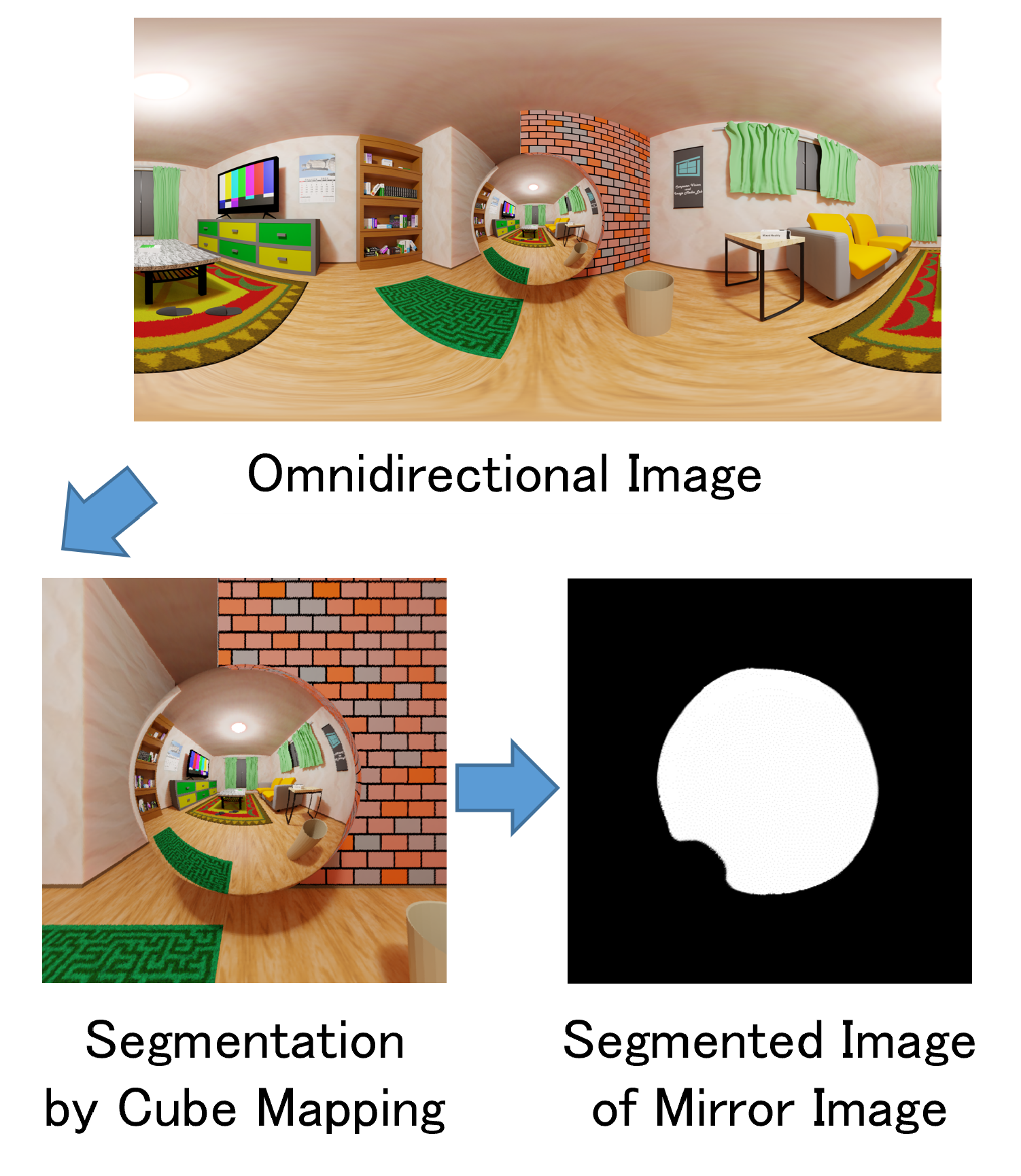

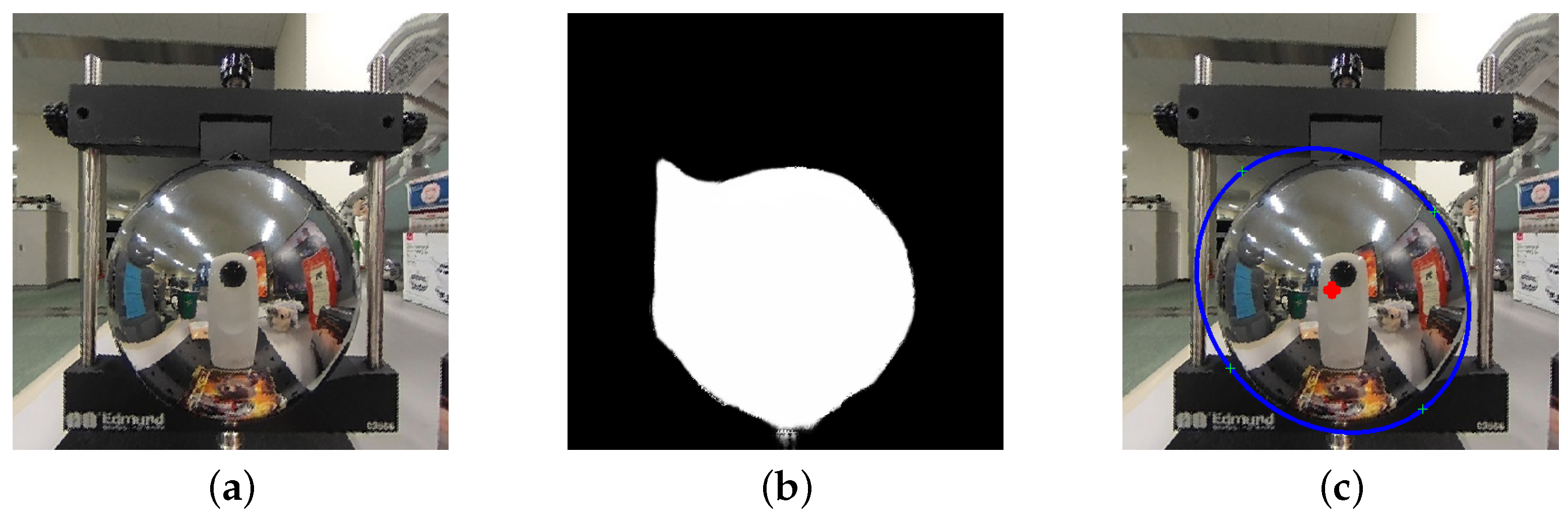

2.2. Segmentation of Mirror Image Region

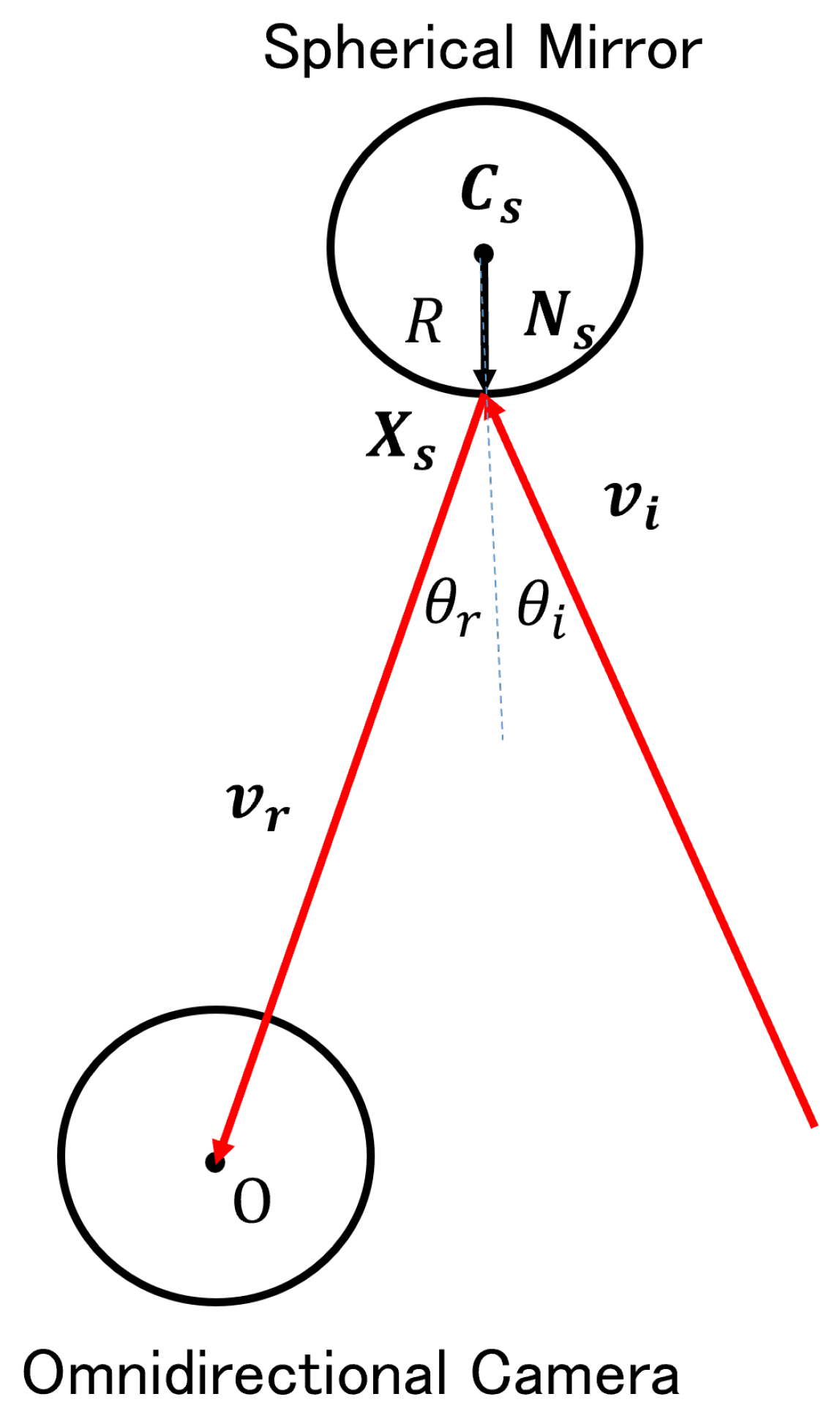

3. 3D Estimation Method Using an Omnidirectional Camera and a Spherical Mirror

4. 3D Position Estimation of Spherical Mirror

4.1. Segmentation of Mirror Image Region in an Omnidirectional Image

4.2. 3D Position Estimation Based on Elliptical Shape

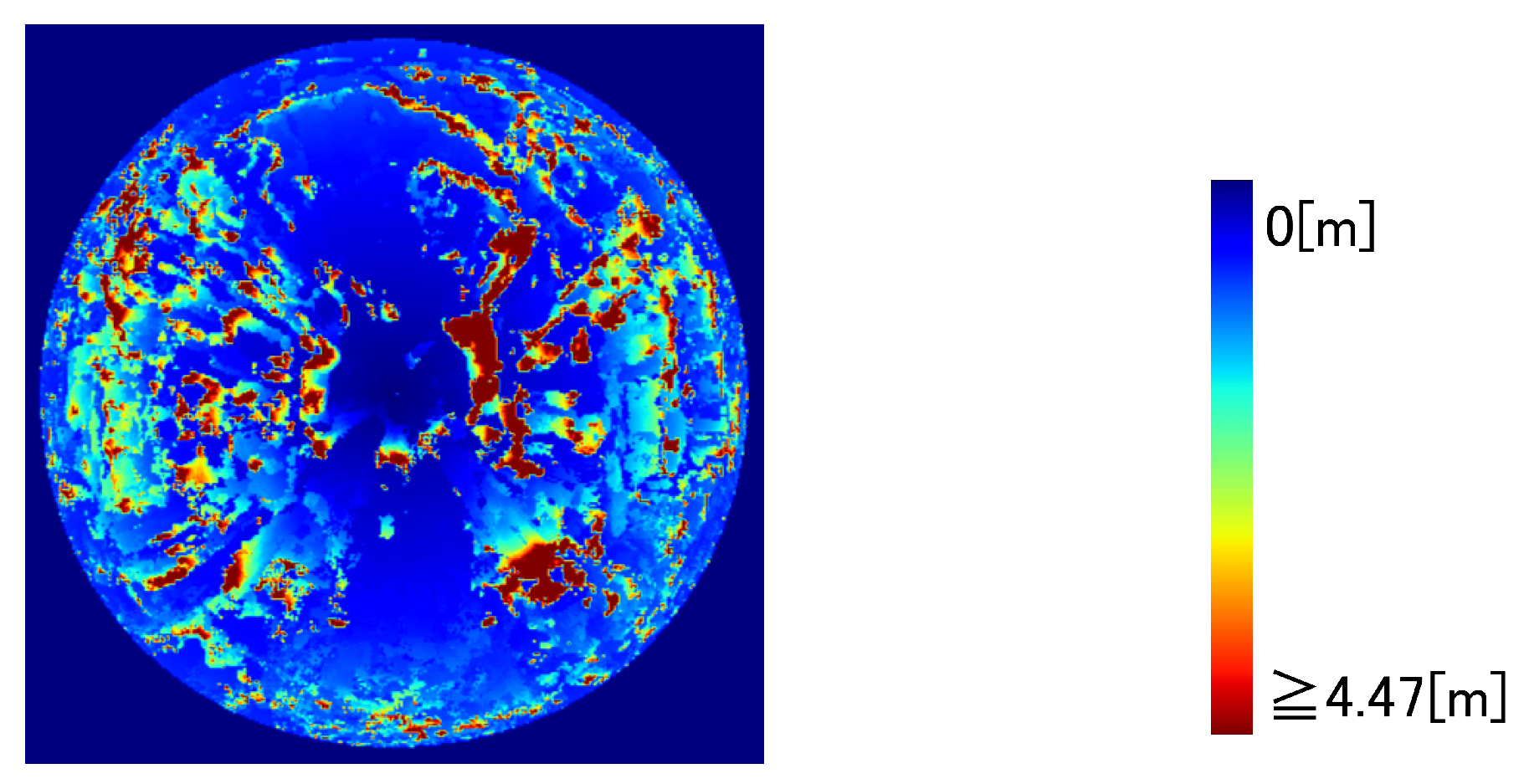

5. 3D Estimation of Observation Scene

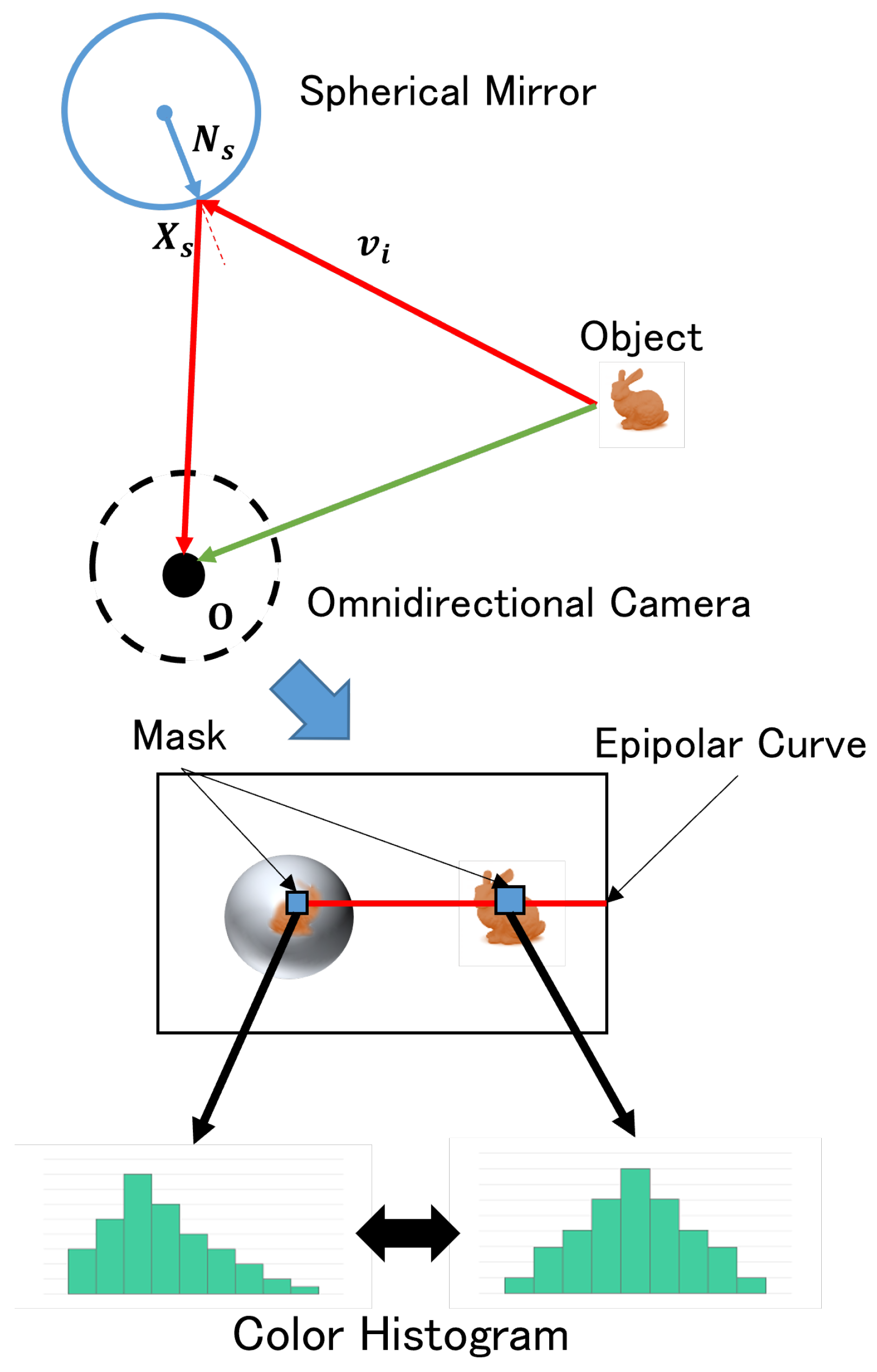

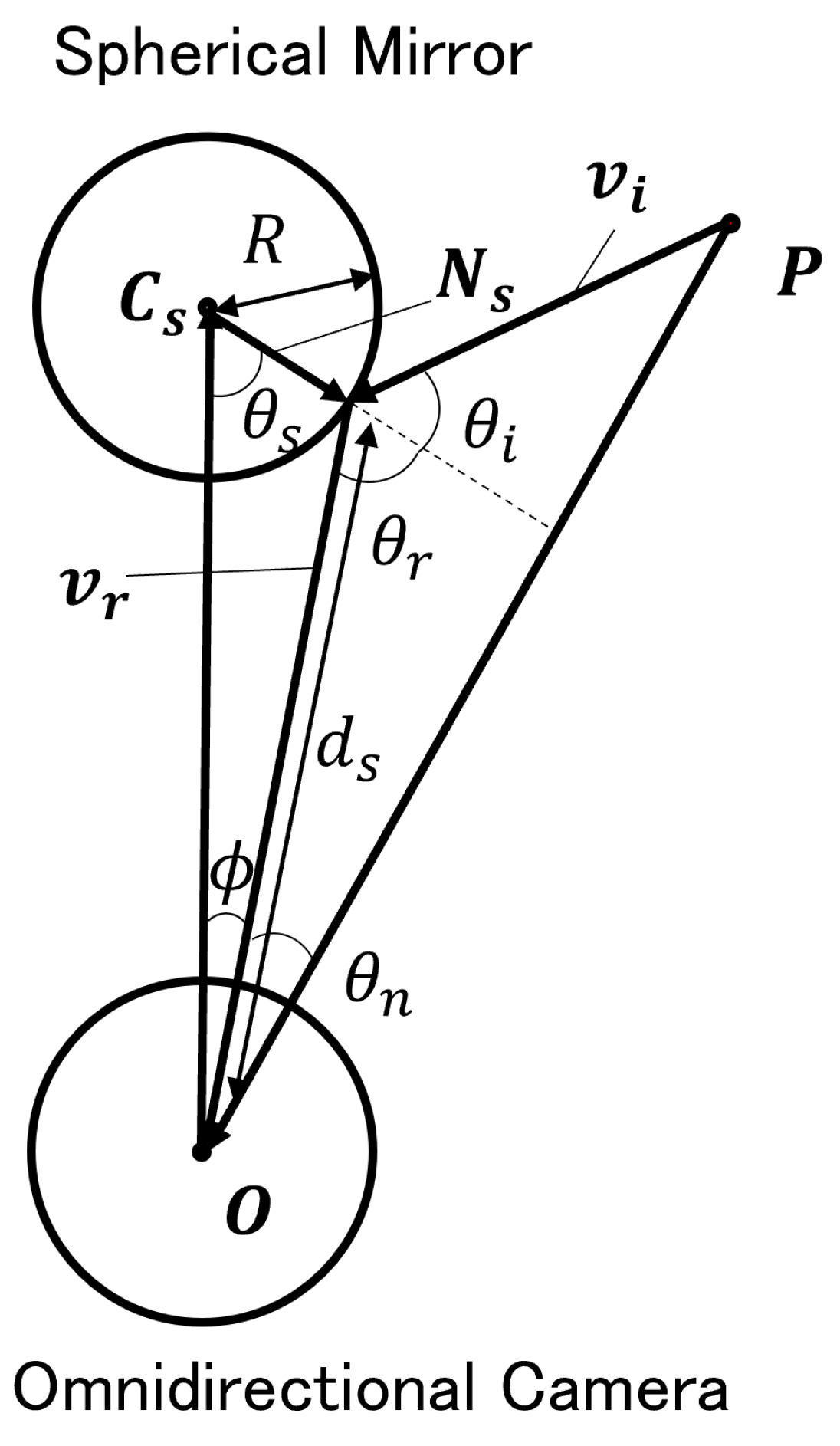

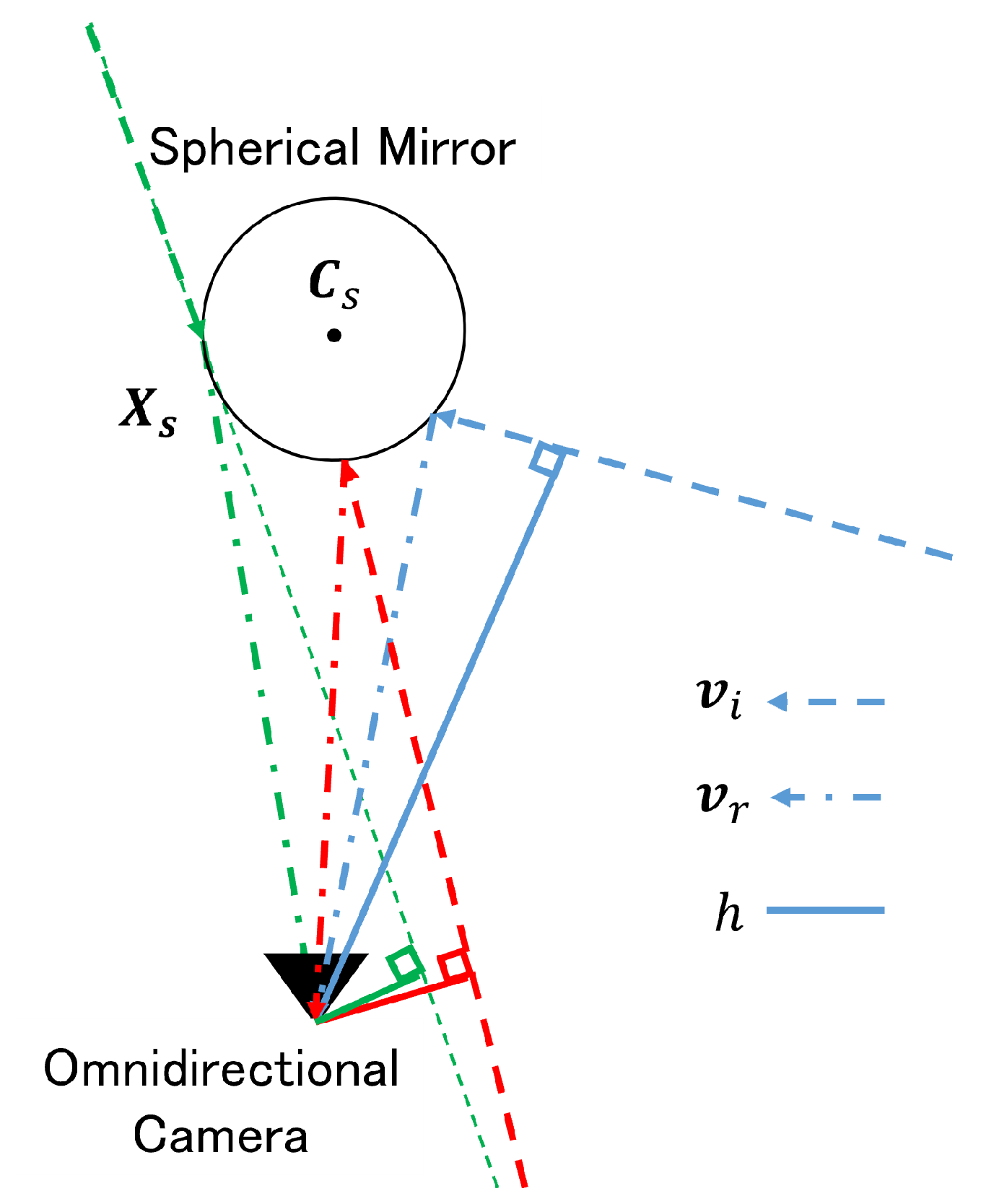

5.1. Light Path Estimation to the Object Reflected in the Mirror Image

5.2. Corresponding Point Search by Color Histogram

5.3. Effect of Errors in 3D Position Estimation of Spherical Mirror on 3D Estimation

6. Simulation

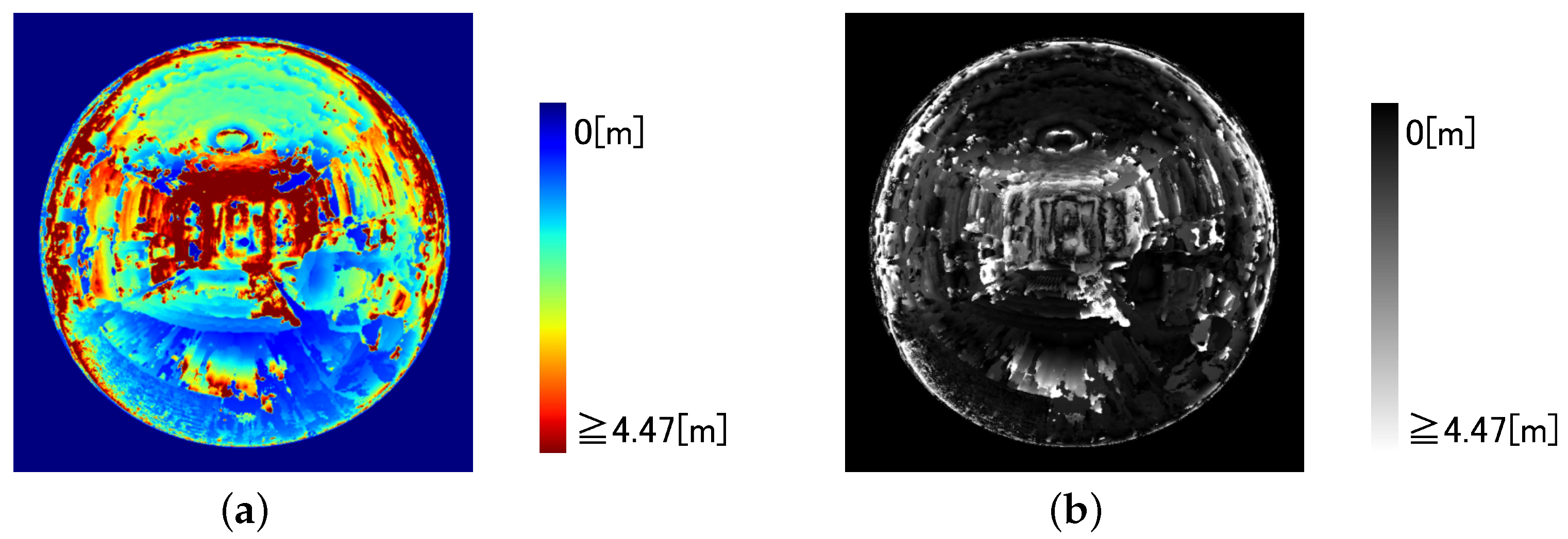

6.1. Simulation with CG Model of a Room

6.2. Discussion of Room Model Simulation

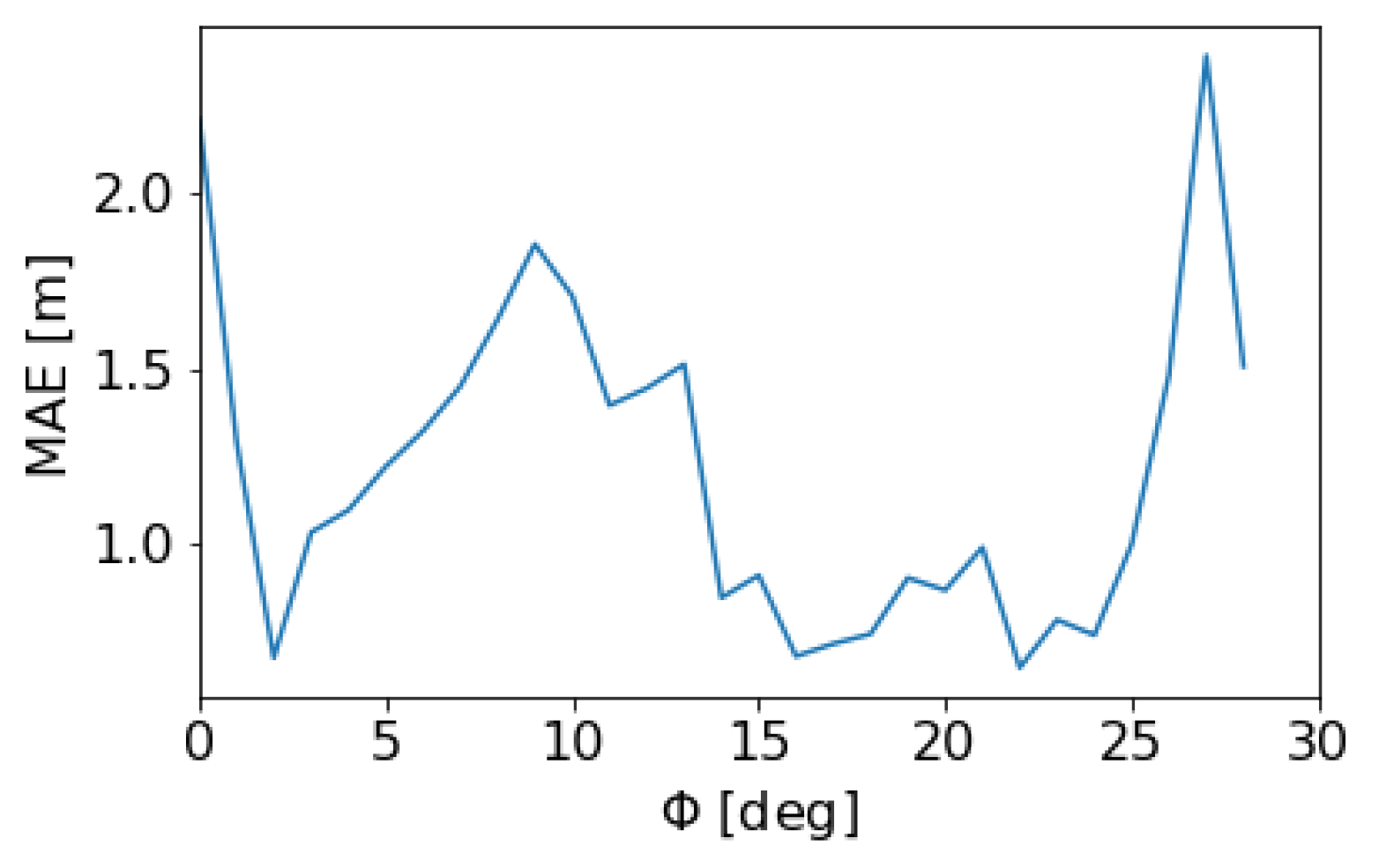

6.3. Verification of the Effect of 3D Position Estimation Error of a Spherical Mirror on 3D Information Estimation

6.4. Simulation with a Real-World Image

6.5. Limitation

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hiruta, Y.; Xie, C.; Shishido, H.; Kitahara, I. A 3D Estimation Method Using an Omnidirectional Camera and a Spherical Mirror. Appl. Sci. 2023, 13, 8348. https://doi.org/10.3390/app13148348