Concrete Dam Deformation Prediction Model Research Based on SSA–LSTM

Abstract

1. Introduction

2. LSTM and SSA Fundamentals

2.1. LSTM Principles

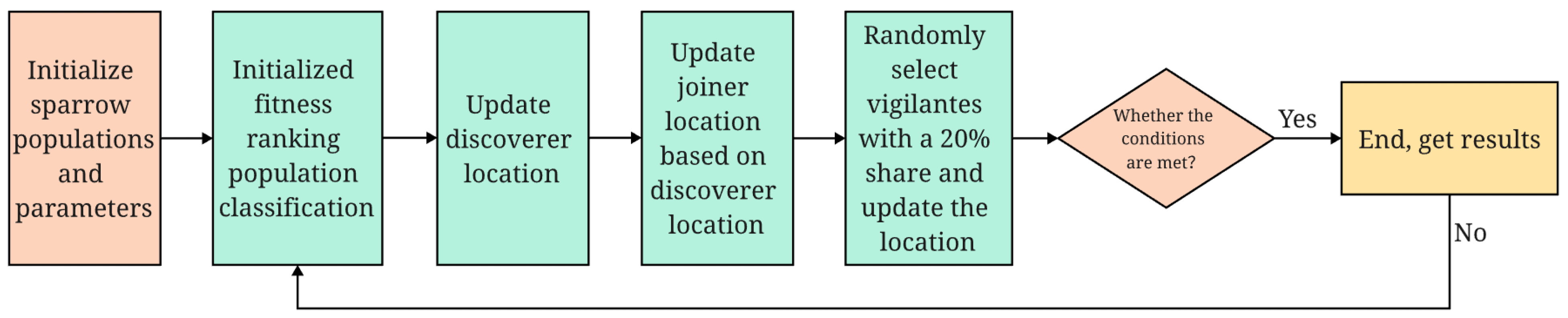
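The following hedged illustration of the LSTM principle shows one forward step of the widely used LSTM cell with forget, input, and output gates. It is written in plain Python so it has no dependencies. The gate names, the `params` layout, and the helpers `lstm_step`, `init_params`, and `run_sequence` are hypothetical and chosen only for this sketch. They are not taken from this paper, and a practical model would use a deep learning framework with learned weights.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def lstm_step(x, h, c, params):
    """One forward step of a standard LSTM cell (illustrative sketch).

    params maps each gate name ('f', 'i', 'g', 'o') to (W, b); each W has
    shape hidden x (hidden + input) and acts on the concatenation [h, x].
    """
    z = h + x  # list concatenation = [h_{t-1}, x_t]

    def gate(name, act):
        W, b = params[name]
        return [act(s + bk) for s, bk in zip(matvec(W, z), b)]

    f = gate("f", sigmoid)    # forget gate: share of old memory kept
    i = gate("i", sigmoid)    # input gate: share of new content written
    g = gate("g", math.tanh)  # candidate cell content
    o = gate("o", sigmoid)    # output gate: share of memory exposed
    c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
    h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
    return h_new, c_new


def init_params(n_in, n_hidden, seed=0):
    """Hypothetical small random initialisation, zero biases."""
    rng = random.Random(seed)

    def mat():
        return [[rng.uniform(-0.1, 0.1) for _ in range(n_hidden + n_in)]
                for _ in range(n_hidden)]

    return {name: (mat(), [0.0] * n_hidden) for name in "figo"}


def run_sequence(xs, params, n_hidden):
    """Feed a sequence of input vectors and return the final hidden state."""
    h, c = [0.0] * n_hidden, [0.0] * n_hidden
    for x in xs:
        h, c = lstm_step(x, h, c, params)
    return h
```

With all weights and biases set to zero, every sigmoid gate outputs 0.5 and the candidate content is 0, so the cell simply halves its memory: a cell state of 2.0 becomes 1.0. This makes it an easy check of the gating arithmetic.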

2.2. SSA Principles
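The SSA update rules drive the hyperparameter search, so a minimal pure-Python sketch of the standard sparrow search algorithm (Xue and Shen, 2020) is given below. It minimizes a black-box function over a box. It follows the producer, scrounger, and scout rules as we understand them from the original SSA description. The function name `ssa_minimize`, the defaults (20 sparrows, 20% producers, 10% scouts, safety threshold 0.8), and the clipping of positions to the bounds are assumptions for illustration. They are not the settings used in this paper.

```python
import math
import random


def ssa_minimize(fitness, lower, upper, n_pop=20, n_iter=50,
                 pd=0.2, sd=0.1, st=0.8, seed=0):
    """Sketch of the sparrow search algorithm (assumed default settings).

    Returns (best position, best fitness, best fitness after each iteration).
    """
    rng = random.Random(seed)
    dim = len(lower)

    def clip(x):  # assumption: keep every sparrow inside the search box
        return [min(max(v, lo), hi) for v, lo, hi in zip(x, lower, upper)]

    pop = [[rng.uniform(lo, hi) for lo, hi in zip(lower, upper)] for _ in range(n_pop)]
    fit = [fitness(x) for x in pop]
    k = min(range(n_pop), key=fit.__getitem__)
    g_best, g_fit = pop[k][:], fit[k]
    n_prod = max(1, int(pd * n_pop))
    n_scout = max(1, int(sd * n_pop))
    history = []

    for _ in range(n_iter):
        order = sorted(range(n_pop), key=fit.__getitem__)  # best sparrow first
        pop = [pop[j] for j in order]
        fit = [fit[j] for j in order]
        worst = pop[-1][:]

        # Producers (discoverers)
        r2 = rng.random()  # alarm value
        for i in range(n_prod):
            if r2 < st:  # no predator nearby: wide search
                alpha = 1.0 - rng.random()  # alpha in (0, 1]
                pop[i] = [v * math.exp(-(i + 1) / (alpha * n_iter)) for v in pop[i]]
            else:  # alarm raised: random move
                q = rng.gauss(0.0, 1.0)
                pop[i] = [v + q for v in pop[i]]
            pop[i] = clip(pop[i])
            fit[i] = fitness(pop[i])
        xp = pop[min(range(n_prod), key=fit.__getitem__)][:]  # best producer

        # Scroungers (joiners)
        for i in range(n_prod, n_pop):
            if i + 1 > n_pop / 2:  # hungry sparrows fly elsewhere
                q = rng.gauss(0.0, 1.0)
                pop[i] = [q * math.exp((w - v) / (i + 1) ** 2) for v, w in zip(pop[i], worst)]
            else:  # forage around the best producer
                a = [rng.choice((-1.0, 1.0)) for _ in range(dim)]
                step = sum(abs(v - p) * s for v, p, s in zip(pop[i], xp, a)) / dim
                pop[i] = [p + step for p in xp]
            pop[i] = clip(pop[i])
            fit[i] = fitness(pop[i])

        # Scouts (vigilant sparrows), chosen at random
        f_worst = max(fit)
        for i in rng.sample(range(n_pop), n_scout):
            if fit[i] > g_fit:  # at the edge of the flock: move toward the best
                beta = rng.gauss(0.0, 1.0)
                pop[i] = [b + beta * abs(v - b) for v, b in zip(pop[i], g_best)]
            else:  # at the centre: move away from the worst position
                kk = rng.uniform(-1.0, 1.0)
                denom = (fit[i] - f_worst) - 1e-50  # fit[i] <= f_worst, so never 0
                pop[i] = [v + kk * abs(v - w) / denom for v, w in zip(pop[i], worst)]
            pop[i] = clip(pop[i])
            fit[i] = fitness(pop[i])

        k = min(range(n_pop), key=fit.__getitem__)
        if fit[k] < g_fit:
            g_best, g_fit = pop[k][:], fit[k]
        history.append(g_fit)

    return g_best, g_fit, history
```

To tune the LSTM as described in Section 3.3, `fitness` would decode a sparrow position into hyperparameters and return a validation error of the trained network. Epochs, neuron counts, and batch size would be rounded to integers. That wrapper is hypothetical and is not shown.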

3. SSA–LSTM Based Dam Deformation Prediction Model

3.1. Selection of Feature Factors and Model Parameters

3.2. Prediction Accuracy Evaluation Index

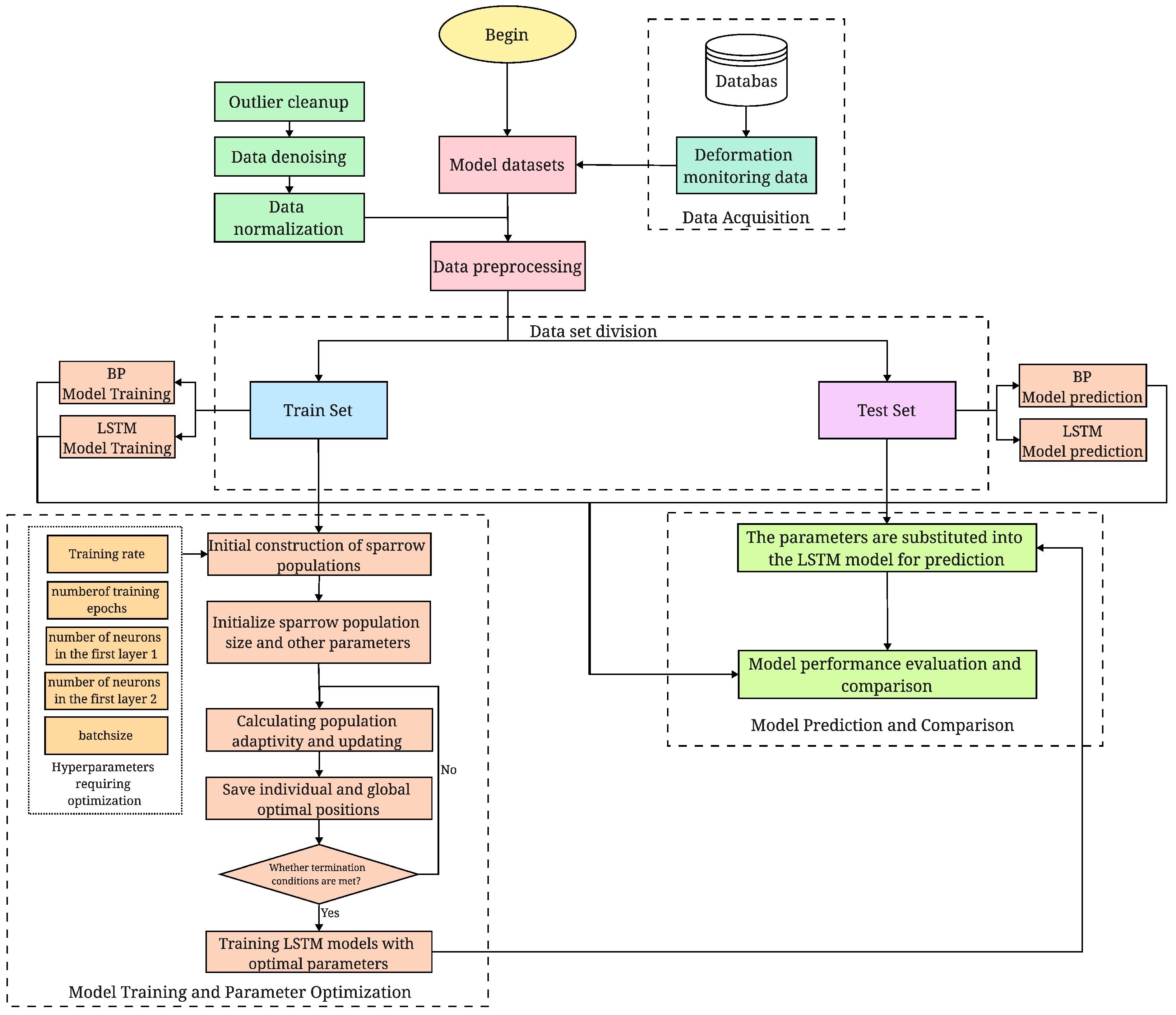
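The models are compared using MAE, RMSE, and MAPE. A minimal Python sketch of the three indices is given below, assuming their standard definitions. The function names are illustrative, and the paper's own formulas may differ in detail.

```python
import math


def _check(y_true, y_pred):
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")


def mae(y_true, y_pred):
    """Mean absolute error, in the units of the data (e.g. mm)."""
    _check(y_true, y_pred)
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean square error, in the units of the data (e.g. mm)."""
    _check(y_true, y_pred)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent."""
    _check(y_true, y_pred)
    if any(t == 0 for t in y_true):
        raise ValueError("MAPE is undefined when a measured value is zero")
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)
```

For any set of predictions, MAE never exceeds RMSE, so computing both provides a quick sanity check. MAPE is undefined when a measured value is zero, which can occur in displacement series that cross zero.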

3.3. Implementation Framework

- (1)

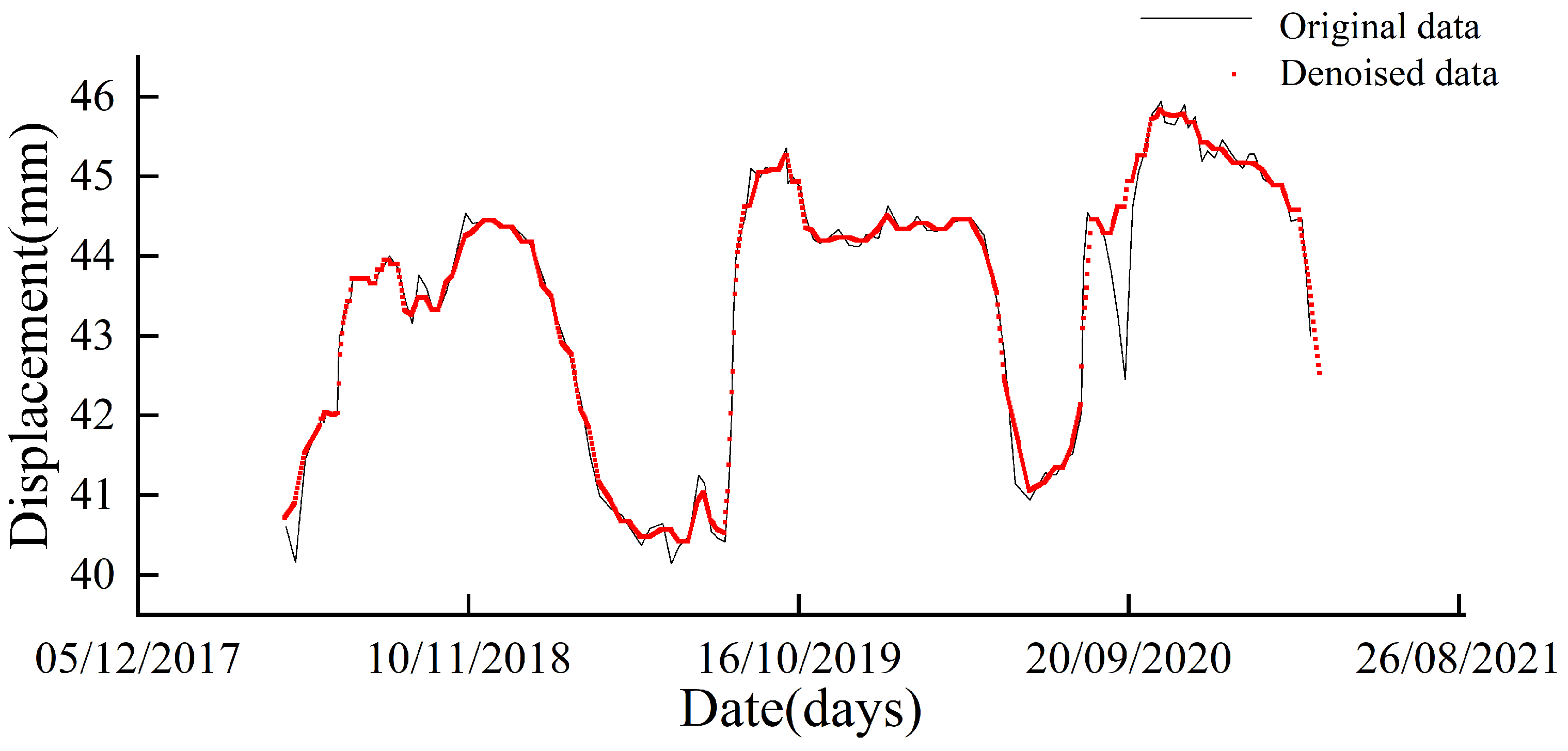

- Data preprocessing. To improve the predictive capability of the dam monitoring model, it is necessary to clean and transform the prototype observation data in advance. The first step is to clean up outliers and attempt to eliminate or reduce noise interference in the data. If the cleaning effect does not meet the requirements, various data denoising methods can be tested by considering the data structure. It may even be necessary to combine multiple denoising methods to achieve the desired cleaning effect. Commonly used data denoising methods include mean filtering, Gaussian filtering, and wavelet denoising. The Symlet wavelet filtering denoising method is based on the principle of wavelet analysis and threshold processing. It involves wavelet decomposition and reconstruction of data to achieve noise removal. Gaussian filtering, on the other hand, uses the shape of a Gaussian function to smooth the data and reduce the effect of noise through weighted averaging of the neighborhood around each data point. After clearing outliers, any missing parts of the data can be interpolated. Additionally, normalizing the data is necessary to standardize the baseline values and dispersion of different features in the sample matrix. By following these preprocessing steps, a more accurate and reliable database can be provided for the dam monitoring model.

- (2) Parameter optimization. Using the four equations given in Section 2.2, the positions of the discoverers (producers), joiners (scroungers), and vigilant sparrows (scouts) are updated at each iteration, and their fitness values are calculated. After the final iteration, the position of the globally optimal sparrow is output, which gives the optimal hyperparameters of the LSTM model.

- (3)

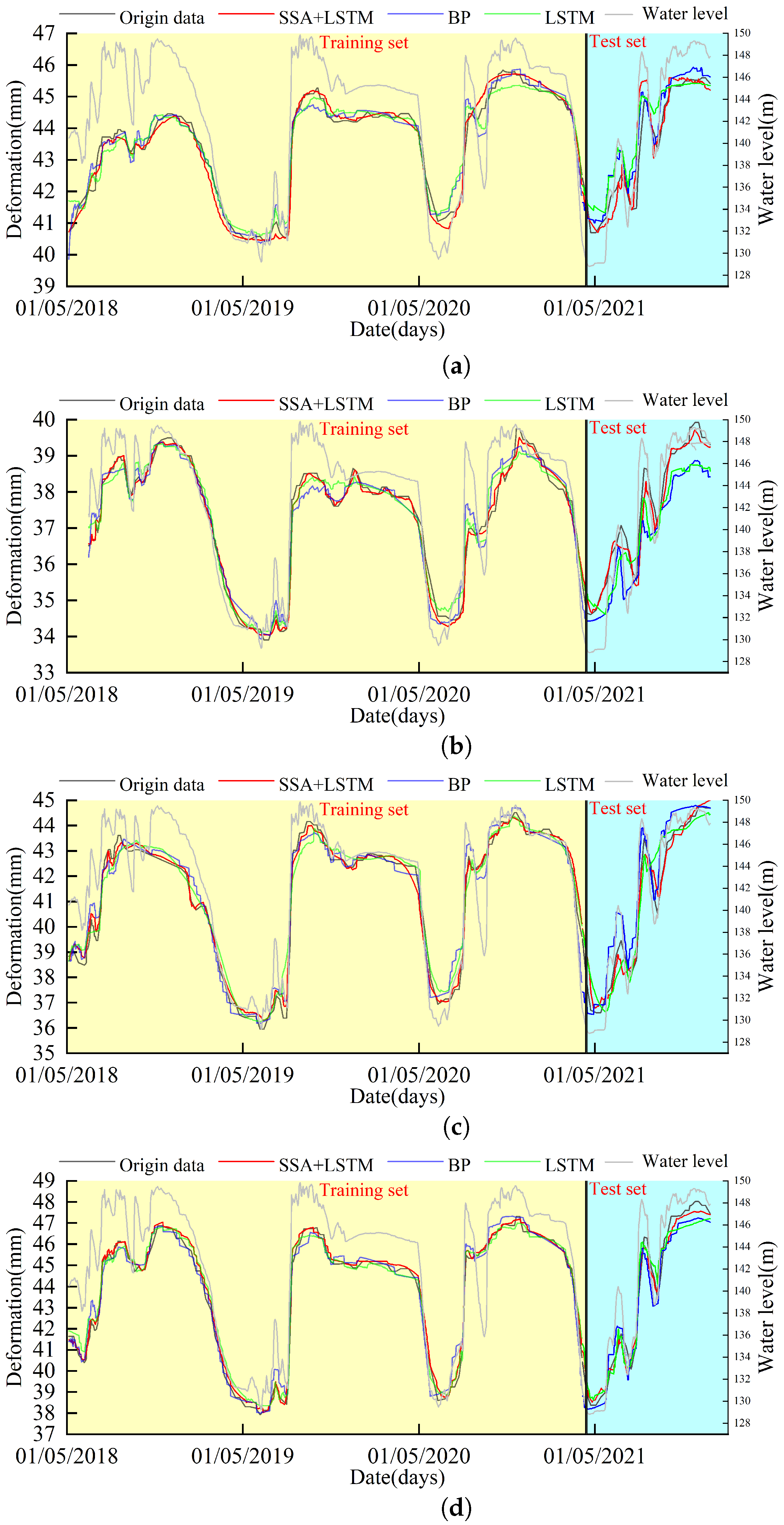

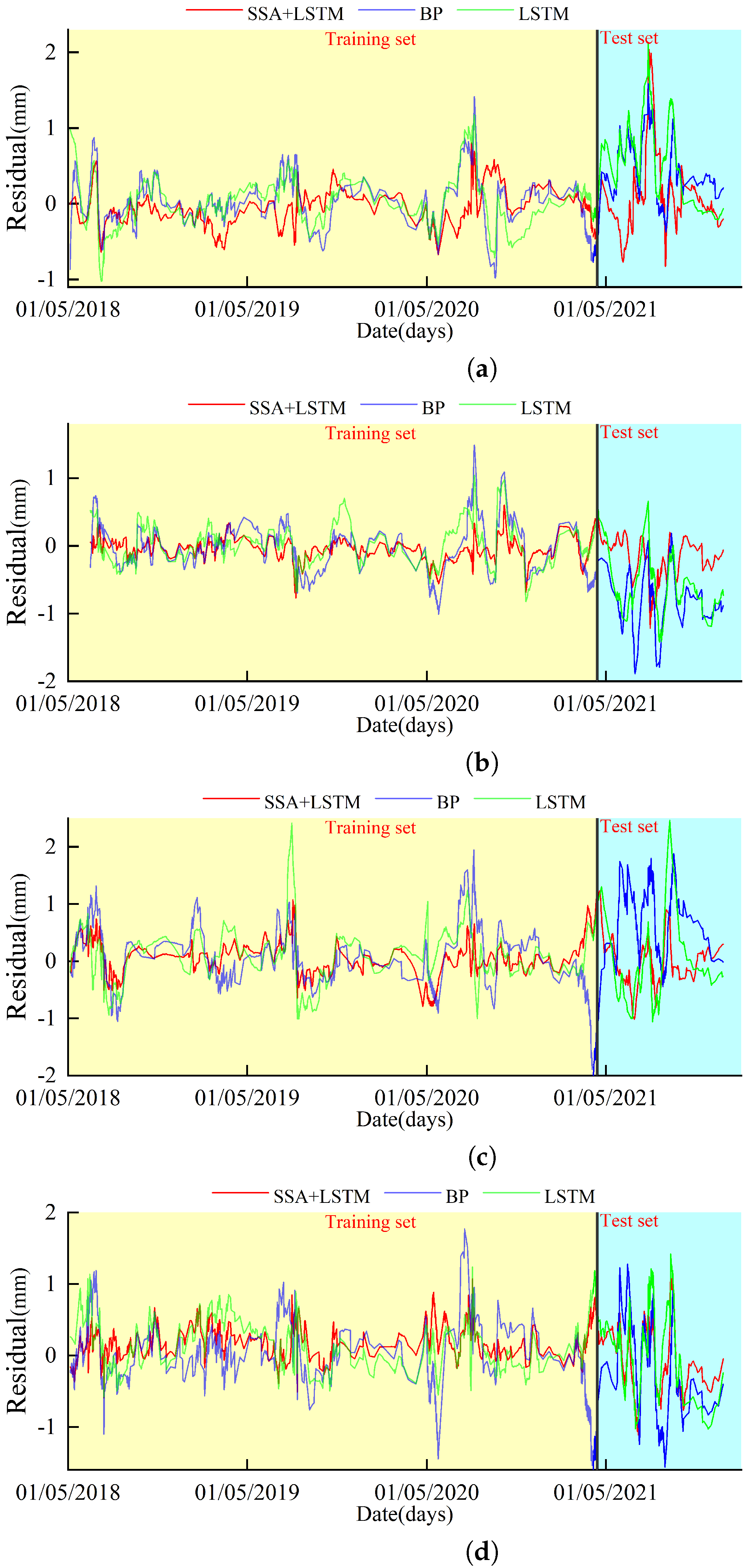

- Model training and prediction. The training and prediction datasets are used to train and predict the LSTM model, with hyperparameters determined by SSA. The accuracy of the model’s predictions is assessed by comparison with the true values from the test set. Additionally, the optimal hyperparameters are applied to different models for visual comparison to further evaluate the model’s performance. Detailed descriptions of each model’s prediction, along with model evaluation metrics, are considered to identify the optimal model.
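The preprocessing steps in item (1) can be sketched in plain Python. Several parts of this sketch are assumptions rather than the paper's settings:

- Outliers are flagged with a 3σ rule.
- Gaps are filled by linear interpolation.
- The smoother is a weighted blend of a mean filter and a Gaussian filter. This stands in for the paper's combination of denoising methods; Symlet wavelet denoising is not implemented here.
- Normalization is min–max scaling to [0, 1].

All function names and the blend weight `w_gauss` are hypothetical.

```python
import math


def flag_outliers_3sigma(series, k=3.0):
    """Replace points more than k standard deviations from the mean with None.
    Assumption: a simple 3-sigma rule is used for outlier detection."""
    n = len(series)
    mean = sum(series) / n
    std = math.sqrt(sum((x - mean) ** 2 for x in series) / n)
    if std == 0.0:
        return list(series)
    return [None if abs(x - mean) > k * std else x for x in series]


def interpolate_gaps(series):
    """Fill None entries by linear interpolation between the nearest valid
    neighbours; gaps at either end copy the nearest valid value."""
    known = [i for i, x in enumerate(series) if x is not None]
    if not known:
        raise ValueError("series has no valid points")
    out = list(series)
    for i, x in enumerate(series):
        if x is not None:
            continue
        left = max((j for j in known if j < i), default=None)
        right = min((j for j in known if j > i), default=None)
        if left is None:
            out[i] = series[right]
        elif right is None:
            out[i] = series[left]
        else:
            t = (i - left) / (right - left)
            out[i] = series[left] + t * (series[right] - series[left])
    return out


def smooth(series, kernel):
    """Convolve with a normalized odd-length kernel, replicating edge values."""
    half = len(kernel) // 2
    n = len(series)
    return [
        sum(w * series[min(max(i + k - half, 0), n - 1)] for k, w in enumerate(kernel))
        for i in range(n)
    ]


def gaussian_kernel(sigma=1.0, radius=2):
    w = [math.exp(-(k * k) / (2.0 * sigma * sigma)) for k in range(-radius, radius + 1)]
    total = sum(w)
    return [v / total for v in w]


def mean_kernel(radius=2):
    size = 2 * radius + 1
    return [1.0 / size] * size


def min_max(series):
    lo, hi = min(series), max(series)
    if hi == lo:
        return [0.0] * len(series)
    return [(x - lo) / (hi - lo) for x in series]


def preprocess(series, w_gauss=0.5):
    """Outlier removal -> interpolation -> weighted denoising -> normalization.
    Assumption: w_gauss is a hypothetical blend weight, not a fitted value."""
    filled = interpolate_gaps(flag_outliers_3sigma(series))
    g = smooth(filled, gaussian_kernel())
    m = smooth(filled, mean_kernel())
    blended = [w_gauss * a + (1.0 - w_gauss) * b for a, b in zip(g, m)]
    return min_max(blended)
```

Consider a ramp `0, 1, ..., 19` whose eleventh value is corrupted to 500. The 3σ rule flags only the spike, and interpolation restores it to 10.0. The normalized output then rises monotonically from 0.0 to 1.0. Both kernels are normalized and symmetric, so a linear trend passes through unchanged away from the ends of the series.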

4. Case Study

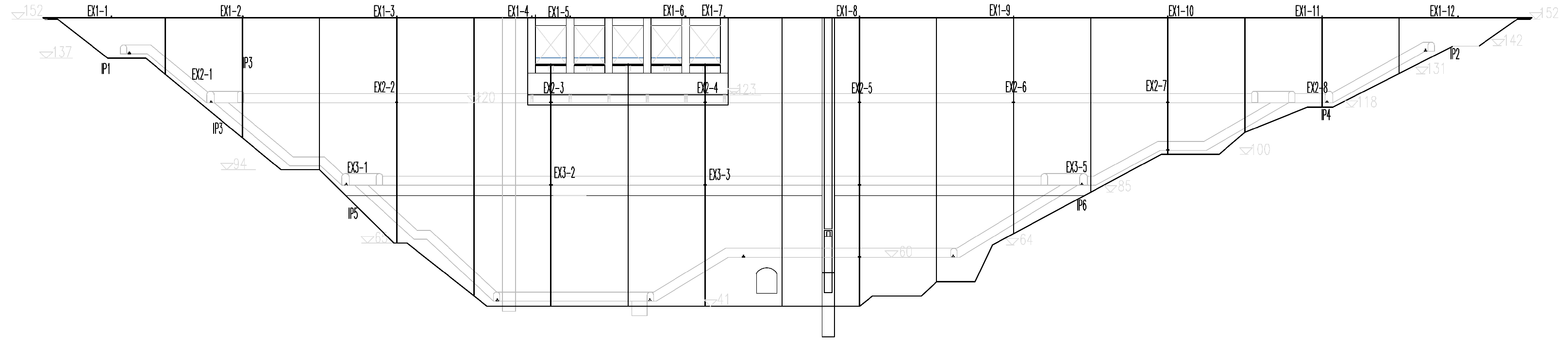

4.1. Project Overview

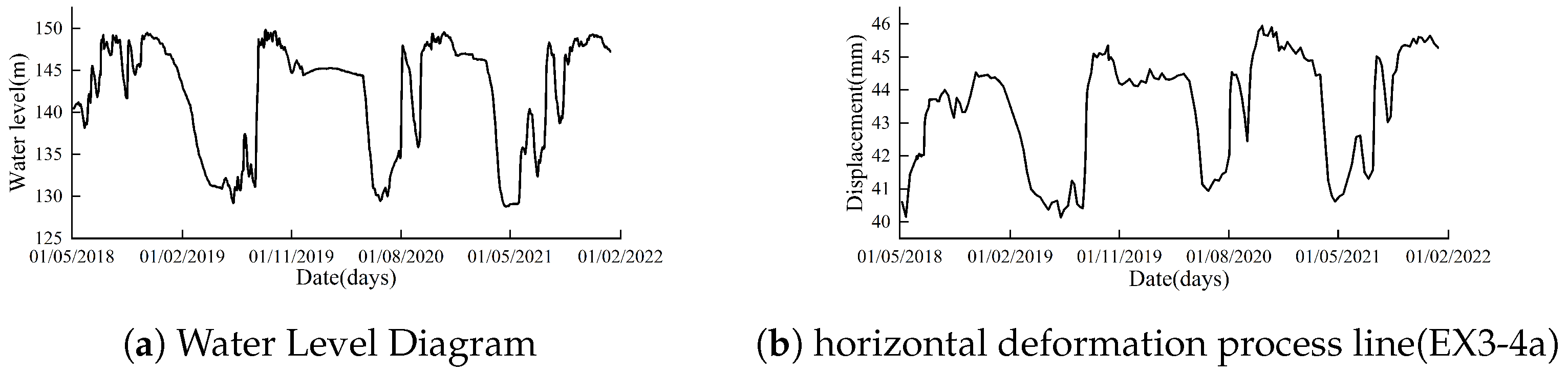

4.2. Data Preprocessing Results

4.3. SSA Algorithm Optimization Search Results

4.4. Contrast Analysis

5. Conclusions

- (1) To handle random outliers in the dam deformation monitoring data, several denoising algorithms are combined with weights so that they complement each other with respect to data characteristics and noise types. This yields better denoising of the measured data, produces data that reflect the actual working state of the dam, and effectively eliminates the influence of gross errors on the model's prediction accuracy.

- (2) Because each measurement point has different characteristics and intrinsic components, the SSA optimization yields an optimal range for each parameter together with an averaged parameter value. This allows the model to better capture the temporal characteristics of the dam deformation series and to account for the forward and backward correlations in the input information, thereby improving the prediction accuracy of the LSTM model.

- (3) The SSA–LSTM model established in this paper achieves higher prediction accuracy and stability than the BP and LSTM models, and its results are consistent with the actual engineering situation, providing a new technique for high-accuracy prediction of dam deformation. The method is simple and efficient and, with modification, can be applied to predicting other monitored quantities of dams.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameters | Range | Averaged value |
|---|---|---|
| Learning rate (lr) | 0.001 | 0.001 |
| Number of training epochs (Epoch) | 100–112 | 106 |
| Number of neurons in the first layer (units1) | 104–130 | 169 |
| Number of neurons in the second layer (units2) | 244–256 | 250 |
| batch_size | 12–18 | 15 |

| Monitoring Point | Model | MAE (mm) | RMSE (mm) | MAPE (%) |
|---|---|---|---|---|
| EX3-4a | LSTM-SSA | 5 × 10 | 0.027 | 0.152 |
| | BP | 6.5 × 10 | 0.233 | 0.939 |
| | LSTM | 7.3 × 10 | 0.932 | 2.130 |
| EX3-3a | LSTM-SSA | 4.1 × 10 | 0.05 | 0.402 |
| | BP | 2.4 × 10 | 0.11 | 1.791 |
| | LSTM | 4.0 × 10 | 0.496 | 1.173 |
| EX2-7a | LSTM-SSA | 1.3 × 10 | 0.207 | 0.097 |
| | BP | 1.3 × 10 | 0.196 | 0.278 |
| | LSTM | 1.4 × 10 | 0.011 | 0.670 |
| EX2-6a | LSTM-SSA | 1.2 × 10 | 0.191 | 0.376 |
| | BP | 1.7 × 10 | 0.247 | 0.481 |
| | LSTM | 1.9 × 10 | 0.250 | 0.502 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Madiniyeti, Jiedeerbieke; Chao, Yang; Li, Tongchun; Qi, Huijun; Wang, Fei. Concrete Dam Deformation Prediction Model Research Based on SSA–LSTM. Applied Sciences 2023, 13(13), 7375. https://doi.org/10.3390/app13137375