Abstract

Lung cancer is a leading cause of cancer-related deaths worldwide, and its diagnosis must be carried out as soon as possible to increase the survival rate. The development of computer-aided diagnosis systems can improve the accuracy of lung cancer diagnosis while reducing the workload of pathologists. The purpose of this study was to develop a learning algorithm (CancerDetecNN) to evaluate the presence or absence of tumor tissue in lung whole-slide images (WSIs) while reducing the computational cost. Three existing deep neural network models, as well as different versions of the CancerDetecNN algorithm, were trained and tested on datasets of tumor and non-tumor tiles extracted from lung WSIs. CancerDetecNN Version 5 outperformed all existing convolutional neural network (CNN) models in the provided dataset, achieving higher precision (0.972), an area under the curve (AUC) of 0.923, and an F1-score of 0.897, while requiring 1 h and 51 min less for training than the best compared CNN model (ResNet-50). The results for CancerDetecNN Version 5 surpass the results of some architectures used in the literature, but the relatively small size and limited diversity of the dataset used in this study must be considered. This paper demonstrates the potential of CancerDetecNN Version 5 for improving lung cancer diagnosis since it is a dedicated model for lung cancer that leverages domain-specific knowledge and optimized architecture to capture unique characteristics and patterns in lung WSIs, potentially outperforming generic models in this domain and reducing the computational cost.

1. Introduction

Lung cancer is a significant public health problem, being the type of cancer with the highest mortality rate and with an incidence of approximately 2.21 million new cases in 2020 [1]. This type of cancer is most prevalent in individuals over the age of 50 [2]. Smoking is the leading cause of lung cancer, although other risk factors such as exposure to occupational carcinogens (such as arsenic, asbestos, chromium, nickel, and radon), polycyclic aromatic hydrocarbons, human immunodeficiency virus infection, as well as alcohol consumption, may also contribute to the development of the disease [3,4,5]. Advanced cases may result in weakness, fatigue, weight loss, and cachexia [6].

Non-small cell lung cancer (NSCLC) and small cell lung cancer (SCLC) have traditionally been the two primary subtypes of lung cancer. However, recent advancements in research have identified specific genetic mutations in several NSCLC subtypes, leading to a more accurate categorization [3]. NSCLC is currently classified into several subtypes, including adenocarcinoma (ADC), squamous cell carcinoma (SCC), and large cell carcinoma (LCC), as well as less common subtypes such as adenosquamous carcinoma and sarcomatoid carcinoma [7]. This more refined classification of NSCLC is vital for guiding treatment decisions, as different subtypes may respond differently to various therapies.

The process for the detection begins with radiologic techniques such as a chest X-ray, computed tomography, or magnetic resonance imaging (Figure 1a). If malignant tissue is suspected (Figure 1b), a biopsy is performed to obtain material for histopathological examination (Figure 1c). Formalin-fixed paraffin-embedded tissues are processed to create glass slides that are routinely stained with hematoxylin and eosin. Pathologists analyze these glass slides through either a brightfield microscope or a whole-slide image (WSI), which is a high-resolution digital scan of a microscopic slide that allows for counting, measuring the sizes and densities of objects, and applying image processing algorithms to detect lesions or cancer (Figure 1d). Finally, the exam results are communicated to the patient (Figure 1e) [3]. While the process seems simple, histological evaluation can be time-consuming, requires a high level of precision, and should be performed in the early stages of cancer to allow for more treatment options [8,9].

Figure 1.

Current approach for lung cancer diagnosis. (a) Perform a radiologic technique; (b) if tumor tissue is suspected, proceed to the biopsy; (c) perform the biopsy, extracting the suspected tissues; (d) diagnosis performed by the pathologists through a brightfield microscope or whole-slide image (WSI); (e) communicate the exam result to the patient.

Early detection is critical in improving treatment options and survival rates for lung cancer patients. Studies have consistently shown that individuals diagnosed with lung cancer at an early stage have a significantly better chance of survival than those diagnosed at a later stage [10,11]. In fact, the five-year survival rate for lung cancer patients is up to 70% when the cancer is detected at an early stage before it has spread to other parts of the body [12]. However, despite the clear benefits of early detection, a significant proportion of lung cancer cases are still diagnosed at an advanced stage, when treatment options are limited and the prognosis is poor. This is often due to the non-specific nature of lung cancer symptoms, which can delay diagnosis until the cancer has advanced. Therefore, there is an urgent need for effective screening programs that can detect lung cancer at an early stage. One promising approach is the integration of software that uses technologies such as artificial intelligence (AI) to improve the accuracy and efficiency of a cancer diagnosis [13]. These AI-based systems can aid in the initial diagnosis, highlighting situations that require further study by a pathologist, or as a confirmatory diagnostic tool, potentially reducing the time spent by pathologists on these tasks and enabling quicker progress towards a definitive diagnosis and subsequent therapy [9].

Proposals for AI-based systems aimed at improving cancer diagnosis, such as breast, brain, and skin cancer diagnosis, often employ deep learning algorithms known as convolutional neural networks (CNNs) [14,15,16,17]. CNNs are designed to recognize complex patterns and features in images, making them an ideal tool for image classification, segmentation, and detection. The capacity of CNNs to learn from and perform better with additional data makes them extremely adaptable to different datasets and imaging modalities, which is one of their main advantages [18]. Recent research has shown that CNNs have the potential to increase the precision of medical image processing. For instance, in 2019, Ardila and his team developed a CNN algorithm that achieved an AUC (area under the curve) of 94.4% in detecting lung cancer in low-dose chest computed tomography scans, outperforming six human radiologists [19]. However, despite their promising results, CNNs have some limitations in medical imaging, including the need for large amounts of labeled data and potential biases in the training data [18].

The availability of clinical data on WSIs is increasing with the emergence of whole-slide imaging, which provides a means to develop systems that can perform tasks such as cancer detection and classification, overcoming the constraints described above, i.e., reducing the time spent by the pathologists in tasks related to tumor detection and classification and improving the accuracy of a cancer diagnosis [8,9].

In recent years, several studies have been conducted to evaluate the performance of various AI-based approaches for lung cancer detection using WSIs (Table 1). In this context, notable approaches have been described in the literature.

Table 1.

Published studies on lung cancer detection using AI-based histopathological analysis.

Wang and colleagues published a study in 2018 describing the development of a CNN based on the Inception V3 algorithm to detect and classify ADC WSIs. The model used a sliding grid mechanism that traveled along the WSI in patches of 300 × 300 pixels, identifying each pixel as a nucleus centroid, non-nucleus, or nucleus boundary. They then extracted the geographic distribution in the tumor microenvironment, nuclear morphology, and textural aspects from the tumor site and used them as predictors in a recurrent cancer prediction model. The model achieved an accuracy of 89.8% from training and validation sets consisting of 267 and 457 images, respectively [20].

Coudray and colleagues employed a similar architecture to Wang’s work, but with larger patch sizes of 512 × 512 pixels. In addition to ADC samples, they included non-malignant and SCC samples, resulting in a dataset with 1635 slides. The team achieved an average area under the curve (AUC) of 0.97, suggesting that utilizing larger patch sizes and incorporating a variety of sample types may improve the performance of deep learning models in analyzing medical images [21].

Nikolay and colleagues analyzed annotated WSIs from 712 patients using seven different architectures. Among the tested networks, UNet achieved the highest performance with a precision and F1-score of 0.80 and a recall of 0.86 [22].

Li and colleagues investigated the performance of four different neural network architectures, AlexNet, VGG, ResNet, and SqueezeNet, for analyzing lung WSIs from a small dataset of 33 patients. The networks were trained using two approaches, from scratch and pre-trained, using input patches of 256 × 256 pixels cropped with a stride of 196 pixels to ensure sufficient overlap between neighboring patches. Regarding the results for the AUC metric, when trained from scratch, AlexNet achieved the highest score of 0.9119, followed closely by SqueezeNet at 0.9113. ResNet and VGGNet achieved AUC values of 0.8912 and 0.8810, respectively. In contrast, when using pre-trained models, SqueezeNet achieved the highest AUC of 0.8749, followed by ResNet with 0.8687. AlexNet and VGGNet achieved lower AUC values of 0.8585 and 0.8300, respectively [23].

In 2019, Yu and colleagues conducted a study where they utilized CNNs pre-trained on ImageNet, including AlexNet, VGG-16, GoogLeNet, and ResNet-50, to detect ADC and SCC. From a dataset of 1314 ADC and SCC WSIs, they used patches of 1000 × 1000 pixels with a 50% overlap to train the models. The results for the AUC metric in the validation cohort showed that, when identifying ADC from adjacent dense benign tissues, AlexNet achieved 0.890, VGG-16 obtained 0.912, GoogLeNet obtained 0.901, and ResNet-50 obtained 0.935. Additionally, when detecting SCC from adjacent dense benign tissues, the AUC values were 0.995 for AlexNet, 0.991 for VGG-16, 0.993 for GoogLeNet, and 0.979 for ResNet-50 [24].

Šarić and his team used 256 × 256 pixels patches with a stride of 196 pixels to train the CNNs VGG-16 and ResNet-50. Their dataset was composed of tiles extracted from 25 lung WSIs, which resulted in receiver operating characteristic (ROC) values of 0.833 for VGG-16 and 0.796 for ResNet-50 [25].

In the next year, 2020, Hatuwal and Thapa developed their own CNN, which involved resizing each tile to 180 × 180 pixels. Their dataset comprised tiles from three classes: benign tissue, ADC, and SCC, with 5000 histopathology images per category. The model achieved a precision of 0.95, a recall of 0.97, and an F1-score of 0.96 for adenocarcinoma; a precision of 1.00, a recall of 1.00, and an F1-score of 1.00 for benign tissue; and a precision of 0.97, a recall of 0.95, and an F1-score of 0.96 for SCC [26].

Chen and his team proposed a novel approach in which the WSIs were used directly as an input for the ResNet-50 model, eliminating the need for pathologists to manually annotate the slides [3]. Due to memory constraints, they proposed using the WSI with a magnification of 4× initially to discover the critical regions and, for the final identification, the 40× magnification pictures of those regions. They tested their method on a dataset of 9662 WSIs and achieved AUC values of 0.9594 and 0.9414 for ADC and SCC, respectively [27].

In 2022, Jehangir and colleagues developed a custom CNN and trained it with 5000 histopathology images per category (benign, ADC, and SCC). The ADC was detected with a precision of 0.933, a recall of 0.717, and an F1-score of 0.811. The benign category was identified with a precision of 1.00 and a recall score of 0.943, yielding an F1-score of 0.971. The SCC category was recognized with a precision of 0.774 and a recall of 0.998, obtaining an F1-score of 0.872 [28].

Wahid and his team published a paper in 2023 where they tested three pre-trained CNN models (GoogLeNet, ResNet-18, and ShuffleNet V2) and created their own CNN. The employed dataset had 15,000 images, with 5000 images in each group (benign, ADC, and SCC). The F1-score of GoogLeNet was 0.96 for ADC, 1.00 for benign, and 0.97 for SCC. ResNet-18 produced an F1-score of 0.98 for ADC, 1.00 for benign, and 0.98 for SCC. ShuffleNet V2 achieved an F1-score of 0.96 for ADC, 1.00 for benign, and 0.96 for SCC. The created CNN scored 0.89 for ADC, 0.98 for benign, and 0.91 for SCC [29].

Based on the critical analysis of the previous studies, the authors of this study suggest an approach for the detection and diagnosis of tumors in WSIs. The proposed method involves splitting the WSI into patches of 512 × 512 pixels and feeding them into a CNN that produces a heatmap indicating the presence or absence of the tumor (Figure 2) [3,9]. With this method, splitting the WSI into smaller patches enables the models to capture detailed information from the regions of interest, allowing for a more accurate diagnosis of a tumor area. The CNN extracts meaningful features from the input patches, making it possible to identify patterns indicative of tumor presence. The resulting heatmap generated by the CNN provides a visual representation to the pathologists, indicating the tumor distribution across the WSI. This approach is expected to significantly reduce the time required for pathologists to analyze WSIs, decrease healthcare costs, and enhance the accuracy of diagnosis.

Figure 2.

Proposed approach to detect lung cancer according to a given WSI (adapted from [3]).
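The patch-and-heatmap idea can be illustrated with a minimal Python sketch. It assumes non-overlapping 512 × 512 tiles and drops incomplete border tiles; neither detail is specified in the text. The `score_tile` callable is a hypothetical stand-in for the trained CNN, and all function names are illustrative only.

```python
from typing import Callable, List, Tuple

TILE = 512  # patch side length in pixels, as stated in the text


def tile_origins(width: int, height: int, tile: int = TILE) -> List[List[Tuple[int, int]]]:
    """Return a grid (rows of columns) of top-left (x, y) tile corners.

    Assumption: tiles do not overlap and incomplete border tiles are dropped.
    """
    return [
        [(x, y) for x in range(0, width - tile + 1, tile)]
        for y in range(0, height - tile + 1, tile)
    ]


def heatmap(width: int, height: int,
            score_tile: Callable[[int, int], float],
            tile: int = TILE) -> List[List[float]]:
    """Score every tile; each cell holds the tumor score of one tile."""
    return [[score_tile(x, y) for (x, y) in row]
            for row in tile_origins(width, height, tile)]


# Hypothetical stand-in for the CNN: flags tiles in the left part of the slide.
def fake_cnn(x: int, y: int) -> float:
    return 1.0 if x < 1024 else 0.0


grid = heatmap(2148, 1024, fake_cnn)
for row in grid:
    print(row)
```

In a real pipeline, `score_tile` would read the pixel data at the given coordinates and return the CNN output for that patch; the grid can then be rendered as a color overlay on the slide.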

The open literature indicates that deep learning models have shown promising results in medical image analysis tasks, including breast cancer detection. As reviewed above, the studies provide solutions to identify the presence of a tumor in a lung WSI and/or classify it. However, there are gaps that need to be addressed, such as the fact that most of the authors in the field have utilized CNNs that have already been developed and tested on various image classification problems but did not create a specific model for lung cancer. By creating a dedicated model for lung cancer, it is possible to leverage domain-specific knowledge and optimize the architecture to capture the unique characteristics and patterns present in lung WSIs. This approach is crucial because lung cancer diagnosis requires a high level of accuracy and specificity, and a specialized model can potentially outperform generic models in this specific domain. Moreover, training complex networks, such as ResNet, is a computationally expensive and time-consuming task. Thus, this paper aims to introduce a novel and optimized CNN architecture for the accurate detection of lung cancer in WSIs. Additionally, the performance of existing CNN designs for this task is analyzed and compared. The proposed model has the potential to significantly improve the efficiency and accuracy of the WSI assessment, which is a crucial step in cancer diagnosis and therapy, while reducing the amount of time and computational cost needed to train a model. The outcomes of this work are expected to be integrated into a medical system for WSI analysis.

This paper is divided into three more sections in addition to the Introduction. The second section, Materials and Methods, presents a detailed description of the dataset used in this study, as well as the tested and developed architectures of the neural networks employed to achieve the research objectives. In the third section, Results and Discussion, the characteristics of the training environment are described, and the outcomes of training the neural networks (NNs) with varied numbers of epochs are discussed and analyzed. The fourth section, Conclusion and Future Work, concludes with the main findings, limitations of the study, and future steps.

2. Materials and Methods

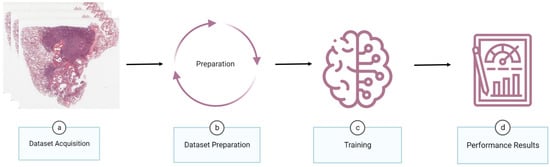

The starting point for developing a novel algorithm for histopathological lung cancer detection is the acquisition of lung WSIs (Figure 3a). Then, the dataset is prepared: the WSIs are annotated by pathologists, and the annotated regions are extracted from the WSIs, generating a new dataset that undergoes operations to balance, augment, or split it (Figure 3b). Once the dataset is prepared, it is used to feed the neural networks during the training and validation processes (Figure 3c). At the end of the experiment, the model performance according to the defined metrics is reported (Figure 3d).

Figure 3.

Methodology to develop an artificial intelligence algorithm to detect lung cancer in WSIs.

2.1. Dataset Acquisition

CNNs require a dataset with images related to the problem context for training and validation. In this project, the National Lung Screening Trial (NLST) [30] and The Cancer Genome Atlas (TCGA) [31] datasets, comprising both malignant (specifically ADC and SCC) and non-malignant lung WSIs, were used. Table 2 presents the number of cases and respective WSIs obtained from each repository.

Table 2.

Image repository and respective numbers of cases and images associated with lung cancer.

2.2. Dataset Preparation

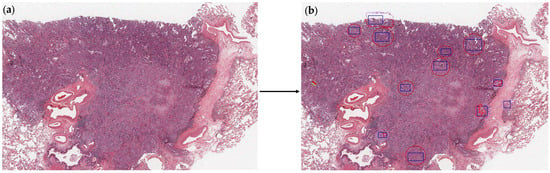

Among the NLST and TCGA datasets [30,31], NLST had a higher number of WSIs available and, for that reason, was selected for annotation and training. A total of 117 WSIs from the NLST repository were annotated by the pathologist (S.C.) (Figure 4).

Figure 4.

Example of a WSI that has been manually annotated. (a) Original WSI; (b) annotated WSI. The annotations are color-coded, with red representing the tumor regions, light purple representing non-tumor regions, and dark blue representing regions of interest.

The annotated regions were extracted from the original WSIs, which resulted in the identification of 362 tumor regions and 248 non-tumor regions of interest. These images were split into training and test datasets with a ratio of 80:20, respectively.
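As an illustration of the 80:20 split, the following Python sketch divides each class separately so that both subsets keep the same tumor to non-tumor proportion. The per-class (stratified) split, the fixed random seed, and the placeholder file names are assumptions; the text only states the overall ratio.

```python
import random


def split_80_20(items, train_fraction=0.8, seed=0):
    """Shuffle a copy of the items and cut it into training and test parts."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Placeholder identifiers for the 362 tumor and 248 non-tumor regions.
tumor = [f"tumor_{i}" for i in range(362)]
non_tumor = [f"non_tumor_{i}" for i in range(248)]

tumor_train, tumor_test = split_80_20(tumor)
non_train, non_test = split_80_20(non_tumor)

print(len(tumor_train), len(tumor_test), len(non_train), len(non_test))
```

Under these assumptions the rounding of each class determines the exact subset sizes, which may differ slightly from the counts actually used in the study.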

Since the dataset is relatively small, various image transformations were applied to the training dataset (Table 3). These transformations include rescaling the pixel values by a factor of 1/255 to normalize the images, introducing shearing with a shear intensity of 0.2, rotating the images up to an angle of 0.2 radians, randomly zooming in or out of the images within a range of 0.5, and randomly flipping the inputs horizontally. The aim of these transformations was to increase the variability and complexity of the dataset, leading to better model generalization and performance.

Table 3.

Image transformations applied to the training dataset.

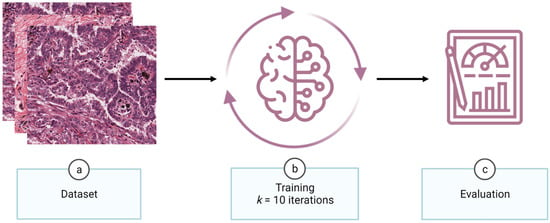

2.3. Training

Following the acquisition and preparation of the dataset, it is now possible to train a NN, which is a crucial step in the development of an AI-based system for image analysis (Figure 5). After the training phase, the performance of the models is assessed using an independent test dataset.

Figure 5.

Training workflow. Having the dataset prepared (a), the neural networks are trained using a 10-fold cross-validation technique (b). Subsequently, the performance of the models is evaluated on a separate test dataset (c).

2.3.1. Neural Networks

Based on the popular CNN architectures and the reviewed studies, three algorithms were selected to be tested with the prepared dataset: AlexNet, GoogLeNet, and ResNet-50. Furthermore, the authors designed and developed 13 additional architectures called CancerDetecNN versions. These architectures were created from scratch with the objective of improving the accuracy of lung cancer detection while also reducing computational costs. These novel architectures were designed to have fewer layers and parameters than the popular CNN models while maintaining high performance on the dataset.

AlexNet

Built by Alex Krizhevsky and his team, AlexNet was presented in 2012 during the ImageNet Large Scale Visual Recognition Challenge (ILSVRC). AlexNet’s architecture consists of eight layers, of which five are convolutional layers and the other three are fully connected layers. The input image has a dimension of 224 × 224 × 3 pixels, and the output of the last layer is fed to a 1000-way softmax, which produces a distribution over the 1000 class labels. AlexNet achieved the best results in the competition, with a top-5 error rate (the percentage of test images for which the correct label was not among the five most probable predicted labels) of 15.3% [32].

GoogLeNet

In 2014, Szegedy and his team presented GoogLeNet, which consists of 22 layers (27 layers if counting pooling) and includes 9 inception modules. The network also contains auxiliary classifiers, each made up of an average pooling layer, a convolution layer, two fully connected layers, and a linear layer with a softmax activation function. During training, these auxiliary classifiers perform a classification based on intermediate features and add the calculated loss to the network’s total loss. GoogLeNet was used in both challenges of the ILSVRC in 2014 (detection and classification). The best classification result was obtained with an ensemble of seven models and 144 crops per image, reaching a top-5 error rate of 6.67% [33].

ResNet

One year later, in 2015, He and his team introduced ResNet with the concept of shortcut connection, which involves skipping one or more layers with the aim of performing identity mapping. The original ResNet had 34 layers, but experiments were later conducted with 50, 101, and 152 layers. Similar to VGG networks, most of ResNet’s convolutional layers have 3 × 3 filters, with batch normalization used after each convolution and before activation. Downsampling is performed by convolution layers with a stride of 2. The identity shortcut connections were inserted when the input and output had the same dimensions. ResNet achieved the best result in the classification challenge, with a top-5 error rate of 3.57% for the test dataset [34].

Transfer learning, a technique where pre-trained weights on a large dataset are used to improve performance on a smaller and related dataset, was also considered for the ResNet since it showed the best top-5 error rate among the three CNNs selected [35]. Even though the dataset of ImageNet is not comparable to the one used in this study, it can provide a useful starting point for training the model. Therefore, training using weights from pre-training in the ImageNet dataset will be performed in addition to the training from scratch.

CancerDetecNN

The authors developed CancerDetecNN in 13 different versions with incremental changes to the architecture in each version:

- Version 0: Composed of 3 layers, the model has the input dimensions set as 512 × 512 × 3 pixels. It starts with a convolution layer with a 3 × 3 pixels kernel size, 32 filters, 2 × 2 pixels strides, and the activation function is the rectified linear unit (ReLU). Then, there is a global average pooling layer followed by a fully connected layer of 1 unit employing the sigmoid activation function;

- Version 1: Introduced a fully connected layer with 512 units and ReLU as an activation function between global average pooling and fully connected layers of Version 0, in order to increase the model’s capacity to capture more complex patterns;

- Version 2: To reduce the dimensionality of the feature maps and enable the learning of more features, a max pooling layer with a pooling size of 2 × 2 pixels was added after the initial convolutional layer. Upon the addition of the new max pooling layer, a new set of convolution and max pooling layers were added, duplicating the identical dimensions and activation functions but with 64 filters rather than 32 in the convolution layer, which allows for learning more complicated features;

- Version 3: After the appended layers in Version 2, a new pair of convolution and max pooling layers were added, with the convolution layer having 128 filters. The increase in the number of filters makes the model more capable of acquiring more information about features and patterns;

- Version 4: Replicated the addition of Version 3;

- Version 5: The max pooling layer positioned before the global average pooling layer was removed to allow keeping more spatial information before the final classification;

- Version 6: The number of filters for the final convolution layer was increased to 256 to enhance the model’s ability to capture more complex features and patterns;

- Version 7: Contrary to the change made in Version 5, the max pooling layer placed before the global average pooling layer was reinserted with a size of 2 × 2 pixels, in order to downsample the spatial dimensions of the feature maps;

- Version 8: Placed a new set of convolution and max pooling layers before the global average pooling layer, one of which included 128 filters, and modified the first fully connected layer units from 512 to 128. While the reduction of units in the fully connected layer reduces the number of parameters and prevents overfitting, the addition of the convolutional layers increases the model’s capacity to learn more features, as described above;

- Version 9: Demonstrated a “U-architecture,” which means it has pairs of convolution layers and max pooling with only the filters changing in the following order: 32–64–128–256–128–64–32. This version also reduced the number of the first fully connected layer units from 128 to 32. The reasons for the changes remain the same as in the above versions;

- Version 10: Reverted the number of units in the first fully connected layer to 512 with the aim of capturing complex patterns;

- Version 11: Removed the previously added sets in Version 9 and changed the convolution layer from 256 to 128 filters. As a result, convolutional layers of 32, 64, and 128 filters were retained, culminating in a CNN with one convolution layer of 32 filters, another of 64 filters, and three of 128 filters. These changes simplified the CNN by reducing its parameters;

- Version 12: Deleted the last set of convolutional layers with 128 filters and max pooling layers, as well as the fully connected layer with 512 units, which reduces the number of parameters, helps prevent overfitting, and makes the model more computationally efficient.
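To make the effect of these incremental changes on model size concrete, the sketch below counts trainable parameters for three versions as read from the list above. It is an estimate under stated assumptions: 3 × 3 kernels with biases in every convolution, no additional layers beyond those described (for example, no batch normalization), and a Version 5 filter sequence of 32, 64, 128, 128 inferred from the descriptions of Versions 2 to 5. The helper names are hypothetical, and the counts are not taken from the study's own table.

```python
def conv2d_params(kernel: int, in_channels: int, filters: int) -> int:
    """Weights plus one bias per filter for a square-kernel convolution."""
    return kernel * kernel * in_channels * filters + filters


def dense_params(in_units: int, out_units: int) -> int:
    """Weights plus one bias per output unit for a fully connected layer."""
    return in_units * out_units + out_units


def count_params(conv_filters, dense_units, in_channels=3, kernel=3):
    """Count parameters for: conv stack -> global average pooling -> dense stack.

    Max pooling and global average pooling have no parameters, so they do not
    appear here; global average pooling outputs one value per filter.
    """
    total, channels = 0, in_channels
    for filters in conv_filters:
        total += conv2d_params(kernel, channels, filters)
        channels = filters
    units = channels
    for out_units in dense_units:
        total += dense_params(units, out_units)
        units = out_units
    return total


# Layer lists are this sketch's reading of the version descriptions.
version_0 = count_params(conv_filters=[32], dense_units=[1])
version_1 = count_params(conv_filters=[32], dense_units=[512, 1])
version_5 = count_params(conv_filters=[32, 64, 128, 128], dense_units=[512, 1])

print(version_0, version_1, version_5)
```

Because pooling and stride settings do not change the parameter count, removing the max pooling layer in Version 5 affects the spatial resolution and the computation per image but not the number of weights.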

Since the objective of this study is to perform binary classification by predicting tumor and non-tumor classes, CNNs such as AlexNet, GoogLeNet, and ResNet-50 were adapted for this task. To achieve this, the final layer of these models was modified to have a fully connected layer with one unit and sigmoid as the activation function. Table 4 presents all used CNNs, as well as their number of parameters.

Table 4.

Used convolutional neural networks and their respective number of parameters.

2.3.2. Performance Metrics

During the analysis of related works (Section 1), it was observed that the most used metrics were accuracy, AUC, F1-score, precision, and recall. To enable and facilitate the comparison of the results with the literature, the following metrics are also used in this article:

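- Accuracy, which measures the proportion of correct classifications over the total number of classifications [36], where TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

- Precision (or positive predictive value), which measures the proportion of true positive predictions over the total number of positive predictions [36]:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

- Recall (or Sensitivity), which measures the proportion of true positive predictions over the total number of actual positive samples [36]:

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

- F1-score, which is the harmonic mean of Precision and Recall [36]:

$$F_1 = 2 \cdot \frac{\mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

- AUC, which measures the performance of a binary classifier by calculating the area under the receiver operating characteristic (ROC) curve, obtained by plotting the true positive rate (TPR) against the false positive rate (FPR) at various classification thresholds [36]:

$$\mathrm{AUC} = \int_{0}^{1} \mathrm{TPR} \, d(\mathrm{FPR})$$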
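The metric definitions above can be checked numerically with a short Python sketch. The confusion-matrix counts and scores are made-up illustrative values, not results from the study, and the function names are hypothetical. The AUC is computed with the rank-based formulation (the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one, with ties counted as one half), which is equivalent to the area under the ROC curve.

```python
def confusion_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Accuracy, precision, recall, and F1-score from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def roc_auc(labels, scores) -> float:
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Illustrative values only.
metrics = confusion_metrics(tp=40, tn=45, fp=5, fn=10)
auc = roc_auc(labels=[1, 1, 0, 0], scores=[0.9, 0.4, 0.5, 0.1])
print(metrics, auc)
```

The pairwise AUC loop is quadratic in the number of samples, which is acceptable for a small test set; a sort-based implementation would be preferable for large ones.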

2.3.3. k-Fold Cross-Validation

The methodology selected to assess the effectiveness of the models and tune them to avoid overfitting or underfitting was k-fold cross-validation. This technique splits a dataset into k equally sized “folds” (or subsets) and then trains the model k times, each time using a different fold as the validation set and the remaining folds as the training set. At the end of all iterations, the model’s performance is evaluated by calculating the average of the validation values provided for each metric in each k iteration.

Based on the size of the training dataset present in this study and considering the availability of a high-performance computer to train the CNNs, the authors set the value of k to 10. This value was chosen because lung cancer detection is a critical task that requires high precision and having k = 10 will give more precise values for the model’s performance.
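The k-fold procedure described above can be sketched in plain Python as follows. The sketch assumes the samples were shuffled beforehand and uses contiguous folds whose sizes differ by at most one; `train_and_score` is a hypothetical callback standing in for training a CNN on the training indices and returning one validation metric.

```python
def k_fold_indices(n_samples: int, k: int = 10):
    """Yield (train_idx, val_idx) pairs; each sample is validated exactly once."""
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, val
        start += size


def cross_validate(n_samples: int, train_and_score, k: int = 10) -> float:
    """Average the validation score returned for each of the k folds."""
    scores = [train_and_score(train, val)
              for train, val in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)


# Toy callback: returns the validation fraction instead of a real metric.
mean_score = cross_validate(25, lambda train, val: len(val) / 25)
print(mean_score)
```

With k = 10, each model is trained on roughly 90% of the training data and validated on the remaining 10%, at the cost of ten training runs per configuration.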

3. Results and Discussion

In this section, the results of the training of the CNNs are analyzed and discussed in comparison with the literature results.

3.1. Training Characteristics

As previously mentioned, the dataset was split into training and test sets at an 80:20 ratio, respectively, and 10-fold cross-validation was employed on the training dataset. Each neural network was trained using six different numbers of epochs: 50, 100, 150, 200, 250, and 300. The Adam optimization algorithm was used, with binary cross-entropy as the loss function. Table 5 summarizes the training characteristics used.
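For reference, the binary cross-entropy loss for a single-unit sigmoid output can be written in a few lines of Python. This is a generic sketch of the standard formula, not the library implementation used in the study; the clipping constant `eps` is an assumption added to avoid taking the logarithm of zero.

```python
import math


def binary_cross_entropy(y_true, y_prob, eps: float = 1e-7) -> float:
    """Mean binary cross-entropy between 0/1 labels and predicted probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)  # keep p strictly inside (0, 1)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


# Illustrative values: 1 = tumor, 0 = non-tumor.
good = binary_cross_entropy([1, 0], [0.9, 0.1])
bad = binary_cross_entropy([1, 0], [0.1, 0.9])
print(good, bad)
```

Confident correct predictions give a loss close to zero, while confident wrong predictions are penalized heavily, which is the behavior the optimizer exploits during training.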

Table 5.

Training characteristics.

3.2. Hardware and Software Configuration

Each CNN was implemented using the TensorFlow library and trained on an HPC (high-performance computer) with the following specifications:

- Central Processing Unit(s): 2 × CPU AMD EPYC ROME 7452 32C/64T 2.35 GHz 128 MB SP3 155 W;

- Random Access Memory: 16 × DIMM HYNIX 32 GB DDR4 3200 MHz NR ECCR;

- Graphics Processing Unit(s): 4 × NVIDIA TESLA A100 40 GB;

- Storage: 2 × SSD WESTERN DIGITAL DC SN640 3.84 TB NVME PCI-E 2.5″ 0.8 DWPD.

3.3. Evaluating the Performance of Existing Convolutional Neural Networks

The performance evaluation of current CNNs is important for understanding their behavior when trained on the acquired dataset and comparing their performance values to those reported in the literature.

As previously stated, three existing architectures were chosen to be trained from scratch to assess the performance: AlexNet, GoogLeNet, and ResNet-50. Weights from pre-training on the ImageNet dataset were used to train ResNet-50 as well. Table 6 presents the performance results for each CNN in the test dataset.

Table 6.

Performance results for existing convolutional neural networks in test dataset.

According to the accuracy results in Table 6, the ResNet-50 architecture outperformed all other evaluated CNNs, reaching the highest accuracy of 0.839 at 250 epochs. In contrast, the pre-trained version of ResNet-50 only achieved an accuracy value of 0.816, which was the lowest accuracy value among the best outcomes provided by the other architectures. One probable cause for this difference is that the weights of the pre-trained ResNet-50 were not able to adapt to the features of the current dataset, leading to less accurate predictions.

In terms of precision and recall, the pre-trained ResNet-50 achieved the highest values of 0.929 and 0.859, respectively. These high values suggest that transfer learning could enhance the ability to capture relevant features and generalize them to the current dataset. However, these results were achieved at different numbers of epochs, with precision being maximized when trained with 300 epochs and recall with 150 epochs.

Regarding AUC, the pre-trained ResNet-50 model showed the best result (0.860) at 300 epochs, followed by the ResNet-50 trained from scratch with a score of 0.856 at 250 epochs. This result suggests that transfer learning could improve the model’s ability to distinguish between positive and negative classes. However, the pre-trained ResNet-50 required an additional 50 epochs compared to the ResNet-50 trained from scratch to gain only 0.004 in AUC, a difference that may not be large enough to justify the extra training time.

For the final metric, F1-score, ResNet-50 presented the best score of 0.864 at 250 epochs, followed by the pre-trained architecture with a score of 0.857 at 150 epochs. These values indicate that the ResNet-50 architecture was able to achieve a balance between precision and recall.

Comparing Training Time of Existing CNNs for Optimal Performance

Following the review of the obtained results, the CNNs with the best and most consistent results for the metrics were selected to compare the time spent training each CNN until it reached its best values (Table 7).

Table 7.

Best results achieved by each existing convolutional neural network.

Evaluating the results, ResNet-50 trained from scratch outperformed the other CNNs in almost every metric; the exception was recall, where it fell 0.023 short of the highest value, obtained by the pre-trained ResNet-50. However, it needed 3 h and 23 min of training, corresponding to 250 epochs, while GoogLeNet achieved competitive values in a shorter training time of 2 h and 7 min and fewer epochs (200). The superior performance of ResNet-50 can be attributed to its deep architecture, which allows it to capture complex patterns and features in the dataset. The inception architecture of GoogLeNet, in turn, allows it to capture a wide range of features while reducing the number of parameters and computations needed.
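To put the two training budgets on a common footing, the reported wall-clock times can be divided by the corresponding epoch counts. The short sketch below does only this arithmetic for the figures quoted above; the helper name `minutes_per_epoch` is illustrative and is not part of the study's code, and the averages ignore any fixed start-up overhead.

```python
def minutes_per_epoch(hours: int, minutes: int, epochs: int) -> float:
    """Average wall-clock minutes per epoch for a reported training run."""
    total_minutes = hours * 60 + minutes
    return total_minutes / epochs


# Training times and epoch counts as reported in the text.
resnet50 = minutes_per_epoch(3, 23, 250)   # 203 min over 250 epochs
googlenet = minutes_per_epoch(2, 7, 200)   # 127 min over 200 epochs

print(f"ResNet-50: {resnet50:.3f} min/epoch")
print(f"GoogLeNet: {googlenet:.3f} min/epoch")
```

On these averages, a GoogLeNet epoch costs roughly a fifth less than a ResNet-50 epoch, so the shorter total training time comes from both fewer epochs and cheaper epochs.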

3.4. Evaluating the Performance of CancerDetecNN

After analyzing the results of the existing CNNs on the acquired dataset, the CancerDetecNN versions were trained in order to evaluate and compare their performance. For clarity and focus, this paper limits its scope to the four versions that produced the best and most consistent outcomes (Table 8): Versions 5, 6, 7, and 12.

Table 8.

Performance results for CancerDetecNN in test dataset.

The accuracy results of the four CancerDetecNN versions range from 0.761 to 0.885, with the fifth version achieving the highest score at 200 epochs. The two lowest values (0.761 and 0.766) were both obtained by Version 12, at 100 and 250 epochs, respectively. Compared to the other versions, the lower accuracy of Version 12 could be attributed to the fact that its final max pooling layer is followed by a global average pooling layer, which could reduce spatial resolution and potentially discard information.
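The loss of spatial information mentioned above can be illustrated with a toy example. The sketch below is not the Version 12 architecture; it uses hypothetical 4 × 4 single-channel activation maps and plain-Python stand-ins for the two pooling operations to show that max pooling still preserves where a response occurred, whereas the subsequent global average pooling reduces each map to one number.

```python
def max_pool_2x2(fmap):
    """2x2 max pooling with stride 2 on a single-channel 2D feature map."""
    rows, cols = len(fmap), len(fmap[0])
    return [
        [max(fmap[r][c], fmap[r][c + 1], fmap[r + 1][c], fmap[r + 1][c + 1])
         for c in range(0, cols - 1, 2)]
        for r in range(0, rows - 1, 2)
    ]


def global_average_pool(fmap):
    """Collapse a 2D feature map into a single average value."""
    values = [v for row in fmap for v in row]
    return sum(values) / len(values)


# Hypothetical activation maps: the same response in opposite corners.
top_left = [[8, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
bottom_right = [[0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 8]]

pooled_a = max_pool_2x2(top_left)        # position still distinguishable
pooled_b = max_pool_2x2(bottom_right)
gap_a = global_average_pool(pooled_a)    # position no longer distinguishable
gap_b = global_average_pool(pooled_b)

print(pooled_a, pooled_b)
print(gap_a, gap_b)
```

After max pooling the two maps still differ, but after global average pooling both yield the same value, so any layer that follows cannot tell where in the tile the response was located.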

The highest (0.983) and lowest (0.871) precision scores were both obtained by Version 7, at 50 and 250 epochs, respectively. However, the recall scores must also be taken into account when assessing the overall effectiveness of the model. The precision values indicate that all versions rarely classified negative samples as positive. Version 7 showed a trade-off between precision and recall: at 250 epochs, it had the lowest precision but the highest recall score (0.863). Version 12 had the lowest recall score of 0.626 at 100 epochs, indicating that the model failed to identify several positive cases.

The AUC scores mostly range from 0.890 to 0.930, indicating that the CancerDetecNN versions generally distinguish positive and negative samples well. The highest AUC score of 0.930 was achieved by Version 7 at 100 epochs, while the lowest score of 0.830 was obtained by Version 12 at 250 epochs.
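The interpretation of AUC as the ability to separate positive from negative samples can be made concrete with a rank-based computation: the AUC equals the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one. The sketch below is a minimal illustration on hypothetical labels and scores; it is not the evaluation code used in this study.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical tile labels (1 = tumor) and predicted tumor probabilities.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.1]
auc = roc_auc(labels, scores)
print(f"AUC = {auc:.3f}")
```

In this toy case, eight of the nine positive/negative pairs are ordered correctly; the only error is the tumor tile scored 0.4, which ranks below the non-tumor tile scored 0.5.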

Comparing the F1-score values, Versions 5 and 6 obtained the highest score of 0.897, although at different epochs (200 and 300, respectively). This indicates that they achieved a balance between precision and recall, which is essential for medical image analysis tasks where both false positives and false negatives can have severe consequences, such as patients undergoing unnecessary further treatments that could have negative effects on their health and quality of life or delaying the correct diagnosis and required treatment.
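Because the F1-score is the harmonic mean of precision and recall, it can be recomputed from those two values. The sketch below checks this for Version 5 using the precision (0.972) and recall (0.833) reported for it in the conclusions of this paper; the function name is illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall reported for CancerDetecNN Version 5.
v5_f1 = f1_score(0.972, 0.833)
print(round(v5_f1, 3))
```

The result rounds to the reported F1-score of 0.897. Since the harmonic mean is pulled toward the smaller of the two values, a model cannot reach a high F1-score by maximizing precision alone.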

Comparing Training Time of CancerDetecNNs for Optimal Performance

Upon reviewing the results, the versions of CancerDetecNN with the best and most consistent metric values were compared in terms of the time required to train each one until it achieved its best values (Table 9).

Table 9.

Best results achieved by each CancerDetecNN Version.

The four versions of CancerDetecNN achieved their best results when trained for 100, 200, or 300 epochs. Specifically, Version 7 showed its best performance at 100 epochs, Version 5 at 200 epochs, and Versions 6 and 12 at 300 epochs. Version 5 outperformed all the other versions, being surpassed only by Version 6 in the recall metric. Furthermore, as stated before, Versions 5 and 6 achieved the same F1-score. This could indicate that Version 6 had lower precision (the proportion of true positives among all positive predictions) but compensated with higher recall (the proportion of true positives among all actual positive samples). However, achieving this required an additional 100 epochs of training, which corresponded to an extra 8 min of training time. One possible explanation for the better performance of Version 5 is that Version 6 had one more convolutional layer with 256 filters, which may have caused overfitting and reduced its generalization ability.
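To give a sense of how much capacity one additional convolutional layer can add, the sketch below counts the trainable parameters of a standard 2D convolution. Only the 256 filters come from the text; the 3 × 3 kernel size and the 256 input channels are assumptions made purely for illustration, since the layer configuration of Version 6 is not given in this section.

```python
def conv2d_params(kernel_size: int, in_channels: int, filters: int,
                  use_bias: bool = True) -> int:
    """Trainable parameters of a standard 2D convolution with square kernels."""
    weights = kernel_size * kernel_size * in_channels * filters
    biases = filters if use_bias else 0
    return weights + biases


# 256 filters as stated in the text; kernel size and input channels are assumed.
extra_params = conv2d_params(kernel_size=3, in_channels=256, filters=256)
print(f"{extra_params:,} additional trainable parameters")
```

Under these assumed settings, the single extra layer adds about 590 thousand trainable parameters, which is consistent with the suggestion that the added capacity could favor overfitting on a small dataset.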

Overall, Version 5 of CancerDetecNN demonstrated superior performance, producing the confusion matrix shown in Table 10 for the test dataset.

Table 10.

Confusion matrix for CancerDetecNN Version 5.
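As a reminder of how the reported metrics relate to a binary confusion matrix, the sketch below derives them from the four cell counts. The counts used here are hypothetical placeholders chosen for easy arithmetic; they are not the values of Table 10.

```python
def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Derive common classification metrics from confusion-matrix counts."""
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
        "specificity": tn / (tn + fp) if (tn + fp) else 0.0,
        # Equivalent to the harmonic mean of precision and recall.
        "f1": 2 * tp / (2 * tp + fp + fn) if (2 * tp + fp + fn) else 0.0,
    }


# Hypothetical counts, NOT taken from Table 10.
metrics = binary_metrics(tp=80, fp=5, fn=20, tn=95)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these placeholder counts, the few false positives give a high precision, while the larger number of false negatives lowers recall, the same qualitative pattern discussed for the CancerDetecNN versions.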

3.5. Comparative Analysis of the Convolutional Neural Networks

Compared with the literature that tested the same CNNs, and considering that the dataset in this study was in most cases smaller, the existing CNNs reached lower values for metrics such as precision, recall, AUC, and F1-score. This suggests either that the present dataset did not contain enough samples for the CNNs to extract all the important features and learn the patterns, or that it was more challenging than the datasets used in previous studies. This underscores the importance of evaluating CNNs on diverse datasets to ensure their generalizability.

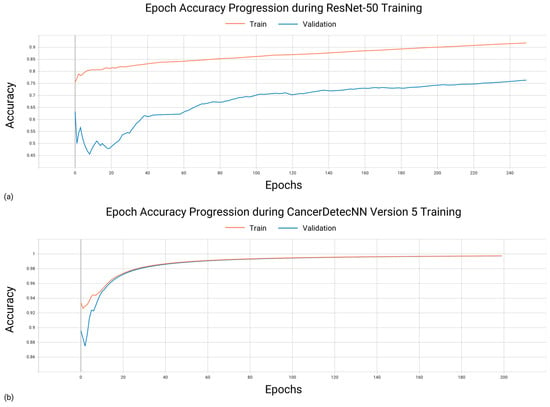

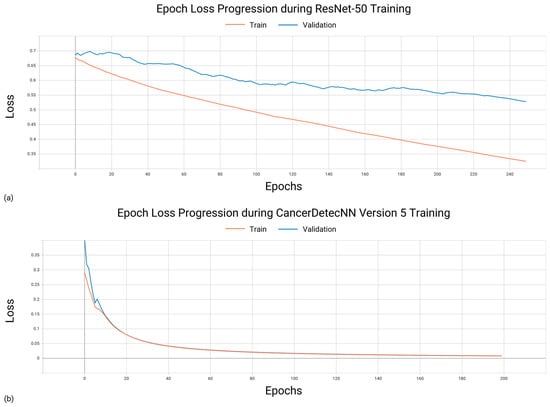

After analyzing the performance results for the existing CNNs and the CancerDetecNN versions, and comparing the accuracy (Figure A1) and loss (Figure A2) curves of the best existing CNN (ResNet-50) and the best version of CancerDetecNN (Version 5), it is possible to state that Version 5 outperformed all existing CNNs on the provided dataset. Version 5 gave better results for all metrics except recall, where its best value was only 0.026 below the best value obtained by the pre-trained ResNet-50. Additionally, to achieve its highest performance, the ResNet-50 model, which can be considered the best-performing existing model on the provided dataset, was trained for 250 epochs, which took 3 h and 23 min. In contrast, the fifth version of CancerDetecNN took only 1 h and 32 min to train for 200 epochs while still achieving better results for the main metrics, a saving of 1 h and 51 min of training time. These findings highlight the superior performance of CancerDetecNN Version 5 in comparison to the existing CNNs when using the same dataset.
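The training-time saving quoted above follows directly from the two reported durations. The sketch below reproduces the subtraction with Python's standard `datetime.timedelta` and also expresses the saving relative to the ResNet-50 training time; the variable names are illustrative.

```python
from datetime import timedelta

# Training times reported in the text.
resnet50_time = timedelta(hours=3, minutes=23)   # 250 epochs
version5_time = timedelta(hours=1, minutes=32)   # 200 epochs

saved = resnet50_time - version5_time
hours, remainder = divmod(int(saved.total_seconds()), 3600)
minutes = remainder // 60
relative_saving = saved / resnet50_time  # fraction of ResNet-50's training time

print(f"Saved: {hours} h {minutes} min ({relative_saving:.0%} of ResNet-50's time)")
```

The difference is 1 h and 51 min, which corresponds to a little over half of the time needed to train ResNet-50 to its best values.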

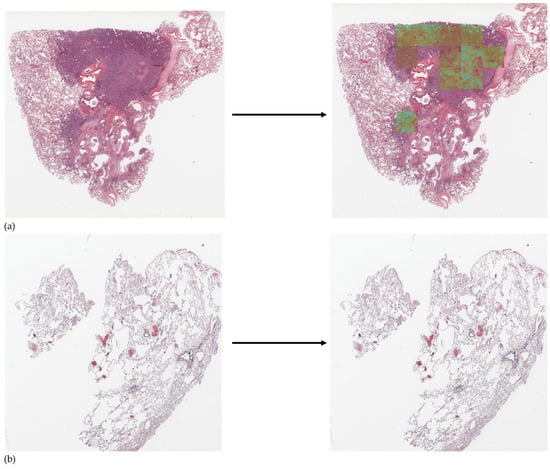

3.6. Detection Using CancerDetecNN Version 5

The heatmaps generated by the best-performing model (CancerDetecNN Version 5) were examined to identify regions that were incorrectly classified as either tumors or non-tumors (Figure 6). These areas were then annotated to be used in subsequent training of the model to increase its performance values.

Figure 6.

Examples of heatmaps generated for tumoral (a) and non-tumoral (b) WSIs. When presented with a tumor region, a color gradient is displayed from lighter to darker to give a more intuitive perspective of the tumor location. Otherwise, when there is no tumor area, the heatmap is not applied, showing only the lung tissue.
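The behavior described in the caption, where an overlay is produced only when a tumor region is present, can be sketched as follows. This is a simplified illustration and not the implementation used in this study: the tile coordinates, the 0.5 decision threshold, and the function name `build_heatmap` are all assumptions.

```python
def build_heatmap(tile_probs, n_rows, n_cols, threshold=0.5):
    """Return a grid of tumor probabilities, or None when no tile is flagged.

    tile_probs maps (row, col) tile positions to a predicted tumor probability.
    Tiles below the threshold stay at 0.0 so that only suspected tumor regions
    would be colored when the grid is rendered over the slide.
    """
    grid = [[0.0] * n_cols for _ in range(n_rows)]
    flagged = False
    for (row, col), prob in tile_probs.items():
        if prob >= threshold:
            grid[row][col] = prob
            flagged = True
    return grid if flagged else None


# Hypothetical predictions for a tumoral and a non-tumoral slide (2 x 2 tiles).
tumoral = {(0, 0): 0.1, (0, 1): 0.7, (1, 0): 0.95, (1, 1): 0.3}
non_tumoral = {(0, 0): 0.1, (0, 1): 0.2, (1, 0): 0.05, (1, 1): 0.3}

print(build_heatmap(tumoral, 2, 2))
print(build_heatmap(non_tumoral, 2, 2))
```

In a rendering step, the stored probabilities could be mapped to the lighter-to-darker gradient mentioned in the caption, while a `None` result would leave the lung tissue image unchanged.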

4. Conclusions and Future Work

The development of systems for optimizing lung cancer diagnosis is a growing field, with several research approaches emerging to enhance current procedures. In this context, this paper presents the development of a new learning algorithm (CancerDetecNN) whose main focus is evaluating the presence or absence of tumor tissue in lung WSIs while reducing the computational cost required to train the neural networks. This could improve lung cancer diagnosis accuracy and decrease the time spent by the pathologist on this diagnosis. Along with the CancerDetecNN versions, established CNNs (AlexNet, GoogLeNet, and ResNet-50) were trained and tested on datasets made up of tumor and non-tumor tiles extracted from lung WSIs.

When evaluating the performance of the CancerDetecNN versions and comparing them to existing CNN models, it was found that Version 5 outperformed all existing CNNs on the provided dataset, achieving higher precision (0.972), AUC (0.923), and F1-score (0.897) values. The only metric where CancerDetecNN Version 5 did not perform better than the existing models was recall (0.833), but the difference was only 0.026. The pre-trained ResNet-50 model achieved the best result for recall, while ResNet-50 trained from scratch, the best existing model overall, took significantly longer to train, requiring 1 h and 51 min more than CancerDetecNN Version 5.

The fundamental limitation of this study is the relatively small number of annotated WSIs present in the dataset. The lack of annotated datasets of adequate size for this task is a common issue in medical imaging, and it may have impacted the performance of the CNNs, making them fail to capture the variability and complexity present in real-world scenarios. Furthermore, the limited diversity of samples in the dataset may also limit the generalizability of the developed model. Different patient cohorts, imaging acquisition techniques, and datasets can affect the performance of the developed models.

Future research and development of CancerDetecNN could involve expanding the dataset to include more diverse samples to test the model’s generalizability, with the aim of making CancerDetecNN a continuously learning algorithm. Additionally, investigating the use of ensemble models, as some authors have already started exploring, could enhance the model’s performance. Further phases of this project include the application of the best model in a medical system for the analysis of lung WSIs and testing neural networks for image segmentation, such as Mask R-CNN. Moreover, the potential of fractal geometry in the development of CNNs capable of capturing complex patterns in lung cancer WSIs will be explored, with the aim of improving performance [37].

Overall, the creation of a specific model for lung cancer detection leveraged domain-specific knowledge and an optimized model architecture, allowing for greater accuracy and specificity, which can contribute to better outcomes in lung cancer diagnosis.

Author Contributions

Conceptualization, N.F.; data curation, N.F. and S.C.; formal analysis, N.F.; funding acquisition, V.C.; investigation, N.F.; methodology, N.F.; project administration, V.C.; resources, N.F., S.C. and V.C.; software, N.F.; supervision, S.C. and V.C.; validation, N.F. and S.C.; visualization, S.C. and V.C.; writing—original draft, N.F.; writing—review and editing, N.F., S.C. and V.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by FCT/MCTES grant number UIDB/05549/2020.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. These data can be found here: https://portal.gdc.cancer.gov, accessed on 24 February 2023. The data presented in this study are available on request from the corresponding author. The data are not publicly available because data transfer agreements (DTAs) are required for each research project to protect the confidentiality of the identity of study participants.

Acknowledgments

The authors would like to thank the National Lung Screening Trial, The Cancer Genome Atlas, and the Genomic Data Commons Data Portal for making the lung cancer datasets available, and the Fundação para a Ciência e Tecnologia (FCT) and FCT/MCTES for funding in the scope of the project UIDB/05549/2020.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

This appendix presents the accuracy and loss progression during the training of the existing neural network that presented the best results for the given dataset (ResNet-50) and the best version of CancerDetecNN (CancerDetecNN Version 5).

In Figure A1, CancerDetecNN Version 5 starts with a higher accuracy value than ResNet-50, and its accuracy increases more rapidly. Thus, CancerDetecNN Version 5 exhibits superior performance throughout the training process, consistently achieving higher accuracy scores than ResNet-50.

Figure A1.

Epoch accuracy progression during ResNet-50 (a) and CancerDetecNN Version 5 (b) training.

In Figure A2, the training and validation loss curves for ResNet-50 and CancerDetecNN Version 5 demonstrate that both are converging as the number of epochs increases, but CancerDetecNN Version 5 already starts with a lower loss value than ResNet-50. This means that CancerDetecNN Version 5 outperforms ResNet-50 in terms of lower loss values, indicating improved optimization and learning capabilities.

Figure A2.

Epoch loss progression during ResNet-50 (a) and CancerDetecNN Version 5 (b) training.

References

- World Health Organization. Cancer. Available online: https://www.who.int/news-room/fact-sheets/detail/cancer (accessed on 24 February 2023).

- Nasim, F.; Sabath, B.F.; Eapen, G.A. Lung Cancer. Med. Clin. N. Am. 2019, 103, 463–473.

- Faria, N.; Campelos, S.; Carvalho, V. Cancer Detec—Lung Cancer Diagnosis Support System: First Insights. In Proceedings of the 15th International Joint Conference on Biomedical Engineering Systems and Technologies—Volume 3: BIOINFORMATICS, Online, 9–11 February 2022; pp. 83–90, ISBN 978-989-758-552-4.

- Bade, B.C.; dela Cruz, C.S. Lung Cancer 2020: Epidemiology, Etiology, and Prevention. Clin. Chest Med. 2020, 41, 1–24.

- Duma, N.; Santana-Davila, R.; Molina, J.R. Non–Small Cell Lung Cancer: Epidemiology, Screening, Diagnosis, and Treatment. Mayo Clin. Proc. 2019, 94, 1623–1640.

- Polanski, J.; Jankowska-Polanska, B.; Rosinczuk, J.; Chabowski, M.; Szymanska-Chabowska, A. Quality of Life of Patients with Lung Cancer. Onco Targets Ther. 2016, 9, 1023–1028.

- Travis, W.D.; Brambilla, E.; Nicholson, A.G.; Yatabe, Y.; Austin, J.H.M.; Beasley, M.B.; Chirieac, L.R.; Dacic, S.; Duhig, E.; Flieder, D.B.; et al. The 2015 World Health Organization Classification of Lung Tumors: Impact of Genetic, Clinical and Radiologic Advances Since the 2004 Classification. J. Thorac. Oncol. 2015, 10, 1243–1260.

- Bera, K.; Schalper, K.A.; Rimm, D.L.; Velcheti, V.; Madabhushi, A. Artificial Intelligence in Digital Pathology — New Tools for Diagnosis and Precision Oncology. Nat. Rev. Clin. Oncol. 2019, 16, 703.

- Faria, N.; Campelos, S.; Carvalho, V. Development of a Lung Cancer Diagnosis Support System; IARIA: Porto, Portugal, 2022; pp. 30–32.

- De Koning, H.J.; van der Aalst, C.M.; de Jong, P.A.; Scholten, E.T.; Nackaerts, K.; Heuvelmans, M.A.; Lammers, J.-W.J.; Weenink, C.; Yousaf-Khan, U.; Horeweg, N.; et al. Reduced Lung-Cancer Mortality with Volume CT Screening in a Randomized Trial. N. Engl. J. Med. 2020, 382, 503–513.

- Howlader, N.; Forjaz, G.; Mooradian, M.J.; Meza, R.; Kong, C.Y.; Cronin, K.A.; Mariotto, A.B.; Lowy, D.R.; Feuer, E.J. The Effect of Advances in Lung-Cancer Treatment on Population Mortality. N. Engl. J. Med. 2020, 383, 640.

- Knight, S.B.; Crosbie, P.A.; Balata, H.; Chudziak, J.; Hussell, T.; Dive, C. Progress and Prospects of Early Detection in Lung Cancer. Open Biol. 2017, 7, 170070.

- Bi, W.L.; Hosny, A.; Schabath, M.B.; Giger, M.L.; Birkbak, N.J.; Mehrtash, A.; Allison, T.; Arnaout, O.; Abbosh, C.; Dunn, I.F.; et al. Artificial Intelligence in Cancer Imaging: Clinical Challenges and Applications. CA Cancer J. Clin. 2019, 69, 127–157.

- Gour, M.; Jain, S.; Sunil Kumar, T. Residual Learning Based CNN for Breast Cancer Histopathological Image Classification. Int. J. Imaging Syst. Technol. 2020, 30, 621–635.

- Garg, R.; Maheshwari, S.; Shukla, A. Decision Support System for Detection and Classification of Skin Cancer Using CNN. Adv. Intell. Syst. Comput. 2021, 1189, 578–586.

- Ezhilarasi, R.; Varalakshmi, P. Tumor Detection in the Brain Using Faster R-CNN. In Proceedings of the 2018 2nd International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud) (I-SMAC), Palladam, India, 30–31 August 2018; pp. 388–392.

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.W.M.; van Ginneken, B.; Sánchez, C.I. A Survey on Deep Learning in Medical Image Analysis. Med. Image Anal. 2017, 42, 60–88.

- Yamashita, R.; Nishio, M.; Do, R.K.G.; Togashi, K. Convolutional Neural Networks: An Overview and Application in Radiology. Insights Imaging 2018, 9, 611–629.

- Ardila, D.; Kiraly, A.P.; Bharadwaj, S.; Choi, B.; Reicher, J.J.; Peng, L.; Tse, D.; Etemadi, M.; Ye, W.; Corrado, G.; et al. End-to-End Lung Cancer Screening with Three-Dimensional Deep Learning on Low-Dose Chest Computed Tomography. Nat. Med. 2019, 25, 954–961.

- Wang, S.; Chen, A.; Yang, L.; Cai, L.; Xie, Y.; Fujimoto, J.; Gazdar, A.; Xiao, G. Comprehensive Analysis of Lung Cancer Pathology Images to Discover Tumor Shape and Boundary Features That Predict Survival Outcome. Sci. Rep. 2018, 8, 10393.

- Coudray, N.; Ocampo, P.S.; Sakellaropoulos, T.; Narula, N.; Snuderl, M.; Fenyö, D.; Moreira, A.L.; Razavian, N.; Tsirigos, A. Classification and Mutation Prediction from Non–Small Cell Lung Cancer Histopathology Images Using Deep Learning. Nat. Med. 2018, 24, 1559–1567.

- Burlutskiy, N.; Gu, F.; Wilen, L.K.; Backman, M.; Micke, P. A Deep Learning Framework for Automatic Diagnosis in Lung Cancer. arXiv 2018, arXiv:1807.10466.

- Li, Z.; Hu, Z.; Xu, J.; Tan, T.; Chen, H.; Duan, Z.; Liu, P.; Tang, J.; Cai, G.; Ouyang, Q.; et al. Computer-Aided Diagnosis of Lung Carcinoma Using Deep Learning—A Pilot Study. arXiv 2018, arXiv:1803.05471.

- Yu, K.H.; Wang, F.; Berry, G.J.; Ré, C.; Altman, R.B.; Snyder, M.; Kohane, I.S. Classifying Non-Small Cell Lung Cancer Types and Transcriptomic Subtypes Using Convolutional Neural Networks. J. Am. Med. Inform. Assoc. 2020, 27, 757–769.

- Saric, M.; Russo, M.; Stella, M.; Sikora, M. CNN-Based Method for Lung Cancer Detection in Whole Slide Histopathology Images. In Proceedings of the 2019 4th International Conference on Smart and Sustainable Technologies (SpliTech), Split, Croatia, 18–21 June 2019.

- Hatuwal, B.K.; Chand Thapa, H. Lung Cancer Detection Using Convolutional Neural Network on Histopathological Images. Int. J. Comput. Trends Technol. 2020, 68, 21–24.

- Chen, C.L.; Chen, C.C.; Yu, W.H.; Chen, S.H.; Chang, Y.C.; Hsu, T.I.; Hsiao, M.; Yeh, C.Y.; Chen, C.Y. An Annotation-Free Whole-Slide Training Approach to Pathological Classification of Lung Cancer Types Using Deep Learning. Nat. Commun. 2021, 12, 1193.

- Jehangir, B.; Nayak, S.R.; Shandilya, S. Lung Cancer Detection Using Ensemble of Machine Learning Models. In Proceedings of the Confluence 2022—12th International Conference on Cloud Computing, Data Science and Engineering, Noida, India, 27–28 January 2022; pp. 411–415.

- Wahid, R.R.; Nisa, C.; Amaliyah, R.P.; Puspaningrum, E.Y. Lung and Colon Cancer Detection with Convolutional Neural Networks on Histopathological Images. AIP Conf. Proc. 2023, 2654, 020020.

- National Cancer Institute. NLST—The Cancer Data Access System. Available online: https://cdas.cancer.gov/nlst/ (accessed on 24 February 2023).

- National Cancer Institute. The Cancer Genome Atlas Program (TCGA). Available online: https://www.cancer.gov/ccg/research/genome-sequencing/tcga (accessed on 24 February 2023).

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Adv. Neural Inf. Process. Syst. 2012, 25.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 1–12 June 2015; pp. 1–9.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Pan, S.J.; Yang, Q. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.

- Müller, D.; Soto-Rey, I.; Kramer, F. Towards a Guideline for Evaluation Metrics in Medical Image Segmentation. BMC Res. Notes 2022, 15, 210.

- Roberto, G.F.; Lumini, A.; Neves, L.A.; do Nascimento, M.Z. Fractal Neural Network: A New Ensemble of Fractal Geometry and Convolutional Neural Networks for the Classification of Histology Images. Expert Syst. Appl. 2021, 166, 114103.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).