The Concept of Interactive Dynamic Intelligent Virtual Sensors (IDIVS): Bridging the Gap between Sensors, Services, and Users through Machine Learning

Abstract

1. Introduction

Related Work and Research Gap

2. The IDIVS Concept and Application Domains

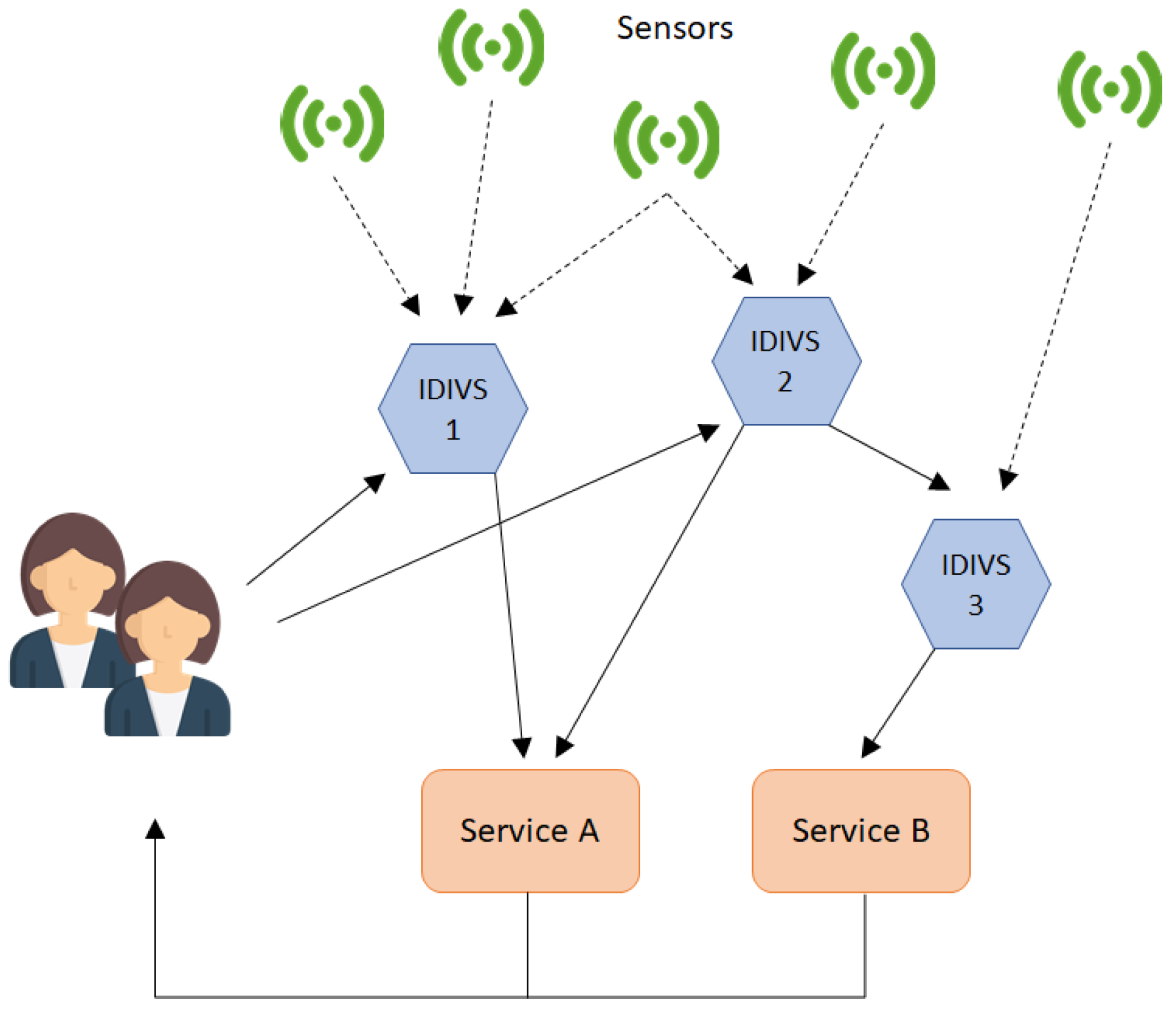

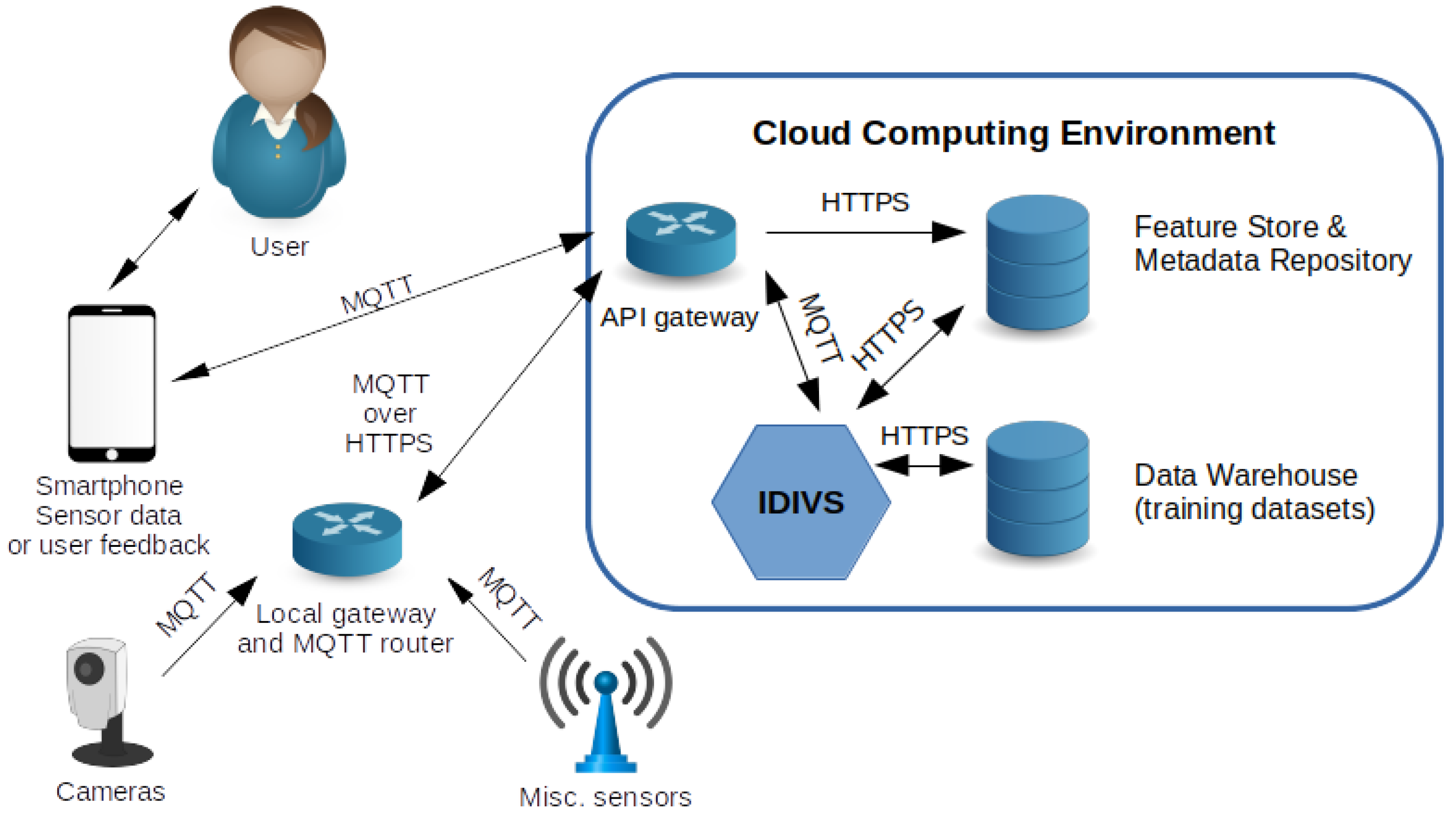

2.1. The IDIVS Concept

- Service user, i.e., a user of a service relying on the IDIVS output;

- Interactive service user, i.e., a user that also interacts with the system, providing input to the system that can help the system learn/adapt.

- System configurator, i.e., a user defining which set of sensors/IDIVSs to use as input, which states to detect, where to distribute the output, etc.;

- IDIVS teacher, e.g., a user orchestrating scenarios that are used for training IDIVSs in a systematic manner.

2.2. Capabilities

- Redundant/competitive fusion: data from multiple sensors of the same type, representing the same information area, are integrated to increase the accuracy of the information about the state of an environment;

- Cooperative fusion: data from multiple sensors of different types, representing the same information area, are integrated to obtain more information about the state of an environment;

- Complementary fusion: data from multiple sensors representing different environments are integrated to obtain complete information about a state. This information is usually provided by multiple IDIVSs connected to different environments.
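The three fusion types can be illustrated with a minimal Python sketch. The function names, the occupancy states, and the CO2 threshold of 800 ppm are hypothetical choices made only for illustration; they are not taken from the IDIVS design.

```python
from statistics import mean


def redundant_fusion(readings):
    """Same-type sensors covering the same area: average to reduce noise."""
    return mean(readings)


def cooperative_fusion(motion_detected, co2_ppm, co2_threshold=800.0):
    """Different-type sensors covering the same area: derive a richer state.

    The threshold is an assumed value, not a calibrated one.
    """
    high_co2 = co2_ppm > co2_threshold
    if motion_detected and high_co2:
        return "occupied_crowded"
    if motion_detected or high_co2:
        return "occupied"
    return "empty"


def complementary_fusion(room_states):
    """Outputs of several (hypothetical) room-level IDIVSs covering different
    environments: combine them into one building-level state."""
    occupied = sorted(room for room, state in room_states.items() if state != "empty")
    return {"occupied_rooms": occupied, "building_empty": not occupied}
```

In this sketch, the complementary case consumes the outputs of the other two, which mirrors the idea that the complete picture is usually assembled from multiple IDIVSs.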

- Fuse sensor data to produce a sensor-like output (numerical or categorical) about the state of a specific environment based on sensor-like input (numerical or categorical) relevant for the environment;

- Adapt to changes in the set of sensors, including integrating new relevant sensor values at run-time;

- Use labelled data to improve accuracy by machine learning, e.g., based on user feedback relevant for the current IDIVS output, a batch of pre-labelled data, or a full or partial model of another IDIVS (e.g., by transfer learning);

- Support self-assessment of the accuracy of its output;

- Learn to detect new states of its environment (not specified at design time);

- Provide information about the sensor input it uses with respect to how important different sensor values are for generating the output (e.g., a type of information gain);

- Anomaly detection and diagnosis, e.g., detecting tampering attempts on input sensor streams.
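Three of these capabilities (learning from labelled feedback, adapting to a changing set of sensors at run-time, and self-assessing the output) can be sketched together. The example below assumes an incremental categorical naive Bayes learner; this is a stand-in chosen for brevity, not the learner used by the authors, and the class name `DynamicNaiveBayes` and the sensor names are hypothetical.

```python
import math
from collections import defaultdict


class DynamicNaiveBayes:
    """Incremental naive Bayes over whichever sensors appear in a reading."""

    def __init__(self):
        self.label_counts = defaultdict(int)
        # value_counts[sensor][label][value] -> number of labelled observations
        self.value_counts = {}

    def learn(self, reading, label):
        """Update counts from one labelled reading (dict: sensor name -> value)."""
        self.label_counts[label] += 1
        for sensor, value in reading.items():
            per_label = self.value_counts.setdefault(sensor, {})
            per_label.setdefault(label, defaultdict(int))[value] += 1

    def predict(self, reading):
        """Return (label, confidence); the confidence is a rough self-assessment."""
        if not self.label_counts:
            return None, 0.0  # cold start: nothing learned yet
        total = sum(self.label_counts.values())
        log_scores = {}
        for label, n in self.label_counts.items():
            log_p = math.log(n / total)
            for sensor, value in reading.items():
                per_label = self.value_counts.get(sensor)
                if per_label is None:
                    continue  # sensor never seen with a label: ignore it
                distinct = {v for counts in per_label.values() for v in counts}
                counts = per_label.get(label, {})
                seen = sum(counts.values())
                # Laplace smoothing keeps unseen values from zeroing the score
                log_p += math.log((counts.get(value, 0) + 1) / (seen + len(distinct)))
            log_scores[label] = log_p
        best = max(log_scores, key=log_scores.get)
        norm = sum(math.exp(s - log_scores[best]) for s in log_scores.values())
        return best, 1.0 / norm
```

Because readings are dictionaries keyed by sensor name, a sensor that joins later simply contributes new keys, and a sensor that disappears is skipped at prediction time. The normalised posterior is only a crude proxy for the accuracy self-assessment listed above.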

2.3. IDIVS Input and Output

2.4. Application Areas

2.4.1. Smart Buildings

2.4.2. Smart Mobility

2.4.3. Smart Health

2.4.4. Smart Learning

2.5. Deployment

3. Results and Discussion

3.1. Learning Capabilities

- Cold start (no or very little initial training data);

- Dynamicity of available sensors;

- Concept drift.
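Of the three challenges, concept drift lends itself to a compact illustration. The sketch below assumes a simple heuristic: compare the error rate in a recent window of predictions with the error rate in an initial reference window, and flag drift when the gap exceeds a threshold. The class name, window size, and threshold are hypothetical, and established drift detectors are considerably more refined.

```python
from collections import deque


class WindowDriftDetector:
    """Flags suspected concept drift when the recent error rate rises
    clearly above the error rate of an initial reference window."""

    def __init__(self, window=50, threshold=0.2):
        self.reference = deque(maxlen=window)  # filled once, then frozen
        self.recent = deque(maxlen=window)     # sliding window of latest errors
        self.threshold = threshold

    def update(self, error):
        """Record 1 for a wrong prediction, 0 for a correct one.

        Returns True when drift is suspected, otherwise False.
        """
        if len(self.reference) < self.reference.maxlen:
            self.reference.append(error)
            return False
        self.recent.append(error)
        if len(self.recent) < self.recent.maxlen:
            return False
        ref_rate = sum(self.reference) / len(self.reference)
        recent_rate = sum(self.recent) / len(self.recent)
        return recent_rate - ref_rate > self.threshold
```

In an interactive setting, the error signal could come from user feedback on the current output; a raised flag might then trigger more frequent queries to the user or a partial reset of the model.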

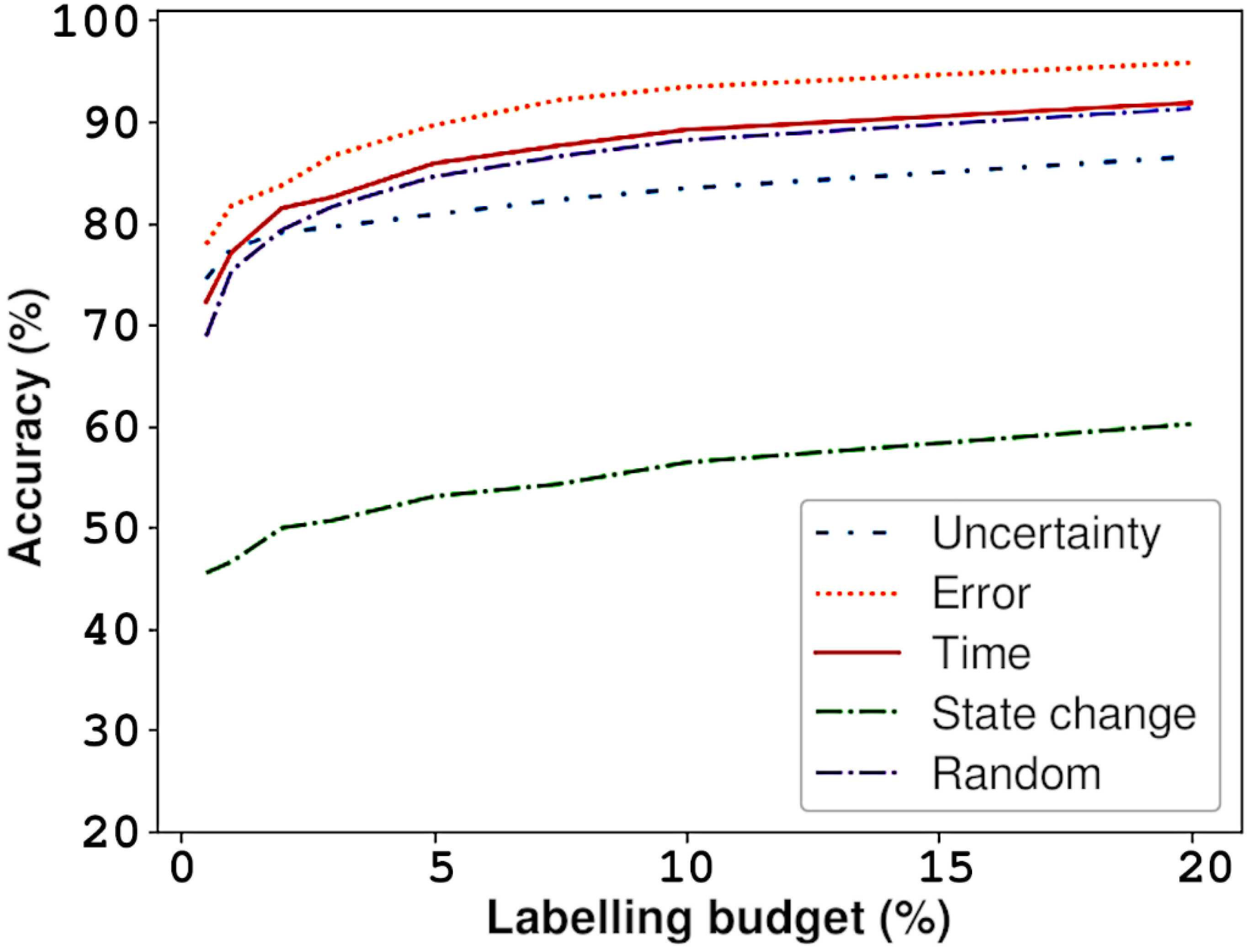

3.2. Interactive Learning/Human-In-The-Loop

3.3. Managing Dynamicity and Cold Start

3.4. Transfer Learning

3.5. End-User Interface for Automated IDIVS Generation

3.6. IDIVS and Information Security

- (i) Adversarial attacks. An adversary may trick the IDIVS ML components, e.g., the Online Incremental Learner, by furnishing malicious input that causes the system to make a false prediction. An example of this is having the adversary intentionally misclassify an activity type.

- (ii) Data poisoning attacks. An adversary may manipulate the input data being used by the IDIVS in a coordinated manner, potentially compromising the entire system. An example of this is having the adversary tamper with the input data to impact the ability of the IDIVS to output correct predictions.

- (iii) Online system manipulation attacks. An adversary may nudge the still-learning IDIVS, i.e., when operating using online learning, in the wrong direction. An example of this is having the adversary reprogram the IDIVS to capture environmental states that were not intended.

- (iv) Transfer learning attacks. An adversary may be able to devise attacks using a pre-trained (full/partial) IDIVS model against a tuned task-specific model. An example of this is having the adversary reverse-engineer the transfer layer of the IDIVS to discover attributes, sensing modalities, and augmented target tasks from the original model.

- (v) Data confidentiality attacks. An adversary may be able to extract confidential or sensitive data that were used for training and teaching the IDIVS. An example of this is having the adversary observe data flows being exchanged between the IDIVS teacher and the learning components.
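The online system manipulation attack can be made concrete with a toy example. The sketch below assumes a deliberately naive online learner that predicts the label most often reported for an input pattern; the class name, the pattern, and the labels are hypothetical and do not describe the actual IDIVS learning components.

```python
from collections import Counter, defaultdict


class OnlineMajorityLearner:
    """Toy online learner: predicts the label seen most often for a pattern."""

    def __init__(self):
        self.counts = defaultdict(Counter)

    def learn(self, pattern, label):
        self.counts[pattern][label] += 1

    def predict(self, pattern):
        seen = self.counts.get(pattern)
        return seen.most_common(1)[0][0] if seen else None


learner = OnlineMajorityLearner()
pattern = ("motion", "door_open")

for _ in range(5):
    learner.learn(pattern, "occupied")  # honest feedback
before = learner.predict(pattern)

for _ in range(6):
    learner.learn(pattern, "empty")     # adversarial feedback
after = learner.predict(pattern)
```

After five honest labels the learner predicts "occupied", and six adversarial labels are enough to flip it to "empty". A learner that weights all feedback equally can therefore be steered by whoever supplies the most labels, which is why the trustworthiness of feedback sources matters for an interactive system.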

3.7. IDIVS Compared to Virtual Sensors

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Persson, J.A.; Bugeja, J.; Davidsson, P.; Holmberg, J.; Kebande, V.R.; Mihailescu, R.-C.; Sarkheyli-Hägele, A.; Tegen, A. The Concept of Interactive Dynamic Intelligent Virtual Sensors (IDIVS): Bridging the Gap between Sensors, Services, and Users through Machine Learning. Appl. Sci. 2023, 13, 6516. https://doi.org/10.3390/app13116516
