Response Prediction for Linear and Nonlinear Structures Based on Data-Driven Deep Learning

Abstract

1. Introduction

1.1. Structural Response Analysis

1.2. AI in Earthquake Engineering

2. RNN and LSTM Models for Structural Response Prediction

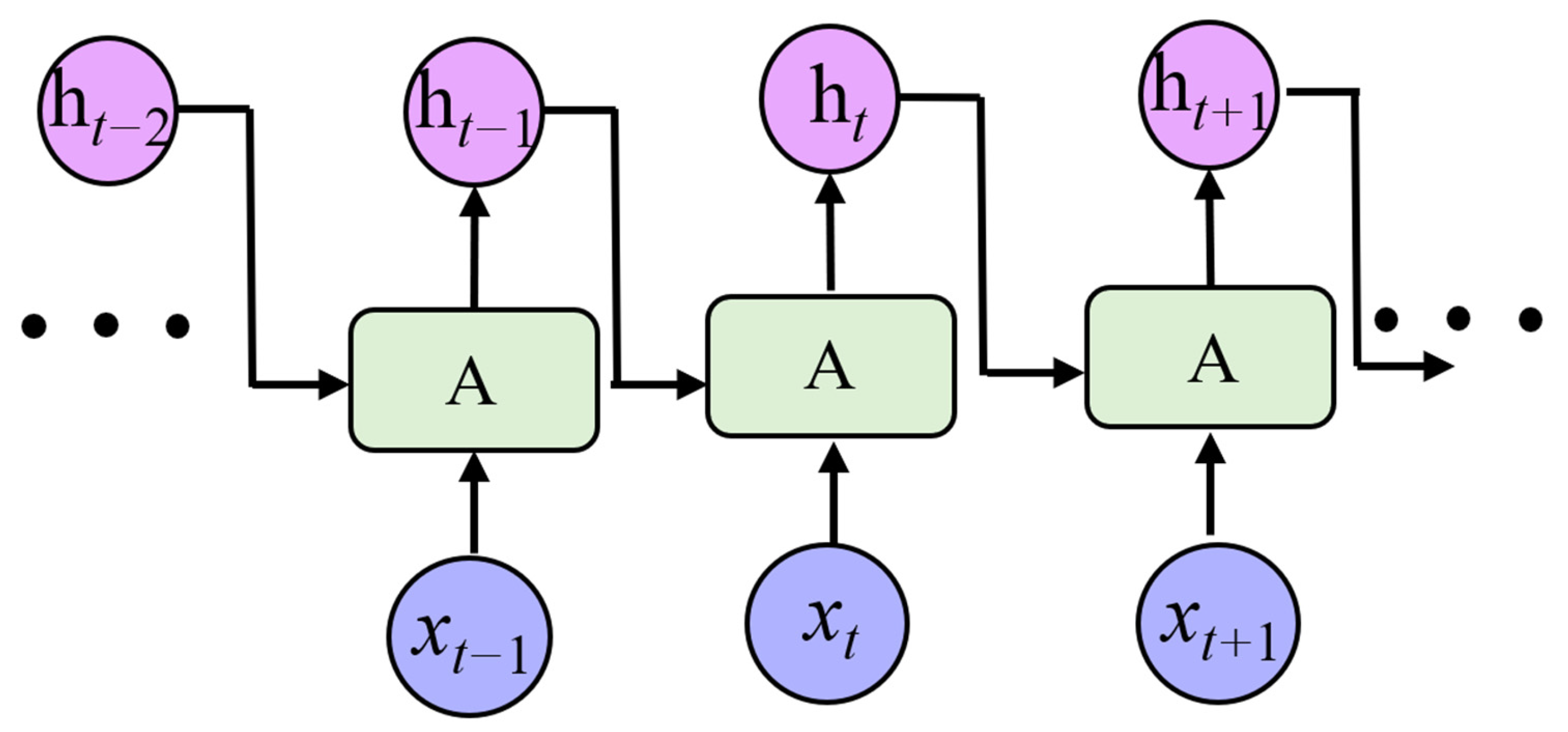

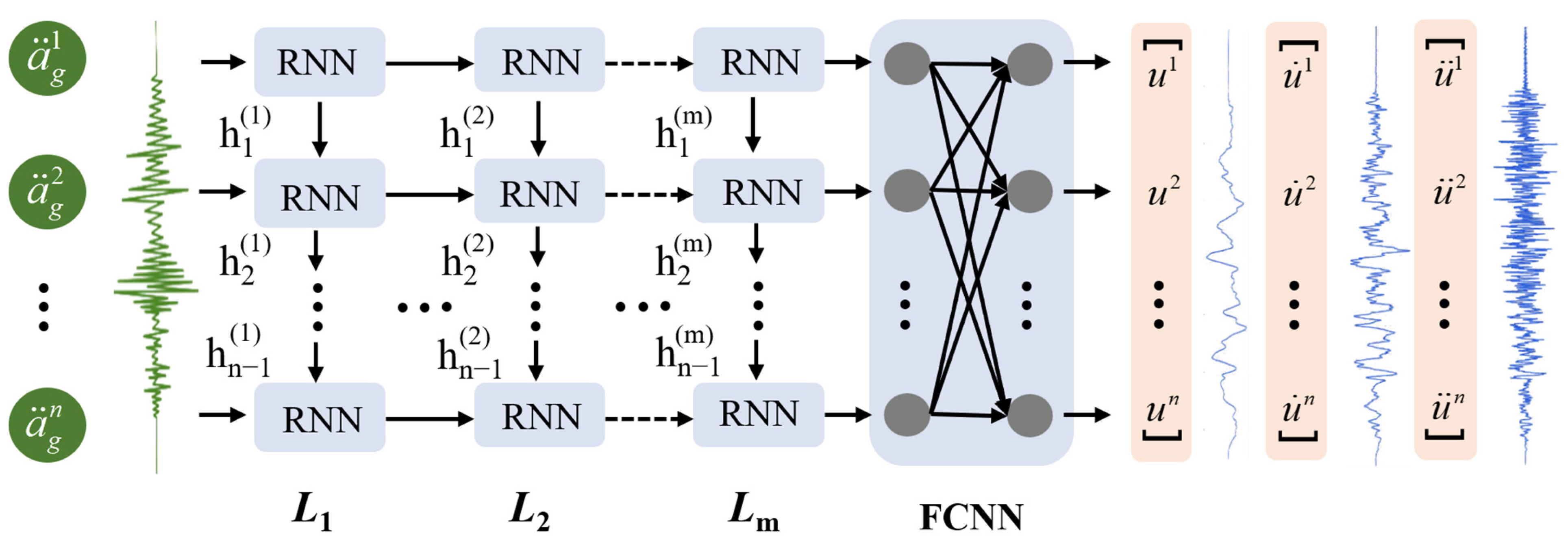

2.1. RNN Model

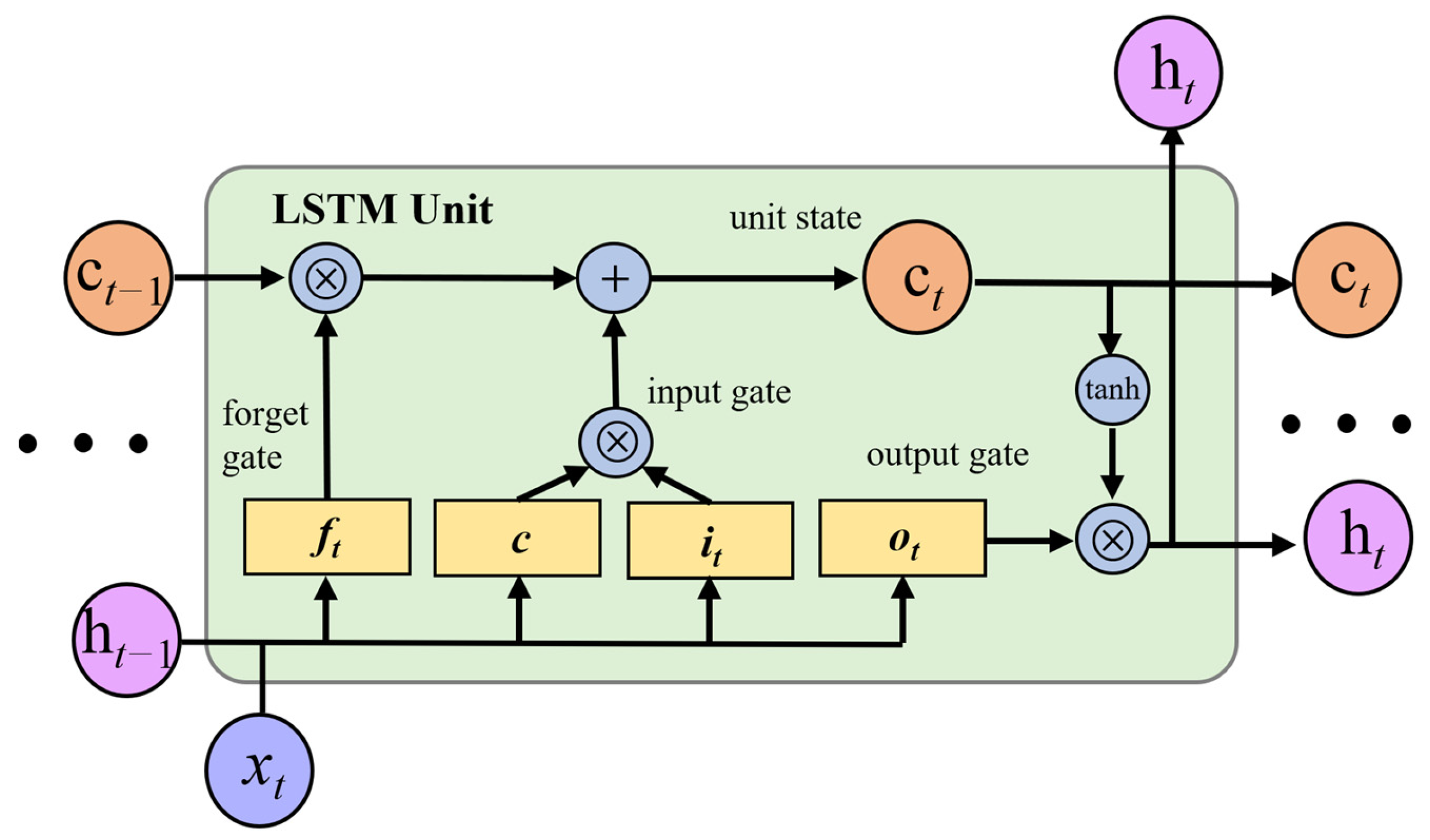

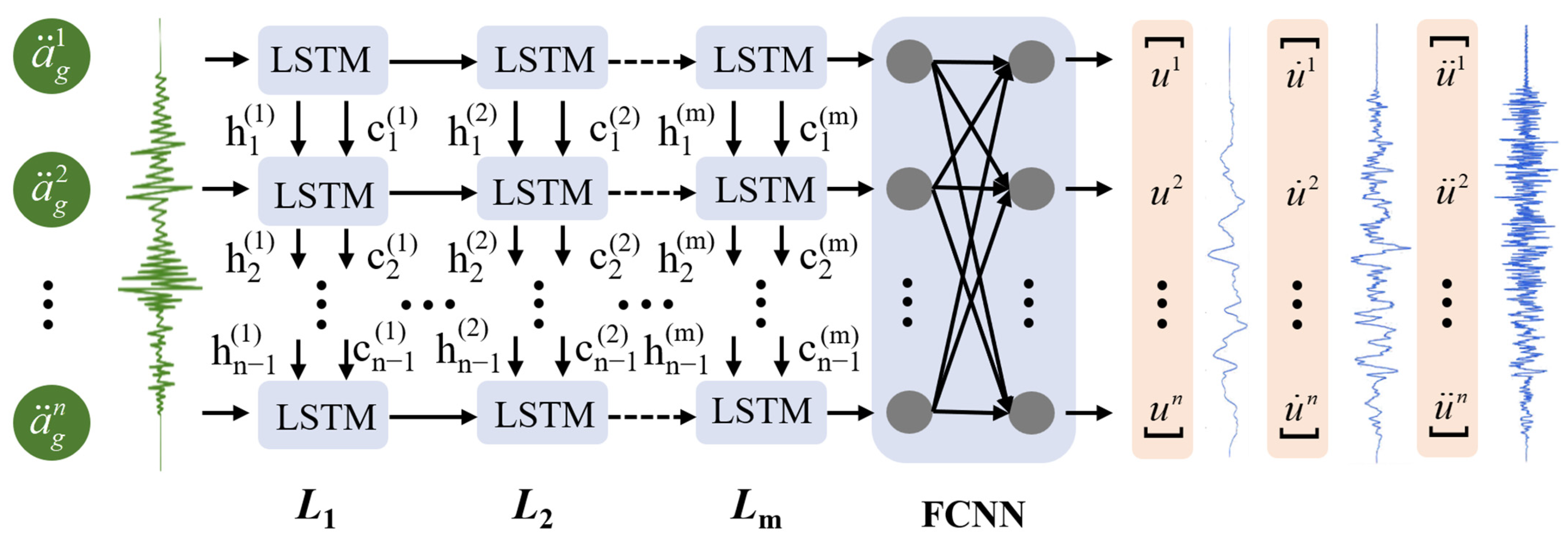

2.2. LSTM Model

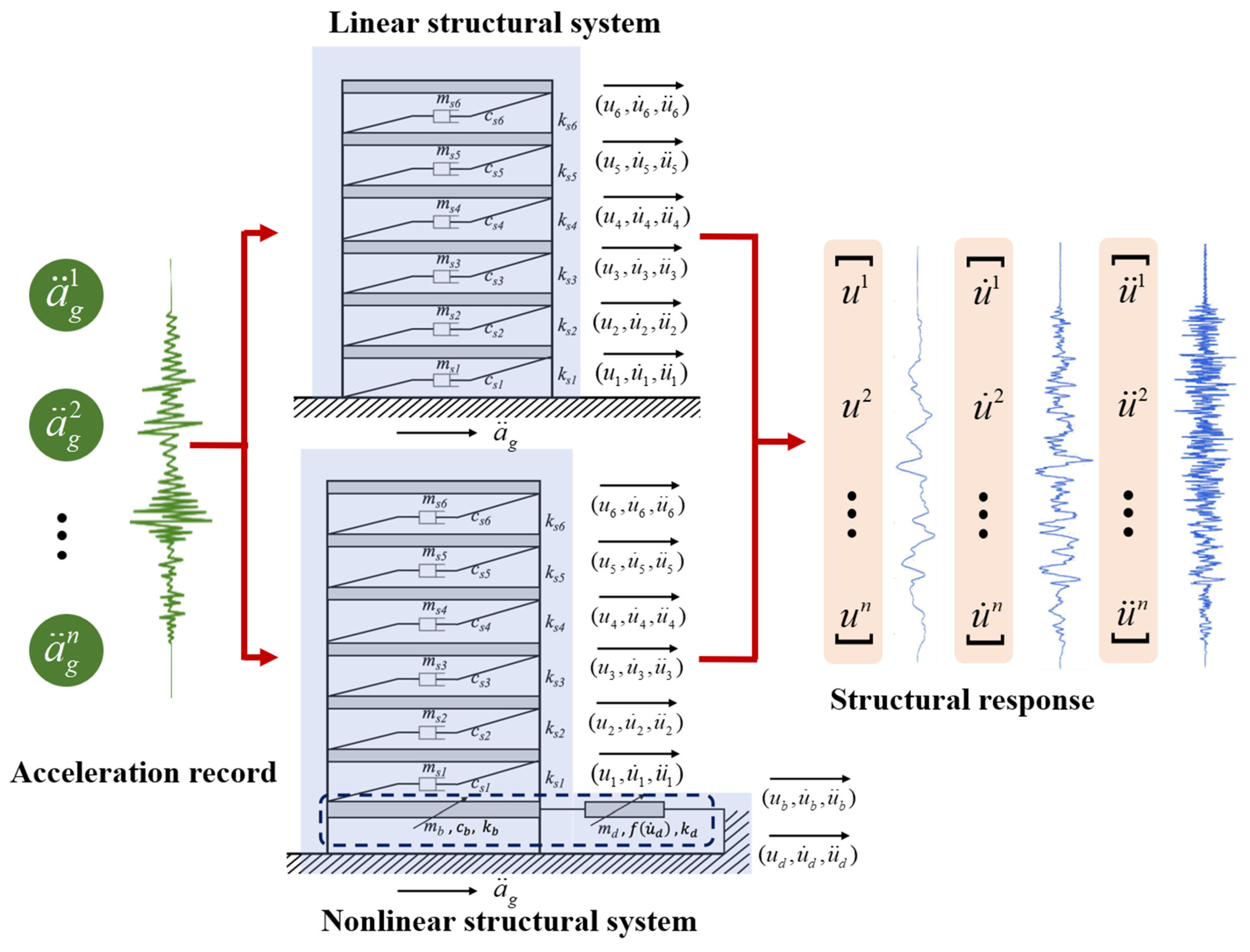

3. Construction of Dataset and Training of Models

3.1. Linear and Nonlinear Structural Models

3.2. Clustering of Seismic Waves Based on TSkmeans

3.2.1. Selection of Seismic Excitations

- The seismograph station is located on a free field or the ground floor of a low building;

- Strong earthquake records with magnitudes above 5.5;

- Velocity time history of seismic records with or without the pulse waveform;

- Fault distance ranges from 0 to 100 km.
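The selection criteria above amount to a simple filter over candidate records. The sketch below is illustrative only: the `Record` class, its field names, and the last (rejected) record are hypothetical, the three accepted records reuse values listed in Appendix A, and the magnitude threshold is treated as inclusive on the assumption that the magnitude 5.50 records in Appendix A were meant to pass.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Record:
    """Hypothetical container for one strong-motion record."""
    event: str
    station: str
    fault_distance_km: float
    magnitude: float
    free_field_or_ground_floor: bool  # not tabulated in Appendix A; assumed here
    pulse_value: Optional[float] = None  # None when no pulse entry is listed


def meets_criteria(rec: Record,
                   min_magnitude: float = 5.5,
                   max_distance_km: float = 100.0) -> bool:
    """Apply the station, magnitude, and fault-distance criteria.

    Records with and without a pulse waveform are both accepted,
    so the pulse entry does not take part in the filter.
    """
    return (rec.free_field_or_ground_floor
            and rec.magnitude >= min_magnitude  # inclusive: an assumption
            and 0.0 <= rec.fault_distance_km <= max_distance_km)


records = [
    Record("Cape Mendocino", "Petrolia", 8.18, 7.01, True, 2.996),
    Record("Friuli, Italy-03", "Buia", 11.98, 5.50, True),
    Record("Tabas, Iran", "Tabas", 2.05, 7.35, True, 6.188),
    # Hypothetical record that fails the magnitude and distance criteria.
    Record("Example event", "Example station", 140.0, 5.1, True),
]

selected = [r.station for r in records if meets_criteria(r)]
print(selected)
```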

3.2.2. Clustering of Seismic Records

- Input: the sequence set X, the number of clustering centers k, the equilibrium parameters, and the random initial values W and C.

- Repeat:

  - Step 1: With W and C fixed, solve for U.

  - Step 2: With W fixed and the U obtained in Step 1, solve for C.

  - Step 3: With the U and C obtained in Steps 1 and 2, solve for W by quadratic programming.

- Output: once convergence is reached, output U, C, and W.
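The alternating updates listed above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. It assumes the usual time-series k-means objective, in which each cluster p has a weight vector over time stamps that sums to one and neighbouring weights are encouraged to be similar through a penalty (alpha/2) * sum_j (w_pj - w_p,j+1)^2. The quadratic program of Step 3 is replaced here by projected gradient descent onto the probability simplex, and the weights start uniform rather than random for reproducibility. All function names are hypothetical.

```python
import numpy as np


def project_simplex(v):
    """Euclidean projection of v onto {w : w >= 0, sum(w) = 1}."""
    u = np.sort(v)[::-1]
    css = np.cumsum(u) - 1.0
    idx = np.arange(1, v.size + 1)
    rho = np.nonzero(u - css / idx > 0)[0][-1]
    theta = css[rho] / (rho + 1)
    return np.maximum(v - theta, 0.0)


def solve_weights(d, alpha, w0, n_steps=300):
    """Step 3 stand-in: minimise d.w + (alpha/2) * sum (w_j - w_{j+1})^2
    over the simplex by projected gradient descent (not a QP solver)."""
    m = d.size
    diff_op = np.diff(np.eye(m), axis=0)   # (m-1, m) first-difference operator
    laplacian = diff_op.T @ diff_op        # gradient of the penalty is alpha * L w
    step = 1.0 / (4.0 * alpha)             # largest eigenvalue of L is below 4
    w = w0.copy()
    for _ in range(n_steps):
        w = project_simplex(w - step * (d + alpha * (laplacian @ w)))
    return w


def tskmeans_sketch(X, k, alpha, n_iter=20, seed=0):
    """Alternate the U, C, and W updates until the assignments stop changing."""
    rng = np.random.default_rng(seed)
    n, m = X.shape
    C = X[rng.choice(n, size=k, replace=False)].copy()  # random initial centers
    W = np.full((k, m), 1.0 / m)                        # uniform initial weights
    labels = np.full(n, -1)
    for _ in range(n_iter):
        # Step 1: assign each sequence to the center with the smallest
        # weighted squared distance (this defines U).
        sq = (X[:, None, :] - C[None, :, :]) ** 2
        dist = np.einsum("pj,ipj->ip", W, sq)
        new_labels = dist.argmin(axis=1)
        if np.array_equal(new_labels, labels):
            break
        labels = new_labels
        for p in range(k):
            members = X[labels == p]
            if len(members) == 0:
                continue  # leave an empty cluster unchanged
            # Step 2: update the center as the mean of its members.
            C[p] = members.mean(axis=0)
            # Step 3: update the time-stamp weights of cluster p.
            d = ((members - C[p]) ** 2).sum(axis=0)
            W[p] = solve_weights(d, alpha, W[p])
    return labels, C, W
```

A larger `alpha` keeps the weights of adjacent time stamps closer together, while a small `alpha` lets the weights concentrate on the time stamps where the members of a cluster agree most.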

3.3. Input and Output of Neural Network Model

3.4. Model Training

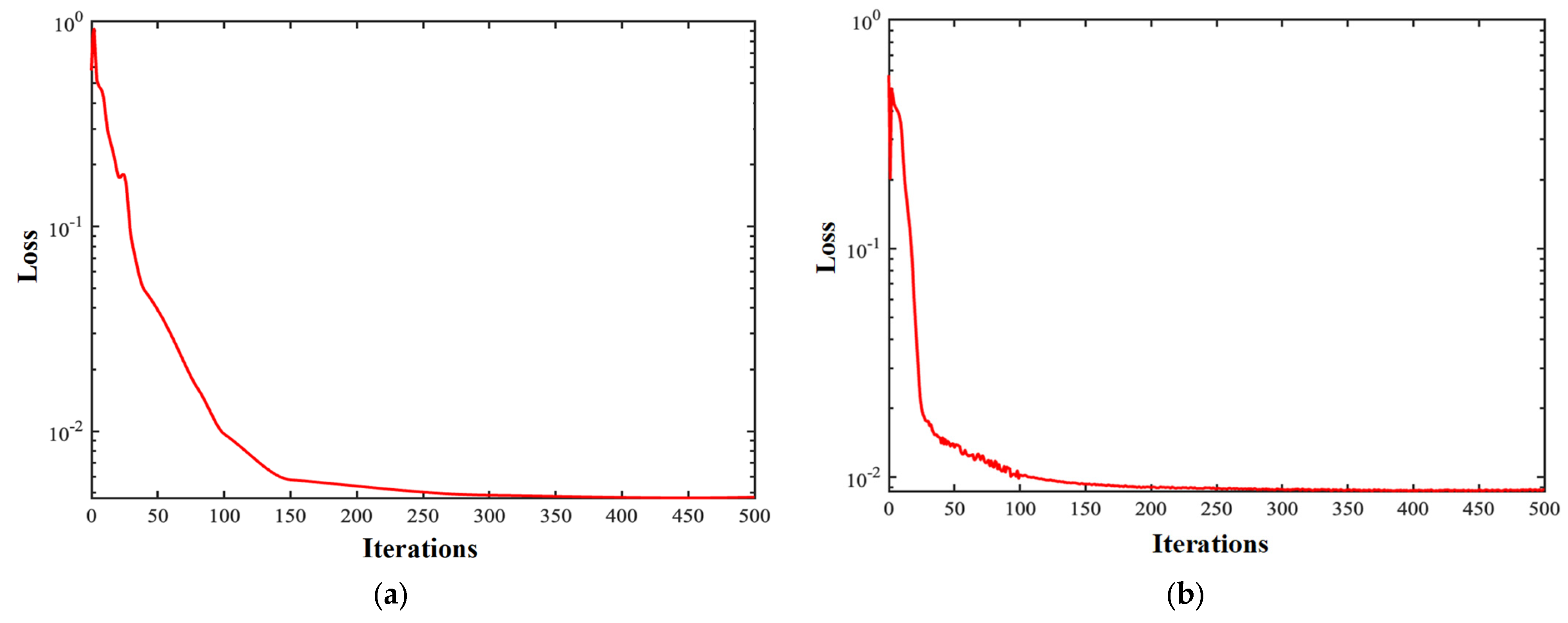

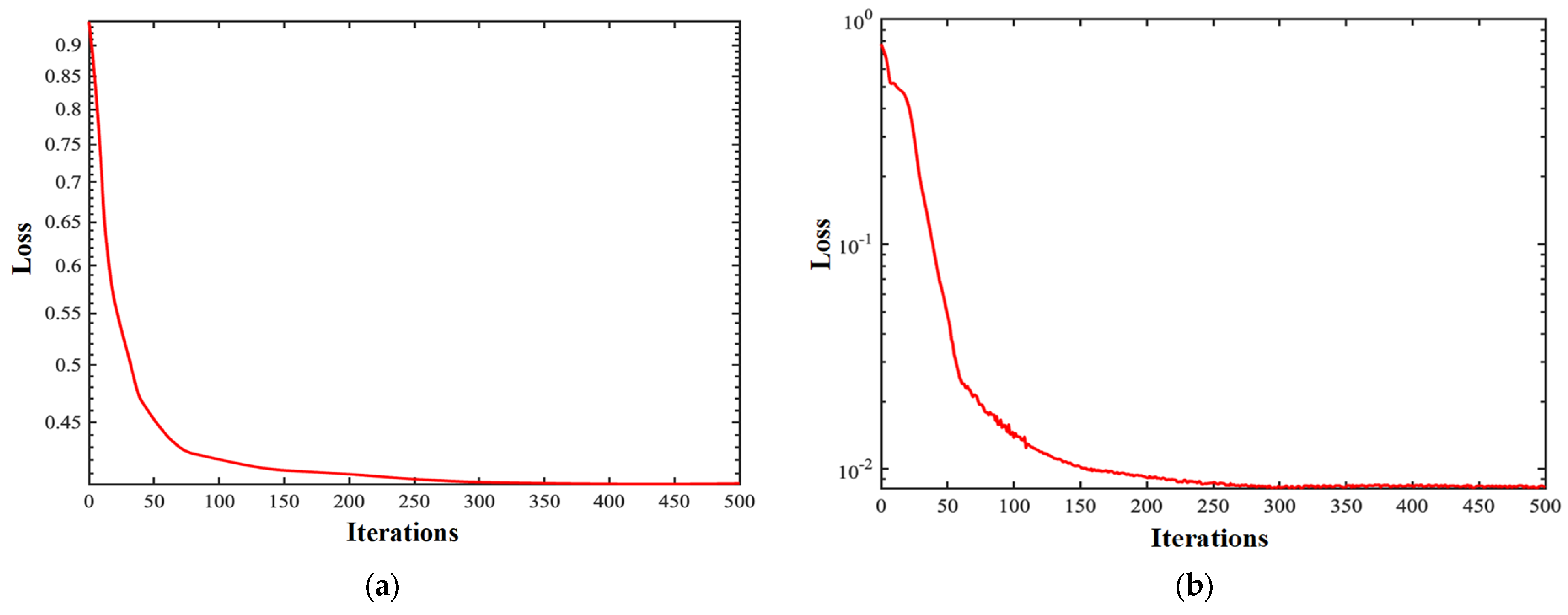

3.4.1. Model Training for the Linear Structure

3.4.2. Model Training for the Nonlinear Structure

4. Results and Discussion

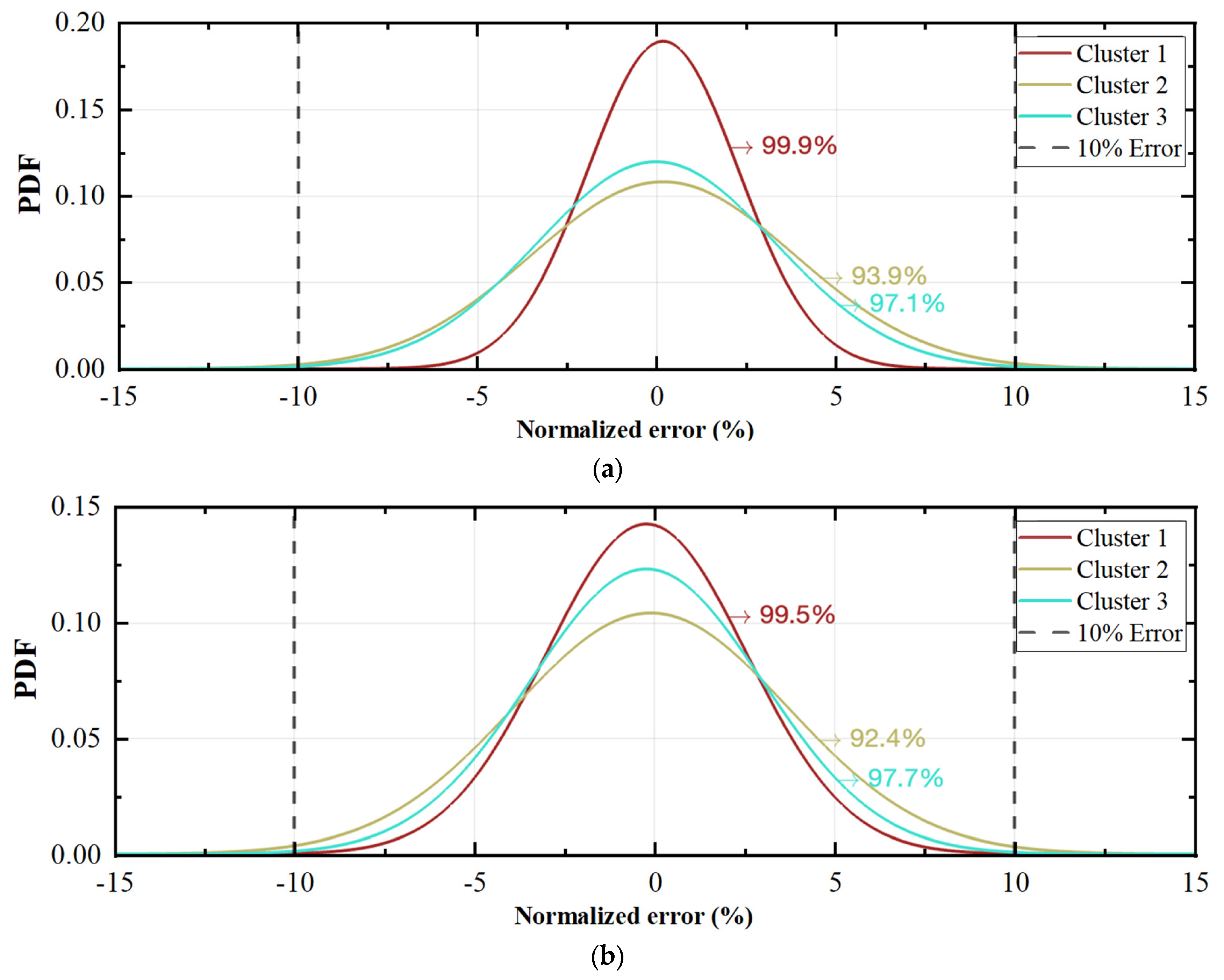

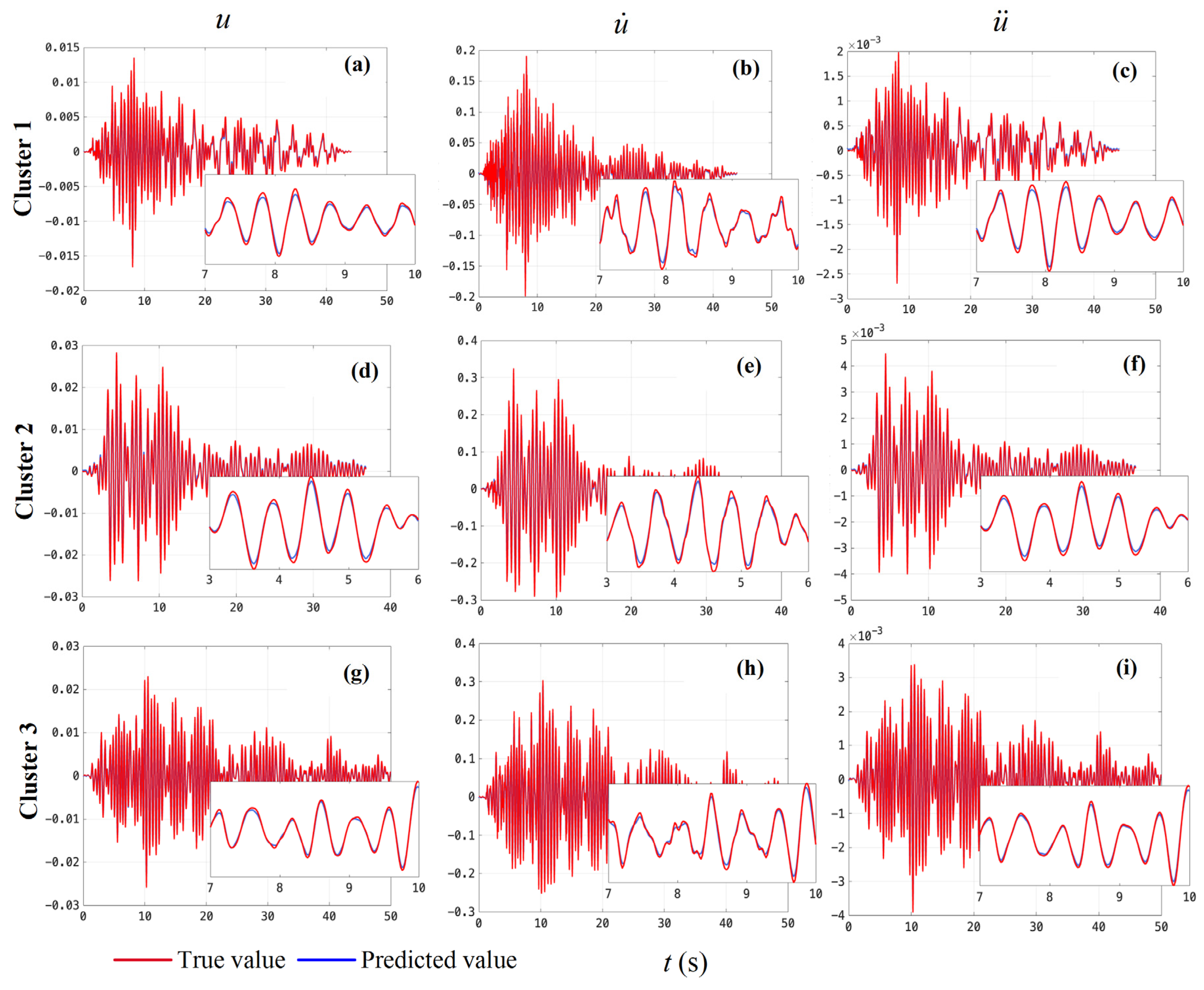

4.1. Response Prediction for the Linear Structure

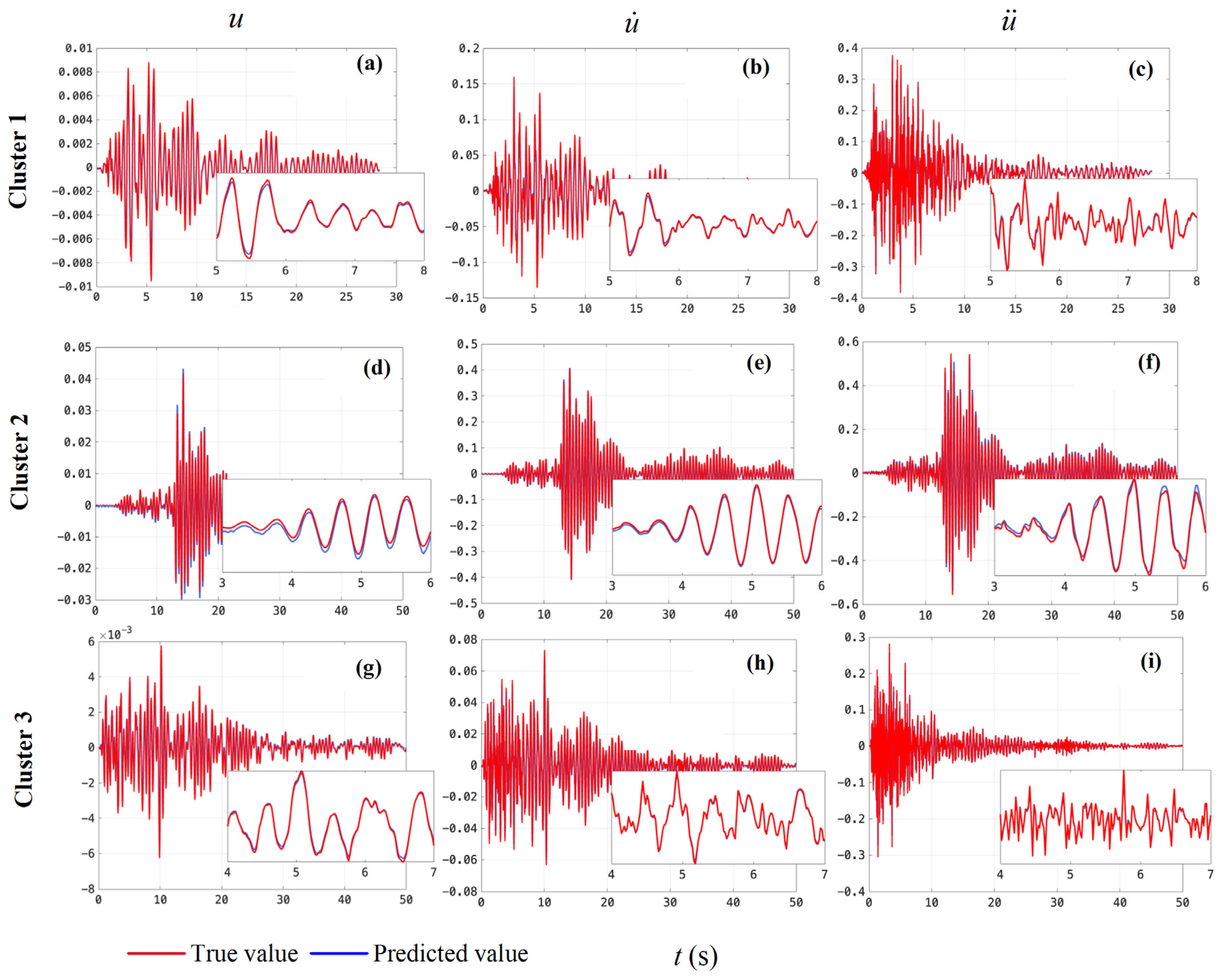

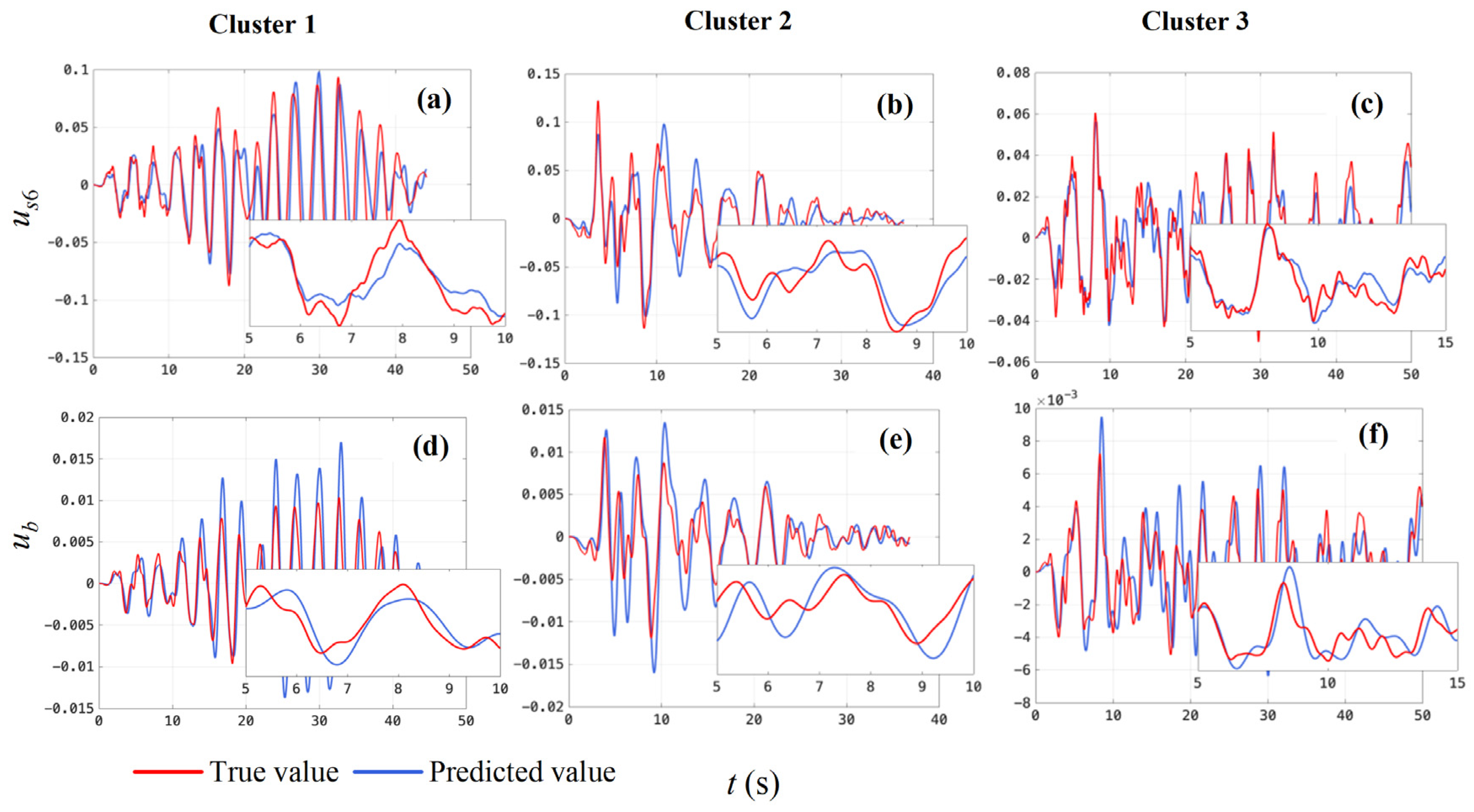

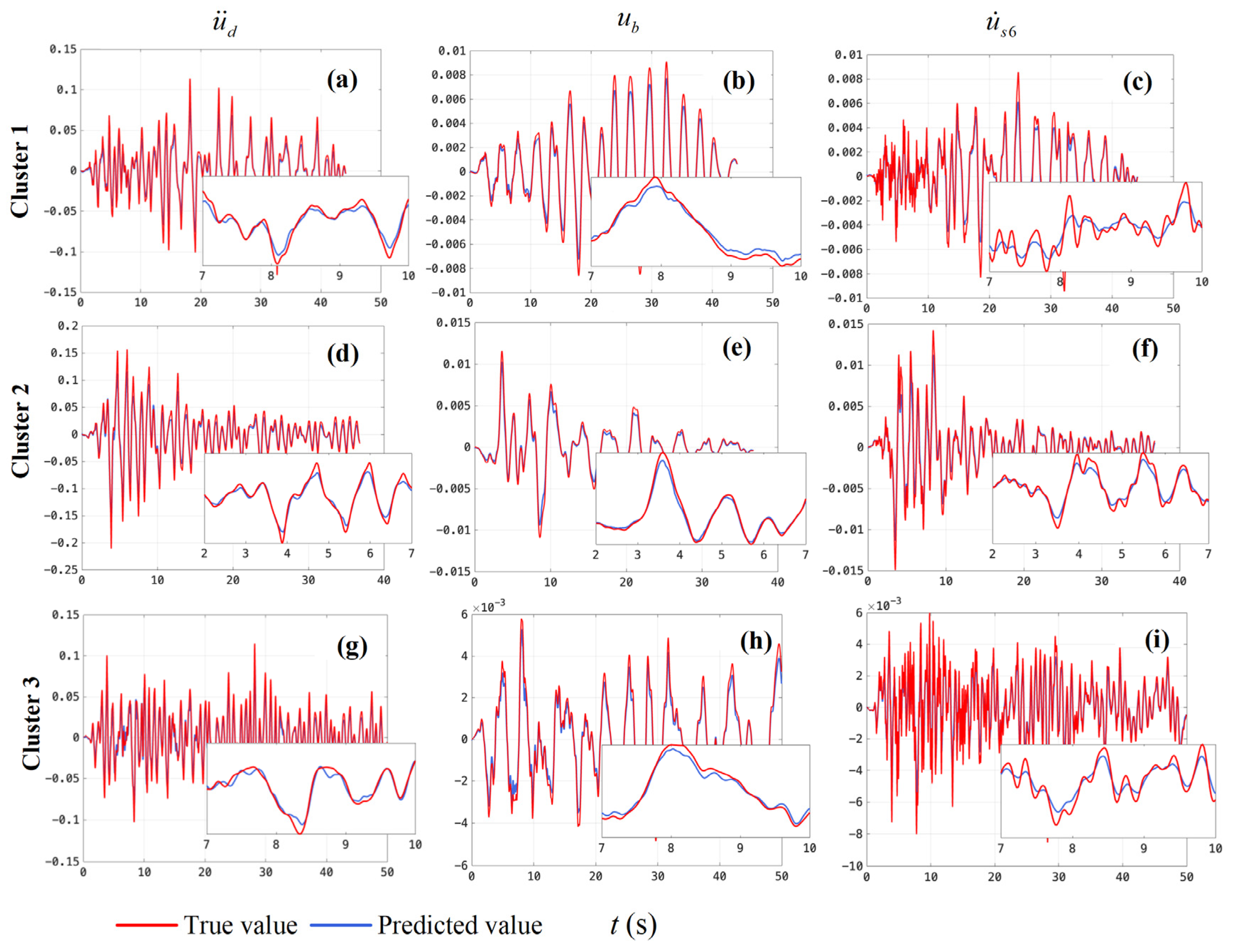

4.2. Response Prediction for Nonlinear Structure

5. Conclusions

- (1)

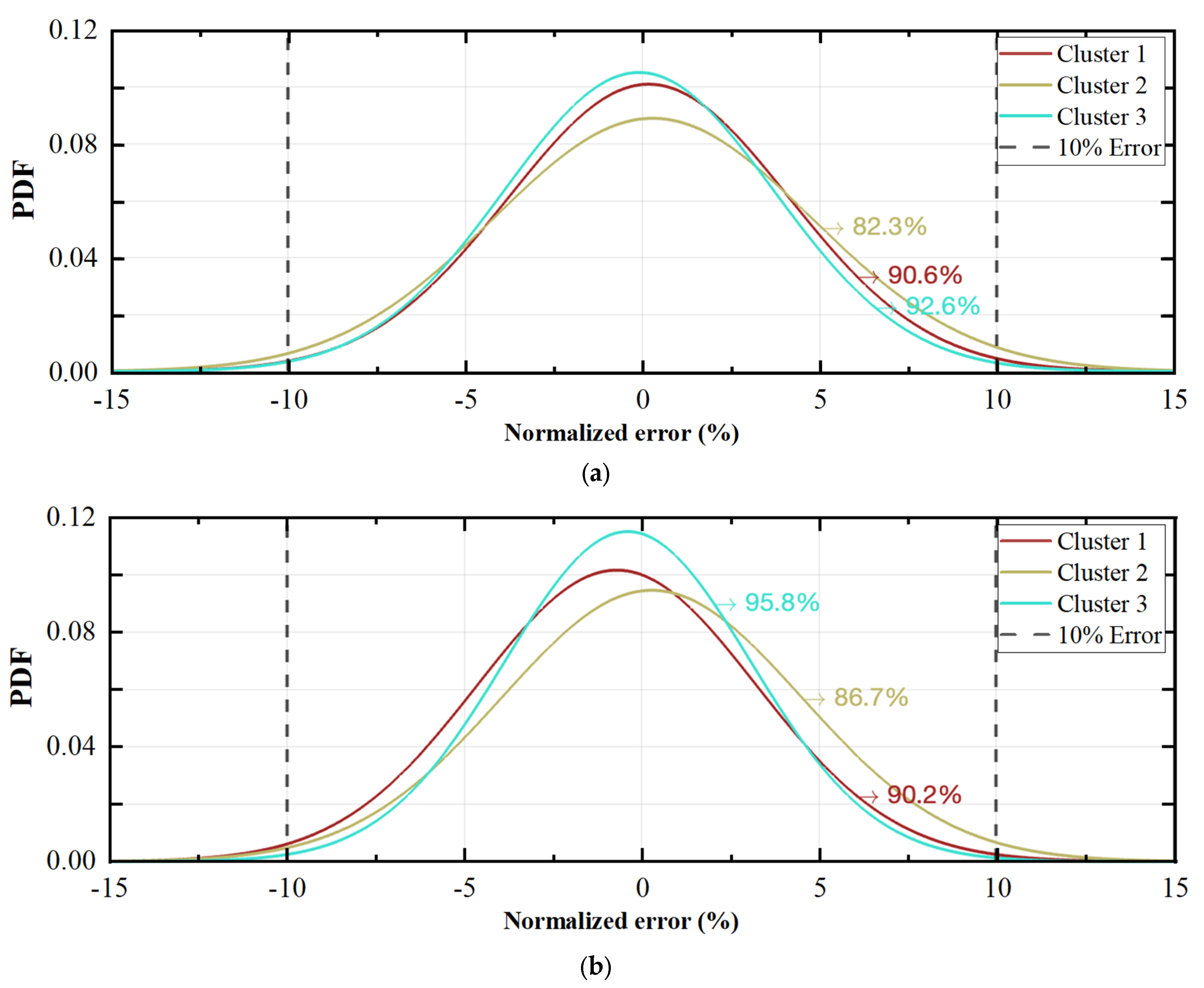

- The RNN and LSTM models had good accuracy and generalization for predicting linear structural responses. Within the confidence interval [−10%, 10%], the confidence degrees of the prediction results by the RNN model for linear structural responses under the three clusters’ seismic waves were 99.9%, 93.9%, and 97.1%, and those of the LSTM model were 99.5%, 92.4%, and 97.7%, respectively. Both the overall trend and details of the linear structure responses predicted by the two models were highly consistent with the true values. Both the RNN and LSTM models were capable of predicting the response of linear structures under different seismic waves. In addition, it was found that the non-stationary characteristics of seismic waves could reduce the prediction accuracy of the models.

- (2)

- The overall accuracy of the RNN in predicting nonlinear structural response was poor. Compared with the predicted results of linear structures, the prediction accuracy of the RNN model for nonlinear structure responses was significantly decreased. Within the confidence interval [−10%, 10%], the confidence degrees of the three clusters’ responses predicted by the RNN model were just 90.6%, 82.3%, and 92.6%, respectively. The predicted results of the RNN model were consistent with the overall trend of the true value; however, the prediction effect for some details was extremely poor (especially in the reproduction of high-frequency characteristics and peak values).

- (3)

- The performance of the LSTM model was significantly better than that of the RNN model in predicting nonlinear structural responses. Within the confidence interval [−10%, 10%], the confidence degrees of the three clusters’ responses predicted by the LSTM model were 90.2%, 86.7%, and 95.8%, respectively. Compared with the prediction result of linear structures, the prediction accuracy of the LSTM model for nonlinear structure responses was lower. The LSTM model could reproduce part of the response details of nonlinear structures.
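The confidence degrees quoted in the conclusions can be read as the share of predicted samples whose error falls inside the [−10%, 10%] band. The sketch below shows one way to compute such a share. The normalization is an assumption, since the error definition is not reproduced in this text: here the error at each time step is divided by the peak absolute value of the true response. The function name is hypothetical.

```python
import numpy as np


def fraction_within_band(y_true, y_pred, band=0.10):
    """Share of samples whose normalized error lies in [-band, band].

    Assumption: errors are normalized by the peak absolute true response.
    """
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    peak = np.max(np.abs(y_true))
    if peak == 0.0:
        raise ValueError("true response is identically zero")
    normalized_error = (y_pred - y_true) / peak
    return float(np.mean(np.abs(normalized_error) <= band))


# Toy series: two of the four samples fall inside the band.
print(fraction_within_band([0.0, 1.0, -2.0, 4.0], [0.1, 1.5, -2.0, 3.5]))
```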

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Number | Seismic Event | Station | Fault Distance (km) | Magnitude | Vs30 (m/s) | Pulse Pattern |
|---|---|---|---|---|---|---|
| 1 | Cape Mendocino | Petrolia | 8.18 | 7.01 | 422.17 | 2.996 |
| 2 | Cape Mendocino | Centerville Beach, Naval Fac | 18.31 | 7.01 | 459.04 | 1.967 |
| 3 | Chalfant Valley-01 | Bishop - Paradise Lodge | 15.13 | 5.77 | 585.12 | |
| 4 | Chi-Chi, Taiwan | CHY006 | 9.76 | 7.62 | 438.19 | 2.570 |
| 5 | Chuetsu-oki, Japan | Joetsu Kakizakiku Kakizaki | 11.94 | 6.80 | 383.43 | 1.400 |
| 6 | Coalinga-05 | Sulphur Baths (temp) | 11.42 | 5.77 | 617.43 | |
| 7 | Corinth, Greece | Corinth | 10.27 | 6.60 | 361.40 | |
| 8 | Coyote Lake | San Juan Bautista, 24 Polk St | 19.70 | 5.74 | 335.50 | |
| 9 | Darfield, New Zealand | GDLC | 1.22 | 7.00 | 344.02 | 6.230 |
| 10 | Denali, Alaska | TAPS Pump Station #10 | 2.74 | 7.90 | 329.40 | 3.157 |
| 11 | Friuli, Italy-03 | Buia | 11.98 | 5.50 | 310.68 | |
| 12 | Friuli, Italy-01 | Barcis | 49.38 | 6.50 | 496.46 | |
| 13 | Friuli, Italy-01 | Conegliano | 80.41 | 6.50 | 352.05 | |
| 14 | Imperial Valley-06 | Cerro Prieto | 15.19 | 6.53 | 471.53 | |
| 15 | Imperial Valley-06 | Parachute Test Site | 12.69 | 6.53 | 348.69 | |
| 16 | Imperial Valley-06 | Coachella Canal #4 | 50.10 | 6.53 | 336.49 | |
| 17 | Imperial Valley-06 | Plaster City | 30.33 | 6.53 | 316.64 | |
| 18 | Imperial Valley-06 | Superstition Mtn Camera | 24.61 | 6.53 | 362.38 | |
| 19 | Irpinia, Italy-01 | Bagnoli Irpinio | 8.18 | 6.90 | 649.67 | 1.713 |
| 20 | Irpinia, Italy-01 | Auletta | 9.55 | 6.90 | 476.62 | |
| 21 | Kern County | Santa Barbara Courthouse | 38.89 | 7.36 | 514.99 | |
| 22 | Kern County | Taft Lincoln School | 13.49 | 7.36 | 385.43 | |
| 23 | Kocaeli, Turkey | Arcelik | 13.49 | 7.51 | 523.00 | 7.791 |
| 24 | L’Aquila, Italy | L’Aquila-Parking | 5.38 | 6.30 | 717.0 | 1.981 |
| 25 | Livermore-01 | Antioch - 510 G St | 15.13 | 5.80 | 304.68 | |
| 26 | Livermore-01 | APEEL 3E Hayward CSUH | 30.59 | 5.80 | 517.06 | |
| 27 | Livermore-01 | Del Valle Dam (Toe) | 24.95 | 5.80 | 403.37 | |
| 28 | Livermore-01 | Fremont-Mission San Jose | 35.68 | 5.80 | 367.57 | |
| 29 | Livermore-01 | Tracy-Sewage Treatm Plant | 53.82 | 5.80 | 650.05 | |
| 30 | Loma Prieta | Gilroy-Historic Bldg. | 10.97 | 6.93 | 308.55 | 1.638 |
| 31 | Loma Prieta | Gilroy Array #3 | 12.82 | 6.93 | 349.85 | 2.639 |
| 32 | Mammoth Lakes-01 | Long Valley Dam (Upr L Abut) | 15.46 | 6.06 | 537.16 | |
| 33 | Montenegro, Yugoslavia | Bar-Skupstina Opstine | 6.98 | 7.10 | 462.23 | 1.442 |
| 34 | Montenegro, Yugoslavia | Ulcinj-Hotel Olimpic | 5.76 | 7.10 | 318.74 | 1.974 |
| 35 | Morgan Hill | Gilroy Array #3 | 13.02 | 6.19 | 349.85 | |
| 36 | New Zealand-01 | Turangi Telephone Exchange | 8.84 | 5.50 | 356.39 | |
| 37 | Niigata, Japan | NIGH11 | 8.93 | 6.63 | 375.00 | 1.799 |
| 38 | Norcia, Italy | Bevagna | 31.45 | 5.90 | 401.34 | |
| 39 | Norcia, Italy | Spoleto | 13.28 | 5.90 | 535.24 | |
| 40 | Northridge-01 | Jensen Filter Plant Administrative Building | 5.43 | 6.69 | 373.07 | 3.157 |
| 41 | Northridge-01 | Pacoima Kagel Canyon | 7.26 | 6.69 | 508.08 | 0.728 |
| 42 | N. Palm Springs | Fun Valley | 14.24 | 6.06 | 388.63 | |
| 43 | Parkfield | Cholame - Shandon Array #12 | 17.64 | 6.19 | 408.93 | |
| 44 | Parkfield | San Luis Obispo | 63.34 | 6.19 | 493.50 | |
| 45 | Parkfield-02, CA | Parkfield-Fault Zone 9 | 2.85 | 6.00 | 372.26 | 1.134 |
| 46 | San Fernando | Castaic - Old Ridge Route | 19.63 | 6.61 | 450.28 | |
| 47 | San Fernando | Lake Hughes #12 | 19.30 | 6.61 | 602.10 | |
| 48 | San Fernando | Cedar Springs Pumphouse | 92.59 | 6.61 | 477.22 | |
| 49 | San Fernando | Cedar Springs, Allen Ranch | 89.72 | 6.61 | 813.48 | |
| 50 | San Fernando | Fairmont Dam | 30.19 | 6.61 | 634.33 | |
| 51 | San Fernando | LA-Hollywood Stor FF | 22.77 | 6.61 | 316.46 | |
| 52 | Southern Calif | San Luis Obispo | 73.41 | 6.00 | 493.50 | |
| 53 | Superstition Hills-02 | Parachute Test Site | 0.95 | 6.54 | 348.69 | 2.394 |
| 54 | Tabas, Iran | Ferdows | 91.14 | 7.35 | 302.64 | |
| 55 | Tabas, Iran | Boshrooyeh | 28.79 | 7.35 | 324.57 | |
| 56 | Tabas, Iran | Tabas | 2.05 | 7.35 | 766.77 | 6.188 |
| 57 | Tabas, Iran | Dayhook | 13.94 | 7.35 | 471.53 | |
| 58 | Westmorland | Parachute Test Site | 16.66 | 5.90 | 348.69 | 4.389 |
| 59 | Westmorland | Superstition Mtn Camera | 19.37 | 5.90 | 362.38 | |
| 60 | Whittier Narrows-01 | Arcadia - Campus Dr | 17.42 | 5.99 | 367.53 | |

References

1. Hosseinpour, V.; Saeidi, A.; Nollet, M.; Nastev, M. Seismic loss estimation software: A comprehensive review of risk assessment steps, software development and limitations. Eng. Struct. 2021, 232, 111866.
2. Huang, J.; Li, X.; Zhang, F.; Lei, Y. Identification of joint structural state and earthquake input based on a generalized Kalman filter with unknown input. Mech. Syst. Signal Process. 2021, 151, 107362.
3. Liu, Y.; Zhang, B.; Wang, T.; Su, T.; Chen, H. Dynamic analysis of multilayer-reinforced concrete frame structures based on NewMark-β method. Rev. Adv. Mater. Sci. 2021, 60, 567–577.
4. Sidari, M.; Andric, J.; Jelovica, J.; Underwood, J.M.; Ringsberg, J.W. Influence of different wave load schematisation on global ship structural response. Ships Offshore Struct. 2019, 14, 9–17.
5. Heidari, A.; Jamali, M.A.J.; Navimipour, N.J.; Shahin, A. A QoS-aware technique for computation offloading in IoT-Edge platforms using a convolutional neural network and markov decision process. IT Prof. 2023, 25, 24–39.
6. Li, L.; Sun, Q.; Wang, Y.; Gao, Y. A data-driven indirect approach for predicting the response of existing structures induced by adjacent excavation. Appl. Sci. 2023, 13, 3826.
7. Mahouti, P.; Belen, A.; Tari, O.; Belen, M.A.; Karahan, S.; Koziel, S. Data-driven surrogate-assisted optimization of metamaterial-based filtenna using deep learning. Electronics 2023, 12, 1584.
8. Alam, Z.; Sun, L.; Zhang, C.W.; Samali, B. Influence of seismic orientation on the statistical distribution of nonlinear seismic response of the stiffness-eccentric structure. Structures 2022, 39, 387–404.
9. Qiu, D.P.; Chen, J.Y.; Xu, Q. Improved pushover analysis for underground large-scale frame structures based on structural dynamic responses. Tunn. Undergr. Space Technol. 2020, 103, 103405.
10. Li, T.J.; Dong, H.J.; Zhao, X.; Tang, Y.Q. Overestimation analysis of interval finite element for structural dynamic response. Int. J. Appl. Mech. 2019, 11, 1950035.
11. Dong, Y.R.; Xu, Z.D.; Guo, Y.Q.; Xu, Y.S.; Chen, S.; Li, Q.Q. Experimental study on viscoelastic dampers for structural seismic response control using a user-programmable hybrid simulation platform. Eng. Struct. 2020, 216, 110710.
12. Yang, Y.S. Measurement of dynamic responses from large structural tests by analyzing non-synchronized videos. Sensors 2019, 19, 3520.
13. Chen, C.; Ricles, J.M.; Karavasilis, T.L.; Chae, Y.; Sause, R. Evaluation of a real-time hybrid simulation system for performance evaluation of structures with rate dependent devices subjected to seismic loading. Eng. Struct. 2012, 35, 71–82.
14. Fu, C.S.; Ying, J. The application of push-over analysis in seismic design of building structures procedures. In Tall Buildings: From Engineering to Sustainability; World Scientific: Singapore, 2005; pp. 139–143.
15. Xu, G.; Guo, T.; Li, A.Q. Equivalent linearization method for seismic analysis and design of self-centering structures. Eng. Struct. 2022, 271, 114900.
16. Moghaddam, S.H.; Shooshtari, A. An energy balance method for seismic analysis of cable-stayed bridges. Proc. Inst. Civ. Eng.-Struct. Build. 2019, 172, 871–881.
17. Ji, H.R.; Li, D.X. A novel nonlinear finite element method for structural dynamic modeling of spacecraft under large deformation. Thin Wall. Struct. 2021, 165, 107926.
18. Ma, J.; Liu, B.; Wriggers, P.; Gao, W.; Yan, B. The dynamic analysis of stochastic thin-walled structures under thermal-structural-acoustic coupling. Comput. Mech. 2020, 65, 609–634.
19. Huras, Ł.; Bońkowski, P.; Nalepka, M.; Kokot, S.; Zembaty, Z. Numerical analysis of monitoring of plastic hinge formation in frames under seismic excitations. J. Meas. Eng. 2018, 6, 190–195.
20. Chen, W.D.; Yu, Y.C.; Jia, P.; Wu, X.D.; Zhang, F.C. Application of finite volume method to structural stochastic dynamics. Adv. Mech. Eng. 2013, 5, 391704.
21. Tang, H.S.; Liao, Y.Y.; Yang, H.; Xie, L.Y. A transfer learning-physics informed neural network (TL-PINN) for vortex-induced vibration. Ocean Eng. 2022, 266, 113101.
22. Chang, G.W.; Lu, H.J.; Chang, Y.R.; Lee, Y.D. An improved neural network-based approach for short-term wind speed and power forecast. Renew. Energ. 2017, 105, 301–311.
23. Duan, J.K.; Zuo, H.C.; Bai, Y.L.; Duan, J.Z.; Chang, M.H.; Chen, B.L. Short-term wind speed forecasting using recurrent neural networks with error correction. Energy 2021, 217, 119397.
24. Lu, Y.; Luo, Q.X.; Liao, Y.Y.; Xu, W.H. Vortex-induced vibration fatigue damage prediction method for flexible cylinders based on RBF neural network. Ocean Eng. 2022, 254, 111344.
25. Liu, H.; Zhang, Y.F. Deep learning-based brace damage detection for concentrically braced frame structures under seismic loadings. Adv. Struct. Eng. 2019, 22, 3473–3486.
26. Li, H.L.; Wang, T.Y.; Wu, G. A Bayesian deep learning approach for random vibration analysis of bridges subjected to vehicle dynamic interaction. Mech. Syst. Signal Process. 2022, 170, 108799.
27. Maya, M.; Yu, W.; Telesca, L. Multi-step forecasting of earthquake magnitude using meta-learning based neural networks. Cybernet. Syst. 2022, 53, 563–580.
28. Wiszniowski, J. Estimation of a ground motion model for induced events by Fahlman’s Cascade Correlation Neural Network. Comput. Geosci. 2019, 131, 23–31.
29. Birky, D.; Ladd, J.; Guardiola, I.; Young, A. Predicting the dynamic response of a structure using an artificial neural network. J. Low Freq. Noise Vib. Act. 2022, 41, 182–195.
30. Cai, Y.; Shyu, M.L.; Tu, Y.X.; Teng, Y.T.; Hu, X.X. Anomaly detection of earthquake precursor data using long short-term memory networks. Appl. Geophys. 2019, 16, 257–266.
31. Saba, S.; Ahsan, F.; Mohsin, S. BAT-ANN based earthquake prediction for Pakistan region. Soft Comput. 2017, 21, 5805–5813.
32. Sreejaya, K.P.; Basu, J.; Raghukanth, S.; Srinagesh, D. Prediction of ground motion intensity measures using an artificial neural network. Pure Appl. Geophys. 2021, 178, 2025–2058.
33. Suryanita, R.; Maizir, H.; Firzal, Y.; Jingga, H.; Yuniarto, E. Response prediction of multi-story building using backpropagation neural networks method. MATEC Web Conf. 2019, 276, 01011.
34. Gonzalez, J.; Yu, W. Non-linear system modeling using LSTM neural networks. IFAC-Pap. 2018, 51, 485–489.
35. Liu, X.J.; Zhang, H.; Kong, X.B.; Lee, K.Y. Wind speed forecasting using deep neural network with feature selection. Neurocomputing 2020, 397, 393–403.
36. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.
37. Jena, R.; Pradhan, B.; Al-Amri, A.; Lee, C.W.; Park, H.J. Earthquake probability assessment for the indian subcontinent using deep learning. Sensors 2020, 20, 4369.
38. Nicolis, O.; Plaza, F.; Salas, R. Prediction of intensity and location of seismic events using deep learning. Spat. Stat. 2021, 42, 100442.
39. Kingma, D.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations, Conference Track Proceedings, ICLR, San Diego, CA, USA, 7–9 May 2015.

| Method | Typical Method | Advantages / Disadvantages | References |
|---|---|---|---|
| Seismic testing | Shaking table loading; seismic simulator | Reliable results, intuitive phenomena / High costs, high equipment requirements, limited test conditions | [11,12,13] |
| Theoretical analysis | Response spectrum method; push-over method; energy method | Simple calculation, low computing costs, stable analysis results / Cannot reflect the dynamic characteristics, limited range of application, poor accuracy | [14,15,16] |
| Numerical analysis | Finite element method; finite difference method; finite volume method | Provides a wider range of structural data, wide range of application / Complex analysis process, high computational cost, high requirements for computing equipment | [17,18,19,20] |

| Floor Number | Story Height (m) | Mass (10^3 kg) | Stiffness (MN/m) |
|---|---|---|---|
| 1 | 3.5 | 560 | 1508 |
| 2 | 3.5 | 552 | 1487 |
| 3 | 3.5 | 550 | 1482 |
| 4 | 3.5 | 548 | 1462 |
| 5 | 3.5 | 546 | 1432 |
| 6 | 3.5 | 539 | 1357 |
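The floor masses and story stiffnesses tabulated above are enough to assemble a lumped-mass model of the six-story structure. The sketch below assumes a shear-building idealization with a fixed base (one lateral degree of freedom per floor), which is a common reading of such a table but is not confirmed by the text reproduced here. The function names are hypothetical.

```python
import numpy as np

# Values from the table above, converted to SI units.
masses = np.array([560, 552, 550, 548, 546, 539]) * 1e3          # kg
stiffness = np.array([1508, 1487, 1482, 1462, 1432, 1357]) * 1e6  # N/m


def shear_building_matrices(m, k):
    """Mass and stiffness matrices of a fixed-base shear building.

    k[i] is the stiffness of the story below floor i (0-based).
    """
    n = len(m)
    M = np.diag(m)
    K = np.zeros((n, n))
    for i in range(n):
        K[i, i] += k[i]                  # story below floor i
        if i + 1 < n:
            K[i, i] += k[i + 1]          # story above floor i
            K[i, i + 1] = K[i + 1, i] = -k[i + 1]
    return M, K


def natural_frequencies_hz(M, K):
    """Solve K phi = w^2 M phi through the symmetric form M^-1/2 K M^-1/2."""
    inv_sqrt_m = 1.0 / np.sqrt(np.diag(M))
    A = K * np.outer(inv_sqrt_m, inv_sqrt_m)
    omega_sq = np.linalg.eigvalsh(A)     # ascending eigenvalues
    return np.sqrt(omega_sq) / (2.0 * np.pi)


M, K = shear_building_matrices(masses, stiffness)
print(natural_frequencies_hz(M, K))      # six modal frequencies in Hz
```

Matrices of this form are what a time-stepping scheme such as the Newmark-β method would integrate to produce the reference responses used for training.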

| | Mass (10^3 kg) | Stiffness (MN/m) | Damping |
|---|---|---|---|
| mb | 613 | 17.14 | 1.63 × 10^6 N·s/m |
| md | 1350 | 51.43 | c1 = 2.13 × 10^7 N·(s/m)^2; c2 = 1.07 × 10^7 N·(s/m)^1.75 |

| Model | State | Variable |
|---|---|---|
| RNN/LSTM model for linear structure | Input | |
| RNN/LSTM model for linear structure | Output | |
| RNN/LSTM model for nonlinear structure | Input | |
| RNN/LSTM model for nonlinear structure | Output | |

| Configuration | Performance Indicators |
|---|---|
| System | Windows 10 64-bit |
| CPU | Intel® Core™ i7-10875H 2.35 GHz |
| GPU | NVIDIA GeForce RTX 2060 Max-Q 6 GB |
| RAM | 64 GB |
| Python | 3.8.5 |
| TensorFlow | 1.6.0 |

| Hyper Parameter | Value | Hyper Parameter | Value |
|---|---|---|---|
| Neurons in the hidden layer | 128 | Forgetting rate | 0 |
| Number of fully connected layers | 1 | Regularization | L2 Regularization |
| Number of RNN layers | 2 | Learning rate | 0.001 |
| Number of iterations | 500 | Optimizer | Adam [39] |
| Mini-batch size | 1 | | |

| Hyper Parameter | Value | Hyper Parameter | Value |
|---|---|---|---|
| Neurons in the hidden layer | 100 | Forgetting rate | 0.06 |
| Number of fully connected layers | 1 | Gradient threshold | 0.1 |
| Number of LSTM layers | 2 | Learning rate | 0.01 |
| Number of iterations | 500 | Regularization | L2 Regularization |
| Mini-batch size | 2 | Optimizer | Adam [39] |
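To make the tabulated LSTM architecture concrete, the following NumPy sketch runs the forward pass of a network with two stacked LSTM layers of 100 hidden neurons followed by one fully connected layer, matching the layer counts in the table above. It is an untrained illustration only: the input size (one ground-acceleration channel), the output size (six floor responses), the weight initialization, and all function names are assumptions, and training with Adam, L2 regularization, and gradient clipping is not shown.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def init_lstm_layer(rng, n_in, n_hidden):
    """Random parameters for one LSTM layer (gates stacked as i, f, g, o)."""
    scale = 1.0 / np.sqrt(n_hidden)
    return {
        "W": rng.uniform(-scale, scale, (4 * n_hidden, n_in)),      # input weights
        "U": rng.uniform(-scale, scale, (4 * n_hidden, n_hidden)),  # recurrent weights
        "b": np.zeros(4 * n_hidden),
    }


def lstm_layer_forward(params, xs):
    """Run one LSTM layer over a sequence xs of shape (T, n_in)."""
    n_hidden = params["U"].shape[1]
    h = np.zeros(n_hidden)
    c = np.zeros(n_hidden)
    outputs = []
    for x in xs:
        z = params["W"] @ x + params["U"] @ h + params["b"]
        i, f, g, o = np.split(z, 4)
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)  # cell state update
        h = sigmoid(o) * np.tanh(c)                   # hidden state output
        outputs.append(h)
    return np.stack(outputs)


def predict(xs, layers, W_out, b_out):
    """Stacked LSTM layers followed by one fully connected output layer."""
    hs = xs
    for params in layers:
        hs = lstm_layer_forward(params, hs)
    return hs @ W_out.T + b_out


rng = np.random.default_rng(0)
n_hidden = 100                                   # from the table above
layers = [init_lstm_layer(rng, 1, n_hidden),     # 1 input channel: assumed
          init_lstm_layer(rng, n_hidden, n_hidden)]
W_out = rng.uniform(-0.1, 0.1, (6, n_hidden))    # 6 outputs: assumed
b_out = np.zeros(6)

ground_accel = rng.standard_normal((50, 1))      # synthetic 50-step excitation
response = predict(ground_accel, layers, W_out, b_out)
print(response.shape)  # (50, 6)
```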

| Hyper Parameter | Value | Hyper Parameter | Value |
|---|---|---|---|
| Neurons in the hidden layer | 128 | Forgetting rate | 0 |
| Number of fully connected layers | 1 | Regularization | L2 Regularization |
| Number of RNN layers | 2 | Learning rate | 0.001 |
| Number of iterations | 500 | Optimizer | Adam [39] |
| Mini-batch size | 1 | | |

| Hyper Parameter | Value | Hyper Parameter | Value |
|---|---|---|---|
| Neurons in the hidden layer | 100 | Forgetting rate | 0.06 |
| Number of fully connected layers | 1 | Gradient threshold | 0.1 |
| Number of LSTM layers | 2 | Regularization | L2 Regularization |
| Number of iterations | 500 | Learning rate | 0.01 |
| Mini-batch size | 2 | Optimizer | Adam [39] |

| Model | Index | Variable | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|---|---|
| RNN | EWMAPE (%) | | 4.388 | 1.067 | 2.566 | 4.030 | 2.453 | 1.678 |
| RNN | EWMAPE (%) | | 3.078 | 6.089 | 3.076 | 5.078 | 6.069 | 4.076 |
| RNN | EWMAPE (%) | | 3.779 | 4.699 | 3.365 | 6.767 | 4.178 | 3.001 |
| RNN | EPEAK (%) | | 4.532 | 2.584 | 3.399 | 4.593 | 2.463 | 2.884 |
| RNN | EPEAK (%) | | 3.598 | 5.665 | 3.855 | 5.776 | 6.952 | 6.287 |
| RNN | EPEAK (%) | | 4.793 | 7.998 | 8.589 | 2.388 | 5.110 | 9.300 |
| LSTM | EWMAPE (%) | | 2.153 | 2.450 | 2.779 | 2.109 | 3.918 | 4.022 |
| LSTM | EWMAPE (%) | | 2.031 | 2.136 | 2.434 | 2.539 | 4.346 | 4.578 |
| LSTM | EWMAPE (%) | | 1.125 | 1.924 | 2.586 | 2.392 | 3.079 | 3.481 |
| LSTM | EPEAK (%) | | 1.440 | 1.625 | 2.302 | 2.978 | 2.432 | 1.595 |
| LSTM | EPEAK (%) | | 3.706 | 4.753 | 6.348 | 4.224 | 3.891 | 2.294 |
| LSTM | EPEAK (%) | | 2.345 | 2.399 | 2.358 | 2.587 | 2.444 | 1.989 |

| Cluster | Index | us6 | ub | ud |
|---|---|---|---|---|
| Cluster 1 | EWMAPE (%) | 12.690 | 7.008 | 16.905 |
| Cluster 1 | EPEAK (%) | 32.366 | 42.877 | 51.623 |
| Cluster 2 | EWMAPE (%) | 17.913 | 13.923 | 25.308 |
| Cluster 2 | EPEAK (%) | 21.607 | 58.182 | 66.567 |
| Cluster 3 | EWMAPE (%) | 10.102 | 9.298 | 20.117 |
| Cluster 3 | EPEAK (%) | 38.397 | 49.993 | 53.695 |

| Cluster | Index | | | | | | |
|---|---|---|---|---|---|---|---|
| Cluster 1 | EWMAPE (%) | 7.110 | 4.274 | 6.377 | 4.480 | 9.132 | 10.366 |
| Cluster 1 | EPEAK (%) | 6.456 | 17.949 | 3.750 | 10.625 | 17.978 | 31.579 |
| Cluster 2 | EWMAPE (%) | 15.238 | 13.773 | 6.129 | 10.322 | 9.392 | 14.566 |
| Cluster 2 | EPEAK (%) | 2.343 | 28.696 | 2.144 | 20.236 | 9.053 | 20.556 |
| Cluster 3 | EWMAPE (%) | 7.388 | 4.444 | 3.031 | 7.698 | 5.234 | 7.893 |
| Cluster 3 | EPEAK (%) | 8.772 | 18.421 | 1.993 | 38.889 | 22.143 | 7.826 |
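The two indices reported in the result tables above can be illustrated with a short sketch. The definitions of EWMAPE and EPEAK are not reproduced in this text, so the formulas below are assumptions based on common usage: a weighted mean absolute percentage error computed as the summed absolute error divided by the summed absolute true response, and a peak error computed as the relative difference between the largest absolute predicted and true responses. The function names are hypothetical.

```python
import numpy as np


def wmape_percent(y_true, y_pred):
    """Assumed WMAPE: sum of absolute errors over sum of absolute true values."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return 100.0 * np.sum(np.abs(y_pred - y_true)) / np.sum(np.abs(y_true))


def peak_error_percent(y_true, y_pred):
    """Assumed peak error: relative difference of the peak absolute responses."""
    peak_true = np.max(np.abs(np.asarray(y_true, dtype=float)))
    peak_pred = np.max(np.abs(np.asarray(y_pred, dtype=float)))
    return 100.0 * abs(peak_pred - peak_true) / peak_true


# Toy response history with an underestimated peak.
y_true = [1.0, -2.0, 4.0]
y_pred = [1.5, -2.0, 3.0]
print(wmape_percent(y_true, y_pred))       # (0.5 + 0 + 1) / 7, in percent
print(peak_error_percent(y_true, y_pred))  # |3 - 4| / 4, in percent
```

Under these definitions a prediction can track the overall history closely and still miss the peak, which is consistent with the tables showing peak errors well above the WMAPE values for the nonlinear structure.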


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liao, Y.; Tang, H.; Li, R.; Ran, L.; Xie, L. Response Prediction for Linear and Nonlinear Structures Based on Data-Driven Deep Learning. Appl. Sci. 2023, 13, 5918. https://doi.org/10.3390/app13105918
