Abstract

Hyperspectral image (HSI) classification is an important but challenging topic in remote sensing and earth observation. By coupling the advantages of the convolutional neural network (CNN) and the Transformer, CNN–Transformer hybrid models can extract local and global features simultaneously and have achieved outstanding performance in HSI classification. However, most existing CNN–Transformer hybrid models use artificially specified hybrid strategies, which generalize poorly and struggle to recognize fine-grained objects in HSIs of complex scenes. To overcome this problem, we propose a convolution–Transformer adaptive fusion network (CTAFNet) for pixel-wise HSI classification. A local–global fusion feature extraction unit, called the convolution–Transformer adaptive fusion kernel, is designed and integrated into CTAFNet. The kernel captures local high-frequency features using a convolution module and extracts global and sequential low-frequency information using a Transformer module. An adaptive feature fusion strategy fuses the local high-frequency and global low-frequency features to obtain a robust and discriminative representation of the HSI data. An encoder–decoder structure is adopted in CTAFNet to improve the flow of fused local–global information between different stages, thus ensuring the generalization ability of the model. Experiments on three large-scale and challenging HSI datasets demonstrate that the proposed network is superior to nine state-of-the-art approaches, highlighting the effectiveness of an adaptive CNN–Transformer hybrid strategy in HSI classification.

1. Introduction

Hyperspectral remote sensing integrates imaging and spectral technology [1] to obtain images with rich spectral and spatial information. Hyperspectral images (HSIs) have been widely used in many fields, including agriculture, forestry, mining, and marine research [2,3,4,5]. For most of these applications, HSI classification, which aims to assign a semantic label to each pixel in the image [6], is an important basic step. Although it has attracted considerable attention, it remains a challenging problem because of the large spatial variability of spectral signatures and the limited number of training samples relative to the high dimensionality of hyperspectral data [7].

Extracting discriminative and robust spatial–spectral features is the key to a successful HSI classification [7]. In the early research, spatial–spectral features were designed manually according to the prior knowledge on the land cover types. Many feature descriptors in the field of computer vision were adopted for HSI feature extraction. For example, principal component analysis (PCA) [8], independent component analysis (ICA) [9] and linear discriminant analysis (LDA) [10] were used to extract spectral features, and scale-invariant feature transform (SIFT) [11], local binary patterns (LBP) [12], and extended morphological profile (EMP) [13] were used to extract spatial features. On the basis of artificially designed features, traditional machine learning methods, such as support vector machine (SVM) [14], random forest (RF) [15], and extreme learning machine (ELM) [16] were used to classify HSI pixels into different types. The performance of these methods largely depends on the quality of the manually designed features. Generalization and robustness of these methods are generally poor because the features are designed for specific tasks, and the feature extraction requires complex processes of parameter tuning.

Unlike traditional methods that rely on artificially designed features, deep learning-based methods can automatically learn multi-level nonlinear features, which are conducive to analyzing the inherent characteristics of HSI [7]. In recent years, as a representative deep learning model, the convolutional neural network (CNN) has made milestone progress in HSI classification. A variety of CNN-based classification models have been proposed, which can be divided into three categories according to the types of extracted features: (1) spectral CNN, which uses one-dimensional CNN [17] to extract the spectral features of HSI; (2) spatial CNN, which uses two-dimensional CNN [18] to extract the spatial features from HSI after dimension reduction; (3) spectral–spatial CNN, which uses three-dimensional convolution [19] or a dual-branch network [20] to simultaneously extract spectral–spatial joint features of HSI. Although these CNN models have yielded satisfactory results in specific applications, they generally face the following two challenges: (1) due to the limited receptive field, they can hardly capture low-frequency signals, which provide global information (e.g., global shapes and structures) [21]; (2) the quality of the extracted high-frequency signals (e.g., local edges and texture) needs to be improved [22]. Several studies have attempted to improve the CNN models by directly extending the receptive field of the convolution kernel, including the use of dilated convolutions [23] and the construction of multi-scale feature pyramids [24]. Recent studies have also introduced attention mechanisms, such as spectral attention [25], spatial attention [26], and spectral–spatial attention [27], to enhance the useful components in the features while suppressing the useless ones. Nevertheless, the above-mentioned methods do not fully overcome the limitations of CNNs, as they depend strictly on convolution operations, which are incapable of modeling long-term dependencies [28].

In recent years, the emergence of the Transformer has provided a new means for HSI classification. Transformer is a new neural network architecture consisting of a multi-head self-attention (MSA) module and a feed-forward neural network [29]. By introducing the MSA module, Transformer can effectively capture long-term dependencies. Qing et al. [30] proposed a self-attention Transformer network (SATNet) for HSI classification. SATNet employs Transformer encoders to extract image features and uses a multilevel residual structure to connect multiple encoder blocks to solve the vanishing gradient and over-fitting problems. Sun et al. [31] proposed an encoder–decoder network that fuses local-global spatial attention and spectral attention (FSSANet) for HSI classification. FSSANet introduces spectral attention into the Swin Transformer encoder [32] to encode the rich spectral–spatial information of HSI and therefore improves the classification accuracy. Although the application of Transformer as a backbone network for feature extraction has a good performance in HSI classification, it still faces two problems. First, Transformer needs to convert images to low-dimensional patch embeddings, which destroys the internal structure of the images and increases the requirement of quantity of training data to learn the unique properties of the images [33]. Secondly, Transformer cannot learn the correlation between different pixels within a patch, and it is difficult to capture local high-frequency information [34].

A review of the CNN-based and Transformer-based models shows that they complement each other, and combining them offers opportunities to enhance the modeling of both local high-frequency information and global long-term dependencies [35]. Sun et al. [36] proposed a spectral–spatial feature tokenization Transformer (SSFTT), which uses convolution layers to extract shallow spectral–spatial features and the Transformer encoder to capture deep semantic information. The extracted HSI spectral–spatial semantic features from shallow to deep were fused to improve classification accuracy. Song et al. [37] proposed a two-branch HSI classification framework based on three-dimensional CNN and bottleneck spatial–spectral Transformer (B2ST). In this framework, both branches use a combination of shallow CNN and deep Transformer. One branch is used to extract spatial local–global joint features, and the other is focused on extracting spectral local–global joint features. The fused spectral–spatial features can express the local–global semantic information, thus achieving outstanding classification performance. Although the existing CNN–Transformer hybrid models have made progress in HSI classification, they have at least two disadvantages. First, existing methods integrate convolution and Transformer through artificially specified strategies, which are empirical and might lead to poor generalization ability. Second, most of these models adopt an image-wise classification network based on the “encoder–label” structure, which makes predictions from the features of the last stage and cannot make full use of the information obtained from the other stages. As a result, the “encoder–label” structure might yield incorrect results, e.g., omission of fine-grained objects and confusion between similar objects in HSI of complex scenes [21,38]. A new method is therefore needed that can adaptively integrate the features extracted by convolution and Transformer and effectively use the information obtained from multiple stages to overcome the limitations of existing methods.

Studies have noted that deep learning methods normally suffer from the data-hungry problem [39]. This problem is particularly acute in HSI classification due to a lack of high-quality benchmark datasets. Most previous studies have used small-scale datasets, such as Indian Pines, Salinas, and Pavia University [17,20,23,27,30,40], which comprise only hundreds of rows by hundreds of columns of pixels. Deep learning methods often yield nearly perfect classification results on these datasets, possibly because overlapping pixels between the training data and the test data lead to information leakage [40,41]. Newer data partition methods solve the problem of information leakage to some extent [40,41,42]. However, the partitioned training data usually contain only thousands of pixels, which is insufficient to support the training of deep learning models (which usually include millions of parameters). At the same time, a small number of test samples is insufficient to comprehensively evaluate the performance of a model. Recently, a series of large-scale and challenging datasets have been developed [43,44]. It is of interest to further test existing deep learning models using these benchmark datasets to better understand their overall performance.

The main objectives of this study are to: (1) develop a convolution–Transformer adaptive fusion network (CTAFNet) that uses an adaptive hybrid strategy to fuse high-frequency and low-frequency signals, so as to extract a more robust and discriminative local–global fusion representation and improve the performance of HSI classification; and (2) evaluate the performance of several widely used deep learning models (e.g., FSSANet [31], SS3FCN [42], and UNet [45]) on large-scale and challenging benchmark datasets to provide a fair and comprehensive comparison between the models.

2. Datasets and Methods

2.1. Datasets

2.1.1. Data Descriptions

This paper uses three large-scale and challenging HSI datasets as benchmarks: the AeroRIT scene [43], the Data Fusion Contest 2018 (DFC2018) dataset, and the Xiongan New Area Matiwan Village (hereinafter referred to as Xiongan) dataset [44].

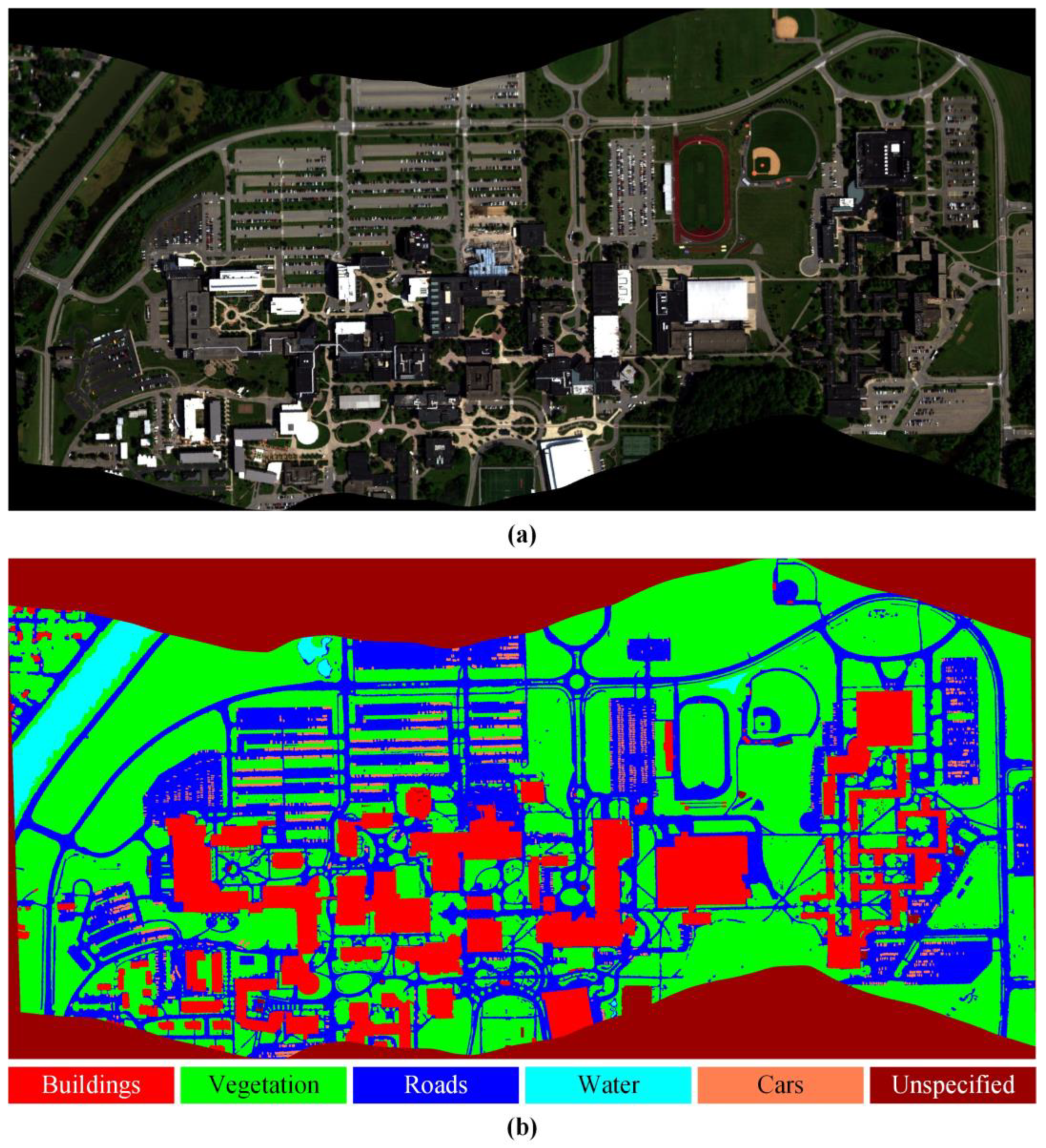

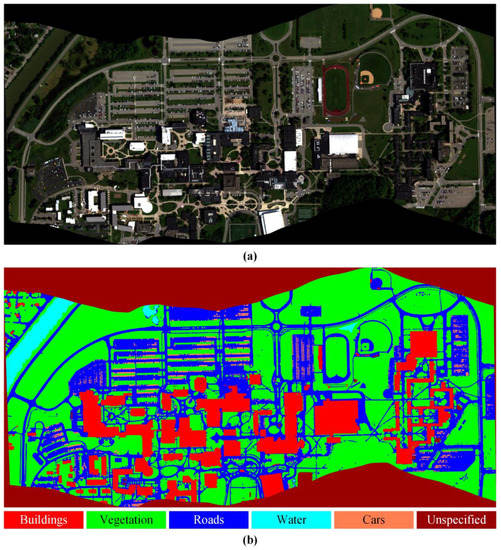

The AeroRIT scene is an HSI of the Rochester Institute of Technology’s university campus captured by the Headwall Micro E sensor. The sensor captures a total of 372 spectral bands. We use 51 bands obtained by sampling every tenth band from 400 nm to 900 nm. This dataset has a ground sampling distance (GSD) of 0.4 m/px, resulting in a 1973 × 3975 px image. It is labeled with five types of ground objects, as shown in Figure 1. The dataset poses challenges such as small target recognition, glint effects, and shadows. Although there are few types of ground objects, accurate classification is still challenging.

Figure 1.

The AeroRIT scene. (a) RGB image; (b) Ground-truth classification map.

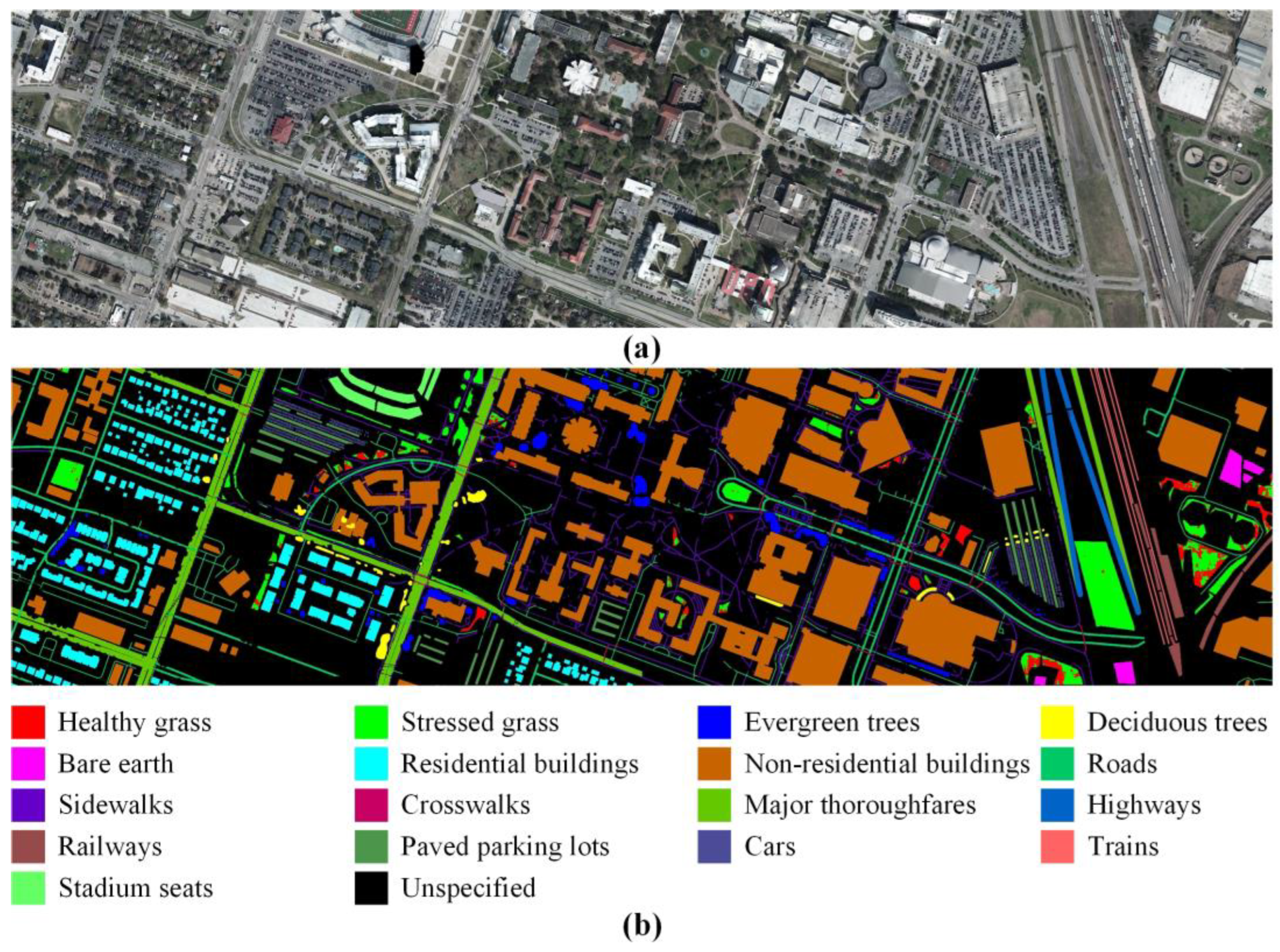

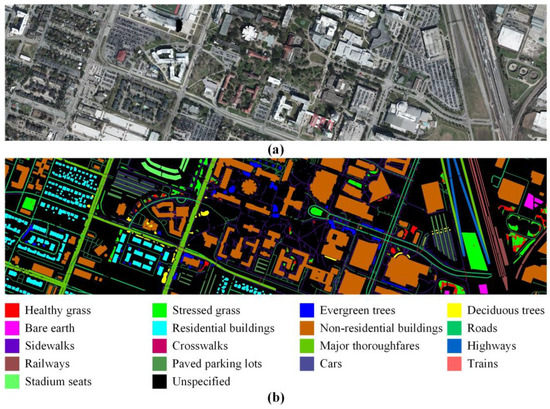

The DFC2018 hyperspectral data were acquired over Central Houston, Texas, USA, using an ITRES-CASI 1500 airborne sensor. They cover a 380–1050 nm spectral range over 48 contiguous bands at 1 m GSD, resulting in a 1202 × 4768 px image. This dataset has a total of 20 classes and contains many fine-grained objects. The artificial turf, water, and unpaved parking lots classes contain too few samples to be partitioned into both the training set and the test set. Because the partition method adopted in this paper cannot evaluate the performance on these three classes, they are merged into the unspecified class. After processing the labels, 17 types of ground objects remain, as shown in Figure 2.

Figure 2.

The DFC2018 dataset. (a) RGB image; (b) ground-truth classification map.

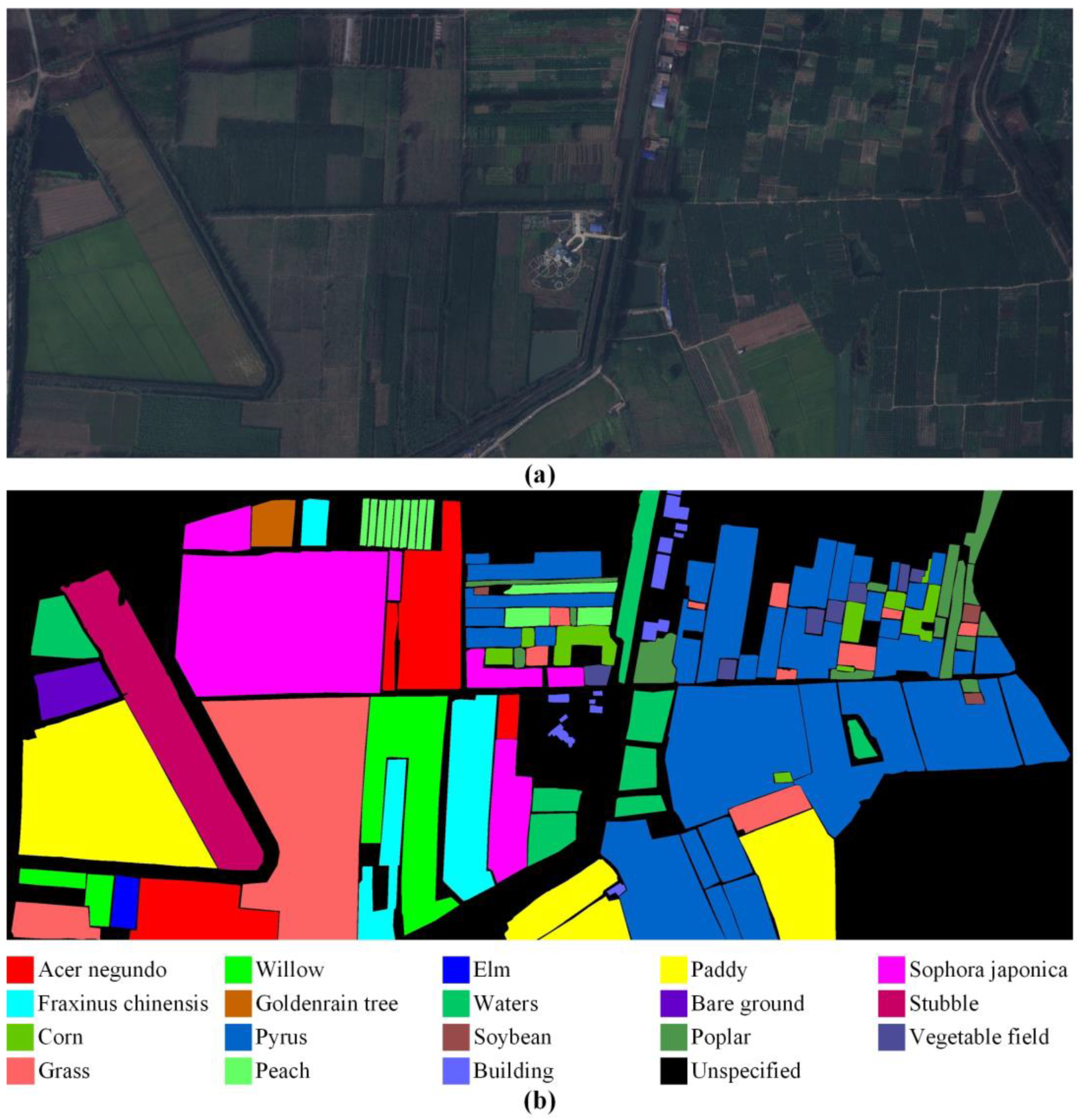

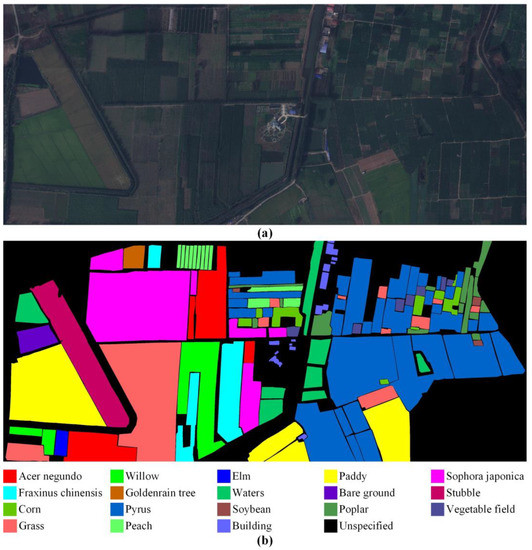

The Xiongan dataset is an HSI of Matiwan Village in the Xiongan New Area of China, which was acquired using a visible and near-infrared imaging spectrometer. The spectral range is 400–1000 nm with 256 bands, and the spatial resolution is 0.5 m, resulting in a 1580 × 3750 px image. This dataset contains a number of fine-grained objects, which are mainly croplands. For the same reason as for the DFC2018 dataset, we merged the acacia and sparse forest classes into the unspecified class. After merging, there are 18 types of ground objects, as shown in Figure 3.

Figure 3.

The Xiongan dataset. (a) three-band false color composite; (b) ground-truth map.

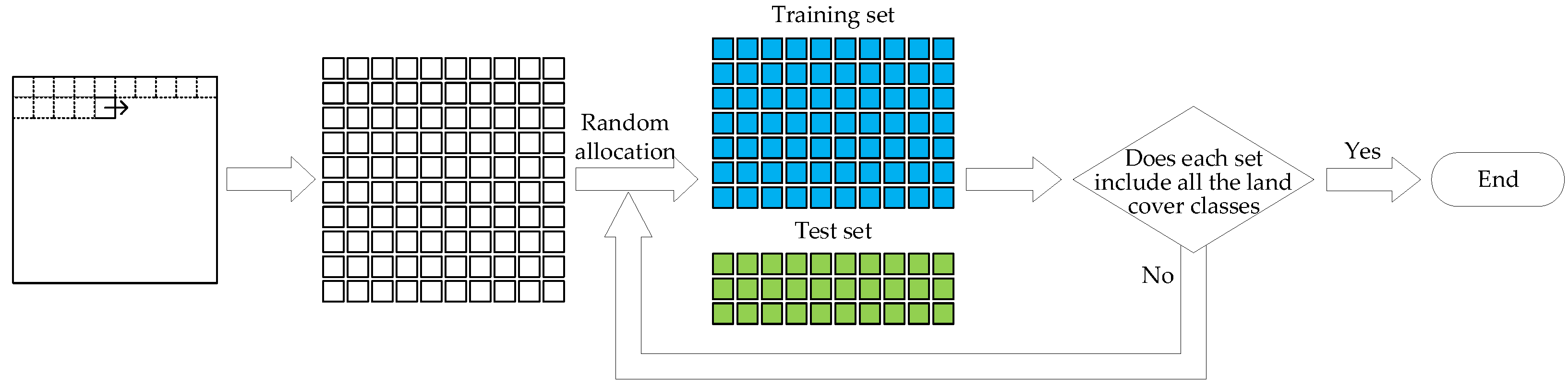

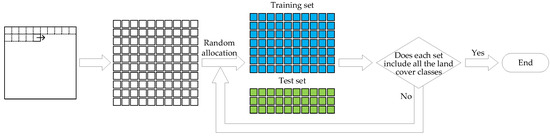

2.1.2. Data Partition Method

The AeroRIT dataset is partitioned into training, validation, and test sets according to the method in [43], with a patch size of 64 × 64. The number of samples of each class is shown in Table 1. The DFC2018 dataset and the Xiongan dataset use the same data partition method, as shown in Figure 4. We first crop the image into a number of 64 × 64 non-overlapping patches and then randomly partition these patches into the training set and the test set. The numbers of samples of the DFC2018 dataset and the Xiongan dataset are shown in Table 2 and Table 3, respectively.
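The patch-based partition described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `partition_patches`, the 50/50 split ratio, the fixed random seed, and the choice to drop incomplete border patches are all assumptions, since the text only states that 64 × 64 non-overlapping patches are randomly assigned to the training and test sets.

```python
import random


def partition_patches(height, width, patch=64, train_fraction=0.5, seed=0):
    """Split an image grid into non-overlapping patches and assign them at random.

    Returns two lists of (row, col) top-left corners: (train, test).
    Border strips narrower than `patch` are dropped (an assumption).
    """
    corners = [
        (r, c)
        for r in range(0, height - patch + 1, patch)
        for c in range(0, width - patch + 1, patch)
    ]
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    rng.shuffle(corners)
    n_train = int(round(train_fraction * len(corners)))
    return corners[:n_train], corners[n_train:]


# Example with the DFC2018 image size given in the text (1202 x 4768 px).
train, test = partition_patches(1202, 4768)
```

Because every pixel belongs to exactly one patch and each patch goes to exactly one set, training and test pixels never overlap, which is the property that avoids the information leakage discussed in the Introduction.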

Table 1.

Sample size of each class in each set after partitioning the AeroRIT dataset.

Figure 4.

Dataset partition method.

Table 2.

Number of samples in the training and test sets for the DFC2018 dataset.

Table 3.

Number of samples in the training and test sets for the Xiongan dataset.

2.2. Method

We propose a convolution–Transformer adaptive fusion network (CTAFNet) for pixel-wise HSI classification. CTAFNet uses a novel local–global fusion feature extraction unit, called the convolution–Transformer adaptive fusion kernel, to capture both the local high-frequency features and the sequential low-frequency information. An adaptive feature fusion strategy was designed to obtain a more robust and discriminative representation of the HSI data. Moreover, CTAFNet adopts an encoder–decoder structure to improve the flow of fused local–global information between different stages, thus ensuring the generalization ability of the model.

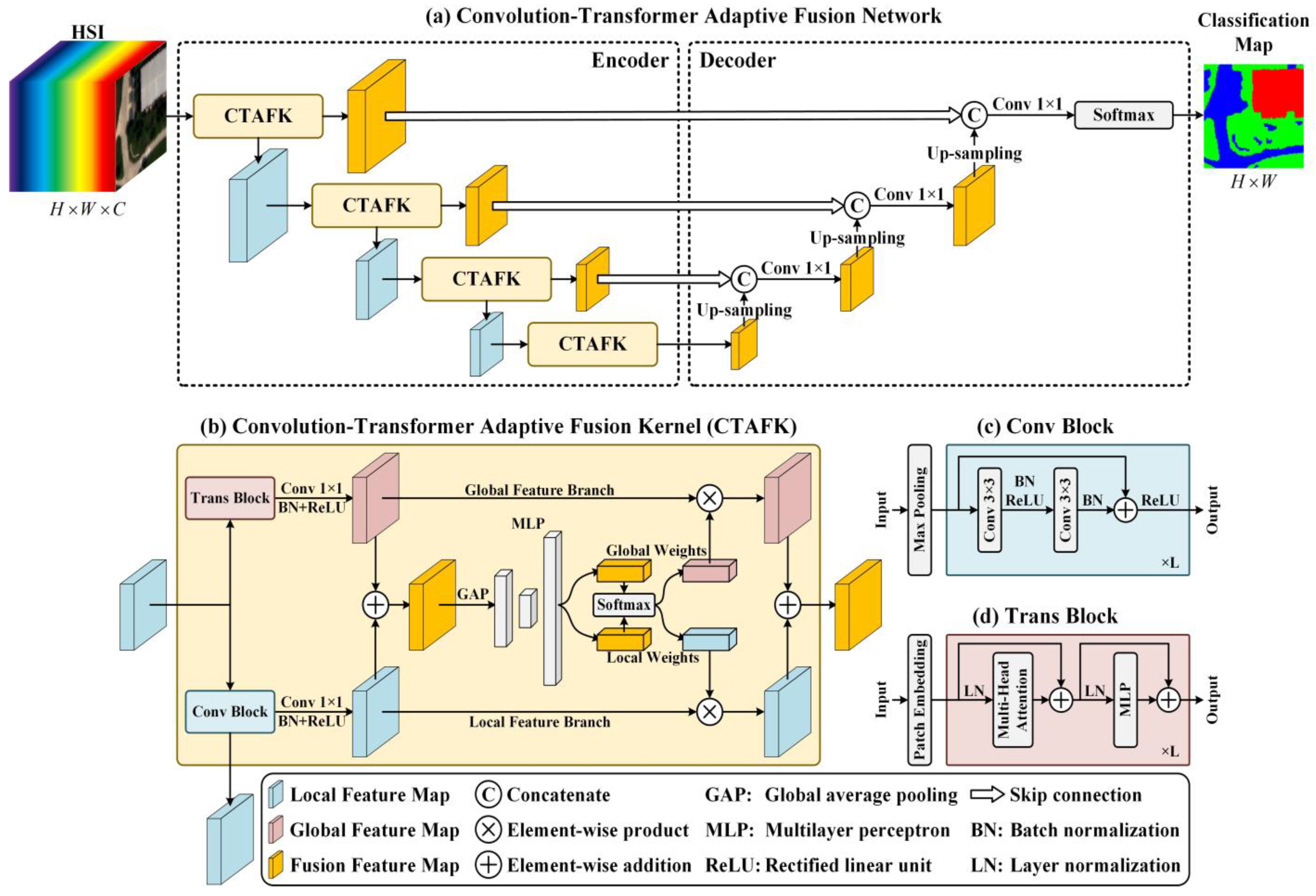

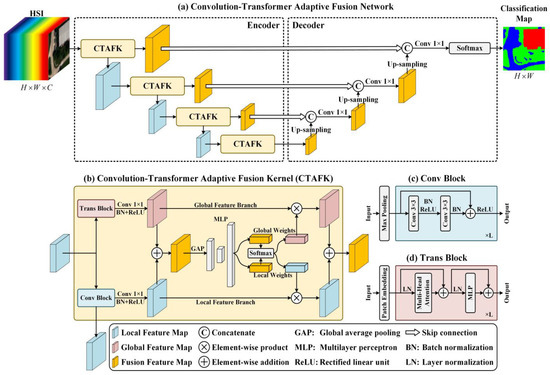

2.2.1. CTAFNet Architecture Overview

The overall architecture of the proposed CTAFNet for HSI classification is presented in Figure 5a. CTAFNet has a CNN–Transformer hybrid architecture, which is mainly composed of an encoder and a decoder. The encoder follows a hierarchical pyramid architecture equipped with a convolution–Transformer adaptive fusion kernel (CTAFK) in each stage, which is used to capture local–global feature representations at different levels. The decoder uses bilinear interpolation for up-sampling to recover the spatial resolution of the feature map and concatenates the feature maps from the previous and the current stages of the encoder. The encoder–decoder framework enables CTAFNet to effectively utilize the local and global information extracted at each stage to improve HSI classification accuracy. The output feature size for each stage is shown in Table 4.
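As a rough illustration of one decoder step, the NumPy sketch below up-samples a deeper feature map by a factor of two with bilinear interpolation and concatenates it with the encoder feature map of the shallower stage. The function names, the channel-first layout, and the half-pixel sampling convention are assumptions made for illustration; the text does not specify them, and any convolution applied after the concatenation is omitted.

```python
import numpy as np


def upsample_bilinear_2x(x):
    """Bilinear 2x up-sampling of a (C, H, W) array (half-pixel centers, assumed)."""
    _, h, w = x.shape

    def coords(n_in):
        # Map each output index to a fractional source position.
        pos = (np.arange(2 * n_in) + 0.5) / 2.0 - 0.5
        pos = np.clip(pos, 0, n_in - 1)
        lo = np.floor(pos).astype(int)
        hi = np.minimum(lo + 1, n_in - 1)
        return lo, hi, pos - lo

    r0, r1, fr = coords(h)
    c0, c1, fc = coords(w)
    # Interpolate along the width for the two neighbouring rows, then along the height.
    top = x[:, r0][:, :, c0] * (1 - fc) + x[:, r0][:, :, c1] * fc
    bot = x[:, r1][:, :, c0] * (1 - fc) + x[:, r1][:, :, c1] * fc
    return top * (1 - fr)[None, :, None] + bot * fr[None, :, None]


def decoder_step(deep, skip):
    """Up-sample the deeper map and concatenate it with the skip map along channels."""
    up = upsample_bilinear_2x(deep)
    assert up.shape[1:] == skip.shape[1:], "spatial sizes must match after up-sampling"
    return np.concatenate([up, skip], axis=0)


# Example: an 8-channel 4 x 4 map fused with a 4-channel 8 x 8 skip connection.
fused = decoder_step(np.ones((8, 4, 4)), np.zeros((4, 8, 8)))
```

The concatenation is what lets features from every encoder stage reach the final prediction, in contrast to the "encoder–label" structure that relies on the last stage only.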

Figure 5.

The framework of CTAFNet for HSI classification.

Table 4.

Output feature size for each stage.

2.2.2. Convolution–Transformer Adaptive Fusion Kernel

Artificially specified CNN–Transformer hybrid strategies have poor generalization ability and struggle to satisfy the recognition requirements of fine-grained objects in complex scenes. To overcome this challenge, a feature extraction unit called the convolution–Transformer adaptive fusion kernel (CTAFK) was designed, as shown in Figure 5b.

CTAFK captures the local high-frequency features using a convolution block (Conv block) and extracts the global and sequential low-frequency information using a Transformer block (Trans block). Afterwards, the local high-frequency and global low-frequency features are adaptively weighted and fused to provide a more generalized and discriminative representation of the HSI data. The details of the Conv block, the Trans block, and the CTAFK workflow are described as follows.

- Conv Block

Conv block is the basic module for extracting local features in CTAFK and is composed of max pooling and residual blocks [46], as shown in Figure 5c. Max pooling halves the resolution of the input feature map, thus capturing multi-scale information. Note that we did not use the max pooling layer in the first stage of the encoder, to ensure that the output image size is the same as the input image size. The residual block, which consists of 3 × 3 convolution (Conv 3 × 3), batch normalization (BN) [47], and rectified linear unit (ReLU) [48] layers, can efficiently encode spatial local information and solves the degradation problem of deep networks through shortcut connections, thus reducing the difficulty of model optimization. The number L of residual blocks for each stage is set to {1, 1, 3, 1}. The residual block is calculated as follows:

$$y = F(x, \{W_i\}) + x$$

$$F(x, \{W_i\}) = \mathrm{BN}(W_2\,\sigma(\mathrm{BN}(W_1 x)))$$

where $x$ and $y$ are the input and output vectors, the function $F(x, \{W_i\})$ represents the residual mapping to be learned, $\sigma$ is the ReLU activation function, $\mathrm{BN}$ denotes batch normalization, and the biases are omitted to simplify the notation.
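To make the Conv block concrete, the sketch below implements 2 × 2 max pooling followed by one residual block in NumPy. It is only an illustration under stated assumptions: the two-convolution layout and the ReLU after the shortcut addition follow the basic block of [46], batch normalization is omitted for brevity, and all function names are hypothetical.

```python
import numpy as np


def max_pool_2x2(x):
    """Halve the spatial resolution of a (C, H, W) array (H and W must be even)."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))


def conv3x3(x, weight):
    """3 x 3 convolution, stride 1, zero padding 1.

    x: (C_in, H, W); weight: (C_out, C_in, 3, 3). Biases are omitted.
    """
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((weight.shape[0], h, w))
    for dy in range(3):
        for dx in range(3):
            window = padded[:, dy:dy + h, dx:dx + w]
            out += np.einsum("oc,chw->ohw", weight[:, :, dy, dx], window)
    return out


def residual_block(x, w1, w2):
    """y = F(x) + x with F = conv -> ReLU -> conv (BN omitted, an assumption)."""
    residual = conv3x3(np.maximum(conv3x3(x, w1), 0.0), w2)
    y = residual + x                 # shortcut connection
    return np.maximum(y, 0.0)        # ReLU after the addition, as in [46] (assumed)


# Example: a 4-channel 8 x 8 input is pooled to 4 x 4, then refined.
rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8, 8))
w1 = rng.normal(scale=0.1, size=(4, 4, 3, 3))
w2 = rng.normal(scale=0.1, size=(4, 4, 3, 3))
out = residual_block(max_pool_2x2(x), w1, w2)
```

The shortcut means that when the learned mapping is zero the block reduces to the identity (up to the final ReLU), which is why stacking such blocks does not degrade optimization.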

- Trans Block

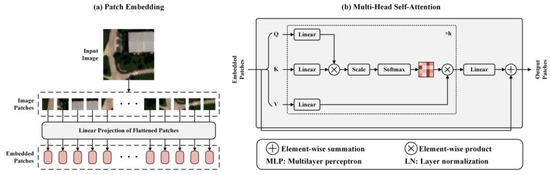

Trans block is the basic module for extracting global features in CTAFK, which is composed of patch embedding and Transformer encoder blocks [29], as shown in Figure 5d. As with the Conv block, the number L of the Transformer encoder for each of the four stages is also set to {1, 1, 3, 1}.

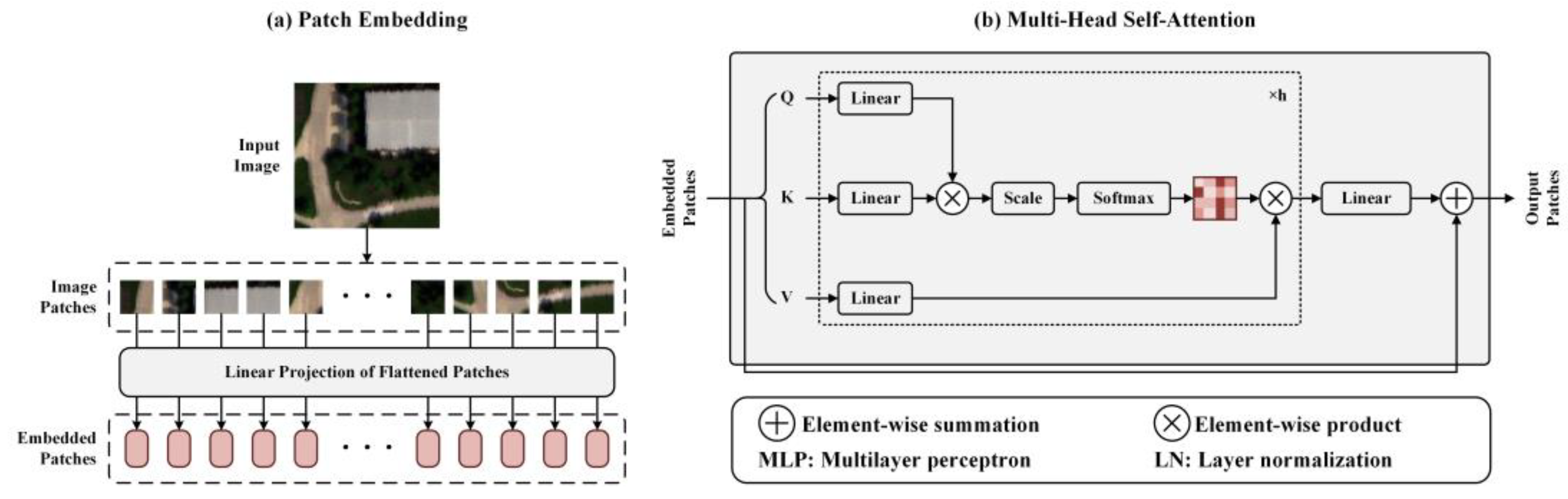

Patch embedding converts a two-dimensional image of size $H \times W \times C$ into a one-dimensional token sequence that the standard Transformer takes as input, as shown in Figure 6a. It is calculated as follows:

$$z_0 = [x_p^1 E;\; x_p^2 E;\; \cdots;\; x_p^N E]$$

where $x_p \in \mathbb{R}^{N \times (P^2 \cdot C)}$ is a sequence of flattened two-dimensional patches, $N = HW/P^2$ is the resulting number of patches, $E \in \mathbb{R}^{(P^2 \cdot C) \times D}$ is a trainable linear projection that maps the patches to $D$ dimensions, $(P, P)$ is the resolution of the image patches, and $P$ is set to {1, 2, 2, 2} for the four stages. The Transformer encoder consists of multi-head self-attention (MSA), a multilayer perceptron (MLP) block, layer normalization (LN) [49], and shortcut connections. This process is expressed by the following equations:

$$z'_l = \mathrm{MSA}(\mathrm{LN}(z_{l-1})) + z_{l-1}$$

$$z_l = \mathrm{MLP}(\mathrm{LN}(z'_l)) + z'_l$$

Figure 6.

Patch embedding and multi-head self-attention mechanism.
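The patch embedding step can be sketched in NumPy as below: the image is cut into non-overlapping P × P patches, each patch is flattened, and a linear projection maps it to D dimensions. The function name `patch_embed`, the channel-last layout, and the absence of position embeddings are assumptions for illustration only.

```python
import numpy as np


def patch_embed(image, projection, patch):
    """Turn an (H, W, C) image into an (N, D) token sequence.

    projection: (patch * patch * C, D) linear map; N = H * W / patch**2.
    """
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image must tile exactly"
    x = image.reshape(h // patch, patch, w // patch, patch, c)
    x = x.transpose(0, 2, 1, 3, 4)            # (H/P, W/P, P, P, C)
    x = x.reshape(-1, patch * patch * c)      # flatten each patch
    return x @ projection


# Example: a 4 x 4 image with 3 bands and P = 2 gives N = 4 tokens.
image = np.arange(4 * 4 * 3, dtype=float).reshape(4, 4, 3)
tokens = patch_embed(image, np.eye(2 * 2 * 3), patch=2)
```

With P = 1 (the first stage) every pixel becomes its own token, so the resolution is preserved; with P = 2 the token grid has half the resolution, which matches the halving performed by max pooling in the Conv block.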

As shown in Figure 6b, MSA mainly captures the correlation between input patches through the attention function. An attention function can be described as mapping a query and a set of key-value pairs to an output, where the query ($Q$), keys ($K$), values ($V$), and output are all vectors. The output is computed as a weighted sum of the values, where the weight assigned to each value is computed by a compatibility function of the query with the corresponding key. This process is expressed by the following equation:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{T}}{\sqrt{d_k}}\right)V$$

where $d_k$ is the dimension of the keys.

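MSA uses multiple groups of weight matrices to map $Q$, $K$, and $V$, and applies the same operation to each group to calculate the attention values. MSA allows the model to jointly attend to information from different representation subspaces at different positions. This process is expressed by the following equations:

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)W^{O}$$

$$\mathrm{head}_i = \mathrm{Attention}(QW_i^{Q}, KW_i^{K}, VW_i^{V})$$

where $h$ is the number of heads, and $W_i^{Q}$, $W_i^{K}$, $W_i^{V}$, and $W^{O}$ are parameter matrices.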
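The attention function and its multi-head extension can be sketched in NumPy as follows. This follows the standard formulation of [29]; the function names are hypothetical, and the per-head projection matrices are packed into single D × D matrices and split by reshaping, which is a common implementation convenience rather than something stated in the text.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention(q, k, v):
    """Scaled dot-product attention; returns the output and the attention weights."""
    d_k = q.shape[-1]
    weights = softmax(q @ k.swapaxes(-1, -2) / np.sqrt(d_k))
    return weights @ v, weights


def multi_head_self_attention(x, w_q, w_k, w_v, w_o, heads):
    """x: (N, D) tokens; w_q, w_k, w_v, w_o: (D, D); D must be divisible by heads."""
    n, d = x.shape
    d_h = d // heads

    def split(t):
        return t.reshape(n, heads, d_h).transpose(1, 0, 2)   # (heads, N, d_h)

    out, _ = attention(split(x @ w_q), split(x @ w_k), split(x @ w_v))
    out = out.transpose(1, 0, 2).reshape(n, d)               # concatenate the heads
    return out @ w_o


# Example: 16 tokens of dimension 8 with two heads.
rng = np.random.default_rng(0)
x = rng.normal(size=(16, 8))
w_q, w_k, w_v, w_o = (rng.normal(scale=0.3, size=(8, 8)) for _ in range(4))
y = multi_head_self_attention(x, w_q, w_k, w_v, w_o, heads=2)
```

Every token attends to every other token, so the receptive field is global from the first layer; this is the property that complements the local view of the convolution branch.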

The MLP block is used to enhance the non-linear expression ability of the Transformer and consists of two fully connected layers. Between the two fully connected layers, we use the Gaussian error linear unit (GELU) [50] as the activation function.

- CTAFK workflow

Overall, CTAFK deals with the input feature map via three steps, i.e., feature extraction and integration, adaptive weight calculation, and weighted fusion.

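Feature extraction and integration: first, the Conv block and the Trans block are used to extract the local and global features of the given feature map $X$, respectively. The two transformations can be expressed as $\tilde{U} = F_{conv}(X)$ and $\hat{U} = F_{trans}(X)$. Then, a convolution layer composed of Conv 1 × 1, BN, and the ReLU activation function is used to unify the number of channels of the two branches. Finally, the information from the local feature branch and the global feature branch is integrated via element-wise addition:

$$U = \tilde{U} + \hat{U}$$

Adaptive weight calculation: the weights of the local and global features are calculated through a squeeze-and-excitation module [51]. First, a statistic $s \in \mathbb{R}^{C}$ is generated by shrinking $U$ through its spatial dimensions $H \times W$:

$$s_c = \frac{1}{H \times W}\sum_{i=1}^{H}\sum_{j=1}^{W} U_c(i, j)$$

where $s_c$ denotes the $c$-th element of $s$. Then, an MLP is used to describe the dependency of the two branches as $z$, so as to guide the adaptive fusion. The MLP consists of two fully connected layers and is calculated as follows:

$$z = W_2\,\delta(W_1 s + b_1) + b_2$$

where $W_1$, $W_2$ and $b_1$, $b_2$ denote the weights and biases of the two layers, respectively, and $\delta$ denotes the activation function between them. Finally, $z$ is divided into two vectors, i.e., $z^{a}$ and $z^{b}$, and the weights are calculated by:

$$\alpha_c = \frac{e^{z^{a}_c}}{e^{z^{a}_c} + e^{z^{b}_c}}, \quad \beta_c = \frac{e^{z^{b}_c}}{e^{z^{a}_c} + e^{z^{b}_c}}$$

Weighted fusion: the fused feature map $Y$ is obtained by weighting $\tilde{U}$ and $\hat{U}$:

$$Y_c = \alpha_c \cdot \tilde{U}_c + \beta_c \cdot \hat{U}_c$$

where $Y = [Y_1, Y_2, \ldots, Y_C]$, $Y_c$ denotes the $c$-th channel of $Y$, and $\alpha_c + \beta_c = 1$.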

In addition, the output of CTAFK was input to the CTAFK in the next stage to transfer the inductive bias of convolution and the learned location information.
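The three steps of the CTAFK workflow (integration, adaptive weight calculation, weighted fusion) can be sketched in NumPy as below. This is a hedged illustration rather than the authors' implementation: the function name, the ReLU between the two fully connected layers, the hidden width, and the use of a per-channel softmax over the two branches are assumptions, and the inputs are taken to be the local and global feature maps after their channels have been unified.

```python
import numpy as np


def adaptive_fusion(local_feat, global_feat, w1, b1, w2, b2):
    """Fuse two (C, H, W) feature maps with channel-wise adaptive weights.

    w1: (R, C), b1: (R,), w2: (2C, R), b2: (2C,), with R a hidden width (assumed).
    Returns the fused map and the two weight vectors (each of shape (C,)).
    """
    c = local_feat.shape[0]
    u = local_feat + global_feat                  # integrate the two branches
    s = u.mean(axis=(1, 2))                       # squeeze: global average pooling
    hidden = np.maximum(w1 @ s + b1, 0.0)         # first FC layer + ReLU (assumed)
    z = w2 @ hidden + b2                          # second FC layer, length 2C
    z_local, z_global = z[:c], z[c:]              # one score per branch and channel
    m = np.maximum(z_local, z_global)             # subtract the max for stability
    e_local, e_global = np.exp(z_local - m), np.exp(z_global - m)
    a = e_local / (e_local + e_global)
    b = e_global / (e_local + e_global)
    fused = a[:, None, None] * local_feat + b[:, None, None] * global_feat
    return fused, a, b


# Example with C = 6 channels and a hidden width of 3.
rng = np.random.default_rng(0)
local_feat = rng.normal(size=(6, 8, 8))
global_feat = rng.normal(size=(6, 8, 8))
w1, b1 = rng.normal(size=(3, 6)), np.zeros(3)
w2, b2 = rng.normal(size=(12, 3)), np.zeros(12)
fused, a, b = adaptive_fusion(local_feat, global_feat, w1, b1, w2, b2)
```

Because the two weights of each channel sum to one, every fused channel is a convex combination of its local and global versions; fixed strategies such as plain addition correspond to freezing both weights at a constant, which is the special case the adaptive strategy is meant to improve on.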

2.2.3. Comparison Methods

Nine representative deep learning models were selected for comparison. Four of them are popular solutions for semantic segmentation tasks in the computer vision domain: UNet [45], Deeplab v3+ [52], SegFormer [53], and Swin-UNet [54]. The remaining five are advanced models for current HSI classification tasks: UNet-m-se(prelu)-gan [44] (hereinafter referred to as UNet-m), SS3FCN [42], ENLFCN [26], SSDGL [55], and FSSANet [31]. Among them, UNet and SS3FCN are based on two-dimensional and three-dimensional convolution, respectively. Deeplab v3+ directly expands the receptive field of the convolution kernel; UNet-m, ENLFCN, and SSDGL introduce the attention mechanism; and SegFormer, Swin-UNet, and FSSANet are based on the Transformer. Please refer to the corresponding papers for details and parameter settings of the models.

3. Experiments and Analysis

3.1. Implementation Details and Metrics

During training, all models used the He normal [56] method to initialize the weight parameters and the AdamW [57] optimizer to optimize them. The initial learning rate was set to 0.001, and the weight decay was set to 0.0001. A weighted cross-entropy loss function (Equation (14)) was used to handle the unspecified class in the dataset. Note that we used the same loss function and optimizer for all models to ensure a fair comparison. All models were implemented in the PyTorch 1.10 open-source deep learning framework using an NVIDIA GeForce RTX 3070 GPU with 8 GB of memory.

where is the number of classes, and are the real and the predicted classes, respectively, is the number of unspecified sample, and is the sample size of a single class.
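The general mechanism of a class-weighted cross-entropy can be sketched as follows. The exact weights used in this paper are those of Equation (14) and depend on the sample counts; the sketch below does not reproduce them. Treating class 0 as the unspecified class and giving it a weight of zero is purely an illustrative assumption, as are the function name and the normalization by the sum of the applied weights.

```python
import numpy as np


def weighted_cross_entropy(logits, targets, class_weights):
    """Class-weighted cross-entropy averaged over the weighted samples.

    logits: (N, K) raw scores; targets: (N,) integer labels; class_weights: (K,).
    At least one target must have a non-zero weight.
    """
    logits = np.asarray(logits, dtype=float)
    targets = np.asarray(targets)
    w = np.asarray(class_weights, dtype=float)[targets]      # weight of each sample
    shifted = logits - logits.max(axis=1, keepdims=True)     # stable log-softmax
    log_prob = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    nll = -log_prob[np.arange(len(targets)), targets]
    return float((w * nll).sum() / w.sum())


# Example: class 0 plays the role of the unspecified class (assumed weight 0).
logits = np.zeros((4, 3))
targets = np.array([0, 1, 2, 0])
loss = weighted_cross_entropy(logits, targets, class_weights=[0.0, 1.0, 1.0])
```

With a zero weight, pixels of the unspecified class contribute nothing to the loss or its gradient, so the model is never rewarded or penalized for its predictions there.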

In this paper, the pixel accuracy, the intersection over union (IoU) of each class, and IoU averaged over all classes (mIoU) are selected as the evaluation metrics for the quantitative evaluation.
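These three metrics can all be derived from a confusion matrix, as in the NumPy sketch below. The function names and the optional `ignore_index` argument (for excluding unspecified pixels) are assumptions for illustration; the text does not state how unspecified pixels are treated during evaluation.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, num_classes, ignore_index=None):
    """Rows are true classes, columns are predicted classes."""
    y_true = np.asarray(y_true).ravel()
    y_pred = np.asarray(y_pred).ravel()
    if ignore_index is not None:
        keep = y_true != ignore_index
        y_true, y_pred = y_true[keep], y_pred[keep]
    index = y_true * num_classes + y_pred
    counts = np.bincount(index, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)


def segmentation_metrics(cm):
    """Return (pixel accuracy, per-class IoU, mIoU) from a confusion matrix."""
    tp = np.diag(cm).astype(float)
    pixel_accuracy = tp.sum() / cm.sum()
    union = cm.sum(axis=0) + cm.sum(axis=1) - tp      # TP + FP + FN per class
    iou = np.where(union > 0, tp / np.maximum(union, 1), np.nan)
    return pixel_accuracy, iou, np.nanmean(iou)       # absent classes are skipped


# Example with three classes and six pixels.
cm = confusion_matrix([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], num_classes=3)
pixel_accuracy, iou, miou = segmentation_metrics(cm)
```

Pixel accuracy is dominated by the large classes, whereas mIoU weights every class equally, which is why the two metrics can rank models differently on imbalanced datasets.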

3.2. Experiment Result

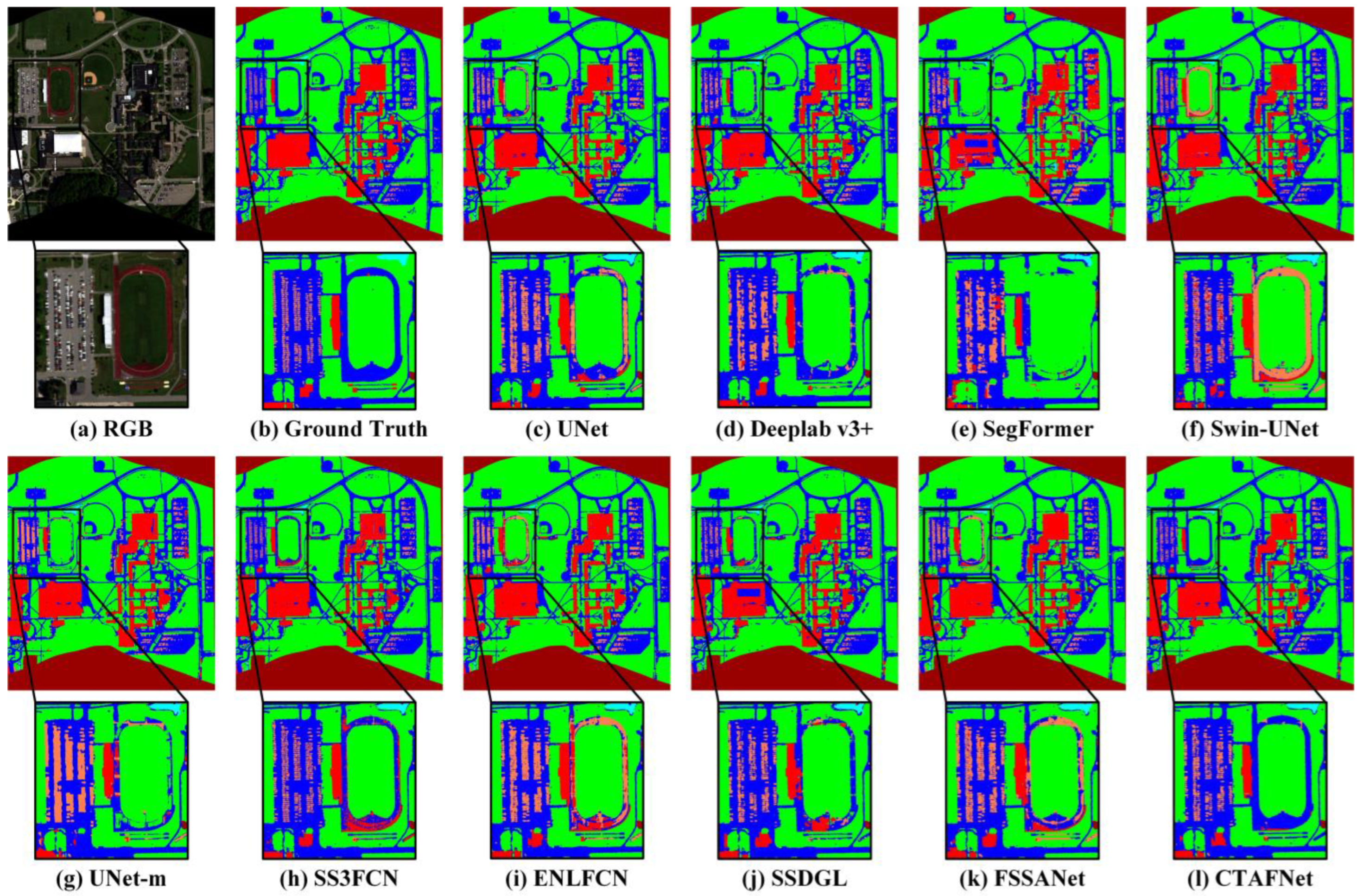

3.2.1. Experiment Results on AeroRIT Dataset

Figure 7 shows the visual comparison of different methods on the AeroRIT test set. Overall, the classification map obtained through CTAFNet is highly consistent with the ground truth, and there are few misclassifications. The classification map generated by CTAFNet is the smoothest, while those of ENLFCN and SS3FCN contain more salt-and-pepper noise. As can be seen in the enlarged images, CTAFNet correctly extracted the racetrack and effectively preserved its shape. The car boundaries obtained from CTAFNet and SS3FCN are clearer than those from the other methods, and the object edges generated by CTAFNet are the closest to the ground truth.

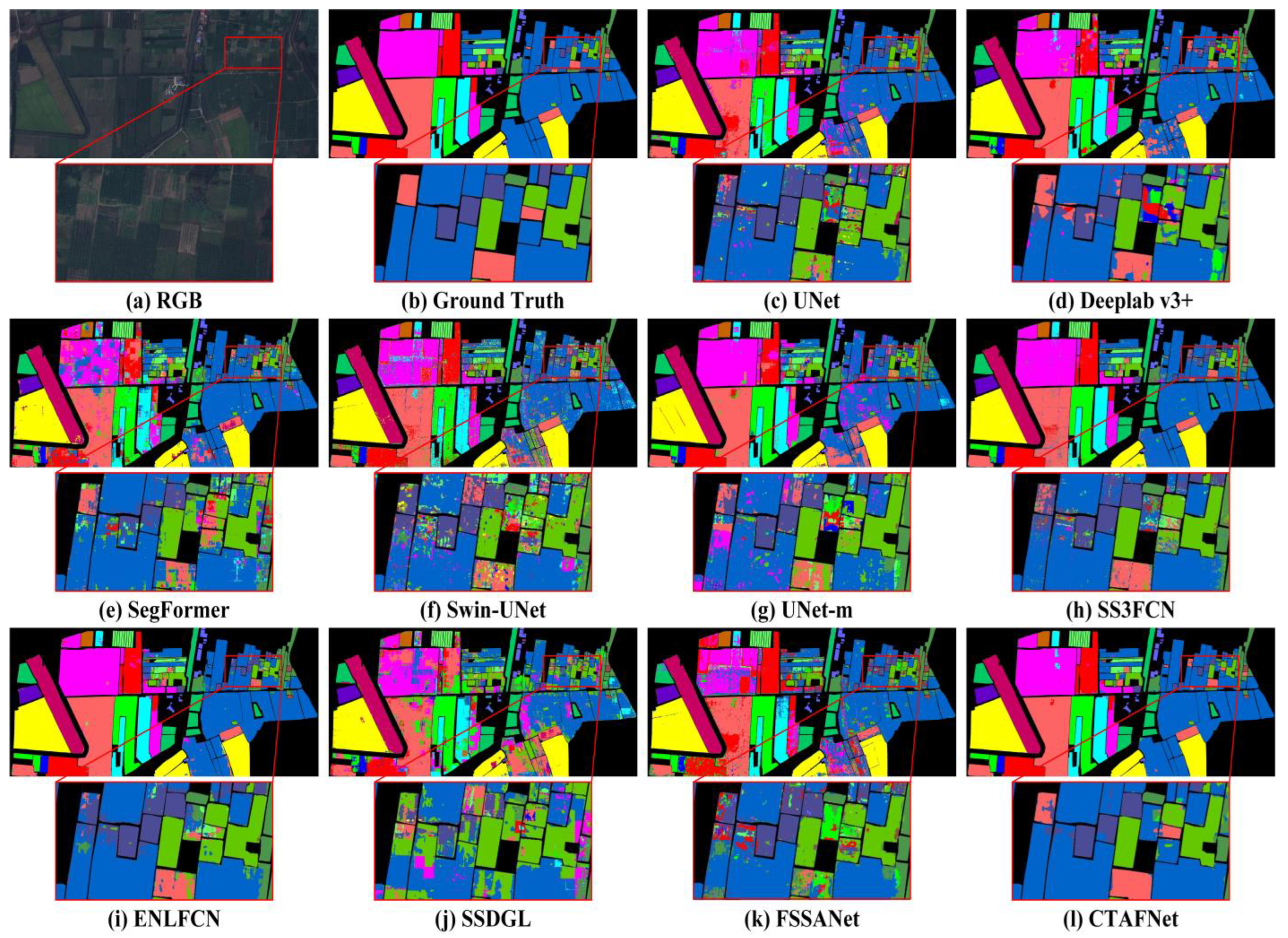

Figure 7.

Visual comparison of different methods on the AeroRIT test set.

Table 5 shows the experimental results of different methods on the AeroRIT test set. The results demonstrate that the proposed CTAFNet achieves the best performance, with 95.07% pixel accuracy and 81.41% mIoU. Specifically, CTAFNet exceeds the second-best model, SS3FCN, by 0.36% in pixel accuracy and 1.69% in mIoU, while SegFormer has the worst performance, with 88.51% pixel accuracy and 57.32% mIoU. CTAFNet achieved the best score in four classes, namely Buildings, Roads, Water, and Cars, where it is 1.10%, 0.71%, 1.53%, and 1.20% higher than the second-best result, respectively.

Table 5.

Classification results for the AeroRIT test set.

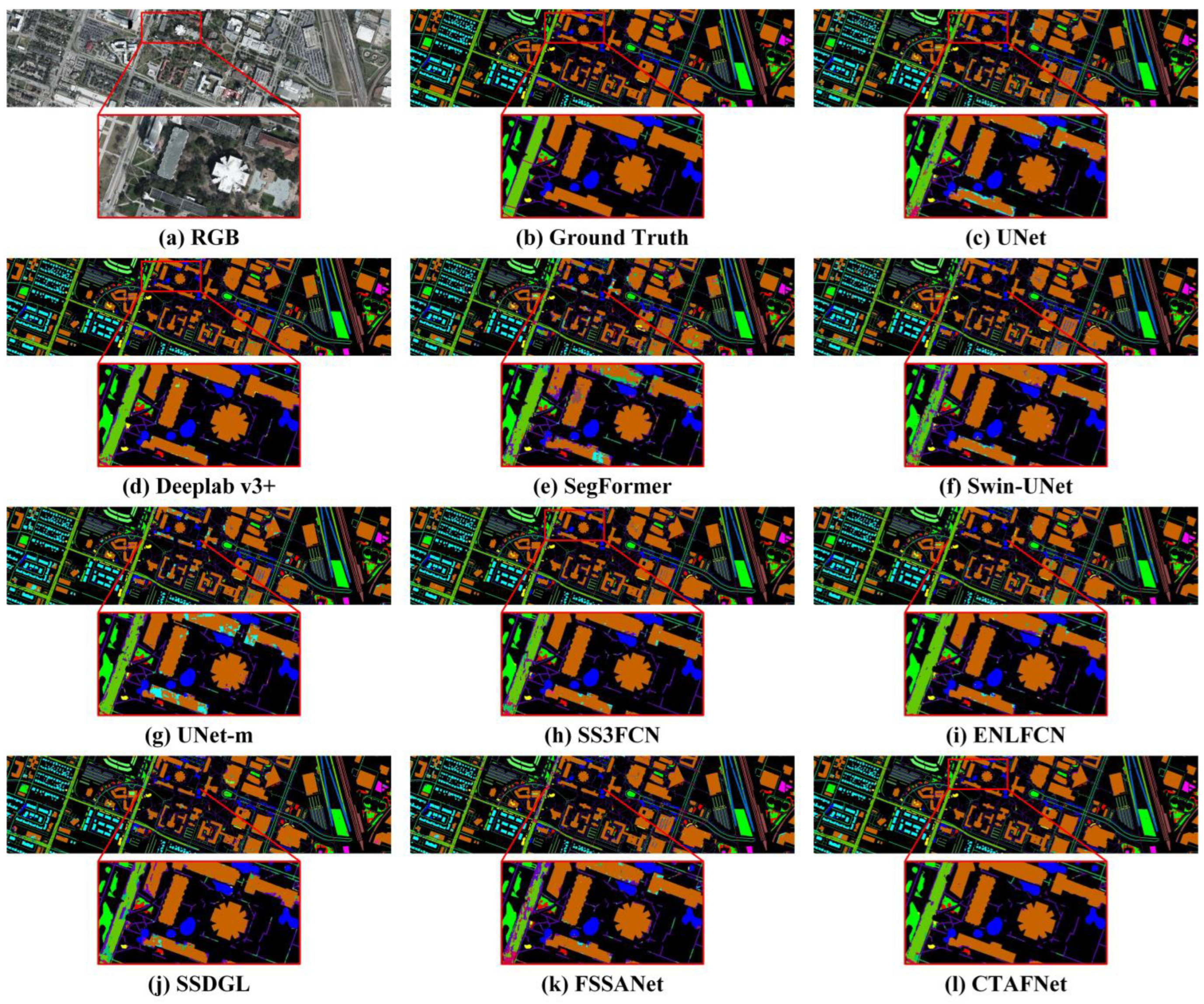

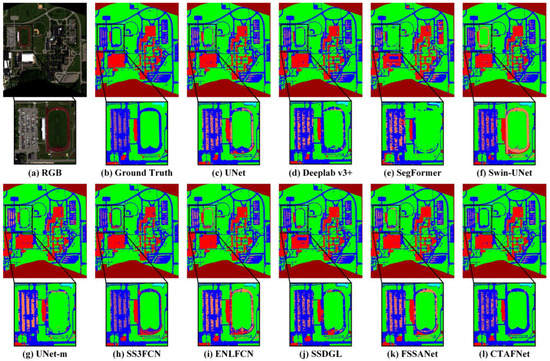

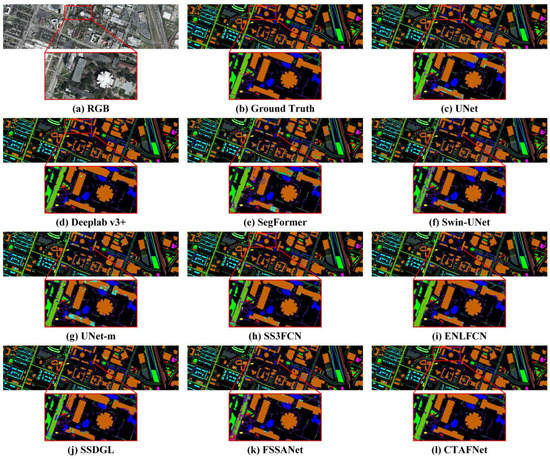

3.2.2. Experiment Results on DFC2018 Dataset

Figure 8 shows the visual comparison of different methods on the DFC2018 dataset. CTAFNet obtained a more accurate classification map than the other methods. Moreover, the classification map generated by CTAFNet is the cleanest, with little noise in buildings and roads, while the classification maps generated by the other methods contain noticeable noise.

Figure 8.

Visual comparison of different methods on the DFC2018 dataset.

Table 6 shows the experimental results of different methods on the DFC2018 test set. The results demonstrate that the proposed CTAFNet achieves the best performance, with 92.59% pixel accuracy and 82.10% mIoU. Specifically, the pixel accuracy and mIoU of CTAFNet are 1.47% and 2.50% higher than those of the second-best model, SS3FCN, respectively. SegFormer has the worst performance, achieving only 73.44% pixel accuracy and 58.11% mIoU. In terms of the classification accuracy of each class, CTAFNet obtained the best score in 12 of the 17 classes. Among them, the IoU for residential buildings, major thoroughfares, and cars was far higher than the second-best result (7.46%, 10.93%, and 8.57% higher, respectively).

Table 6.

Classification results for the DFC2018 test set.

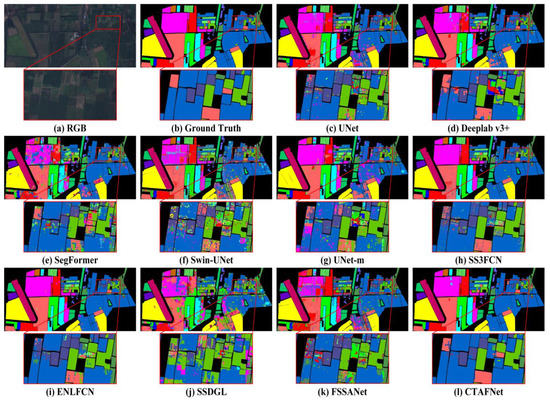

3.2.3. Experiment Results on Xiongan Dataset

Figure 9 shows the visual comparison of different methods on the Xiongan dataset. The classification map obtained by CTAFNet is highly consistent with the ground truth and contains little noise. Compared with CTAFNet, the three Transformer-based models (SegFormer, Swin-UNet, and FSSANet) produce more noise and misclassifications. As can be seen in the enlarged area, CTAFNet classifies the Grass region (pink block in the middle) completely, without any omissions or errors, which indicates that CTAFNet can effectively integrate the advantages of CNN and Transformer.

Figure 9.

Visual comparison of different methods on the Xiongan dataset.

Table 7 shows the experimental results of different methods on the Xiongan test set. The results demonstrate that the proposed CTAFNet achieves the best performance, with 96.17% pixel accuracy and 86.84% mIoU. Specifically, the pixel accuracy and mIoU of CTAFNet are 1.80% and 3.98% higher than those of the second-best model, ENLFCN, respectively. SegFormer has the worst performance, achieving only 66.58% pixel accuracy and 42.37% mIoU. In terms of the classification accuracy of each class, CTAFNet obtained the best score in 10 of the 18 classes. Among them, its IoU scores on the 3rd, 12th, 13th, 16th, and 17th categories of ground objects far exceeded those of the second-best model, being 6.43%, 6.93%, 11.04%, 4.29%, and 14.70% higher, respectively.

Table 7.

Classification results for the Xiongan test set.

4. Discussion

4.1. Ablation Study

To verify the effectiveness of the proposed adaptive hybrid strategy, we conducted ablation experiments on CTAFK. Models with different hybrid strategies were used as backbone networks in CTAFNet. The experimental results on the three datasets are shown in Table 8. CCTT denotes stacking two layers of convolution followed by two layers of Transformer encoder. Add denotes the direct element-wise addition of the local and global feature maps. Cat denotes the concatenation of the local and global feature maps along the spectral axis. Adapt denotes the adaptive hybrid strategy proposed in this paper. The results show that the pure CNN model achieves much better performance than the pure Transformer model. A possible explanation is that the Transformer requires more training data, and the current open-source HSI datasets are not large enough to train a pure Transformer model with good performance [33]. When using a CNN–Transformer hybrid architecture, selecting an unsuitable hybrid strategy may cause performance degradation. For example, the hybrid model with the Add strategy performs worse than the pure CNN model on all three datasets, the hybrid model with the CCTT strategy performs poorly on the AeroRIT and DFC2018 datasets, and the hybrid model with the Cat strategy performs worse than the pure CNN model on the DFC2018 dataset. The reason might be that the attention mechanism mixes the intra-class and inter-class contexts when extracting the global information [58], and the artificially specified hybrid strategies cannot distinguish between different contexts, which leads to poor generalization ability of the model. By contrast, the proposed adaptive hybrid strategy can enhance the useful context information by adjusting the weights, thus extracting more generalized and discriminative features and overcoming the limitations of artificially specified hybrid strategies. The model using the adaptive hybrid strategy achieves the best classification accuracy on all three datasets, which supports the effectiveness of the proposed strategy.

Table 8.

Backbone network ablation experiment.

4.2. Parameter Sensitivity Analysis

CTAFNet contains two hyperparameters, i.e., the number of heads in MSA and the number of channels. To understand the sensitivity of CTAFNet to these hyperparameters, we tested the performance of different configurations in HSI classification. Table 9 shows the impact of the number of heads in MSA on CTAFNet performance. As the number of heads increases, the two performance indicators first increase and then decrease. This tendency is consistent across datasets. Table 10 shows the impact of the number of channels on the performance of CTAFNet. The results show that, as the number of channels increases, the performance of the model also first increases and then decreases. This is possibly because the complexity of the model grows with the number of heads in MSA and the number of channels, and overfitting may occur when the model becomes too complex. When the number of heads in MSA is set to 2 and the number of channels is set to 96, CTAFNet performs best on the three datasets. Since the optimal hyperparameters are the same across the three datasets, no additional parameter tuning is needed, and the proposed CTAFNet is readily applicable to different scenes.

Table 9.

Influence of the number of heads in MSA on the performance of CTAFNet.

Table 10. Influence of the number of channels on the performance of CTAFNet.

4.3. Generalization of CTAFNet on Small Dataset

To verify the generalization of CTAFNet on small datasets, this section compares CTAFNet with nine methods on the widely used Salinas dataset; the results are shown in Table 11. The proposed CTAFNet achieves the best performance, with 94.62% pixel accuracy and 95.70% mIoU, indicating that it generalizes well to small datasets. In addition, Deeplab v3+, which performed well on the three large datasets, performed poorly on the Salinas dataset. A possible reason is that Deeplab v3+ has a large number of parameters, so overfitting occurs when fewer training samples are available.
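For reference, the two indicators can be computed from a confusion matrix as sketched below. This uses the commonly used definitions of pixel accuracy and mean intersection over union (mIoU) and is assumed, not confirmed, to match the evaluation code of this study; the function names and the toy labels are illustrative.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """cm[t][p] counts pixels of true class t predicted as class p."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm

def pixel_accuracy(cm):
    """Fraction of all pixels that are classified correctly."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total

def mean_iou(cm):
    """Mean over classes of TP / (TP + FP + FN); absent classes are skipped."""
    n = len(cm)
    ious = []
    for i in range(n):
        tp = cm[i][i]
        fn = sum(cm[i]) - tp
        fp = sum(cm[r][i] for r in range(n)) - tp
        denom = tp + fp + fn
        if denom > 0:
            ious.append(tp / denom)
    return sum(ious) / len(ious)

# Toy labels for six pixels and three classes.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
cm = confusion_matrix(y_true, y_pred, num_classes=3)
print(cm)                            # [[1, 1, 0], [0, 2, 0], [1, 0, 1]]
print(round(pixel_accuracy(cm), 4))  # 0.6667
print(round(mean_iou(cm), 4))        # 0.5
```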

Table 11. Comparison of generalization performance on the Salinas dataset.

4.4. Limitations and Future Works

Although the proposed CTAFNet outperforms the other models, some limitations remain to be addressed. For example, the boundaries of ground objects generated by this method are not as clear as those generated by SS3FCN. A possible reason is that CTAFNet uses max pooling layers to reduce the computational cost of the model, which results in the loss of boundary information. By comparison, SS3FCN does not involve any downsampling operation, which helps preserve shapes and boundaries. Current research in deep learning shows that introducing post-processing (such as conditional random fields [59]) or improving the loss function (such as using a Hausdorff distance loss [60]) can help solve this problem. It is of interest to further improve CTAFNet with these methods to better preserve the boundaries.
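The effect of downsampling on boundaries can be seen in a one-dimensional toy example. The sketch below is an illustration under simplifying assumptions: it applies a single max pooling step followed by nearest-neighbour upsampling to a step profile, and it does not model the CTAFNet decoder or any learned layers.

```python
def max_pool_1d(signal, size=2):
    """Non-overlapping max pooling (stride equals window size)."""
    return [max(signal[i:i + size]) for i in range(0, len(signal), size)]

def upsample_nearest_1d(signal, factor=2):
    """Nearest-neighbour upsampling: repeat every value `factor` times."""
    return [value for value in signal for _ in range(factor)]

def first_edge(signal):
    """Index of the first sample that differs from its predecessor."""
    for i in range(1, len(signal)):
        if signal[i] != signal[i - 1]:
            return i
    return None

# A step profile whose boundary lies inside a pooling window.
profile = [0, 0, 0, 1, 1, 1, 1, 1]
restored = upsample_nearest_1d(max_pool_1d(profile))

print(restored)              # [0, 0, 1, 1, 1, 1, 1, 1]
print(first_edge(profile))   # 3
print(first_edge(restored))  # 2
```

The boundary moves by one sample because the pooled representation no longer records where inside the window the transition occurred.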

Although the proposed CTAFNet achieves the best overall accuracy, the classification results are relatively poor for categories with a small number of samples (for example, vegetation in the aerial dataset, crosswalks in the DFC2018 dataset, and Fraxinus chinensis in the Xiongan dataset). A possible reason is that the objective of model training is to optimize the overall accuracy rather than the accuracy of a specific class. Therefore, the trained model is biased towards the classes with large numbers of samples and away from the classes with small numbers of samples. Future work will focus on addressing the problem of sample imbalance through data augmentation and dynamic weighting in the loss function [61].
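One simple way to counter this bias is to weight each class in the loss by the inverse of its frequency. The sketch below shows this static weighting as a stand-in for illustration; it is not the dynamic, difficulty-based weighting of [61], and the function names and toy labels are hypothetical.

```python
import math
from collections import Counter

def inverse_frequency_weights(labels, num_classes):
    """Weight each class by total / (num_classes * class_count)."""
    counts = Counter(labels)
    total = len(labels)
    return [
        total / (num_classes * counts[c]) if counts[c] else 0.0
        for c in range(num_classes)
    ]

def weighted_cross_entropy(probs, labels, weights):
    """Weighted mean of -log p(true class) over all pixels.

    probs[i] is the predicted class-probability vector of pixel i.
    """
    num = sum(weights[y] * -math.log(p[y]) for p, y in zip(probs, labels))
    den = sum(weights[y] for y in labels)
    return num / den

# Toy imbalance: eight pixels of class 0 and two of class 1.
labels = [0] * 8 + [1] * 2
weights = inverse_frequency_weights(labels, num_classes=2)
print(weights)  # [0.625, 2.5]

# Confident on the frequent class, poor on the rare class.
probs = [[0.9, 0.1]] * 8 + [[0.8, 0.2]] * 2
unweighted = weighted_cross_entropy(probs, labels, [1.0, 1.0])
weighted = weighted_cross_entropy(probs, labels, weights)
print(weighted > unweighted)  # True
```

With these weights each class contributes equally to the loss in total, so errors on the rare class are no longer averaged away by the frequent one.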

In this paper, we compared the HSI classification accuracy of nine state-of-the-art models on three large-scale and challenging datasets to provide insight into their performance. Beyond these models, many deep learning-based HSI classification methods have been developed in recent years [17,18,19,20,23,24,25,27,28,30,35,36,37]. A cross-comparison of these methods would help users select the most suitable method for a specific application and assist scholars in designing advanced models. Such a comparison requires a large number of datasets covering challenging cases in HSI classification, e.g., images with fine-grained objects, sample imbalance, inter-class variation, and cloud contamination. However, due to the high cost of HSI acquisition and labeling [62], the number of samples in the datasets used here is still small compared with datasets in the field of computer vision [63,64]. A recent study has developed a high-quality HSI classification benchmark dataset [65], which provides a starting point for constructing a standard dataset. We plan to evaluate more HSI classification models after this dataset is released for public use, so as to further understand the comprehensive performance differences between models.

5. Conclusions

In this article, a novel CTAFNet is proposed for HSI classification. We designed a CTAF module that captures the local high-frequency features using a convolution module and extracts the global and sequential low-frequency information using a Transformer module. The local high-frequency and global low-frequency features are then adaptively weighted and fused to provide a more robust and discriminative representation of the HSI data. An encoder–decoder structure was adopted in the CTAFNet to improve the flow of fused local–global information between different stages, thus ensuring the generalization ability of the model. Experimental results on three large-scale, challenging HSI datasets demonstrate that the proposed network is superior to nine state-of-the-art approaches. The developed adaptive feature fusion strategy can effectively overcome the limitations of existing hybrid strategies and improve the accuracy of HSI classification. Our research provides a promising method for HSI classification and insights into the comprehensive performance differences between models.

Author Contributions

Conceptualization, J.L., H.X. and H.W.; methodology, J.L.; data curation, J.L.; writing—original draft preparation, J.L. and Z.A.; writing—review and editing, J.L., Z.A. and W.L.; funding acquisition, H.X. and A.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Nos. 41971406, 42071246, 42271470), the Natural Science Foundation of Hebei Province, China (No. D2021402007), and the Guangdong Basic and Applied Basic Research Foundation (No. 2022A1515011586).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The AeroRIT dataset utilized in this study is freely available at https://github.com/aneesh3108/AeroRIT (accessed on 25 October 2022). The DFC2018 dataset utilized in this study is freely available at https://hyperspectral.ee.uh.edu/?page_id=1075 (accessed on 25 October 2022). The Xiongan dataset utilized in this study is freely available at http://www.hrs-cas.com/a/share/shujuchanpin/2019/0501/1049.html (accessed on 25 October 2022). The Salinas dataset utilized in this study is freely available at http://www.ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes (accessed on 25 October 2022).

Acknowledgments

The authors thank the Hyperspectral Image Analysis Laboratory at the University of Houston, as well as the IEEE GRSS Image Analysis and Data Fusion Technical Committee for acquiring and providing the HSI data used in this study.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Li, X.; Li, Z.; Qiu, H.; Hou, G.; Fan, P. An Overview of Hyperspectral Image Feature Extraction, Classification Methods and The Methods Based on Small Samples. Appl. Spectrosc. Rev. 2021, 11, 1–34.

2. Khan, M.J.; Khan, H.S.; Yousaf, A.; Khurshid, K.; Abbas, A. Modern Trends in Hyperspectral Image Analysis: A Review. IEEE Access 2018, 6, 14118–14129.

3. Adão, T.; Hruška, J.; Pádua, L.; Bessa, J.; Peres, E.; Morais, R.; Sousa, J.J. Hyperspectral Imaging: A Review on UAV-Based Sensors, Data Processing and Applications for Agriculture and Forestry. Remote Sens. 2017, 9, 1110.

4. Krupnik, D.; Khan, S. Close-Range, Ground-Based Hyperspectral Imaging for Mining Applications at Various Scales: Review and Case Studies. Earth-Sci. Rev. 2019, 198, 102952.

5. Liu, B.; Liu, Z.; Men, S.; Li, Y.; Ding, Z.; He, J.; Zhao, Z. Underwater Hyperspectral Imaging Technology and Its Applications for Detecting and Mapping the Seafloor: A Review. Sensors 2020, 20, 4962.

6. Chen, Y.; Xing, Z.; Jia, X. Spectral-Spatial Classification of Hyperspectral Data Based on Deep Belief Network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2381–2392.

7. Li, S.; Song, W.; Fang, L.; Chen, Y.; Ghamisi, P.; Benediktsson, J.A. Deep Learning for Hyperspectral Image Classification: An Overview. IEEE Trans. Geosci. Remote Sens. 2019, 57, 6690–6709.

8. Prasad, S.; Bruce, L.M. Limitations of Principal Components Analysis for Hyperspectral Target Recognition. IEEE Geosci. Remote Sens. Lett. 2008, 5, 625–629.

9. Li, W.; Prasad, S.; Fowler, J.E.; Bruce, L.M. Locality-Preserving Dimensionality Reduction and Classification for Hyperspectral Image Analysis. IEEE Trans. Geosci. Remote Sens. 2012, 50, 1185–1198.

10. Liao, W.; Pizurica, A.; Scheunders, P.; Philips, W.; Pi, Y. Semisupervised Local Discriminant Analysis for Feature Extraction in Hyperspectral Images. IEEE Trans. Geosci. Remote Sens. 2013, 51, 184–198.

11. Al-khafaji, S.L.; Zhou, J.; Zia, A.; Liew, A.W. Spectral-Spatial Scale Invariant Feature Transform for Hyperspectral Images. IEEE Trans. Image Process. 2018, 27, 837–850.

12. Li, W.; Chen, C.; Su, H.; Du, Q. Local Binary Patterns and Extreme Learning Machine for Hyperspectral Imagery Classification. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3681–3693.

13. Gu, Y.; Liu, H. Sample-Screening MKL Method via Boosting Strategy for Hyperspectral Image Classification. Neurocomputing 2016, 173, 1630–1639.

14. Melgani, F.; Bruzzone, L. Classification of Hyperspectral Remote Sensing Images with Support Vector Machines. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1778–1790.

15. Ham, J.; Chen, Y.; Crawford, M.M.; Ghosh, J. Investigation of the Random Forest Framework for Classification of Hyperspectral Data. IEEE Trans. Geosci. Remote Sens. 2005, 43, 492–501.

16. Zhou, Y.; Peng, J.; Chen, C.L.P. Extreme Learning Machine with Composite Kernels for Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2351–2360.

17. Hu, W.; Huang, Y.; Wei, L.; Zhang, F.; Li, H. Deep Convolutional Neural Networks for Hyperspectral Image Classification. J. Sens. 2015, 2015, 258619.

18. Zhao, W.; Du, S. Spectral-Spatial Feature Extraction for Hyperspectral Image Classification: A Dimension Reduction and Deep Learning Approach. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4544–4554.

19. Chen, Y.; Jiang, H.; Li, C.; Jia, X.; Ghamisi, P. Deep Feature Extraction and Classification of Hyperspectral Images Based on Convolutional Neural Networks. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6232–6251.

20. Zhang, H.; Li, Y.; Zhang, Y.; Shen, Q. Spectral-Spatial Classification of Hyperspectral Imagery Using a Dual-Channel Convolutional Neural Network. Remote Sens. Lett. 2017, 8, 438–447.

21. Li, J.S.; Xia, X.; Li, W. Next-ViT: Next Generation Vision Transformer for Efficient Deployment in Realistic Industrial Scenarios. arXiv 2022, arXiv:2207.05501v2.

22. Wang, H.; Wu, X.; Huang, Z.; Xing, E.P. High-Frequency Component Helps Explain the Generalization of Convolutional Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 8681–8691.

23. Zhao, F.; Zhang, J.; Meng, Z.; Liu, H. Densely Connected Pyramidal Dilated Convolutional Network for Hyperspectral Image Classification. Remote Sens. 2021, 13, 3396.

24. Liu, D.; Han, G.; Liu, P.; Yang, H.; Sun, X.; Li, Q.; Wu, J. A Novel 2D-3D CNN with Spectral-Spatial Multi-Scale Feature Fusion for Hyperspectral Image Classification. Remote Sens. 2021, 13, 4621.

25. Fang, B.; Li, Y.; Zhang, H.; Chan, J.C.-W. Hyperspectral Images Classification Based on Dense Convolutional Networks with Spectral-Wise Attention Mechanism. Remote Sens. 2019, 11, 159.

26. Shen, Y.; Zhu, S.; Chen, C.; Du, Q.; Xiao, L.; Chen, J.; Pan, D. Efficient Deep Learning of Nonlocal Features for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2021, 59, 6029–6043.

27. Wang, L.; Peng, J.; Sun, W. Spatial-Spectral Squeeze-and-Excitation Residual Network for Hyperspectral Image Classification. Remote Sens. 2019, 11, 884.

28. Xue, Z.H.; Xu, Q.; Zhang, M.X. Local Transformer with Spatial Partition Restore for Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 4307–4325.

29. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All You Need. arXiv 2017, arXiv:1706.03762.

30. Qing, Y.; Liu, W.; Feng, L.; Gao, W. Improved Transformer Net for Hyperspectral Image Classification. Remote Sens. 2021, 13, 2216.

31. Sun, J.; Zhang, J.; Gao, X.; Wang, M.; Ou, D.; Wu, X.; Zhang, D. Fusing Spatial Attention with Spectral-Channel Attention Mechanism for Hyperspectral Image Classification via Encoder-Decoder Networks. Remote Sens. 2022, 14, 1968.

32. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Virtual, 12 October 2021; pp. 10012–10022.

33. D’Ascoli, S.; Touvron, H.; Leavitt, M.; Morcos, A.; Biroli, G.; Sagun, L. ConViT: Improving Vision Transformers with Soft Convolutional Inductive Biases. arXiv 2021, arXiv:2103.10697.

34. Han, K.; Xiao, A.; Wu, E.; Guo, J.; Xu, C.; Wang, Y. Transformer in Transformer. arXiv 2021, arXiv:2103.00112.

35. Yang, L.; Yang, Y.; Yang, J.; Zhao, N.; Wu, L.; Wang, L.; Wang, T. FusionNet: A Convolution-Transformer Fusion Network for Hyperspectral Image Classification. Remote Sens. 2022, 14, 4066.

36. Sun, L.; Zhao, G.; Zheng, Y.; Wu, Z. Spectral-Spatial Feature Tokenization Transformer for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5522214.

37. Song, R.; Feng, Y.; Cheng, W.; Mu, Z.; Wang, X. BS2T: Bottleneck Spatial-Spectral Transformer for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5532117.

38. Park, N.; Kim, S. How Do Vision Transformers Work? arXiv 2022, arXiv:2202.06709.

39. LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444.

40. Nalepa, J.; Myller, M.; Kawulok, M. Validating Hyperspectral Image Segmentation. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1264–1268.

41. Liang, J.; Zhou, J.; Qian, Y.; Wen, L.; Bai, X.; Gao, Y. On the Sampling Strategy for Evaluation of Spectral-Spatial Methods in Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 862–880.

42. Zou, L.; Zhu, X.; Wu, C.; Liu, Y.; Qu, L. Spectral-Spatial Exploration for Hyperspectral Image Classification via the Fusion of Fully Convolutional Networks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 659–674.

43. Rangnekar, A.; Mokashi, N.; Ientilucci, E.J.; Kanan, C.; Hoffman, M.J. AeroRIT: A New Scene for Hyperspectral Image Analysis. IEEE Trans. Geosci. Remote Sens. 2020, 58, 8116–8124.

44. Cen, Y.; Zhang, L.; Zhang, X.; Wang, Y.; Qi, W.; Tang, S.; Zhang, P. Aerial Hyperspectral Remote Sensing Classification Dataset of Xiongan New Area (Matiwan Village). J. Remote Sens. 2020, 24, 10–17.

45. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Lecture Notes in Computer Science in Medical Image Computing and Computer-Assisted Intervention; Springer: Cham, Switzerland, 2015; Volume 9351, pp. 234–241.

46. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

47. Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the International Conference on Machine Learning (ICML), Lille, France, 6–11 July 2015; pp. 448–456.

48. Glorot, X.; Bordes, A.; Bengio, Y.S. Deep Sparse Rectifier Neural Networks. In Proceedings of the International Conference on Artificial Intelligence and Statistics (AISTATS), Fort Lauderdale, FL, USA, 11–13 April 2011; pp. 315–323. Available online: http://proceedings.mlr.press/v15/glorot11a/glorot11a.pdf (accessed on 25 October 2022).

49. Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer Normalization. arXiv 2016, arXiv:1607.06450.

50. Hendrycks, D.; Gimpel, K. Gaussian Error Linear Units (GELUs). arXiv 2016, arXiv:1606.08415.

51. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

52. Chen, L.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. arXiv 2018, arXiv:1802.02611v3.

53. Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers. arXiv 2021, arXiv:2105.15203v2.

54. Cao, H.; Wang, Y.; Chen, J.; Jiang, D.; Zhang, X.; Tian, Q.; Wang, M. Swin-Unet: Unet-like Pure Transformer for Medical Image Segmentation. arXiv 2021, arXiv:2105.05537.

55. Zhu, Q.; Deng, W.; Zheng, Z.; Zhong, Y.; Guan, Q.; Lin, W.; Zhang, L.; Li, D. A Spectral-Spatial-Dependent Global Learning Framework for Insufficient and Imbalanced Hyperspectral Image Classification. IEEE Trans. Cybern. 2021, 52, 11709–11723.

56. He, K.; Zhang, X.; Ren, S.; Sun, J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1026–1034.

57. Loshchilov, I.; Hutter, F. Decoupled Weight Decay Regularization. arXiv 2017, arXiv:1711.05101.

58. Yu, C.; Wang, J.; Gao, C.; Yu, G.; Shen, C.; Sang, N. Context Prior for Scene Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 12413–12422.

59. Sun, Z.; Liu, M.; Liu, P.; Li, J.; Yu, T.; Gu, X.; Yang, J.; Mi, X.; Cao, W.; Zhang, Z. SAR Image Classification Using Fully Connected Conditional Random Fields Combined with Deep Learning and Superpixel Boundary Constraint. Remote Sens. 2021, 13, 271.

60. Karimi, D.; Salcudean, S.E. Reducing the Hausdorff Distance in Medical Image Segmentation with Convolutional Neural Networks. IEEE Trans. Med. Imaging 2020, 39, 499–513.

61. Sinha, S.; Ohashi, H.; Nakamura, K. Class-Wise Difficulty-Balanced Loss for Solving Class-Imbalance. In Proceedings of the Asian Conference on Computer Vision (ACCV), Kyoto, Japan, 30 November–4 December 2020; pp. 549–565.

62. Wambugua, N.; Chen, Y.; Xiao, Z.; Tan, K.; Wei, M.; Liu, X.; Li, J. Hyperspectral Image Classification on Insufficient-Sample and Feature Learning Using Deep Neural Networks: A Review. Int. J. Appl. Earth Obs. Geoinf. 2021, 105, 102603.

63. Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

64. Mottaghi, R.; Chen, X.; Liu, X.; Cho, N.; Lee, S.; Fidler, S.; Urtasun, R.; Yuille, A.L. The Role of Context for Object Detection and Semantic Segmentation in the Wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 24–27 June 2014; pp. 891–898.

65. Xu, Y.; Gong, J.; Huang, X.; Hu, X.; Li, J.; Li, Q.; Peng, M. Luojia-HSSR: A High Spatial-Spectral Resolution Remote Sensing Dataset for Land-Cover Classification with a New 3D-HRNet. Geo-Spat. Inf. Sci. 2022.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).