Abstract

Transcutaneous injection laryngoplasty is a well-known procedure for treating a paralyzed vocal fold by injecting augmentation material into it. Vocal fold localization therefore plays a vital role in preoperative planning, as the fold location is required to determine the optimal injection route. In this communication, we propose a mirror environment based reinforcement learning (RL) algorithm for localizing the right and left vocal folds in preoperative neck CT. RL-based methods have shown noteworthy outcomes in general anatomic landmark localization problems in recent years. However, such methods train an individual agent for each fold, even though the right and left vocal folds are located in close proximity and have high feature similarity. Utilizing the lateral symmetry between the right and left vocal folds, the proposed mirror environment allows a single agent to localize both folds by treating the left fold as a flipped version of the right fold. Thus, localization of both folds can be learned in a single training session that utilizes the inter-fold correlation and avoids redundant feature learning. Experiments with 120 CT volumes showed improved localization performance and training efficiency of the proposed method compared with the standard RL method.

1. Introduction

Vocal fold paresis is a common condition among older patients [1], characterized by paralysis of the right vocal fold (RVF), the left vocal fold (LVF), or both. In addition to causing voice discomfort and breathing and swallowing difficulty, it can harm the respiratory organs because of the glottal gap created by the paralyzed fold, and therefore requires immediate treatment [2]. One of the most common treatment procedures is transcutaneous injection laryngoplasty (TIL) [3], where the glottal gap is filled by injecting augmentation material into the affected vocal fold. During preoperative planning with neck CT, accurate localization of the vocal folds is required for estimating the optimal injection route [4]. Thus, an automatic vocal fold localization method using neck CT can be potentially useful for guiding and accelerating the preoperative planning process.

To the best of our knowledge, no computational approach currently exists for vocal fold localization or injection route identification. Some manual approaches can be found where vocal fold and needle routes are studied using neck CT across different patients [4] or a 3D printed larynx [5,6]. In general anatomical landmark localization problems, deep learning based heatmap regression methods are widely used, where spatial heatmaps around the landmarks are usually regressed [7]. However, such heatmaps constitute a negligible foreground region compared to the huge 3D background, causing sample bias [8]. In contrast, deep reinforcement learning (RL) based localization approaches suggest a sequential decision process involving a finer pixel-to-pixel navigation combined with deep feature learning, thereby producing improved predictions [9,10,11].

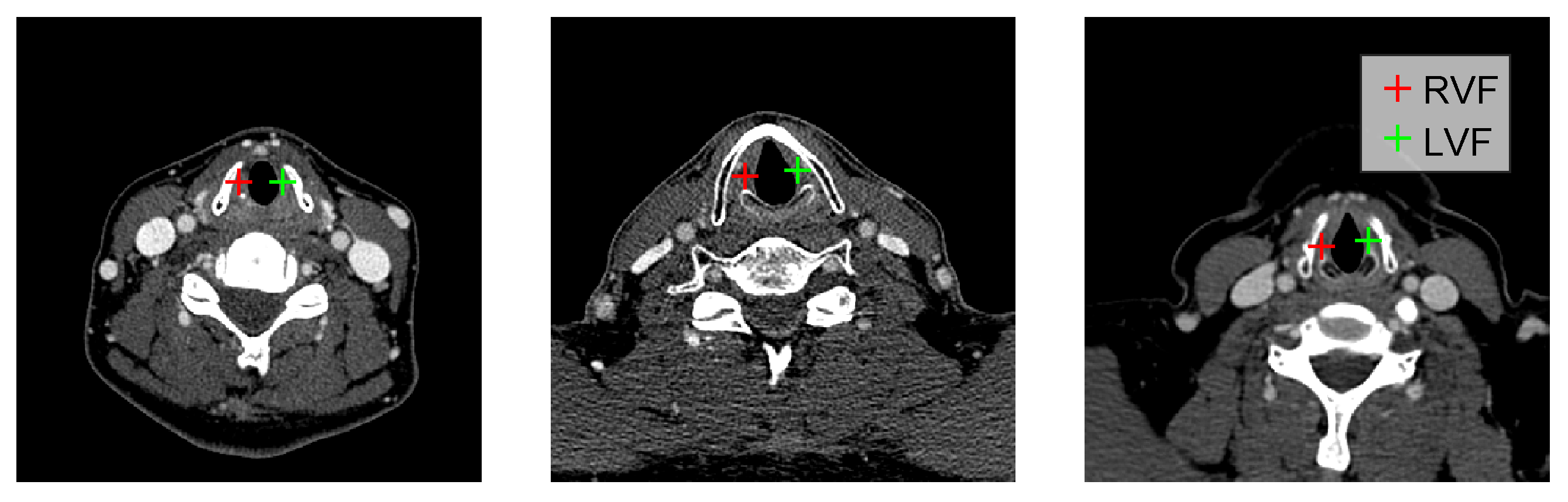

In this communication, we propose an RL-based method to localize the right and left folds in preoperative neck CT. Following the existing RL formulation [8,10,11], the proposed agent takes sequential steps to navigate through the voxel space and finally converge to the target fold location. Starting from a random initial position, the steps are decided based on local features learned by a deep convolutional neural network (CNN). However, existing RL-based localization methods commonly require an independent agent to be separately trained for each target landmark. In our problem, the vocal folds have high feature similarity as a result of laryngeal symmetry (see Figure 1). Moreover, they are located close to one another in the same area of the larynx. Therefore, redundant features would be learned during the separate training sessions of the two agents. Such training would also fail to utilize the high feature correlation between the folds.

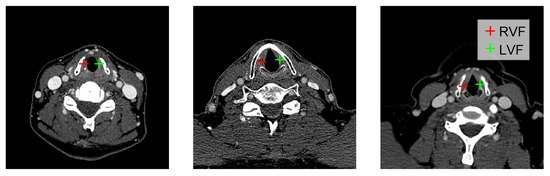

Figure 1. Right and left vocal folds (RVF and LVF) shown in axial slices of three neck CT volumes.

To benefit from the inter-fold similarity, we propose a mirror environment that allows for a single agent to localize both the RVF and LVF position. The agent localizes the RVF in the original volume and the LVF in the laterally flipped volume, essentially considering the flipped LVF to be the same target as the RVF due to laryngeal symmetry. In this mirror environment, the agent can be efficiently trained in a single session, avoiding redundant feature learning while improving localization performance by sharing correlated features from both the right and left folds during training.

2. Methodology

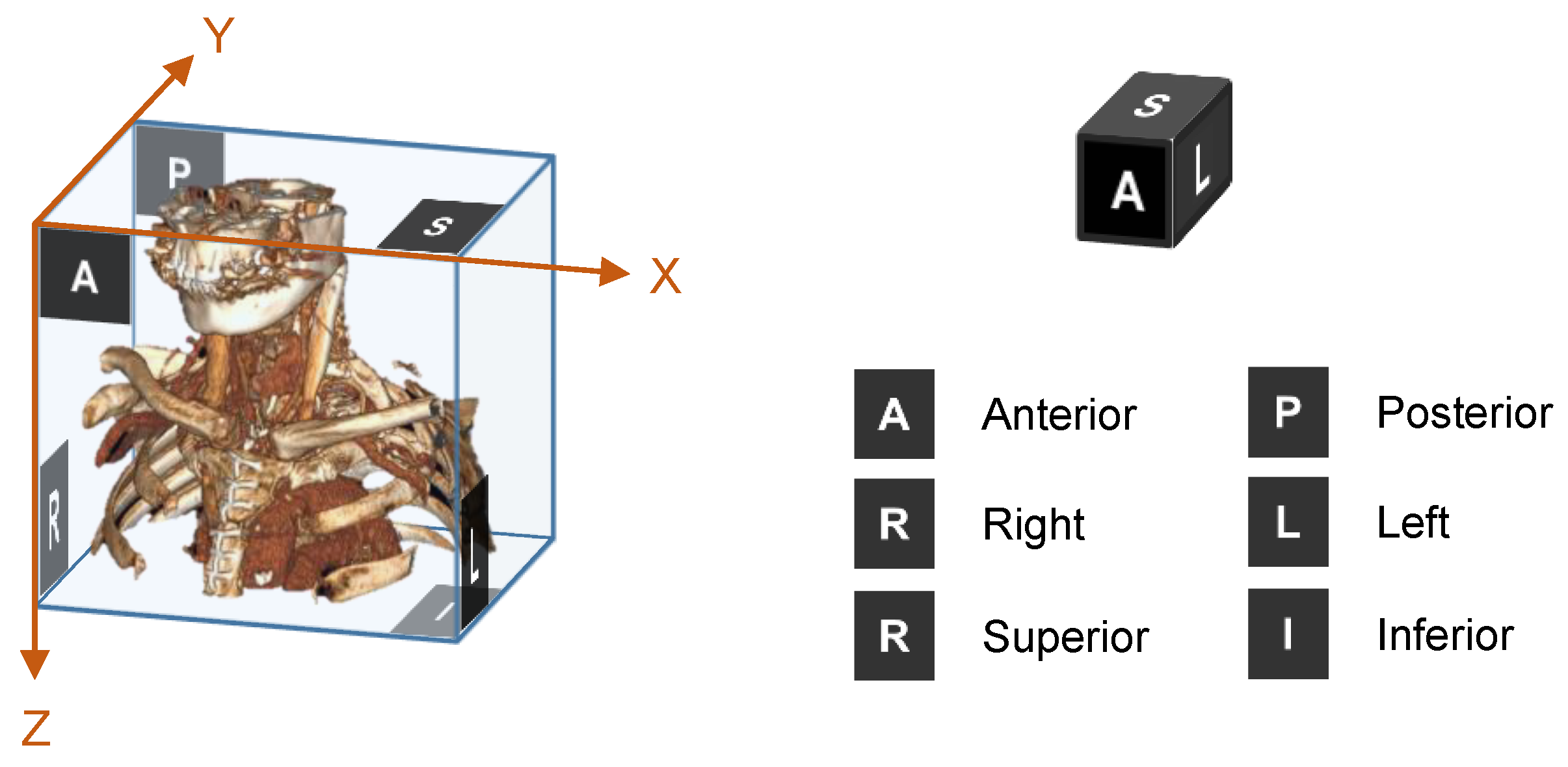

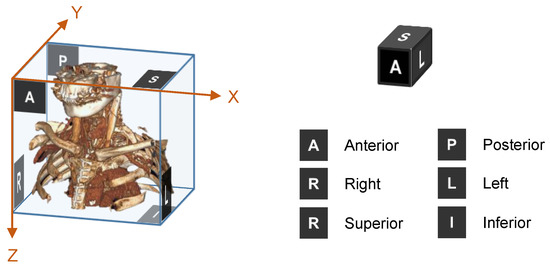

In our deep RL-based method, we automatically localize the RVF and LVF in preoperative neck CT. Neck CT can be represented by a 3D volume V with the three Cartesian axes X, Y, and Z pointing right-to-left, anterior-to-posterior, and superior-to-inferior, respectively (see Figure 2). Inside this volume, we denote the right and left fold positions by $p_R$ and $p_L$, respectively. Specifically, these positions are identified as the posterior vocal fold landmarks.

Figure 2. Cartesian axes directions in neck CT volume. On the left, volume rendering of a neck CT using RadiAnt Dicom Viewer (http://www.radiantviewer.com (accessed on 15 December 2022)) is shown with axes annotation.

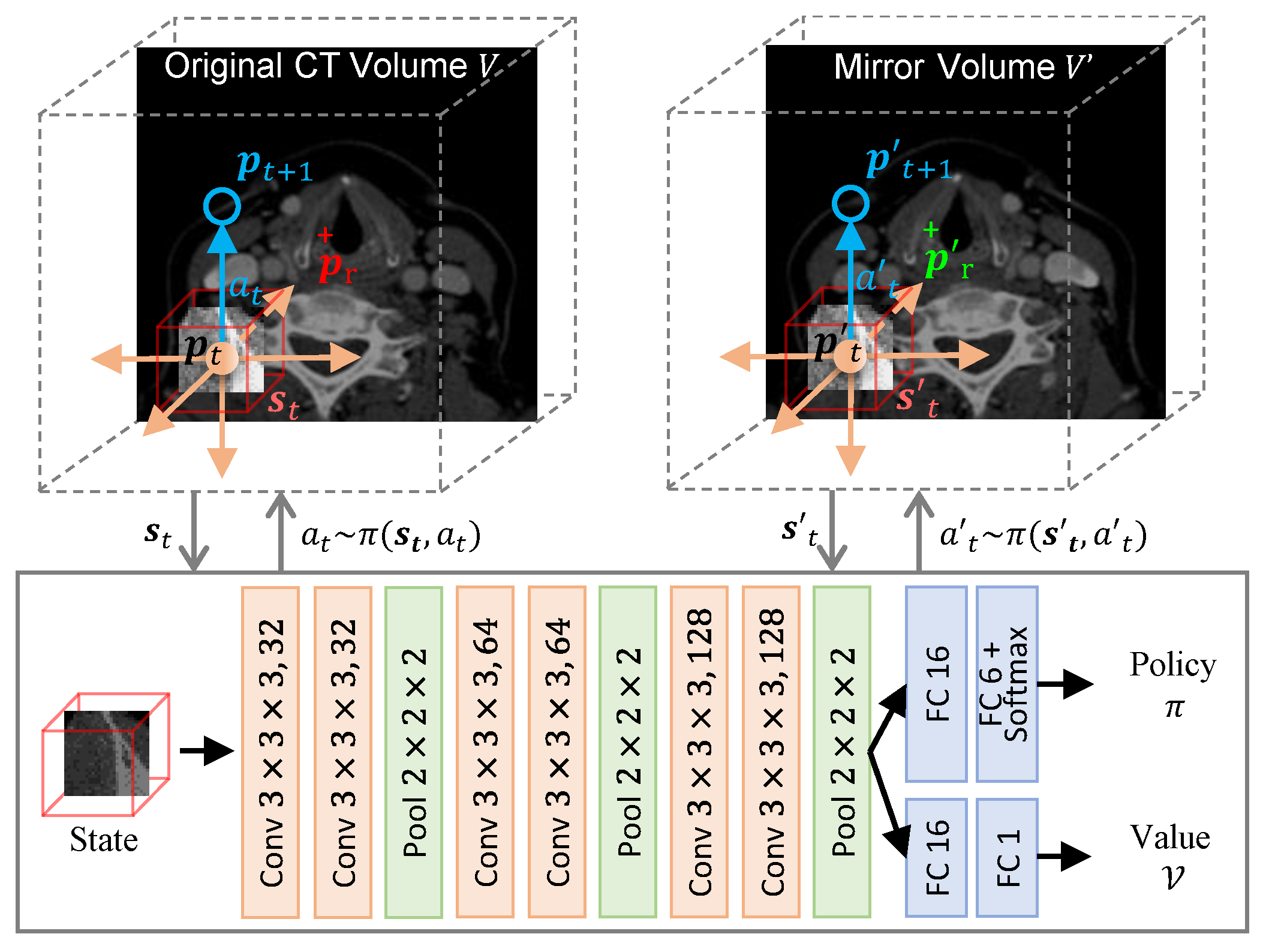

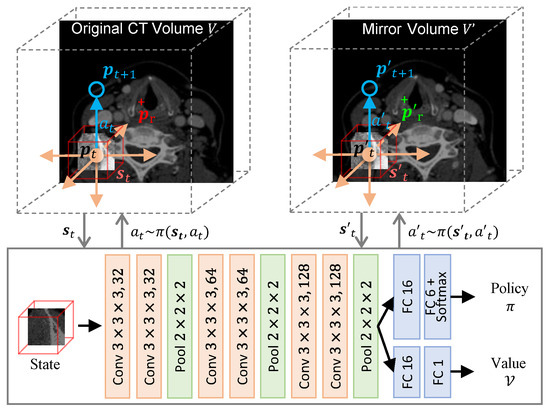

The basic agent interaction in the proposed RL environment is similar to the standard formulation found in existing RL-based studies [8,10,11]. Usually, an agent localizes a target landmark by taking an episode of sequential steps starting from a random initial voxel inside the environment (i.e., input CT volume). At any step t, it observes the current state $s_t$ as the local patch centered at the current voxel $p_t$ and takes an action $a_t$ to move one voxel forward or backward along any of the axes. Thus, it updates to a neighboring voxel $p_{t+1}$ and transitions to a new state $s_{t+1}$. A policy $\pi$ gives the optimal probability for choosing an action $a_t$ in a given state $s_t$. Figure 3 illustrates the agent interaction with the volume. Similar to the existing studies [8,10,11], the local patch size of the state was set to voxels.
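The interaction described above can be illustrated with a minimal sketch. It is not the authors' implementation: the array layout (volume indexed as `[x, y, z]`), the action ordering in `ACTIONS`, zero padding at the borders, clamping at the volume boundary, and the helper names `get_state` and `step` are all assumptions made for illustration. The patch half-width `half` is left as a parameter because the patch size is not recoverable here.

```python
import numpy as np

# Six unit moves: +/-1 voxel along X, Y, Z (this ordering is an assumption).
ACTIONS = np.array([
    [1, 0, 0], [-1, 0, 0],
    [0, 1, 0], [0, -1, 0],
    [0, 0, 1], [0, 0, -1],
])

def get_state(volume, pos, half):
    """Return the cubic patch of side 2*half+1 centred at pos (zero-padded at borders)."""
    # Padding on every call is wasteful; a real environment would pad once.
    padded = np.pad(volume, half, mode="constant")
    x, y, z = np.asarray(pos) + half  # shift into padded coordinates
    return padded[x - half:x + half + 1,
                  y - half:y + half + 1,
                  z - half:z + half + 1]

def step(volume, pos, action):
    """Move one voxel along one axis, clamped so the agent stays inside the volume."""
    new_pos = np.asarray(pos) + ACTIONS[action]
    return np.clip(new_pos, 0, np.array(volume.shape) - 1)

# Toy usage on a small synthetic volume.
volume = np.arange(5 * 6 * 7, dtype=np.float32).reshape(5, 6, 7)
pos = np.array([0, 3, 3])
patch = get_state(volume, pos, half=2)    # 5 x 5 x 5 patch around pos
next_pos = step(volume, pos, action=1)    # a -X move at the border is clamped
```

Clamping is only one way to handle the boundary; ending the episode when the agent leaves the volume would be an equally plausible choice.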

Figure 3. Proposed mirror environment. A single RL agent localizes the right fold in the original volume and the left fold in the flipped volume.

During training, a scalar reward is provided after each step, in which a positive reward is assigned for a step towards the target and a negative reward is assigned otherwise. Specifically, the reward for the transition from position $p_t$ to $p_{t+1}$ by action $a_t$ can be indicated as follows:

where $\tau$ represents a small distance within which the agent constantly receives positive rewards, indicating the convergence state. For training, the agent trajectories over multiple episodes of transitions are recorded, where each transition can be denoted by $(s_t, a_t, r_t, s_{t+1})$, i.e., the state, the action taken, the reward received, and the resulting state. The goal of training is to optimize the policy $\pi$ so that the expected cumulative reward over such trajectories is maximized.
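A reward with the behaviour described above might be sketched as follows. The unit magnitudes (+1 and -1), the use of Euclidean distance in voxel units, and the names `reward` and `tau` are assumptions for illustration; only the sign logic and the convergence radius come from the description.

```python
import numpy as np

def reward(p_t, p_next, target, tau=1.0):
    """Return +1 for a step that moves closer to the target or ends within
    distance tau of it, and -1 otherwise (magnitudes are assumed)."""
    target = np.asarray(target, dtype=float)
    d_old = np.linalg.norm(np.asarray(p_t, dtype=float) - target)
    d_new = np.linalg.norm(np.asarray(p_next, dtype=float) - target)
    if d_new <= tau or d_new < d_old:
        return 1.0
    return -1.0

target = np.array([10, 10, 10])
r_closer = reward([5, 10, 10], [6, 10, 10], target)     # step towards the target
r_farther = reward([6, 10, 10], [5, 10, 10], target)    # step away from the target
r_converged = reward([10, 10, 10], [11, 10, 10], target, tau=1.0)  # inside radius
```

The third case shows why the radius matters: once the agent is at the target, every move increases the distance, so without `tau` it would be penalised for hovering around the correct voxel.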

In a multi-landmark situation, individual agent policies are usually trained on separate trajectories, one for each landmark [8,10,11]. However, this is sub-optimal and inefficient because the RVF and LVF in our problem have high similarity in features and high proximity in location. In the following, we describe the mirror environment that allows for efficient and improved training of a single agent policy to localize both the RVF and LVF.

Mirror Environment

The proposed mirror environment is solely based on the laryngeal symmetry where each of the two vocal folds is almost a mirrored version of the other. We first model the left vocal fold in volume V as the right fold in volume $V'$, a laterally (along the X-axis) flipped version of the original volume V. Then, we propose a single RL agent to localize both the right vocal fold (as $p_R$ in V) and the left vocal fold (as its mirrored position $p'_L$ in $V'$), essentially arguing $p_R$ and $p'_L$ to be the same target landmark with similar features.

With this new model, agent interaction with the environment during localization remains the same, except that the environment can now be either the original volume V or the mirrored volume $V'$, depending on whether the target is the right fold $p_R$ or the (mirrored) left fold $p'_L$. Consequently, the reward in (1) is calculated with respect to $p_R$ or $p'_L$, depending on the target.
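The mirroring itself reduces to an array flip and an index reflection. The sketch below assumes the volume is stored as a NumPy array with axis 0 as the X (right-to-left) axis; the function names and the `"R"`/`"L"` target labels are hypothetical.

```python
import numpy as np

def mirror_volume(volume):
    """Flip the volume laterally, assuming axis 0 is the X (right-to-left) axis."""
    return volume[::-1, :, :]

def mirror_position(pos, volume_shape):
    """Map a voxel index into the flipped volume: only the X index changes."""
    x, y, z = pos
    return (volume_shape[0] - 1 - x, y, z)

def make_environment(volume, p_right, p_left, target):
    """Return (environment volume, target position) for target 'R' or 'L'."""
    if target == "R":
        return volume, tuple(p_right)
    return mirror_volume(volume), mirror_position(p_left, volume.shape)

def to_original(pos, volume_shape, target):
    """Map a position found by the agent back to original-volume coordinates."""
    return tuple(pos) if target == "R" else mirror_position(pos, volume_shape)

# Toy usage: the left fold of the original volume becomes a right-side target.
volume = np.arange(4 * 3 * 2).reshape(4, 3, 2)
p_right, p_left = (0, 1, 0), (3, 1, 0)
env, tgt = make_environment(volume, p_right, p_left, "L")
recovered = to_original(tgt, volume.shape, "L")
```

Because the reflection is its own inverse, the same `mirror_position` call converts a prediction made in the flipped volume back to the original coordinates at test time.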

Similar to previous RL frameworks [8,10,11], we represent our policy function $\pi$ by a 3D CNN that outputs action probabilities for an input state $s_t$. The CNN consists of three convolutional blocks, each having two convolutional layers followed by a max-pooling layer (see Figure 3). The last convolutional block is connected to two fully connected layers to produce the action probabilities. Algorithm 1 summarizes the policy training process with the mirror environment.

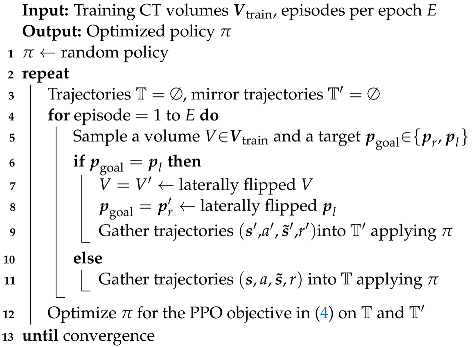

Algorithm 1: RL policy training with mirror environment

At each epoch, we conduct a number of localization episodes. Each episode is initiated by sampling a training volume V and choosing either the right or the left fold as the target landmark. Based on the sampled target, the environment is set to either the original volume V or the mirrored volume $V'$. Then, subsequent steps are performed by applying the current policy. Running multiple episodes, we record the trajectories for the original and mirrored volumes as $T$ and $T'$, respectively.
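The episode sampling described above could be organised roughly as in the following sketch. It is deliberately generic: `policy`, `transition`, and `init` are hypothetical callables supplied by the caller, the dictionary keys are invented for illustration, and the uniform choice between folds is an assumption.

```python
import random

def collect_trajectories(cases, policy, transition, init, n_episodes, n_steps, rng):
    """Return (T, T_mirror): transitions gathered in original and mirrored volumes.

    cases      -- list of dicts with keys 'V', 'V_mirror', 'p_R', 'p_L_mirror'
    policy     -- callable(volume, pos) -> action
    transition -- callable(volume, pos, action, target) -> (next_pos, reward)
    init       -- callable(volume, rng) -> random initial position
    """
    T, T_mirror = [], []
    for _ in range(n_episodes):
        case = rng.choice(cases)          # sample a training volume
        side = rng.choice(["R", "L"])     # sample the target fold
        if side == "R":
            volume, target, buffer = case["V"], case["p_R"], T
        else:
            volume, target, buffer = case["V_mirror"], case["p_L_mirror"], T_mirror
        pos = init(volume, rng)
        for _ in range(n_steps):
            action = policy(volume, pos)
            next_pos, r = transition(volume, pos, action, target)
            buffer.append((pos, action, r, next_pos))
            pos = next_pos
    return T, T_mirror

# Toy 1D stand-ins, only to show the call pattern.
cases = [{"V": "orig", "V_mirror": "flip", "p_R": 5, "p_L_mirror": 5}]
init = lambda volume, rng: rng.randrange(10)
policy = lambda volume, pos: 0
transition = lambda volume, pos, action, target: (pos + 1, 1.0 if pos < target else -1.0)
T, T_mirror = collect_trajectories(cases, policy, transition, init,
                                   n_episodes=8, n_steps=3, rng=random.Random(0))
```

Keeping the two buffers separate is only bookkeeping; the policy update later draws on both of them together.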

Now, the policy is updated so that the expected cumulative reward on both $T$ and $T'$ is maximized. We follow the widely used proximal policy optimization (PPO) framework [12], where the advantage (i.e., the improvement of the current policy over the previous one) is maximized to reduce variance during training. Instead of using the reward directly, the improvement is estimated using a value function. Whereas the reward function only gives the immediate reward at a state, the value function $V^{\pi}(s_t)$ for a state $s_t$ roughly approximates the total amount of reward that can be accumulated over the future episodic steps starting from $s_t$ when applying policy $\pi$. Thus, the value function encodes the idea of long-term return in the sequential decision process instead of only focusing on the immediate reward.

With the value function, the cumulative reward can now be computed for a transition as $r_t + V^{\pi}(s_{t+1})$, i.e., the current reward added to the value of the next state. However, a discount factor $\gamma$ is used to gradually reduce the values of the next states and to avoid an infinite sum. This discounted cumulative reward is expressed as follows:

$$\hat{R}_t = r_t + \gamma V^{\pi}(s_{t+1}) \tag{2}$$

where is used. Note that the value network is also built on top of the final convolutional layer of the policy (see Figure 3). This value network is also updated at each epoch to minimize the difference between the predicted value and the observed discounted reward in the gathered trajectories applying the policy in the previous epoch. Here, the value network updated in the last epoch is used to compute the discounted rewards for the observed transitions.
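As a small numerical illustration of the discounted reward (current reward plus discounted value of the next state) and of the value update, consider the sketch below. The mean squared error is an assumed choice for "the difference", and the discount value used in the example is arbitrary.

```python
import numpy as np

def discounted_targets(rewards, next_values, gamma):
    """One-step target: reward plus the discounted value of the next state."""
    rewards = np.asarray(rewards, dtype=float)
    next_values = np.asarray(next_values, dtype=float)
    return rewards + gamma * next_values

def value_loss(predicted_values, targets):
    """Mean squared difference between predicted values and observed targets
    (the squared-error form is an assumption)."""
    diff = np.asarray(predicted_values, dtype=float) - np.asarray(targets, dtype=float)
    return float(np.mean(diff ** 2))

# Two transitions with rewards +1 and -1; gamma = 0.5 is an arbitrary example value.
targets = discounted_targets([1.0, -1.0], [2.0, 4.0], gamma=0.5)
loss_perfect = value_loss([2.0, 1.0], targets)
loss_off = value_loss([0.0, 1.0], targets)
```

Note that `next_values` come from the value network of the previous epoch, so the targets are fixed numbers during the update rather than quantities to differentiate through.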

In PPO, the improvement (i.e., advantage) of the current policy over the previous policy (i.e., the policy from the previous epoch) is computed by comparing their corresponding cumulative rewards. For a transition from state $s_t$ to $s_{t+1}$, the cumulative reward of the current policy refers to the observed discounted reward $\hat{R}_t$. On the other hand, the cumulative reward of the previous policy refers to the value of the current state, i.e., $V^{\pi}(s_t)$, since the value was optimized to approximate the discounted rewards in the previous epoch. Now the advantage can be simply computed as follows:

$$\hat{A}_t = \hat{R}_t - V^{\pi}(s_t) \tag{3}$$

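The training objective of PPO is to maximize this advantage, thereby updating the policy towards improvement in the expected cumulative reward. For more details on the advantage and objective function, please refer to [12]. In our mirror environment, the PPO training objective for maximizing the advantage can be expressed as follows:

$$\max_{\pi} \; \mathbb{E}_{(s_t, a_t) \in T \cup T'} \left[ \frac{\pi(a_t \mid s_t)}{\pi_{old}(a_t \mid s_t)} \, \hat{A}_t \right] \tag{4}$$

where $T$ and $T'$ are the trajectories recorded in the original and mirrored volumes, $\pi_{old}$ is the previous policy, and $\hat{A}_t$ is the advantage of the transition at step $t$.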

Usually, the policy ratio in (4) is clipped to the interval $[1-\epsilon, 1+\epsilon]$ to avoid large policy updates [12]. Note that $\pi$ is the target policy to be optimized, whereas the previous policy $\pi_{old}$ enters as a scalar constant holding the probability of action $a_t$ at state $s_t$ as observed while gathering experiences. Thus, this previous policy is simply the policy updated in the last epoch and used for gathering experiences in the current epoch. Therefore, the above objective suggests that the probability of an action is increased compared to the previous policy if the advantage is positive, and decreased otherwise. By simultaneously utilizing the experiences from both the original and mirror environments, a single policy is optimized to localize both the RVF and the flipped LVF.
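To make the update rule concrete, the following sketch evaluates the advantage and the clipped surrogate objective of PPO [12] on transitions pooled from both environments. It only computes the objective value with NumPy and performs no gradient step. The clip range `eps=0.2` is the default from the PPO paper and not necessarily the value used here, and the dictionary layout of the batches is hypothetical.

```python
import numpy as np

def advantages(targets, values):
    """Advantage: observed discounted reward minus the value of the current state."""
    return np.asarray(targets, dtype=float) - np.asarray(values, dtype=float)

def ppo_clip_objective(new_probs, old_probs, adv, eps=0.2):
    """Clipped surrogate objective of PPO, averaged over transitions (to be maximised)."""
    ratio = np.asarray(new_probs, dtype=float) / np.asarray(old_probs, dtype=float)
    clipped = np.clip(ratio, 1.0 - eps, 1.0 + eps)
    adv = np.asarray(adv, dtype=float)
    return float(np.mean(np.minimum(ratio * adv, clipped * adv)))

def mirror_objective(batch_T, batch_T_mirror, eps=0.2):
    """Evaluate a single objective on transitions pooled from both environments."""
    new_p = np.concatenate([batch_T["new"], batch_T_mirror["new"]])
    old_p = np.concatenate([batch_T["old"], batch_T_mirror["old"]])
    adv = np.concatenate([batch_T["adv"], batch_T_mirror["adv"]])
    return ppo_clip_objective(new_p, old_p, adv, eps)

adv = advantages([2.0, 1.0], [1.5, 1.5])
# A large ratio with positive advantage is capped at 1 + eps.
capped = ppo_clip_objective([1.0], [0.5], [1.0])
# With negative advantage the unclipped (more pessimistic) term is kept.
uncapped = ppo_clip_objective([1.0], [0.5], [-1.0])
pooled = mirror_objective(
    {"new": [0.6], "old": [0.5], "adv": [1.0]},
    {"new": [0.5], "old": [0.5], "adv": [-1.0]},
)
```

Pooling before averaging means every transition contributes equally regardless of which volume it came from, which is one simple way to realise a single policy trained on both environments.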

3. Results

3.1. Data

To evaluate the performance of the proposed method, we collected 120 neck CT volumes from Seoul National University Bundang Hospital, South Korea. Among the 120 patients ( years old, female), 27 patients had vocal fold paresis. The axial slice (X-Y axes) dimension was consistently voxels, whereas the number of axial slices per volume (Z-axis dimension) was 172 on average. The average voxel spacing was mm × mm × mm. The vocal fold locations were annotated by an expert with more than 10 years of experience in injection laryngoplasty.

3.2. Evaluation Method and Performance Metric

We performed a 4-fold cross validation on the 120 volumes to assess the average performance of our algorithm. We hypothesized that the proposed algorithm can result in improved localization and training because of its utilization of the feature similarity between the two folds. Therefore, we use two metrics to validate our proposed contributions: (i) training efficiency (number of episodes explored until convergence) and (ii) localization performance (localization error and accuracy). Localization error is measured by the Euclidean distance of the localized landmark from the expert annotation. Localization accuracy is measured by the percentage of acceptable localization results as evaluated by the expert.
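The evaluation protocol can be illustrated with a short sketch. Converting voxel offsets to millimetres by multiplying with the voxel spacing is an assumption about how the Euclidean error is obtained, the spacing in the example is made up, and the shuffled split with a fixed seed is just one way to set up a 4-fold cross validation.

```python
import numpy as np

def localization_error_mm(pred_voxel, true_voxel, spacing_mm):
    """Euclidean distance between two voxel positions, converted to millimetres."""
    diff = np.asarray(pred_voxel, dtype=float) - np.asarray(true_voxel, dtype=float)
    return float(np.linalg.norm(diff * np.asarray(spacing_mm, dtype=float)))

def four_fold_splits(n_cases, seed=0):
    """Yield (train_idx, test_idx) pairs for a shuffled 4-fold cross validation."""
    idx = np.random.default_rng(seed).permutation(n_cases)
    folds = np.array_split(idx, 4)
    for k in range(4):
        train = np.concatenate([folds[j] for j in range(4) if j != k])
        yield train, folds[k]

# Example with a hypothetical spacing of 1 x 1 x 2 mm.
err = localization_error_mm((10, 10, 10), (13, 14, 10), (1.0, 1.0, 2.0))
splits = list(four_fold_splits(120))
```

With 120 volumes, each fold holds out 30 volumes for testing and trains on the remaining 90.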

For comparison, we also applied the standard RL-based localization [11] (with the individual training method) as our main baseline method. Furthermore, we applied the widely used deep learning based end-to-end localization approaches, e.g., direct location/coordinate regression and heatmap regression [7], to compare the localization performance.

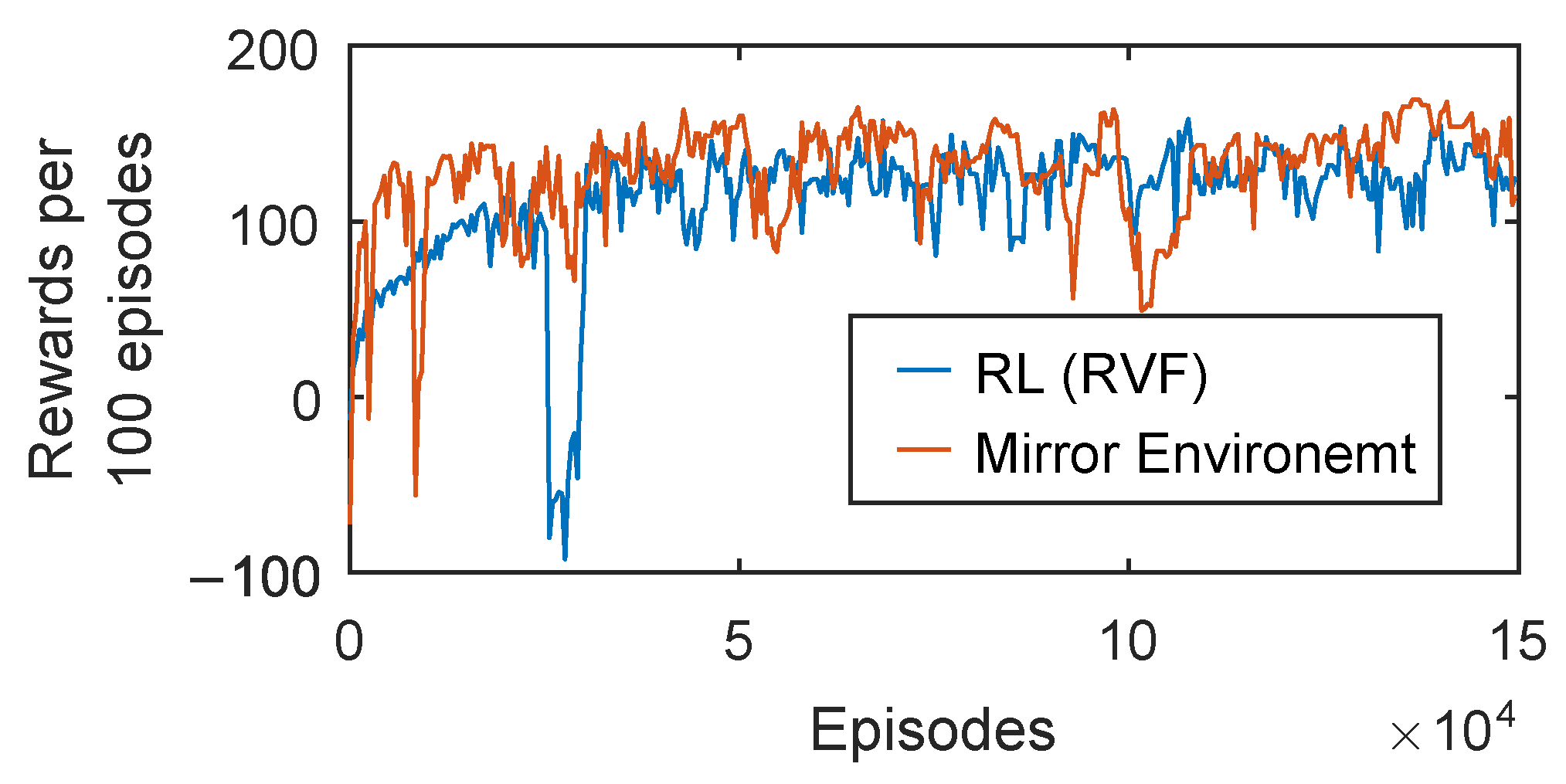

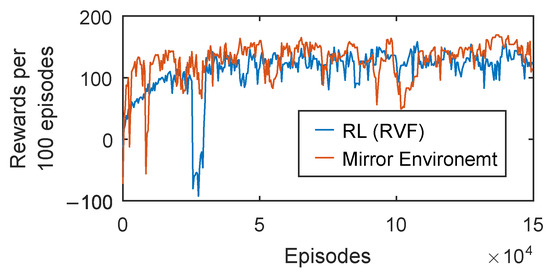

3.3. Training Efficiency

We plot the average episodic rewards over the training epochs of the standard RL agents along with the proposed one in Figure 4. For all the agents, 400 episodes were explored at each epoch, where each episode consisted of 200 steps. All other hyperparameters were kept identical among all the agents for fair comparison. The learning rate and the discount factor were set to and , following the convention of previous work [8,10,11]. On average, training the independent agents required approximately 206k episodes in total (110k for RVF and 96k for LVF). In contrast, the proposed RL agent only required about 122k episodes to successfully learn to localize both folds. Thus, the simultaneous training was roughly 1.7 times faster (206k vs. 122k episodes).

Figure 4. Reward plot over the training episodes. The proposed agent learns to localize both folds in about the time a standard agent needs to learn a single fold.

In Table 1, we also report the average final reward when the training converges in different sessions of the 4-fold cross validation process. The final reward achieved by the individual agent method was , whereas the proposed agent could achieve a significantly higher reward of (p-value ).

Table 1. Number of required episodes and final reward.

3.4. Localization Performance

In addition to the higher training efficiency, the proposed method also significantly outperformed the standard RL-based localization approach (p-value ). The individual agents in the usual RL method [11] could give an average localization error of mm, yielding improvement over the end-to-end deep learning methods [7,13] (see Table 2). The proposed mirror environment based RL could further lower the error to mm with its single agent utilizing the inter-fold similarity. Table 2 also presents the corresponding localization accuracy for each method. The proposed agent showed similar performance for localizing the RVF and LVF, showing an error of approximately and mm, respectively (p-value ). The corresponding localization accuracies were and .

Table 2. Localization error and accuracy.

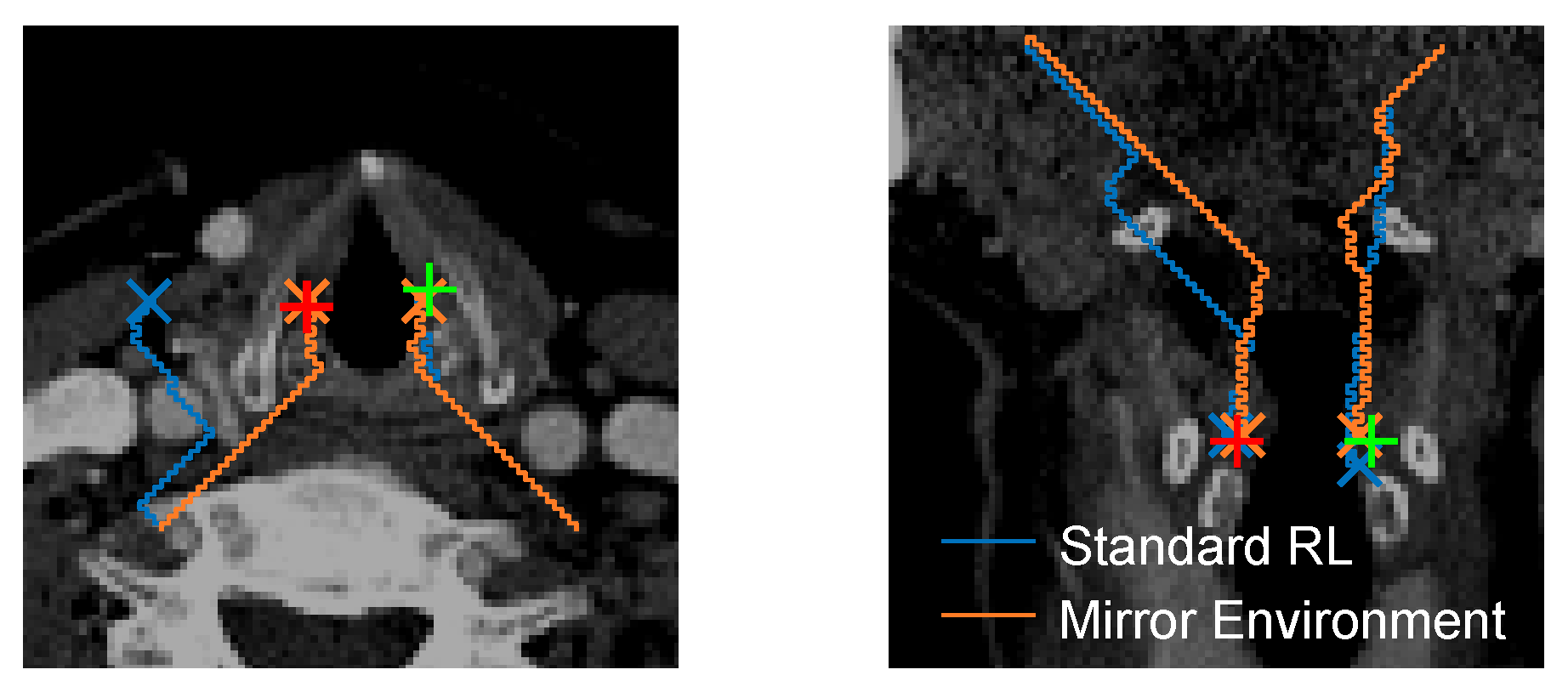

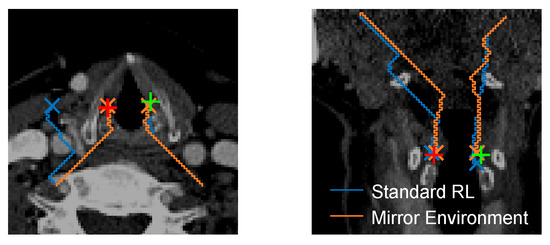

In Figure 5, we present two examples of improvements by the proposed method, where the localization trajectories of the standard RL agents and the mirror environment agent are plotted. Being unaware of the inter-fold symmetry and similarity, the standard RL agents either failed to localize one fold (Figure 5, left) or produced a higher error for it (Figure 5, right) despite correctly localizing the other. In contrast, the proposed agent improved on both cases by enforcing a similar and more general policy for both folds, resulting from the implicit sharing of RVF and LVF features during the simultaneous training.

Figure 5. Agent trajectories for localizing the vocal folds in two CT volumes ((left): axial view, (right): coronal view). Localization failure (left) or higher localization error (right) for one fold despite correct localization of the other fold by the standard RL. The mirror environment showed improvement by employing similar policies for both. Red and green plus (+) signs indicate the expert-annotated RVF and LVF locations, respectively. The cross (×) signs indicate the agent positions at the end of different trajectories.

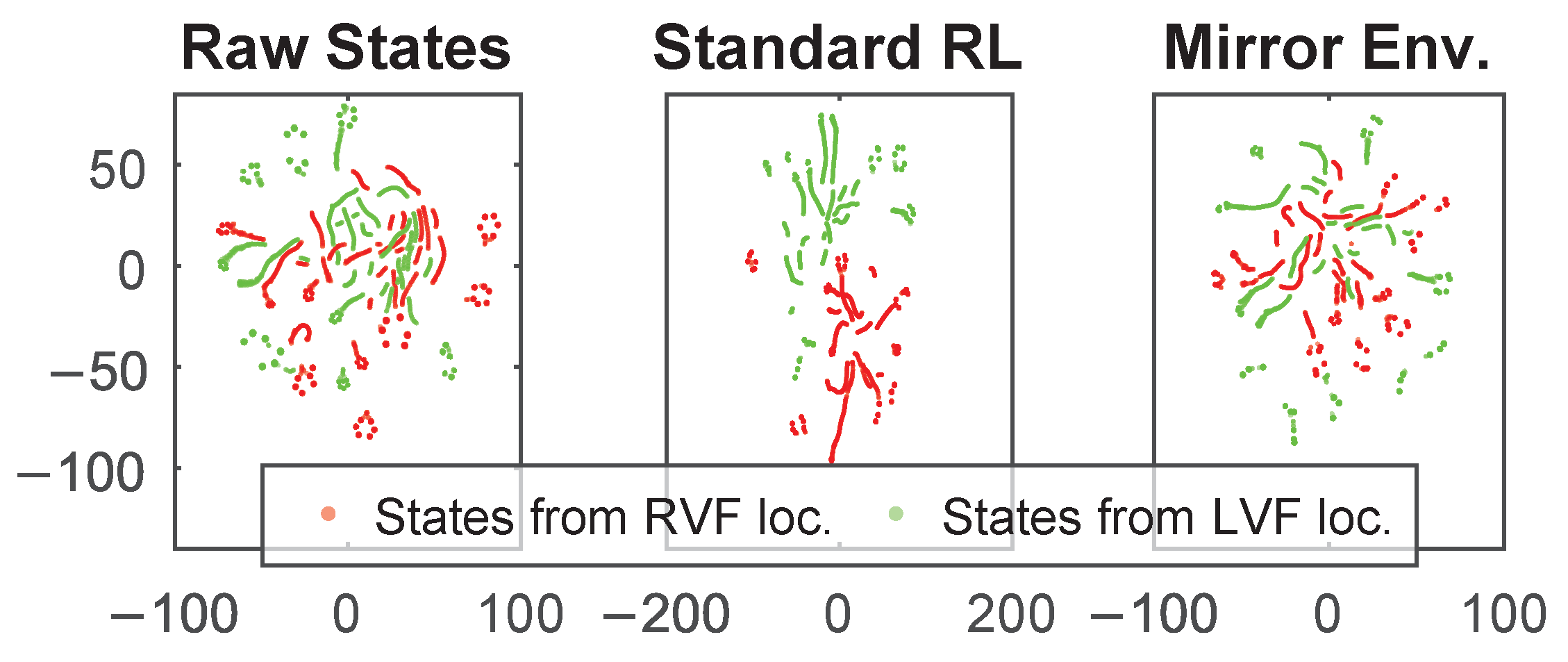

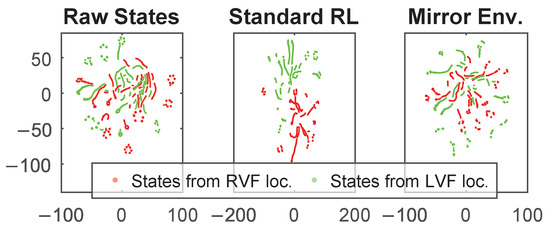

To further illustrate the improved generalization and feature-similarity utilization of the proposed method, we compared the learned feature representations of different agents (Figure 6). We collected approximately 6000 states from multiple episodes employing the standard RL agents to localize the right and left folds on different volumes. For these states, we then extracted the corresponding features (outputs of the final convolution layer) of the policy networks of the standard and proposed agents. A t-SNE manifold embedding [14] was used to project these high-dimensional features into 2D space.

Figure 6. Manifold representation of the learned features by different agents. Inter-fold similarity was better retained in features of the proposed agent.

State similarity between right and left folds can clearly be observed in Figure 6 (left), where the raw voxel array of the state is represented. However, the standard RL agents learned different representations for the two folds despite their general similarity. On the other hand, feature representation of the proposed agent better retained the general trend of the original state-similarity, resulting in improved localization policies.

4. Conclusions

We proposed a mirror environment for localizing the RVF and LVF in neck CT using RL. Modeling the LVF as a flipped RVF, the proposed method could train a single agent for both folds. Compared to the individual agents in existing RL methods, the proposed method could give higher training efficiency and localization accuracy by effectively utilizing the inter-fold similarity and anatomical symmetry. For our future work, we plan to collect datasets from different sites and expand the method for actual injection route planning based on the localized folds.

Author Contributions

Conceptualization, methodology, W.A.A. and I.D.Y.; software, W.A.A.; validation, W.C.; formal analysis, W.A.A.; investigation, W.C. and I.D.Y.; resources, data curation, W.C.; writing—original draft preparation, W.A.A.; writing—review and editing, W.C. and I.D.Y.; visualization, W.A.A.; supervision, I.D.Y. and W.C.; project administration, I.D.Y.; funding acquisition, I.D.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by Basic Science Research Program through the National Research Foundation of Korea (NRF), funded by the Ministry of Education, Science, Technology (No. 2019R1A2C1085113).

Institutional Review Board Statement

Approval of all ethical and experimental procedures and protocols was granted by the Institutional Review Board (IRB) of Seoul National University Bundang Hospital (IRB approval number: B-2202-738-105).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| LVF | Left vocal fold |
| RVF | Right vocal fold |
| RL | Reinforcement learning |

References

1. Ahmad, S.; Muzamil, A.; Lateef, M. A study of incidence and etiopathology of vocal cord paralysis. Indian J. Otolaryngol. Head Neck Surg. 2002, 54, 294–296.

2. Tsai, M.S.; Yang, Y.H.; Liu, C.Y.; Lin, M.H.; Chang, G.H.; Tsai, Y.T.; Li, H.Y.; Tsai, Y.H.; Hsu, C.M. Unilateral vocal fold paralysis and risk of pneumonia: A nationwide population-based cohort study. Otolaryngol. Head Neck Surg. 2018, 158, 896–903.

3. Chhetri, D.K.; Jamal, N. Percutaneous injection laryngoplasty. Laryngoscope 2014, 124, 742.

4. Nasir, Z.M.; Azman, M.; Baki, M.M.; Mohamed, A.S.; Kew, T.Y.; Zaki, F.M. A proposal for needle projections in transcutaneous injection laryngoplasty using three-dimensionally reconstructed CT scans. Surg. Radiol. Anat. 2021, 43, 1225–1233.

5. Lee, M.; Ang, C.; Andreadis, K.; Shin, J.; Rameau, A. An Open-Source Three-Dimensionally Printed Laryngeal Model for Injection Laryngoplasty Training. Laryngoscope 2021, 131, E890–E895.

6. Hamdan, A.L.; Haddad, G.; Haydar, A.; Hamade, R. The 3D printing of the paralyzed vocal fold: Added value in injection laryngoplasty. J. Voice 2018, 32, 499–501.

7. Payer, C.; Štern, D.; Bischof, H.; Urschler, M. Integrating spatial configuration into heatmap regression based CNNs for landmark localization. Med. Image Anal. 2019, 54, 207–219.

8. Abdullah Al, W.; Yun, I.D. Partial Policy-Based Reinforcement Learning for Anatomical Landmark Localization in 3D Medical Images. IEEE Trans. Med. Imaging 2020, 39, 1245–1255.

9. Ghesu, F.C.; Georgescu, B.; Mansi, T.; Neumann, D.; Hornegger, J.; Comaniciu, D. An artificial agent for anatomical landmark detection in medical images. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Athens, Greece, 17–21 October 2016; pp. 229–237.

10. Ghesu, F.C.; Georgescu, B.; Grbic, S.; Maier, A.; Hornegger, J.; Comaniciu, D. Towards intelligent robust detection of anatomical structures in incomplete volumetric data. Med. Image Anal. 2018, 48, 203–213.

11. Alansary, A.; Oktay, O.; Li, Y.; Le Folgoc, L.; Hou, B.; Vaillant, G.; Kamnitsas, K.; Vlontzos, A.; Glocker, B.; Kainz, B.; et al. Evaluating reinforcement learning agents for anatomical landmark detection. Med. Image Anal. 2019, 53, 156–164.

12. Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347.

13. Lv, J.; Shao, X.; Xing, J.; Cheng, C.; Zhou, X. A deep regression architecture with two-stage re-initialization for high performance facial landmark detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3317–3326.

14. van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).