Modeling Liquid Thermal Conductivity of Low-GWP Refrigerants Using Neural Networks

Abstract

1. Introduction

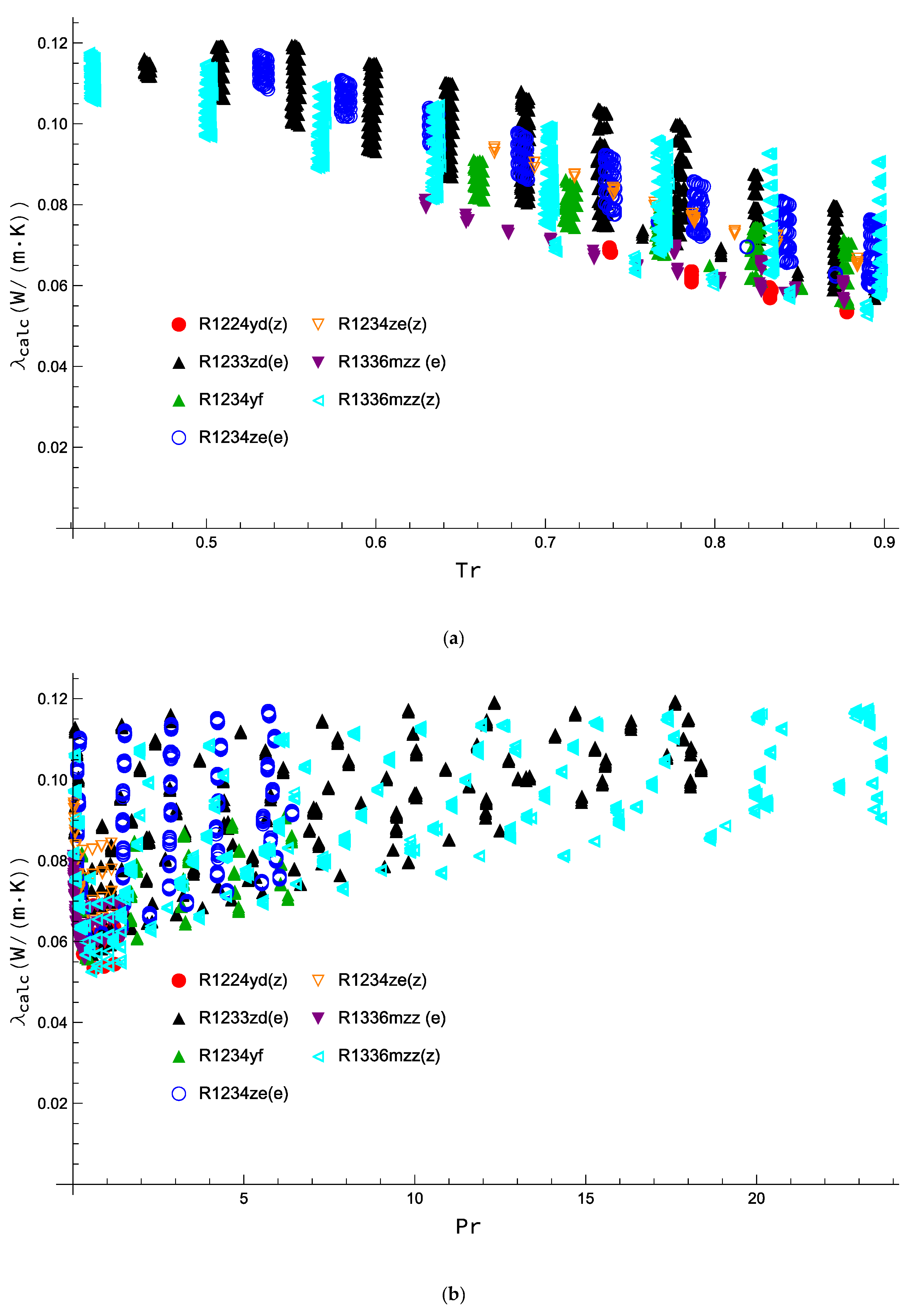

2. Data Analysis

| Refrigerant | No. of data points | T range (K) | p range (MPa) | λL range (W m⁻¹ K⁻¹) | Source |
|---|---|---|---|---|---|
| R1224yd(Z) | 53 | 316.25–376.37 | 1.00–4.07 | 0.05379–0.06979 | [32] |
| R1233zd(E) | 1132 | 203.56–393.22 | 0.18–66.62 | 0.05719–0.11991 | [33,34] |
| R1234yf | 267 | 241.92–324.00 | 0.44–21.64 | 0.05607–0.09158 | [35,36] |
| R1234ze(E) | 494 | 203.18–343.31 | 0.31–23.32 | 0.05893–0.11727 | [35,36,37] |
| R1234ze(Z) | 61 ᵃ | 283.54–374.24 | 0.10–4.01 | 0.06520–0.09457 | [38] |
| R1336mzz(E) | 118 | 253.68–353.51 | 0.03–4.06 | 0.05610–0.08160 | [39,40] |
| R1336mzz(Z) | 1279 | 191.58–399.42 | 0.05–68.52 | 0.05294–0.11810 | [41,42] |

| Refrigerant | M (kg kmol⁻¹) | Tb (K) | Tc (K) | pc (MPa) | ω | Source |
|---|---|---|---|---|---|---|
| R1224yd(Z) | 148.487 | 287.77 | 428.69 ᵃ | 3.33 ᵃ | 0.3220 | [43] |
| R1233zd(E) | 130.496 | 291.41 | 439.60 | 3.62 | 0.3025 | [44] |
| R1234yf | 114.042 | 243.67 | 367.85 | 3.38 | 0.2760 | [44] |
| R1234ze(E) | 114.042 | 254.18 | 382.51 | 3.63 | 0.3130 | [45] |
| R1234ze(Z) | 114.042 | 282.88 | 423.27 | 3.53 | 0.3274 | [46] |
| R1336mzz(E) | 164.056 | 280.58 | 403.37 | 2.77 | 0.4053 | [47] |
| R1336mzz(Z) | 164.056 | 306.55 | 444.50 | 2.90 ᵇ | 0.3867 | [48] |
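As a quick consistency check on the two tables, the Python sketch below combines the temperature ranges of the first table with the critical temperatures of the second to obtain the reduced temperature range Tr = T/Tc covered by each fluid, and sums the data points. The numbers are transcribed from the tables; the names `DATA`, `reduced_temperature_ranges` and `total_points` are illustrative only.

```python
# (T_min in K, T_max in K, number of points, Tc in K), transcribed from the two tables above.
DATA = {
    "R1224yd(Z)": (316.25, 376.37, 53, 428.69),
    "R1233zd(E)": (203.56, 393.22, 1132, 439.60),
    "R1234yf": (241.92, 324.00, 267, 367.85),
    "R1234ze(E)": (203.18, 343.31, 494, 382.51),
    "R1234ze(Z)": (283.54, 374.24, 61, 423.27),
    "R1336mzz(E)": (253.68, 353.51, 118, 403.37),
    "R1336mzz(Z)": (191.58, 399.42, 1279, 444.50),
}


def reduced_temperature_ranges(data):
    """Return {fluid: (Tr_min, Tr_max)} with Tr = T / Tc."""
    return {
        name: (t_min / tc, t_max / tc)
        for name, (t_min, t_max, _n, tc) in data.items()
    }


def total_points(data):
    """Total number of experimental points over all fluids."""
    return sum(n for _t_min, _t_max, n, _tc in data.values())


if __name__ == "__main__":
    for name, (tr_lo, tr_hi) in reduced_temperature_ranges(DATA).items():
        print(f"{name:12s} Tr = {tr_lo:.3f} to {tr_hi:.3f}")
    print("total points:", total_points(DATA))
```

With these inputs every fluid falls roughly within 0.43 ≤ Tr ≤ 0.90, and the point counts add up to 3404, the total that appears in the results tables.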

3. Artificial Neural Network

- The threshold function has a simple mechanism: denoting the weighted sum of the input signals by $x$, it returns 1 when $x \ge 0$ and 0 otherwise. It is useful when a binary output signal is needed.

- The rectified linear unit is a widely used activation function. It returns 0 if the weighted sum of the input signals is less than or equal to zero and the weighted sum itself otherwise, i.e., $f(x) = \max(0, x)$.

- The hyperbolic tangent is useful for non-linear problems and has the following form: $f(x) = \tanh(x) = \dfrac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$. Its output lies in the open interval (−1, +1).

- The sigmoid is very similar to the hyperbolic tangent (it is a shifted and rescaled version of it, $f(x) = [1 + \tanh(x/2)]/2$), but its output lies in the open interval (0, +1): $f(x) = \dfrac{1}{1 + e^{-x}}$.
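The four activation functions listed above can be written in a few lines of Python. This is a minimal sketch: the `neuron` helper, including its additive bias term, is an illustrative assumption and not necessarily how the network in this work is implemented.

```python
import math


def threshold(x: float) -> float:
    """Step function: 1 if the weighted sum is >= 0, else 0."""
    return 1.0 if x >= 0.0 else 0.0


def relu(x: float) -> float:
    """Rectified linear unit: max(0, x)."""
    return max(0.0, x)


def tanh(x: float) -> float:
    """Hyperbolic tangent, output in (-1, 1)."""
    return math.tanh(x)


def sigmoid(x: float) -> float:
    """Logistic sigmoid 1 / (1 + e^-x), output in (0, 1).

    The two branches avoid overflow in exp() for large |x|.
    """
    if x >= 0.0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def neuron(inputs, weights, bias, activation):
    """Hypothetical single neuron: activation(sum_i w_i * x_i + bias)."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(weighted_sum)
```

For example, `neuron([0.5, -1.0], [0.8, 0.2], 0.1, relu)` evaluates the ReLU of 0.4 − 0.2 + 0.1 = 0.3 (up to floating-point rounding), and `sigmoid(x)` agrees with `(1 + tanh(x / 2)) / 2` for any `x`.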

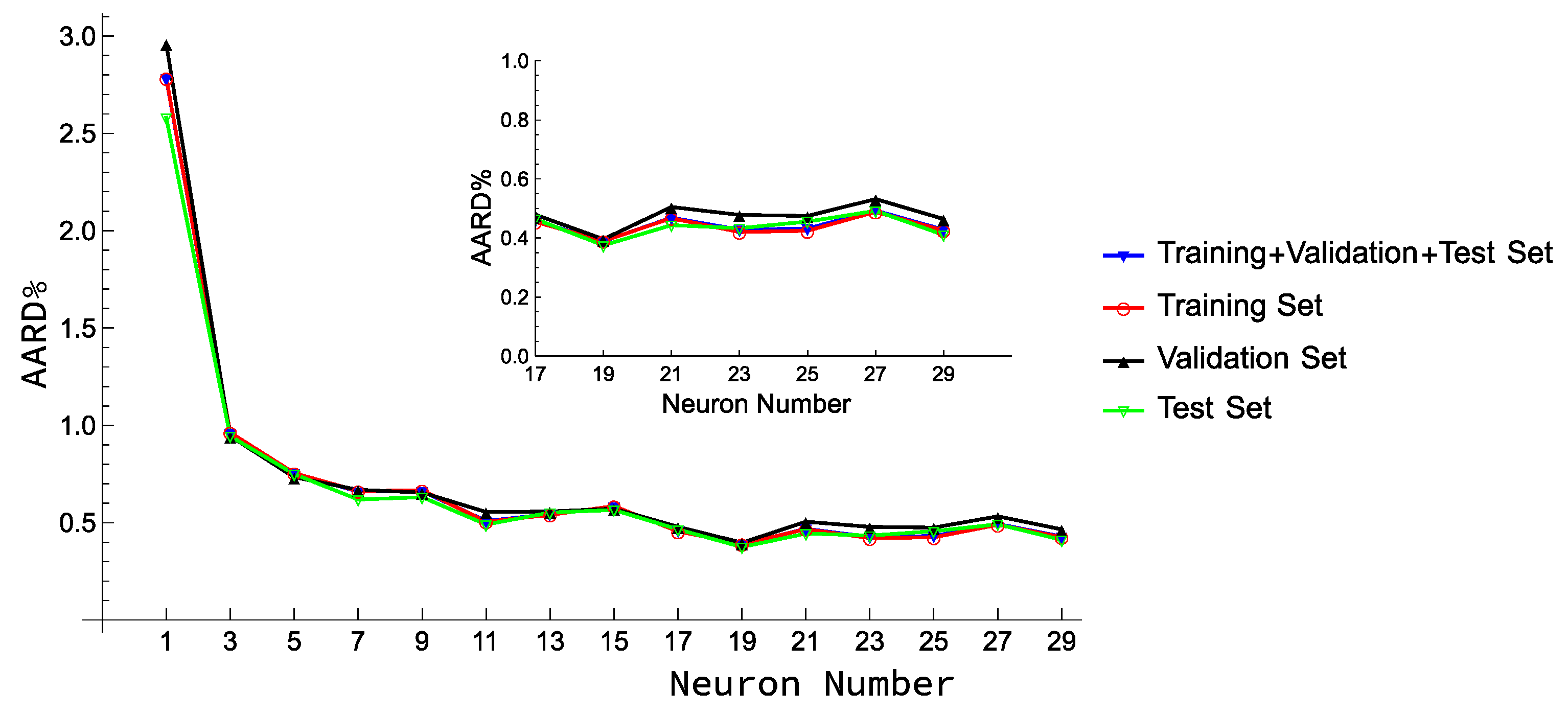

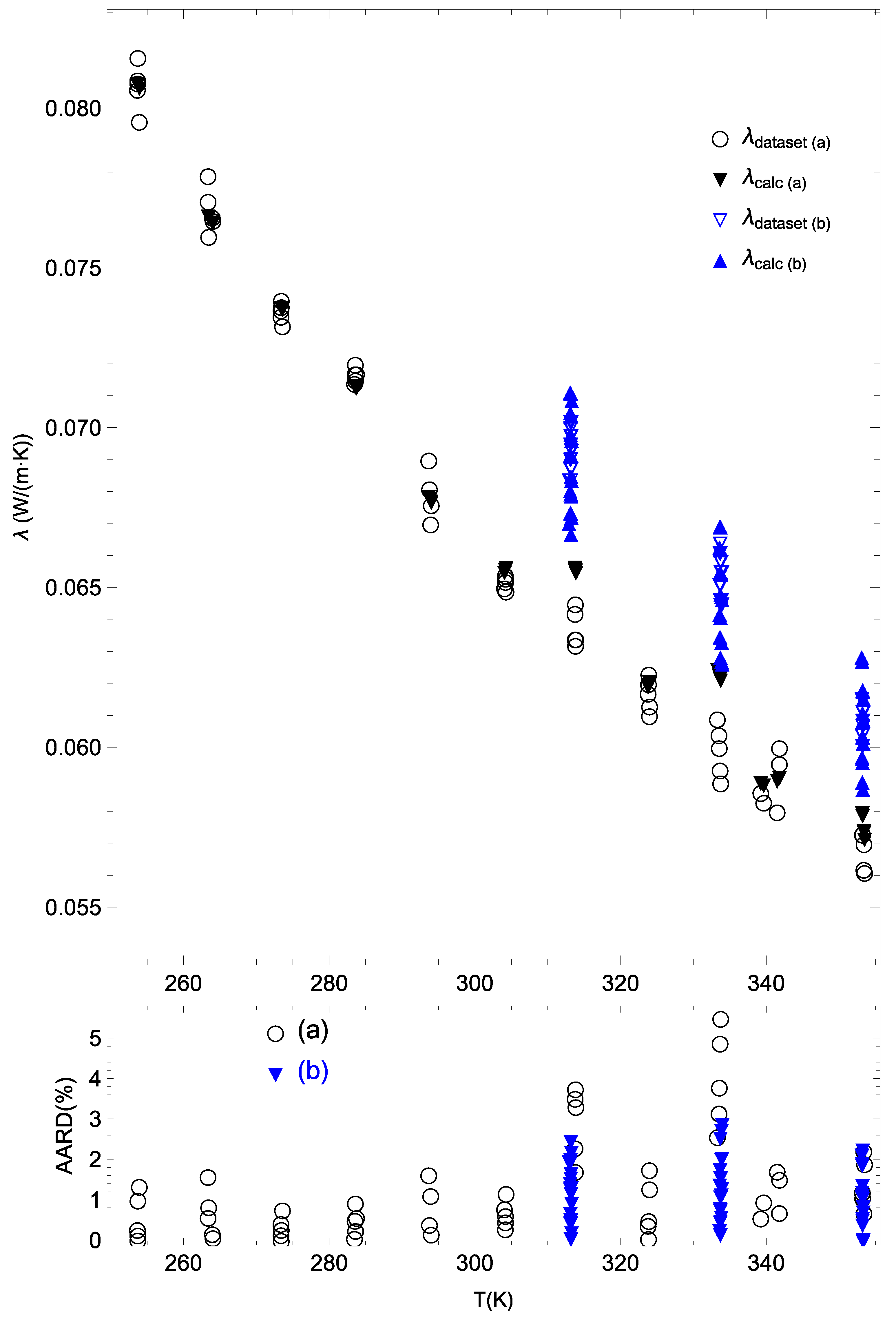

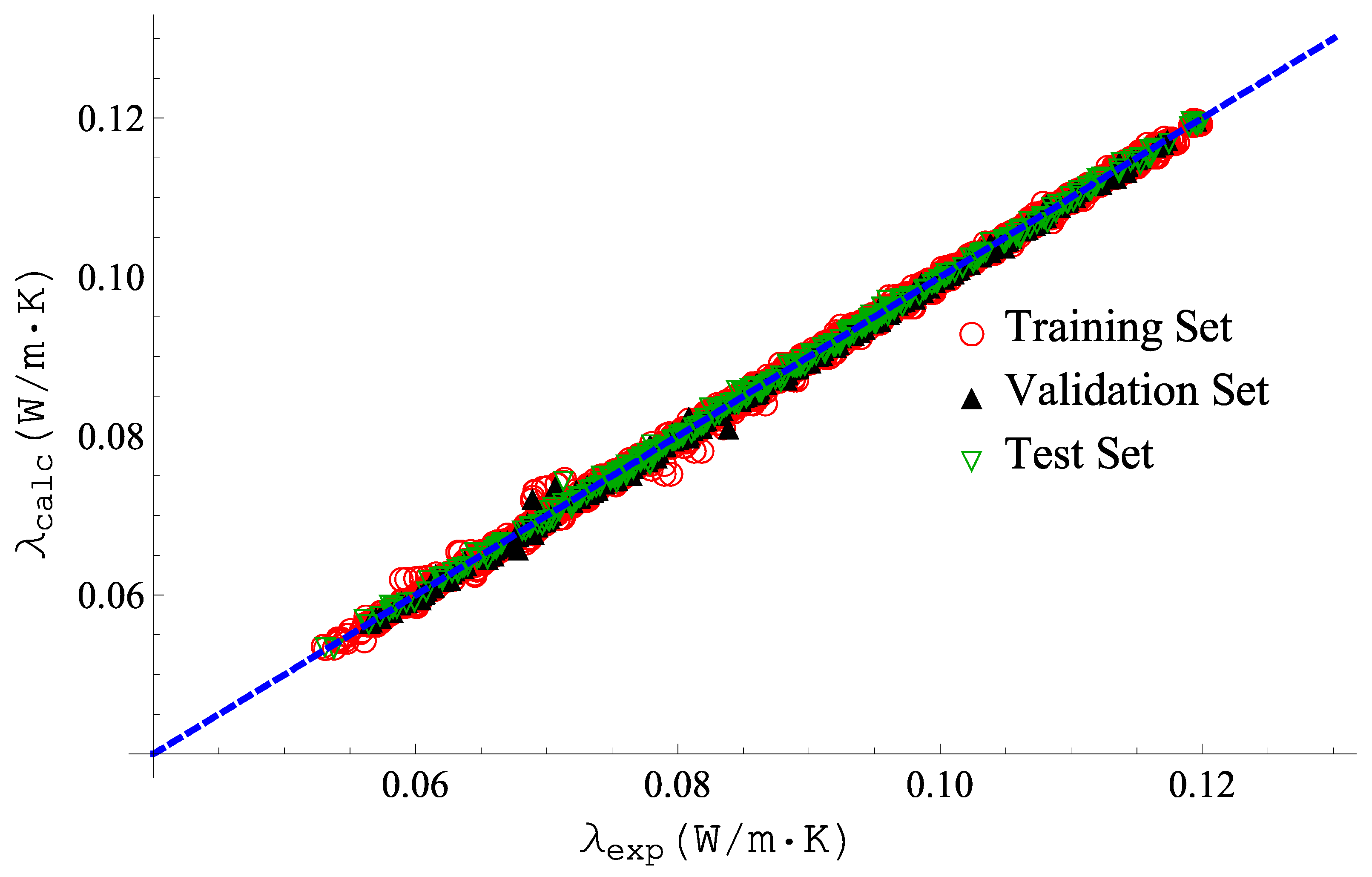

4. Experimental Settings and Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Regulation (EU) No 517/2014 of the European Parliament and of the Council of 16 April 2014 on Fluorinated Greenhouse Gases and Repealing Regulation (EC) No 842/2006; 2014. Available online: http://eur-lex.Eur.eu/legal-content/EN/TXT/PDF (accessed on 15 May 2016).

2. UNEP. Amendment to the Montreal Protocol on Substances that Deplete the Ozone Layer (Kigali Amendment). Int. Leg. Mater. 2017, 56, 193–205.

3. McLinden, M.O.; Huber, M.L. (R)Evolution of refrigerants. J. Chem. Eng. Data 2020, 65, 4176–4193.

4. McLinden, M.O.; Brown, J.S.; Brignoli, R.; Kazakov, A.F.; Domanski, P.A. Limited options for low-global-warming-potential refrigerants. Nat. Commun. 2017, 8, 14476.

5. Domanski, P.A.; Brignoli, R.; Brown, J.S.; Kazakov, A.F.; McLinden, M.O. Low-GWP refrigerants for medium and high-pressure applications. Int. J. Refrig. 2017, 84, 198–209.

6. Uddin, K.; Saha, B.B. An Overview of Environment-Friendly Refrigerants for Domestic Air Conditioning Applications. Energies 2022, 15, 8082.

7. Uddin, K.; Saha, B.B.; Thu, K.; Koyama, S. Low GWP refrigerants for energy conservation and environmental sustainability. In Advances in Solar Energy Research; Springer: Berlin/Heidelberg, Germany, 2019; pp. 485–517.

8. Poling, B.E.; Prausnitz, J.M.; O’Connell, J.P. The Properties of Gases and Liquids, 5th ed.; McGraw-Hill: New York, NY, USA, 2001; ISBN 9780070116825.

9. Huber, M.L. Models for Viscosity, Thermal Conductivity, and Surface Tension of Selected Pure Fluids as Implemented in REFPROP v10.0; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2018.

10. Kang, K.; Li, X.; Gu, Y.; Wang, X. Thermal conductivity prediction of pure refrigerants and mixtures based on entropy-scaling concept. J. Mol. Liq. 2022, 368, 120568.

11. Yang, X.; Kim, D.; May, E.F.; Bell, I.H. Entropy Scaling of Thermal Conductivity: Application to Refrigerants and Their Mixtures. Ind. Eng. Chem. Res. 2021, 60, 13052–13070.

12. Fouad, W.A.; Vega, L.F. Transport properties of HFC and HFO based refrigerants using an excess entropy scaling approach. J. Supercrit. Fluids 2018, 131, 106–116.

13. Liu, H.; Yang, F.; Yang, X.; Yang, Z.; Duan, Y. Modeling the thermal conductivity of hydrofluorocarbons, hydrofluoroolefins and their binary mixtures using residual entropy scaling and cubic-plus-association equation of state. J. Mol. Liq. 2021, 330, 115612.

14. Khosharay, S.; Khosharay, K.; Di Nicola, G.; Pierantozzi, M. Modelling investigation on the thermal conductivity of pure liquid, vapour, and supercritical refrigerants and their mixtures by using Heyen EOS. Phys. Chem. Liq. 2018, 56, 124–140.

15. Niksirat, M.; Aeenjan, F.; Khosharay, S. Introducing hydrogen bonding contribution to the Patel-Teja thermal conductivity equation of state for hydrochlorofluorocarbons, hydrofluorocarbons and hydrofluoroolefins. J. Mol. Liq. 2022, 351, 118631.

16. Liu, Y.; Wu, C.; Zheng, X.; Li, Q. Modeling thermal conductivity of liquid hydrofluorocarbon, hydrofluoroolefin and hydrochlorofluoroolefin refrigerants. Int. J. Refrig. 2022, 140, 139–149.

17. Di Nicola, G.; Coccia, G.; Tomassetti, S. A modified Kardos equation for the thermal conductivity of refrigerants. J. Theor. Comput. Chem. 2018, 17, 1850012.

18. Yang, S.; Tian, J.; Jiang, H. Corresponding state principle based correlation for the thermal conductivity of saturated refrigerants liquids from Ttr to 0.90 Tc. Fluid Phase Equilibria 2020, 509, 112459.

19. Latini, G.; Sotte, M. Refrigerants of the methane, ethane and propane series: Thermal conductivity calculation along the saturation line. Int. J. Air-Cond. Refrig. 2011, 19, 37–43.

20. Latini, G.; Sotte, M. Thermal conductivity of refrigerants in the liquid state: A comparison of estimation methods. Int. J. Refrig. 2012, 35, 1377–1383.

21. Di Nicola, G.; Ciarrocchi, E.; Coccia, G.; Pierantozzi, M. Correlations of thermal conductivity for liquid refrigerants at atmospheric pressure or near saturation. Int. J. Refrig. 2014, 45, 168–176.

22. Tomassetti, S.; Coccia, G.; Pierantozzi, M.; Di Nicola, G. Correlations for liquid thermal conductivity of low GWP refrigerants in the reduced temperature range 0.4 to 0.9 from saturation line to 70 MPa. Int. J. Refrig. 2020, 117, 358–368.

23. Rykov, S.V.; Kudryavtseva, I.V. Heat Conductivity of Liquid Hydrofluoroolefins and Hydrochlorofluoroolefins on the Line of Saturation. Russ. J. Phys. Chem. A 2022, 96, 2098–2104.

24. Pierantozzi, M.; Petrucci, G. Modeling thermal conductivity in refrigerants through neural networks. Fluid Phase Equilibria 2018, 460, 36–44.

25. Ghaderi, F.; Ghaderi, A.H.; Ghaderi, N.; Najafi, B. Prediction of the thermal conductivity of refrigerants by computational methods and artificial neural network. Front. Chem. 2017, 5, 99.

26. Wang, X.; Li, Y.; Yan, Y.; Wright, E.; Gao, N.; Chen, G. Prediction on the viscosity and thermal conductivity of HFC/HFO refrigerants with artificial neural network models. Int. J. Refrig. 2020, 119, 316–325.

27. Ogedjo, M.; Kapoor, A.; Kumar, P.S.; Rangasamy, G.; Ponnuchamy, M.; Rajagopal, M.; Banerjee, P.N. Modeling of sugarcane bagasse conversion to levulinic acid using response surface methodology (RSM), artificial neural networks (ANN), and fuzzy inference system (FIS): A comparative evaluation. Fuel 2022, 329, 125409.

28. Khamparia, A.; Pandey, B.; Pandey, D.K.; Gupta, D.; Khanna, A.; de Albuquerque, V.H.C. Comparison of RSM, ANN and Fuzzy Logic for extraction of Oleonolic Acid from Ocimum sanctum. Comput. Ind. 2020, 117, 103200.

29. Latini, G.; Di Nicola, G.; Pierantozzi, M.; Coccia, G.; Tomassetti, S. Artificial Neural Network Modeling of Liquid Thermal Conductivity for alkanes, ketones and silanes. J. Phys. Conf. Ser. 2017, 923, 012054.

30. Mulero, Á.; Pierantozzi, M.; Cachadiña, I.; Di Nicola, G. An Artificial Neural Network for the surface tension of alcohols. Fluid Phase Equilibria 2017, 449, 28–40.

31. Hosseini, S.M.; Pierantozzi, M.; Moghadasi, J. Viscosities of some fatty acid esters and biodiesel fuels from a rough hard-sphere-chain model and artificial neural network. Fuel 2019, 235, 1083–1091.

32. Alam, M.J.; Yamaguchi, K.; Hori, Y.; Kariya, K.; Miyara, A. Measurement of thermal conductivity and viscosity of cis-1-chloro-2,3,3,3-tetrafluoropropene (R-1224yd(Z)). Int. J. Refrig. 2019, 104, 221–228.

33. Perkins, R.A.; Huber, M.L.; Assael, M.J. Measurement and Correlation of the Thermal Conductivity of trans-1-Chloro-3,3,3-trifluoropropene (R1233zd(E)). J. Chem. Eng. Data 2017, 62, 2659–2665.

34. Alam, M.J.; Islam, M.A.; Kariya, K.; Miyara, A. Measurement of thermal conductivity and correlations at saturated state of refrigerant trans-1-chloro-3,3,3-trifluoropropene (R-1233zd(E)). Int. J. Refrig. 2018, 90, 174–180.

35. Perkins, R.A.; Huber, M.L. Measurement and Correlation of the Thermal Conductivity of 2,3,3,3-Tetrafluoroprop-1-ene (R1234yf) and trans-1,3,3,3-Tetrafluoropropene (R1234ze(E)). J. Chem. Eng. Data 2011, 56, 4868–4874.

36. Miyara, A.; Fukuda, R.; Tsubaki, K. Thermal conductivity of saturated liquid of R1234ze(E) + R32 and R1234yf + R32 mixtures. Trans. Jpn. Soc. Refrig. Air Cond. Eng. 2011, 28, 435–443.

37. Miyara, A.; Tsubaki, K.; Sato, N.; Fukuda, R. Thermal conductivity of saturated liquid HFO-1234ze(E) and HFO-1234ze(E) + HFC-32 mixture. In Proceedings of the 23rd IIR International Congress of Refrigeration, Prague, Czech Republic, 21–26 August 2011.

38. Ishida, H.; Mori, S.; Kariya, K.; Miyara, A. Thermal conductivity measurements of low GWP refrigerants with hot-wire method. In Proceedings of the 24th International Congress of Refrigeration (ICR), Yokohama, Japan, 16–22 August 2015.

39. Mondal, D.; Kariya, K.; Tuhin, A.R.; Miyoshi, K.; Miyara, A. Thermal conductivity measurement and correlation at saturation condition of HFO refrigerant trans-1,1,1,4,4,4-hexafluoro-2-butene (R1336mzz(E)). Int. J. Refrig. 2021, 129, 109–117.

40. Haowen, G.; Xilei, W.; Yuan, Z.; Zhikai, G.; Xiaohong, H.; Guangming, C. Experimental and Theoretical Research on the Saturated Liquid Thermal Conductivity of HFO-1336mzz(E). Ind. Eng. Chem. Res. 2021, 60, 9592–9601.

41. Perkins, R.A.; Huber, M.L. Measurement and Correlation of the Thermal Conductivity of cis-1,1,1,4,4,4-hexafluoro-2-butene. Int. J. Thermophys. 2020, 41, 103.

42. Alam, M.J.; Islam, M.A.; Kariya, K.; Miyara, A. Measurement of thermal conductivity of cis-1,1,1,4,4,4-hexafluoro-2-butene (R-1336mzz(Z)) by the transient hot-wire method. Int. J. Refrig. 2017, 84, 220–227.

43. Akasaka, R.; Fukushima, M.; Lemmon, E.W. A Helmholtz Energy Equation of State for cis-1-chloro-2,3,3,3-tetrafluoropropene (R-1224yd(Z)). In Proceedings of the European Conference on Thermophysical Properties, Graz, Austria, 3–8 September 2017.

44. Richter, M.; McLinden, M.O.; Lemmon, E.W. Thermodynamic Properties of 2,3,3,3-Tetrafluoroprop-1-ene (R1234yf): Vapor Pressure and p–ρ–T Measurements and an Equation of State. J. Chem. Eng. Data 2011, 56, 3254–3264.

45. Thol, M.; Lemmon, E.W. Equation of State for the Thermodynamic Properties of trans-1,3,3,3-Tetrafluoropropene [R-1234ze(E)]. Int. J. Thermophys. 2016, 37, 28.

46. Akasaka, R.; Lemmon, E.W. Fundamental Equations of State for cis-1,3,3,3-Tetrafluoropropene [R-1234ze(Z)] and 3,3,3-Trifluoropropene (R-1243zf). J. Chem. Eng. Data 2019, 64, 4679–4691.

47. Tanaka, K.; Ishikawa, J.; Kontomaris, K.K. Thermodynamic properties of HFO-1336mzz(E) (trans-1,1,1,4,4,4-hexafluoro-2-butene) at saturation conditions. Int. J. Refrig. 2017, 82, 283–287.

48. Lemmon, E.W.; Bell, I.H.; Huber, M.L.; McLinden, M.O. NIST Standard Reference Database 23: Reference Fluid Thermodynamic and Transport Properties-REFPROP, Version 10.0; National Institute of Standards and Technology, 2018. Available online: http://www.nist.gov/srd/nist23.cfm (accessed on 25 October 2022).

49. Sakoda, N.; Higashi, Y. Measurements of PvT Properties, Vapor Pressures, Saturated Densities, and Critical Parameters for cis-1-Chloro-2,3,3,3-tetrafluoropropene (R1224yd(Z)). J. Chem. Eng. Data 2019, 64, 3983–3987.

50. Basheer, I.A.; Hajmeer, M. Artificial neural networks: Fundamentals, computing, design, and application. J. Microbiol. Methods 2000, 43, 3–31.

51. Krogh, A. What are artificial neural networks? Nat. Biotechnol. 2008, 26, 195–197.

52. Bishop, C.M. Neural networks and their applications. Rev. Sci. Instrum. 1994, 65, 1803–1832.

| Data set | No. of points | AARD% | MARD% | RMSE (W m⁻¹ K⁻¹) |
|---|---|---|---|---|
| Training set | 2723 | 0.390 | 6.074 | 0.0005 |
| Validation set | 340 | 0.396 | 4.945 | 0.0005 |
| Test set | 341 | 0.375 | 4.598 | 0.0005 |
| Overall | 3404 | 0.389 | 2.070 | 0.0003 |
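The statistics in the tables can be computed as follows, assuming their usual definitions (the exact formulas are not restated in this section): AARD% = (100/N) Σ |λcalc − λexp|/λexp, MARD% = 100 · max |λcalc − λexp|/λexp, and RMSE = √[(1/N) Σ (λcalc − λexp)²], in the units of λ. A minimal Python sketch; the function names are illustrative.

```python
import math
from typing import Sequence


def _check(exp: Sequence[float], calc: Sequence[float]) -> None:
    """Both sequences must be non-empty and of equal length."""
    if not exp or len(exp) != len(calc):
        raise ValueError("need two non-empty sequences of equal length")


def aard_percent(exp: Sequence[float], calc: Sequence[float]) -> float:
    """Average absolute relative deviation, in percent."""
    _check(exp, calc)
    return 100.0 * sum(abs(c - e) / e for e, c in zip(exp, calc)) / len(exp)


def mard_percent(exp: Sequence[float], calc: Sequence[float]) -> float:
    """Maximum absolute relative deviation, in percent."""
    _check(exp, calc)
    return 100.0 * max(abs(c - e) / e for e, c in zip(exp, calc))


def rmse(exp: Sequence[float], calc: Sequence[float]) -> float:
    """Root-mean-square error, in the units of the inputs (here W m^-1 K^-1)."""
    _check(exp, calc)
    return math.sqrt(sum((c - e) ** 2 for e, c in zip(exp, calc)) / len(exp))
```

With two made-up points, `exp = [0.0800, 0.1000]` and `calc = [0.0804, 0.0990]`, the relative deviations are 0.5% and 1.0%, so AARD% = 0.75 and MARD% = 1.0.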

| Model | Training set: no. of points | Test set: no. of points | Training set AARD% | Training set RMSE (W m⁻¹ K⁻¹) | Test set AARD% | Test set RMSE (W m⁻¹ K⁻¹) |
|---|---|---|---|---|---|---|
| Cross-validation 1 | 2553 | 851 | 0.557 | 0.0007 | 0.643 | 0.0022 |
| Cross-validation 2 | 2553 | 851 | 0.904 | 0.0011 | 0.853 | 0.0026 |
| Cross-validation 3 | 2553 | 851 | 0.354 | 0.0004 | 0.932 | 0.0032 |
| Cross-validation 4 | 2553 | 851 | 0.417 | 0.0005 | 1.087 | 0.0032 |
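The cross-validation rows are consistent with a 4-fold split of the 3404 points: 3404/4 = 851 points in each test fold and 3404 − 851 = 2553 for training. The sketch below assumes the data are shuffled once with a fixed seed and then cut into equal-size folds; how the folds were actually drawn is not stated here, and `k_fold_indices` is a hypothetical helper.

```python
import random
from typing import Iterator, List, Tuple


def k_fold_indices(
    n_points: int, k: int, seed: int = 0
) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, test_indices) for each of k folds.

    Assumption: one random shuffle, then k contiguous folds whose sizes
    differ by at most one point. Every index lands in exactly one test fold.
    """
    if not 2 <= k <= n_points:
        raise ValueError("k must satisfy 2 <= k <= n_points")
    order = list(range(n_points))
    random.Random(seed).shuffle(order)
    base, extra = divmod(n_points, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        test = order[start:start + size]
        train = order[:start] + order[start + size:]
        yield train, test
        start += size
```

Running `for train, test in k_fold_indices(3404, 4): print(len(train), len(test))` prints `2553 851` four times, matching the point counts in the table.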

| Fluid | No. of points | This work AARD% | This work MARD% | Equation (1) AARD% | Equation (1) MARD% | REFPROP 10.0 AARD% | REFPROP 10.0 MARD% |
|---|---|---|---|---|---|---|---|
| R1224yd(Z) | 53 | 0.451 | 1.299 | 3.041 | 5.896 | 6.365 | 8.861 |
| R1233zd(E) | 1132 | 0.260 | 1.545 | 1.155 | 3.930 | 0.337 | 1.584 |
| R1234yf | 267 | 0.290 | 1.163 | 1.446 | 7.242 | 0.303 | 1.557 |
| R1234ze(E) | 494 | 0.251 | 2.235 | 1.634 | 5.941 | 0.336 | 2.041 |
| R1234ze(Z) | 61 | 0.560 | 1.574 | 1.771 | 5.080 | 1.775 | 5.705 |
| R1336mzz(E) | 118 | 1.223 | 5.499 | 6.456 | 13.197 | - | - |
| R1336mzz(Z) | 1279 | 0.490 | 6.074 | 4.120 | 19.018 | 9.390 | 13.84 |
| Overall | 3404 | 0.389 | - | 2.585 | - | 3.82 | - |
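Because AARD% is a mean over points, the AARD% of the pooled data set equals the point-weighted mean of the per-fluid values. The Python sketch below applies that identity to the "This work" and "Equation (1)" columns as a cross-check; the REFPROP column is left out because R1336mzz(E) has no entry there. The values are transcribed from the table, and `pooled_aard` is an illustrative name.

```python
from typing import Dict, Tuple

# (number of points, AARD%) per fluid, transcribed from the table above.
THIS_WORK: Dict[str, Tuple[int, float]] = {
    "R1224yd(Z)": (53, 0.451),
    "R1233zd(E)": (1132, 0.260),
    "R1234yf": (267, 0.290),
    "R1234ze(E)": (494, 0.251),
    "R1234ze(Z)": (61, 0.560),
    "R1336mzz(E)": (118, 1.223),
    "R1336mzz(Z)": (1279, 0.490),
}
EQ1: Dict[str, Tuple[int, float]] = {
    "R1224yd(Z)": (53, 3.041),
    "R1233zd(E)": (1132, 1.155),
    "R1234yf": (267, 1.446),
    "R1234ze(E)": (494, 1.634),
    "R1234ze(Z)": (61, 1.771),
    "R1336mzz(E)": (118, 6.456),
    "R1336mzz(Z)": (1279, 4.120),
}


def pooled_aard(per_fluid: Dict[str, Tuple[int, float]]) -> float:
    """AARD% of the pooled data: sum(n_i * AARD_i) / sum(n_i)."""
    n_total = sum(n for n, _ in per_fluid.values())
    return sum(n * aard for n, aard in per_fluid.values()) / n_total


if __name__ == "__main__":
    print(f"This work:    {pooled_aard(THIS_WORK):.3f} %")
    print(f"Equation (1): {pooled_aard(EQ1):.3f} %")
```

The weighted means come out at about 0.389 and 2.586, in agreement with the Overall row to within the rounding of the per-fluid entries.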

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pierantozzi, M.; Tomassetti, S.; Di Nicola, G. Modeling Liquid Thermal Conductivity of Low-GWP Refrigerants Using Neural Networks. Appl. Sci. 2023, 13, 260. https://doi.org/10.3390/app13010260
