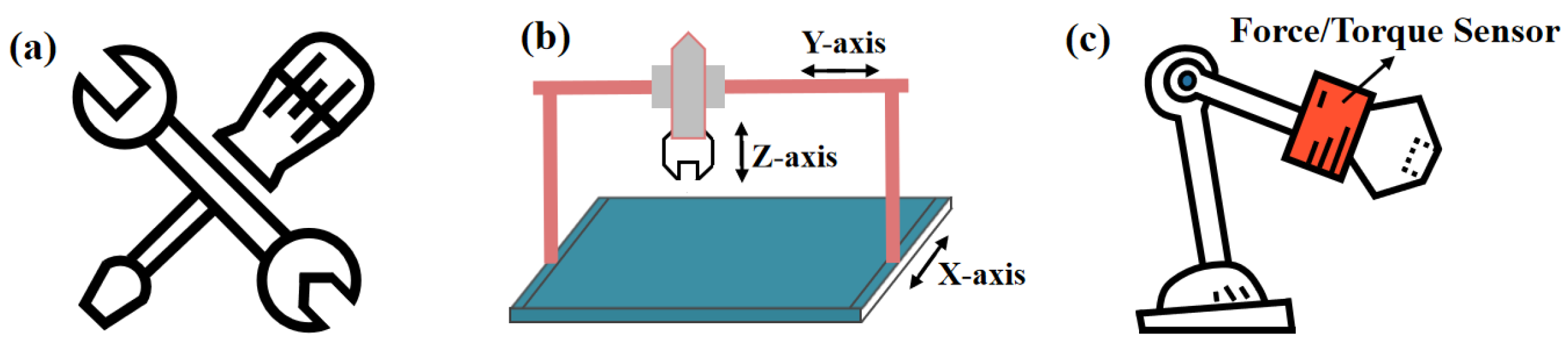

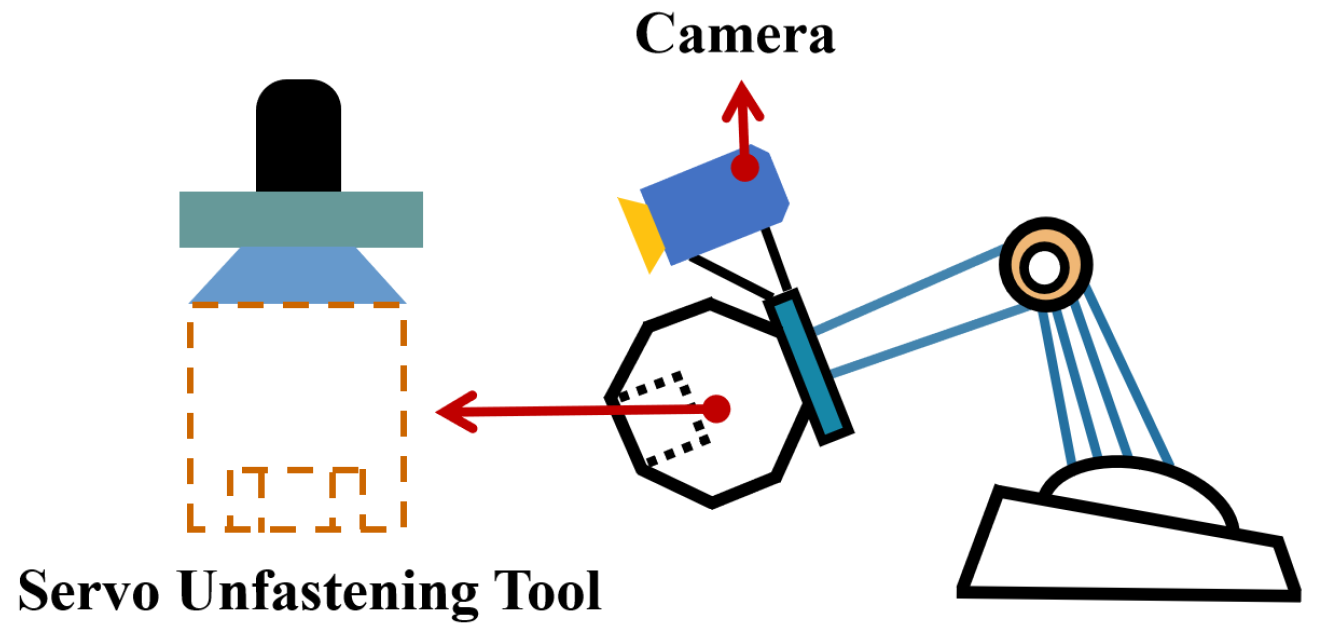

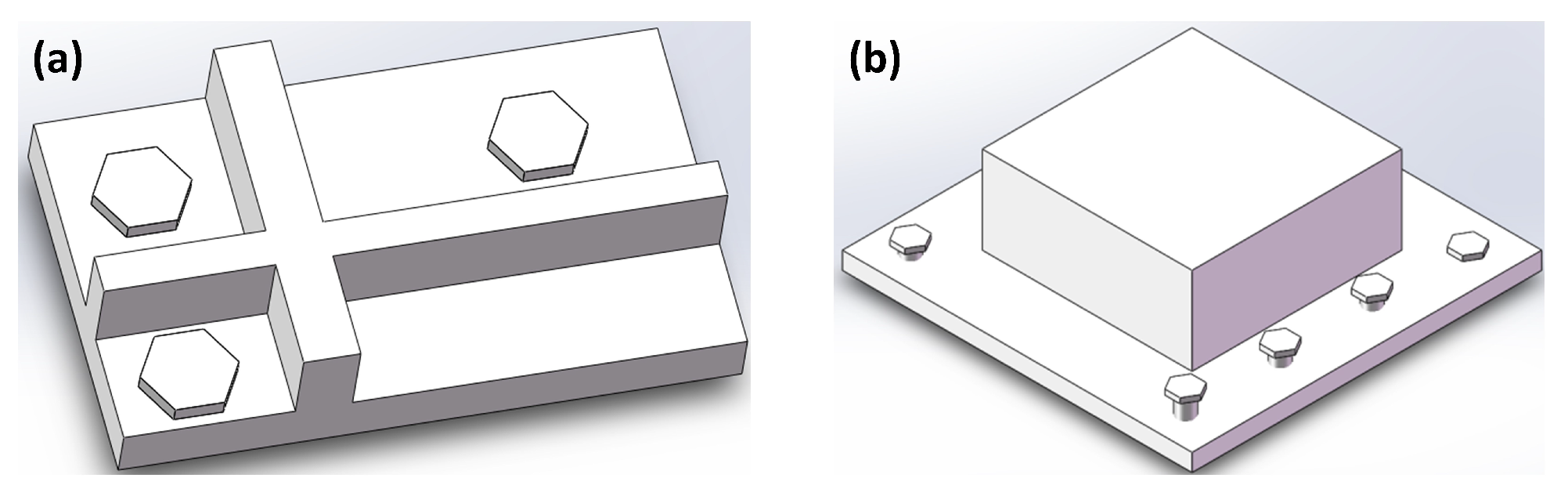

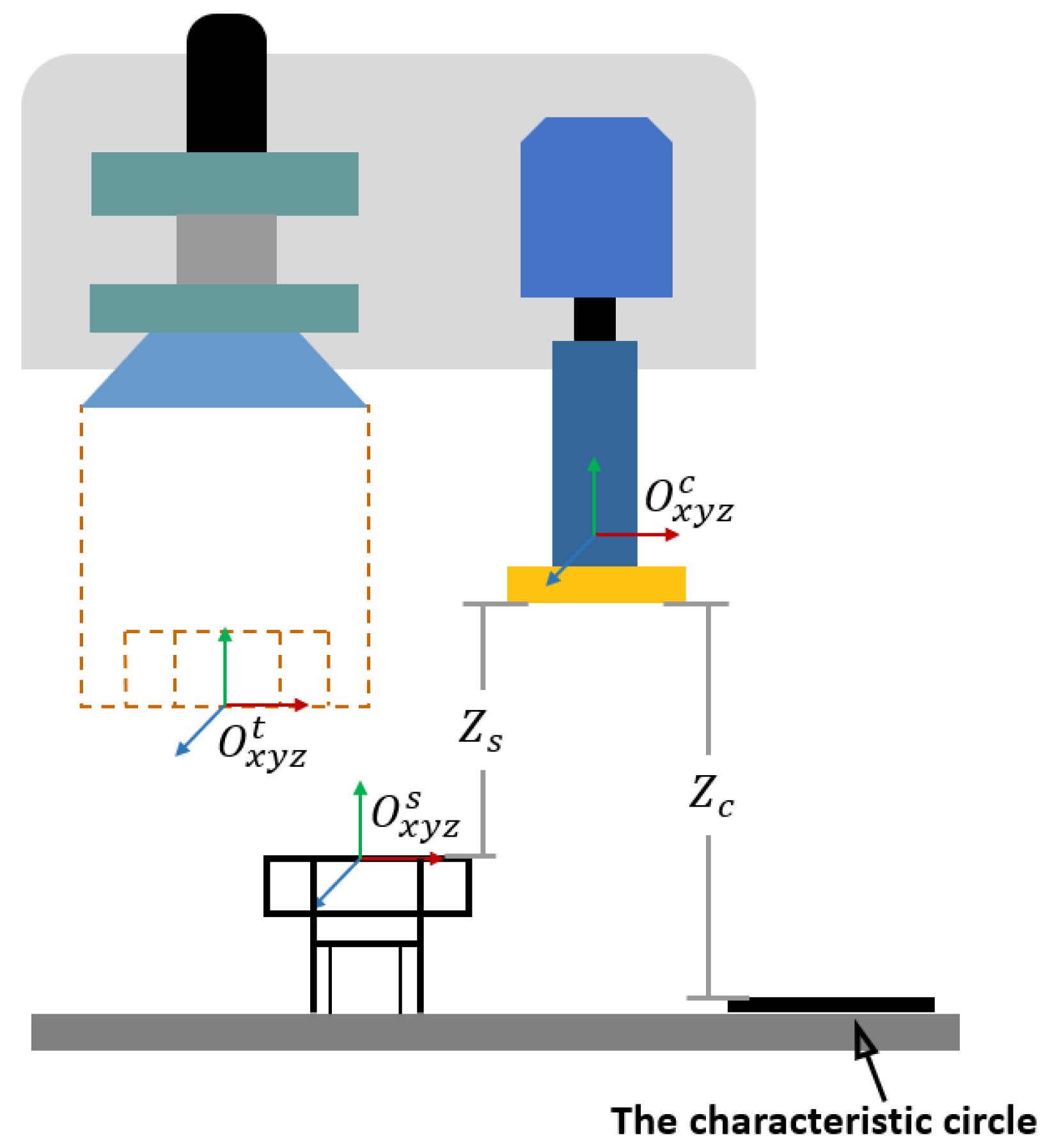

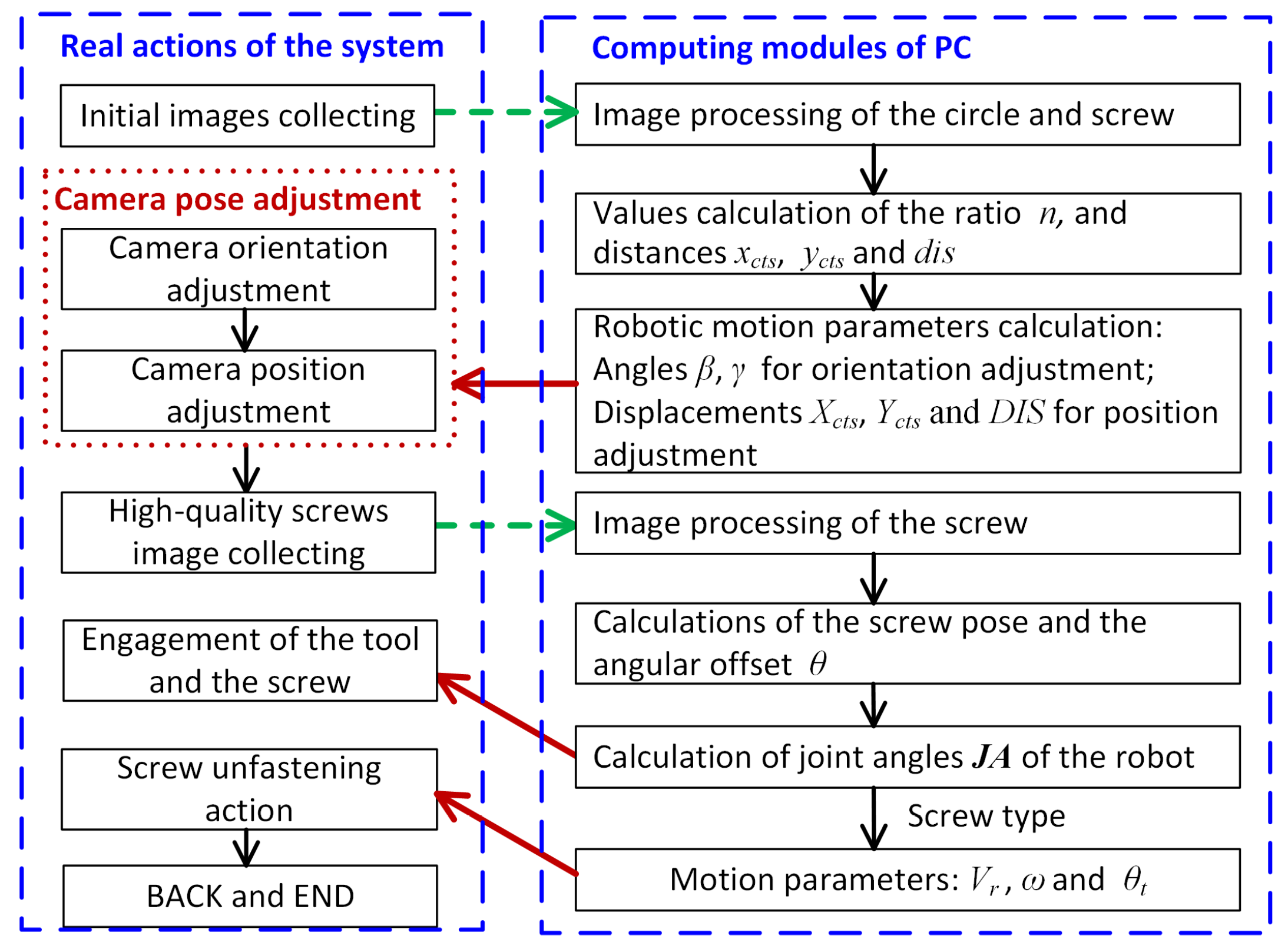

As introduced above, this study presents an effective screw unfastening system for a six-DOF robot equipped with an end effector composed of a special screw-tightening tool and a camera, as shown in Figure 4. In this system, the servo unfastening tool consists of a motor, a reducer, internal displacement and torque sensors, and an output rotation shaft. The end of the rotation shaft has a regular hexagonal groove that is slightly larger than the target screw head and is fitted with an elastic mechanism, as shown in Figure 5. To realize the disassembly operation, the tool is mounted at the end of the robot so that the robot can guide it to the target screw. The tool has its own control system, which communicates with the robot through the Beckhoff module and exchanges messages with a PC through the TCP/IP protocol. During the operation, a signal for performing the unfastening operation is sent to the unfastening tool when the robot arrives at the desired location. The screw-unfastening operation is then performed through the cooperated motion of the tool and robot. The signals for stopping motions are sent to the tool and robot at the same time. Finally, the robot finishes the disassembly operation and prepares for the next unfastening task.

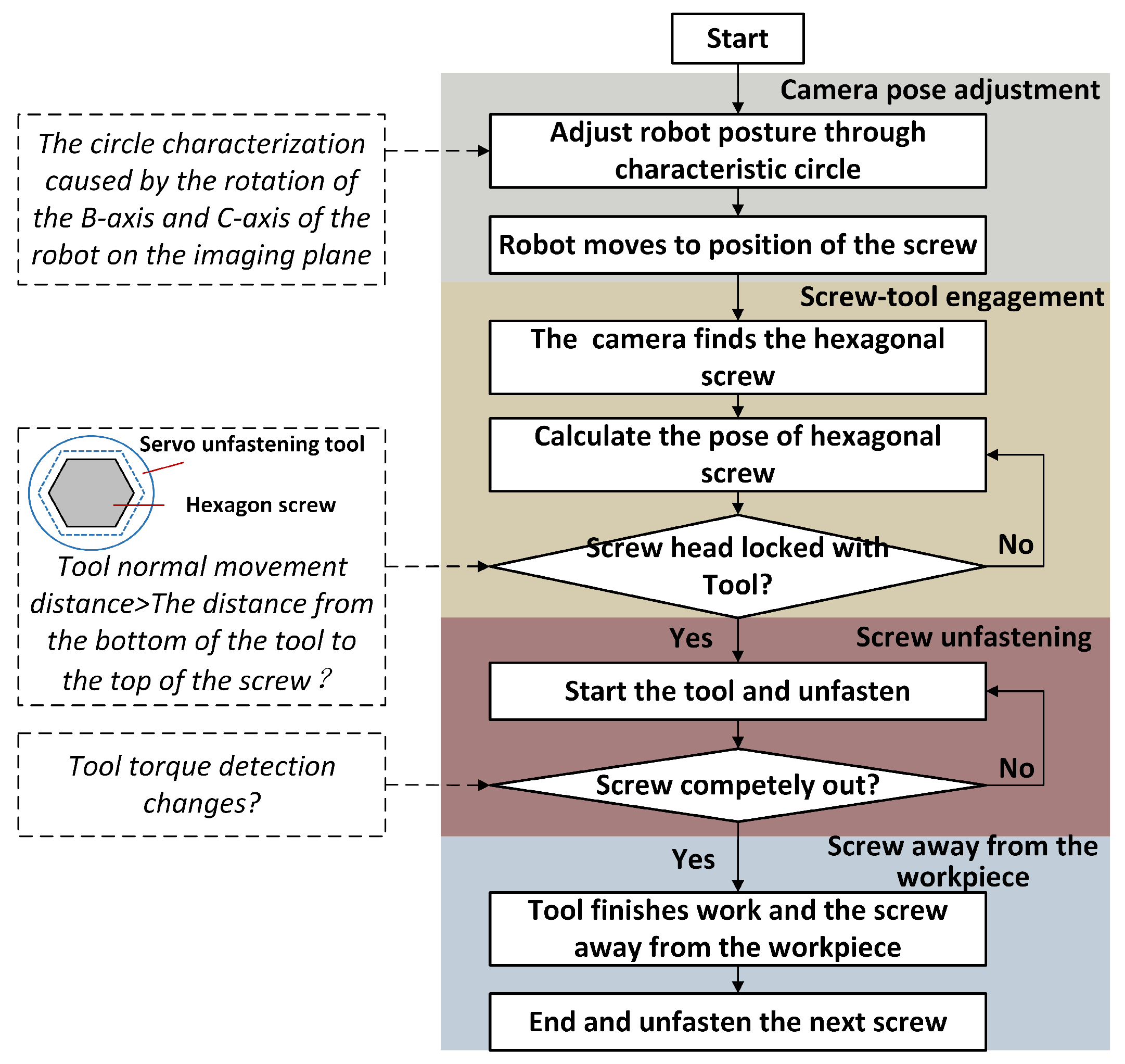

The second step is to calculate the screw pose and then move the robotic end effector forward and lock the screw, which is a pre-action for the third step. In this step, a hexagon screw’s pose in the robotic base frame is calculated based on the geometry analysis results of a hexagonal shape. The calculated pose is used to plan the robotic motion in the locking action of a screw by the end-effector tool. This step makes the proposed strategy adaptable to the disassembly operation of screws with different loose states by providing the correct feedback on the target screw’s pose for the robotic motion.

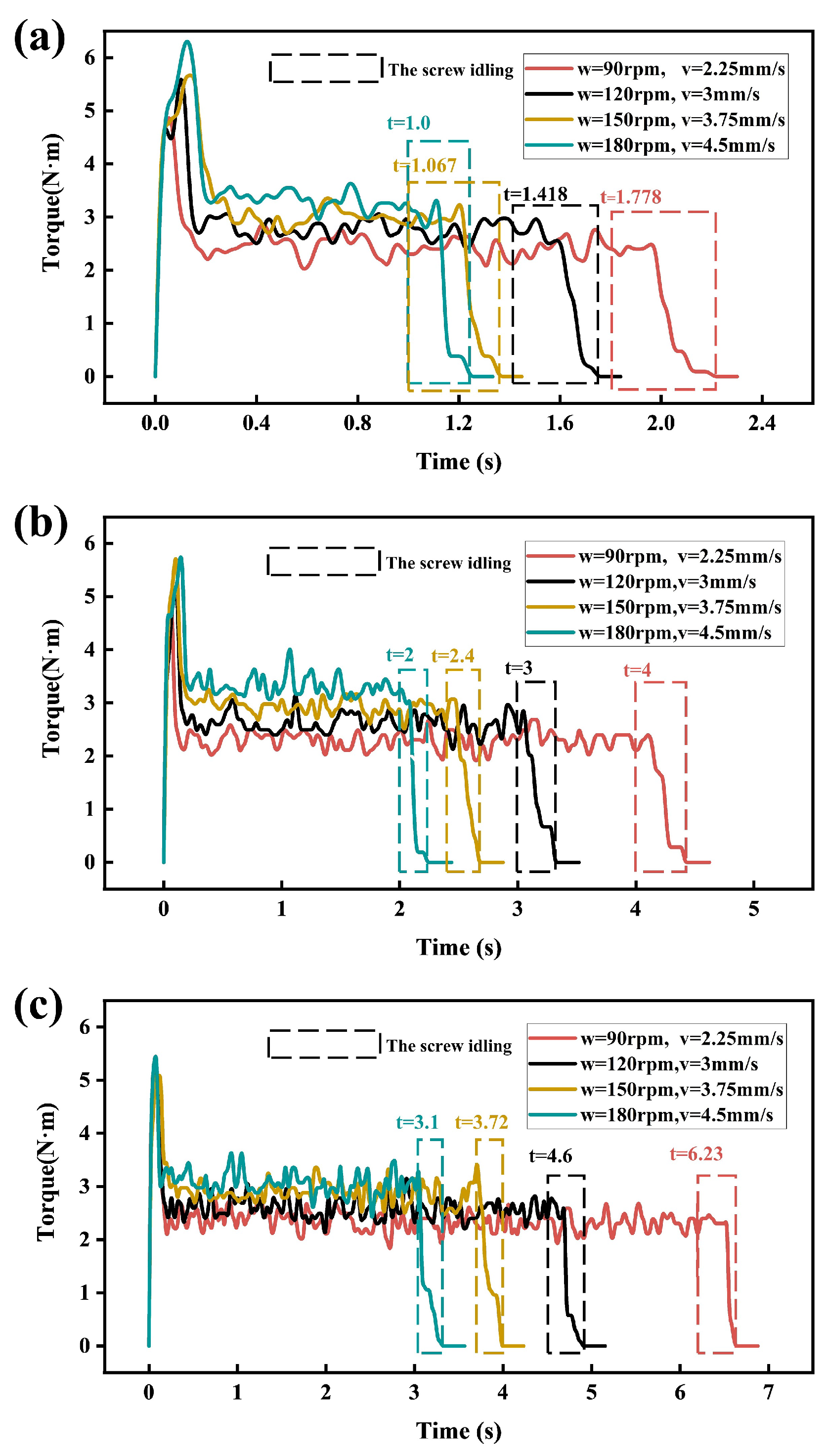

The third step is to unfasten a screw through the cooperated motion of the robot and unfastening tool. In this step, the disassembly quality can be improved by selecting proper values of the motion parameters, which can be obtained by the analysis of the torque variation over time during the unfastening action.

The fourth step is to move a screw away from the workpiece. In this step, the robot continues its motion along a planned trajectory; the tool stops its rotating motion but maintains the normal force so that the screw does not fall. Finally, the robot puts the screw into a container and proceeds to the next unfastening operation.

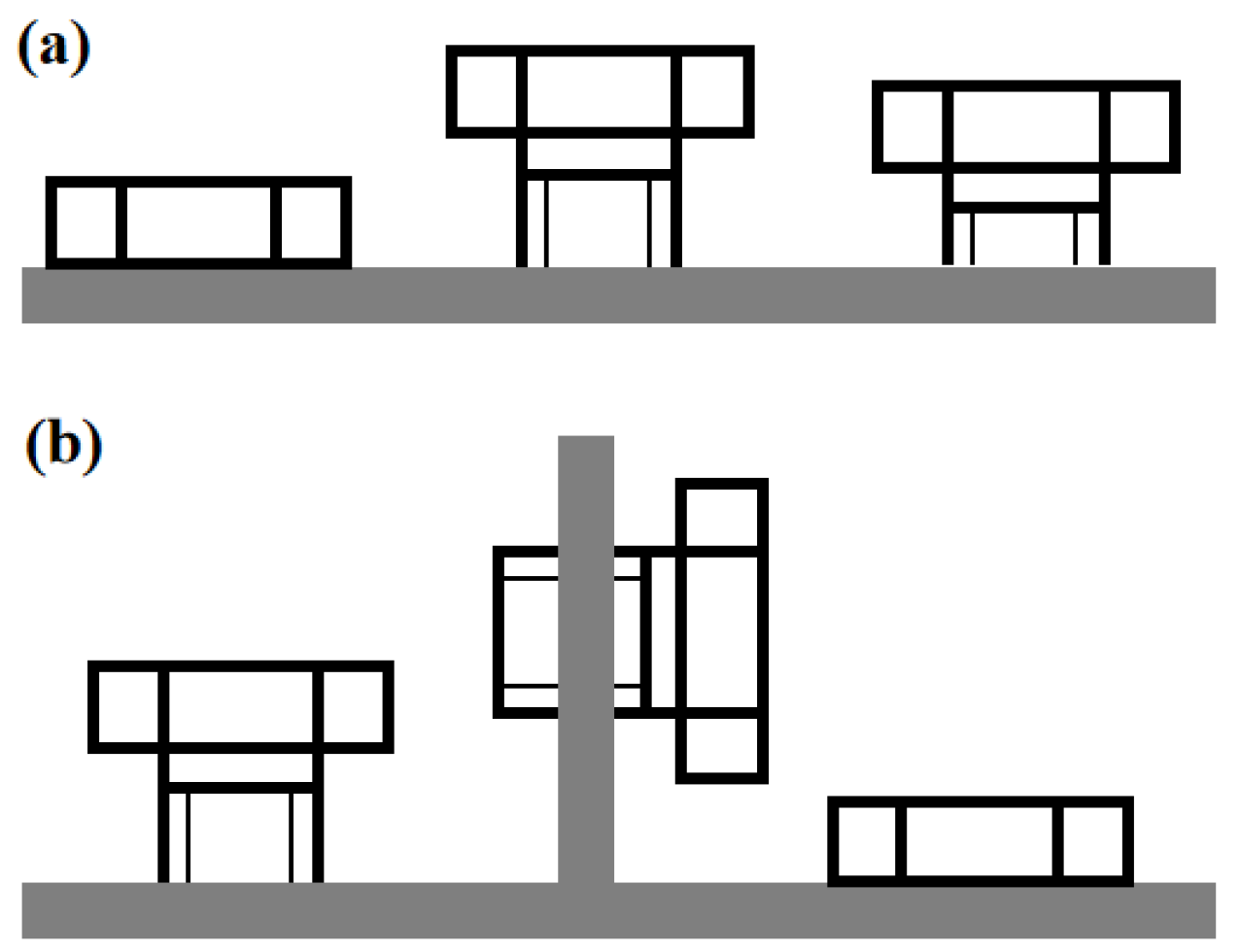

In addition to efficiency and quality, applicability is also an important feature of a disassembly technique. Vision-torque-based methods are limited when there are obstacles between target screws, as shown in Figure 8a. Such obstacles make it impossible for a robot to complete the spiral motion smoothly, and these methods are also less efficient for screws with different loose states because more time is needed to search for a screw along the axial direction, as shown in Figure 8b. In addition, some of the latest studies have used vision-based methods to perform the disassembly task using a Cartesian robot when screws are located on a plane [21,25]. Therefore, the existing studies have certain limitations in complex environments. To extend the applicability, this work uses a vision system to determine the 3D pose of a target screw accurately [26]. The identification and positioning of the target can be realized by global recognition and positioning using a binocular camera system. The screws were searched and matched by the Gaussian pyramid hierarchical method and a greedy algorithm, and the optimal matching results were obtained based on vision calibration and picture correction. However, the screw pose obtained by this method [23] is not accurate enough, so the strategy proposed in this paper is needed to obtain the screw pose accurately. Then, the system performs the engagement and unfastening actions based on the calculated pose using a robot, which can effectively avoid the problems of the previous studies.

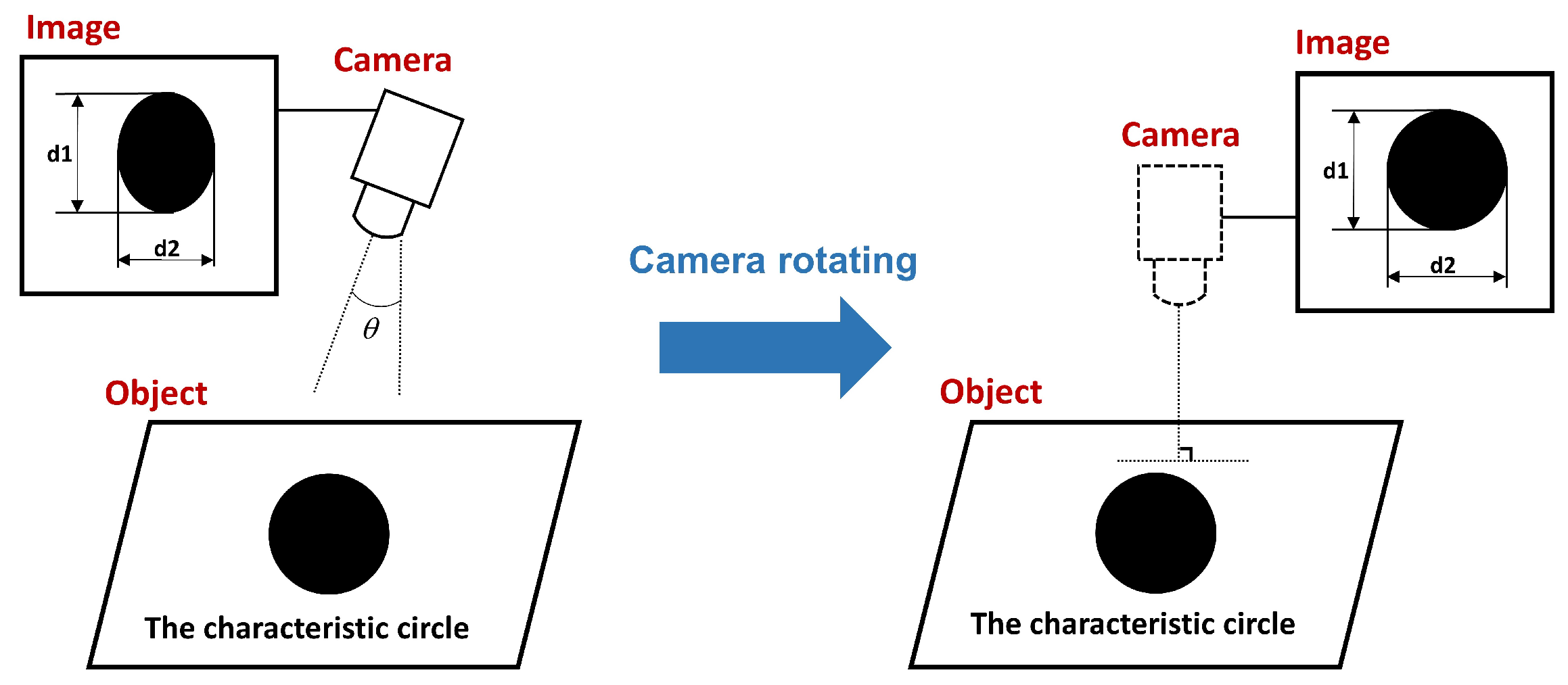

2.1. Robotic Motion-Guided Camera Pose Adjustment Method

This work aims to develop an effective vision-based disassembly strategy for screws located in 3D complex structures, including different working planes. The measurement accuracy of a vision system has a major impact on the effectiveness and efficiency of the proposed strategy. This section proposes a camera pose-adjustment method guided by robotic motion, aiming to improve the image quality of a screw. To solve this problem, a characteristic circle needs to be placed on the structure to be disassembled. The image processing of the characteristic circle aims to realize the camera pose adjustment to obtain high-quality images of the target screw, which has a significant impact on the calculation accuracy of a screw pose. In the proposed strategy, there is one characteristic circle on each working plane, and screws on the same plane can be disassembled.

The camera pose adjustment is completed in three steps: the initial camera view field adjustment, the orientation adjustment, and the position adjustment. These steps are presented in detail as follows.

(1) Initial camera view field adjustment: The camera view is first adjusted by a regular search for the characteristic circle through the robotic motion based on the global recognition and positioning, so that a screw and the characteristic circle are located in the same image. According to the imaging principle, a larger object distance gives a larger camera view field but a smaller area covered by the target in an image, which means that the object distance adjustment should consider both the camera view field and the image quality. In this study, the general adjustment is performed by the robotic motion based on the above principle, so that the characteristic circle and the target screw are located in the same image and their image areas are as large as possible.

Generally, when the distance between the characteristic circle and a screw is very long, the image quality is greatly affected, which reduces the location accuracy of a screw and can cause the engagement of the screw and unfastening tool to fail. To analyze the allowable distance range of the proposed robotic system, experimental tests were performed. In these tests, the distance between the hole and a screw was set in a range from 20 mm to 300 mm. The test results are given in Table 2, from which it can be seen that the accuracy was greatly affected by the distance and the area percentage. Therefore, the strategy is only applicable to cases when the distance is less than a certain value, which is defined by a specific requirement for the measurement accuracy.

To realize the unfastening action successfully, the sizes of the screw and tool end should satisfy the fit condition shown in Figure 9. Accordingly, the minimal gap g is limited by a condition on two parameters, e and s, whose values are defined by the nut size specification standard.

In this study, the target screws’ parameters are as follows:

mm and

mm. The minimal gap satisfies the condition of

mm according to the standard GB/T5783-2000. In the proposed robotic system,

mm, which indicates that the location error cannot exceed the minimal gap of

mm. Therefore, to improve the reliability of the proposed strategy, based on the test results in Table 2, the distance between the hole and a screw cannot be larger than 200 mm. For most industrial robots, such as the KUKA robot used in this work, the positioning accuracy is high enough, considering structural flexibility and control resolution, and can reach ±0.02 mm to ±0.05 mm. The positioning error of the robot is therefore far smaller than the minimal gap when performing screw unfastening, so the robot positioning accuracy has little influence on screw–tool engagement.

After the camera view field adjustment, the characteristic circle and a screw are located in the same camera view field, as shown in Figure 10. This adjustment helps the subsequent camera pose adjustment.

(2) Camera orientation adjustment: During the camera pose adjustment step, the recognition and positioning of the characteristic circle and a target screw are first completed in the image coordinate system, as shown in Figure 10. The camera pose can be adjusted by the end-effector motion guided by a robot. For accurate adjustment, the motion of the robotic end effector is divided into three rotational motions about the A-axis, B-axis, and C-axis, as shown in Figure 4.

Particularly, when an image of the characteristic circle is taken by a camera under a certain imaging angle

, the circle edge will be an ellipse curve, as shown in the left scheme in

Figure 11. However, when

, the edge of the circle will be a circle, as shown in the right scheme in

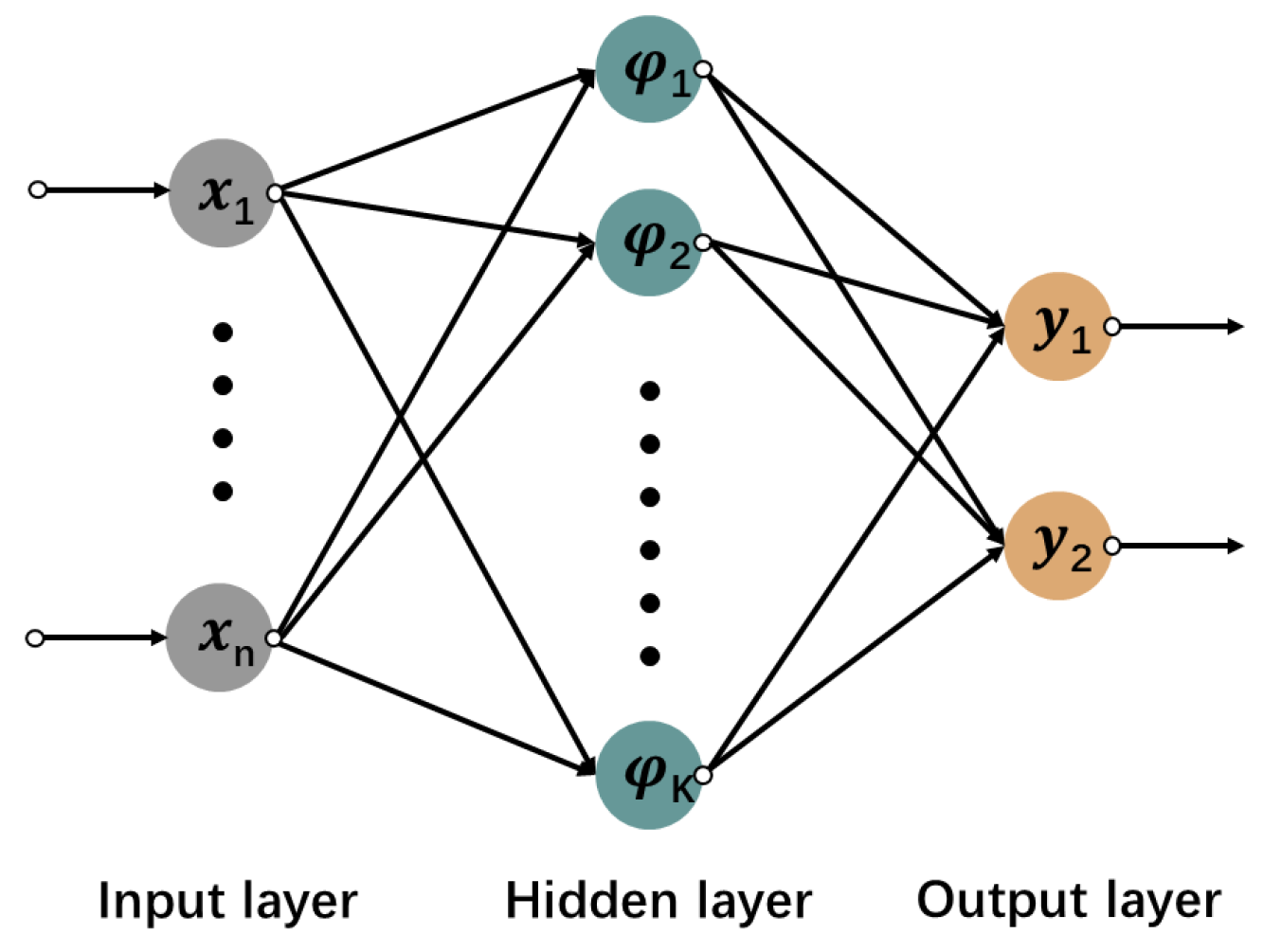

Figure 11. Therefore, this parameter is used for the camera orientation adjustment based on the robotic motion. In the proposed strategy, the rotational motions along the B-axis and C-axis of a robotic tool are planned to complete this adjustment by establishing the radial basis forward network (RBF) neural network [

28]. The RBF neural network has strong nonlinear mapping ability, fast computing speed, and good prediction ability. The RBF network is different from other neural networks, which has only one hidden layer [

28,

29]. The network is composed of an input layer, hidden layer and output layer, as shown in

Figure 12. The activation function of the hidden layer is a Gaussian function. The specific form of RBF neural network algorithm is

where x denotes the input of the network and y denotes its output; the remaining symbols denote the output of the hidden layer, the Gaussian basis center and the base width parameter of each hidden layer node, and the connection weight between each neuron of the hidden layer and the network output. Good convergence is a prerequisite for using the neural network. Therefore, the gradient descent method is used to adjust the centers, widths, and weights of the RBF network so as to speed up its convergence.
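As an illustration of the quantities defined above, the following is a minimal sketch of the forward pass of a Gaussian RBF network in its common textbook form. The names `centers`, `widths`, and `weights` and the single-output layout are assumptions made for this sketch; it is not the trained network of this study, and the gradient descent training step is omitted.

```python
import math


def rbf_forward(x, centers, widths, weights):
    """Forward pass of a single-output Gaussian RBF network (textbook form).

    x       : input vector (list of floats)
    centers : one center vector per hidden node (hypothetical name)
    widths  : one positive base width per hidden node (hypothetical name)
    weights : one hidden-to-output weight per hidden node (hypothetical name)
    Returns the network output y and the list of hidden-layer outputs.
    """
    hidden = []
    for c, b in zip(centers, widths):
        # Squared Euclidean distance between the input and this node's center.
        sq_dist = sum((xi - ci) ** 2 for xi, ci in zip(x, c))
        # Gaussian activation of the hidden node.
        hidden.append(math.exp(-sq_dist / (2.0 * b ** 2)))
    # The output is the weighted sum of the hidden-layer outputs.
    y = sum(w * h for w, h in zip(weights, hidden))
    return y, hidden
```

For a network with two outputs, such as two rotational angles, the same hidden layer can be shared and one weight vector used per output.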

The serial number, major axis, and minor axis of the characteristic circle are taken as the input, and the rotational angles about the B-axis and C-axis are taken as the output. In this paper, the number of hidden layers is one, and the final number of neurons is three. The experimental data (Table 3) used in this paper are from the real screw removal platform. From the data sample, 855 groups were randomly selected; 755 groups were used as the training set, and the remaining 100 groups were used as the prediction set. After network training and testing, linear regression analysis is conducted between the desired target and the actual output of the network model. The weight from the input layer to the hidden layer is

. The weight from the hidden layer to the output layer is

. As shown in

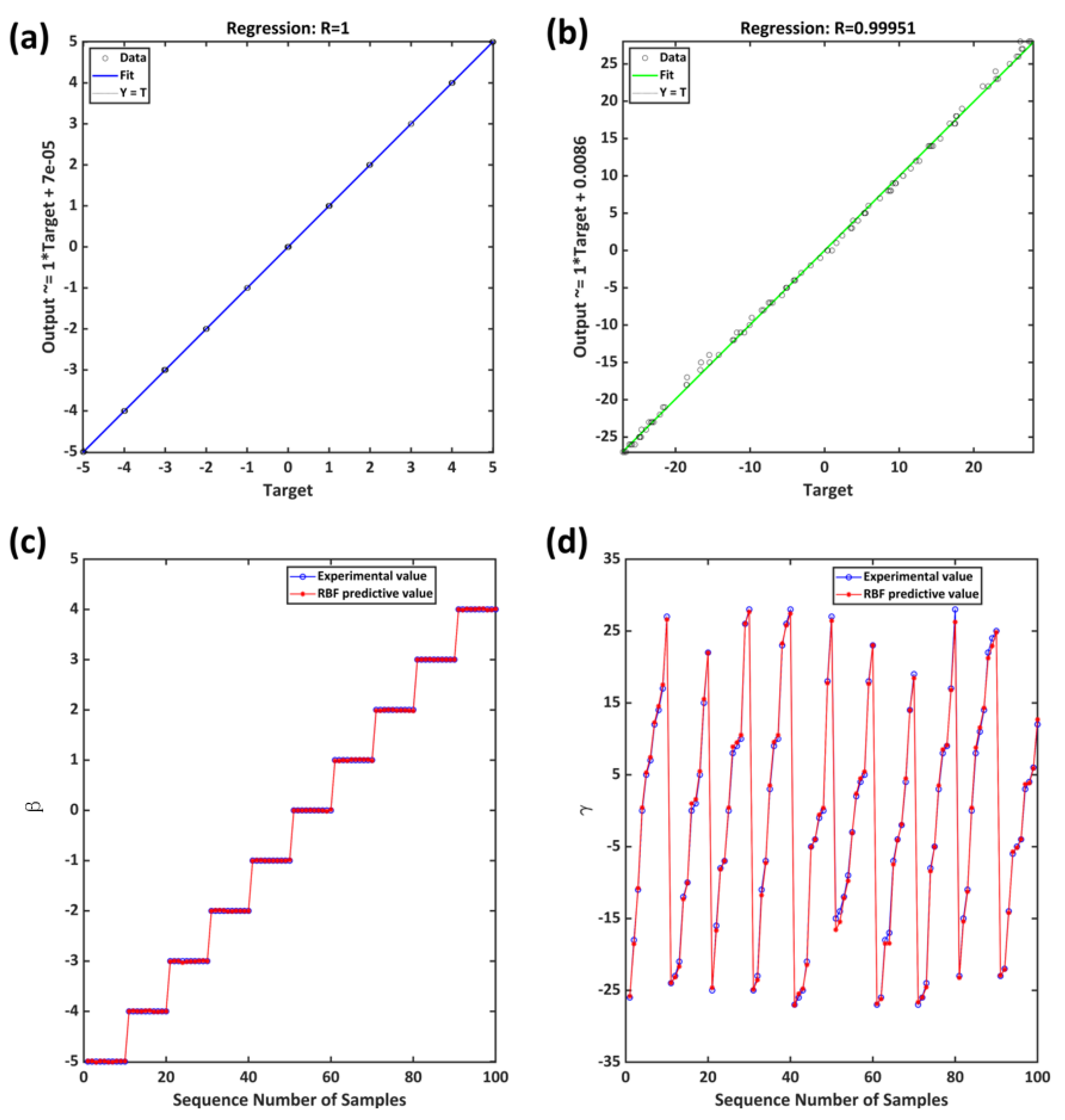

Figure 13a,b, the regression coefficient between the expected output and the actual output is very close to 1, which indicates that the network has a good prediction effect.

Figure 13c,d show the comparison between the predicted results and the experimental results. The maximum relative errors of

and

are 0.2% and 1.7%, the average absolute errors are 0.02 and 0.31. Based on the obtained neural network, values of

and

can be predicted. Then, the camera orientation adjustment can be completed.

(3) Camera position adjustment: A rough position of a screw relative to the characteristic circle is determined to adjust the camera position relative to the screw. In this step, the real distances travelled by the end effector in the two axial directions of an image can be obtained from the corresponding image distances and the pixel physical size. The current object distance is calculated based on the ratio of the real size to the image size of the characteristic circle. Then, this distance is used to adjust the camera shooting distance of a screw to achieve a high-quality image, which can be realized by moving the robotic end effector along the direction of the camera optical axis. Based on the above adjustments, a screw can appear in the image field of a camera completely and clearly, as shown in Figure 14, which helps the screw location calculation in the next step.

The robotic motions needed for the camera orientation and position adjustments can be obtained based on the elliptical feature of the characteristic circle image and the rough position of a screw relative to the characteristic circle in the image coordinate system. Therefore, a 3D model is not necessary for all screw disassembly tasks, although it can help to locate a screw that is difficult to capture in the same camera view as the characteristic circle. In the experimental tests of this study, all adjustment motions are performed based on the processing of an image that includes both the characteristic circle and the target screw.

2.2. Screw–Tool Engagement Method Based on Screw Pose Calculation

The screw–tool engagement includes two steps—the screw pose calculation and the engagement motion guided by a robot—which are presented in detail in the following.

(1) Screw pose calculation: The screw pose is calculated based on feature points of its pixel image, which can be extracted and located using HALCON [30]. The pose calculation accuracy directly determines the success rate and efficiency of the engagement operation.

In this work, a Canny filter [31] is used to extract the hexagonal edge of a target screw from the whole image. For this algorithm, the filter value, which is in the range of 1 to 200, has a crucial impact on image noise removal and affects the accuracy of the edge extraction. The filter value is chosen based on empirical experience obtained from previous image processing tests, which can be described as follows: the more elements an image contains, the larger the filter value should be, and the more calculations are required. In the experimental tests of this work, the images contained only screws and a background with a single feature, so the filter value was set to 20, which ensured the edge detection accuracy at an acceptable computing cost.

It should be noted that a proper filter value can be determined from empirical experience mainly because the structure is simple. However, in real applications, there are often complex assembly structures with bolted connections, so the filter value should be adjusted adaptively according to the structural features. Therefore, an adaptive image processing method for screws should be studied further to improve the effectiveness of the proposed control strategy.

After the edge extraction, all edges of an image are available, and the longest among them is selected as the edge of the hexagonal screw head. Edges of an image before and after the edge selection are shown in Figure 15. The length of the target edge is obtained as the maximum, over the index i of the edges extracted from the image, of the length calculation function applied to each edge.
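The longest-edge selection described above can be sketched as follows. This is a plain-Python illustration under the assumption that each extracted edge is available as an ordered list of pixel coordinates; the function names are hypothetical and do not correspond to HALCON operators.

```python
import math


def contour_length(points):
    """Length of an open polyline given as a list of (x, y) pixel points."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


def longest_contour(contours):
    """Return the contour with the greatest length among all extracted edges."""
    return max(contours, key=contour_length)
```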

The target edge is divided into several parts based on the point number of the edge contour. The maximum and minimum distances between the contour and the approximate line are set to 10 pixels and 5 pixels, respectively. As shown in Figure 16a, the set of lines and curves is used as an approximate contour, and this contour is further divided into sub-contours using the contour length as a feature. Finally, some of the curves are removed so that each side of the screw head retains at least one line, with the lower and upper limits of the contour length set to 100 pixels and 1000 pixels, respectively. The hexagon edges of the screw head obtained by the presented procedure are shown in Figure 16b.

The obtained sub-contours are linearly fitted using the ordinary least squares linear fitting operator of Tukey [32]. Applying the linear fitting operator gives the abscissa and ordinate of the starting point, as well as the abscissa and ordinate of the end point, of each fitted line in the pixel coordinate system. A line between a starting point and the corresponding end point can be described by a linear equation, and extending these lines forms the six sides of a hexagon. The function parameters of the i-th line are obtained from its starting and end points.

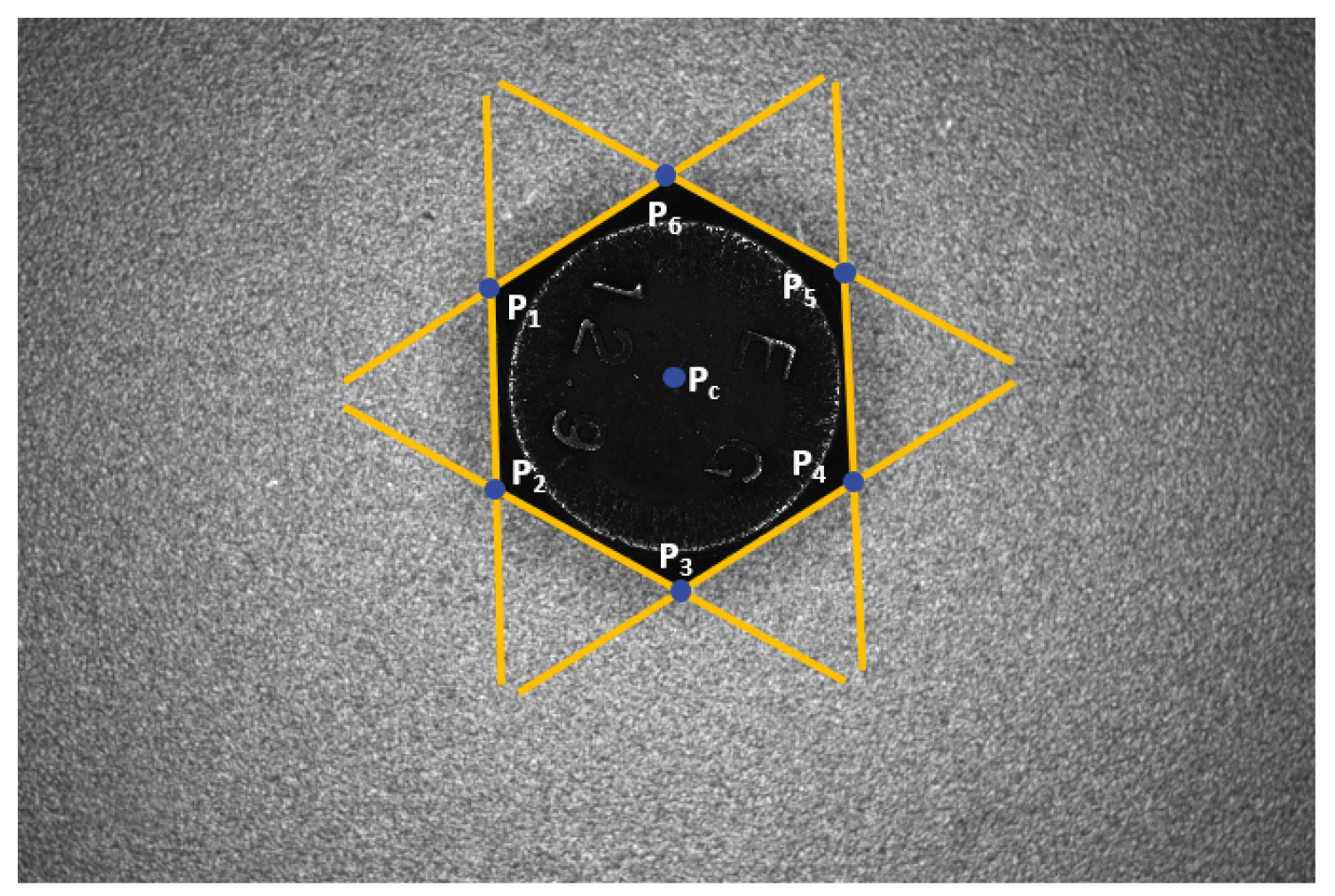

As shown in Figure 17, each intersection of the fitted lines and its 2D location can be obtained by Equations (3) and (4), based on which the hexagonal center can be determined. Based on the above-presented processing and calculations, the 2D location of a screw in the camera frame can be obtained. The relative relationships between the centers of the screw, tool frame, and camera frame are shown in Figure 18.
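The chain from fitted line segments to the hexagon center can be sketched as follows. Two points are assumptions of this sketch rather than statements of the method above: each line is written in the general form a·x + b·y = c so that vertical edges need no special case, and the center is taken as the mean of the six intersections of adjacent lines. All function names are hypothetical.

```python
def line_through(p, q):
    """Return (a, b, c) such that a*x + b*y = c for the line through p and q."""
    (x1, y1), (x2, y2) = p, q
    a = y2 - y1
    b = x1 - x2
    return a, b, a * x1 + b * y1


def intersect(l1, l2):
    """Intersection point of two lines given in (a, b, c) form (Cramer's rule)."""
    a1, b1, c1 = l1
    a2, b2, c2 = l2
    det = a1 * b2 - a2 * b1
    if abs(det) < 1e-12:
        raise ValueError("lines are parallel")
    return ((c1 * b2 - c2 * b1) / det, (a1 * c2 - a2 * c1) / det)


def hexagon_center(segments):
    """Estimate the hexagon center from six fitted segments.

    segments: six (start, end) point pairs, ordered around the hexagon.
    Returns the center (assumed here to be the mean of the six vertices)
    and the list of vertices obtained by intersecting adjacent lines.
    """
    lines = [line_through(p, q) for p, q in segments]
    vertices = [intersect(lines[i], lines[(i + 1) % 6]) for i in range(6)]
    cx = sum(v[0] for v in vertices) / 6.0
    cy = sum(v[1] for v in vertices) / 6.0
    return (cx, cy), vertices
```

Because only the extended lines are intersected, the segments do not need to reach the corners of the screw head, which matches the case where rounded corner curves have been removed from the contour.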

(2) Engagement motion guided by robot: In real situations, the bottom of a loose screw is usually not in tight contact with the top surface of a workpiece, which means that it is difficult to estimate the distance between the bottom of the robotic tool and the top of the screw precisely based only on the above calculation equations. To solve this problem, a distance estimation method is proposed to prevent the end effector from damaging a screw or the workpiece. In the proposed method, the length of a hexagonal side is used to represent the screw size, and its value in the image plane can be calculated as the average length of the six sides based on the 2D locations of the intersections. Then, the distance between the screw and the camera can be calculated according to the imaging principle of a monocular camera from the real length of one side and the camera focal length f.
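The monocular distance estimate can be illustrated with the standard pinhole relation, in which the object distance equals the focal length times the real size divided by the image size. The sketch below assumes that the side length is measured in pixels and converted to millimetres with the pixel physical size; the function names and the numeric values in the usage note are hypothetical.

```python
import math


def mean_side_length(vertices):
    """Average side length of a polygon given its ordered vertices (in pixels)."""
    n = len(vertices)
    return sum(math.dist(vertices[i], vertices[(i + 1) % n]) for i in range(n)) / n


def camera_to_screw_distance(real_side_mm, image_side_px, focal_length_mm, pixel_size_mm):
    """Pinhole-model distance between the camera and the screw head, in mm."""
    # Convert the measured side length from pixels to millimetres on the sensor.
    image_side_mm = image_side_px * pixel_size_mm
    # Similar triangles: distance / real size = focal length / image size.
    return focal_length_mm * real_side_mm / image_side_mm
```

For example, a side of 8 mm imaged at 400 pixels with a 16 mm lens and 0.005 mm pixels gives a distance of about 64 mm under this model.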

Then, the 3D location of a screw in the camera frame can be obtained. Based on the conversion matrix obtained from the hand-eye calibration of the monocular camera [33], the screw position in the tool frame can be calculated. Based on the screw position calculation result, the tool center can reach the position of the screw head. However, the six vertices of the screw may not be aligned with the corresponding points of the tool, and this alignment is significant for the screw–tool engagement, as shown in Figure 19. To achieve a perfect engagement of the screw head and the tool, an angular offset between the coordinate systems of the screw and tool is introduced and calculated based on two directional vectors, which can be determined by the location of the screw center and one intersection, and by the forward kinematics of the robot, respectively. Then, the screw can engage with the tool by rotating the tool through this offset about the tool axis. Based on the screw pose and the rotational angle, the joint angles of a robot can be calculated based on its inverse kinematics model, which was presented in detail by Xu [34].
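The angular offset between the screw head and the tool groove can be sketched as below. Because a regular hexagon repeats every 60°, the offset only needs to be known modulo 60°, so the sketch folds it into the range (-30°, 30°] to give the smallest rotation. The assumptions are that both directions are expressed in the same plane and frame, that angles are measured counter-clockwise, and that the tool vertex direction is already known (for instance from the forward kinematics); the function name is hypothetical.

```python
import math


def hex_angular_offset_deg(screw_center, screw_vertex, tool_vertex_dir_deg):
    """Smallest rotation (degrees) aligning a tool vertex with a screw vertex.

    screw_center, screw_vertex : 2D points of the screw head center and one vertex
    tool_vertex_dir_deg        : direction of one tool groove vertex, in degrees
    """
    # Direction from the screw center to one of its vertices.
    screw_dir = math.degrees(
        math.atan2(screw_vertex[1] - screw_center[1],
                   screw_vertex[0] - screw_center[0])
    )
    # Hexagonal symmetry: directions that differ by 60 degrees are equivalent.
    offset = (screw_dir - tool_vertex_dir_deg) % 60.0
    if offset > 30.0:
        offset -= 60.0
    return offset
```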

Finally, the screw–tool engagement is judged to be achieved by a condition that compares the axial distance the tool travels with the distance between the top of the screw and the bottom of the tool end in the same direction.

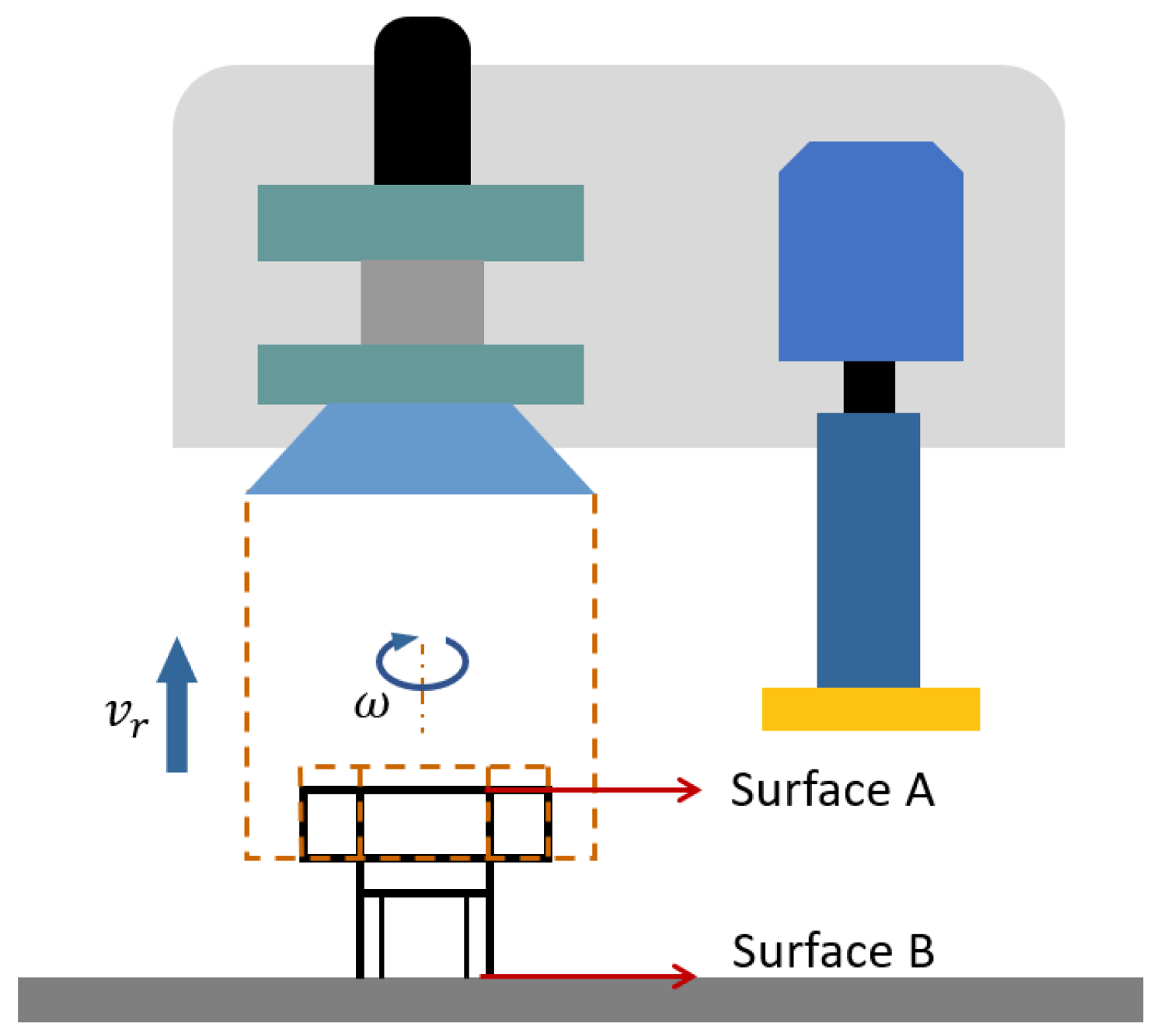

2.3. Screw Unfastening Method Based on Cooperated Motion

As shown in Figure 20, the unfastening motion is realized through the cooperation of the rotational motion of the unfastening tool and the robotic motion along the axial direction of a screw. For the cooperated motion, the angular velocity w of the unfastening tool and the linear velocity of the robotic end effector should be set properly. If the linear velocity is much higher than the normal speed of a screw, the screw will break away from the tool before the screw leaves the hole. In contrast, if the linear velocity is too small, there can be two negative effects. First, the spinning of the hexagonal screw may seriously damage the hole. Second, a normal force F can be generated between the tool and the workpiece, which can severely damage the thread surface quality of a workpiece. In this work, the values of w and the linear velocity are set based on Equation (8), which helps to improve the motion stability. Using Equation (8), the cooperated motion of a robot and the unfastening tool is planned based on the screw pitch, which is determined according to the national standard GB/T 5783-2000 and the specific type of screw. Therefore, in the proposed strategy, the screw type needs to be known in advance. Additionally, the proposed system is applicable only to screws of the same size since the end size of the unfastening tool is fixed.

In Equation (8), P denotes the pitch of a screw.
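A minimal sketch of the velocity matching is given below. It assumes the usual screw kinematics, in which a screw advances one pitch P per full revolution, so that the axial speed equals the angular velocity times P divided by 2π. Whether Equation (8) has exactly this form is an assumption, and the function name and units are hypothetical.

```python
import math


def matched_axial_speed(omega_rad_s, pitch_mm):
    """Axial speed (mm/s) at which a screw of the given pitch advances
    when rotated at omega_rad_s (rad/s): one pitch per revolution."""
    return omega_rad_s * pitch_mm / (2.0 * math.pi)
```

For example, a tool turning at one revolution per second on a screw with a 1.25 mm pitch would call for an end-effector speed of about 1.25 mm/s.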

It should be noted that if a target structure includes different types of threaded components, disassembly can be realized using a combination of the two following techniques: fuzzy decision, which is introduced for the adaptive determination of the screw pitch according to the vision measurement result and applicable conditions, and an advanced unfastening tool, which is designed to be applicable to screws with a wide size range.

The rotational angle of the unfastening tool can be determined based on the distance from surface A to surface B, which can be estimated from the two heights shown in Figure 18. The length of the engaged thread can then be calculated from this distance and the total length L of a screw. To ensure the complete removal of the screw, the unfastening angle of the tool is determined by adding a safety rotational angle to the angle given by the cooperated motion planning of the robot and the unfastening tool. This safety angle aims to ensure complete screw disassembly. In this work, its value is set to 960 deg, which means the unfastening tool will rotate nearly three more turns (960/360 ≈ 2.67) after the cooperated motion. Based on the experimental test results, the disassembly reliability can be improved by this strategy.
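The unfastening angle can be illustrated with a short calculation. The sketch assumes that the planned angle is one full turn (360°) per pitch of engaged thread, to which the safety angle is added; the engaged thread length is taken as a given input, and the function name and example values are hypothetical.

```python
def unfastening_angle_deg(engaged_length_mm, pitch_mm, safety_angle_deg=960.0):
    """Total tool rotation (degrees) needed to remove a screw.

    engaged_length_mm : length of thread still engaged in the hole
    pitch_mm          : screw pitch
    safety_angle_deg  : extra rotation added to ensure complete removal
    """
    # One full turn releases one pitch of engaged thread.
    planned_angle = 360.0 * engaged_length_mm / pitch_mm
    return planned_angle + safety_angle_deg
```

For example, 10 mm of engaged thread with a 1.25 mm pitch needs 8 turns (2880°), giving 3840° in total with the 960° safety angle.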

The rotational direction for disassembling a screw is determined by the thread direction, which can be left-handed or right-handed. In most applications, all screws have the same thread direction. Most industrial applications use right-handed screws, which are also used in this work.