1. Introduction

Quadrupeds excel at high-speed running, load carrying, and traversing difficult terrain. For example, cheetahs’ sprints have been measured at a maximum of , and they routinely reach velocities of while pursuing prey. Goliath frogs can jump up to high, although their body length is only 17 to 32 cm. Blue sheep usually stay near cliffs, ready to flee up rugged slopes to avoid danger. Camels, known as the “ships of the desert”, can carry hundreds of kilograms of cargo on long trips through harsh desert environments. These striking abilities have inspired researchers’ enthusiasm for bionic quadruped robots, in the hope that such robots may one day surpass animals in movement skills and perform tasks in challenging environments. These visions place higher demands on the performance of robot controllers.

Half a century of legged-robot development has produced many excellent quadruped robots. The focus of controller design has shifted from static position planning [1] to highly dynamic optimization [2,3]. Among the many optimization-based methods, Model Predictive Control (MPC) has emerged as one of the most widely used control algorithms in robotics.

MPC has three main elements [4]: the predictive model, the reference trajectory, and the control algorithm. These are now more commonly stated as model-based prediction, receding horizon optimization, and feedback correction. The literature consistently identifies the greatest attraction of predictive control as its ability to handle constraints on control inputs and states explicitly. This ability comes from predicting the future dynamic behavior of the controlled plant with an analytical model, which allows constraints to be imposed on future inputs, outputs, and states. Receding horizon optimization, in which the problem is re-solved at every step from the latest measured state, allows the system to respond quickly to uncertainty arising from the system itself or from the external environment.
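The receding-horizon principle can be illustrated with a minimal sketch. It is not the controller used in this work: it applies linear MPC to a one-dimensional double integrator (position and velocity) with a box constraint on the input, and it solves the condensed quadratic program by projected gradient descent only to stay self-contained. The horizon, weights, and solver are illustrative assumptions; a practical controller would use a dedicated QP solver.

```python
import numpy as np

def condensed_matrices(A, B, N):
    """Stack the predictions X = Sx @ x0 + Su @ U of x_{k+1} = A x_k + B u_k over N steps."""
    nx, nu = B.shape
    Sx = np.zeros((N * nx, nx))
    Su = np.zeros((N * nx, N * nu))
    for k in range(N):
        Sx[k * nx:(k + 1) * nx] = np.linalg.matrix_power(A, k + 1)
        for j in range(k + 1):
            # Influence of input u_j on state x_{k+1}: A^(k-j) B
            Su[k * nx:(k + 1) * nx, j * nu:(j + 1) * nu] = np.linalg.matrix_power(A, k - j) @ B
    return Sx, Su

def solve_mpc_qp(x0, X_ref, Sx, Su, Q, R, u_max, iters=500):
    """Minimize (X - X_ref)^T Q (X - X_ref) + U^T R U subject to |u| <= u_max."""
    H = Su.T @ Q @ Su + R                          # Hessian (up to a factor of 2)
    g = Su.T @ Q @ (Sx @ x0 - X_ref)               # linear term (up to a factor of 2)
    step = 1.0 / np.linalg.eigvalsh(H).max()       # step 1/L converges for a convex QP
    U = np.zeros(H.shape[0])
    for _ in range(iters):
        U = np.clip(U - step * (H @ U + g), -u_max, u_max)  # gradient step, then project onto the box
    return U

dt, N = 0.05, 20
A = np.array([[1.0, dt], [0.0, 1.0]])              # state: [position, velocity]
B = np.array([[0.5 * dt**2], [dt]])                # input: acceleration
Sx, Su = condensed_matrices(A, B, N)
Q = np.kron(np.eye(N), np.diag([10.0, 1.0]))       # position / velocity weights at every step
R = 0.1 * np.eye(N)
X_ref = np.tile([1.0, 0.0], N)                     # move to position 1 and stop

x = np.zeros(2)
applied = []
for _ in range(200):                               # 10 s of closed-loop simulation
    U = solve_mpc_qp(x, X_ref, Sx, Su, Q, R, u_max=1.0)
    u = U[:1]                                      # receding horizon: apply only the first input
    applied.append(float(u[0]))
    x = A @ x + B @ u                              # plant update, then re-plan from the new state
```

Only the first input of each optimized sequence is applied; the rest is discarded and the problem is re-solved from the newly measured state, which is what gives MPC its feedback character while the input constraint is respected at every step.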

Since the MIT Biomimetic Robotics Lab open-sourced Cheetah-Software [5], MPC has become a baseline method for quadruped locomotion control. Bledt proposed policy-regularized MPC [6] to stabilize different gaits with several heuristic reference policies. Ding presented a representation-free MPC framework [7] that represents orientation directly with the rotation matrix and can stabilize dynamic motions that involve the singularities of Euler-angle representations. Liu proposed a design approach for gait parameters that minimizes energy consumption [8]. Chang presented the Proportional Differential MPC (PDMPC) controller [9], which can compensate for unmodeled leg mass or payload. Although MPC has made significant progress in legged-robot control, it still faces many challenges: a precise predictive model is difficult to establish, a simplified model introduces model mismatch, and a controller with fixed parameters generalizes poorly.

In recent years, with the revival of artificial intelligence, autonomous learning control for robots has become a research highlight. Kolter [10] learned parameters through policy search based on fixed strategies and enabled Boston Dynamics’ LittleDog to jump from the ground onto obstacles. Haarnoja [11] developed a variant of the soft actor-critic algorithm to achieve level walking on a quadruped robot with only 8 degrees of freedom (DOF). Yao proposed a video imitation adaptation network [12] that imitates animal motions from a few seconds of video and adapts them to the robot. Hwangbo applied model-free reinforcement learning to ANYmal and achieved omnidirectional motion on flat ground as well as fall recovery; the trained controller follows commanded velocities well in any direction. To transfer the simulation results to the real robot, domain randomization, actuator modeling, and random noise during training were used [13]. Additionally, a two-stage training process, in which a student policy reconstructs latent information that is not directly observable (such as contact states, terrain shape, and external disturbances), enabled a robot to traverse various complex terrains using only proprioceptive information, without vision or other environmental information [14]. However, reinforcement learning requires a large number of training samples, end-to-end control policies lack interpretability, and the curse of dimensionality and the curse of goal specification further challenge its use [15]. More importantly, conventional model-based control methods embody the accumulated insight of human researchers and should not be discarded lightly.

This work extends our previous work [9]. We propose a novel approach that combines the advantages of model predictive control and reinforcement learning. PDMPC, with its dozen or so tunable parameters, is treated as a parametric controller that provides stable control for generating samples, while reinforcement learning trains a policy network to modify these parameters online. Compared with the fixed-parameter controller, the learned controller achieves better command tracking and equilibrium stability.

The rest of this paper is organized as follows. Section 2 briefly presents MPC with a Proportional Differential (PD) compensator. Section 3 details the reinforcement learning framework for PDMPC. Section 4 describes the simulation setup and results. Finally, Section 5 concludes the paper.

2. PDMPC Formulation

Our quadruped robot is the Unitree A1, shown in Figure 1. It has four elbow-like legs, each with three degrees of freedom, called hip side, hip front, and knee in order of attachment. The first two joints are directly driven, while the knee joint is driven through a connecting rod. This design concentrates most of the mass in the body.

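To reduce the computational cost and the difficulty of solving the optimization problem, the dynamic model of the quadruped robot used for prediction is simplified to a single rigid body with four massless, variable-length legs:

$$m\ddot{\mathbf{p}} = \sum_{i=1}^{4}\mathbf{f}_i + m\mathbf{g}$$

$$\mathbf{I}\dot{\boldsymbol{\omega}} + \boldsymbol{\omega}\times(\mathbf{I}\boldsymbol{\omega}) = \sum_{i=1}^{4}\mathbf{r}_i\times\mathbf{f}_i$$

where $m$ and $\mathbf{I}$ are the mass and the body inertia matrix, $\mathbf{p}$ and $\boldsymbol{\omega}$ are the position and angular velocity of the body, $\mathbf{g}$ is the gravitational acceleration vector, and $\mathbf{f}_i$ and $\mathbf{r}_i$ are the reaction force at foot $i$ and the position vector from the center of mass (CoM) to foot $i$, both expressed in the world coordinate system. The term $\boldsymbol{\omega}\times(\mathbf{I}\boldsymbol{\omega})$ is neglected under the assumption that the angular velocity is small during locomotion.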
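A minimal sketch of the simplified model evaluates the body accelerations for given stance forces. The function name, the world-frame inertia argument, and the numerical values in the usage lines (mass, inertia, foot positions) are hypothetical, and swing legs are simply left out of the force list.

```python
import numpy as np

def srb_accelerations(m, I_world, forces, foot_vectors, g=(0.0, 0.0, -9.81)):
    """Single-rigid-body model with the omega x (I omega) term neglected.

    forces:       (n, 3) ground reaction forces f_i of the stance feet, world frame
    foot_vectors: (n, 3) vectors r_i from the CoM to each stance foot, world frame
    """
    forces = np.asarray(forces, dtype=float)
    foot_vectors = np.asarray(foot_vectors, dtype=float)
    p_ddot = forces.sum(axis=0) / m + np.asarray(g)        # m p_ddot = sum f_i + m g
    moment = np.cross(foot_vectors, forces).sum(axis=0)     # sum r_i x f_i
    omega_dot = np.linalg.solve(I_world, moment)            # I omega_dot = sum r_i x f_i
    return p_ddot, omega_dot

# Hypothetical example: four feet sharing the body weight equally
m = 12.0
I_world = np.diag([0.07, 0.26, 0.24])
feet = [[0.18, 0.13, -0.3], [0.18, -0.13, -0.3], [-0.18, 0.13, -0.3], [-0.18, -0.13, -0.3]]
f = [[0.0, 0.0, m * 9.81 / 4]] * 4
p_ddot, omega_dot = srb_accelerations(m, I_world, f, feet)
```

With the weight split evenly over a symmetric stance, both the linear and angular accelerations vanish, which is a quick consistency check of the two equations.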

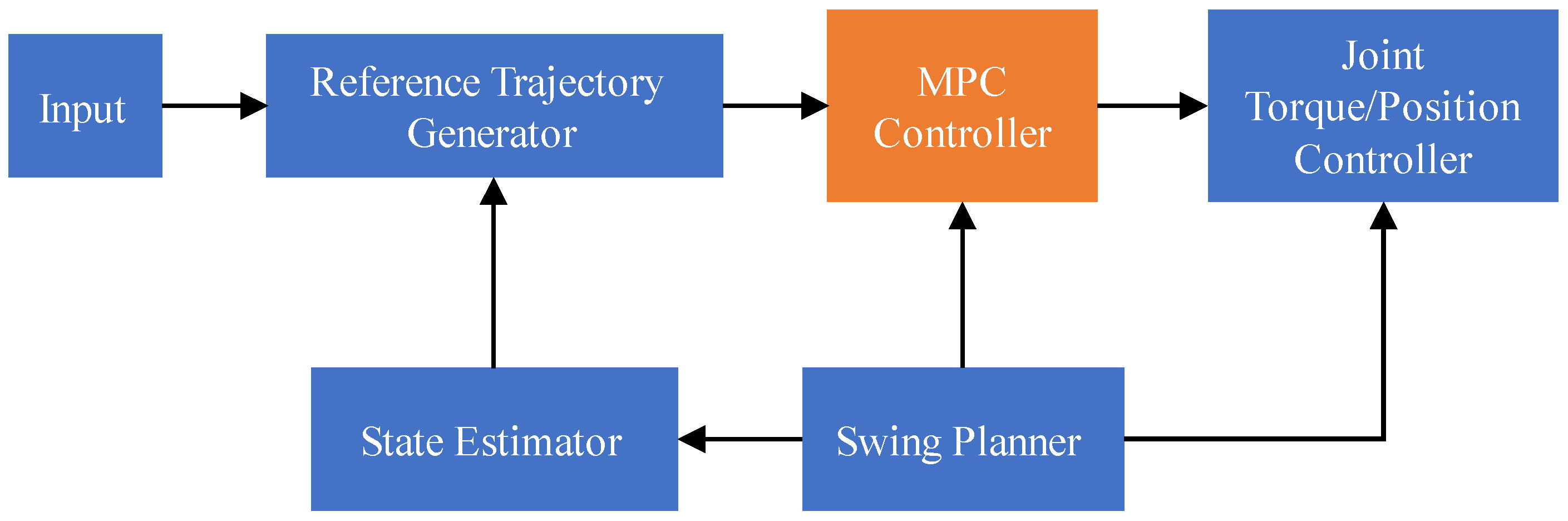

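The control framework of MPC is shown in Figure 2. The estimated states of the quadruped robot are its 6-DOF pose and the corresponding 6-DOF velocity, and the inputs are the gait pattern, desired speed, and attitude angle. The reference trajectory generator plans a desired path within the prediction horizon based on the user inputs and the current state; the swing planner schedules the legs’ phases and foot trajectories; the MPC controller outputs the desired ground reaction force $\mathbf{f}$; and, finally, the low-level joint torque/position controller executes the control commands to drive the robot:

$$\boldsymbol{\tau} = -\mathbf{J}^{T}\mathbf{R}^{T}\mathbf{f} + \mathbf{K}_p(\mathbf{q}_{des}-\mathbf{q}) + \mathbf{K}_d(\dot{\mathbf{q}}_{des}-\dot{\mathbf{q}})$$

where $\boldsymbol{\tau}$ is the joint torque vector, $\mathbf{R}$ is the rotation matrix of the body, and the leg Jacobian matrix $\mathbf{J}$ maps forces in operational space to torques in joint space; the minus sign reflects that the leg must push on the ground with $-\mathbf{f}$ to receive the reaction force $\mathbf{f}$. $\mathbf{q}$ and $\dot{\mathbf{q}}$ are the measured joint positions and velocities, $\mathbf{q}_{des}$ and $\dot{\mathbf{q}}_{des}$ are the desired joint trajectory, and $\mathbf{K}_p$ and $\mathbf{K}_d$ are the gains of the PD controller.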
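The torque mapping for a single leg can be sketched as follows, assuming that $\mathbf{J}$ is expressed in the body frame and $\mathbf{R}$ maps body to world coordinates. The identity Jacobian, gains, and joint angles in the usage lines are placeholders, not Unitree A1 values.

```python
import numpy as np

def leg_joint_torque(J, R, f, q_des, q, qd_des, qd, Kp, Kd):
    """Joint torques of one leg: tau = -J^T R^T f + Kp (q_des - q) + Kd (qd_des - qd).

    J: 3x3 leg Jacobian in the body frame, R: body-to-world rotation,
    f: desired ground reaction force in the world frame (zero for a swing leg).
    """
    f_body = R.T @ f                  # express the world-frame force in the body frame
    tau_ff = -J.T @ f_body            # the foot pushes on the ground with -f
    tau_fb = Kp @ (q_des - q) + Kd @ (qd_des - qd)
    return tau_ff + tau_fb

I3 = np.eye(3)
zero = np.zeros(3)
# Stance leg: feedforward force only (no joint tracking error)
tau_stance = leg_joint_torque(I3, I3, np.array([0.0, 0.0, 30.0]), zero, zero, zero, zero, 20 * I3, 0.5 * I3)
# Swing leg: f = 0, so only the PD term acts
tau_swing = leg_joint_torque(I3, I3, zero, np.array([0.0, 0.8, -1.6]), np.array([0.0, 0.7, -1.5]),
                             zero, zero, 20 * I3, 0.5 * I3)
```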

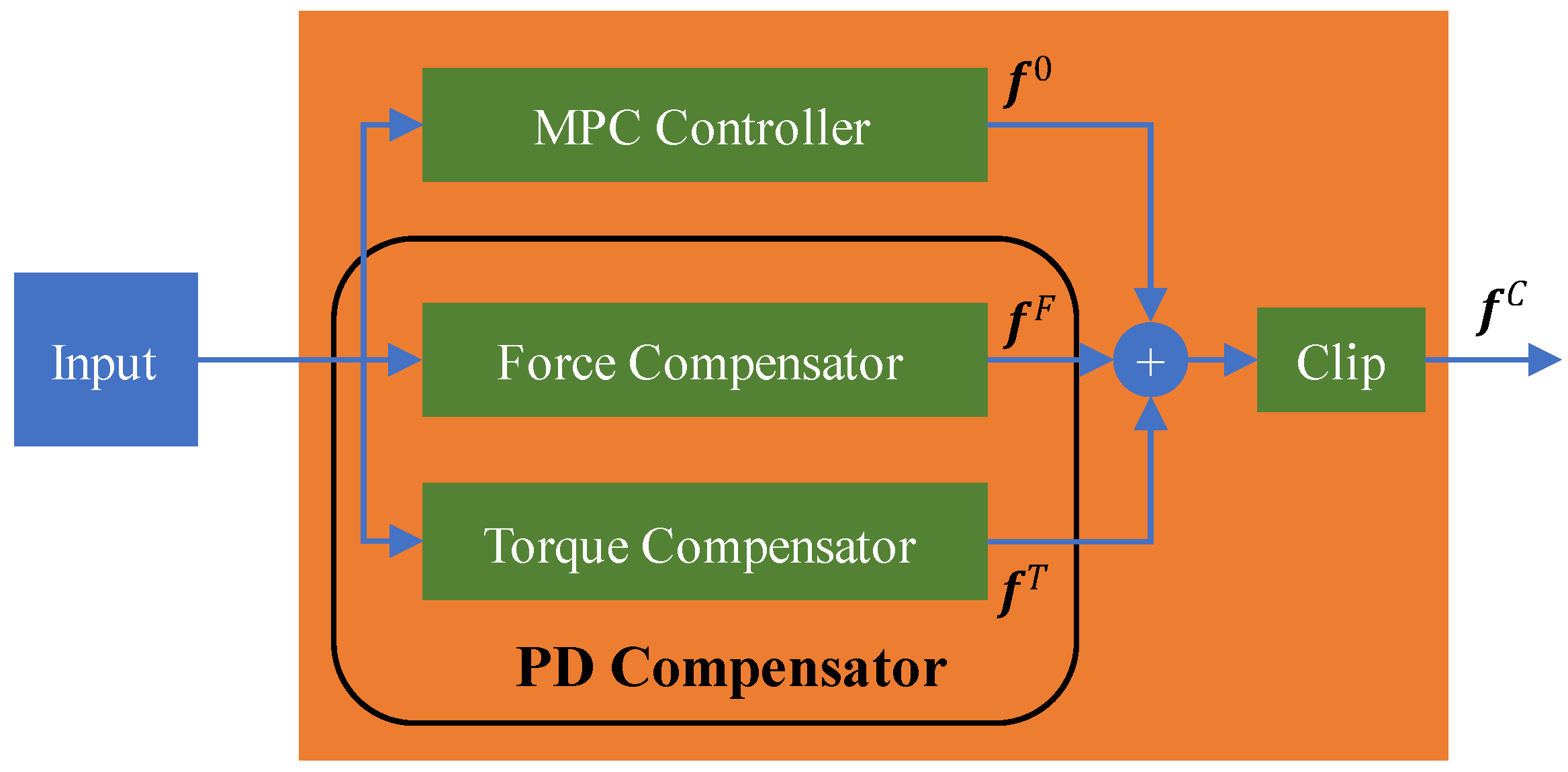

Because of the above simplification, model mismatch inevitably occurs, especially when the leg mass is large or the dynamic parameters are estimated inaccurately, and conventional MPC cannot handle this uncertainty effectively. PDMPC is designed to address this problem; its structure is shown in Figure 3.

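Based on the simplified model in Equations (1) and (2), the MPC controller obtains the desired force $\mathbf{f}$ of the stance legs by optimizing over this linear model. In addition, we propose a PD compensator to compensate for model uncertainty. The compensator is divided into a force part and a torque part:

$$\mathbf{F}_c = \mathbf{K}_{p,F}(\mathbf{p}_{des}-\mathbf{p}) + \mathbf{K}_{d,F}(\dot{\mathbf{p}}_{des}-\dot{\mathbf{p}})$$

$$\boldsymbol{\tau}_c = \mathbf{K}_{p,\tau}(\boldsymbol{\Theta}_{des}-\boldsymbol{\Theta}) + \mathbf{K}_{d,\tau}(\boldsymbol{\omega}_{des}-\boldsymbol{\omega})$$

where $\boldsymbol{\Theta}$ is the vector of Euler angles, $\mathbf{F}_c$ and $\boldsymbol{\tau}_c$ are the additional virtual force and torque acting on the body, and $\mathbf{K}_{p,F}$, $\mathbf{K}_{d,F}$, $\mathbf{K}_{p,\tau}$, and $\mathbf{K}_{d,\tau}$ are diagonal gain matrices of corresponding dimensions.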
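The compensator reduces to element-wise products when the diagonal gains are stored as vectors. In this sketch the gain layout is a hypothetical one that matches the nine learned coefficients listed in Section 3.1.2 (height P and D, horizontal velocity D, roll and pitch P and D, yaw-rate D), and all numerical values are placeholders.

```python
import numpy as np

def pd_compensator(p_des, p, v_des, v, theta_des, theta, w_des, w, k):
    """Virtual body force F_c and torque tau_c from diagonal PD gains stored as vectors."""
    F_c = k["kp_F"] * (p_des - p) + k["kd_F"] * (v_des - v)
    tau_c = k["kp_tau"] * (theta_des - theta) + k["kd_tau"] * (w_des - w)
    return F_c, tau_c

# Hypothetical gain layout: zeros mark terms that are not compensated
k = {
    "kp_F": np.array([0.0, 0.0, 500.0]),     # only height has a position term
    "kd_F": np.array([40.0, 40.0, 60.0]),
    "kp_tau": np.array([80.0, 80.0, 0.0]),   # roll and pitch, no yaw angle term
    "kd_tau": np.array([5.0, 5.0, 5.0]),
}
z3 = np.zeros(3)
# Body 2 cm below its desired height, everything else on target
F_c, tau_c = pd_compensator(np.array([0.0, 0.0, 0.30]), np.array([0.0, 0.0, 0.28]), z3, z3,
                            z3, z3, z3, z3, k)
```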

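The force compensator strengthens the tracking of linear motion. To distribute the virtual force reasonably among the stance legs, we formulate the following optimization problem:

$$\min_{\Delta\mathbf{f}}\ \|\mathbf{A}\,\Delta\mathbf{f}\|^2 + \alpha\|\Delta\mathbf{f}\|^2 \quad \text{s.t.}\quad \mathbf{C}\,\Delta\mathbf{f} = \mathbf{F}_c,\quad \underline{\mathbf{f}} \le \Delta\mathbf{f} \le \overline{\mathbf{f}}$$

where $\mathbf{A} = [\,[\mathbf{r}_1]_\times\ \cdots\ [\mathbf{r}_n]_\times\,]$ stacks the cross-product matrices $[\mathbf{r}]_\times$ of the $n$ stance legs, and $\alpha$ is a regulatory factor that adjusts how uniformly the foot force is distributed. The first term of the objective drives the resultant moment as close to zero as possible, and the second term reduces effort. $\mathbf{C} = [\,\mathbf{I}_3\ \cdots\ \mathbf{I}_3\,]$ sums the stance-leg forces, so the equality constraint requires them to add up to the virtual force. $\underline{\mathbf{f}}$ and $\overline{\mathbf{f}}$ are the lower and upper bounds of the force. Solving this convex quadratic program yields the additional foot force $\Delta\mathbf{f}$. Similarly, the torque compensator generates additional foot forces for rotational motion.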
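The force-distribution problem can be sketched for the equality-constrained case by solving its KKT system directly. This sketch drops the bound constraints; when bounds become active, a QP solver is needed (the platform described in Section 4 integrates qpOASES). The function names, the value of `alpha`, and the foot positions are hypothetical.

```python
import numpy as np

def skew(r):
    """Cross-product matrix [r]x such that skew(r) @ f == np.cross(r, f)."""
    x, y, z = r
    return np.array([[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]])

def distribute_virtual_force(F_c, foot_vectors, alpha=1e-3):
    """min ||A df||^2 + alpha ||df||^2  s.t.  sum_i df_i = F_c  (bounds omitted)."""
    n = len(foot_vectors)
    A = np.hstack([skew(r) for r in foot_vectors])   # 3 x 3n: resultant moment
    C = np.hstack([np.eye(3)] * n)                   # 3 x 3n: resultant force
    H = 2.0 * (A.T @ A + alpha * np.eye(3 * n))      # Hessian of the objective
    K = np.block([[H, C.T], [C, np.zeros((3, 3))]])  # KKT matrix
    rhs = np.concatenate([np.zeros(3 * n), F_c])
    sol = np.linalg.solve(K, rhs)                    # [df; lagrange multipliers]
    return sol[:3 * n].reshape(n, 3)

# Diagonal pair of stance feet during trotting (hypothetical positions relative to the CoM)
feet = [np.array([0.18, 0.13, -0.3]), np.array([-0.18, -0.13, -0.3])]
F_c = np.array([0.0, 0.0, 20.0])
df = distribute_virtual_force(F_c, feet)
```

For two diagonal feet placed symmetrically about the CoM, splitting the vertical virtual force equally yields zero resultant moment and the smallest force norm, which is the solution the sketch returns.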

Finally, taking into account the joint torque limits and the friction constraints, a clipper adjusts the desired ground reaction force so that it is physically feasible.
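One simple way to realize such a clipper is sketched below. It is an assumption rather than the clipper used here: the vertical force is limited to a box (a crude stand-in for the joint-torque limit) and the horizontal components are limited by a friction pyramid. The friction coefficient and force limits are hypothetical.

```python
import numpy as np

def clip_grf(f, mu=0.6, fz_min=0.0, fz_max=150.0):
    """Clip a desired GRF: box limit on f_z, then friction pyramid |f_x|, |f_y| <= mu * f_z."""
    fx, fy, fz = f
    fz = float(np.clip(fz, fz_min, fz_max))   # the ground can only push, up to a maximum
    lim = mu * fz
    return np.array([np.clip(fx, -lim, lim), np.clip(fy, -lim, lim), fz])

f_ok = clip_grf([50.0, -10.0, 40.0])          # f_x exceeds the friction limit and is reduced
f_pull = clip_grf([5.0, 5.0, -10.0])          # pulling on the ground is infeasible
```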

3. Reinforcement Learning for PDMPC

Controllers with fixed parameters struggle to adapt the robot to different motion states. For example, the robot’s gait cycle should differ at different speeds, so the parameters need to change adaptively. Manually tuning a control framework with many parameters is laborious and time-consuming, and the tuned parameters do not always achieve the intended goals.

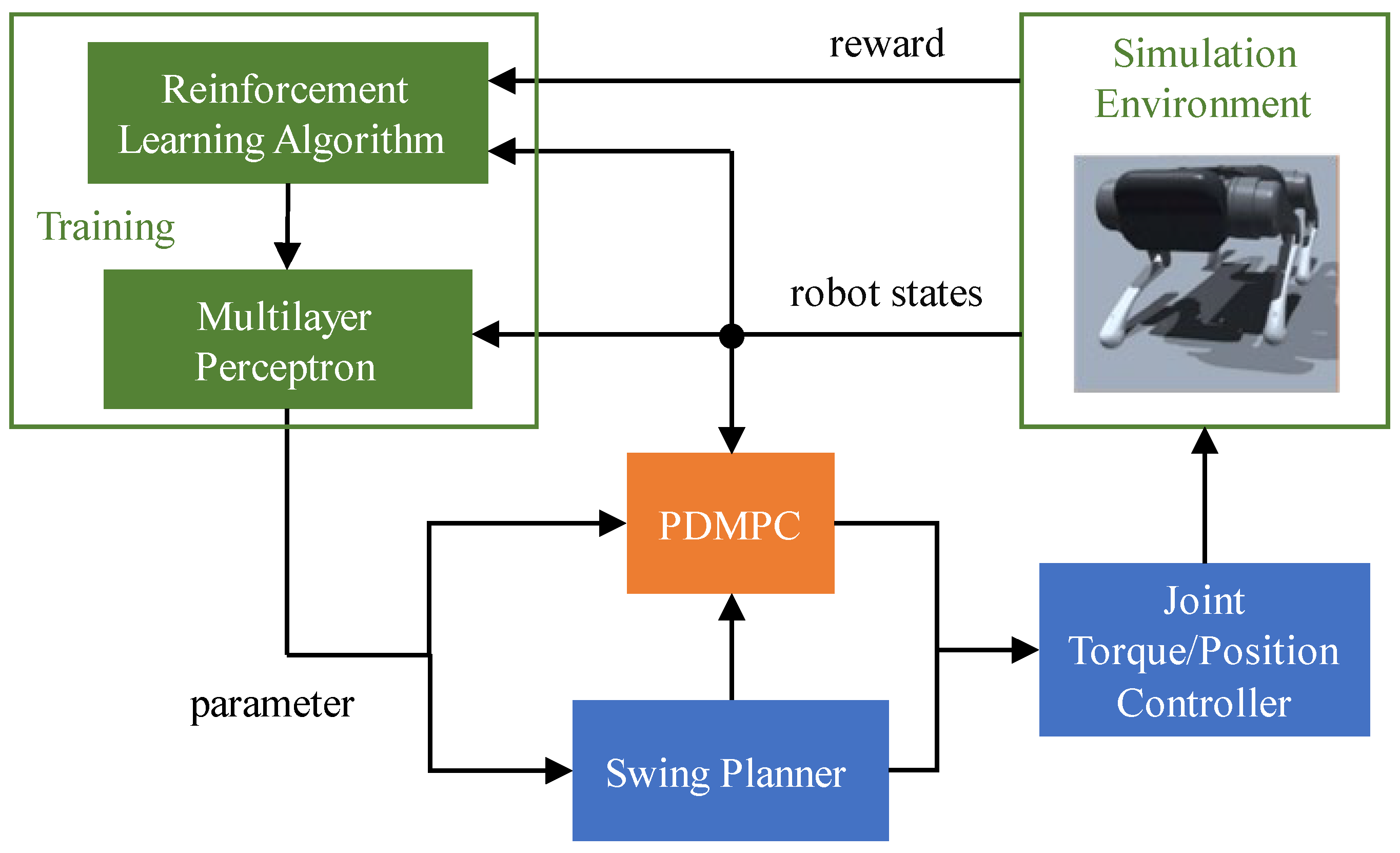

In this section, reinforcement learning is used to establish the relationship between the robot states and the controller parameters, so that the many parameters are adjusted automatically. Figure 4 shows the framework of reinforcement learning based on PDMPC.

We use the open-source PPO algorithm [16] to train the policy from the states and rewards provided by the simulation environment. The action policy network is a multilayer perceptron (MLP) that receives the current robot states and outputs the parameters for the PDMPC controller and the swing planner. Given the current parameters, the swing planner determines the gait frequency and the target positions of the swing legs, and the PDMPC controller determines the desired ground reaction forces for the stance legs. Finally, the joint controller executes the joint commands.

3.1. Parameters to Be Learned

3.1.1. Swing Planner

The swing planner selects the gait pattern and determines the phase relationship and lift-off schedule. The duty cycle is 0.5, and the resulting schedule is passed to the PDMPC controller. The foot position vector $\mathbf{p}_f$ in the world coordinate system is as follows.

where $\mathbf{p}_0$ is the initial foot position vector at the lift-off event. The foot trajectory is determined by the single-inverted-pendulum heuristic: $T_{st}$ is the duration of the support period, and $k_x$ and $k_y$ are the heuristic coefficients for the two horizontal motion directions (normally set to 0.5). $h$ is the maximum height of the foot in the vertical direction during the swing phase. $\phi$ is the gait phase variable, and $s(\phi)$ is an indicator function that equals 1 during the swing period and 0 during the support period.

Thus, the swing planner has 7 parameters to be learned: the lift heights of the four legs ($h_1$, $h_2$, $h_3$, and $h_4$), the two heuristic coefficients $k_x$ and $k_y$, and the half support duration $T_{st}/2$.
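Because the exact foot-position equation is not reproduced in this text, the following is only an assumed form that shows where the seven learned parameters enter: a Raibert-style touchdown point (hip position plus $k \cdot T_{st} \cdot v$ in each horizontal direction, so $k = 0.5$ places the foot half a stance ahead), a smooth horizontal blend from the lift-off point, and a sinusoidal lift profile with peak height $h$. All names and numbers are hypothetical.

```python
import numpy as np

def touchdown_xy(hip_xy, v_xy, T_st, k=(0.5, 0.5)):
    """Assumed foot placement: hip position plus k * T_st * body velocity (per horizontal axis)."""
    return np.asarray(hip_xy, dtype=float) + np.asarray(k) * T_st * np.asarray(v_xy, dtype=float)

def swing_foot(p0, p_td_xy, phi, h, swing=True):
    """Foot position at phase phi in [0, 1]; swing=False plays the role of s(phi) = 0."""
    p0 = np.asarray(p0, dtype=float)
    if not swing:                                  # support period: the foot stays where it is
        return p0.copy()
    blend = 0.5 * (1.0 - np.cos(np.pi * phi))      # smooth 0 -> 1 horizontal interpolation
    xy = p0[:2] + blend * (np.asarray(p_td_xy, dtype=float) - p0[:2])
    z = p0[2] + h * np.sin(np.pi * phi)            # rises to h at mid-swing, back down at touchdown
    return np.array([xy[0], xy[1], z])

# Hypothetical values: T_st is twice the learned half support duration, h is one learned lift height
target = touchdown_xy([0.18, 0.13], [1.0, 0.0], T_st=0.2)
mid = swing_foot([0.08, 0.13, 0.0], target, phi=0.5, h=0.08)
```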

3.1.2. PDMPC Controller

The PDMPC controller computes the required ground reaction forces from the robot states and the schedule provided by the swing planner. In our previous work, manual parameter tuning took a long time and provided no adaptability, so incorporating these parameters into the learning process helps improve control performance.

There are nine parameters to be learned for PDMPC: the two vertical force coefficients, the two horizontal velocity coefficients, the four roll and pitch torque coefficients, and the yaw angular velocity coefficient.

For the low-level joint torque/position controller, the PD gains for position tracking are easy to tune, so it is unnecessary to include them in the learning process.

3.2. Policy Network

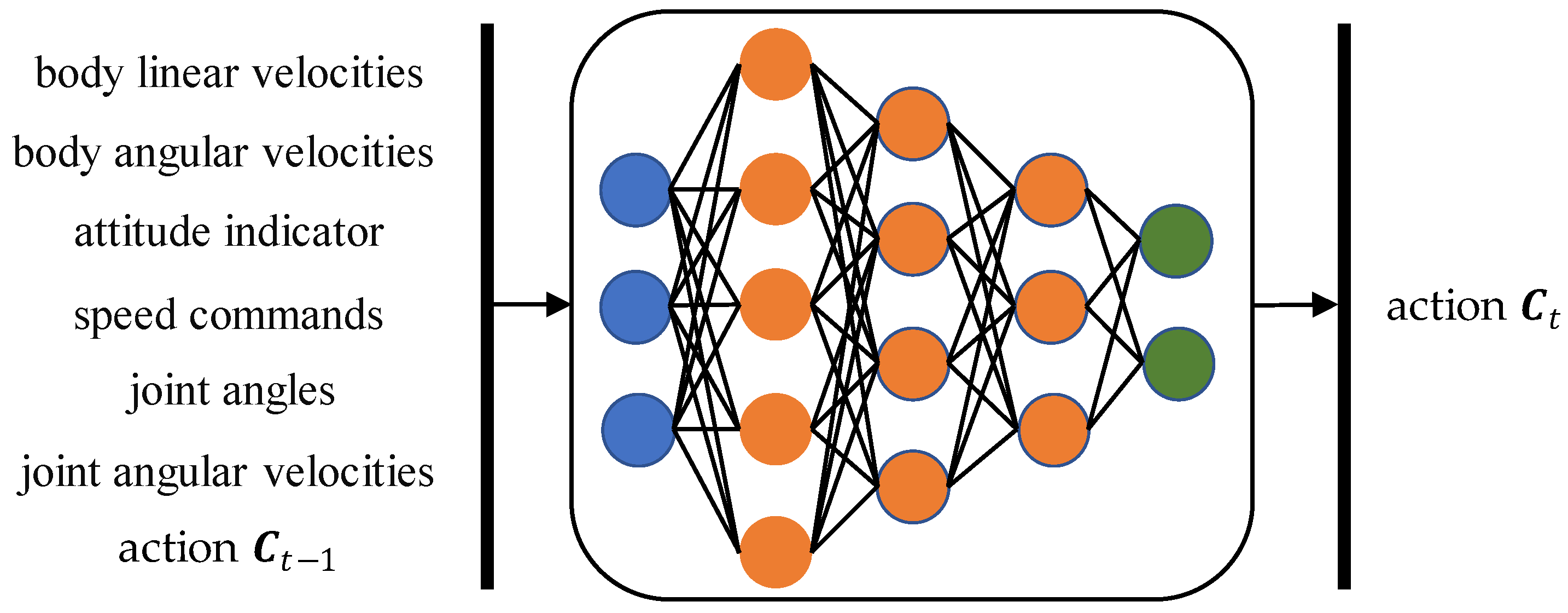

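The action policy follows a multivariate normal distribution $\pi_\theta(\mathbf{a}\mid\mathbf{s}) = \mathcal{N}(\boldsymbol{\mu}_\theta(\mathbf{s}), \boldsymbol{\Sigma})$ with mean vector $\boldsymbol{\mu}_\theta(\mathbf{s})$ and covariance matrix $\boldsymbol{\Sigma}$. The covariance matrix drives exploration, and its elements can be gradually reduced over time to make training converge. The mean $\boldsymbol{\mu}_\theta$ is given by a fully connected neural network with parameters $\theta$.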

Figure 5 shows the structure of the action policy network, which has three hidden layers (the orange balls) with 256, 128, and 64 units, respectively. Batch normalization is applied to the inputs [17], and exponential linear units (ELU) are used as activation functions.
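A NumPy sketch of the policy forward pass, using the layer sizes above and the 52-dimensional input and 16-dimensional output given in this subsection, may help fix the shapes. The weights are random, the input normalization uses the current batch statistics without learned scale, shift, or running averages, and the covariance is assumed diagonal with a fixed, hypothetical log standard deviation. A training implementation would use a deep learning framework instead.

```python
import numpy as np

rng = np.random.default_rng(0)

def elu(x, alpha=1.0):
    """Exponential linear unit; np.minimum avoids overflow in the unused branch."""
    return np.where(x > 0, x, alpha * (np.exp(np.minimum(x, 0.0)) - 1.0))

def init_mlp(sizes, rng):
    """Random (He-style) weights for a fully connected network with the given layer sizes."""
    return [(rng.normal(0.0, np.sqrt(2.0 / m), (m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def policy_mean(params, s, eps=1e-5):
    """52 -> 256 -> 128 -> 64 -> 16 MLP with batch-normalized inputs and ELU activations."""
    x = (s - s.mean(axis=0)) / np.sqrt(s.var(axis=0) + eps)   # input batch normalization
    for W, b in params[:-1]:
        x = elu(x @ W + b)
    W, b = params[-1]
    return x @ W + b                                           # linear output: mean mu_theta(s)

params = init_mlp([52, 256, 128, 64, 16], rng)
log_std = np.full(16, -0.5)                                    # hypothetical exploration level
states = rng.normal(size=(32, 52))                             # a batch of 32 observations
mu = policy_mean(params, states)
actions = mu + np.exp(log_std) * rng.normal(size=mu.shape)     # a ~ N(mu, diag(sigma^2))
```

Decreasing `log_std` over training corresponds to the gradual reduction of the covariance described above.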

The input is a 52-dimensional vector consisting of the 3-dimensional body linear velocity, the 3-dimensional body angular velocity, a 3-dimensional attitude indicator vector, the 3-dimensional speed command, the 12-dimensional joint position vector, the 12-dimensional joint angular velocity vector, and the 16-dimensional network output (action) vector at the previous step, $\mathbf{a}_{t-1}$. The attitude indicator vector is the projection of the unit vector in the direction of gravity onto the body coordinate system. The speed command consists of the two horizontal linear speeds and the yaw angular velocity.
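Assembling the observation is mostly bookkeeping; the only computed entry is the attitude indicator. The sketch below assumes a body-to-world rotation matrix `R_wb` and the concatenation order listed in the text; both the order and the function names are assumptions.

```python
import numpy as np

def projected_gravity(R_wb):
    """Attitude indicator: unit gravity direction expressed in the body frame (R_wb: body to world)."""
    return R_wb.T @ np.array([0.0, 0.0, -1.0])

def build_observation(v_body, w_body, R_wb, cmd, q, qd, prev_action):
    """Concatenate the 52-D policy input; the ordering here is an assumption."""
    obs = np.concatenate([v_body, w_body, projected_gravity(R_wb), cmd, q, qd, prev_action])
    assert obs.shape == (52,), obs.shape          # 3 + 3 + 3 + 3 + 12 + 12 + 16
    return obs

roll = 0.1                                        # body rolled by 0.1 rad about its x axis
R_roll = np.array([[1.0, 0.0, 0.0],
                   [0.0, np.cos(roll), -np.sin(roll)],
                   [0.0, np.sin(roll), np.cos(roll)]])
obs = build_observation(np.zeros(3), np.zeros(3), R_roll, np.array([0.5, 0.0, 0.0]),
                        np.zeros(12), np.zeros(12), np.zeros(16))
```

For a level body the attitude indicator is $(0, 0, -1)$; any roll or pitch shows up as nonzero first or second components, which is why it serves as a compact, singularity-free attitude input.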

The network output $\mathbf{a}_t$ is not used directly; its elements are converted into the parameters required by the swing planner and the PDMPC controller through appropriate mappings, as shown in Table 1.
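Table 1 is not reproduced here, so the following mapping is only a hypothetical example of how an unbounded network output can be turned into a bounded controller parameter: each element is squashed with `tanh` and rescaled to an assumed range.

```python
import numpy as np

def action_to_parameters(a, low, high):
    """Map an unbounded network output to parameters inside [low, high] (hypothetical ranges)."""
    a = np.asarray(a, dtype=float)
    return low + 0.5 * (np.tanh(a) + 1.0) * (high - low)   # tanh squashes to (-1, 1)

# Hypothetical ranges for three of the sixteen parameters: a lift height [m],
# a heuristic coefficient, and the half support duration [s]
low = np.array([0.04, 0.3, 0.10])
high = np.array([0.12, 0.7, 0.20])
params = action_to_parameters(np.zeros(3), low, high)      # a = 0 gives the midpoint of each range
saturated = action_to_parameters(np.array([50.0, -50.0, 0.0]), low, high)
```

A bounded mapping of this kind keeps exploration noise from ever producing physically unreasonable parameters, which matters because PDMPC must remain stable while it generates samples.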

The pseudocode of reinforcement learning based on PDMPC is given in Algorithm 1.

Algorithm 1 Reinforcement learning based on PDMPC
Input: initial action-network parameters $\theta_0$; initial state-value-function parameters $\psi_0$
for $k = 0, 1, 2, \dots$ do
  sample parameter vector $\mathbf{a}_t$ from $\pi_{\theta_k}(\mathbf{a}\mid\mathbf{s}_t)$
  swing planner: assign leg states and target positions
  PDMPC: compute desired ground reaction forces
  joint control
  collect sample trajectory
  if reset_flag then
    reset robot
  end if
  if data sufficient then
    compute rewards
    estimate the advantage function based on the state-value function $V_{\psi_k}$
    compute the clipped surrogate objective (PPO)
    update the policy parameters $\theta_{k+1}$ by a gradient ascent algorithm (G)
    fit $V_{\psi}$ by mean-squared-error regression and update the parameters $\psi_{k+1}$
  end if
end for
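The update step of Algorithm 1 can be sketched with three helpers. Generalized advantage estimation (GAE) is used here as one common way to estimate advantages from a state-value function; it is an assumption, since the text does not name its estimator. The values of `gamma`, `lam`, and `eps` are common defaults, not the hyperparameters of Table 2, and gradient computation and the Adam step are omitted.

```python
import numpy as np

def gae_advantages(rewards, values, last_value, dones, gamma=0.99, lam=0.95):
    """GAE(lambda); dones[t] = 1 if the episode ended (robot reset) after step t."""
    T = len(rewards)
    adv = np.zeros(T)
    next_value, next_adv = last_value, 0.0
    for t in reversed(range(T)):
        nonterminal = 1.0 - dones[t]
        delta = rewards[t] + gamma * next_value * nonterminal - values[t]   # TD error
        next_adv = delta + gamma * lam * nonterminal * next_adv
        adv[t] = next_adv
        next_value = values[t]
    return adv

def ppo_clip_objective(logp_new, logp_old, adv, eps=0.2):
    """PPO clipped surrogate L^CLIP, to be maximized by gradient ascent."""
    ratio = np.exp(np.asarray(logp_new) - np.asarray(logp_old))
    unclipped = ratio * adv
    clipped = np.clip(ratio, 1.0 - eps, 1.0 + eps) * adv
    return float(np.mean(np.minimum(unclipped, clipped)))

def value_loss(values, returns):
    """Mean-squared-error regression loss for fitting V_psi."""
    return float(np.mean((np.asarray(values) - np.asarray(returns)) ** 2))

adv = gae_advantages([1.0, 1.0], [0.0, 0.0], 0.0, [0, 0], gamma=1.0, lam=1.0)
```

The clipping removes any incentive to push the probability ratio beyond $1 \pm \epsilon$ when the advantage is positive, while keeping the full penalty when it is negative; this is what keeps each policy update close to the data-collecting policy.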

4. Simulation and Results

4.1. Simulation Platform

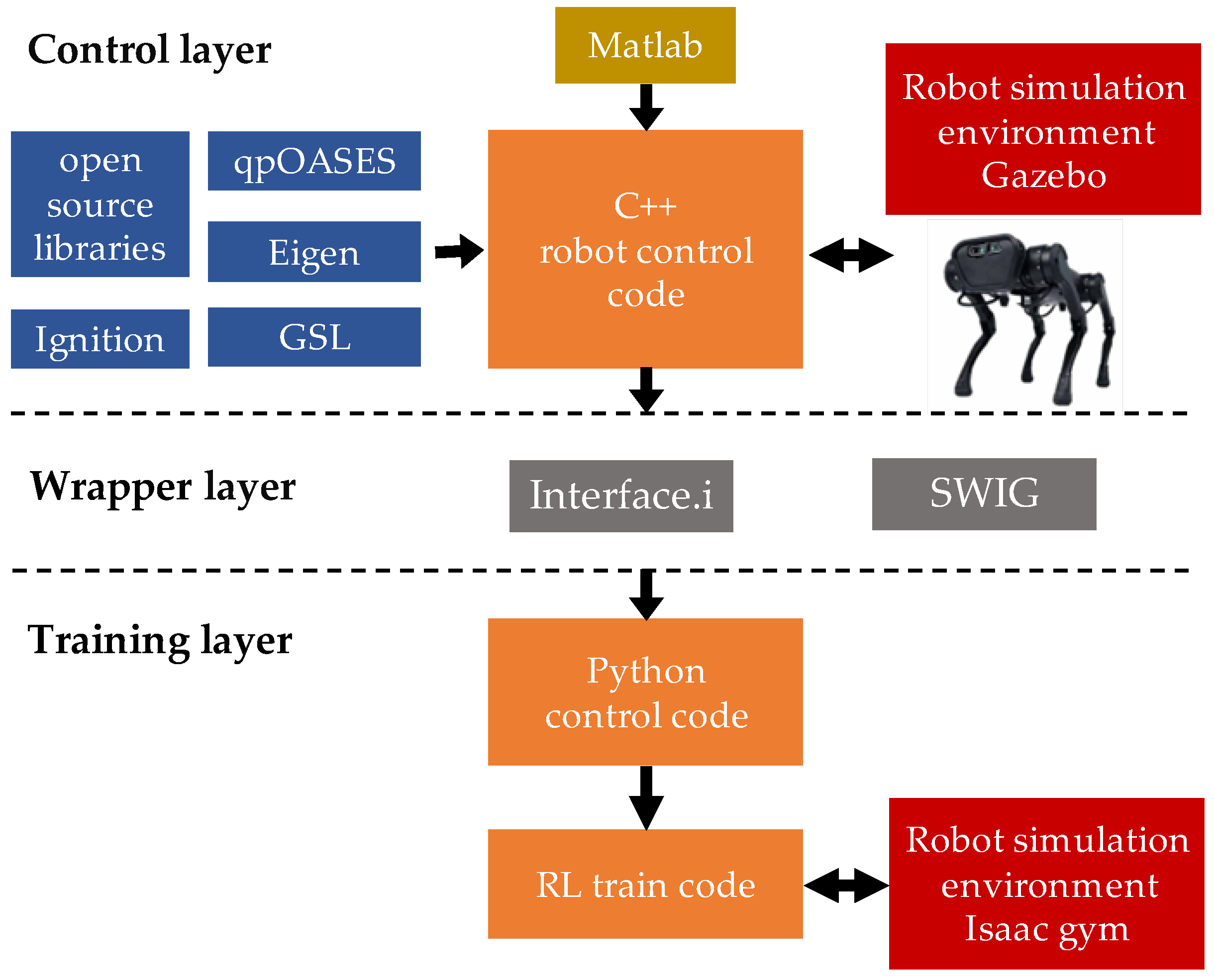

We built a software platform for quadruped robot control algorithms, whose architecture is shown in Figure 6. By function, the platform is divided into three layers: the control layer, the wrapper (conversion) layer, and the training layer.

The control layer runs the traditional, manually designed controllers. Its code is written in C++ and integrates several open-source mathematical libraries (GSL, Eigen, and qpOASES), which improves the efficiency of algorithm development. The algorithm code is highly portable and can be rapidly deployed to different dynamic environments, such as Gazebo and other robot simulators, as well as to real quadruped robots.

The wrapper layer converts the C++ code of the control layer into other programming languages to meet the needs of different environments. SWIG 4.0.2 (Simplified Wrapper and Interface Generator) is a software development tool that builds scripting-language interfaces for C and C++ programs; it can quickly wrap C/C++ code for Python, Perl, Ruby, Java, and other languages. Because the reinforcement learning framework we use requires Python, the C++ control algorithm functions are declared in the interface file Interface.i as required and then converted by SWIG.

The training layer consists of the converted Python control code library, the learning and training algorithm, and Isaac Gym, a physics simulation environment developed specifically for reinforcement learning research [18].

4.2. Training and Rewards

The simulation was conducted on a laptop with an Intel Core i7-7700HQ CPU (four cores, eight threads) and a single NVIDIA GeForce GTX 1070 GPU. In the Isaac Gym environment, we train 20 quadruped robots in parallel. The robot uses a diagonal trotting gait, the simulation time step is 0.005 s, the control frequency of PDMPC is 100 Hz, and the maximum alive duration is 15 s. The linear speed command range is , and the angular speed command range is .
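The timing settings above imply a fixed relationship between physics steps and controller updates. The short calculation below makes it explicit, assuming (this is not stated in the text) that the policy is queried once per PDMPC update.

```python
sim_dt = 0.005          # simulation time step [s]
mpc_hz = 100            # PDMPC control frequency [Hz]
max_episode_s = 15.0    # maximum alive duration [s]
num_envs = 20           # robots trained in parallel

sim_hz = round(1.0 / sim_dt)                                # physics rate [Hz]
decimation = sim_hz // mpc_hz                               # physics steps per PDMPC update
sim_steps_per_episode = round(max_episode_s / sim_dt)
mpc_steps_per_episode = sim_steps_per_episode // decimation
samples_per_sim_second = num_envs * mpc_hz                  # assumes one policy query per PDMPC update
```

Under that assumption, each simulated second yields 2000 control samples across the 20 parallel robots, and a full-length episode contains 1500 controller updates.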

Algorithm 1 and the Adam optimizer [19] are used to train the policy network; the corresponding hyperparameters are listed in Table 2.

The reward function we designed is as follows:

For locomotion with a commanded speed, we reward the robot for a small speed error with respect to the command. Because the reward is a nonlinear exponential function of the error, a small improvement in speed tracking yields a much larger reward, especially when the robot’s tracking ability is moderate.

On the other hand, we penalize the robot with Equation (14), where $\mathbf{R}_{bw}$ is the rotation matrix from the world coordinate system to the body coordinate system and $\mathbf{e}_g$ is the unit vector in the direction of gravity. The first term penalizes the robot for failing to keep its body height stable; the remaining term penalizes unnecessary roll and pitch motion.
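The exact expressions of the reward terms are not reproduced in this text, so the following is only an illustrative sketch of the structure described: exponential speed-tracking terms plus a height penalty and a roll/pitch penalty computed from the gravity direction in the body frame. The width `sigma`, the desired height, and the weighting coefficients are hypothetical.

```python
import numpy as np

def tracking_reward(v_xy, cmd_xy, w_z, cmd_wz, sigma=0.25):
    """Exponential tracking terms: 1 for perfect tracking, decaying smoothly with the error."""
    lin = np.exp(-np.sum((np.asarray(v_xy) - np.asarray(cmd_xy)) ** 2) / sigma)
    ang = np.exp(-((w_z - cmd_wz) ** 2) / sigma)
    return float(lin), float(ang)

def stability_penalty(z, z_des, R_bw, e_g=(0.0, 0.0, -1.0)):
    """Height error and roll/pitch error; R_bw rotates world vectors into the body frame."""
    g_body = R_bw @ np.asarray(e_g)          # gravity direction seen from the body
    return (z - z_des) ** 2, float(g_body[0] ** 2 + g_body[1] ** 2)

def total_reward(terms, coeffs):
    """Weighted sum of all terms; penalty coefficients are negative."""
    return float(np.dot(coeffs, terms))

coeffs = [1.0, 0.5, -10.0, -2.0]             # hypothetical weights
lin, ang = tracking_reward([0.5, 0.0], [0.5, 0.0], 0.0, 0.0)
h_err, tilt = stability_penalty(0.28, 0.28, np.eye(3))
r = total_reward([lin, ang, h_err, tilt], coeffs)
```

The exponential shape gives its steepest slope at moderate errors, which is consistent with the observation that small tracking improvements pay off most when the robot already tracks reasonably well.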

Weighting coefficients balance these terms to form the total reward used to evaluate the current policy.

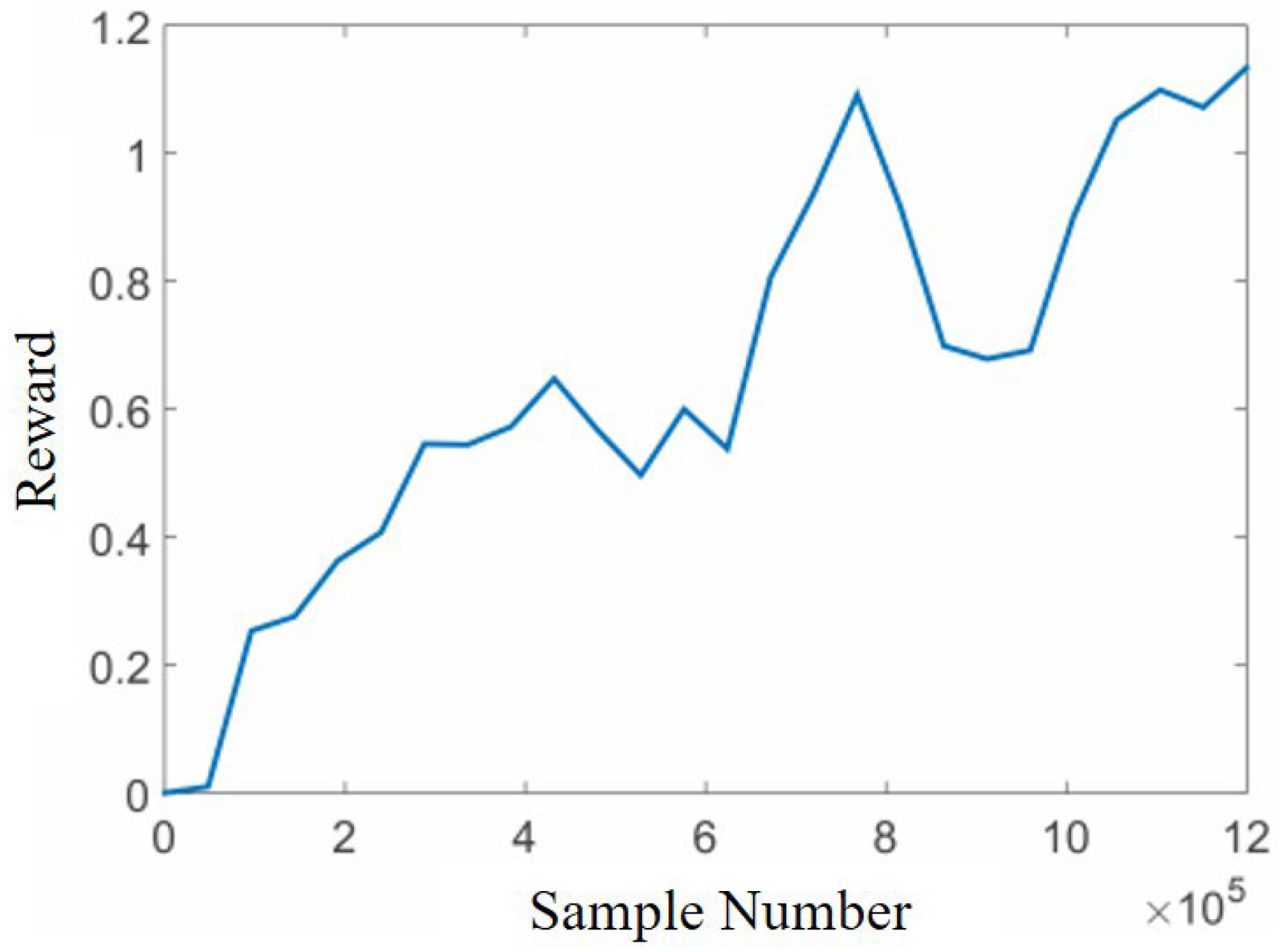

Figure 7 shows that the training reward increases gradually with the number of samples; the number of samples required is

.

4.3. Results and Discussion

We first compare the locomotion performance of the fixed-parameter PDMPC and the learned PDMPC. Figure 8 shows the velocity tracking performance of each controller: under the learned controller, the robot has a smaller tracking error and moves more smoothly.

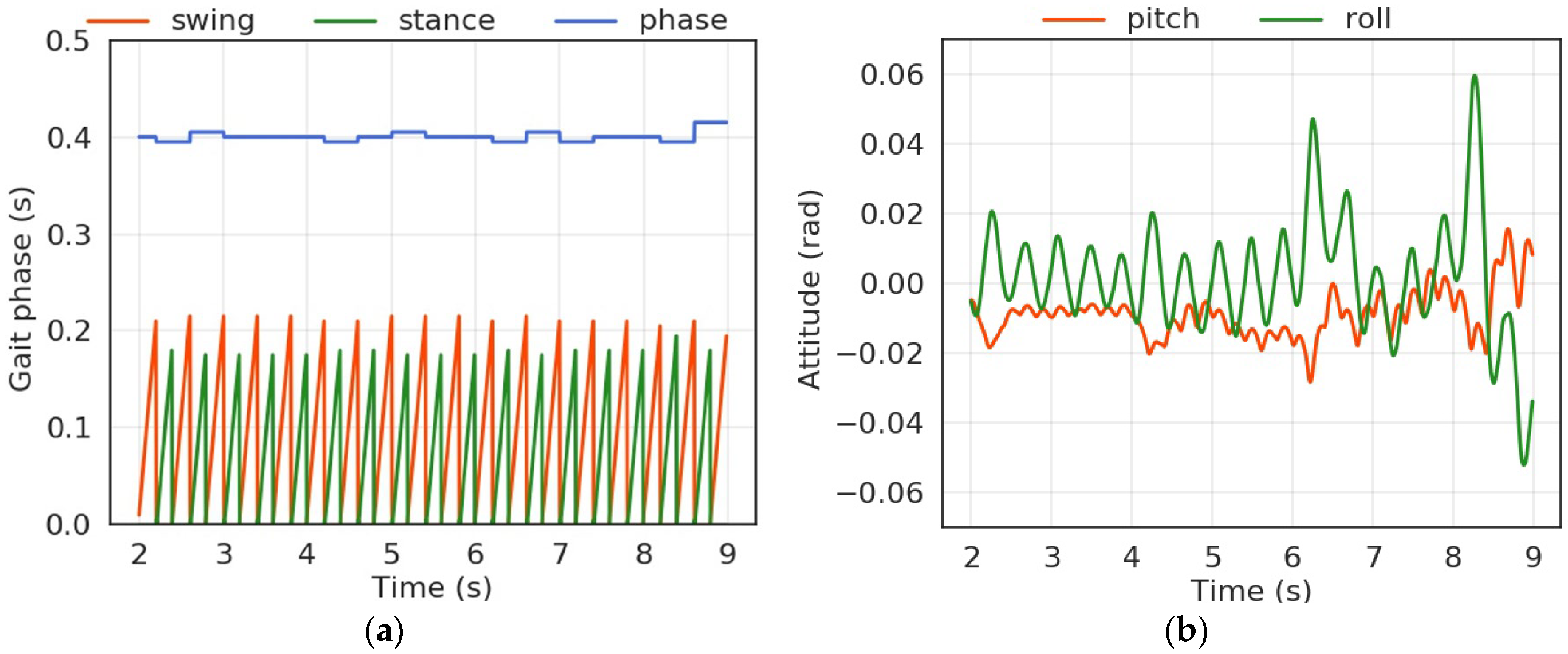

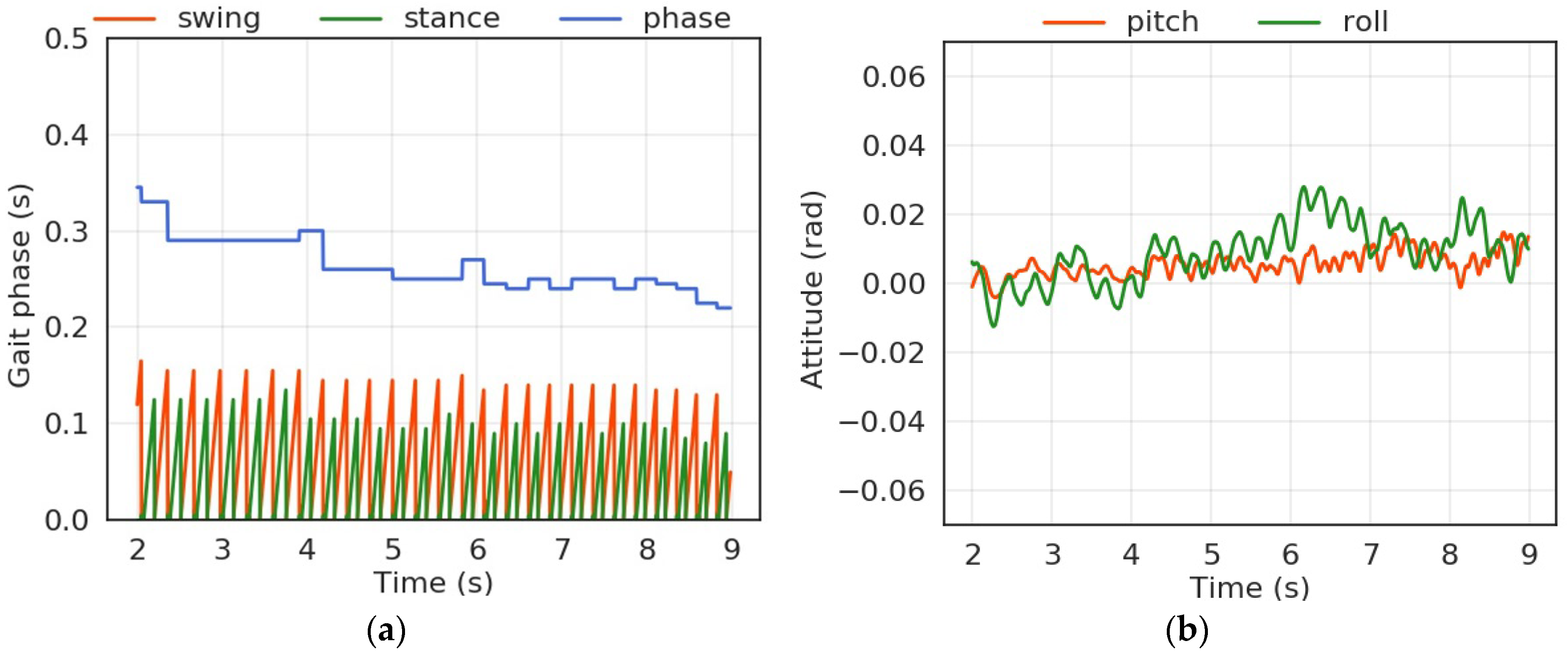

Next, we check the gait and stability at different speeds. The forward velocity command gradually increases from

to

. As shown in Figures 9 and 10, as the command speed increases, the fixed-parameter controller keeps the same gait, whereas the learned controller shortens its phase time. This is consistent with the biological observation that animals use a higher gait frequency at higher speeds. In addition, under the learned controller, the robot’s attitude angles stay closer to 0 and oscillate with a smaller amplitude, which suggests that the learned controller better absorbs the impact of the swing leg at touchdown.

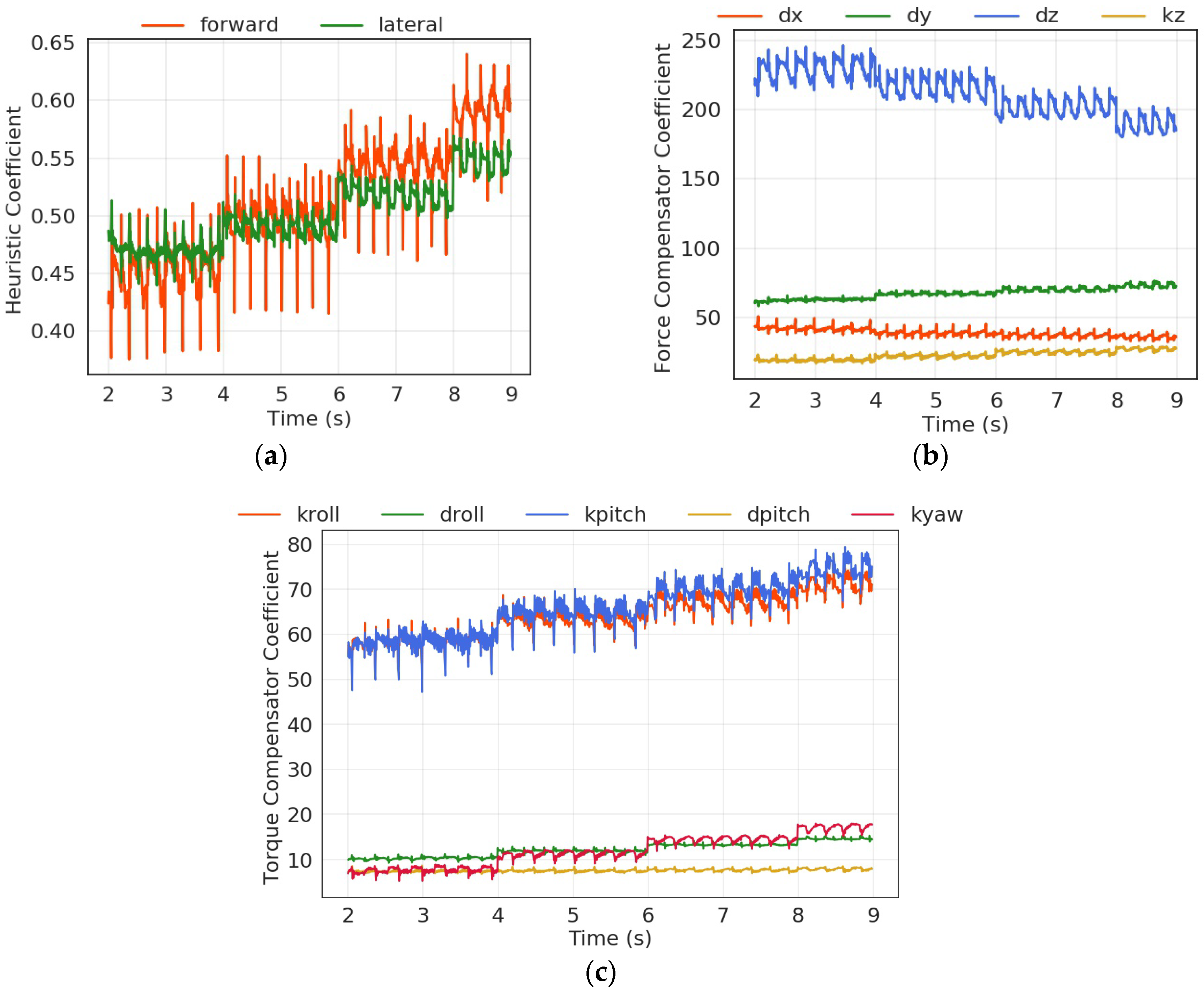

Figure 11 shows how the PDMPC parameters adapt as the quadruped robot accelerates. Figure 11a shows the adaptive heuristic coefficients obtained through reinforcement learning: the forward and lateral coefficients increase with speed. Manually designed swing trajectories usually fix the inverted-pendulum heuristic coefficient at 0.5. However, because the robot cannot be strictly regarded as a single inverted pendulum during motion, a fixed coefficient is not well justified.

It can be seen in Figure 11b that, as the robot speed increases, the decreasing forward velocity coefficient makes the robot’s forward speed tracking softer, while the increasing lateral velocity coefficient strengthens lateral control. The decreasing vertical force coefficients reduce the position compensation in the height direction of the robot and increase the damping, making the position of the center of mass more compliant. Meanwhile, the parameters of the torque compensator all increase, which helps keep the robot’s attitude stable during acceleration.

5. Conclusions and Future Work

In this work, we proposed a novel locomotion control algorithm for quadruped robots that combines the advantages of model-based MPC and model-free reinforcement learning. The PDMPC controller provides basic locomotion capability and thus a safe exploration region, while reinforcement learning gives the robot the ability to improve through learning. The trained policy adaptively chooses the PDMPC parameters according to the current state. The simulation results demonstrate the effectiveness of our algorithm: compared with the fixed-parameter controller, the adaptive parameters give the learned controller better command tracking and equilibrium stability, and the robot’s gait changes with speed just as quadruped animals’ gaits do.

Model-free RL learns an end-to-end control policy that maximizes performance according to the reward function. Learning from scratch requires massive numbers of samples, which means many robots and long training times. In this work, because the model-based controller generates good samples, the training process is greatly shortened: it requires fewer robots and learns faster. On the other hand, a manually designed controller guides the policy into a locally optimal region, limiting the powerful exploration, discovery, and learning abilities of reinforcement learning. As two major frameworks for solving optimal control problems, conventional control and learning-based control each have their own advantages and can develop together, each promoting the other. We will explore more ways to merge them and to make fast online learning possible on the physical platform.