Abstract

Facial skin condition is perceived as a vital indicator of a person’s apparent age, perceived beauty, and degree of health. Machine-learning-based software analytics on facial skin conditions can be a time- and cost-efficient alternative to the conventional approach of visiting facial skin care shops or dermatologists’ offices. However, the conventional CNN-based approach has been shown to be limited in diagnosis performance due to the intrinsic characteristics of facial skin problems. In this paper, the technical challenges in facial skin problem diagnosis are first identified, and a set of 5 effective tactics is proposed to overcome them. A total of 31 segmentation models are trained and applied in experiments that validate the proposed tactics. In the experiments, the proposed approach achieves a diagnosis performance of 83.38%, which is 32.58% higher than the performance of the conventional CNN approach.

1. Introduction

Facial skin condition is perceived as a vital indicator of a person’s apparent age, perceived beauty, and degree of health. A face with shining, silky, bright, hydrated, and trouble-free skin indicates a high degree of beauty and thus attractiveness, which shapes the initial impression of the person. As people get older, their facial skin also ages, revealing symptoms of aging such as wrinkles, age spots, and visible pores. A person’s biological age can therefore be estimated intuitively from his or her facial skin condition. For this reason, people wish to maintain youthful facial skin without aging symptoms.

The conventional way of assessing facial skin condition is to visit facial skin care shops or dermatologists’ offices. However, this imposes the burden of locating the right facial skin clinic, making appointments, and visiting the clinic. In addition, the cost incurred for the visit can be substantial.

Machine learning-based software analytics on facial skin conditions can be a time- and cost-efficient alternative to the conventional approach of visiting the clinics. In recent years, researchers have applied Convolutional Neural Network (CNN) based deep learning models to diagnose facial skin problems [1,2,3,4,5,6,7,8,9,10,11,12]. However, current CNN-based approaches have been shown to be limited in delivering a diagnosis with high performance and, hence, limited in their applicability in clinics. This is mainly due to the following technical challenges in diagnosing facial skin problems with CNN models.

- Detecting small-sized skin problems such as pores, moles, and acne

- Handling the high complexity of detecting about 20 different facial skin problem types

- Handling appearance variability of the same facial skin problem type among people

- Handling appearance similarity of different facial skin problem types

- Handling false segmentations on non-facial areas

The goal of this study is to devise effective software methods that overcome these technical challenges and deliver clinic-level performance in facial skin diagnosis. This paper proposes a set of five software tactics that can effectively remedy the challenges and provide a high level of performance in diagnosing facial skin problems. The paper also presents a technical assessment of the proposed methods through experiments and comparison to other approaches.

The paper is organized as follows. Section 2 summarizes related works and their contributions. Section 3 presents the intrinsic limitations of the conventional CNN approach to diagnosing facial skin problems. Section 4 elaborates the set of five tactics that can effectively overcome the technical limitations and provide a high performance of diagnosis. Section 5 presents the datasets used for training facial skin diagnosis models and the results of the experiments for evaluating the proposed tactics and comparing them to other approaches.

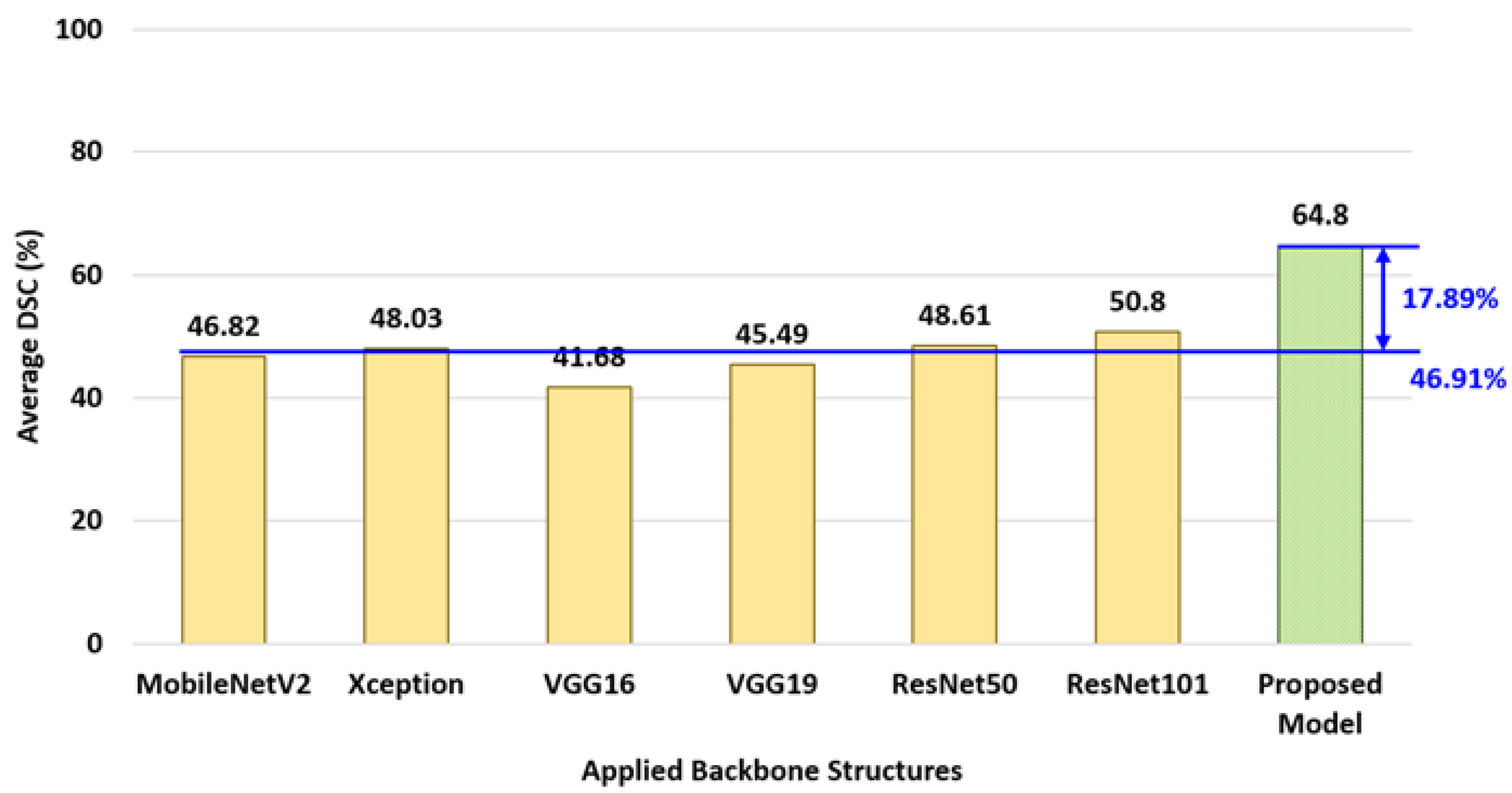

The contribution of this study is twofold: (1) proposing a set of five tactics that overcome the intrinsic limitations of CNN models in diagnosing facial skin problems and (2) seamlessly integrating the tactics into a software implementation. Through a proof-of-concept implementation of the system and experiments with it, the set of proposed tactics is shown to achieve a diagnosis performance of 83.38%, improve the diagnosis performance by 32.58% compared to the conventional CNN approach, and outperform diagnosis models based on MobileNetV2, Xception, ResNet, VGG16, and VGG19 by an average of 17.89%.

The proposed facial skin diagnosis system with the tactics can potentially be utilized as a supplementary approach in face skin care clinics and a cost-effective alternative to visiting clinics by individuals.

2. Related Works

There have been a number of studies for diagnosing facial skin problems with deep neural networks. Shen’s study [1], Quattrini’s study [2], and Zhao’s study [3] explored the diagnosis of a specific facial skin problem, such as acne or rosacea, using CNN models. VGG-19 models were utilized in Shen’s work and Quattrini’s work. Liu’s study proposed a system for detecting moles [4] using UNet segmentation models.

There have been studies to analyze multiple types of facial skin problems, including Wu [5] and Gerges [6]. Both works utilize a CNN network for diagnosing multiple types of facial skin problems.

Several works apply dedicated methods to improve the analysis of spatial information in the domain of facial skin diagnosis. Yadav’s study [7] and Junayed’s study [8] proposed pre-processing methods for improving the performance of diagnosing facial skin problems. Yadav applied a method of changing the image color space from RGB to HSV to emphasize the acne area. Junayed applied a method for generating multiple images by changing color spaces to reduce noise and emphasize the acne scar areas. Bekmirzaev [9] proposed a segmentation model structure for multiple facial skin problems, which consists of Long Short-Term Memory (LSTM) layers and convolutional layers. Gessert [10] proposed a deep neural network structure that takes multiple divided sections of high-resolution skin photos as input and consists of convolutional layers and recurrent layers. Gulzar [11] proposed a segmentation model for skin lesions, which combines a vision transformer and a U-Net structure.

There exist works to effectively detect small-sized objects with CNN models [13,14,15,16,17,18,19,20,21]. Cui [13] proposed a CNN structure for detecting small-sized objects by revising the Single Shot Detector (SSD) structure, applying fusion layers that combine multiple convolutional layers with deconvolutional layers. Liu [14] proposed a modified Mask R-CNN model to detect cracks in asphalt pavement using ground-penetrating radar images by adding a feature pyramid network to the original backbone network of the Mask R-CNN.

The studies applying the small-sized object detection model in specific domains are summarized as shown in Table 1.

Table 1.

Representative Studies for Detecting Small Size Objects.

Detection and segmentation of small-sized objects are required in multiple domains. The studies listed above enhanced the original CNN models by adding or modifying networks.

There exist works to enhance the quality of input images, such as face photos. They proposed to enlarge images and improve the quality of the image. Various approaches for enhancing resolutions have been proposed in [22,23,24,25,26,27].

There exist works to analyze directional images, including [28,29,30]. Wei [28] proposed a CNN model for segmenting brain areas from MRI by dividing the brain MRI image into coronal, sagittal, and transverse planes. The feature maps for each direction are applied to generate the 3D brain segmentation result.

There exist works to handle false segmentation with machine learning, including [31,32,33,34,35]. Sander proposed a process to discard failed detection areas using a CNN model and knowledge-based filtering [31].

There exist works to distinguish the target class images among similar images, including [36,37]. Khan’s study proposed a medical image classification based on the appearance similarity of each organ in different medical images, using a method that fuses the scale-invariant feature transform (SIFT) descriptor and the Harris corner algorithm [36].

The current related works provide various deep-learning approaches to diagnose facial skin problems, but they do not address the technical difficulties in diagnosing facial skin problems. Our study is distinct in identifying the specific challenges of the diagnosis problem and proposing a set of 5 practical tactics to handle the challenges. As a result, the diagnosis performance is high enough to be utilized in clinics.

3. Technical Challenges in Diagnosing Facial Skin Problems

Due to the intrinsic characteristics of facial skin problems, the diagnosis of skin problems—even with advanced machine learning algorithms—presents the following technical challenges.

3.1. Challenge #1: Detecting Small-Sized Skin Problems Such as Pores, Moles, and Acne

The CNN algorithm can effectively analyze spatial information. CNN is based on the shared-weight architecture of the convolution kernels and filters that slide along input features and provide translation-equivariant responses known as feature maps [38].

However, the performance of CNN models drops when the size of the target object in an image is considerably small. Some studies have raised the problem of detecting small-sized objects with CNN models and conducted experiments to find better algorithms for detecting such objects [39,40,41]. The drop is due to the limited spatial features exposed in the small-sized object image and, consequently, the limited extraction of spatial features with filters.

Using our collection of 2225 facial photos (at a resolution of 576 × 576 pixels), the average occupation ratios of facial skin problem areas on the photos are measured, as shown in Table 2.

Table 2.

Comparing occupation ratios of face, face section, and facial skin problem areas.

The whole face occupies an average of 33.06% of the photo. A face section such as eye, nose, mouth, or ear occupies an average of 3.36% of the photo. A facial skin problem, such as pore, mole, or acne, occupies an average of 0.02% of the photo. The average radius of a pore is 0.02~0.05 mm [42], and the average radius of a mole is about 6 mm [43].
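To make these ratios concrete, the following minimal sketch converts them into pixel counts for a 576 × 576 photo. The ratios and the resolution come from the text; the function and variable names are illustrative only.

```python
# Figures taken from the text: 576 x 576 photos and the average occupation ratios.
PHOTO_SIDE = 576
OCCUPATION_RATIOS = {
    "whole face": 0.3306,    # 33.06%
    "face section": 0.0336,  # 3.36%
    "skin problem": 0.0002,  # 0.02%
}


def occupied_pixels(ratio: float, side: int = PHOTO_SIDE) -> float:
    """Return the average number of pixels covered for a given occupation ratio."""
    return ratio * side * side


for name, ratio in OCCUPATION_RATIOS.items():
    print(f"{name}: about {occupied_pixels(ratio):.0f} pixels")
```

Under these figures, an average skin problem instance covers roughly 66 pixels, i.e., a patch of about 8 × 8 pixels, whereas the whole face covers more than 100,000 pixels.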

The hypothesis from this observation is that the detection of such small-sized facial skin problems with CNN models results in significantly low performance. To validate our hypothesis, CNN models using the Mask R-CNN algorithm [44] were trained to detect and visually segment 3 different types of objects. The model was trained with ResNet as the backbone, 0.001 for the learning rate, and (16, 32, 64, 128, 256) as the RPN anchor size. The performance of detection results using the Dice Similarity Coefficient (DSC) metric is shown in Table 3.

Table 3.

Performance measurements of detecting objects in different sizes.

The average DSC of a mole is shown to be only 31.5%, which is considerably lower than the average DSC of 95.6% for the entire facial area and the DSC of 90.9% for the mouth area.
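For reference, the DSC of a predicted mask A and a ground-truth mask B is 2|A ∩ B| / (|A| + |B|). The sketch below computes it for binary masks given as nested lists; it illustrates the metric only and is not the evaluation code used in the experiments. The handling of two empty masks is a convention assumed here.

```python
def dice_coefficient(predicted, truth):
    """Compute DSC = 2|A ∩ B| / (|A| + |B|) for two binary masks (nested lists)."""
    pred_pixels = {
        (r, c) for r, row in enumerate(predicted) for c, v in enumerate(row) if v
    }
    true_pixels = {
        (r, c) for r, row in enumerate(truth) for c, v in enumerate(row) if v
    }
    total = len(pred_pixels) + len(true_pixels)
    if total == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2 * len(pred_pixels & true_pixels) / total


# A 2 x 2 ground-truth instance and a prediction shifted by one column.
truth_mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
]
predicted_mask = [
    [0, 0, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
print(dice_coefficient(predicted_mask, truth_mask))
```

The example shows why small objects are penalized heavily by this metric: a one-pixel shift on a 2 × 2 instance already halves the overlap.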

This is due to the size of the mole, which is too small for the filters in a CNN model to detect its spatial characteristics. If a mole is represented with 25 pixels and the size of a filter is 5 × 5 (i.e., 25 pixels), then the visual features of the mole are not sufficiently captured by the filter; rather, the features are simplified and lost through the process of convolution. The resulting feature map cannot represent the mole with sufficient information.

Moreover, the mask placed around a small object such as a mole by a Mask R-CNN model cannot delineate its boundary distinctly.

3.2. Challenge #2: Detecting about 20 Different Types of Facial Skin Problems

There are about 20 different types of commonly known facial skin problems, including acne, hyperpigmentation, scars, birthmarks, spider veins, white spots, rosacea, ingrown hair, moles, wrinkles, dark circles, eye bags, dry skin, oily skin, dull skin, large pores, and blackheads. Some of the common skin problems are shown in Figure 1.

Figure 1.

Different types of facial skin problems.

It is challenging to train a CNN model that can detect all the different types of facial skin problems with a high level of performance. This is because the 20 facial skin problem types are not distinct in their appearances; rather, they have a high similarity. Consequently, training a CNN model on a training set whose instances belong to different classes but are highly similar would result in a low classification performance.

Moreover, because facial skin problem areas are quite small in size, training a CNN model for detecting all the skin problem types with a high performance becomes infeasible.

3.3. Challenge #3: Variability on Appearances of Same Facial Skin Problem Type

There also exists a high variability in the appearances of a facial skin problem type among people. Figure 2 shows four different appearances of acne.

Figure 2.

Different appearances of acne.

For a given facial skin problem type, there can be tens of different appearances, varying in the shape, size, depth, darkness, borderline vividness, and direction. When considering about 20 different facial skin problem types and an average of ‘m’ different appearances for each facial skin problem type, there exist (20 × m) spatial patterns to be recognized by a CNN model. When ‘m’ is 50, there are 1000 spatial patterns to handle.

It is challenging to train a CNN model that can detect that many different spatial patterns with a high level of performance. This is because the variability in appearances for the same facial skin problem type expands the heterogeneity of spatial features within a facial skin problem type and the complexity of the spatial features to handle for all 20 facial skin problem types.

3.4. Challenge #4: Similarity on Appearances of Different Facial Skin Problem Types

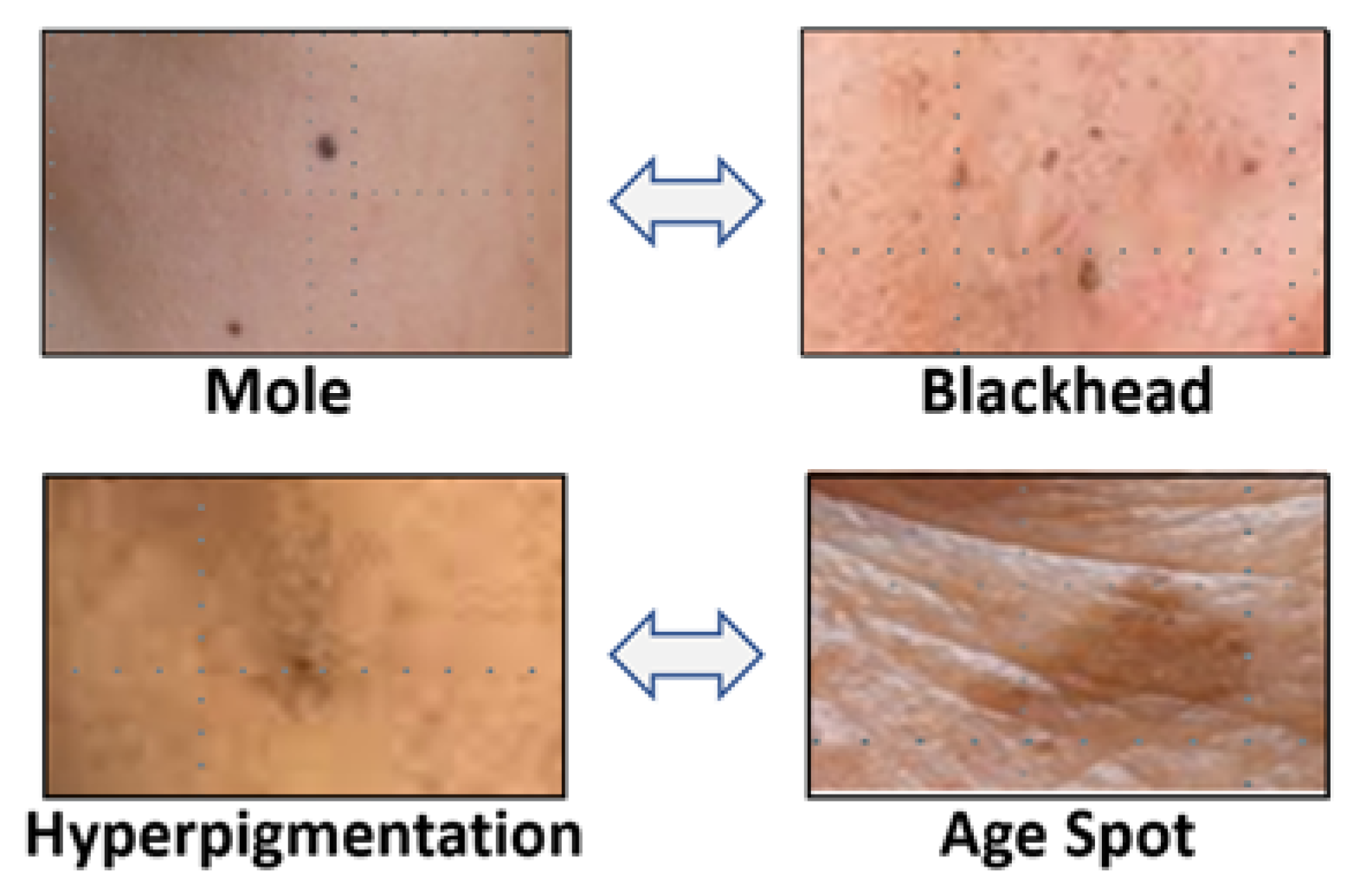

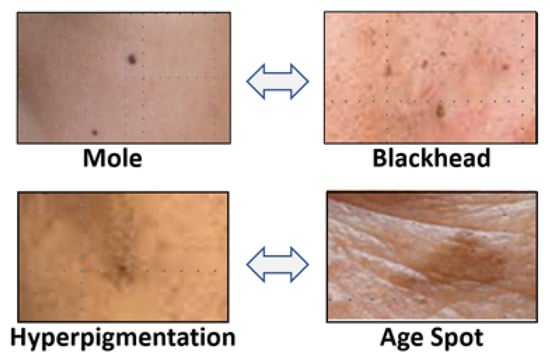

As discussed earlier, some facial skin problem types are not highly distinguishable; rather, they show some similarities. As an example, consider the instances of a mole, blackhead, hyperpigmentation, and age spot as shown in Figure 3.

Figure 3.

Appearance similarity among 4 different facial skin problem types.

They are not highly distinguishable, but exhibit a considerable level of similarity. In the figure, the mole is similar to the blackhead and to the central part of the hyperpigmentation, which in turn is similar to the age spot.

It is challenging to train a CNN model that can distinguish among different facial skin problem types with a high appearance similarity. This is because the appearance similarity between different facial skin problem types must be learned by the CNN model, and training the model requires a sufficiently large training set that is configured to represent all appearance variants. Moreover, this similarity adds to the complexity of the spatial features to recognize.

3.5. Challenge #5: False Segmentations on Non-Facial Areas

Facial skin problems occur only on the facial area, and hence detection of the skin problems should be confined to the facial area. A photo or image of a face typically includes the eyebrows and the hair on the head. Consequently, a CNN model trained for the purpose of detecting facial skin problems could falsely detect skin problem instances on non-facial areas.

Figure 4 shows examples of the false-detection of facial skin problems on non-facial areas using a Mask R-CNN model.

Figure 4.

Examples of false detection on non-facial areas.

The left image shows a false detection of a wrinkle around hairs on the forehead and the right image shows a false detection of acne on the nose. This type of false detection could occur whenever a non-facial area contains shapes that are similar to the facial skin problem types.

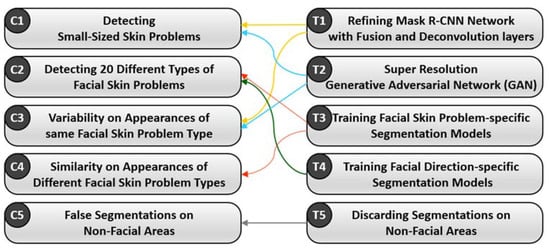

4. Design of Tactics for Remedying the Technical Challenges

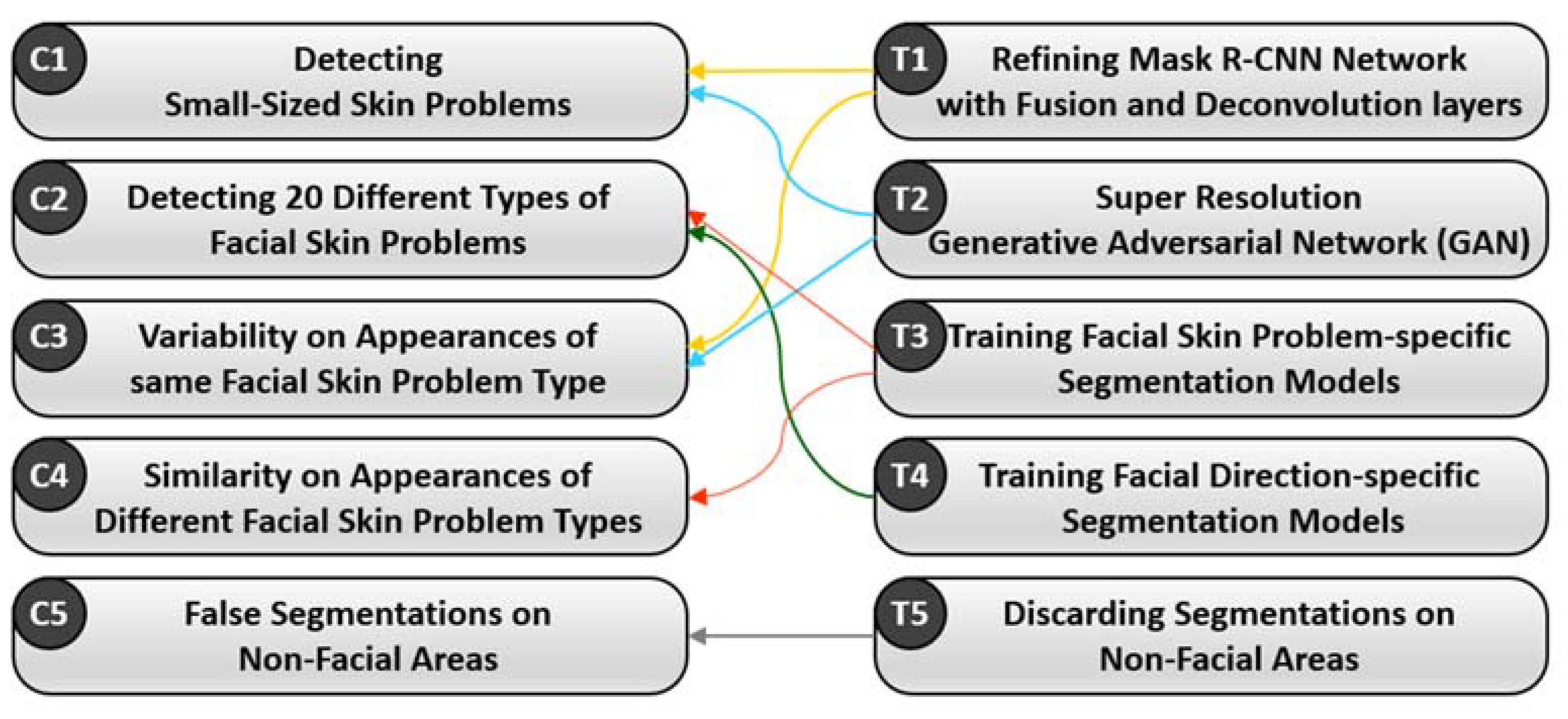

To remedy the technical challenges presented earlier and yield a high performance of detecting and segmenting facial skin problems, a set of 5 effective technical tactics are presented in this section. Each tactic is used to handle one or more technical challenges as shown in Figure 5.

Figure 5.

Effectiveness of the tactics on remedying the technical challenges.

4.1. Design of Tactic #1: Refining Mask R-CNN Network with Fusion and Deconvolution Layers

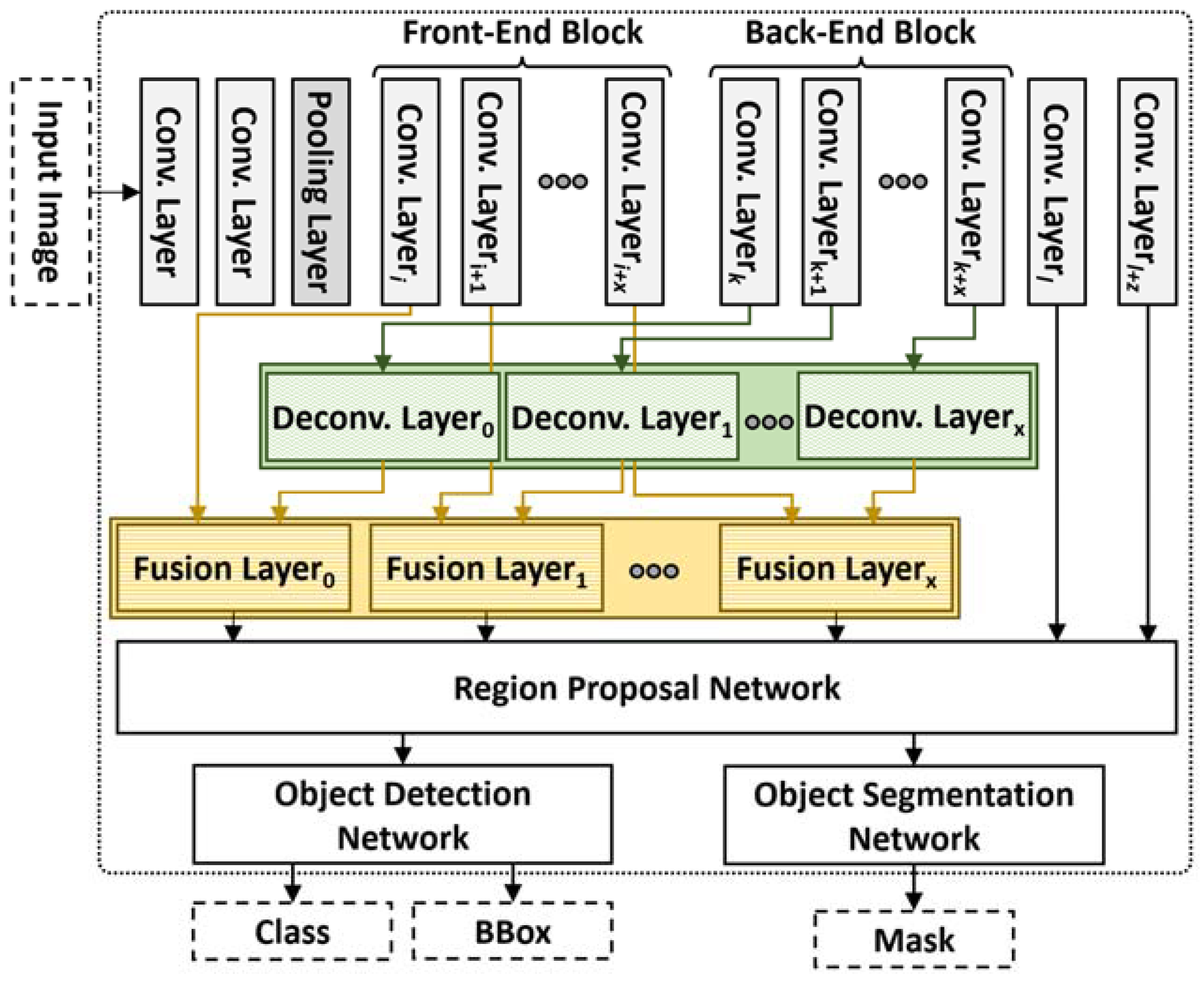

This tactic is to devise a refined version of Mask R-CNN network structure that is suitable for detecting small-sized objects such as facial skin problems. To overcome the limitations of CNN algorithms in detecting small-sized objects, the Mask R-CNN structure is refined with two elements: Fusion Layers and Deconvolution Layers.

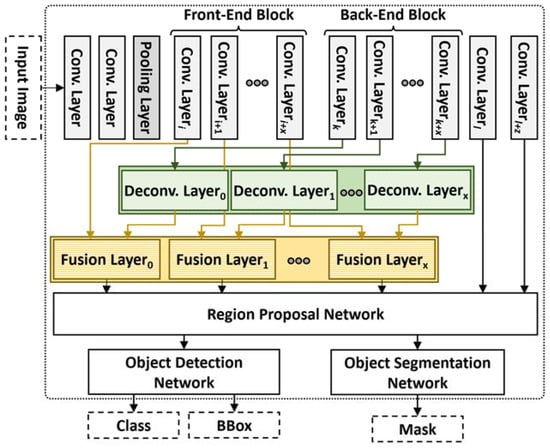

The structure of our refined Mask R-CNN structure is shown in Figure 6.

Figure 6.

Structure of refined mask R-CNN for small-sized objects.

The network structure of a CNN model is shown on the top of the figure, consisting of convolution layers and pooling layers. The CNN structure is refined by performing the following six steps.

Step 1 is to identify the Front-end Block that captures finer-grained features of the input image. The block consists of ‘x’ layers that perform convolution and pooling operations to extract features from the input image. The size of this block is determined by the kernel size of each layer in this block and the average size of annotated facial skin problem instances. The Front-end Block is defined as the layers whose kernel size is smaller than the average size of the facial skin problem areas captured on the immediately preceding feature map.

Step 2 is to identify the Back-end Block that captures coarser-grained features of the input image. The block consists of the same ‘x’ number of layers, which extract the features of larger-sized objects. The size of this block is the same as that of the Front-end Block because the Fusion Block requires pairs of a layer in the Front-end Block and a layer in the Back-end Block, as shown in Figure 6.

Step 3 is to generate a Deconvolution Block that consists of ‘x’ deconvolution layers. This block is used to enlarge the feature maps from the Back-end Block before they are fed into the Fusion Block. A deconvolution layer performs the reverse operation of convolution, i.e., it enlarges the feature map created by a convolution layer. To do so, each vector in the feature map is padded with zero values.

Step 4 is to generate a Fusion Block that consists of ‘x’ fusion layers. This block is used to fuse two feature maps from two sources: Front-end Block and Back-end Block. That is, each fusion layer receives a feature map from (i + t)th layer in Front-end Block and a feature map from (i + x + t)th layer in Back-end Block, sums up the two feature maps and returns a feature map.

Step 5 is to refine the structure of the Region Proposal Network of the Mask R-CNN model by entering the feature maps from the Fusion Block as inputs to the Region Proposal Network. Note that the basic structure of the Region Proposal Network in the Mask R-CNN model is constructed from the feature maps of layers appearing later in the network. In contrast, our refined Region Proposal Network is enhanced with feature maps from the Front-end Block that capture the spatial features of small-sized objects.

Step 6 is to apply the Object Detection Network of the Mask R-CNN model to detect facial skin problem instances and the Object Segmentation Network of Mask R-CNN to segment the detected problem areas.

By applying this process of six steps, the enhanced version of the Mask R-CNN model can detect target objects of a small size, and consequently, the performance of facial skin problem diagnosis can significantly be increased.
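The interplay of Steps 3 and 4 can be illustrated with a minimal pure-Python sketch: a coarse back-end feature map is enlarged by zero insertion and then summed element-wise with a finer front-end feature map. This sketch makes simplifying assumptions that are not stated in the paper: feature maps are single-channel nested lists, the enlargement factor is 2, and the learned kernel that a real deconvolution layer applies after zero insertion is omitted. The function names are illustrative.

```python
def zero_insert_upsample(feature_map, stride=2):
    """Enlarge a 2D feature map by placing each value on a grid padded with zeros."""
    rows, cols = len(feature_map), len(feature_map[0])
    enlarged = [[0.0] * (cols * stride) for _ in range(rows * stride)]
    for r in range(rows):
        for c in range(cols):
            enlarged[r * stride][c * stride] = feature_map[r][c]
    return enlarged


def fuse(front_map, back_map):
    """Fusion layer sketch: element-wise sum of two feature maps of equal shape."""
    same_shape = len(front_map) == len(back_map) and all(
        len(f_row) == len(b_row) for f_row, b_row in zip(front_map, back_map)
    )
    if not same_shape:
        raise ValueError("feature maps must have the same shape to be fused")
    return [
        [f + b for f, b in zip(f_row, b_row)]
        for f_row, b_row in zip(front_map, back_map)
    ]


# A 4 x 4 front-end map (fine detail) and a 2 x 2 back-end map (coarse features).
front = [[1.0] * 4 for _ in range(4)]
back = [[10.0, 20.0], [30.0, 40.0]]
fused = fuse(front, zero_insert_upsample(back))
for row in fused:
    print(row)
```

The fused map keeps the resolution of the front-end map, which is why it can carry information about small objects into the Region Proposal Network.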

The hyperparameters of the proposed network are set as follows: 0.001 as the learning rate, 0.3 as the detection non-maximum suppression (NMS) threshold, 0.5 as the region of interest (ROI) positive ratio, 0.7 as the RPN NMS threshold, (0.5, 1, 2) as the RPN anchor ratios, and (8, 16, 32, 64, 128) as the RPN anchor sizes. The loss functions in the proposed front-end and back-end blocks are the localization loss (smooth L1) and the confidence loss (Softmax), along with the average binary cross-entropy loss. In addition, the loss function for the refined Mask R-CNN is computed as the sum of the loss functions for classification, bounding box detection, and segmentation.
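As a compact restatement of this sum, the total loss can be written as follows. The symbols are notation introduced here for illustration and do not appear in the original text: $L_{\text{cls}}$ is the classification loss, $L_{\text{box}}$ the bounding box detection loss, and $L_{\text{mask}}$ the segmentation loss.

$$
L_{\text{total}} = L_{\text{cls}} + L_{\text{box}} + L_{\text{mask}}
$$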

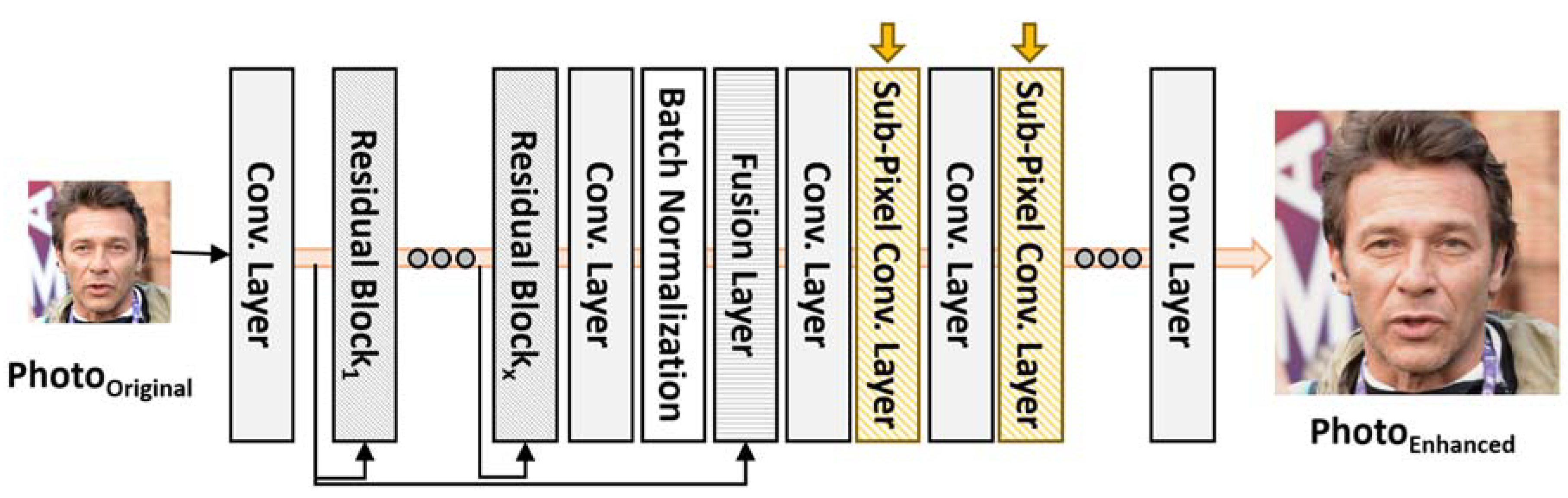

4.2. Design of Tactic #2: Super Resolution Generative Adversarial Network (GAN) for Small-sized and Blurry Images

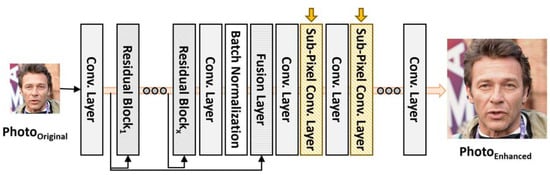

This tactic is to enhance the quality of small-sized object images, i.e., facial skin problem instances, by applying a Super-Resolution Generative Adversarial Network (SR-GAN) [45]. A Generative Adversarial Network (GAN) consists of a generator network and a discriminator network that compete with each other to generate accurate predictions. SR-GAN is a GAN model that upscales and improves the quality of low-resolution images. That is, the structure of the Generator in the GAN is enhanced with Sub-Pixel Convolution Layers as shown in Figure 7.

Figure 7.

Generator in SR-GAN with Sub-Pixel convolution layers.

A Sub-Pixel Convolutional Layer enlarges the feature map by combining the vectors of multiple feature maps into a single feature map. The resulting feature map thus consists of a larger number of vectors than each input feature map. Accordingly, the original image is enhanced with more detailed image features.
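The rearrangement step of a sub-pixel convolution layer (often called pixel shuffle) can be sketched as follows. This minimal example assumes r × r single-channel input feature maps of equal size and an upscaling factor r; it shows only the rearrangement, not the preceding convolution, and it is not the SR-GAN implementation used in the paper. The function name is illustrative.

```python
def pixel_shuffle(feature_maps, r):
    """Interleave r*r feature maps of size H x W into one map of size (H*r) x (W*r)."""
    if len(feature_maps) != r * r:
        raise ValueError("expected exactly r * r feature maps")
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    output = [[0.0] * (width * r) for _ in range(height * r)]
    for i in range(r):          # row offset inside each r x r output cell
        for j in range(r):      # column offset inside each r x r output cell
            source = feature_maps[i * r + j]
            for h in range(height):
                for w in range(width):
                    output[h * r + i][w * r + j] = source[h][w]
    return output


# Four 1 x 2 feature maps are combined into a single 2 x 4 feature map (r = 2).
maps = [[[1, 5]], [[2, 6]], [[3, 7]], [[4, 8]]]
print(pixel_shuffle(maps, 2))
```

Each output cell of r × r values is filled from the same spatial position of the r × r input maps, so the enlarged map contains r² times as many values as any single input map.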

The effect of applying SR-GAN on facial skin problem diagnosis is to enlarge the small-sized facial skin problem instances and to result in a more accurate problem diagnosis with Mask R-CNN models.

4.3. Design of Tactic #3: Training Facial Skin Problem-Specific Segmentation Models

This tactic is to train a segmentation model for each type of facial skin problem. This is based on an observation about the feature extraction scheme of the CNN algorithm. A CNN network consists of convolution layers to learn the spatial characteristics of objects, pooling layers to reduce the dimensions of the feature maps, flattening layers to convert the resultant 2-dimensional arrays into a single long continuous linear vector, and fully connected layers to connect every input neuron to every output neuron [46,47].

However, CNN models provide lower performance for detecting multi-class objects due to the learning scheme of spatial features with convolution layers and the dimension reduction scheme with pooling layers [46,47]. Generalizing this phenomenon, detecting multi-class objects is harder than detecting single-class objects [48,49]. For example, a CNN model detecting people would perform better than a CNN model detecting people together with gender information, i.e., male and female.

Another example is detecting animals in a zoo. A model detecting a single-class object, such as ‘dog’, should outperform a model detecting 100 different animal types in a zoo, because the CNN model for 100-class objects must handle the appearance features of all 100 types of animals. Through the repeated application of convolution and pooling in the CNN, the spatial features of the 100 animal types are abstracted, and some of the acquired features are cancelled by activation functions such as ReLU.

Another cause of the lower performance of the multi-class CNN model is the technical hardship in distinguishing objects of different types but having some degree of appearance similarity [49,50,51,52]. For example, domestic dogs, wolves, coyotes, foxes, jackals, and dingoes belong to different animal classes, but there exist a number of similar appearance features among different types of animals, such as between domestic dogs and wolves and between coyotes and foxes.

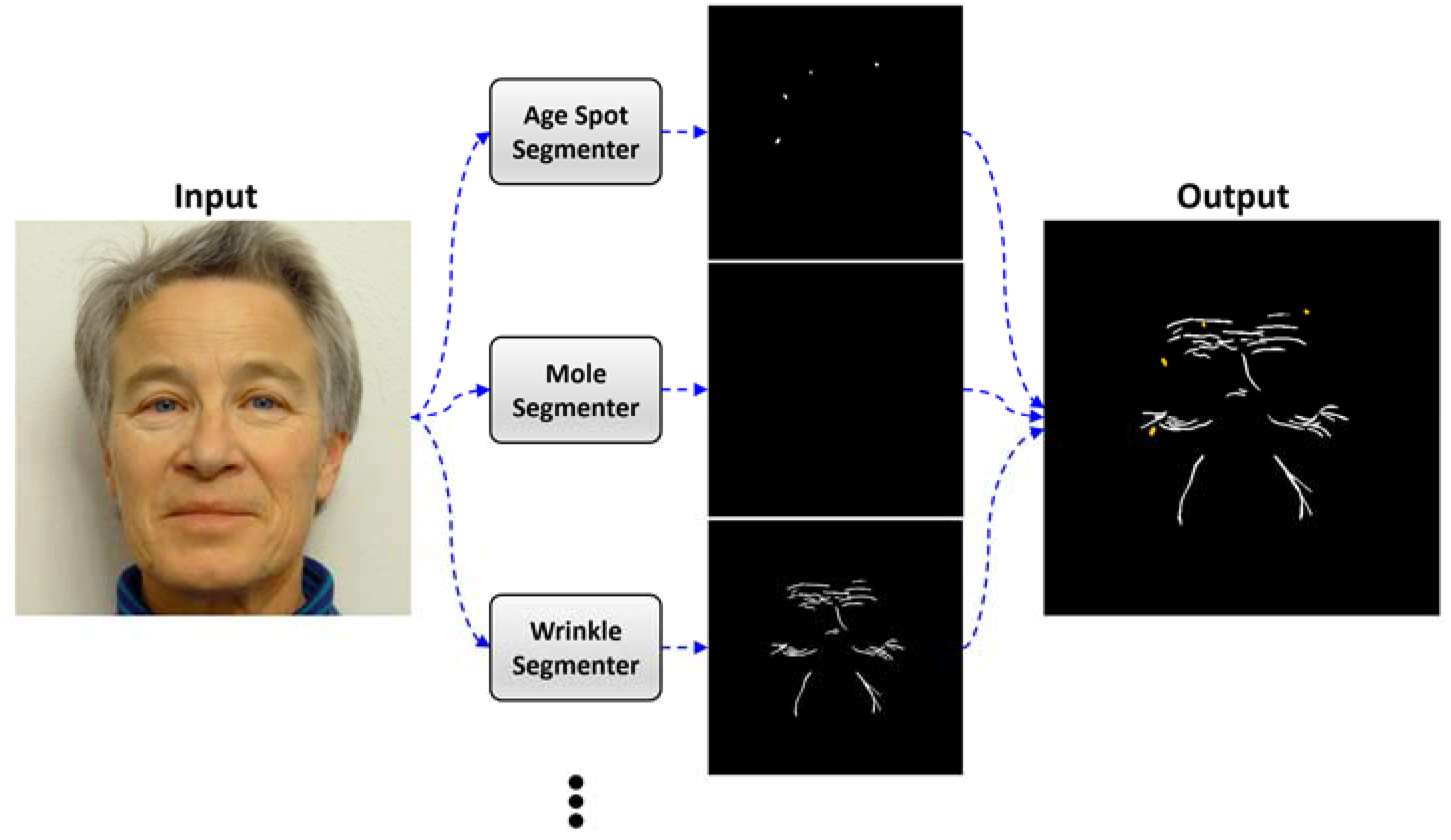

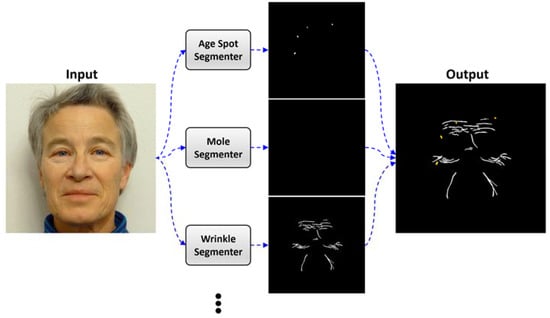

Hence, this tactic is to train k segmentation models for k types of facial skin problems, rather than training a single segmentation model to recognize all k types of facial skin problems. That is, for a given facial image, the k segmentation models are individually applied to detect and segment its specific facial skin problem type. Then, the results of applying k segmentation models are integrated into a single output as shown in Figure 8.

Figure 8.

Applying k segmentation models and integrating the results of all segmentations.

In the figure, the facial image is fed into k segmentation models, which will detect and segment its specific facial skin problem type. The results are integrated into a single output.

By specializing segmentation models by their facial skin problem types, the performance of facial skin diagnosis is increased over employing a single integrated segmentation model.
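The flow in Figure 8 can be sketched as below. This is a minimal illustration under stated assumptions, not the system's implementation: each problem-specific segmenter is modeled as a hypothetical callable that takes an image and returns a list of instance dictionaries, and the two stub segmenters stand in for trained models.

```python
from typing import Any, Callable, Dict, List

# Hypothetical interface: a segmenter maps an image to a list of instance dicts.
Segmenter = Callable[[Any], List[Dict[str, Any]]]


def diagnose(image: Any, segmenters: Dict[str, Segmenter]) -> List[Dict[str, Any]]:
    """Apply every problem-specific segmenter and merge the results into one list."""
    integrated = []
    for problem_type, segment in segmenters.items():
        for instance in segment(image):
            # Tag each instance with the problem type of the model that found it.
            integrated.append({"type": problem_type, **instance})
    return integrated


# Stub segmenters standing in for trained models (illustrative values only).
def stub_mole_segmenter(image):
    return [{"mask": {(10, 12), (10, 13)}, "score": 0.91}]


def stub_wrinkle_segmenter(image):
    return []


results = diagnose(
    image=None,
    segmenters={"mole": stub_mole_segmenter, "wrinkle": stub_wrinkle_segmenter},
)
print(results)
```

Keeping the segmenters in a dictionary keyed by problem type means that adding a model for another problem type does not change the integration code.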

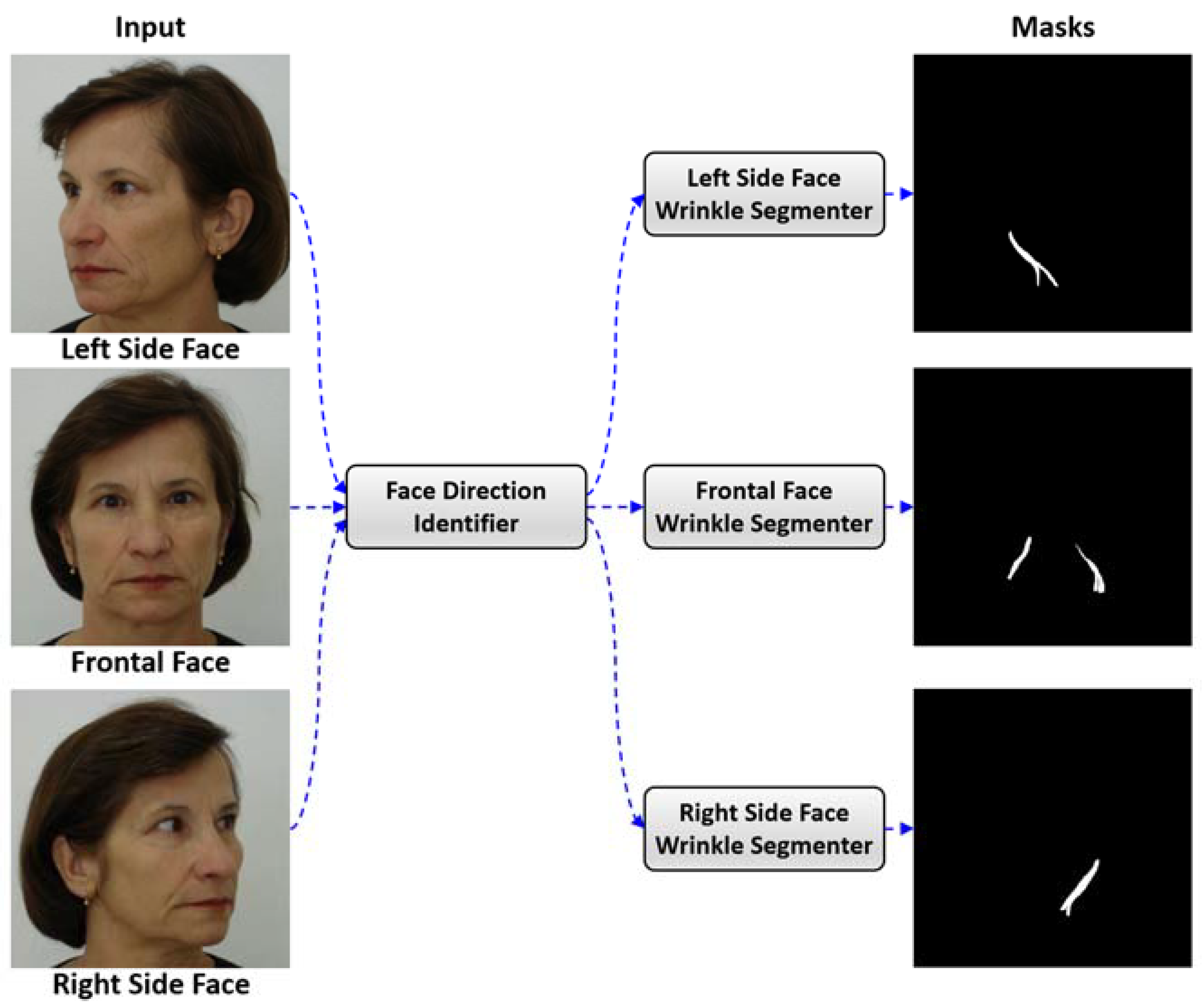

4.4. Design of Tactic #4: Training Face Direction-Specific Segmentation Models

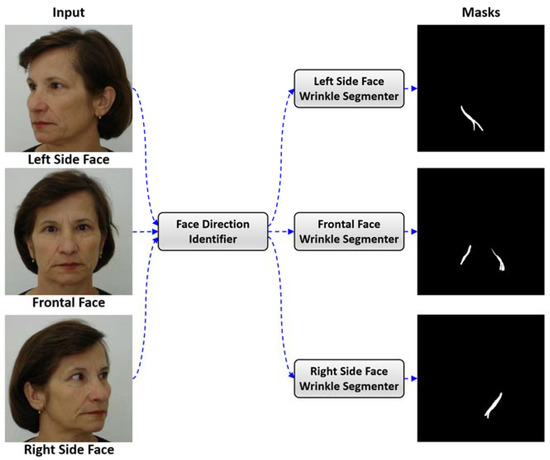

This tactic is to train a segmentation model for each direction of a face, i.e., left-side face, frontal face, and right-side face. A face photo is taken from one direction, and the photo cannot capture the facial skin problem instances on other directions, such as instances near ears or side-cheeks. As a result, a single segmentation model to detect facial skin problem instances on all different areas cannot correctly detect all the skin problem instances.

This tactic handles this problem by applying face direction-specific segmentation models. To utilize this tactic, a set of 3 facial photos is required as input for the facial skin problem diagnosis system. In order to determine the direction of each face photo, a Face Direction Identifier is designed by applying a Facial Landmark Detection model [53]. Using the face contours and the locations of the nose, mouth, and eyes, the direction can automatically be identified.

An example of applying face direction-specific segmentation models is shown in Figure 9.

Figure 9.

Applying face direction-specific segmentation models.

As shown in the figure, Face Direction Identifier determines the direction of each input face photo. Then, its specific segmentation model is applied to diagnose the facial skin problem instances, and their results are integrated.

By specializing segmentation models by the directions of a face photo, the performance of facial skin diagnosis is increased over employing a single integrated segmentation model.
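The direction rule used by the Face Direction Identifier (see Algorithm 1) compares how close the mouth and the nose are to the left and right face contours. The sketch below follows that rule using horizontal coordinates only. The function name and the `tolerance` parameter are assumptions added here; with the default of 0 the comparison reduces to the strict inequalities of Algorithm 1.

```python
def face_direction(mouth_x, nose_x, left_x, right_x, tolerance=0.0):
    """Classify a face photo as LEFT, RIGHT, or FRONTAL from landmark x-coordinates."""
    mouth_left, mouth_right = abs(mouth_x - left_x), abs(mouth_x - right_x)
    nose_left, nose_right = abs(nose_x - left_x), abs(nose_x - right_x)

    # Both landmarks clearly closer to the left contour than to the right one.
    if mouth_right - mouth_left > tolerance and nose_right - nose_left > tolerance:
        return "LEFT"
    # Both landmarks clearly closer to the right contour than to the left one.
    if mouth_left - mouth_right > tolerance and nose_left - nose_right > tolerance:
        return "RIGHT"
    return "FRONTAL"


print(face_direction(mouth_x=30, nose_x=32, left_x=20, right_x=100))
print(face_direction(mouth_x=60, nose_x=60, left_x=20, right_x=100))
print(face_direction(mouth_x=90, nose_x=88, left_x=20, right_x=100))
```

With strict inequalities, FRONTAL is returned only when the distances are equal or when the mouth and the nose disagree, so a practical system would likely need a nonzero tolerance.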

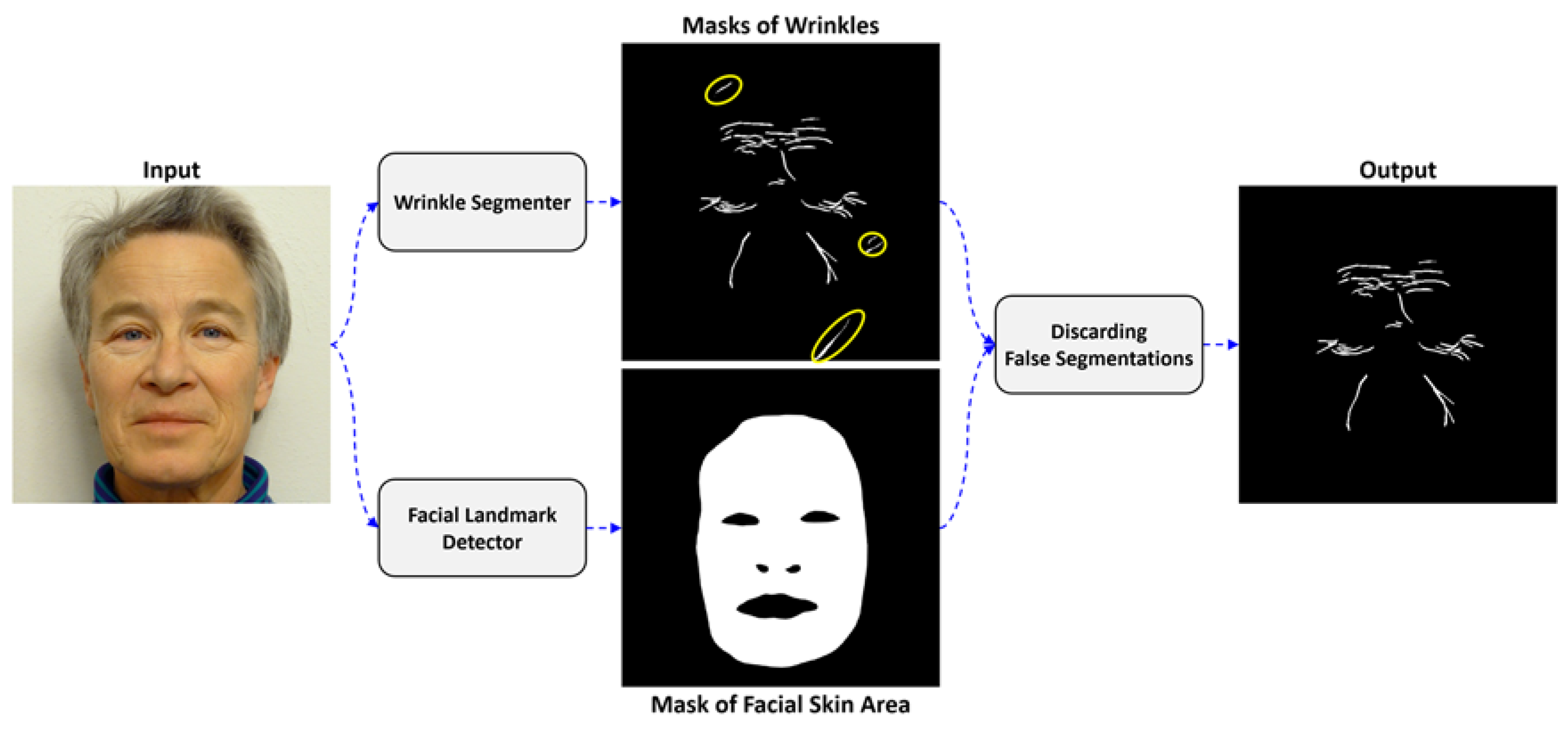

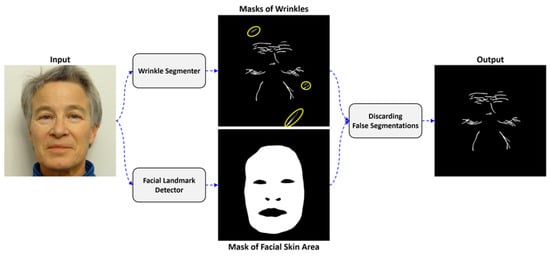

4.5. Design of Tactic #5: Discarding Segmentations on Non-Facial Areas Using Facial Landmark Model

This tactic is to discard the false segmentations made on non-facial areas by applying a Facial Landmark Detection model. The false segmentations can effectively be discarded with the following steps.

Step 1 is to detect facial skin problem instances and facial landmarks for each facial image. The Facial Landmark Detection model is used to detect the landmarks on the face image.

Step 2 is to generate a mask around only the skin area of the facial image. That is, the eyes, eyebrows, mouth, nostrils, and hair are excluded from this mask.

Step 3 is to remove the facial skin problem instances in non-facial areas. This is done by overlaying the two types of masks, masks of facial skin problem instances and the mask of the facial skin area, and discarding segmentations made on the outside of the mask of the facial skin area.

An example of discarding false segmentations is shown in Figure 10.

Figure 10.

Example of discarding false segmentations.

In the figure, the Wrinkle Segmenter model produces masks of detected wrinkles. Three of the wrinkle segmentations are made on a non-facial area. The Facial Landmark Detector produces a mask of the facial skin area. By overlaying the two types of masks, the falsely segmented wrinkles are discarded.
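The overlay in Step 3 can be sketched with masks represented as sets of pixel coordinates. This is an illustrative simplification: each segmented instance is intersected with the facial skin mask, and instances that do not touch the facial skin area at all are dropped. The representation and the function name are assumptions made for this sketch.

```python
def discard_non_facial(instance_masks, face_skin_mask):
    """Keep only the parts of each segmented instance that lie on the facial skin mask."""
    kept = []
    for mask in instance_masks:
        on_face = mask & face_skin_mask  # overlay: pixels present in both masks
        if on_face:                      # instances entirely off the face are discarded
            kept.append(on_face)
    return kept


# Facial skin area: a 4 x 4 block of pixels (illustrative values only).
face_skin = {(r, c) for r in range(4) for c in range(4)}
wrinkles = [
    {(1, 1), (1, 2)},     # fully on the facial skin area
    {(3, 3), (3, 4)},     # partly outside: clipped to the facial skin area
    {(8, 8), (8, 9)},     # on hair or background: discarded
]
print(discard_non_facial(wrinkles, face_skin))
```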

4.6. Design of the Main Control Flow

The main control flow of the facial skin diagnosis system invokes the functional components that implement the proposed 5 tactics. The control flow is shown in Algorithm 1.

| Algorithm 1. Main control flow of the ‘Facial Skin Problem Diagnosis’ system. |
| --- |
| Input: photos: A list of 3 face photos (per person) |
| Output: FSPResults: A list of detected facial skin problem instances |
| Main() { |
| FSPResults = []; |
| SRGAN = // SR-GAN model for upscaling and improving the quality of images |
| FaceLandmarkDetector = // Model for facial landmark detection |
| upscalingRatio = // Ratio of upscaling an image by SRGAN |
| segmenters = // Set of segmentation models for face directions and facial skin problems |
| for (photo in photos) { |
| // Step 1. Identify the face direction (Tactic #4) |
| landmarks = FaceLandmarkDetector.identify(photo); |
| locMouth = // Location of the mouth in the detected landmarks |
| locNose = // Location of the nose in the detected landmarks |
| locRT = // Location of the right side of the face in the detected landmarks |
| locLT = // Location of the left side of the face in the detected landmarks |
| if ((abs(locMouth - locLT) < abs(locMouth - locRT)) & (abs(locNose - locLT) < abs(locNose - locRT))) |
| curDirection = LEFT; |
| else if ((abs(locMouth - locLT) > abs(locMouth - locRT)) & (abs(locNose - locLT) > abs(locNose - locRT))) |
| curDirection = RIGHT; |
| else curDirection = FRONTAL; |
| // Step 2. Invoke facial skin problem-specific segmenters (Tactic #3) |
| listFSPs = []; |
| for (segmenter_type in segmenters[curDirection]) { |
| SEGRef_type = // Segmenter based on the refined Mask R-CNN for segmenter_type |
| SEGOrg_type = // Segmenter based on the original Mask R-CNN for segmenter_type |
| // Step 3. Apply the refined Mask R-CNN (Tactic #1) |
| resultRef = SEGRef_type.segment(photo); |
| // Step 4. Enhance the quality of facial images with the SR-GAN model (Tactic #2) |
| sections = {SEC_i : SEC_i ⊆ photo, ∪ SEC_i = photo}; // Divide the photo into sections |
| resultOrg = []; |
| for (SEC ∈ sections) { |
| enlargedSEC = SRGAN.enlarge(SEC); |
| result = SEGOrg_type.segment(enlargedSEC); |
| resultOrg ← result; // Append after decreasing the size of result to (1/upscalingRatio) |
| } |
| // Determine the facial skin problem |
| // (1) Select a result from Step 3 and Step 4 |
| if (size(resultOrg) < thInstanceSize) |
| result = resultRef; |
| else |
| result = resultOrg; |
| // (2) Check whether the segmented instances are also classified as a different FSP |
| for (fsp in listFSPs) { |
| if (size(fsp ∧ result) / max(size(fsp), size(result)) > thSize) { |
| if ((confidence score of fsp) > (confidence score of result)) |
| // Keep fsp |
| else { |
| // Discard fsp from listFSPs and add result |
| } |
| } |
| } |
| } |
| // Step 5. Discard false segmentations on non-facial skin areas (Tactic #5) |
| faceArea = // Mask for the facial skin area, excluding eyes, nostrils, and mouth |
| segResult = FSPArea ∧ faceArea; // Overlay both segmented areas |
| FSPResults ← (curDirection, segResult); |
| } |
| return FSPResults; |
| } |

As shown in the algorithm, the main control of the diagnosis system reads facial photos as the input and invokes the functional components that implement the 5 tactics.
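The conflict check in Algorithm 1, which decides between two overlapping instances reported as different facial skin problem types, can be sketched as follows. The overlap ratio and the confidence comparison follow the algorithm. The data representation (dictionaries with a pixel set and a score), the function name, and the choice to add a new result that conflicts with nothing are assumptions made for this sketch.

```python
def resolve_conflicts(list_fsps, result, th_size):
    """Merge `result` into `list_fsps`, keeping the more confident of overlapping instances."""
    kept = []
    keep_result = True
    for fsp in list_fsps:
        largest = max(len(fsp["pixels"]), len(result["pixels"]))
        overlap = len(fsp["pixels"] & result["pixels"]) / largest if largest else 0.0
        if overlap > th_size:
            if fsp["score"] > result["score"]:
                kept.append(fsp)     # existing instance wins; the new result is dropped
                keep_result = False
            # otherwise the existing instance is discarded in favor of the new result
        else:
            kept.append(fsp)         # no significant overlap: keep the existing instance
    if keep_result:
        kept.append(result)          # assumption: non-conflicting results are added
    return kept


mole = {"type": "mole", "pixels": {(0, 0), (0, 1)}, "score": 0.6}
age_spot = {"type": "age spot", "pixels": {(0, 0), (0, 1), (0, 2)}, "score": 0.8}
merged = resolve_conflicts([mole], age_spot, th_size=0.5)
print([fsp["type"] for fsp in merged])
```

In this example the two instances share two of three pixels, so the overlap ratio exceeds the threshold and only the more confident instance is retained.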

5. Experiments and Assessment

This section is to present the results of experiments by applying the facial skin segmentation models trained with the proposed tactics.

5.1. Datasets for Training Models

The data collection of face photos used for training, evaluation, and experiments consists of 2225 face photos at a resolution of 576 × 576 pixels. Each photo contains one or more instances of acne, age spots, moles, rosacea, and wrinkles.

In the experiments, only 5 facial skin problem types are considered. They were selected according to the following criteria.

- A minimal set of essential facial skin problem types is needed; performing experiments with photos of all 20 different facial skin problem types requires a dataset of more than 10,000 photo images and annotating the facial skin problem areas on each photo manually by researchers would require an effort of more than 30 person-months.

- A dataset including facial skin problem types that are relatively large in size and also small in size is needed. Hence, photos showing wrinkles and rosacea are selected for the large-sized types and acne, mole, and age spots are selected for the small-sized types.

- A dataset including different facial skin problem types but having some similarities in their appearances is needed. Hence, photos showing acne, age spots, and moles are selected.

- A dataset including blurry boundaries between the problem-free skin areas and facial skin problem areas is needed. Hence, photos showing acne, rosacea, and wrinkles are selected.

The face photos were acquired from 3 different sources: Acne 04 dataset [54] and Flickr-Faces-HQ dataset [55] available on GitHub repositories [56,57] and FEI face dataset available on Centro University website [58].

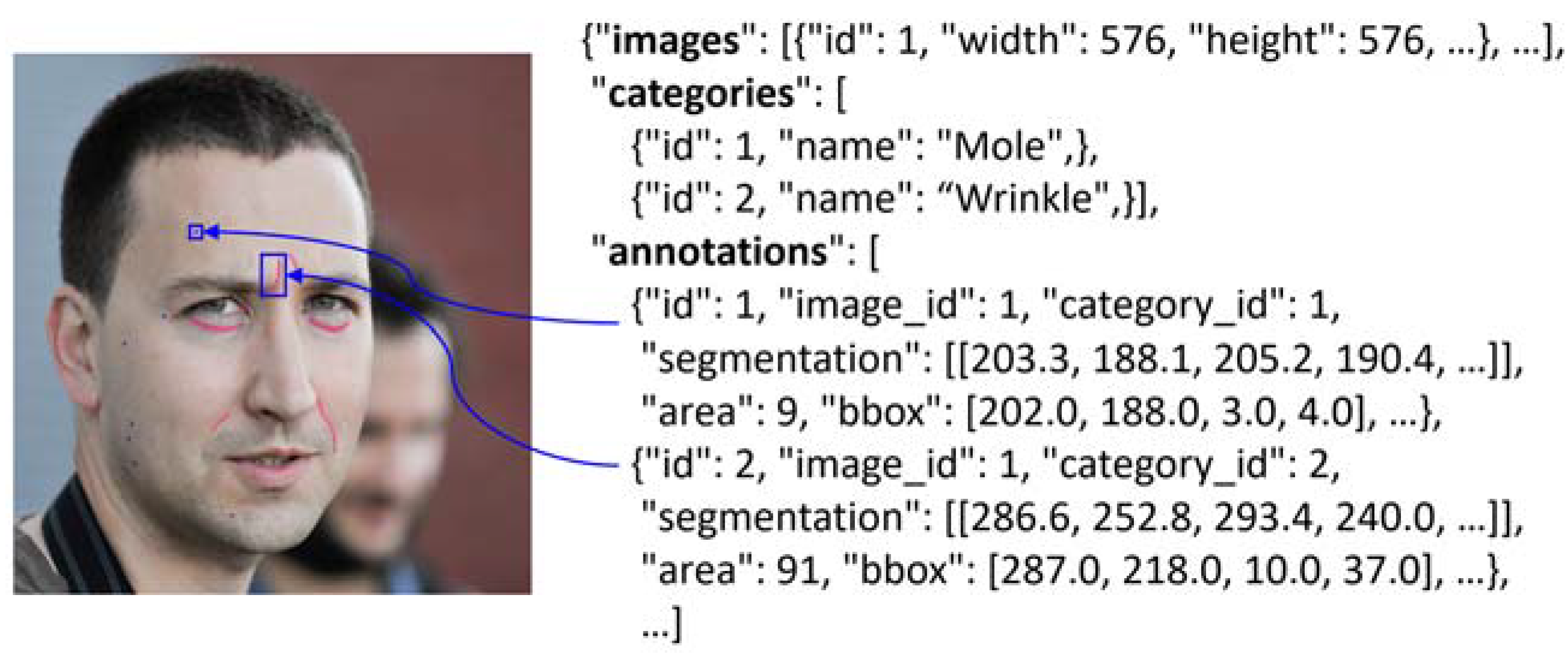

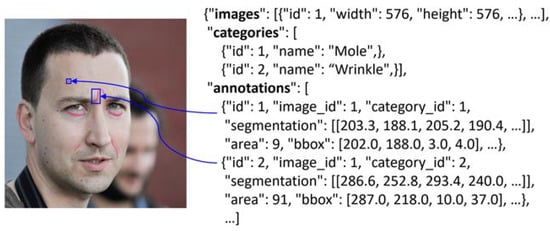

To train CNN models to detect facial skin problems, every facial skin problem instance in each photo had to be annotated manually. For this task, COCO Annotator was used as the annotation software tool [59], and an XP-Pen Artist 15.6 Pro with a stylus pen was used as the input device. Since a photo may contain several skin problem instances, manually annotating the 2225 photos required an effort of 8 person-months.

An example of manual annotations for facial skin problem instances is shown in Figure 11.

Figure 11.

Face photo with annotations and its JSON representation.

The left side of the figure shows annotations for two facial skin problems. Moles are annotated in blue, and wrinkles are annotated in red. The right side of the figure shows the JSON representation of the annotations, which is required to train the model.
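As a rough illustration of such a JSON representation, the sketch below builds a small record with one mole and one wrinkle, assuming the annotations follow the COCO format. Only the key names follow that format. The file name, identifiers, coordinates, and the helper `instances_per_category` are hypothetical and are not taken from the dataset.

```python
import json

# Hypothetical values throughout; only the key names follow the COCO format.
coco = {
    "images": [
        {"id": 1, "file_name": "face_0001.jpg", "width": 576, "height": 576}
    ],
    "categories": [
        {"id": 1, "name": "mole"},
        {"id": 2, "name": "wrinkle"},
    ],
    "annotations": [
        {   # a small mole outlined by a polygon [x1, y1, x2, y2, ...]
            "id": 1, "image_id": 1, "category_id": 1,
            "segmentation": [[300.0, 210.0, 308.0, 210.0, 308.0, 218.0, 300.0, 218.0]],
            "bbox": [300.0, 210.0, 8.0, 8.0],  # [x, y, width, height]
            "area": 64.0, "iscrowd": 0,
        },
        {   # a thin, elongated wrinkle
            "id": 2, "image_id": 1, "category_id": 2,
            "segmentation": [[100.0, 150.0, 220.0, 146.0, 220.0, 150.0, 100.0, 154.0]],
            "bbox": [100.0, 146.0, 120.0, 8.0],
            "area": 480.0, "iscrowd": 0,
        },
    ],
}

def instances_per_category(dataset):
    """Count the annotated instances of each category name."""
    names = {cat["id"]: cat["name"] for cat in dataset["categories"]}
    counts = {name: 0 for name in names.values()}
    for annotation in dataset["annotations"]:
        counts[names[annotation["category_id"]]] += 1
    return counts

serialized = json.dumps(coco, indent=2)
```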

Using our collection of 2225 face photos with annotations on facial skin problem instances, a total of 31 Mask R-CNN models were trained. The data collection was split into a training set, a validation set, and a test set, as shown in Table 4.

Table 4.

Distribution of Data Collection.

The training set consists of 1557 photos, which is 70% of the data collection. The validation set consists of 223 photos, which is 10% of the data collection. The test set consists of 445 photos, which is 20% of the data collection.
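The split sizes can be checked with a short sketch that divides 2225 photo identifiers into subsets of 1557, 223, and 445. The random shuffle, the seed, and the function name `split_dataset` are assumptions, since the text does not state how photos were assigned to each subset.

```python
import random

def split_dataset(photo_ids, n_train, n_val, seed=0):
    """Shuffle the ids and cut them into training, validation, and test subsets."""
    ids = list(photo_ids)
    random.Random(seed).shuffle(ids)  # assumption: random assignment
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]
    return train, val, test

total = 2225
train, val, test = split_dataset(range(total), n_train=1557, n_val=223)

# Share of each subset in percent, rounded to one decimal place.
shares = [round(100 * len(subset) / total, 1) for subset in (train, val, test)]
```

The three subsets are disjoint by construction, and their shares of the collection round to 70%, 10%, and 20%.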

The hyperparameters for training the models are set to a learning rate of 0.001, a maximum of 1000 epochs, and a batch size of 5. Early stopping is applied to prevent the models from overfitting to the training set.
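The early-stopping rule can be sketched in plain Python as follows. The patience value, the use of validation loss as the monitored quantity, and the function name `early_stopping_epoch` are assumptions, as the text only states that early stopping is applied.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops once the loss has not improved by more than `min_delta`
    for `patience` consecutive epochs. Epochs are counted from 0.
    """
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch
    return len(val_losses) - 1, best_epoch

# Hypothetical validation losses: the best value is reached at epoch 2.
losses = [1.00, 0.80, 0.70, 0.72, 0.71, 0.73, 0.69]
stop_epoch, best_epoch = early_stopping_epoch(losses, patience=3)
```

In this toy run, training stops at epoch 5 and the weights from epoch 2 would be kept, so the later value of 0.69 is never reached. This is the usual trade-off when choosing the patience.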

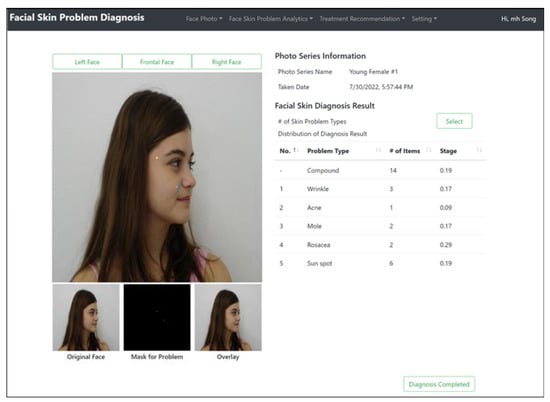

5.2. Proof-of-Concept Implementation

A web-based facial skin diagnosis system has been implemented in Python using the following libraries and tools: TensorFlow for developing the CNN models, NumPy for processing mask data, OpenCV for processing facial photos, MySQL for managing the database, and the Django framework for building the website.

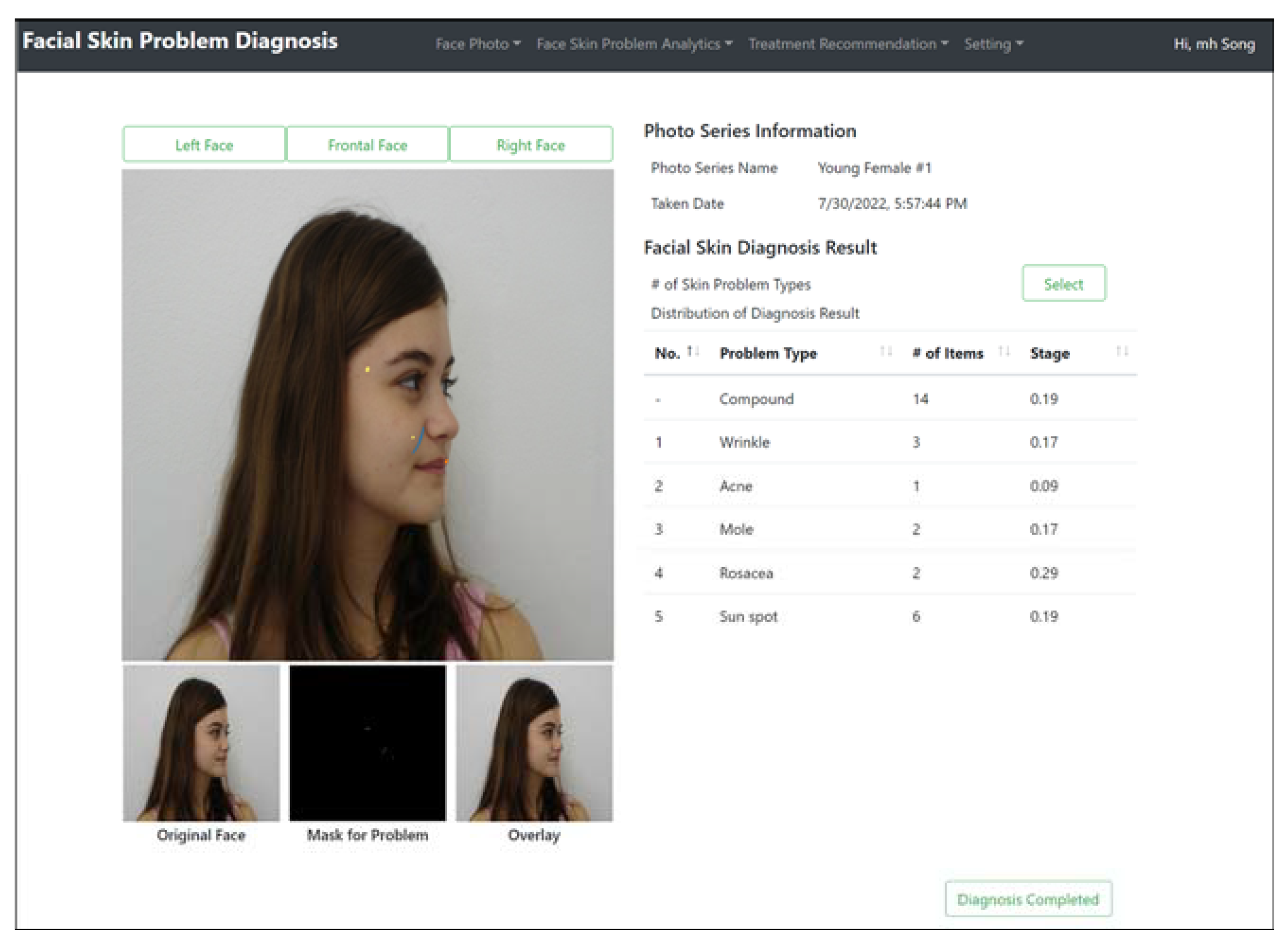

Figure 12 shows the web user interface of this system.

Figure 12.

User Interface of Facial Skin Problem Diagnosis System.

The original image, masks generated around the facial skin problems, and overlay of the mask on the original image are shown on the left-side of the figure. The right-side of the figure shows the results of identifying facial skin problem instances.

5.3. Performance Metric for Facial Skin Problem Diagnosis

The Dice Similarity Coefficient (DSC) is an appropriate measure for evaluating the segmentation performance of the models. DSC measures how closely the segmented areas match the labeled areas on an image. The metric is defined as follows.

Let LB be the mask for the label data of a photo, and let SEG be the mask of the segmentation results for the photo. Let |X| be the area of the masked instances in a mask X. DSC is twice the area of the intersection of LB and SEG, divided by the sum of the masked areas of LB and SEG:

$$\mathrm{DSC} = \frac{2\,|LB \cap SEG|}{|LB| + |SEG|}$$

The range of DSC is between 0 and 1. The more accurate the segmentation, the closer the DSC value is to 1.
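As a minimal sketch, DSC in its standard form, 2|LB ∩ SEG| / (|LB| + |SEG|), can be computed on boolean masks with NumPy. The function name `dice_coefficient` and the convention of returning 1.0 when both masks are empty are assumptions made for illustration.

```python
import numpy as np

def dice_coefficient(label_mask, seg_mask):
    """Dice Similarity Coefficient of two masks of the same shape."""
    label_mask = np.asarray(label_mask, dtype=bool)
    seg_mask = np.asarray(seg_mask, dtype=bool)
    total = label_mask.sum() + seg_mask.sum()
    if total == 0:
        return 1.0  # assumption: two empty masks count as a perfect match
    intersection = np.logical_and(label_mask, seg_mask).sum()
    return 2.0 * intersection / total

# Toy example: a 4-pixel label and a 6-pixel segmentation that covers it.
label = np.zeros((4, 4), dtype=bool)
label[0:2, 0:2] = True
segmented = np.zeros((4, 4), dtype=bool)
segmented[0:2, 0:3] = True

score = dice_coefficient(label, segmented)  # 2 * 4 / (4 + 6)
```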

5.4. Experiment Scenarios and Results

Three kinds of experiments were conducted: experiments to evaluate the effectiveness of each of the 5 tactics, an experiment to evaluate the integration of all the tactics, and an experiment to compare our approach to other known approaches.

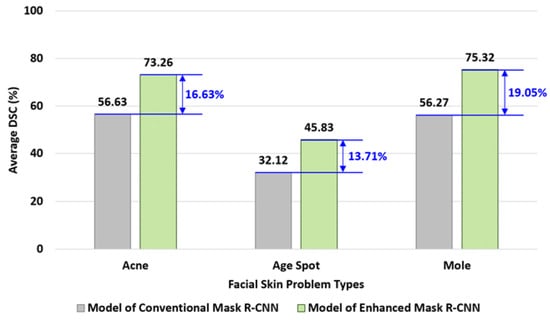

5.4.1. Experiment for Tactic #1: Refined Mask R-CNN Segmentation Models

This experiment evaluates the effectiveness of the Refined Mask R-CNN model. This is done by training two segmentation models: a model with the Mask R-CNN structure and a model with the refined Mask R-CNN structure with fusion and deconvolution layers.

The dataset of 410 face photos was used and each photo contains one or more facial skin problems of acne, age spots, and moles. These facial skin problem types are quite small in size, and the bounding box of the largest problem instance in the dataset is sized to (12 × 12) pixels.

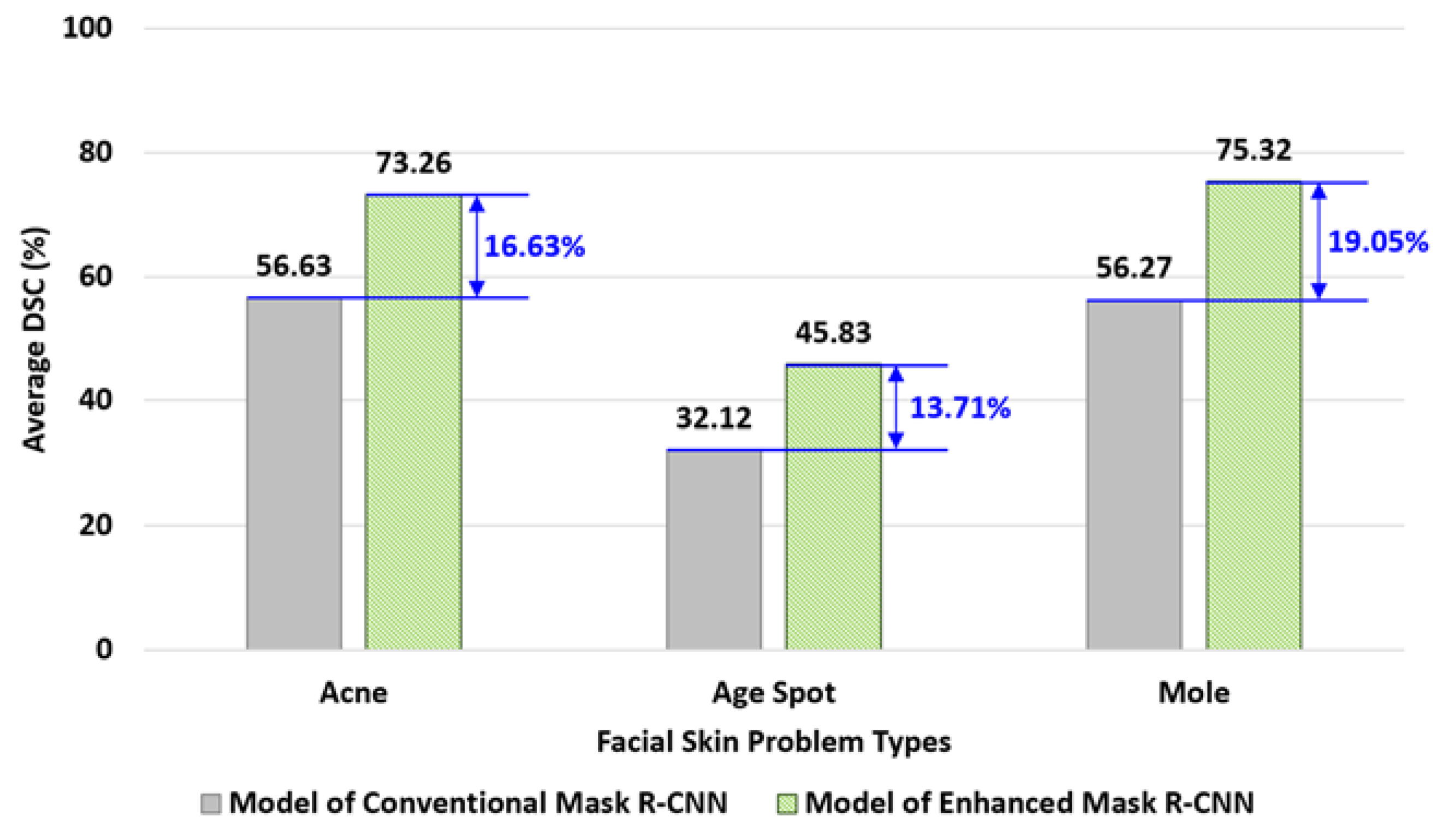

The performances of the two models are compared in Figure 13.

Figure 13.

Comparing performances of conventional Mask R-CNN and enhanced Mask R-CNN models.

In this experiment, a segmentation model of conventional Mask R-CNN was applied to detect facial skin problems on all the face photos, the performance on each photo was measured with DSC, and the average of all the DSC measurements was computed. Then, a segmentation model with the enhanced Mask R-CNN structure was trained and applied, and its performance was measured in DSC in the same way.

For the facial photos with acne problems, the conventional Mask R-CNN model yielded 56.63% of DSC, whereas the enhanced Mask R-CNN model yielded 73.26%, as shown in the figure. This is a gain of 16.63 percentage points.

In summary, the performances of segmenting acne, age spots, and mole problems were increased by 16.63%, 13.7%, and 19.05%, respectively, and the average of performance gains for all 3 types of facial skin problems is 16.46%.

5.4.2. Experiment for Tactic #2: Super Resolution GAN Model

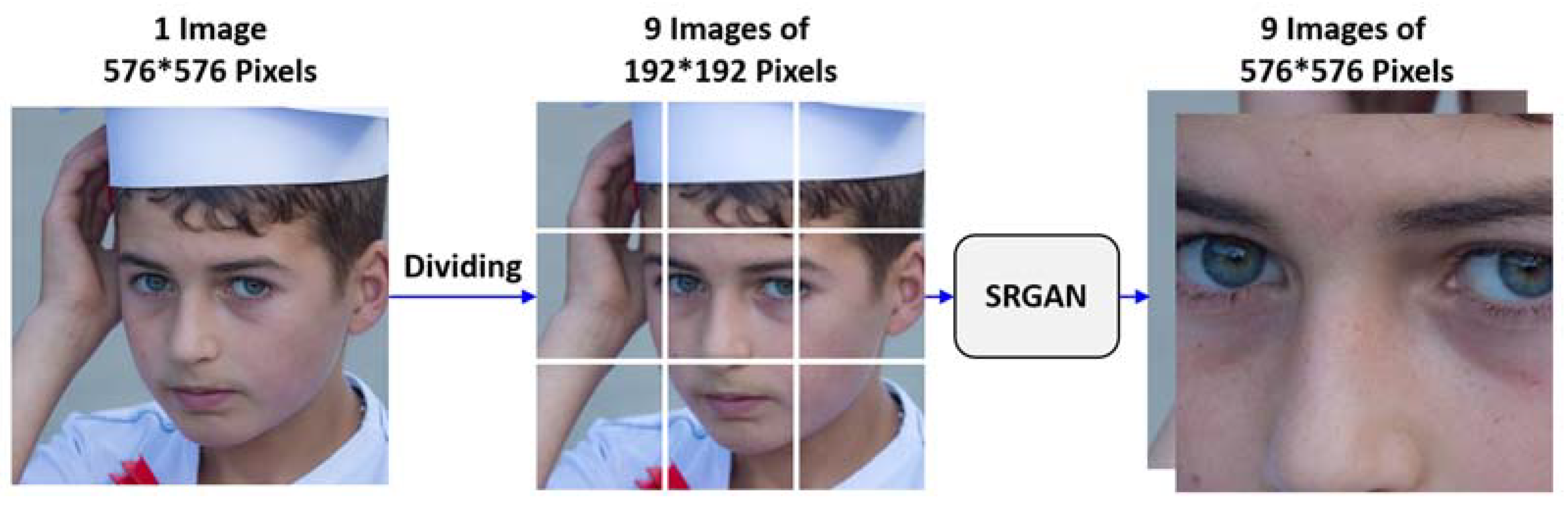

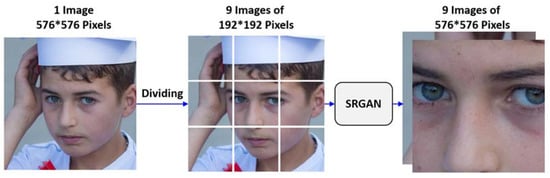

This experiment evaluates the effectiveness of applying the Super Resolution GAN (SR-GAN) model to enhance the quality of facial images. A dataset of 3 facial skin problem types was prepared: acne, rosacea, and wrinkles. A segmentation model combining the Mask R-CNN and SR-GAN structures was trained and used in the experiments. The results of applying the super resolution tactic with SR-GAN are shown in Figure 14.

Figure 14.

Result of applying super resolution with SR-GAN.

In the figure, the original face image is partitioned into 9 images of (192 × 192) size, which is required by the trained Mask R-CNN model. Then, each image is fed into the SR-GAN model that will enhance the quality of images as shown in the figure.
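The partitioning step can be sketched with NumPy slicing: a (576 × 576) photo is cut into a 3 × 3 grid of (192 × 192) tiles. The function names `split_into_tiles` and `merge_tiles` are hypothetical, and the SR-GAN enhancement itself is not shown.

```python
import numpy as np

def split_into_tiles(image, tile=192):
    """Cut an H x W (x C) image into tiles of size tile x tile, row by row."""
    height, width = image.shape[:2]
    if height % tile or width % tile:
        raise ValueError("image size must be a multiple of the tile size")
    return [image[r:r + tile, c:c + tile]
            for r in range(0, height, tile)
            for c in range(0, width, tile)]

def merge_tiles(tiles, columns):
    """Reassemble row-ordered tiles into one image with `columns` tiles per row."""
    rows = [np.hstack(tiles[i:i + columns]) for i in range(0, len(tiles), columns)]
    return np.vstack(rows)

# Synthetic photo with unique pixel values, so the round trip can be checked.
photo = np.arange(576 * 576 * 3, dtype=np.uint32).reshape(576, 576, 3)
tiles = split_into_tiles(photo)
restored = merge_tiles(tiles, columns=576 // 192)
```

The same row-by-row ordering can be used to place the per-tile segmentation results back at their original positions.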

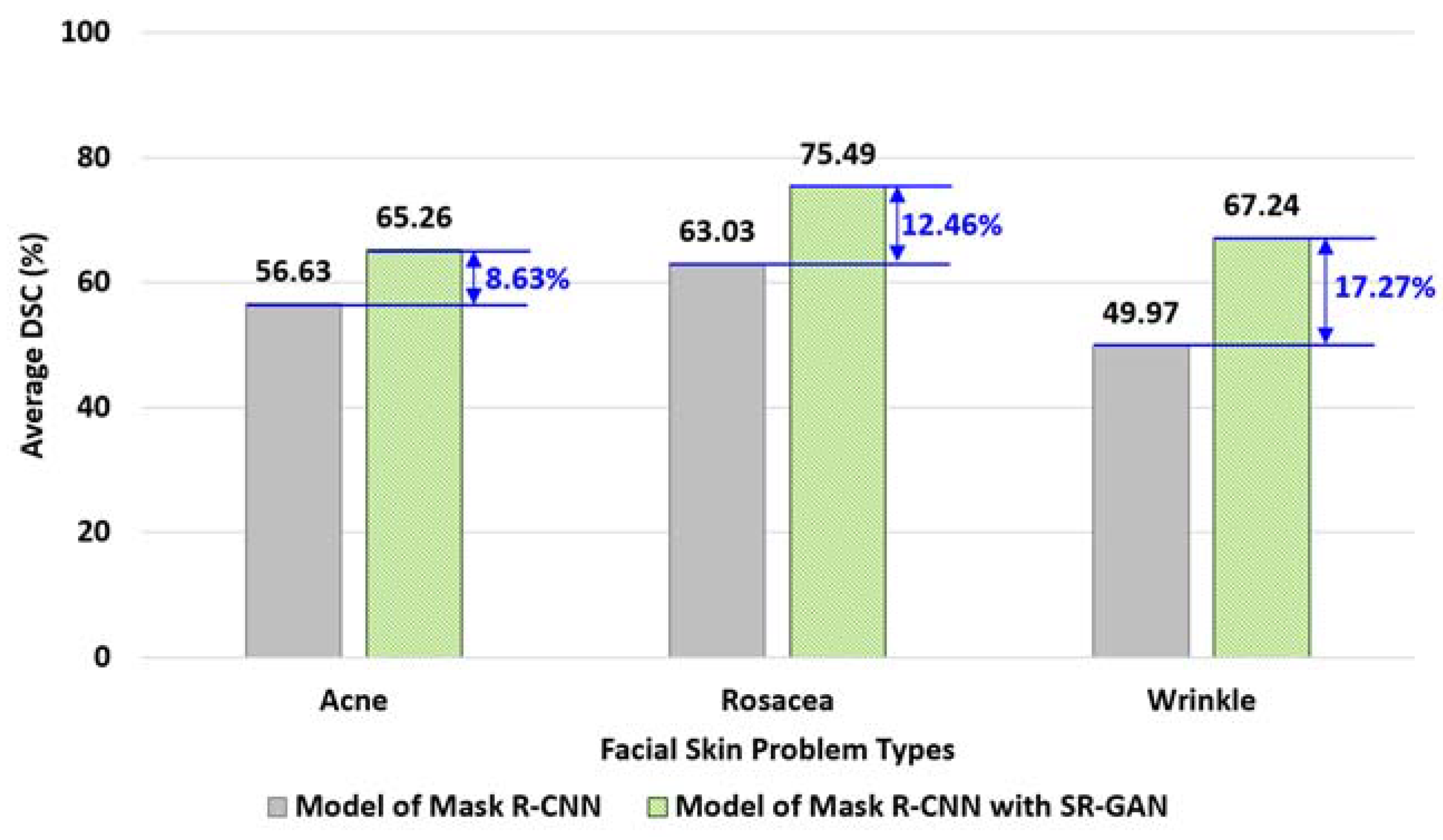

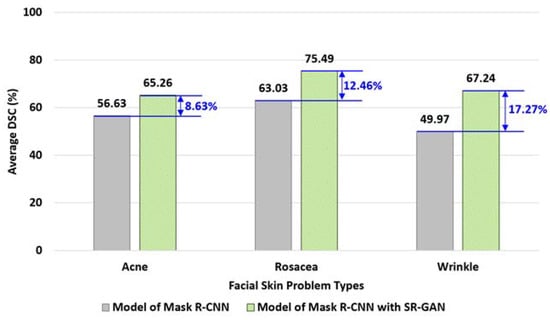

To compare the performance of super resolution, a segmentation model was trained with conventional Mask R-CNN and another segmentation model with both Mask R-CNN and SR-GAN. The comparison of the performances of the two models is shown in Figure 15.

Figure 15.

Comparing performances of segmentation with and without SR-GAN.

The Mask R-CNN segmentation model was applied to all the face photos, the performance was measured in DSC, and the average of all the DSC measurements was computed. Then, a segmentation model with both Mask R-CNN and SR-GAN was trained and applied to perform the same operations.

For wrinkle problems, the Mask R-CNN model yielded 49.97% of DSC, whereas the model with both Mask R-CNN and SR-GAN yielded 67.24% of DSC, as shown in the figure. This is a gain of 17.27 percentage points.

In summary, the performances of segmenting acne, rosacea, and wrinkle problems were increased by 8.63%, 12.46%, and 17.27%, respectively, and the average of performance gains for all 3 types of facial skin problems is 12.79%.

5.4.3. Experiment for Tactic #3: Facial Skin Problem-Specific Models

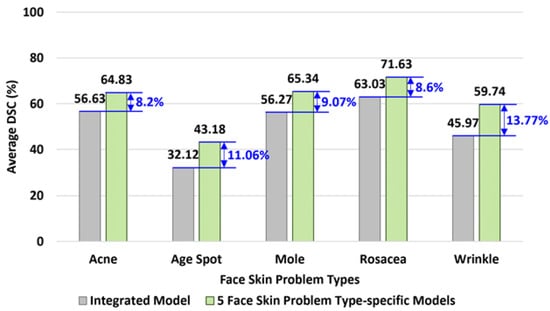

This experiment evaluates the effectiveness of applying facial skin problem-specific models instead of using a single integrated model. A dataset of face photos for 5 types of skin problems was used: acne, age spots, moles, rosacea, and wrinkles.

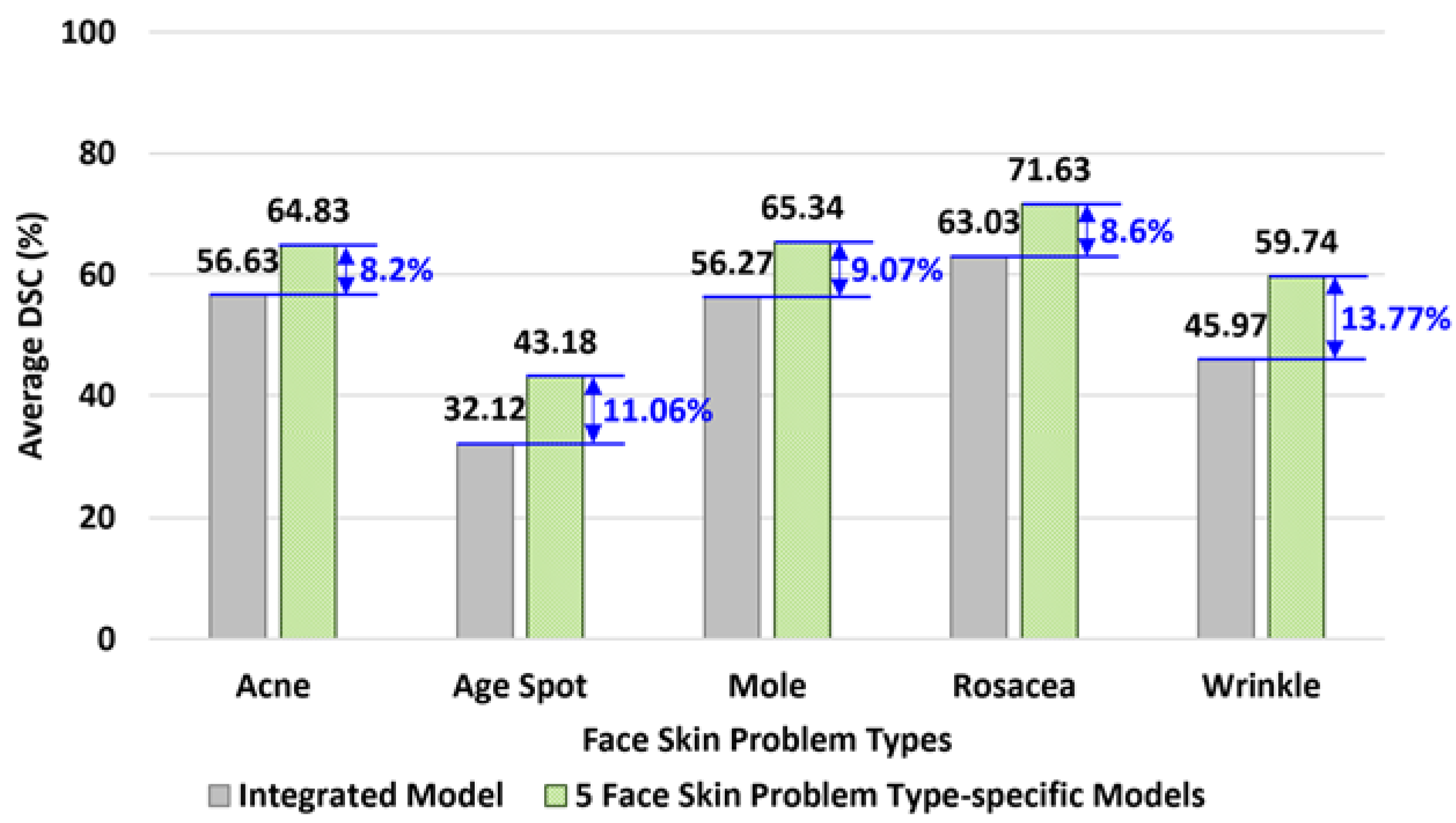

An integrated Mask R-CNN model for all 5 types of facial skin problem types was trained. Then, a set of 5 individual segmentation models for 5 different facial skin problem types were trained. Then, their performances were measured and compared as shown in Figure 16.

Figure 16.

Comparing performance of integrated model and facial skin problem type-specific model.

For wrinkle problems, the integrated segmentation model yielded 45.97% of DSC, whereas the wrinkle-specific segmentation model yielded 59.74% of DSC, as shown in the figure. This is a gain of 13.77 percentage points.

The performances of segmenting acne, age spots, moles, rosacea, and wrinkles were increased by 8.2%, 11.06%, 9.07%, 8.6%, and 13.77%, respectively, and the average of performance gains for all 5 types of facial skin problems is 10.14%.

5.4.4. Experiment for Tactic #4: Face Direction-Specific Models

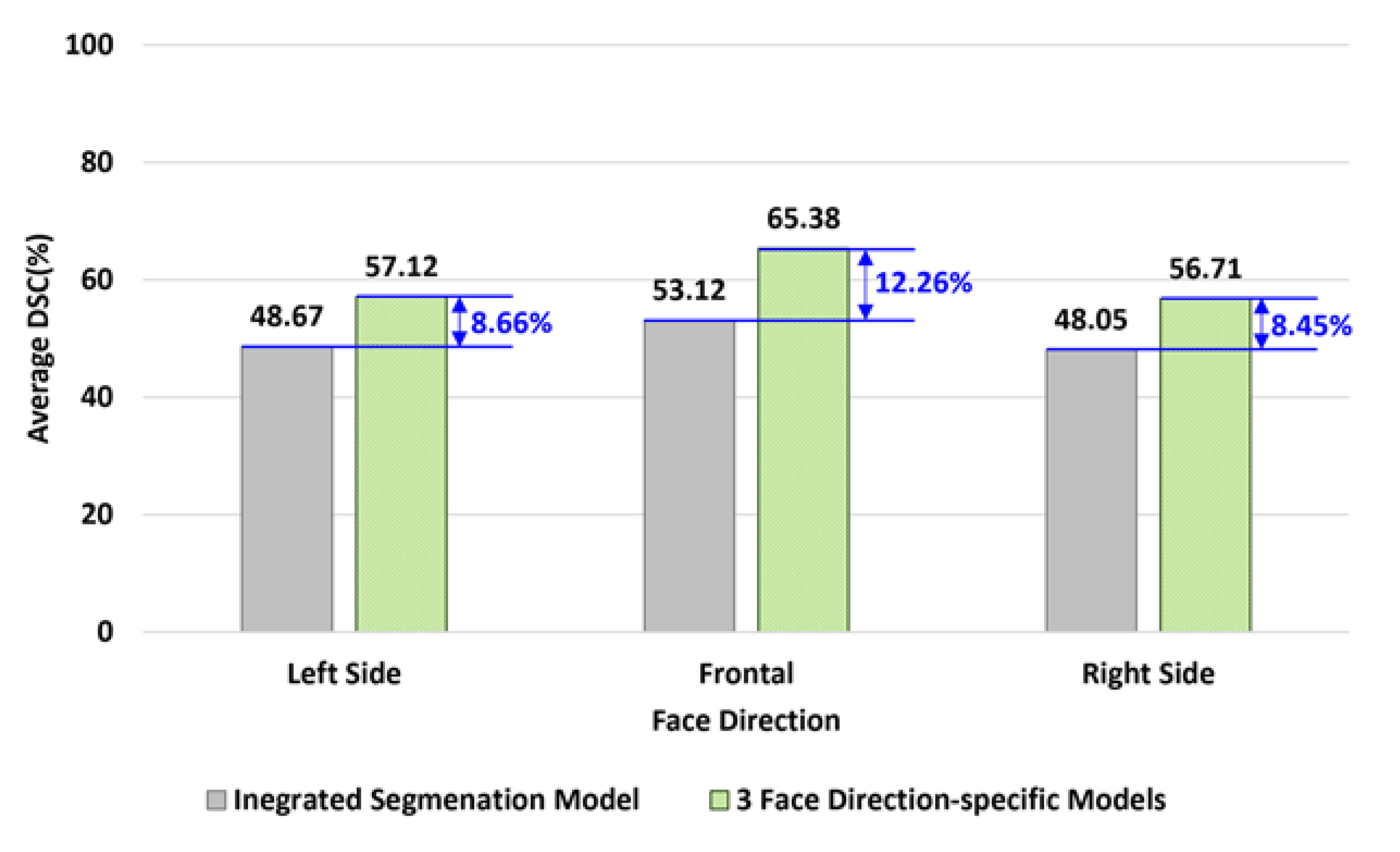

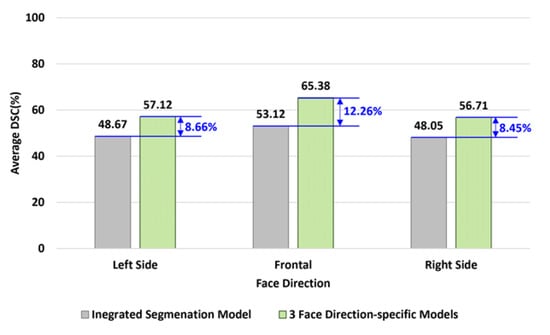

This experiment evaluates the effectiveness of applying face direction-specific models instead of using a single integrated model. A dataset of face photos taken in 3 different directions was used in this experiment.

A Mask R-CNN model was trained to segment face photos in any direction. Then, a set of 3 individual models for 3 face directions were trained: left-side, frontal, and right-side directions. Then, their performances were measured and compared, as shown in Figure 17.

Figure 17.

Comparing performances of integrated model and face direction-specific models.

For the photos of frontal faces, the integrated segmentation model yielded 53.12% of DSC, whereas the frontal direction-specific model yielded 65.38%, as shown in the figure. This is a gain of 12.26 percentage points.

The average performances of segmenting face photos showing the left-side face, frontal face, and right-side face were increased by 8.45%, 12.26%, and 8.66%, respectively, and the average of performance gains for all 3 face directions is 9.79%.

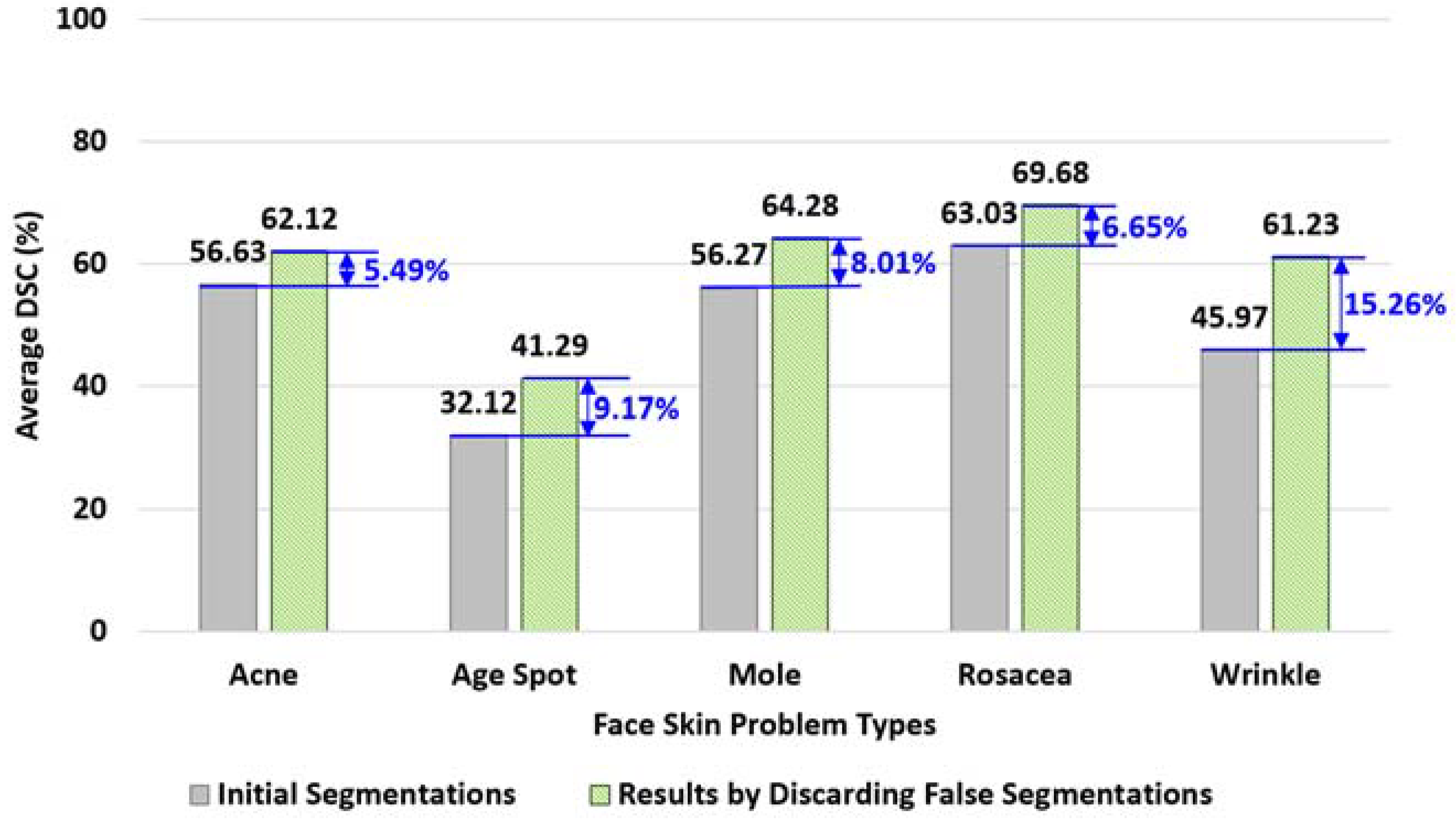

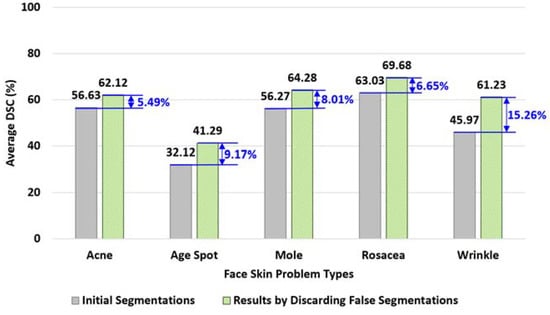

5.4.5. Experiment for Tactic #5: Discarding False Segmentations

This experiment evaluates the effectiveness of discarding false segmentations made on non-facial areas. A Mask R-CNN model was trained and applied to detect facial skin problems. Then, the software component implementing the tactic of discarding false segmentations was applied to discard any false segmentations in the results.

The performance of the diagnosis without applying this tactic and the performance of the diagnosis by applying this tactic were measured and compared as shown in Figure 18.

Figure 18.

Comparing performances of segmentations with and without discarding false segmentations.

For the wrinkle problems, the segmentation with Mask R-CNN yielded 45.97% of DSC, whereas the performance after discarding false segmentations was 61.23%, as shown in the figure. This is a gain of 15.26 percentage points.

The performances of segmenting acne, age spots, moles, rosacea, and wrinkles were increased by 5.49%, 9.17%, 8.01%, 6.65%, and 15.26%, respectively, and the average of performance gains for all 5 types of facial skin problems is 8.92%.
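The average gains reported for the five tactics can be reproduced from the per-type and per-direction gains quoted in Sections 5.4.1 to 5.4.5. The sketch below only repeats that arithmetic. The dictionary labels and the helper `average_gain` are illustrative names, not part of the diagnosis system.

```python
# Gains in DSC as quoted in the text for each tactic.
reported_gains = {
    "Tactic #1 (refined Mask R-CNN)": [16.63, 13.7, 19.05],
    "Tactic #2 (SR-GAN)": [8.63, 12.46, 17.27],
    "Tactic #3 (problem-specific models)": [8.2, 11.06, 9.07, 8.6, 13.77],
    "Tactic #4 (direction-specific models)": [8.45, 12.26, 8.66],
    "Tactic #5 (discarding false segmentations)": [5.49, 9.17, 8.01, 6.65, 15.26],
}

def average_gain(gains):
    """Arithmetic mean of the gains, rounded to two decimal places."""
    return round(sum(gains) / len(gains), 2)

averages = {tactic: average_gain(gains) for tactic, gains in reported_gains.items()}

for tactic, value in averages.items():
    print(f"{tactic}: {value:.2f}")
```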

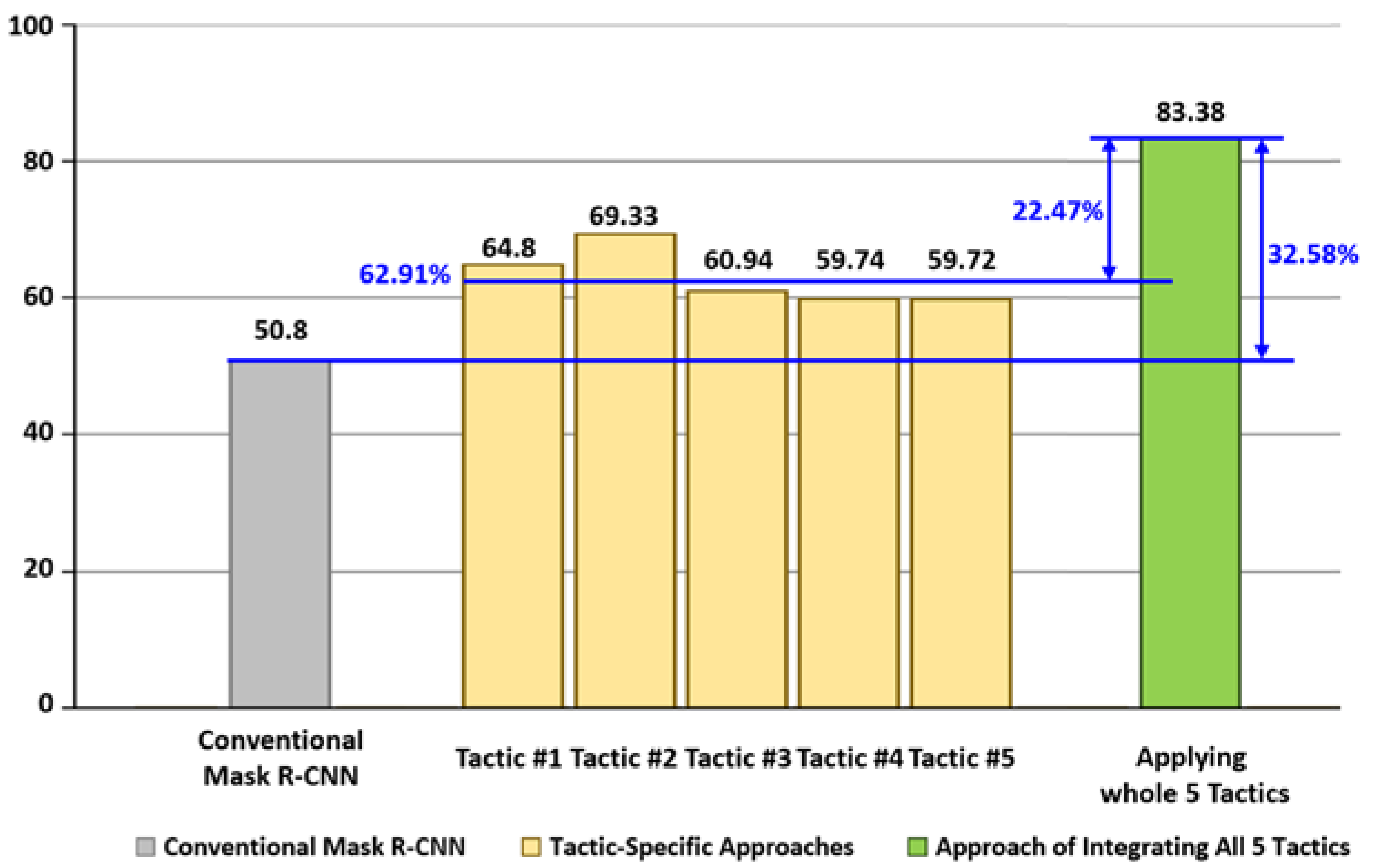

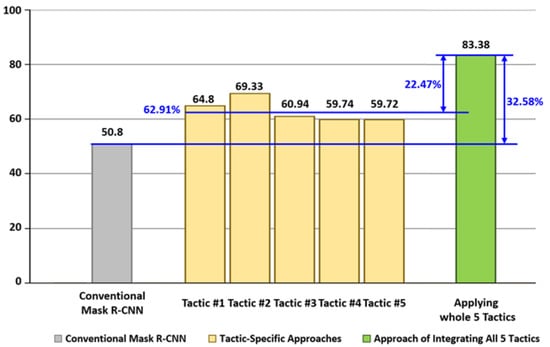

5.4.6. Experiment for Integrating all 5 Tactics

This experiment is to evaluate the performance of facial skin problem diagnosis by integrating all 5 tactics. A conventional Mask R-CNN segmentation model was trained for all the skin problem types. Then, a total of 30 individual segmentation models were trained for the 5 different types of tactics and the 3 facial photo directions.

Then, the performances of 3 different approaches were measured: (1) performance of the conventional Mask R-CNN model, (2) the average performances of 5 different tactics, and (3) the average performance of applying all 30 segmentation models.

The performances of the three approaches are compared in Figure 19.

Figure 19.

Comparing performances of the three approaches.

The conventional Mask R-CNN yielded 50.8% of DSC, the tactic-specific approaches yielded (64.6%, 69.33%, 60.94%, 59.74%, and 59.72%) of DSC, and the approach of integrating all 5 tactics yielded 83.38% of DSC.

The integrated approach outperformed the conventional Mask R-CNN approach by 32.58%, and it outperformed the tactic-specific approach by an average of 22.47%.

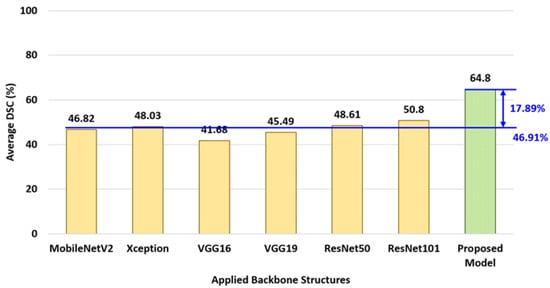

5.4.7. Experiment for Comparing with Other Backbone Networks

This experiment was to compare the performance of our proposed approach with diagnosis models trained with 6 different backbone networks: MobileNetV2, Xception, VGG16, VGG19, ResNet50, and ResNet101.

Table 5 shows the code segments of training the segmentation models using the 6 different backbone networks.

Table 5.

Code segment of training segmentation models using the 6 backbone networks.

The code segment configures the (6 + 1) segmentation backbone structures inside the build method (lines 17 to 23) and configures the remaining network structures of Mask R-CNN inside the build_rpn_and_mrcnn method (lines 26–32).

Once the network structures are configured, all 7 segmentation models are trained and applied to detect facial skin problems using the test set of 445 photos.

The comparison of their average performances is shown in Figure 20.

Figure 20.

Comparing the proposed approach with 6 other backbone networks.

As shown in the figure, the segmentation models with the MobileNetV2, Xception, VGG16, VGG19, ResNet50, and ResNet101 backbones show an average detection performance of 46.91% in DSC, which is 17.89% lower than the performance of our proposed model.

6. Concluding Remarks

The condition of the facial skin is perceived as a vital indicator of the person’s apparent age, perceived beauty, and degree of health. For this reason, people wish to maintain youthful facial skin without aging symptoms.

Machine-learning-based software analytics on facial skin conditions can be a time- and cost-efficient alternative to the conventional approach of visiting the clinics. However, the current CNN-based approaches have been shown to be limited in the diagnosis performance and, hence, limited in their applicability in clinics.

In this paper, the set of 5 technical challenges in diagnosing facial skin problems were addressed. Then, a set of 5 effective design tactics to overcome the technical challenges in diagnosing facial skin problems were presented. Each proposed tactic is devised to resolve one or more technical challenges.

Using a data collection of 2225 photos, a total of 31 segmentation models were trained and applied to the experiments. The experiments showed 83.38% of the diagnosis performance when applying all 5 tactics, which outperforms the conventional CNN approach by 32.58%. The diagnosis system presented in this study can potentially be utilized in developing clinical diagnosis systems.

Author Contributions

Conceptualization, M.K.; Methodology, M.K. and M.H.S.; Software, M.K. and M.H.S.; Investigation, M.H.S.; Writing—original draft, M.K. and M.H.S.; Supervision, M.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Shen, X.; Zhang, J.; Yan, C.; Zhou, H. An Automatic Diagnosis Method of Facial Acne Vulgaris Based on Convolutional Neural Network. Sci. Rep. 2018, 8, 5839.

- Quattrini, A.; Boër, C.; Leidi, T.; Paydar, R. A Deep Learning-Based Facial Acne Classification System. Clin. Cosmet. Investig. Dermatol. 2022, 15, 851–857.

- Zhao, Z.; Wu, C.-M.; Zhang, S.; He, F.; Liu, F.; Wang, B.; Huang, Y.; Shi, W.; Jian, D.; Xie, H.; et al. A Novel Convolutional Neural Network for the Diagnosis and Classification of Rosacea: Usability Study. JMIR Public Health Surveill. 2021, 9, e23415.

- Liu, S.; Chen, Z.; Zhou, H.; He, K.; Duan, M.; Zheng, Q.; Xiong, P.; Huang, L.; Yu, Q.; Su, G.; et al. DiaMole: Mole Detection and Segmentation Software for Mobile Phone Skin Images. J. Health Eng. 2021, 2021, 6698176.

- Wu, H.; Yin, H.; Chen, H.; Sun, M.; Liu, X.; Yu, Y.; Tang, Y.; Long, H.; Zhang, B.; Zhang, J.; et al. A deep learning, image based approach for automated diagnosis for inflammatory skin diseases. Ann. Transl. Med. 2020, 8, 581.

- Gerges, F.; Shih, F.; Azar, D. Automated Diagnosis of Acne and Rosacea using Convolution Neural Networks. In Proceedings of the 2021 4th International Conference on Artificial Intelligence and Pattern Recognition (AIPR 2021), Xiamen, China, 24–26 September 2021.

- Yadav, N.; Alfayeed, S.M.; Khamparia, A.; Pandey, B.; Thanh, D.N.H.; Pande, S. HSV model-based segmentation driven facial acne detection using deep learning. Expert Syst. 2021, 39, e12760.

- Junayed, M.S.; Islam, B.; Jeny, A.A.; Sadeghzadeh, A.; Biswas, T.; Shah, A.F.M.S. ScarNet: Development and Validation of a Novel Deep CNN Model for Acne Scar Classification With a New Dataset. IEEE Access 2021, 10, 1245–1258.

- Bekmirzaev, S.; Oh, S.; Yo, S. RethNet: Object-by-Object Learning for Detecting Facial Skin Problems. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Seoul, Republic of Korea, 27–28 October 2019.

- Gessert, N.; Sentker, T.; Madesta, F.; Schmitz, R.; Kniep, H.; Baltruschat, I.; Werner, R.; Schlaefer, A. Skin Lesion Classification Using CNNs With Patch-Based Attention and Diagnosis-Guided Loss Weighting. IEEE Trans. Biomed. Eng. 2019, 67, 495–503.

- Gulzar, Y.; Khan, S.A. Skin Lesion Segmentation Based on Vision Transformers and Convolutional Neural Networks—A Comparative Study. Appl. Sci. 2022, 12, 5990.

- Li, H.; Pan, Y.; Zhao, J.; Zhang, L. Skin disease diagnosis with deep learning: A review. Neurocomputing 2021, 464, 364–393.

- Cui, L.; Ma, R.; Lv, P.; Jiang, X.; Gao, Z.; Zhou, B.; Xu, M. MDSSD: Multi-scale deconvolutional single shot detector for small objects. Sci. China Inf. Sci. 2020, 63, 120113.

- Liu, Z.; Yeoh, J.K.; Gu, X.; Dong, Q.; Chen, Y.; Wu, W.; Wang, L.; Wang, D. Automatic pixel-level detection of vertical cracks in asphalt pavement based on GPR investigation and improved mask R-CNN. Autom. Constr. 2023, 146, 104689.

- Nie, X.; Duan, M.; Ding, H.; Hu, B.; Wong, E.K. Attention Mask R-CNN for Ship Detection and Segmentation From Remote Sensing Images. IEEE Access 2020, 8, 9325–9334.

- Liu, M.; Wang, X.; Zhou, A.; Fu, X.; Ma, Y.; Piao, C. UAV-YOLO: Small Object Detection on Unmanned Aerial Vehicle Perspective. Sensors 2020, 20, 2238.

- Seo, H.; Huang, C.; Bassenne, M.; Xiao, R.; Xing, L. Modified U-Net (mU-Net) with Incorporation of Object-Dependent High Level Features for Improved Liver and Liver-Tumor Segmentation in CT Images. IEEE Trans. Med. Imaging 2019, 39, 1316–1325.

- Peng, C.; Zhang, Y.; Zheng, J.; Li, B.; Shen, J.; Li, M.; Liu, L.; Qiu, B.; Chen, D.Z. IMIIN: An Inter-modality Information Interaction Network for 3D Multi-modal Breast Tumor Segmentation. Comput. Med. Imaging Graph. 2021, 95, 102021.

- Tian, G.; Liu, J.; Zhao, H.; Yang, W. Small object detection via dual inspection mechanism for UAV visual images. Appl. Intell. 2021, 52, 4244–4257.

- Chen, C.; Zhong, J.; Tan, Y. Multiple-Oriented and Small Object Detection with Convolutional Neural Networks for Aerial Image. Remote Sens. 2019, 11, 2176.

- Amudhan, A.N.; Sudheer, A.P. Lightweight and computationally faster Hypermetropic Convolutional Neural Network for small size object detection. Image Vis. Comput. 2022, 119, 104396.

- Zhang, Q.; Yang, G.; Zhang, G. Collaborative Network for Super-Resolution and Semantic Segmentation of Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 21546971.

- Da Wang, Y.; Blunt, M.J.; Armstrong, R.T.; Mostaghimi, P. Deep learning in pore scale imaging and modeling. Earth-Sci. Rev. 2021, 215, 103555.

- Guo, Z.; Wu, G.; Song, X.; Yuan, W.; Chen, Q.; Zhang, H.; Shi, X.; Xu, M.; Xu, Y.; Shibasaki, R.; et al. Super-Resolution Integrated Building Semantic Segmentation for Multi-Source Remote Sensing Imagery. IEEE Access 2019, 7, 99381–99397.

- Aboobacker, S.; Verma, A.; Vijayasenan, D.; Suresh, P.K.; Sreeram, S. Semantic Segmentation on Low Resolution Cytology Images of Pleural and Peritoneal Effusion. In Proceedings of the 2022 National Conference on Communications (NCC 2022), Mumbai, India, 24–27 May 2022.

- Fromm, M.; Berrendorf, M.; Faerman, E.; Chen, Y.; Schüss, B.; Schubert, M. XD-STOD: Cross-Domain Super resolution for Tiny Object Detection. In Proceedings of the 2019 International Conference on Data Mining Workshops (ICDMW 2019), Beijing, China, 8–11 November 2019.

- Wei, S.; Zeng, X.; Zhang, H.; Zhou, Z.; Shi, J.; Zhang, X. LFG-Net: Low-Level Feature Guided Network for Precise Ship Instance Segmentation in SAR Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 21865424.

- Wei, J.; Xia, Y.; Zhang, Y. M3Net: A multi-model, multi-size, and multi-view deep neural network for brain magnetic resonance image segmentation. Pattern Recognit. 2019, 91, 366–378.

- Zhou, X.; Takayama, R.; Wang, S.; Hara, T.; Fujita, H. Deep learning of the sectional appearances of 3D CT images for anatomical structure segmentation based on an FCN voting method. Med. Phys. 2017, 44, 5221–5233.

- Liu, Y.; Kwak, H.-S.; Oh, I.-S. Cerebrovascular Segmentation Model Based on Spatial Attention-Guided 3D Inception U-Net with Multi-Directional MIPs. Appl. Sci. 2022, 12, 2288.

- Sander, J.; de Vos, B.D.; Išgum, I. Automatic segmentation with detection of local segmentation failures in cardiac MRI. Sci. Rep. 2020, 10, 21769.

- Scherr, T.; Löffler, K.; Böhland, M.; Mikut, R. Cell segmentation and tracking using CNN-based distance predictions and a graph-based matching strategy. PLoS ONE 2020, 15, e0243219.

- Larrazabal, A.J.; Martinez, C.; Ferrante, E. Anatomical Priors for Image Segmentation via Post-processing with Denoising Autoencoders. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI 2019), Shenzhen, China, 13–17 October 2019.

- Chan, R.; Rottmann, M.; Gottschalk, H. Entropy Maximization and Meta Classification for Out-of-Distribution Detection in Semantic Segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 1–17 October 2021; pp. 5128–5137.

- Shuvo, B.; Ahommed, R.; Reza, S.; Hashem, M. CNL-UNet: A novel lightweight deep learning architecture for multimodal biomedical image segmentation with false output suppression. Biomed. Signal Process. Control. 2021, 70, 102959.

- Khan, S.A.; Gulzar, Y.; Turaev, S.; Peng, Y.S. A Modified HSIFT Descriptor for Medical Image Classification of Anatomy Objects. Symmetry 2021, 13, 1987.

- Hamid, Y.; Elyassami, S.; Gulzar, Y.; Balasaraswathi, V.R.; Habuza, T.; Wani, S. An improvised CNN model for fake image detection. Int. J. Inf. Technol. 2022, 2022.

- Zhang, W.; Itoh, K.; Tanida, J.; Ichioka, Y. Parallel distributed processing model with local space-invariant interconnections and its optical architecture. Appl. Opt. 1990, 29, 4790–4797.

- Nguyen, N.-D.; Do, T.; Ngo, T.D.; Le, D.-D. An Evaluation of Deep Learning Methods for Small Object Detection. J. Electr. Comput. Eng. 2020, 2020, 3189691.

- Liu, Y.; Sun, P.; Wergeles, N.; Shang, Y. A survey and performance evaluation of deep learning methods for small object detection. Expert Syst. Appl. 2021, 172, 114602.

- Sun, C.; Ai, Y.; Wang, S.; Zhang, W. Mask-guided SSD for small-object detection. Appl. Intell. 2020, 51, 3311–3322.

- Flament, F.; Francois, G.; Qiu, H.; Ye, C.; Hanaya, T.; Batisse, D.; Cointereau-Chardon, S.; Seixas, M.D.G.; Belo, S.E.D.; Bazin, R. Facial skin pores: A multiethnic study. Clin. Cosmet. Investig. Dermatol. 2015, 8, 85–93.

- National Cancer Institute. Common Moles, Dysplastic Nevi, and Risk of Melanoma. Available online: https://www.cancer.gov/types/skin/moles-fact-sheet (accessed on 4 January 2023).

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.P.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 53.

- Indolia, S.; Goswami, A.K.; Mishra, S.; Asopa, P. Conceptual Understanding of Convolutional Neural Network- A Deep Learning Approach. Procedia Comput. Sci. 2018, 132, 679–688.

- Luo, C.; Li, X.; Wang, L.; He, J.; Li, D.; Zhou, J. How Does the Data set Affect CNN-based Image Classification Performance? In Proceedings of the 2018 5th International Conference on Systems and Informatics (ICSAI 2018), Nanjing, China, 10–12 November 2018.

- Zheng, X.; Qi, L.; Ren, Y.; Lu, X. Fine-Grained Visual Categorization by Localizing Object Parts With Single Image. IEEE Trans. Multimedia 2020, 23, 1187–1199.

- Avianto, D.; Harjoko, A.; Afiahayati. CNN-Based Classification for Highly Similar Vehicle Model Using Multi-Task Learning. J. Imaging 2022, 8, 293.

- Ju, M.; Moon, S.; Yoo, C.D. Object Detection for Similar Appearance Objects Based on Entropy. In Proceedings of the 2019 7th International Conference on Robot Intelligence Technology and Applications (RiTA 2019), Daejeon, Republic of Korea, 1–3 November 2019.

- Jang, W.; Lee, E.C. Multi-Class Parrot Image Classification Including Subspecies with Similar Appearance. Biology 2021, 10, 1140.

- Facial Landmarks Shape Predictor. Available online: https://github.com/codeniko/shape_predictor_81_face_landmarks (accessed on 4 January 2023).

- Wu, X.; Wen, N.; Liang, J.; Lai, Y.K.; She, D.; Cheng, M.; Yang, J. Joint Acne Image Grading and Counting via Label Distribution Learning. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV 2019), Seoul, Republic of Korea, 27 October–2 November 2019.

- Karras, T.; Laine, S.; Aila, T. A Style-Based Generator Architecture for Generative Adversarial Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 4217–4228.

- Wu, X. Pytorch Implementation of Joint Acne Image Grading and Counting via Label Distribution Learning. Available online: https://github.com/xpwu95/LDL (accessed on 4 January 2023).

- NVIDIA Research Lab. FFHQ Dataset. Available online: https://github.com/NVlabs/ffhq-dataset (accessed on 4 January 2023).

- Thomaz, C.E. FEI Face Database. Available online: https://fei.edu.br/~cet/facedatabase.html (accessed on 4 January 2023).

- COCO Annotator. Available online: https://github.com/jsbroks/coco-annotator (accessed on 4 January 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).