Spam Reviews Detection in the Time of COVID-19 Pandemic: Background, Definitions, Methods and Literature Analysis

Abstract

1. Introduction

The inclusion criteria were as follows:

- Articles whose titles are related to some or all of the search keywords.

- Articles containing keywords that are a subset of the search keywords.

- Articles whose abstracts describe spam reviews detection (or a synonym of it).

- Articles that propose new models or techniques for spam reviews detection, or modify existing ones.

- Articles that used existing spam reviews detection methods in their experiments.

The exclusion criteria were as follows:

- Articles published before 2020 or otherwise not published during the COVID-19 pandemic.

- Articles that do not include experiments on spam reviews detection.

- Articles that do not meet any of the inclusion criteria.
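Taken together, the two lists define a simple screening rule. An article is kept only if it meets at least one inclusion criterion and none of the exclusion criteria. The Python sketch below illustrates that logic under stated assumptions. The `Article` record and its fields, the keyword and synonym lists, the reading of "subset of the search keywords" as "shares a search keyword", and the 2020 to 2021 year window are all hypothetical. They are not the survey's actual search protocol.

```python
from dataclasses import dataclass

# Hypothetical search terms and topic synonyms; the survey's exact query
# strings are not given, so these are illustrative assumptions.
SEARCH_KEYWORDS = ("spam", "review", "detection")
TOPIC_PHRASES = ("spam review", "fake review", "deceptive review", "opinion spam")
PANDEMIC_YEARS = range(2020, 2022)  # assumed window: 2020 and 2021


@dataclass
class Article:
    """Hypothetical bibliographic record; every field name is an assumption."""
    title: str
    abstract: str
    keywords: frozenset
    year: int
    proposes_or_modifies_method: bool = False
    uses_existing_method: bool = False
    has_spam_detection_experiments: bool = False


def meets_inclusion(a: Article) -> bool:
    """True if at least one inclusion criterion holds."""
    title = a.title.lower()
    abstract = a.abstract.lower()
    return any((
        # Title relates to some or all of the search keywords.
        any(k in title for k in SEARCH_KEYWORDS),
        # Article keywords overlap the search keywords (assumed reading).
        any(k in kw.lower() for kw in a.keywords for k in SEARCH_KEYWORDS),
        # Abstract describes spam reviews detection or a synonym.
        any(p in abstract for p in TOPIC_PHRASES),
        # Proposes a new detection model or alters an existing one.
        a.proposes_or_modifies_method,
        # Applies an existing detection method in its experiments.
        a.uses_existing_method,
    ))


def is_excluded(a: Article) -> bool:
    """True if any exclusion criterion holds."""
    return (
        a.year not in PANDEMIC_YEARS               # outside the pandemic window
        or not a.has_spam_detection_experiments    # no spam-detection experiments
        or not meets_inclusion(a)                  # meets no inclusion criterion
    )


def screen(articles):
    """Keep only the articles that trigger no exclusion criterion."""
    return [a for a in articles if not is_excluded(a)]
```

The last exclusion criterion is the negation of the inclusion list. For that reason, `is_excluded` calls `meets_inclusion` directly rather than duplicating the checks, and `screen` only needs to filter on exclusions.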

Compared with the period before the pandemic, online reviews during COVID-19 changed in several ways:

- The number of reviews increased as more people stayed at home.

- Review behavior differs between the two periods in terms of the products, services, and consumers targeted, the style of the content, and the reviews' impact.

- Spam reviews also grew in number as more individuals read and searched for reviews.

- Detection models improved and became more varied, as shown in Section 3.

This survey aims to:

- Outline the previous spam detection surveys and their contributions.

- Provide background on the concepts underlying spam reviews detection, including their definitions and motivations.

- Review the studies published during the COVID-19 pandemic (2020 and 2021) to analyze the detection methods and review types of that period.

- Analyze all works and address their limitations, advantages, and how to improve them.

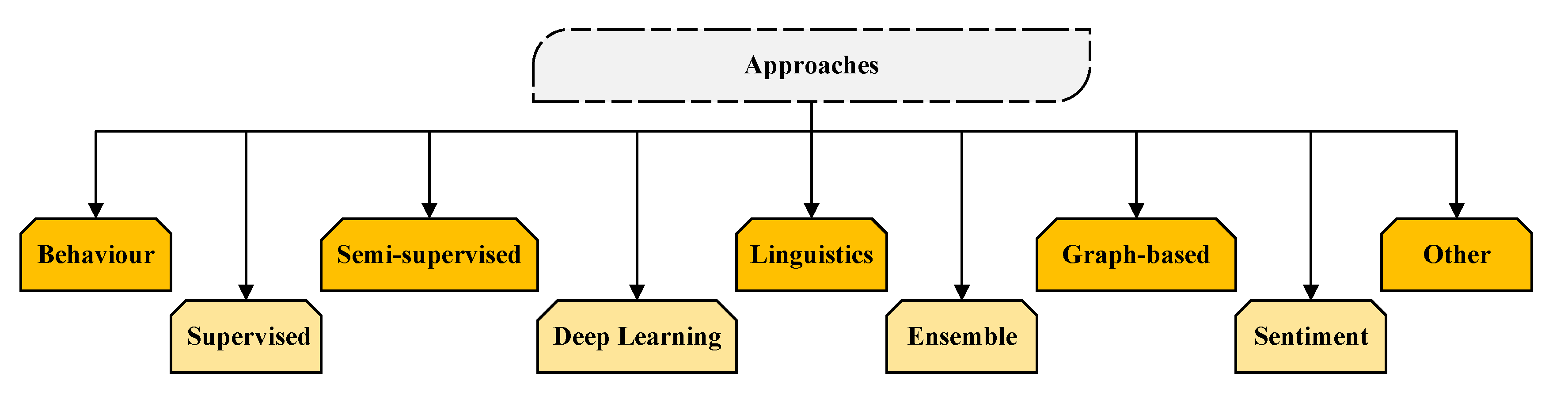

- Classify the detection methods into nine different categories.

- Present a literature analysis, discussion, and future directions of the spam reviews detection.

2. Background, Definitions and Motivation

2.1. Online Reviews

2.2. Spam Reviews

2.3. Detection

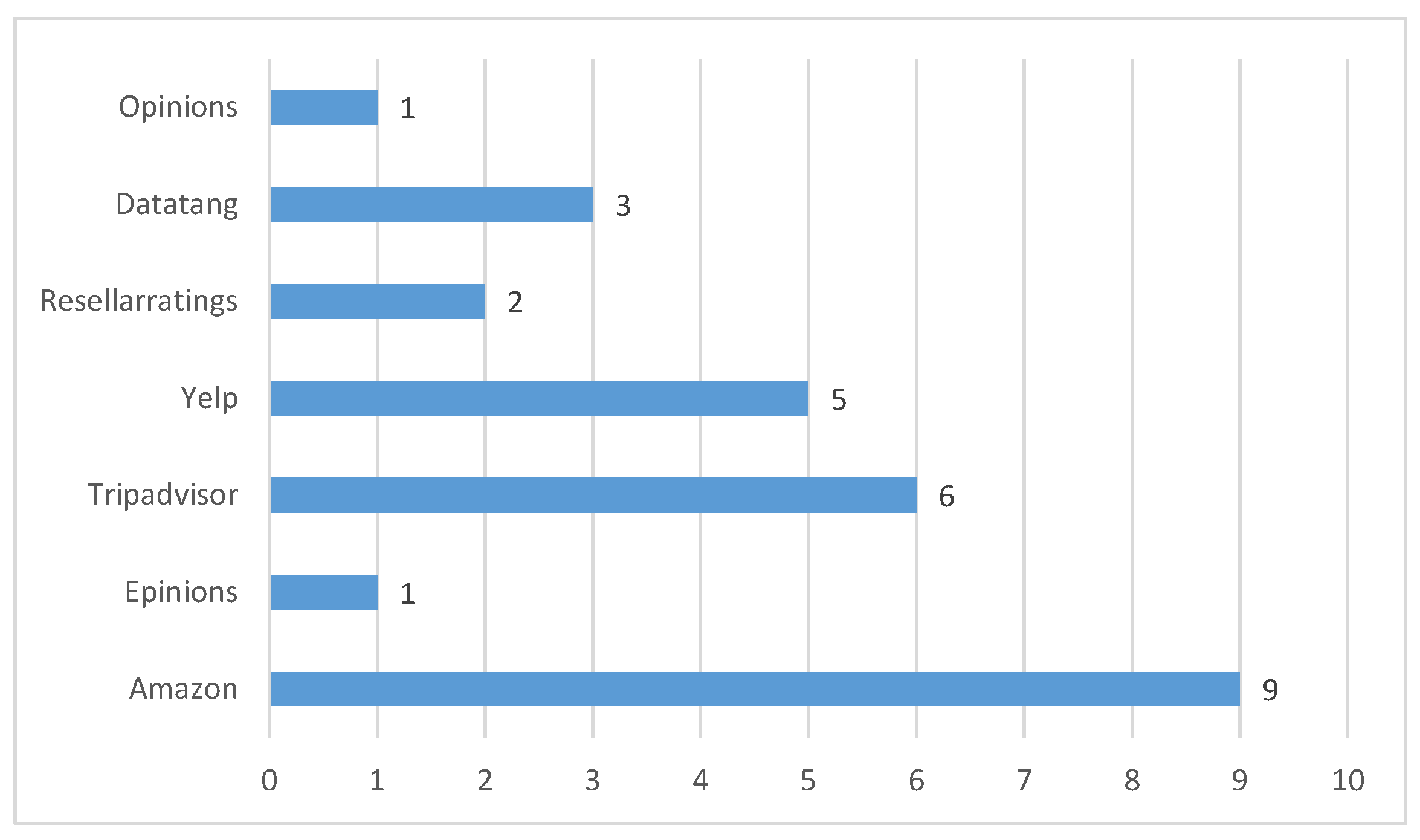

2.4. Datasets

3. Approaches

3.1. Semi-Supervised

3.2. Deep Learning

3.3. Linguistics

3.4. Ensemble

3.5. Graph-Based

3.6. Sentiment-Based

3.7. Other Approaches

4. Literature Analysis, Discussion and Future Directions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Gewin, V. Five tips for moving teaching online as COVID-19 takes hold. Nature 2020, 580, 295–296. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Eccleston, C.; Blyth, F.M.; Dear, B.F.; Fisher, E.A.; Keefe, F.J.; Lynch, M.E.; Palermo, T.M.; Reid, M.C.; de Williams, A.C.C. Managing patients with chronic pain during the COVID-19 outbreak: Considerations for the rapid introduction of remotely supported (eHealth) pain management services. Pain 2020, 161, 889. [Google Scholar] [CrossRef] [PubMed]

- Papanikolaou, D.; Schmidt, L.D. Working Remotely and the Supply-Side Impact of COVID-19; Technical Report; National Bureau of Economic Research: Cambridge, MA, USA, 2020. [Google Scholar]

- Kim, R.Y. The impact of COVID-19 on consumers: Preparing for digital sales. IEEE Eng. Manag. Rev. 2020, 48, 212–218. [Google Scholar] [CrossRef]

- Eriksson, N.; Stenius, M. Changing behavioral patterns in grocery shopping in the initial phase of the COVID-19 crisis—A qualitative study of news articles. Open J. Bus. Manag. 2020, 8, 1946. [Google Scholar] [CrossRef]

- Xu, R.; Xia, Y.; Wong, K.F.; Li, W. Opinion annotation in on-line Chinese product reviews. In Proceedings of the Sixth International Conference on Language Resources and Evaluation (LREC’08), Marrakech, Morocco, 28–30 May 2008; Volume 8, pp. 26–30. [Google Scholar]

- Samha, A.K.; Li, Y.; Zhang, J. Aspect-based opinion extraction from customer reviews. arXiv 2014, arXiv:1404.1982. [Google Scholar]

- Ren, Y.; Ji, D. Learning to detect deceptive opinion spam: A survey. IEEE Access 2019, 7, 42934–42945. [Google Scholar] [CrossRef]

- Ho-Dac, N.N.; Carson, S.J.; Moore, W.L. The effects of positive and negative online customer reviews: Do brand strength and category maturity matter? J. Mark. 2013, 77, 37–53. [Google Scholar] [CrossRef] [Green Version]

- Penalver-Martinez, I.; Garcia-Sanchez, F.; Valencia-Garcia, R.; Rodriguez-Garcia, M.A.; Moreno, V.; Fraga, A.; Sanchez-Cervantes, J.L. Feature-based opinion mining through ontologies. Expert Syst. Appl. 2014, 41, 5995–6008. [Google Scholar] [CrossRef]

- Popescu, A.M.; Etzioni, O. Extracting product features and opinions from reviews. In Natural Language Processing and Text Mining; Springer: Berlin/Heidelberg, Germany, 2007; pp. 9–28. [Google Scholar]

- Hu, M.; Liu, B. Mining and summarizing customer reviews. In Proceedings of the Tenth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Seattle, WA, USA, 22–25 August 2004; pp. 168–177. [Google Scholar]

- Kirkpatrick, D.; Roth, D.; Ryan, O. Why there’s no escaping the blog. Fortune 2005, 151, 44–50. [Google Scholar]

- Luo, Y.; Xu, X. Comparative study of deep learning models for analyzing online restaurant reviews in the era of the COVID-19 pandemic. Int. J. Hosp. Manag. 2021, 94, 102849. [Google Scholar] [CrossRef]

- Heydari, A.; ali Tavakoli, M.; Salim, N.; Heydari, Z. Detection of review spam: A survey. Expert Syst. Appl. 2015, 42, 3634–3642. [Google Scholar] [CrossRef]

- Lau, R.Y.; Liao, S.; Kwok, R.C.W.; Xu, K.; Xia, Y.; Li, Y. Text mining and probabilistic language modeling for online review spam detection. ACM Trans. Manag. Inf. Syst. (TMIS) 2012, 2, 1–30. [Google Scholar] [CrossRef]

- Serrano-Guerrero, J.; Olivas, J.A.; Romero, F.P.; Herrera-Viedma, E. Sentiment analysis: A review and comparative analysis of web services. Inf. Sci. 2015, 311, 18–38. [Google Scholar] [CrossRef]

- Elmogy, A.M.; Tariq, U.; Mohammed, A.; Ibrahim, A. Fake Reviews Detection using Supervised Machine Learning. Int. J. Adv. Comput. Sci. Appl 2021, 12, 601–606. [Google Scholar] [CrossRef]

- Amin, I.; Dubey, M.K. An overview of soft computing techniques on Review Spam Detection. In Proceedings of the 2021 2nd International Conference on Intelligent Engineering and Management (ICIEM), London, UK, 28–30 April 2021; pp. 91–96. [Google Scholar]

- Deshmukh, N.; Dhumal, V.; Gavasane, N.; Jadhav, S.V. Spam Detection by Using Knn Algorithm Techniques. Int. J. 2021, 6, 27–33. [Google Scholar]

- Tupe, R.; Palapure, G.; Kambli, P.; Pawar, S.; Singh, M. A review paper on fake reviews detection system for online product reviews using machine learning. Int. J. 2021, 6, 5. [Google Scholar]

- Gadkari, C.; Ahmed, S.; Darekar, D.; Agnihotri, D.; Pagare, R. Scrutiny of Fraudulent Product Reviews and Approach to Filtrate the Aforementioned. Int. Res. J. Eng. Technol. 2021, 8, 387. [Google Scholar]

- Caron, B. Detecting Online Review Fraud Using Sentiment Analysis; Minnesota State University: Mankato, MN, USA, 2021. [Google Scholar]

- Singh, D.; Memoria, M. Enhanced Classifier Model for Detecting Spam Reviews Using Advanced Machine Learning Techniques. Des. Eng. 2021, 1–12. [Google Scholar] [CrossRef]

- Hassan, R.; Islam, M.R. Impact of Sentiment Analysis in Fake Online Review Detection. In Proceedings of the 2021 International Conference on Information and Communication Technology for Sustainable Development (ICICT4SD), Dhaka, Bangladesh, 27–28 February 2021; pp. 21–24. [Google Scholar]

- Vachane, D. Online Products Fake Reviews Detection System Using Machine Learning. Turk. J. Comput. Math. Educ. (TURCOMAT) 2021, 12, 29–39. [Google Scholar]

- Chernyaeva, O.; Hong, T. The Detection of Deception Reviews Using Sentiment Analysis and Machine Learning. In Proceedings of the 2020 Korea Management Information Society Fall Conference (KMIS); 2020; pp. 291–293. Available online: https://www.earticle.net/Article/A392615 (accessed on 31 March 2022).

- Tang, S.; Jin, L.; Cheng, F. Fraud Detection in Online Product Review Systems via Heterogeneous Graph Transformer. IEEE Access 2021, 9, 167364–167373. [Google Scholar] [CrossRef]

- Zhu, Y.; Liu, H.; Du, Y.; Wu, Z. IFSpard: An Information Fusion-based Framework for Spam Review Detection. In Proceedings of the Web Conference 2021, Ljubljana, Slovenia, 19–23 April 2021; pp. 507–517. [Google Scholar]

- Yin, C.; Cuan, H.; Zhu, Y.; Yin, Z. Improved Fake Reviews Detection Model Based on Vertical Ensemble Tri-Training and Active Learning. ACM Trans. Intell. Syst. Technol. (TIST) 2021, 12, 1–19. [Google Scholar] [CrossRef]

- Bian, P.; Liu, L.; Sweetser Kyburz, P. Detecting Spam Game Reviews on Steam with a Semi-Supervised Approach. In Proceedings of the International Conference on the Foundations of Digital Game, Montreal, QC, Canada, 3–6 August 2021. [Google Scholar]

- Zhong, M.; Li, Z.; Liu, S.; Yang, B.; Tan, R.; Qu, X. Fast Detection of Deceptive Reviews by Combining the Time Series and Machine Learning. Complexity 2021, 2021, 9923374. [Google Scholar] [CrossRef]

- Rathore, P.; Soni, J.; Prabakar, N.; Palaniswami, M.; Santi, P. Identifying Groups of Fake Reviewers Using a Semisupervised Approach. IEEE Trans. Comput. Soc. Syst. 2021, 8, 1369–1378. [Google Scholar] [CrossRef]

- Manaskasemsak, B.; Tantisuwankul, J.; Rungsawang, A. Fake review and reviewer detection through behavioral graph partitioning integrating deep neural network. Neural Comput. Appl. 2021, 1–14. [Google Scholar] [CrossRef]

- Alsubari, S.N.; Deshmukh, S.N.; Al-Adhaileh, M.H.; Alsaade, F.W.; Aldhyani, T.H. Development of Integrated Neural Network Model for Identification of Fake Reviews in E-Commerce Using Multidomain Datasets. Appl. Bionics Biomech. 2021, 2021, 5522574. [Google Scholar] [CrossRef]

- Ghoshal, M. Fake Users and Reviewers Detection System. Turk. J. Comput. Math. Educ. (TURCOMAT) 2021, 12, 2090–2098. [Google Scholar]

- Sahoo, R.K.; Sethi, S.; Udgata, S.K. A Smartphone App Based Model for Classification of Users and Reviews (A Case Study for Tourism Application). In Intelligent Systems; Springer: Singapore, 2020; p. 337. [Google Scholar]

- Kim, J.; Kang, J.; Shin, S.; Myaeng, S.H. Can You Distinguish Truthful from Fake Reviews? User Analysis and Assistance Tool for Fake Review Detection. In Proceedings of the First Workshop on Bridging Human–Computer Interaction and Natural Language Processing, Online, 10 December 2021; pp. 53–59. [Google Scholar]

- Crawford, M.; Khoshgoftaar, T.M.; Prusa, J.D.; Richter, A.N.; Al Najada, H. Survey of review spam detection using machine learning techniques. J. Big Data 2015, 2, 1–24. [Google Scholar] [CrossRef] [Green Version]

- Rajamohana, S.P.; Umamaheswari, K.; Dharani, M.; Vedackshya, R. A survey on online review SPAM detection techniques. In Proceedings of the 2017 International Conference on INNOVATIONS in Green Energy and Healthcare Technologies (IGEHT), Coimbatore, India, 16–18 March 2017; pp. 1–5. [Google Scholar]

- Aslam, U.; Jayabalan, M.; Ilyas, H.; Suhail, A. A survey on opinion spam detection methods. Int. J. Sci. Technol. Res. 2019, 8, 1355–1363. [Google Scholar]

- Hussain, N.; Turab Mirza, H.; Rasool, G.; Hussain, I.; Kaleem, M. Spam review detection techniques: A systematic literature review. Appl. Sci. 2019, 9, 987. [Google Scholar] [CrossRef] [Green Version]

- Istanto, R.S.; Mahmudy, W.F.; Bachtiar, F.A. Detection of online review spam: A literature review. In Proceedings of the 5th International Conference on Sustainable Information Engineering and Technology, Malang, Indonesia, 16–17 November 2020; pp. 57–63. [Google Scholar]

- Wu, Y.; Ngai, E.W.; Wu, P.; Wu, C. Fake online reviews: Literature review, synthesis, and directions for future research. Decis. Support Syst. 2020, 132, 113280. [Google Scholar] [CrossRef]

- Li, J.; Wang, X.; Yang, L.; Zhang, P.; Yang, D. Identifying ground truth in opinion spam: An empirical survey based on review psychology. Appl. Intell. 2020, 50, 3554–3569. [Google Scholar] [CrossRef]

- Rodrigues, J.C.; Rodrigues, J.T.; Gonsalves, V.L.K.; Naik, A.U.; Shetgaonkar, P.; Aswale, S. Machine & Deep Learning Techniques for Detection of Fake Reviews: A Survey. In Proceedings of the 2020 International Conference on Emerging Trends in Information Technology and Engineering (ic-ETITE), Vellore, India, 24–25 February 2020; pp. 1–8. [Google Scholar]

- Farooq, M.S. Spam Review Detection: A Systematic Literature Review. TechRxiv, 2020. [Google Scholar]

- Thahira, A.; Sabitha, S. A Survey on Online Review Spammer Group Detection. In Second International Conference on Networks and Advances in Computational Technologies; Springer: Berlin/Heidelberg, Germany, 2021; pp. 13–23. [Google Scholar]

- Mohawesh, R.; Xu, S.; Tran, S.N.; Ollington, R.; Springer, M.; Jararweh, Y.; Maqsood, S. Fake Reviews Detection: A Survey. IEEE Access 2021, 9, 65771–65802. [Google Scholar] [CrossRef]

- Paul, H.; Nikolaev, A. Fake review detection on online E-commerce platforms: A systematic literature review. Data Min. Knowl. Discov. 2021, 35, 1830–1881. [Google Scholar] [CrossRef]

- De Pelsmacker, P.; Van Tilburg, S.; Holthof, C. Digital marketing strategies, online reviews and hotel performance. Int. J. Hosp. Manag. 2018, 72, 47–55. [Google Scholar] [CrossRef]

- Lo, A.S.; Yao, S.S. What makes hotel online reviews credible? Int. J. Contemp. Hosp. Manag. 2019, 31, 41–60. [Google Scholar] [CrossRef]

- Hu, N.; Liu, L.; Zhang, J.J. Do online reviews affect product sales? The role of reviewer characteristics and temporal effects. Inf. Technol. Manag. 2008, 9, 201–214. [Google Scholar] [CrossRef]

- Brown, J.J.; Reingen, P.H. Social ties and word-of-mouth referral behavior. J. Consum. Res. 1987, 14, 350–362. [Google Scholar] [CrossRef]

- Kusumasondjaja, S.; Shanka, T.; Marchegiani, C. Credibility of online reviews and initial trust: The roles of reviewer’s identity and review valence. J. Vacat. Mark. 2012, 18, 185–195. [Google Scholar] [CrossRef]

- Valant, J. Online Consumer Reviews: The Case of Misleading or Fake Reviews. Eur. Parliam. Res. Serv. 2015, 2. Available online: https://policycommons.net/artifacts/1335788/online-consumer-reviews/1942502/ (accessed on 31 March 2022).

- Yang, Y.; Park, S.; Hu, X. Electronic word of mouth and hotel performance: A meta-analysis. Tour. Manag. 2018, 67, 248–260. [Google Scholar] [CrossRef]

- Zhu, F.; Zhang, X. Impact of online consumer reviews on sales: The moderating role of product and consumer characteristics. J. Mark. 2010, 74, 133–148. [Google Scholar] [CrossRef]

- Duan, W.; Gu, B.; Whinston, A.B. Do online reviews matter?—An empirical investigation of panel data. Decis. Support Syst. 2008, 45, 1007–1016. [Google Scholar] [CrossRef]

- Kim, W.G.; Li, J.J.; Brymer, R.A. The impact of social media reviews on restaurant performance: The moderating role of excellence certificate. Int. J. Hosp. Manag. 2016, 55, 41–51. [Google Scholar] [CrossRef]

- Chevalier, J.A.; Mayzlin, D. The effect of word of mouth on sales: Online book reviews. J. Mark. Res. 2006, 43, 345–354. [Google Scholar] [CrossRef] [Green Version]

- Torres, E.N.; Singh, D.; Robertson-Ring, A. Consumer reviews and the creation of booking transaction value: Lessons from the hotel industry. Int. J. Hosp. Manag. 2015, 50, 77–83. [Google Scholar] [CrossRef] [Green Version]

- Blal, I.; Sturman, M.C. The differential effects of the quality and quantity of online reviews on hotel room sales. Cornell Hosp. Q. 2014, 55, 365–375. [Google Scholar] [CrossRef] [Green Version]

- Cheng, Y.H.; Ho, H.Y. Social influence’s impact on reader perceptions of online reviews. J. Bus. Res. 2015, 68, 883–887. [Google Scholar] [CrossRef]

- Sitaula, C.; Basnet, A.; Mainali, A.; Shahi, T. Deep Learning-Based Methods for Sentiment Analysis on Nepali COVID-19-Related Tweets. Comput. Intell. Neurosci. 2021, 2021, 2158184. [Google Scholar] [CrossRef]

- Shahi, T.; Sitaula, C.; Paudel, N. A Hybrid Feature Extraction Method for Nepali COVID-19-Related Tweets Classification. Comput. Intell. Neurosci. 2022, 2022, 5681574. [Google Scholar] [CrossRef]

- Martens, D.; Maalej, W. Towards understanding and detecting fake reviews in app stores. Empir. Softw. Eng. 2019, 24, 3316–3355. [Google Scholar] [CrossRef] [Green Version]

- Hu, N.; Bose, I.; Koh, N.S.; Liu, L. Manipulation of online reviews: An analysis of ratings, readability, and sentiments. Decis. Support Syst. 2012, 52, 674–684. [Google Scholar] [CrossRef]

- Banerjee, S.; Chua, A.Y. Theorizing the textual differences between authentic and fictitious reviews: Validation across positive, negative and moderate polarities. Internet Res. 2017, 27, 321–337. [Google Scholar] [CrossRef]

- Luca, M.; Zervas, G. Fake it till you make it: Reputation, competition, and Yelp review fraud. Manag. Sci. 2016, 62, 3412–3427. [Google Scholar] [CrossRef] [Green Version]

- Schuckert, M.; Liu, X.; Law, R. Insights into suspicious online ratings: Direct evidence from TripAdvisor. Asia Pac. J. Tour. Res. 2016, 21, 259–272. [Google Scholar] [CrossRef]

- Munzel, A. Assisting consumers in detecting fake reviews: The role of identity information disclosure and consensus. J. Retail. Consum. Serv. 2016, 32, 96–108. [Google Scholar] [CrossRef]

- Salehi-Esfahani, S.; Ozturk, A.B. Negative reviews: Formation, spread, and halt of opportunistic behavior. Int. J. Hosp. Manag. 2018, 74, 138–146. [Google Scholar] [CrossRef]

- Reporter, T. TripAdvisor Told to Stop Claiming Reviews Are ‘Trusted and Honest’. 2012. Available online: https://www.dailymail.co.uk/travel/article-2094766/TripAdvisor-told-stopclaiming-reviews-trusted-honest-Advertising-Standards-Authority.html (accessed on 31 March 2022).

- Bates, D. Samsung Ordered to Pay $340,000 after It Paid People to Write Negative Online Reviews about HTC Phones. Daily Mail. 24 October 2013. Available online: https://www.dailymail.co.uk/sciencetech/article-2476630/Samsung-ordered-pay-340-000-paid-people-write-negative-online-reviews-HTC-phones.html (accessed on 31 March 2022).

- Gani, A. Amazon Sues 1000 ‘Fake Reviewers’. 2015. Available online: https://www.theguardian.com/technology/2015/oct/18/amazon-sues-1000-fake-reviewers (accessed on 31 March 2022).

- Zhao, R. Mafengwo Accused of Faking 85% of All User-Generated Content. 2018. Available online: https://technode.com/2018/10/22/mafengwo-fake-comments-blog-comment/ (accessed on 31 March 2022).

- Lee, S.Y.; Qiu, L.; Whinston, A. Sentiment manipulation in online platforms: An analysis of movie tweets. Prod. Oper. Manag. 2018, 27, 393–416. [Google Scholar] [CrossRef]

- Whitney, L. Companies to Pay $350,000 Fine over Fake Online Reviews. 2013. Available online: https://www.cnet.com/tech/services-and-software/companies-to-pay-350000-fine-over-fake-online-reviews/ (accessed on 31 March 2022).

- CIRS. China’s First e-Commerce Law Published. 2018. Available online: https://www.cirs-group.com/en/cosmetics/china-s-first-e-commerce-law-published (accessed on 31 March 2022).

- Shehnepoor, S.; Salehi, M.; Farahbakhsh, R.; Crespi, N. NetSpam: A network-based spam detection framework for reviews in online social media. IEEE Trans. Inf. Forensics Secur. 2017, 12, 1585–1595. [Google Scholar] [CrossRef]

- Saumya, S.; Singh, J.P. Detection of spam reviews: A sentiment analysis approach. CSI Trans. ICT 2018, 6, 137–148. [Google Scholar] [CrossRef]

- Karami, A.; Zhou, B. Online review spam detection by new linguistic features. In iConference 2015 Proceedings; iSchools: Grandville, MI, USA, 2015. [Google Scholar]

- Bajaj, S.; Garg, N.; Singh, S.K. A novel user-based spam review detection. Procedia Comput. Sci. 2017, 122, 1009–1015. [Google Scholar] [CrossRef]

- Habib, M.; Faris, H.; Hassonah, M.A.; Alqatawna, J.; Sheta, A.F.; Ala’M, A.Z. Automatic email spam detection using genetic programming with SMOTE. In Proceedings of the 2018 Fifth HCT Information Technology Trends (ITT), Dubai, United Arab Emirates, 28–29 November 2018; pp. 185–190. [Google Scholar]

- Al-Ahmad, B.; Al-Zoubi, A.; Abu Khurma, R.; Aljarah, I. An evolutionary fake news detection method for COVID-19 pandemic information. Symmetry 2021, 13, 1091. [Google Scholar] [CrossRef]

- Alzubi, O.A.; Alzubi, J.A.; Al-Zoubi, A.; Hassonah, M.A.; Kose, U. An efficient malware detection approach with feature weighting based on Harris Hawks optimization. Cluster Comput. 2021, 1–19. [Google Scholar] [CrossRef]

- Comito, C.; Forestiero, A.; Pizzuti, C. Word embedding based clustering to detect topics in social media. In Proceedings of the 2019 IEEE/WIC/ACM International Conference on Web Intelligence (WI), Thessaloniki, Greece, 14–17 October 2019; pp. 192–199. [Google Scholar]

- Forestiero, A. Bio-inspired algorithm for outliers detection. Multimed. Tools Appl. 2017, 76, 25659–25677. [Google Scholar] [CrossRef]

- Forestiero, A. Metaheuristic algorithm for anomaly detection in Internet of Things leveraging on a neural-driven multiagent system. Knowl.-Based Syst. 2021, 228, 107241. [Google Scholar] [CrossRef]

- Klein, G.; Pliske, R.; Crandall, B.; Woods, D.D. Problem detection. Cogn. Technol. Work 2005, 7, 14–28. [Google Scholar] [CrossRef]

- Cowan, D.A. Developing a process model of problem recognition. Acad. Manag. Rev. 1986, 11, 763–776. [Google Scholar] [CrossRef]

- Rapid7. Threat Detection. 2021. Available online: https://www.rapid7.com/fundamentals/threat-detection (accessed on 31 March 2022).

- Smith, C.; Brooks, D.J. Security Science: The Theory and Practice of Security; Butterworth-Heinemann: Oxford, UK, 2012. [Google Scholar]

- Navarro, J.; Deruyver, A.; Parrend, P. A systematic survey on multi-step attack detection. Comput. Secur. 2018, 76, 214–249. [Google Scholar] [CrossRef]

- Katzenbeisser, S.; Kinder, J.; Veith, H. Malware Detection. In Encyclopedia of Cryptography and Security; van Tilborg, H.C.A., Jajodia, S., Eds.; Springer: Boston, MA, USA, 2011; pp. 752–755. [Google Scholar] [CrossRef]

- Qaddoura, R.; Al-Zoubi, M.; Faris, H.; Almomani, I. A Multi-Layer Classification Approach for Intrusion Detection in IoT Networks Based on Deep Learning. Sensors 2021, 21, 2987. [Google Scholar] [CrossRef]

- Ghai, R.; Kumar, S.; Pandey, A.C. Spam detection using rating and review processing method. In Smart Innovations in Communication and Computational Sciences; Springer: Singapore, 2019; pp. 189–198. [Google Scholar]

- Shahariar, G.; Biswas, S.; Omar, F.; Shah, F.M.; Hassan, S.B. Spam review detection using deep learning. In Proceedings of the 2019 IEEE 10th Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON), Vancouver, BC, Canada, 17–19 October 2019; pp. 27–33. [Google Scholar]

- Liu, Y.; Pang, B.; Wang, X. Opinion spam detection by incorporating multimodal embedded representation into a probabilistic review graph. Neurocomputing 2019, 366, 276–283. [Google Scholar] [CrossRef]

- Salminen, J.; Kandpal, C.; Kamel, A.M.; Jung, S.g.; Jansen, B.J. Creating and detecting fake reviews of online products. J. Retail. Consum. Serv. 2022, 64, 102771. [Google Scholar] [CrossRef]

- Jindal, N.; Liu, B. Review spam detection. In Proceedings of the 16th International Conference on World Wide Web, Banff, AL, Canada, 8–12 May 2007; pp. 1189–1190. [Google Scholar]

- Li, F.H.; Huang, M.; Yang, Y.; Zhu, X. Learning to identify review spam. In Proceedings of the Twenty-Second International Joint Conference on Artificial Intelligence, Barcelona, Spain, 16–22 July 2011. [Google Scholar]

- Li, J.; Ott, M.; Cardie, C.; Hovy, E. Towards a general rule for identifying deceptive opinion spam. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Baltimore, MD, USA, 22–27 June 2014; pp. 1566–1576. [Google Scholar]

- Mukherjee, A.; Venkataraman, V.; Liu, B.; Glance, N. What yelp fake review filter might be doing? In Proceedings of the International AAAI Conference on Web and Social Media, Cambridge, MA, USA, 8–11 July 2013; Volume 7. [Google Scholar]

- Ott, M.; Cardie, C.; Hancock, J.T. Negative deceptive opinion spam. In Proceedings of the 2013 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Atlanta, GA, USA, 9–14 June 2013; pp. 497–501. [Google Scholar]

- Abu Hammad, A.S. An Approach for Detecting Spam in Arabic Opinion Reviews; The Islamic University Gaza: Gaza, Palestine, 2013. [Google Scholar]

- Fayazbakhsh, S.; Sinha, J. Review Spam Detection: A Network-Based Approach; Final Project Report; CSE: Shibuya, Tokyo, 2012; Volume 590. [Google Scholar]

- Peng, Q. Store review spammer detection based on review relationship. In Proceedings of the International Conference on Conceptual Modeling, Atlanta, GA, USA, 11–13 November 2013; pp. 287–298. [Google Scholar]

- Wang, G.; Xie, S.; Liu, B.; Philip, S.Y. Review graph based online store review spammer detection. In Proceedings of the 2011 IEEE 11th International Conference on Data Mining, Vancouver, BC, Canada, 11–14 December 2011; pp. 1242–1247. [Google Scholar]

- Jiang, B.; Cao, R.; Chen, B. Detecting product review spammers using activity model. In Proceedings of the 2013 International Conference on Advanced Computer Science and Electronics Information (ICACSEI 2013), Beijing, China, 25–26 July 2013. [Google Scholar]

- Huang, J.; Qian, T.; He, G.; Zhong, M.; Peng, Q. Detecting professional spam reviewers. In Proceedings of the International Conference on Advanced Data Mining and Applications, Hangzhou, China, 14–16 December 2013; pp. 288–299. [Google Scholar]

- Wang, J.; Liang, X. Discovering the rating pattern of online reviewers through data coclustering. In Proceedings of the 2013 IEEE International Conference on Intelligence and Security Informatics, Seattle, WA, USA, 4–7 June 2013; pp. 374–376. [Google Scholar]

- Mukherjee, A.; Liu, B.; Glance, N. Spotting fake reviewer groups in consumer reviews. In Proceedings of the 21st International Conference on World Wide Web, Lyon France, 16–20 April 2012; pp. 191–200. [Google Scholar]

- Lu, Y.; Zhang, L.; Xiao, Y.; Li, Y. Simultaneously detecting fake reviews and review spammers using factor graph model. In Proceedings of the 5th Annual ACM Web Science Conference, Paris France, 2–4 May 2013; pp. 225–233. [Google Scholar]

- Aye, C.M.; Oo, K.M. Review spammer detection by using behaviors based scoring methods. In Proceedings of the International Conference on Advances in Engineering and Technology, Nagapattinam, India, 2–3 May 2014. [Google Scholar]

- Choo, E.; Yu, T.; Chi, M. Detecting opinion spammer groups through community discovery and sentiment analysis. In Proceedings of the IFIP Annual Conference on Data and Applications Security and Privacy, Fairfax, VA, USA, 13–15 July 2015; pp. 170–187. [Google Scholar]

- Fornaciari, T.; Poesio, M. Identifying fake Amazon reviews as learning from crowds. In Proceedings of the 14th Conference of the European Chapter of the Association for Computational Linguistics, Gothenburg, Sweden, 26–30 April 2014. [Google Scholar]

- Ren, Y.; Ji, D.; Yin, L. Deceptive reviews detection based on semi-supervised learning algorithm. J. Sichuan Univ. (Eng. Sci. Ed.) 2014, 46, 62–69. [Google Scholar]

- Li, H.; Chen, Z.; Liu, B.; Wei, X.; Shao, J. Spotting fake reviews via collective positive-unlabeled learning. In Proceedings of the 2014 IEEE International Conference on Data Mining, Shenzhen, China, 14 December 2014; pp. 899–904. [Google Scholar]

- Rayana, S.; Akoglu, L. Collective opinion spam detection: Bridging review networks and metadata. In Proceedings of the 21th ACM Sigkdd International Conference on Knowledge Discovery and Data Mining, Sydney, Australia, 10–13 August 2015; pp. 985–994. [Google Scholar]

- Ott, M.; Choi, Y.; Cardie, C.; Hancock, J.T. Finding deceptive opinion spam by any stretch of the imagination. arXiv 2011, arXiv:1107.4557. [Google Scholar]

- Blitzer, J.; Dredze, M.; Pereira, F. Biographies, bollywood, boom-boxes and blenders: Domain adaptation for sentiment classification. In Proceedings of the 45th Annual Meeting of the Association of Computational Linguistics, Prague, Czech Republic, 25–27 June 2007; pp. 440–447. [Google Scholar]

- Ni, J.; Li, J.; McAuley, J. Justifying recommendations using distantly-labeled reviews and fine-grained aspects. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 188–197. [Google Scholar]

- Barbado, R.; Araque, O.; Iglesias, C.A. A framework for fake review detection in online consumer electronics retailers. Inf. Process. Manag. 2019, 56, 1234–1244. [Google Scholar] [CrossRef] [Green Version]

- Tang, X.; Qian, T.; You, Z. Generating behavior features for cold-start spam review detection with adversarial learning. Inf. Sci. 2020, 526, 274–288. [Google Scholar] [CrossRef]

- Dong, M.; Yao, L.; Wang, X.; Benatallah, B.; Huang, C.; Ning, X. Opinion fraud detection via neural autoencoder decision forest. Pattern Recognit. Lett. 2020, 132, 21–29. [Google Scholar] [CrossRef] [Green Version]

- Fazzolari, M.; Buccafurri, F.; Lax, G.; Petrocchi, M. Experience: Improving Opinion Spam Detection by Cumulative Relative Frequency Distribution. J. Data Inf. Qual. (JDIQ) 2021, 13, 1–16. [Google Scholar] [CrossRef]

- Kennedy, S.; Walsh, N.; Sloka, K.; Foster, J.; McCarren, A. Fact or factitious? Contextualized opinion spam detection. arXiv 2020, arXiv:2010.15296. [Google Scholar]

- Möhring, M.; Keller, B.; Schmidt, R.; Gutmann, M.; Dacko, S. HOTFRED: A Flexible Hotel Fake Review Detection System. In Information and Communication Technologies in Tourism 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 308–314. [Google Scholar]

- Zhu, X.; Goldberg, A.B. Introduction to semi-supervised learning. Synth. Lect. Artif. Intell. Mach. Learn. 2009, 3, 1–30. [Google Scholar] [CrossRef] [Green Version]

- Tian, Y.; Mirzabagheri, M.; Tirandazi, P.; Bamakan, S.M.H. A non-convex semi-supervised approach to opinion spam detection by ramp-one class SVM. Inf. Process. Manag. 2020, 57, 102381. [Google Scholar] [CrossRef]

- Wu, Z.; Cao, J.; Wang, Y.; Wang, Y.; Zhang, L.; Wu, J. hPSD: A Hybrid PU-Learning-Based Spammer Detection Model for Product Reviews. IEEE Trans. Cybern. 2020, 50, 1595–1606. [Google Scholar] [CrossRef] [PubMed]

- Irissappane, A.A.; Yu, H.; Shen, Y.; Agrawal, A.; Stanton, G. Leveraging GPT-2 for Classifying Spam Reviews with Limited Labeled Data via Adversarial Training. arXiv 2020, arXiv:2012.13400. [Google Scholar]

- Ligthart, A.; Catal, C.; Tekinerdogan, B. Analyzing the effectiveness of semi-supervised learning approaches for opinion spam classification. Appl. Soft Comput. 2021, 101, 107023. [Google Scholar] [CrossRef]

- Kumaran, N.; Haritha, C.; Chowdary, D.S. Detection of fake online reviews using semi supervised and supervised learning. IJARST 2021, 8, 650–656. [Google Scholar]

- Anass, F.; Jamal, R.; Mahraz, M.A.; Ali, Y.; Tairi, H. Deceptive Opinion Spam based On Deep Learning. In Proceedings of the 2020 Fourth International Conference On Intelligent Computing in Data Sciences (ICDS), Fez, Morocco, 21–23 October 2020; pp. 1–5. [Google Scholar]

- Fahfouh, A.; Riffi, J.; Mahraz, M.A.; Yahyaouy, A.; Tairi, H. PV-DAE: A hybrid model for deceptive opinion spam based on neural network architectures. Expert Syst. Appl. 2020, 157, 113517.

- Deng, L.; Wei, J.; Liang, S.; Wen, Y.; Liao, X. Review Spam Detection Based on Multi-dimensional Features. In Proceedings of the International Conference on AI and Mobile Services, online, 18–20 September 2020; pp. 106–123.

- Saumya, S.; Singh, J.P. Spam review detection using LSTM autoencoder: An unsupervised approach. Electron. Commer. Res. 2020, 22, 1–21.

- Zhou, L.; Zhang, Q. Recognition of false comments in E-commerce based on deep learning confidence network algorithm. Inf. Syst.-Bus. Manag. 2021, 1–18.

- Bhuvaneshwari, P.; Rao, A.N.; Robinson, Y.H. Spam review detection using self attention based CNN and bi-directional LSTM. Multimed. Tools Appl. 2021, 80, 18107–18124.

- Hussain, N.; Mirza, H.T.; Iqbal, F.; Hussain, I.; Kaleem, M. Detecting Spam Product Reviews in Roman Urdu Script. Comput. J. 2020, 64, 432–450.

- Hussain, N.; Mirza, H.T.; Hussain, I.; Iqbal, F.; Memon, I. Spam review detection using the linguistic and spammer Behavioral methods. IEEE Access 2020, 8, 53801–53816.

- Jayathunga, D.P.; Ranasinghe, R.I.S.; Murugiah, R. A Comparative Study of Supervised Machine Learning Techniques for Deceptive Review Identification Using Linguistic Inquiry and Word Count. In Proceedings of the International Conference on Computational Intelligence in Information System, Darussalam, Brunei, 25–27 January 2021; pp. 97–105.

- Li, J.; Zhang, P.; Yang, L. An unsupervised approach to detect review spam using duplicates of images, videos and Chinese texts. Comput. Speech Lang. 2021, 68, 101186.

- Ansari, S.; Gupta, S. Customer perception of the deceptiveness of online product reviews: A speech act theory perspective. Int. J. Inf. Manag. 2021, 57, 102286.

- Evans, A.M.; Stavrova, O.; Rosenbusch, H. Expressions of doubt and trust in online user reviews. Comput. Hum. Behav. 2021, 114, 106556.

- Oh, Y.W.; Park, C.H. Machine cleaning of online opinion spam: Developing a machine-learning algorithm for detecting deceptive comments. Am. Behav. Sci. 2021, 65, 389–403.

- Fayaz, M.; Khan, A.; Rahman, J.U.; Alharbi, A.; Uddin, M.I.; Alouffi, B. Ensemble Machine Learning Model for Classification of Spam Product Reviews. Complexity 2020, 2020, 8857570.

- Mohammadi, S.; Mousavi, M.R. Investigating the Impact of Ensemble Machine Learning Methods on Spam Review Detection Based on Behavioral Features. J. Soft Comput. Inf. Technol. 2020, 9, 132–147.

- Yao, J.; Zheng, Y.; Jiang, H. An Ensemble Model for Fake Online Review Detection Based on Data Resampling, Feature Pruning, and Parameter Optimization. IEEE Access 2021, 9, 16914–16927.

- Javed, M.S.; Majeed, H.; Mujtaba, H.; Beg, M.O. Fake reviews classification using deep learning ensemble of shallow convolutions. J. Comput. Soc. Sci. 2021, 4, 883–902.

- Budhi, G.S.; Chiong, R.; Wang, Z. Resampling imbalanced data to detect fake reviews using machine learning classifiers and textual-based features. Multimed. Tools Appl. 2021, 80, 13079–13097.

- Bidgoly, A.J.; Rahmanian, Z. A Robust Opinion Spam Detection Method Against Malicious Attackers in Social Media. arXiv 2020, arXiv:2008.08650.

- Sundar, A.P.; Lilt, F.; Zou, X.; Gao, T. DeepDynamic Clustering of Spam Reviewers using Behavior-Anomaly-based Graph Embedding. In Proceedings of the GLOBECOM 2020-2020 IEEE Global Communications Conference, Taipei, Taiwan, 7–11 December 2020; pp. 1–6.

- Noekhah, S.; binti Salim, N.; Zakaria, N.H. Opinion spam detection: Using multi-iterative graph-based model. Inf. Process. Manag. 2020, 57, 102140.

- Wen, J.; Hu, J.; Shi, H.; Wang, X.; Yuan, C.; Han, J.; Guo, T. Fusion-based Spammer Detection Method by Embedding Review Texts and Weak Social Relations. In Proceedings of the 2020 IEEE Intl Conf on Parallel & Distributed Processing with Applications, Big Data & Cloud Computing, Sustainable Computing & Communications, Social Computing & Networking (ISPA/BDCloud/SocialCom/SustainCom), Exeter, UK, 17–19 December 2020.

- Byun, H.; Jeong, S.; Kim, C.k. SC-Com: Spotting Collusive Community in Opinion Spam Detection. Inf. Process. Manag. 2021, 58, 102593.

- Nejad, S.J.; Ahmadi-Abkenari, F.; Bayat, P. Opinion Spam Detection based on Supervised Sentiment Analysis Approach. In Proceedings of the 2020 10th International Conference on Computer and Knowledge Engineering (ICCKE), Mashhad, Iran, 29–30 October 2020; pp. 209–214.

- Patil, P.P.; Phansalkar, S.; Ahirrao, S.; Pawar, A. ALOSI: Aspect-Level Opinion Spam Identification. In Data Science and Security; Springer: Berlin/Heidelberg, Germany, 2021; pp. 135–147.

- Li, J.; Lv, P.; Xiao, W.; Yang, L.; Zhang, P. Exploring groups of opinion spam using sentiment analysis guided by nominated topics. Expert Syst. Appl. 2021, 171, 114585.

- Shan, G.; Zhou, L.; Zhang, D. From conflicts and confusion to doubts: Examining review inconsistency for fake review detection. Decis. Support Syst. 2021, 144, 113513.

- Anas, S.M.; Kumari, S. Opinion Mining based Fake Product review Monitoring and Removal System. In Proceedings of the 2021 6th International Conference on Inventive Computation Technologies (ICICT), Coimbatore, India, 20–22 January 2021; pp. 985–988.

- Wang, J.; Wen, R.; Wu, C.; Xiong, J. Analyzing and Detecting Adversarial Spam on a Large-scale Online APP Review System. In Proceedings of the Companion Proceedings of the Web Conference 2020, Taipei, Taiwan, 20–24 April 2020; pp. 409–417.

- Plotkina, D.; Munzel, A.; Pallud, J. Illusions of truth—Experimental insights into human and algorithmic detections of fake online reviews. J. Bus. Res. 2020, 109, 511–523.

- Ya, Z.; Qingqing, Z.; Yuhan, W.; Shuai, Z. LDA_RAD: A Spam review detection method based on topic model and reviewer anomaly degree. In Journal of Physics: Conference Series; IOP Publishing: Tokyo, Japan, 2020; Volume 1550, p. 022008.

- Mohawesh, R.; Tran, S.; Ollington, R.; Xu, S. Analysis of concept drift in fake reviews detection. Expert Syst. Appl. 2021, 169, 114318.

- Banerjee, S.; Chua, A.Y. Calling out fake online reviews through robust epistemic belief. Inf. Manag. 2021, 58, 103445.

- Rastogi, A.; Mehrotra, M.; Ali, S.S. Effect of Various Factors in Context of Feature Selection on Opinion Spam Detection. In Proceedings of the 2021 11th International Conference on Cloud Computing, Data Science & Engineering (Confluence), Noida, India, 28–29 January 2021; pp. 778–783.

- Pradhan, S.; Amatya, E.; Ma, Y. Manipulation of online reviews: Analysis of negative reviews for healthcare providers. In Proceedings of the International Conference on Information Resources Management, Singapore, 18–19 November 2021.

- de Gregorio, F.; Fox, A.K.; Yoon, H.J. Pseudo-reviews: Conceptualization and consumer effects of a new online phenomenon. Comput. Hum. Behav. 2021, 114, 106545.

- Zelenka, J.; Azubuike, T.; Pásková, M. Trust Model for Online Reviews of Tourism Services and Evaluation of Destinations. Adm. Sci. 2021, 11, 34.

- Gao, Y.; Gong, M.; Xie, Y.; Qin, A. An attention-based unsupervised adversarial model for movie review spam detection. arXiv 2021, arXiv:2104.00955.

- Krishnaveni, N.; Radha, V. Performance Evaluation of Clustering-Based Classification Algorithms for Detection of Online Spam Reviews. In Data Intelligence and Cognitive Informatics; Springer: Berlin/Heidelberg, Germany, 2021; pp. 255–266.

| # | Source | Volume (reviews) | Reference |
|---|---|---|---|
| 1 | Amazon | 5.8 million | [102] |
| 2 | Epinions | 6000 | [103] |
| 3 | Tripadvisor | 3032 | [104] |
| 4 | Yelp | - | [105] |
| 5 | Tripadvisor | 1600 | [106] |
| 6 | Tripadvisor | 2848 | [107] |
| 7 | Tripadvisor | 27,952 | [108] |
| 8 | ResellerRatings | 628,707 | [109] |
| 9 | ResellerRatings | 408,470 | [110] |
| 10 | Datatang | 10,020 | [111] |
| 11 | Datatang | 493,982 | [112] |
| 12 | Amazon | 65,098 | [113] |
| 13 | Amazon | 109,518 | [114] |
| 14 | Amazon | 195,174 | [115] |
| 15 | Amazon | 3 million | [116] |
| 16 | Amazon | 542,085 | [117] |
| 17 | Amazon | 6819 | [118] |
| 18 | Tripadvisor | 3000 | [119] |
| 19 | Yelp | 67,395 | [105] |
| 20 | Yelp | 359,052 | [120] |
| 21 | Yelp | 608,598 | [120] |
| 22 | Datatang | 9765 | [121] |
| 23 | Tripadvisor | 800 | [122] |
| 24 | Amazon | - | [123] |
| 25 | Amazon | 142.8 million | [124] |
| 26 | Yelp | 18,912 | [125] |
| 27 | Opinions | 6000 | [103] |

| Approach | Advantages | Disadvantages |
|---|---|---|
| Behavior features | Identify spam reviews from the behavior of the spammers. | Spammers can evade detection by modifying their behavior (e.g., changing their writing style). |
| Supervised learning | Effective at recognizing hidden patterns in reviews, including the relations among reviews, reviewers, products, features, and labels. | Large labeled datasets are often unavailable, and the data require substantial preparation. |
| Semi-supervised | Addresses the main weakness of supervised learning by also exploiting unlabeled data. | Results can be unstable across iterations, and accuracy may be low. |
| Deep learning | Handles different types of features, applications, and datasets. | Requires very large amounts of data to perform well. |
| Linguistic approaches | Handles different languages, contexts, cultures, and regions, including multilingual spam reviews. | Hard to apply without people who understand the language being analyzed. |
| Ensemble | Can handle complex problems that need multiple hypotheses. | Predictions are hard to interpret (i.e., why a given review is classified as spam). |
| Graph-based | Concentrates on the relationships among entities; has low structural complexity and does not require labeled data. | Requires more analysis than other approaches, and the data can easily be misinterpreted. |
| Sentiment-based | Capturing distinct aspects of a review can help interpret content that other methods find hard to understand. | Not yet mature; needs further investigation. |
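The behavior-features row above relies on how reviewers act rather than on what they write. As a minimal sketch, assuming reviews are available as (reviewer, product, rating, day) records, the Python below computes three per-reviewer signals of the kind used by behavior-based detectors: burstiness (the most reviews posted on a single day), the share of extreme 1- or 5-star ratings, and the mean deviation from each product's average rating. The `Review` record, the feature names, and this particular feature set are illustrative assumptions, not the method of any surveyed work; in practice such features would be fed to one of the classifiers listed in the next table.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Review:
    # Hypothetical record layout, assumed for illustration only.
    reviewer: str
    product: str
    rating: int  # star rating, 1..5
    day: str     # posting date, e.g. "2020-04-01"


def behavior_features(reviews: List[Review]) -> Dict[str, Dict[str, float]]:
    """Return an illustrative set of behavioral features per reviewer."""
    # Average rating of each product (includes every review of that product).
    ratings_by_product = defaultdict(list)
    for r in reviews:
        ratings_by_product[r.product].append(r.rating)
    product_avg = {p: mean(rs) for p, rs in ratings_by_product.items()}

    # Group reviews by author.
    reviews_by_reviewer = defaultdict(list)
    for r in reviews:
        reviews_by_reviewer[r.reviewer].append(r)

    features = {}
    for reviewer, rs in reviews_by_reviewer.items():
        per_day = Counter(r.day for r in rs)
        features[reviewer] = {
            # Burstiness: largest number of reviews posted on one day.
            "max_reviews_per_day": max(per_day.values()),
            # Fraction of ratings that are extreme (1 or 5 stars).
            "extreme_rating_ratio": sum(r.rating in (1, 5) for r in rs) / len(rs),
            # Mean absolute gap between the reviewer's rating and the product average.
            "avg_rating_deviation": mean(
                abs(r.rating - product_avg[r.product]) for r in rs
            ),
        }
    return features
```

The disadvantage noted in the table shows up directly here: a spammer who spreads reviews over several days and avoids extreme ratings lowers all three signals.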

| # | Method | Category | Publication Channel | Year | Reference |
|---|---|---|---|---|---|
| 1 | GAN | Behavior | Journal (Elsevier) | 2020 | [126] |
| 2 | DT | Behavior | Journal (Elsevier) | 2020 | [127] |
| 3 | CRFD | Supervised | Journal (ACM) | 2021 | [128] |
| 4 | NN | Supervised | Conference | 2020 | [129] |
| 5 | HOTFRED | Supervised | Conference | 2021 | [130] |
| 6 | Ramp One-Class SVM | Semi-supervised | Journal (Elsevier) | 2020 | [132] |
| 7 | hPSD | Semi-supervised | Journal (IEEE) | 2020 | [133] |
| 8 | GPT-2 | Semi-supervised | Journal | 2021 | [134] |
| 9 | Self-training | Semi-supervised | Journal (Elsevier) | 2020 | [135] |
| 10 | Hadoop | Semi-supervised | Journal (IJARST) | 2020 | [136] |
| 11 | CNN | Deep Learning | Conference | 2020 | [137] |
| 12 | PV-DBOW | Deep Learning | Journal (Elsevier) | 2020 | [138] |
| 13 | LSTM | Deep Learning | Conference | 2020 | [139] |
| 14 | LSTM | Deep Learning | Journal (Springer) | 2020 | [140] |
| 15 | LSTM + DBN | Deep Learning | Journal (Springer) | 2021 | [141] |
| 16 | CNN-BiLSTM | Deep Learning | Journal (Springer) | 2021 | [142] |
| 17 | Soft Voting | Linguistics | Journal | 2020 | [143] |
| 18 | SRD-LM | Linguistics | Journal (IEEE) | 2020 | [144] |
| 19 | PCA | Linguistics | Conference | 2021 | [145] |
| 20 | Duplication | Linguistics | Journal (Elsevier) | 2021 | [146] |
| 21 | Speech Act Theory | Linguistics | Journal (Elsevier) | 2021 | [147] |
| 22 | LIWC | Linguistics | Journal (Elsevier) | 2021 | [148] |
| 23 | SVM + DNN | Linguistics | Journal (SAGE) | 2021 | [149] |
| 24 | RF, MLP, and k-NN | Ensemble | Journal (Hindawi) | 2020 | [150] |
| 25 | ET, RF, Bagging and Boosting | Ensemble | Journal | 2020 | [151] |
| 26 | LightGBM, RF and GBDT | Ensemble | Journal (IEEE) | 2021 | [152] |
| 27 | CNN | Ensemble | Journal (Springer) | 2021 | [153] |
| 28 | Adaptive Boosting | Ensemble | Journal (Springer) | 2021 | [154] |
| 29 | ROSD | Graph-based | Journal | 2020 | [155] |
| 30 | DDC | Graph-based | Conference | 2020 | [156] |
| 31 | MGSD | Graph-based | Journal (Elsevier) | 2020 | [157] |
| 32 | WS | Graph-based | Conference | 2020 | [158] |
| 33 | SC-Com | Graph-based | Journal (Elsevier) | 2021 | [159] |
| 34 | DT | Sentiment-based | Conference | 2020 | [160] |
| 35 | ALOSI | Sentiment-based | Conference | 2021 | [161] |
| 36 | GSDNT | Sentiment-based | Journal (Elsevier) | 2021 | [162] |
| 37 | RF | Sentiment-based | Journal (Elsevier) | 2021 | [163] |
| 38 | NB | Sentiment-based | Conference | 2021 | [164] |
| 39 | CBM and BBM | Other approaches | Conference | 2020 | [165] |
| 40 | AT | Other approaches | Journal (Elsevier) | 2020 | [166] |
| 41 | LDA | Other approaches | Conference | 2020 | [167] |
| 42 | SVM, LR and PNN | Other approaches | Journal (Elsevier) | 2021 | [168] |
| 43 | REB | Other approaches | Journal (Elsevier) | 2021 | [169] |
| 44 | CM | Other approaches | Conference | 2021 | [170] |
| 45 | - | Other approaches | Conference | 2021 | [171] |
| 46 | EI | Other approaches | Journal (Elsevier) | 2021 | [172] |
| 47 | SWOT | Other approaches | Journal (MDPI) | 2021 | [173] |
| 48 | GAN | Other approaches | Journal | 2021 | [174] |
| 49 | SVM and k-NN | Other approaches | Conference | 2021 | [175] |
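Soft voting appears in the table above as the method of [143], and the ensemble entries ([150] to [154]) likewise combine several base learners. As a minimal sketch of how soft voting differs from a plain majority (hard) vote, the Python below assumes each base classifier already outputs class probabilities for a single review. The function names `soft_vote` and `hard_vote`, the optional per-model weights, and the class encoding (0 = genuine, 1 = spam) are illustrative assumptions, not details taken from the surveyed works.

```python
from typing import List, Optional, Sequence, Tuple


def soft_vote(
    probas: Sequence[Sequence[float]],
    weights: Optional[Sequence[float]] = None,
) -> Tuple[int, List[float]]:
    """Soft voting for ONE review.

    probas[m][c] is base model m's predicted probability for class c.
    Returns the winning class index and the (weighted) mean probabilities.
    """
    if not probas:
        raise ValueError("at least one base model is required")
    n_classes = len(probas[0])
    if any(len(p) != n_classes for p in probas):
        raise ValueError("all base models must score the same classes")
    if weights is None:
        weights = [1.0] * len(probas)  # unweighted average
    if len(weights) != len(probas):
        raise ValueError("exactly one weight per base model is required")
    total = float(sum(weights))
    avg = [
        sum(w * p[c] for w, p in zip(weights, probas)) / total
        for c in range(n_classes)
    ]
    # The class with the highest averaged probability wins.
    winner = max(range(n_classes), key=lambda c: avg[c])
    return winner, avg


def hard_vote(probas: Sequence[Sequence[float]]) -> int:
    """Majority vote over each model's own top class (ties: lowest index)."""
    votes = [max(range(len(p)), key=lambda c: p[c]) for p in probas]
    return max(sorted(set(votes)), key=votes.count)
```

For example, the outputs `[[0.9, 0.1], [0.4, 0.6], [0.3, 0.7]]` give a hard vote of 1 (two of three models say spam) but a soft vote of 0, because the first model is far more confident (averaged probabilities of about 0.53 versus 0.47). Giving the second and third models weight 2 tips the soft vote back to 1.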

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Al-Zoubi, A.M.; Mora, A.M.; Faris, H. Spam Reviews Detection in the Time of COVID-19 Pandemic: Background, Definitions, Methods and Literature Analysis. Appl. Sci. 2022, 12, 3634. https://doi.org/10.3390/app12073634
