A Comprehensive Survey of the Recent Studies with UAV for Precision Agriculture in Open Fields and Greenhouses

Abstract

1. Introduction

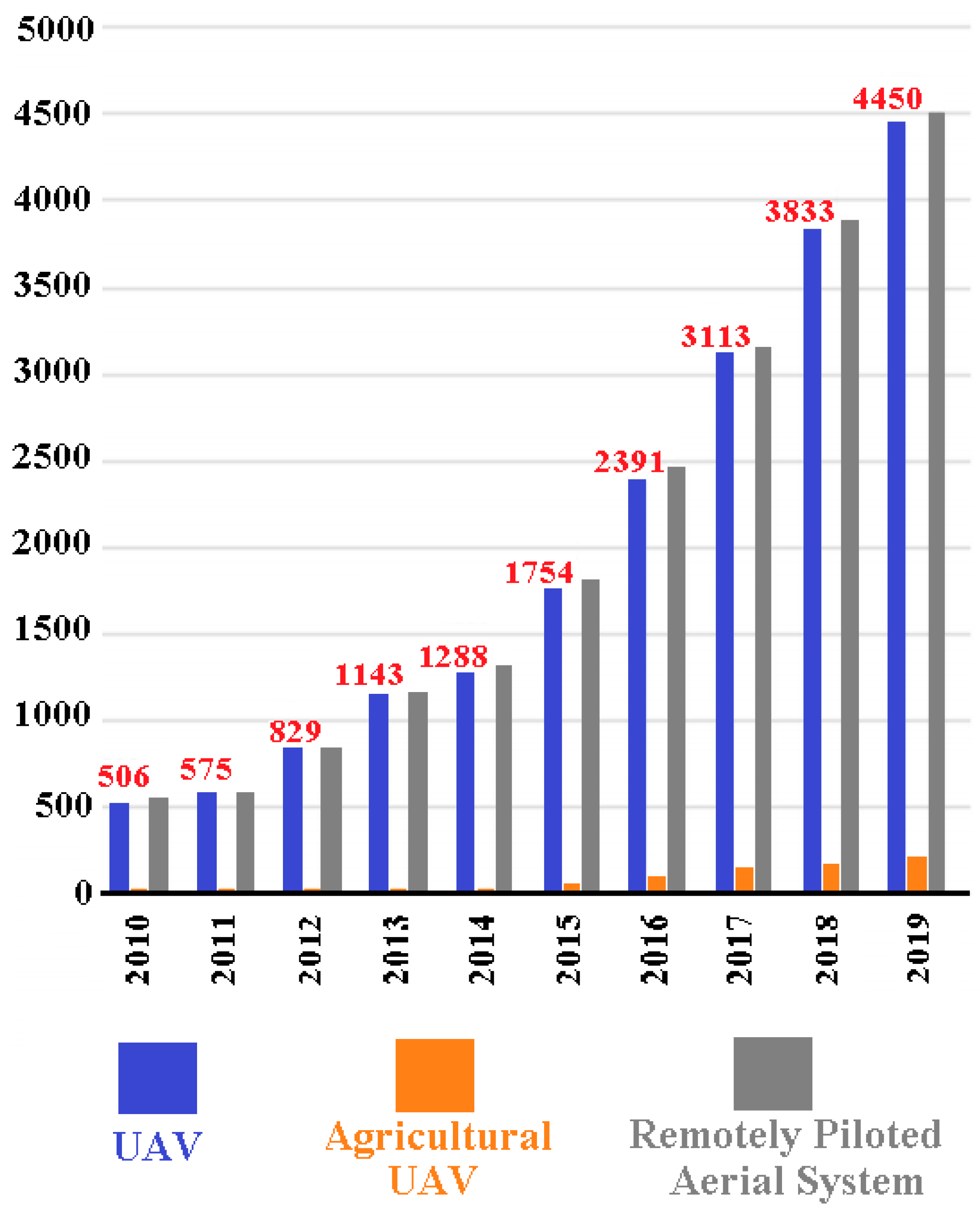

1.1. UAV and Precision Agriculture

- A UAV used for crop monitoring, spraying, etc., over a large agricultural area should cover the entire field, but is its battery sufficient for the duration of such a mission?

- Is the size of the land compatible with the flight time of the UAV?

- Can the UAV operate autonomously and reliably in an enclosed environment?

- Is communication loss possible during the UAV mission?

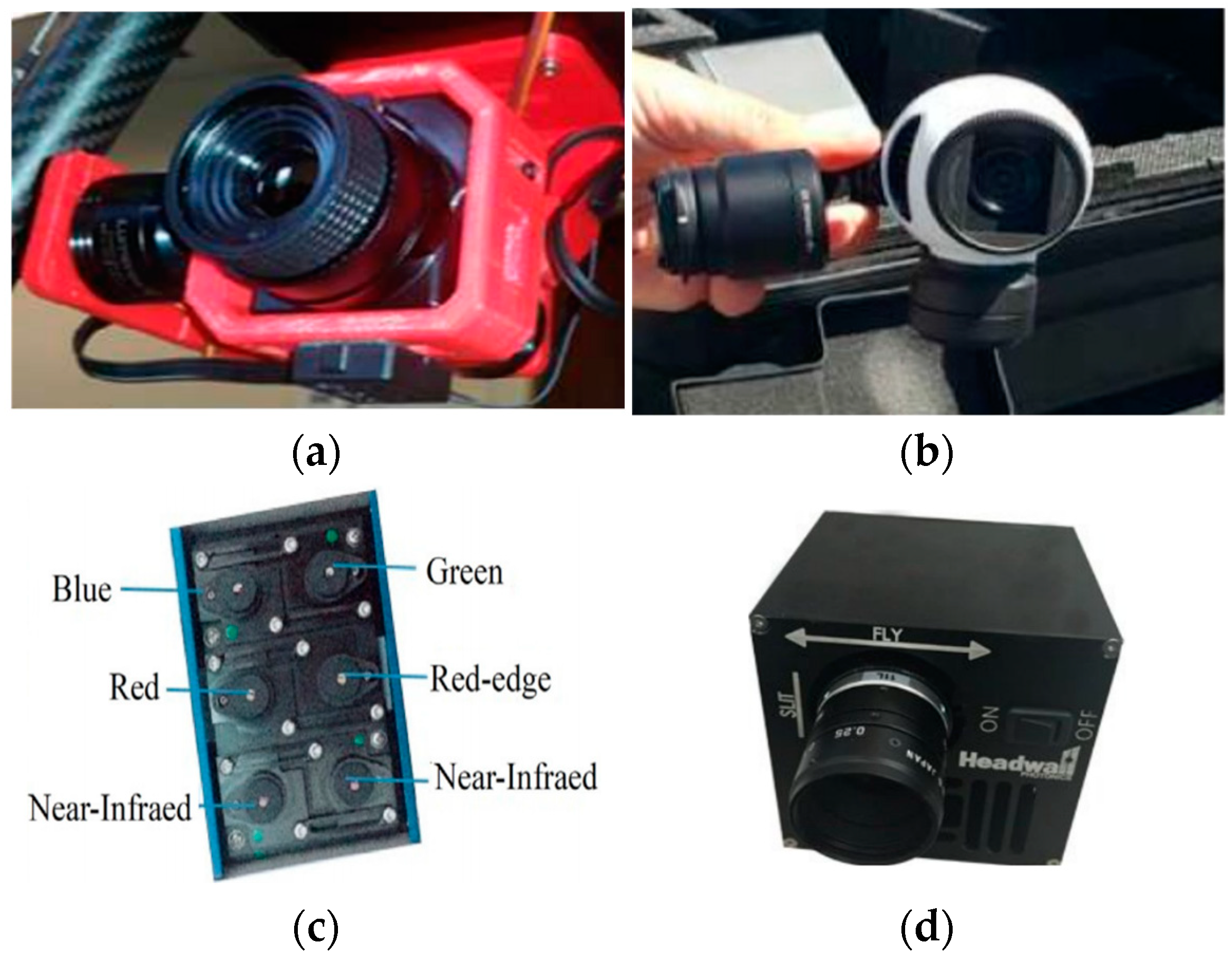

- Can the UAV carry payloads (RGB camera, multispectral camera, etc.) for different missions?
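
The battery and field-size questions above can be made concrete with a rough estimate. The following is a minimal sketch, not taken from the surveyed studies: it assumes a rectangular field flown in parallel passes, and the function names, the 20% battery reserve and all numeric values are hypothetical placeholders.

```python
import math


def survey_time_s(length_m, width_m, swath_m, speed_m_s, turn_s):
    """Estimate the time (s) to cover a rectangular field in parallel passes."""
    # Number of passes needed so that the sensor swaths cover the full width.
    passes = math.ceil(width_m / swath_m)
    # Along-track distance plus the short hops between adjacent passes.
    path_m = passes * length_m + (passes - 1) * swath_m
    # Cruise time plus a fixed time penalty for every turn (assumed constant).
    return path_m / speed_m_s + (passes - 1) * turn_s


def flights_needed(time_s, endurance_min, reserve=0.2):
    """Number of battery charges needed, keeping a safety reserve (assumed 20%)."""
    usable_s = endurance_min * 60 * (1 - reserve)
    return math.ceil(time_s / usable_s)


# Hypothetical 10 ha field (500 m x 200 m) and a hypothetical 20-minute battery.
t = survey_time_s(length_m=500, width_m=200, swath_m=20, speed_m_s=5, turn_s=5)
print(f"Estimated survey time: {t / 60:.1f} min")
print(f"Flights (batteries) needed: {flights_needed(t, endurance_min=20)}")
```

With these placeholder values the survey takes about 18 minutes, which exceeds the 16 usable minutes of a single 20-minute battery, so two flights would be needed. Real missions would also have to account for wind, payload weight and transit to and from the field.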

1.2. Our Study and Contributions

- Recent agricultural practices carried out with UAVs, mostly from 2020, are discussed extensively.

- UAV agricultural applications are discussed in two categories, i.e., indoor and outdoor environments.

- The importance and necessity of UAV missions in greenhouses, and the current inadequacy of such work, are emphasized.

- The importance of SLAM for autonomous agricultural UAV solutions in the greenhouse is explained.

2. Survey for Outdoor Agricultural UAV Applications

2.1. Crop Monitoring

2.2. Mapping

2.3. Spraying

2.4. Irrigation

2.5. Weed Detection

2.6. Remote Sensing

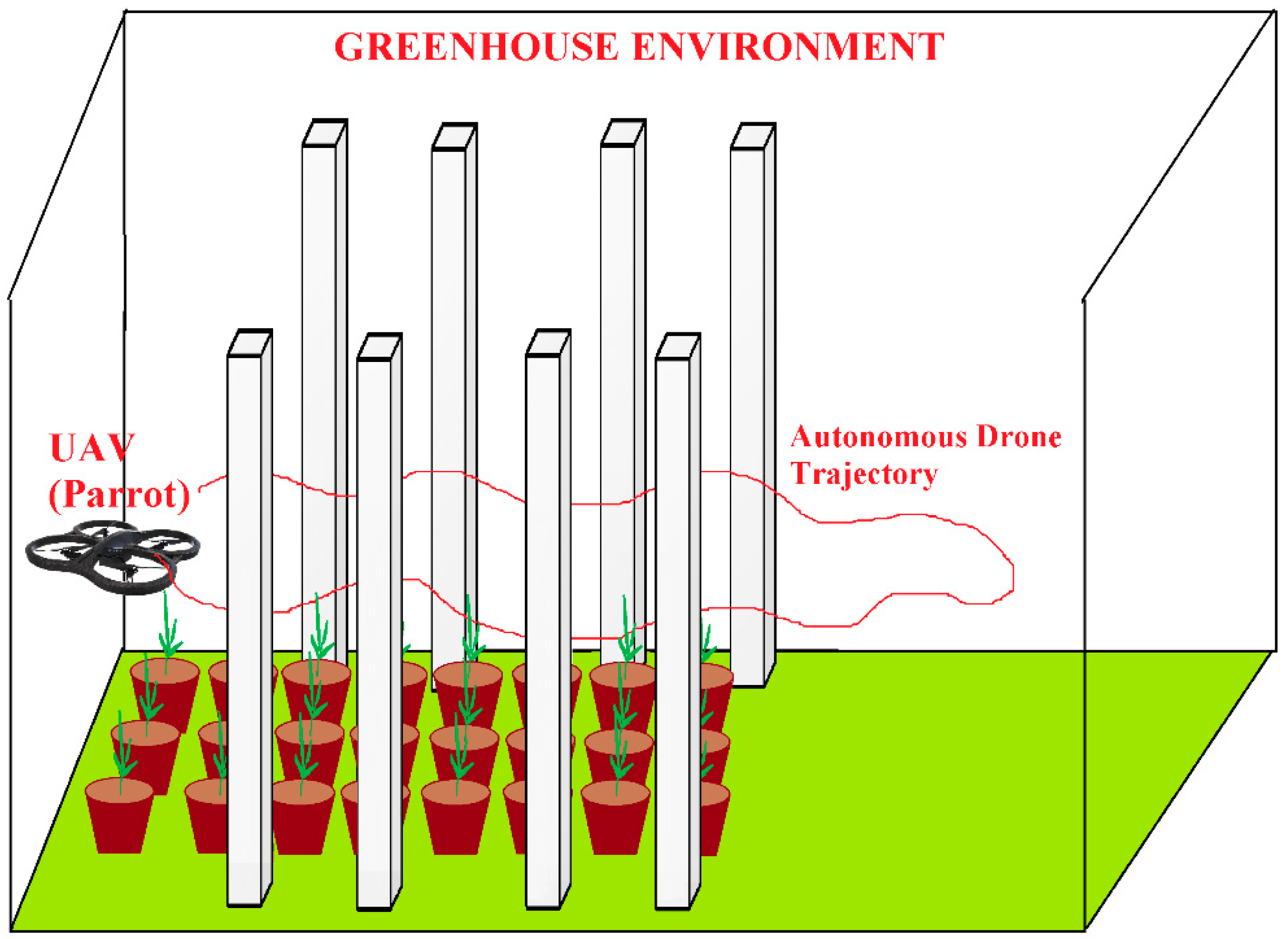

3. UAV Solutions in Greenhouses

4. Solution Proposal for UAV Applications in Greenhouses

5. Conclusions

5.1. Evaluation of Outdoor UAV Applications

5.2. Evaluation of Indoor UAV Applications (Greenhouse)

5.3. UAV Solution Proposal for Smart Greenhouses

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Hunter, M.C.; Smith, R.G.; Schipanski, M.E.; Atwood, L.W.; Mortensen, D.A. Agriculture in 2050: Recalibrating targets for sustainable intensification. Bioscience 2017, 67, 386–391. [Google Scholar] [CrossRef] [Green Version]

- Cisternas, I.; Velásquez, I.; Caro, A.; Rodríguez, A. Systematic literature review of implementations of precision agriculture. Comput. Electron. Agric. 2020, 176, 105626. [Google Scholar] [CrossRef]

- Tsouros, D.C.; Bibi, S.; Sarigiannidis, P.G. A review on UAV-based applications for precision agriculture. Information 2019, 10, 349. [Google Scholar] [CrossRef] [Green Version]

- Radoglou-Grammatikis, P.; Sarigiannidis, P.; Lagkas, T.; Moscholios, I. A compilation of UAV applications for precision agriculture. Comput. Netw. 2020, 172, 107148. [Google Scholar] [CrossRef]

- Quaglia, G.; Visconte, C.; Scimmi, L.S.; Melchiorre, M.; Cavallone, P.; Pastorelli, S. Design of a UGV powered by solar energy for precision agriculture. Robotics 2020, 9, 13. [Google Scholar] [CrossRef] [Green Version]

- Pedersen, M.; Jensen, J. Autonomous Agricultural Robot: Towards Robust Autonomy. Master’s Thesis, Aalborg University, Aalborg, Denmark, 2007. [Google Scholar]

- Peña, C.; Riaño, C.; Moreno, G. RobotGreen: A teleoperated agricultural robot for structured environments. J. Eng. Sci. Technol. Rev. 2019, 12, 144–145. [Google Scholar] [CrossRef]

- Wang, C.; Liu, S.; Zhao, L.; Luo, T. Virtual Simulation of Fruit Picking Robot Based on Unity3d. In Proceedings of the 2nd International Conference on Artificial Intelligence and Computer Science, Hangzhou, China, 25–26 July 2020; p. 012033. [Google Scholar]

- Ishibashi, M.; Iida, M.; Suguri, M.; Masuda, R. Remote monitoring of agricultural robot using web application. IFAC Proc. Vol. 2013, 46, 138–142. [Google Scholar] [CrossRef]

- Han, L.; Ruijuan, C.; Enrong, M. Design and simulation of a handling robot for bagged agricultural materials. IFAC-PapersOnLine 2016, 49, 171–176. [Google Scholar] [CrossRef]

- Chen, M.; Sun, Y.; Cai, X.; Liu, B.; Ren, T. Design and implementation of a novel precision irrigation robot based on an intelligent path planning algorithm. arXiv 2020, arXiv:2003.00676. [Google Scholar]

- Adamides, G.; Katsanos, C.; Parmet, Y.; Christou, G.; Xenos, M.; Hadzilacos, T.; Edan, Y. HRI usability evaluation of interaction modes for a teleoperated agricultural robotic sprayer. Appl. Ergon. 2017, 62, 237–246. [Google Scholar] [CrossRef] [PubMed]

- Zhang, Q.; Chen, M.S.; Li, B. A visual navigation algorithm for paddy field weeding robot based on image understanding. Comput. Electron. Agric. 2017, 143, 66–78. [Google Scholar] [CrossRef]

- Jiang, K.; Zhang, Q.; Chen, L.; Guo, W.; Zheng, W. Design and optimization on rootstock cutting mechanism of grafting robot for cucurbit. Int. J. Agric. Biol. Eng. 2020, 13, 117–124. [Google Scholar] [CrossRef]

- Jayakrishna, P.V.S.; Reddy, M.S.; Sai, N.J.; Susheel, N.; Peeyush, K.P. Autonomous Seed Sowing Agricultural Robot. In Proceedings of the 2018 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Bengaluru, India, 19–22 September 2018; pp. 2332–2336. [Google Scholar]

- Norasma, C.; Fadzilah, M.; Roslin, N.; Zanariah, Z.; Tarmidi, Z.; Candra, F. Unmanned Aerial Vehicle Applications in Agriculture. In Proceedings of the 1st South Aceh International Conference on Engineering and Technology (SAICOET), Tapaktuan, Indonesia, 8–9 December 2018; p. 012063. [Google Scholar]

- Honrado, J.; Solpico, D.B.; Favila, C.; Tongson, E.; Tangonan, G.L.; Libatique, N.J. UAV imaging with Low-Cost Multispectral Imaging System for Precision Agriculture Applications. In Proceedings of the 2017 IEEE Global Humanitarian Technology Conference (GHTC), San Jose, CA, USA, 19–22 October 2017; pp. 1–7. [Google Scholar]

- Pinguet, B. The Role of Drone Technology in Sustainable Agriculture. Available online: https://www.precisionag.com/in-field-technologies/drones-uavs/the-role-of-drone-technology-in-sustainable-agriculture/ (accessed on 10 October 2021).

- Alexandris, S.; Psomiadis, E.; Proutsos, N.; Philippopoulos, P.; Charalampopoulos, I.; Kakaletris, G.; Papoutsi, E.-M.; Vassilakis, S.; Paraskevopoulos, A. Integrating drone technology into an innovative agrometeorological methodology for the precise and real-time estimation of crop water requirements. Hydrology 2021, 8, 131. [Google Scholar] [CrossRef]

- López, A.; Jurado, J.M.; Ogayar, C.J.; Feito, F.R. A framework for registering UAV-based imagery for crop-tracking in Precision Agriculture. Int. J. Appl. Earth Obs. Geoinf. 2021, 97, 102274. [Google Scholar] [CrossRef]

- Yan, X.; Zhou, Y.; Liu, X.; Yang, D.; Yuan, H. Minimizing occupational exposure to pesticide and increasing control efficacy of pests by unmanned aerial vehicle application on cowpea. Appl. Sci. 2021, 11, 9579. [Google Scholar] [CrossRef]

- Delavarpour, N.; Koparan, C.; Nowatzki, J.; Bajwa, S.; Sun, X. A technical study on UAV characteristics for precision agriculture applications and associated practical challenges. Remote Sens. 2021, 13, 1204. [Google Scholar] [CrossRef]

- Cerro, J.D.; Cruz Ulloa, C.; Barrientos, A.; de León Rivas, J. Unmanned aerial vehicles in agriculture: A survey. Agronomy 2021, 11, 203. [Google Scholar] [CrossRef]

- Kim, J.; Kim, S.; Ju, C.; Son, H.I. Unmanned aerial vehicles in agriculture: A review of perspective of platform, control, and applications. IEEE Access 2019, 7, 105100–105115. [Google Scholar] [CrossRef]

- Ju, C.; Son, H.I. Multiple UAV systems for agricultural applications: Control, implementation, and evaluation. Electronics 2018, 7, 162. [Google Scholar] [CrossRef] [Green Version]

- Raeva, P.L.; Šedina, J.; Dlesk, A. Monitoring of crop fields using multispectral and thermal imagery from UAV. Eur. J. Remote Sens. 2019, 52, 192–201. [Google Scholar] [CrossRef] [Green Version]

- Erdelj, M.; Saif, O.; Natalizio, E.; Fantoni, I. UAVs that fly forever: Uninterrupted structural inspection through automatic UAV replacement. Ad Hoc Netw. 2019, 94, 101612. [Google Scholar] [CrossRef] [Green Version]

- Chen, W.; Liu, J.; Guo, H.; Kato, N. Toward robust and intelligent drone swarm: Challenges and future directions. IEEE Netw. 2020, 34, 278–283. [Google Scholar] [CrossRef]

- Gago, J.; Estrany, J.; Estes, L.; Fernie, A.R.; Alorda, B.; Brotman, Y.; Flexas, J.; Escalona, J.M.; Medrano, H. Nano and micro unmanned aerial vehicles (UAVs): A new grand challenge for precision agriculture? Curr. Protoc. Plant Biol. 2020, 5, e20103. [Google Scholar] [CrossRef] [PubMed]

- Torres-Sánchez, J.; Pena, J.M.; de Castro, A.I.; López-Granados, F. Multi-temporal mapping of the vegetation fraction in early-season wheat fields using images from UAV. Comput. Electron. Agric. 2014, 103, 104–113. [Google Scholar] [CrossRef]

- Zhang, C.; Atkinson, P.M.; George, C.; Wen, Z.; Diazgranados, M.; Gerard, F. Identifying and mapping individual plants in a highly diverse high-elevation ecosystem using UAV imagery and deep learning. ISPRS J. Photogramm. Remote Sens. 2020, 169, 280–291. [Google Scholar] [CrossRef]

- Johansen, K.; Duan, Q.; Tu, Y.-H.; Searle, C.; Wu, D.; Phinn, S.; Robson, A.; McCabe, M.F. Mapping the condition of macadamia tree crops using multi-spectral UAV and WorldView-3 imagery. ISPRS J. Photogramm. Remote Sens. 2020, 165, 28–40. [Google Scholar] [CrossRef]

- Park, S.; Lee, H.; Chon, J. Sustainable monitoring coverage of unmanned aerial vehicle photogrammetry according to wing type and image resolution. Environ. Pollut. 2019, 247, 340–348. [Google Scholar] [CrossRef]

- Deng, L.; Mao, Z.; Li, X.; Hu, Z.; Duan, F.; Yan, Y. UAV-based multispectral remote sensing for precision agriculture: A comparison between different cameras. ISPRS J. Photogramm. Remote Sens. 2018, 146, 124–136. [Google Scholar] [CrossRef]

- Maimaitijiang, M.; Ghulam, A.; Sidike, P.; Hartling, S.; Maimaitiyiming, M.; Peterson, K.; Shavers, E.; Fishman, J.; Peterson, J.; Kadam, S. Unmanned Aerial System (UAS)-based phenotyping of soybean using multi-sensor data fusion and extreme learning machine. ISPRS J. Photogramm. Remote Sens. 2017, 134, 43–58. [Google Scholar] [CrossRef]

- Hassanein, M.; Lari, Z.; El-Sheimy, N. A new vegetation segmentation approach for cropped fields based on threshold detection from hue histograms. Sensors 2018, 18, 1253. [Google Scholar] [CrossRef] [Green Version]

- Bhandari, S.; Raheja, A.; Chaichi, M.; Green, R.; Do, D.; Pham, F.; Ansari, M.; Wolf, J.; Sherman, T.; Espinas, A. Effectiveness of UAV-Based Remote Sensing Techniques in Determining Lettuce Nitrogen and Water Stresses. In Proceedings of the 14th International Conference on Precision Agriculture, Montreal, QC, Canada, 24–27 June 2018; pp. 1066403–1066415. [Google Scholar]

- Dhouib, I.; Jallouli, M.; Annabi, A.; Marzouki, S.; Gharbi, N.; Elfazaa, S.; Lasram, M.M. From immunotoxicity to carcinogenicity: The effects of carbamate pesticides on the immune system. Environ. Sci. Pollut. Res. 2016, 23, 9448–9458. [Google Scholar] [CrossRef]

- Martinez-Guanter, J.; Agüera, P.; Agüera, J.; Pérez-Ruiz, M. Spray and economics assessment of a UAV-based ultra-low-volume application in olive and citrus orchards. Precis. Agric. 2020, 21, 226–243. [Google Scholar] [CrossRef]

- Chartzoulakis, K.; Bertaki, M. Sustainable water management in agriculture under climate change. Agric. Agric. Sci. Procedia 2015, 4, 88–98. [Google Scholar] [CrossRef] [Green Version]

- Park, S.; Ryu, D.; Fuentes, S.; Chung, H.; Hernández-Montes, E.; O’Connell, M. Adaptive estimation of crop water stress in nectarine and peach orchards using high-resolution imagery from an unmanned aerial vehicle (UAV). Remote Sens. 2017, 9, 828. [Google Scholar] [CrossRef] [Green Version]

- Islam, N.; Rashid, M.M.; Wibowo, S.; Xu, C.-Y.; Morshed, A.; Wasimi, S.A.; Moore, S.; Rahman, S.M. Early weed detection using image processing and machine learning techniques in an Australian chilli farm. Agriculture 2021, 11, 387. [Google Scholar] [CrossRef]

- Hasan, A.M.; Sohel, F.; Diepeveen, D.; Laga, H.; Jones, M.G. A survey of deep learning techniques for weed detection from images. Comput. Electron. Agric. 2021, 184, 106067. [Google Scholar] [CrossRef]

- Louargant, M.; Villette, S.; Jones, G.; Vigneau, N.; Paoli, J.N.; Gée, C. Weed detection by UAV: Simulation of the impact of spectral mixing in multispectral images. Precis. Agric. 2017, 18, 932–951. [Google Scholar] [CrossRef] [Green Version]

- Bah, M.D.; Hafiane, A.; Canals, R. Deep learning with unsupervised data labeling for weed detection in line crops in UAV images. Remote Sens. 2018, 10, 1690. [Google Scholar] [CrossRef] [Green Version]

- Khanal, S.; Kc, K.; Fulton, J.P.; Shearer, S.; Ozkan, E. Remote sensing in agriculture—Accomplishments, limitations, and opportunities. Remote Sens. 2020, 12, 3783. [Google Scholar] [CrossRef]

- Zhang, H.; Wang, L.; Tian, T.; Yin, J. A Review of unmanned aerial vehicle low-altitude remote sensing (UAV-LARS) use in agricultural monitoring in China. Remote Sens. 2021, 13, 1221. [Google Scholar] [CrossRef]

- Noor, N.M.; Abdullah, A.; Hashim, M. Remote Sensing UAV/Drones and Its Applications for Urban Areas: A review. In Proceedings of the IOP Conference Series: Earth and Environmental Science; IOP Publishing: London, UK, 2018; p. 012003. [Google Scholar]

- Ye, H.; Huang, W.; Huang, S.; Cui, B.; Dong, Y.; Guo, A.; Ren, Y.; Jin, Y. Recognition of banana fusarium wilt based on UAV remote sensing. Remote Sens. 2020, 12, 938. [Google Scholar] [CrossRef] [Green Version]

- Allred, B.; Eash, N.; Freeland, R.; Martinez, L.; Wishart, D. Effective and efficient agricultural drainage pipe mapping with UAS thermal infrared imagery: A case study. Agric. Water Manag. 2018, 197, 132–137. [Google Scholar] [CrossRef]

- Christiansen, M.P.; Laursen, M.S.; Jørgensen, R.N.; Skovsen, S.; Gislum, R. Designing and testing a UAV mapping system for agricultural field surveying. Sensors 2017, 17, 2703. [Google Scholar] [CrossRef] [Green Version]

- Gašparović, M.; Zrinjski, M.; Barković, Đ.; Radočaj, D. An automatic method for weed mapping in oat fields based on UAV imagery. Comput. Electron. Agric. 2020, 173, 105385. [Google Scholar] [CrossRef]

- Schiefer, F.; Kattenborn, T.; Frick, A.; Frey, J.; Schall, P.; Koch, B.; Schmidtlein, S. Mapping forest tree species in high resolution UAV-based RGB-imagery by means of convolutional neural networks. ISPRS J. Photogramm. Remote Sens. 2020, 170, 205–215. [Google Scholar] [CrossRef]

- Pearse, G.D.; Tan, A.Y.S.; Watt, M.S.; Franz, M.O.; Dash, J.P. Detecting and mapping tree seedlings in UAV imagery using convolutional neural networks and field-verified data. ISPRS J. Photogramm. Remote Sens. 2020, 168, 156–169. [Google Scholar] [CrossRef]

- Freitas, H.; Faiçal, B.S.; de Silva, A.V.C.; Ueyama, J. Use of UAVs for an efficient capsule distribution and smart path planning for biological pest control. Comput. Electron. Agric. 2020, 173, 105387. [Google Scholar] [CrossRef]

- Tokekar, P.; Vander Hook, J.; Mulla, D.; Isler, V. Sensor planning for a symbiotic UAV and UGV system for precision agriculture. IEEE Trans. Robot. 2016, 32, 1498–1511. [Google Scholar] [CrossRef]

- Pan, Z.; Lie, D.; Qiang, L.; Shaolan, H.; Shilai, Y.; Yande, L.; Yongxu, Y.; Haiyang, P. Effects of citrus tree-shape and spraying height of small unmanned aerial vehicle on droplet distribution. Int. J. Agric. Biol. Eng. 2016, 9, 45–52. [Google Scholar]

- Faiçal, B.S.; Freitas, H.; Gomes, P.H.; Mano, L.Y.; Pessin, G.; de Carvalho, A.C.; Krishnamachari, B.; Ueyama, J. An adaptive approach for UAV-based pesticide spraying in dynamic environments. Comput. Electron. Agric. 2017, 138, 210–223. [Google Scholar] [CrossRef]

- Meng, Y.; Su, J.; Song, J.; Chen, W.-H.; Lan, Y. Experimental evaluation of UAV spraying for peach trees of different shapes: Effects of operational parameters on droplet distribution. Comput. Electron. Agric. 2020, 170, 105282. [Google Scholar] [CrossRef]

- Fu, Z.; Jiang, J.; Gao, Y.; Krienke, B.; Wang, M.; Zhong, K.; Cao, Q.; Tian, Y.; Zhu, Y.; Cao, W. Wheat growth monitoring and yield estimation based on multi-rotor unmanned aerial vehicle. Remote Sens. 2020, 12, 508. [Google Scholar] [CrossRef] [Green Version]

- Cao, Y.; Li, G.L.; Luo, Y.K.; Pan, Q.; Zhang, S.Y. Monitoring of sugar beet growth indicators using wide-dynamic-range vegetation index (WDRVI) derived from UAV multispectral images. Comput. Electron. Agric. 2020, 171, 105331. [Google Scholar] [CrossRef]

- Johansen, K.; Morton, M.J.; Malbeteau, Y.; Aragon, B.; Al-Mashharawi, S.; Ziliani, M.G.; Angel, Y.; Fiene, G.; Negrao, S.; Mousa, M.A. Predicting biomass and yield in a tomato phenotyping experiment using UAV imagery and random forest. Front. Artif. Intell. 2020, 3, 28. [Google Scholar] [CrossRef] [PubMed]

- Tetila, E.C.; Machado, B.B.; Astolfi, G.; Belete, N.A.d.S.; Amorim, W.P.; Roel, A.R.; Pistori, H. Detection and classification of soybean pests using deep learning with UAV images. Comput. Electron. Agric. 2020, 179, 105836. [Google Scholar] [CrossRef]

- Zhang, M.; Zhou, J.; Sudduth, K.A.; Kitchen, N.R. Estimation of maize yield and effects of variable-rate nitrogen application using UAV-based RGB imagery. Biosyst. Eng. 2020, 189, 24–35. [Google Scholar] [CrossRef]

- Wan, L.; Cen, H.; Zhu, J.; Zhang, J.; Zhu, Y.; Sun, D.; Du, X.; Zhai, L.; Weng, H.; Li, Y. Grain yield prediction of rice using multi-temporal UAV-based RGB and multispectral images and model transfer—A case study of small farmlands in the South of China. Agric. For. Meteorol. 2020, 291, 108096. [Google Scholar] [CrossRef]

- Kerkech, M.; Hafiane, A.; Canals, R. Vine disease detection in UAV multispectral images using optimized image registration and deep learning segmentation approach. Comput. Electron. Agric. 2020, 174, 105446. [Google Scholar] [CrossRef]

- Ashapure, A.; Jung, J.; Chang, A.; Oh, S.; Yeom, J.; Maeda, M.; Maeda, A.; Dube, N.; Landivar, J.; Hague, S.; et al. Developing a machine learning based cotton yield estimation framework using multi-temporal UAS data. ISPRS J. Photogramm. Remote Sens. 2020, 169, 180–194. [Google Scholar] [CrossRef]

- Li, B.; Xu, X.; Zhang, L.; Han, J.; Bian, C.; Li, G.; Liu, J.; Jin, L. Above-ground biomass estimation and yield prediction in potato by using UAV-based RGB and hyperspectral imaging. ISPRS J. Photogramm. Remote Sens. 2020, 162, 161–172. [Google Scholar] [CrossRef]

- Zheng, J.; Fu, H.; Li, W.; Wu, W.; Yu, L.; Yuan, S.; Tao, W.Y.W.; Pang, T.K.; Kanniah, K.D. Growing status observation for oil palm trees using Unmanned Aerial Vehicle (UAV) images. ISPRS J. Photogramm. Remote Sens. 2021, 173, 95–121. [Google Scholar] [CrossRef]

- Gomez Selvaraj, M.; Vergara, A.; Montenegro, F.; Alonso Ruiz, H.; Safari, N.; Raymaekers, D.; Ocimati, W.; Ntamwira, J.; Tits, L.; Omondi, A.B.; et al. Detection of banana plants and their major diseases through aerial images and machine learning methods: A case study in DR Congo and Republic of Benin. ISPRS J. Photogramm. Remote Sens. 2020, 169, 110–124. [Google Scholar] [CrossRef]

- Elmokadem, T. Distributed coverage control of quadrotor multi-UAV systems for precision agriculture. IFAC-PapersOnLine 2019, 52, 251–256. [Google Scholar] [CrossRef]

- Hoffmann, H.; Jensen, R.; Thomsen, A.; Nieto, H.; Rasmussen, J.; Friborg, T. Crop water stress maps for an entire growing season from visible and thermal UAV imagery. Biogeosciences 2016, 13, 6545–6563. [Google Scholar] [CrossRef] [Green Version]

- Romero, M.; Luo, Y.; Su, B.; Fuentes, S. Vineyard water status estimation using multispectral imagery from an UAV platform and machine learning algorithms for irrigation scheduling management. Comput. Electron. Agric. 2018, 147, 109–117. [Google Scholar] [CrossRef]

- Jiyu, L.; Lan, Y.; Jianwei, W.; Shengde, C.; Cong, H.; Qi, L.; Qiuping, L. Distribution law of rice pollen in the wind field of small UAV. Int. J. Agric. Biol. Eng. 2017, 10, 32–40. [Google Scholar] [CrossRef]

- Dos Santos Ferreira, A.; Freitas, D.M.; da Silva, G.G.; Pistori, H.; Folhes, M.T. Weed detection in soybean crops using ConvNets. Comput. Electron. Agric. 2017, 143, 314–324. [Google Scholar] [CrossRef]

- Stroppiana, D.; Villa, P.; Sona, G.; Ronchetti, G.; Candiani, G.; Pepe, M.; Busetto, L.; Migliazzi, M.; Boschetti, M. Early season weed mapping in rice crops using multi-spectral UAV data. Int. J. Remote Sens. 2018, 39, 5432–5452. [Google Scholar] [CrossRef]

- Song, Y.; Wang, J.; Shan, B. Estimation of winter wheat yield from UAV-based multi-temporal imagery using crop allometric relationship and SAFY model. Drones 2021, 5, 78. [Google Scholar] [CrossRef]

- Sagan, V.; Maimaitijiang, M.; Sidike, P.; Maimaitiyiming, M.; Erkbol, H.; Hartling, S.; Peterson, K.; Peterson, J.; Burken, J.; Fritschi, F. UAV/satellite multiscale data fusion for crop monitoring and early stress detection. ISPRS Arch. 2019. [Google Scholar] [CrossRef] [Green Version]

- Roldán, J.J.; Garcia-Aunon, P.; Garzón, M.; de León, J.; del Cerro, J.; Barrientos, A. Heterogeneous multi-robot system for mapping environmental variables of greenhouses. Sensors 2016, 16, 1018. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Hamouda, Y.E.; Elhabil, B.H. Precision Agriculture for Greenhouses Using a Wireless Sensor Network. In Proceedings of the 2017 Palestinian International Conference on Information and Communication Technology (PICICT), Gaza, Palestine, 8–9 May 2017; pp. 78–83. [Google Scholar]

- Erazo-Rodas, M.; Sandoval-Moreno, M.; Muñoz-Romero, S.; Huerta, M.; Rivas-Lalaleo, D.; Naranjo, C.; Rojo-Álvarez, J.L. Multiparametric monitoring in equatorian tomato greenhouses (I): Wireless sensor network benchmarking. Sensors 2018, 18, 2555. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Rodríguez, S.; Gualotuña, T.; Grilo, C. A system for the monitoring and predicting of data in precision agriculture in a rose greenhouse based on wireless sensor networks. Procedia Comput. Sci. 2017, 121, 306–313. [Google Scholar] [CrossRef]

- Mat, I.; Kassim, M.R.M.; Harun, A.N.; Yusoff, I.M. IoT in Precision Agriculture Applications Using Wireless Moisture Sensor Network. In Proceedings of the 2016 IEEE Conference on Open Systems (ICOS), Kedah, Malaysia, 10–12 October 2016; pp. 24–29. [Google Scholar]

- Komarchuk, D.S.; Gunchenko, Y.A.; Pasichnyk, N.A.; Opryshko, O.A.; Shvorov, S.A.; Reshetiuk, V. Use of Drones in Industrial Greenhouses. In Proceedings of the 2021 IEEE 6th International Conference on Actual Problems of Unmanned Aerial Vehicles Development (APUAVD), Kyiv, Ukraine, 19–21 October 2021; pp. 184–187. [Google Scholar]

- Jiang, J.-A.; Wang, C.-H.; Liao, M.-S.; Zheng, X.-Y.; Liu, J.-H.; Chuang, C.-L.; Hung, C.-L.; Chen, C.-P. A wireless sensor network-based monitoring system with dynamic convergecast tree algorithm for precision cultivation management in orchid greenhouses. Precis. Agric. 2016, 17, 766–785. [Google Scholar] [CrossRef]

- Roldán, J.J.; Joossen, G.; Sanz, D.; del Cerro, J.; Barrientos, A. Mini-UAV based sensory system for measuring environmental variables in greenhouses. Sensors 2015, 15, 3334–3350. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Neumann, P.P.; Kohlhoff, H.; Hüllmann, D.; Lilienthal, A.J.; Kluge, M. Bringing Mobile Robot Olfaction to the Next Dimension—UAV-Based Remote Sensing of Gas Clouds and Source Localization. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3910–3916. [Google Scholar]

- Khan, A.; Schaefer, D.; Tao, L.; Miller, D.J.; Sun, K.; Zondlo, M.A.; Harrison, W.A.; Roscoe, B.; Lary, D.J. Low power greenhouse gas sensors for unmanned aerial vehicles. Remote Sens. 2012, 4, 1355–1368. [Google Scholar] [CrossRef] [Green Version]

- Khan, A.; Schaefer, D.; Roscoe, B.; Sun, K.; Tao, L.; Miller, D.; Lary, D.J.; Zondlo, M.A. Open-Path Greenhouse Gas Sensor for UAV Applications. In Proceedings of the Conference on Lasers and Electro-Optics 2012, San Jose, CA, USA, 6 May 2012; p. JTh1L.6. [Google Scholar]

- Berman, E.S.F.; Fladeland, M.; Liem, J.; Kolyer, R.; Gupta, M. Greenhouse gas analyzer for measurements of carbon dioxide, methane, and water vapor aboard an unmanned aerial vehicle. Sens. Actuators B Chem. 2012, 169, 128–135. [Google Scholar] [CrossRef]

- Malaver, A.; Motta, N.; Corke, P.; Gonzalez, F. Development and integration of a solar powered unmanned aerial vehicle and a wireless sensor network to monitor greenhouse gases. Sensors 2015, 15, 4072–4096. [Google Scholar] [CrossRef] [PubMed]

- Simon, J.; Petkovic, I.; Petkovic, D.; Petkovics, A. Navigation and applicability of hexa rotor drones in greenhouse environment. Teh. Vjesn. 2018, 25, 249–255. [Google Scholar]

- Shi, Q.; Liu, D.; Mao, H.; Shen, B.; Liu, X.; Ou, M. Study on Assistant Pollination of Facility Tomato by UAV. In Proceedings of the 2019 ASABE Annual International Meeting, Boston, MA, USA, 7–10 July 2019; American Society of Agricultural and Biological Engineers: St. Joseph, MI, USA, 2019; p. 1. [Google Scholar]

- Amador, G.J.; Hu, D.L. Sticky solution provides grip for the first robotic pollinator. Chem 2017, 2, 162–164. [Google Scholar] [CrossRef] [Green Version]

- Simmonds, W.; Fesselet, L.; Sanders, B.; Ramsay, C.; Heemskerk, C. HiPerGreen: High Precision Greenhouse Farming; Inholland University of Applied Sciences: Diemen, The Netherlands, 2017.

- Durrant-Whyte, H.; Bailey, T. Simultaneous localization and mapping: Part I. IEEE Robot. Autom. Mag. 2006, 13, 99–110. [Google Scholar] [CrossRef] [Green Version]

- Bailey, T.; Durrant-Whyte, H. Simultaneous localization and mapping (SLAM): Part II. IEEE Robot. Autom. Mag. 2006, 13, 108–117. [Google Scholar] [CrossRef] [Green Version]

- Durdu, A.; Korkmaz, M. A novel map-merging technique for occupancy grid-based maps using multiple robots: A semantic approach. Turk. J. Electr. Eng. Comput. Sci. 2019, 27, 3980–3993. [Google Scholar] [CrossRef]

- Taheri, H.; Xia, Z.C. SLAM; definition and evolution. Eng. Appl. Artif. Intell. 2021, 97, 104032. [Google Scholar] [CrossRef]

- Yusefi, A.; Durdu, A.; Aslan, M.F.; Sungur, C. LSTM and Filter Based Comparison Analysis for Indoor Global Localization in UAVs. IEEE Access 2021, 9, 10054–10069. [Google Scholar] [CrossRef]

- Jinyu, L.; Bangbang, Y.; Danpeng, C.; Nan, W.; Guofeng, Z.; Hujun, B. Survey and evaluation of monocular visual-inertial SLAM algorithms for augmented reality. Virtual Real. Intell. Hardw. 2019, 1, 386–410. [Google Scholar] [CrossRef]

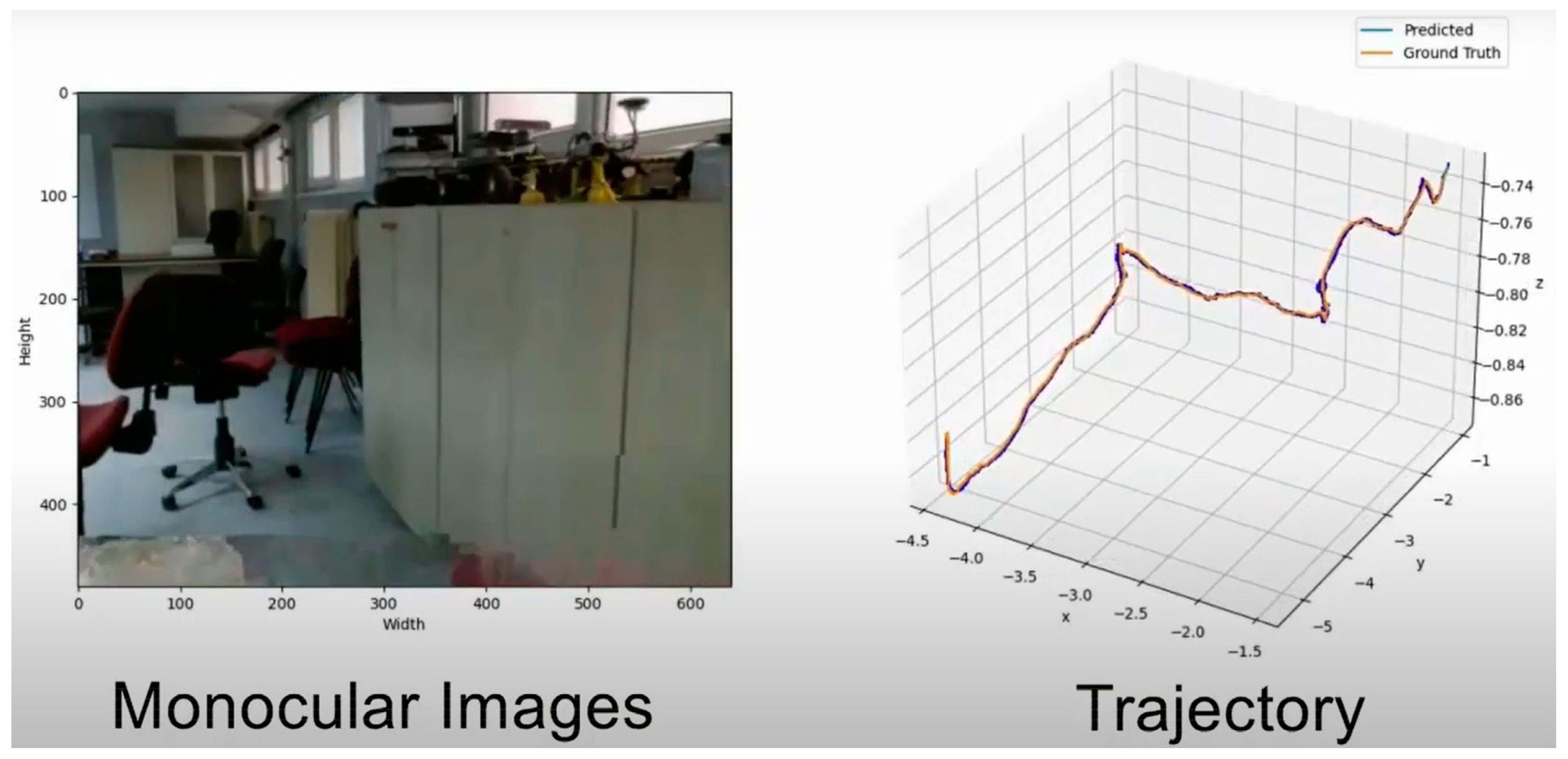

- Gurturk, M.; Yusefi, A.; Aslan, M.F.; Soycan, M.; Durdu, A.; Masiero, A. The YTU dataset and recurrent neural network based visual-inertial odometry. Measurement 2021, 184, 109878. [Google Scholar] [CrossRef]

- Dowling, L.; Poblete, T.; Hook, I.; Tang, H.; Tan, Y.; Glenn, W.; Unnithan, R.R. Accurate indoor mapping using an autonomous unmanned aerial vehicle (UAV). arXiv 2018, arXiv:1808.01940. [Google Scholar]

- Qin, H.; Meng, Z.; Meng, W.; Chen, X.; Sun, H.; Lin, F.; Ang, M.H. Autonomous exploration and mapping system using heterogeneous UAVs and UGVs in GPS-denied environments. IEEE Trans. Veh. Technol. 2019, 68, 1339–1350. [Google Scholar] [CrossRef]

- Mur-Artal, R.; Montiel, J.M.M.; Tardós, J.D. ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Trans. Robot. 2015, 31, 1147–1163. [Google Scholar] [CrossRef] [Green Version]

- Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-Scale Direct Monocular SLAM. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 834–849. [Google Scholar]

- Forster, C.; Pizzoli, M.; Scaramuzza, D. SVO: Fast Semi-Direct Monocular Visual Odometry. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 15–22. [Google Scholar]

- Qin, T.; Li, P.; Shen, S. VINS-Mono: A robust and versatile monocular visual-inertial state estimator. IEEE Trans. Robot. 2018, 34, 1004–1020. [Google Scholar] [CrossRef] [Green Version]

- Delmerico, J.; Scaramuzza, D. A Benchmark Comparison of Monocular Visual-Inertial Odometry Algorithms for Flying Robots. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 2502–2509. [Google Scholar]

- Mourikis, A.I.; Roumeliotis, S.I. A Multi-State Constraint Kalman Filter for Vision-Aided Inertial Navigation. In Proceedings of the 2007 IEEE International Conference on Robotics and Automation, Rome, Italy, 10–14 April 2007; pp. 3565–3572. [Google Scholar]

- Leutenegger, S.; Lynen, S.; Bosse, M.; Siegwart, R.; Furgale, P. Keyframe-based visual–inertial odometry using nonlinear optimization. Int. J. Robot. Res. 2015, 34, 314–334. [Google Scholar] [CrossRef] [Green Version]

- Bloesch, M.; Omari, S.; Hutter, M.; Siegwart, R. Robust Visual Inertial Odometry Using a Direct EKF-Based Approach. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 298–304. [Google Scholar]

- Forster, C.; Zhang, Z.; Gassner, M.; Werlberger, M.; Scaramuzza, D. SVO: Semidirect visual odometry for monocular and multicamera systems. IEEE Trans. Robot. 2016, 33, 249–265. [Google Scholar] [CrossRef] [Green Version]

- Lynen, S.; Achtelik, M.W.; Weiss, S.; Chli, M.; Siegwart, R. A Robust and Modular Multi-Sensor Fusion Approach Applied to MAV Navigation. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 3923–3929. [Google Scholar]

- Faessler, M.; Fontana, F.; Forster, C.; Mueggler, E.; Pizzoli, M.; Scaramuzza, D. Autonomous, vision-based flight and live dense 3D mapping with a quadrotor micro aerial vehicle. J. Field Robot. 2016, 33, 431–450. [Google Scholar] [CrossRef]

- Forster, C.; Carlone, L.; Dellaert, F.; Scaramuzza, D. On-manifold preintegration for real-time visual-inertial odometry. IEEE Trans. Robot. 2016, 33, 1–21. [Google Scholar] [CrossRef] [Green Version]

- Heo, S.; Cha, J.; Park, C.G. EKF-based visual inertial navigation using sliding window nonlinear optimization. IEEE Trans. Intell. Transp. Syst. 2019, 20, 2470–2479. [Google Scholar] [CrossRef]

| No | Study | Study Name | Task | Year | Product/Focus | UAV Type | Purpose of Study |

|---|---|---|---|---|---|---|---|

| 1 | Zhang, Atkinson, George, Wen, Diazgranados and Gerard [31] | Identifying and mapping individual plants in a highly diverse high-elevation ecosystem using UAV imagery and deep learning | Mapping | 2020 | Frailejones | Single UAV | In this study, frailejones plants were classified from UAV images using a newly proposed SS Res U-Net deep learning method. Later, the proposed model was compared with other deep learning-based semantic segmentation methods and was shown to be superior to these methods. |

| 2 | Johansen, Duan, Tu, Searle, Wu, Phinn, Robson and McCabe [32] | Mapping the condition of macadamia tree crops using multi-spectral UAV and WorldView-3 imagery | Mapping | 2020 | Macadamia tree | Single UAV | This study used both multispectral UAV and WorldView-3 images to map the condition of macadamia tree crops. A random forest classifier achieved 98.5% correct matching for both UAV and WorldView-3 images. |

| 3 | Allred et al. [50] | Effective and efficient agricultural drainage pipe mapping with UAS thermal infrared imagery: A case study | Mapping | 2018 | Agricultural underground drainage systems | Single UAV | The Pix4D software and Pix4Dmapper Pro were employed to determine drainage pipe locations using visible (VIS), thermal infrared (TIR) and near-infrared (NIR) imagery obtained by UAV. The study claimed that TIR imagery from UAV has considerable potential for detecting drain line locations under dry-surface conditions. |

| 4 | Christiansen et al. [51] | Designing and Testing a UAV Mapping System for Agricultural Field Surveying | Mapping | 2017 | Winter wheat | Single UAV | Data from sensors such as light detection and ranging (LIDAR), global navigation satellite system (GNSS) and inertial measurement unit (IMU) mounted on a UAV were fused to conduct mapping of winter wheat field. IMU, GNSS and UAV data were used to estimate the orientation and position (pose). The point cloud data from LIDAR were combined with the estimated pose for three-dimensional (3D) mapping. |

| 5 | Gašparović et al. [52] | An automatic method for weed mapping in oat fields based on UAV imagery | Mapping | 2020 | Weed | Single UAV | Four independent classification algorithms derived from the random forest algorithm were tested for the creation of weed maps. Input data were collected using a low-cost RGB camera mounted on a UAV. The automatic object-based classification algorithm had the highest classification accuracy with an overall accuracy of 89.0%. |

| 6 | Schiefer et al. [53] | Mapping forest tree species in high-resolution UAV-based RGB-imagery by means of convolutional neural networks | Mapping | 2020 | Forest tree species | Single UAV | The ability of convolutional neural networks (CNNs) with a semantic segmentation approach (U-Net) to map tree species in a forest environment from UAV RGB imagery was assessed. Nine tree species, deadwood, three genus-level classes and forest floor were accurately and quickly mapped. |

| 7 | Pearse et al. [54] | Detecting and mapping tree seedlings in UAV imagery using convolutional neural networks and field-verified data | Mapping | 2020 | Tree seedlings | Single UAV | A deep learning-based method applied to data from an RGB camera mounted on a UAV was presented for large-scale and rapid mapping of young conifer seedlings. CNN-based models were trained on two sites to detect seedlings with an overall accuracy of 99.5% and 98.8%. |

| 8 | Freitas et al. [55] | Use of UAVs for an efficient capsule distribution and smart path planning for biological pest control | Path planning | 2020 | Exotic pests | Single UAV | A UAV-based coverage algorithm was proposed to cover the whole area and to detect exotic pests damaging it. The algorithm calculated the capsule deposition sites over the whole environment and generated a UAV path through these release locations. In this study, the planned distribution was more advantageous than a zigzag distribution. |

| 9 | Tokekar et al. [56] | Sensor Planning for a Symbiotic UAV and UGV System for Precision Agriculture | Remote sensing | 2016 | Nitrogen level prediction | Single UAV + UGV | This study aimed to predict the nitrogen (N) map of an environment and to plan an optimum path to apply fertilizer with a UAV. A UGV helped to measure each point visited by the UAV. The total time spent traveling and measuring was minimized. The path-minimization problem was formulated as a traveling salesperson problem with neighborhoods (TSPN). |

| 10 | Pan et al. [57] | Effects of citrus tree-shape and spraying height of small unmanned aerial vehicle on droplet distribution | Spraying | 2016 | Citrus trees | Single UAV | The effects of spraying height of a UAV and citrus tree shape were investigated for droplet distribution in this study. The UAV performance at a 1.0 m working height was better than at the other heights. Additionally, to increase the droplet distribution, open center shape citrus trees were advised based on the results of the study. |

| 11 | Faiçal et al. [58] | An adaptive approach for UAV-based pesticide spraying in dynamic environments | Spraying | 2017 | Pesticide | Single UAV | A computer-based system that controls a UAV for precise pesticide deposition in the field was evaluated together with metaheuristic route-planning methods based on particle swarm optimization, genetic algorithms, hill-climbing and simulated annealing for autonomous adaptation of the route. The spray deposition was tracked by sensors, and the system was controlled by wireless sensor networks (WSNs). The proposed system resulted in less environmental damage, more precise changes in the route of flight and more accurate deposition of the pesticide. |

| 12 | Meng et al. [59] | Experimental evaluation of UAV spraying for peach trees of different shapes: Effects of operational parameters on droplet distribution | Spraying | 2020 | Peach trees | Single UAV | The effects of UAV operational parameters on droplet distribution for orchard trees were evaluated in this work. A UAV was used experimentally for the aerial spraying of Y-shape and CL-shape peach trees, and increasing the nozzle flow rate was shown to improve droplet coverage. |

| 13 | Ye, Huang, Huang, Cui, Dong, Guo, Ren and Jin [49] | Recognition of banana fusarium wilt based on UAV remote sensing | Crop monitoring | 2020 | Banana | Single UAV | UAV-based multispectral imagery was used to determine infested banana regions in this work. Banana fusarium wilt disease was identified with a red-edge band multispectral camera sensor. The binary logistic regression method was used to establish the spatial relationships between infested plants and non-infested plants on the known map. |

| 14 | Fu et al. [60] | Wheat growth monitoring and yield estimation based on multi-rotor unmanned aerial vehicle | Crop monitoring | 2020 | Wheat | Single UAV | This study was performed on wheat trials with different seeding densities and nitrogen levels. The images were collected by a multispectral camera mounted on the UAV. Multiple linear regression (MLR), simple linear regression (LR), partial least squares regression (PLSR), stepwise multiple linear regression (SMLR), random forest (RF) and artificial neural network (ANN) modeling methods were used to estimate wheat yield. The experimental results showed that machine learning methods had a better performance for predicting wheat yield. |

| 15 | Cao et al. [61] | Monitoring of sugar beet growth indicators using wide-dynamic-range vegetation index (WDRVI) derived from UAV multispectral images | Crop monitoring | 2020 | Sugar beet | Single UAV | A UAV equipped with a multispectral camera sensor was used for the experiments. In this study, four wide-dynamic-range vegetation indices (WDRVIs) were calculated by adding α weight coefficients to the normalized difference vegetation index (NDVI) to estimate the fresh weight of leaves (FWL), the fresh weight of beet, the leaf area index (LAI) and the fresh weight of roots (FWR) of the sugar beet. Next, the performance of the different indices for sugar beet was compared. According to the study, WDRVI1 can be used as a vegetation index to monitor beet growth. |

| 16 | Johansen et al. [62] | Predicting Biomass and Yield in a Tomato Phenotyping Experiment Using UAV Imagery and Random Forest | Crop monitoring | 2020 | Wild tomato species | Single UAV | In this study, UAV images were used with random forest learning to estimate the biomass and yield of 1200 tomato plants. The results of RGB and multispectral UAV images collected 1 and 2 weeks before harvest were compared. |

| 17 | Tetila et al. [63] | Detection and classification of soybean pests using deep learning with UAV images | Crop monitoring | 2020 | Soybean pests | Single UAV | This study applied five deep learning architectures to classify soybean pest images and compared their results. Accuracy reaching 93.82% was achieved with transfer learning-based methods performed on a dataset consisting of 5000 images. |

| 18 | Zhang et al. [64] | Estimation of maize yield and effects of variable-rate nitrogen application using UAV-based RGB imagery | Crop monitoring | 2020 | Maize | Single UAV | In this study, color images captured remotely by a UAV imaging system were used to estimate maize yield. Various linear regression models were developed for three sample area sizes (21, 106 and 1058 m²). In the yield estimation using linear regression models, a mean absolute percentage error (MAPE) varying between 6.2% and 15.1% was obtained. |

| 19 | Wan et al. [65] | Grain yield prediction of rice using multi-temporal UAV-based RGB and multispectral images and model transfer—a case study of small farmlands in the South of China | Crop monitoring | 2020 | Rice | Single UAV | A UAV platform with RGB and multispectral cameras was used to predict grain yield in rice. Spectral and structural information was obtained from RGB and multispectral images to evaluate grain yield and monitor crop growth status. Grain yield was then predicted using random forest models. |

| 20 | Kerkech et al. [66] | Vine disease detection in UAV multispectral images using optimized image registration and deep learning segmentation approach | Crop monitoring | 2020 | Vine | Single UAV | In this study, deep learning segmentation was applied to UAV images to detect mildew disease in vines. A combination of visible and infrared images was used in this method. With the proposed method, the disease was detected with an accuracy of 92% at the grapevine level and 87% at the leaf level. |

| 21 | Ashapure et al. [67] | Developing a machine learning-based cotton yield estimation framework using multi-temporal UAS data | Crop monitoring | 2020 | Cotton | Single UAV | In this study, multitemporal remote sensing data collected from a UAV were used for cotton yield estimation. In the cotton yield estimation made using artificial neural networks (ANNs), the highest value of R² was 0.89. |

| 22 | Li et al. [68] | Above-ground biomass estimation and yield prediction in potato by using UAV-based RGB and hyperspectral imaging | Crop monitoring | 2020 | Potato | Single UAV | RGB and hyperspectral images were obtained with a low-altitude UAV to estimate biomass and crop yield in potatoes. High accuracy was obtained in biomass estimation using random forest regression models. |

| 23 | Zheng et al. [69] | Growing status observation for oil palm trees using Unmanned Aerial Vehicle (UAV) images | Crop monitoring | 2021 | Palm trees | Single UAV | A classification method was proposed that reveals both the presence and the growth state of oil palm trees. This approach, based on Faster RCNN and called multiclass oil palm detection (MOPAD), produced effective results by using a refined pyramid feature (RPF) and hybrid class-balanced loss together. In this study, palm trees in two regions in Indonesia were classified into five groups using MOPAD. In the classification in two regions, F1-score values were determined to be 87.91% and 99.04%. |

| 24 | Gomez Selvaraj et al. [70] | Detection of banana plants and their major diseases through aerial images and machine learning methods: A case study in DR Congo and the Republic of Benin | Crop monitoring | 2020 | Banana plants | Single UAV | In this study, banana plants and their major diseases were classified using pixel-based and machine learning models applied to multilevel satellite and UAV imagery over mixed-complex African landscapes. Banana bunchy top disease (BBTD), Xanthomonas wilt of banana (BXW), healthy banana cluster and individual banana plants were defined as four classes and classified with 99.4%, 92.8%, 93.3% and 90.8% accuracy, respectively. This approach was reported to have important potential as a decision support system for identifying the major banana diseases encountered in Africa. |

| 25 | Elmokadem [71] | Distributed Coverage Control of Quadrotor Multi-UAV Systems for Precision Agriculture | Field monitoring | 2019 | Region-based UAV control | Multiple UAVs | In this study, multiple UAV control strategies were presented for precision agriculture applications. Using Voronoi partitions, the positions of the UAVs were determined, and collisions with each other were prevented. Simulations were run in Gazebo and Robot Operating System (ROS) to show the performance of the proposed method. |

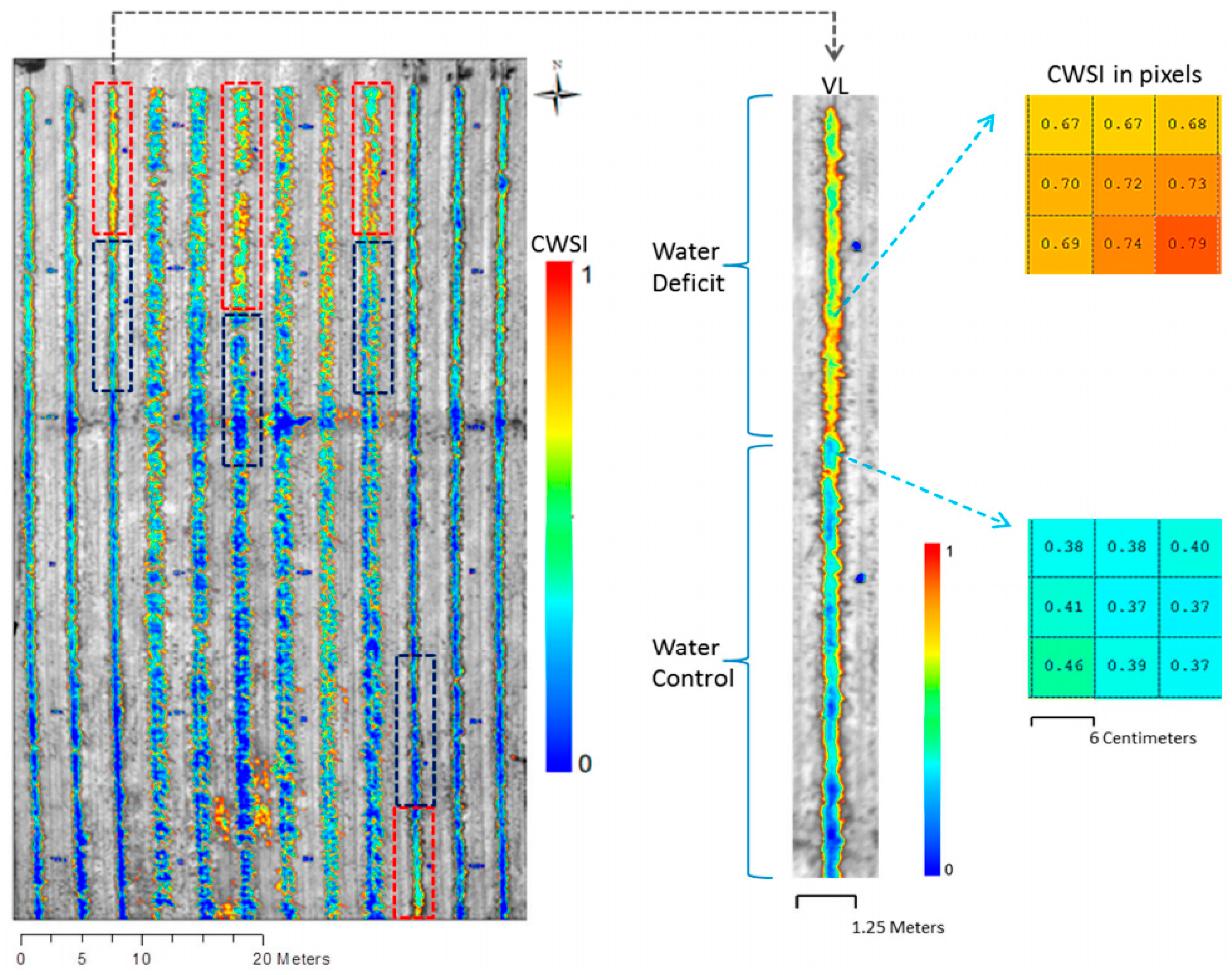

| 26 | Hoffmann et al. [72] | Crop water stress maps for an entire growing season from visible and thermal UAV imagery | Irrigation | 2016 | Barley | Single UAV | A water deficit index (WDI) was obtained using images collected by a UAV. Using this index, the water stress of plants was measured. Both early and growing plant images were used to determine WDI. The WDI differs from commonly used vegetation indices, which are based on the greenness of the surface. The resulting WDI map had a spatial resolution of 0.25 m in this study. |

| 27 | Romero et al. [73] | Vineyard water status estimation using multispectral imagery from a UAV platform and machine learning algorithms for irrigation scheduling management | Irrigation | 2018 | Vine | Single UAV | In this study, a relationship was established between the vegetation index derived from multiband images taken using UAVs and the midday stem water potential of grapes. For this, the pattern recognition ANN model classified the results as severe water stress, moderate water stress and no water stress for certain thresholds. It was determined that this model is a suitable method for optimum irrigation. |

| 28 | Jiyu et al. [74] | Distribution law of rice pollen in the wind field of small UAV | Artificial pollination | 2017 | Rice | Single UAV | In this study, the required flight speed of the UAVs to have a positive effect on the pollination of rice was determined. The flight speed of the UAV, which offers the best pollination opportunity, was determined to be 4.53 m/s. SPSS’s Q-Q plot was used to verify this situation. The findings provided the velocity parameters that should be used by agricultural UAVs to have a positive effect on rice pollination. |

| 29 | dos Santos Ferreira et al. [75] | Weed detection in soybean crops using ConvNets | Weed detection | 2017 | Soybean crops | Single UAV | Images were taken in a soybean field in Brazil using UAVs. With these images, a database was created with the classes soil, soybean, broadleaf weeds and grass weeds. Classification with convolutional neural networks (ConvNets) achieved the best result, with an accuracy of 98%. |

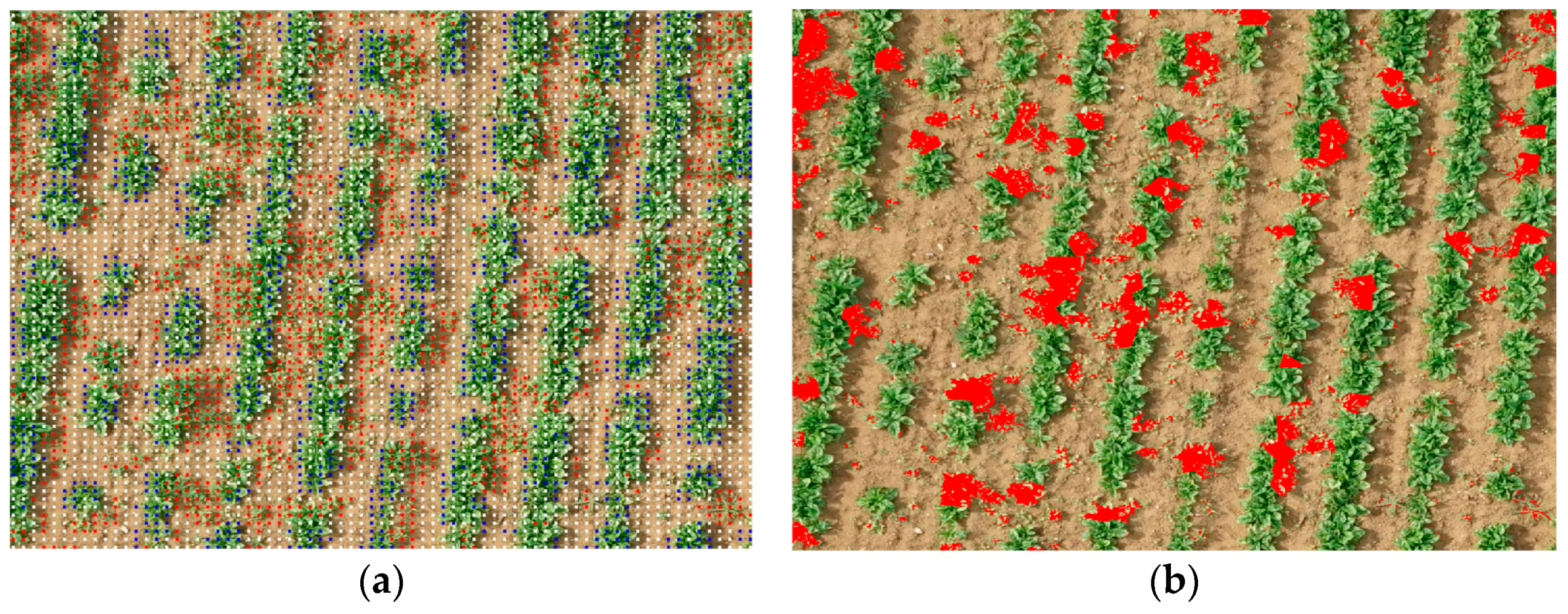

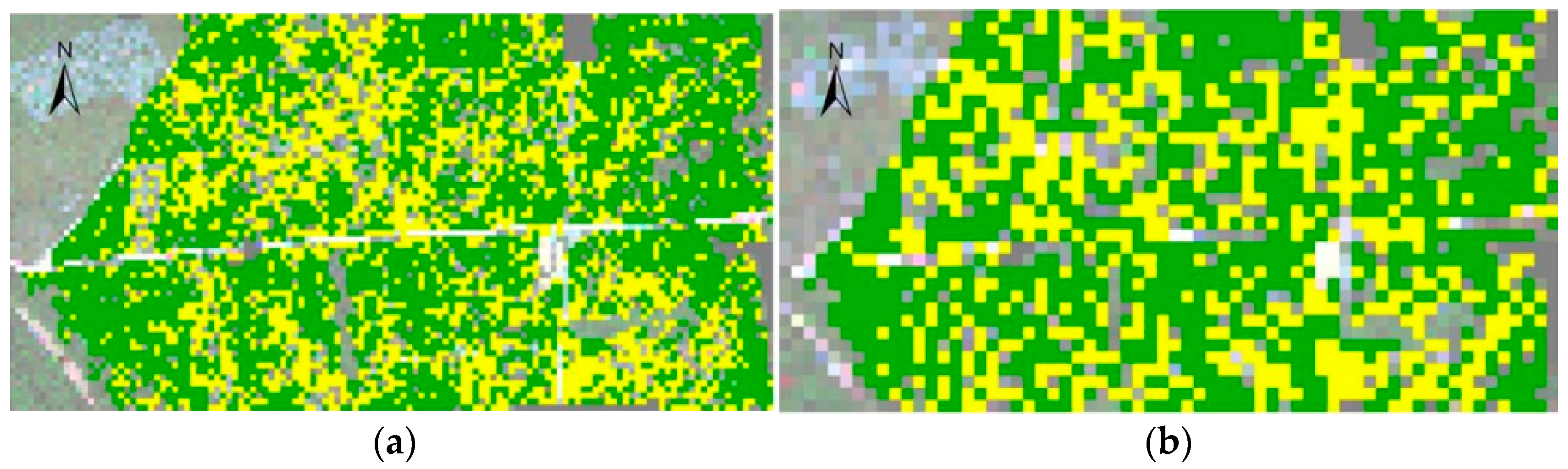

| 30 | Stroppiana et al. [76] | Early season weed mapping in rice crops using multi-spectral UAV data | Weed detection | 2018 | Rice | Single UAV | Shortly after planting rice, the authors mapped the weeds found in the field using a UAV. The images taken by using the Parrot Sequoia sensor were classified as weed or not weed with an unsupervised clustering algorithm. The herbicide was applied by comparing the amount of weed on this map with a certain threshold level. |
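The vegetation indices mentioned in the table above (NDVI and the α-weighted WDRVI used in [61]) can be illustrated with a short sketch. It assumes per-pixel near-infrared and red reflectance values given as plain floats with a non-zero denominator; the function names, the sample reflectances and the α values are illustrative assumptions and are not taken from the cited studies.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized difference vegetation index: (NIR - Red) / (NIR + Red)."""
    return (nir - red) / (nir + red)


def wdrvi(nir: float, red: float, alpha: float = 0.2) -> float:
    """Wide-dynamic-range vegetation index: NDVI with the NIR band scaled by alpha."""
    return (alpha * nir - red) / (alpha * nir + red)


if __name__ == "__main__":
    # Hypothetical reflectances for a dense canopy pixel (not measured data).
    nir, red = 0.50, 0.05
    print(f"NDVI = {ndvi(nir, red):.3f}")
    for alpha in (0.05, 0.1, 0.2):
        print(f"WDRVI(alpha={alpha}) = {wdrvi(nir, red, alpha):.3f}")
```

With α = 1 the WDRVI reduces to the NDVI; smaller α values down-weight the near-infrared band, which is the mechanism the weight coefficients rely on to keep the index sensitive over dense vegetation.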

| No | Study | Study Name | Task | Year | Product/Focus | UAV Type |
|---|---|---|---|---|---|---|
| 1 | Shi, Liu, Mao, Shen, Liu and Ou [93] | Study on Assistant Pollination of Facility Tomato by UAV | Pollination | 2019 | Tomato | Single UAV |
| 2 | Roldán, Garcia-Aunon, Garzón, De León, Del Cerro and Barrientos [79] | Heterogeneous Multi-Robot System for Mapping Environmental Variables of Greenhouses | Mapping | 2016 | Environmental variables | Single UAV |
| 3 | Roldán, Joossen, Sanz, Del Cerro and Barrientos [86] | Mini-UAV Based Sensory System for Measuring Environmental Variables in Greenhouses | Monitoring | 2015 | Environmental variables | Single UAV |
| 4 | Simon, Petkovic, Petkovic and Petkovics [92] | Navigation and Applicability of Hexa Rotor Drones in Greenhouse Environment | Navigation | 2018 | Positioning | Single UAV |

| No | Study | Information | Sensors | Application Environment | Result |
|---|---|---|---|---|---|
| 1 | Dowling et al. [103] | A UAV based on an extended Kalman filter (EKF) that navigates autonomously in a closed indoor environment, builds an area map from 2D laser scan data for navigation and records live video. | LIDAR, ultrasonic sensor (SLAM) | ROS | The map was created by a planar laser scanner using a UAV indoors, and it was shown that the UAV avoided obstacles correctly. |
| 2 | Qin et al. [104] | A UAV and a UGV were used together for autonomous exploration, mapping and navigation in an indoor environment. To take advantage of the heterogeneous robots, the exploration and mapping tasks were divided into two layers. In the first layer, the UGV, equipped with a 3D LIDAR, carries out a preliminary exploration and produces a rough map. The map created by the UGV is shared with the UAV. The UAV then performs complementary precision mapping using an inclined 2D laser module and visual sensors, filling the remaining gaps in the previous map. The approach was tested both in simulation and experimentally. | LIDAR, stereo camera (ZED) (SLAM) | ROS | UAV and UGV advantages were utilized. The structure of the environment was successfully obtained. |
| 3 | Mur-Artal et al. [105] | Feature-based monocular ORB-SLAM was presented for indoor and outdoor environments. For feature extraction, ORB with oriented multiscale FAST corners was used. ORB provides good invariance to viewpoint while being extremely fast to compute and match. This enabled powerful optimization of the mapping. The system combined tracking, local mapping and loop closing threads running in parallel. The DBoW bag-of-words place recognition module was used in the system to perform loop detection. | Monocular camera (VSLAM) | ROS | A very reliable and successful solution for monocular SLAM was developed with ORB-SLAM. |
| 4 | Engel et al. [106] | This study presented the monocular large-scale direct SLAM (LSD-SLAM), which is popular among direct SLAM methods. Direct SLAM algorithms do not extract key points in the image but, instead, use image intensities to estimate location and map. As a result, they are more robust and detailed than feature-based methods (MonoSLAM, PTAM, ORB-SLAM, etc.), but at a higher computational cost. The map of the environment is created from specific key frames containing the camera image, an inverse depth map and the variance of the inverse depth map. The depth map and its variance are created not for all pixels, but only for pixels located near large image intensity gradients, which gives the map a semi-dense structure. | Monocular camera (VSLAM) | ROS | Successful real-time monocular SLAM was performed with LSD-SLAM without feature extraction. |
| 5 | Forster et al. [107] | This study introduced the semi-direct visual odometry (SVO) algorithm, which is very fast and powerful. It eliminates the feature extraction and matching techniques that reduce the speed of visual odometry. SVO combines the properties of feature-based methods (tracking multiple features, parallel tracking and mapping, keyframe selection) with the accuracy and speed of direct methods. | Monocular camera (VSLAM) | ROS | A successful real-time SLAM algorithm was realized by combining the advantages of direct and indirect SLAM algorithms. |
| 6 | Qin et al. [108] | This study proposed a monocular visual-inertial system (VINS) for 6-degrees-of-freedom (DoF) state estimation using a camera and a low-cost IMU. The initialization procedure provides all necessary values, including pose, velocity, gravity vector, gyroscope bias and 3D feature position, to bootstrap the subsequent nonlinear optimization-based visual-inertial odometry (VIO). Initial values were obtained by aligning the IMU measurements with the vision-only structure. After initialization of the estimator, sliding window-based monocular VIO was performed for highly accurate and robust state estimation. A nonlinear optimization-based method was used to combine IMU measurements and visual features. | Monocular camera, IMU (VISLAM) | ROS | A successful VISLAM was achieved with efficient IMU pre-integration, automatic estimator initialization, online extrinsic calibration, failure detection and recovery, loop detection and pose graph optimization. |
| 7 | Delmerico and Scaramuzza [109] | This study evaluated open-source VIO algorithms on flying robot hardware configurations. The methods used were multi-state constraint Kalman filter (MSCKF) [110], open keyframe-based visual-inertial SLAM (OKVIS) [111], robust visual-inertial odometry (ROVIO) [112], monocular visual-inertial system (VINS-Mono) [108], semi-direct visual odometry (SVO) [113] with multisensor fusion (MSF) [114] (SVO-MSF) [115] and SVO with the Georgia Tech Smoothing and Mapping Library (GTSAM) (SVO-GTSAM) [116]. These algorithms were evaluated on the EuRoC Micro Aerial Vehicle (MAV) dataset, which contains 6-DoF motion trajectories for flying robots. | Monocular camera, IMU (VISLAM) | Matlab | The comparison revealed that SVO + MSF had the most accurate performance. In addition, processing time per frame, CPU usage and memory usage criteria were taken into consideration in the study. |
| 8 | Heo et al. [117] | In this study, a new measurement model named local-optimal-multi-state constraint Kalman filter (LOMSCKF) was designed. With this model, the nonlinear optimization method was fused with MSCKF to perform VIO. In addition, unlike MSCKF, all of the measurements and information available in the sliding window were used. | Monocular camera, IMU (VISLAM) | Matlab | The performance of the proposed LOMSCKF was evaluated using both virtual and real-world datasets. LOMSCKF outperformed MSCKF. |
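Several of the methods in the table above (the EKF in [103], MSCKF [110] and LOMSCKF [117]) build on the predict/update cycle of the Kalman filter. The sketch below shows that cycle for the simplest possible case, a scalar linear Kalman filter estimating a constant altitude from noisy range readings. It is not the EKF or MSCKF formulation of the cited works; the function name, the noise variances and the sample readings are illustrative assumptions.

```python
def kalman_1d(measurements, x0=0.0, p0=1.0, q=1e-4, r=0.04):
    """Scalar Kalman filter for a constant state that is observed directly.

    x0, p0: initial estimate and its variance (assumed values)
    q: process noise variance, r: measurement noise variance (assumed values)
    Returns a list of (estimate, variance) pairs, one per measurement.
    """
    x, p = x0, p0
    history = []
    for z in measurements:
        # Predict: the model is x_k = x_{k-1}, so only the uncertainty grows.
        p = p + q
        # Update: blend the prediction and the measurement via the Kalman gain.
        k = p / (p + r)
        x = x + k * (z - x)
        p = (1.0 - k) * p
        history.append((x, p))
    return history


if __name__ == "__main__":
    # Hypothetical noisy altitude readings in metres (not real sensor data).
    readings = [1.52, 1.47, 1.55, 1.49, 1.51, 1.46, 1.53]
    for step, (est, var) in enumerate(kalman_1d(readings, x0=1.0), start=1):
        print(f"step {step}: estimate = {est:.3f} m, variance = {var:.5f}")
```

An EKF follows the same two steps but linearizes a nonlinear motion and measurement model around the current estimate, and the state becomes a vector (for example, the 6-DoF pose) with covariance matrices in place of the scalar variances used here.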

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Aslan, M.F.; Durdu, A.; Sabanci, K.; Ropelewska, E.; Gültekin, S.S. A Comprehensive Survey of the Recent Studies with UAV for Precision Agriculture in Open Fields and Greenhouses. Appl. Sci. 2022, 12, 1047. https://doi.org/10.3390/app12031047