Abstract

This paper presents a method for estimating the position of a sound source of pre-recorded mallard calls from acoustic information using two microphone arrays combined with delay-and-sum beamforming. Rice farming using mallards saves labor because the mallards perform weed and pest control in place of farmers. Nevertheless, the number of mallards declines when they are preyed upon by natural enemies such as crows, kites, and weasels. We consider that efficient management can be achieved by locating and identifying mallards and their natural enemies using acoustic information, which can be sensed over a wide area of a paddy field. For this study, we developed a prototype system comprising two microphone arrays, with 64 microphones in all installed on sensor mounts that we designed and assembled. We obtained three acoustic datasets in an outdoor environment for our benchmark evaluation. The experimental results demonstrated that the proposed system provides adequate accuracy for application to rice–duck farming.

1. Introduction

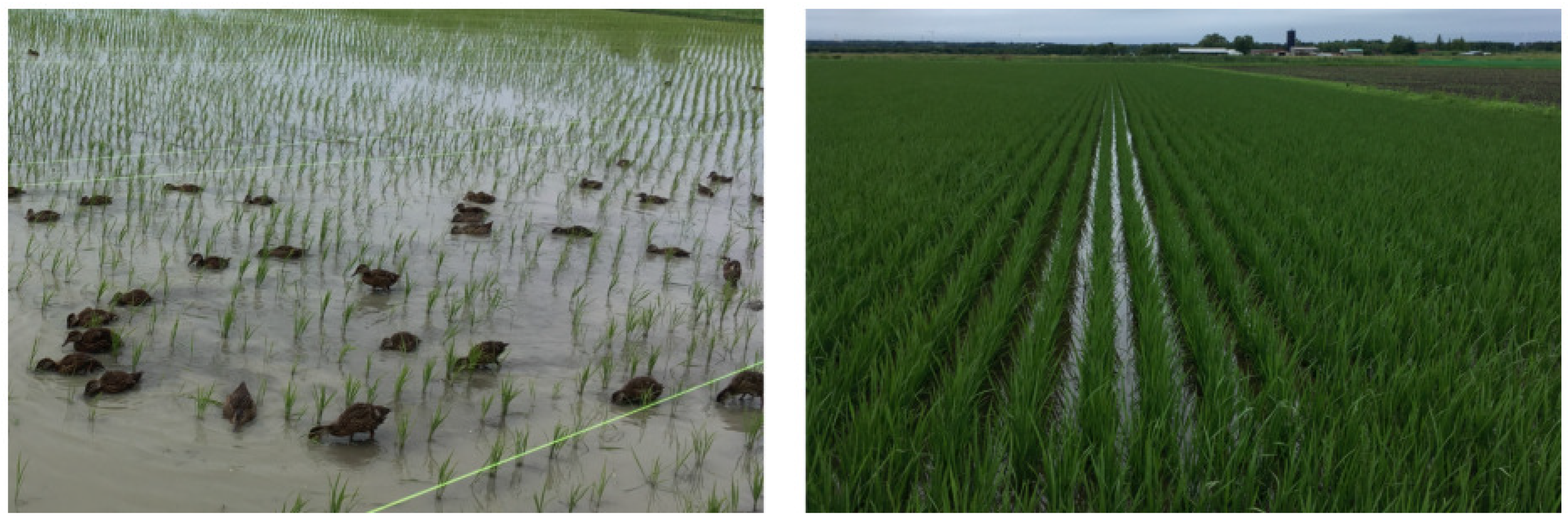

Rice–duck farming, a traditional organic farming method, uses hybrid ducks released in a paddy field for weed and pest control [1]. Farmers in northern Japan use mallards because of their value as a livestock product [2]. Moreover, mallard farming saves labor because the mallards work in place of farmers. It is particularly attractive for farmers in regional communities that face severe difficulties caused by population decline and rapid aging [3]. As mallard farming has attracted attention, it has become popular not only in Japan but also in many other Asian countries.

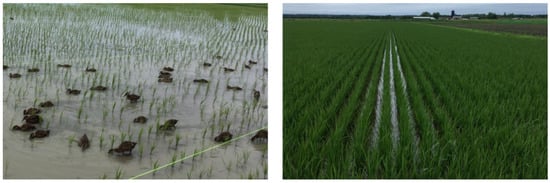

One difficulty posed by mallard farming is that a flock (sord) of mallards tends to gather in a specific area of a paddy field. There, they trample down the rice, producing stepping ponds in which it is difficult to grow rice. Another shortcoming is that no weed control effect is obtained in areas outside the range of mallard activity. Accurate position estimation of mallards in a paddy field is therefore necessary. However, as depicted in the right photograph of Figure 1, detecting mallards among grown rice plants is difficult because the targets are not visible. Moreover, the number of mallards decreases because of predation by natural enemies such as crows, kites, and weasels. To protect mallards from their natural enemies, identifying and managing mallard positions in real time is crucially important.

Figure 1.

Mallards for rice–duck farming (left). After some growth of rice plants (right), mallards are hidden by the plants. Detecting mallards visually would be extremely difficult.

To mitigate or resolve these difficulties, we aim at an efficient management system that locates and identifies mallards and their natural enemies using acoustic information, which can be sensed over a wide area of a paddy field. Such a system could also support effective control by revealing exactly when, where, and what kinds of natural enemies are approaching. This study was conducted to develop a position estimation system for mallards in a paddy field. Stable production technology is strongly demanded because rice produced by mallard farming trades at a high price on the market. We also expect this technology to be developed further and transferred to remote farming [2], which is our conceptual model for realizing smart farming.

This paper presents a direction and position estimation method for a sound source of pre-recorded mallard calls using acoustic information obtained with microphone arrays combined with delay-and-sum (DAS) beamforming. Because it relies on acoustic information, the approach can detect mallards that are occluded by stalks or grass. We therefore infer that an efficient management system can be realized by locating and identifying mallards and their natural enemies with acoustic information sensed over a wide area of a paddy field. We developed a prototype system with 64 microphones arranged in two arrays and installed on mounts that we designed and assembled. To evaluate the estimation accuracy of the method, we conducted simulated experiments in an actual outdoor environment, reproducing pre-recorded mallard calls from a speaker.

This paper is structured as follows. Section 2 briefly reviews state-of-the-art vision-based and acoustic methods for automatic bird detection. Section 3 and Section 4 present the proposed localization method based on DAS beamforming and the originally developed microphone system, respectively. Section 5 presents the experimental results obtained using our original acoustic benchmark datasets acquired with the two microphone arrays. Finally, Section 6 presents the conclusions and highlights future work.

2. Related Studies

Detecting birds flying across the sky in three-dimensional space is a challenging task for researchers and developers. More than a half-century ago, bird detection using radar [4] was studied to prevent bird strikes on aircraft at airports. With advances in software technology and improvements in the performance and affordability of sensors and computers, bird detection methods have diversified, offering improved accuracy and more cost-effective approaches. According to survey articles, recent bird detection methods can be categorized into two major modalities: visual [5,6,7,8] and audio [9,10,11]. Numerous outstanding methods [12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49] have been proposed for both modalities.

Benchmark datasets and challenges for verifying and comparing detection performance have expanded in both the vision [50,51,52,53] and acoustic [54,55,56,57,58,59,60,61,62,63,64,65] modalities. During the first half of the 2010s, probability models and conventional machine-learning (ML) models combined with part-based features obtained using local descriptors were widely used. These methods include k-means [66], morphological filtering (MF) [67], principal component analysis (PCA), Gaussian mixture models (GMMs), expectation–maximization (EM) algorithms [68], boosting [69], scale-invariant feature transform (SIFT) [70], random forests (RFs) [71], bag-of-features (BoF) [72], histograms of oriented gradients (HOGs) [73], support vector machines (SVMs) [74], local binary patterns (LBPs) [75], background subtraction (BS) [76], and multi-instance, multi-label (MIML) [77]. These methods require preprocessing of input signals to enhance features. Moreover, the algorithm selection and parameter optimization conducted in advance for preprocessing have often relied on the subjectivity and experience of developers. If the data characteristics differ even slightly, performance and accuracy can drop drastically. Therefore, parameter calibration is necessary and requires considerable effort. In the latter half of the 2010s, deep-learning (DL) algorithms became predominant, especially after the error back-propagation learning algorithm [78] was implemented in convolutional neural networks (CNNs) [79].

Table 1 and Table 2 present representative studies of vision-based and sound-based bird detection methods, respectively, reported during the last decade. As presented in the fourth column of each table, the representative networks and backbones used in bird detection methods are the following: regions with CNN (RCNN) [80], VGGNet [81], Inception [82], ResNet [83], XNOR-Net [84], densely connected convolutional neural networks (DC-CNNs) [85], fast RCNN [86], faster RCNN [87], you only look once (YOLO) [88], and weakly supervised data augmentation network (WS-DAN) [89]. End-to-end DL models require no preprocessing for feature extraction from the input signals [90]. Moreover, one-dimensional acoustic signals can be input to DL models as two-dimensional images [91].

Table 1.

Representative studies of vision-based bird detection methods reported during the last decade.

Table 2.

Representative studies of sound-based bird detection methods reported during the last decade.

Recently, vision-based detection methods have targeted not only birds but also drones [92]. In 2019, the Drone-vs-Bird Detection Challenge (DBDC) was held at the 16th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS) [93]. The goal of this challenge was to detect drones appearing at various image coordinates in videos without detecting birds present in the same frames. The algorithms had to raise alerts only for detected drones, not for birds, and to estimate drone locations in image coordinates. As a representative challenge for acoustic bird detection, the bird acoustic detection task [65] was conducted at the Detection and Classification of Acoustic Scenes and Events (DCASE) challenge in 2018. Among the 33 submissions evaluated, the team of Lasseck et al. [44] won first place. For this task, the organizers provided 10 h of sound clips, including negative samples containing human imitations of bird sounds. For randomly extracted 10 s sound clips, the algorithms produced a binary output indicating whether the clips contained bird sounds. Our study differs from this challenge in that it extracts the coordinates of birds from sound clips of the same period. To improve accuracy across a wide range of applications, the algorithms developed for the challenge incorporated domain adaptation, transfer learning [94], and generative adversarial networks (GANs) [95]. By contrast, our study is specialized for mallard farming within remote farming [2].

Compared with the many previous studies mentioned above, the novelty and contributions of this study are as follows:

- The proposed method can detect the position of a sound source of pre-recorded mallard calls output from a speaker using two parameters, namely the direction angles obtained from our originally developed microphone arrays;

- Compared with existing sound-based methods, this study provides a detailed evaluation comprising 57 positions in total through three evaluation experiments;

- To the best of our knowledge, this is the first study to demonstrate and evaluate mallard detection based on DAS beamforming in the wild.

Building on the underlying method used in drone detection [96] and our originally developed single-sensor platform [97], this paper presents a novel sensing system and reports results obtained from outdoor experiments.

3. Proposed Method

3.1. Position Estimation Principle

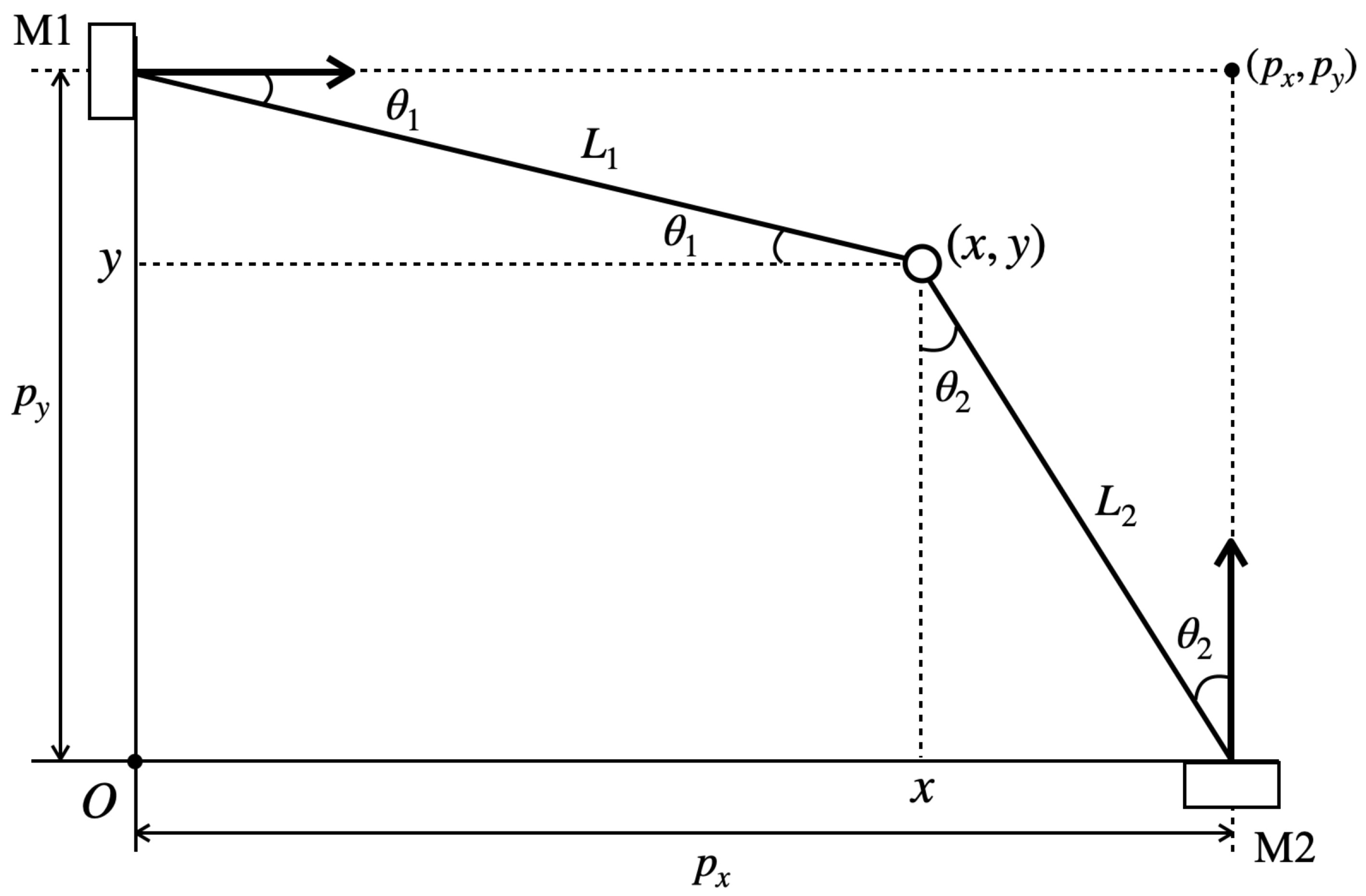

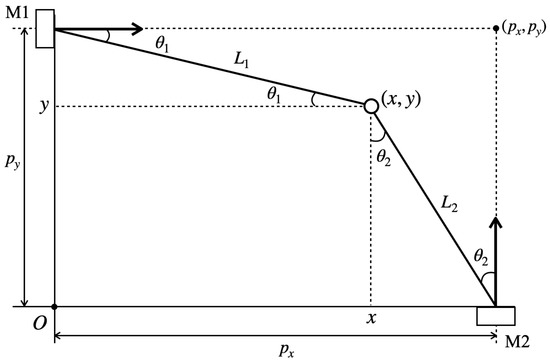

Figure 2 depicts the arrangement of the two microphone arrays for position estimation. The direction perpendicular to each array was set as the 0° direction of the beam. Let $P(x, y)$ be the coordinates of an estimation target position relative to origin O. The two microphone arrays, designated as M1 and M2, are installed at a horizontal distance and a vertical distance, as shown in Figure 2.

Figure 2.

Arrangement of two microphone arrays M1 and M2 for position estimation.

Let $\theta_1$ and $\theta_2$ be the angles between the lines perpendicular to M1 and M2 and the straight lines to P, and let $r_1$ and $r_2$ represent the straight-line distances from M1 and M2, respectively, to P. Using trigonometric functions, $x$ and $y$ are then given as presented below [98].

The following expanded equation is obtained by solving for .

One can obtain as follows:

Alternatively, using , one can obtain as follows:

The following expanded equation is obtained by solving for .

Using and , one can obtain as follows:

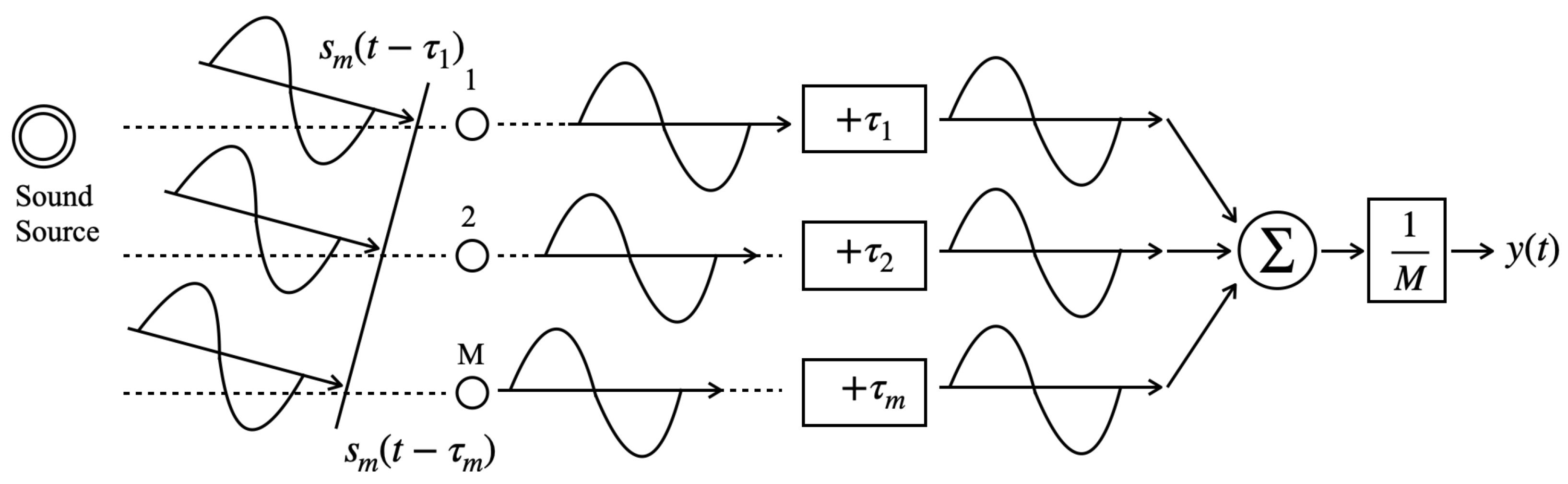

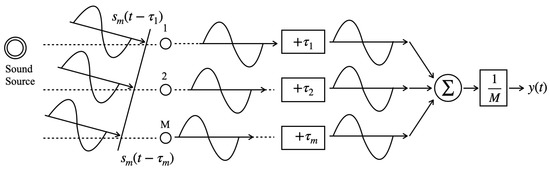

Angles $\theta_1$ and $\theta_2$ are calculated using the DAS beamforming method, as shown in Figure 3.

Figure 3.

Delay-and-sum (DAS) beamforming in the time domain [96].
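The position estimation principle amounts to intersecting two bearing lines, one from each array. The following Python sketch illustrates this under our own assumptions rather than reproducing the equations of this section: each array is described by its position and a facing angle (its 0° beam direction) in a common x–y frame, estimated angles are measured counterclockwise from that facing direction, and the names `true_bearing` and `triangulate` are hypothetical. It also shows why a target on the straight line joining M1 and M2 cannot be located: there the two bearing lines are parallel, so the 2 × 2 linear system is singular.

```python
import math
from typing import Optional, Tuple

Point = Tuple[float, float]


def true_bearing(array_pos: Point, facing_deg: float, target: Point) -> float:
    """Angle (deg) of `target` seen from an array, measured counterclockwise
    from the array's 0-degree beam direction and wrapped to [-180, 180)."""
    dx, dy = target[0] - array_pos[0], target[1] - array_pos[1]
    angle = math.degrees(math.atan2(dy, dx)) - facing_deg
    return (angle + 180.0) % 360.0 - 180.0


def triangulate(p1: Point, facing1: float, theta1: float,
                p2: Point, facing2: float, theta2: float,
                eps: float = 1e-9) -> Optional[Point]:
    """Intersect the bearing rays of two arrays; return None if undetermined."""
    a1 = math.radians(facing1 + theta1)
    a2 = math.radians(facing2 + theta2)
    u1 = (math.cos(a1), math.sin(a1))
    u2 = (math.cos(a2), math.sin(a2))
    # Solve p1 + r1*u1 = p2 + r2*u2, i.e. r1*u1 - r2*u2 = p2 - p1, by Cramer's rule.
    bx, by = p2[0] - p1[0], p2[1] - p1[1]
    det = u2[0] * u1[1] - u1[0] * u2[1]
    if abs(det) < eps:
        return None  # parallel rays: target on the line joining the two arrays
    r1 = (u2[0] * by - bx * u2[1]) / det
    r2 = (u1[0] * by - u1[1] * bx) / det
    if r1 < 0 or r2 < 0:
        return None  # the rays would only meet behind one of the arrays
    return (p1[0] + r1 * u1[0], p1[1] + r1 * u1[1])


# Assumed Experiment B layout: M1 at (0, 30) facing +x, M2 at (30, 0) facing +y.
m1, m2 = (0.0, 30.0), (30.0, 0.0)
t1 = true_bearing(m1, 0.0, (10.0, 10.0))   # about -63.4 deg
t2 = true_bearing(m2, 90.0, (10.0, 10.0))  # about +63.4 deg
print(triangulate(m1, 0.0, t1, m2, 90.0, t2))      # close to (10.0, 10.0)
print(triangulate(m1, 0.0, -45.0, m2, 90.0, 45.0))  # None: (15, 15) lies on the diagonal
```

The facing angles of 0° and 90° in the usage lines are our reading of the "horizontal" and "vertical" frontal orientations described for Experiment B, not values stated in the text.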

3.2. DAS Beamforming Algorithm

Beamforming is a versatile technique for directional signal enhancement with sensor arrays [99]. Let $y(t)$ be the beamformer output signal at time $t$, let $M$ be the number of microphones, and let $x_m(t)$ and $w_m(t)$ respectively denote the measured signal and the filter of the $m$-th sensor. Then $y(t)$ is calculated as

$$y(t) = \sum_{m=1}^{M} w_m(t) \otimes x_m(t),$$

where the symbol ⊗ represents convolution.

Following our previous study [96], we consider DAS beamforming in the time domain. Assuming a single plane wave incident from direction $\theta$ and a set of acoustic signals $x_m(t)$, $m = 1, \dots, M$, where $M$ represents the total number of microphones, the incident wave observed at the $m$-th sensor is delayed by $\tau_m$, which depends on $\theta$, the microphone spacing, the speed of sound, and the position of the $m$-th sensor within the array.

The delay $-\tau_m$ of the incident wave is offset by a filter that advances the signal by $+\tau_m$. Signals arriving from direction $\theta$ are thus enhanced because their phases are aligned in all channels. The time-compensating filter is defined as

$$w_m(t) = \delta(t + \tau_m),$$

where $\delta(t)$ is Dirac's delta function.

Letting $\theta$ be the steering angle of the beamformer, the relative mean power level $P(\theta)$ of the output $y(t)$ is used to compare acoustic directions; it is computed from the mean power of $y(t)$ over an interval of length $T$.

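We varied $\theta$ from −90° to 90° in 1° steps. Let $P_1(\theta)$ and $P_2(\theta)$ denote $P(\theta)$ obtained from M1 and M2, respectively. Using $P_1(\theta)$ and $P_2(\theta)$, the direction angles $\theta_1$ and $\theta_2$ are obtained as

$$\theta_1 = \arg\max_{\theta} P_1(\theta), \qquad \theta_2 = \arg\max_{\theta} P_2(\theta).$$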
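To make the time-domain DAS procedure concrete, the following minimal NumPy sketch simulates a plane wave on a uniform linear array and scans the steering angle from −90° to 90° in 1° steps. Several choices are our assumptions, not the authors' implementation: microphone positions measured from the array centre, a delay convention $\tau_m = p_m \sin\theta / c$, a speed of sound of 343 m/s, a 16 kHz sampling rate, band-limited noise standing in for a mallard call, and fractional delays applied as circular FFT phase shifts instead of literal Dirac-delta filters. All function names are hypothetical.

```python
import numpy as np

C = 343.0  # speed of sound in m/s (assumed)


def mic_positions(num_mics, spacing):
    """Microphone coordinates along the array axis, centred on the array midpoint."""
    return (np.arange(num_mics) - (num_mics - 1) / 2.0) * spacing


def plane_wave_delays(theta_deg, num_mics, spacing):
    """Arrival delay tau_m (s) at each microphone for a plane wave from theta."""
    return mic_positions(num_mics, spacing) * np.sin(np.deg2rad(theta_deg)) / C


def shift(signals, delays, fs):
    """Delay row m of `signals` by delays[m] seconds (negative values advance).

    Implemented as an FFT phase ramp, so the shift is fractional and circular."""
    n = signals.shape[-1]
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    spectra = np.fft.rfft(signals, axis=-1)
    ramp = np.exp(-2j * np.pi * freqs[None, :] * delays[:, None])
    return np.fft.irfft(spectra * ramp, n=n, axis=-1)


def das_power(signals, theta_deg, spacing, fs):
    """Mean power of the DAS output steered to theta.

    Each channel is advanced by +tau_m (the role of the filter delta(t + tau_m)),
    the channels are averaged (a 1/M scaling of the sum, which does not move the
    peak), and the mean of y(t)^2 is returned."""
    tau = plane_wave_delays(theta_deg, signals.shape[0], spacing)
    y = shift(signals, -tau, fs).mean(axis=0)
    return float(np.mean(y ** 2))


def estimate_direction(signals, spacing, fs):
    """Scan -90..90 deg in 1 deg steps and return the angle of maximum power."""
    angles = np.arange(-90, 91)
    powers = np.array([das_power(signals, a, spacing, fs) for a in angles])
    return int(angles[np.argmax(powers)]), powers


def bandlimited_noise(n, fs, f_lo, f_hi, seed=0):
    """White noise restricted to [f_lo, f_hi] Hz, a stand-in for a recorded call."""
    spectrum = np.fft.rfft(np.random.default_rng(seed).standard_normal(n))
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    spectrum[(freqs < f_lo) | (freqs > f_hi)] = 0.0
    return np.fft.irfft(spectrum, n=n)


# 32 microphones at 30 mm intervals, source band below 5 kHz, true direction 30 deg.
fs, num_mics, spacing = 16000, 32, 0.03
source = bandlimited_noise(4096, fs, 200.0, 5000.0)
observed = shift(np.tile(source, (num_mics, 1)),
                 plane_wave_delays(30.0, num_mics, spacing), fs)
theta_hat, _ = estimate_direction(observed, spacing, fs)
print(theta_hat)  # expected to print 30 for this noise-free simulation
```

Because the source band stays below $c/(2d)$, no grating lobe can reach the power of the true direction, so the argmax is unique in this idealized setting; real recordings with noise and reverberation would not be this clean.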

4. Measurement System

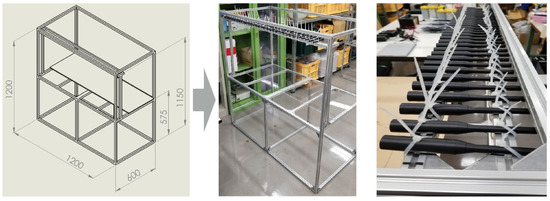

4.1. Mount Design

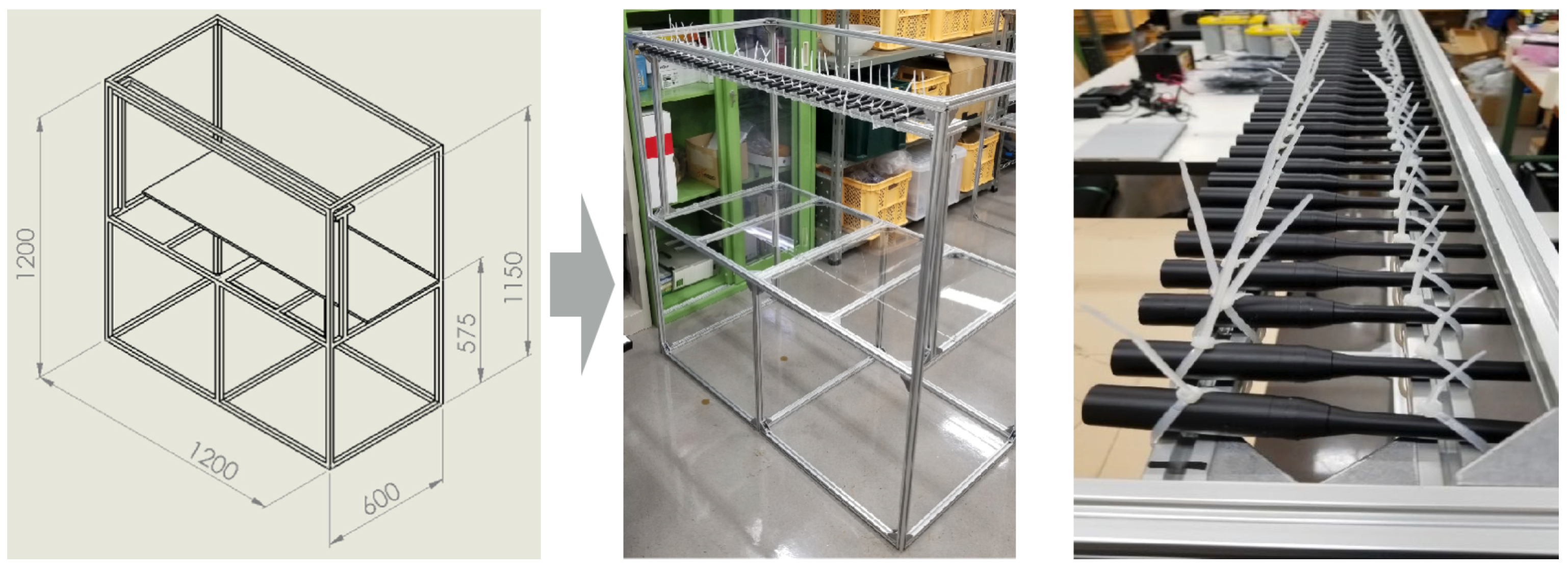

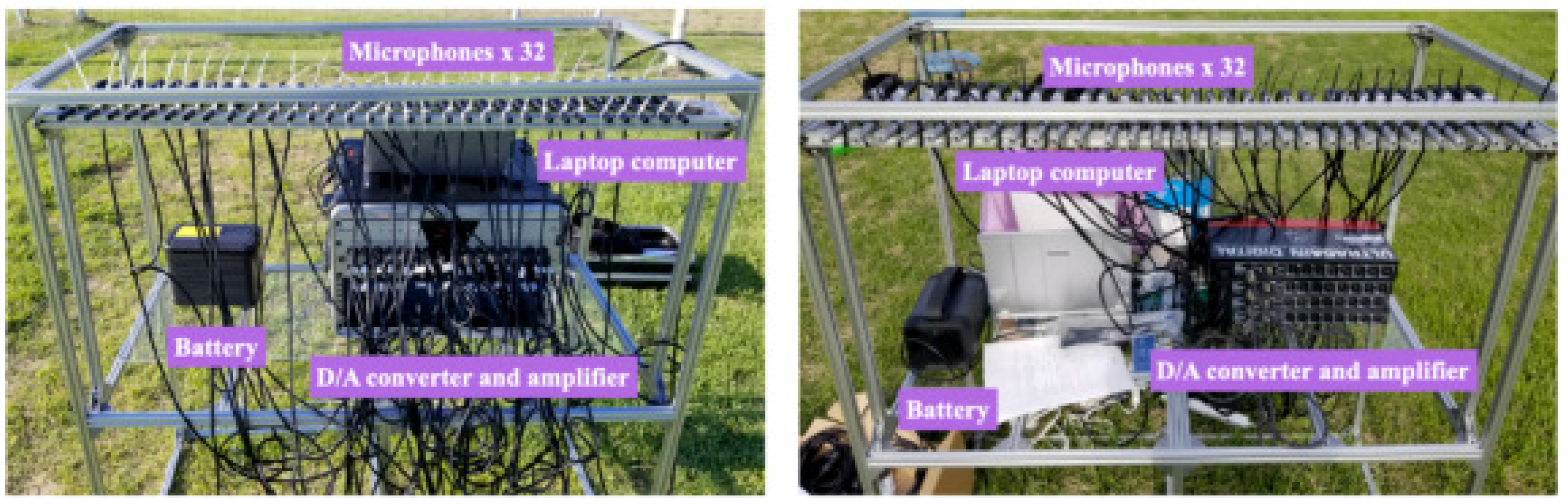

For this study, we designed an original sensor mount that holds the microphones and the electrical devices: an amplifier, an analog–digital converter, a battery, an inverter, and a laptop computer. Figure 4 depicts the design and the assembled mount. We used 20 mm square aluminum pipes for the main frame. The pipe ends were joined with L-shaped connectors, bolts, and nuts. The electrical devices were placed on an acrylic plate located at a height of 575 mm. The microphones were installed in a straight line at 30 mm intervals on a 1200 mm long bracket arm.

Figure 4.

Originally designed and assembled sensor mount.

Regarding microphone placement, spatial aliasing (foldback) occurs for high-frequency signals if the spacing is wide. In general [98], the microphone spacing $d$ and the upper frequency $f$ below which no foldback occurs are related as

$$f = \frac{c}{2d},$$

where the constant $c$ is the speed of sound. Our preliminary experimental results confirmed that the power of mallard calls was concentrated in the band below 5 kHz. Therefore, we installed the microphones at 30 mm intervals, for which the upper limit is approximately 5.6 kHz. Conversely, if $d$ is narrow, the directivity at low frequencies is reduced.

The height from the ground to the microphones was 1150 mm. We used cable ties to fasten the microphones to the bracket arm. We also numbered each microphone so that the cable connections to the converter terminals could be checked easily.

4.2. Microphone Array

Table 3 presents the components and their quantities for each microphone array. We used different devices for M1 and M2 because of various circumstances, such as the time of introduction, the research budget, and coordination with our related research projects. The microphones used for M1 and M2 were DBX RTA-M (Harman International Inds., Inc., Stamford, CT, USA) and ECM-8000 (Behringer Music Tribe Makati, Metro Manila, Philippines), respectively. Table 4 presents detailed specifications for both microphones. Their common specifications are an omnidirectional polar pattern and a 20–20,000 Hz frequency range. For the amplifier and analog-to-digital (AD) converter, we used separate devices for M1 and an integrated device for M2. Both converters include a built-in anti-aliasing filter. With a microphone interval of $d = 0.03$ m, grating lobes may appear at the upper limit of 5.6 kHz and above. However, we assumed that the effect is relatively small because the power of mallard calls is concentrated in the band below 5 kHz. For the outdoor data acquisition experiments, we used portable lithium-ion batteries of two types. Moreover, to supply the measurement devices with 100 V, the commercial power supply voltage in Japan, we used an inverter to convert direct current into alternating current.

Table 3.

System components and quantities for the respective microphone arrays: M1 and M2.

Table 4.

Detailed specifications of microphones of two types.

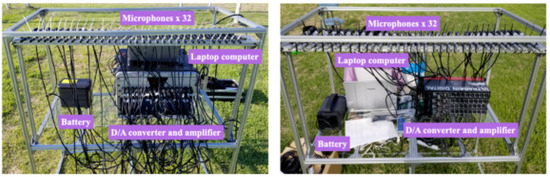

Figure 5 portrays photographs of all the components in Table 3 installed on the mount depicted in Figure 4. In all, the 64 microphones were connected to their respective amplifiers with 64 cables in parallel.

Figure 5.

Photographs showing the appearance of M1 (left) and M2 (right) after the setup.

5. Evaluation Experiment

5.1. Experiment Setup

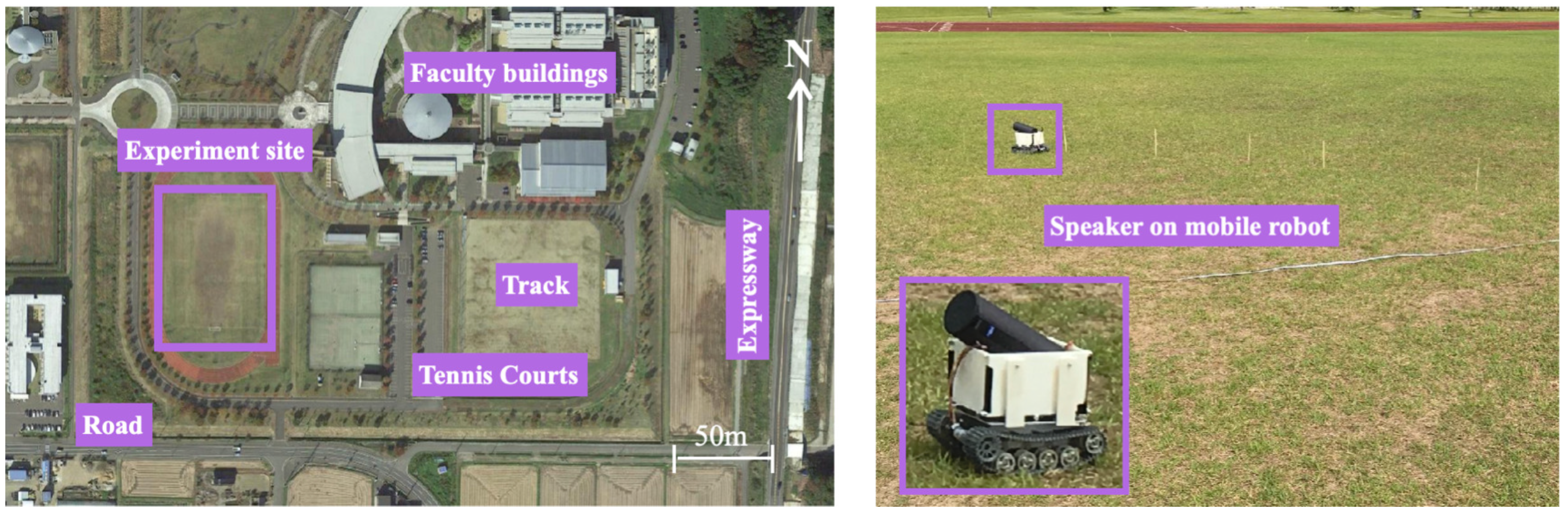

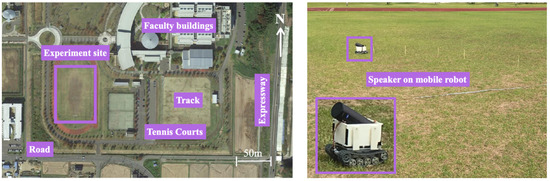

Acoustic data acquisition experiments were conducted at the Honjo Campus of Akita Prefectural University (Yurihonjo City, Akita Prefecture, Japan). This campus, located at 39°23′37″ north latitude and 140°4′23″ east longitude, has an area of 204,379 m². The left panel of Figure 6 portrays an aerial photograph of the campus. The surroundings comprise an expressway to the east and a public road to the south. The experiment was conducted at the athletic field on the west side of the campus, as depicted in the right panel of Figure 6.

Figure 6.

Aerial photograph showing the experimental environment (left) and UGV used to move the sound source positions (right).

We used a pre-recorded mallard call as the sound source. We played it on a Bluetooth wireless speaker mounted on a small unmanned ground vehicle (UGV), which moved the speaker to the respective measurement positions. In our earlier study, we developed this UGV as a prototype for mallard navigation, particularly for remote farming [2]. We operated it remotely via a wireless network. Our evaluation targeted the detection of stationary sound sources: while the UGV was stopped at each measurement position, we played a mallard call sound file for 10 s. The sound pressure level produced from the speaker was dB.

We conducted the experiments on 19, 26, and 28 August 2020. Table 5 shows the meteorological conditions on the respective experiment days. All days were clear and sunny under a migratory anticyclone. The experiments were conducted from 14:00 to 15:00 Japan Standard Time (JST), which is 9 h ahead of Coordinated Universal Time (UTC). The temperature was approximately 30 °C, the humidity was within the typical mean range for Japan, and the wind speed was less than 5 m/s.

Table 5.

Meteorological conditions on the respective experiment days.

We conducted three evaluation experiments, designated as Experiments A–C. Experiment A was a sound source direction estimation experiment using a single microphone array, intended to verify the angular resolution. Experiments B and C were position estimation experiments using two microphone arrays. These two setups differed in field size and in the orientations of the microphone arrays.

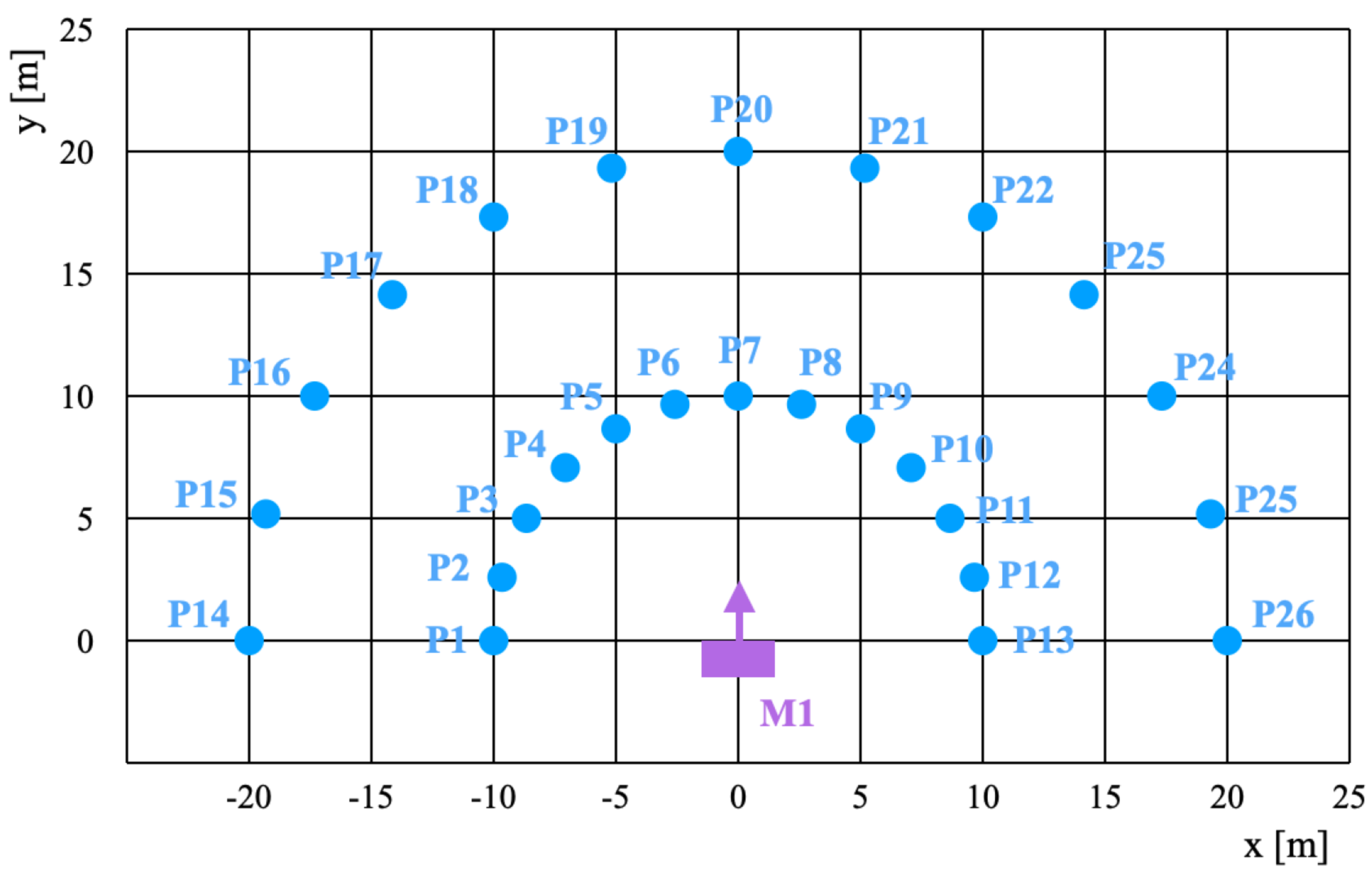

5.2. Experiment A

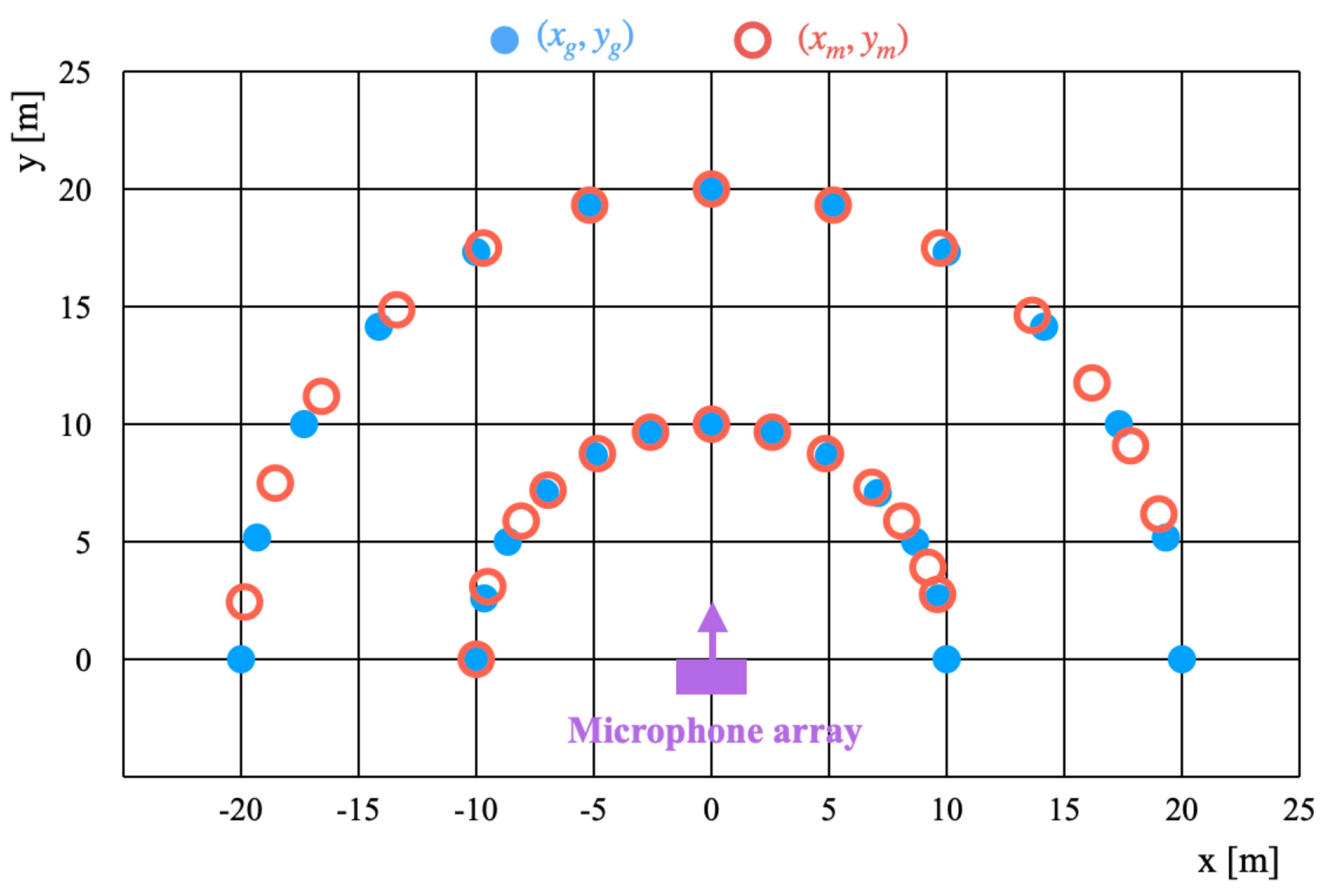

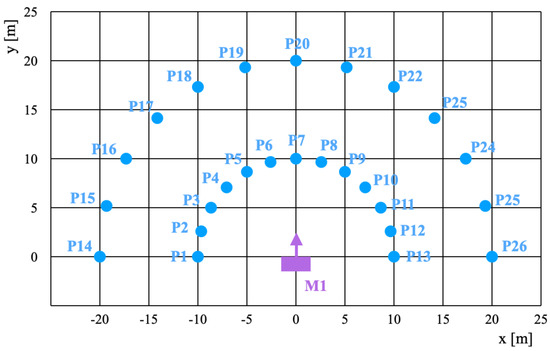

Figure 7 depicts the setup of the sound source positions and the microphone array for Experiment A. As a preliminary experiment, we used M1 alone to evaluate the angular resolution. The sound source was placed at 26 positions, P1–P26, divided into two groups, P1–P13 and P14–P26, located on half circles with radii of 10 m and 20 m, respectively. The positions were spaced at 15° intervals as seen from the origin. Each of P1–P13 lies on the straight line from the origin to the corresponding position among P14–P26.

Figure 7.

Setup of sound source positions and the microphone array M1 for Experiment A.

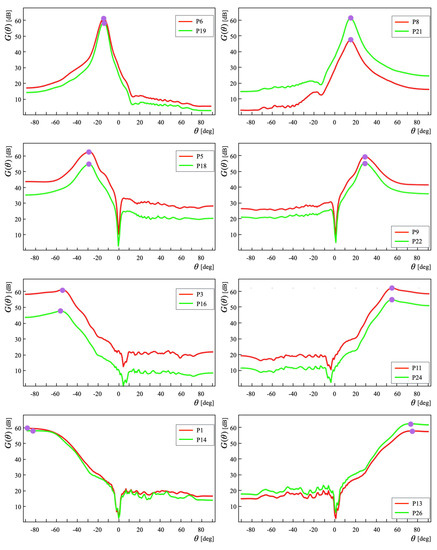

Let $\theta_g$ denote the ground-truth (GT) angle of each position. Figure 8 depicts the angle estimation results for 16 positions at $\theta_g$ = ±15°, ±30°, ±60°, and ±90°. The results demonstrated a unimodal distribution of the output power $P(\theta)$. Moreover, the values of $P(\theta)$ at positions with a radius of 10 m were greater than those at 20 m. The purple-filled circles denote the peaks of the respective output waves. We let $\theta_e$ represent the angle that maximizes $P(\theta)$, calculated from Formula (21).

Figure 8.

Angle estimation results at 16 positions ($\theta_g$ = ±15°, ±30°, ±60°, and ±90°).

Table 6.

Angle estimation results and error values found from Experiment A (°).

The experimentally obtained results demonstrated that in . Overall, the angle and the error E were positively correlated, except for the negative angles at the 10 m radius.

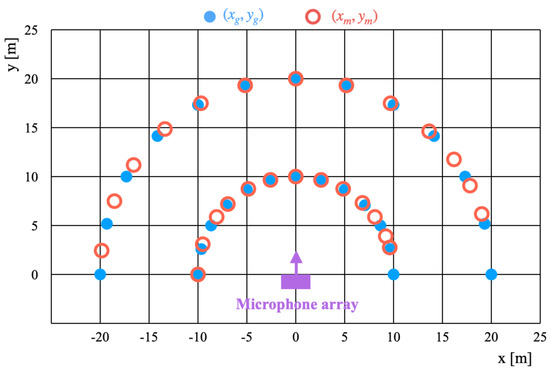

Figure 9 presents the two-dimensional distributions of the estimated positions for comparison with the GT coordinates of the respective positions. Because only one microphone array was used in this setup, the distance between M1 and each sound source position could not be estimated. Therefore, provisional positions were calculated by substituting the known radius, 10 m or 20 m, as the distance.
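Because a single array yields only a direction, the provisional positions combine the estimated angle with the known radius. The minimal sketch below assumes M1 at the origin with its 0° direction along the +y axis and positive angles toward +x; this is our assumption about the axes of Figure 9, and the function names are hypothetical. It also shows how a given angular error turns into a positional error that grows linearly with the radius.

```python
import math


def provisional_position(theta_deg: float, radius_m: float):
    """Polar-to-Cartesian conversion with 0 deg along +y (assumed axes)."""
    t = math.radians(theta_deg)
    return radius_m * math.sin(t), radius_m * math.cos(t)


def position_error(theta_true_deg: float, theta_est_deg: float, radius_m: float) -> float:
    """Distance between the GT point and the provisional estimate on the same circle."""
    xg, yg = provisional_position(theta_true_deg, radius_m)
    xe, ye = provisional_position(theta_est_deg, radius_m)
    return math.hypot(xe - xg, ye - yg)


# A 1 deg angular error gives a chord of 2 r sin(0.5 deg):
# roughly 0.17 m at 10 m and 0.35 m at 20 m.
for r in (10.0, 20.0):
    print(r, round(position_error(60.0, 59.0, r), 3))
```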

Figure 9.

Comparative positional distributions between the estimated positions and the GT positions found from Experiment A.

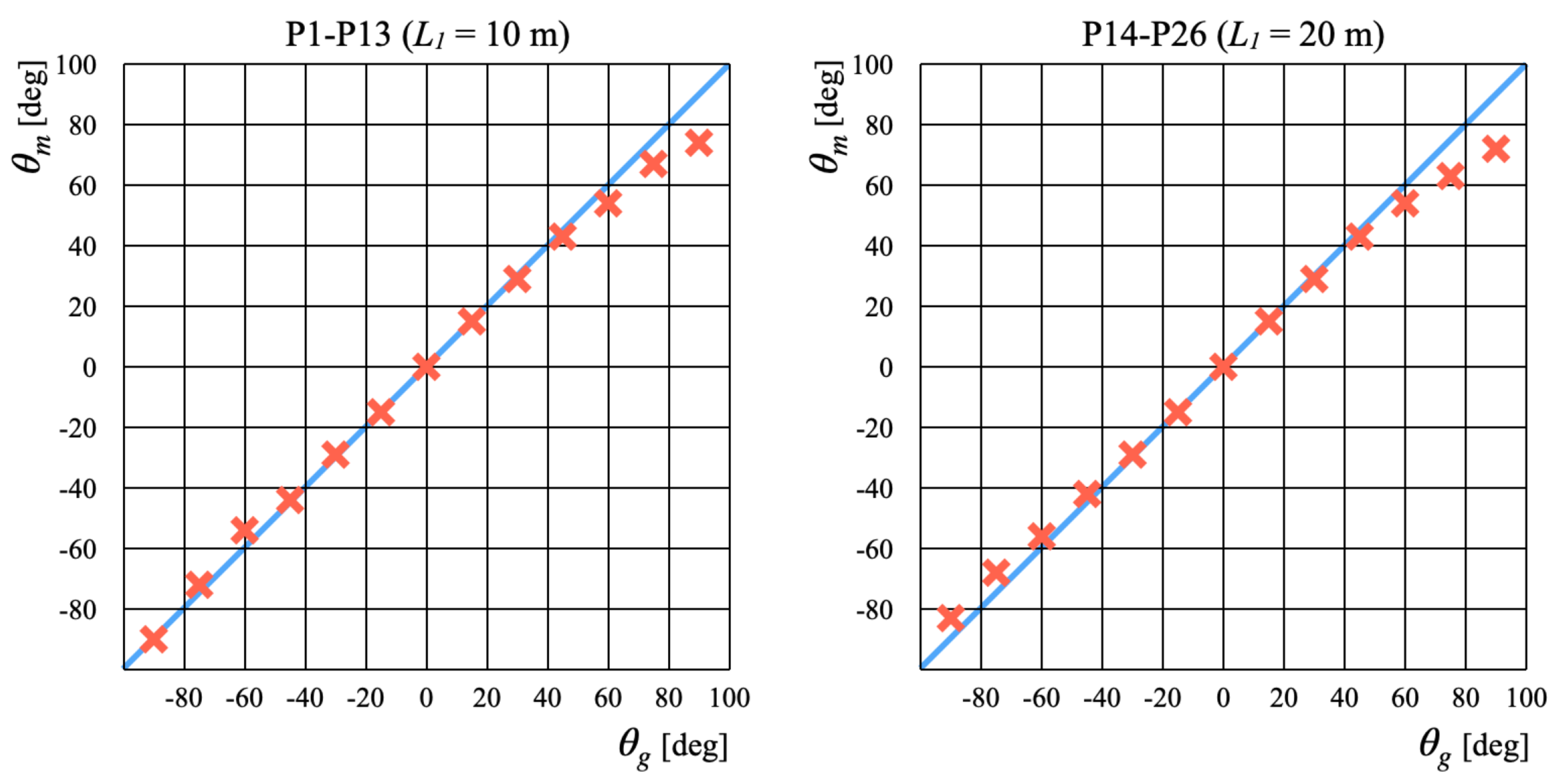

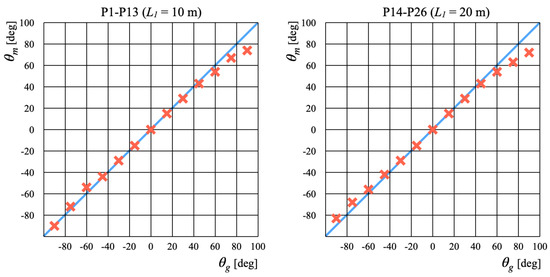

Figure 10 presents scatter plots of the GT angles $\theta_g$ and the estimated angles $\theta_e$. The results for radii of 10 m and 20 m are presented in the left and right panels, respectively. For positive angles, $\theta_e$ relative to $\theta_g$ became smaller as the angle increased. By contrast, for negative angles, it became greater as the angle increased in magnitude. That is, as $|\theta_g|$ increased, $|\theta_e|$ relative to $|\theta_g|$ tended to decrease.

Figure 10.

Scatter plots of the GT angles and estimated angles for P1–P13 (left) and P14–P26 (right).

5.3. Experiment B

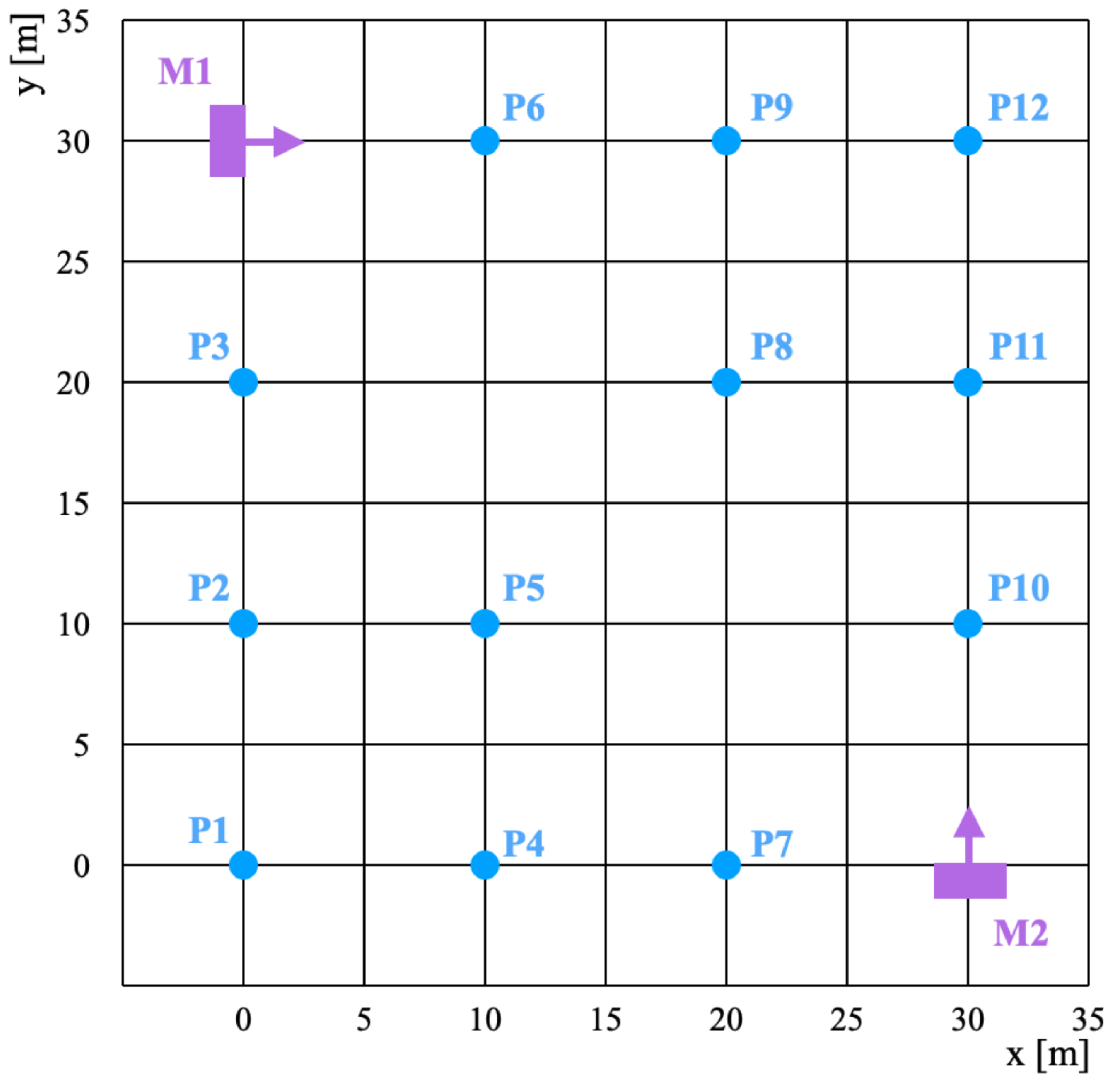

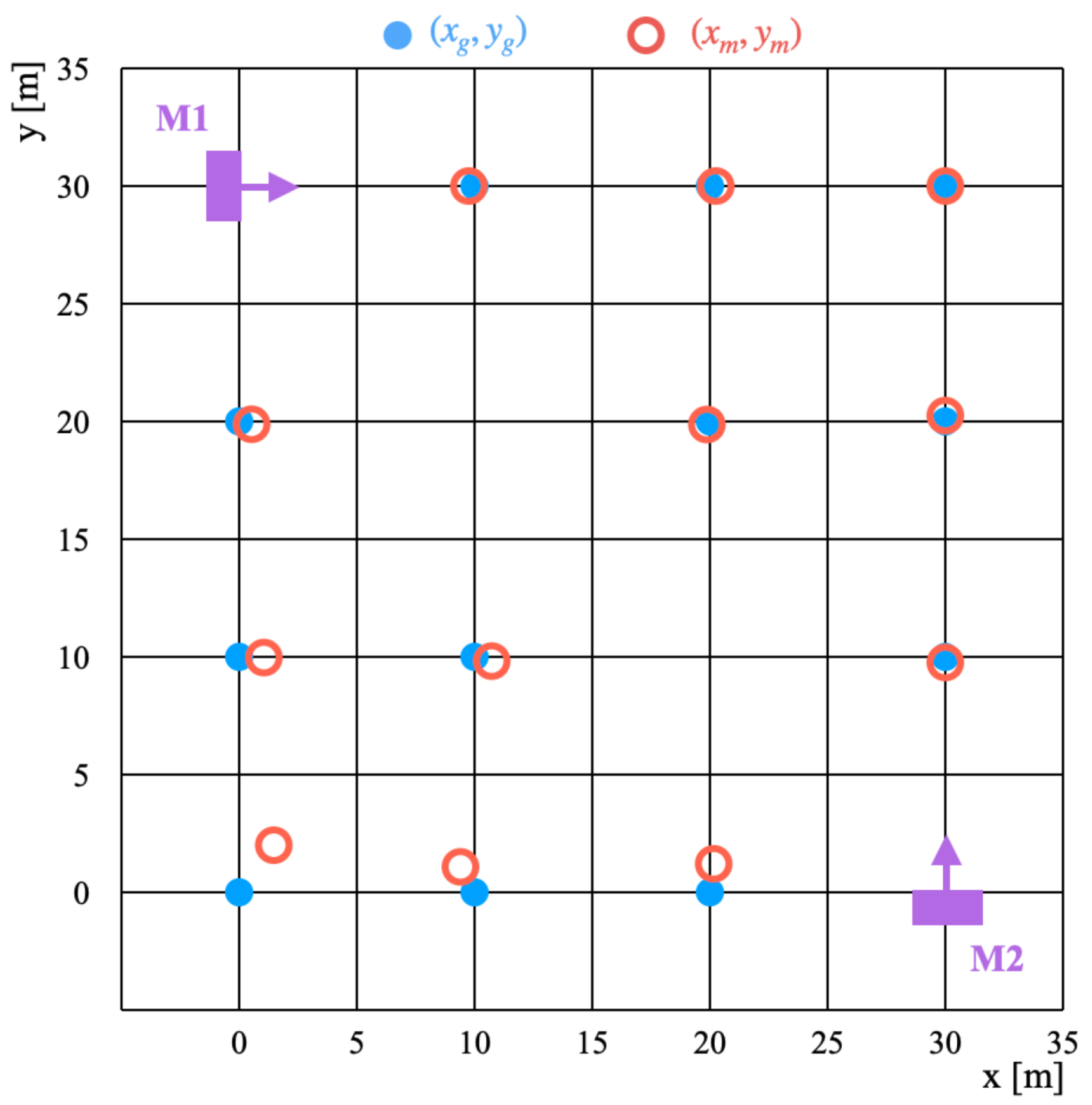

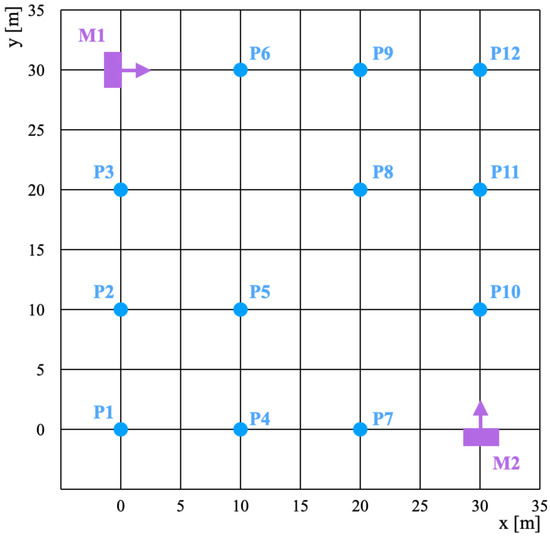

Experiment B was performed to evaluate position estimation using two microphone arrays, M1 and M2. Figure 11 depicts the setup of the sound source positions and the microphone arrays for Experiment B. The experiment field was a square area measuring 30 m × 30 m. M1 and M2 were placed at (0, 30) in the upper left corner and (30, 0) in the lower right corner, respectively. The frontal orientations of M1 and M2 were horizontal and vertical, respectively. For this arrangement, positions on the diagonal between M1 and M2 could not be determined because the bearing lines from M1 and M2 coincide there, making their intersection indefinite. Therefore, the estimation targets comprised 12 positions at 10 m intervals along the vertical and horizontal axes, excluding coordinates on the diagonal.

Figure 11.

Setup of sound source positions and microphone arrays for Experiment B.

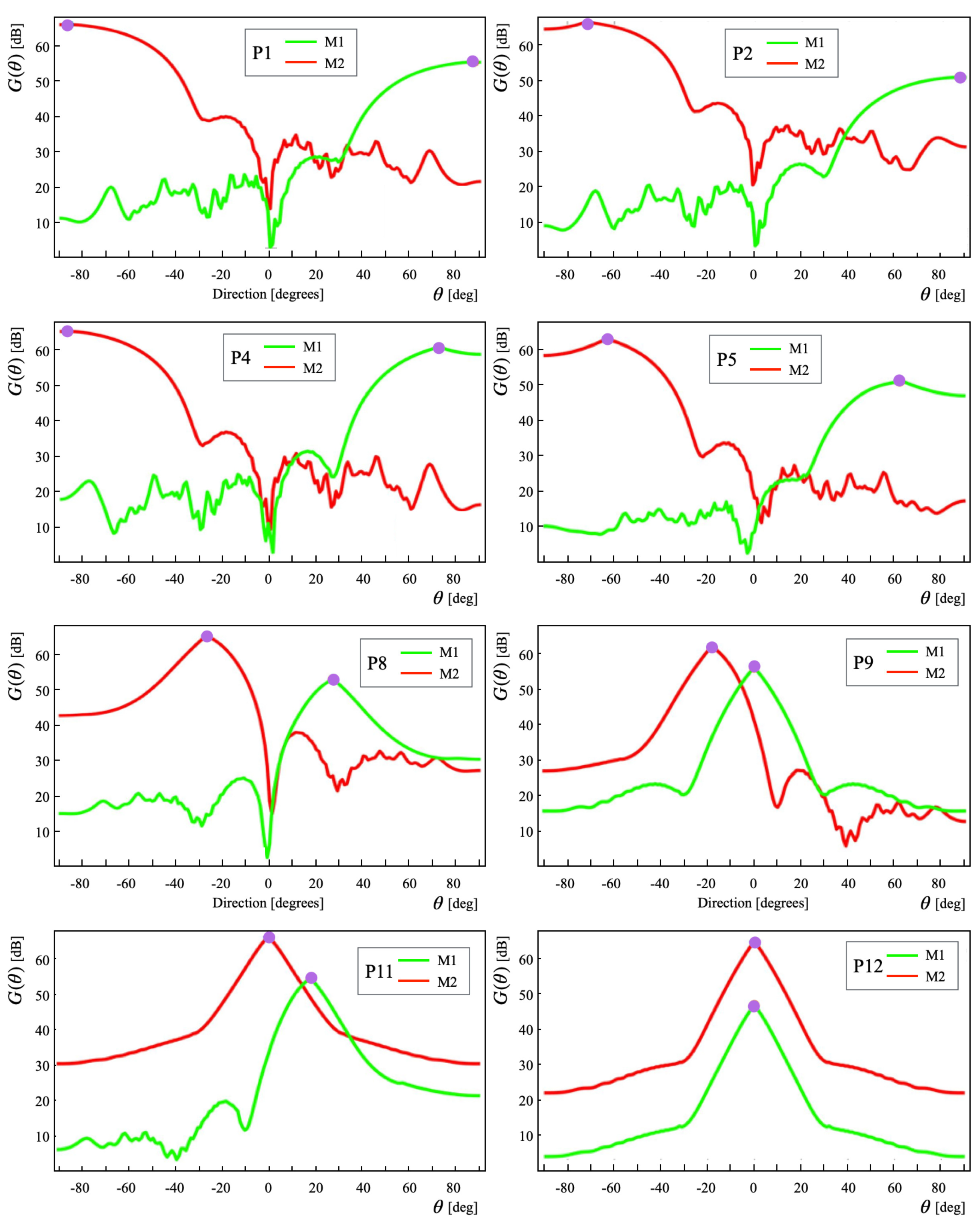

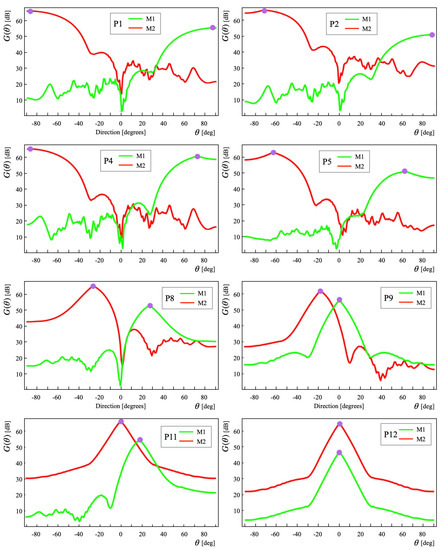

Figure 12 depicts the angle estimation results for 8 of 12 positions. The respective output waves exhibited a distinct peak, especially for shallow angles.

Figure 12.

Angle estimation results obtained at eight positions (P1, P2, P4, P5, P8, P9, P11, and P12).

Table 7 presents the GT angles, estimated angles, and angle errors for M1 and M2. The mean errors of M1 and M2 were 1.25° and 0.92°, respectively. The largest M1 error was 7° at P7, which raised the mean M1 error.

Table 7.

Angle estimation results and error values found from Experiment B (°).

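Table 8 presents the position estimation results. The estimated coordinates $(x_e, y_e)$ were calculated from the estimated angles of M1 and M2 based on the proposed method (21). The errors $E_x$ and $E_y$ were calculated as the absolute differences between the estimated coordinates and the GT coordinates $(x_g, y_g)$:

$$E_x = |x_e - x_g|, \qquad E_y = |y_e - y_g|.$$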

Table 8.

Position estimation results obtained from Experiment B (m).

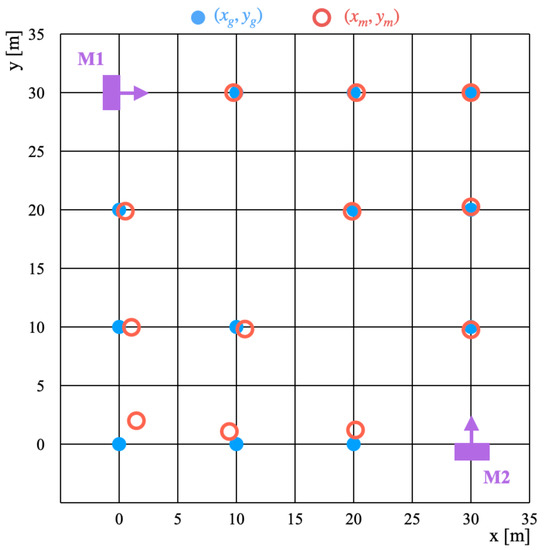

The mean error values along the x and y axes were 0.43 m and 0.42 m, respectively. P11 and P12 had no error, whereas P1 had the largest error. Figure 13 presents the distributions of the GT coordinates and the estimated coordinates. The six positions in the upper right had small errors, which we attribute to the measurement angles of M1 and M2 being within 45°. The six positions in the lower left had large errors, which we attribute to the measurement angles of M1 and M2 exceeding 45°.

Figure 13.

Positional distributions for the GT positions and estimated positions for Experiment B.

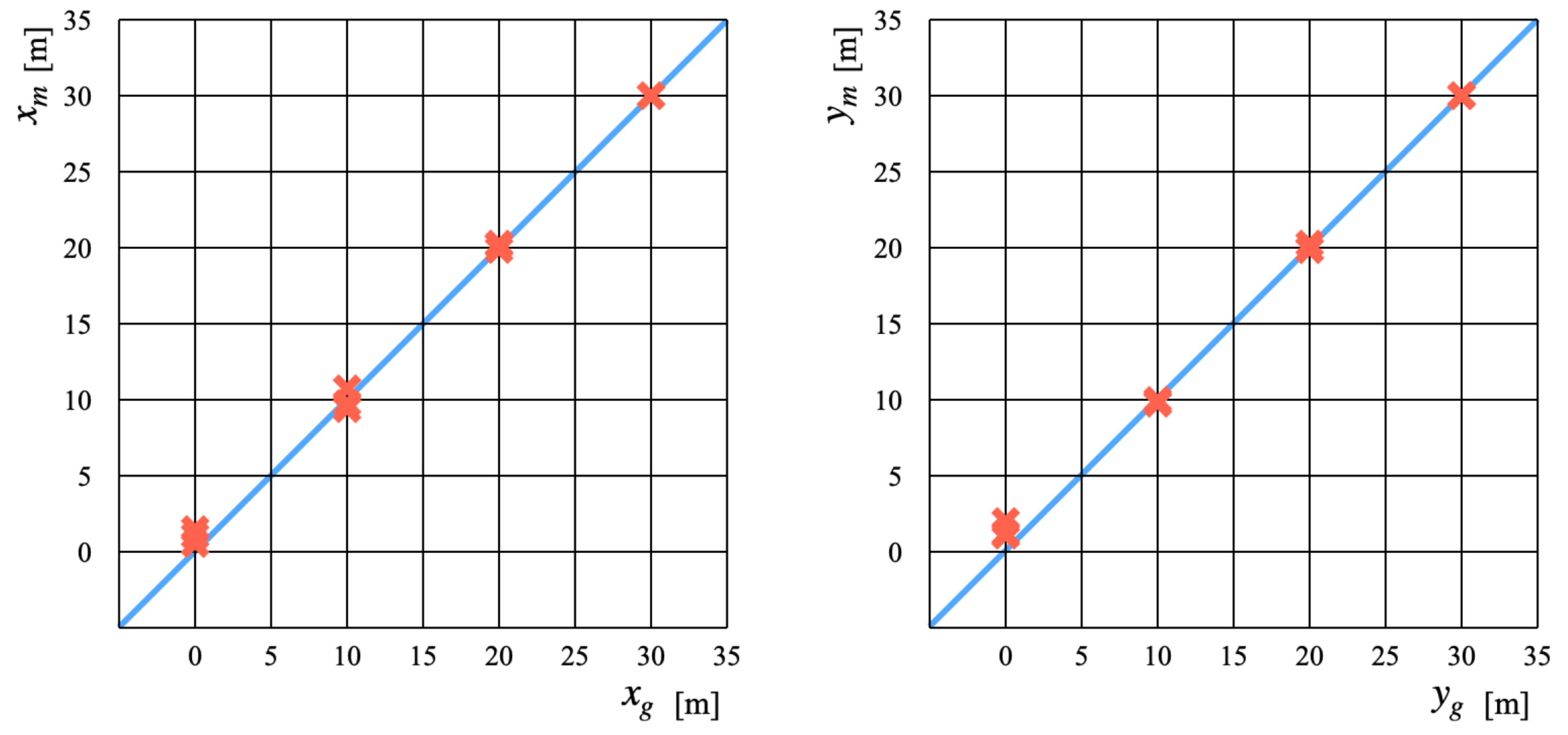

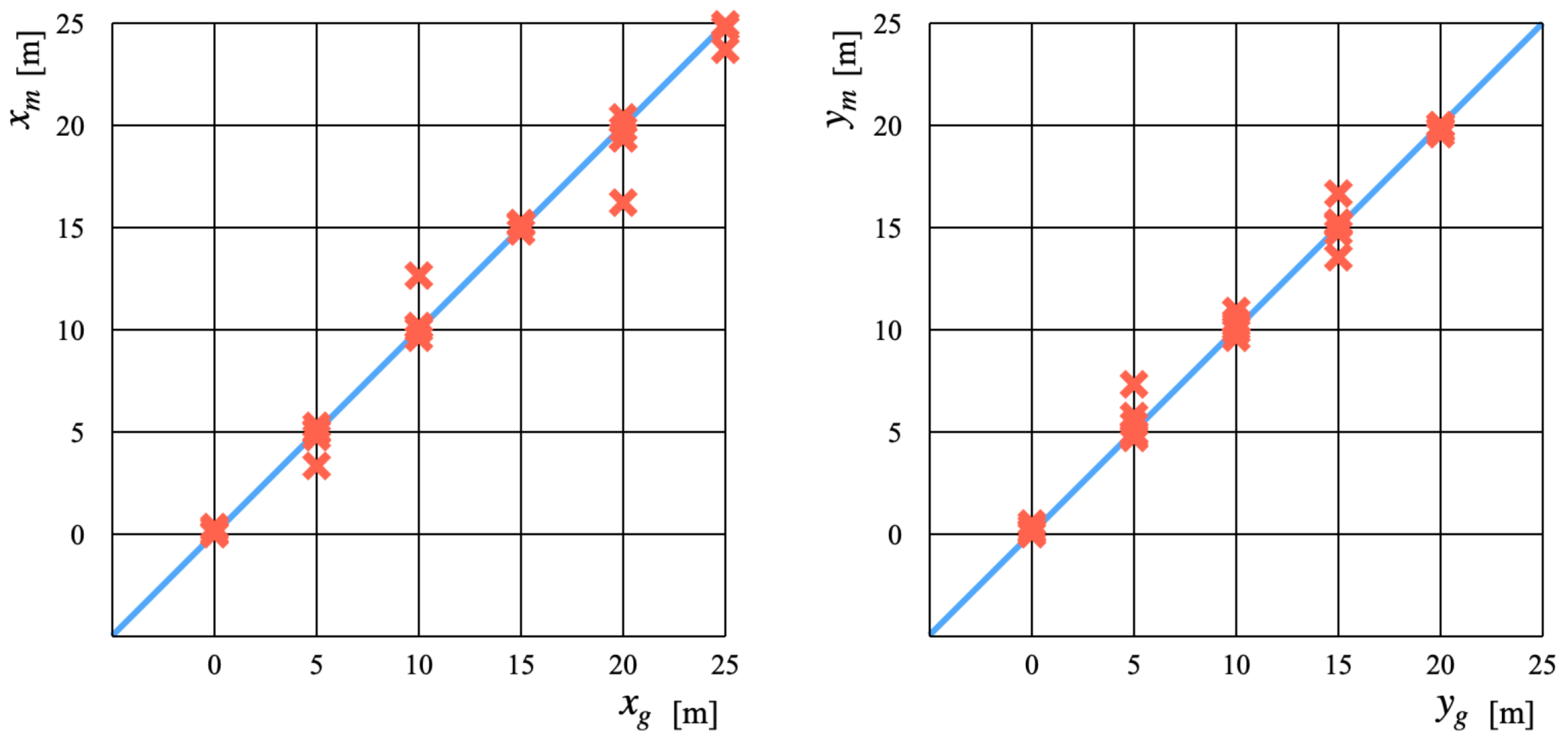

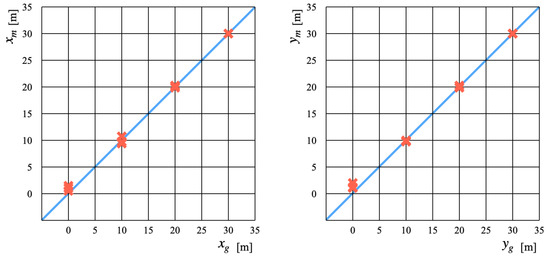

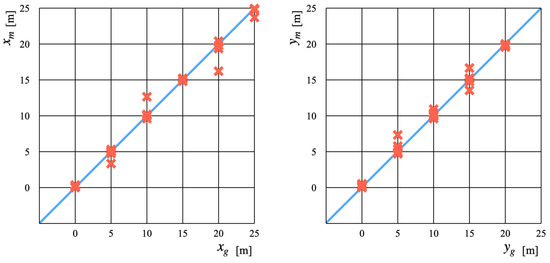

Figure 14 presents scatter plots of the GT coordinates and estimated coordinates. The results for x and y are presented in the left and right panels, respectively. Whereas Experiment A covered the full range from −90° to 90°, each microphone array in Experiment B covered a 90° range, which is half of the effective measurement range. However, the angle estimation error increased as the angle approached 90°. The error distribution shows that the estimated coordinates tended to be larger than the GT coordinates.

Figure 14.

Scatter plots showing the GT coordinates and estimated coordinates for Experiment B.

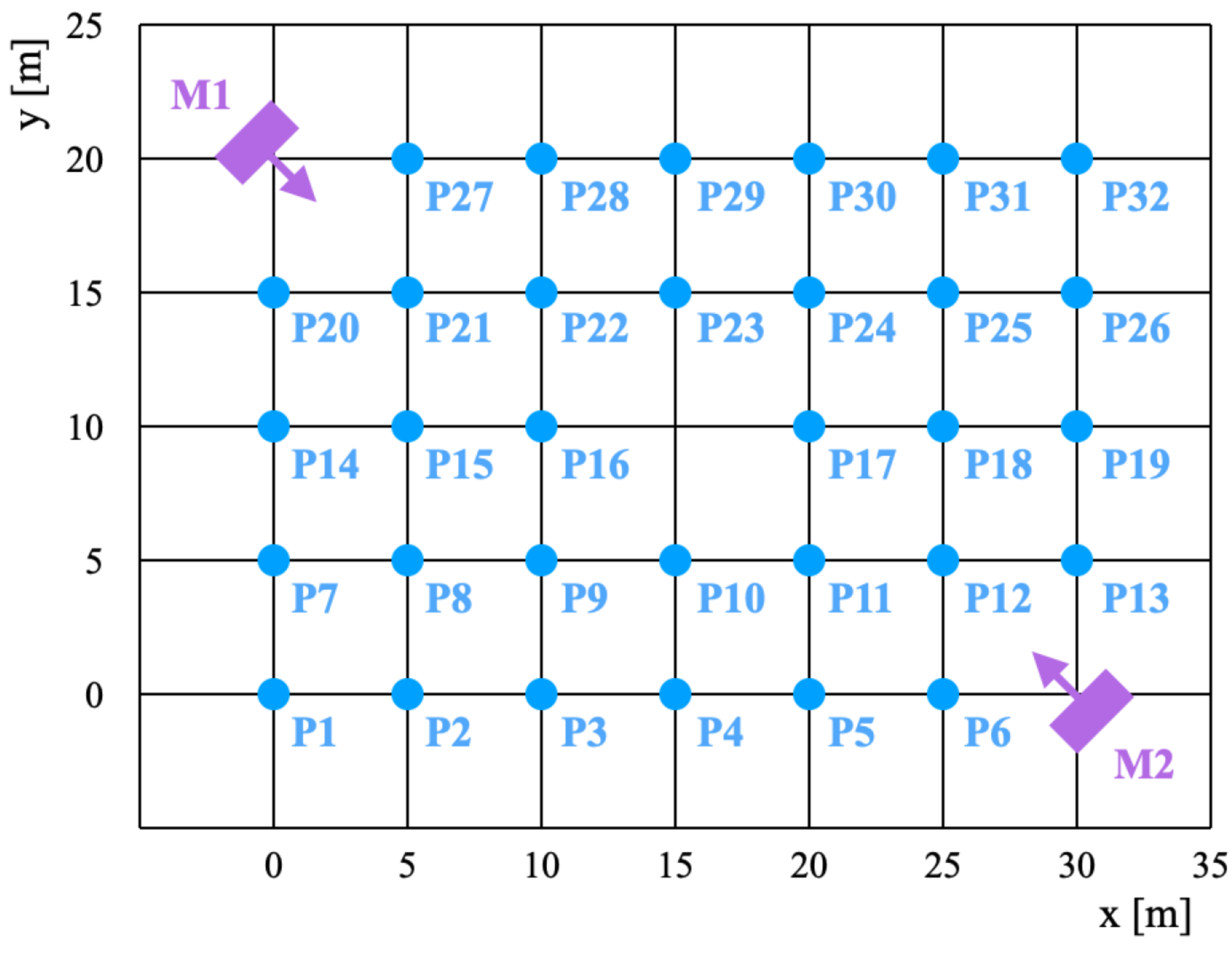

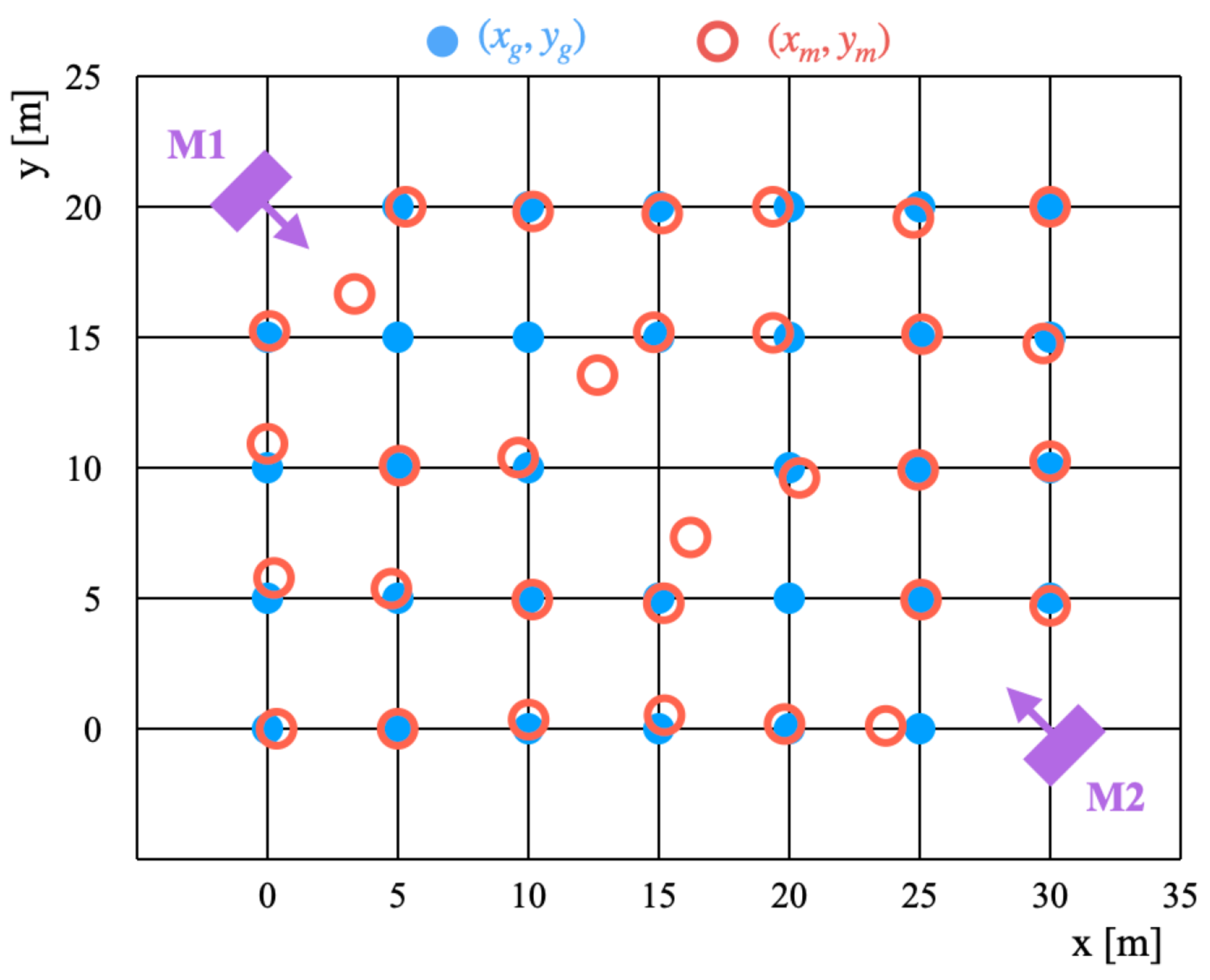

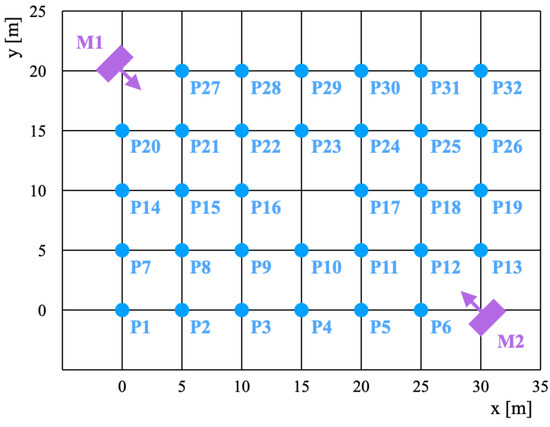

5.4. Experiment C

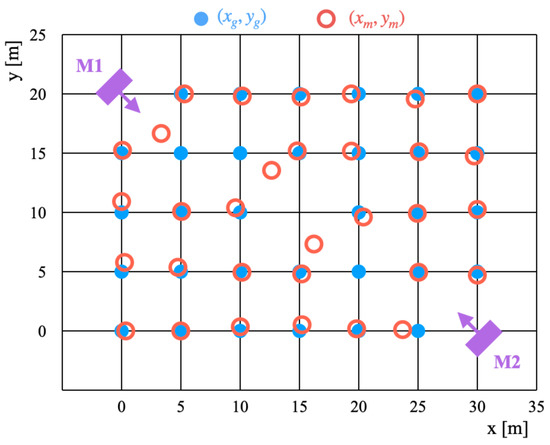

Figure 15 shows the experimental setup for Experiment C. Position estimation was conducted in a rectangular area 30 m wide and 20 m long. The two microphone arrays, M1 and M2, were installed at (0, 20) and (30, 0), respectively. M1 and M2 were oriented with their front sides facing diagonally to make effective use of the small-error range around 0°. The sound source positions were spaced at 5 m intervals horizontally and vertically. For Experiment C, the only position on the diagonal that could not be calculated was (15, 10). This field is rectangular, unlike the square field of Experiment B. The estimation targets for Experiment C comprised 32 positions: P1–P32.

Figure 15.

Setup of sound source positions and microphone arrays for Experiment C.

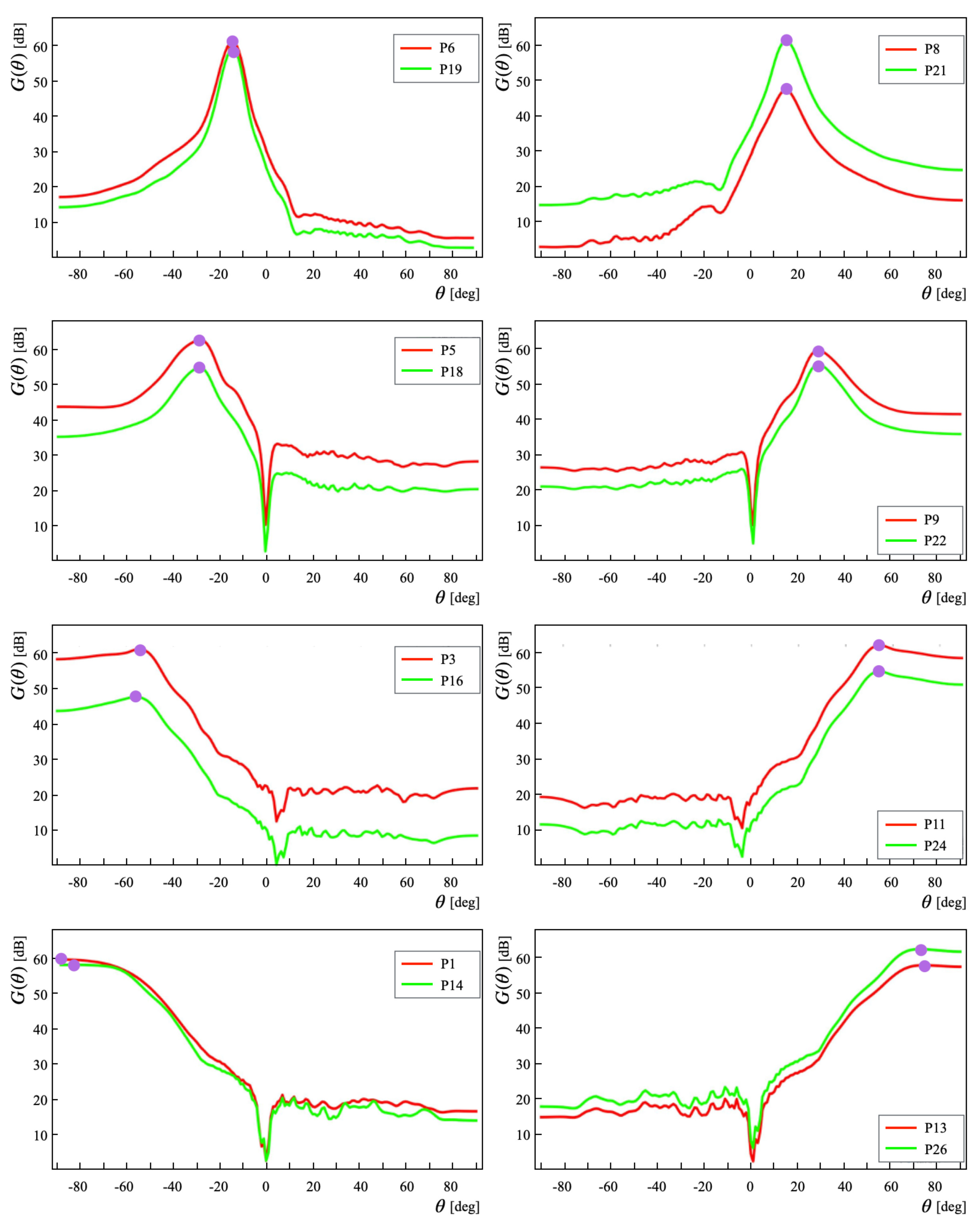

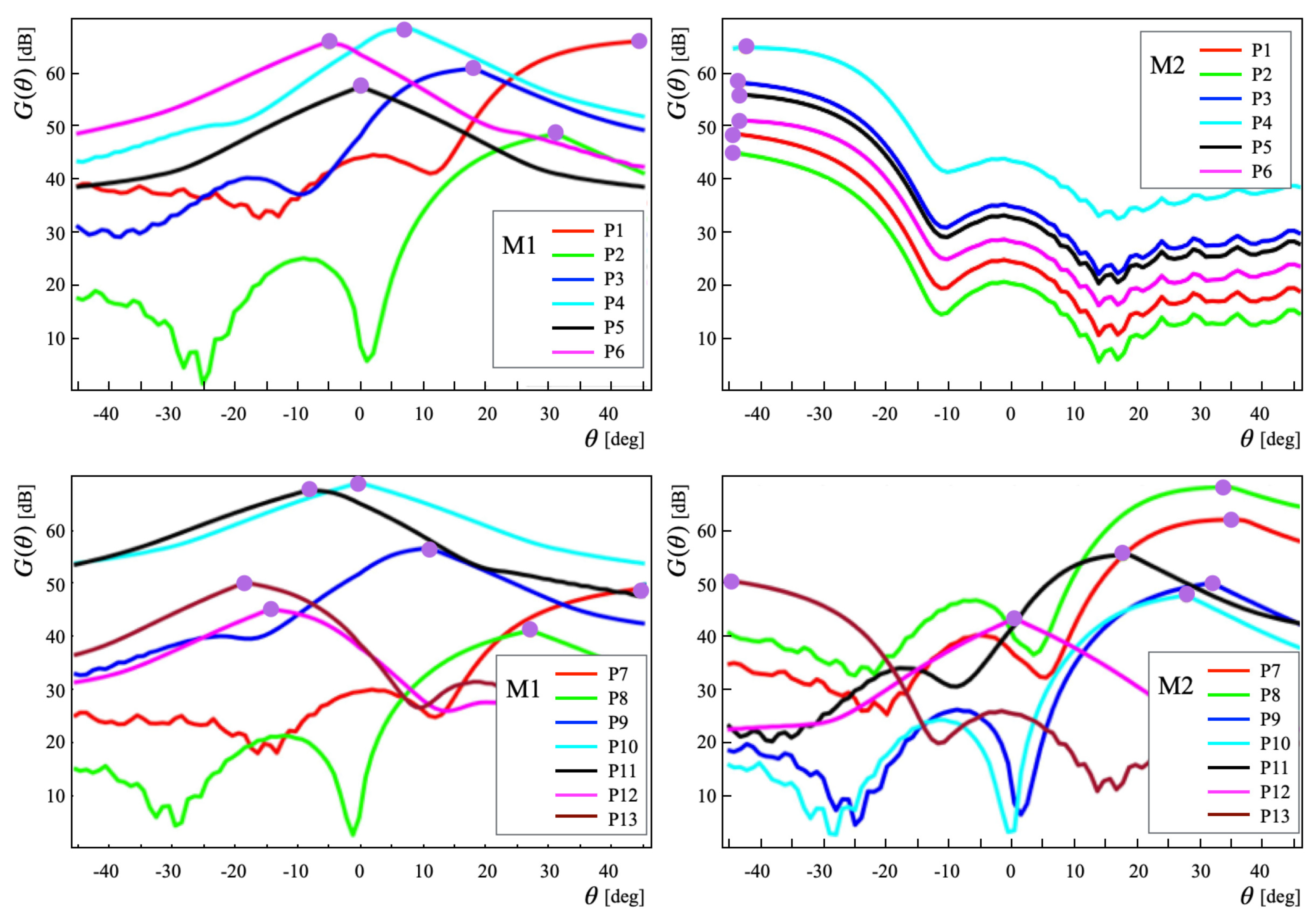

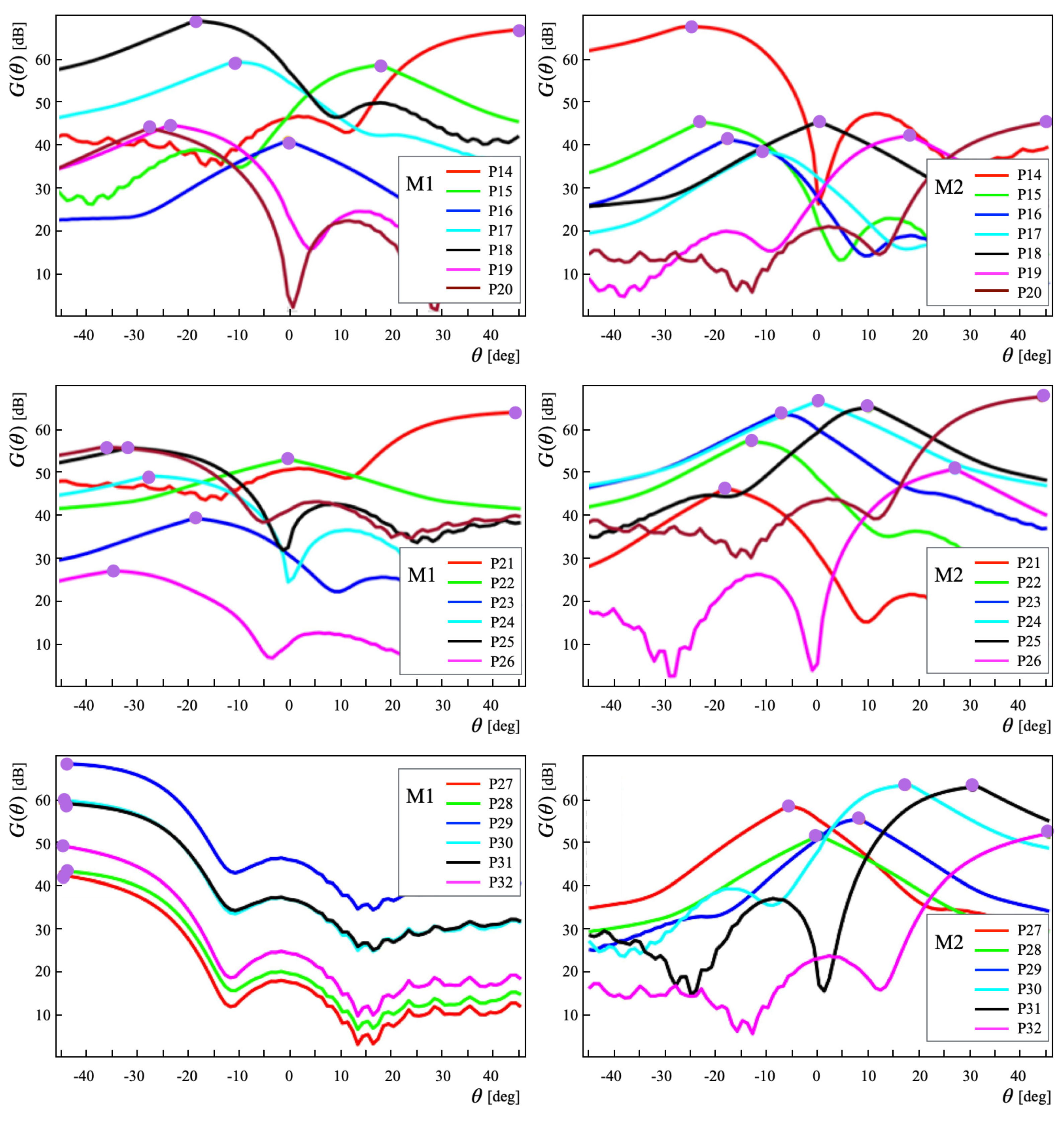

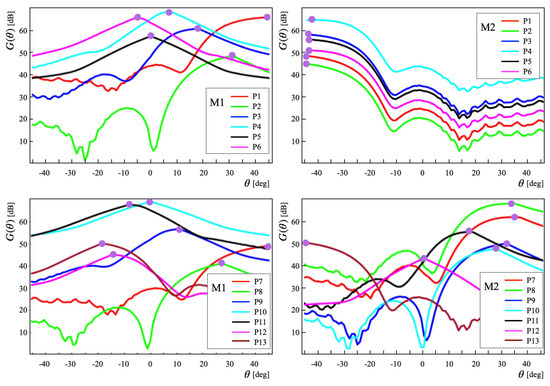

Figure 16 depicts the angle estimation results for each group of positions sharing the same vertical coordinate in Figure 15. In each result, a distinct peak of the output wave, shown as a filled purple circle, was obtained at the source direction angle.

Figure 16.

Angle estimation results obtained for P1–P32 ($y$ = 0, 5, 10, 15, and 20 m).

Table 9 presents the estimated angles and error values. The mean errors of M1 and M2 were 0.28° and 0.53°, respectively. All errors were smaller than 2°, which we attribute to the use of the effective angular range within ±45°.

Table 9.

Angle estimation results and errors obtained from Experiment C (°).

Table 10 presents the position estimation results. The mean error values along the x and y axes were 0.46 m and 0.38 m, respectively. The error at P11 was the largest, and the errors at P6, P21, and P22 were also large. These positions lie at shallow angles. With this setup of the microphone frontal directions, slight angular errors induced larger coordinate errors.
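The amplification of small angular errors can be illustrated numerically. The sketch below assumes the Experiment C array positions, M1 at (0, 20) and M2 at (30, 0), works with world-frame bearing angles of our own convention, perturbs only the M1 bearing by 1°, and measures how far the intersection moves. The perturbation size, the test points, and the function names are hypothetical; the point is qualitative: when the two bearing lines cross at a small angle near the M1–M2 diagonal, their intersection slides a long way along the M2 line.

```python
import math


def intersect(p1, ang1_deg, p2, ang2_deg):
    """Intersection of two rays given world-frame bearing angles in degrees.

    Assumes the rays are not parallel (the target is off the M1-M2 line)."""
    u1 = (math.cos(math.radians(ang1_deg)), math.sin(math.radians(ang1_deg)))
    u2 = (math.cos(math.radians(ang2_deg)), math.sin(math.radians(ang2_deg)))
    bx, by = p2[0] - p1[0], p2[1] - p1[1]
    det = u2[0] * u1[1] - u1[0] * u2[1]
    r1 = (u2[0] * by - bx * u2[1]) / det  # Cramer's rule for the range from p1
    return p1[0] + r1 * u1[0], p1[1] + r1 * u1[1]


def shift_for_bearing_error(p1, p2, target, err_deg=1.0):
    """Distance (m) the estimate moves when the M1 bearing is off by err_deg."""
    a1 = math.degrees(math.atan2(target[1] - p1[1], target[0] - p1[0]))
    a2 = math.degrees(math.atan2(target[1] - p2[1], target[0] - p2[0]))
    x, y = intersect(p1, a1 + err_deg, p2, a2)
    return math.hypot(x - target[0], y - target[1])


m1, m2 = (0.0, 20.0), (30.0, 0.0)
for point in [(0.0, 0.0), (30.0, 20.0), (20.0, 5.0)]:  # corners vs. near the diagonal
    print(point, round(shift_for_bearing_error(m1, m2, point), 2))
```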

Table 10.

Position estimation results obtained from Experiment C (m).

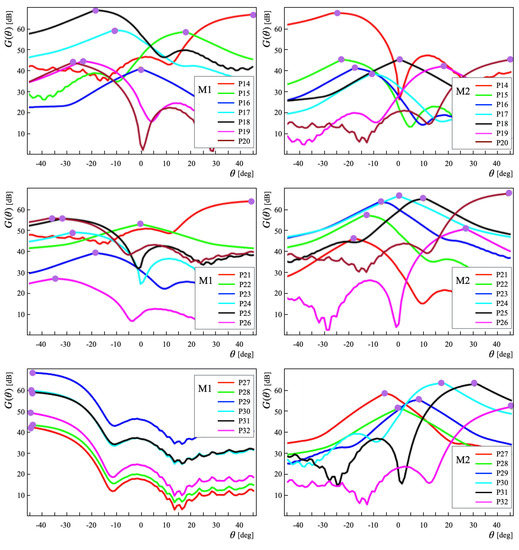

Figure 17 portrays the distributions of the GT positions and estimated positions. The distributions indicate that the errors were large at positions near the diagonal between M1 and M2.

Figure 17.

Positional distributions between the GT positions and estimated positions for Experiment C.

Figure 18 portrays scatter plots of the GT coordinates and estimated coordinates for the respective axes. The variation in the Experiment C results was larger than that in the scatter plots for Experiment B. By contrast, the variation was small in the lower left and upper right regions, which were farther from both microphone arrays.

Figure 18.

Scatter plots showing the GT coordinates and estimated coordinates for Experiment C.

5.5. Discussion

In Japanese rice cultivation, paddy rice seedlings are planted approximately 0.3 m apart. Therefore, mallards move within a grid of approximately 0.3 m cells formed by the seedlings. Table 11 presents the simulated evaluation results of the estimation accuracy for mallard detection within grids up to the third neighborhood along each coordinate for Experiments B and C. The results revealed that the proposed method can detect mallards within the same grid cell with 67.7% accuracy. Moreover, accuracies of 78.9%, 83.3%, and 88.3% were obtained within the first, second, and third neighborhood regions, respectively. We consider that the allowable error can be set more widely depending on the size of the mallard group. Although this study assessed the method for application in a rice paddy field, the required accuracy can be expected to vary greatly among other applications.
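The neighborhood-based accuracy can be sketched as follows. We assume, without confirmation from the text, that positions are quantized into 0.3 m cells along each axis and that an estimate counts as correct within the k-th neighborhood when its cell index differs from the GT cell by at most k on both axes (a Chebyshev distance); k = 0 corresponds to the same grid cell. The function name and the sample coordinates are hypothetical and are not the data behind Table 11.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def grid_accuracy(gt: Sequence[Point], est: Sequence[Point],
                  cell_m: float = 0.3, k: int = 0) -> float:
    """Fraction of estimates whose grid cell lies within the k-th neighbourhood
    (Chebyshev distance <= k in cell indices) of the GT cell."""
    hits = 0
    for (xg, yg), (xe, ye) in zip(gt, est):
        dx = abs(math.floor(xe / cell_m) - math.floor(xg / cell_m))
        dy = abs(math.floor(ye / cell_m) - math.floor(yg / cell_m))
        hits += max(dx, dy) <= k
    return hits / len(gt)


# Hypothetical GT and estimated coordinates in metres.
gt = [(10.0, 10.0), (20.0, 0.0), (0.0, 20.0)]
est = [(10.1, 10.05), (20.5, 0.2), (1.5, 20.0)]
for k in range(4):
    print(k, grid_accuracy(gt, est, k=k))
```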

Table 11.

Estimation accuracies within grids up to the third neighborhood.

6. Conclusions

This study was undertaken to detect mallards based on acoustic information. We developed a prototype system comprising two sets of microphone arrays, with 64 microphones in all installed on sensor mounts that we designed and assembled. For the benchmark evaluation, we obtained three acoustic datasets in an outdoor environment. In the first experiment, the angular resolution was evaluated using a single microphone array; the error tended to accumulate as the angle increased from the front direction of the microphones. In the second experiment, sound sources were estimated at 12 positions on a 10 m grid in a square area; the position errors were affected by the frontal orientations of the two microphone arrays. In the third experiment, sound sources were estimated at 32 positions on a 5 m grid in a rectangular area. To minimize blind positions, the two microphone arrays were installed with their front sides facing diagonally. Although the error increased in the diagonal direction because of the angular resolution at shallow angles, the positional errors were reduced compared with those of the second experiment. These experimental results revealed that the proposed system provides adequate accuracy for application to rice–duck farming.

As future work aimed at practical use, we expect to miniaturize and waterproof our microphone array system for remote farming. To address missing sound sources on the diagonal line between the two microphone arrays, we must consider superposition approaches using probability maps. We would like to distinguish mallard calls from other sounds in paddy fields, such as the calls of larks, herons, and frogs. Moreover, to protect the ducks, we expect to combine acoustic and visual information to detect and recognize the natural enemies of mallards. For this task, we plan to develop a robot that imitates natural enemies such as crows, kites, and weasels. We also expect to improve the resolution of multiple mallard sound sources. Furthermore, we would like to discriminate mallards from crows and other birds and to predict the time-series movement paths of mallards using a DL-based approach combined with state-of-the-art backbones. Finally, we would like to realize efficient protection of mallards by developing a system that notifies farmers of predator intrusion based on escape behavior patterns, because physical protection of mallards, such as electric fences and nylon lines around and over paddy fields, requires significant effort from farmers.

Author Contributions

Conceptualization, H.M.; methodology, K.W. and M.N.; software, S.N.; validation, K.W. and M.N.; formal analysis, S.Y.; investigation, S.Y.; resources, K.W. and M.N.; data curation, S.N.; writing—original draft preparation, H.M.; writing—review and editing, H.M.; visualization, H.W.; supervision, H.M.; project administration, K.S.; funding acquisition, H.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Japan Society for the Promotion of Science (JSPS) KAKENHI Grant Numbers 17K00384 and 21H02321.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets described in this study are available from the corresponding author upon request.

Acknowledgments

We would like to express our appreciation to Masumi Hashimoto and Tsubasa Ebe, graduates of Akita Prefectural University, for their great cooperation in the experiments.

Conflicts of Interest

The authors declare that they have no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations and acronyms are used for this report.

| Abbreviation | Definition |
| --- | --- |
| AD | analog-to-digital |
| AVSS | advanced video and signal-based surveillance |
| BoF | bag-of-features |
| BS | background subtraction |
| CNN | convolutional neural network |
| DAS | delay-and-sum |
| DBDC | Drone-vs-Bird Detection Challenge |
| DCASE | detection and classification of acoustic scenes and events |
| DC-CNN | densely connected convolutional neural network |
| DL | deep learning |
| EM | expectation–maximization |
| GAN | generative adversarial network |
| GMM | Gaussian mixture model |
| HOG | histogram of oriented gradients |
| JST | Japan Standard Time |
| LBP | local binary pattern |
| MF | morphological filtering |
| MIML | multi-instance, multi-label |
| ML | machine learning |
| PCA | principal component analysis |
| RCNN | regions with convolutional neural network features |
| RF | random forest |
| SIFT | scale-invariant feature transform |
| SVM | support vector machine |
| UGV | unmanned ground vehicle |
| UTC | Coordinated Universal Time |
| WS-DAN | weakly supervised data augmentation network |
| YOLO | you only look once |

References

- Hossain, S.; Sugimoto, H.; Ahmed, G.; Islam, M. Effect of Integrated Rice-Duck Farming on Rice Yield, Farm Productivity, and Rice-Provisioning Ability of Farmers. Asian J. Agric. Dev. 2005, 2, 79–86.

- Madokoro, H.; Yamamoto, S.; Nishimura, Y.; Nix, S.; Woo, H.; Sato, K. Prototype Development of Small Mobile Robots for Mallard Navigation in Paddy Fields: Toward Realizing Remote Farming. Robotics 2021, 10, 63.

- Reiher, C.; Yamaguchi, T. Food, agriculture and risk in contemporary Japan. Contemp. Jpn. 2017, 29, 2–13.

- Lack, D.; Varley, G. Detection of Birds by Radar. Nature 1945, 156, 446.

- Chabot, D.; Francis, C.M. Computer-automated bird detection and counts in high-resolution aerial images: A review. J. Field Ornithol. 2016, 87, 343–359.

- Goel, S.; Bhusal, S.; Taylor, M.E.; Karkee, M. Detection and Localization of Birds for Bird Deterrence Using UAS. In Proceedings of the 2017 ASABE Annual International Meeting, Spokane, WA, USA, 16–19 July 2017.

- Siahaan, Y.; Wardijono, B.A.; Mukhlis, Y. Design of Birds Detector and Repellent Using Frequency Based Arduino Uno with Android System. In Proceedings of the 2017 2nd International Conference on Information Technology, Information Systems and Electrical Engineering (ICITISEE), Yogyakarta, Indonesia, 1–3 November 2017; pp. 239–243.

- Aishwarya, K.; Kathryn, J.C.; Lakshmi, R.B. A Survey on Bird Activity Monitoring and Collision Avoidance Techniques in Windmill Turbines. In Proceedings of the 2016 IEEE Technological Innovations in ICT for Agriculture and Rural Development, Chennai, India, 15–16 July 2016; pp. 188–193.

- Bas, Y.; Bas, D.; Julien, J.F. Tadarida: A Toolbox for Animal Detection on Acoustic Recordings. J. Open Res. Softw. 2017, 5, 6.

- Dong, X.; Jia, J. Advances in Automatic Bird Species Recognition from Environmental Audio. J. Phys. Conf. Ser. 2020, 1544, 012110.

- Kahl, S.; Clapp, M.; Hopping, W.; Goëau, H.; Glotin, H.; Planqué, R.; Vellinga, W.P.; Joly, A. Overview of BirdCLEF 2020: Bird Sound Recognition in Complex Acoustic Environments. In Proceedings of the 11th International Conference of the Cross-Language Evaluation Forum for European Languages, Thessaloniki, Greece, 20–25 September 2020.

- Qing, C.; Dickinson, P.; Lawson, S.; Freeman, R. Automatic nesting seabird detection based on boosted HOG-LBP descriptors. In Proceedings of the 18th IEEE International Conference on Image Processing, Brussels, Belgium, 11–14 September 2011; pp. 3577–3580.

- Descamps, S.; Béchet, A.; Descombes, X.; Arnaud, A.; Zerubia, J. An Automatic Counter for Aerial Images of Aggregations of Large Birds. Bird Study 2011, 58, 302–308.

- Farrell, R.; Oza, O.; Zhang, N.; Morariu, V.I.; Darrell, T.; Davis, L.S. Birdlets: Subordinate categorization using volumetric primitives and pose-normalized appearance. In Proceedings of the International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 161–168.

- Mihreteab, K.; Iwahashi, M.; Yamamoto, M. Crow birds detection using HOG and CS-LBP. In Proceedings of the International Symposium on Intelligent Signal Processing and Communications Systems, New Taipei City, Taiwan, 4–7 November 2012.

- Liu, J.; Belhumeur, P.N. Bird Part Localization Using Exemplar-Based Models with Enforced Pose and Subcategory Consistency. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 3–6 December 2013; pp. 2520–2527.

- Xu, Q.; Shi, X. A simplified bird skeleton based flying bird detection. In Proceedings of the 11th World Congress on Intelligent Control and Automation, Shenyang, China, 27–30 June 2014; pp. 1075–1078.

- Yoshihashi, R.; Kawakami, R.; Iida, M.; Naemura, T. Evaluation of Bird Detection Using Time-Lapse Images around a Wind Farm. In Proceedings of the European Wind Energy Association Conference, Paris, France, 17–20 November 2015.

- T’Jampens, R.; Hernandez, F.; Vandecasteele, F.; Verstockt, S. Automatic detection, tracking and counting of birds in marine video content. In Proceedings of the Sixth International Conference on Image Processing Theory, Tools and Applications, Oulu, Finland, 12–15 December 2016; pp. 1–6.

- Takeki, A.; Trinh, T.T.; Yoshihashi, R.; Kawakami, R.; Iida, M.; Naemura, T. Combining Deep Features for Object Detection at Various Scales: Finding Small Birds in Landscape Images. IPSJ Trans. Comput. Vis. Appl. 2016, 8, 5.

- Takeki, A.; Trinh, T.T.; Yoshihashi, R.; Kawakami, R.; Iida, M.; Naemura, T. Detection of Small Birds in Large Images by Combining a Deep Detector with Semantic Segmentation. In Proceedings of the 2016 IEEE International Conference on Image Processing, Phoenix, AZ, USA, 25–28 September 2016; pp. 3977–3981.

- Yoshihashi, R.; Kawakami, R.; Iida, M.; Naemura, T. Bird Detection and Species Classification with Time-Lapse Images around a Wind Farm: Dataset Construction and Evaluation. Wind Energy 2017, 20, 1983–1995.

- Tian, S.; Cao, X.; Zhang, B.; Ding, Y. Learning the State Space Based on Flying Pattern for Bird Detection. In Proceedings of the 2017 Integrated Communications, Navigation and Surveillance Conference, Herndon, VA, USA, 18–20 April 2017; pp. 5B3-1–5B3-9.

- Wu, T.; Luo, X.; Xu, Q. A new skeleton based flying bird detection method for low-altitude air traffic management. Chin. J. Aeronaut. 2018, 31, 2149–2164.

- Lee, S.; Lee, M.; Jeon, H.; Smith, A. Bird Detection in Agriculture Environment using Image Processing and Neural Network. In Proceedings of the 6th International Conference on Control, Decision and Information Technologies, Paris, France, 23–26 April 2019; pp. 1658–1663.

- Vishnuvardhan, R.; Deenadayalan, G.; Vijaya Gopala Rao, M.V.; Jadhav, S.P.; Balachandran, A. Automatic Detection of Flying Bird Species Using Computer Vision Techniques. In Proceedings of the International Conference on Physics and Photonics Processes in Nano Sciences, Eluru, India, 20–22 June 2019.

- Hong, S.J.; Han, Y.; Kim, S.Y.; Lee, A.Y.; Kim, G. Application of Deep-Learning Methods to Bird Detection Using Unmanned Aerial Vehicle Imagery. Sensors 2019, 19, 1651.

- Boudaoud, L.B.; Maussang, F.; Garello, R.; Chevallier, A. Marine Bird Detection Based on Deep Learning using High-Resolution Aerial Images. In Proceedings of the OCEANS 2019—Marseille, Marseille, France, 17–20 June 2019; pp. 1–7.

- Jo, J.; Park, J.; Han, J.; Lee, M.; Smith, A.H. Dynamic Bird Detection Using Image Processing and Neural Network. In Proceedings of the 7th International Conference on Robot Intelligence Technology and Applications, Daejeon, Korea, 1–3 November 2019; pp. 210–214.

- Fan, J.; Liu, X.; Wang, X.; Wang, D.; Han, M. Multi-Background Island Bird Detection Based on Faster R-CNN. Cybern. Syst. 2020, 52, 26–35.

- Akcay, H.G.; Kabasakal, B.; Aksu, D.; Demir, N.; Öz, M.; Erdoğan, A. Automated Bird Counting with Deep Learning for Regional Bird Distribution Mapping. Animals 2020, 10, 1207.

- Mao, X.; Chow, J.K.; Tan, P.S.; Liu, K.; Wu, J.; Su, Z.; Cheong, Y.H.; Ooi, G.L.; Pang, C.C.; Wang, Y. Domain Randomization-Enhanced Deep Learning Models for Bird Detection. Sci. Rep. 2021, 11, 639.

- Marcoň, P.; Janoušek, J.; Pokorný, J.; Novotný, J.; Hutová, E.V.; Širůčková, A.; Čáp, M.; Lázničková, J.; Kadlec, R.; Raichl, P.; et al. A System Using Artificial Intelligence to Detect and Scare Bird Flocks in the Protection of Ripening Fruit. Sensors 2021, 21, 4244.

- Jančovič, P.; Köküer, M. Automatic Detection and Recognition of Tonal Bird Sounds in Noisy Environments. EURASIP J. Adv. Signal Process. 2011, 2011, 982936.

- Briggs, F.; Lakshminarayanan, B.; Neal, L.; Fern, X.Z.; Raich, R.; Hadley, S.J.K.; Hadley, A.S.; Betts, M.G. Acoustic Classification of Multiple Simultaneous Bird Species: A Multi-Instance Multi-Label Approach. J. Acoust. Soc. Am. 2012, 131, 4640–4650.

- Stowell, D.; Plumbley, M.D. Automatic large-scale classification of bird sounds is strongly improved by unsupervised feature learning. PeerJ Life Environ. 2014, 2, E488.

- Papadopoulos, T.; Roberts, S.; Willis, K. Detecting bird sound in unknown acoustic background using crowdsourced training data. arXiv 2015, arXiv:1505.06443v1.

- de Oliveira, A.G.; Ventura, T.M.; Ganchev, T.D.; de Figueiredo, J.M.; Jahn, O.; Marques, M.I.; Schuchmann, K.-L. Bird acoustic activity detection based on morphological filtering of the spectrogram. Appl. Acoust. 2015, 98, 34–42.

- Adavanne, S.; Drossos, K.; Cakir, E.; Virtanen, T. Stacked Convolutional and Recurrent Neural Networks for Bird Audio Detection. In Proceedings of the 25th European Signal Processing Conference, Kos, Greece, 28 August–2 September 2017; pp. 1729–1733.

- Pellegrini, T. Densely connected CNNs for bird audio detection. In Proceedings of the 25th European Signal Processing Conference, Kos, Greece, 28 August–2 September 2017; pp. 1734–1738.

- Cakir, E.; Adavanne, S.; Parascandolo, G.; Drossos, K.; Virtanen, T. Convolutional Recurrent Neural Networks for Bird Audio Detection. In Proceedings of the 25th European Signal Processing Conference, Kos, Greece, 28 August–2 September 2017; pp. 1744–1748.

- Kong, Q.; Xu, Y.; Plumbley, M.D. Joint detection and classification convolutional neural network on weakly labelled bird audio detection. In Proceedings of the 25th European Signal Processing Conference, Kos, Greece, 28 August–2 September 2017; pp. 1749–1753.

- Grill, T.; Schlüter, J. Two Convolutional Neural Networks for Bird Detection in Audio Signals. In Proceedings of the 25th European Signal Processing Conference, Kos, Greece, 28 August–2 September 2017; pp. 1764–1768.

- Lasseck, M. Acoustic Bird Detection with Deep Convolutional Neural Networks. In Proceedings of the IEEE AASP Challenges on Detection and Classification of Acoustic Scenes and Events, Online, 30 March–31 July 2018.

- Liang, W.K.; Zabidi, M.M.A. Bird Acoustic Event Detection with Binarized Neural Networks. Preprint 2020.

- Solomes, A.M.; Stowell, D. Efficient Bird Sound Detection on the Bela Embedded System. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Barcelona, Spain, 4–8 May 2020; pp. 746–750.

- Hong, T.Y.; Zabidi, M.M.A. Bird Sound Detection with Convolutional Neural Networks using Raw Waveforms and Spectrograms. In Proceedings of the International Symposium on Applied Science and Engineering, Erzurum, Turkey, 7–9 April 2021.

- Kahl, S.; Wood, C.M.; Eibl, M.; Klinck, H. BirdNET: A deep learning solution for avian diversity monitoring. Ecol. Inform. 2021, 61, 101236.

- Zhong, M.; Taylor, R.; Bates, N.; Christey, D.; Basnet, B.; Flippin, J.; Palkovitz, S.; Dodhia, R.; Ferres, J.L. Acoustic detection of regionally rare bird species through deep convolutional neural networks. Ecol. Inform. 2021, 64, 101333.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F.F. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Anil, R. CSE 252: Bird’s Eye View: Detecting and Recognizing Birds Using the BIRDS 200 Dataset. 2011. Available online: https://cseweb.ucsd.edu//classes/sp11/cse252c/projects/2011/ranil_final.pdf (accessed on 21 December 2021).

- Wah, C.; Branson, S.; Welinder, P.; Perona, P.; Belongie, S. The Caltech–UCSD Birds-200-2011 Dataset. Computation & Neural Systems Technical Report, CNS-TR-2011-001. 2011. Available online: http://www.vision.caltech.edu/visipedia/papers/CUB_200_2011.pdf (accessed on 21 December 2021).

- Yoshihashi, R.; Kawakami, R.; Iida, M.; Naemura, T. Construction of a bird image dataset for ecological investigations. In Proceedings of the IEEE International Conference on Image Processing, Quebec City, QC, Canada, 27–30 September 2015; pp. 4248–4252.

- Buxton, R.T.; Jones, I.L. Measuring nocturnal seabird activity and status using acoustic recording devices: Applications for island restoration. J. Field Ornithol. 2012, 83, 47–60.

- Glotin, H.; LeCun, Y.; Artières, T.; Mallat, S.; Tchernichovski, O.; Halkias, X. Neural Information Processing Scaled for Bioacoustics, from Neurons to Big Data; Neural Information Processing Systems Foundation: San Diego, CA, USA, 2013.

- Stowell, D.; Plumbley, M.D. An open dataset for research on audio field recording archives: Freefield1010. arXiv 2013, arXiv:1309.5275v2.

- Goëau, H.; Glotin, H.; Vellinga, W.-P.; Rauber, A. LifeCLEF bird identification task 2014. In Proceedings of the 5th International Conference and Labs of the Evaluation Forum, Sheffield, UK, 15–18 September 2014.

- Salamon, J.; Jacoby, C.; Bello, J.P. A dataset and taxonomy for urban sound research. In Proceedings of the 22nd ACM International Conference on Multimedia, Orlando, FL, USA, 3–7 November 2014; pp. 1041–1044.

- Vellinga, W.P.; Planqué, R. The Xeno-Canto collection and its relation to sound recognition and classification. In Proceedings of the 6th International Conference and Labs of the Evaluation Forum, Toulouse, France, 8–11 September 2015.

- Stowell, D.; Giannoulis, D.; Benetos, E.; Lagrange, M.; Plumbley, M.D. Detection and Classification of Audio Scenes and Events. IEEE Trans. Multimed. 2015, 17, 1733–1746.

- Salamon, J.; Bello, J.P.; Farnsworth, A.; Robbins, M.; Keen, S.; Klinck, H.; Kelling, S. Towards the Automatic Classification of Avian Flight Calls for Bioacoustic Monitoring. PLoS ONE 2016, 11, e0166866.

- Stowell, D.; Wood, M.; Stylianou, Y.; Glotin, H. Bird Detection in Audio: A Survey and a Challenge. In Proceedings of the IEEE 26th International Workshop on Machine Learning for Signal Processing, Salerno, Italy, 13–16 September 2016; pp. 1–6.

- Darras, K.; Pütz, P.; Fahrurrozi; Rembold, K.; Tscharntke, T. Measuring sound detection spaces for acoustic animal sampling and monitoring. Biol. Conserv. 2016, 201, 29–37.

- Hervás, M.; Alsina-Pagés, R.M.; Alias, F.; Salvador, M. An FPGA-Based WASN for Remote Real-Time Monitoring of Endangered Species: A Case Study on the Birdsong Recognition of Botaurus stellaris. Sensors 2017, 17, 1331.

- Stowell, D.; Stylianou, Y.; Wood, M.; Pamula, H.; Glotin, H. Automatic acoustic detection of birds through deep learning: The first Bird Audio Detection challenge. Methods Ecol. Evol. 2018, 10, 368–380.

- MacQueen, J.B. Some Methods for classification and Analysis of Multivariate Observations. In Proceedings of the 5th Berkeley Symposium on Mathematical Statistics and Probability; University of California Press: Berkeley, CA, USA, 1967; pp. 281–297.

- Serra, J.; Vincent, L. An overview of morphological filtering. Circuits Syst. Signal Process. 1992, 11, 47–108.

- Moon, T.K. The expectation-maximization algorithm. IEEE Signal Process. Mag. 1996, 13, 47–60.

- Freund, Y.; Schapire, R.E. A Decision-Theoretic Generalization of On-Line Learning and an Application to Boosting. J. Comput. Syst. Sci. 1997, 55, 119–139.

- Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the International Conference on Computer Vision, Corfu, Greece, 20–25 September 1999.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Csurka, G.; Dance, C.R.; Fan, L.; Willamowski, J.; Bray, C. Visual categorization with bags of keypoints. In Proceedings of the 8th European Conference on Computer Vision, Prague, Czech Republic, 11–14 May 2004; pp. 1–22.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; pp. 886–893.

- Boser, B.; Guyon, I.; Vapnik, V. A training algorithm for optimal margin classifiers. In Proceedings of the Fifth Annual Workshop on Computational Learning Theory, Pittsburgh, PA, USA, 27–29 July 1992; pp. 144–152.

- Heikkila, M.; Schmid, C. Description of interest regions with local binary patterns. Pattern Recognit. 2009, 42, 425–436.

- Cristani, M.; Farenzena, M.; Bloisi, D.; Murino, V. Background Subtraction for Automated Multisensor Surveillance: A Comprehensive Review. EURASIP J. Adv. Signal Process. 2010, 1, 24.

- Zhou, Z.H.; Zhang, M.L.; Huang, S.J.; Li, Y.F. Multi-Instance Multi-Label Learning. Artif. Intell. 2012, 176, 2291–2320.

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 580–587.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556v6.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Rastegari, M.; Ordonez, V.; Redmon, J.; Farhadi, A. XNOR-Net: ImageNet Classification Using Binary Convolutional Neural Networks. In Proceedings of the 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 525–542.

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 4700–4708.

- Wang, X.; Shrivastava, A.; Gupta, A. A-Fast-RCNN: Hard Positive Generation via Adversary for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 2606–2615.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Hu, T.; Qi, H.; Huang, Q.; Lu, Y. See Better before Looking Closer: Weakly Supervised Data Augmentation Network for Fine-Grained Visual Classification. arXiv 2019, arXiv:1901.09891.

- Zinemanas, P.; Cancela, P.; Rocamora, M. End-to-end Convolutional Neural Networks for Sound Event Detection in Urban Environments. In Proceedings of the 24th Conference of Open Innovations Association, Moscow, Russia, 8–12 April 2019; pp. 533–539.

- Purwins, H.; Li, B.; Virtanen, T.; Schlüter, J.; Chang, S.; Sainath, T. Deep Learning for Audio Signal Processing. IEEE J. Sel. Top. Signal Process. 2019, 13, 206–219.

- Seidailyeva, U.; Akhmetov, D.; Ilipbayeva, L.; Matson, E.T. Real-Time and Accurate Drone Detection in a Video with a Static Background. Sensors 2020, 20, 3856.

- Coluccia, A.; Ghenescu, M.; Piatrik, T.; De Cubber, G.; Schumann, A.; Sommer, L.; Klatte, J.; Schuchert, T.; Beyerer, J.; Farhadi, M.; et al. Drone-vs-Bird Detection Challenge at IEEE AVSS2019. In Proceedings of the 16th IEEE International Conference on Advanced Video and Signal Based Surveillance, Taipei, Taiwan, 18–21 September 2019; pp. 1–7.

- Pan, S.J.; Yang, Q. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.

- Pan, Z.; Yu, W.; Yi, X.; Khan, A.; Yuan, F.; Zheng, Y. Recent Progress on Generative Adversarial Networks (GANs): A Survey. IEEE Access 2019, 7, 36322–36333.

- Madokoro, H.; Yamamoto, S.; Watanabe, K.; Nishiguchi, M.; Nix, S.; Woo, H.; Sato, K. Prototype Development of Cross-Shaped Microphone Array System for Drone Localization Based on Delay-and-Sum Beamforming in GNSS-Denied Areas. Drones 2021, 5, 123.

- Hashimoto, M.; Madokoro, H.; Watanabe, K.; Nishiguchi, M.; Yamamoto, S.; Woo, H.; Sato, K. Mallard Detection using Microphone Array and Delay-and-Sum Beamforming. In Proceedings of the 19th International Conference on Control, Automation and Systems, Jeju, Korea, 15–18 October 2019; pp. 1566–1571.

- Van Trees, H.L. Optimum Array Processing; Wiley: New York, NY, USA, 2002.

- Van Veen, B.D.; Buckley, K.M. Beamforming: A versatile approach to spatial filtering. IEEE ASSP Mag. 1988, 5, 4–24.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).