1. Introduction

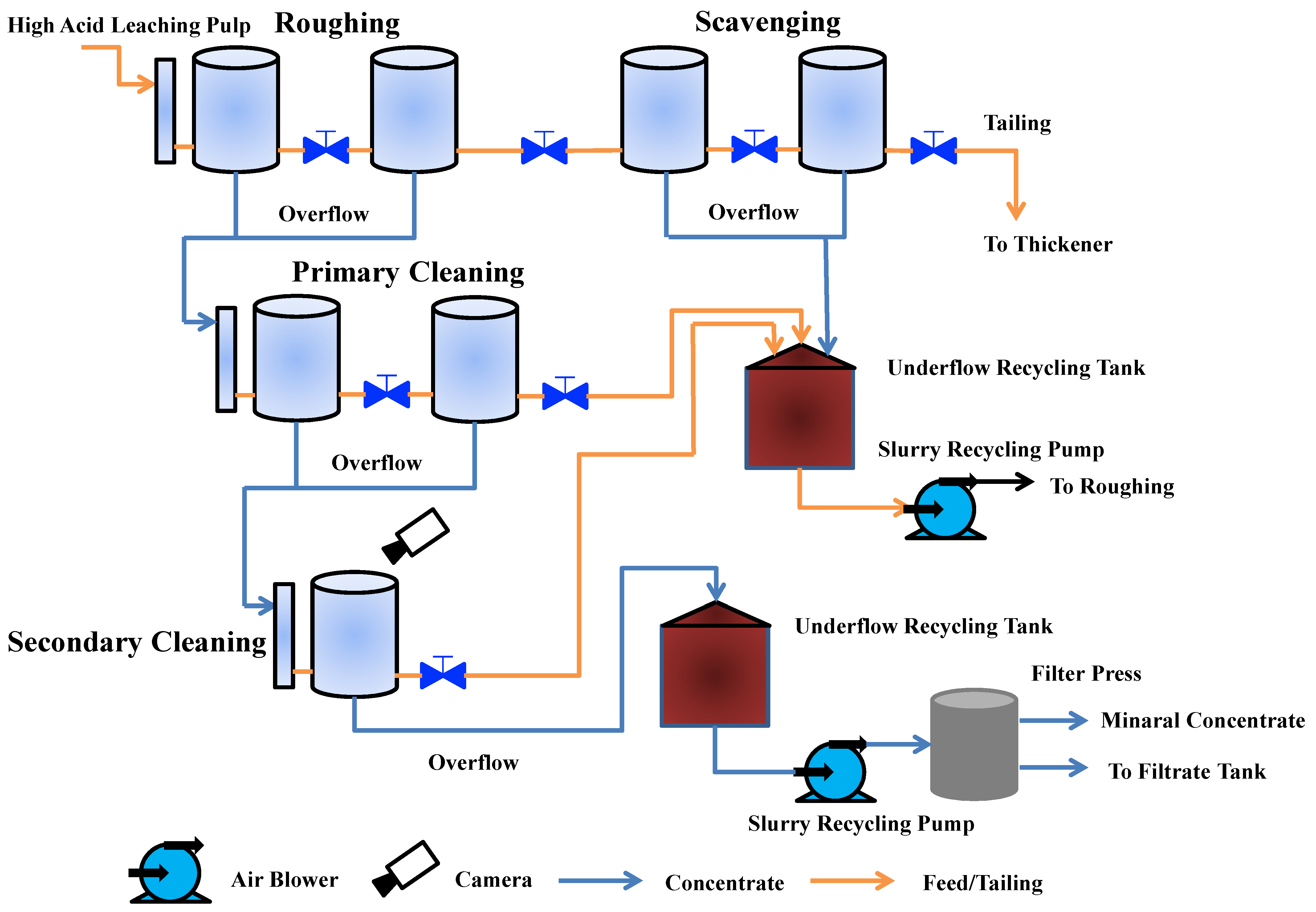

The aim of the mineral flotation process is to separate valuable minerals from useless materials or other minerals so as to obtain upgraded minerals. The concentrate grade is the percentage of the total concentrate mass that is made up of the recovered useful element. As a key performance indicator for evaluating the flotation effect in metallurgical enterprises, the concentrate grade directly reflects the quality of the flotation working conditions: the better the working conditions, the higher the concentrate grade. When the concentrate grade is too low, the flotation process is in an abnormal state, and the operator should adjust the operating variables that govern the working conditions, such as the inlet air flow and pulp level, in order to improve it.
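
The grade definition above can be written compactly. The symbols here are illustrative notation rather than the paper's own: $m_{\mathrm{e}}$ denotes the mass of the useful element recovered in the concentrate and $m_{\mathrm{c}}$ the total concentrate mass.

$$
G = \frac{m_{\mathrm{e}}}{m_{\mathrm{c}}} \times 100\%
$$

As a hypothetical example, a concentrate of $m_{\mathrm{c}} = 12\,\mathrm{t}$ containing $m_{\mathrm{e}} = 3\,\mathrm{t}$ of the useful element has a grade of $G = 3/12 \times 100\% = 25\%$.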

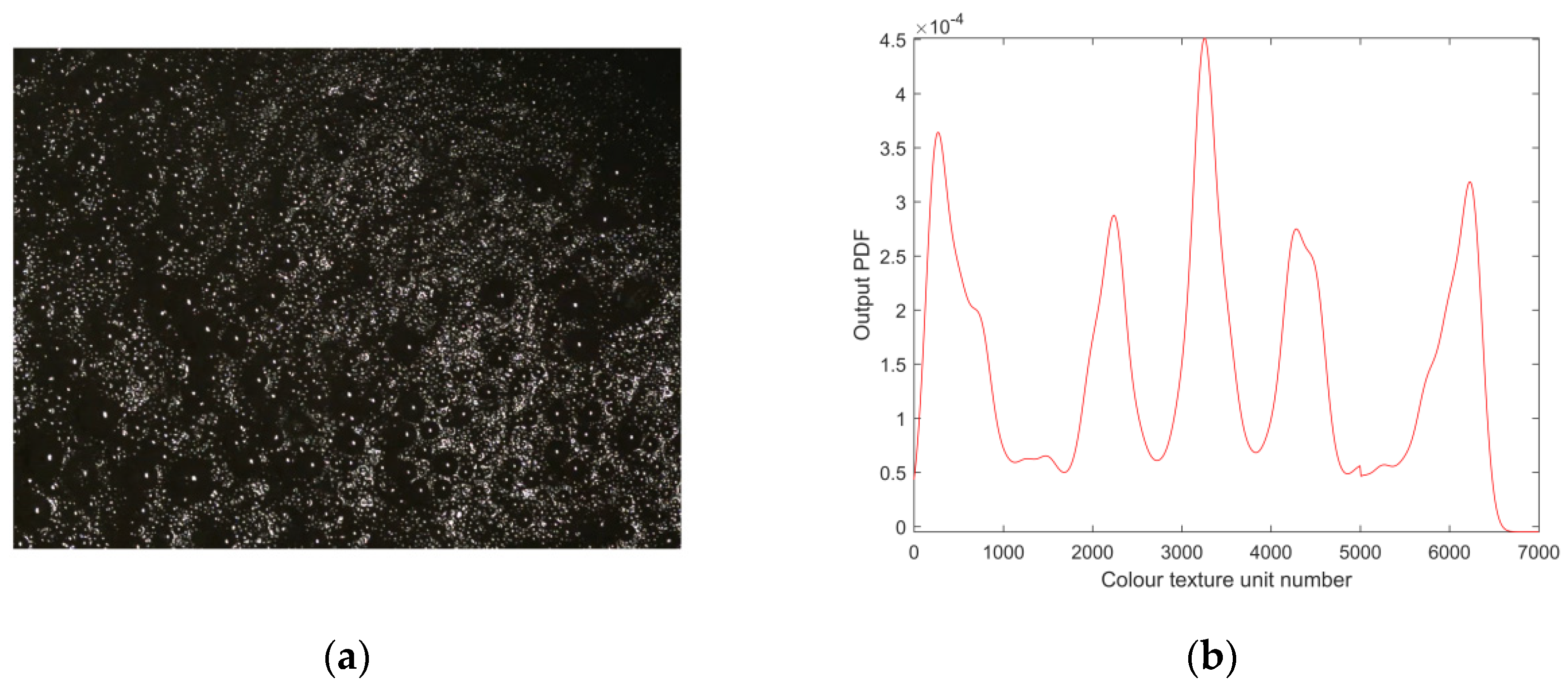

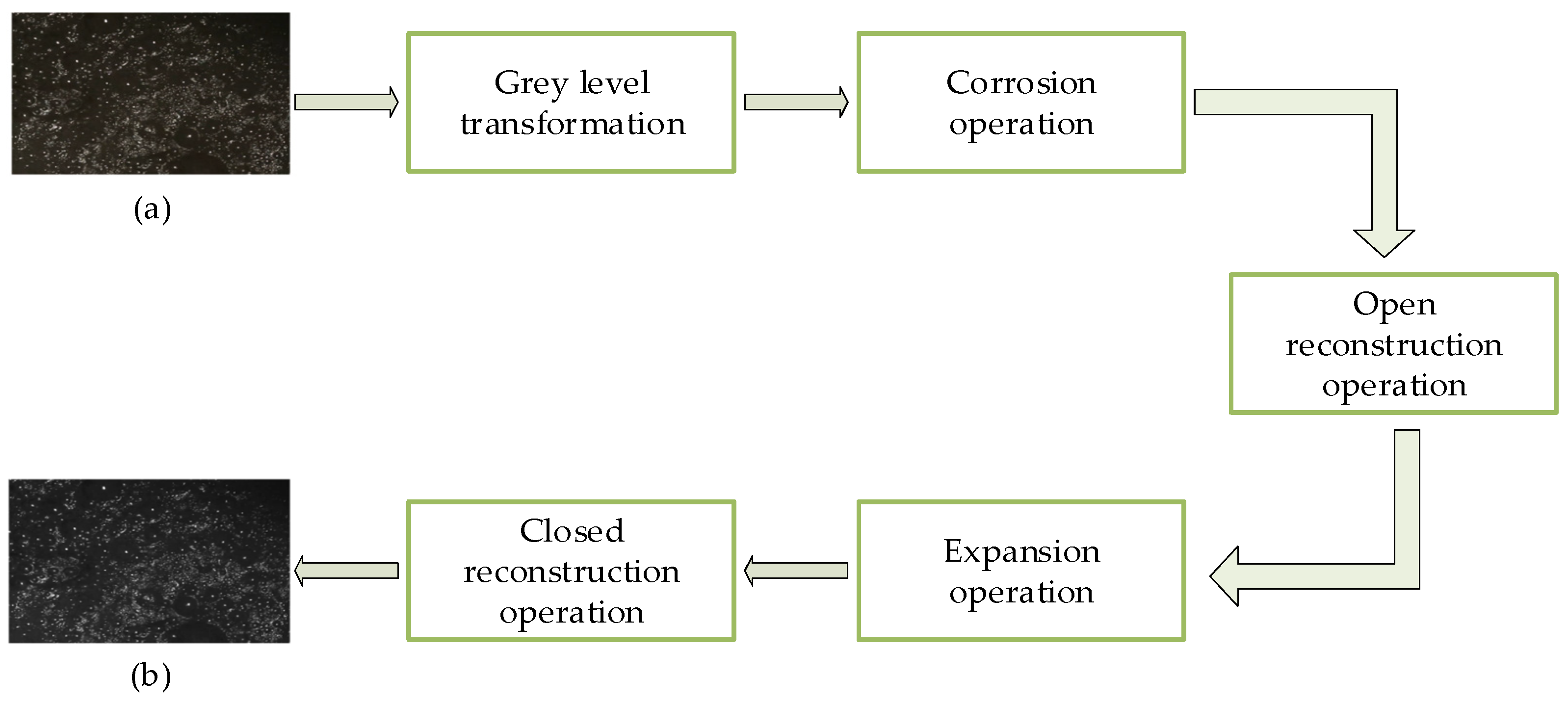

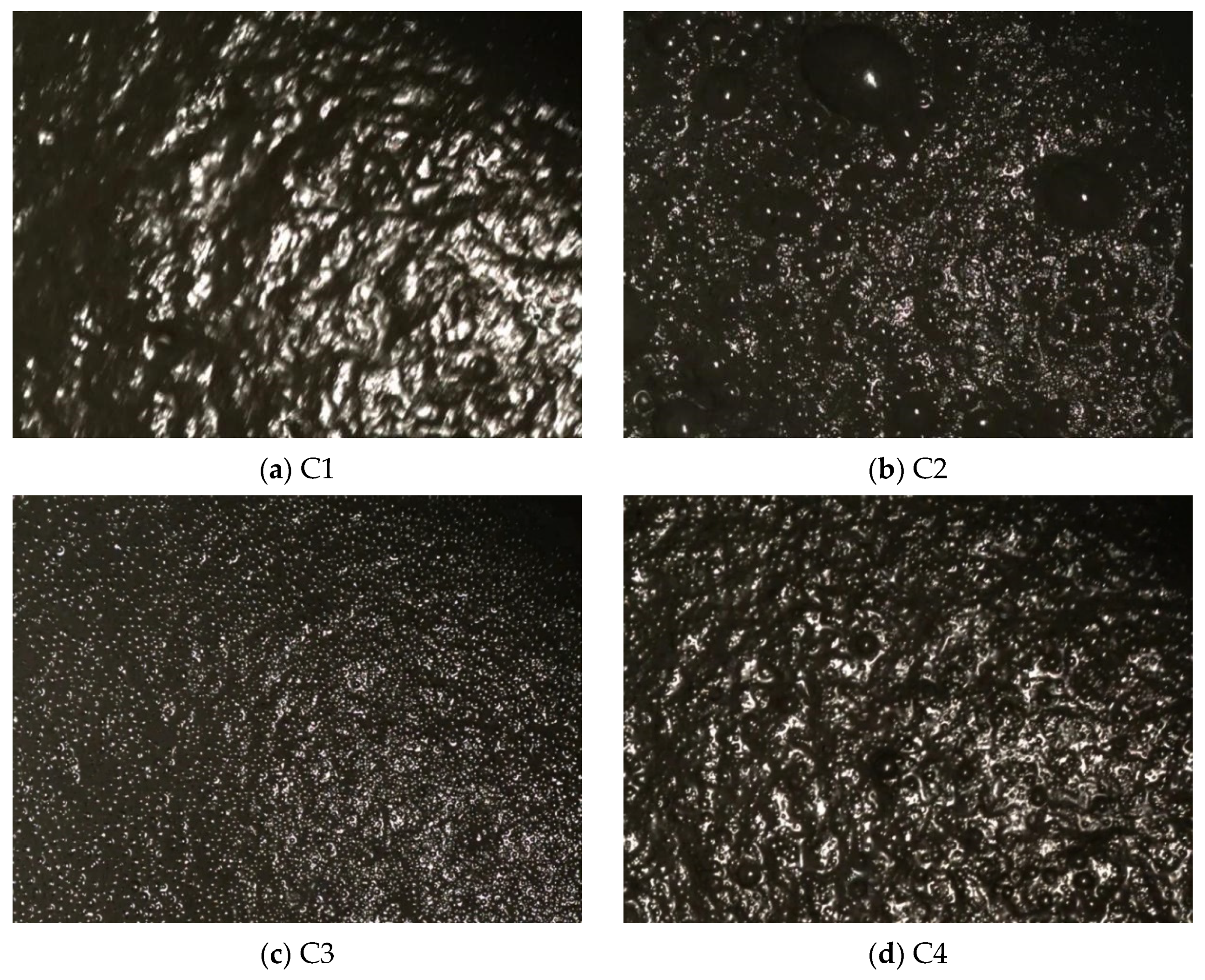

In the process of mineral flotation, operators judge the working conditions mainly from the color, size and other features of the flotation froth and adjust operating parameters such as the air blowing amount to ensure that the concentrate grade reaches the standard. Because manual control is highly subjective and labor-intensive, production efficiency is low and the concentrate grade cannot be guaranteed. Researchers have therefore studied flotation froth images and found that their features are closely related to the operating parameters, working conditions and concentrate grade of the flotation process. Shallow features of froth images, such as color, texture and size, continue to be obtained through image processing technology in order to identify the working conditions of the flotation process [1,2,3,4,5,6] and realize its optimal control. The extracted features, however, contain an excessive amount of redundant and noisy information, which lowers the accuracy of working condition recognition and affects flotation production efficiency. It is therefore of great significance to study a more effective working condition recognition method in order to achieve optimal control of the flotation process and improve the concentrate grade.

The key to realizing the optimal control of the flotation process is the accurate extraction of flotation froth image features. Therefore, researchers are committed to extracting the features of flotation froth images and identifying the working conditions of the flotation process on this basis [7,8,9,10]. Such features include the following.

The color of the froth reflects the types of particles carried by the froth and the quality of the flotation working conditions. Many researchers have described color information mainly through statistics such as the mean and standard deviation of each component of a color space such as RGB, HSV or HSI [11,12,13]. However, the extracted color information is sensitive to lighting conditions.
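
To make the per-channel statistics concrete, the following is a minimal sketch using only the Python standard library. It is not taken from the cited works: the function name `color_statistics` and the pixel values are hypothetical, and averaging hue as a plain number (rather than as an angle) is a simplification made for brevity.

```python
import colorsys
from statistics import mean, pstdev

def color_statistics(pixels):
    """Mean and standard deviation of every RGB and HSV channel.

    `pixels` is a sequence of (r, g, b) tuples with components in [0, 1].
    """
    rgb = list(pixels)
    hsv = [colorsys.rgb_to_hsv(r, g, b) for r, g, b in rgb]
    features = {}
    for names, data in (("RGB", rgb), ("HSV", hsv)):
        for index, name in enumerate(names):
            channel = [pixel[index] for pixel in data]
            features[name + "_mean"] = mean(channel)
            features[name + "_std"] = pstdev(channel)
    return features

# Hypothetical froth pixels, and the same pixels under half the illumination.
bright = [(0.8, 0.6, 0.4), (0.6, 0.5, 0.3), (0.9, 0.8, 0.7)]
dim = [(r / 2, g / 2, b / 2) for r, g, b in bright]

bright_stats = color_statistics(bright)
dim_stats = color_statistics(dim)
# The value channel follows the illumination, saturation does not.
print(bright_stats["V_mean"], dim_stats["V_mean"])
print(bright_stats["S_mean"], dim_stats["S_mean"])
```

In this toy case, halving the illumination halves the mean of the V channel (and of R, G and B) while leaving saturation unchanged, which illustrates why color statistics taken under uncontrolled lighting can be unreliable.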

The texture of the froth, which is primarily represented by the arrangement rules and local patterns of the local gray values of the image, partially reflects the quality of the flotation process. Researchers have extracted the texture features of flotation froth mainly by using the gray-level co-occurrence matrix (GLCM) [14], wavelet transform [15] and texture spectrum [16]. The GLCM describes texture through second-order statistics computed in several directions; the resulting high-dimensional matrices are computationally intensive, whereas statistics from a single direction cannot completely describe the froth texture. The wavelet transform and texture spectrum do not take color information into account, and they describe the texture by conventional statistics (such as uniformity and entropy), which makes it difficult to completely and accurately represent the local differences in froth image texture under various working conditions.
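
As an illustration of the second-order statistics discussed above, here is a small pure-Python sketch of a GLCM and three common statistics derived from it. The helper names (`glcm`, `texture_statistics`, `OFFSETS`) and the toy image are assumptions made for this example and do not come from the cited methods.

```python
import math

def glcm(image, dx, dy, levels):
    """Normalized, symmetric gray-level co-occurrence matrix for one offset."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for y in range(rows):
        for x in range(cols):
            ny, nx = y + dy, x + dx
            if 0 <= ny < rows and 0 <= nx < cols:
                a, b = image[y][x], image[ny][nx]
                counts[a][b] += 1
                counts[b][a] += 1  # count the pair in both orders
    total = sum(map(sum, counts))
    return [[c / total for c in row] for row in counts]

def texture_statistics(p):
    """Contrast, uniformity (angular second moment) and entropy of a GLCM."""
    levels = range(len(p))
    contrast = sum(p[i][j] * (i - j) ** 2 for i in levels for j in levels)
    uniformity = sum(v * v for row in p for v in row)
    entropy = -sum(v * math.log2(v) for row in p for v in row if v > 0)
    return contrast, uniformity, entropy

# Pixel offsets (dx, dy) for the four usual directions, in degrees.
OFFSETS = {0: (1, 0), 45: (1, -1), 90: (0, -1), 135: (-1, -1)}

# Toy 4-level image standing in for a quantized froth image.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [2, 2, 3, 3],
    [2, 2, 3, 3],
]
features = []
for angle, (dx, dy) in OFFSETS.items():
    features.extend(texture_statistics(glcm(image, dx, dy, levels=4)))
print(len(features))  # 4 directions x 3 statistics
```

Each direction needs its own `levels x levels` matrix, so the cost grows with both the number of gray levels and the number of directions, which is the computational burden noted above.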

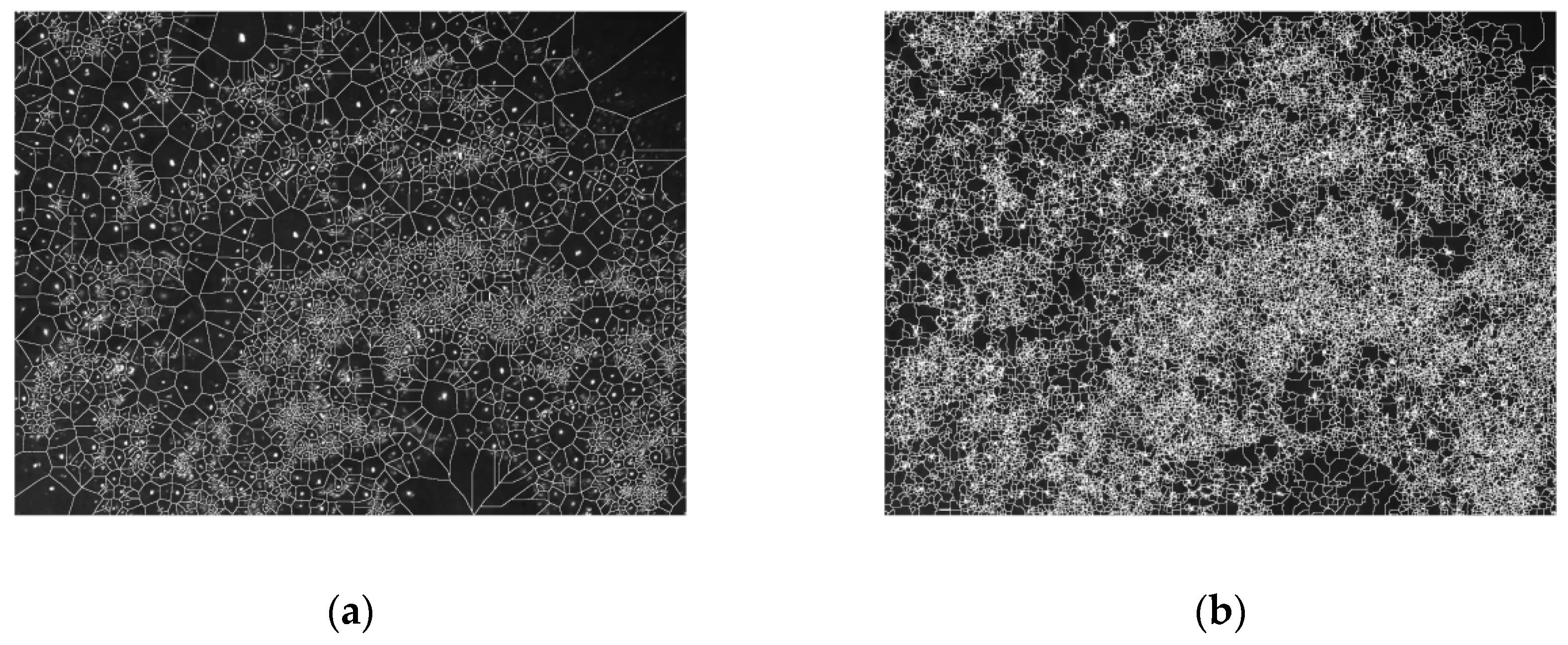

The working conditions of the flotation process can also be reflected by the size features of the froth, which mainly include the average size and deflection of the froth [

17]. Beginning with the accurate segmentation of the froth image, froth size features can be accurately acquired. In the past, researchers have conducted extensive research on froth segmentation methods, including threshold segmentation [

18], watershed segmentation [

19] and valley edge detection [

20], three widely used froth image segmentation methods in the flotation process. According to the features of flotation froth images, researchers concluded that the watershed segmentation algorithm is suitable for segmenting mineral flotation froth images among many segmentation algorithms. However, the traditional watershed segmentation algorithm is prone to over-segmentation or under-segmentation. Therefore, researchers subsequently improved the watershed segmentation algorithm to promote the accuracy of flotation froth image segmentation and solved this problem to a certain extent [

21,

22,

23,

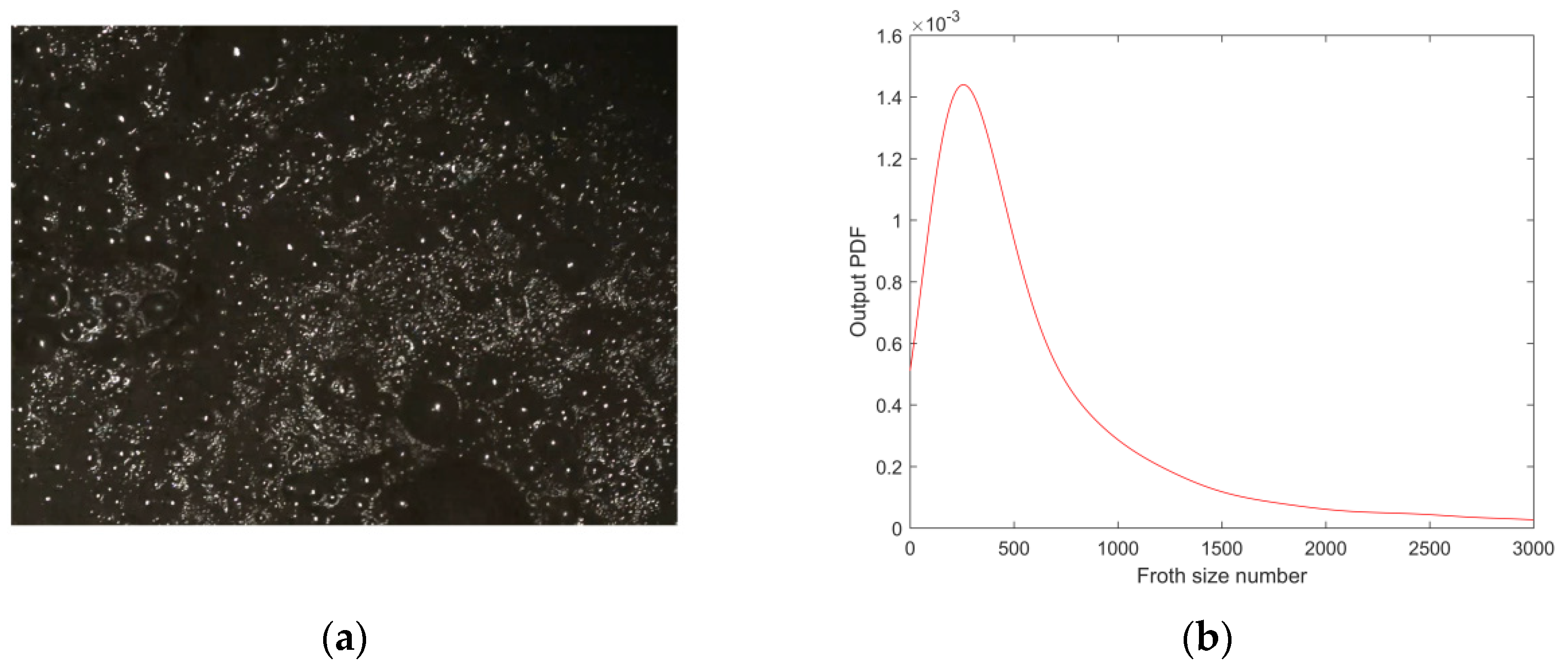

24]. In order to accurately extract the size features of flotation froth, further improvements are needed in the segmentation of mineral flotation froth images. The size features of the froth image are extracted after the froth image segmentation of mineral flotation has been completed. In previous studies, when extracting the size features of froth images, most post-processing analyses only extracted single-valued features, such as the mean, variance and peak value of the froth size, while ignoring the overall distribution of the froth size.
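
The segment-then-measure pipeline can be sketched as follows. This is a deliberately simple stand-in: it uses plain threshold segmentation with 4-connected region labeling rather than the watershed variants preferred in the literature, and the function name `bubble_areas`, the threshold and the toy image are all hypothetical.

```python
from statistics import mean, pvariance

def bubble_areas(gray, threshold):
    """Label 4-connected regions with gray >= threshold; return their pixel areas."""
    rows, cols = len(gray), len(gray[0])
    seen = [[False] * cols for _ in range(rows)]
    areas = []
    for y in range(rows):
        for x in range(cols):
            if seen[y][x] or gray[y][x] < threshold:
                continue
            # Flood-fill one region with an explicit stack.
            stack = [(y, x)]
            seen[y][x] = True
            area = 0
            while stack:
                cy, cx = stack.pop()
                area += 1
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and gray[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        stack.append((ny, nx))
            areas.append(area)
    return areas

# Toy gray image: bright blobs stand in for bubbles.
gray = [
    [9, 9, 0, 0, 0],
    [9, 9, 0, 7, 0],
    [0, 0, 0, 7, 0],
    [8, 0, 0, 0, 0],
]
areas = bubble_areas(gray, threshold=5)
# Single-valued size features of the kind used in earlier studies.
print(areas, mean(areas), pvariance(areas), max(areas))
```

The three numbers printed after the area list are exactly the kind of single-valued summaries that discard the shape of the size distribution.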

Since flotation froth contains a large amount of information reflecting flotation conditions, a great number of studies have been conducted on the detection of flotation process working conditions based on froth image features [25,26]. Zhu et al. [27] proposed a flotation working condition recognition method using an LVQ neural network and a rough set on the basis of extracted froth texture features. Xu et al. [28] proposed a fault detection method that realizes the fault detection and diagnosis of the flotation process using a detection filter based on the output probability density function and Lyapunov stability analysis. Zhao et al. [29] proposed a fault working condition identification method for the antimony flotation process based on multi-scale texture features and K-means clustering with embedded prior knowledge. These techniques first extract features from the flotation froth image and then feed the resulting feature vectors to a classifier to recognize the flotation process working conditions. The results demonstrate that these methods can identify the working conditions of the flotation process to a certain extent. However, the extracted features include excessive redundant and noisy information, which affects the accuracy of recognition. Deep learning networks can now automatically extract deep features from image data with better flexibility and classification ability, so researchers have begun to use them to identify the working conditions of the flotation process [30,31,32]. Fu et al. [33] used convolutional neural networks pre-trained on image databases of common objects to extract features from flotation froth images and then effectively identified the working conditions of the mineral flotation process. Zarie et al. [34] studied convolutional neural networks for classifying froth images collected from industrial coal flotation towers operating under various process working conditions. Fu et al. [35] used transfer learning and network retraining to address the problem that training a deep learning network requires a large flotation image dataset. A large number of studies have shown that identifying the working conditions of the mineral flotation process with deep learning networks is effective [36,37,38].

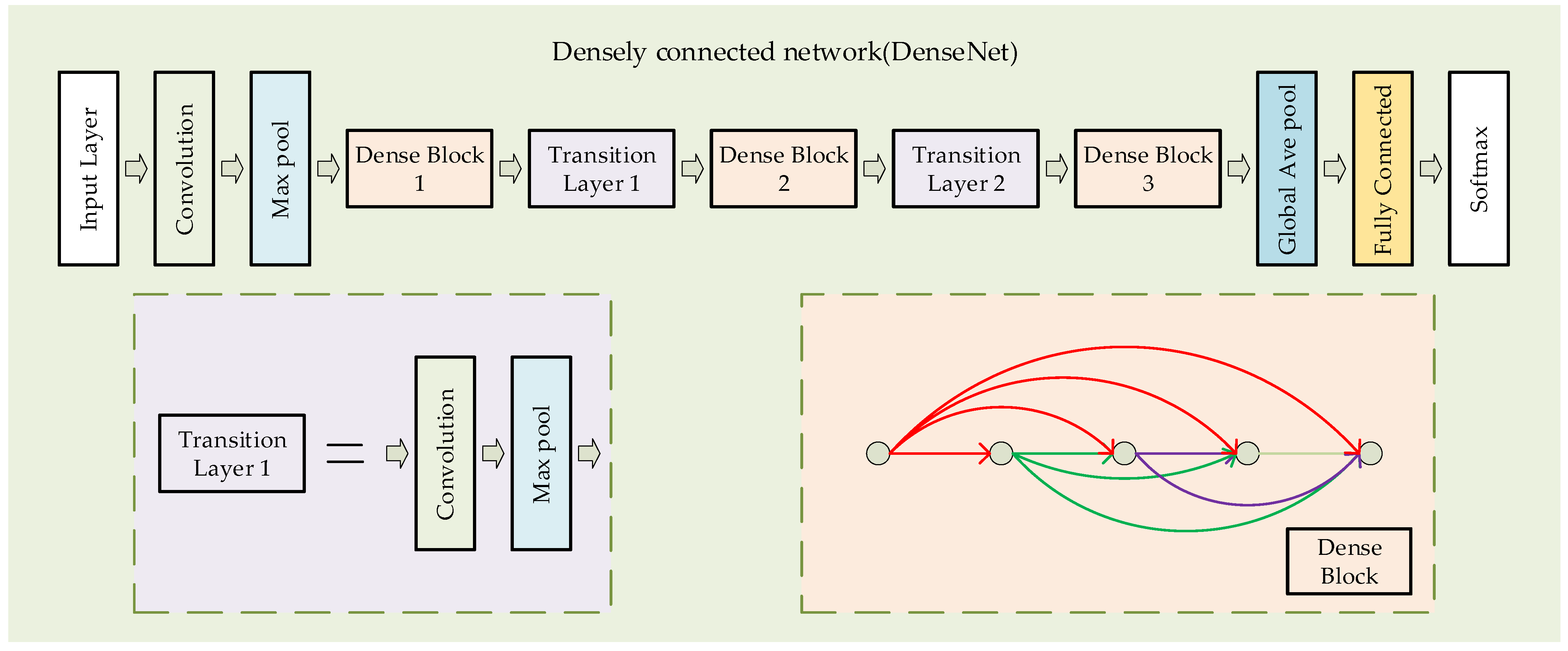

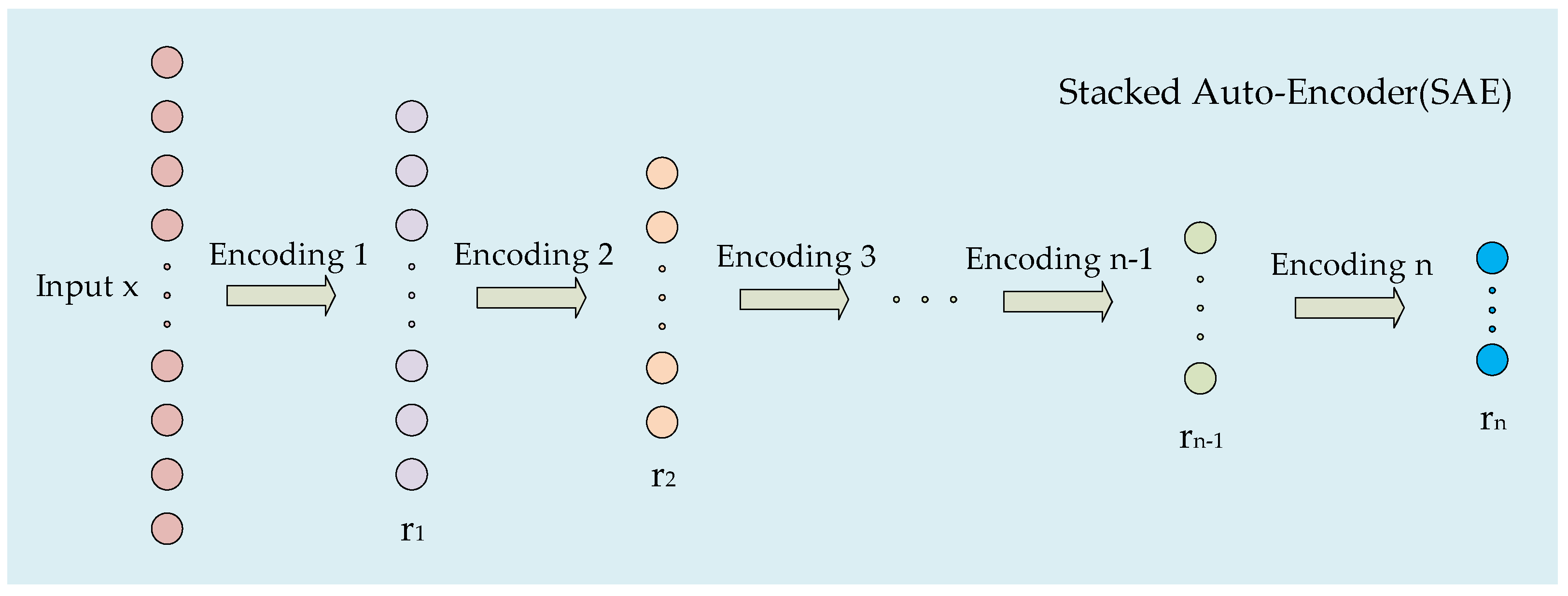

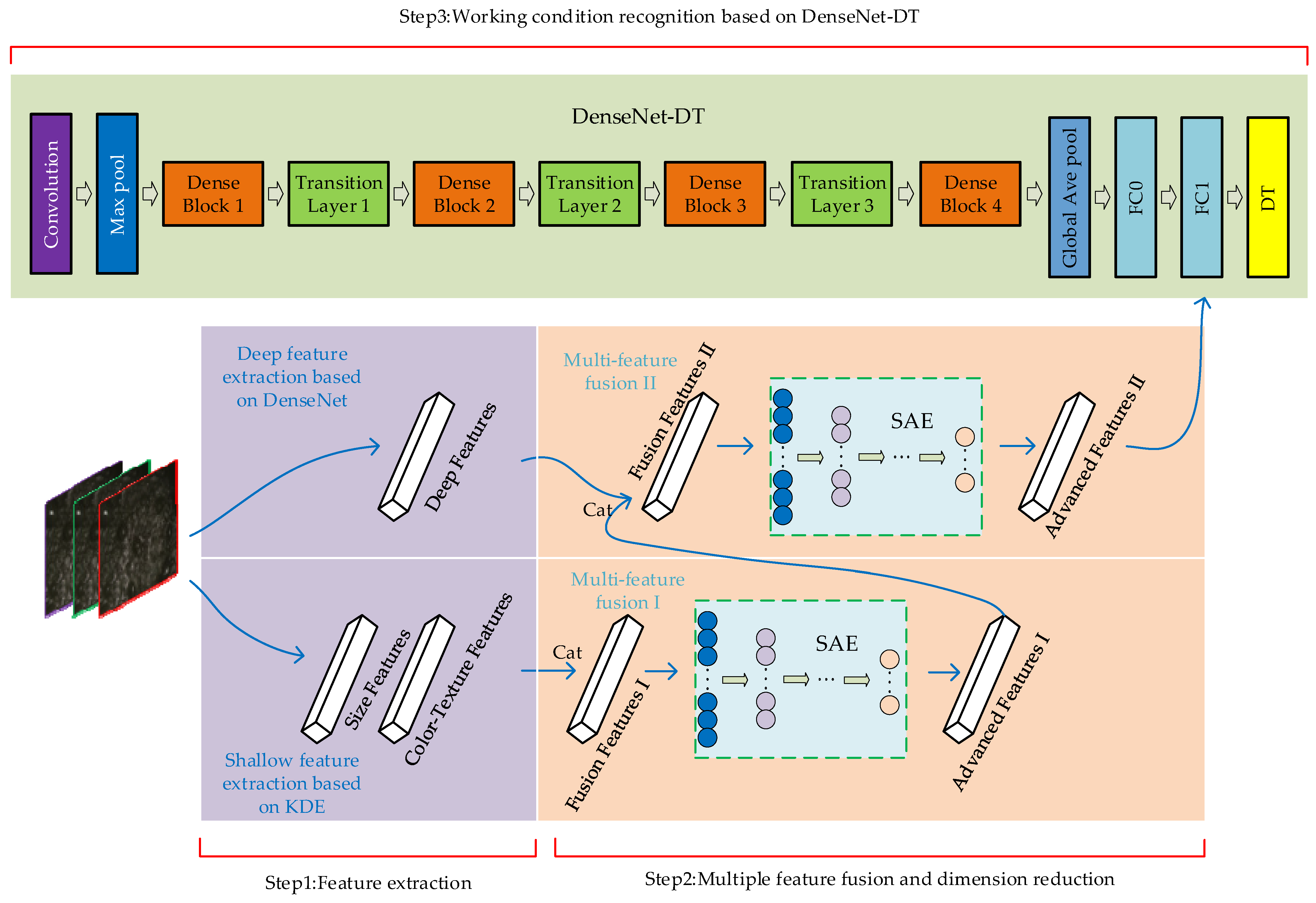

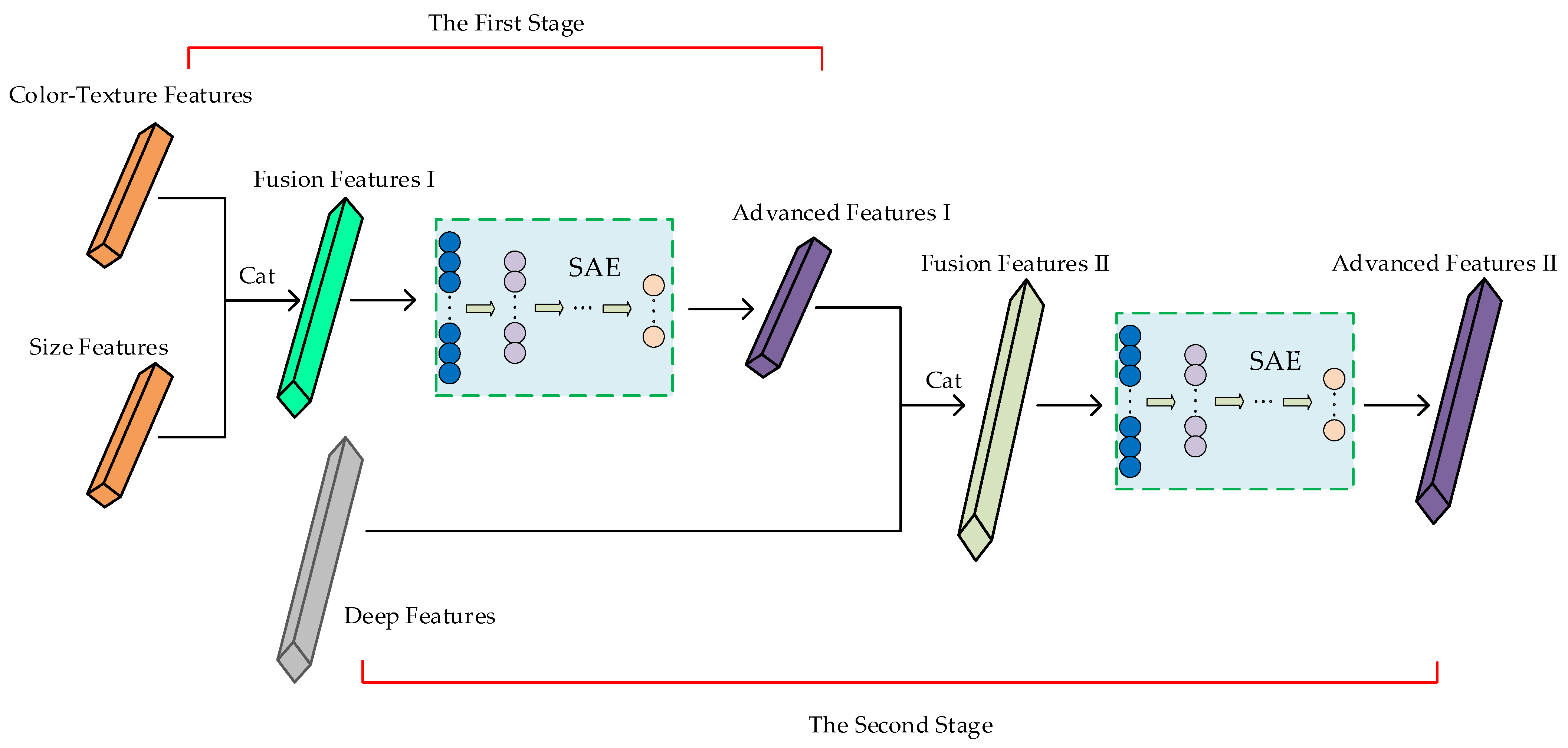

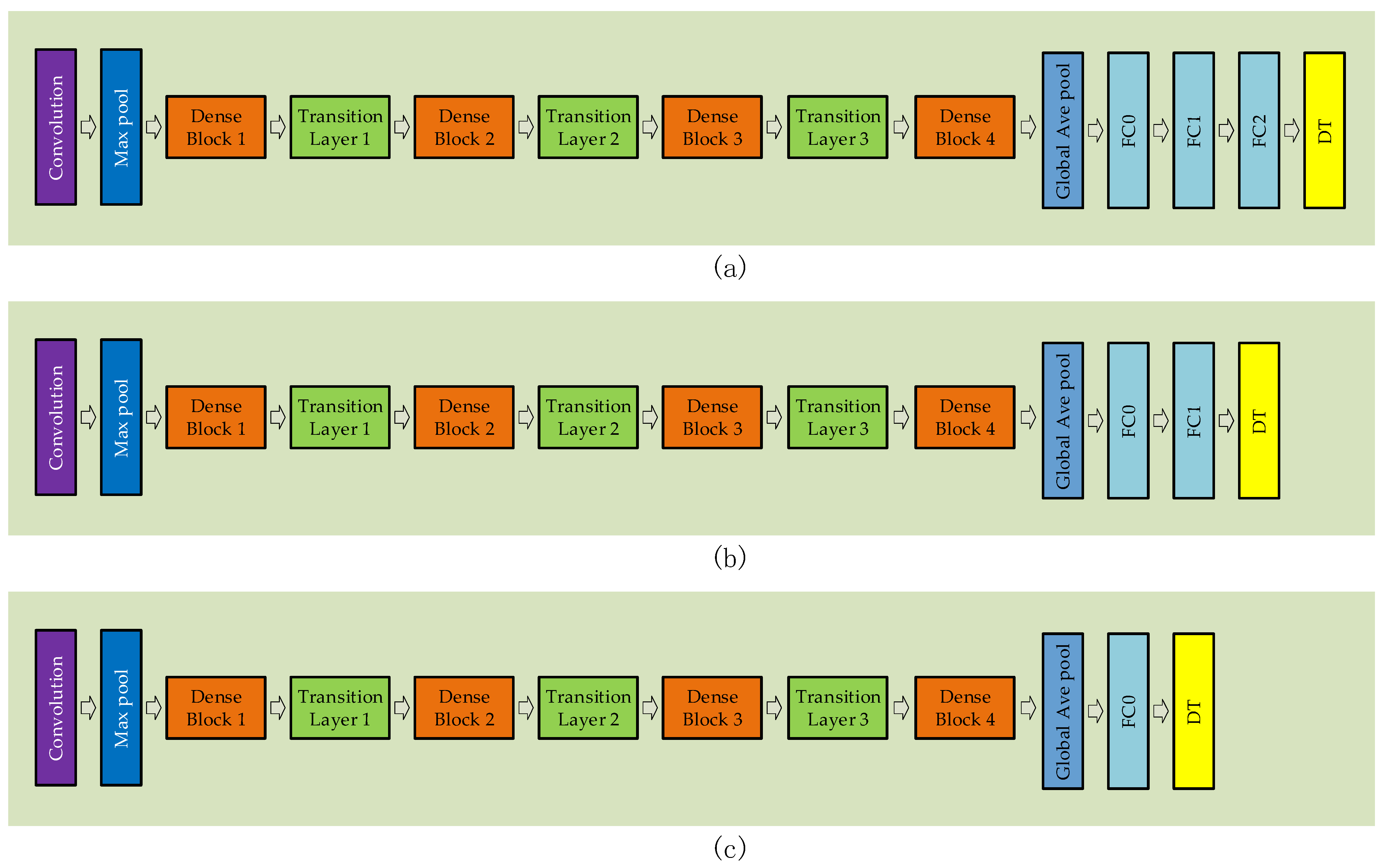

To address the problem that the features extracted by traditional flotation working condition recognition methods are not comprehensive and contain excessive redundant and noisy information, this paper proposes a novel recognition method based on DSFF-DenseNet-DT that can effectively identify the working conditions of the mineral flotation process. First, shallow features (color texture features and size features) and deep features of the froth images are extracted on the basis of nonparametric kernel density estimation and DenseNet, which addresses the problem that the extracted froth features are not detailed and comprehensive. The extracted shallow and deep features are then fused and reduced in dimension using the Cat-SAE two-stage feature fusion method, which addresses the problem that the extracted froth features contain redundant and noisy information. Finally, the classification layer of DenseNet is replaced with a decision tree (DT) to obtain DenseNet-DT for working condition recognition, which improves the recognition accuracy compared with DenseNet. The main contributions of this paper are summarized as follows:

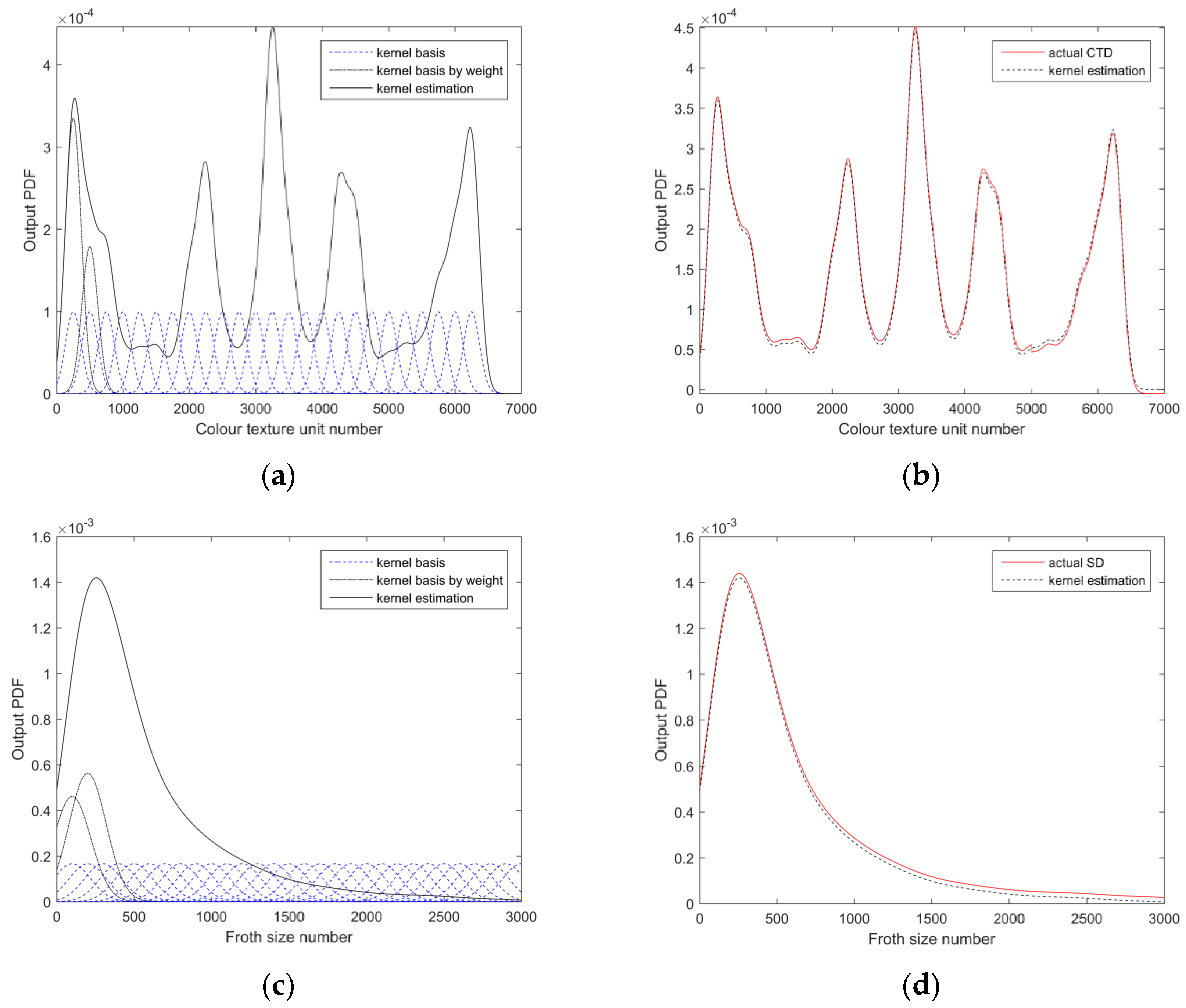

A deep and shallow feature extraction method based on nonparametric kernel density estimation and DenseNet is proposed. The color texture, size and other detail features of the froth image are extracted effectively.

A two-stage multi-feature fusion method based on Cat-SAE is proposed. The shallow features of the froth image are fused with deep features, comprehensively describing the feature information and eliminating feature redundancy and noise.

A working condition recognition method based on DenseNet-DT is proposed, which replaces the classification layer of DenseNet with DT to obtain DenseNet-DT. It can effectively identify the flotation process working conditions.
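
To illustrate how nonparametric kernel density estimation can describe a whole size distribution rather than a single summary value, the sketch below implements a Gaussian kernel estimator in plain Python. The kernel choice, the normal-reference bandwidth rule, the evaluation grid and the sample areas are all assumptions made for this example; this section does not specify the settings the method actually uses.

```python
import math

def rule_of_thumb_bandwidth(samples):
    """Normal-reference rule of thumb: h = 1.06 * std * n^(-1/5)."""
    n = len(samples)
    mean = sum(samples) / n
    std = math.sqrt(sum((s - mean) ** 2 for s in samples) / (n - 1))
    return 1.06 * std * n ** (-0.2)

def gaussian_kde(samples, bandwidth):
    """Return a function that evaluates the Gaussian kernel density estimate."""
    scale = 1.0 / (len(samples) * bandwidth * math.sqrt(2.0 * math.pi))

    def density(x):
        return scale * sum(
            math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples
        )

    return density

# Hypothetical bubble areas (in pixels) with a small and a large group.
areas = [12, 15, 14, 40, 42, 38, 13, 41, 16, 39]
h = rule_of_thumb_bandwidth(areas)
density = gaussian_kde(areas, h)

# Sampling the density on a fixed grid gives a fixed-length size feature.
grid = [float(x) for x in range(0, 61, 5)]
size_feature = [density(x) for x in grid]
print([round(v, 4) for v in size_feature])
```

In this toy data the mean area is 27 pixels, a size that none of the bubbles actually has; the estimated density instead keeps both groups visible, which is the kind of information a mean or variance alone would lose.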

This paper’s structure is summarized as follows. The theoretical context is introduced in Section 2. Section 3 introduces the proposed method as well as the detailed framework. Section 4 presents the experimental findings and analysis. Section 5 contains the conclusion.

5. Conclusions

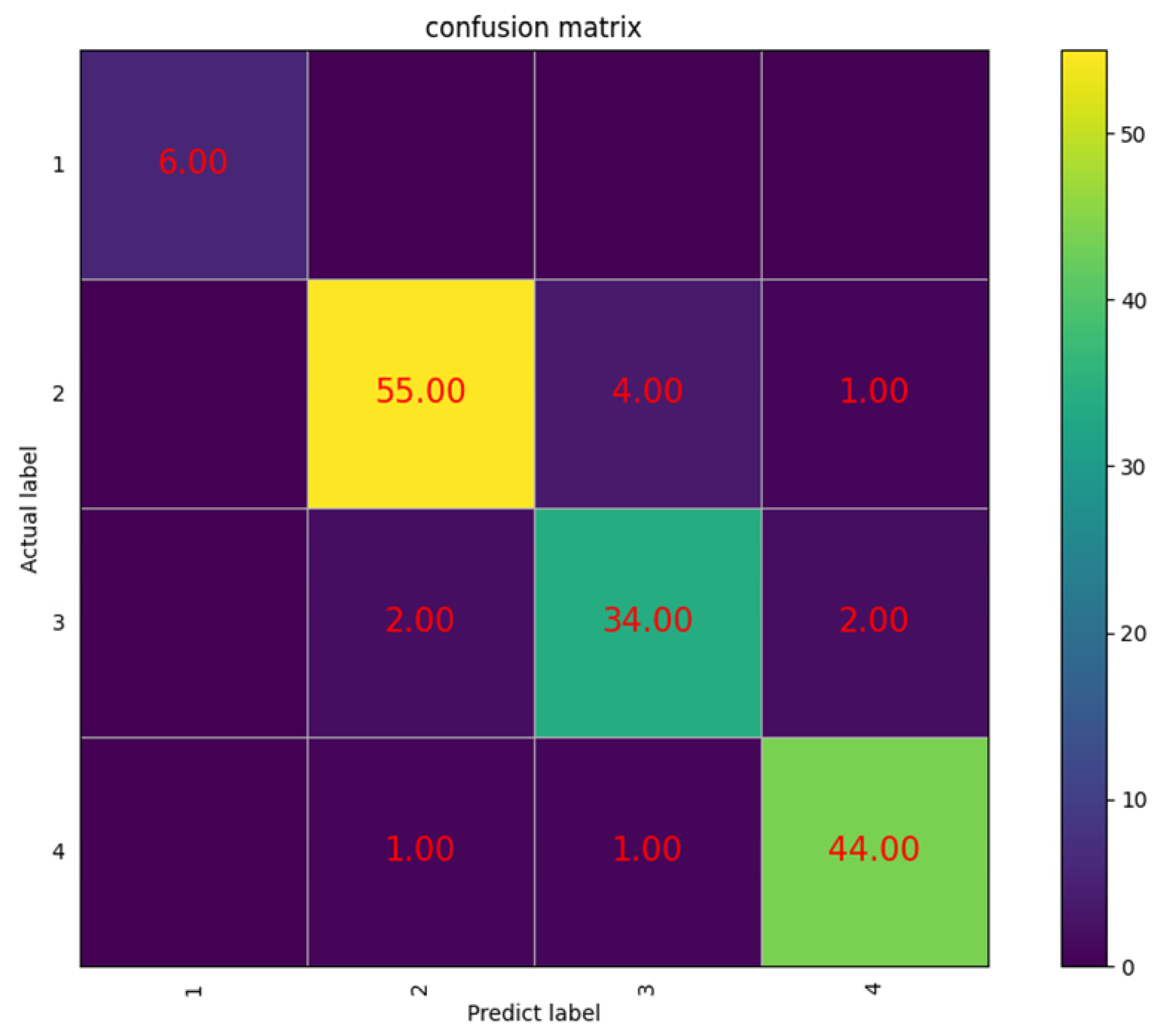

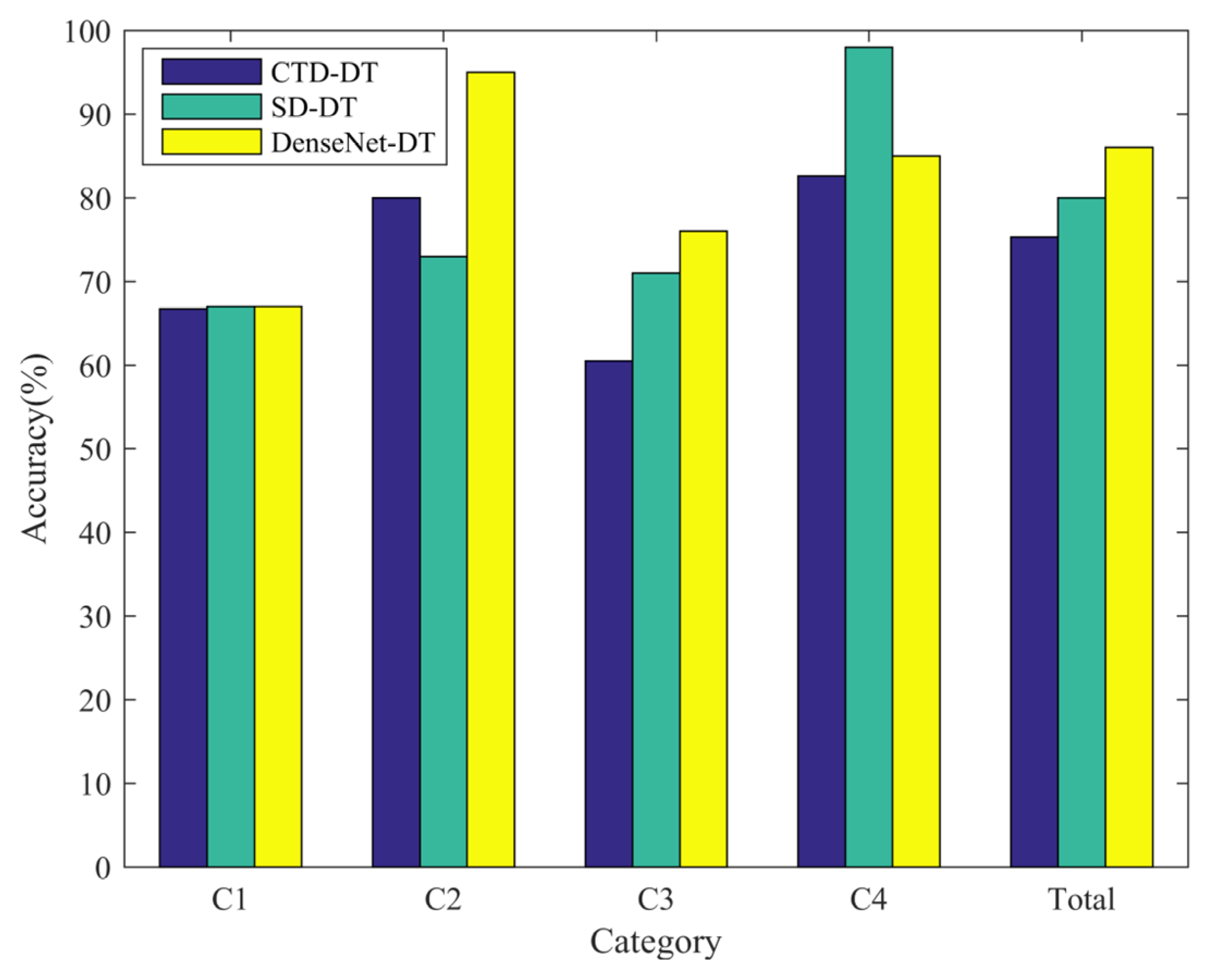

In this paper, a working condition recognition method based on DSFF-DenseNet-DT is proposed for the mineral flotation process, addressing the problem that the shallow features of flotation froth images extracted by conventional digital image processing contain excessive redundant and noisy information. In the two-stage feature fusion method based on Cat-SAE, the extracted shallow features, such as color texture and size, are first fused and reduced in dimension to obtain 15-dimensional fusion features. These 15-dimensional features are then fused with the deep features extracted by the deep neural network and reduced to obtain a 205-dimensional feature vector that contains less noisy and redundant information. Finally, the fused features are fed into DenseNet-DT to achieve working condition recognition. Classification experiments were conducted using flotation froth images acquired from industrial sites. Compared with the CTD-DT (75.3%) and SD-DT (80%) recognition methods, DenseNet-DT (86%) achieves a clearly higher recognition accuracy, demonstrating that detailed froth image features can be extracted more effectively using the deep learning network. SFF-DT (85.3%) improves on CTD-DT and SD-DT, and DSFF-DenseNet-DT (92.67%) improves on DenseNet-DT, indicating that the fusion and dimensionality reduction of features can eliminate redundant features and noisy information and thereby improve the robustness of the model. The average recognition accuracy, precision, recall and F1 score of DSFF-DenseNet-DT reach 92.67%, 93.9%, 94.2% and 0.94, respectively. The method can therefore accurately identify the working conditions of the mineral flotation process, which is conducive to the optimal control of the flotation process and to improving the concentrate grade.
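
For readers who want to see how the four reported metrics relate to one another, the following sketch computes them from a confusion matrix using macro averaging over classes. The averaging scheme is an assumption (this section does not state which one was used), and the confusion matrix is made up for illustration; it is not the paper's data.

```python
from statistics import mean

def macro_metrics(confusion):
    """Accuracy and macro-averaged precision, recall and F1.

    confusion[i][j] is the number of samples of true class i predicted as class j.
    """
    k = len(confusion)
    total = sum(map(sum, confusion))
    accuracy = sum(confusion[c][c] for c in range(k)) / total
    precisions, recalls, f1_scores = [], [], []
    for c in range(k):
        true_positive = confusion[c][c]
        predicted = sum(confusion[i][c] for i in range(k))  # column sum
        actual = sum(confusion[c])                          # row sum
        p = true_positive / predicted if predicted else 0.0
        r = true_positive / actual if actual else 0.0
        precisions.append(p)
        recalls.append(r)
        f1_scores.append(2 * p * r / (p + r) if p + r else 0.0)
    return accuracy, mean(precisions), mean(recalls), mean(f1_scores)

# Made-up two-class confusion matrix, for illustration only.
example = [
    [8, 2],
    [1, 9],
]
accuracy, precision, recall, f1 = macro_metrics(example)
print(accuracy, precision, recall, f1)
```

As a consistency check on the reported figures, the harmonic mean of a precision of 0.939 and a recall of 0.942 is 2 × 0.939 × 0.942 / (0.939 + 0.942) ≈ 0.94, which agrees with the stated F1 score.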