Effects of Voice and Lighting Color on the Social Perception of Home Healthcare Robots

Abstract

1. Introduction

2. Materials and Methods

2.1. Materials

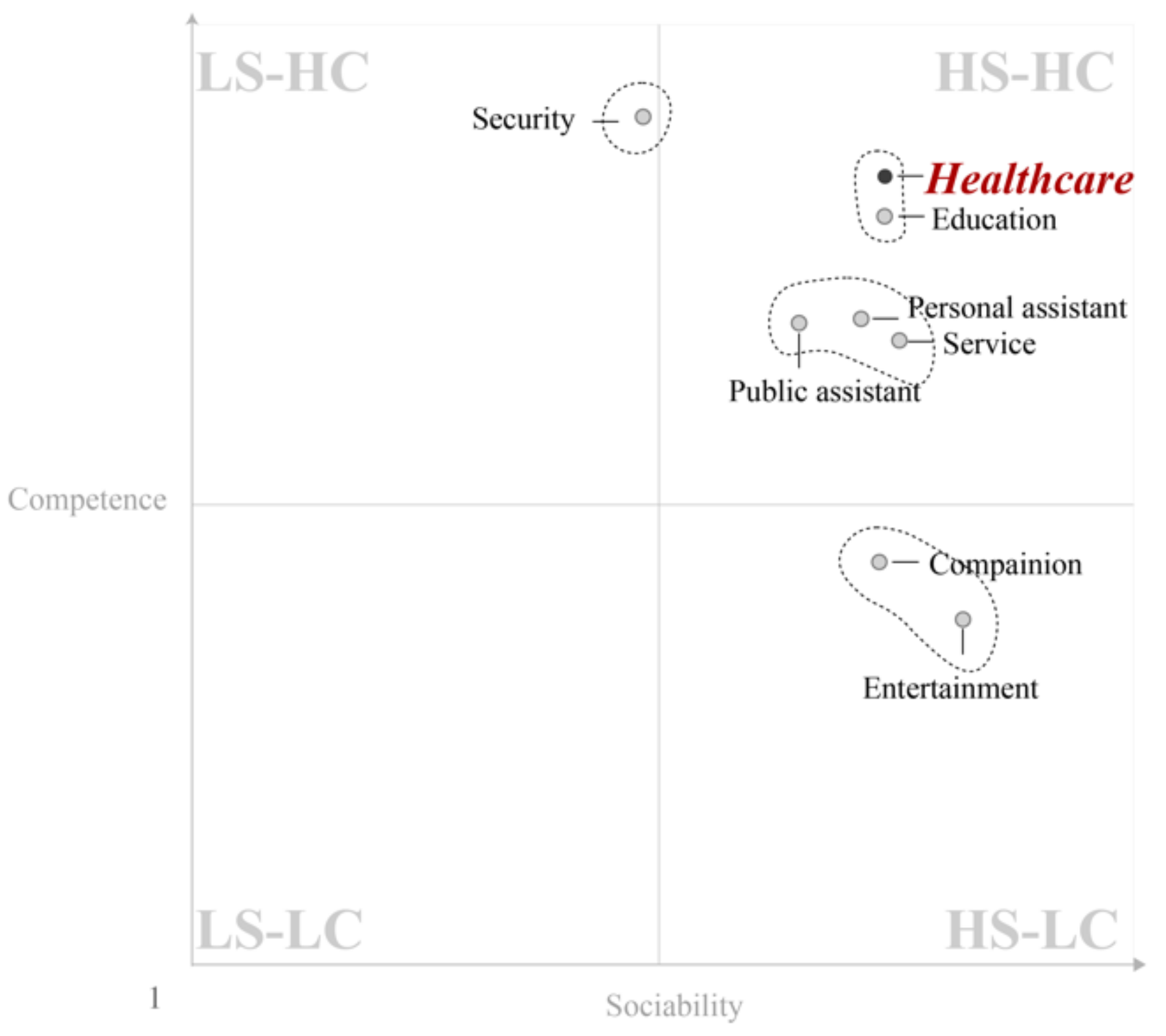

2.1.1. Interaction Context

2.1.2. Voice Stimulus

2.1.3. Lighting Colors

2.2. Experimental Design

2.3. Measurements

2.4. Participants

2.5. Procedures

3. Results

3.1. Manipulation Check

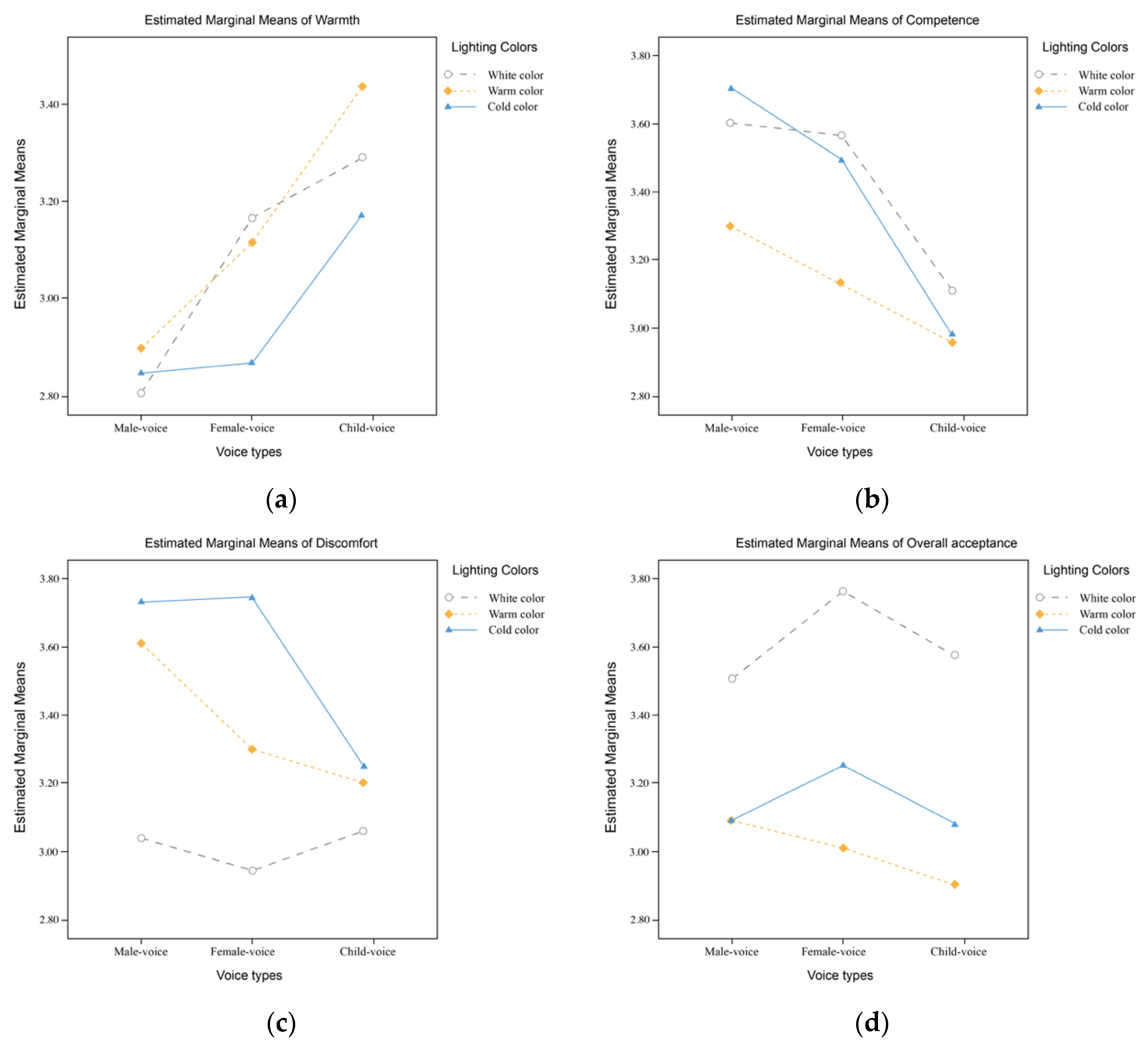

3.2. Effects of Robot Voice Type and Lighting Color on the Participants’ Social Perception and Overall Acceptance of the Healthcare Robot

4. Discussion

Implications and Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Dou, X.; Wu, C.-F. Are We Ready for “Them” Now? The Relationship Between Human and Humanoid Robots. In Integrated Science; Springer: Berlin/Heidelberg, Germany, 2021; pp. 377–394. [Google Scholar]

- Liu, S.X.; Shen, Q.; Hancock, J. Can a social robot be too warm or too competent? Older Chinese adults’ perceptions of social robots and vulnerabilities. Comput. Hum. Behav. 2021, 125, 106942. [Google Scholar] [CrossRef]

- Song, Y.; Luximon, A.; Luximon, Y. The effect of facial features on facial anthropomorphic trustworthiness in social robots. Appl. Ergon. 2021, 94, 103420. [Google Scholar] [CrossRef] [PubMed]

- Robinson, H.; Macdonald, B.; Broadbent, E. The Role of Healthcare Robots for Older People at Home: A Review. Int. J. Soc. Robot. 2014, 6, 575–591. [Google Scholar] [CrossRef]

- Stafford, R.Q.; MacDonald, B.A.; Jayawardena, C.; Wegner, D.M.; Broadbent, E. Does the Robot Have a Mind? Mind Perception and Attitudes towards Robots Predict Use of an Eldercare Robot. Int. J. Soc. Robot. 2014, 6, 17–32. [Google Scholar] [CrossRef]

- McGinn, C.; Sena, A.; Kelly, K. Controlling robots in the home: Factors that affect the performance of novice robot operators. Appl. Ergon. 2017, 65, 23–32. [Google Scholar] [CrossRef]

- Nass, C.; Steuer, J.; Tauber, E.R. Computers Are Social Actors. In Conference Companion on Human Factors in Computing Systems (CHI ’94); Association for Computing Machinery: New York, NY, USA, 1994. [Google Scholar]

- Beer, J.M.; Liles, K.R.; Wu, X.; Pakala, S. Affective Human–Robot Interaction. In Emotions and Affect in Human Factors and Human-Computer Interaction; Academic Press: Cambridge, MA, USA, 2017. [Google Scholar]

- Skjuve, M.; Følstad, A.; Fostervold, K.I.; Brandtzaeg, P.B. My chatbot companion-a study of human-chatbot relationships. Int. J. Hum. Comput. Stud. 2021, 149, 102601. [Google Scholar] [CrossRef]

- Chang, R.C.-S.; Lu, H.-P.; Yang, P. Stereotypes or golden rules? Exploring likable voice traits of social robots as active aging companions for tech-savvy baby boomers in Taiwan. Comput. Hum. Behav. 2018, 84, 194–210. [Google Scholar] [CrossRef]

- Cheng, Y.-W.; Sun, P.-C.; Chen, N.-S. The essential applications of educational robot: Requirement analysis from the perspectives of experts, researchers and instructors. Comput. Educ. 2018, 126, 399–416. [Google Scholar] [CrossRef]

- Stroessner, S.J.; Benitez, J. The Social Perception of Humanoid and Non-Humanoid Robots: Effects of Gendered and Machinelike Features. Int. J. Soc. Robot. 2019, 11, 305–315. [Google Scholar] [CrossRef]

- Tay, B.; Jung, Y.; Park, T. When stereotypes meet robots: The double-edge sword of robot gender and personality in human-robot interaction. Comput. Hum. Behav. 2014, 38, 75–84. [Google Scholar] [CrossRef]

- Aronson, E.; Wilson, T.D.; Akert, R.M. Social Psychology, 7th ed.; Prentice Hall: Upper Saddle River, NJ, USA, 2010. [Google Scholar]

- Paetzel, M.; Perugia, G.; Castellano, G. The persistence of first impressions: The effect of repeated interactions on the perception of a social robot. In Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction, Cambridge, UK, 23–26 March 2020. [Google Scholar]

- Fong, T.; Nourbakhsh, I. Socially interactive robots. Robot. Auton. Syst. 2003, 42, 139–141. [Google Scholar] [CrossRef]

- Powers, A.; Kiesler, S. The advisor robot: Tracing people’s mental model from a robot’s physical attributes. In Proceedings of the 1st ACM SIGCHI/SIGART Conference on Human-Robot Interaction, HRI 2006, Salt Lake City, UT, USA, 2–3 March 2006. [Google Scholar]

- Powers, A.; Kramer, A.; Lim, S.; Kuo, J.; Lee, S.-L.; Kiesler, S. Eliciting information from people with a gendered humanoid robot. In Proceedings of the IEEE International Workshop on Robot and Human Communication (ROMAN), Nashville, TN, USA, 13–15 August 2005. [Google Scholar]

- Chidambaram, V.; Chiang, Y.-H.; Mutlu, B. Designing persuasive robots: How robots might persuade people using vocal and nonverbal cues. In Proceedings of the Seventh Annual ACM/IEEE International Conference on Human-Robot Interaction, Boston, MA, USA, 5–8 March 2012. [Google Scholar]

- Hirano, T.; Shiomi, M.; Iio, T.; Kimoto, M.; Tanev, I.; Shimohara, K.; Hagita, N. How Do Communication Cues Change Impressions of Human–Robot Touch Interaction? Int. J. Soc. Robot. 2018, 10, 21–31. [Google Scholar] [CrossRef]

- Morillo-Mendez, L.; Schrooten, M.G.S.; Loutfi, A.; Mozos, O.M. Age-related Differences in the Perception of Eye-gaze from a Social Robot. Soc. Robot. 2021, 350–361. [Google Scholar] [CrossRef]

- Dou, X.; Wu, C.-F.; Lin, K.-C.; Gan, S.; Tseng, T.-M. Effects of different types of social robot voices on affective evaluations in different application fields. Int. J. Soc. Robot. 2021, 13, 615–628. [Google Scholar] [CrossRef]

- Lee, S.-Y.; Lee, G.; Kim, S.; Lee, J. Expressing Personalities of Conversational Agents through Visual and Verbal Feedback. Electronics 2019, 8, 794. [Google Scholar] [CrossRef]

- Belin, P.; Bestelmeyer, P.E.G.; Latinus, M.; Watson, R. Understanding voice perception. Br. J. Psychol. 2011, 102, 711–725. [Google Scholar] [CrossRef]

- Niculescu, A.; van Dijk, B.; Nijholt, A.; Li, H.; See, S.L. Making Social Robots More Attractive: The Effects of Voice Pitch, Humor and Empathy. Int. J. Soc. Robot. 2013, 5, 171–191. [Google Scholar] [CrossRef]

- Berry, D.S. Vocal types and stereotypes: Joint effects of vocal attractiveness and vocal maturity on person perception. J. Nonverbal Behav. 1992, 16, 41–54. [Google Scholar] [CrossRef]

- Vukovic, J.; Feinberg, D.; Jones, B.; DeBruine, L.; Welling, L.; Little, A.; Smith, F. Self-rated attractiveness predicts individual differences in women’s preferences for masculine men’s voices. Personal. Individ. Differ. 2008, 45, 451–456. [Google Scholar] [CrossRef]

- Scherer, K.R. Vocal communication of emotion: A review of research paradigms. Speech Commun. 2003, 40, 227–256. [Google Scholar] [CrossRef]

- Riordan, C.A.; Quigley-Fernandez, B.; Tedeschi, J.T. Some variables affecting changes in interpersonal attraction. J. Exp. Soc. Psychol. 1982, 18, 358–374. [Google Scholar] [CrossRef]

- Zuckerman, M.; Driver, R.E. What sounds beautiful is good: The vocal attractiveness stereotype. J. Nonverbal Behav. 1989, 13, 67–82. [Google Scholar] [CrossRef]

- Baraka, K.; Rosenthal, S.; Veloso, M. Enhancing Human Understanding of a Mobile Robot’s State and Actions using Expressive Lights. IEEE Explor. IEEE Org. 2018, 652–657. [Google Scholar] [CrossRef]

- Baraka, K.; Veloso, M.M. Mobile Service Robot State Revealing Through Expressive Lights: Formalism, Design, and Evaluation. Int. J. Soc. Robot. 2018, 10, 65–92. [Google Scholar] [CrossRef]

- Baraka, K. Effective Non-Verbal Communication for Mobile Robots Using Expressive Lights. Ph.D. Thesis, Carnegie Mellon University, Pittsburgh, PA, USA, 2016. [Google Scholar]

- Song, S.; Yamada, S. Designing LED lights for a robot to communicate gaze. Adv. Robot. 2019, 33, 360–368. [Google Scholar] [CrossRef]

- Kobayashi, K.; Funakoshi, K.; Yamada, S.; Nakano, M.; Komatsu, T.; Saito, Y. Blinking light patterns as artificial subtle expressions in human-robot speech interaction. In Proceedings of the 20th IEEE International Symposium on Robot and Human Interactive Communication, Atlanta, GA, USA, 31 July–3 August 2011. [Google Scholar]

- Barbato, M.; Liu, L.; Cadenhead, K.S.; Cannon, T.D.; Cornblatt, B.A.; McGlashan, T.H.; Perkins, D.O.; Seidman, L.J.; Tsuang, M.T.; Walker, E.F.; et al. Theory of mind, emotion recognition and social perception in individuals at clinical high risk for psychosis: Findings from the NAPLS-2 cohort. Schizophr. Res. Cogn. 2015, 2, 133–139. [Google Scholar] [CrossRef]

- Ekman, P. What emotion categories or dimensions can observers judge from facial behavior? Emot. Hum. Face 1982, 39–55. [Google Scholar]

- Fromme, D.K.; O’Brien, C.S. A dimensional approach to the circular ordering of the emotions. Motiv. Emot. 1982, 6, 337–363. [Google Scholar] [CrossRef]

- Plutchik, R. A Psychoevolutionary Theory of Emotions; Sage Publications: Thousand Oaks, CA, USA, 1982. [Google Scholar]

- Terada, K.; Yamauchi, A.; Ito, A. Artificial emotion expression for a robot by dynamic color change. In Proceedings of the IEEE International Workshop on Robot and Human Communication (ROMAN), Paris, France, 9–13 September 2012. [Google Scholar]

- Tijssen, I.; Zandstra, E.H.; de Graaf, C.; Jager, G. Why a ‘light’ product package should not be light blue: Effects of package colour on perceived healthiness and attractiveness of sugar- and fat-reduced products. Food Qual. Prefer. 2017, 59, 46–58. [Google Scholar] [CrossRef]

- Hemphill, M. A note on adults’ color–emotion associations. J. Genet. Psychol. 1996, 157, 275–280. [Google Scholar] [CrossRef]

- Fiske, S.T.; Cuddy, A.J.C.; Glick, P. Universal dimensions of social cognition: Warmth and competence. Trends Cogn. Sci. 2007, 11, 77–83. [Google Scholar] [CrossRef]

- Dou, X.; Wu, C.-F.; Niu, J.; Pan, K.-R. Effect of Voice Type and Head-Light Color in Social Robots for Different Applications. Int. J. Soc. Robot. 2021, 14, 229–244. [Google Scholar] [CrossRef]

- Dou, X.; Wu, C.-F.; Wang, X.; Niu, J. User expectations of social robots in different applications: An online user study. In Proceedings of the HCI International 2020 – Late Breaking Papers: Multimodality and Intelligence, Copenhagen, Denmark, 19–24 July 2020. [Google Scholar]

- Dou, X. A Study on the Application Model of Social Robot’s Dialogue Style and Speech Parameters in Different Industries. Ph.D. Thesis, Tatung University, Taipei, Taiwan, 2020. [Google Scholar]

- Broekens, J.; Heerink, M.; Rosendal, H. Assistive social robots in elderly care: A review. Gerontechnology 2009, 8, 94–103. [Google Scholar] [CrossRef]

- Wang, X.; Dou, X. Designing a More Inclusive Healthcare Robot: The Relationship Between Healthcare Robot Tasks and User Capability. In Proceedings of the HCI International 2022 – Late Breaking Papers: HCI for Health, Well-being, Universal Access and Healthy Aging, Virtual Event, 26 June–1 July 2022. [Google Scholar]

- Walters, M.L.; Syrdal, D.S.; Koay, K.L.; Dautenhahn, K.; Boekhorst, R.T. Human Approach Distances to a Mechanical-Looking Robot with Different Robot Voice Styles. In Proceedings of the 17th IEEE International Symposium on Robot and Human Interactive Communication, RO-MAN, Munich, Germany, 1–3 August 2008. [Google Scholar]

- Niu, J.; Wu, C.-F.; Dou, X.; Lin, K.-C. Designing Gestures of Robots in Specific Fields for Different Perceived Personality Traits. Front. Psychol. 2022, 13. [Google Scholar] [CrossRef]

- Aaron, A.; Eide, E.; Pitrelli, J.F. Conversational computers. Sci. Am. 2005, 292, 64–69. [Google Scholar] [CrossRef]

- Liu, X.; Xu, Y. What makes a female voice attractive? ICPhS 2011, v, 17–21. [Google Scholar]

- Boersma, P.J.G.I. Praat, a system for doing phonetics by computer. Speech Lang. Pathol. 2002, 5, 341–345. [Google Scholar]

- Laver, J. Principles of Phonetics; Cambridge University Press: Cambridge, UK, 1994. [Google Scholar]

- Kent, R. Anatomical and neuromuscular maturation of the speech mechanism: Evidence from acoustic studies. J. Speech Hear. Res. 1976, 19, 421–447. [Google Scholar] [CrossRef]

- Sheppard, W.C.; Lane, H.L. Development of the prosodic features of infant vocalizing. J. Speech Hear. Res. 1968, 11, 94–108. [Google Scholar] [CrossRef]

- Kobayashi, K.; Funakoshi, K.; Yamada, S.; Nakano, M.; Komatsu, T.; Saito, Y. Impressions made by blinking light used to create artificial subtle expressions and by robot appearance in human-robot speech interaction. In Proceedings of the IEEE International Workshop on Robot and Human Communication (ROMAN), Paris, France, 9–13 September 2012. [Google Scholar]

- Holtzschue, L. Understanding Color: An Introduction for Designers; John Wiley and Sons: Hoboken, NJ, USA, 2016. [Google Scholar]

- Bellizzi, J.A.; Hite, R.E. Environmental color, consumer feelings, and purchase likelihood. Psychol. Mark. 1992, 9, 347–363. [Google Scholar] [CrossRef]

- Narendran, N.; Deng, L. Color rendering properties of LED light sources. In Solid State Lighting II; SPIE: Bellingham, WA, USA, 2002; Volume 4776, pp. 61–67. [Google Scholar]

- Lee, H. Effects of Light-Emitting Diode (LED) Lighting Color on Human Emotion, Behavior, and Spatial Impression. Ph.D. Thesis, Michigan State University, East Lansing, MI, USA, 2019. [Google Scholar]

- Dautenhahn, K. Methodology & themes of human-robot interaction: A growing research field. Int. J. Adv. Robot. Syst. 2007, 4, 15. [Google Scholar] [CrossRef]

- Brule, R.V.D.; Dotsch, R.; Bijlstra, G.; Wigboldus, D.H.J.; Haselager, P. Do Robot Performance and Behavioral Style affect Human Trust?: A Multi-Method Approach. Int. J. Adv. Robot. Syst. 2014, 6, 519–531. [Google Scholar] [CrossRef]

- Bartneck, C.; Kulić, D.; Croft, E.; Zoghbi, S. Measurement instruments for the anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety of robots. Int. J. Soc. Robot. 2009, 1, 71–81. [Google Scholar] [CrossRef]

- Carpinella, C.M.; Wyman, A.B.; Perez, M.A.; Stroessner, S.J. The Robotic Social Attributes Scale (RoSAS). In Proceedings of the 2017 ACM/IEEE International Conference on Human-Robot Interaction (HRI ’17), Vienna, Austria, 6–9 March 2017; pp. 254–262. [Google Scholar] [CrossRef]

- Ko, S.J.; Judd, C.M.; Stapel, D.A. Stereotyping based on voice in the presence of individuating information: Vocal femininity affects perceived competence but not warmth. Personal. Soc. Psychol. Bull. 2009, 35, 198–211. [Google Scholar] [CrossRef]

- Niculescu, A.; van Dijk, B.; Nijholt, A.; See, S.L. The influence of voice pitch on the evaluation of a social robot receptionist. In Proceedings of the 2011 International Conference on User Science and Engineering (i-USEr), Selangor, Malaysia, 29 November–2 December 2011. [Google Scholar]

- Naz, K.; Helen, H. Color-emotion associations: Past experience and personal preference. Psychology 2004, 5, 31. [Google Scholar]

- Ho, C.-C.; MacDorman, K.F. Measuring the Uncanny Valley Effect: Refinements to Indices for Perceived Humanness, Attractiveness, and Eeriness. Int. J. Soc. Robot. 2017, 9, 129–139. [Google Scholar] [CrossRef]

- Walters, M.L.; Syrdal, D.S.; Dautenhahn, K.; Boekhorst, R.T.; Koay, K.L. Avoiding the uncanny valley: Robot appearance, personality and consistency of behavior in an attention-seeking home scenario for a robot companion. Auton. Robot. 2008, 24, 159–178. [Google Scholar] [CrossRef]

- Prakash, A.; Rogers, W.A. Why some humanoid faces are perceived more positively than others: Effects of human-likeness and task. Int. J. Soc. Robot. 2015, 7, 309–331. [Google Scholar] [CrossRef]

- Paetzel, M. The influence of appearance and interaction strategy of a social robot on the feeling of uncanniness in humans. In Proceedings of the 18th ACM International Conference on Multimodal Interaction, Tokyo, Japan, 12–16 November 2016. [Google Scholar]

- Mitchell, W.J.; Szerszen, K.A.; Lu, A.S.; Schermerhorn, P.W.; Scheutz, M.; MacDorman, K.F. A mismatch in the human realism of face and voice produces an uncanny valley. i-Perception 2011, 2, 10–12. [Google Scholar] [CrossRef]

- Paetzel, M.; Peters, C.; Nyström, I.; Castellano, G. Effects of multimodal cues on children’s perception of uncanniness in a social robot. In Proceedings of the 18th ACM International Conference on Multimodal Interaction, Tokyo, Japan, 12–16 November 2016. [Google Scholar]

- Ko, Y.H. The effects of luminance contrast, colour combinations, font, and search time on brand icon legibility. Appl. Ergon. 2017, 65, 33–40. [Google Scholar] [CrossRef]

- Dou, X.; Wu, C.F.; Lin, K.-C.; Tseng, T.M. The Effects of Robot Voice and Gesture Types on the Perceived Robot Personalities. In Proceedings of the International Conference on Human-Computer Interaction, Orlando, FL, USA, 26–31 July 2019. [Google Scholar]

- Siegel, M.; Breazeal, C.; Norton, M.I. Persuasive robotics: The influence of robot gender on human behavior. In Proceedings of the IEEE International Workshop on Intelligent Robots and Systems (IROS), St Louis, MO, USA, 10–15 October 2009. [Google Scholar]

- Piçarra, N.; Giger, J.C. Predicting intention to work with social robots at anticipation stage: Assessing the role of behavioral desire and anticipated emotions. Comput. Hum. Behav. 2018, 86, 129–146. [Google Scholar] [CrossRef]

- Singh, S.; Chaudhary, D.; Gupta, A.D.; Lohani, B.P.; Kushwaha, P.K.; Bibhu, V. Artificial Intelligence, Cognitive Robotics and Nature of Consciousness. In Proceedings of the 3rd International Conference on Intelligent Engineering and Management (ICIEM), London, UK, 27–29 April 2022. [Google Scholar]

- Weller, C. Meet the first-ever robot citizen-a humanoid named Sophia that once said it would ‘destroy humans’. Bus. Insid. 2017, 27. [Google Scholar]

| Voice Type | Mean Fundamental Frequency (Hz) | Normal Range (Hz) |
|---|---|---|
| Adult male voice | 131.35 | 50–250 [54] |
| Adult female voice | 231.54 | 120–480 [54] |
| Child voice | 339.23 | 200–451 [55,56] |
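
As a quick consistency check on the table above, the following minimal sketch tests whether each mean fundamental frequency falls inside its cited normal range. The values are transcribed from the table and assumed to be in Hz; the names `VOICES`, `within_range`, and `check_all` are illustrative and do not come from the study.

```python
# Mean F0 and cited normal range per voice type, transcribed from the table
# above. Units are assumed to be Hz. Tuple layout: (mean, low, high).
VOICES = {
    "adult male": (131.35, 50.0, 250.0),
    "adult female": (231.54, 120.0, 480.0),
    "child": (339.23, 200.0, 451.0),
}


def within_range(mean_f0, low, high):
    """Return True when mean_f0 lies inside the closed interval [low, high]."""
    return low <= mean_f0 <= high


def check_all(voices):
    """Map each voice type to whether its mean F0 is inside its cited range."""
    return {name: within_range(*values) for name, values in voices.items()}


if __name__ == "__main__":
    for name, ok in check_all(VOICES).items():
        status = "within" if ok else "outside"
        print(f"{name}: {status} the cited range")
```

All three stimuli pass this check, which is what one would expect if the voices were selected to be typical of their category.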

| Lighting Color | Perceived Color | Wavelength or Color Temperature |
|---|---|---|
| Warm | Yellow | 610–615 nm |
| Cool | Blue | 460–465 nm |
| White | White | 6500–7500 K |

| Construct | Items | Measurement |
|---|---|---|
| Warmth | Happy, Feeling, Social, Organic, Compassionate, Emotional | 5-point Likert |
| Competence | Capable, Responsive, Interactive, Reliable, Competent, Knowledgeable | 5-point Likert |
| Discomfort | Scary, Strange, Awkward, Dangerous, Awful, Aggressive | 5-point Likert |
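
One plausible way to turn the item ratings above into construct scores is to average the six items of each construct. The sketch below assumes that scoring rule, which the table itself does not state, and uses hypothetical ratings; `CONSTRUCTS`, `score_constructs`, and `example_ratings` are illustrative names.

```python
from statistics import mean

# Item lists transcribed from the measurement table above.
CONSTRUCTS = {
    "warmth": ["happy", "feeling", "social", "organic",
               "compassionate", "emotional"],
    "competence": ["capable", "responsive", "interactive", "reliable",
                   "competent", "knowledgeable"],
    "discomfort": ["scary", "strange", "awkward", "dangerous",
                   "awful", "aggressive"],
}


def score_constructs(ratings):
    """Average the six 1-5 item ratings of each construct for one participant.

    Assumption: each construct score is the unweighted mean of its items.
    """
    scores = {}
    for construct, items in CONSTRUCTS.items():
        values = [ratings[item] for item in items]
        if any(not 1 <= v <= 5 for v in values):
            raise ValueError(f"{construct}: ratings must lie between 1 and 5")
        scores[construct] = mean(values)
    return scores


# Hypothetical participant: neutral (3) on every item except "happy".
example_ratings = {item: 3 for items in CONSTRUCTS.values() for item in items}
example_ratings["happy"] = 5
example_scores = score_constructs(example_ratings)

if __name__ == "__main__":
    for construct, score in example_scores.items():
        print(f"{construct}: {score:.2f}")
```

Averaging keeps each construct on the same 1–5 scale as the individual items, which makes the construct means directly comparable across conditions.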

| Voice Type | Female | Male | Child | F | p |
|---|---|---|---|---|---|
| Mean | 4.92 | 5.00 | 4.83 | 172.89 ** | 0.00 |
| SD | 0.29 | 0.00 | 0.39 | | |

| Lighting Color | Warm | Cool | White | F | p |
|---|---|---|---|---|---|
| Mean | 4.67 | 4.83 | 3.83 | 38.89 ** | 0.00 |
| SD | 0.49 | 0.39 | 1.03 | | |

Mean (SD) by voice type (M = male, F = female, C = child) and lighting color (WH = white, WA = warm, CO = cool), with F values and effect sizes (ηp²) for voice (V), light (L), and their interaction (V × L). Post hoc comparisons are listed beneath the corresponding F values.

| Measure | M | F | C | WH | WA | CO | F (V) | ηp² (V) | F (L) | ηp² (L) | F (V × L) | ηp² (V × L) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Warmth | 2.85 (0.62) | 3.05 (0.72) | 3.30 (0.71) | 3.09 (0.77) | 3.15 (0.66) | 2.96 (0.68) | 20.43 * | 0.07 | 3.68 * | 0.01 | 1.19 | 0.01 |
| Post hoc | | | | | | | C > F > M | | WA, WH > CO | | | |
| Competence | 3.53 (0.63) | 3.40 (0.63) | 3.31 (0.70) | 3.42 (0.68) | 3.13 (0.66) | 3.39 (0.73) | 31.68 ** | 0.10 | 11.42 ** | 0.04 | 1.97 | 0.01 |
| Post hoc | | | | | | | M > F > C | | WH, CO > WA | | | |
| Discomfort | 2.26 (0.95) | 2.13 (0.84) | 1.97 (0.72) | 1.81 (0.67) | 2.17 (0.81) | 2.38 (0.94) | 6.10 * | 0.02 | 23.51 ** | 0.08 | 3.22 * | 0.02 |
| Post hoc | | | | | | | M, F > C | | CO > WA > WH | | M: WH > WA, CO; F: WH > WA > CO; C: WH > WA, CO | |
| Overall Acceptance | 3.12 (1.02) | 3.25 (0.93) | 3.06 (1.13) | 3.58 (0.96) | 2.84 (0.96) | 3.01 (1.03) | 1.80 | 0.01 | 28.56 ** | 0.09 | 0.75 | 0.01 |
| Post hoc | | | | | | | | | WH > WA, CO | | | |
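
For readers interpreting the effect sizes above, the standard definition of partial eta squared and its relation to the F statistic are reproduced here. The degrees of freedom are not listed in the table, so the second form is given only in general terms; the subscripts `effect` and `error` are generic labels rather than the authors' notation.

```latex
\eta_p^2
  = \frac{SS_{\text{effect}}}{SS_{\text{effect}} + SS_{\text{error}}}
  = \frac{F \cdot df_{\text{effect}}}{F \cdot df_{\text{effect}} + df_{\text{error}}}
```

With three levels per factor, each main effect has $df_{\text{effect}} = 2$ and, in a full factorial design, the V × L interaction has $df_{\text{effect}} = 4$. For a fixed error term, a larger F therefore maps to a larger $\eta_p^2$, which matches the ordering of the values in the table.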

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dou, X.; Yan, L.; Wu, K.; Niu, J. Effects of Voice and Lighting Color on the Social Perception of Home Healthcare Robots. Appl. Sci. 2022, 12, 12191. https://doi.org/10.3390/app122312191