Omni-Directional Semi-Global Stereo Matching with Reliable Information Propagation

Abstract

1. Introduction

2. Omni-Directional SGM with Reliable Cost Propagation

2.1. Semi-Global Matching

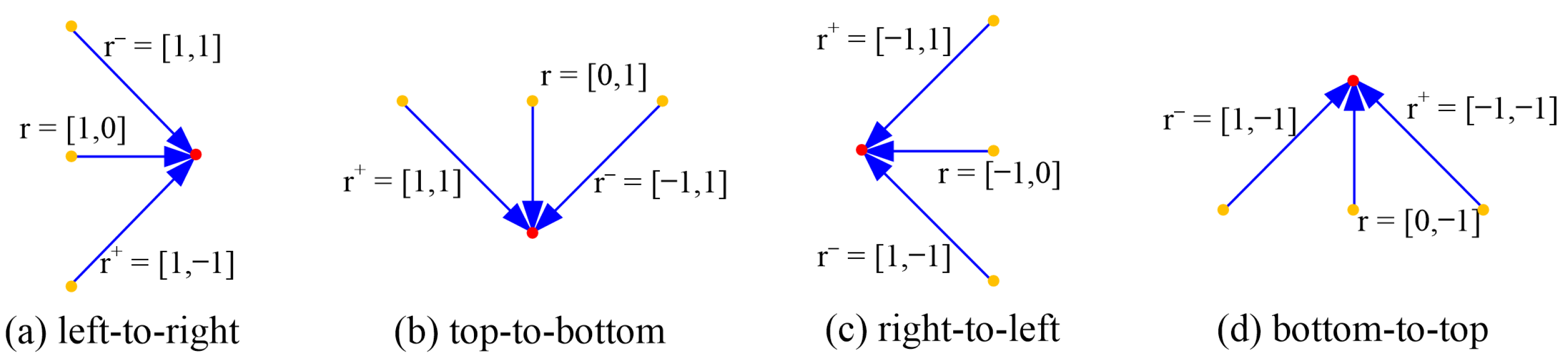

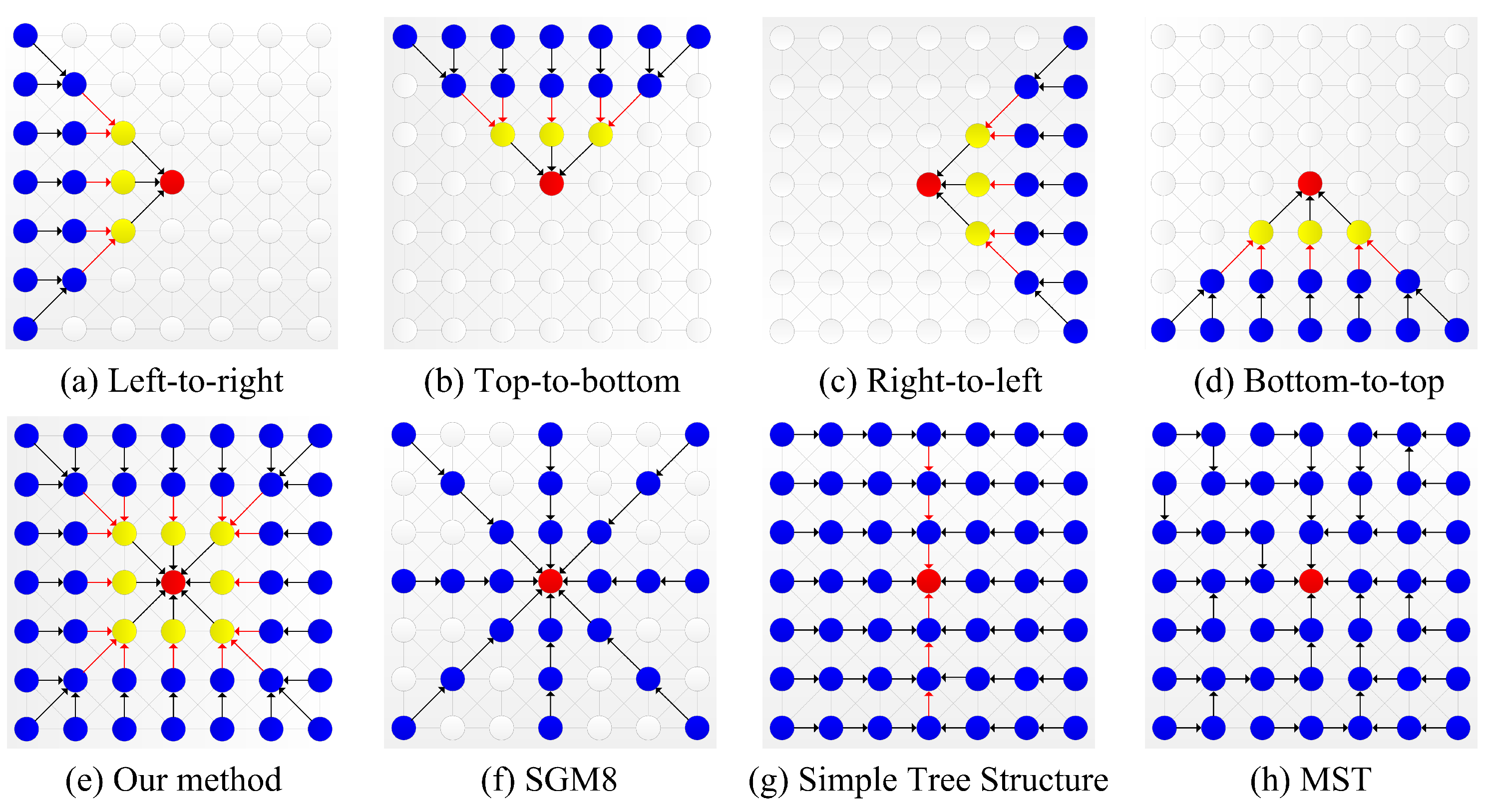
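As a point of reference for this section, the standard per-path cost aggregation of SGM [22] can be sketched as below. This is a minimal illustration and not the authors' implementation: the function name `aggregate_left_to_right`, the penalty values `p1` and `p2`, and the random cost volume are assumptions chosen for the example, and only a single left-to-right path is shown.

```python
import numpy as np


def aggregate_left_to_right(cost, p1=1.0, p2=8.0):
    """Aggregate a matching-cost volume along one left-to-right path.

    cost: array of shape (H, W, D) holding per-pixel matching costs.
    p1:   penalty for a disparity change of one level (assumed value).
    p2:   penalty for larger disparity jumps (assumed value).
    """
    h, w, d = cost.shape
    agg = np.empty_like(cost, dtype=np.float64)
    agg[:, 0, :] = cost[:, 0, :]  # the path starts at the left image border

    for x in range(1, w):
        prev = agg[:, x - 1, :]                     # (H, D) costs of the previous pixel
        prev_min = prev.min(axis=1, keepdims=True)  # (H, 1) best previous cost

        # Previous costs at d-1 and d+1, padded with +inf at the disparity borders.
        minus = np.full_like(prev, np.inf)
        minus[:, 1:] = prev[:, :-1]
        plus = np.full_like(prev, np.inf)
        plus[:, :-1] = prev[:, 1:]

        # Cheapest transition: same disparity, +/-1 level (p1), or any jump (p2).
        best = np.minimum(np.minimum(prev, prev_min + p2),
                          np.minimum(minus, plus) + p1)

        # Subtracting prev_min keeps the aggregated values bounded along the path.
        agg[:, x, :] = cost[:, x, :] + best - prev_min
    return agg


# Usage: aggregate a random cost volume and pick the winner-take-all disparity.
rng = np.random.default_rng(0)
volume = rng.random((4, 6, 5))
disparity = aggregate_left_to_right(volume).argmin(axis=2)
```

A full SGM pass repeats this recurrence over several path directions and sums the results before the winner-take-all step. Each pixel and disparity pair is visited once per path, which is consistent with the O(MND) entry listed for SGM [22] in the complexity table.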

2.2. Omni-Directional SGM

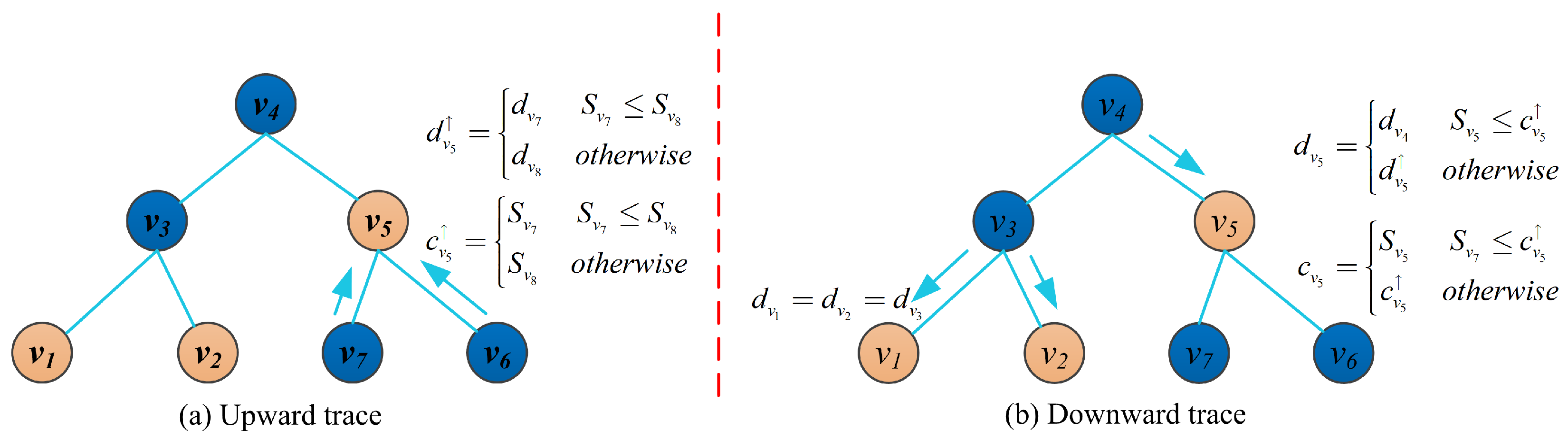

2.2.1. Cost Aggregation on Each Tree

2.2.2. Integrate Results from Multiple Directions

2.3. Cost Volume Update Scheme

2.4. Stable Disparity Propagation along the MST

3. Experiments

3.1. Parameter Settings

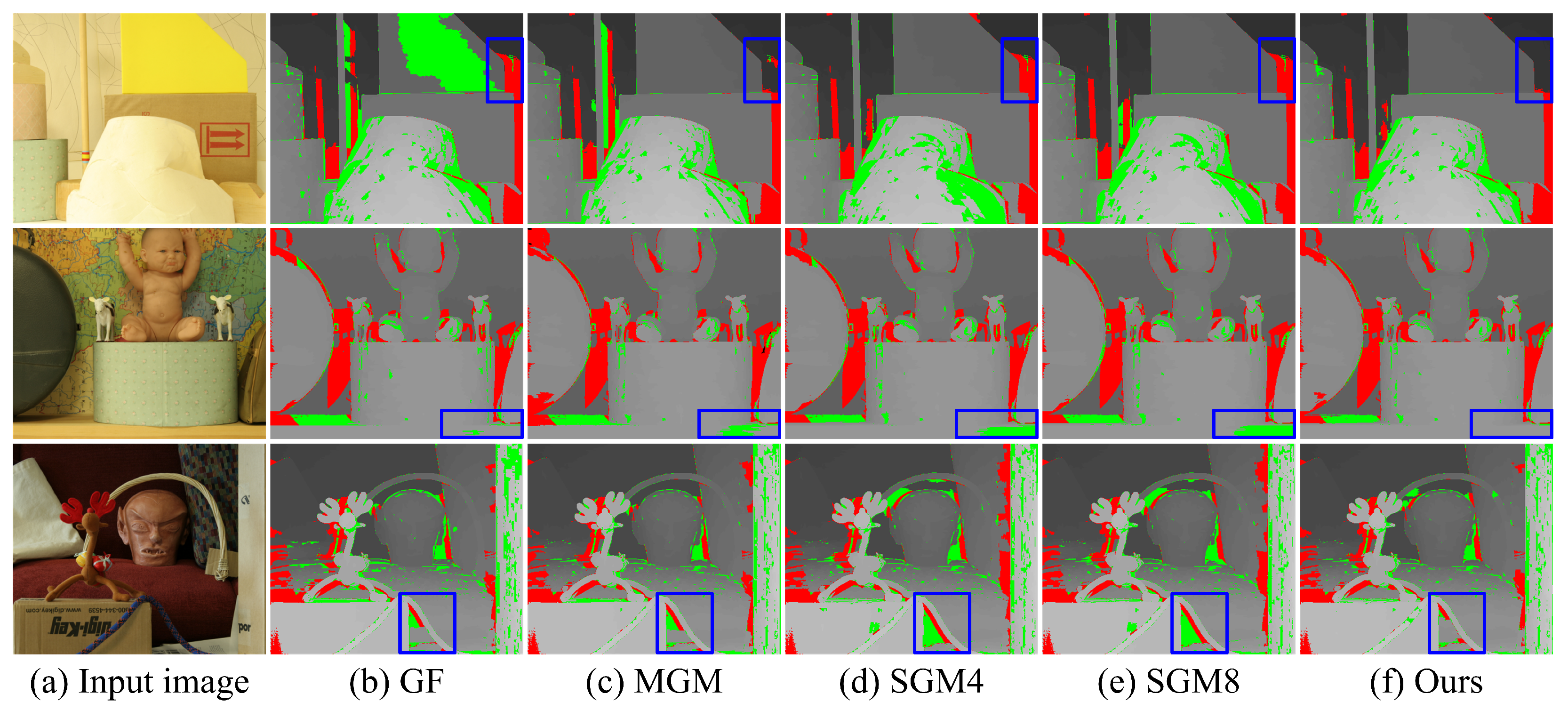

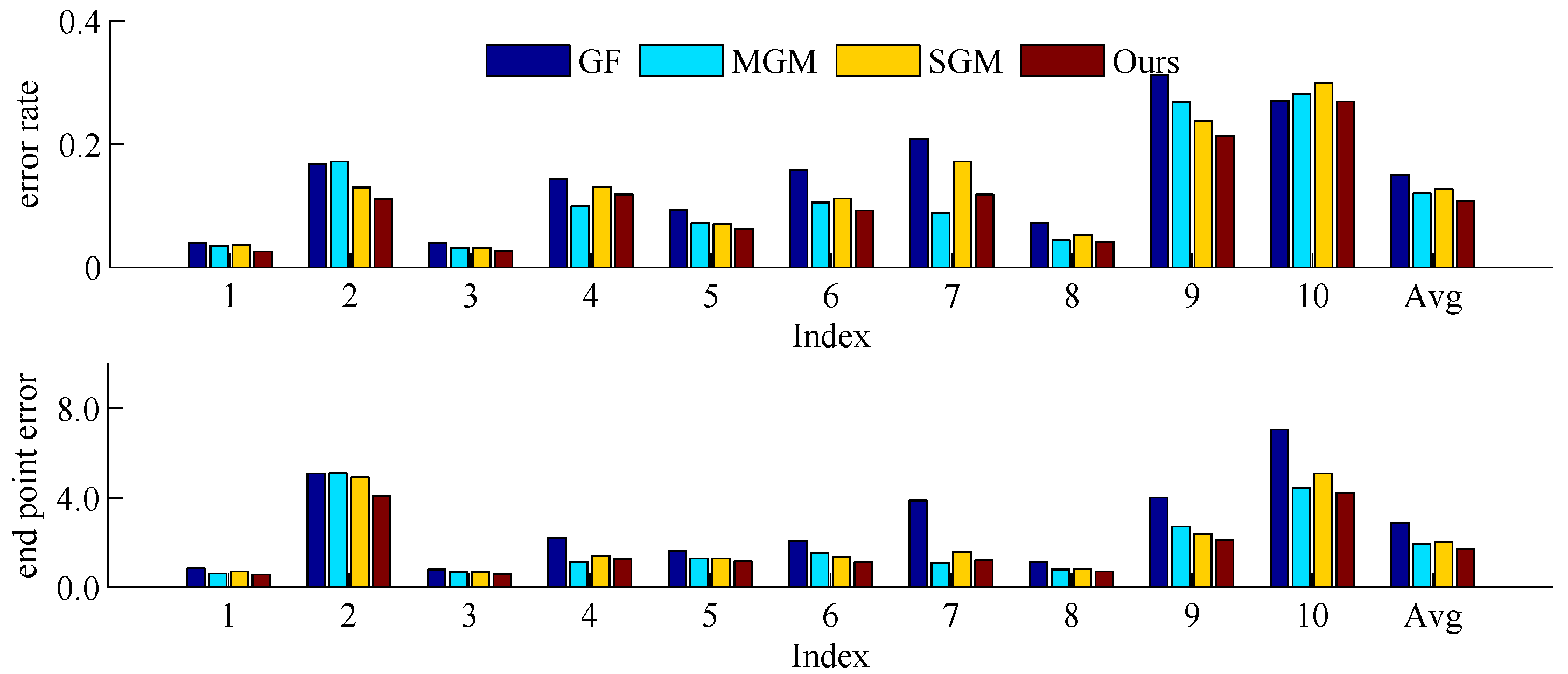

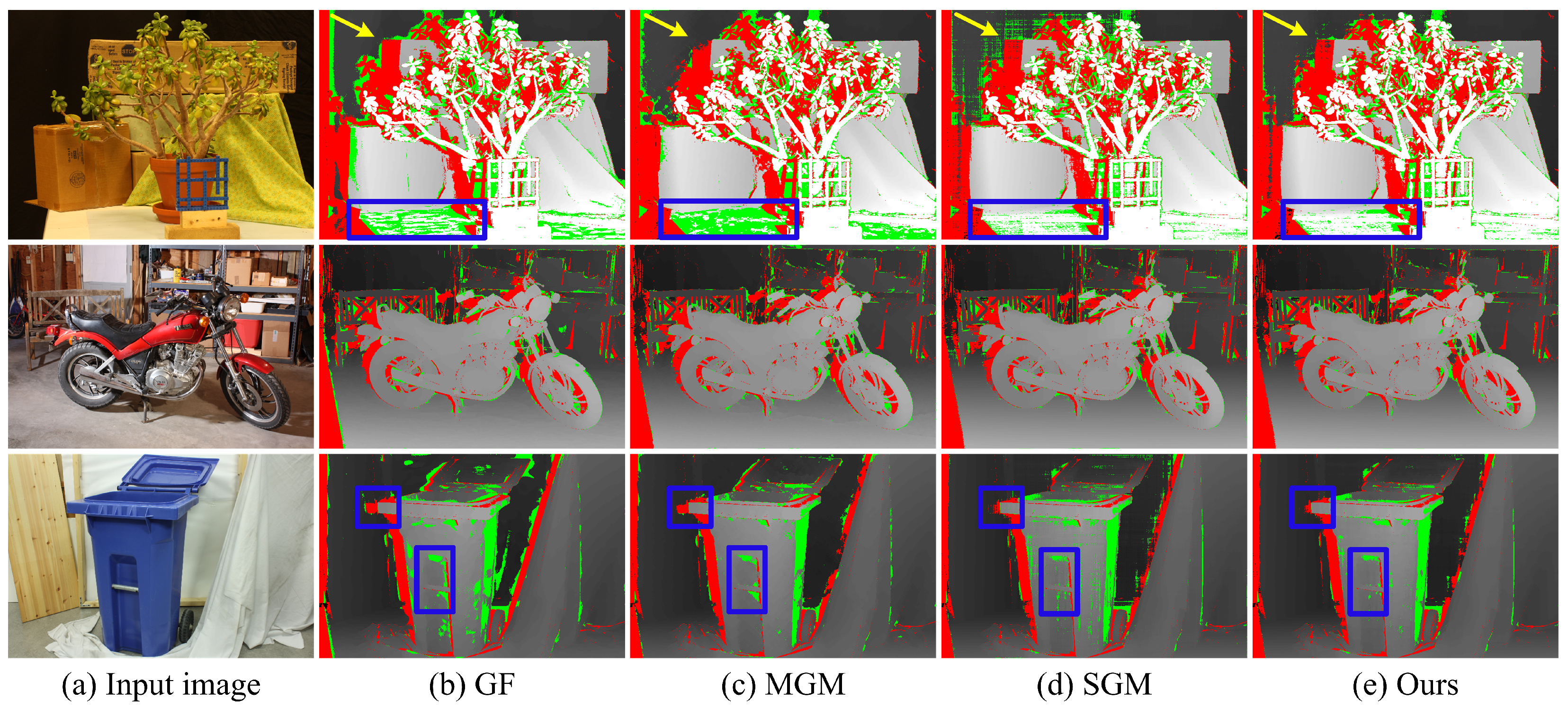

3.2. Middlebury Dataset

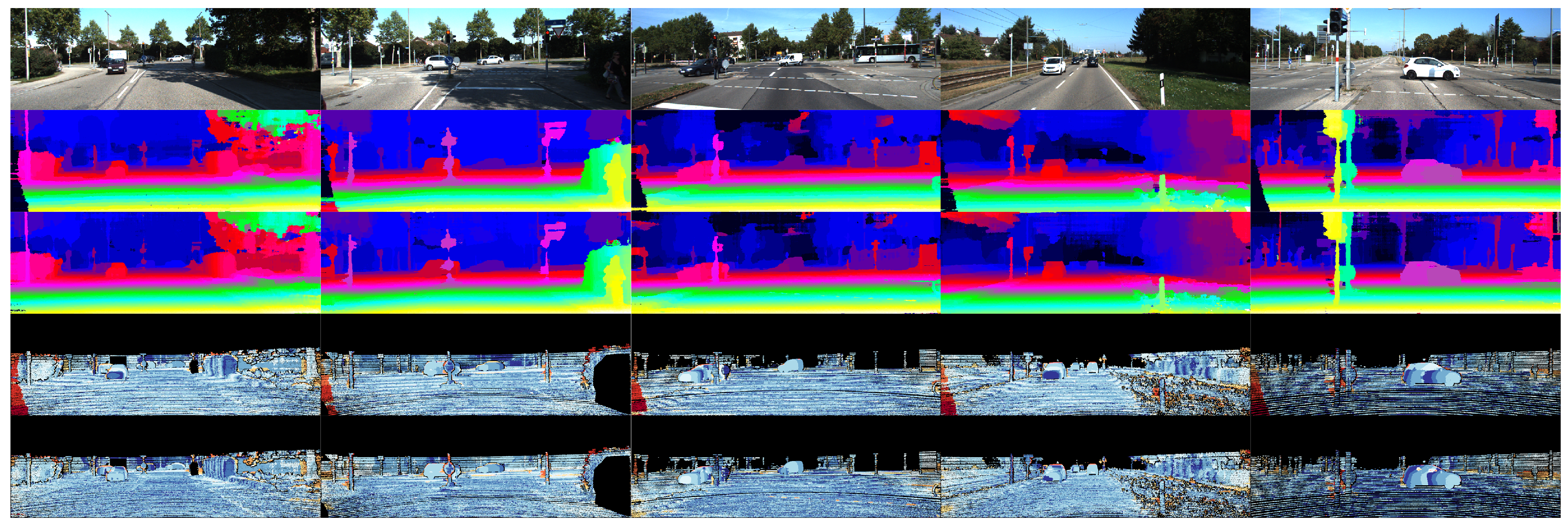

3.3. KITTI Dataset

4. Conclusions and Remarks

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BF | Bilateral filter |
| GF | Guided filter |
| NL | Non-local filter |
| ST | Segment-tree filter |
| MGM | More global Semi-global Matching |

References

1. Caetano, F.; Carvalho, P.; Cardoso, J. Deep Anomaly Detection for In-Vehicle Monitoring—An Application-Oriented Review. Appl. Sci. 2022, 12, 10011.

2. Shehzadi, T.; Hashmi, K.A.; Pagani, A.; Liwicki, M.; Stricker, D.; Afzal, M.Z. Mask-Aware Semi-Supervised Object Detection in Floor Plans. Appl. Sci. 2022, 12, 9398.

3. Xu, B.; Sun, Y.; Meng, X.; Liu, Z.; Li, W. MreNet: A Vision Transformer Network for Estimating Room Layouts from a Single RGB Panorama. Appl. Sci. 2022, 12, 9696.

4. Zhang, K.; Fang, Y.; Min, D.; Sun, L.; Yang, S.; Yan, S.; Tian, Q. Cross-scale cost aggregation for stereo matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1590–1597.

5. Hosni, A.; Rhemann, C.; Bleyer, M.; Rother, C.; Gelautz, M. Fast Cost-Volume Filtering for Visual Correspondence and Beyond. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 504–511.

6. Tan, X.; Sun, C.; Wang, D.; Guo, Y.; Pham, T.D. Soft cost aggregation with multi-resolution fusion. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 17–32.

7. Yang, Q. Stereo Matching Using Tree Filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 834–846.

8. Lu, J.; Shi, K.; Min, D.; Lin, L.; Do, M.N. Cross-based local multipoint filtering. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 430–437.

9. Scharstein, D.; Szeliski, R. A taxonomy and evaluation of dense two-frame stereo correspondence algorithms. Int. J. Comput. Vis. 2002, 47, 7–42.

10. Taniai, T.; Matsushita, Y.; Sato, Y.; Naemura, T. Continuous 3D label stereo matching using local expansion moves. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 2725–2739.

11. Kwatra, V.; Schödl, A.; Essa, I.; Turk, G.; Bobick, A. Graphcut textures: Image and video synthesis using graph cuts. ACM Trans. Graph. 2003, 22, 277–286.

12. Yang, Q.; Wang, L.; Yang, R.; Stewénius, H.; Nistér, D. Stereo matching with color-weighted correlation, hierarchical belief propagation, and occlusion handling. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 31, 492–504.

13. Yoon, K.J.; Kweon, I.S. Adaptive support-weight approach for correspondence search. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 650–656.

14. He, K.; Sun, J.; Tang, X. Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 1397–1409.

15. Yang, Q. A non-local cost aggregation method for stereo matching. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 1402–1409.

16. Mei, X.; Sun, X.; Dong, W.; Wang, H.; Zhang, X. Segment-tree based cost aggregation for stereo matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 313–320.

17. Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282.

18. Bu, P.; Zhao, H.; Jin, Y.; Ma, Y. Linear Recursive Non-Local Edge-Aware Filter. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 1751–1763.

19. Boykov, Y.; Kolmogorov, V. An experimental comparison of min-cut/max-flow algorithms for energy minimization in vision. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 1124–1137.

20. Szeliski, R.; Zabih, R.; Scharstein, D.; Veksler, O.; Kolmogorov, V.; Agarwala, A.; Tappen, M.; Rother, C. A comparative study of energy minimization methods for Markov random fields with smoothness-based priors. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 1068–1080.

21. Felzenszwalb, P.F.; Huttenlocher, D.P. Efficient belief propagation for early vision. Int. J. Comput. Vis. 2006, 70, 41–54.

22. Hirschmüller, H. Stereo Processing by Semiglobal Matching and Mutual Information. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 328–341.

23. Gehrig, S.K.; Eberli, F.; Meyer, T. A real-time low-power stereo vision engine using semi-global matching. In Proceedings of the International Conference on Computer Vision Systems, Liège, Belgium, 13–15 October 2009; Springer: Berlin/Heidelberg, Germany, 2009; pp. 134–143.

24. Hermann, S.; Klette, R. Iterative semi-global matching for robust driver assistance systems. In Proceedings of the Asian Conference on Computer Vision, Daejeon, Korea, 5–9 November 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 465–478.

25. Michael, M.; Salmen, J.; Stallkamp, J.; Schlipsing, M. Real-time stereo vision: Optimizing semi-global matching. In Proceedings of the 2013 IEEE Intelligent Vehicles Symposium (IV), Gold Coast, QLD, Australia, 23–26 June 2013; pp. 1197–1202.

26. Rahnama, O.; Cavalleri, T.; Golodetz, S.; Walker, S.; Torr, P. R3SGM: Real-time raster-respecting semi-global matching for power-constrained systems. In Proceedings of the 2018 International Conference on Field-Programmable Technology (FPT), Naha, Japan, 10–14 December 2018; pp. 102–109.

27. Steinbrücker, F.; Pock, T.; Cremers, D. Large displacement optical flow computation without warping. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 1609–1614.

28. Hernandez-Juarez, D.; Chacón, A.; Espinosa, A.; Vázquez, D.; Moure, J.C.; López, A.M. Embedded real-time stereo estimation via semi-global matching on the GPU. Procedia Comput. Sci. 2016, 80, 143–153.

29. Schönberger, J.L.; Sinha, S.N.; Pollefeys, M. Learning to fuse proposals from multiple scanline optimizations in semi-global matching. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 739–755.

30. Facciolo, G.; De Franchis, C.; Meinhardt, E. MGM: A significantly more global matching for stereovision. In Proceedings of the BMVC 2015, Swansea, UK, 7–10 September 2015.

31. Kallwies, J.; Engler, T.; Forkel, B.; Wuensche, H.J. Triple-SGM: Stereo Processing using Semi-Global Matching with Cost Fusion. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Pitkin, CO, USA, 1–5 March 2020; pp. 192–200.

32. Seki, A.; Pollefeys, M. SGM-Nets: Semi-Global Matching with Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6640–6649.

33. Bleyer, M.; Gelautz, M. Simple but effective tree structures for dynamic programming-based stereo matching. In Proceedings of the International Conference on Computer Vision Theory and Applications, Funchal, Portugal, 22–25 January 2008; Volume 2, pp. 415–422.

34. Veksler, O. Stereo correspondence by dynamic programming on a tree. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 2, pp. 384–390.

35. Scharstein, D.; Szeliski, R. High-accuracy stereo depth maps using structured light. In Proceedings of the 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Madison, WI, USA, 18–20 June 2003; Volume 1, pp. 195–202.

36. Scharstein, D.; Hirschmüller, H.; Kitajima, Y.; Krathwohl, G.; Nešić, N.; Wang, X.; Westling, P. High-resolution stereo datasets with subpixel-accurate ground truth. In Proceedings of the German Conference on Pattern Recognition, Münster, Germany, 2–5 September 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 31–42.

37. Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

38. Menze, M.; Geiger, A. Object scene flow for autonomous vehicles. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3061–3070.

39. Scharstein, D.; Pal, C. Learning conditional random fields for stereo. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

40. Chang, J.R.; Chen, Y.S. Pyramid stereo matching network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5410–5418.

41. Nguyen, V.D.; Nguyen, D.D.; Lee, S.; Jeon, J.W. Local Density Encoding for Robust Stereo Matching. IEEE Trans. Circuits Syst. Video Technol. 2014, 24, 2049–2062.

42. Spangenberg, R.; Langner, T.; Rojas, R. Weighted semi-global matching and center-symmetric census transform for robust driver assistance. In Proceedings of the International Conference on Computer Analysis of Images and Patterns, York, UK, 27–29 August 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 34–41.

43. Schuster, R.; Bailer, C.; Wasenmüller, O.; Stricker, D. Combining Stereo Disparity and Optical Flow for Basic Scene Flow. In Proceedings of the 5th Commercial Vehicle Technology Symposium, Berlin, Germany, 13–15 March 2018; pp. 90–101.

| Method | Computational Complexity | Parallelization | Pixels under Consideration |
|---|---|---|---|
| SGM [22] | O(MND) | Yes | Few |
| MGM [30] | O(MND) | No | All |
| Our method | O(MND) | Yes | All |

| Stereo pair | BF | GF | NL | ST | MGM | SGM4 | SGM8 | Ours |
|---|---|---|---|---|---|---|---|---|
| Aloe | 6.93 | 5.29 | 4.79 | 4.79 | 4.44 | 4.29 | 4.26 | 4.19 |
| Baby1 | 4.26 | 3.62 | 7.47 | 4.10 | 2.65 | 2.84 | 2.67 | 2.33 |
| Baby2 | 3.47 | 3.39 | 11.94 | 13.36 | 1.87 | 4.03 | 2.54 | 2.38 |
| Baby3 | 4.47 | 3.98 | 5.00 | 4.29 | 4.50 | 5.42 | 5.23 | 3.18 |
| Bowling1 | 10.38 | 9.36 | 19.49 | 19.44 | 11.20 | 13.64 | 14.79 | 7.56 |
| Bowling2 | 5.84 | 4.70 | 8.21 | 8.25 | 8.38 | 4.89 | 4.78 | 3.37 |
| Cloth1 | 3.19 | 1.20 | 0.62 | 0.63 | 0.96 | 0.73 | 0.67 | 1.15 |
| Cloth2 | 6.11 | 1.89 | 3.87 | 3.74 | 2.25 | 2.47 | 4.29 | 2.75 |
| Cloth3 | 3.39 | 1.70 | 2.39 | 2.57 | 1.92 | 2.09 | 2.06 | 2.35 |
| Cloth4 | 3.23 | 2.92 | 1.81 | 1.80 | 1.97 | 1.99 | 2.00 | 2.21 |
| Flowerpots | 8.33 | 7.52 | 13.56 | 10.13 | 7.42 | 12.17 | 11.21 | 7.84 |
| Lampshade1 | 9.36 | 7.81 | 8.64 | 9.23 | 6.29 | 9.37 | 6.58 | 4.85 |
| Lampshade2 | 17.11 | 16.42 | 11.80 | 11.56 | 8.57 | 9.80 | 8.80 | 6.49 |
| Rocks1 | 5.05 | 2.75 | 2.50 | 2.42 | 1.75 | 1.73 | 1.65 | 1.96 |
| Rocks2 | 4.78 | 1.21 | 1.70 | 1.67 | 1.37 | 1.64 | 1.59 | 1.50 |
| Wood1 | 5.55 | 3.22 | 8.49 | 4.41 | 4.38 | 1.47 | 1.82 | 1.73 |
| Wood2 | 1.91 | 1.38 | 2.05 | 2.57 | 1.33 | 2.27 | 3.04 | 1.29 |
| Art | 8.89 | 8.00 | 9.13 | 9.03 | 8.79 | 8.03 | 8.12 | 6.17 |
| Books | 11.60 | 8.80 | 10.44 | 10.01 | 8.95 | 8.30 | 8.10 | 6.92 |
| Cones | 4.37 | 2.33 | 3.53 | 3.83 | 5.36 | 4.70 | 4.64 | 3.78 |
| Dolls | 6.15 | 4.15 | 4.97 | 4.51 | 3.94 | 5.25 | 5.10 | 4.22 |
| Laundry | 12.21 | 11.31 | 10.46 | 10.42 | 15.59 | 15.60 | 15.10 | 11.70 |
| Moebius | 9.77 | 8.35 | 7.52 | 7.37 | 8.76 | 9.66 | 9.67 | 7.20 |
| Reindeer | 8.93 | 6.26 | 9.50 | 7.55 | 5.41 | 6.94 | 6.69 | 4.90 |
| Teddy | 7.30 | 6.35 | 5.17 | 5.64 | 9.51 | 7.28 | 7.41 | 6.62 |
| Tsukuba | 2.34 | 2.30 | 1.76 | 2.04 | 2.53 | 2.58 | 2.34 | 1.48 |
| Venus | 0.83 | 0.64 | 0.61 | 0.76 | 1.27 | 1.14 | 1.10 | 1.07 |
| Average | 6.51 | 5.07 | 6.57 | 6.15 | 5.24 | 5.56 | 5.42 | 4.10 |

| Method | Initial Disparity, O_noc | Initial Disparity, O_all | Final Disparity, O_noc | Final Disparity, O_all |
|---|---|---|---|---|
| NL [7] | 6.57% | 17.20% | 6.64% | 12.67% |
| ST [16] | 6.15% | 17.34% | 6.27% | 12.30% |
| SGM4 [22] | 5.56% | 18.34% | 4.59% | 11.05% |
| SGM8 [22] | 5.42% | 18.17% | 4.78% | 11.21% |
| MGM [30] | 5.24% | 17.62% | 4.47% | 11.21% |
| Our method | 4.10% | 17.43% | 3.71% | 10.57% |

| Method | Initial O_all | Initial A_all | Initial O_noc | Initial A_noc | Final O_all | Final A_all | Final O_noc | Final A_noc |
|---|---|---|---|---|---|---|---|---|
| NL [15] | 13.87 | 3.80 | 11.67 | 2.45 | 11.57 | 1.97 | 10.26 | 1.75 |
| ST [16] | 15.66 | 3.86 | 13.50 | 2.53 | 13.44 | 2.15 | 12.16 | 1.93 |
| SGM [22] | / | / | / | / | 9.13 | 2.00 | 7.64 | 1.80 |
| LDESGM [41] | / | / | / | / | 8.22 | 2.40 | 6.01 | 1.40 |
| iSGM [24] | / | / | / | / | 7.15 | 2.10 | 5.11 | 1.20 |
| wSGM [42] | / | / | / | / | 6.18 | 1.60 | 4.97 | 1.30 |
| MGM [30] | 12.03 | 3.10 | 9.84 | 2.00 | 11.02 | 2.59 | 8.77 | 1.61 |
| LRNL [18] | 9.29 | 2.88 | 7.49 | 2.00 | 8.51 | 2.07 | 7.14 | 1.67 |
| Our_Feat | 7.52 | 2.59 | 5.24 | 1.49 | 6.83 | 2.24 | 4.49 | 1.29 |
| Our_Cen | 8.45 | 2.52 | 6.14 | 1.37 | 6.42 | 1.57 | 4.96 | 1.16 |

| Method | Initial O_all | Initial A_all | Initial O_noc | Initial A_noc | Final O_all | Final A_all | Final O_noc | Final A_noc |
|---|---|---|---|---|---|---|---|---|
| NL [15] | 11.30 | 2.57 | 10.09 | 2.11 | 8.91 | 1.66 | 8.61 | 1.64 |
| ST [16] | 12.57 | 2.68 | 11.37 | 2.22 | 9.97 | 1.74 | 9.68 | 1.72 |
| SGM [22] | / | / | / | / | 10.86 | / | 8.92 | / |
| SFSGM [43] | / | / | / | / | 13.37 | / | 11.93 | / |
| MFSGM [28] | / | / | / | / | 8.24 | / | 6.91 | / |
| MGM [30] | 13.17 | 2.57 | 12.01 | 2.15 | 11.79 | 2.40 | 10.66 | 1.92 |
| LRNL [18] | 7.79 | 2.29 | 7.06 | 2.05 | 6.35 | 1.55 | 6.13 | 1.53 |
| Our_Feat | 8.07 | 2.07 | 6.88 | 1.65 | 6.90 | 1.78 | 5.74 | 1.43 |
| Our_Cen | 8.09 | 1.92 | 6.85 | 1.50 | 5.88 | 1.30 | 5.55 | 1.25 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ma, Y.; Tian, A.; Bu, P.; Liu, B.; Zhao, Z. Omni-Directional Semi-Global Stereo Matching with Reliable Information Propagation. Appl. Sci. 2022, 12, 11934. https://doi.org/10.3390/app122311934
