Abstract

Providing access to and the protection of cultural goods—intangible and tangible heritage—is carried out primarily by institutions such as museums, galleries or local cultural centres where temporary exhibitions are shown. The international community also attempts to protect architectural objects or entire urban layouts, raising their status by inscribing them on the UNESCO World Heritage List. Contemporary museums, however, are not properly prepared to make museum exhibits available to the blind and visually impaired, which is confirmed by both the literature studies on the subject and the occasional solutions that are put in place. The development of various computer graphics technologies allows for the digitisation of cultural heritage objects by 3D scanning. Such a record, after processing, can be used to create virtual museums accessible via computer networks, as well as to make copies of objects by 3D printing. This article presents an example of the use of scanning, modelling and 3D printing to prepare prototypes of copies of museum objects from the Silk Road area, dedicated to blind people and to be recognised by touch. The surface of an object has information about it written in Braille before the copy-making process is initiated. The results of the pilot studies carried out on a group of people with simulated visual impairment and on a person who is blind from birth indicate that 3D models printed on 3D replicators with the fused filament fabrication technology are useful for sharing cultural heritage objects. The models are light—thanks to which they can be freely manipulated, as well as having the appropriate smoothness—which enables the recognition of decorative details present on them, as well as reading texts in Braille. Integrating a copy of an exhibit with a description about it in Braille into one 3D object is an innovative solution that should contribute to a better access to cultural goods for the blind.

1. Introduction

Museums across the world are institutions that primarily collect various types of material heritage. Some of these collections are made available in the form of permanent exhibitions, thematic collections or temporary exhibitions. Most people perceive art and museum exhibitions in a visual context: an exhibit, an explanatory text about the object and, more recently, an individual audio receiver with a recording in the visitor’s language or a QR code that allows one to connect via the Internet to the recording on one’s own smartphone. Traditional exhibitions are widely available to people without disabilities and to people with mobility impairments. However, not everyone is able to take in such displays. The real challenge is to make at least some of the exhibits accessible to visually impaired people. The classic way of securing exhibit items at exhibitions is to place them in tightly closed cabinets (the easiest way to protect them against damage or theft), which means that visually impaired or blind people have no chance of getting acquainted with them.

It is currently estimated that there are one billion people worldwide with some form of blindness or visual impairment [1], and the number is increasing. Eye diseases cause limitations in everyday life as well as exclusion from social and cultural life. The vast majority of museums are not adapted to serve people with visual impairments. There is a lack of technical preparation, proper labelling of museum rooms, qualified personnel, proper form of information about events and, most importantly, the possibility of a kinaesthetic knowledge of objects.

According to European Union (EU) law, public institutions are required to adapt their facilities to welcome disabled people. This applies both to people with physical disabilities and to those with sight and hearing impairments. However, these regulations are not respected, so directors of institutions are not motivated to introduce changes. The problem is not financial, but lies in the insufficient flow of information about the available technologies for creating displays for the blind [2]. A description of the various activities of museums in the EU and around the world that try to adapt their displays for blind and visually impaired people is presented in Section 2. Adapting museums for visually impaired people is relatively inexpensive. It does not require the construction of special driveways, the installation of elevators or any major architectural changes.

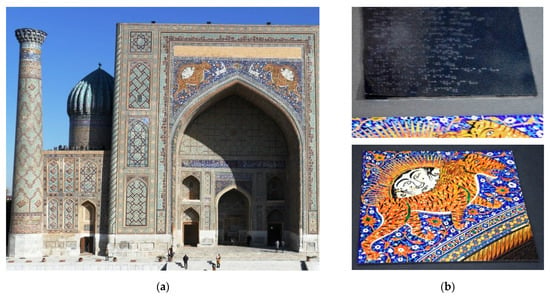

For many people, a visit to a museum can make them feel left out. The traditional display of museum items in glass cases, even when accompanied by information written in Braille, is completely insufficient for blind or visually impaired people (light reflections significantly impede the observation of the object). The authors encountered such examples in places inscribed on the UNESCO World Heritage List, e.g., in the Shahrisabz Museum of History and Material Culture (Shahrisabz, Uzbekistan), or a museum created in the area of Greco-Roman excavations in Nea Paphos (Cyprus). A much better solution for presenting exhibits to the blind can be found in the museum of Registan (Samarkand, Uzbekistan). The object is a fragment of the famous mosaics on the front of the Sher-Dor Madrasah ivan showing lions, gazelles and the sun, as seen in Figure 1a. The exhibit for the blind is a miniature whose surface has a different texture depending on which object it represents. This solution allows one to feel the outline and shape of individual objects. The exhibit for the blind is also accompanied by an additional description in Braille placed next to it, as seen in Figure 1b.

Figure 1.

Mosaic from Sher-Dor Madrasah, Registan Ensemble (Samarkand): (a) real object; (b) miniature for tactile cognition intended for visually impaired people.

Each museum has a wealth of fascinating objects that can be presented to viewers in a new convention. Audio and Braille descriptions are good branding tools for the exhibits on display and can help visitors better understand the purpose and history of an object. Contemporary exhibitions must also take care of activating other senses, for example, touch or smell, which will further enhance the experiences of visitors with a visual impairment. Personal contact with a museum artefact—kinaesthetic cognition or the narration of a guide who is able to paint vivid mental pictures—is much more attractive to a person. Museums must communicate with the visually impaired. They need to know their problems and listen to and work with them to understand what their real needs are. Thanks to these changes, museums can make the blind and partially sighted feel socially needed and make them a part of society [3].

The aim of this article is to develop a methodology for activities using 3D computer technologies to prepare copies of historic objects intended to be known by touch for people with visual impairments. This work presents copies made using 3D printing in FFF (fused filament fabrication) technology with the use of 3D modelling and the 3D scanning process. An important aspect of this article is that descriptions made in Braille were incorporated onto the surfaces of the 3D model copy, and furthermore, a pilot study was carried out with the participation of a person who was blind from birth.

2. Related Works

Some museums meet these problems by adapting their exhibitions to the blind and visually impaired and try to introduce to their offer various new solutions to make their exhibits available. The authors of [4] propose a multidisciplinary approach of supplementing the information about historic buildings on museum websites with content for visually impaired people. Such action, however, does not allow for a direct contact with the described object. Research conducted with the participation of visually impaired people shows that museum websites have a low degree of functionality [5]. It is possible for these people to get to know historic objects only by touching them [6], but most museums do not allow such a solution due to the risk of damage to the exhibit.

Study [7] reports research on the behaviour of visitors interacting with replicas of historic museum objects made with additive technology. The research has so far been conducted without the participation of blind people at the Burke Museum of Natural History and Culture in Seattle. For the purposes of the research, copies of four small museum objects were prepared, the size of which did not exceed 15 cm. The obtained results confirm that getting to know the exhibits using the sense of touch is an encouraging and interesting activity, and the copies made with the use of additive technology are safe and work well in practice.

In many museums around the world, low light intensity is used, which is intended to create an appropriate atmosphere for the exhibition, known as the play of lights. Viewing exhibits in such conditions is not convenient for many people, especially if one additionally needs to read information about the object. This situation is burdensome for elderly people with severe myopia. The management of many modern museums are aware of this problem and try to solve it in many different ways. The Mary Rose Museum, located in Historic Dockyards in Portsmouth, UK, hosts monthly dedicated shows for the visually impaired. On this day, the level of light is higher than usual, the sound effects are adapted to the environment and specially trained staff help visitors. Moreover, the museum also uses tactile materials, offering copies of its exhibits as 3D printed models [8]. The Tiflologico Museum located in Madrid, Spain, is a tactile museum created by the Spanish National Organisation for the Blind (ONCE, Organización Nacional de Ciegos de España). The main goal of this museum is to offer blind or partially sighted people normal access to the exhibits by creating a so-called museum without a visual barrier. The museum offers a wide variety of exhibitions, including models of famous buildings and the history of Braille and tactile art (created by visually impaired artists) that can be experienced both by sight and by kinaesthetic cognition [9]. Similar exhibitions are organised by the Victoria and Albert Museum (V&A), London’s largest arts and crafts museum, which was founded in 1852 as the South Kensington Museum [10]. Once a month, the museum offers a descriptive presentation and a tour of its car exhibition especially for visually impaired people.
The museum also offers extensive information (also available online), dedicated guide services for the blind, Braille books and audio descriptions as well as multisensory backpacks containing objects to be known by touch, such as 3D models [11]. Direct contact with the exhibits is also possible in the DeCordova Sculpture Park (Lincoln, MA, USA), where many contemporary outdoor sculptures are located. Visually impaired people can visit the Sculpture Park through a touch tour [12]. The National Gallery in Prague (Czechia) offers visually impaired visitors the opportunity to “touch”, in a virtual world, three sculptural masterpieces considered among the greatest in the world. Thanks to the appropriate feedback gloves, blind and visually impaired people can “touch” in the VR world digital replicas of the head of Nefertiti, the Venus de Milo and Michelangelo’s David [13]. Specialised educational programmes for the blind are implemented in Turkey. “Istanbul Modern The Colour I Touch” is a programme for blind and partially sighted children and adolescents. It allows for guided tours of exhibitions, participation in specialist workshops and screenings of films with audio descriptions [14]. The Van Loon House Museum (Amsterdam, The Netherlands) focuses on the kinaesthetic cognition of the exhibits. The museum is open 7 days a week and offers guided tours where one can touch the selected exhibits from the shared collection [15]. The Touch Museum, established in 1984 and located in Kallithea (Greece) in the historic building “Lighthouse for the Blind of Greece”, has collected many interesting museum artefacts that can be explored kinaesthetically. All the objects in the museum are copies of the originals exhibited in other museums around the country and, importantly, they can be explored by touch [16].
The City Museum Trier (“Stadtmuseum Trier”) [17] introduced audio tracks to tactile reproductions and replicas of museum exhibits dedicated to the visually impaired and blind. Article [18] describes the construction of a device intended for visually impaired people. It consists of a ring put on a finger, NFC sensors placed on the surface of the printed 3D model (in FFF technology) and an application for a tablet or smartphone. During kinaesthetic cognition, coupling occurs between the ring and the sensor, which activates the soundtrack through an application running on the smartphone. The authors used scanning and 3D printing to prepare the 3D model.

Study [19] is theoretical and cognitive in nature and concerns collecting opinions from blind people visiting museums in order to collect guidelines for creating tactile exhibits. The authors pay special attention to obtaining the appropriate copy surfaces of the exhibits and the materials used to make them. The authors of [20] emphasise the importance of 3D printing technology in museology, which in the future will lead to a paradigm shift in the museum model. In the future, museum objects or their copies will be accessible by touch. Multisensory forms of communing with culture will be important for the accessibility of cultural heritage, especially for people with learning difficulties, children, the elderly, blind or partially sighted people. As for today, it should be said that the standards for the transfer of 3D objects to multi-sensory feeling have not yet been developed. The proposed solutions require refinement, testing and examination. Publication [21] describes an interactive audio guide using a set of cameras identifying the depression of the analysed surface. The device shown was used to analyse the surface of copies of historic reliefs printed with FFF (fused filament fabrication) technology. The interaction between the finger depressions penetrating the relief and the distance read from the camera provides 2.5D spatial information, which results in the transmission of information in the form of audio. The constructed prototype was pre-tested in pilot studies with the participation of visually impaired people. The process of creating 3D models of museum objects using the Structure from Motion (SfM) method is presented in [22]. The authors presented the limitations of the automatic digitisation process (correction of the 3D model mesh, textures, etc.) and the method of verifying the compliance of the resulting digital copy of the exhibit with the original. However, the topic of 3D printing of replicas of museum artefacts was not discussed in the work. 
In [23], the authors used 3D scanning to generate copies of real digital models for kinaesthetic cognition through 3D printing (FFF technology). The digital models were post-processed to increase the recesses and emphasise the protruding elements. Printed models of sculptures and an architectural object were tested with a blind person. A prototype of an interesting solution is presented in [24]. The 3D haptic model of the historic Wenceslas Hill in Olomouc (Czechia) was made with 3D printing technology. In addition, the interaction between the user and the object was achieved by applying layers of conductive material responding to touch on selected surfaces of the model. Activating connections by touch leads to the display of detailed information on the tablet located next to the 3D model.

Creating replicas of museum objects, even with complex shapes, using 3D printing technology is possible thanks to the use of 3D scanning and 3D modelling. Technical and practical aspects concerning the use of various scanning techniques for historic museum exhibits were discussed in previous works [25,26,27,28,29,30]. Three-dimensional scanning using structured light technology was used to scan the statue of Hercules from the Antalya Museum in Turkey and the Khmer head from the Rietberg Museum in Zurich [25]. This work also presents the issues of selecting 3D scanning techniques for historic museum exhibits in terms of costs, experience and training of staff. Article [26] concerns checking the suitability of stationary and mobile 3D laser scanners for transferring archaeological objects to the digital world. The research confirmed the inability to collect information about the texture, and in the case of a mobile scanner there was a need to stick markers, and in the case of a stationary scanner there were limitations regarding the size of the artefacts. The authors of [27], apart from the aforementioned aspects, also deal with the issues of digital reconstruction. Work [28] presents a low-cost small object 3D data acquisition system consisting of a set of Kinect v2 devices cooperating with the open source LiveScan3D software. The system is difficult to transport over long distances (for example, by air transport) due to its large number of components. In article [29], the authors describe a significant number of available technologies and devices that in various situations can be used to acquire 3D data of large and small objects of tangible cultural heritage. Monograph [30] presents both various theoretical aspects concerning the issues of 3D digitisation of architectural objects and museum artefacts, as well as many practical implementations. Another approach, alternative to 3D scanning technology, is described by the authors of [31]. 
The article discusses the creation of digital architectural objects through the use of classic 3D modelling. The replicas made with 3D printing technology were used in a board game to better understand kinaesthetic cognition.

The authors of the articles discussed used 3D models printed with FFF technology for kinaesthetic research, but in the conducted survey research in the form of pilot studies, they did not have a group of blind people. The analysis of the articles shows that the issue of making tangible cultural heritage objects available to blind people is a problem that has not yet been properly resolved, both in terms of organisation and technology.

3. Materials and Methods

3.1. The Use of 3D Printing Technology in Museology

People with visual loss increasingly expect to be able to experience visiting a museum in a similar way to a person with normal eyesight. Scientific research shows that the most important senses receiving stimuli from the environment are the senses of sight and touch [32]. Many museums around the world were closed between 2020 and 2021 due to the COVID-19 pandemic [33]. As a result, the availability of museum exhibitions for the blind was drastically limited. Some museums made selected objects from their collections available by posting photos on their websites. This method is ineffective. However, there is another option for making collections available. The availability of 3D printing technology can significantly help blind people to become fully fledged museum visitors or to experience copies of museum artefacts in their own homes or in local cultural centres. Making files of 3D models of museum exhibits available for download in the STL format makes it possible for people to print them themselves. Thus, it is possible to create a private collection of the most famous and sublime works of art. Some museums provide these files for free. Thanks to the idea of the “open source museum”, the material cultural heritage is consciously shared.

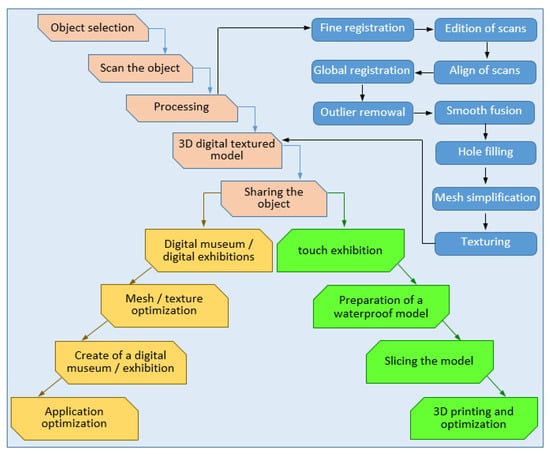

3.2. The Process of Making Museum Exhibits Available

The procedures for making museum objects available are quite complicated, which is why they are presented in the form of a diagram in Figure 2. The diagram shows the developed procedure that allows for the presentation of museum objects in various forms: digital and real copies. The proposed procedure uses 3D scanning technology to obtain digital data about objects and transform them into digital 3D models along with the texturing process. For several years, the authors have been using the 3D scanning technology of museum objects both to protect cultural property and cultural heritage, and to make it available [34,35].

Figure 2.

Procedure for making museum objects available.

The developed procedure proposes two paths for presenting museum objects: (1) digital sharing on websites or creating virtual museums, and (2) 3D printing, which makes it possible to share models with visually impaired people. Each stage is based on knowledge and experience in the field of museology and IT. Some activities are common to both paths, which makes the developed methodology universal.

3.3. Description of Objects

The Afrasiab Museum (Samarkand, Uzbekistan) has in its collection many interesting historical exhibits from the ancient city of Afrasiyab. The city was located in the commercial areas of the Silk Road through which goods were transported from China to Europe. It developed around the 5th century BC and was destroyed in 1220 by the invasion of Genghis Khan [36]. Selected exhibits of this museum have been the subject of research and analysis in various scientific articles [37,38,39]. Work [37] presents a digital reconstruction of a damaged jug, [38] presents an application that allows for the presentation of exhibits from the Afrasiyab Museum in VR, while [39] presents a comparative analysis of obtaining digital 3D models with the use of structured light scanning and the Structure from Motion method. Two objects were selected for this study [40].

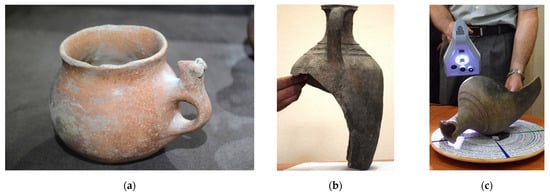

The first object is a damaged clay jug, about 21 cm in diameter and 35 cm high. This object has not been preserved in its entirety. Part of the side wall and the bottom are missing. The fragment of the vessel that has survived has a handle, a neck with a spout and a part of the side wall. The jug does not have any special decorations except for the ribbon, which is below the neck. It was used to transport and store water. The second object is a mug with a handle, also made of clay. The top of the handle is shaped like a ram’s head. The vessel is 8 cm high and 10 cm in diameter. It has been preserved in its entirety and has no visible damage, as seen in Figure 3.

Figure 3.

View of objects for digitisation: (a) mug; (b) jug; (c) work with the Artec Spider scanner.

3.4. Acquisition and Data Processing

Data acquisition was carried out during the first Scientific Expedition of the Lublin University of Technology to Central Asia in 2017 [41] and the University’s fifth such expedition in 2021 [40] to museums in Uzbekistan where both objects were exhibited. The main problem of the 3D digitisation of objects was to build an appropriate place to scan using available furniture, providing access to power for the devices used, relatively good lighting for the artefacts and their safe extraction from showcases, which typically came from the end of the 20th century and were not adapted to opening.

Hand-held 3D scanners working with structured light technology were used to scan the objects. The Artec Spider scanner was used for the jug, and the Artec Eva for the mug. Both scanners allow for relatively fast execution of precise measurements in high resolution and, importantly, do not require sticking positioning markers on the digitised object, which is particularly important when scanning museum objects in in situ conditions. The structured light used is safe for the user and non-invasive for museum artefacts [10,42]. The basic data of these scanners are presented in Table 1.

Table 1.

Comparison of the basic parameters of scanners Artec Eva and Artec Spider.

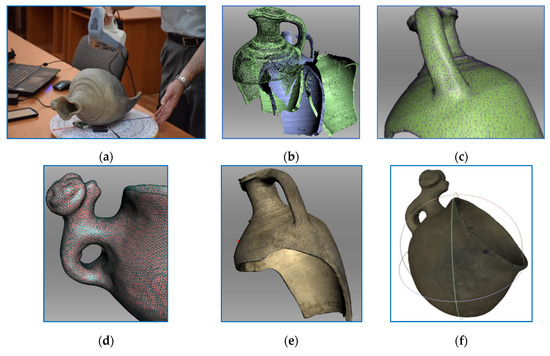

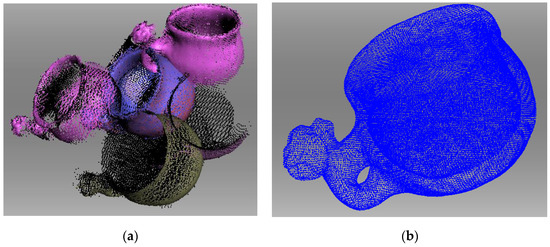

The scanning of the objects in Figure 3 was carried out in in situ conditions. For data acquisition during scanning, the Artec Studio Professional software dedicated to the scanners and a laptop with a 4-core Intel Core i5 processor and 16 GB of RAM were used. Eight scans were made of the jug, which allowed for the precise acquisition of data about the object. The data file in the form of a point cloud was approx. 5 GB in size. Six scans were taken of the mug and a 1.35 GB file was obtained. The processing of the obtained data was carried out according to the procedure presented in Figure 4. The same software as for data acquisition, Artec Studio Professional, was used, as seen in Figure 4b–d.

Figure 4.

View of: (a) the scanning process; (b) data processing from the scanning; (c,d) the 3D mesh model; (e,f) the textured 3D mesh model.

Processing was carried out using a desktop computer with an Intel Core i7 processor, 48 GB of RAM and an NVIDIA Quadro K2200 graphics card with built-in 4 GB GDDR5 memory. The use of equipment with high computing power allowed for efficient data processing activities. As a result, mesh digital 3D models with the texture applied were obtained (Figure 4c–f). The models were exported as files in the .obj format, and textures as .jpg files. The sizes of the obtained files after optimisation were as follows: for the mug model—9.65 MB, for the jug—12.7 MB.

During the data processing, the following stages were followed:

- (i) Registration 1: fine registration, introduction of single scans stored in local coordinate systems;

- (ii) Editing of scans: artefacts were removed using selection tools such as Lasso selection, Cutoff-plane selection and 3D selection;

- (iii) Alignment of scans: scans were adjusted by specifying 3–4 pairs of characteristic points on the scans;

- (iv) Registration 2: global registration, transfer of single scans from local coordinate systems into the global system, Figure 5a,b;

- (v) Fusion 1: outlier removal, object surface points acquired during scanning that are too far from other acquired points are deleted;

- (vi) Fusion 2: smooth fusion, assembling the partial scans into one object in the new coordinate system, generating a mesh model;

- (vii) Postprocessing 1: if there were holes on the surface of the digital model, they were repaired by using the hole filling or fix holes tools;

- (viii) Postprocessing 2: mesh simplification, optimisation of the number of faces in terms of the complexity of the digital model and the size of the final file;

- (ix) Applying texture: mapping the texture obtained when scanning an object to obtain a photorealistic digital model.

Figure 5. View: (a) single scans in local coordinate systems; (b) scans in the global coordinate system.
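The outlier removal of stage (v) can be illustrated with a small sketch. It is not the algorithm used by Artec Studio Professional, whose internals are not described here; it assumes a simple statistical filter in which a point is dropped when its mean distance to its `k` nearest neighbours is much larger than the average for the whole cloud. The function name `remove_outliers` and the parameters `k` and `n_sigma` are hypothetical.

```python
import math
import statistics


def remove_outliers(points, k=3, n_sigma=2.0):
    """Drop points whose mean distance to their k nearest neighbours is unusually large.

    Assumed rule (not taken from the article): keep a point if that mean distance
    is at most mean + n_sigma * standard deviation over the whole cloud.
    """
    mean_knn = []
    for i, p in enumerate(points):
        # Brute-force neighbour search: fine for a toy cloud, too slow for real scans.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_knn.append(sum(dists[:k]) / k)
    limit = statistics.mean(mean_knn) + n_sigma * statistics.pstdev(mean_knn)
    return [p for p, d in zip(points, mean_knn) if d <= limit]


# Toy cloud: a 5 x 5 grid of surface points plus one stray point far from the surface.
cloud = [(float(x), float(y), 0.0) for x in range(5) for y in range(5)]
cloud.append((50.0, 50.0, 50.0))
cleaned = remove_outliers(cloud)
print(len(cloud), "->", len(cleaned))
```

A real point cloud of several gigabytes would need a spatial index (for example a k-d tree) instead of the brute-force distance search used in this sketch.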

3.5. Preparation of 3D Models for Printing

According to Figure 2, digital 3D models can be made available in digital or 3D printed form. The authors of the article have so far focused mainly on the first method of disseminating digital 3D models of museum objects. The objects were made available by publishing them: on the Internet as virtual 3D exhibitions [40], in the form of mobile applications for the presentation of 3D models [43], as a virtual museum [44] and as applications in the VR version [45,46]. The authors presented 3D models for visually impaired people only in [23].

In this study, copies of museum objects (the mug and jug) were made for the purpose of testing the suitability of such replicas for visually impaired people. The FFF printing technology was used due to the widespread availability of printers with an appropriate size of working space.

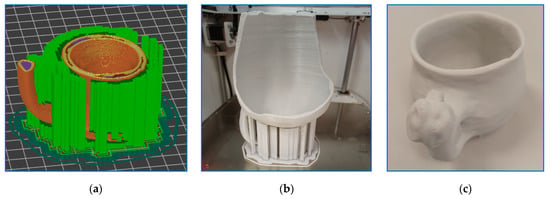

In the first stage of the works (Figure 2), the activities were related to the preparation of so-called watertight digital models, because only such models can be sent to 3D printing. The post-processing performed allowed for the closing of all surfaces and the removal of unnecessary edges and protruding surfaces. This task was performed with the use of the 3D models generated in the previous stages, saved in the .obj format, and a set of tools for the free Blender program called 3D-Print Toolbox, which has specialised tools designed to identify surface geometry errors that may cause problems in the printing process. The prepared watertight models were transformed, using a slicer-type program, into layered models (Figure 6a), and supports were added in the required places. In order to ensure the stiffness of the object, an infill of the “grid” type was used. The printing parameters shown in Table 2 were adopted for both models. Wall Line Count was set to 3 to improve the quality of the outer surface to be touched. In order to verify the effect of layer height on the roughness of the surfaces touched by visually impaired persons, the layer thickness was set to 0.12 mm for the mug and 0.25 mm for the jug.
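What makes a mesh watertight can be shown with a minimal sketch. It is a simplified check, not the implementation used by Blender's 3D-Print Toolbox: it assumes a triangle mesh given as vertex-index triples and tests only that every edge is shared by exactly two faces. The function name `is_watertight` is hypothetical.

```python
from collections import Counter


def is_watertight(faces):
    """Return True if every edge of a triangle mesh is shared by exactly two faces."""
    edge_count = Counter()
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            # Store each edge with sorted endpoints so that direction does not matter.
            edge_count[(min(u, v), max(u, v))] += 1
    return bool(edge_count) and all(n == 2 for n in edge_count.values())


# A tetrahedron is the smallest closed triangle mesh.
tetrahedron = [(0, 1, 2), (0, 3, 1), (1, 3, 2), (2, 3, 0)]
open_mesh = tetrahedron[:-1]  # removing one face leaves a hole

print(is_watertight(tetrahedron))
print(is_watertight(open_mesh))
```

Edges that occur once mark the boundary of a hole, and edges that occur three or more times mark non-manifold geometry; both have to be repaired before slicing.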

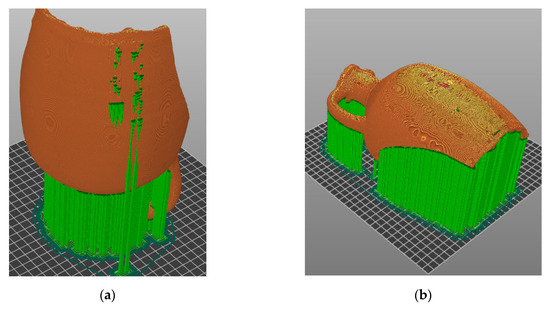

Figure 6.

View of the objects: (a) layered model of the jug ready for printing (25% of the height); (b) a printed replica of the jug with supports; (c) a printed mug after removal of the supports.

Table 2.

The printing parameters.

The 1:1 scale was used to print the mug model, while for the jug the 1:2 and 1:1 scales were chosen. Three-dimensional printing was performed on a MakerBot Replicator Z18 printer (Figure 6b), using PLA filament with a diameter of 1.75 mm. Printing the mug took 26 h, and 122 g (41 m) of material was used. Printing the jug in the 1:2 scale took 25 h and used 200 g (68 m) of filament, while printing in the 1:1 scale took 102 h and used over 820 g of filament with a length of almost 250 m. Finally, the supports were removed from the printed models (Figure 6c).
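The relation between the filament length and the mass of material can be checked with a short calculation. The sketch below assumes a PLA density of 1.24 g/cm³, a typical value that is not stated in the article; the function name `filament_mass_g` is hypothetical.

```python
import math

PLA_DENSITY_G_PER_CM3 = 1.24  # assumed typical value for PLA, not taken from the article


def filament_mass_g(length_m, diameter_mm=1.75, density=PLA_DENSITY_G_PER_CM3):
    """Mass of a filament of the given length, treated as a solid cylinder."""
    radius_cm = diameter_mm / 10 / 2
    volume_cm3 = math.pi * radius_cm ** 2 * (length_m * 100)
    return volume_cm3 * density


# Filament lengths reported for the mug (1:1) and the jug (1:2).
for name, length_m in (("mug 1:1", 41), ("jug 1:2", 68)):
    print(f"{name}: {filament_mass_g(length_m):.0f} g")
```

Under this assumption the computed masses are within a few percent of the reported 122 g and 200 g.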

3.6. Model with Braille Text

The authors also prepared an experimental digital 3D model of the jug on the surface of which information was written using Braille. These actions were performed in Blender and the followed procedure included the following steps:

A. Preparation of letters in the Braille alphabet in 1:1 scale;

B. Composing a single word from the letters on the plate;

C. Scaling the object (jug) to the size in which it will be printed;

D. Incorporating tiles (in 1:1 scale) with words into the surface of the object;

E. Merging objects into one whole;

F. Generating the object for printing.

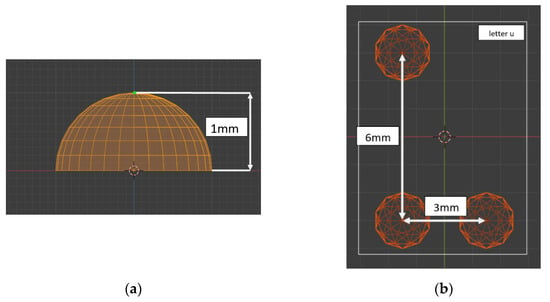

Stage A. Prepared single letters in Braille are 3D objects represented by mesh models. All the models were saved as separate files in the .obj format, with no texture, as seen in Figure 7.

Figure 7.

Preview of the preparation of letters in Blender and dimensioning: (a) a single element for creating letters; (b) letter “u”.

Stage B. Word generation was preceded by designing a plate slightly larger than the height of the 6-point Braille letter, 2 mm thick and 10 letters long. Creating words consisted in inserting subsequent letters as ready files on the designed plate. If the word was shorter than the original length of the plate, the unnecessary length was cut off; otherwise, it was lengthened. The individual words were saved as solid-type objects in separate files in the .obj format without texture, as seen in Figure 8.

Figure 8.

Preview of text preparation in Blender, Stage B: (a) creating inscriptions in Braille; (b) modelling tiles with text in Braille (the text in Polish: Uzbekistan; in English: Uzbekistan).
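Stages A and B can be sketched as a mapping from letters to raised-dot positions on a flat plate. The dot patterns are the standard 6-dot Braille codes for the letters of the example word. The spacing values `DOT_PITCH_MM` and `CELL_PITCH_MM` are illustrative assumptions, since the article does not state which Braille dimension standard was followed, and the function name `dot_centres` is hypothetical.

```python
# Standard 6-dot Braille patterns for the letters used in the example word
# (dots 1-3 form the left column from top to bottom, dots 4-6 the right column).
BRAILLE_DOTS = {
    "a": {1}, "b": {1, 2}, "e": {1, 5}, "i": {2, 4}, "k": {1, 3},
    "n": {1, 3, 4, 5}, "s": {2, 3, 4}, "t": {2, 3, 4, 5},
    "u": {1, 3, 6}, "z": {1, 3, 5, 6},
}

DOT_PITCH_MM = 2.5   # assumed spacing between dots within one cell
CELL_PITCH_MM = 6.0  # assumed spacing between neighbouring cells


def dot_centres(word):
    """Return the (x, y) centres in mm of every raised dot of `word` on a flat plate."""
    centres = []
    for index, letter in enumerate(word.lower()):
        for dot in sorted(BRAILLE_DOTS[letter]):
            column, row = divmod(dot - 1, 3)  # dot 1 -> (0, 0), dot 6 -> (1, 2)
            x = index * CELL_PITCH_MM + column * DOT_PITCH_MM
            y = -row * DOT_PITCH_MM           # rows run downwards from the top dot
            centres.append((x, y))
    return centres


centres = dot_centres("Uzbekistan")
print(len(centres), "dots; plate length about", len("Uzbekistan") * CELL_PITCH_MM, "mm")
```

In a modelling tool, each centre would be the position at which a copy of the single dot element from Figure 7a is placed on the plate.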

Stage C. The existing standards defining the sizes of individual cones (elements) that make up a letter and the distance between them mean that the object with the inscriptions cannot be scaled, because reduced letters may become unreadable for a blind person. Hence, a decision should be made on the final size of the object and its proper scaling.

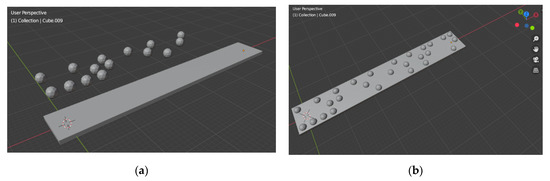

Stage D. Placing the tiles with the inscriptions consists in importing the relevant files and positioning them appropriately on the surface of the object. This is rather simple for flat surfaces. The outer surfaces of a jug, however, are curved, so the tiles with the words stick out more in some places than in others, as seen in Figure 9.

Figure 9.

Preview of inserting inscriptions into the surface of the object in Blender—Stage D: (a) placing the inscription above the surface, (b) introducing the inscription into the surface of the object, (c) merging the objects into a whole—Stage E.
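The effect described for Stage D can be estimated with a short sketch. It assumes, purely for illustration, that the jug wall is locally a cylinder and that a flat tile touches it along its centre line; the gap at the tile edges is then the sagitta of the corresponding chord. The function name and the numbers in the example are hypothetical and do not come from the article.

```python
import math


def edge_gap(radius_mm, tile_width_mm):
    """Gap between the edge of a flat tile and a cylinder of the given radius.

    The tile is assumed to touch the cylinder along its centre line,
    with its width measured around the circumference.
    """
    half = tile_width_mm / 2
    if half > radius_mm:
        raise ValueError("tile is wider than the cylinder diameter")
    return radius_mm - math.sqrt(radius_mm ** 2 - half ** 2)


# Hypothetical example: a 60 mm long tile on a wall of 50 mm radius.
print(edge_gap(50.0, 60.0))  # 10.0
```

The longer the word and the smaller the local radius, the larger this gap becomes, which is why the tiles protrude unevenly on the jug.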

Stage E. Integrating the object into a whole consists in performing a “Union” type Boolean operation on the object model and individual tiles with words, as seen in Figure 9c.

Stage F. The merged model of the object with the inscriptions in Braille was imported into a Slicer program that generated a model for printing with the necessary supports. An important issue is to place the model in the replicator space in such a way that the model supports do not imprint on the inscriptions, because removing them may damage the inscriptions, as seen in Figure 10. The authors chose the upside-down position due to the generation of fewer supports.

Figure 10.

Preview of alternative ways of placing the object in the workspace, simulated in Slicer, Stage F (the green colour represents the supports): (a) placing the object upright in an inverted (upside-down) position; (b) placing the object in a horizontal position with the inscriptions facing up.
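The orientation problem in Stage F can be reasoned about with a simplified overhang test. The sketch below is not how any particular slicer decides on supports; it only assumes the common rule of thumb that a face needs support when it overhangs by more than a threshold angle (45° is used here as an assumed default). The function names are hypothetical.

```python
import math


def overhang_angle_deg(normal, build_up=(0.0, 0.0, 1.0)):
    """Overhang angle of a face: 0 for a vertical wall, 90 for a ceiling.

    `normal` is the outward face normal; `build_up` is the unit vector
    of the printing direction. Upward-facing faces give negative angles.
    """
    dot = sum(n * b for n, b in zip(normal, build_up))
    length = math.sqrt(sum(n * n for n in normal))
    if length == 0:
        raise ValueError("normal must be non-zero")
    sine = max(-1.0, min(1.0, -dot / length))
    return math.degrees(math.asin(sine))


def needs_support(normal, max_overhang_deg=45.0, build_up=(0.0, 0.0, 1.0)):
    """True if the face overhangs more steeply than the assumed threshold."""
    return overhang_angle_deg(normal, build_up) > max_overhang_deg


print(needs_support((1.0, 0.0, 0.0)))   # side wall: False
print(needs_support((0.0, 0.0, 1.0)))   # facing up: False
print(needs_support((0.0, 0.0, -1.0)))  # facing down: True
```

Under these assumptions, inscriptions on a side wall or on an upward-facing surface would not collect supports, whereas inscriptions facing the build plate would.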

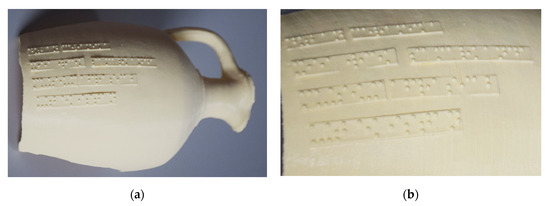

Because printing the jug at 1:1 scale would take too long, a version of the model at 80% of its actual size was used, which reduced the printing time to approximately 70 h. For the pilot study, the inscriptions in successive lines were printed at different scales to verify whether 3D printing with FFF technology and the selected printing parameters (Table 1) would introduce inaccuracies that would make it impossible for a blind person to read the words, as seen in Figure 11.

Figure 11.

(a) A model of a jug with integrated descriptions in Braille; (b) the scales of the inscriptions in the following lines (starting from the top) were: 100%, 110%, 120% and 130%.
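The effect of the four inscription scales on the Braille geometry can be tabulated with a few lines of code. The scale percentages are those listed in the caption of Figure 11; the nominal dimensions are assumed placeholder values, since the standard dimensions are not quoted in this section.

```python
# Assumed nominal Braille dimensions in millimetres (illustrative only).
NOMINAL_MM = {"dot_pitch": 2.5, "cell_pitch": 6.0}

# Inscription scales used on the four lines of the printed jug (Figure 11b).
LINE_SCALES_PERCENT = (100, 110, 120, 130)


def scaled_dimensions(nominal_mm, scale_percents):
    """Return {scale: {dimension_name: scaled_value_mm}} for each scale."""
    return {
        percent: {
            name: round(value * percent / 100, 3)
            for name, value in nominal_mm.items()
        }
        for percent in scale_percents
    }


for percent, dims in scaled_dimensions(NOMINAL_MM, LINE_SCALES_PERCENT).items():
    print(percent, dims)
```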

The following texts (in Polish) were written in the successive lines:

- First line: dzban ceramika (Eng. pottery jug);

- Second line: XII wiek samarkanda (Eng. 12th century Samarkand);

- Third line: muzeum afrasiab (Eng. the Afrasiab museum);

- Fourth line: uzbekistan (Eng. Uzbekistan).

It should be remembered that Braille only has lowercase letters.

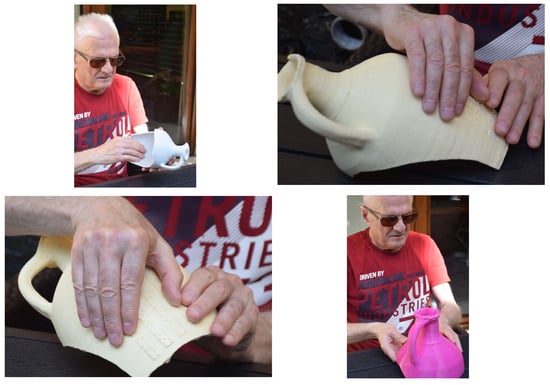

4. Pilot Studies

The pilot studies were carried out in two stages. The first stage consisted in examining four people (two women and two men aged 45–55 years) with simulated visual impairment. The second stage involved one man (age group 65+) who had been completely blind from birth and had used Braille books since childhood. All participants consented to the disclosure of their image.

The aim of the research carried out on a group of people with simulated visual impairment was to test the acceptance of 3D printed models for kinaesthetic cognition. Information was collected about the perceived quality of the surface of the object as a whole and of the places where the supports had been printed, as well as about the size of the objects and the recognition of their individual details. The research was carried out in “Lab 3D” at the Lublin University of Technology.

In the case of the blind person, the scope of the research was extended by collecting information on the legibility of the descriptions written in Braille (in various scales, as seen in Figure 11b), which were integrated onto the surface of the object. The research was conducted at the respondent’s place of residence.

Stage 1—tests with simulated visual impairment.

The research was carried out according to the following scheme:

- Participants were brought into the room wearing an opaque eye shield;

- The objects were placed in front of the examined person;

- The participants of the study were asked to try to classify the object into the appropriate group (e.g., mug, jug shell) and to identify individual details of the touched object (handle, spout condition, decorative elements, Braille inscriptions);

- The participants examined the surface roughness of the individual copy elements, as seen in Figure 12;

Figure 12. Studies involving people with simulated visual impairment.

- The participants were asked to comment on the size of the tested object (this only concerned the jug).

Stage 2—examination of a blind person.

The research concerned the same scope as the research carried out in Stage 1. The recognition and legibility of the texts written in Braille were also examined, as seen in Figure 13.

Figure 13.

Research with the participation of a blind person.

The subjects were asked to describe aloud their impressions and what they felt through touch. The entire course of the research was recorded on a Dictaphone (with the respondents' consent), so that the recordings could later be replayed and analysed. This allowed the researchers to focus on conducting the study and did not distract the respondents. Before the research started, the participants were informed that they would receive two objects for recognition. They were not told how the objects had been made or where they came from, but they were told what to pay attention to when touching them. During the research, the person conducting the experiment asked additional questions about some details of the object. The objects were given in random order.

5. Research Results and Discussion

The research was qualitative due to the small size of the research group. The main results of the research carried out in both stages are summarised in Table 3. This presentation of the results made it easy to compare them.

Table 3.

Answers obtained to research questions.

The results obtained from the pilot studies allow the copies of the 3D models printed with FFF technology to be evaluated:

- Recognising the decorative element on the handle of the cup (ram’s head) was too difficult. The blind person stated that even the enlargement of the cup together with this element would not contribute to its recognition, as they had never touched the head of a real ram;

- Finding inscriptions in Braille on the object was a pleasant and unexpected surprise for the blind person;

- Not all texts were immediately readable, as there were cases where the distances between individual letters were incorrect, i.e., too small. This concerned the distance between the letters “s” and “m” in the word “Samarkand”. The respondent stated that the intervals should be larger. In another case, a printing defect appeared, which made it difficult to recognise the letters “c” and “e” in the word “ceramics”. A similar situation was encountered with the inscription “museum”—there was a little difficulty when reading this word. There was no problem with reading the word “Afrasiab” (on the same line and scale) even though it was a proper name and the respondent did not understand the word. The word “Uzbekistan” was clearly legible;

- The respondents positively assessed the PLA material used for 3D printing, unequivocally stating that the material was pleasant to the touch and the analysed exhibits were light and could be freely manipulated.

It should be stated that at both stage 1 and 2 of the research, the objects were correctly recognised and named. When examining the cup, it was found that it could be a cup or a deep bowl with a handle. When examining the second object, it was stated that it was some kind of damaged jug (the shape was recognised thanks to the handle and characteristic spout).

6. Summary and Conclusions

Based on the technical work performed and the research carried out, the following conclusions can be drawn:

- The methodology of the solutions proposed in this article, leading to the creation of a copy of a museum exhibit with a description in Braille integrated onto its surface, turned out to be effective.

- The selection of digitisation, modelling and 3D printing technology, along with the software used for scanning, modelling, post-processing and preparation of the layered model for replication turned out to be appropriate and made it possible to effectively solve all emerging problems.

- Incorporating descriptions of objects onto their surfaces using the Braille alphabet will contribute to multifunctional recognition—integration of knowing the shape of the exhibit through recognition by touch and the content written on its surface.

- The procedure of preparing descriptions in Braille on separate plates makes it possible to pre-print them and verify both their correctness and legibility by a blind person. This solution will save time and costs when reprinting the entire 3D model if the descriptions are found to be defective.

- The use of 3D printing technology in the fused filament fabrication version allowed for the production of light 3D models to be recognised by touch, which the respondents could freely manipulate. The results of the pilot studies carried out on a group of people with simulated visual impairment and on a person who is blind from birth indicate that these models are useful for sharing cultural heritage objects.

- Printed museum models in the form of 3D replicas of these objects will allow them to be used in many ways. They can be exhibited in museums, printed at home, used for the training of conservators as well as used as archives.

7. Further Works

The authors intend to continue their work in two directions:

- Developing a methodology for automating the creation of texts in Braille and their application on cylindrical surfaces of digital 3D models;

- Generating 3D models with digital light processing technology using liquid-crystal displays (LCDs) for photopolymer curing and verifying the suitability of such models for the blind.

Author Contributions

Conceptualization, J.M. and M.B.; methodology, J.M., M.B. and S.K.; software, M.B. and S.K.; validation, J.M., M.B. and S.K.; formal analysis, J.M. and M.B.; investigation, J.M., M.B. and S.K.; resources, J.M. and M.B.; data curation, J.M., M.B. and S.K.; writing—original draft preparation, J.M., M.B. and S.K.; writing—review and editing, J.M., M.B. and S.K.; visualization, J.M., M.B. and S.K.; supervision, J.M.; project administration, J.M.; funding acquisition, J.M., M.B. and S.K. All authors have read and agreed to the published version of the manuscript.

Funding

The work was co-financed by the Lublin University of Technology Scientific Fund FD-20/IT (Technical Information Technology and Telecommunications).

Institutional Review Board Statement

This research was carried out in accordance with the decision No. 5/2020 of the Committee for Ethics of Scientific Research of the Lublin University of Technology of 15 July 2020 on the research project: Research on the usability of the created 3D museum exhibits for kinaesthetic cognition.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data on digital 3D models are stored by M.B. and can be made available after individual notification by email: m.barszcz@pollub.pl.

Conflicts of Interest

The authors declare no conflict of interest.


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).