Co-Operative Binary Bat Optimizer with Rough Set Reducts for Text Feature Selection

Abstract

1. Introduction

- Controlling the population diversity during the search process using the multi-population BBA;

- Handling the high dimensionality of the feature space by using the divide and conquer strategy;

- Initializing a diverse population using the modified Latin Hypercube Sampling (LHS) initialization method;

- Better evaluation of the solutions using the adapted Rough Set (RS)-based fitness function that is independent of any classification method.
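The classic rough set quantity behind classifier-independent fitness functions like the one in the last contribution is Pawlak's dependency degree, γ_B(D) = |POS_B(D)| / |U|. It is the fraction of documents whose values on the feature subset B determine their class unambiguously. The sketch below computes it for discrete data (for example, binary term presence). It is a minimal illustration under that assumption, not the adapted fitness function used in BBACO, whose exact form (including any term that rewards smaller subsets) is not reproduced here. The function name `dependency_degree` and the toy data are hypothetical.

```python
from collections import defaultdict

def dependency_degree(rows, labels, subset):
    """Pawlak's dependency degree gamma_B(D) = |POS_B(D)| / |U|.

    rows   -- discrete feature vectors, one per object (the universe U)
    labels -- decision class of each object (the decision attribute D)
    subset -- indices of the condition attributes in B
    """
    # Partition U into equivalence classes of the indiscernibility relation IND(B)
    blocks = defaultdict(list)
    for row, label in zip(rows, labels):
        blocks[tuple(row[j] for j in subset)].append(label)
    # A class belongs to the positive region only if all its objects share one decision
    positive = sum(len(b) for b in blocks.values() if len(set(b)) == 1)
    return positive / len(rows)

# Hypothetical binary term-presence matrix: 4 documents x 3 terms
docs = [[1, 0, 1], [1, 0, 0], [0, 1, 1], [0, 1, 0]]
classes = ["earn", "earn", "trade", "trade"]
print(dependency_degree(docs, classes, [0]))  # 1.0: term 0 alone separates the classes
print(dependency_degree(docs, classes, [2]))  # 0.0: term 2 carries no class information
```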

2. Related Work

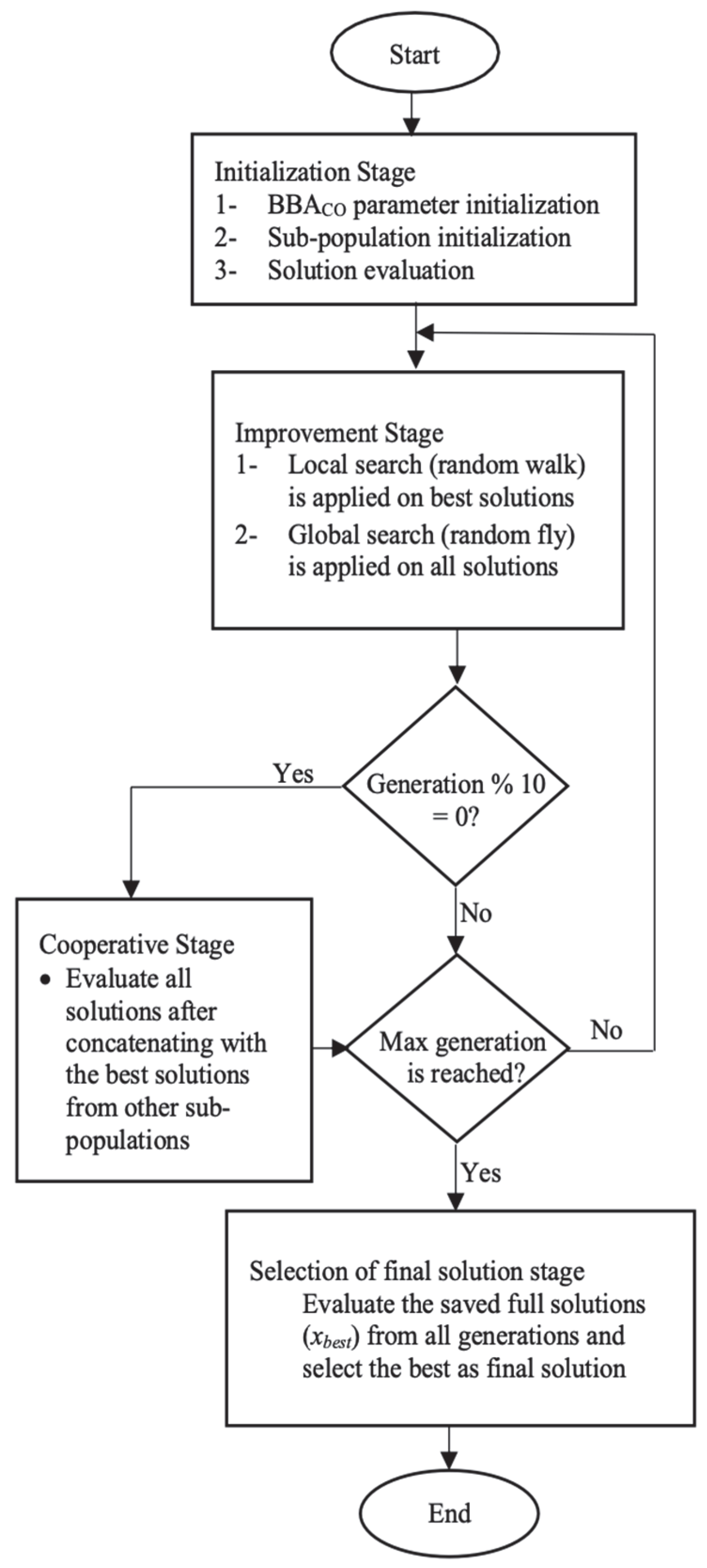

3. The Proposed Algorithm

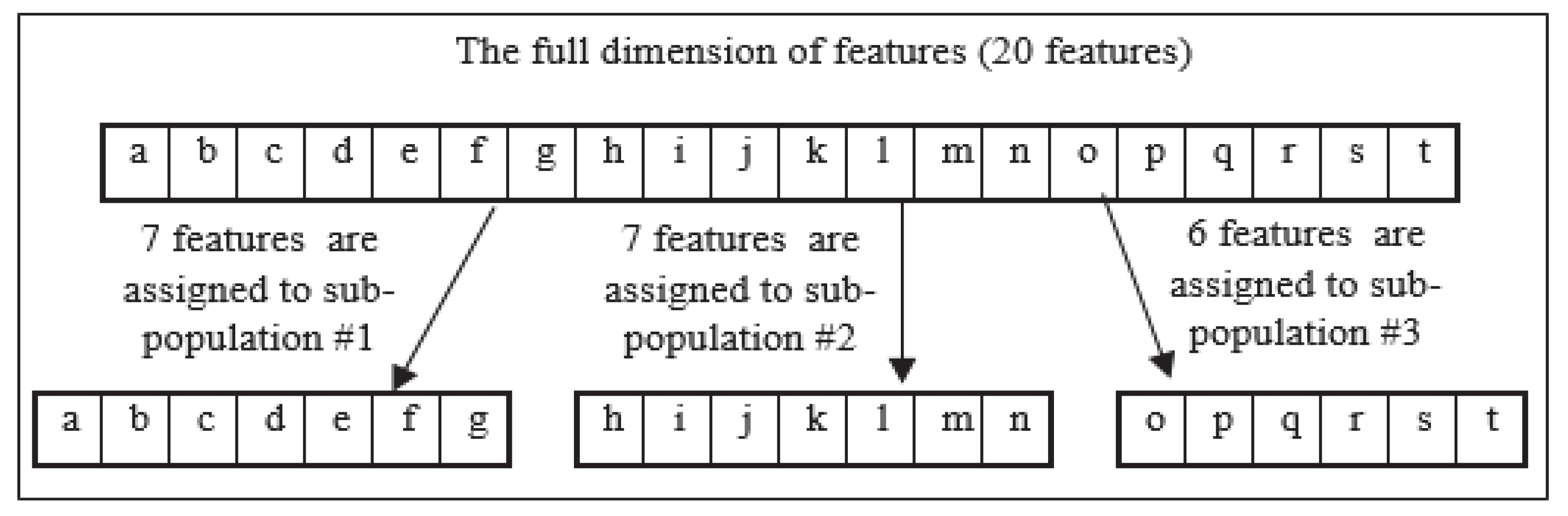

3.1. Initialization Stage

| Algorithm 1. Pseudocode of the initialization stage. |
|---|
| Initialization |
| //Step 1: BBACO parameter initialization |
| Initialize SubPop-no, SubPop-size, Evaluate-fullSol-rate |
| //Step 2: Sub-population initialization |
| Size-PartialSolution = F / SubPop-no (integer division), where F is the total number of features |
| Remainder = F % SubPop-no |
| Foreach sub-population (i), where i is the index from 0 to SubPop-no − 1 |
| If i < Remainder |
| Size-PartialSolution(i) = Size-PartialSolution + 1 |
| Else |
| Size-PartialSolution(i) = Size-PartialSolution |
| For 1 to SubPop-size |
| Generate an initial solution using the modified LHS method |
| Initialize loudness (A), pulse rate (r), minimum frequency (Fmin), velocity (v), and maximum frequency (Fmax) |
| End for |
| End for |
| //Step 3: Solution evaluation |
| Evaluate each solution in each sub-population using the rough set-based objective function |
| Assign the best solution into … |
| Combine all into … |
| Save … in memory |
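Step 2 of Algorithm 1 spreads the F features as evenly as possible over the sub-populations, giving the first Remainder sub-populations one extra feature. A minimal Python sketch of that split follows. It assumes each sub-population owns a contiguous block of feature indices, which the pseudocode does not specify (a random grouping would be equally consistent with it), and the function names are hypothetical.

```python
def partial_solution_sizes(num_features, subpop_no):
    """Size-PartialSolution(i) for i = 0 .. SubPop-no - 1."""
    base = num_features // subpop_no      # Size-PartialSolution
    remainder = num_features % subpop_no  # Remainder
    # The first `remainder` sub-populations receive one extra feature
    return [base + 1 if i < remainder else base for i in range(subpop_no)]

def feature_slices(num_features, subpop_no):
    """Contiguous (start, stop) feature-index ranges, one per sub-population (assumed layout)."""
    slices, start = [], 0
    for size in partial_solution_sizes(num_features, subpop_no):
        slices.append((start, start + size))
        start += size
    return slices

print(partial_solution_sizes(103, 10))  # [11, 11, 11, 10, 10, 10, 10, 10, 10, 10]
print(feature_slices(103, 10)[:2])      # [(0, 11), (11, 22)]
```

Because every feature lands in exactly one sub-population, concatenating one PartialSolution from each sub-population always yields a FullSolution of length F.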

Modified LHS: Initial Population Generation

- Divide the solution, whose length equals the number of features, into equal segments. The number of segments in each solution is determined by the following equation: … where sn is the number of segments, F is the number of features, and n is the number of solutions in the population. The term … gives the number of segments that guarantees each feature is used only once in a solution. The parameter m is the upper bound of the random number and ensures that the number of selected features does not exceed half of the features; m is defined once, at the beginning of the initialization process. Two different ways of calculating m are used, depending on the number of features and the population size, so that the method suits datasets of different sizes;

- Calculate the length of the segments (sl) for each solution as follows:
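Because the equations for sn, m, and sl are not recoverable here, the sketch below shows only the standard Latin Hypercube Sampling idea that the modified method builds on. Every dimension is split into n equal strata, and each stratum is used by exactly one of the n solutions. The continuous design is then thresholded into binary feature masks, and each mask is capped at half of the features to mirror the limit described above. The thresholding step, the cap handling, and the function names are assumptions for illustration, not the authors' segment-based procedure.

```python
import random

def latin_hypercube(n, d, rng):
    """Standard LHS on [0, 1)^d: each of the n strata of every dimension is used exactly once."""
    samples = [[0.0] * d for _ in range(n)]
    for j in range(d):
        strata = list(range(n))
        rng.shuffle(strata)  # independent random stratum order for each dimension
        for i in range(n):
            # uniform point inside stratum [k/n, (k+1)/n)
            samples[i][j] = (strata[i] + rng.random()) / n
    return samples

def binary_population(n, num_features, max_fraction=0.5, seed=None):
    """Turn an LHS design into n feature masks with at most max_fraction * F ones each."""
    rng = random.Random(seed)
    cap = max(1, int(max_fraction * num_features))
    population = []
    for point in latin_hypercube(n, num_features, rng):
        # feature j is selected when its coordinate falls in the lower strata
        selected = [j for j, x in enumerate(point) if x < max_fraction]
        if len(selected) > cap:
            selected = rng.sample(selected, cap)  # enforce the cap on selected features
        mask = [0] * num_features
        for j in selected:
            mask[j] = 1
        population.append(mask)
    return population

for mask in binary_population(4, 8, seed=7):
    print(mask)
```

With the default max_fraction = 0.5 and an even n, each feature is switched on in exactly n/2 solutions before capping, which is the balanced coverage the stratification is meant to provide.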

| Algorithm 2. The modified LHS initialization method steps. |
|---|
| Modified LHS initialization method |

3.2. Improvement Stage

| Algorithm 3. Pseudocode of the local search. |
|---|
| Local search (random walk) |
| For each … |
| If (ri > rand [0, 1]) |
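In the original bat algorithm (Yang, 2010), the local random walk generates a new position around the current best as x_new = x_old + ε·Ā, where ε is drawn uniformly from [−1, 1] and Ā is the average loudness of the bats. A minimal sketch of a binary variant is shown below. It keeps the trigger condition as written in Algorithm 3 (ri > rand[0, 1]) and maps the continuous step to bits with a sigmoid transfer function. The transfer step and all names are assumptions, since the pseudocode above is incomplete.

```python
import math
import random

def sigmoid(v):
    """Numerically stable logistic transfer function S(v) = 1 / (1 + e^-v)."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)

def local_random_walk(best_position, mean_loudness, rng):
    """x_new = x_best + eps * A_mean with eps ~ U[-1, 1], then binarized through S(x)."""
    new_position = [x + rng.uniform(-1.0, 1.0) * mean_loudness for x in best_position]
    new_bits = [1 if rng.random() < sigmoid(x) else 0 for x in new_position]
    return new_position, new_bits

def maybe_local_search(pulse_rate, best_position, mean_loudness, rng):
    """Run the walk only when r_i > rand[0, 1], as written in Algorithm 3."""
    if pulse_rate > rng.random():
        return local_random_walk(best_position, mean_loudness, rng)
    return None

rng = random.Random(3)
print(maybe_local_search(0.9, [0.5, -0.5, 2.0], mean_loudness=0.8, rng=rng))
```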

| Algorithm 4. Pseudocode of the global search. |
|---|
| Global search (random fly) |
| For each PartialSolution (pSol) in each sub-population (i) |
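For the global move, the standard bat algorithm draws a frequency f_i = f_min + (f_max − f_min)·β with β ~ U[0, 1] and updates the velocity as v_i ← v_i + (x_i − x*)·f_i, where x* is the current best. In the binary bat algorithm of Nakamura et al. (2013), each bit is then set to 1 with probability S(v) given by the sigmoid function. The sketch below applies these updates to one PartialSolution; whether BBACO uses exactly this transfer function is an assumption, and the function names are hypothetical.

```python
import math
import random

def sigmoid(v):
    """Numerically stable logistic transfer function."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)

def global_fly(bits, velocity, best_bits, f_min, f_max, rng):
    """One global move of a binary bat.

    f_i  = f_min + (f_max - f_min) * beta,  beta ~ U[0, 1]
    v_ij = v_ij + (x_ij - x*_j) * f_i
    x_ij = 1 if rand < S(v_ij) else 0
    """
    freq = f_min + (f_max - f_min) * rng.random()
    new_velocity = [v + (x - b) * freq for v, x, b in zip(velocity, bits, best_bits)]
    new_bits = [1 if rng.random() < sigmoid(v) else 0 for v in new_velocity]
    return new_bits, new_velocity

rng = random.Random(42)
bits, vel = global_fly([1, 0, 1, 0], [0.0] * 4, [1, 1, 0, 0], f_min=0.0, f_max=2.0, rng=rng)
print(bits, vel)
```

Bits that already agree with the best solution leave the velocity unchanged, while disagreeing bits are pushed toward the best solution's value.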

3.3. Cooperative Stage: FullSolutions Evaluation

| Algorithm 5. Pseudocode of the cooperative stage. |
|---|
| Cooperative stage |
| if (generation % 10 == 0) |
| for each sub-population (i) |
| for each PartialSolution |
| concatenate with the best solutions of other sub-populations |
| evaluate FullSolution |
| update the fitness of the PartialSolution |
| update the best PartialSolution |
| end for |
| end for |
| end if |
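The cooperative step follows the usual cooperative coevolution pattern: a PartialSolution is scored by inserting it into a context vector made of the current best PartialSolutions of the other sub-populations. The sketch below mirrors Algorithm 5 under stated assumptions. Higher fitness is better (as with a dependency degree), the `evaluate` callable stands in for the rough set objective, partial solutions are stored as dictionaries, and the best PartialSolution is replaced only when a candidate strictly improves on it in the current context. All names are hypothetical.

```python
from typing import Callable, Dict, List

Mask = List[int]

def build_full_solution(i: int, partial: Mask, best_partials: List[Mask]) -> Mask:
    """Concatenate `partial` (from sub-population i) with the best partials of all others."""
    full: Mask = []
    for k, best in enumerate(best_partials):
        full.extend(partial if k == i else best)
    return full

def cooperative_stage(generation: int, subpops: List[List[Dict]], best_partials: List[Mask],
                      evaluate: Callable[[Mask], float], rate: int = 10) -> List[Mask]:
    """Evaluate every PartialSolution as part of a FullSolution every `rate` generations."""
    if generation % rate != 0:
        return best_partials
    for i, population in enumerate(subpops):
        # score the current best of sub-population i in the current context
        best_fit = evaluate(build_full_solution(i, best_partials[i], best_partials))
        for entry in population:
            full = build_full_solution(i, entry["bits"], best_partials)
            entry["fitness"] = evaluate(full)  # the partial inherits its FullSolution's fitness
            if entry["fitness"] > best_fit:    # maximization assumed
                best_fit = entry["fitness"]
                best_partials[i] = list(entry["bits"])
    return best_partials

# Toy run with a hypothetical fitness: number of selected features
subpops = [[{"bits": [1, 0]}, {"bits": [1, 1]}], [{"bits": [0, 0]}, {"bits": [0, 1]}]]
print(cooperative_stage(10, subpops, [[0, 0], [0, 0]], evaluate=sum))  # [[1, 1], [0, 1]]
```

Updating the best PartialSolution immediately means later sub-populations in the same stage are evaluated against the improved context.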

3.4. Selection of FinalSolution

| Algorithm 6. Steps of selection of the final solution. |
|---|
| Selection of final solution |
| If (iter-no > 100) |
| For i = 1 to 100 |
| Evaluate the saved FullSolution #i |
| End for |
| Select the best FullSolution as the final solution |

4. Experimental Setup

4.1. Pre-Processing

4.2. The Dataset

4.2.1. Reuters-21578 Dataset

4.2.2. WebKB Dataset

4.2.3. Mix-DS

4.2.4. Harian Metro Dataset

4.2.5. Al-Jazeera News Dataset

4.3. Evaluation Metric

4.3.1. Internal Evaluation Metric

Solution Quality

Size of the Selected Feature Set

Reduction Rate

Diversity during the Search Process

Convergence Behavior

Statistical Tests

4.3.2. External Evaluation Metric

Classification Performance

Statistical Test

5. Results and Discussion

5.1. Internal Evaluation

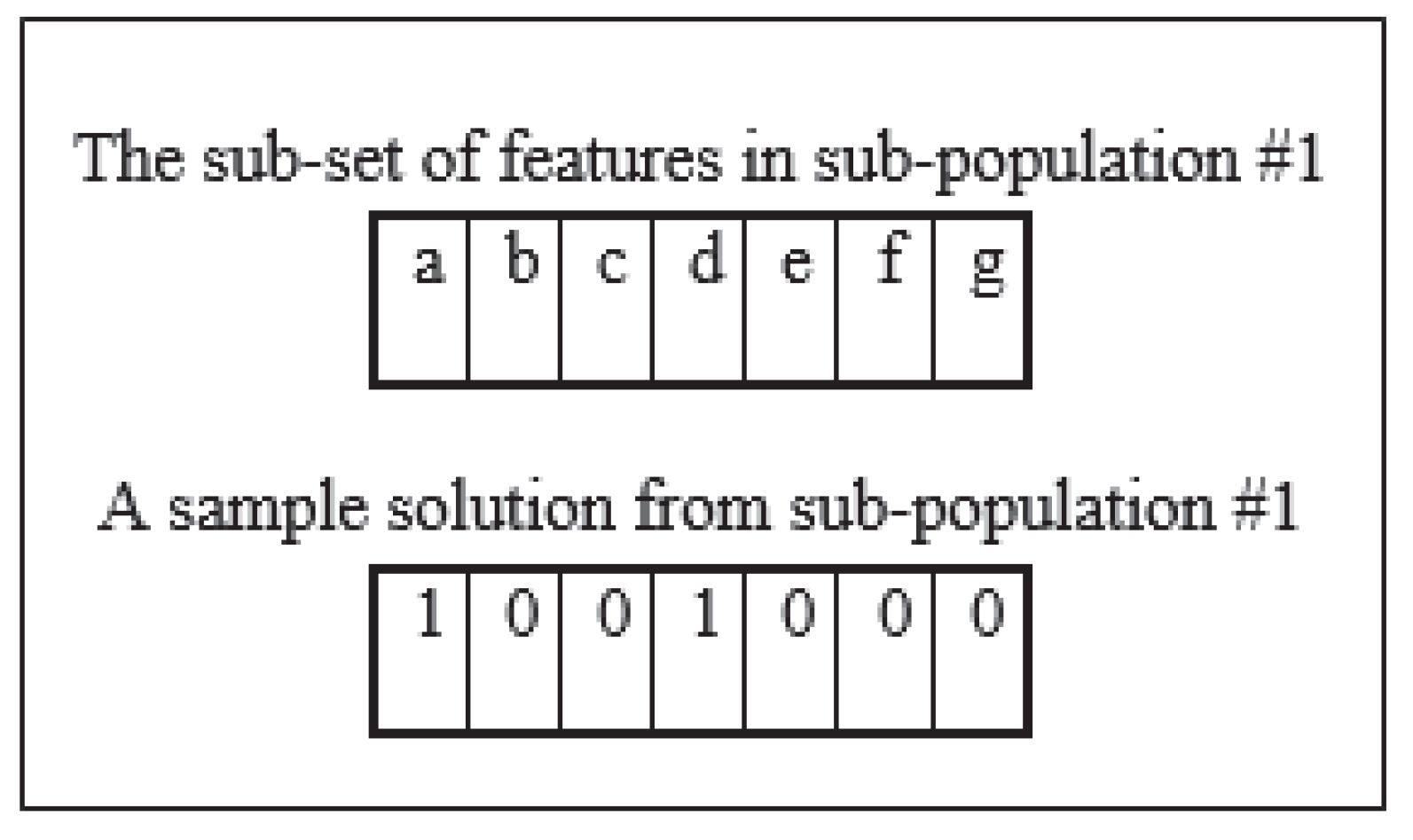

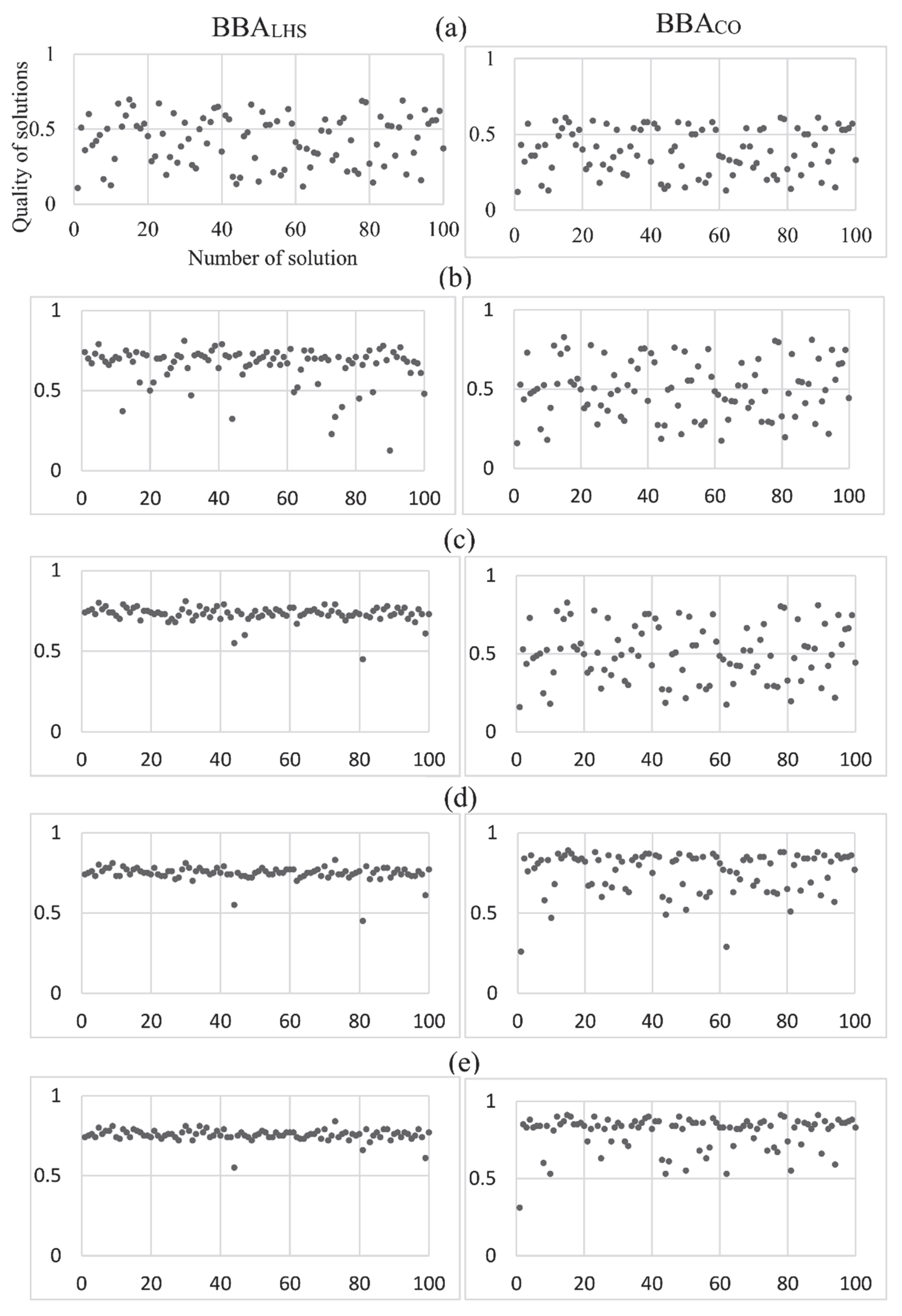

5.1.1. Population Diversity

5.1.2. Convergence Behavior

5.1.3. Statistical Test

Standard Deviation

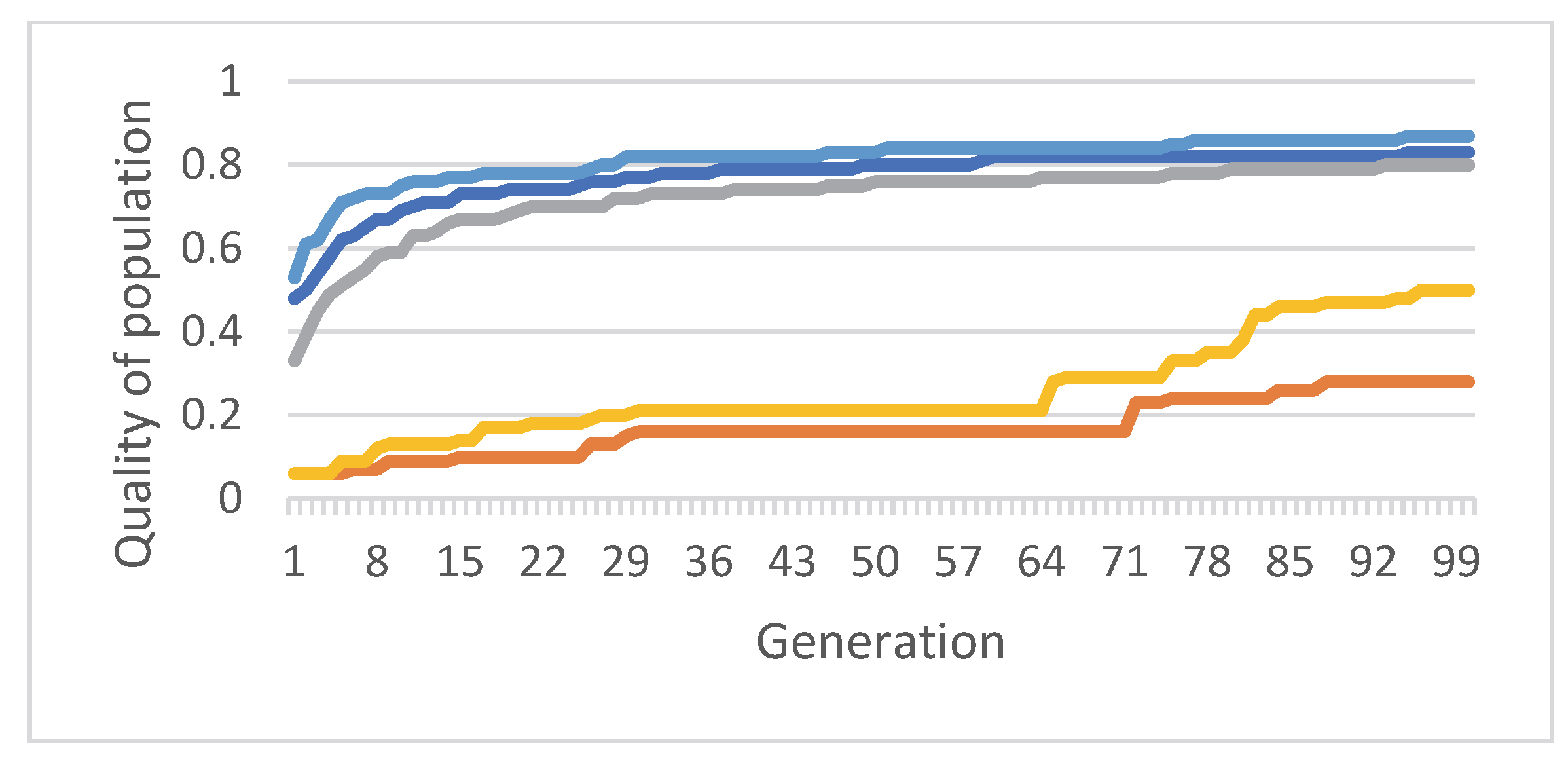

Population Quality

Relative Errors

The Quality and Reduction Rate of the Selected Feature Set

5.2. External Evaluation

5.3. Results on Non-English Datasets

5.3.1. Results of Malay Datasets

5.3.2. Results of Arabic Dataset

| Metric | Classifier | BPSO-KNN | EGA | CRFM | BBALHS | BBACO |
|---|---|---|---|---|---|---|
| Macro average precision | NB | 85.76 | 91 | 88.77 | 91.34 | 91.64 |
| Macro average precision | SVM | 93.7 | - | - | 90.86 | 92.22 |
| Macro average recall | NB | 84.34 | 90.66 | 88.33 | 90.42 | 91.09 |
| Macro average recall | SVM | 92.98 | - | - | 91.58 | 92.88 |
| Macro average F1 | NB | 84.63 | 90.83 | 88.55 | 90.73 | 91.24 |
| Macro average F1 | SVM | 93.12 | - | - | 91.07 | 92.42 |

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Deng, X.; Li, Y.; Weng, J.; Zhang, J. Feature selection for text classification: A review. Multimed. Tools Appl. 2018, 78, 3797–3816.

- Namous, F.; Faris, H.; Heidari, A.A.; Khalafat, M.; Alkhawaldeh, R.S.; Ghatasheh, N. Evolutionary and swarm-based feature selection for imbalanced data classification. In Evolutionary Machine Learning Techniques; Springer: Berlin/Heidelberg, Germany, 2020; pp. 231–250.

- Pervaiz, U.; Khawaldeh, S.; Aleef, T.A.; Minh, V.H.; Hagos, Y.B. Activity monitoring and meal tracking for cardiac rehabilitation patients. Int. J. Med. Eng. Inform. 2018, 10, 252–264.

- Elminaam, D.S.A.; Nabil, A.; Ibraheem, S.A.; Houssein, E.H. An Efficient Marine Predators Algorithm for Feature Selection. IEEE Access 2021, 9, 60136–60153.

- Abdel-Basset, M.; El-Shahat, D.; El-Henawy, I.; de Albuquerque, V.H.C.; Mirjalili, S. A new fusion of grey wolf optimizer algorithm with a two-phase mutation for feature selection. Expert Syst. Appl. 2020, 139, 112824.

- Qaraad, M.; Amjad, S.; Hussein, N.K.; Elhosseini, M.A. Large scale salp-based grey wolf optimization for feature selection and global optimization. Neural Comput. Appl. 2022, 34, 8989–9014.

- Labani, M.; Moradi, P.; Jalili, M. A multi-objective genetic algorithm for text feature selection using the relative discriminative criterion. Expert Syst. Appl. 2020, 149, 113276.

- Al-Dyani, W.Z.; Ahmad, F.K.; Kamaruddin, S.S. Binary Bat Algorithm for text feature selection in news events detection model using Markov clustering. Cogent Eng. 2021, 9, 2010923.

- BinSaeedan, W.; Alramlawi, S. CS-BPSO: Hybrid feature selection based on chi-square and binary PSO algorithm for Arabic email authorship analysis. Knowl.-Based Syst. 2021, 227, 107224.

- Feng, J.; Kuang, H.; Zhang, L. EBBA: An Enhanced Binary Bat Algorithm Integrated with Chaos Theory and Lévy Flight for Feature Selection. Future Internet 2022, 14, 178.

- Hashemi, A.; Joodaki, M.; Joodaki, N.Z.; Dowlatshahi, M.B. Ant colony optimization equipped with an ensemble of heuristics through multi-criteria decision making: A case study in ensemble feature selection. Appl. Soft Comput. 2022, 124, 109046.

- Ibrahim, A.M.; Tawhid, M.A. A new hybrid binary algorithm of bat algorithm and differential evolution for feature selection and classification. In Applications of bat Algorithm and Its Variants; Springer: Berlin/Heidelberg, Germany, 2021; pp. 1–18.

- Li, A.-D.; Xue, B.; Zhang, M. Improved binary particle swarm optimization for feature selection with new initialization and search space reduction strategies. Appl. Soft Comput. 2021, 106, 107302.

- Ma, W.; Zhou, X.; Zhu, H.; Li, L.; Jiao, L. A two-stage hybrid ant colony optimization for high-dimensional feature selection. Pattern Recognit. 2021, 116, 107933.

- Paul, D.; Jain, A.; Saha, S.; Mathew, J. Multi-objective PSO based online feature selection for multi-label classification. Knowl.-Based Syst. 2021, 222, 106966.

- Tripathi, D.; Reddy, B.R.; Reddy, Y.P.; Shukla, A.K.; Kumar, R.K.; Sharma, N.K. BAT algorithm based feature selection: Application in credit scoring. J. Intell. Fuzzy Syst. 2021, 41, 5561–5570.

- Xue, Y.; Zhu, H.; Liang, J.; Słowik, A. Adaptive crossover operator based multi-objective binary genetic algorithm for feature selection in classification. Knowl.-Based Syst. 2021, 227, 107218.

- Yasaswini, V.; Baskaran, S. An Optimization of Feature Selection for Classification Using Modified Bat Algorithm. In Advanced Computing and Intelligent Technologies; Springer: Berlin/Heidelberg, Germany, 2022; pp. 389–399.

- Alim, A.; Naseem, I.; Togneri, R.; Bennamoun, M. The most discriminant subbands for face recognition: A novel information-theoretic framework. Int. J. Wavelets Multiresolution Inf. Process 2018, 16, 1850040.

- Yang, X.-S. A new metaheuristic bat-inspired algorithm. In Nature Inspired Cooperative Strategies for Optimization (NICSO 2010); Springer: Berlin/Heidelberg, Germany, 2010; pp. 65–74.

- Al-Betar, M.A.; Alomari, O.A.; Abu-Romman, S.M. A TRIZ-inspired bat algorithm for gene selection in cancer classification. Genomics 2019, 112, 114–126.

- Alsalibi, B.; Abualigah, L.; Khader, A.T. A novel bat algorithm with dynamic membrane structure for optimization problems. Appl. Intell. 2020, 51, 1992–2017.

- Devi, D.R.; Sasikala, S. Online Feature Selection (OFS) with Accelerated Bat Algorithm (ABA) and Ensemble Incremental Deep Multiple Layer Perceptron (EIDMLP) for big data streams. J. Big Data 2019, 6, 103.

- Dhal, K.G.; Das, S. Local search-based dynamically adapted bat algorithm in image enhancement domain. Int. J. Comput. Sci. Math. 2020, 11, 1–28.

- Gupta, D.; Arora, J.; Agrawal, U.; Khanna, A.; de Albuquerque, V.H.C. Optimized Binary Bat algorithm for classification of white blood cells. Measurement 2019, 143, 180–190.

- Lu, Y.; Jiang, T. Bi-Population Based Discrete Bat Algorithm for the Low-Carbon Job Shop Scheduling Problem. IEEE Access 2019, 7, 14513–14522.

- Nakamura, R.Y.M.; Pereira, L.A.M.; Rodrigues, D.; Costa, K.A.P.; Papa, J.P.; Yang, X.-S. Binary bat algorithm for feature selection. In Swarm Intelligence and Bio-Inspired Computation; Elsevier: Amsterdam, The Netherlands, 2013; pp. 225–237.

- Ebrahimpour, M.K.; Nezamabadi-Pour, H.; Eftekhari, M. CCFS: A cooperating coevolution technique for large scale feature selection on microarray datasets. Comput. Biol. Chem. 2018, 73, 171–178.

- Elaziz, M.A.; Ewees, A.A.; Neggaz, N.; Ibrahim, R.A.; Al-Qaness, M.A.; Lu, S. Cooperative meta-heuristic algorithms for global optimization problems. Expert Syst. Appl. 2021, 176, 114788.

- Karmakar, K.; Das, R.K.; Khatua, S. An ACO-based multi-objective optimization for cooperating VM placement in cloud data center. J. Supercomput. 2021, 78, 3093–3121.

- Li, H.; He, F.; Chen, Y.; Pan, Y. MLFS-CCDE: Multi-objective large-scale feature selection by cooperative coevolutionary differential evolution. Memetic Comput. 2021, 13, 1–18.

- Rashid, A.N.M.B.; Ahmed, M.; Sikos, L.F.; Haskell-Dowland, P. Cooperative co-evolution for feature selection in Big Data with random feature grouping. J. Big Data 2020, 7, 107.

- Valenzuela-Alcaraz, V.M.; Cosío-León, M.; Romero-Ocaño, A.D.; Brizuela, C.A. A cooperative coevolutionary algorithm approach to the no-wait job shop scheduling problem. Expert Syst. Appl. 2022, 194, 116498.

- Jarray, R.; Al-Dhaifallah, M.; Rezk, H.; Bouallègue, S. Parallel Cooperative Coevolutionary Grey Wolf Optimizer for Path Planning Problem of Unmanned Aerial Vehicles. Sensors 2022, 22, 1826.

- Jafarian, A.; Rabiee, M.; Tavana, M. A novel multi-objective co-evolutionary approach for supply chain gap analysis with consideration of uncertainties. Int. J. Prod. Econ. 2020, 228, 107852.

- Zhang, G.; Liu, B.; Wang, L.; Yu, D.; Xing, K. Distributed Co-Evolutionary Memetic Algorithm for Distributed Hybrid Differentiation Flowshop Scheduling Problem. IEEE Trans. Evol. Comput. 2022, 26, 1043–1057.

- Peng, X.; Jin, Y.; Wang, H. Multimodal Optimization Enhanced Cooperative Coevolution for Large-Scale Optimization. IEEE Trans. Cybern. 2018, 49, 3507–3520.

- Camacho-Vallejo, J.-F.; Garcia-Reyes, C. Co-evolutionary algorithms to solve hierarchized Steiner tree problems in telecommunication networks. Appl. Soft Comput. 2019, 84, 105718.

- Xue, X.; Pan, J.-S. A Compact Co-Evolutionary Algorithm for sensor ontology meta-matching. Knowl. Inf. Syst. 2017, 56, 335–353.

- Akinola, A. Implicit Multi-Objective Coevolutionary Algorithm; University of Guelph: Guelph, ON, Canada, 2019.

- Costa, V.; Lourenço, N.; Machado, P. Coevolution of generative adversarial networks. In Proceedings of the International Conference on the Applications of Evolutionary Computation (Part of EvoStar), Leipzig, Germany, 24–26 April 2019; Springer: Berlin/Heidelberg, Germany, 2019.

- Wen, Y.; Xu, H. A cooperative coevolution-based pittsburgh learning classifier system embedded with memetic feature selection. In Proceedings of the 2011 IEEE Congress of Evolutionary Computation (CEC), New Orleans, LA, USA, 5–8 June 2011; IEEE: Piscataway, NJ, USA, 2011.

- Bergh, F.V.D.; Engelbrecht, A. A Cooperative Approach to Particle Swarm Optimization. IEEE Trans. Evol. Comput. 2004, 8, 225–239.

- Krohling, R.A.; Coelho, L.D.S. Coevolutionary Particle Swarm Optimization Using Gaussian Distribution for Solving Constrained Optimization Problems. IEEE Trans. Syst. Man Cybern. Part B 2006, 36, 1407–1416.

- Yang, Z.; Tang, K.; Yao, X. Large scale evolutionary optimization using cooperative coevolution. Inf. Sci. 2008, 178, 2985–2999.

- Goh, C.; Tan, K.; Liu, D.; Chiam, S. A competitive and cooperative co-evolutionary approach to multi-objective particle swarm optimization algorithm design. Eur. J. Oper. Res. 2010, 202, 42–54.

- Li, X.; Yao, X. Cooperatively Coevolving Particle Swarms for Large Scale Optimization. IEEE Trans. Evol. Comput. 2011, 16, 210–224.

- Jiao, L.; Wang, H.; Shang, R.; Liu, F. A co-evolutionary multi-objective optimization algorithm based on direction vectors. Inf. Sci. 2013, 228, 90–112.

- Wang, M.; Wang, X.; Wang, Y.; Wei, Z. An Adaptive Co-evolutionary Algorithm Based on Genotypic Diversity Measure. In Proceedings of the 2014 Tenth International Conference on Computational Intelligence and Security, Kunming, China, 15–16 November 2014; IEEE: Piscataway, NJ, USA, 2014.

- Jiang, W.-Y.; Lin, Y.; Chen, M.; Yu, Y.-Y. A co-evolutionary improved multi-ant colony optimization for ship multiple and branch pipe route design. Ocean Eng. 2015, 102, 63–70.

- Pan, Q.-K. An effective co-evolutionary artificial bee colony algorithm for steelmaking-continuous casting scheduling. Eur. J. Oper. Res. 2016, 250, 702–714.

- Gong, M.; Li, H.; Luo, E.; Liu, J.; Liu, J. A Multiobjective Cooperative Coevolutionary Algorithm for Hyperspectral Sparse Unmixing. IEEE Trans. Evol. Comput. 2016, 21, 234–248.

- Atashpendar, A.; Dorronsoro, B.; Danoy, G.; Bouvry, P. A scalable parallel cooperative coevolutionary PSO algorithm for multi-objective optimization. J. Parallel Distrib. Comput. 2018, 112, 111–125.

- Jia, Y.-H.; Chen, W.-N.; Gu, T.; Zhang, H.; Yuan, H.-Q.; Kwong, S.; Zhang, J. Distributed Cooperative Co-Evolution With Adaptive Computing Resource Allocation for Large Scale Optimization. IEEE Trans. Evol. Comput. 2018, 23, 188–202.

- Yaman, A.; Mocanu, D.C.; Iacca, G.; Fletcher, G.; Pechenizkiy, M. Limited evaluation cooperative co-evolutionary differential evolution for large-scale neuroevolution. In Proceedings of the Genetic and Evolutionary Computation Conference, Kyoto, Japan, 15–19 July 2018; pp. 569–576.

- Sun, L.; Lin, L.; Gen, M.; Li, H. A Hybrid Cooperative Coevolution Algorithm for Fuzzy Flexible Job Shop Scheduling. IEEE Trans. Fuzzy Syst. 2019, 27, 1008–1022.

- Sun, W.; Wu, Y.; Lou, Q.; Yu, Y. A Cooperative Coevolution Algorithm for the Seru Production With Minimizing Makespan. IEEE Access 2019, 7, 5662–5670.

- Fu, G.; Wang, C.; Zhang, D.; Zhao, J.; Wang, H. A Multiobjective Particle Swarm Optimization Algorithm Based on Multipopulation Coevolution for Weapon-Target Assignment. Math. Probl. Eng. 2019, 2019, 1424590.

- Xiao, Q.-Z.; Zhong, J.; Feng, L.; Luo, L.; Lv, J. A Cooperative Coevolution Hyper-Heuristic Framework for Workflow Scheduling Problem. IEEE Trans. Serv. Comput. 2019, 15, 150–163.

- Derrac, J.; García, S.; Herrera, F. IFS-CoCo: Instance and feature selection based on cooperative coevolution with nearest neighbor rule. Pattern Recognit. 2010, 43, 2082–2105.

- Derrac, J.; García, S.; Herrera, F. A first study on the use of coevolutionary algorithms for instance and feature selection. In Proceedings of the International Conference on Hybrid Artificial Intelligence Systems, Salamanca, Spain, 10–12 June 2009; Springer: Berlin/Heidelberg, Germany, 2009.

- Tian, J.; Li, M.; Chen, F. Dual-population based coevolutionary algorithm for designing RBFNN with feature selection. Expert Syst. Appl. 2010, 37, 6904–6918.

- Ding, W.-P.; Lin, C.-T.; Prasad, M.; Chen, S.-B.; Guan, Z.-J. Attribute Equilibrium Dominance Reduction Accelerator (DCCAEDR) Based on Distributed Coevolutionary Cloud and Its Application in Medical Records. IEEE Trans. Syst. Man Cybern. Syst. 2015, 46, 384–400.

- Cheng, Y.; Zheng, Z.; Wang, J.; Yang, L.; Wan, S. Attribute Reduction Based on Genetic Algorithm for the Coevolution of Meteorological Data in the Industrial Internet of Things. Wirel. Commun. Mob. Comput. 2019, 2019, 3525347.

- Pawlak, Z. Rough sets. Int. J. Comput. Inf. Sci. 1982, 11, 341–356.

- Taguchi, G. System of Experimental Design; Engineering Methods to Optimize Quality and Minimize Costs. 1987. Available online: https://openlibrary.org/books/OL14475330M/System_of_experimental_design (accessed on 25 October 2022).

- Conover, W. On a Better Method of Selecting Values of Input Variables for Computer Codes. 1975. Unpublished Manuscript. Available online: https://www.tandfonline.com/doi/abs/10.1080/00401706.2000.10485979 (accessed on 25 October 2022).

- Hamdan, M.; Qudah, O. The initialization of evolutionary multi-objective optimization algorithms. In Proceedings of the International Conference in Swarm Intelligence, Beijing, China, 25–28 June 2015; Springer: Berlin/Heidelberg, Germany, 2015.

- Ghareb, A.S.; Abu Bakar, A.; Hamdan, A.R. Hybrid feature selection based on enhanced genetic algorithm for text categorization. Expert Syst. Appl. 2016, 49, 31–47.

- Aghdam, M.H.; Heidari, S. Feature Selection Using Particle Swarm Optimization in Text Categorization. J. Artif. Intell. Soft Comput. Res. 2015, 5, 231–238.

- Abdul-Rahman, S.; Bakar, A.A.; Mohamed-Hussein, Z.-A. An Improved Particle Swarm Optimization via Velocity-Based Reinitialization for Feature Selection. In Proceedings of the International Conference on Soft Computing in Data Science, Putrajaya, Malaysia, 2–3 September 2015; Springer: Berlin/Heidelberg, Germany, 2015.

- Rehman, A.; Javed, K.; Babri, H.A. Feature selection based on a normalized difference measure for text classification. Inf. Process. Manag. 2017, 53, 473–489.

- Paul, P.V.; Dhavachelvan, P.; Baskaran, R. A novel population initialization technique for genetic algorithm. In Proceedings of the 2013 International Conference on Circuits, Power and Computing Technologies (ICCPCT), Nagercoil, India, 20–21 March 2013; IEEE: Piscataway, NJ, USA, 2013.

- Zhai, Y.; Song, W.; Liu, X.; Liu, L.; Zhao, X. A chi-square statistics based feature selection method in text classification. In Proceedings of the 2018 IEEE 9th International Conference on Software Engineering and Service Science (ICSESS), Beijing, China, 23–25 November 2018; IEEE: Piscataway, NJ, USA, 2018.

- Ahmad, I.S.; Abu Bakar, A.; Yaakub, M.R. A review of feature selection in sentiment analysis using information gain and domain specific ontology. Int. J. Adv. Comput. Res. 2019, 9, 283–292.

- Algehyne, E.A.; Jibril, M.L.; Algehainy, N.A.; Alamri, O.A.; Alzahrani, A.K. Fuzzy Neural Network Expert System with an Improved Gini Index Random Forest-Based Feature Importance Measure Algorithm for Early Diagnosis of Breast Cancer in Saudi Arabia. Big Data Cogn. Comput. 2022, 6, 13.

- Thaseen, I.S.; Kumar, C.A.; Ahmad, A. Integrated Intrusion Detection Model Using Chi-Square Feature Selection and Ensemble of Classifiers. Arab. J. Sci. Eng. 2018, 44, 3357–3368.

- Chantar, H.K.; Corne, D.W. Feature subset selection for Arabic document categorization using BPSO-KNN. In Proceedings of the 2011 Third World Congress on Nature and Biologically Inspired Computing, Salamanca, Spain, 19–21 October 2011; IEEE: Piscataway, NJ, USA, 2011.

- Ghareb, A.S.; Abu Bakar, A.; Al-Radaideh, Q.A.; Hamdan, A.R. Enhanced Filter Feature Selection Methods for Arabic Text Categorization. Int. J. Inf. Retr. Res. 2018, 8, 1–24.

- Adel, A.; Omar, N.; Abdullah, S.; Al-Shabi, A. Feature Selection Method Based on Statistics of Compound Words for Arabic Text Classification. Int. Arab J. Inf. Technol. 2019, 16, 178–185.

| Parameter | Definition | Level 1 | Level 2 | Level 3 |
|---|---|---|---|---|
| SubPop-size | The sub-population size | 50 | 100 | 150 |
| SubPop-no | The number of sub-populations | 10 | 15 | 20 |
| Evaluate-fullSol-rate | The number of generations reproduced before the full-dimension solutions (FullSolutions) are evaluated | 10 | 20 | 30 |
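Three parameters at three levels each is the textbook setting for a Taguchi L9 orthogonal array, which covers every pair of levels of any two parameters exactly once in nine runs instead of the 27 of a full factorial design. Assuming such an array was used to tune the levels in the table above (the text does not state which array), the runs can be generated as follows. The standard L9(3^4) array is hard-coded and its first three columns are mapped to the parameters; the function names are hypothetical.

```python
from itertools import combinations, product

# Standard Taguchi L9(3^4) orthogonal array, levels numbered 1..3
L9 = [
    (1, 1, 1, 1), (1, 2, 2, 2), (1, 3, 3, 3),
    (2, 1, 2, 3), (2, 2, 3, 1), (2, 3, 1, 2),
    (3, 1, 3, 2), (3, 2, 1, 3), (3, 3, 2, 1),
]

# Levels 1, 2, 3 of each BBACO parameter, taken from the table above
LEVELS = {
    "SubPop-size": [50, 100, 150],
    "SubPop-no": [10, 15, 20],
    "Evaluate-fullSol-rate": [10, 20, 30],
}

def taguchi_runs():
    """Map the first three L9 columns to the three parameters (one dict per run)."""
    names = list(LEVELS)
    return [{name: LEVELS[name][row[c] - 1] for c, name in enumerate(names)} for row in L9]

def is_pairwise_balanced(runs):
    """True if every pair of parameters shows each of the 3 x 3 level combinations exactly once."""
    for a, b in combinations(LEVELS, 2):
        seen = sorted((run[a], run[b]) for run in runs)
        if seen != sorted(product(LEVELS[a], LEVELS[b])):
            return False
    return True

for run in taguchi_runs():
    print(run)
print(is_pairwise_balanced(taguchi_runs()))  # True
```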

| Source | Sukan (Sports) | Bisnes (Business) | Pendidikan (Education) | Sains-Teknologi (Science and Technology) | Hiburan (Entertainment) | Politik (Politics) | Total |
|---|---|---|---|---|---|---|---|
| utusan online | 170 | 238 | 300 | 220 | 1030 | 0 | 1958 |
| mstar | 3392 | 10 | 0 | 0 | 4533 | 0 | 7935 |
| astroawani | 96 | 96 | 0 | 96 | 96 | 96 | 480 |
| bharian | 527 | 1292 | 25 | 0 | 27 | 25 | 1896 |
| Total | 4185 | 1636 | 325 | 316 | 5686 | 121 | 12,269 |

| Statistic | Reuters: BBACO | Reuters: BBALHS | WebKB: BBACO | WebKB: BBALHS |
|---|---|---|---|---|
| Generation 1 | | | | |
| Mean | 0.16 | 0.14 | 0.14 | 0.13 |
| p-Value | 0.13 | 0.36 | 0.74 | 0.29 |
| t Stat | 3.75 | | 4.38 | |
| t Critical two-tail | 2.00 | | 2.01 | |
| Generation 20 | | | | |
| Mean | 0.15 | 0.12 | 0.14 | 0.13 |
| p-Value | 0.58 | 0.16 | 0.36 | 0.12 |
| t Stat | 13.96 | | 1.94 | |
| t Critical two-tail | 2.03 | | 2.01 | |
| Generation 50 | | | | |
| Mean | 0.14 | 0.08 | 0.11 | 0.09 |
| p-Value | 0.12 | 0.18 | 0.53 | 0.13 |
| t Stat | 7.93 | | 3.61 | |
| t Critical two-tail | 2.01 | | 2.00 | |
| Generation 80 | | | | |
| Mean | 0.12 | 0.06 | 0.09 | 0.08 |
| p-Value | 0.20 | 0.29 | 0.18 | 0.08 |
| t Stat | 15.67 | | 2.98 | |
| t Critical two-tail | 2.01 | | 2.00 | |
| Generation 100 | | | | |
| Mean | 0.10 | 0.05 | 0.07 | 0.06 |
| p-Value | 0.17 | 0.13 | 0.09 | 0.07 |
| t Stat | 11.72 | | 2.24 | |
| t Critical two-tail | 2.01 | | 2.02 | |
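These tables report, for each generation, a t statistic and a two-tailed critical value per dataset. The test variant is not stated. Critical values that vary between 2.00 and 2.04 are consistent with Welch's unequal-variance test, whose degrees of freedom depend on the data, but that is an inference. A minimal standard-library sketch of the Welch statistic and its Welch-Satterthwaite degrees of freedom follows. The critical value itself requires the t-distribution quantile (for example `scipy.stats.t.ppf(0.975, df)` if SciPy is available), and the sample values are hypothetical.

```python
import math
from statistics import mean, variance

def welch_t(a, b):
    """Welch's two-sample t statistic and Welch-Satterthwaite degrees of freedom."""
    se_a = variance(a) / len(a)  # squared standard error of the mean of a
    se_b = variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(se_a + se_b)
    df = (se_a + se_b) ** 2 / (se_a ** 2 / (len(a) - 1) + se_b ** 2 / (len(b) - 1))
    return t, df

# Hypothetical per-run values for two methods at one generation
bbaco = [0.16, 0.15, 0.17, 0.16]
bbalhs = [0.14, 0.13, 0.15, 0.14]
t, df = welch_t(bbaco, bbalhs)
print(round(t, 3), round(df, 1))  # 3.464 6.0
```

A difference is significant at the 5% level when |t| exceeds the two-tailed critical value for the computed df.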

| Statistic | Reuters: BBACO | Reuters: BBALHS | WebKB: BBACO | WebKB: BBALHS |
|---|---|---|---|---|
| Generation 1 | | | | |
| Mean | 37.28 | 44.66 | 32.08 | 45.86 |
| p-Value | 0.39 | 0.35 | 0.26 | 0.31 |
| t Stat | −7.60 | | −18.59 | |
| t Critical two-tail | 2.02 | | 2.00 | |
| Generation 20 | | | | |
| Mean | 52.33 | 55.36 | 56.48 | 64.28 |
| p-Value | 0.32 | 0.16 | 0.13 | 0.36 |
| t Stat | −3.26 | | −7.38 | |
| t Critical two-tail | 2.04 | | 2.04 | |
| Generation 50 | | | | |
| Mean | 60.23 | 57.54 | 64.15 | 70.78 |
| p-Value | 0.64 | 0.06 | 0.19 | 0.15 |
| t Stat | 2.14 | | −8.38 | |
| t Critical two-tail | 2.00 | | 2.04 | |
| Generation 80 | | | | |
| Mean | 72.98 | 70.83 | 73.09 | 71.47 |
| p-Value | 0.43 | 0.13 | 0.16 | 0.29 |
| t Stat | 3.48 | | 2.58 | |
| t Critical two-tail | 2.03 | | 2.03 | |
| Generation 100 | | | | |
| Mean | 73.98 | 71.16 | 74.27 | 72.79 |
| p-Value | 0.51 | 0.29 | 0.22 | 0.18 |
| t Stat | 2.79 | | 2.82 | |
| t Critical two-tail | 2.00 | | 2.01 | |

| Statistic | Reuters: BBACO | Reuters: BBALHS | WebKB: BBACO | WebKB: BBALHS |
|---|---|---|---|---|
| Generation 1 | | | | |
| Mean | 35.83 | 31.96 | 47.16 | 34.30 |
| p-Value | 0.63 | 0.39 | 0.08 | 0.15 |
| t Stat | 2.11 | | 18.56 | |
| t Critical two-tail | 2.03 | | 2.03 | |
| Generation 20 | | | | |
| Mean | 24.03 | 20.50 | 23.75 | 23.50 |
| p-Value | 0.79 | 0.38 | 0.13 | 0.15 |
| t Stat | 3.14 | | 0.21 | |
| t Critical two-tail | 2.03 | | 2.01 | |
| Generation 50 | | | | |
| Mean | 20.42 | 20.00 | 21.86 | 23.37 |
| p-Value | 0.72 | 0.25 | 0.42 | 0.27 |
| t Stat | 0.45 | | −1.04 | |
| t Critical two-tail | 2.03 | | 2.00 | |
| Generation 80 | | | | |
| Mean | 14.80 | 18.50 | 18.98 | 21.50 |
| p-Value | 0.54 | 0.29 | 0.36 | 0.16 |
| t Stat | −7.24 | | −3.22 | |
| t Critical two-tail | 2.01 | | 2.04 | |
| Generation 100 | | | | |
| Mean | 14.60 | 18.50 | 15.89 | 21.00 |
| p-Value | 0.84 | 0.27 | 0.45 | 0.19 |
| t Stat | −4.57 | | −7.05 | |
| t Critical two-tail | 2.02 | | 2.03 | |

| Statistic | Reuters: BBACO | Reuters: BBALHS | WebKB: BBACO | WebKB: BBALHS |
|---|---|---|---|---|
| Generation 1 | | | | |
| Mean | 90.85 | 86.90 | 90.72 | 86.78 |
| p-Value | 0.14 | 0.38 | 0.40 | 0.16 |
| t Stat | 7.45 | | 3.50 | |
| t Critical two-tail | 2.00 | | 2.02 | |
| Generation 20 | | | | |
| Mean | 87.91 | 86.25 | 88.00 | 85.24 |
| p-Value | 0.18 | 0.21 | 0.32 | 0.06 |
| t Stat | 1.88 | | 2.51 | |
| t Critical two-tail | 2.03 | | 2.00 | |
| Generation 50 | | | | |
| Mean | 84.73 | 85.61 | 84.00 | 81.65 |
| p-Value | 0.09 | 0.19 | 0.10 | 0.13 |
| t Stat | −0.90 | | 1.86 | |
| t Critical two-tail | 2.00 | | 2.00 | |
| Generation 80 | | | | |
| Mean | 78.07 | 68.95 | 75.12 | 79.15 |
| p-Value | 0.17 | 0.32 | 0.07 | 0.16 |
| t Stat | 6.88 | | −2.00 | |
| t Critical two-tail | 2.02 | | 2.00 | |
| Generation 100 | | | | |
| Mean | 74.52 | 62.95 | 70.85 | 77.64 |
| p-Value | 0.24 | 0.14 | 0.21 | 0.08 |
| t Stat | 5.64 | | −2.84 | |
| t Critical two-tail | 2.00 | | 2.02 | |


| Class Name | Quality of Final Solution | # of the Selected Features | Reduction Rate (%) |

|---|---|---|---|

| Earn | 0.87 | 307 | 82.87 |

| Acquisition | 0.88 | 394 | 84.76 |

| Trade | 0.90 | 103 | 89.24 |

| Ship | 0.88 | 56 | 84.09 |

| Grain | 0.73 | 26 | 82.31 |

| Crude | 0.75 | 131 | 85.11 |

| Interest | 0.83 | 45 | 88.64 |

| Money-fx | 0.76 | 78 | 87.62 |

| Corn | 0.74 | 89 | 88.35 |

| Wheat | 0.79 | 157 | 81.06 |

| Class Name | Quality of Final Solution | # of the Selected Features | Reduction Rate (%) |

|---|---|---|---|

| Student | 0.81 | 226 | 90.23 |

| Faculty | 0.87 | 179 | 93.39 |

| Course | 0.74 | 105 | 94.41 |

| Project | 0.71 | 103 | 93.51 |
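Reduction rate is conventionally defined as the percentage of the original features removed by the selection, RR = (1 − |S|/F) × 100, where |S| is the number of selected features and F is the original number of features. Assuming the two tables above use this usual definition (the formula itself is not reproduced in this section), it can be computed as follows; the function name and the example counts are hypothetical.

```python
def reduction_rate(n_selected, n_total):
    """Percentage of the original F features removed by the selection: (1 - |S| / F) * 100."""
    if n_total <= 0 or not 0 <= n_selected <= n_total:
        raise ValueError("need n_total > 0 and 0 <= n_selected <= n_total")
    return (1 - n_selected / n_total) * 100

print(round(reduction_rate(150, 1000), 2))  # 85.0
```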

| Dataset | Metric | Classifier | CHI | IG | GI | BBALHS | BBACO |
|---|---|---|---|---|---|---|---|
| Reuters | Micro average F1 | NB | 88.80 | 90.80 | 90.50 | 92.78 | 93.76 |
| Reuters | Micro average F1 | SVM | 86.70 | 89.40 | 89.80 | 92.55 | 94.08 |
| Reuters | Micro average F1 | KNN | 86.30 | 89.90 | 90.30 | 92.63 | 93.17 |
| Reuters | Macro average F1 | NB | 79.50 | 78.50 | 77.20 | 89.87 | 90.03 |
| Reuters | Macro average F1 | SVM | 81.70 | 77.20 | 75.90 | 88.76 | 90.05 |
| Reuters | Macro average F1 | KNN | 66.60 | 68.30 | 69.10 | 88.04 | 89.49 |
| WebKB | Micro average F1 | NB | 79.50 | 78.20 | 77.50 | 91.79 | 92.72 |
| WebKB | Micro average F1 | SVM | 88.30 | 89.30 | 89.10 | 91.64 | 92.84 |
| WebKB | Micro average F1 | KNN | 65.30 | 66.70 | 65.70 | 90.94 | 92.06 |
| WebKB | Macro average F1 | NB | 78.20 | 76.90 | 75.90 | 89.82 | 91.67 |
| WebKB | Macro average F1 | SVM | 87.00 | 87.90 | 87.80 | 89.37 | 90.51 |
| WebKB | Macro average F1 | KNN | 60.70 | 62.50 | 61.40 | 88.03 | 90.87 |

| Class | NB P | NB R | NB F | SVM P | SVM R | SVM F | KNN P | KNN R | KNN F |
|---|---|---|---|---|---|---|---|---|---|
| Earn | 99.18 | 98.60 | 98.89 | 98.09 | 95.35 | 96.70 | 94.02 | 92.25 | 93.13 |
| Acquisition | 93.16 | 98.60 | 95.80 | 85.95 | 96.78 | 91.04 | 90.54 | 82.68 | 86.43 |
| Trade | 96.36 | 91.98 | 94.12 | 97.05 | 87.97 | 92.29 | 87.17 | 92.04 | 89.54 |
| Ship | 97.56 | 78.43 | 86.96 | 96.76 | 75.29 | 84.69 | 82.25 | 88.39 | 85.21 |
| Grain | 91.96 | 71.53 | 80.47 | 94.20 | 78.14 | 85.42 | 81.02 | 89.03 | 84.84 |
| Crude | 81.70 | 94.58 | 87.67 | 87.76 | 82.41 | 85.00 | 97.79 | 91.19 | 94.37 |
| Interest | 95.08 | 94.79 | 94.93 | 96.46 | 88.02 | 92.05 | 82.32 | 87.12 | 84.65 |
| Money-fx | 97.37 | 79.20 | 87.35 | 91.10 | 81.99 | 86.31 | 89.20 | 98.67 | 93.70 |
| Corn | 89.63 | 82.59 | 85.97 | 90.15 | 86.23 | 88.15 | 87.87 | 78.35 | 82.84 |
| Wheat | 88.76 | 84.50 | 86.58 | 88.63 | 83.39 | 85.93 | 89.67 | 82.12 | 85.73 |
| Average | 93.08 | 87.48 | 89.87 | 92.61 | 85.56 | 88.76 | 88.18 | 88.18 | 88.04 |
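The per-class values in the Reuters table above are consistent with the standard definitions. Each F value is the harmonic mean of its P and R (for Earn under NB, 2 × 99.18 × 98.60 / (99.18 + 98.60) ≈ 98.89), and the NB "Average" F of 89.87 equals the unweighted mean of the ten per-class F values, that is, a macro average. The sketch below reproduces both checks and contrasts macro averaging with micro averaging, which pools true positives, false positives, and false negatives across classes before computing F1. The per-class counts used for the micro example are hypothetical, since the tables report only percentages, and the function names are illustrative.

```python
def f1(precision, recall):
    """Harmonic mean of precision and recall (any consistent unit, e.g. % or fraction)."""
    total = precision + recall
    return 0.0 if total == 0 else 2 * precision * recall / total

def macro_f1(per_class_f1):
    """Unweighted mean of per-class F1 scores: every class counts equally."""
    return sum(per_class_f1) / len(per_class_f1)

def micro_f1(counts):
    """Pool (tp, fp, fn) over all classes, then compute F1 once; returns a percentage."""
    tp = sum(c[0] for c in counts.values())
    fp = sum(c[1] for c in counts.values())
    fn = sum(c[2] for c in counts.values())
    return 100 * f1(tp / (tp + fp), tp / (tp + fn))

# NB per-class F values from the Reuters table above (Earn ... Wheat)
nb_f = [98.89, 95.80, 94.12, 86.96, 80.47, 87.67, 94.93, 87.35, 85.97, 86.58]
print(round(f1(99.18, 98.60), 2))  # 98.89 (Earn row)
print(round(macro_f1(nb_f), 2))    # 89.87 (Average row)

# Hypothetical counts: a frequent class and a rare one
counts = {"earn": (8, 2, 1), "ship": (1, 1, 2)}
print(round(micro_f1(counts), 2))  # 75.0
```

Because macro averaging weights every class equally, weak results on small classes such as Grain pull it down more than they affect the micro average, which is dominated by frequent classes.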

| Class | NB P | NB R | NB F | SVM P | SVM R | SVM F | KNN P | KNN R | KNN F |
|---|---|---|---|---|---|---|---|---|---|
| Project | 96.97 | 85.69 | 90.98 | 83.91 | 97.75 | 90.30 | 88.68 | 97.38 | 92.83 |
| Course | 92.18 | 86.14 | 89.06 | 91.56 | 83.66 | 87.43 | 90.21 | 84.93 | 87.49 |
| Faculty | 84.85 | 91.16 | 87.89 | 88.98 | 82.51 | 85.62 | 85.96 | 76.44 | 80.92 |
| Student | 89.48 | 93.33 | 91.36 | 98.42 | 90.19 | 94.13 | 98.11 | 84.62 | 90.87 |
| Average | 90.87 | 89.08 | 89.82 | 90.72 | 88.53 | 89.37 | 90.74 | 85.84 | 88.03 |

| Class | NB P | NB R | NB F | SVM P | SVM R | SVM F | KNN P | KNN R | KNN F |
|---|---|---|---|---|---|---|---|---|---|
| Earn | 94.31 | 92.66 | 93.48 | 96.54 | 91.41 | 93.90 | 91.74 | 93.94 | 92.83 |
| Acquisition | 90.87 | 97.25 | 93.95 | 89.17 | 98.36 | 93.54 | 91.77 | 96.56 | 94.10 |
| Trade | 94.22 | 91.18 | 92.68 | 96.06 | 91.28 | 93.61 | 94.59 | 88.91 | 91.66 |
| Ship | 97.56 | 78.43 | 86.96 | 93.62 | 77.39 | 84.73 | 95.37 | 70.55 | 81.10 |
| Grain | 94.92 | 77.78 | 85.50 | 93.73 | 82.64 | 87.84 | 89.13 | 76.47 | 82.32 |
| Crude | 96.49 | 92.12 | 94.25 | 95.16 | 91.57 | 93.33 | 93.99 | 95.54 | 94.76 |
| Interest | 93.29 | 89.87 | 91.55 | 91.53 | 88.87 | 90.18 | 93.29 | 93.86 | 93.57 |
| Money-fx | 93.80 | 90.81 | 92.28 | 92.07 | 87.69 | 89.83 | 95.62 | 92.49 | 94.03 |
| Corn | 90.31 | 79.52 | 84.57 | 90.64 | 82.59 | 86.43 | 86.89 | 82.98 | 84.89 |
| Wheat | 82.87 | 87.45 | 85.10 | 88.26 | 85.98 | 87.11 | 86.97 | 84.35 | 85.64 |
| Average | 92.86 | 87.71 | 90.03 | 92.68 | 87.78 | 90.05 | 91.94 | 87.56 | 89.49 |

| Class | NB P | NB R | NB F | SVM P | SVM R | SVM F | KNN P | KNN R | KNN F |
|---|---|---|---|---|---|---|---|---|---|
| Project | 95.88 | 92.29 | 94.05 | 96.67 | 91.57 | 94.05 | 91.76 | 93.35 | 92.55 |
| Course | 87.72 | 93.57 | 90.55 | 97.18 | 89.33 | 93.09 | 93.55 | 86.74 | 90.02 |
| Faculty | 88.61 | 79.89 | 84.02 | 81.13 | 88.22 | 84.53 | 85.57 | 92.17 | 88.75 |
| Student | 97.69 | 98.42 | 98.05 | 86.85 | 94.20 | 90.38 | 90.79 | 93.58 | 92.16 |
| Average | 92.48 | 91.04 | 91.67 | 90.46 | 90.83 | 90.51 | 90.42 | 91.46 | 90.87 |
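The F values in the per-class tables are consistent with the standard F1 measure, the harmonic mean of precision (P) and recall (R), and each Average F entry matches the unweighted mean of the per-class F values (macro-averaging), not the F1 of the averaged P and R. The following Python sketch assumes those definitions and recomputes the NB columns for the Project, Course, Faculty and Student classes; the function names `f1` and `macro_f1` are illustrative and are not taken from the authors' code.

```python
def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, in the same units as the inputs."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def macro_f1(per_class: dict[str, tuple[float, float]]) -> float:
    """Unweighted mean of the per-class F1 scores (macro-averaging)."""
    scores = [f1(p, r) for p, r in per_class.values()]
    return sum(scores) / len(scores)


# NB precision and recall (%) copied from the Project/Course/Faculty/Student table.
nb_scores = {
    "Project": (96.97, 85.69),
    "Course": (92.18, 86.14),
    "Faculty": (84.85, 91.16),
    "Student": (89.48, 93.33),
}

for name, (p, r) in nb_scores.items():
    print(f"{name}: F = {f1(p, r):.2f}")
print(f"Macro F1 = {macro_f1(nb_scores):.2f}")
```

Run as is, it prints F values of 90.98, 89.06, 87.89 and 91.36 and a macro F1 of 89.82, matching the NB F and Average entries of that table. Because the per-class F values are averaged directly, each class contributes equally regardless of how many documents it contains.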

| Dataset | Classifier | Mean (BBALHS) | Mean (BBACO) | p-Value (BBALHS) | p-Value (BBACO) | t Stat | t Critical two-tail |
|---|---|---|---|---|---|---|---|
| Reuters | NB | 92.25 | 93.41 | 0.08 | 0.18 | −8.23 | 2.02 |
| WebKB | NB | 91.35 | 92.22 | 0.49 | 0.33 | −5.83 | 2.00 |
| Reuters | KNN | 92.17 | 93.09 | 0.10 | 0.59 | −6.37 | 2.00 |
| WebKB | KNN | 90.59 | 91.88 | 0.62 | 0.99 | −9.74 | 2.02 |
| Reuters | SVM | 91.83 | 93.67 | 0.56 | 0.59 | −16.54 | 2.00 |
| WebKB | SVM | 91.20 | 92.14 | 0.42 | 0.98 | −7.41 | 2.03 |
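Each row of the t-test table pairs a t statistic with its two-tailed critical value. Under the usual decision rule, the difference between the BBALHS and BBACO means is significant when the absolute t statistic exceeds the critical value; critical values of about 2.0 are consistent with a 5% two-tailed test, although the significance level is an assumption here. The sketch below applies that rule to the reported values only and does not recompute the statistics, because the per-run scores behind them are not given in this section. The `TTestRow` type and the `is_significant` helper are hypothetical names.

```python
from typing import NamedTuple


class TTestRow(NamedTuple):
    dataset: str
    classifier: str
    mean_bbalhs: float
    mean_bbaco: float
    t_stat: float
    t_critical: float


# Values copied from the t-test table (per-run scores are not available here).
ROWS = [
    TTestRow("Reuters", "NB", 92.25, 93.41, -8.23, 2.02),
    TTestRow("WebKB", "NB", 91.35, 92.22, -5.83, 2.00),
    TTestRow("Reuters", "KNN", 92.17, 93.09, -6.37, 2.00),
    TTestRow("WebKB", "KNN", 90.59, 91.88, -9.74, 2.02),
    TTestRow("Reuters", "SVM", 91.83, 93.67, -16.54, 2.00),
    TTestRow("WebKB", "SVM", 91.20, 92.14, -7.41, 2.03),
]


def is_significant(row: TTestRow) -> bool:
    """Two-tailed decision rule: reject equal means when |t| exceeds the critical value."""
    return abs(row.t_stat) > row.t_critical


for row in ROWS:
    higher = "BBACO" if row.mean_bbaco > row.mean_bbalhs else "BBALHS"
    verdict = "significant" if is_significant(row) else "not significant"
    print(f"{row.dataset:8} {row.classifier:4} higher mean: {higher}, {verdict}")
```

All six rows come out significant, and in each one the negative t statistic accompanies a lower BBALHS mean, which is what one would expect if the statistic is computed as BBALHS minus BBACO.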

| Class | Q-BBALHS | Q-BBACO | #F | #F-BBALHS | #F-BBACO | R-BBALHS | R-BBACO |
|---|---|---|---|---|---|---|---|
| Bisnes | 0.55 | 0.63 | 4785 | 1058 | 1014 | 77.89 | 78.81 |
| Hiburan | 0.56 | 0.60 | 10,357 | 1468 | 1328 | 85.83 | 87.18 |
| Pendidikan | 0.63 | 0.71 | 2212 | 564 | 428 | 74.50 | 80.65 |
| Politik | 0.54 | 0.54 | 1534 | 341 | 325 | 77.77 | 78.81 |
| Sains-Teknologi | 0.59 | 0.65 | 3023 | 784 | 751 | 74.07 | 75.16 |
| Sukan | 0.61 | 0.67 | 8320 | 1127 | 946 | 86.45 | 88.63 |

| Class | Q-BBALHS | Q-BBACO | #F | #F-BBALHS | #F-BBACO | R-BBALHS | R-BBACO |
|---|---|---|---|---|---|---|---|
| Addin | 0.416 | 0.483 | 3925 | 315 | 256 | 91.97 | 93.48 |
| Bisnes | 0.536 | 0.547 | 2564 | 335 | 184 | 86.93 | 92.82 |
| Dekotaman | 0.564 | 0.58 | 3396 | 358 | 314 | 89.46 | 90.75 |
| Global | 0.667 | 0.638 | 2052 | 259 | 237 | 87.38 | 88.45 |
| Hiburan | 0.645 | 0.651 | 2947 | 356 | 330 | 87.92 | 88.80 |
| Pendidikan | 0.655 | 0.685 | 3284 | 365 | 303 | 88.89 | 90.77 |
| Santai | 0.517 | 0.592 | 3940 | 428 | 402 | 89.14 | 89.80 |
| Sihat | 0.662 | 0.688 | 3943 | 382 | 374 | 90.31 | 90.51 |
| Sukan | 0.406 | 0.489 | 2041 | 363 | 327 | 82.21 | 83.98 |
| Teknologi | 0.537 | 0.669 | 2818 | 201 | 194 | 92.87 | 93.12 |
| Vroom | 0.616 | 0.668 | 3150 | 362 | 342 | 88.51 | 89.14 |
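The R-BBALHS and R-BBACO columns agree with the feature reduction rate R = (1 − #F-selected / #F) × 100, where #F is the number of features for the class before selection and #F-selected (our shorthand) is the count kept by BBALHS (#F-BBALHS) or BBACO (#F-BBACO). For Bisnes in the first of these tables, BBALHS keeps 1058 of 4785 features, so R = (1 − 1058/4785) × 100 ≈ 77.89. A minimal Python sketch of this arithmetic, with an illustrative function name `reduction_rate`:

```python
def reduction_rate(total_features: int, selected_features: int) -> float:
    """Percentage of the original features removed by feature selection."""
    if total_features <= 0:
        raise ValueError("total_features must be positive")
    if not 0 <= selected_features <= total_features:
        raise ValueError("selected_features must lie in [0, total_features]")
    return (1 - selected_features / total_features) * 100


# (class, #F, #F-BBALHS, #F-BBACO), copied from the tables above.
rows = [
    ("Bisnes", 4785, 1058, 1014),
    ("Hiburan", 10357, 1468, 1328),
    ("Addin", 3925, 315, 256),
]

for name, total, bbalhs, bbaco in rows:
    print(f"{name}: R-BBALHS = {reduction_rate(total, bbalhs):.2f}, "
          f"R-BBACO = {reduction_rate(total, bbaco):.2f}")
```

It prints 77.89 and 78.81 for Bisnes, 85.83 and 87.18 for Hiburan, and 91.97 and 93.48 for Addin, the same values as the R columns.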

| Dataset | Metric | Classifier | No FS | Chi-Square | BBALHS | BBACO |
|---|---|---|---|---|---|---|
| Mix-DS | Micro average F1 | NB | 76.32 | 84.32 | 88.50 | 89.34 |
| Mix-DS | Micro average F1 | SVM | 76.01 | 84.6 | 88.04 | 88.72 |
| Mix-DS | Micro average F1 | KNN | 75.94 | 84.07 | 87.77 | 88.24 |
| Mix-DS | Macro average F1 | NB | 73.40 | 83.58 | 86.61 | 88.42 |
| Mix-DS | Macro average F1 | SVM | 74.72 | 82.15 | 86.98 | 87.38 |
| Mix-DS | Macro average F1 | KNN | 73.35 | 82.42 | 86.35 | 87.47 |
| Harian-Metro | Micro average F1 | NB | 68.20 | 78.94 | 82.38 | 83.56 |
| Harian-Metro | Micro average F1 | SVM | 67.38 | 79.19 | 82.16 | 83.71 |
| Harian-Metro | Micro average F1 | KNN | 66.77 | 78.51 | 81.64 | 83.18 |
| Harian-Metro | Macro average F1 | NB | 67.49 | 76.09 | 81.10 | 83.61 |
| Harian-Metro | Macro average F1 | SVM | 66.29 | 77.13 | 80.86 | 82.16 |
| Harian-Metro | Macro average F1 | KNN | 66.02 | 75.84 | 80.55 | 81.98 |
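The comparison table for Mix-DS and Harian-Metro can also be read as absolute improvements. The sketch below copies its twelve rows, computes the gain of BBACO over No FS, Chi-Square and BBALHS in percentage points, and reports which method scores highest in each row; `RESULTS`, `gains_over` and `best_method` are illustrative names, not part of the original study.

```python
# Scores (%) in the order No FS, Chi-Square, BBALHS, BBACO, copied from the table.
METHODS = ("No FS", "Chi-Square", "BBALHS", "BBACO")
RESULTS = {
    ("Mix-DS", "Micro F1", "NB"): (76.32, 84.32, 88.50, 89.34),
    ("Mix-DS", "Micro F1", "SVM"): (76.01, 84.6, 88.04, 88.72),
    ("Mix-DS", "Micro F1", "KNN"): (75.94, 84.07, 87.77, 88.24),
    ("Mix-DS", "Macro F1", "NB"): (73.40, 83.58, 86.61, 88.42),
    ("Mix-DS", "Macro F1", "SVM"): (74.72, 82.15, 86.98, 87.38),
    ("Mix-DS", "Macro F1", "KNN"): (73.35, 82.42, 86.35, 87.47),
    ("Harian-Metro", "Micro F1", "NB"): (68.20, 78.94, 82.38, 83.56),
    ("Harian-Metro", "Micro F1", "SVM"): (67.38, 79.19, 82.16, 83.71),
    ("Harian-Metro", "Micro F1", "KNN"): (66.77, 78.51, 81.64, 83.18),
    ("Harian-Metro", "Macro F1", "NB"): (67.49, 76.09, 81.10, 83.61),
    ("Harian-Metro", "Macro F1", "SVM"): (66.29, 77.13, 80.86, 82.16),
    ("Harian-Metro", "Macro F1", "KNN"): (66.02, 75.84, 80.55, 81.98),
}


def gains_over(scores: tuple[float, ...], target: str = "BBACO") -> dict[str, float]:
    """Target score minus each other method's score, in percentage points."""
    target_score = scores[METHODS.index(target)]
    return {
        method: round(target_score - score, 2)
        for method, score in zip(METHODS, scores)
        if method != target
    }


def best_method(scores: tuple[float, ...]) -> str:
    """Name of the method with the highest score in one row."""
    return METHODS[scores.index(max(scores))]


for (dataset, metric, classifier), scores in RESULTS.items():
    print(dataset, metric, classifier, best_method(scores), gains_over(scores))
```

For Mix-DS micro-averaged F1 with NB, for instance, BBACO is 13.02 points above No FS, 5.02 above Chi-Square and 0.84 above BBALHS, and BBACO is the highest-scoring method in every row.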

| Class | NB-No FS P | NB-No FS R | NB-No FS F | SVM-No FS P | SVM-No FS R | SVM-No FS F | KNN-No FS P | KNN-No FS R | KNN-No FS F | NB-Chi-Square P | NB-Chi-Square R | NB-Chi-Square F | SVM-Chi-Square P | SVM-Chi-Square R | SVM-Chi-Square F | KNN-Chi-Square P | KNN-Chi-Square R | KNN-Chi-Square F |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Bisnes | 0.93 | 0.79 | 0.85 | 0.91 | 0.80 | 0.85 | 0.93 | 0.78 | 0.85 | 0.93 | 0.91 | 0.92 | 0.91 | 0.89 | 0.90 | 0.95 | 0.93 | 0.94 |
| Hiburan | 0.81 | 0.99 | 0.89 | 0.82 | 0.93 | 0.87 | 0.59 | 0.83 | 0.69 | 0.88 | 0.96 | 0.92 | 0.97 | 0.96 | 0.96 | 0.83 | 0.86 | 0.84 |
| Pendidikan | 0.93 | 0.43 | 0.59 | 0.87 | 0.49 | 0.62 | 0.86 | 0.56 | 0.68 | 0.59 | 0.82 | 0.69 | 0.64 | 0.75 | 0.69 | 0.76 | 0.87 | 0.81 |
| Politik | 0.94 | 0.54 | 0.68 | 0.94 | 0.62 | 0.75 | 0.83 | 0.64 | 0.72 | 0.89 | 0.83 | 0.86 | 0.81 | 0.73 | 0.77 | 0.83 | 0.96 | 0.89 |
| Sains-Teknologi | 0.65 | 0.41 | 0.50 | 0.59 | 0.43 | 0.50 | 0.67 | 0.69 | 0.68 | 0.64 | 0.65 | 0.64 | 0.62 | 0.64 | 0.63 | 0.73 | 0.48 | 0.58 |
| Sukan | 0.99 | 0.82 | 0.90 | 0.96 | 0.84 | 0.89 | 0.85 | 0.73 | 0.78 | 0.98 | 0.98 | 0.98 | 0.97 | 0.98 | 0.98 | 0.82 | 0.93 | 0.87 |
| Average | 0.87 | 0.66 | 0.73 | 0.85 | 0.68 | 0.75 | 0.79 | 0.71 | 0.73 | 0.82 | 0.86 | 0.84 | 0.82 | 0.83 | 0.82 | 0.82 | 0.84 | 0.82 |

| Class | NB-BBALHS P | NB-BBALHS R | NB-BBALHS F | SVM-BBALHS P | SVM-BBALHS R | SVM-BBALHS F | KNN-BBALHS P | KNN-BBALHS R | KNN-BBALHS F | NB-BBACO P | NB-BBACO R | NB-BBACO F | SVM-BBACO P | SVM-BBACO R | SVM-BBACO F | KNN-BBACO P | KNN-BBACO R | KNN-BBACO F |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Bisnes | 0.99 | 0.89 | 0.94 | 0.99 | 0.89 | 0.93 | 0.88 | 0.93 | 0.90 | 0.98 | 0.93 | 0.95 | 0.96 | 0.94 | 0.95 | 0.89 | 0.89 | 0.89 |
| Hiburan | 0.77 | 0.99 | 0.86 | 0.73 | 0.94 | 0.82 | 0.74 | 0.89 | 0.81 | 0.92 | 0.89 | 0.90 | 0.93 | 0.89 | 0.91 | 0.89 | 0.86 | 0.88 |
| Pendidikan | 0.94 | 0.73 | 0.82 | 0.96 | 0.75 | 0.84 | 0.79 | 0.92 | 0.85 | 0.78 | 0.91 | 0.84 | 0.77 | 0.90 | 0.83 | 0.80 | 0.88 | 0.83 |
| Politik | 0.99 | 0.82 | 0.90 | 0.99 | 0.80 | 0.89 | 0.85 | 0.95 | 0.89 | 0.86 | 0.96 | 0.91 | 0.83 | 0.96 | 0.89 | 0.90 | 0.81 | 0.85 |
| Sains-Teknologi | 0.81 | 0.77 | 0.79 | 0.76 | 0.93 | 0.84 | 0.83 | 0.72 | 0.77 | 0.83 | 0.70 | 0.76 | 0.82 | 0.66 | 0.73 | 0.78 | 0.88 | 0.83 |
| Sukan | 0.94 | 0.85 | 0.89 | 0.94 | 0.86 | 0.90 | 0.95 | 0.97 | 0.96 | 0.94 | 0.95 | 0.95 | 0.93 | 0.95 | 0.94 | 0.97 | 0.97 | 0.97 |
| Average | 0.91 | 0.84 | 0.87 | 0.89 | 0.86 | 0.87 | 0.84 | 0.89 | 0.86 | 0.88 | 0.89 | 0.88 | 0.87 | 0.88 | 0.87 | 0.87 | 0.88 | 0.87 |

| Class | NB-No FS P | NB-No FS R | NB-No FS F | SVM-No FS P | SVM-No FS R | SVM-No FS F | KNN-No FS P | KNN-No FS R | KNN-No FS F | NB-Chi-Square P | NB-Chi-Square R | NB-Chi-Square F | SVM-Chi-Square P | SVM-Chi-Square R | SVM-Chi-Square F | KNN-Chi-Square P | KNN-Chi-Square R | KNN-Chi-Square F |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Addin | 0.93 | 0.57 | 0.70 | 0.80 | 0.57 | 0.66 | 0.45 | 0.69 | 0.55 | 0.98 | 0.73 | 0.84 | 0.71 | 0.96 | 0.82 | 0.94 | 0.76 | 0.84 |
| Bisnes | 0.62 | 0.76 | 0.69 | 0.80 | 0.55 | 0.65 | 0.86 | 0.50 | 0.63 | 0.68 | 0.78 | 0.73 | 0.88 | 0.65 | 0.75 | 0.84 | 0.64 | 0.73 |
| Dekotaman | 0.88 | 0.51 | 0.64 | 0.77 | 0.53 | 0.62 | 0.93 | 0.53 | 0.68 | 0.98 | 0.68 | 0.80 | 0.65 | 0.88 | 0.75 | 0.66 | 0.73 | 0.70 |
| Global | 0.93 | 0.50 | 0.65 | 0.65 | 0.64 | 0.64 | 0.95 | 0.52 | 0.67 | 0.81 | 0.74 | 0.77 | 0.73 | 0.81 | 0.77 | 0.87 | 0.66 | 0.75 |
| Hiburan | 0.91 | 0.50 | 0.64 | 0.76 | 0.52 | 0.62 | 0.93 | 0.51 | 0.66 | 0.90 | 0.69 | 0.78 | 0.90 | 0.58 | 0.70 | 0.69 | 0.85 | 0.76 |
| Pendidikan | 0.86 | 0.60 | 0.71 | 0.74 | 0.66 | 0.69 | 0.88 | 0.63 | 0.73 | 0.65 | 0.73 | 0.69 | 0.76 | 0.65 | 0.70 | 0.78 | 0.69 | 0.73 |
| Santai | 0.81 | 0.51 | 0.63 | 0.56 | 0.55 | 0.55 | 0.86 | 0.54 | 0.66 | 0.75 | 0.86 | 0.80 | 0.66 | 0.71 | 0.68 | 0.62 | 0.82 | 0.71 |
| Sihat | 0.87 | 0.57 | 0.69 | 0.54 | 0.79 | 0.64 | 0.42 | 0.79 | 0.54 | 0.74 | 0.62 | 0.68 | 0.75 | 0.87 | 0.81 | 0.87 | 0.76 | 0.81 |
| Sukan | 0.59 | 0.98 | 0.74 | 0.66 | 0.91 | 0.77 | 0.59 | 0.97 | 0.73 | 0.56 | 0.72 | 0.63 | 0.97 | 0.76 | 0.85 | 0.74 | 0.99 | 0.85 |
| Teknologi | 0.78 | 0.64 | 0.70 | 0.85 | 0.58 | 0.69 | 0.78 | 0.66 | 0.71 | 0.98 | 0.68 | 0.81 | 0.91 | 0.73 | 0.81 | 0.88 | 0.69 | 0.77 |
| Vroom | 0.63 | 0.63 | 0.63 | 0.89 | 0.63 | 0.74 | 0.91 | 0.55 | 0.69 | 0.98 | 0.76 | 0.85 | 0.93 | 0.77 | 0.84 | 0.86 | 0.59 | 0.70 |
| Average | 0.80 | 0.62 | 0.67 | 0.73 | 0.63 | 0.66 | 0.78 | 0.63 | 0.66 | 0.82 | 0.73 | 0.76 | 0.80 | 0.76 | 0.77 | 0.80 | 0.74 | 0.76 |

| Class | NB-BBALHS P | NB-BBALHS R | NB-BBALHS F | SVM-BBALHS P | SVM-BBALHS R | SVM-BBALHS F | KNN-BBALHS P | KNN-BBALHS R | KNN-BBALHS F | NB-BBACO P | NB-BBACO R | NB-BBACO F | SVM-BBACO P | SVM-BBACO R | SVM-BBACO F | KNN-BBACO P | KNN-BBACO R | KNN-BBACO F |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Addin | 0.84 | 0.77 | 0.80 | 0.77 | 0.86 | 0.81 | 0.82 | 0.76 | 0.79 | 0.71 | 0.95 | 0.81 | 0.90 | 0.82 | 0.86 | 0.86 | 0.84 | 0.85 |
| Bisnes | 0.77 | 0.94 | 0.85 | 0.87 | 0.77 | 0.82 | 0.85 | 0.76 | 0.80 | 0.89 | 0.73 | 0.80 | 0.82 | 0.78 | 0.80 | 0.84 | 0.79 | 0.82 |
| Dekotaman | 0.92 | 0.76 | 0.83 | 0.91 | 0.81 | 0.86 | 0.94 | 0.81 | 0.87 | 0.89 | 0.92 | 0.90 | 0.75 | 0.86 | 0.80 | 0.83 | 0.87 | 0.85 |
| Global | 0.78 | 0.88 | 0.82 | 0.72 | 0.86 | 0.78 | 0.75 | 0.85 | 0.80 | 0.85 | 0.71 | 0.77 | 0.84 | 0.77 | 0.80 | 0.79 | 0.84 | 0.81 |
| Hiburan | 0.73 | 0.79 | 0.76 | 0.83 | 0.73 | 0.78 | 0.71 | 0.80 | 0.76 | 0.87 | 0.92 | 0.89 | 0.77 | 0.89 | 0.83 | 0.86 | 0.82 | 0.84 |
| Pendidikan | 0.84 | 0.89 | 0.86 | 0.84 | 0.78 | 0.81 | 0.88 | 0.89 | 0.88 | 0.81 | 0.85 | 0.83 | 0.75 | 0.87 | 0.81 | 0.88 | 0.77 | 0.82 |
| Santai | 0.71 | 0.77 | 0.74 | 0.74 | 0.81 | 0.78 | 0.71 | 0.85 | 0.77 | 0.93 | 0.77 | 0.84 | 0.89 | 0.76 | 0.82 | 0.82 | 0.79 | 0.80 |
| Sihat | 0.87 | 0.79 | 0.83 | 0.82 | 0.79 | 0.81 | 0.76 | 0.76 | 0.76 | 0.76 | 0.87 | 0.81 | 0.88 | 0.92 | 0.90 | 0.81 | 0.81 | 0.81 |
| Sukan | 0.79 | 0.83 | 0.81 | 0.89 | 0.74 | 0.81 | 0.74 | 0.88 | 0.81 | 0.89 | 0.92 | 0.90 | 0.90 | 0.74 | 0.81 | 0.88 | 0.74 | 0.80 |
| Teknologi | 0.88 | 0.73 | 0.80 | 0.84 | 0.79 | 0.82 | 0.83 | 0.79 | 0.81 | 0.84 | 0.78 | 0.81 | 0.83 | 0.80 | 0.81 | 0.88 | 0.81 | 0.84 |
| Vroom | 0.84 | 0.79 | 0.81 | 0.91 | 0.76 | 0.83 | 0.88 | 0.76 | 0.81 | 0.78 | 0.85 | 0.81 | 0.72 | 0.89 | 0.80 | 0.84 | 0.73 | 0.78 |
| Average | 0.82 | 0.81 | 0.81 | 0.83 | 0.79 | 0.81 | 0.81 | 0.81 | 0.81 | 0.84 | 0.84 | 0.84 | 0.82 | 0.83 | 0.82 | 0.84 | 0.80 | 0.82 |

| Class | Q-BBALHS | Q-BBACO | #F | #F-BBALHS | #F-BBACO | R-BBALHS | R-BBACO |
|---|---|---|---|---|---|---|---|
| Economy | 0.54 | 0.61 | 1541 | 537 | 397 | 65.15 | 74.24 |
| Politics | 0.59 | 0.64 | 1427 | 343 | 152 | 75.96 | 89.35 |
| Sport | 0.62 | 0.68 | 1874 | 294 | 199 | 84.31 | 89.38 |
| Science | 0.63 | 0.68 | 1505 | 235 | 176 | 84.39 | 88.31 |
| Art | 0.66 | 0.71 | 1693 | 544 | 267 | 67.87 | 84.23 |

| Class | NB-BBALHS P | NB-BBALHS R | NB-BBALHS F | SVM-BBALHS P | SVM-BBALHS R | SVM-BBALHS F | NB-BBACO P | NB-BBACO R | NB-BBACO F | SVM-BBACO P | SVM-BBACO R | SVM-BBACO F |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Economy | 94.85 | 83.65 | 88.90 | 91.13 | 95.20 | 93.12 | 89.04 | 91.51 | 90.26 | 94.17 | 99.59 | 96.80 |
| Politics | 92.28 | 97.32 | 94.73 | 85.25 | 97.55 | 90.99 | 86.92 | 97.85 | 92.06 | 88.50 | 97.63 | 92.84 |
| Sport | 91.10 | 85.55 | 88.24 | 93.87 | 85.98 | 89.75 | 97.55 | 88.90 | 93.02 | 92.66 | 83.98 | 88.11 |
| Science | 90.94 | 89.11 | 90.02 | 86.30 | 87.50 | 86.90 | 91.05 | 86.72 | 88.83 | 86.44 | 90.50 | 88.42 |
| Art | 87.51 | 96.46 | 91.77 | 97.73 | 91.69 | 94.61 | 93.62 | 90.46 | 92.01 | 99.34 | 92.69 | 95.90 |
| Average | 91.34 | 90.42 | 90.73 | 90.86 | 91.58 | 91.07 | 91.64 | 91.09 | 91.24 | 92.22 | 92.88 | 92.42 |

| Classifier | Mean (BBALHS) | Mean (BBACO) | p-Value (BBALHS) | p-Value (BBACO) | t Stat | t Critical two-tail |
|---|---|---|---|---|---|---|
| NB | 90.78 | 91.23 | 0.20 | 0.96 | −6.89 | 2.00 |
| SVM | 91.11 | 92.52 | 0.07 | 0.46 | −23.04 | 2.00 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Adel, A.; Omar, N.; Abdullah, S.; Al-Shabi, A. Co-Operative Binary Bat Optimizer with Rough Set Reducts for Text Feature Selection. Appl. Sci. 2022, 12, 11296. https://doi.org/10.3390/app122111296
