Real-Time Risk Assessment for Road Transportation of Hazardous Materials Based on GRU-DNN with Multimodal Feature Embedding

Abstract

1. Introduction

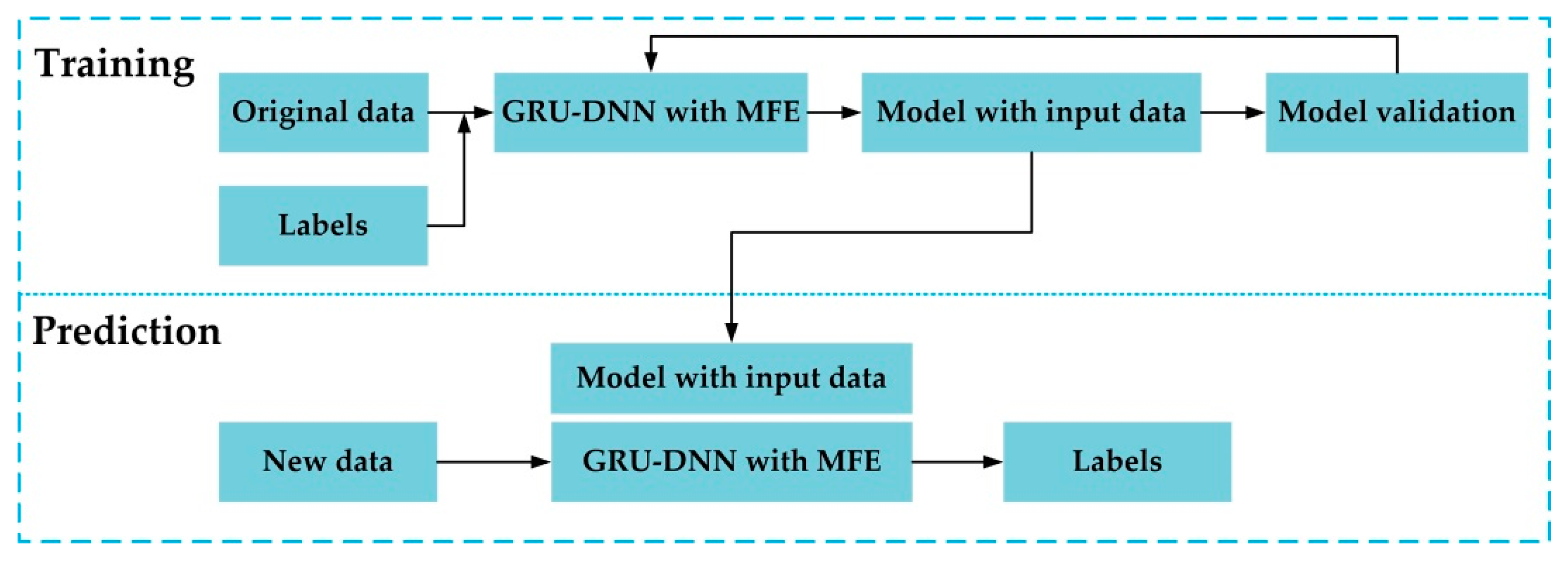

2. Model

2.1. Risk Level and Contributing Factors

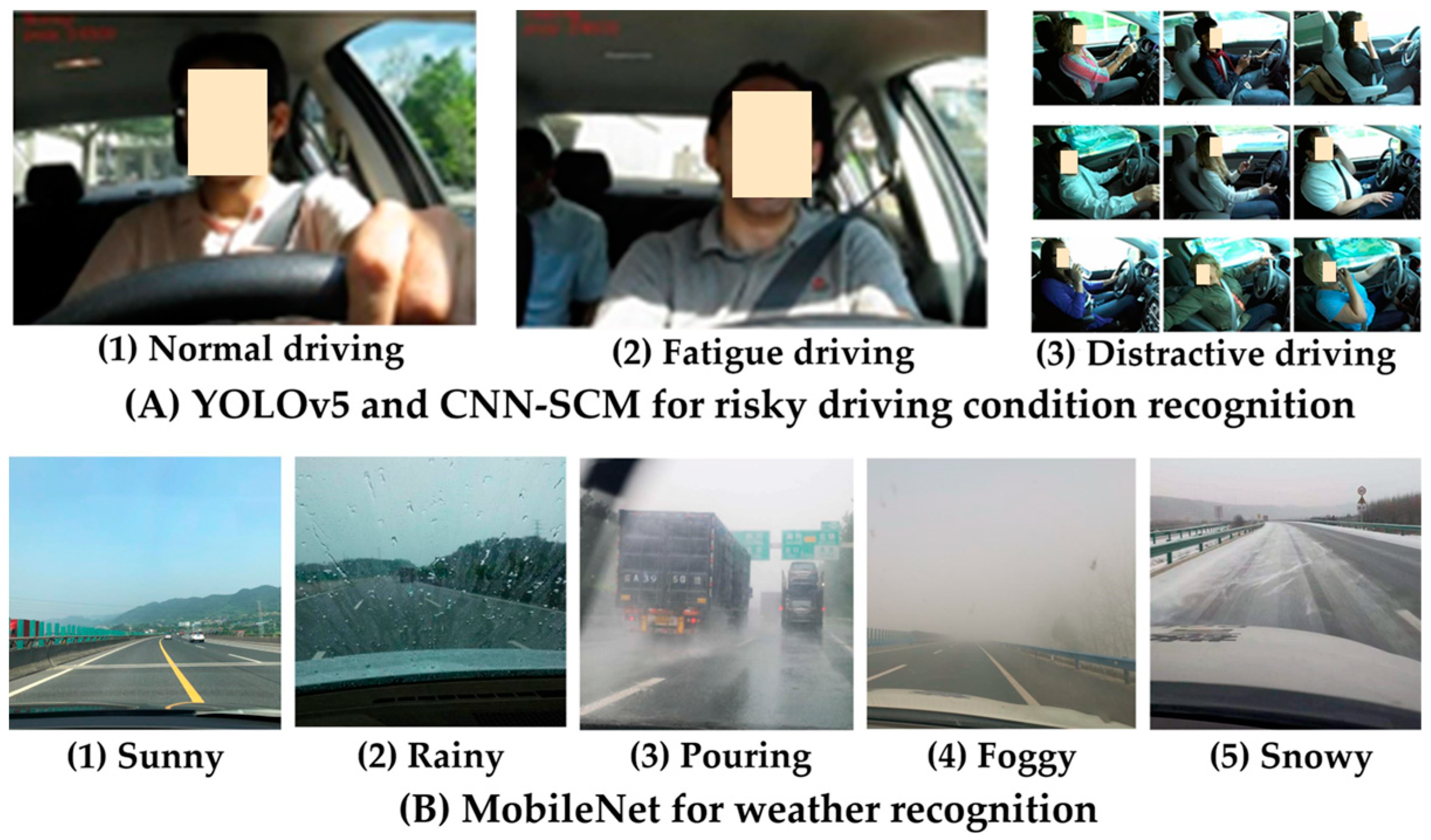

2.2. Multimodal Feature Embedding

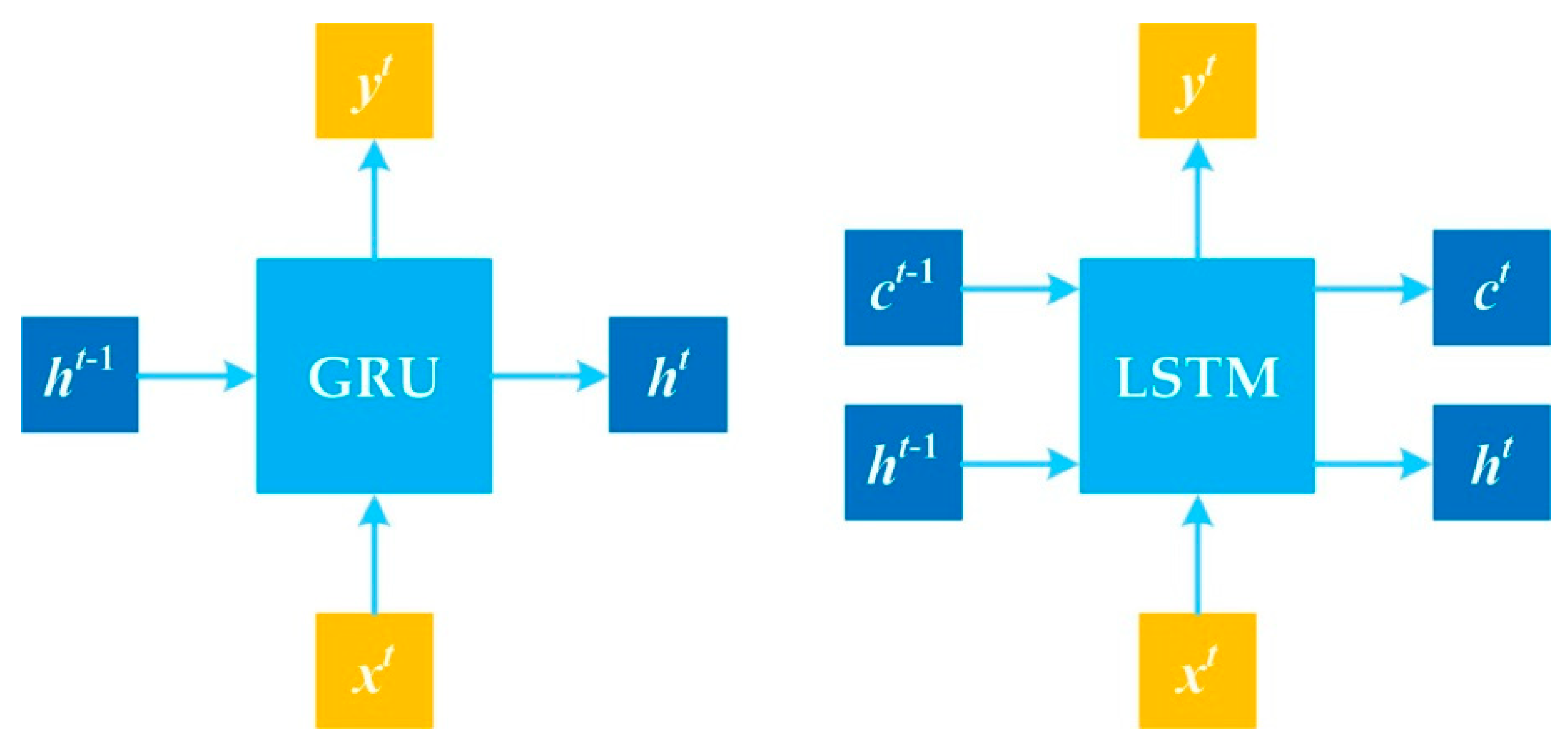

2.3. GRU-DNN

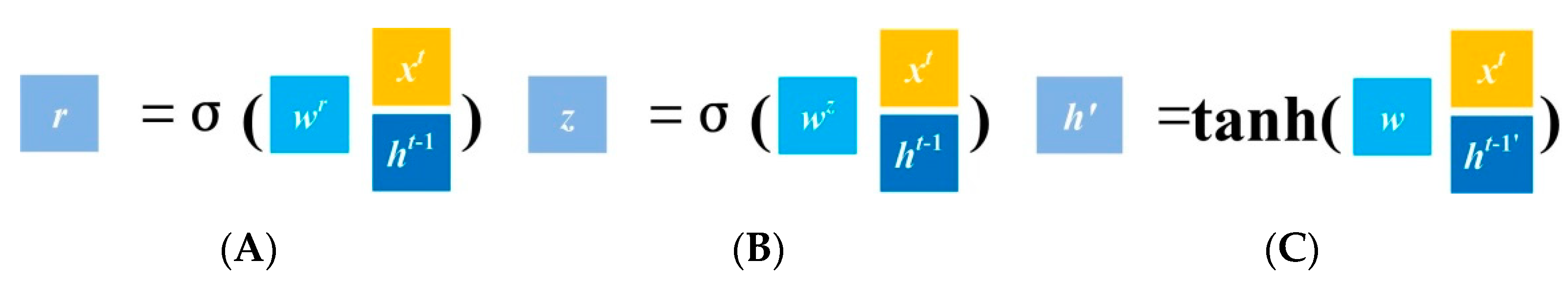

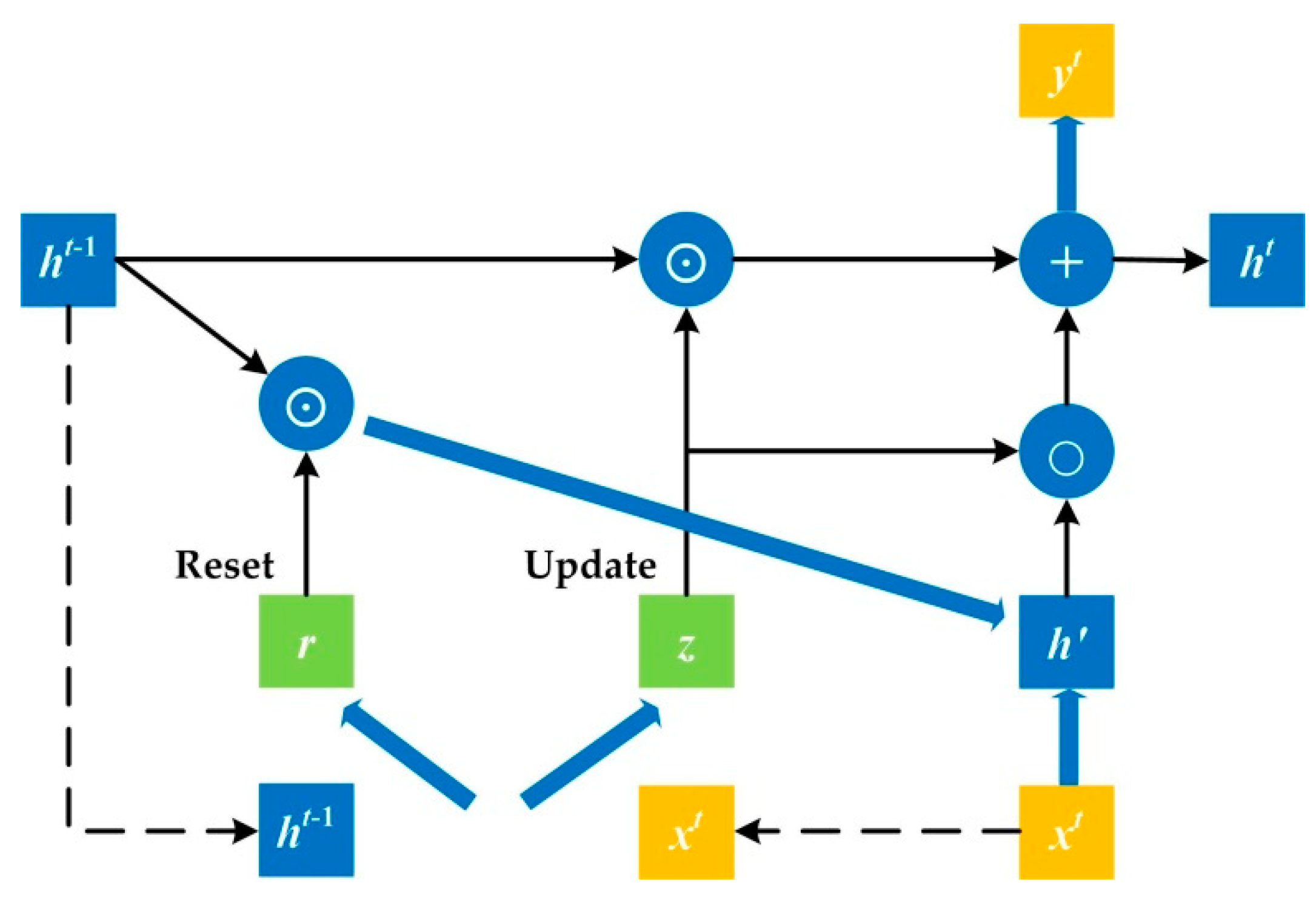

2.3.1. GRU Model

2.3.2. DNN Model

3. Experiment and Analysis

3.1. Data Preliminary

3.2. Performance Measures

3.3. Model Training

3.4. Model Comparison

4. Adversarial Attack and Defenses

4.1. Adversarial Training

4.2. Dimensionality Reduction

4.3. Prediction Similarity

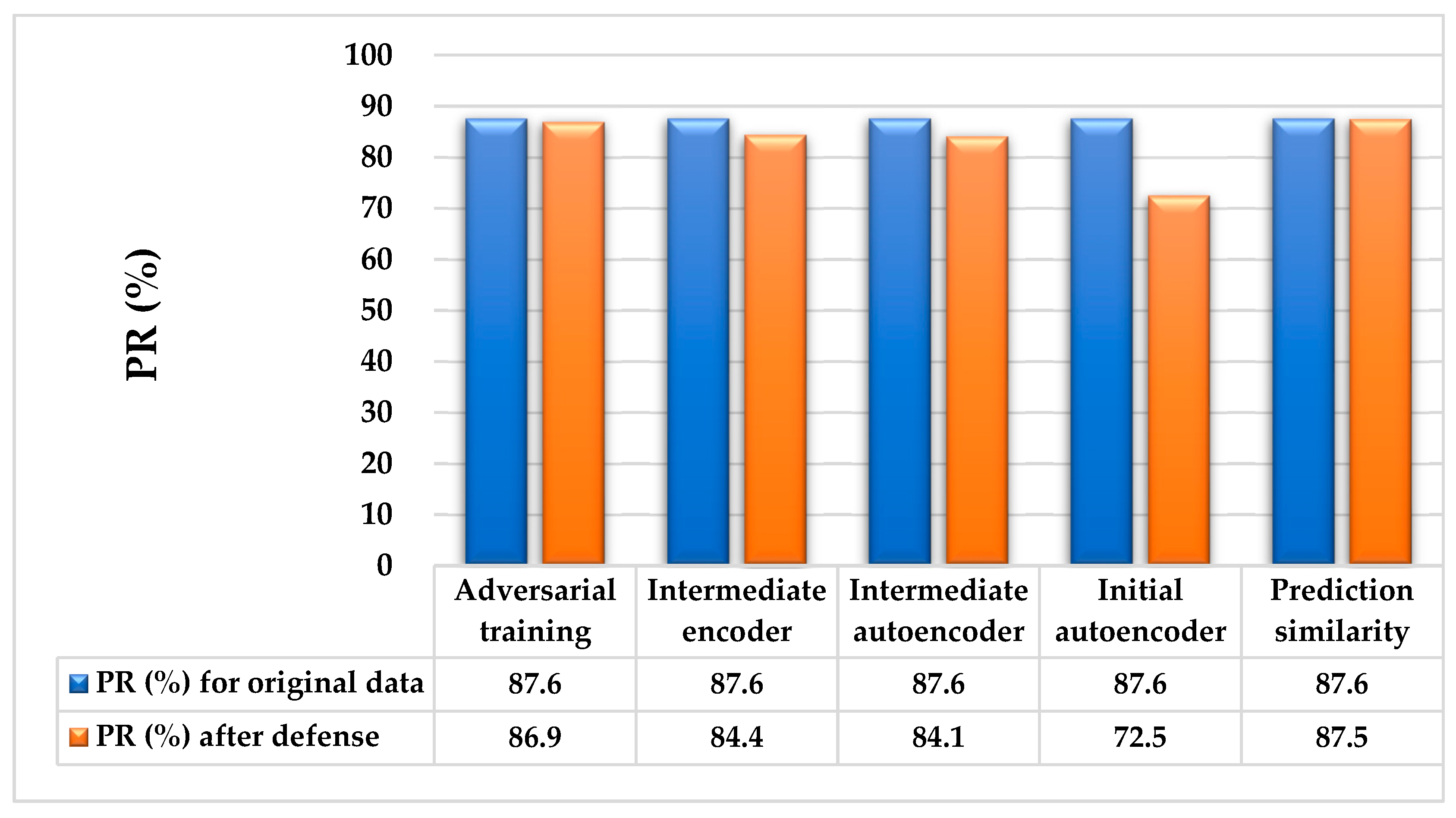

4.4. Effectiveness of the Three Defense Approaches

- (1) Adversarial training makes new adversarial attacks harder to generate. Whenever new adversarial examples are obtained, however, the model has to be retrained so that those vulnerabilities are covered, which makes the defense an open-ended, iterative process.

- (2) Dimensionality reduction is effective at exposing new vulnerabilities, since the new adversarial examples generated against it are detectable to the human eye. As long as PR remains stable, it can make the GRU-DNN with MFE more robust.

- (3) Prediction similarity only adds an external detection layer and does not modify the structure of the GRU-DNN with MFE, so it cannot detect known adversarial examples. However, it detects with a high success rate when an adversarial attack is launched, which makes it an effective input for risk assessment and significantly improves the robustness of the model.
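The external detection layer in item (3) can be illustrated with a minimal sketch. It assumes, as one plausible reading, that an attack in progress shows up as a burst of near-identical queries, so the layer only compares each incoming feature vector with recent ones and never touches the risk model itself. The class name `QuerySimilarityMonitor`, the Euclidean distance, and all thresholds are hypothetical choices for illustration, not the authors' implementation.

```python
import math
from collections import deque


class QuerySimilarityMonitor:
    """Hypothetical external layer that flags bursts of near-duplicate queries."""

    def __init__(self, window=50, distance_threshold=0.05, max_similar=3):
        # Only the most recent `window` feature vectors are remembered.
        self.history = deque(maxlen=window)
        self.distance_threshold = distance_threshold
        self.max_similar = max_similar

    def check(self, features):
        """Return True if `features` resembles too many recent queries."""
        similar = sum(
            1
            for past in self.history
            if math.dist(features, past) <= self.distance_threshold
        )
        self.history.append(tuple(features))
        return similar >= self.max_similar


# Made-up queries: five tiny perturbations of the same feature vector.
monitor = QuerySimilarityMonitor(window=10, distance_threshold=0.05, max_similar=3)
flags = [monitor.check([0.5 + 0.001 * i, 0.2, 0.9]) for i in range(5)]
print(flags)  # [False, False, False, True, True]
```

Because the monitor sits in front of the model, it can be added or tuned without retraining, which matches the description of this defense as an add-on layer.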

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

| Level | Classification | Description | Color for Presentation |
|---|---|---|---|
| 1 | Level I | extremely high risk (fatal accidents) | red |
| 2 | Level II | high risk (injury accidents) | yellow |
| 3 | Level III | medium risk (property damage accident) | green |
| 4 | Level IV | low risk (no accident) | blue |

| Attributes for Accident Occurrence | Contributing Factor for Attributes | Type of Data | Value of Data | Source of Data |
|---|---|---|---|---|
| probability | travel speed of vehicle | C | [0, 120] (km/h) | I |
| probability | mileage of vehicle | C | [0, 4 × 10⁵] (km) | I |
| probability | inspection status of vehicle | D | 0 = qualified; 1 = disqualified | II |
| probability | load of vehicle | C | 0 = no overload; 1 = overload; 2 = heavy overload | VI |
| probability | vehicle type | D | 0 = tank; 1 = van | III |
| probability | accident-prone road section | D | 0 = no accident-prone road section; 1 = tunnel; 2 = bridge; 3 = long downgrade; 4 = long upgrade; 5 = zigzag; 6 = village; 7 = unsignalized intersection | IV and VII |
| probability | risky driving condition | E | normal, fatigue, distracted driving | V |
| probability | traffic violation record | D | 0 = no record; 1 = traffic violation during transportation; 2 = involved in normal accident; 3 = involved in severe accident | III |
| probability | duration for continuous driving | C | [0, 4] (h) | IV |
| probability | unsafe vehicle behavior | D | 0 = no unsafe driving behavior; 1 = unsafe car-following; 2 = unsafe lane-changing | I or VI |
| probability | time of the day | D | 0 = morning; 1 = noon; 2 = afternoon; 3 = night; 4 = midnight | / |
| probability | weather condition | E | sunny, raining, pouring, foggy, snowy | V |
| severity | type of hazmat | D | 0 = explosives; 1 = compressed gases and liquefied gases; 2 = flammable liquids; 3 = flammable solids, substances liable to spontaneous combustion and substances emitting flammable gases when wet; 4 = oxidizing substances and organic peroxides; 5 = poisons and infectious substances; 6 = radioactive substances; 7 = corrosives; 8 = miscellaneous dangerous substances | III |
| severity | physicochemical property of hazmat | D | 0 = explosive; 1 = flammable; 2 = corrosive; 3 = oxidative; 4 = poisonous; 5 = radiative | III |
| severity | ratio of hazmat amount to tank volume | C | [0, 92] (%) | II |
| severity | leakage of hazmat | D | 0 = no leakage; 1 = permeating leakage; 2 = water-clock leakage; 3 = heavy leakage; 4 = flowing leakage | I and VI |
| social influence | sensitive period | D | 0 = no; 1 = holiday; 2 = festival; 3 = other large-scale activities | / |
| social influence | traffic condition | D | 0 = uncongested; 1 = congested; 2 = heavily congested | VI |
| social influence | vulnerable community passed by | D | 0 = no vulnerable community; 1 = school; 2 = hospital; 3 = large community | IV and VII |
| social influence | vulnerable natural region passed by | D | 0 = no vulnerable natural region; 1 = river; 2 = reservoir or lake; 3 = forest | IV and VII |
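The factor table above mixes continuous ranges with integer-coded categories, so some numeric encoding is needed before the factors can be fed to a neural network. The sketch below shows one common baseline, min-max scaling plus one-hot encoding, purely as an assumption; it is not the multimodal feature embedding described in Section 2.2. The helper names and the sample record are hypothetical, while the ranges and code counts are read from the table.

```python
def scale_continuous(value, low, high):
    """Min-max scale a continuous factor to [0, 1], clipping out-of-range readings."""
    clipped = min(max(value, low), high)
    return (clipped - low) / (high - low)


def one_hot(code, num_classes):
    """Turn an integer category code into a one-hot list of floats."""
    if not 0 <= code < num_classes:
        raise ValueError(f"code {code} outside 0..{num_classes - 1}")
    return [1.0 if i == code else 0.0 for i in range(num_classes)]


# Made-up record; field names are illustrative only.
record = {
    "travel_speed_kmh": 90.0,      # range [0, 120] km/h
    "continuous_driving_h": 3.0,   # range [0, 4] h
    "time_of_day": 3,              # 0..4, here 3 = night
    "traffic_condition": 1,        # 0..2, here 1 = congested
}

vector = (
    [
        scale_continuous(record["travel_speed_kmh"], 0.0, 120.0),
        scale_continuous(record["continuous_driving_h"], 0.0, 4.0),
    ]
    + one_hot(record["time_of_day"], 5)
    + one_hot(record["traffic_condition"], 3)
)
print(vector)  # [0.75, 0.75, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 1.0, 0.0]
```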

| Prediction Result (rows) vs. Label (columns) | Accident | Non-Accident |
|---|---|---|
| Accident | TP | FP |
| Non-accident | FN | TN |

| Performance Measures | Derivation | Definition |
|---|---|---|
| ACC (%) | $\frac{TP + TN}{TP + TN + FP + FN}$ | Proportion of correct predictions among all predicted samples |
| PR (%) | $\frac{TP}{TP + FP}$ | Proportion of true positives to predicted positives |
| RE (%) | $\frac{TP}{TP + FN}$ | Proportion of true positives to actual positive samples |
| F1 (%) | $\frac{2 \cdot PR \cdot RE}{PR + RE}$ | Harmonic mean of precision and recall |
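As a worked illustration of these four measures, the following sketch computes them from the TP, FP, FN, and TN counts of the confusion matrix using the standard definitions. The function name and the sample counts are hypothetical and are not taken from the experiments.

```python
def classification_metrics(tp, fp, fn, tn):
    """Return ACC, PR, RE and F1 as percentages from confusion-matrix counts."""
    total = tp + fp + fn + tn
    acc = (tp + tn) / total if total else 0.0
    pr = tp / (tp + fp) if (tp + fp) else 0.0
    re = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * pr * re / (pr + re) if (pr + re) else 0.0
    return {"ACC": 100 * acc, "PR": 100 * pr, "RE": 100 * re, "F1": 100 * f1}


# Illustrative counts only (not the paper's data).
metrics = classification_metrics(tp=80, fp=20, fn=10, tn=90)
for name, value in metrics.items():
    print(f"{name}: {value:.1f}%")
```

The guards against empty denominators return 0.0 rather than raising, which is a design choice for this sketch; a stricter pipeline might prefer to raise an error instead.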

| Measure | ACC (%) | PR (%) | RE (%) | F1 (%) |
|---|---|---|---|---|
| Performance | 87.6 | 86.5 | 89.0 | 87.7 |

| Risk Level | PR (%) | RE (%) | F1 (%) |
|---|---|---|---|
| Risk level I | 94.3 | 83.2 | 88.4 |
| Risk level II | 95.8 | 85.6 | 90.4 |
| Risk level III | 90.5 | 82.5 | 86.3 |

| Model | ACC (%) | AUC | F1 (%) |
|---|---|---|---|
| GRU-DNN with MFE | 87.6 | 0.91 | 87.7 |
| CNN with MFE | 88.1 | 0.77 | 83.6 |
| MLR with MFE | 75.4 | 0.62 | 67.1 |
| DNN with MFE | 82.5 | 0.81 | 78.1 |
| KNN with MFE | 88.7 | 0.82 | 88.3 |
| SVM with MFE | 83.3 | 0.80 | 81.5 |
| NB with MFE | 76.9 | 0.86 | 78.9 |
| DT with MFE | 83.2 | 0.85 | 83.3 |
| RF with MFE | 88.8 | 0.84 | 88.5 |

| Defense Approaches | Detection for Known Adversarial Attack | Detection of New Adversarial Attack |
|---|---|---|
| Adversarial training | 92.0% | No new adversarial attack attempts are detected |
| Dimensionality reduction (intermediate encoder) | 62.3% | New adversarial attacks are not detected, but are known, and new attacks are distinguishable |
| Dimensionality reduction (intermediate autoencoder) | 65.3% | New adversarial attacks are not detected, but are known, and new attacks are distinguishable |
| Dimensionality reduction (initial autoencoder) | 71.7% | New adversarial attacks are not detected, but are known, and new attacks are distinguishable |
| Prediction similarity | 0% | The detection rate of the new adversarial attacks is 99.5% |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yu, S.; Li, Y.; Xuan, Z.; Li, Y.; Li, G. Real-Time Risk Assessment for Road Transportation of Hazardous Materials Based on GRU-DNN with Multimodal Feature Embedding. Appl. Sci. 2022, 12, 11130. https://doi.org/10.3390/app122111130
