Generalizable Underwater Acoustic Target Recognition Using Feature Extraction Module of Neural Network

Abstract

1. Introduction

2. Feature Extraction Module of Attention-Based Neural Network

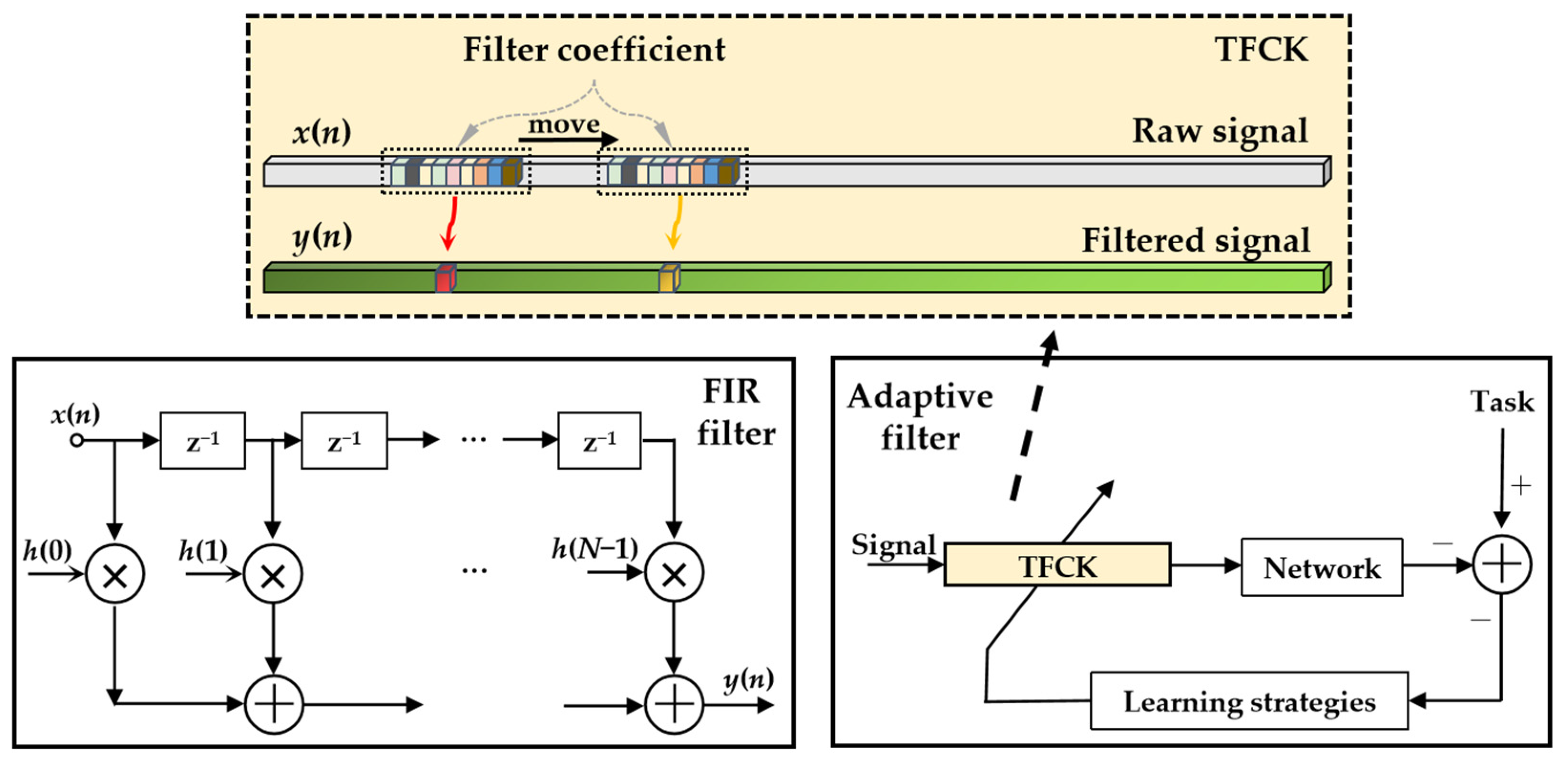

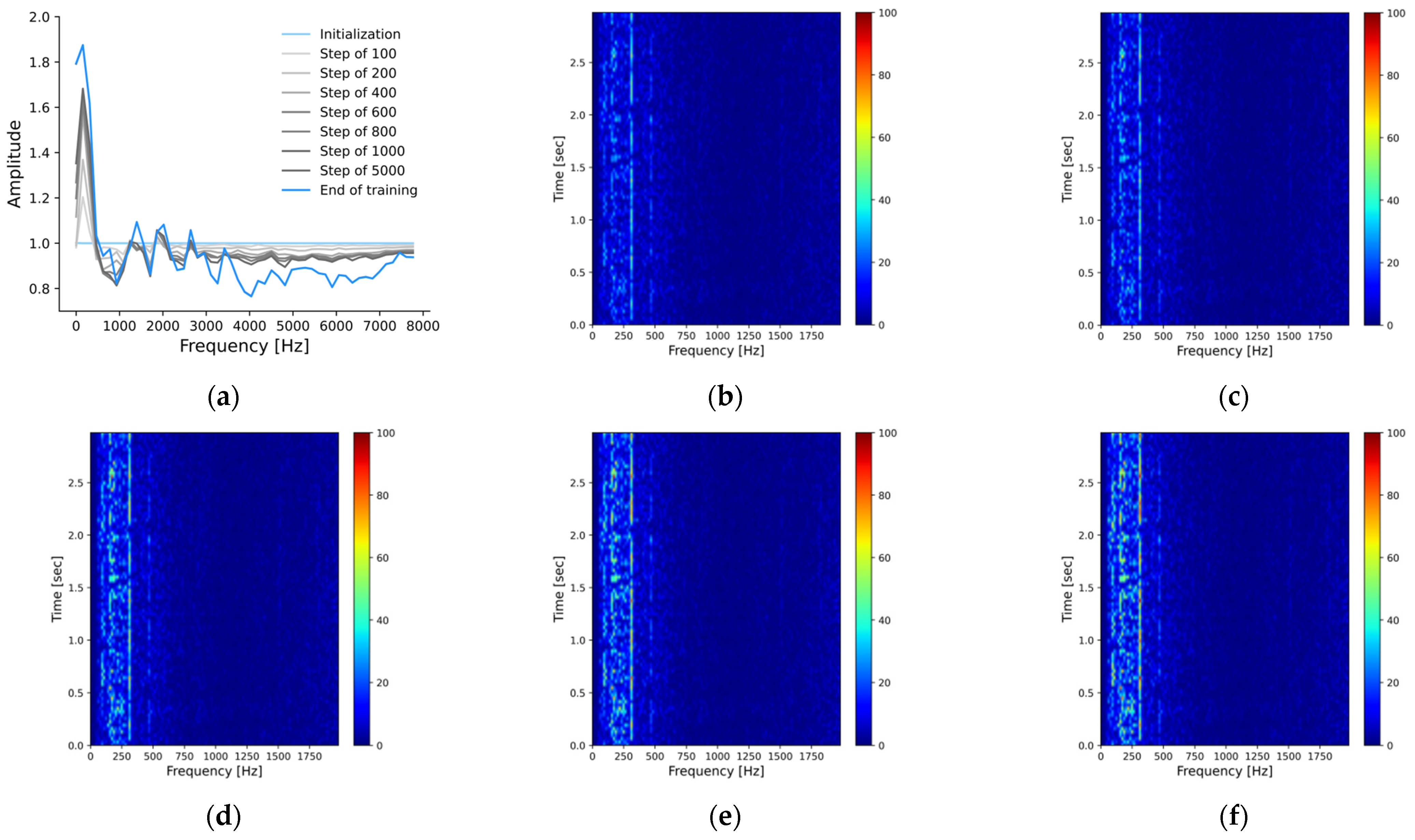

2.1. Time-Domain Filter with Convolution Kernel

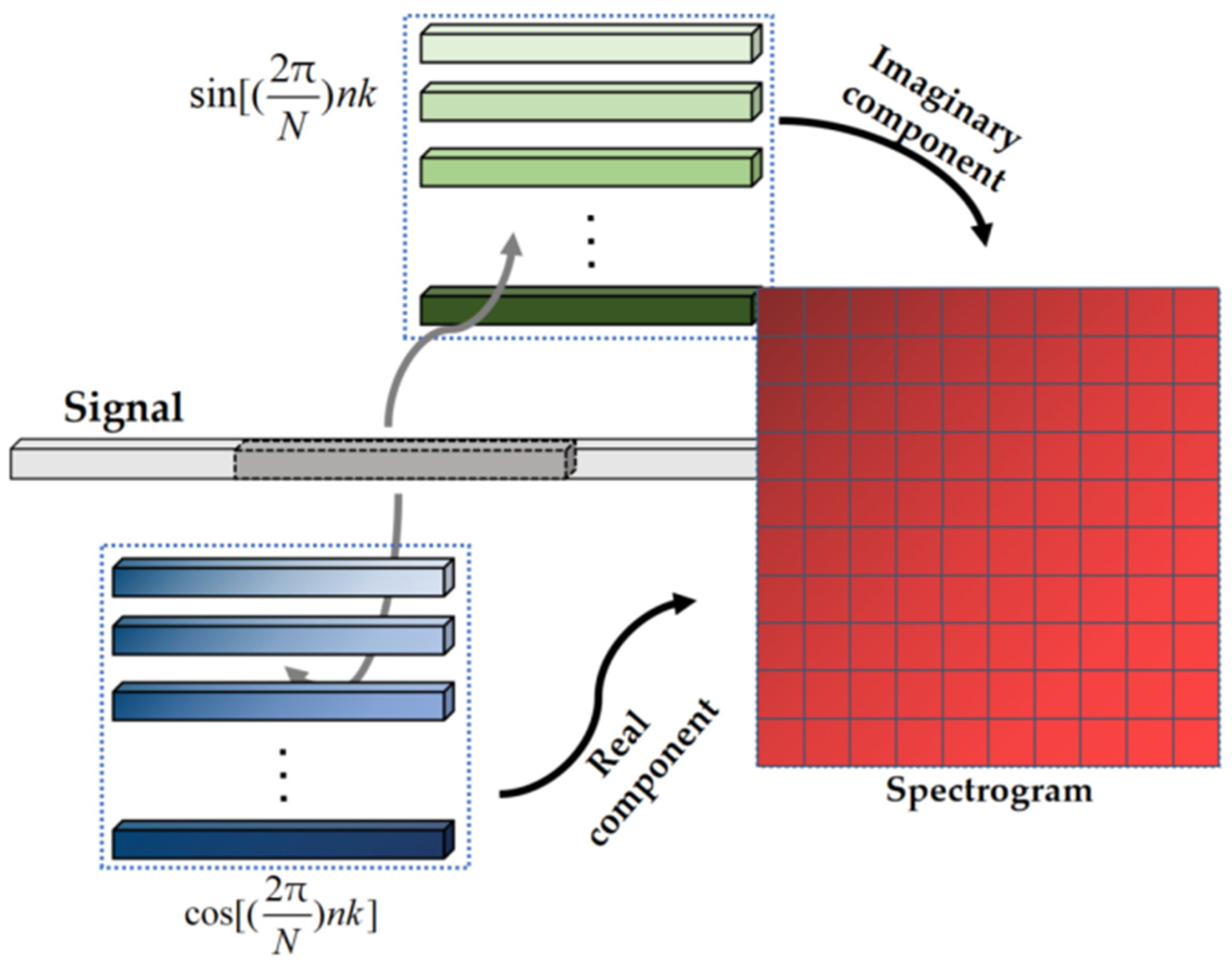

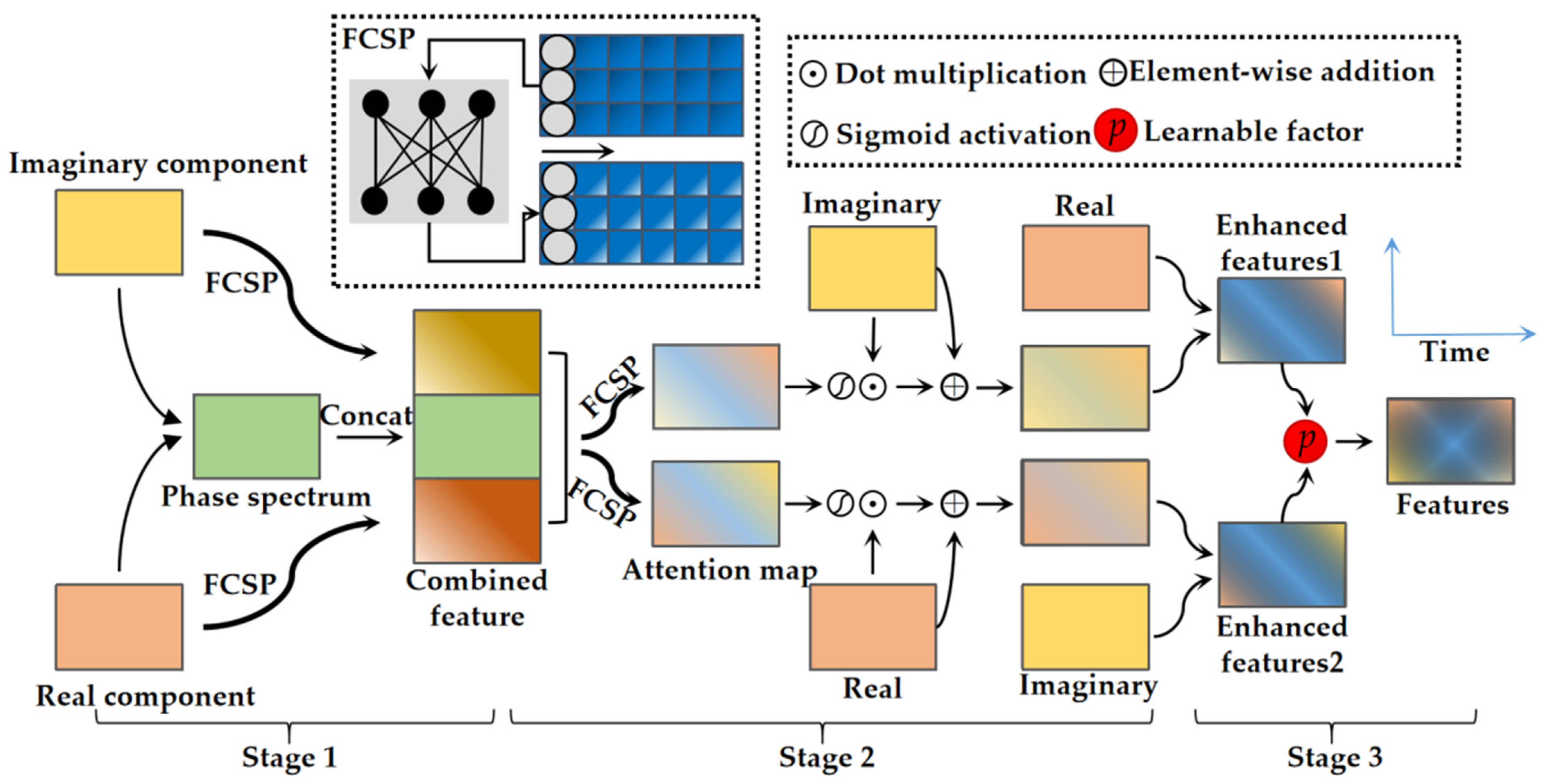

2.2. Time-Frequency Analysis Module of Attention-Based Neural Network

3. Deployment of Underwater Acoustic Target Recognition Network and Validation of Model-Based Transfer Learning

4. Experiments and Discussion

4.1. Experimental Dataset

4.2. Experimental Methods

4.3. Experimental Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Meng, Q.; Yang, S.; Piao, S. The classification of underwater acoustic target signals based on wave structure and support vector machine. J. Acoust. Soc. Am. 2014, 136, 2265.

2. Meng, Q.; Yang, S. A wave structure based method for recognition of marine acoustic target signals. J. Acoust. Soc. Am. 2015, 137, 2242.

3. Jiang, J.; Wu, Z.; Lu, J.; Huang, M.; Xiao, Z. Interpretable features for underwater acoustic target recognition. Measurement 2020, 173, 108586.

4. Lourens, J. Classification of ships using underwater radiated noise. In Proceedings of COMSIG 88, Southern African Conference on Communications and Signal Processing, Pretoria, South Africa, 24 June 1989; pp. 130–134.

5. Rajagopal, R.; Sankaranarayanan, B.; Rao, P.R. Target classification in a passive sonar-an expert system approach. In Proceedings of the International Conference on Acoustics, Speech, and Signal Processing, Albuquerque, NM, USA, 3–6 April 1990; pp. 2911–2914.

6. Ferguson, B.G. Time-frequency signal analysis of hydrophone data. IEEE J. Ocean. Eng. 1996, 21, 537–544.

7. Boashash, B.; O’Shea, P. A methodology for detection and classification of some underwater acoustic signals using time-frequency analysis techniques. IEEE Trans. Acoust. Speech Signal Process. 1990, 38, 1829–1841.

8. Liu, J.; He, Y.; Liu, Z.; Xiong, Y. Underwater Target Recognition Based on Line Spectrum and Support Vector Machine. In Proceedings of the 2014 International Conference on Mechatronics, Control and Electronic Engineering (MCE-14), Shenyang, China, 29–31 August 2014.

9. Ou, H.; Allen, J.S.; Syrmos, V.L. Automatic classification of underwater targets using fuzzy-cluster-based wavelet signatures. J. Acoust. Soc. Am. 2009, 125, 2578.

10. Huang, Q.; Azimi-Sadjadi, M.R.; Tian, B.; Dobeck, G. Underwater target classification using wavelet packets and neural networks. In Proceedings of the 1998 IEEE International Joint Conference on Neural Networks Proceedings. IEEE World Congress on Computational Intelligence, Anchorage, AK, USA, 4–9 May 1998.

11. Zeng, X.-Y.; Wang, S.-G. Bark-wavelet Analysis and Hilbert–Huang Transform for Underwater Target Recognition. Def. Technol. 2013, 9, 115–120.

12. Jahromi, M.S.; Bagheri, V.; Rostami, H.; Keshavarz, A. Feature Extraction in Fractional Fourier Domain for Classification of Passive Sonar Signals. J. Signal Process. Syst. 2019, 91, 511–520.

13. Tucker, S. Auditory Analysis of Sonar Signals. Ph.D. Thesis, University of Sheffield, Sheffield, UK, 2001.

14. Zhang, L.; Wu, D.; Han, X.; Zhu, Z. Feature Extraction of Underwater Target Signal Using Mel Frequency Cepstrum Coefficients Based on Acoustic Vector Sensor. J. Sensors 2016, 2016, 1–11.

15. Wang, S.; Zeng, X. Robust underwater noise targets classification using auditory inspired time–frequency analysis. Appl. Acoust. 2014, 78, 68–76.

16. Li-Xue, Y.; Ke-An, C.; Bing-Rui, Z.; Yong, L. Underwater acoustic target classification and auditory feature identification based on dissimilarity evaluation. Acta Phys. Sin. 2014, 63, 134304.

17. Mohankumar, K.; Supriya, M.H.; Pillai, P.S. Bispectral Gammatone Cepstral Coefficient based Neural Network Classifier. In 2015 IEEE Underwater Technology (UT); IEEE: Piscataway, NJ, USA, 2015; pp. 1–5.

18. Hemminger, T.; Pao, Y.-H. Detection and classification of underwater acoustic transients using neural networks. IEEE Trans. Neural. Netw. 1994, 5, 712–718.

19. Yang, H.; Gan, A.; Chen, H.; Pan, Y.; Tang, J.; Li, J. Underwater acoustic target recognition using SVM ensemble via weighted sample and feature selection. In Proceedings of the 2016 13th International Bhurban Conference on Applied Sciences and Technology (IBCAST), Islamabad, Pakistan, 12–16 January 2016; pp. 522–527.

20. Doan, V.-S.; Huynh-The, T.; Kim, D.-S. Underwater Acoustic Target Classification Based on Dense Convolutional Neural Network. IEEE Geosci. Remote Sens. Lett. 2022, 19, 3029584.

21. Hu, G.; Wang, K.; Liu, L. Underwater Acoustic Target Recognition Based on Depthwise Separable Convolution Neural Networks. Sensors 2021, 21, 1429.

22. Tian, S.; Chen, D.; Wang, H.; Liu, J. Deep convolution stack for waveform in underwater acoustic target recognition. Sci. Rep. 2021, 11, 1–14.

23. Cao, X.; Togneri, R.; Zhang, X.; Yu, Y. Convolutional Neural Network With Second-Order Pooling for Underwater Target Classification. IEEE Sensors J. 2018, 19, 3058–3066.

24. Li, C.; Liu, Z.; Ren, J.; Wang, W.; Xu, J. A Feature Optimization Approach Based on Inter-Class and Intra-Class Distance for Ship Type Classification. Sensors 2020, 20, 5429.

25. Yang, H.; Xu, G.; Yi, S.; Li, Y. A New Cooperative Deep Learning Method for Underwater Acoustic Target Recognition. In OCEANS 2019-Marseille; IEEE: Piscataway, NJ, USA, 2019; pp. 1–4.

26. Zhang, Q.; Da, L.; Zhang, Y.; Hu, Y. Integrated neural networks based on feature fusion for underwater target recognition. Appl. Acoust. 2021, 182, 108261.

27. Wang, X.; Liu, A.; Zhang, Y.; Xue, F. Underwater Acoustic Target Recognition: A Combination of Multi-Dimensional Fusion Features and Modified Deep Neural Network. Remote Sens. 2019, 11, 1888.

28. Luo, X.; Feng, Y.; Zhang, M. An Underwater Acoustic Target Recognition Method Based on Combined Feature With Automatic Coding and Reconstruction. IEEE Access 2021, 9, 63841–63854.

29. Liu, F.; Shen, T.; Luo, Z.; Zhao, D.; Guo, S. Underwater target recognition using convolutional recurrent neural networks with 3-D Mel-spectrogram and data augmentation. Appl. Acoust. 2021, 178, 107989.

30. Kamal, S.; Mujeeb, A.; Supriya, M.H. Generative adversarial learning for improved data efficiency in underwater target classification. Eng. Sci. Technol. 2022, 30, 101043.

31. Raissi, M.; Perdikaris, P.; Karniadakis, G. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 2019, 378, 686–707.

32. Shahbazi, A.; Monfared, M.S.; Thiruchelvam, V.; Fei, T.K.; Babasafari, A.A. Integration of knowledge-based seismic inversion and sedimentological investigations for heterogeneous reservoir. J. Southeast Asian Earth Sci. 2020, 202, 104541.

33. Chen, Y.; Lu, L.; Karniadakis, G.E.; Negro, L.D. Physics-informed neural networks for inverse problems in nano-optics and metamaterials. Opt. Express 2020, 28, 11618–11633.

34. Khayer, K.; Kahoo, A.R.; Monfared, M.S.; Tokhmechi, B.; Kavousi, K. Target-Oriented Fusion of Attributes in Data Level for Salt Dome Geobody Delineation in Seismic Data. Nonrenewable Resour. 2022, 31, 2461–2481.

35. Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

36. Santos-Domínguez, D.; Torres-Guijarro, S.; Cardenal-López, A.; Pena-Gimenez, A. ShipsEar: An underwater vessel noise database. Appl. Acoust. 2016, 113, 64–69.

37. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

38. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. arXiv 2018, arXiv:1807.06521.

39. Kong, Q.; Cao, Y.; Iqbal, T.; Wang, Y.; Wang, W.; Plumbley, M.D. PANNs: Large-Scale Pretrained Audio Neural Networks for Audio Pattern Recognition. IEEE/ACM Trans. Audio, Speech, Lang. Process. 2020, 28, 2880–2894.

40. Gemmeke, J.F.; Ellis, D.P.W.; Freedman, D.; Jansen, A.; Lawrence, W.; Moore, R.C.; Plakal, M.; Ritter, M. Audio Set: An ontology and human-labeled dataset for audio events. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 776–780.

41. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

42. Hershey, S.; Chaudhuri, S.; Ellis, D.P.W.; Gemmeke, J.F.; Jansen, A.; Moore, R.C.; Plakal, M.; Platt, D.; Saurous, R.A.; Seybold, B.; et al. CNN architectures for large-scale audio classification. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 131–135.

43. Yang, Y.Y.; Hira, M.; Ni, Z.; Astafurov, A.; Chen, C.; Puhrsch, C.; Quenneville-Bélair, V. Torchaudio: Building Blocks for Audio and Speech Processing. In Proceedings of the 2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 22–27 May 2022; pp. 6982–6986.

44. Fernandes, R.P.; Apolinário, J.A., Jr. Underwater target classification with optimized feature selection based on Genetic Algorithms. SBrT 2020.

45. Xie, J.; Chen, J.; Zhang, J. DBM-Based Underwater Acoustic Source Recognition. In Proceedings of the 2018 IEEE International Conference on Communication Systems (ICCS), Chengdu, China, 19–21 December 2018; pp. 366–371.

46. Luo, X.; Feng, Y. An Underwater Acoustic Target Recognition Method Based on Restricted Boltzmann Machine. Sensors 2020, 20, 5399.

47. Yang, H.; Gu, H.; Yin, J.; Yang, J. GAN-based Sample Expansion for Underwater Acoustic Signal. J. Physics: Conf. Ser. 2020, 1544, 12104.

48. Hong, F.; Liu, C.; Guo, L.; Chen, F.; Feng, H. Underwater Acoustic Target Recognition with a Residual Network and the Optimized Feature Extraction Method. Appl. Sci. 2021, 11, 1442.

49. Khishe, M. DRW-AE: A Deep Recurrent-Wavelet Autoencoder for Underwater Target Recognition. IEEE J. Ocean. Eng. 2022, 47, 1083–1098.

| Stages | Layers | Output Shape |
|---|---|---|
| Stage 1 | FCSP (Imaginary component) | (T, NF) |
| | FCSP (Real component) | (T, NF) |
| Stage 2 | FCSP (Imaginary component) | (T, NF) |
| | FCSP (Real component) | (T, NF) |
| Stage 3 | Learnable factor | (T, NF) |

| Pre-Trained Backbone | Backbone 1 | Backbone 2 |
|---|---|---|
| C(64,3,1) | C(64,3,1) | C(64,3,1) |
| C(64,3,1) | | |
| Avg-pooling(2,2) | Avg-pooling(2,2) | Avg-pooling(2,2) |
| C(128,3,1) | C(128,3,1) | C(128,3,1) |
| C(128,3,1) | | |
| Avg-pooling(2,2) | Avg-pooling(2,2) | Avg-pooling(2,2) |
| C(256,3,1) | C(256,3,1) | C(256,3,1) |
| C(256,3,1) | C(256,3,1) | |
| Avg-pooling(2,2) | Avg-pooling(1,2) | Avg-pooling(2,2) |
| C(512,3,1) | C(512,3,1) | |
| C(512,3,1) | C(512,3,1) | |
| Avg-pooling(1,1) | Avg-pooling(1,2) | |
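
To make the difference between the three backbones in the table above easier to see, the following minimal sketch multiplies out the pooling sizes of each column. It rests on two assumptions that the table itself does not state: that `Avg-pooling(a, b)` reduces one axis of the feature map by `a` and the other by `b`, and that the last row belongs to the first two columns. The dictionary `POOLING` and the function `total_downsampling` are illustrative names only.

```python
from math import prod

# Pooling sizes per backbone, read column-wise from the table above.
# Assumption: Avg-pooling(a, b) pools by a along one axis and by b along the other.
# Assumption: the final pooling row applies to the first two columns only.
POOLING = {
    "Pre-Trained Backbone": [(2, 2), (2, 2), (2, 2), (1, 1)],
    "Backbone 1": [(2, 2), (2, 2), (1, 2), (1, 2)],
    "Backbone 2": [(2, 2), (2, 2), (2, 2)],
}


def total_downsampling(pools):
    """Return the cumulative reduction factor along each of the two axes."""
    first_axis = prod(size[0] for size in pools)
    second_axis = prod(size[1] for size in pools)
    return first_axis, second_axis


factors = {name: total_downsampling(pools) for name, pools in POOLING.items()}

for name, (first_axis, second_axis) in factors.items():
    print(f"{name}: x{first_axis} on the first axis, x{second_axis} on the second axis")
```

Under these assumptions, Backbone 1 reduces the first axis by a factor of 4 and the second by a factor of 16, whereas the other two columns reduce both axes by a factor of 8.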

| Layers | Classifier 1 | Classifier 2 |
|---|---|---|
| Fully Connected | FC (512,256) | FC (256,256) |
| Dropout | Rate = 0.5 | Rate = 0.5 |
| Fully Connected | FC (256,x) | FC (256,x) |
| LogSoftmax | Sm (x,x) | Sm (x,x) |
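
As a rough size comparison of the two classifier heads listed above, the sketch below counts their trainable parameters. It assumes that `FC (a, b)` denotes a fully connected layer with `a` inputs, `b` outputs, and a bias term, and that `x` equals the number of target categories; the value `NUM_CLASSES = 5` is an assumption for illustration, and the helper names are hypothetical.

```python
def linear_params(n_in, n_out, bias=True):
    """Number of trainable parameters in one fully connected layer."""
    return n_in * n_out + (n_out if bias else 0)


def classifier_params(n_in, hidden, n_classes):
    """Parameters of FC(n_in, hidden) followed by FC(hidden, n_classes).

    Dropout and LogSoftmax have no trainable parameters.
    """
    return linear_params(n_in, hidden) + linear_params(hidden, n_classes)


NUM_CLASSES = 5  # assumption: x is the number of target categories

classifier_1 = classifier_params(512, 256, NUM_CLASSES)
classifier_2 = classifier_params(256, 256, NUM_CLASSES)

print(f"Classifier 1: {classifier_1} parameters")
print(f"Classifier 2: {classifier_2} parameters")
```

With these assumptions, Classifier 2 has roughly half as many parameters as Classifier 1, because its first layer takes 256 rather than 512 input features.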

| Category | Targets |
|---|---|
| Class-A | Fishing boats, trawlers, mussel boats, tugboats and dredgers |
| Class-B | Motorboats, pilot boats, sailboats |
| Class-C | Passenger liners |
| Class-D | Ocean liners and ro-ro vessels |
| Class-E | Ocean noise |

| Features | Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|---|
| STFT-512 | 0.684 | 0.707 | 0.690 | 0.748 |
| STFT-1024 | 0.720 | 0.732 | 0.725 | 0.755 |
| STFT-2048 | 0.739 | 0.761 | 0.746 | 0.780 |
| STFT-4096 | 0.671 | 0.689 | 0.656 | 0.691 |
| STFT-8192 | 0.650 | 0.689 | 0.654 | 0.680 |
| FEM-ATNN | 0.787 | 0.816 | 0.797 | 0.839 |
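
In the table above, the F1-Score column is not the harmonic mean of the Precision and Recall columns (for STFT-4096, the harmonic mean of 0.671 and 0.689 is about 0.680, not 0.656). One possible explanation, which the table does not confirm, is that the metrics are averaged per class (macro averaging). The sketch below shows how macro-averaged metrics are computed from a confusion matrix; the matrix `EXAMPLE` is made-up illustrative data, not a result from the article.

```python
def macro_metrics(confusion):
    """Macro-averaged precision, recall, F1 and overall accuracy.

    confusion[i][j] counts samples of true class i predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    precisions, recalls, f1_scores = [], [], []
    for k in range(n):
        true_positive = confusion[k][k]
        predicted_k = sum(confusion[i][k] for i in range(n))  # column sum
        actual_k = sum(confusion[k])                          # row sum
        p = true_positive / predicted_k if predicted_k else 0.0
        r = true_positive / actual_k if actual_k else 0.0
        f = 2 * p * r / (p + r) if (p + r) else 0.0
        precisions.append(p)
        recalls.append(r)
        f1_scores.append(f)
    return {
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1_scores) / n,
        "accuracy": sum(confusion[k][k] for k in range(n)) / total,
    }


# Hypothetical 3-class confusion matrix, for illustration only.
EXAMPLE = [
    [8, 2, 0],
    [1, 5, 4],
    [0, 3, 7],
]

metrics = macro_metrics(EXAMPLE)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Because the F1 score is averaged over classes after being computed per class, it generally differs from the harmonic mean of the averaged precision and recall, which is the pattern seen in the table.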

| Fold | Initialization Mode | Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|---|---|
| 1 | Random | 0.875 | 0.888 | 0.878 | 0.870 |
| | Pre-trained | 0.908 | 0.909 | 0.908 | 0.901 |
| 2 | Random | 0.962 | 0.955 | 0.958 | 0.958 |
| | Pre-trained | 0.964 | 0.956 | 0.960 | 0.958 |
| 3 | Random | 0.941 | 0.940 | 0.941 | 0.941 |
| | Pre-trained | 0.950 | 0.951 | 0.950 | 0.944 |
| 4 | Random | 0.886 | 0.881 | 0.881 | 0.875 |
| | Pre-trained | 0.912 | 0.919 | 0.915 | 0.911 |
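
The per-fold accuracies in the table above can be summarized with a short calculation. The sketch below averages the Accuracy column over the four folds for each initialization mode and reports the per-fold difference; the variable names are illustrative, and the averaging itself is an added summary rather than a figure reported in the table.

```python
from statistics import mean

# Accuracy per fold (folds 1 to 4), copied from the table above.
ACCURACY = {
    "Random": [0.870, 0.958, 0.941, 0.875],
    "Pre-trained": [0.901, 0.958, 0.944, 0.911],
}

# Mean accuracy over the four folds for each initialization mode.
mean_accuracy = {mode: mean(values) for mode, values in ACCURACY.items()}

# Per-fold accuracy difference (pre-trained minus random).
fold_gains = [
    pretrained - random_init
    for random_init, pretrained in zip(ACCURACY["Random"], ACCURACY["Pre-trained"])
]

for mode, value in mean_accuracy.items():
    print(f"{mode}: mean accuracy {value:.4f}")
for fold, gain in enumerate(fold_gains, start=1):
    print(f"Fold {fold}: gain {gain:+.3f}")
```

Averaged this way, pre-trained initialization reaches about 0.9285 against about 0.911 for random initialization, with the gain concentrated in folds 1 and 4.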

| Task | Features | Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|---|---|
| Task 1 (fold 1) | LFCC | 0.819 | 0.826 | 0.820 | 0.812 |
| | MFCC | 0.834 | 0.838 | 0.835 | 0.831 |
| | FBank | 0.875 | 0.867 | 0.879 | 0.876 |
| | FEM-ATNN-trans | 0.926 | 0.939 | 0.931 | 0.926 |
| Task 1 (fold 2) | LFCC | 0.934 | 0.932 | 0.932 | 0.929 |
| | MFCC | 0.943 | 0.941 | 0.942 | 0.939 |
| | FBank | 0.971 | 0.971 | 0.971 | 0.970 |
| | FEM-ATNN-trans | 0.984 | 0.978 | 0.980 | 0.980 |
| Task 2 | LFCC | 0.682 | 0.706 | 0.684 | 0.750 |
| | MFCC | 0.742 | 0.740 | 0.739 | 0.792 |
| | FBank | 0.747 | 0.749 | 0.742 | 0.793 |
| | FEM-ATNN-trans | 0.824 | 0.846 | 0.833 | 0.878 |

| No. | Methods | Accuracy |
|---|---|---|
| 1 | Baseline [36] | 0.754 |
| 2 | Optimized Feature Selection based on Genetic Algorithms [44] | 0.723 |
| 3 | DBM [45] | 0.903 |
| 4 | Inter-class and Intra-class [24] | 0.840 |
| 5 | RBM + BP [46] | 0.932 |
| 6 | GAN-based Sample Expansion [47] | 0.929 |
| 7 | CRNN-9 with 3-D Mel and data_aug [29] | 0.941 |
| 8 | ResNet18 for UATR [48] | 0.943 |
| 9 | DRW-AE [49] | 0.945 |
| 10 | Ours | 0.953 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, D.; Liu, F.; Shen, T.; Chen, L.; Yang, X.; Zhao, D. Generalizable Underwater Acoustic Target Recognition Using Feature Extraction Module of Neural Network. Appl. Sci. 2022, 12, 10804. https://doi.org/10.3390/app122110804
