The Use of Artificial Intelligence in Orthopedics: Applications and Limitations of Machine Learning in Diagnosis and Prediction

Abstract

1. Introduction

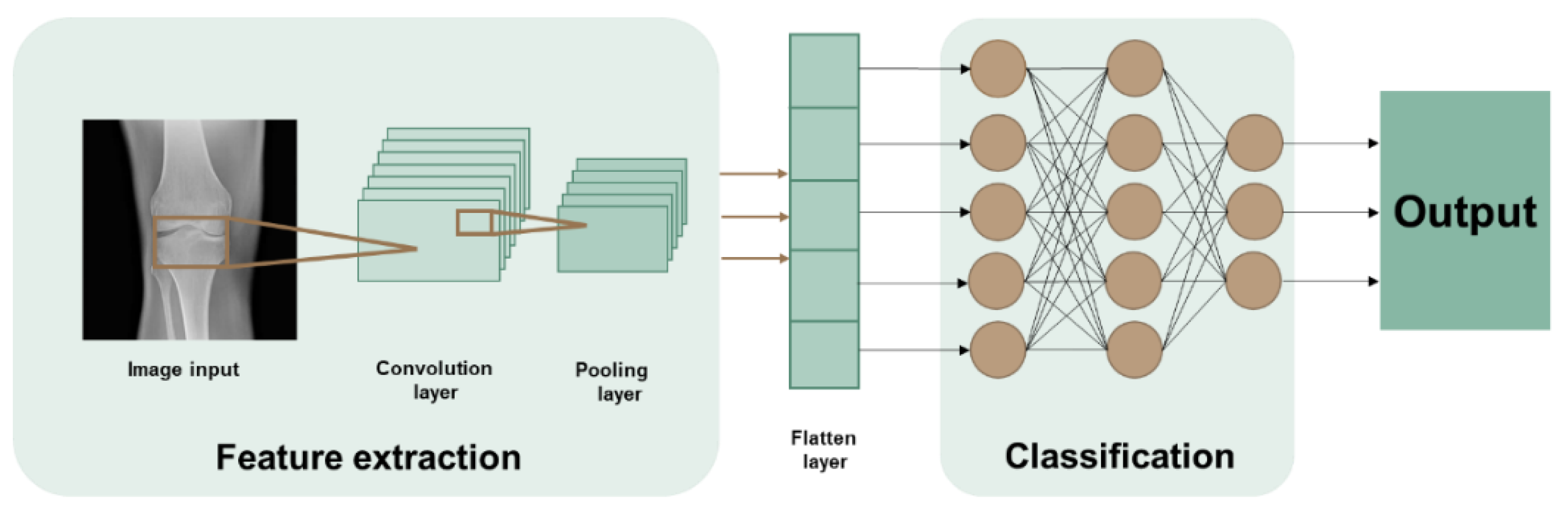

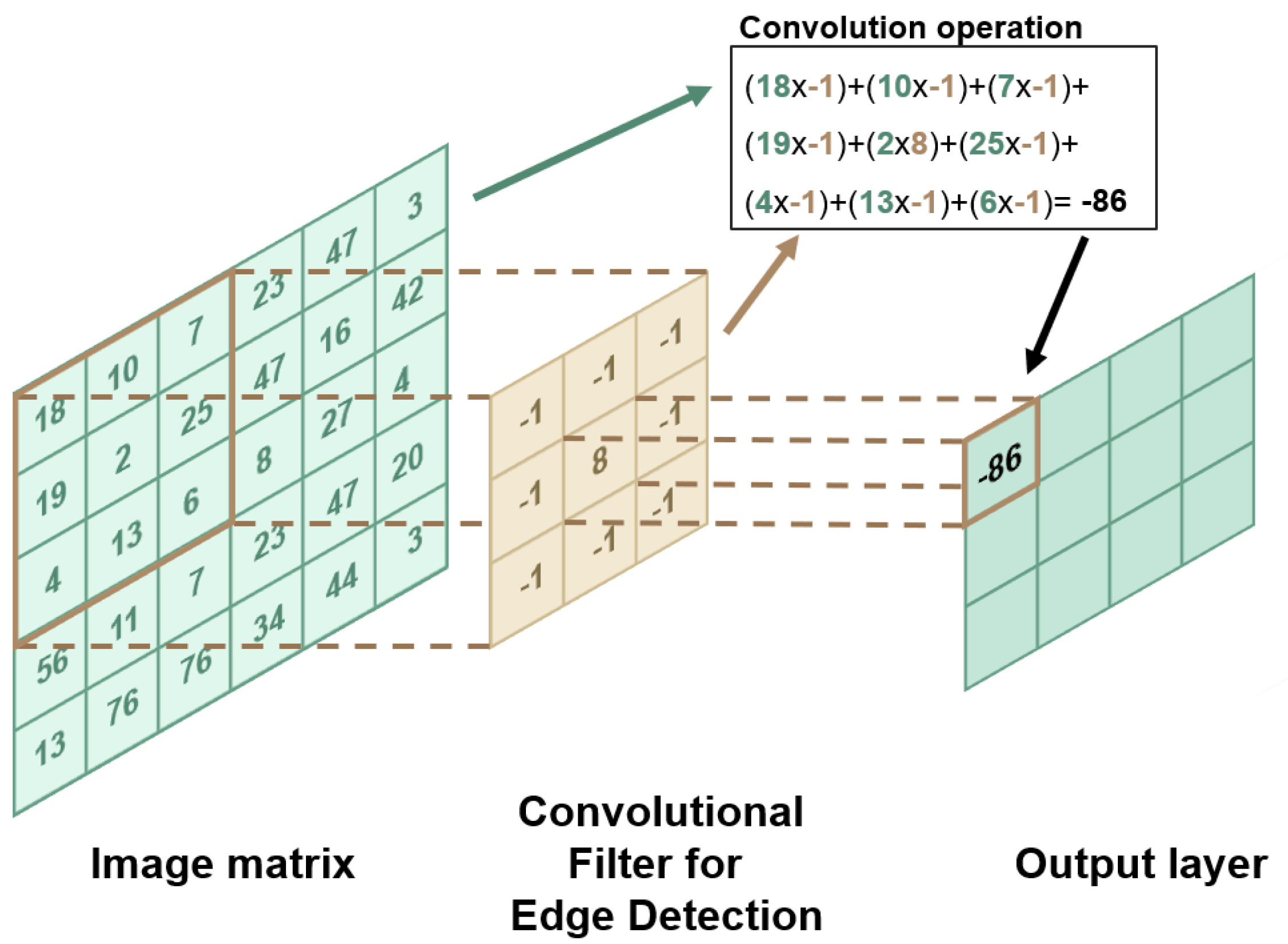

2. Machine Learning: Concepts and Techniques

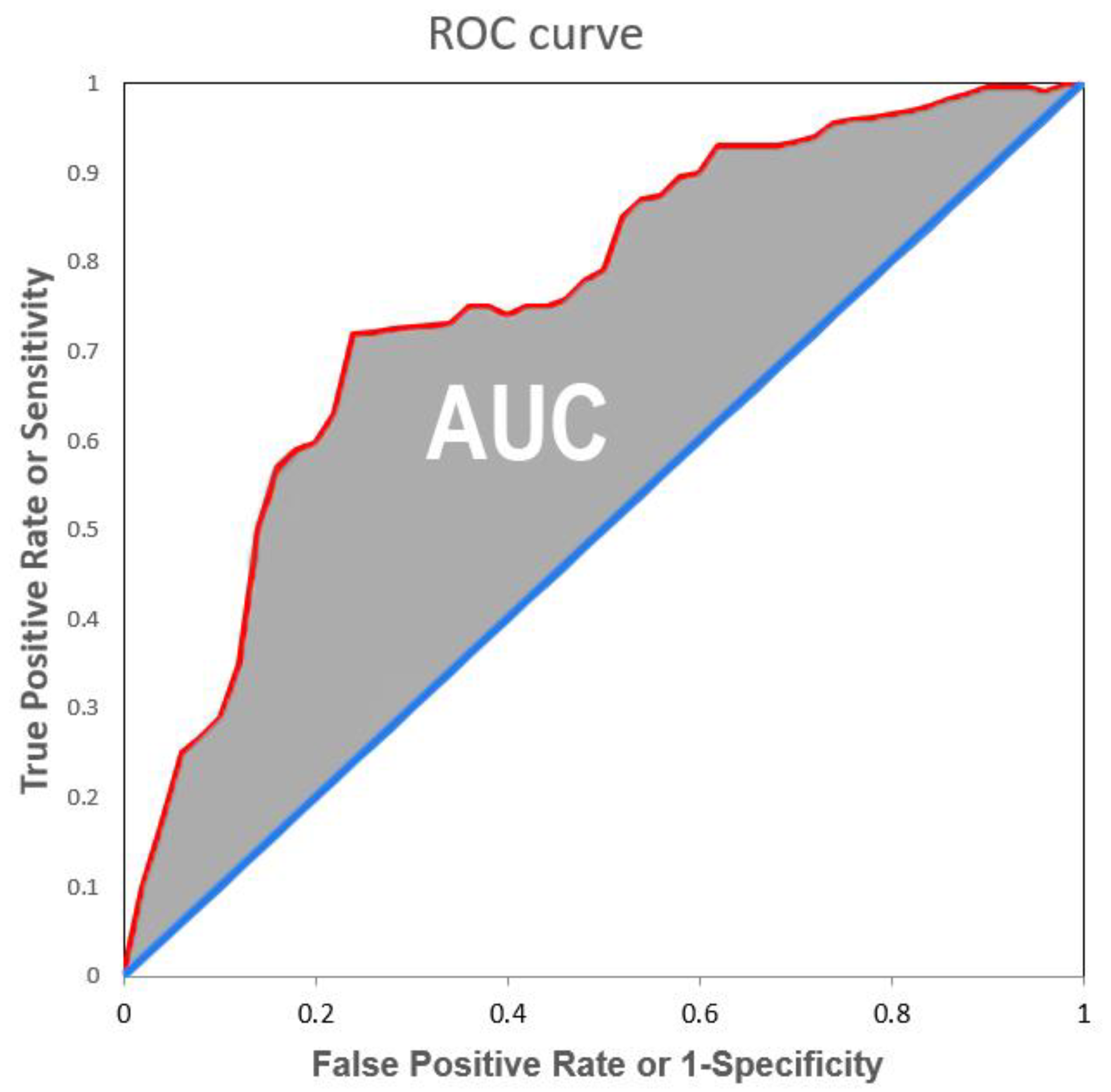

3. Machine Learning Performance Indexes

4. Diagnosing with AI

4.1. Hip-Related Diagnosis

4.2. Knee-Related Diagnosis

4.3. Other Orthopedic-Related Diagnosis

5. Prediction with AI

5.1. Surgery Prediction

5.2. Prediction of Post-Operative Complications

| Reference | Title | Application |
|---|---|---|
| Cheng et al., 2019 [8] | Application of a deep learning algorithm for detection and visualization of hip fractures on plain pelvic radiographs | Diagnosis |
| Park et al., 2022 [18] | Artificial intelligence-based classification of bone tumors in the proximal femur on plain radiographs: System development and validation | Diagnosis |
| Liu et al., 2021 [19] | Artificial Intelligence to Diagnose Tibial Plateau Fractures: An Intelligent Assistant for Orthopedic Physicians | Diagnosis |
| Xie et al., 2021 [20] | Deep Learning-Based MRI in Diagnosis of Fracture of Tibial Plateau Combined with Meniscus Injury | Diagnosis |
| Ghose et al., 2020 [21] | Artificial intelligence based identification of total knee arthroplasty implants | Diagnosis |
| Blüthgen et al., 2020 [11] | Detection and localization of distal radius fractures: Deep learning system versus radiologists | Diagnosis |
| Gan et al., 2019 [22] | Artificial intelligence detection of distal radius fractures: A comparison between the convolutional neural network and professional assessments | Diagnosis |
| Houserman et al., 2022 [25] | The Viability of an Artificial Intelligence/Machine Learning Prediction Model to Determine Candidates for Knee Arthroplasty | Prediction |
| Hinterwimmer et al., 2022 [10] | Prediction of complications and surgery duration in primary TKA with high accuracy using machine learning with arthroplasty-specific data | Prediction |
| Ramkumar et al., 2019 [26] | Deep Learning Preoperatively Predicts Value Metrics for Primary Total Knee Arthroplasty: Development and Validation of an Artificial Neural Network Model | Prediction |
| Rouzrokh et al., 2021 [9] | Deep Learning Artificial Intelligence Model for Assessment of Hip Dislocation Risk Following Primary Total Hip Arthroplasty From Postoperative Radiographs | Prediction |

6. Limitations of AI and Implications for the Future

6.1. External Validation and the Change in the Clinician’s Working Routine

6.2. Data Limitations and How to Collect Data

6.3. Black-Box and Responsibility Issues

6.4. Automation and the Patient–Physician Relationship

7. Discussion

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Oliveira e Carmo, L.; van den Merkhof, A.; Olczak, J.; Gordon, M.; Jutte, P.C.; Jaarsma, R.L.; IJpma, F.F.A.; Doornberg, J.N.; Prijs, J. An increasing number of convolutional neural networks for fracture recognition and classification in orthopaedics. Bone Jt. Open 2021, 2, 879–885.

2. Ko, S.; Pareek, A.; Ro, D.H.; Lu, Y.; Camp, C.L.; Martin, R.K.; Krych, A.J. Artificial intelligence in orthopedics: Three strategies for deep learning with orthopedic specific imaging. Knee Surg. Sport. Traumatol. Arthrosc. 2022, 30, 758–761.

3. Corban, J.; Lorange, J.P.; Laverdiere, C.; Khoury, J.; Rachevsky, G.; Burman, M.; Martineau, P.A. Artificial Intelligence in the Management of Anterior Cruciate Ligament Injuries. Orthop. J. Sport. Med. 2021, 9.

4. Marotta, N.; De Sire, A.; Marinaro, C.; Moggio, L.; Inzitari, M.T.; Russo, I.; Tasselli, A.; Paolucci, T.; Valentino, P.; Ammendolia, A. Efficacy of Transcranial Direct Current Stimulation (tDCS) on Balance and Gait in Multiple Sclerosis Patients: A Machine Learning Approach. J. Clin. Med. 2022, 11, 3505.

5. Müller, A.C.; Guido, S. Introduction to Machine Learning with Python: A Guide for Data Scientists; O’Reilly Media: Sebastopol, CA, USA, 2016.

6. Hallinan, J.T.P.D.; Zhu, L.; Yang, K.; Makmur, A.; Algazwi, D.A.R.; Thian, Y.L.; Lau, S.; Choo, Y.S.; Eide, S.E.; Yap, Q.V.; et al. Deep Learning Model for Automated Detection and Classification of Central Canal, Lateral Recess, and Neural Foraminal Stenosis at Lumbar Spine MRI. Radiology 2021, 300, 130–138.

7. Pinto, M.; Marotta, N.; Caracò, C.; Simeone, E.; Ammendolia, A.; de Sire, A. Quality of Life Predictors in Patients With Melanoma: A Machine Learning Approach. Front. Oncol. 2022, 12, 843611.

8. Cheng, C.T.; Ho, T.Y.; Lee, T.Y.; Chang, C.C.; Chou, C.C.; Chen, C.C.; Chung, I.F.; Liao, C.H. Application of a deep learning algorithm for detection and visualization of hip fractures on plain pelvic radiographs. Eur. Radiol. 2019, 29, 5469–5477.

9. Rouzrokh, P.; Ramazanian, T.; Wyles, C.C.; Philbrick, K.A.; Cai, J.C.; Taunton, M.J.; Kremers, H.M.; Lewallen, D.G.; Erickson, B.J. Deep Learning Artificial Intelligence Model for Assessment of Hip Dislocation Risk Following Primary Total Hip Arthroplasty From Postoperative Radiographs. J. Arthroplast. 2021, 36, 2197–2203.e3.

10. Hinterwimmer, F.; Lazic, I.; Langer, S.; Suren, C.; Charitou, F.; Hirschmann, M.T.; Matziolis, G.; Seidl, F.; Pohlig, F.; Rueckert, D. Prediction of complications and surgery duration in primary TKA with high accuracy using machine learning with arthroplasty-specific data. Knee Surg. Sport. Traumatol. Arthrosc. 2022, 1–11.

11. Blüthgen, C.; Becker, A.S.; de Martini, I.V.; Meier, A.; Martini, K.; Frauenfelder, T. Detection and localization of distal radius fractures: Deep learning system versus radiologists. Eur. J. Radiol. 2020, 126, 108925.

12. Bezdan, T.; Džakula, N.B. Convolutional Neural Network Layers and Architectures. In Sinteza 2019-International Scientific Conference on Information Technology and Data Related Research; Singidunum University: Beograd, Serbia, 2019; pp. 445–451.

13. O’Shea, K.; Nash, R. An Introduction to Convolutional Neural Networks. arXiv 2015, arXiv:1511.08458.

14. Bengio, Y. Convolutional Networks for Images, Speech, and Time-Series. 1997. Available online: https://www.researchgate.net/publication/2453996 (accessed on 10 October 2022).

15. Ahmed, M.I.; Mamun, S.M.; Asif, A.U.Z. DCNN-Based Vegetable Image Classification Using Transfer Learning: A Comparative Study. In Proceedings of the 2021 5th International Conference on Computer, Communication, and Signal Processing, ICCCSP 2021, Kumamoto, Japan, 23–25 July 2021; pp. 235–243.

16. Bentéjac, C.; Csörgő, A.; Martínez-Muñoz, G. A Comparative Analysis of XGBoost. Artif. Intell. Rev. 2019, 54, 1937–1967.

17. Malik, S.; Harode, R.; Singh, A. XGBoost: A Deep Dive into Boosting (Introduction Documentation). Technol. Rep. 2020.

18. Park, C.-W.; Oh, S.-J.; Kim, K.-S.; Jang, M.-C.; Kim, I.S.; Lee, Y.-K.; Chung, M.J.; Cho, B.H.; Seo, S.-W. Artificial intelligence-based classification of bone tumors in the proximal femur on plain radiographs: System development and validation. PLoS ONE 2022, 17, e0264140.

19. Liu, P.R.; Zhang, J.Y.; Xue, M.D.; Duan, Y.Y.; Hu, J.L.; Liu, S.X.; Xie, Y.; Wang, H.L.; Wang, J.W.; Huo, T.T. Artificial Intelligence to Diagnose Tibial Plateau Fractures: An Intelligent Assistant for Orthopedic Physicians. Curr. Med. Sci. 2021, 41, 1158–1164.

20. Xie, X.; Li, Z.; Bai, L.; Zhou, R.; Li, C.; Jiang, X.; Zuo, J.; Qi, Y. Deep Learning-Based MRI in Diagnosis of Fracture of Tibial Plateau Combined with Meniscus Injury. Sci. Program. 2021, 2021, 9935910.

21. Ghose, S.; Datta, S.; Batta, V.; Malathy, C.; Gayathri, M. Artificial intelligence based identification of total knee arthroplasty implants. In Proceedings of the 3rd International Conference on Intelligent Sustainable Systems, ICISS 2020, Palladam, India, 3–5 December 2020; pp. 302–307.

22. Gan, K.; Xu, D.; Lin, Y.; Shen, Y.; Zhang, T.; Hu, K.; Zhou, K.; Bi, M.; Pan, L.; Wu, W.; et al. Artificial intelligence detection of distal radius fractures: A comparison between the convolutional neural network and professional assessments. Acta Orthop. 2019, 90, 394–400.

23. Lee, L.S.; Chan, P.K.; Wen, C.; Fung, W.C.; Cheung, A.; Chan, V.W.K.; Cheung, M.H.; Fu, H.; Yan, C.H.; Chiu, K.Y. Artificial intelligence in diagnosis of knee osteoarthritis and prediction of arthroplasty outcomes: A review. Arthroplasty 2022, 4, 16.

24. Ramkumar, P.N.; Haeberle, H.S.; Bloomfield, M.R.; Schaffer, J.L.; Kamath, A.F.; Patterson, B.M.; Krebs, V.E. Artificial Intelligence and Arthroplasty at a Single Institution: Real-World Applications of Machine Learning to Big Data, Value-Based Care, Mobile Health, and Remote Patient Monitoring. J. Arthroplast. 2019, 34, 2204–2209.

25. Houserman, D.J.; Berend, K.R.; Lombardi, A.V.; Duhaime, E.P.; Jain, A.; Crawford, D.A. The Viability of an Artificial Intelligence/Machine Learning Prediction Model to Determine Candidates for Knee Arthroplasty. J. Arthroplast. 2022.

26. Ramkumar, P.N.; Karnuta, J.M.; Navarro, S.M.; Haeberle, H.S.; Scuderi, G.R.; Mont, M.A.; Krebs, V.E.; Patterson, B.M. Deep Learning Preoperatively Predicts Value Metrics for Primary Total Knee Arthroplasty: Development and Validation of an Artificial Neural Network Model. J. Arthroplast. 2019, 34, 2220–2227.e1.

27. Baram, D.; Daroowalla, F.; Garcia, R.; Zhang, G.; Chen, J.J.; Healy, E.; Riaz, S.A.; Richman, P. Use of the All Patient Refined-Diagnosis Related Group (APR-DRG) Risk of Mortality Score as a Severity Adjustor in the Medical ICU. Clin. Med. Circ. Respir. Pulm. Med. 2008, 2, 19–25.

28. Haleem, A.; Vaishya, R.; Javaid, M.; Khan, I.H. Artificial Intelligence (AI) applications in orthopaedics: An innovative technology to embrace. J. Clin. Orthop. Trauma 2020, 11, S80–S81.

29. Federer, S.J.; Jones, G.G. Artificial intelligence in orthopaedics: A scoping review. PLoS ONE 2021, 16, e0260471.

30. Reddy, S. Explainability and artificial intelligence in medicine. Lancet Digit. Health 2022, 4, e214–e215.

31. Naik, N.; Hameed, B.M.; Shetty, D.K.; Swain, D.; Shah, M.; Paul, R.; Aggarwal, K.; Ibrahim, S.; Patil, V. Legal and Ethical Consideration in Artificial Intelligence in Healthcare: Who Takes Responsibility? Front. Surg. 2022, 9, 266.

32. Gordon, L.; Grantcharov, T.; Rudzicz, F. Explainable Artificial Intelligence for Safe Intraoperative Decision Support. JAMA Surg. 2019, 154, 1064–1065.

33. Leung, K.; Zhang, B.; Tan, J.; Shen, Y.; Geras, K.J.; Babb, J.S.; Cho, K.; Chang, G.; Deniz, C.M. Prediction of total knee replacement and diagnosis of osteoarthritis by using deep learning on knee radiographs: Data from the osteoarthritis initiative. Radiology 2020, 296, 584–593.

34. Martin, R.K.; Ley, C.; Pareek, A.; Groll, A.; Tischer, T.; Seil, R. Artificial intelligence and machine learning: An introduction for orthopaedic surgeons. Knee Surg. Sport. Traumatol. Arthrosc. 2022, 30, 361–364.

35. Jotterand, F.; Bosco, C. Artificial Intelligence in Medicine: A Sword of Damocles? J. Med. Syst. 2022, 46, 9.

36. Kurmis, A.P.; Ianunzio, J.R. Artificial intelligence in orthopedic surgery: Evolution, current state and future directions. Arthroplasty 2022, 4, 9.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Innocenti, B.; Radyul, Y.; Bori, E. The Use of Artificial Intelligence in Orthopedics: Applications and Limitations of Machine Learning in Diagnosis and Prediction. Appl. Sci. 2022, 12, 10775. https://doi.org/10.3390/app122110775