Bearing Fault Diagnosis Based on Small Sample Learning of Maml–Triplet

Abstract

1. Introduction

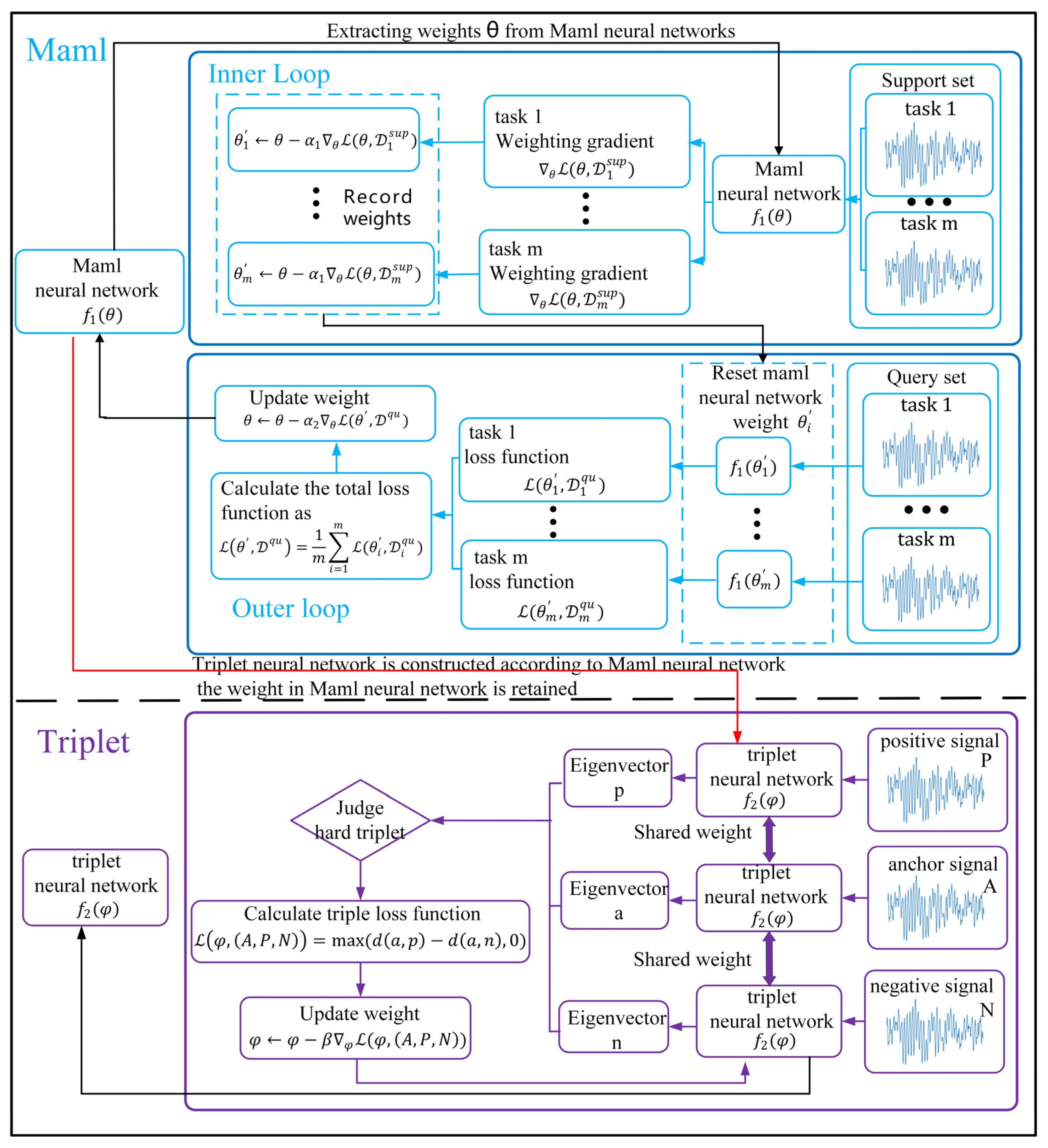

- A Maml–Triplet model for small-sample fault diagnosis is proposed. The Maml meta-learning method is used to obtain an initial feature extractor that can adapt quickly to multiple fault classification tasks. Triplet-based deep metric learning then maps each signal to a feature vector, and the fault type of an unknown signal is determined by comparing the Euclidean distances between its feature vector and those of signals whose fault types are known.

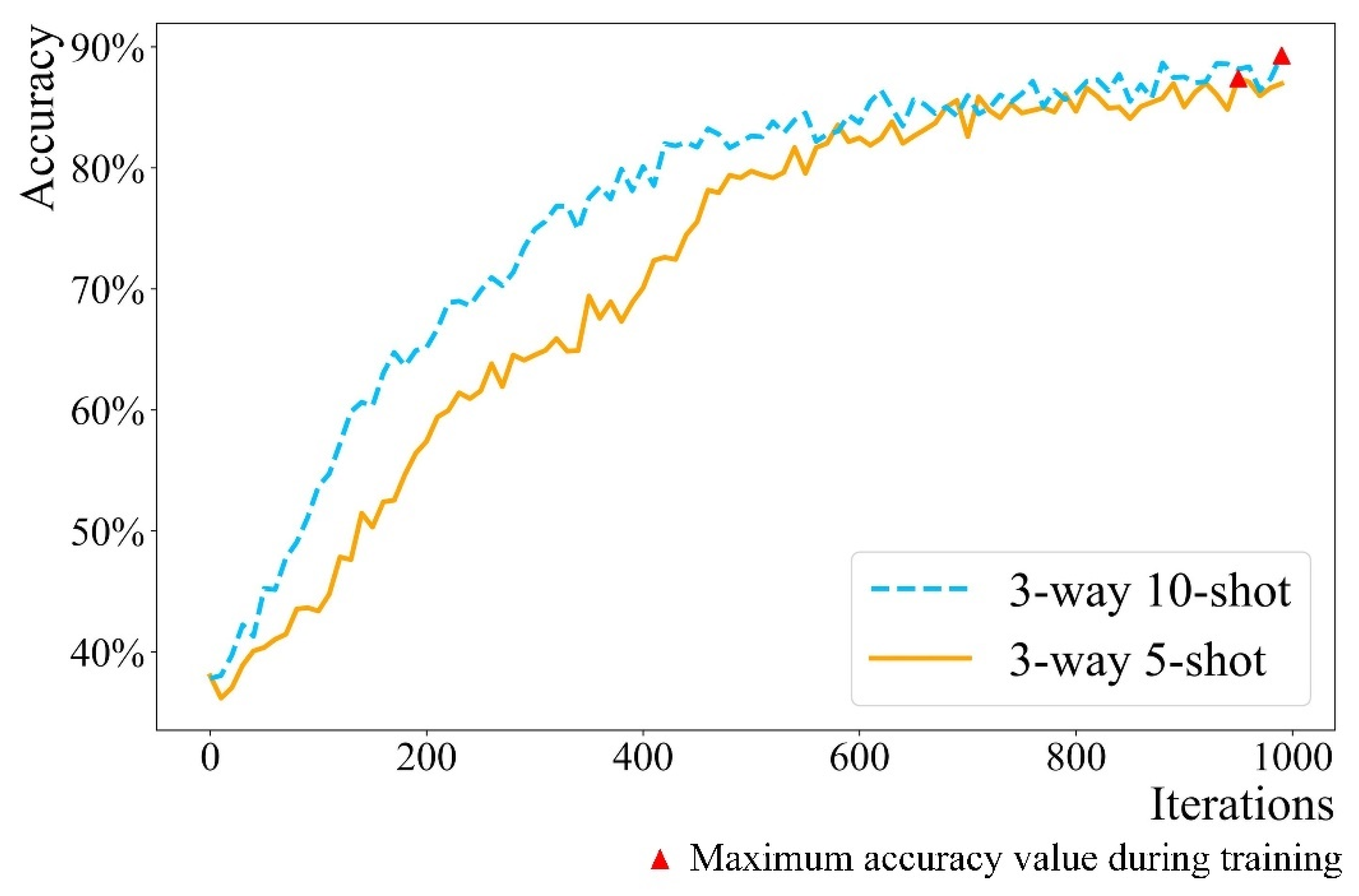

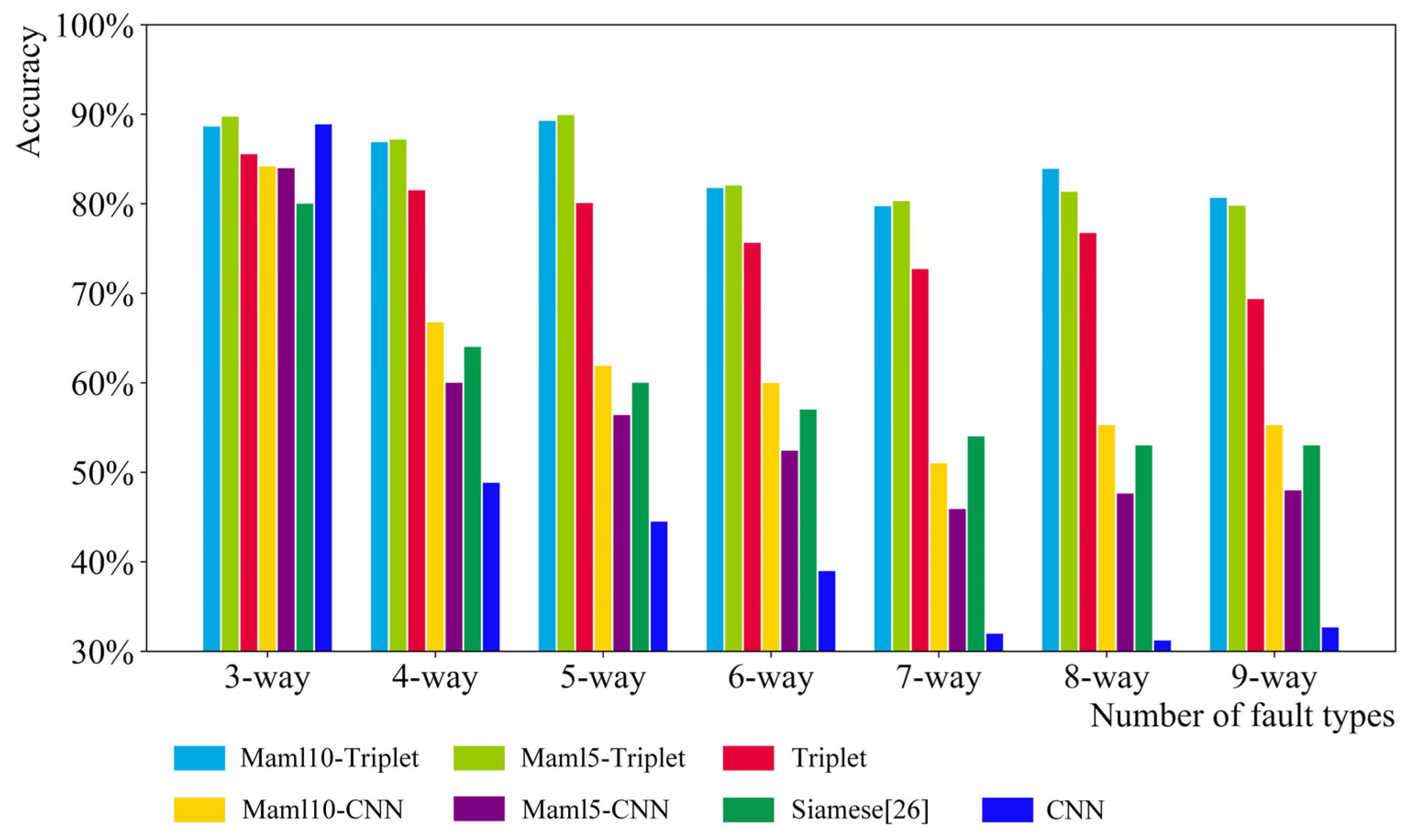

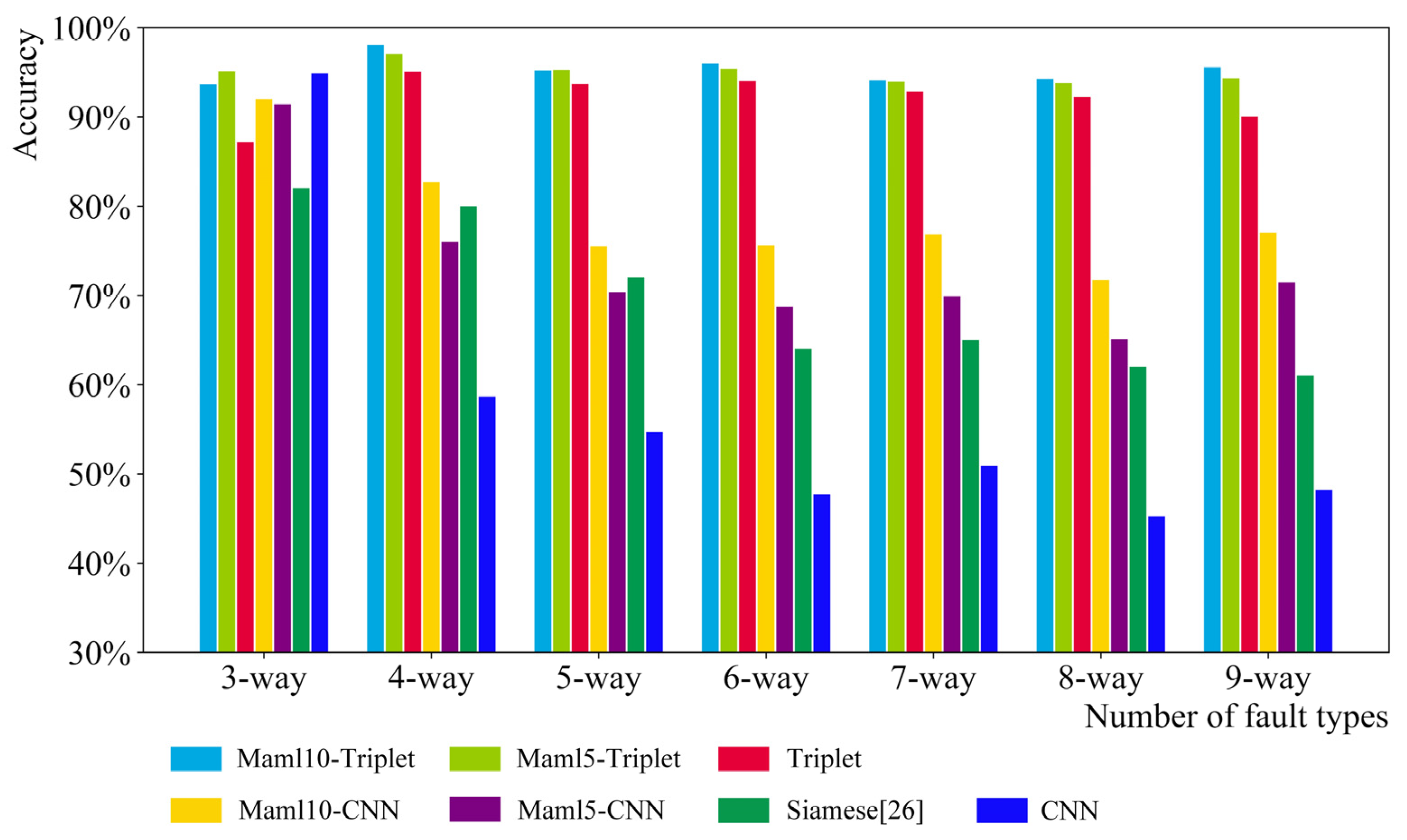

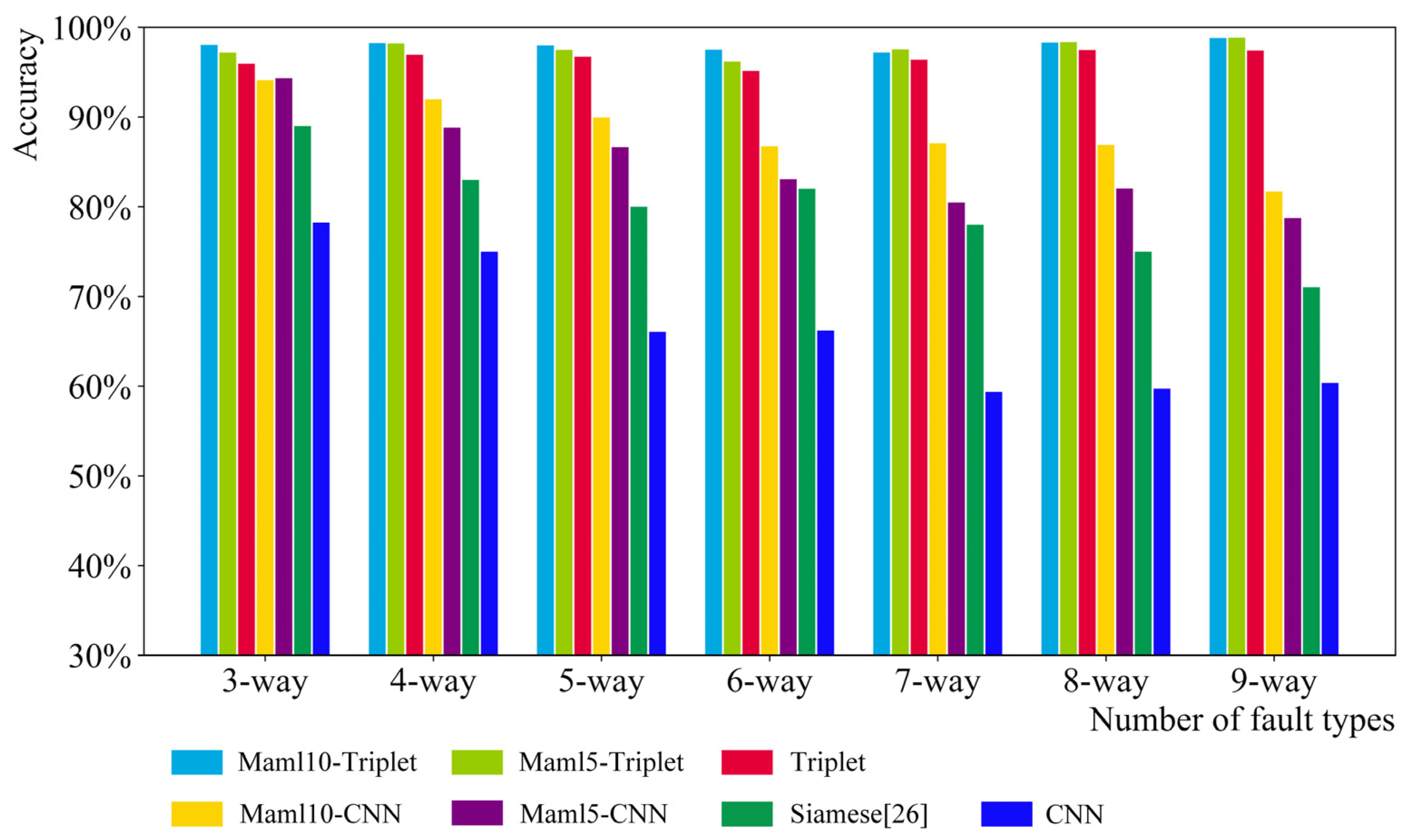

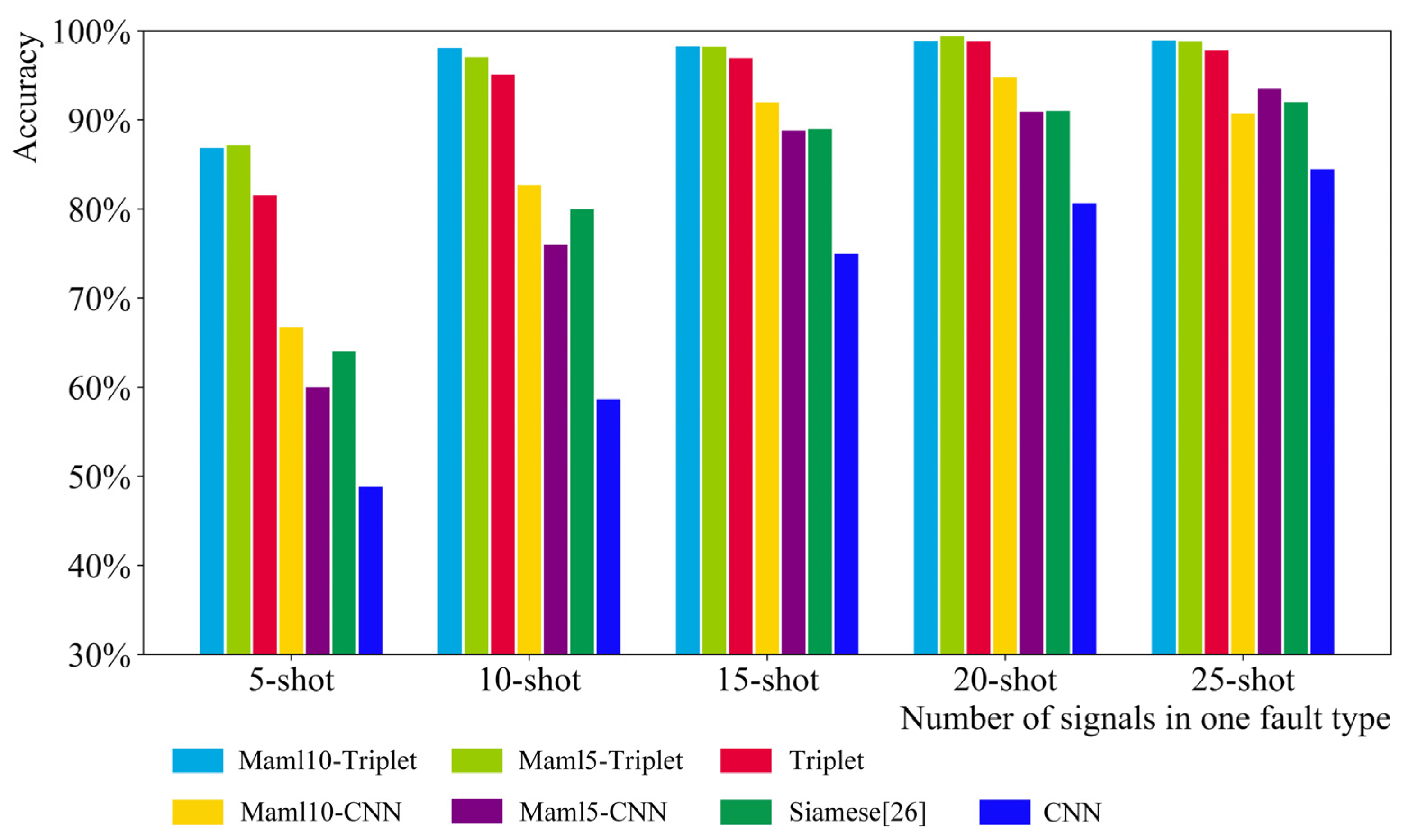

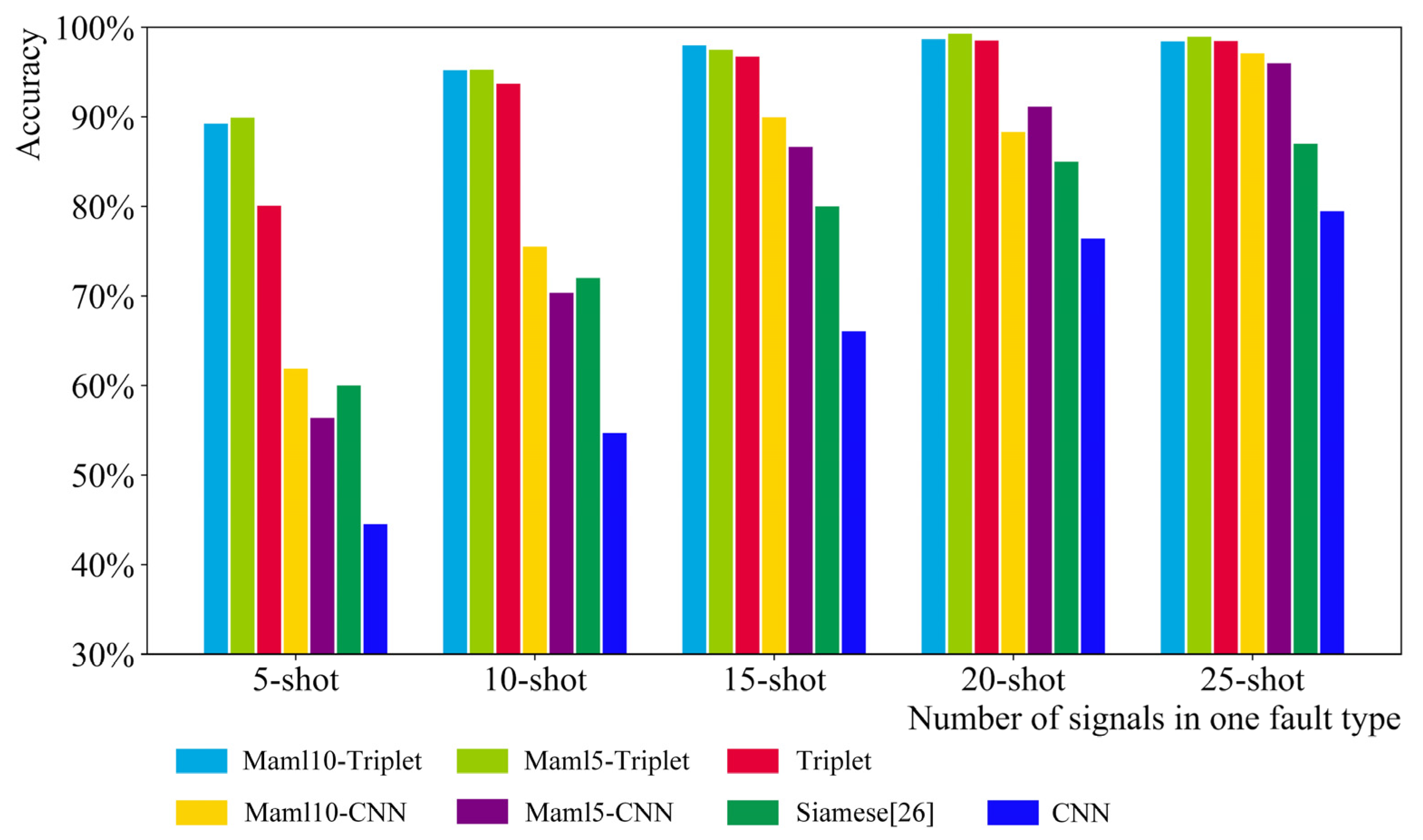

- Two datasets for training the Maml neural network are constructed, one from three-way five-shot and one from three-way ten-shot fault classification tasks, and a Maml–Triplet model is built on each of the two resulting Maml models. Experiments show that both Maml–Triplet models outperform the other models compared, across datasets of different sizes.

- The influence of the number of fault types, and of the number of signals available for a single fault, on model accuracy is studied. Experiments show that accuracy decreases as the number of fault types increases and as the number of signals per fault decreases, and that both factors affect the Maml–Triplet model less than the other models.
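
The final classification step described in the first contribution, comparing Euclidean distances between feature vectors, can be sketched as a nearest-neighbour rule. This is a minimal sketch, not the authors' code: it assumes the feature vectors have already been produced by the trained extractor, and the 2-D vectors and the use of the Group A codes A1, A4 and A7 as labels are hypothetical values chosen only for illustration.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def euclidean(a: Vector, b: Vector) -> float:
    """Euclidean distance between two feature vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def classify(query: Vector, support: List[Tuple[Vector, str]]) -> str:
    """Return the fault label of the support vector nearest to `query`.

    `support` holds (feature_vector, fault_label) pairs for signals whose
    fault type is known. Here the vectors are given directly; in the
    Maml-Triplet setting they would come from the trained feature extractor.
    """
    if not support:
        raise ValueError("support set is empty")
    _, label = min(support, key=lambda item: euclidean(query, item[0]))
    return label


# Hypothetical 2-D feature vectors for three known fault types.
support_set = [
    ([0.9, 0.1], "A1"),  # inner raceway, 0.007 in
    ([0.1, 0.8], "A4"),  # roller, 0.007 in
    ([0.5, 0.5], "A7"),  # outer raceway, 0.007 in
]
print(classify([0.85, 0.2], support_set))
```

Comparing against the mean feature vector of each fault type instead of every individual signal is a common alternative; the sketch uses the per-signal comparison because that is how the contribution is worded.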

2. Maml–Triplet Learning Method

2.1. Maml Meta Learning Method

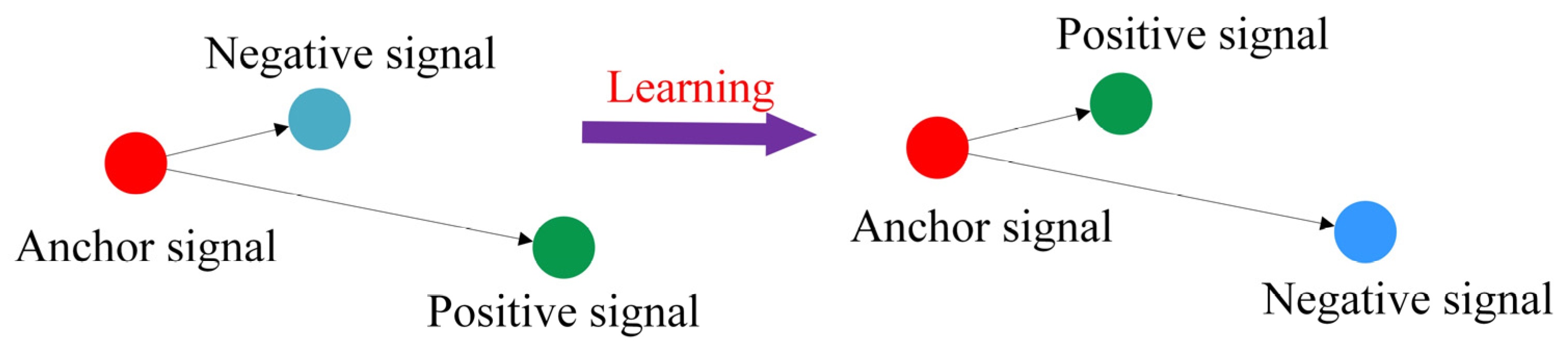
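
The core of the Maml method is a two-level optimization: an inner loop adapts the shared initial parameters to each task with a gradient step on that task's support set, and an outer loop updates the initial parameters so that the adapted parameters perform well on the task's query set. The sketch below shows this on a deliberately tiny problem. It assumes a one-parameter linear model `y ≈ w * x` and hypothetical regression tasks `y = a * x` in place of the paper's neural feature extractor and vibration-signal classification tasks; because the model is scalar, the second-order MAML gradient can be written out exactly.

```python
import random
from typing import List, Tuple

Data = Tuple[List[float], List[float]]


def loss_grad_hess(w: float, xs: List[float], ys: List[float]) -> Tuple[float, float, float]:
    """MSE of the prediction w * x, with its first and second derivatives in w."""
    n = len(xs)
    loss = sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / n
    grad = sum(2 * x * (w * x - y) for x, y in zip(xs, ys)) / n
    hess = sum(2 * x * x for x in xs) / n
    return loss, grad, hess


def sample_task(rng: random.Random, k: int) -> Tuple[Data, Data]:
    """Hypothetical toy task y = a * x: k support points and k query points."""
    a = rng.uniform(0.5, 2.0)  # task-specific slope
    xs = [rng.uniform(-1.0, 1.0) for _ in range(2 * k)]
    ys = [a * x for x in xs]
    return (xs[:k], ys[:k]), (xs[k:], ys[k:])


def maml(meta_steps: int = 500, tasks_per_batch: int = 4, k: int = 5,
         alpha: float = 0.1, beta: float = 0.05, seed: int = 0) -> float:
    """Learn an initial parameter w that adapts well after one inner step."""
    rng = random.Random(seed)
    w = 0.0  # meta-initialization
    for _ in range(meta_steps):
        meta_grad = 0.0
        for _ in range(tasks_per_batch):
            (xs_s, ys_s), (xs_q, ys_q) = sample_task(rng, k)
            # Inner loop: one gradient step on the task's support set.
            _, g_s, h_s = loss_grad_hess(w, xs_s, ys_s)
            w_adapted = w - alpha * g_s
            # Outer loop: chain rule through the inner step,
            # d(w_adapted)/dw = 1 - alpha * h_s (the second-order MAML term).
            _, g_q, _ = loss_grad_hess(w_adapted, xs_q, ys_q)
            meta_grad += g_q * (1.0 - alpha * h_s)
        w -= beta * meta_grad / tasks_per_batch
    return w


def adapt(w: float, support: Data, alpha: float = 0.1) -> float:
    """Fast adaptation to a new task: one gradient step from the learned init."""
    _, g, _ = loss_grad_hess(w, *support)
    return w - alpha * g


w0 = maml()
print(f"meta-learned initialization: {w0:.3f}")
```

For this toy task family the learned initialization settles near the mean slope of 1.25, from which a single inner step moves toward any particular task's slope. The learning rates `alpha` and `beta`, the number of meta-steps and the task distribution are illustrative assumptions; a neural extractor would need an autodiff framework to obtain the same second-order term.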

2.2. Deep Metric Learning Method Based on the Triplet Loss Function
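
The triplet loss that this deep metric learning step relies on pulls an anchor signal's feature vector toward a positive sample (same fault type) and pushes it away from a negative sample (different fault type) until the negative is farther away than the positive by at least a margin. The following is a minimal sketch of the standard hinge form, `max(d(a, p) - d(a, n) + margin, 0)`, with Euclidean distance `d`; the margin of 1.0 is an assumed value, not one taken from the paper.

```python
import math
from typing import Sequence, Tuple

Vector = Sequence[float]


def euclidean(a: Vector, b: Vector) -> float:
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor: Vector, positive: Vector, negative: Vector,
                 margin: float = 1.0) -> float:
    """Hinge-form triplet loss: max(d(a, p) - d(a, n) + margin, 0)."""
    return max(euclidean(anchor, positive) - euclidean(anchor, negative) + margin, 0.0)


def mean_triplet_loss(triplets: Sequence[Tuple[Vector, Vector, Vector]],
                      margin: float = 1.0) -> float:
    """Average the triplet loss over a batch of (anchor, positive, negative) triples."""
    return sum(triplet_loss(a, p, n, margin) for a, p, n in triplets) / len(triplets)


# The negative is already 4 units farther than the positive, so the loss is zero.
print(triplet_loss([0.0, 0.0], [0.0, 1.0], [3.0, 4.0]))
# Positive and negative swapped: 5 - 1 + 1 = 5.
print(triplet_loss([0.0, 0.0], [3.0, 4.0], [0.0, 1.0]))
```

This plain-Python version only evaluates the loss; in training, its gradient with respect to the extractor's weights would be computed by an autodiff framework.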

3. Construction of the Dataset and Model

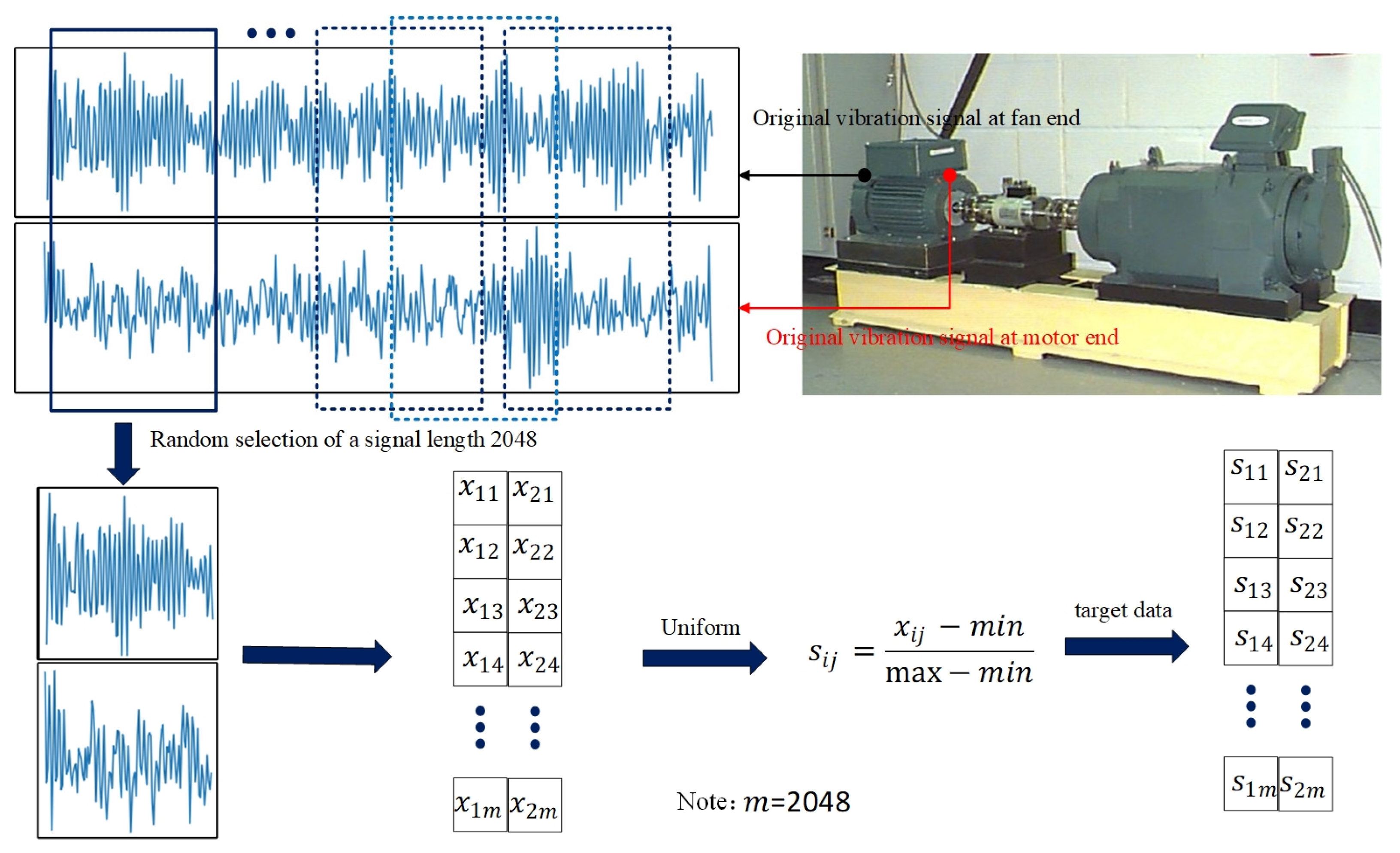

3.1. Dataset Introduction

3.2. Data Preprocessing

3.3. Construction Method of the Maml Neural Network Dataset and Triplet Neural Network Data
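
Building the Maml training data amounts to sampling many N-way K-shot tasks, such as the three-way five-shot and three-way ten-shot tasks mentioned in the introduction. Each task draws N fault types, K support signals per type for the inner-loop update, and further query signals per type for the outer-loop update. The sketch below assumes the preprocessed signal segments are already grouped by fault code; the query-set size `n_query` and the placeholder segment names are hypothetical.

```python
import random
from typing import Dict, List, Optional, Sequence, Tuple

Segment = str  # placeholder for a preprocessed signal segment
Labelled = List[Tuple[Segment, int]]


def sample_episode(signals_by_fault: Dict[str, Sequence[Segment]], n_way: int = 3,
                   k_shot: int = 5, n_query: int = 5,
                   rng: Optional[random.Random] = None) -> Tuple[List[str], Labelled, Labelled]:
    """Draw one N-way K-shot task.

    Returns the chosen fault codes plus support and query lists of
    (segment, episode_label) pairs, where episode labels are 0..n_way-1.
    """
    if rng is None:
        rng = random.Random()
    if len(signals_by_fault) < n_way:
        raise ValueError("not enough fault types for an N-way task")
    faults = rng.sample(sorted(signals_by_fault), n_way)
    support: Labelled = []
    query: Labelled = []
    for label, fault in enumerate(faults):
        pool = list(signals_by_fault[fault])
        if len(pool) < k_shot + n_query:
            raise ValueError(f"fault {fault} has too few segments")
        picked = rng.sample(pool, k_shot + n_query)  # no segment reused within a task
        support += [(seg, label) for seg in picked[:k_shot]]
        query += [(seg, label) for seg in picked[k_shot:]]
    return faults, support, query


# Hypothetical data: 20 segment IDs for each Group A fault code A1..A9.
signals = {f"A{i}": [f"A{i}_seg{j}" for j in range(20)] for i in range(1, 10)}
faults, support, query = sample_episode(signals, n_way=3, k_shot=5, rng=random.Random(0))
print(faults, len(support), len(query))
```

Relabelling the chosen fault types as 0 to N-1 in every task is what lets a single initialization be trained across tasks that contain different fault types.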

3.4. Construction Method of the Maml Neural Network and Triplet Neural Network Model

4. Experimental Process and Results

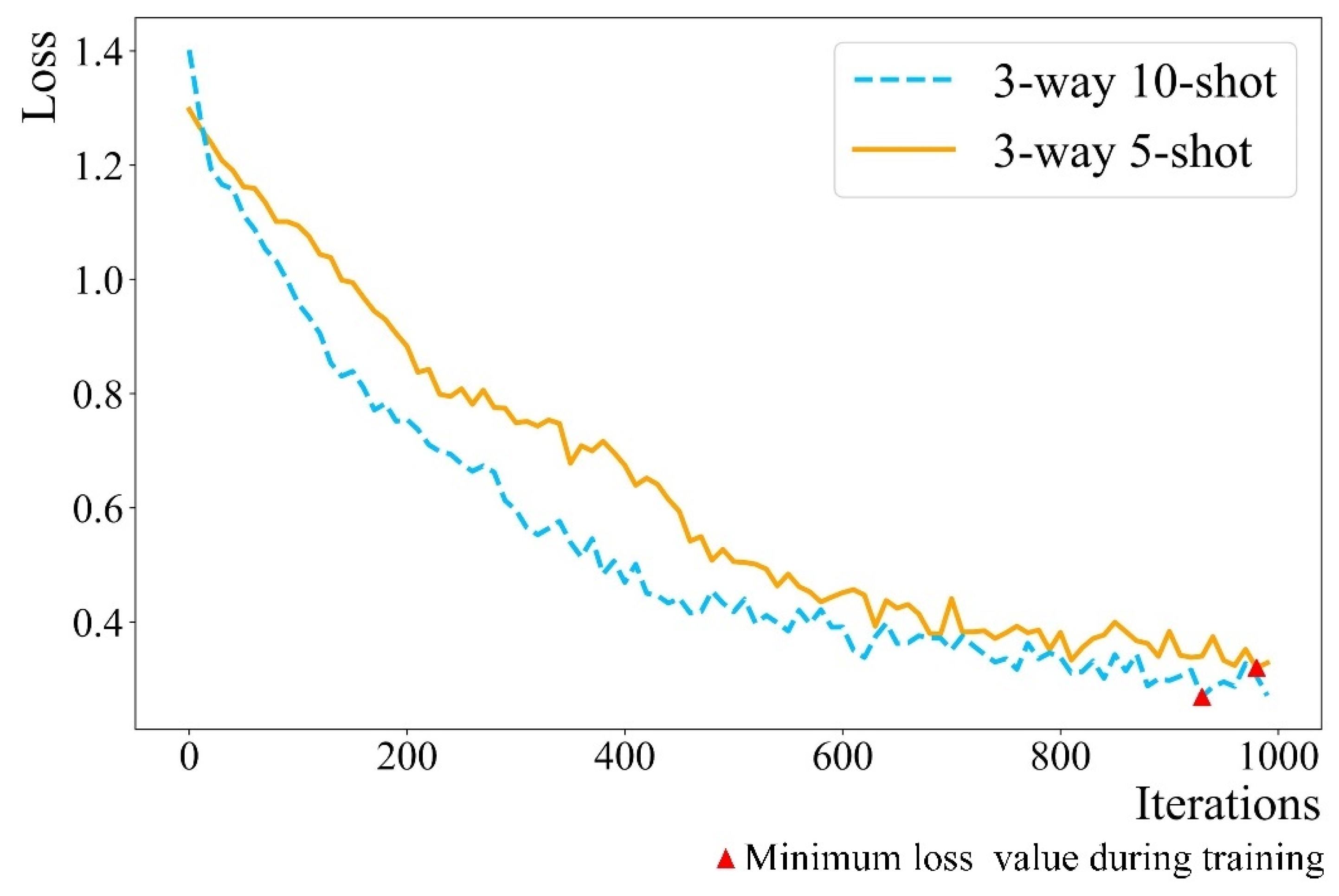

4.1. Experimental Process and Results of the Maml Learning Method

4.2. Test Criteria for Models

4.3. Experimental Process and Results of the Maml–Triplet Learning Method

4.3.1. Research on the Effect of the Number of Failure Types on Different Learning Methods

4.3.2. Research on the Influence of the Number of Signals in a Single Fault on Different Learning Methods

5. Conclusions and Prospect

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Correction Statement

Abbreviations

| Abbreviation | Meaning |
|---|---|
| CWRU | Case Western Reserve University |
| CNN | convolutional neural network |
| AE | autoencoder |
| RNN | recurrent neural network |
| GAN | generative adversarial network |
| DQINN | deep quantum inspired neural network |
| FFT | fast Fourier transform |
| CBLSTM | convolutional bidirectional long short-term memory |
| FTNN | feature-based transfer neural network |
| MAML | model-agnostic meta-learning |


| Code Number (Group A) | Bearing Position | Fault Position | Fault Size (Inches) |
|---|---|---|---|
| A1 | Fan end | Inner raceway | 0.007 |
| A2 | Fan end | Inner raceway | 0.014 |
| A3 | Fan end | Inner raceway | 0.021 |
| A4 | Fan end | Roller | 0.007 |
| A5 | Fan end | Roller | 0.014 |
| A6 | Fan end | Roller | 0.021 |
| A7 | Fan end | Outer raceway | 0.007 |
| A8 | Fan end | Outer raceway | 0.014 |
| A9 | Fan end | Outer raceway | 0.021 |

| Code Number (Group B) | Bearing Position | Fault Position | Fault Size (Inches) |
|---|---|---|---|
| B1 | Motor end | Inner raceway | 0.007 |
| B2 | Motor end | Inner raceway | 0.014 |
| B3 | Motor end | Inner raceway | 0.021 |
| B4 | Motor end | Roller | 0.007 |
| B5 | Motor end | Roller | 0.014 |
| B6 | Motor end | Roller | 0.021 |
| B7 | Motor end | Outer raceway | 0.007 |
| B8 | Motor end | Outer raceway | 0.014 |
| B9 | Motor end | Outer raceway | 0.021 |

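Fault frequencies as multiples of shaft speed (BPFI: ball pass frequency, inner race; BPFO: ball pass frequency, outer race; FTF: fundamental train (cage) frequency; BSF: ball spin frequency):

| Bearing Position | BPFI | BPFO | FTF | BSF |
|---|---|---|---|---|
| Motor end | 5.415 | 3.585 | 0.3983 | 2.357 |
| Fan end | 4.947 | 3.053 | 0.3816 | 1.994 |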
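
Because the characteristic fault frequencies in the table are given as multiples of shaft speed, the frequency in hertz at a given operating point is the multiple times the shaft rotation frequency (rpm / 60). The short worked sketch below assumes a shaft speed of 1797 rpm, the nominal no-load speed commonly quoted for the CWRU test rig, used here only as an example operating point.

```python
from typing import Dict

# Characteristic fault frequencies from the table above, as multiples of shaft speed.
FAULT_MULTIPLES: Dict[str, Dict[str, float]] = {
    "Motor end": {"BPFI": 5.415, "BPFO": 3.585, "FTF": 0.3983, "BSF": 2.357},
    "Fan end": {"BPFI": 4.947, "BPFO": 3.053, "FTF": 0.3816, "BSF": 1.994},
}


def fault_frequencies_hz(position: str, shaft_rpm: float) -> Dict[str, float]:
    """Convert the tabulated multiples into hertz for a given shaft speed in rpm."""
    shaft_hz = shaft_rpm / 60.0  # shaft rotation frequency in Hz
    return {name: m * shaft_hz for name, m in FAULT_MULTIPLES[position].items()}


# Example operating point (assumed): 1797 rpm.
for name, freq in fault_frequencies_hz("Motor end", 1797).items():
    print(f"{name}: {freq:.1f} Hz")
```

At 1797 rpm the shaft turns at 29.95 Hz, so the motor-end BPFI multiple of 5.415 corresponds to roughly 162.2 Hz.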

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cheng, Q.; He, Z.; Zhang, T.; Li, Y.; Liu, Z.; Zhang, Z. Bearing Fault Diagnosis Based on Small Sample Learning of Maml–Triplet. Appl. Sci. 2022, 12, 10723. https://doi.org/10.3390/app122110723
