Review: Facial Anthropometric, Landmark Extraction, and Nasal Reconstruction Technology

Abstract

1. Introduction

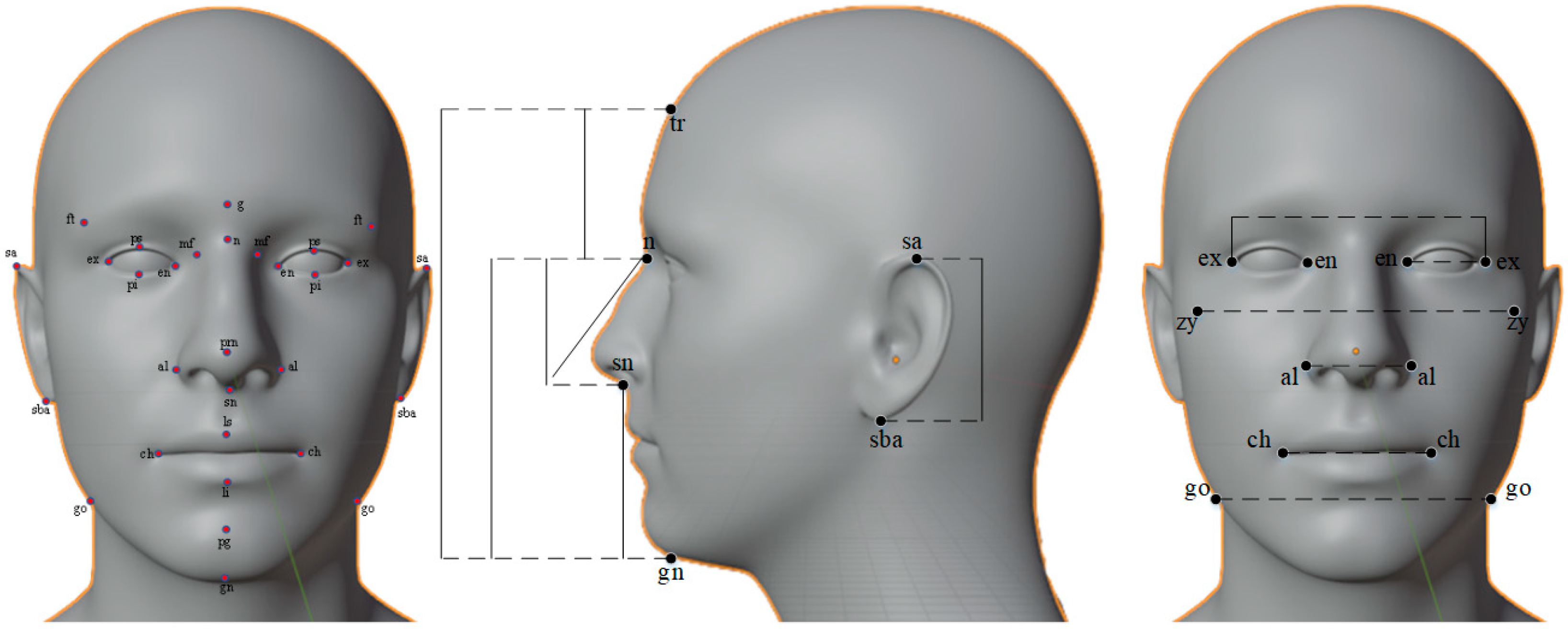

2. Anthropometric Measurement Analysis of Different Regions

| Study | N/C | Sample Size | Year | Age (years) | Subjects | Description |
|---|---|---|---|---|---|---|
| Farkas et al. [30] | Europe, the Middle East, Asia, Africa, and North America | 1470 | 2005 | 18–30 | Ethnicity, gender | 14 anthropometric measurements were used; the study was carried out by investigators working separately around the world. |
| Kwon et al. [33] | Korea | 192 | 2021 | 20–79 | Age | 26 landmarks were extracted to determine the relationship between age and anthropometric measurements. |
| Zhuang et al. [31] | African Americans, Hispanics, Caucasians | 3997 | 2010 | 18–29 | Gender, ethnicity, age | 21 anthropometric measurements were used to build an anthropometric database for designing protective equipment for workers. |
| Husein et al. [32] | Indian American | 102 | 2010 | 18–30 | Ethnicity | Investigates anthropometric factors in the faces of Indian American women; 25 of 30 facial measurements differed significantly from those of North American white (NAW) women. |
| Othman et al. [18] | Malay | 109 | 2016 | 20–30 | Gender | 22 measurements were used to establish an anthropometric baseline for Malay adults, with applications in medicine, forensic identification, design, etc. |
| Menéndez López-Mateos et al. [19] | Southern Spain | 100 | 2019 | 20–25 | Gender | Survey of European adults from southern Spain; 23 of 38 measurements were statistically significant, showing prominent differences between the sexes. |
| Virdi et al. [20] | Kenyan-African | 72 | 2019 | 18–30 | Gender, ethnicity | 22 measurements were taken; this is the first such survey of Kenyan males and females. |
| Celebi et al. [21] | Italian, Egyptian | 259 | 2018 | 18–30 | Gender, ethnicity | 139 Italians and 120 Egyptians were surveyed using 23 anthropometric landmarks. Egyptian women had facial features distinct from those of Italian women, whereas the males showed very similar facial features. |
| Dong et al. [22] | Chinese | 100 | 2011 | 20–27 | Gender | 31 landmarks were identified to construct 3D facial models of Chinese males and females. |
| Al-Jassim et al. [23] | Arab, Arian, and Mixed | 1000 | 2014 | >18 | Race, sex | 10 measurements were evaluated in Arab, Arian, and mixed subjects living in Basrah; ethnicity affects the diversity of facial anthropometric features. |
| Zacharopoulos et al. [24] | Greek | 152 | 2016 | 18–30 | Gender | 30 facial measurements were taken to establish anthropometric norms for Greek males and females. |
| Staka et al. [25] | Kosovar Albanian | 204 | 2017 | 18–30 | Gender | Anthropometric norms for Kosovar Albanian adults were established from 8 facial measurements. |
| Miyazato et al. [26] | Okinawa Islanders, mainland Japanese | 120 | 2014 | 19–37 | Ethnicity, gender | 21 landmarks and 27 measurements were used to compare the facial characteristics of Okinawa Islanders and mainland Japanese. |
| Thordarson et al. [28] | Icelandic children | 182 | 2005 | 6–16 | Gender (boys/girls) | Longitudinal measurements were used to evaluate craniofacial changes in a sample of Icelandic boys and girls. |
| Jahanbin et al. [29] | Northeast Iran | 583 | 2012 | 11–17 | Boys from northeast Iran | 8 measurements were used to assess age-related changes in the face. |
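In 3D photogrammetric studies such as several of those above, a linear measurement is typically the straight-line distance between two landmarks, an index is a ratio of such distances, and group differences (e.g., between sexes) are then tested statistically. The sketch below illustrates that pipeline under stated assumptions: the landmark coordinates, dictionary keys, and function names are hypothetical; the nasal index (nasal width al–al as a percentage of nasal height n–sn) follows Farkas's landmark convention; and Welch's t statistic is shown only as one common two-sample test, not necessarily the test used by any cited study.

```python
import math
import statistics

# Hypothetical 3D landmark coordinates (x, y, z) in millimetres for one subject.
# Keys follow Farkas's abbreviations: n = nasion, sn = subnasale,
# al_r / al_l = right and left alare.
LANDMARKS = {
    "n": (0.0, 50.0, 10.0),
    "sn": (0.0, 0.0, 10.0),
    "al_r": (-17.5, 5.0, 12.0),
    "al_l": (17.5, 5.0, 12.0),
}


def linear_measurement(points, a, b):
    """Straight-line (Euclidean) distance between two named landmarks."""
    return math.dist(points[a], points[b])


def nasal_index(points):
    """Nasal width (al-al) expressed as a percentage of nasal height (n-sn)."""
    width = linear_measurement(points, "al_r", "al_l")
    height = linear_measurement(points, "n", "sn")
    return 100.0 * width / height


def welch_t(group_a, group_b):
    """Welch's t statistic for two independent samples with unequal variances."""
    mean_a, mean_b = statistics.mean(group_a), statistics.mean(group_b)
    # statistics.variance is the sample (n - 1) variance.
    var_a, var_b = statistics.variance(group_a), statistics.variance(group_b)
    return (mean_a - mean_b) / math.sqrt(var_a / len(group_a) + var_b / len(group_b))


if __name__ == "__main__":
    print(f"al-al = {linear_measurement(LANDMARKS, 'al_r', 'al_l'):.1f} mm")
    print(f"nasal index = {nasal_index(LANDMARKS):.1f}")
    # Hypothetical nasal widths (mm) for two small samples.
    male = [36.0, 38.0, 37.0]
    female = [33.0, 34.0, 35.0]
    print(f"Welch t = {welch_t(male, female):.2f}")
```

Turning a t statistic into a p-value needs the t distribution (e.g., from a statistics library), which is omitted here to keep the sketch dependency-free.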

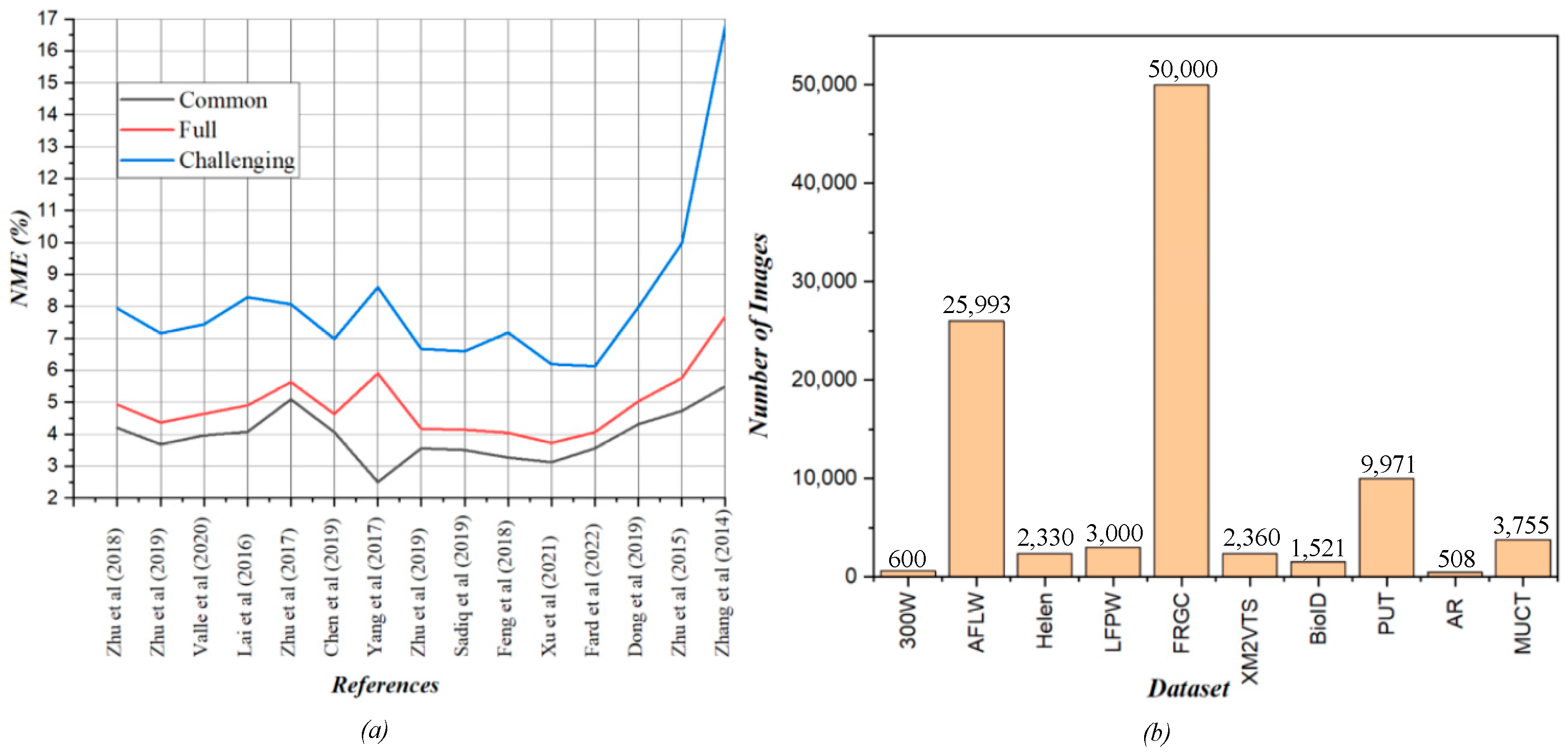

3. Facial Landmark Extraction

3.1. Deep Convolutional Neural Networks

3.2. Non-Deep Convolutional Neural Networks

3.3. Facial Landmark Detection in Medicine

4. Nasal Reconstruction Technology

5. Discussion and Evaluations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Behera, S.K.; Rath, A.K.; Mahapatra, A.; Sethy, P.K. Identification, classification & grading of fruits using machine learning & computer intelligence: A review. J. Ambient. Intell. Humaniz. Comput. 2020, 1–11.

- Garg, A.; Mago, V. Role of machine learning in medical research: A survey. Comput. Sci. Rev. 2021, 40, 100370.

- Chung, S.-H. Applications of smart technologies in logistics and transport: A review. Transp. Res. Part E Logist. Transp. Rev. 2021, 153, 102455.

- Asi, S.M.; Ismail, N.H.; Ahmad, R.; Ramlan, E.I.; Rahman, Z.A.A. Automatic craniofacial anthropometry landmarks detection and measurements for the orbital region. Procedia Comput. Sci. 2014, 42, 372–377.

- Wu, W.; Yin, Y.; Wang, X.; Xu, D. Face Detection with Different Scales Based on Faster R-CNN. IEEE Trans. Cybern. 2018, 49, 4017–4028.

- Ko, B.C. A Brief Review of Facial Emotion Recognition Based on Visual Information. Sensors 2018, 18, 401.

- Ab Wahab, M.N.; Nazir, A.; Ren, A.T.Z.; Noor, M.H.M.; Akbar, M.F.; Mohamed, A.S.A. Efficientnet-lite and hybrid CNN-KNN implementation for facial expression recognition on raspberry pi. IEEE Access 2021, 9, 134065–134080.

- Jackson, A.S.; Bulat, A.; Argyriou, V.; Tzimiropoulos, G. Large Pose 3D Face Reconstruction from a Single Image via Direct Volumetric CNN Regression. In Proceedings of the 16th IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1031–1039.

- Irtija, N.; Sami, M.; Ahad, M.A.R. Fatigue detection using facial landmarks. In Proceedings of the International Symposium on Affective Science and Engineering ISASE 2018, Cheney, WA, USA, 31 May–2 June 2018; Japan Society of Kansei Engineering: Tokyo, Japan, 2018; pp. 1–6.

- Benitez-Quiroz, C.F.; Srinivasan, R.; Martinez, A.M. Emotionet: An accurate, real-time algorithm for the automatic annotation of a million facial expressions in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2016, Las Vegas, NV, USA, 27–30 June 2016; pp. 5562–5570.

- Yashunin, D.; Baydasov, T.; Vlasov, R. MaskFace: Multi-task face and landmark detector. arXiv preprint 2020, arXiv:2005.09412.

- Oyetunde, M.O.; Akinmeye, A.J. Factors Influencing Practice of Patient Education among Nurses at the University College Hospital, Ibadan. Open J. Nurs. 2015, 5, 500–507.

- Duffner, S.; Garcia, C. A connexionist approach for robust and precise facial feature detection in complex scenes. In Proceedings of the ISPA 2005 4th International Symposium on Image and Signal Processing and Analysis, Zagreb, Croatia, 15–17 September 2005; pp. 316–321.

- Zhu, S.; Li, C.; Loy, C.C.; Tang, X. Face alignment by coarse-to-fine shape searching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4998–5006.

- Belhumeur, P.N.; Jacobs, D.W.; Kriegman, D.J.; Kumar, N. Localizing parts of faces using a consensus of exemplars. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2930–2940.

- Vučinić, N.; Tubbs, R.S.; Erić, M.; Vujić, Z.; Marić, D.; Vuković, B. What Do We Find Attractive about the Face? Survey Study with Application to Aesthetic Surgery. Clin. Anat. 2019, 33, 214–222.

- Muslu, Ü.; Demir, E. Development of rhinoplasty: Yesterday and today. Med. Sci. 2019, 23, 294–301.

- Othman, S.A.; Majawit, L.P.; Wan Hassan, W.N.; Wey, M.C.; Mohd Razi, R. Anthropometric study of three-dimensional facial morphology in Malay adults. PLoS ONE 2016, 11, e0164180.

- López-Mateos, M.L.M.; Carreño-Carreño, J.; Palma, J.C.; Alarcón, J.A.; López-Mateos, C.M.; Menéndez-Núñez, M. Three-dimensional photographic analysis of the face in European adults from southern Spain with normal occlusion: Reference anthropometric measurements. BMC Oral Health 2019, 19, 196.

- Virdi, S.S.; Wertheim, D.; Naini, F.B. Normative anthropometry and proportions of the Kenyan-African face and comparative anthropometry in relation to African Americans and North American Whites. Maxillofac. Plast. Reconstr. Surg. 2019, 41, 9.

- Celebi, A.A.; Kau, C.H.; Femiano, F.; Bucci, L.; Perillo, L. A Three-Dimensional Anthropometric Evaluation of Facial Morphology. J. Craniofacial Surg. 2018, 29, 304–308.

- Dong, Y.; Zhao, Y.; Bai, S.; Wu, G.; Zhou, L.; Wang, B. Three-Dimensional Anthropometric Analysis of Chinese Faces and Its Application in Evaluating Facial Deformity. J. Oral Maxillofac. Surg. 2010, 69, 1195–1206.

- Al-Jassim, N.H.; Fathallah, Z.F.; Abdullah, N.M. Anthropometric measurements of human face in Basrah. Bas. J. Surg. 2014, 20, 29–40.

- Zacharopoulos, G.V.; Manios, A.; Kau, C.H.; Velagrakis, G.; Tzanakakis, G.N.; de Bree, E. Anthropometric analysis of the face. J. Craniofacial Surg. 2016, 27, e71–e75.

- Staka, G.; Asllani-Hoxha, F.; Bimbashi, V. Facial Anthropometric Norms among Kosovo-Albanian Adults. Acta Stomatol. Croat. 2017, 51, 195–206.

- Miyazato, E.; Yamaguchi, K.; Fukase, H.; Ishida, H.; Kimura, R. Comparative analysis of facial morphology between Okinawa Islanders and mainland Japanese using three-dimensional images. Am. J. Hum. Biol. 2014, 26, 538–548.

- Dong, Y.; Zhao, Y.; Bai, S.; Wu, G.; Wang, B. Three-dimensional anthropometric analysis of the Chinese nose. J. Plast. Reconstr. Aesthetic Surg. 2010, 63, 1832–1839.

- Thordarson, A.; Johannsdottir, B.; Magnusson, T.E. Craniofacial changes in Icelandic children between 6 and 16 years of age: A longitudinal study. Eur. J. Orthod. 2005, 28, 152–165.

- Jahanbin, A.; Mahdavishahri, N.; Baghayeripour, M.; Esmaily, H.; Eslami, N. Evaluation of Facial Anthropometric Parameters in 11–17 Year Old Boys. J. Clin. Pediatr. Dent. 2012, 37, 95–101.

- Farkas, L.G.; Katic, M.J.; Forrest, C.R. International Anthropometric Study of Facial Morphology in Various Ethnic Groups/Races. J. Craniofacial Surg. 2005, 16, 615–646.

- Zhuang, Z.; Landsittel, D.; Benson, S.; Roberge, R.; Shaffer, R. Facial Anthropometric Differences among Gender, Ethnicity, and Age Groups. Ann. Occup. Hyg. 2010, 54, 391–402.

- Husein, O.F.; Sepehr, A.; Garg, R.; Sina-Khadiv, M.; Gattu, S.; Waltzman, J.; Galle, S.E. Anthropometric and aesthetic analysis of the Indian American woman’s face. J. Plast. Reconstr. Aesthetic Surg. 2010, 63, 1825–1831.

- Kwon, S.H.; Choi, J.W.; Kim, H.J.; Lee, W.S.; Kim, M.; Shin, J.W.; Huh, C.H. Three-Dimensional Photogrammetric Study on Age-Related Facial Characteristics in Korean Females. Ann. Dermatol. 2021, 33, 52.

- Farkas, L.G. (Ed.) Anthropometry of the Head and Face; Lippincott Williams & Wilkins: Philadelphia, PA, USA, 1994.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv preprint 2014, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Viglialoro, R.; Condino, S.; Turini, G.; Carbone, M.; Ferrari, V.; Gesi, M. Augmented Reality, Mixed Reality, and Hybrid Approach in Healthcare Simulation: A Systematic Review. Appl. Sci. 2021, 11, 2338.

- de Bittencourt Zavan, F.H.; Nascimento, A.C.; Bellon, O.R.; Silva, L. 3D face alignment in the wild: A landmark-free, nose-based approach. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–10 and 15–16 October 2016; Springer: Cham, Switzerland, 2016; pp. 581–589.

- Gou, C.; Wu, Y.; Wang, F.Y.; Ji, Q. Shape augmented regression for 3D face alignment. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–10 and 15–16 October 2016; Springer: Cham, Switzerland, 2016; pp. 604–615.

- Jeni, L.A.; Cohn, J.F.; Kanade, T. Dense 3D face alignment from 2D video for real-time use. Image Vis. Comput. 2016, 58, 13–24.

- Sun, Y.; Wang, X.; Tang, X. Deep convolutional network cascade for facial point detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 3476–3483.

- Zhu, M.; Shi, D.; Chen, S.; Gao, J. Branched convolutional neural networks for face alignment. In Proceedings of the Pacific Rim Conference on Multimedia, Hefei, China, 21–22 September 2018; Springer: Cham, Switzerland, 2018; pp. 291–302.

- Zhu, M.; Shi, D.; Gao, J. Branched convolutional neural networks incorporated with Jacobian deep regression for facial landmark detection. Neural Netw. 2019, 118, 127–139.

- Valle, R.; Buenaposada, J.M.; Baumela, L. Cascade of encoder-decoder CNNs with learned coordinates regressor for robust facial landmarks detection. Pattern Recognit. Lett. 2019, 136, 326–332.

- Lai, H.; Xiao, S.; Pan, Y.; Cui, Z.; Feng, J.; Xu, C.; Yan, S. Deep recurrent regression for facial landmark detection. IEEE Trans. Circuits Syst. Video Technol. 2016, 28, 1144–1157.

- Hoang, V.-T.; Huang, D.-S.; Jo, K.-H. 3-D Facial Landmarks Detection for Intelligent Video Systems. IEEE Trans. Ind. Inform. 2020, 17, 578–586.

- Zhu, X.; Liu, X.; Lei, Z.; Li, S.Z. Face alignment in full pose range: A 3d total solution. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 41, 78–92.

- Rao, G.K.L.; Srinivasa, A.C.; Iskandar, Y.H.P.; Mokhtar, N. Identification and analysis of photometric points on 2D facial images: A machine learning approach in orthodontics. Health Technol. 2019, 9, 715–724.

- Tao, Q.Q.; Zhan, S.; Li, X.H.; Kurihara, T. Robust face detection using local CNN and SVM based on kernel combination. Neurocomputing 2016, 211, 98–105.

- Chen, L.; Su, H.; Ji, Q. Deep structured prediction for facial landmark detection. Adv. Neural Inf. Processing Syst. 2019, 32, 2450–2460.

- Sivaram, M.; Porkodi, V.; Mohammed, A.S.; Manikandan, V. Detection Of Accurate Facial Detection Using Hybrid Deep Convolutional Recurrent Neural Network. ICTACT J. Soft Comput. 2019, 9.

- Chen, Y.; Luo, W.; Yang, J. Facial landmark detection via pose-induced auto-encoder networks. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 2115–2119.

- Yang, J.; Liu, Q.; Zhang, K. Stacked hourglass network for robust facial landmark localisation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 79–87.

- Zhu, M.; Shi, D.; Zheng, M.; Sadiq, M. Robust facial landmark detection via occlusion-adaptive deep networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3486–3496.

- Sadiq, M.; Shi, D.; Guo, M.; Cheng, X. Facial Landmark Detection via Attention-Adaptive Deep Network. IEEE Access 2019, 7, 181041–181050.

- Feng, Z.H.; Kittler, J.; Awais, M.; Huber, P.; Wu, X.J. Wing loss for robust facial landmark localisation with convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2235–2245.

- Xu, Z.; Li, B.; Yuan, Y.; Geng, M. AnchorFace: An Anchor-based Facial Landmark Detector across Large Poses. Proc. AAAI Conf. Artif. Intell. 2021, 35, 3092–3100.

- Fard, A.P.; Mahoor, M.H. Facial landmark points detection using knowledge distillation-based neural networks. Comput. Vis. Image Underst. 2021, 215, 103316.

- Dong, X.; Yang, Y. Teacher supervises students how to learn from partially labeled images for facial landmark detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 783–792.

- van Engelen, J.E.; Hoos, H.H. A survey on semi-supervised learning. Mach. Learn. 2019, 109, 373–440.

- Cootes, T.; Baldock, E.R.; Graham, J. An introduction to active shape models. Image Processing Anal. 2000, 243657, 223–248.

- Cootes, T.F.; Edwards, G.J.; Taylor, C.J. Active appearance models. In Proceedings of the European Conference on Computer Vision, Freiburg, Germany, 2–6 June 1998; Springer: Berlin/Heidelberg, Germany, 1998; pp. 484–498.

- Wang, Q.; Liu, L.; Zhu, W.; Mo, H.; Deng, C.; Wei, S. A 700fps optimized coarse-to-fine shape searching based hardware accelerator for face alignment. In Proceedings of the 2017 54th ACM/EDAC/IEEE Design Automation Conference (DAC), Austin, TX, USA, 18–22 June 2017; pp. 1–6.

- Zhang, J.; Shan, S.; Kan, M.; Chen, X. Coarse-to-fine auto-encoder networks (cfan) for real-time face alignment. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Cham, Switzerland, 2014; pp. 1–16.

- Kazemi, V.; Sullivan, J. One millisecond face alignment with an ensemble of regression trees. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 23–28 June 2014; pp. 1867–1874.

- Thakur, P.; Wadajkar, G. Facial Feature Points Detection Using Cascaded Regression Tree. Int. J. Res. Eng. Sci. Manag. 2018, 1, 170–173.

- Sohail, A.S.M.; Bhattacharya, P. Detection of facial feature points using anthropometric face model. In Signal Processing for Image Enhancement and Multimedia Processing; Springer: Boston, MA, USA, 2008; pp. 189–200.

- Sohail, A.S.M.; Bhattacharya, P. Localization of Facial Feature Regions Using Anthropometric Face Model. In Proceedings of the International Conference on Multidisciplinary Information Sciences and Technologies, Mérida, Spain, 25–28 October 2006.

- Fasel, I.; Fortenberry, B.; Movellan, J. A generative framework for real time object detection and classification. Comput. Vis. Image Underst. 2005, 98, 182–210.

- Alom, M.Z.; Piao, M.L.; Islam, M.S.; Kim, N.; Park, J.H. Optimized facial features-based age classification. Int. J. Comput. Inf. Eng. 2012, 6, 327–331.

- Du, R.; Lee, H.J. Consistency of Optimized Facial Features through the Ages. Int. J. Multimed. Ubiquitous Eng. 2013, 8, 61–70.

- Tuan, H.N.A.; Dieu, P.D.; Hai, N.D.X.; Thinh, N.T. Anthropometric Identification System Using Convolution Neural Network Based On Region Proposal Network. Tạp chí Y học Việt Nam 2021, 506.

- Tuan, H.N.A.; Hai, N.D.X.; Thinh, N.T. The Improved Faster R-CNN for Detecting Small Facial Landmarks on Vietnamese Human Face Based on Clinical Diagnosis. J. Image Graph. 2022, 10, 76–81.

- Guarin, D.L.; Yunusova, Y.; Taati, B.; Dusseldorp, J.R.; Mohan, S.; Tavares, J.; Van Veen, M.M.; Fortier, E.; Hadlock, T.A.; Jowett, N. Toward an Automatic System for Computer-Aided Assessment in Facial Palsy. Facial Plast. Surg. Aesthetic Med. 2020, 22, 42–49.

- Kong, X.; Gong, S.; Su, L.; Howard, N.; Kong, Y. Automatic Detection of Acromegaly from Facial Photographs Using Machine Learning Methods. eBioMedicine 2017, 27, 94–102.

- AbdAlmageed, W.; Mirzaalian, H.; Guo, X.; Randolph, L.M.; Tanawattanacharoen, V.K.; Geffner, M.E.; Ross, H.M.; Kim, M.S. Assessment of Facial Morphologic Features in Patients with Congenital Adrenal Hyperplasia Using Deep Learning. JAMA Netw. Open 2020, 3, e2022199.

- Gurovich, Y.; Hanani, Y.; Bar, O.; Nadav, G.; Fleischer, N.; Gelbman, D.; Gripp, K.W. Identifying facial phenotypes of genetic disorders using deep learning. Nat. Med. 2019, 25, 60–64.

- Liu, H.; Mo, Z.H.; Yang, H.; Zhang, Z.F.; Hong, D.; Wen, L.; Wang, S.S. Automatic Facial Recognition of Williams-Beuren Syndrome Based on Deep Convolutional Neural Networks. Front. Pediatrics 2021, 9, 449.

- Nachmani, O.; Saun, T.; Huynh, M.; Forrest, C.R.; McRae, M. “Facekit”: Toward an Automated Facial Analysis App Using a Machine Learning–Derived Facial Recognition Algorithm. Plast. Surg. 2022.

- Gerós, A.; Horta, R.; Aguiar, P. Facegram: Objective quantitative analysis in facial reconstructive surgery. J. Biomed. Inform. 2016, 61, 1–9.

- Petrides, G.; Clark, J.R.; Low, H.; Lovell, N.; Eviston, T.J. Three-dimensional scanners for soft-tissue facial assessment in clinical practice. J. Plast. Reconstr. Aesthetic Surg. 2021, 74, 605–614.

- Hontanilla, B.; Aubá, C. Automatic three-dimensional quantitative analysis for evaluation of facial movement. J. Plast. Reconstr. Aesthetic Surg. 2008, 61, 18–30.

- Aarabi, P.; Hughes, D.; Mohajer, K.; Emami, M. The automatic measurement of facial beauty. In Proceedings of the 2001 IEEE International Conference on Systems, Man and Cybernetics. e-Systems and e-Man for Cybernetics in Cyberspace (Cat. No. 01CH37236), Tucson, AZ, USA, 7–10 October 2001; Volume 4, pp. 2644–2647.

- Zhao, Q.; Rosenbaum, K.; Sze, R.; Zand, D.; Summar, M.; Linguraru, M.G. Down syndrome detection from facial photographs using machine learning techniques. In Proceedings of the Medical Imaging 2013: Computer-Aided Diagnosis, Lake Buena Vista, FL, USA, 9–14 February 2013; SPIE: Bellingham, WA, USA, 2013; Volume 8670, pp. 9–15.

- Qin, B.; Liang, L.; Wu, J.; Quan, Q.; Wang, Z.; Li, D. Automatic identification of down syndrome using facial images with deep convolutional neural network. Diagnostics 2020, 10, 487.

- Agger, A.; von Buchwald, C.; Madsen, A.R.; Yde, J.; Lesnikova, I.; Christensen, C.B.; Foghsgaard, S.; Christensen, T.B.; Hansen, H.S.; Larsen, S.; et al. Squamous cell carcinoma of the nasal vestibule 1993–2002: A nationwide retrospective study from DAHANCA. Head Neck 2009, 31, 1593–1599.

- Faris, C.; Heiser, A.; Quatela, O.; Jackson, M.; Tessler, O.; Jowett, N.; Lee, L.N. Health utility of rhinectomy, surgical nasal reconstruction, and prosthetic rehabilitation. Laryngoscope 2020, 130, 1674–1679.

- Shaye, D.A. The history of nasal reconstruction. Curr. Opin. Otolaryngol. Head Neck Surg. 2021, 29, 259.

- Lin, H.F.; Hsieh, Y.C.; Hsieh, Y.L. Factors Affecting Location of Nasal Airway Obstruction. In Proceedings of the 2020 IEEE Eurasia Conference on IOT, Communication and Engineering (ECICE), Yunlin, Taiwan, 23–25 October 2020; pp. 21–24.

- Avrunin, O.G.; Nosova, Y.V.; Abdelhamid, I.Y.; Pavlov, S.V.; Shushliapina, N.O.; Bouhlal, N.A.; Ormanbekova, A.; Iskakova, A.; Harasim, D. Research Active Posterior Rhinomanometry Tomography Method for Nasal Breathing Determining Violations. Sensors 2021, 21, 8508.

- Jahandideh, H.; Delarestaghi, M.M.; Jan, D.; Sanaei, A. Assessing the Clinical Value of Performing CT Scan before Rhinoplasty Surgery. Int. J. Otolaryngol. 2020, 2020, 1–7.

- Peters, F.; Mücke, M.; Möhlhenrich, S.C.; Bock, A.; Stromps, J.-P.; Kniha, K.; Hölzle, F.; Modabber, A. Esthetic outcome after nasal reconstruction with paramedian forehead flap and bilobed flap. J. Plast. Reconstr. Aesthetic Surg. 2020, 74, 740–746.

- Baldi, D.; Basso, L.; Nele, G.; Federico, G.; Antonucci, G.W.; Salvatore, M.; Cavaliere, C. Rhinoplasty Pre-Surgery Models by Using Low-Dose Computed Tomography, Magnetic Resonance Imaging, and 3D Printing. Dose-Response 2021, 19, 15593258211060950.

- Suszynski, T.M.; Serra, J.M.; Weissler, J.M.; Amirlak, B. Three-Dimensional Printing in Rhinoplasty. Plast. Reconstr. Surg. 2018, 141, 1383–1385.

- Jung, Y.G.; Park, H.; Seo, J. Patient-Specific 3-Dimensional Printed Models for Planning Nasal Osteotomy to Correct Nasal Deformities Due to Trauma. OTO Open 2020, 4, 2473974X20924342.

- Klosterman, T.; Romo, T., III. Three-dimensional printed facial models in rhinoplasty. Facial Plast. Surg. 2018, 34, 201–204.

- Bekisz, J.M.; Liss, H.A.; Maliha, S.G.; Witek, L.; Coelho, P.G.; Flores, R.L. In-House Manufacture of Sterilizable, Scaled, Patient-Specific 3D-Printed Models for Rhinoplasty. Aesthetic Surg. J. 2018, 39, 254–263.

- Sobral, D.S.; Duarte, D.W.; Dornelles, R.F.; Moraes, C.A. 3D virtual planning for rhinoplasty using a free add-on for open-source software. Aesthetic Surg. J. 2021, 41, NP1024–NP1032.

- Choi, J.W.; Kim, M.J.; Kang, M.K.; Kim, S.C.; Jeong, W.S.; Kim, D.H.; Lee, T.H.; Koh, K.S. Clinical Application of a Patient-Specific, Three-Dimensional Printing Guide Based on Computer Simulation for Rhinoplasty. Plast. Reconstr. Surg. 2020, 145, 365–374.

- Lee, T.-H.; Kim, S. Application of three-dimensional printing technology and Plan-Do-Check-Act (PDCA) cycle in deviated nose correction. J. Cosmet. Med. 2021, 5, 53–56.

- Gordon, A.R.; Schreiber, J.E.; Patel, A.; Tepper, O.M. 3D Printed Surgical Guides Applied in Rhinoplasty to Help Obtain Ideal Nasal Profile. Aesthetic Plast. Surg. 2021, 45, 2852–2859.

- Zammit, D.; Safran, T.; Ponnudurai, N.; Jaberi, M.; Chen, L.; Noel, G.; Gilardino, M.S. Step-specific simulation: The utility of 3D printing for the fabrication of a low-cost, learning needs-based rhinoplasty simulator. Aesthetic Surg. J. 2020, 40, NP340–NP345.

- Guevara, C.; Matouk, M. In-office 3D printed guide for rhinoplasty. Int. J. Oral Maxillofac. Surg. 2021, 50, 1563–1565.

- Erdogan, M.M.; Simsek, T.; Ugur, L.; Kazaz, H.; Seyhan, S.; Gok, U. In-office 3D printed guide for External Nasal Splint on Edema and Ecchymosis After Rhinoplasty. J. Oral Maxillofac. Surg. 2021, 79, 1549-e1.

- Locketz, G.D.; Silberthau, K.; Lozada, K.N.; Becker, D.G. Patient-Specific 3D-Printed Rhinoplasty Operative Guides. Am. J. Cosmet. Surg. 2020, 37, 143–147.

- Yu, N. What does our face mean to us? Pragmat. Cogn. 2001, 9, 1–36.

- Milborrow, S.; Nicolls, F. Locating facial features with an extended active shape model. In Proceedings of the European Conference on Computer Vision, Marseille, France, 12–18 October 2008; Springer: Berlin/Heidelberg, Germany, 2008; pp. 504–513.

- Dong, X.; Yan, Y.; Ouyang, W.; Yang, Y. Style aggregated network for facial landmark detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 379–388.

- Wu, W.; Qian, C.; Yang, S.; Wang, Q.; Cai, Y.; Zhou, Q. Look at boundary: A boundary-aware face alignment algorithm. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2129–2138.

- Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; PMLR; pp. 6105–6114.

- Lekakis, G.; Claes, P.; Hamilton, G.S., III; Hellings, P.W. Three-dimensional surface imaging and the continuous evolution of preoperative and postoperative assessment in rhinoplasty. Facial Plast. Surg. 2016, 32, 088–094.

- Sagonas, C.; Antonakos, E.; Tzimiropoulos, G.; Zafeiriou, S.; Pantic, M. 300 Faces In-The-Wild Challenge: Database and results. Image Vis. Comput. 2016, 47, 3–18.

- Gross, R.; Matthews, I.; Cohn, J.; Kanade, T.; Baker, S. Multi-pie. Image Vis. Comput. 2010, 28, 807–813.

- Kostinger, M.; Wohlhart, P.; Roth, P.M.; Bischof, H. Annotated Facial Landmarks in the Wild: A large-scale, real-world database for facial landmark localization. In Proceedings of the 2011 IEEE International Conference on Computer Vision Workshops (ICCV Workshops), Barcelona, Spain, 7 November 2011.

- Le, V.; Brandt, J.; Lin, Z.; Bourdev, L.; Huang, T.S. Interactive facial feature localization. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 679–692.

- Phillips, P.J.; Flynn, P.J.; Scruggs, T.; Bowyer, K.W.; Chang, J.; Hoffman, K.; Worek, W. Overview of the face recognition grand challenge. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–26 June 2005; Volume 1, pp. 947–954.

- Messer, K.; Matas, J.; Kittler, J.; Luettin, J.; Maitre, G. XM2VTSDB: The extended M2VTS database. In Proceedings of the Second International Conference on Audio and Video-Based Biometric Person Authentication 1999, Hilton Rye Town, NY, USA, 20–22 July 2005; Volume 964, pp. 965–966.

- Jesorsky, O.; Kirchberg, K.J.; Frischholz, R.W. Robust face detection using the hausdorff distance. In Proceedings of the International Conference on Audio-and Video-Based Biometric Person Authentication, Halmstad, Sweden, 6–8 June 2001; Springer: Berlin/Heidelberg, Germany, 2001; pp. 90–95.

- Schmidt, A.; Kasinski, A.; Florek, A. The PUT face database. Image Processing Commun. 2008, 13, 59–64.

- Martinez, A.M.; Benavente, R. The AR face database. Computer Vision Center Tech. Rep. 1998, 24, 1–10.

- Milborrow, S.; Morkel, J.; Nicolls, F. The MUCT landmarked face database. Pattern Recognit. Assoc. S. Afr. 2010, 201, 32–34.

- Sun, Y.; Zhao, Z.; An, Y. Application of digital technology in nasal reconstruction. Chin. J. Plast. Reconstr. Surg. 2021, 3, 204–220.

- Bodini, M. A Review of Facial Landmark Extraction in 2D Images and Videos Using Deep Learning. Big Data Cogn. Comput. 2019, 3, 14.

- Johnston, B.; Chazal, P.D. A review of image-based automatic facial landmark identification techniques. EURASIP J. Image Video Processing 2018, 2018, 1–23.

| Study | No. of Landmarks | FPS * | Year | Approach |
|---|---|---|---|---|
| Duffner et al. [13] | 4 | N/A | 2005 | Convolutional Face Finder and Feature Detector |
| Sun et al. [42] | 5 | 8.33 | 2013 | Deep Convolutional Network Cascade |
| Zhu et al. [43] | 68 | 83.33 | 2018 | Branched Convolutional Neural Networks (BCNN) |
| Zhu et al. [44] | 68 | 40 | 2019 | Branched Convolutional Neural Networks–Jacobian Deep Regression (BCNN–JDR) |
| Valle et al. [45] | 68 | 11.11 | 2020 | Cascaded Heatmaps Regression into 2D Coordinates (CHR2C) |
| Lai et al. [46] | 68 | N/A | 2016 | VGG19 network–LSTM model |
| Hoang et al. [47] | 68 | 16.67 | 2020 | Stacked Hourglass Network (SHN) |
| Xiangyu Zhu et al. [48] | 68 | 86.20 | 2017 | 3D Dense Face Alignment (3DDFA) |
| Rao et al. [49] | 19 | N/A | 2019 | YOLO model–Active Shape Model (ASM) |
| Asi et al. [4] | 4 | N/A | 2014 | Haar cascade classifier (enHaar and exHaar) |
| Chen et al. [51] | 68 | N/A | 2019 | CNN model–Conditional Random Field (CRF) |
| Sivaram et al. [52] | 49–74 | N/A | 2019 | CNN–LSTM–RNN model |
| Chen et al. [53] | 68 | 10 | 2015 | Pose-Induced Auto-encoder Networks (PIAN) |
| Yang et al. [54] | 68 | N/A | 2017 | Stacked Hourglass Network (SHN) |
| Zhu et al. [55] | 68 | N/A | 2019 | Occlusion-adaptive Deep Networks (ODN) |
| Sadiq et al. [56] | 68 | N/A | 2019 | Attention distillation module in the ODN model |
| Feng et al. [57] | 68 | 8–400 (depending on the number of parameters) | 2018 | CNN model with a new Wing loss function |
| Xu et al. [58] | 68 | 45 | 2021 | AnchorFace |
| Fard et al. [59] | 68 | 253–417 | 2022 | Two teacher networks guiding a student network with a KD-Loss |
| Dong et al. [60] | 68 | N/A | 2019 | Teacher Supervises Students (TS3) |
| Belhumeur et al. [15] | 29 | 2.5 | 2013 | Support Vector Machine (SVM) classifier |
| Kazemi et al. [66] | 194 | 1000 | 2014 | Ensemble of regression trees |
| Thakur et al. [67] | 68 | N/A | 2018 | Cascaded regression tree |
| Zhu et al. [14] | 49–194 | 25 | 2015 | Coarse-to-Fine Shape Searching (CFSS) |
| Wang et al. [64] | 68 | 700 | 2017 | Fast Shape Searching Face Alignment model (F-SSFA) |
| Zhang et al. [65] | 68 | 43.48 | 2014 | Coarse-to-Fine Auto-encoder Networks (CFAN) |
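Because the FPS figures above come from different studies and are not necessarily measured on the same hardware or at the same input resolution, it can help to read them as per-frame latency, t = 1000 / FPS milliseconds. For example, 83.33 FPS corresponds to about 12 ms per frame and 8.33 FPS to about 120 ms. The minimal Python sketch below performs that conversion; the helper names `frame_time_ms` and `is_real_time` are hypothetical, and the 30 FPS "real-time" target is an illustrative assumption rather than a threshold used by the cited works.

```python
def frame_time_ms(fps: float) -> float:
    """Convert a throughput in frames per second to per-frame time in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def is_real_time(fps: float, target_fps: float = 30.0) -> bool:
    """True if the frame rate meets an assumed real-time target (30 FPS by default)."""
    return fps >= target_fps


# A few FPS values copied from the table above.
for name, fps in [("Sun et al. [42]", 8.33), ("Zhu et al. [43]", 83.33), ("Kazemi et al. [66]", 1000.0)]:
    print(f"{name}: {frame_time_ms(fps):.1f} ms/frame, real-time={is_real_time(fps)}")
```

Comparing latencies rather than frame rates makes differences at the slow end of the table easier to judge, since 2.5 FPS and 8.33 FPS differ by roughly 280 ms per frame.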

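Feng et al. [57] are listed with a new Wing loss function. As usually stated, the Wing loss applies a logarithmic penalty to small landmark errors and a shifted L1 penalty to large ones: wing(x) = w·ln(1 + |x|/ε) if |x| < w, and |x| − C otherwise, where C = w − w·ln(1 + w/ε) makes the two branches meet at |x| = w. The plain-Python sketch below is written from that formula, not from the authors' code; w = 10 and ε = 2 are illustrative defaults, and summing the per-coordinate terms over flattened (x1, y1, x2, y2, …) coordinates is an assumption about aggregation.

```python
import math
from typing import Sequence


def wing(x: float, w: float = 10.0, eps: float = 2.0) -> float:
    """Wing loss for one coordinate error x.

    Logarithmic for |x| < w (amplifies small and medium errors),
    shifted L1 for |x| >= w; the constant c keeps the function continuous at |x| = w.
    """
    c = w - w * math.log(1.0 + w / eps)
    ax = abs(x)
    if ax < w:
        return w * math.log(1.0 + ax / eps)
    return ax - c


def wing_loss(pred: Sequence[float], target: Sequence[float],
              w: float = 10.0, eps: float = 2.0) -> float:
    """Sum of wing(pred_i - target_i) over flattened landmark coordinates (assumed aggregation)."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    return sum(wing(p - t, w, eps) for p, t in zip(pred, target))


# Two landmarks, each off by a few pixels in x.
print(wing_loss([10.0, 20.0, 30.0, 40.0], [12.0, 20.0, 27.0, 40.0]))
```

Scaling the averaged or summed loss only rescales gradients; the design point of Wing loss is the piecewise shape, which keeps small errors from being drowned out by a few large ones.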
| Study | No. of Landmarks | FPS * | Sample Size | Year | Approach |
|---|---|---|---|---|---|
| Sohail et al. [68] | 18 | N/A | 150 | 2008 | Anthropometric face model |
| Alom et al. [71] | 18 | N/A | 50 | 2012 | Support Vector Machine (SVM)–Sequential Minimal Optimization (SMO) |
| Du et al. [72] | 18 | N/A | 50 | 2013 | Support Vector Machine (SVM)–Sequential Minimal Optimization (SMO) |
| Tuan et al. [73] | 27 | N/A | 182 | 2021 | YOLOv4 model |
| Tuan et al. [74] | 16 | 9 | 182 | 2022 | Faster Region-based Convolutional Neural Network (Faster R-CNN) |
| Guarin et al. [75] | 68 | N/A | 200 | 2020 | Cascade of regression trees |
| Kong et al. [76] | 68 | N/A | 1123 | 2018 | Ensemble method |
| Gurovich et al. [78] | 130 | N/A | 329 | 2019 | Deep Convolutional Neural Network (DCNN) |
| Nachmani et al. [80] | 468 | N/A | 15 | 2022 | Google’s ML Kit algorithm |
| Gerós et al. [81] | 5 | N/A | 4 | 2016 | Facegram |
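Several studies in this table turn detected landmarks into anthropometric measurements. As a minimal, hypothetical illustration (not the procedure of any cited study), the sketch below computes the nasal index, nasal width (alare to alare, al-al) divided by nasal height (nasion to subnasale, n-sn) times 100, from 2D landmark coordinates. The landmark keys `al_l`, `al_r`, `n`, and `sn` and the `mm_per_px` calibration factor are assumptions. The index is a ratio and needs no calibration, whereas absolute distances do; values from a single frontal photograph are 2D projections and may differ from direct caliper measurements.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]  # (x, y) in image pixels


def distance_mm(a: Point, b: Point, mm_per_px: float = 1.0) -> float:
    """Euclidean distance between two landmarks, scaled by an assumed calibration factor."""
    return math.dist(a, b) * mm_per_px


def nasal_index(landmarks: Dict[str, Point]) -> float:
    """Nasal width (al-al) divided by nasal height (n-sn), times 100; independent of scale."""
    width = distance_mm(landmarks["al_l"], landmarks["al_r"])
    height = distance_mm(landmarks["n"], landmarks["sn"])
    if height == 0:
        raise ValueError("nasion and subnasale coincide")
    return 100.0 * width / height


# Hypothetical landmark positions for one frontal image (keys are illustrative).
example = {"al_l": (40.0, 100.0), "al_r": (76.0, 100.0), "n": (58.0, 40.0), "sn": (58.0, 100.0)}
print(nasal_index(example))
```

In practice the landmark dictionary would be filled from whichever detector is used, mapping its point indices to the anthropometric landmarks of interest.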

| Study | Year | Input | Output | Sample Size | Material | Software |
|---|---|---|---|---|---|---|
| Baldi et al. [94] | 2021 | CT scans and magnetic resonance imaging | Bone tissue, soft tissue, and cartilage models | 10 | PLA, TPU | 3-Matic software, CURA (Materialise, Belgium) |
| Suszynski et al. [95] | 2018 | 3D image from Vectra H1 | Three-dimensional gypsum model | N/A | Gypsum | MirrorMe3D (New York, NY, USA) |
| Jung et al. [96] | 2020 | CT image | 3D model for nasal osteotomy | 11 | PLA | DICOM, InVesalius, Meshmixer |
| Klosterman et al. [97] | 2018 | Image from Canfield H1 camera | Two 3D models, before and after surgery | 6 | Gypsum | Vectra Sculptor Software (Canfield Scientific, Fairfield, NJ, USA) |
| Bekisz et al. [98] | 2019 | 3D digital photographic images | Predicted postoperative PLA model | 12 | PLA | Blender (Version 2.78, Amsterdam, The Netherlands) |
| Sobral et al. [99] | 2021 | 2D image from smartphone | 3D surgical guides | 3 | PLA | Blender (Stichting Blender Foundation, Amsterdam, The Netherlands) and RhinOnBlender (Cicero Moraes, Sinop, Brazil) |
| Choi et al. [100] | 2018 | Morpheus 3D scanner | 3D-printed rhinoplasty guide | 50 | N/A | Morpheus (Morpheus Co., Ltd., Seongnam City, Gyeonggido, Republic of Korea) |
| Gordon et al. [102] | 2021 | 3D image from Vectra H1 | 3D models as surgical guides | 15 | N/A | Canfield software (approved by the Institutional Review Board (no. 2020-12420)) |
| Locketz et al. [106] | 2020 | 3D image from Vectra H1 | 3D-printed model to define nasal contours | 5 | Rigid plastic | Mirror (Canfield Scientific Inc.) |
| Zammit et al. [103] | 2020 | CT image | 3D-printed nasal bone models for rhinoplasty education | N/A | PLA | MUHC Medical Imaging Software, 3D Slicer (Intelerad, Orlando, FL, USA) |
| Guevara et al. [104] | 2021 | CT image, 3D image | 3D-printed guide | N/A | N/A | Dolphin imaging software |
| Erdogan et al. [105] | 2021 | CT image | 3D custom external nasal splint | 41 | Thermoplastic | DICOM, MIMICS (Materialise NV, Leuven, Belgium) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Vu, N.H.; Trieu, N.M.; Anh Tuan, H.N.; Khoa, T.D.; Thinh, N.T. Review: Facial Anthropometric, Landmark Extraction, and Nasal Reconstruction Technology. Appl. Sci. 2022, 12, 9548. https://doi.org/10.3390/app12199548
