A Multifaceted Deep Generative Adversarial Networks Model for Mobile Malware Detection

Abstract

1. Introduction

2. Literature Review

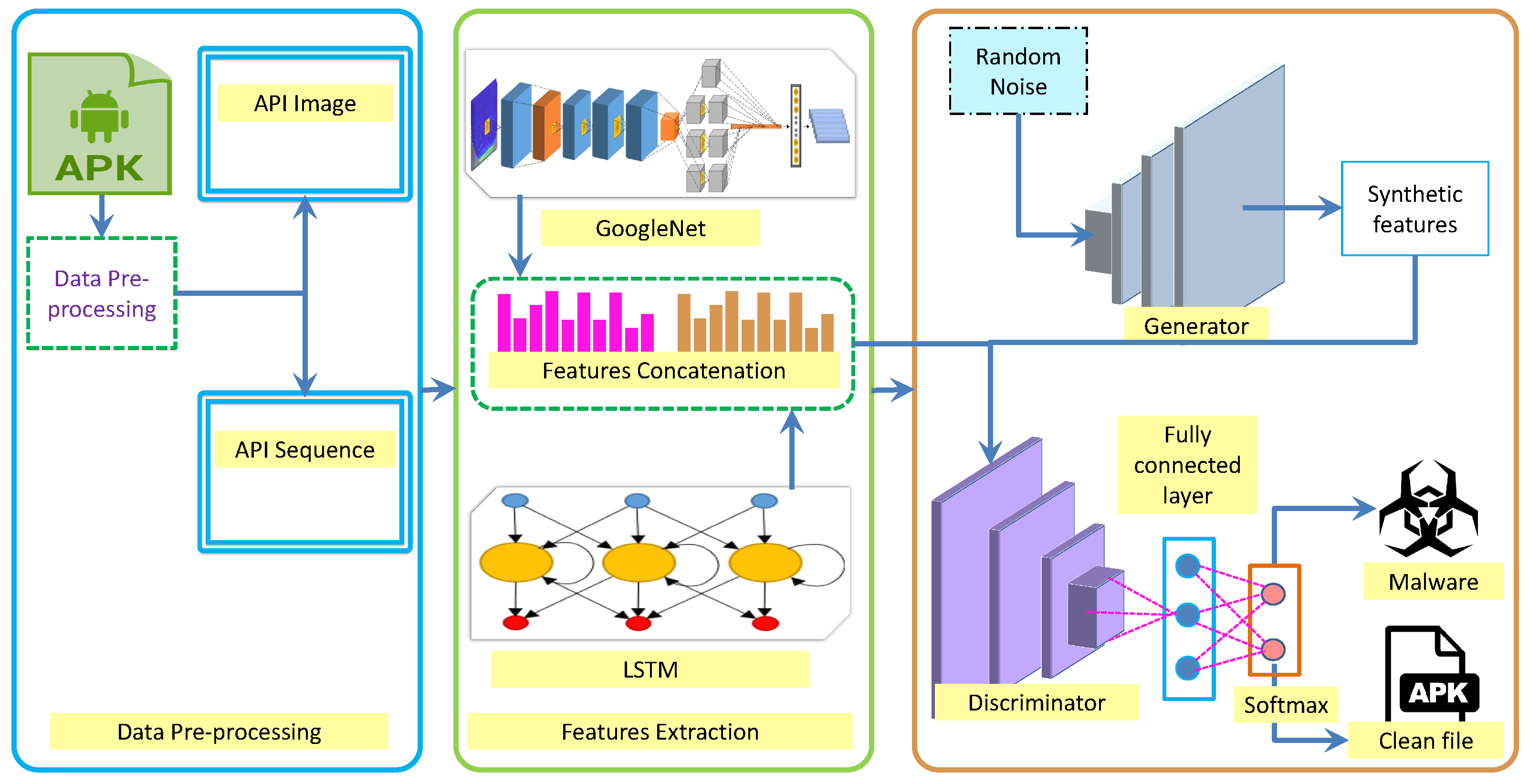

3. Proposed Methodology

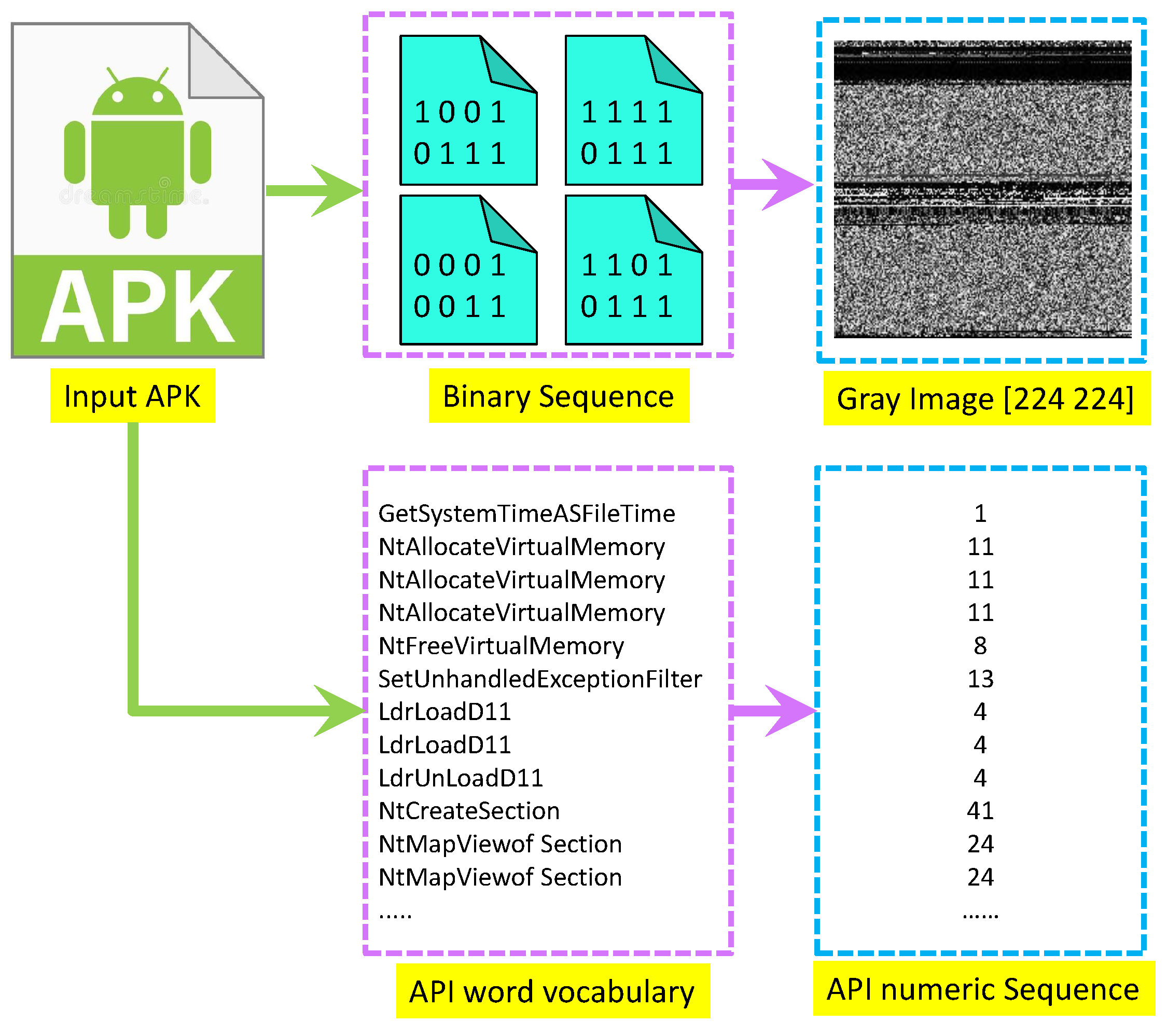

3.1. Data Preprocessing

3.2. Raw Feature Extraction

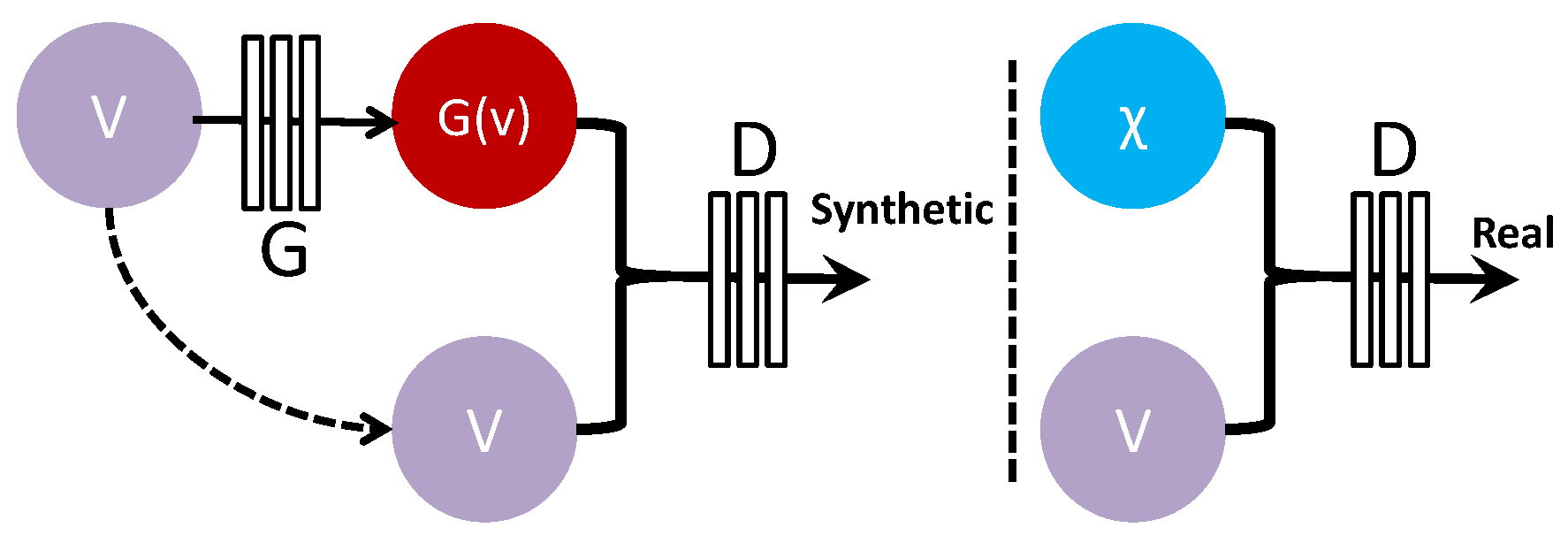

3.3. Generative Adversarial Network

4. Experimental Results

4.1. Datasets

4.2. Evaluation

| Parameter | Expression |
|---|---|
| Mean Precision | |
| Mean Recall | |
| Mean F-score | |
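For reference, the conventional macro-averaged definitions of these three metrics are given below. They are an assumption here, not the authors' stated expressions. $N$ is the number of malware families, and $TP_i$, $FP_i$ and $FN_i$ are the true positives, false positives and false negatives for family $i$. $P_i$ and $R_i$ are that family's precision and recall.

$$
\text{Mean Precision} = \frac{1}{N}\sum_{i=1}^{N} P_i = \frac{1}{N}\sum_{i=1}^{N}\frac{TP_i}{TP_i + FP_i},
\qquad
\text{Mean Recall} = \frac{1}{N}\sum_{i=1}^{N} R_i = \frac{1}{N}\sum_{i=1}^{N}\frac{TP_i}{TP_i + FN_i}
$$

$$
\text{Mean F-score} = \frac{1}{N}\sum_{i=1}^{N}\frac{2\,P_i R_i}{P_i + R_i}
$$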

4.3. Classification Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Aboaoja, F.A.; Zainal, A.; Ghaleb, F.A.; Al-rimy, B.A.S.; Eisa, T.A.E.; Elnour, A.A.H. Malware Detection Issues, Challenges, and Future Directions: A Survey. Appl. Sci. 2022, 12, 8482.
2. Chen, D.; Wawrzynski, P.; Lv, Z. Cyber security in smart cities: A review of deep learning-based applications and case studies. Sustain. Cities Soc. 2021, 66, 102655.
3. Awan, M.J.; Farooq, U.; Babar, H.M.A.; Yasin, A.; Nobanee, H.; Hussain, M.; Hakeem, O.; Zain, A.M. Real-time DDoS attack detection system using big data approach. Sustainability 2021, 13, 10743.
4. Ferooz, F.; Hassan, M.T.; Awan, M.J.; Nobanee, H.; Kamal, M.; Yasin, A.; Zain, A.M. Suicide bomb attack identification and analytics through data mining techniques. Electronics 2021, 10, 2398.
5. Perera, C.; Barhamgi, M.; Bandara, A.K.; Ajmal, M.; Price, B.; Nuseibeh, B. Designing privacy-aware internet of things applications. Inf. Sci. 2020, 512, 238–257.
6. Azad, M.A.; Arshad, J.; Akmal, S.M.A.; Riaz, F.; Abdullah, S.; Imran, M.; Ahmad, F. A first look at privacy analysis of COVID-19 contact-tracing mobile applications. IEEE Internet Things J. 2020, 8, 15796–15806.
7. Tam, K.; Feizollah, A.; Anuar, N.B.; Salleh, R.; Cavallaro, L. The evolution of android malware and android analysis techniques. ACM Comput. Surv. 2017, 49, 1–41.
8. Zheng, M.; Sun, M.; Lui, J.C.S. Droid Analytics: A signature based analytic system to collect, extract, analyze and associate android malware. In Proceedings of the 12th IEEE International Conference on Trust, Security and Privacy in Computing and Communications, Melbourne, Australia, 16–18 July 2013; pp. 163–171.
9. Seo, S.H.; Gupta, A.; Sallam, A.M.; Bertino, E.; Yim, K. Detecting mobile malware threats to homeland security through static analysis. J. Netw. Comput. Appl. 2014, 38, 43–53.
10. Sharma, K.; Gupta, B.B. Mitigation and risk factor analysis of android applications. Comput. Electr. Eng. 2018, 71, 416–430.
11. Potharaju, R.; Newell, A.; Nita-Rotaru, C.; Zhang, X. Plagiarizing smartphone applications: Attack strategies and defense techniques. ACM Int. Symp. Eng. Secure Softw. Syst. 2012, 7159, 106–120.
12. Xiao, X.; Xiao, X.; Jiang, Y.; Liu, X.; Ye, R. Identifying Android malware with system call co-occurrence matrices. Trans. Emerg. Telecommun. Technol. 2018, 27, 675–684.
13. Chen, Z.; Yan, Q.; Han, H.; Wang, S.; Peng, L.; Wang, L.; Yang, B. Machine learning based mobile malware detection using highly imbalanced network traffic. Inform. Sci. 2018, 433, 346–364.
14. Martin, A.; Lara-Cabrera, R.; Camacho, D. Android malware detection through hybrid features fusion and ensemble classifiers: The AndroPyTool framework and the OmniDroid dataset. Inf. Fusion 2019, 52, 128–142.
15. Pai, S.; Troia, F.D.; Visaggio, C.A.; Austin, T.H.; Stamp, M. Clustering for malware classification. J. Comput. Virol. Hacking Tech. 2018, 13, 95–107.
16. Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic Minority over-sampling Technique. J. Artif. Intell. Res. 2002, 16, 321–357.
17. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2020, 63, 139–144.
18. Radford, A.; Metz, L.; Chintala, S. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. arXiv 2015, arXiv:1511.06434.
19. Shaham, T.R.; Dekel, T.; Michaeli, T. SinGAN: Learning a generative model from a single natural image. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 4570–4580.
20. Akhenia, P.; Bhavsar, K.; Panchal, J.; Vakharia, V. Fault severity classification of ball bearing using SinGAN and deep convolutional neural network. Proc. Inst. Mech. Eng. Part C J. Mech. Eng. Sci. 2022, 236, 3864–3877.
21. Hammad, B.T.; Jamil, N.; Ahmed, I.T.; Zain, Z.M.; Basheer, S. Robust Malware Family Classification Using Effective Features and Classifiers. Appl. Sci. 2022, 12, 7877.
22. Aslan, O.A.; Samet, R. A comprehensive review on malware detection approaches. IEEE Access 2020, 8, 6249–6271.
23. Wan, Y.L.; Chang, J.C.; Chen, R.J.; Wang, S.J. Feature-selection-based ransomware detection with machine learning of data analysis. In Proceedings of the 2018 3rd International Conference on Computer and Communication Systems (ICCCS), Nagoya, Japan, 27–30 April 2018; pp. 85–88.
24. Zhang, Y.; Yang, Y.; Wang, X. A Novel Android Malware Detection Approach Based on Convolutional Neural Network. In Proceedings of the 2nd International Conference on Cryptography, Security and Privacy, Guiyang, China, 16–18 March 2018; pp. 144–149.
25. Jung, J.; Choi, J.; Cho, S.J.; Han, S.; Park, M.; Hwang, Y. Android malware detection using convolutional neural networks and data section images. In Proceedings of the RACS ’18, Honolulu, HI, USA, 9–12 October 2018; pp. 149–153.
26. Hu, H.; Yang, W.; Xia, G.S.; Lui, G. A color-texture-structure descriptor for high-resolution satellite image classification. Remote Sens. 2016, 8, 259.
27. Song, T.; Feng, J.; Luo, L.; Gao, C.; Li, H. Robust texture description using local grouped order pattern and non-local binary pattern. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 189–202.
28. Patel, C.I.; Labana, D.; Pandya, S.; Modi, K.; Ghayvat, H.; Awais, M. Histogram of oriented gradient-based fusion of features for human action recognition in action video sequences. Sensors 2020, 20, 7299.
29. Park, Y.; Guldmann, J.M. Measuring continuous landscape patterns with Gray-Level Co-Occurrence Matrix (GLCM) indices: An alternative to patch metrics? Ecol. Indic. 2020, 109, 105802.
30. Agbo-Ajala, O.; Viriri, S. Deep learning approach for facial age classification: A survey of the state-of-the-art. Artif. Intell. Rev. 2021, 54, 179–213.
31. Liu, J.Z.; Padhy, S.; Ren, J.; Lin, Z.; Wen, Y.; Jerfel, G.; Lakshminarayanan, B. A Simple Approach to Improve Single-Model Deep Uncertainty via Distance-Awareness. arXiv 2022, arXiv:2205.00403.
32. Chen, Y.M.; Yang, C.H.; Chen, G.C. Using generative adversarial networks for data augmentation in android malware detection. In Proceedings of the 2021 IEEE Conference on Dependable and Secure Computing (DSC), Aizuwakamatsu, Fukushima, Japan, 30 January–2 February 2021; pp. 1–8.
33. Atitallah, S.B.; Driss, M.; Almomani, I. A Novel Detection and Multi-Classification Approach for IoT-Malware Using Random Forest Voting of Fine-Tuning Convolutional Neural Networks. Sensors 2022, 22, 4302.
34. Akintola, A.G.; Balogun, A.O.; Capretz, L.F.; Mojeed, H.A.; Basri, S.; Salihu, S.A.; Alanamu, Z.O. Empirical Analysis of Forest Penalizing Attribute and Its Enhanced Variations for Android Malware Detection. Appl. Sci. 2022, 12, 4664.
35. Frey, B.J.; Hinton, G.E.; Dayan, P. Does the wake-sleep algorithm produce good density estimators? Adv. Neural Inf. Process. Syst. 1996, 8, 661–667.
36. Frey, B.J. Graphical Models for Machine Learning and Digital Communication; MIT Press: Cambridge, MA, USA, 1998.
37. Hu, W.; Tan, Y. Generating adversarial malware examples for black-box attacks based on GAN. arXiv 2017, arXiv:1702.05983.
38. Gui, J.; Sun, Z.; Wen, Y.; Tao, D.; Ye, J. A review on generative adversarial networks: Algorithms, theory, and applications. IEEE Trans. Knowl. Data Eng. 2021, 1, 1–5.
39. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.
40. McLaughlin, N.; del Rincon, J.M.; Kang, B.; Yerima, S.; Safaei, Y.; Trickel, E.; Zhao, Z.; Doupe, A.; Ahn, G.J. Deep Android Malware Detection. In Proceedings of the ACM on Conference on Data and Application Security and Privacy (CODASPY), Scottsdale, AZ, USA, 2017; pp. 301–308.
41. Liang, S.; Du, X. Permission-combination-based scheme for android mobile malware detection. IEEE Int. Conf. Commun. (ICC) 2014, 1, 2301–2306.
42. Jerome, Q.; Allix, K.; State, R.; Engel, T. Using opcode-sequences to detect malicious android applications. In Proceedings of the 2014 IEEE International Conference on Communications (ICC), Sydney, Australia, 10–14 June 2014; pp. 914–919.
43. Zhang, N.; Xue, J.; Ma, Y.; Zhang, R.; Liang, T.; Tan, Y.A. Hybrid sequence-based Android malware detection using natural language processing. Int. J. Intell. Syst. 2021, 36, 5770–5784.

| Feature Type | Dimension | Malware Samples | Benign Samples |
|---|---|---|---|
| API | 2128 | 11,000 | 11,000 |
| Permission-API | 7629 | 11,000 | 11,000 |
| FlowDroid-API | 3089 | 11,000 | 11,000 |
| Dynamic-API | 5932 | 11,000 | 11,000 |

| Family | Train Precision | Train Recall | Train F-Score | Test Precision | Test Recall | Test F-Score |
|---|---|---|---|---|---|---|
| Adrd | 1 | 0.892 | 0.951 | 1 | 0.889 | 0.941 |
| BaseBridge | 0.941 | 0.964 | 0.955 | 0.939 | 0.953 | 0.945 |
| DroidDream | 0.872 | 0.912 | 0.882 | 0.857 | 0.909 | 0.882 |
| DroidKungFu | 0.979 | 0.952 | 0.962 | 0.962 | 0.948 | 0.954 |
| FakeDoc | 0.981 | 1 | 0.991 | 0.961 | 1 | 0.980 |
| FakeInstaller | 0.999 | 0.982 | 0.986 | 0.989 | 0.973 | 0.980 |
| Geinimi | 1 | 0.871 | 0.933 | 1 | 0.866 | 0.928 |
| GinMaster | 0.921 | 0.911 | 0.914 | 0.909 | 0.909 | 0.909 |
| Iconosys | 0.892 | 1 | 0.944 | 0.885 | 1 | 0.939 |
| Kmin | 0.989 | 0.973 | 0.982 | 0.983 | 0.968 | 0.975 |
| Opfake | 0.988 | 0.972 | 0.981 | 0.976 | 0.969 | 0.972 |
| Plankton | 0.970 | 0.979 | 0.970 | 0.952 | 0.975 | 0.963 |
| Average | 0.961 | 0.951 | 0.954 | 0.951 | 0.946 | 0.947 |
| Accuracy | 0.973 | | | 0.962 | | |
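The training-set Average row can be reproduced from the per-family values above, assuming it is the unweighted (macro) mean of the twelve families. The following is a minimal Python sketch under that assumption. The names `TRAIN_RESULTS` and `macro_average` are illustrative and do not come from the paper.

```python
from statistics import mean

# Per-family training-set (precision, recall, F-score) values, copied from the table.
TRAIN_RESULTS = {
    "Adrd": (1.000, 0.892, 0.951),
    "BaseBridge": (0.941, 0.964, 0.955),
    "DroidDream": (0.872, 0.912, 0.882),
    "DroidKungFu": (0.979, 0.952, 0.962),
    "FakeDoc": (0.981, 1.000, 0.991),
    "FakeInstaller": (0.999, 0.982, 0.986),
    "Geinimi": (1.000, 0.871, 0.933),
    "GinMaster": (0.921, 0.911, 0.914),
    "Iconosys": (0.892, 1.000, 0.944),
    "Kmin": (0.989, 0.973, 0.982),
    "Opfake": (0.988, 0.972, 0.981),
    "Plankton": (0.970, 0.979, 0.970),
}


def macro_average(results):
    """Return the unweighted mean of each metric column, rounded to 3 decimals."""
    # zip(*...) regroups the per-family tuples into one tuple per metric column.
    columns = zip(*results.values())
    return tuple(round(mean(column), 3) for column in columns)


precision, recall, f_score = macro_average(TRAIN_RESULTS)
print(precision, recall, f_score)  # 0.961 0.951 0.954
```

A macro average gives every family equal weight regardless of how many samples it contains. A weighted or micro average instead scales each family's contribution by its sample count, so it can differ from the macro figure when family sizes are unequal.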

| Ref. | Methodology | Train Precision | Train Recall | Train F-Measure | Train Accuracy | Test Precision | Test Recall | Test F-Measure | Test Accuracy |
|---|---|---|---|---|---|---|---|---|---|
| [40] | DNN/RNN | 90.4% | 84.9% | 87.5% | 90.7% | 90.0% | 84.0% | 87.1% | 90.0% |
| [40] | CNN-raw opcodes | 88.3% | 85.8% | 86.5% | 87.9% | 87.2% | 85.5% | 86.2% | 87.4% |
| [41] | DroidDetective | 89.8% | 96.4% | 92.5% | 96.1% | 89.5% | 96.0% | 92.1% | 96.0% |
| [42] | API calls, Yerima | 94.6% | 91.9% | 92.7% | 91.9% | 94.3% | 91.7% | 92.3% | 91.8% |
| [43] | CNN–BiLSTM-NB | 90.5% | 87.4% | 86.8% | 88.6% | 90.0% | 87.1% | 86.3% | 88.1% |
| Ours | MDGAN | 95.9% | 94.9% | 94.8% | 96.5% | 95.1% | 94.6% | 94.7% | 96.2% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mazaed Alotaibi, F.; Fawad. A Multifaceted Deep Generative Adversarial Networks Model for Mobile Malware Detection. Appl. Sci. 2022, 12, 9403. https://doi.org/10.3390/app12199403
