1. Introduction

MPC was proposed by Yao in 1982 with the millionaires' problem [1]. MPC ensures that participants receive the output of the public function in accordance with the protocol but cannot learn any intermediate information that would reveal the inputs of other participants or any other valuable private information. Data are the foundation of machine learning, and MPC offers a secure computing environment for using data, making it possible to combine the data assets of various parties to train more precise models, which benefits the practical application of machine learning. The focus of MPC research has shifted to supporting secure computation frameworks for neural networks. By designing cryptographic protocols for the linear functions as well as the nonlinear activation functions of each layer of a neural network, MPC can protect the data assets of the parties involved in the training process. At present, frameworks are able to provide secure training and secure inference for deep neural networks (DNN) and convolutional neural networks (CNN). Examples include secure inference based on CryptFlow [2] for detecting lung diseases from chest X-ray images and for tumor diagnosis from CT images [3]; SecureNN [4], a 3-party MPC framework that supports secure training and secure inference in the semi-honest setting; ABY3 [5], which supports MPC protocols with conversion between arithmetic sharing, Yao's sharing, and Boolean sharing; MiniONN [6], a privacy-preserving inference framework based on oblivious transfer (OT); Crypten [7], an MPC software framework built on PyTorch that supports secure inference on GPUs; and EPIC [8], an image classification framework based on MPC using SVM. Although MPC frameworks already support secure inference, the practical application of machine learning as a service in industrial cases still needs to be supplemented. The instantiation in this work improves the automation and detection level of diamond synthesis while protecting the data security of both researchers and practitioners.

Synthetic diamonds are synthesized by chemical vapor deposition (CVD) [

9]. CVD is a process where carbon atoms are deposited from carbon gases under the action of high temperature plasma by a chemical reaction occurring on or in the vicinity of a heated substrate surface. In the process of producing synthetic diamonds, the surface quality inspection of substrate material is relying on experience of experts. The surface quality of the single crystal substrate will be the most important factor, which influences the quality of the epitaxial growth. Therefore, the quality of the synthetic diamonds is determined by the surface quality of the single crystal substrate. The images of the substrate are used to judge the growth quality of diamond. Identification of diamond substrate requires a high degree of knowledge reserve and experience, and the detection process is complex, which will lead to low efficiency of detection. However, the research on diamond substrate cultivation and defect detection technology is relatively exclusive, such as due to the protection of diamond substrate images and labels, commercial partners are not expected to get any data of diamond substrate, which seriously hinders the application of the research progress in the field of computer vision to the diamond defect detection task. At the same time, companies with advanced detection technology do not want their models to be leaked. Therefore, it is of great application value to use the MPC to realize the diamond substrate defect detection under the premise of protecting the data privacy of each party, as

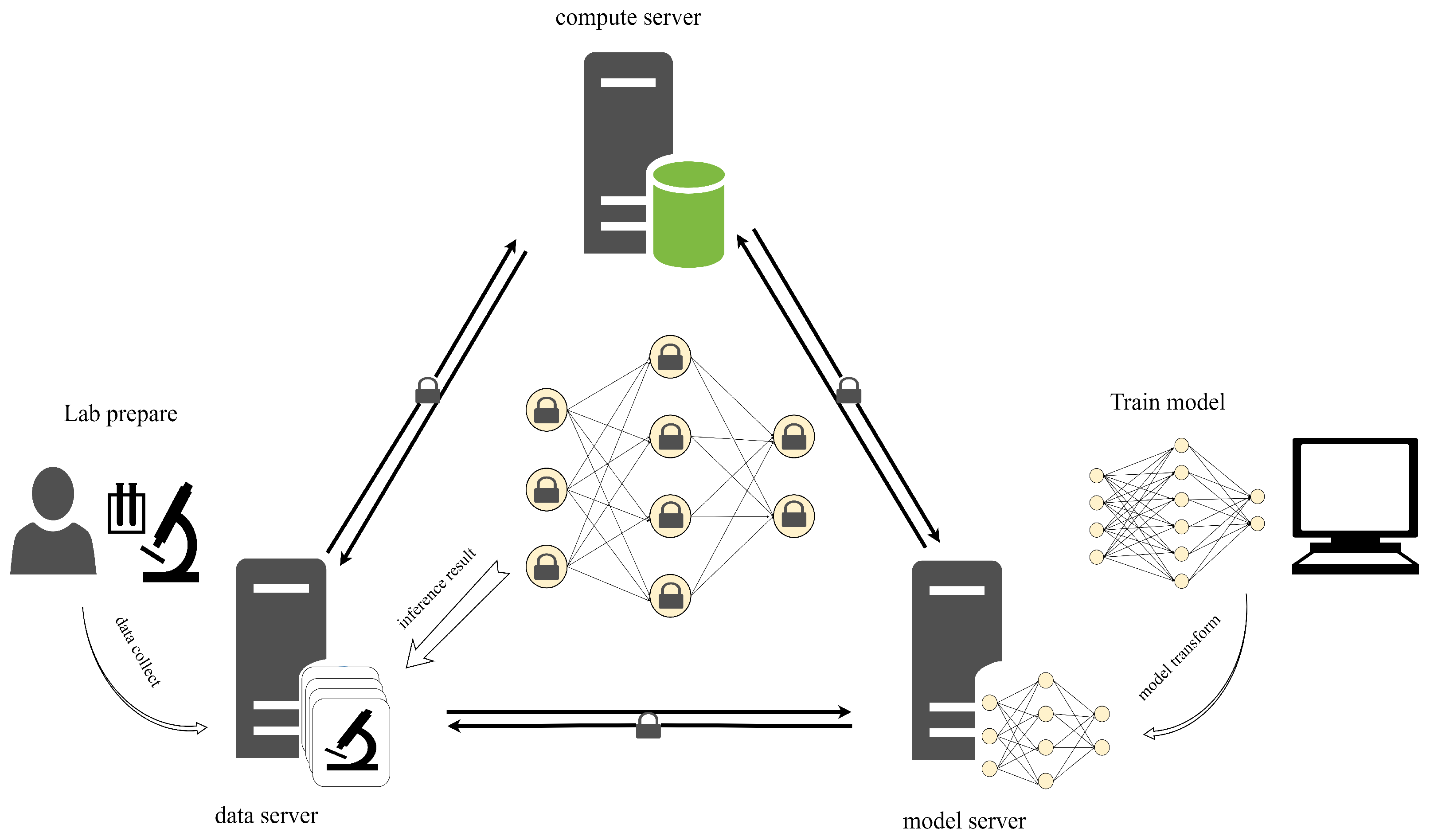

Figure 1.

2. Overview

2.1. Service Scenarios

In diamond synthesis, on the one hand, modeling diamond substrate defects requires engineers with expert experience in cultivation and inspection. In particular, inspection engineers and cultivation engineers model several typical diamond substrate defect types by observing potential characteristics that influence diamond substrate regrowth: they locate, photograph, and record these characteristics, then cultivate, grow, and judge the substrate again to summarize the shape and grade of the defects that are present. This process is accomplished through continuous experimental accumulation. On the other hand, to achieve high-quality synthesis, enterprises need diamond substrate defect detection, but they cannot expose their diamond substrate images because they must protect the commercial value of these images.

In this instance, the provider of the diamond substrate images is the data holder, and the engineer who summarizes defects and trains models is the model holder. For inference efficiency, a trusted third party acts as a compute server. To perform secure inference in this three-server setting, this paper employs FALCON to convert the private data of each party into secret shares and uses them as the private input of the PriRepVGG framework.

2.2. Contributions

Notably, our work mainly focuses on the construction of PriRepVGG and improvement of MPC protocols in FALCON. Our contributions are summarized as follows:

- (1) This paper proposes PriRepVGG, a 3-party secure inference framework for image-based defect detection. This paper verifies the feasibility of PriRepVGG in the industrial diamond synthesis case, which also contributes to the integration of MPC into industrial defect detection technology.

- (2) This paper optimizes the division protocol and its subprotocols based on the Goldschmidt algorithm. The protocol in this work is faster than the original division in FALCON, and the effective range of the division operation is increased by at least four orders of magnitude compared to the original work.

- (3) This paper makes FALCON compatible with more neural networks by adding the Avgpool protocol and using it to implement the AdaptiveAvgpoolLayer for constructing PriRepVGG. This helps FALCON to be integrated with more sophisticated neural networks.

2.3. Organization of the Paper

Subsequently, this paper discusses the related work. After elaborating the necessary preliminaries of the protocols in Section 4, Section 5 describes the protocols in detail. Finally, this paper provides experiments and analysis in Section 6.

3. Related Work

The variety of defects makes it difficult to use unified mathematical methods or traditional machine learning methods to distinguish and locate the features of defects while avoiding model overfitting. Typical CNNs such as AlexNet [10], VGG [11], and ResNet [12] cannot meet industrial needs for defect detection. Lightweight CNNs such as MobileNets [13,14], ShuffleNet [15], and RepVGG [16] have gradually emerged. In particular, RepVGG uses parameter refactoring to convert the parameters of the training model into the parameters of an inference network with a plain structure. The inference architecture absorbs the batch normalization (BN) layers of the training network, which prevent overfitting of the model. Because the BN layer contains complex operators such as division and square root, simplifying the BN layer reduces the computational complexity of secure inference, while the inference quality stays at the level of the training model. In addition, where privacy protection is needed, there has been some work on data security. For example, Liu [17] adopts Paillier encryption to realize a classification and recognition method for encrypted electroencephalography data based on a neural network, and software defect prediction has been studied using differential privacy and deep learning [18,19]. Based on the service scenario discussed in Section 2.1, this paper uses the MPC framework FALCON [20] to protect the privacy of data holders and model holders at the same time. This MPC framework combines the features of SecureNN and ABY3 and uses replicated secret sharing (RSS). It is superior to previous work [4,5,21] in terms of time and communication cost for secure inference, and it has a distinct advantage over secure two-party computing frameworks [6,22,23].
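The folding of a BN layer into the preceding convolution can be illustrated with a small plaintext example. This is a minimal sketch of the standard BN-folding identity, not the authors' conversion code; the function names and the single-output-channel setup are assumptions made for illustration.

```python
import math

def fold_bn(weights, bias, gamma, beta, mean, var, eps=1e-5):
    """Fold BN statistics (one output channel) into conv weights and bias."""
    scale = gamma / math.sqrt(var + eps)
    folded_w = [w * scale for w in weights]
    folded_b = beta + (bias - mean) * scale
    return folded_w, folded_b

def conv_then_bn(x, weights, bias, gamma, beta, mean, var, eps=1e-5):
    """Reference path: dot product (one conv tap window) followed by BN."""
    y = sum(w * xi for w, xi in zip(weights, x)) + bias
    return gamma * (y - mean) / math.sqrt(var + eps) + beta

def folded_conv(x, folded_w, folded_b):
    """Inference path: a single dot product, no division or square root."""
    return sum(w * xi for w, xi in zip(folded_w, x)) + folded_b
```

After folding, the division and square root are evaluated once on the model holder's side in plaintext, so the secure inference path only needs multiplications and additions for this layer.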

Division is a fundamental but crucial arithmetic operator in MPC; it acts as the core component of several activation functions and pooling layers. A number of MPC frameworks have proposed their own implementations of division: SecureML [24] uses a circuit based on the cryptographic EMP toolkit [25]; SecureNN [4] constructs a subtractive division based on the underlying secure operators; and ABY2 [26] uses a division implemented in a garbled circuit. In addition, division can be implemented in MPC using functional iteration: Crypten [7] uses the Newton–Raphson iteration method, while SIRNN [27] and FALCON are based on the Goldschmidt [28] method. The initial approximation is vital in functional iteration. To determine an initial approximation, SIRNN and FALCON construct the MSNZB and the POW algorithms to deflate the initial values (denominators). Thus, implementations of the division algorithm can be divided into the following two approaches:

Repeated subtraction, also known as subtractive division. The divisor is repeatedly subtracted from the dividend until the remainder is zero or smaller than the divisor; this is computationally intensive when high accuracy is required.

Functional iteration, such as the Newton–Raphson and Goldschmidt methods, is more suitable for secure computation of division. The limitation of these methods is that the requirements on the initial value are relatively strict, but the advantage is that the second-order iteration converges faster than repeated subtraction.

However, the number of iteration rounds of division depends on how close the initial value is to the true reciprocal; that is, there is a trade-off between the complexity of implementing, in MPC, an approximation function for the reciprocal over a large range and the number of iteration rounds of division. Therefore, if the initial approximation can be determined precisely, the number of iterations is reduced, which in turn improves the efficiency of the division algorithm.
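The dependence of the round count on the initial value can be seen in a small plaintext experiment. This is an illustrative sketch using the textbook Newton–Raphson reciprocal iteration on floating-point numbers; the function name, tolerance, and starting values are assumptions, and no secret sharing is involved.

```python
def nr_reciprocal_rounds(b, y0, tol=1e-6, max_rounds=100):
    """Count Newton-Raphson rounds until y approximates 1/b.

    Iteration: y <- y * (2 - b * y). The error e = 1 - b*y is squared in
    every round, so convergence requires |1 - b*y0| < 1.
    """
    y = y0
    for rounds in range(max_rounds + 1):
        if abs(1.0 - b * y) < tol:
            return rounds
        y = y * (2.0 - b * y)
    raise ValueError("no convergence; the initial value must satisfy 0 < y0 < 2/b")

# A close initial guess for 1/3 needs far fewer rounds than a poor one.
good = nr_reciprocal_rounds(3.0, 0.3)
poor = nr_reciprocal_rounds(3.0, 0.01)
```

Since every round costs secure multiplications in MPC, a few extra operations spent on a better initial approximation can pay off in fewer rounds.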

4. Preliminaries

The notation $P_0$, $P_1$, $P_2$ is used to represent the parties involved in the protocol, which denote the compute server, model server, and data server, respectively. In addition, $P_{i+1}$ is the next party of $P_i$ and $P_{i-1}$ is the previous party of $P_i$. To ensure protocol inheritance, the algorithm uses the notation described in FALCON. The prefix $\Pi$ denotes a function computed with the participation of the parties. This paper uses $[\![x]\!]^m$ to denote the replicated secret sharing (RSS) of $x$ modulo $m$ for a general modulus $m$, such as the arithmetic share $[\![x]\!]^L$ or the Boolean share $[\![x]\!]^2$, and uses $x[i]$ to denote the $i$th component of a vector $x$. All of the protocols are based on three specific rings, $\mathbb{Z}_L$, $\mathbb{Z}_p$, and $\mathbb{Z}_2$, where $L = 2^{\ell}$, ℓ = 64, p = 67. This work uses a fixed-point parameter $f = 13$, which scales a real value $x$ into a ring element before it is shared. The fixed-point parameter is also used in multiplication for truncating the result.
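The role of the fixed-point parameter can be sketched in plaintext as follows. This is a hedged illustration assuming the usual encoding of a real value as round(x · 2^f) modulo 2^ℓ with a two's-complement interpretation of negatives; the helper names are hypothetical and the sketch ignores secret sharing.

```python
ELL = 64            # ring bit width, as stated in the text
F = 13              # fixed-point fractional bits, as stated in the text
L = 1 << ELL        # ring modulus 2^ell

def to_signed(v: int) -> int:
    """Interpret a ring element as a signed integer (upper half is negative)."""
    return v - L if v >= L // 2 else v

def encode(x: float) -> int:
    """Scale a real value by 2^F and map it into the ring."""
    return int(round(x * (1 << F))) % L

def decode(v: int) -> float:
    """Map a ring element back to a real value."""
    return to_signed(v) / (1 << F)

def fixed_mul(u: int, v: int) -> int:
    """Multiply two encodings, then truncate F bits to restore the scale."""
    return ((to_signed(u) * to_signed(v)) >> F) % L
```

Without the truncation in `fixed_mul`, the product would carry a scale of 2^(2f), which is why the text ties the fixed-point parameter to multiplication.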

4.1. Threat Model and Security

The threat model in this paper is similar to that of previous work, such as GAZELLE [23] and SecureML [24]. Considering the application scenario, this work designs the protocols for semi-honest security with an honest majority. A semi-honest adversary is described as honest but curious, because the adversary follows the protocol instructions honestly but attempts to learn the private inputs of other parties. This security model guarantees security when a semi-honest adversary corrupts a single party. The protocols in this work invoke a number of sub-protocols whose security has been proved in the prior work FALCON in the real-world/ideal-world simulation paradigm, so the security of the protocols follows from standard composition theorems [29,30].

4.2. Secret Sharing Scheme

Secret sharing is a crucial security primitive of MPC, and it is the foundation of various two-party and three-party protocols. Two-party frameworks such as Cryptflow2 and SecureML use a 2-out-of-2 additive secret sharing scheme. Three-party frameworks such as SecureNN also use 2-out-of-2 additive secret sharing between two parties, with a third assisting party that only provides relevant randomness for the two-party protocols.

A t-out-of-n threshold secret sharing scheme (TSSS) [31] consists of two algorithms: Share and Reconstruct. Share is a randomized algorithm that takes a message $x$ as input and outputs a sequence $a = (a_1, \ldots, a_n)$ of shares. Reconstruct is a deterministic algorithm that takes a collection of $t$ or more shares of $a$ as input and outputs the message $x$. In short, in a TSSS, $n$ parties hold secret shares, and at least $t$ of them are needed to restore the secret.

FALCON combines the SecureNN and ABY3 [5] frameworks and uses a 2-out-of-3 secret sharing scheme, which can tolerate the corruption of at most one party. The RSS scheme helps three-party protocols to be more efficient and allows more offline computing. $[\![x]\!]^m$ denotes an RSS of $x$ with modulus $m$. Concretely, $[\![x]\!]^m = (x_1, x_2, x_3)$ means that $(x_1, x_2)$ is held by $P_0$, $(x_2, x_3)$ is held by $P_1$, and $(x_3, x_1)$ is held by $P_2$. Assuming that there are two private inputs, the process of converting the 3-out-of-3 secret sharing to the 2-out-of-3 RSS is shown in Figure 2.
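A 2-out-of-3 replicated sharing can be sketched in a few lines. This is an illustrative, single-process simulation, not the FALCON implementation; it assumes the FALCON-style layout in which each party holds two of the three additive shares, and the function names are hypothetical.

```python
import secrets

L = 1 << 64  # assumption: arithmetic shares live in the ring of integers mod 2^64

def share_rss(x: int, m: int = L):
    """Split x into three additive shares; party i receives the pair (x_i, x_{i+1})."""
    x1 = secrets.randbelow(m)
    x2 = secrets.randbelow(m)
    x3 = (x - x1 - x2) % m
    return [(x1, x2), (x2, x3), (x3, x1)]

def reconstruct_rss(pair_i, pair_next, m: int = L):
    """Party i and its next party together hold all three additive shares."""
    a, b = pair_i        # (x_i, x_{i+1})
    _, c = pair_next     # (x_{i+1}, x_{i+2})
    return (a + b + c) % m

def add_rss(sx, sy, m: int = L):
    """Addition of two shared values is local: add the pairs componentwise."""
    return [((a + c) % m, (b + d) % m) for (a, b), (c, d) in zip(sx, sy)]
```

A single pair is missing one uniformly random share and therefore reveals nothing about x, which matches the tolerance of one corrupted party.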

4.3. Basic Operations

MPC protocols based on secret sharing do not need to reconstruct the shares during the computation. To construct new protocols on the RSS scheme, this paper calls sub-protocols of the original work for some of the steps. This section describes the basic operations that the algorithms need.

Selectshare: a critical sub-routine for most protocols. It takes two shares as input, of a value $a$ and of a select bit $b$. The output $c$ is either $a$ or 0 depending on $b$: $c = a$ if $b = 0$, and $c = 0$ if $b = 1$, which can be abstracted as $c = (1 - b) \cdot a$.

ReLU: a necessary component of protocol design and network construction, and a significant activation function used in neural networks. It takes one share of $a$ as input, and the output is $a$ if $a \geq 0$ and 0 otherwise.

DReLU: the derivative of ReLU. The paper uses the ring $\mathbb{Z}_L$ to perform fixed-point arithmetic, and the sign of a number is defined by its first bit: the positive numbers are the first $2^{\ell-1}$ numbers, so the most significant bit (MSB) is 0 for positive numbers and 1 for negative numbers. Hence $\mathrm{DReLU}(a) = 1 - \mathrm{MSB}(a)$, that is, $\mathrm{DReLU}(a) = 1$ if $a \geq 0$ and 0 if $a < 0$, and the relation between DReLU and ReLU can be written as $\mathrm{ReLU}(a) = a \cdot \mathrm{DReLU}(a)$.

Pow: used for estimating the scaling factor to calculate the initial value of the division and rsqrt algorithms; it can be combined with the truncation function to determine a suitable initial value of the denominator. It takes one share of $b$ as input and outputs $\alpha$, the bounding power of $b$: the parties obtain $\alpha$ in the clear, where $2^{\alpha} \leq b < 2^{\alpha+1}$.
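The functionalities of these basic operations can be checked against a plaintext reference. This is a sketch of what each operation computes on ring elements, under the assumption of a 64-bit ring with two's-complement sign encoding; it says nothing about how the secure protocols compute the same values on shares, and the function names are hypothetical.

```python
ELL = 64
L = 1 << ELL

def msb(x: int) -> int:
    """Most significant bit of a ring element: 1 for negatives, 0 otherwise."""
    return (x % L) >> (ELL - 1)

def drelu(x: int) -> int:
    """1 if x encodes a non-negative value, else 0."""
    return 1 - msb(x)

def relu(x: int) -> int:
    """x if non-negative, else 0, via the product with DReLU."""
    return (drelu(x) * x) % L

def select_share(a: int, b: int) -> int:
    """Return a when the select bit b is 0, and 0 when b is 1."""
    return ((1 - b) * a) % L

def pow_bound(b: int) -> int:
    """Return alpha with 2^alpha <= b < 2^(alpha + 1), for b > 0."""
    if b <= 0:
        raise ValueError("b must be positive")
    return b.bit_length() - 1
```

Here `L - 5` is the ring encoding of −5, so `relu(L - 5)` evaluates to 0 while `relu(5)` stays 5.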

5. Algorithm Construct

In this section, the paper constructs three algorithms used in the work. The notation, security, basic operations, and secret sharing scheme have been elaborated in Section 4.

5.1. Condition Select

Previous work has implemented similar protocols for conditional selection, such as Multiplexer and 1-of-2 select. A conditional selection protocol functions as a secure if-else statement, so the algorithm implements this protocol in FALCON, as shown in Algorithm 1. The protocol takes shares of two inputs, a and b, together with a share of a choosing bit; for one value of the choosing bit it outputs a, and for the other value it outputs b.

| Algorithm 1 Condition Select |

Require: The parties hold secret shares of the inputs and of the condition.

Ensure: All parties get shares of the result c, which is chosen from the inputs by the condition.

- 1: Set
- 2: Compute on inputs .
- 3: Compute on inputs .
- 4: Compute
- 5: return c;
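The idea of a secure if-else can be expressed as a single arithmetic formula. The sketch below is a plaintext illustration only: it assumes a choosing bit s where s = 1 selects a and s = 0 selects b, which is a convention chosen here and not necessarily the one used in Algorithm 1, and the function name is hypothetical.

```python
L = 1 << 64  # assumption: values live in the ring of integers mod 2^64

def cond_select(a: int, b: int, s: int) -> int:
    """Branch-free selection: returns a if s == 1, and b if s == 0.

    c = b + s * (a - b) needs one multiplication by the bit, which in a
    secret-shared setting would be one secure multiplication.
    """
    return (b + s * (a - b)) % L

def cond_select_vec(xs, ys, bits):
    """Elementwise selection over vectors, as used in layer-wise protocols."""
    return [cond_select(x, y, s) for x, y, s in zip(xs, ys, bits)]
```

Because the formula contains no data-dependent branch, the sequence of operations is identical for both values of the bit.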

5.2. Division

Our work uses Goldschmidt's [28,32] algorithm to present a new secret division protocol. The protocol assumes that the numerator and the denominator are both secret shares. Goldschmidt's method offers quadratic convergence, and the multiplications in each iteration can be computed concurrently. In the construction of division, the algorithm first transforms the denominator b so that its reciprocal can be expanded as a Taylor series. The algorithm then determines the scaling applied to the denominator to ensure the right iteration conditions (Equation (4)), which is more flexible than the initial approximation in previous work. Equation (4) can be naturally converted to Equation (5), which the algorithm uses to obtain the approximation by multiplying the numerator and the denominator by the same factor at the same time. After multiplying, the denominator becomes 1 and the algorithm obtains the quotient approximation. The iteration functions are shown in Algorithm 2; in the ith iteration, the factor m is given by Equations (6) and (7).

The main hurdle for secure division is to determine an appropriate initial approximation. To make the division algorithm more adaptive to different computing scenarios, the paper uses global variables to control the loop counter and the initial factor, which distinguish different denominator ranges. All the settings depend on public information and take effect when the program is precompiled. The paper divides the denominator range into three intervals and sets the parameters for each of them. In the lower intervals, the algorithm sets precise parameters to optimize computational speed and communication while maintaining accuracy. In the largest interval, the design principle aims for a larger effective divisor range and computational accuracy. Considering the fixed-point precision, the algorithm tries to reduce the error [24] that arises when the calculation approaches this bound; a range of calculations that is too large is of no practical significance. Parameters such as ℓ = 64 and the fixed-point precision determine the maximum computation boundary. Hence, the selection of the initial value of the iteration needs to consider the size of the denominator that the scenario depends on. Our algorithm guarantees a reasonable deflation of each element of the vector of initial values, and with vectorized processing the final output vector and the vector of scaling factors keep the same size as the input vector, as shown in Algorithm 2.

| Algorithm 2 Division |

Require: The parties hold secret shares of the numerator and the denominator; the loop counter and the initial factor are global settings used for initialization.

Ensure: The parties get shares of the result.

- 1: Set
- 2: Initialize , and .
- 3: for do
- 4: Compute on inputs .
- 5: Compute on inputs .
- 6: Compute on inputs .
- 7: end for
- 8: Compute and
- 9: for do
- 10: Compute , and
- 11: end for
- 12: return;
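The general shape of a Goldschmidt division with a power-of-two initial scaling can be sketched on plaintext fixed-point integers. This is a textbook variant written for illustration, not a transcription of Algorithm 2: the scaling by the bit length of the denominator, the round count, and all names are assumptions, and both operands are taken to be non-negative encodings with f = 13 fractional bits.

```python
F = 13              # fixed-point fractional bits, as in the text
ONE = 1 << F        # encoding of 1.0

def fx_mul(u: int, v: int) -> int:
    """Fixed-point multiplication with truncation of F bits."""
    return (u * v) >> F

def goldschmidt_div(a: int, b: int, rounds: int = 5) -> int:
    """Approximate a / b for fixed-point encodings a >= 0 and b > 0."""
    if b <= 0:
        raise ValueError("denominator must be positive")
    n = b.bit_length()          # 2^(n-1) <= b < 2^n, a Pow-style bound
    # Scale numerator and denominator by the same power of two so that
    # the scaled denominator d lies in [0.5, 1).
    num = (a << F) >> n
    d = (b << F) >> n
    x = ONE - d                 # d = 1 - x with 0 < x <= 0.5
    for _ in range(rounds):
        # 1/(1 - x) = (1 + x)(1 + x^2)(1 + x^4)...; x is squared each round.
        num = fx_mul(num, ONE + x)
        x = fx_mul(x, x)
    return num

def to_fx(v: float) -> int:
    return int(round(v * ONE))

def from_fx(v: int) -> float:
    return v / ONE
```

For example, `from_fx(goldschmidt_div(to_fx(1.0), to_fx(3.0)))` lands within about one thousandth of 1/3. The two multiplications inside the loop are independent of each other, which is the concurrency property mentioned in the text.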

Division is a necessary component of neural networks, for example in the BN layer and the Avgpool layer. These layers determine the divisor from an explicitly defined model structure, so truncation by a public value can be used to realize division efficiently if the divisor is a power of 2. When division is applied to the general case, or to L1 normalization and Min-Max normalization in a neural network layer, as well as to some nonlinear activation functions such as sigmoid and tanh and some gradient descent methods such as SGD, a secure division algorithm should be used because the divisor is secret.

The division protocol works with the setting ℓ = 64, and the paper demonstrates the correctness of the division in secure inference using PriRepVGG in Section 6.5.

5.3. Avgpool

The paper implements Avgpool based on the division protocol, as shown in Algorithm 3, and then uses it to construct the AdaptiveAvgpool layer, which automatically calculates the kernel size and the stride of the average pool from the output size and the input size. The AdaptiveAvgpool layer further reduces the number of parameters in the network, so it is used before the final fully connected layer in RepVGG.

| Algorithm 3 Avgpool |

Require: The parties hold shares of the input, where n is the sharing form of the filter size.

Ensure: The parties obtain shares of the result.

- 1: Initialize
- 2: for do
- 3: Compute .
- 4: end for
- 5: Compute on inputs
- 6: return avg;
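How an adaptive average pool can derive its kernel size and stride from the input and output sizes is sketched below in plaintext. The formulas used here (stride = input size // output size, kernel = input size − (output size − 1) · stride) are a common convention and an assumption about the layer, not taken from the paper; the sketch also assumes square inputs and uses hypothetical function names.

```python
def adaptive_params(in_size: int, out_size: int):
    """Derive (kernel, stride) so that out_size windows cover in_size."""
    stride = in_size // out_size
    kernel = in_size - (out_size - 1) * stride
    return kernel, stride

def avgpool2d(x, kernel: int, stride: int):
    """Average pooling over a square 2-D list: sum each window, then divide."""
    size = len(x)
    out = []
    for i in range(0, size - kernel + 1, stride):
        row = []
        for j in range(0, size - kernel + 1, stride):
            total = 0
            for di in range(kernel):
                for dj in range(kernel):
                    total += x[i + di][j + dj]
            row.append(total / (kernel * kernel))  # the only division per window
        out.append(row)
    return out

def adaptive_avgpool2d(x, out_size: int):
    kernel, stride = adaptive_params(len(x), out_size)
    return avgpool2d(x, kernel, stride)
```

The summation is additive and therefore local on secret shares; only the final division per window needs a division protocol, or a public truncation when the window size is a power of 2.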

In neural networks, the AdaptiveAvgpool layer is popular because it can reduce redundant information, enlarge the receptive field, and mitigate overfitting. Supporting this common layer allows FALCON to accommodate more neural networks.

6. Experiment Evaluation

The paper implements PriRepVGG, a secure inference framework built in C++ on top of FALCON. All of the source code of PriRepVGG will be open-sourced on GitHub at https://github.com/Barry-ljf/PriRepVGG (accessed on 15 September 2022). Plaintext training was performed on a machine with an Intel Core i7 processor, 16 GB of RAM, and a GTX 1650 Ti GPU with 4 GB of graphics memory. Secure inference was performed on an Ubuntu server with 120 GB of RAM and 18 cores with 72 threads. All the image datasets in this work come from the functional diamond lab. The paper experiments with ℓ = 32 for secure inference. The paper first performs data cleaning and feature processing on the dataset, and then trains the model in plaintext. After training, we extract all the weights and biases from the model. Then, we transform the images and weights into shares. Finally, this work uses PriRepVGG to perform a series of secure inference experiments, records the training metrics of the plaintext models, and compares the inference results of plaintext and ciphertext. In addition, this work performed two experiments on division. First, we compare the division of this paper with the original division method of FALCON in terms of computational speed, range, and accuracy. Then, we compare the division with previous division methods based on different principles in terms of computation time and accuracy. Our division experiment environment is based on FALCON and Rosetta and works with ℓ = 64.

6.1. Division Experiment

While using FALCON, it was found that the original division operation has a significant limitation on the denominator, which is mainly related to its design principle and the underlying algorithm. In addition, errors occur with vectorized inputs: the division method in FALCON does not handle the results correctly when the vector contains different values. Thus, this work performed the division experiments with Avgpool on the same denominator to ensure that the original division works normally.

The paper compares the division in three intervals of different orders of magnitude (Range-I, Range-II, Range-III), as listed in Table 1. For each interval, the experiment took random values as input, set the divisor to a positive floating-point number to ensure that the original division method in FALCON can work, and set the experimental data type to unsigned int64. The experiment recorded the computation time (per 20 runs), the communication cost, the precision of the division, and the average relative error between the ciphertext and the plaintext division. In the lower data range, the results outperform the original work in terms of efficiency and communication, with a speedup reported in Table 1. In addition, the algorithm allows division over a larger range than the original division method, an increase of about four orders of magnitude. The experiment then compares division based on Goldschmidt's method, the repeated subtraction method (SecureNN), and the Newton–Raphson iteration method (CRYPTEN) in ROSETTA, as shown in Table 2. The results show that the Goldschmidt-based implementation of division achieves a runtime improvement over both the Newton–Raphson iteration method and the repeated subtraction method.

6.2. Network Construction

The paper ports the RepVGG-A0 architecture of RepVGG into FALCON. To make full use of the limited memory, the work redesigns the network to reduce the number of parameters in the fully connected layer by changing the convolutional layers: the output feature map of the convolutional layer in the last stage is adjusted from 1280 to 1024 in PriRepVGG. In addition, the paper implements AdaptiveAvgpool in FALCON using the Avgpool constructed in Section 5.3. AdaptiveAvgpool is used as a downsampling layer before the fully connected layer. Then, the paper adds a ReLU layer at the end of PriRepVGG to represent the negative class. The reconfigured PriRepVGG and AlexNet each use their own input resolution. AlexNet has 21 layers in total, and PriRepVGG has 46 layers.

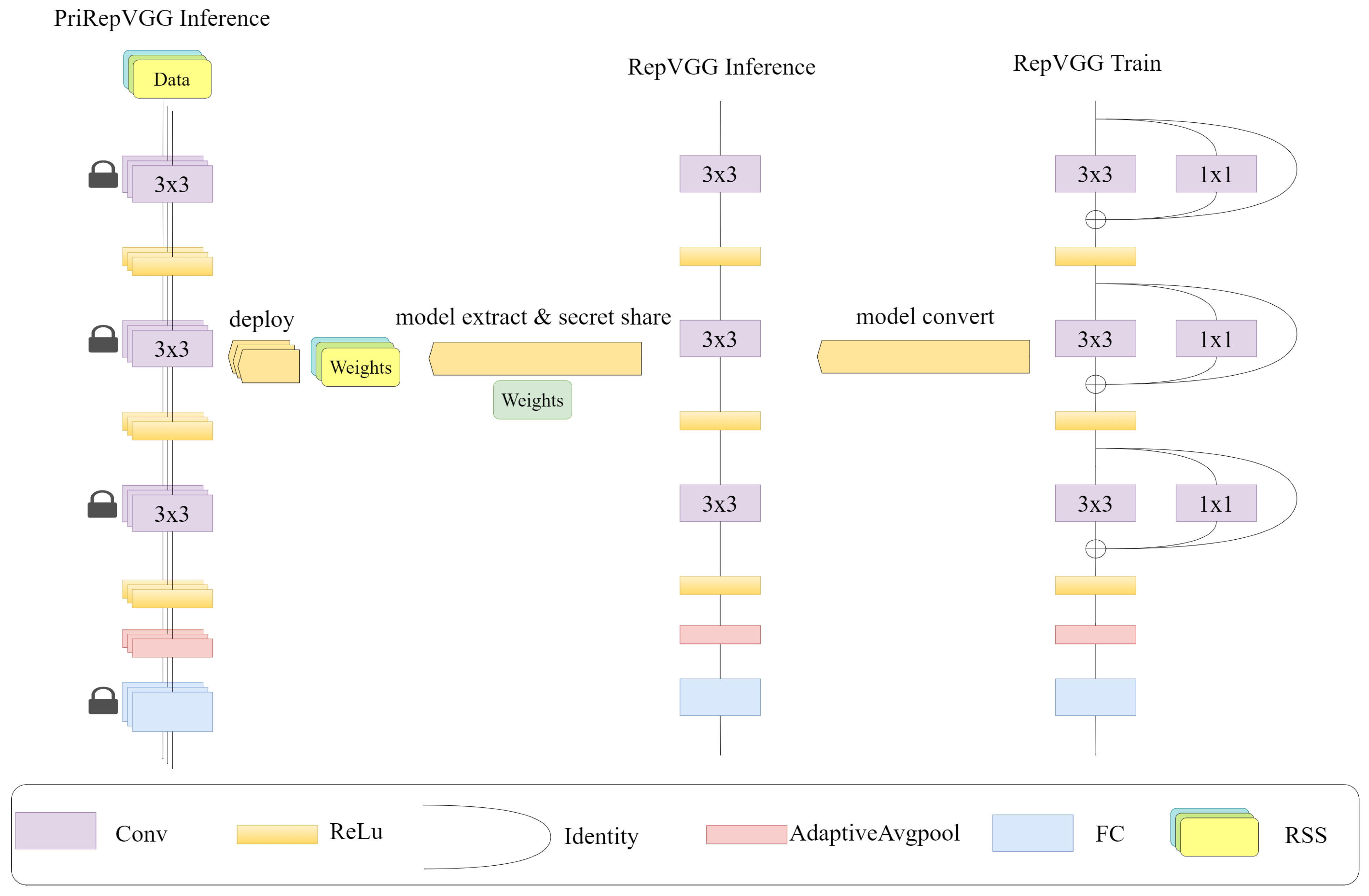

6.3. Model Training and Conversion

The dataset consists of images of typical defective features, together with some perturbation samples that have been carefully selected by professional engineers. In addition, the paper performed data augmentation on all datasets, including random cropping, rotation, and changes of chromaticity. We trained models with RepVGG and AlexNet, each with its own input resolution. For AlexNet, this work uses BN layers in plaintext training to prevent overfitting and has deployed the BN layer in FALCON for AlexNet secure inference. For RepVGG, the training model with 3 × 3 and 1 × 1 convolutional kernels and shortcut layers needs to be converted into an inference-phase model with a plain structure, as shown in Figure 3. The transformed model is then used to perform plaintext inference on the validation set. The dataset was sampled randomly (Dataset-I) for validation, and the recall, precision, F1 score, and accuracy of the plaintext models obtained by RepVGG and AlexNet were recorded. In practice, the core concern is defective diamond substrates. Identifying defective diamond substrate pieces (positive class) as normal (negative class) would be costly in terms of manual cultivation and risk of compensation, so this work needs the recall of the model to be stable and high. On the other hand, when model holders use the model themselves, it is convenient to extract all defective diamond substrates for secondary checking, which improves work efficiency. As shown in Table 3, the deployed inference model performed relatively well. This work also recorded the metrics for datasets with some perturbation samples (Dataset-II), which demonstrates that the RepVGG model is more stable.
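The four metrics recorded above follow the standard definitions for binary classification. The sketch below is a generic helper with hypothetical names and made-up labels, where 1 denotes the defective (positive) class; it does not reproduce any value from Table 3.

```python
def binary_metrics(y_true, y_pred):
    """Return recall, precision, F1 score, and accuracy for labels in {0, 1}."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    accuracy = (tp + tn) / len(y_true) if y_true else 0.0
    return {"recall": recall, "precision": precision, "f1": f1, "accuracy": accuracy}

# Illustrative labels only: a missed defect (false negative) lowers recall.
metrics = binary_metrics([1, 1, 1, 0, 0, 0, 0, 1], [1, 1, 0, 0, 0, 1, 0, 1])
```

A false negative here corresponds to a defective substrate judged as normal, which is the costly case described in the text and the reason recall is the metric to watch.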

6.4. Weights Extraction and Sharing

We obtain the plaintext model from Section 6.3. Then, this work extracts all the weights and biases of the convolutional and fully connected layers from the plaintext model. After the extraction, the model holder keeps the weights and the data holder keeps the image data in local files, respectively; then, all data are shared as RSS among the three servers in PriRepVGG. We use $w$ to denote the private input for the weight shares, and the parties hold $w_1$, $w_2$, $w_3$, respectively, which satisfy $w = w_1 + w_2 + w_3$; then, all parties share again so that each party also obtains the share of the next party, which yields the RSS of $w$. At the same time, the data holder extracts its diamond substrate image data and does the same as the model holder. After sharing, we obtain the RSS of the image data $x$, and the parties obtain $(x_1, x_2)$, $(x_2, x_3)$, $(x_3, x_1)$, respectively. The secret sharing process is shown in Figure 2.

6.5. Secure Inference

We use PriRepVGG for secure inference and simulate a 3-party scenario with three servers. We record the computation cost of secure inference with input resolution

of PriRepVGG and

of AlexNet, as

Table 4. Each party owns the private secret shares provided by the model holder and the data holder extracted in

Section 6.4. Every single party is unable to reconstruct the origin secret in the security setting discussed in

Section 4; thus, the non-data-owning party is unable to learn the data of others in the task of secure inference. The data holder will finally obtain the result of inference. We record the results of the secure inference to compute the recall, precision, f1 score and accuracy obtained by the model under ciphertext inference, and compare the result with the plaintext inference in

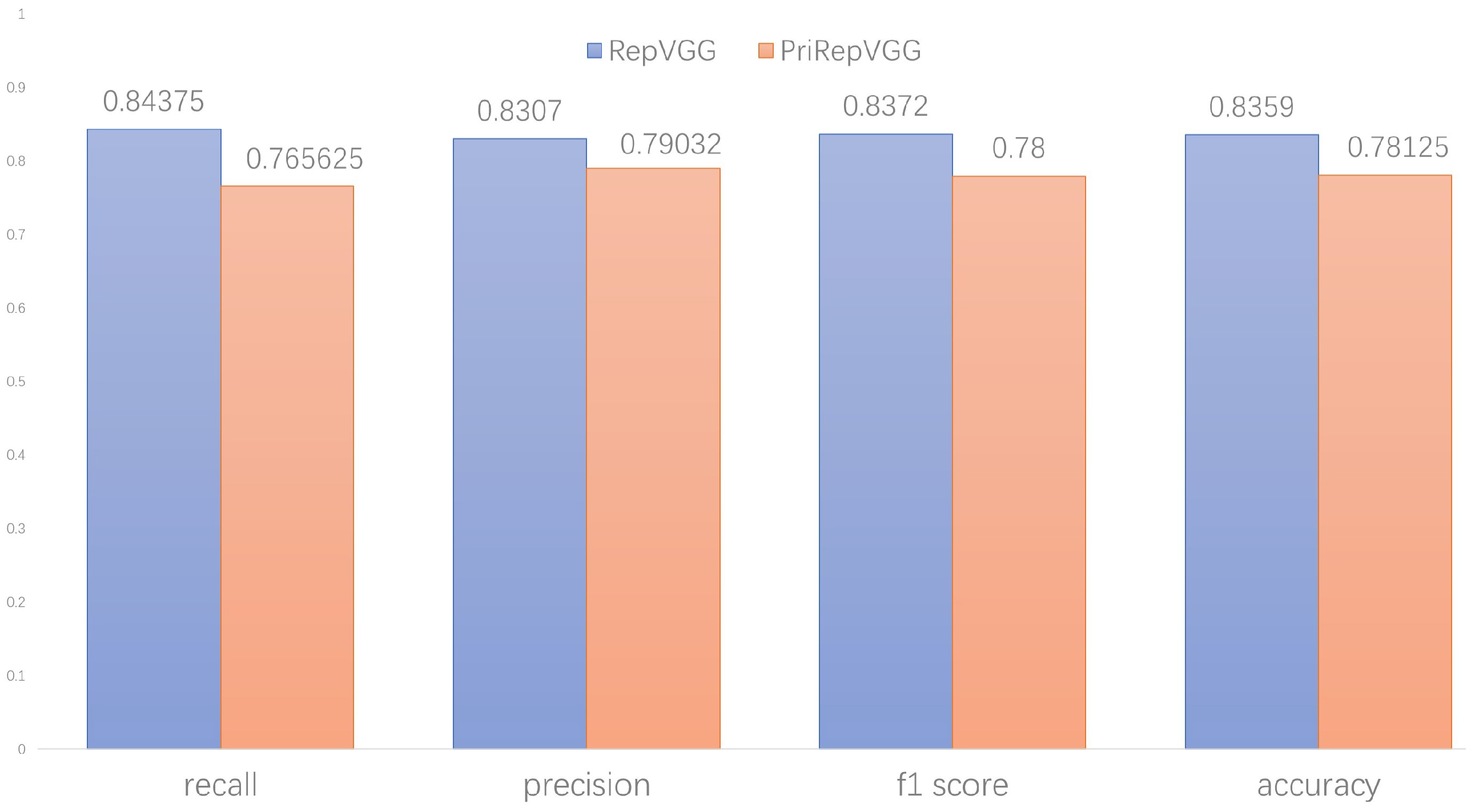

Section 3.

As shown in Figure 4, the secure inference is stable, and the difference rate does not exceed 0.08. In addition, the accuracy of secure inference is on par with the accuracy in plaintext. In detail, with a batch size of 128, the average number of misjudged samples of the plaintext model is 9. As shown in Table 5, the average error rate over five random batch experiments is 0.0703.

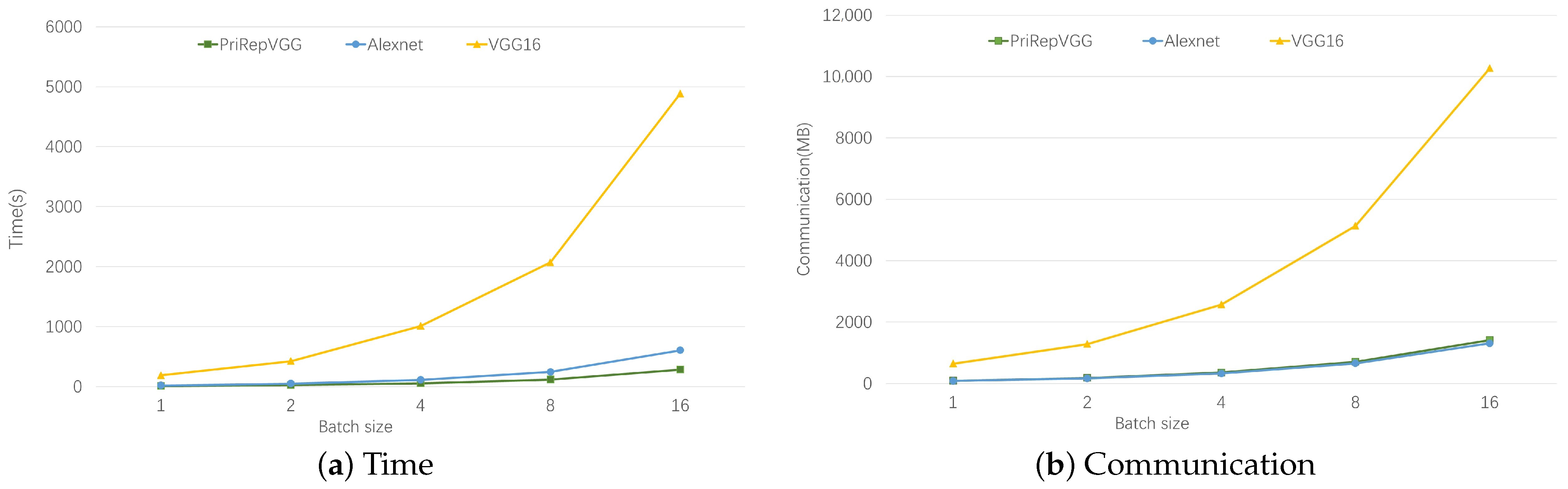

We test AlexNet, VGG-16, and PriRepVGG at their respective input resolutions to compare the performance of the three networks. Figure 5 shows that the communication and time cost of PriRepVGG is 1–1.5 orders of magnitude lower than that of VGG-16. The number of communication rounds of PriRepVGG is lower than that of AlexNet, as shown in Figure 6. The evaluation shows that the plain inference architecture of PriRepVGG keeps the parameters lightweight and reduces the computational cost. In terms of the time cost of secure inference, PriRepVGG takes between 10 and 20 s per image as the batch size grows.

7. Conclusions

Based on FALCON, this work presented PriRepVGG, a secure 3-party framework for image-based defect detection. We have successfully applied PriRepVGG to the task of detecting defects in industrial diamond substrates. Compared to plaintext, secure inference with PriRepVGG performs stably, and the average error rate is about 0.07. PriRepVGG is more lightweight than the realization of VGG in MPC. In addition, this work optimized the division algorithm of FALCON, which resulted in a computation speedup and an increase of four orders of magnitude in operational range over the original work. In the future, we would like to consider using PriRepVGG in more image-based secure inference scenarios with malicious settings and to reduce the compute and memory requirements of PriRepVGG.

Author Contributions

Conceptualization, J.L. and S.G.; methodology, J.L.; software, J.L. and Z.Y.; validation, Z.Y., S.G. and J.L.; formal analysis, J.L.; investigation, S.G.; resources, H.X. and G.Y.; data curation, J.L. and S.G.; writing—original draft preparation, J.L., Z.Y. and S.G.; writing—review and editing, J.L., G.Y. and H.X.; visualization, J.L.; supervision, G.Y. and H.X.; project administration, G.Y. and H.X.; funding acquisition, G.Y. and H.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by the National Major Science and Technology Projects of China (2020YFB1711204, 2019YFB1705702).

Acknowledgments

We thank Yankai Li and Xiangyu Xie for providing computational devices and ideas.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Chow, J.K.; Su, Z.; Wu, J.; Tan, P.S.; Mao, X.; Wang, Y.H. Anomaly detection of defects on concrete structures with the convolutional autoencoder. Adv. Eng. Inform. 2020, 45, 101105.

- Kumar, N.; Rathee, M.; Chandran, N.; Gupta, D.; Rastogi, A.; Sharma, R. Cryptflow: Secure tensorflow inference. In Proceedings of the 2020 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 18–21 May 2020; pp. 336–353.

- Alvarez-Valle, J.; Bhatu, P.; Chandran, N.; Gupta, D.; Nori, A.; Rastogi, A.; Rathee, M.; Sharma, R.; Ugare, S. Secure medical image analysis with cryptflow. arXiv 2020, arXiv:2012.05064.

- Wagh, S.; Gupta, D.; Chandran, N. SecureNN: 3-Party Secure Computation for Neural Network Training. Proc. Priv. Enhancing Technol. 2019, 2019, 26–49.

- Mohassel, P.; Rindal, P. ABY3: A mixed protocol framework for machine learning. In Proceedings of the 2018 ACM SIGSAC Conference on Computer and Communications Security, Toronto, ON, Canada, 15–19 October 2018; pp. 35–52.

- Liu, J.; Juuti, M.; Lu, Y.; Asokan, N. Oblivious neural network predictions via minionn transformations. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, Dallas, TX, USA, 30 October–3 November 2017; pp. 619–631.

- Knott, B.; Venkataraman, S.; Hannun, A.; Sengupta, S.; Ibrahim, M.; van der Maaten, L. Crypten: Secure multi-party computation meets machine learning. Adv. Neural Inf. Process. Syst. 2021, 34, 4961–4973.

- Makri, E.; Rotaru, D.; Smart, N.P.; Vercauteren, F. EPIC: Efficient private image classification (or: Learning from the masters). In Cryptographers’ Track at the RSA Conference; Springer: Berlin/Heidelberg, Germany, 2019; pp. 473–492.

- Kodas, T.T.; Hampden-Smith, M.J. The Chemistry of Metal CVD; John Wiley & Sons: Hoboken, NJ, USA, 2008.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 1–9.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. Shufflenet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

- Ding, X.; Zhang, X.; Ma, N.; Han, J.; Ding, G.; Sun, J. Repvgg: Making vgg-style convnets great again. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 21–25 June 2021; pp. 13733–13742.

- Liu, Y.; Huang, H.; Xiao, F.; Malekian, R.; Wang, W. Classification and recognition of encrypted EEG data based on neural network. J. Inf. Secur. Appl. 2020, 54, 102567.

- Bensaoud, A.; Kalita, J. Deep multi-task learning for malware image classification. J. Inf. Secur. Appl. 2022, 64, 103057.

- Chen, X.; Zhang, D.; Cui, Z.Q.; Gu, Q.; Ju, X.L. DP-share: Privacy-preserving software defect prediction model sharing through differential privacy. J. Comput. Sci. Technol. 2019, 34, 1020–1038.

- Wagh, S.; Tople, S.; Benhamouda, F.; Kushilevitz, E.; Mittal, P.; Rabin, T. Falcon: Honest-majority maliciously secure framework for private deep learning. arXiv 2020, arXiv:2004.02229.

- Riazi, M.S.; Weinert, C.; Tkachenko, O.; Songhori, E.M.; Schneider, T.; Koushanfar, F. Chameleon: A hybrid secure computation framework for machine learning applications. In Proceedings of the 2018 on Asia Conference on Computer and Communications Security, Incheon, Korea, 4 June 2018; pp. 707–721.

- Chandran, N.; Gupta, D.; Rastogi, A.; Sharma, R.; Tripathi, S. EzPC: Programmable and efficient secure two-party computation for machine learning. In Proceedings of the 2019 IEEE European Symposium on Security and Privacy (EuroS&P), Stockholm, Sweden, 17–19 June 2019; pp. 496–511.

- Juvekar, C.; Vaikuntanathan, V.; Chandrakasan, A. GAZELLE: A low latency framework for secure neural network inference. In Proceedings of the 27th USENIX Security Symposium (USENIX Security 18), Baltimore, MD, USA, 15–17 August 2018; pp. 1651–1669.

- Mohassel, P.; Zhang, Y. Secureml: A system for scalable privacy-preserving machine learning. In Proceedings of the 2017 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–26 May 2017; pp. 19–38.

- Wang, X.; Malozemoff, A.J.; Katz, J. EMP-toolkit: Efficient MultiParty computation toolkit, 2016.

- Patra, A.; Schneider, T.; Suresh, A.; Yalame, H. ABY2.0: Improved Mixed-Protocol Secure Two-Party Computation. In Proceedings of the 30th USENIX Security Symposium (USENIX Security 21), Online, 11–13 August 2021; pp. 2165–2182.

- Rathee, D.; Rathee, M.; Goli, R.K.K.; Gupta, D.; Sharma, R.; Chandran, N.; Rastogi, A. SiRnn: A math library for secure RNN inference. In Proceedings of the 2021 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 24–27 May 2021; pp. 1003–1020.

- Catrina, O.; Saxena, A. Secure computation with fixed-point numbers. In International Conference on Financial Cryptography and Data Security; Springer: Berlin/Heidelberg, Germany, 2010; pp. 35–50.

- Canetti, R. Security and composition of multiparty cryptographic protocols. J. Cryptol. 2000, 13, 143–202.

- Canetti, R. Universally composable security: A new paradigm for cryptographic protocols. In Proceedings of the 42nd IEEE Symposium on Foundations of Computer Science, Newport Beach, CA, USA, 8–11 October 2001; pp. 136–145.

- Rosulek, M. The joy of cryptography. 2017.

- Markstein, P. Software division and square root using Goldschmidt’s algorithms. In Proceedings of the 6th Conference on Real Numbers and Computers (RNC’6), Wadern, Germany, 15–17 November 2004; Volume 123, pp. 146–157.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).