1. Introduction

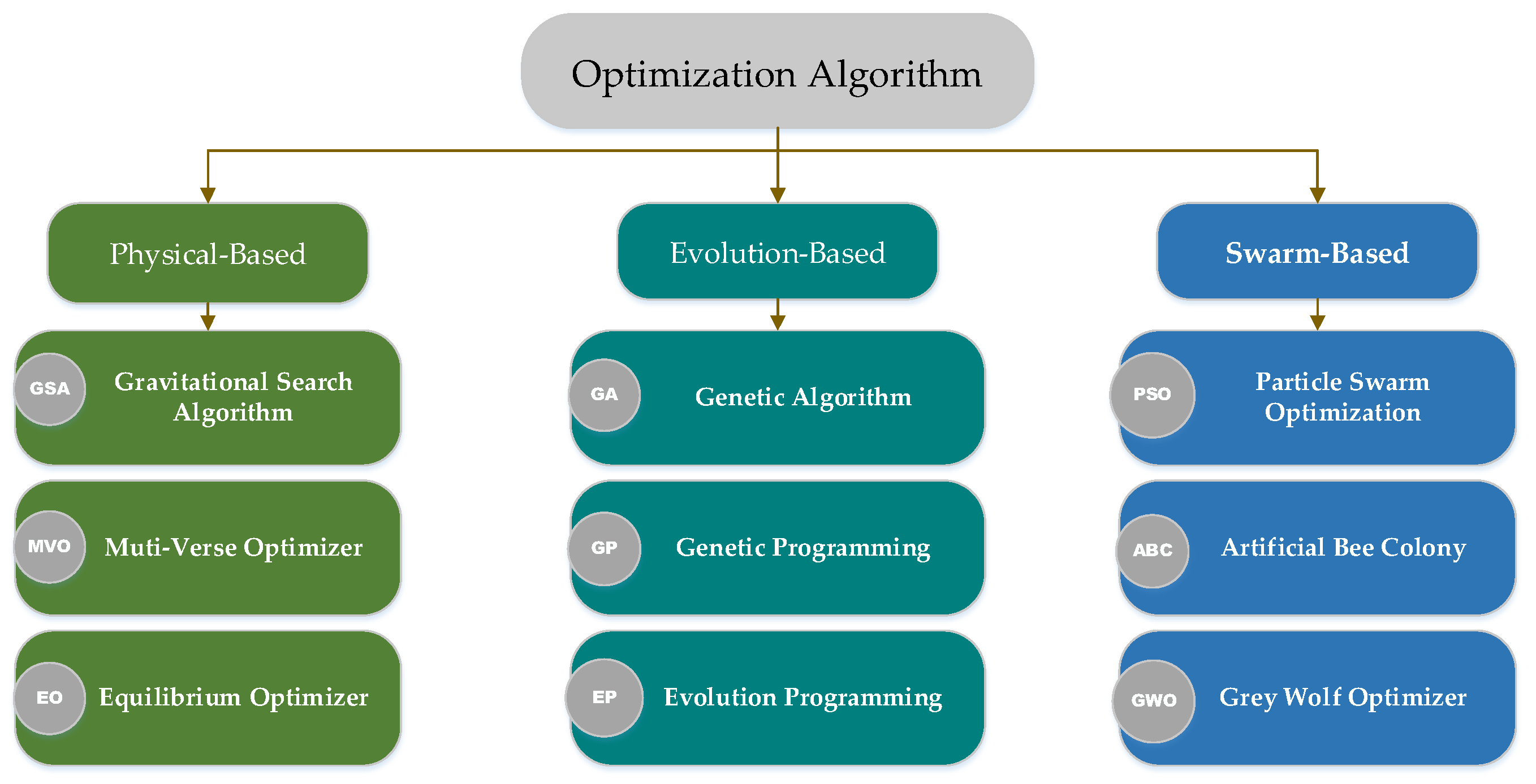

Optimization is a vibrant field with diverse applications in optimal control, disease treatment and engineering design [1,2,3,4,5]. In the past few years, the modeling and implementation of Metaheuristic Algorithms (MAs) have proved their worth [6,7,8,9]. Compared with conventional optimization algorithms, MAs are widely used in engineering applications for three reasons: first, their concepts are accessible and easy to implement; second, they are less likely than local search algorithms to become trapped in local optima; and third, they do not need derivative information. Nature-inspired MAs deal with optimization problems by mimicking biological or physical phenomena. MAs can generally be divided into three categories, namely physics-based, evolution-based and swarm-based algorithms [10], as shown in Figure 1. Although these MAs differ in their details, they all share the above advantages.

A variety of MAs are introduced in this paper. To be specific, physics-based algorithms originate from physical and chemical phenomena and from human intelligence. The Gravitational Search Algorithm (GSA) [11] works on gravity and mass interactions: its search agents are a set of masses that interact according to Newtonian gravitation. GSA is widely used in the field of machine learning. Other typical representatives are the Multi-Verse Optimizer (MVO) [12], the Simulated Annealing algorithm (SA) [13] and the Equilibrium Optimizer (EO) [14]. The second category is inspired by biological evolution. The genetic algorithm (GA) [15], established by Holland, is one of the earliest and most popular algorithms of this type. GA is derived from the laws of Darwinian evolution; it is regarded as one of the most effective algorithms and has been widely used to solve substantial optimization problems with two operators, recombination and mutation. Various modified and hybrid versions of GA have been proposed [16]. Differential Evolution (DE) [17], Evolutionary Deduction (ED) [18] and Genetic Programming (GP) [19] are other well-known algorithms in this category. The third category, swarm-based algorithms, derives from the survival habits of animal groups. Particle Swarm Optimization (PSO) [20], proposed in 1995, is an outstanding instance inspired by the swarm behavior of animals such as birds and fish. Since then, PSO has attracted considerable attention and helped establish the research field known as swarm intelligence. Another typical algorithm is the Artificial Bee Colony (ABC) [21], proposed by Karaboga in 2005, which originates from the collective behavior of bees. Like other MAs, ABC has some shortcomings, so modified versions were introduced later. In 2010, Yang introduced the Firefly Algorithm, which is based on the luminosity of fireflies [22]: the brightness of each firefly is compared with that of the other fireflies, and fireflies sometimes fly randomly, a behavior that modified versions of the algorithm exploit. In addition, the Bat Algorithm (BA) [23], another swarm-based algorithm proposed by Yang et al. in 2010, is derived from the echolocation of bats. The Grey Wolf Optimizer (GWO) [6] is a well-established swarm intelligence algorithm developed by Mirjalili et al. in 2014; it was inspired by the social hierarchy and hunting behavior of grey wolves. In 2019, Heidari et al. proposed Harris Hawks Optimization (HHO) [24], which simulates the unique cooperative hunting of Harris hawks. Inspired by the biological behaviors of prey and predators, Faramarzi et al. established the swarm-based Marine Predators Algorithm (MPA) in 2020 [25].

In 2018, Lin et al. proposed a hybrid of the Beetle Antennae Search (BAS) algorithm and PSO [26]. BAS has good optimization speed and accuracy on low-dimensional problems, but it easily falls into local optima on high-dimensional problems; combining it with PSO improves its optimization ability. BAS [27] can also be combined with other MAs to overcome the shortcomings of a single algorithm. For instance, Zhou et al. proposed a BAS-based Flower Pollination Algorithm (FPA) to overcome the slow convergence of the original FPA [28], and their experimental results show that the improved algorithm converges faster. Cheng et al. proposed an improved Artificial Bee Colony algorithm (ABC) [29] based on BAS (BAS–ABC) in 2019 [30]. It uses the position-update ability of BAS to reduce the randomness of the search, so that the algorithm converges to the optimal solution more quickly. Beetle Antennae Search based on Quadratic Interpolation (QIBAS) is an effective swarm intelligence optimization algorithm that has been applied to the inverse kinematics of an electric climbing robot [31], where it was reported to improve convergence accuracy. Teaching–Learning-Based Optimization (TLBO) [32] is a mature swarm intelligence optimization algorithm that has been used to improve and hybridize many MAs. Tuo et al. proposed a hybrid of Harmony Search (HS) and TLBO for complex high-dimensional problems [33]: HS has strong global search capability but converges slowly, and TLBO makes up for this deficiency by increasing the convergence rate. Keesari et al. used TLBO to solve the job-shop-scheduling problem [34] and compared it with other optimization algorithms; their results show that TLBO is more efficient on this problem. To address TLBO's tendency to converge locally on complex problems, Chen et al. designed local learning and self-learning methods to improve the original algorithm [35], and tests on several functions showed that the improved TLBO has better global search ability than the compared algorithms. Quasi-Reflection-Based Learning (QRBL) [36] is a variant of Opposition-Based Learning (OBL) [37] and an effective technique for intelligent optimization. QRBL has been applied to Biogeography-Based Optimization (BBO) [38], Ion Motion Optimization (IMO) [39] and Symbiotic Organisms Search (SOS) [40], and the QRBL-enhanced versions converge faster and avoid local optima better than the basic algorithms.
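The three building blocks discussed above can be illustrated with their textbook forms. The Python sketch below shows a single BAS move, the TLBO teacher phase and the construction of a quasi-reflected point, all for minimization. It is an illustration under our own assumptions (a Gaussian-sampled antenna direction, per-dimension random factors, plain lists instead of arrays), not the way MGTO embeds these components; the function names `bas_step`, `tlbo_teacher_phase` and `quasi_reflect` are hypothetical.

```python
import math
import random


def bas_step(f, x, d, step, rng=random):
    """One Beetle Antennae Search move for minimising f (textbook form).

    A random unit direction b is drawn; the two "antennae" sample f at
    x + d*b and x - d*b, and the beetle moves a distance `step` along b
    toward whichever antenna sensed the lower value.
    """
    b = [rng.gauss(0.0, 1.0) for _ in x]
    norm = math.sqrt(sum(v * v for v in b)) or 1.0
    b = [v / norm for v in b]
    f_right = f([xi + d * bi for xi, bi in zip(x, b)])
    f_left = f([xi - d * bi for xi, bi in zip(x, b)])
    s = (f_left > f_right) - (f_left < f_right)  # +1: go right, -1: go left, 0: stay
    return [xi + step * s * bi for xi, bi in zip(x, b)]


def tlbo_teacher_phase(f, population, rng=random):
    """TLBO teacher phase: learners move toward the best solution (the
    teacher) relative to the class mean; a move is kept only if it helps."""
    teacher = min(population, key=f)
    dim = len(teacher)
    mean = [sum(p[j] for p in population) / len(population) for j in range(dim)]
    new_pop = []
    for x in population:
        tf = rng.choice((1, 2))  # teaching factor T_F
        cand = [x[j] + rng.random() * (teacher[j] - tf * mean[j]) for j in range(dim)]
        new_pop.append(cand if f(cand) < f(x) else x)
    return new_pop


def quasi_reflect(x, lb, ub, rng=random):
    """Quasi-reflected point: coordinate j is drawn uniformly between the
    interval centre (lb_j + ub_j) / 2 and the current value x_j."""
    out = []
    for xj, lo, hi in zip(x, lb, ub):
        c = (lo + hi) / 2.0
        out.append(rng.uniform(min(c, xj), max(c, xj)))
    return out


if __name__ == "__main__":
    random.seed(0)
    sphere = lambda v: sum(t * t for t in v)
    print(bas_step(sphere, [3.0, -4.0], d=0.5, step=0.5))
    print(quasi_reflect([8.0, -2.0], [-10.0, -10.0], [10.0, 10.0]))
```

The quadratic-interpolation refinement that distinguishes QIBAS from plain BAS is not shown here.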

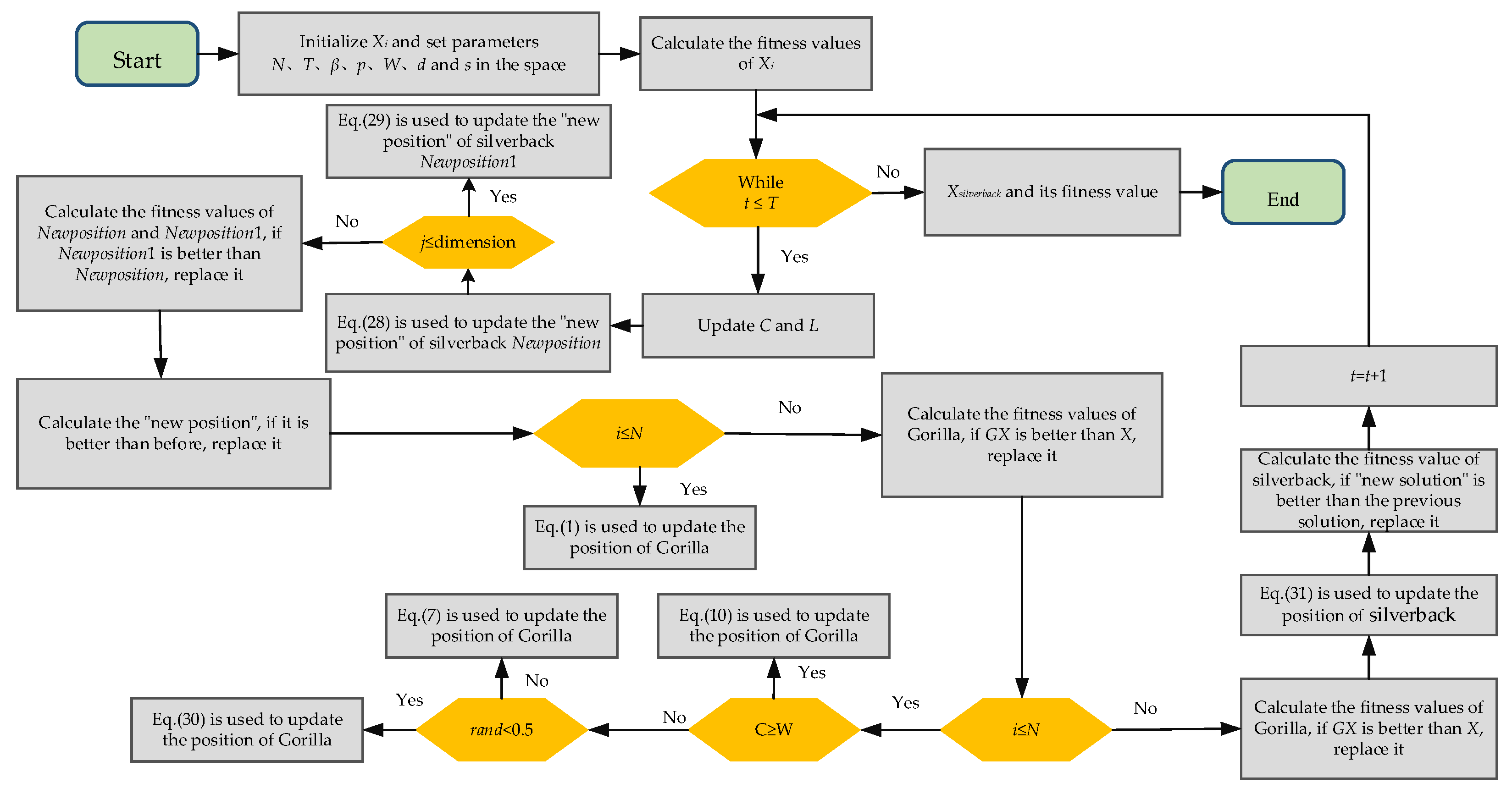

The Gorilla Troops Optimizer (GTO) [41] is a new swarm intelligence optimization algorithm established by Abdollahzadeh et al. in 2021. GTO is inspired by the migration, competition for adult females and following behaviors of gorilla troops. It has already been applied to several design and engineering-optimization problems. However, like other swarm intelligence algorithms, GTO has difficulty balancing exploration and exploitation because of the randomness of its optimization process, so it still suffers from low accuracy, slow convergence and a tendency to fall into local optima. Moreover, the No Free Lunch (NFL) theorem [42] indicates that no single algorithm can solve all optimization problems best. This theorem and the defects of GTO motivate us to develop a modified swarm-based algorithm that can deal with more engineering problems. The main contributions of this paper are as follows:

- (1) A modified GTO, called MGTO, is proposed in this paper. It introduces three improvement strategies. First, Quadratic Interpolated Beetle Antennae Search (QIBAS) [31] is embedded into GTO to increase the diversity of the silverback's position. Second, Teaching–Learning-Based Optimization (TLBO) [32] is hybridized with GTO to stabilize the performance of the silverback and the other gorillas. Finally, the Quasi-Reflection-Based Learning (QRBL) [36] mechanism is used to enhance the quality of the optimal position.

- (2) To verify the effectiveness of MGTO, 23 classical benchmark functions, 30 CEC2014 benchmark functions and 10 CEC2020 benchmark functions are adopted in simulation experiments. The performance of MGTO is compared with that of the basic GTO and eight state-of-the-art optimization algorithms.

- (3) Furthermore, MGTO is applied to seven engineering problems: welded-beam design, pressure-vessel design, speed-reducer design, compression/tension-spring design, three-bar-truss design, car-crashworthiness design and tubular-column design. The experimental results indicate that MGTO has strong convergence and global search abilities.

The rest of this paper is organized as follows: Section 2 introduces the basic GTO. In Section 3, three strategies and the modified GTO, named MGTO, are proposed. The experimental results and their discussion are presented in Section 4. In Section 5, MGTO is tested on seven real-world engineering problems. Finally, the conclusions and future work are given in Section 6.

2. Gorilla Troops Optimizer (GTO)

The Gorilla Troops Optimizer is a swarm-inspired algorithm that simulates the social life of gorillas. The gorilla is a social animal, and it is the largest primate on earth at present. Because of the white hair on its back, the adult male is also known as a silverback.

A gorilla group usually consists of one adult male, several adult females and their offspring. The adult male is the leader; his responsibilities include defending the territory, making decisions and directing the other gorillas to abundant food. Research shows that both male and female gorillas are highly likely to leave the group in which they were born. Generally, male gorillas leave their former groups to attract females and then form new groups. Nevertheless, some males prefer to stay in their natal group in the hope of one day dominating it. Fierce competition for females between males is inevitable, and males can expand their territory through competition. The relationship between male and female gorillas is close and stable, whereas the relationships among female gorillas are relatively weak.

The algorithm includes two stages: exploration and exploitation. Five operators, each emulating a gorilla behavior, carry out the optimization. Three operators are used in the exploration stage: migration to an unknown location, movement toward other gorillas and migration to a known location. In the exploitation stage, two operators, following the silverback and competing for adult females, are adopted to improve the search performance.

2.1. Exploration

This subsection describes the exploration stage. A gorilla group is led by a silverback that directs all of the group's activities. In nature, gorillas sometimes move to places they have visited before or to places that are new to them. At each stage of the optimization, the best candidate solution is regarded as the silverback. Three mechanisms are used in this stage.

Equation (1) describes the three mechanisms of the exploration stage. In this equation, p is a parameter in the range [0, 1], set before the optimization, that controls how often the mechanism of migration to an unknown location is chosen: this mechanism is chosen when rand < p. Otherwise, if rand ≥ 0.5, the second mechanism, movement toward the other gorillas, is selected; if rand < 0.5, the mechanism of migration to a known location is selected.

where GX(t+1) is the candidate position vector of the gorilla at the next iteration, and X(t) is the current position vector of the gorilla. Moreover, r1, r2, r3 and rand are random values between 0 and 1. UB and LB indicate the upper and lower bounds of the variables, respectively. Xr and GXr are the position vectors of a randomly selected gorilla and a randomly selected candidate gorilla, respectively.

The equations used to calculate C, L and H are as follows:

where It is the current iteration and MaxIt is the maximum number of iterations. In the initial stage, the values vary over a large interval, and this interval shrinks in the final stage of the optimization.

F can be calculated by the following equation:

where r4 is a random value in the range [−1, 1].

L is calculated as follows:

where l is a random value from 0 to 1. Equation (4) simulates the leadership of the silverback: because of inexperience, a silverback may find it hard to make correct decisions about finding food or managing the group, but it gains experience and stability as it leads. Additionally, H in Equation (1) is calculated by Equation (5).

Z in Equation (5) is calculated by Equation (6), where Z is a random value in the range [−C, C]:
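The parameter schedule of Equations (2)-(6) can be sketched as follows. The equations themselves do not appear in this text, so the forms used below (F = cos(2·r4) + 1, C = F·(1 − It/MaxIt), L = C·l, and H = Z·X(t) with each Z component drawn from [−C, C]) are our reading of the original GTO formulation [41] and should be checked against it; the function name `gto_parameters` is hypothetical.

```python
import math
import random


def gto_parameters(it, max_it, x, rng=random):
    """Return (C, L, H) for one gorilla at iteration `it` (sketch).

    F = cos(2*r4) + 1,  r4 ~ U[-1, 1]
    C = F * (1 - it / max_it)        -> shrinks to 0 as it approaches max_it
    L = C * l,          l ~ U[0, 1]  (as described in the text)
    Z_j ~ U[-C, C],     H_j = Z_j * X_j(t)
    """
    r4 = rng.uniform(-1.0, 1.0)
    F = math.cos(2.0 * r4) + 1.0
    C = F * (1.0 - it / max_it)
    L = C * rng.random()
    H = [rng.uniform(-C, C) * xj for xj in x]
    return C, L, H


if __name__ == "__main__":
    random.seed(42)
    for it in (0, 250, 499):
        C, L, _ = gto_parameters(it, 500, [1.0, 2.0])
        print(f"it={it:3d}  C={C:.3f}  L={L:.3f}")
```

Because F does not depend on the iteration, C decreases linearly in expectation as It approaches MaxIt, and L and the range of Z contract with it; this is the large-to-small variation interval described above.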

At the end of the exploration stage, a group operation is performed to calculate the cost of all GX solutions. If the cost of GX(t) is lower than that of X(t), X(t) is replaced by GX(t). The best solution obtained in this stage is then regarded as the silverback.
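A minimal sketch of one exploration pass, Equation (1) followed by the greedy replacement just described, is shown below. It uses our reading of the original GTO paper [41] for the three branches (the equations are not reproduced in this text), computes C and L once per pass, clips candidates to the bounds, and uses p = 0.03 purely as a placeholder; none of these choices are stated in this paper, and the function name `explore` is hypothetical.

```python
import math
import random


def explore(pop, f, lb, ub, it, max_it, p=0.03, rng=random):
    """One GTO exploration pass (Equation (1)) plus greedy replacement.

    pop    : list of position lists X(t)
    f      : cost function to minimise
    lb, ub : per-dimension lower/upper bounds
    p      : probability of migrating to an unknown location
             (0.03 is an assumed placeholder, not taken from this paper)
    Returns the updated population and the new silverback.
    """
    n, dim = len(pop), len(lb)
    C = (math.cos(2.0 * rng.uniform(-1.0, 1.0)) + 1.0) * (1.0 - it / max_it)
    L = C * rng.random()
    gx = [x[:] for x in pop]  # candidate positions GX, initialised to X(t)
    for i, x in enumerate(pop):
        if rng.random() < p:
            # (a) migrate to an unknown location
            gx[i] = [(ub[j] - lb[j]) * rng.random() + lb[j] for j in range(dim)]
        elif rng.random() >= 0.5:
            # (b) move toward another gorilla X_r, with H = Z * X(t)
            xr = pop[rng.randrange(n)]
            r2 = rng.random()
            H = [rng.uniform(-C, C) * x[j] for j in range(dim)]
            gx[i] = [(r2 - C) * xr[j] + L * H[j] for j in range(dim)]
        else:
            # (c) migrate to a known location relative to a candidate GX_r
            gr = gx[rng.randrange(n)]
            r3 = rng.random()
            gx[i] = [x[j] - L * (L * (x[j] - gr[j]) + r3 * (x[j] - gr[j]))
                     for j in range(dim)]
        # clip to the search bounds (assumed bound handling)
        gx[i] = [min(max(v, lb[j]), ub[j]) for j, v in enumerate(gx[i])]
    # greedy replacement: X(t) is replaced only where GX is cheaper
    new_pop = [g if f(g) < f(x) else x for g, x in zip(gx, pop)]
    return new_pop, min(new_pop, key=f)
```

Because of the greedy replacement, no gorilla's cost can increase during this pass, and the returned silverback is simply the cheapest member of the updated population.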

2.2. Exploitation

In the exploitation stage of GTO, two behaviors are adopted: following the silverback and competing for adult females. The silverback leads all the gorillas in the group and is responsible for the group's various activities. The value of C determines whether an adult male follows the silverback or competes with other males: W is a parameter that must be set before the optimization; if C ≥ W, the following mechanism is selected, and otherwise the competition mechanism is selected.

2.2.1. Following the Silverback

When the silverback and the other gorillas are young, they perform their duties well; for instance, the male gorillas readily follow the silverback, and each member can influence the others. This strategy is performed if C ≥ W. Equation (7) mimics this behavior as follows:

where Xsilverback is the position vector of the silverback, which represents the best solution. M is expressed as follows:

where GXi(t) is the position vector of the i-th candidate gorilla at iteration t, N is the total number of gorillas, and g is calculated by Equation (9) as follows:
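The following-the-silverback operator of Equations (7)-(9) can be sketched as below. The forms GX(t+1) = L·M·(X(t) − Xsilverback) + X(t), M = (|mean of GXi(t)|^g)^(1/g) and g = 2^L are our reading of the original GTO paper [41], since the equations are not reproduced in this text; the function name `follow_silverback` is hypothetical.

```python
def follow_silverback(x, silverback, gx_all, L):
    """Equations (7)-(9): move gorilla x relative to the silverback (sketch).

    x          : current position X(t)
    silverback : position of the best gorilla
    gx_all     : candidate positions GX_i(t) of all N gorillas
    L          : the leadership parameter from Equation (4)
    """
    n, dim = len(gx_all), len(x)
    g = 2.0 ** L  # Equation (9)
    # Equation (8), per dimension: M_j = (|mean_i GX_ij| ** g) ** (1 / g).
    # For any g > 0 this is algebraically just |mean_i GX_ij|.
    M = [(abs(sum(v[j] for v in gx_all) / n) ** g) ** (1.0 / g) for j in range(dim)]
    # Equation (7)
    return [L * M[j] * (x[j] - silverback[j]) + x[j] for j in range(dim)]


if __name__ == "__main__":
    print(follow_silverback([1.0, 2.0], [0.0, 0.0], [[1.0, 1.0], [3.0, -1.0]], 1.0))
```

As the comment notes, M reduces to the element-wise magnitude of the mean candidate position; the literal form is kept only to mirror Equation (8). When L approaches 0 late in the run, the move vanishes and the gorilla stays near X(t).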

2.2.2. Competition for Adult Females

Competing with other males for adult females is a major stage of puberty for young male gorillas. This competition is usually fierce, can last for days and affects other members of the group. Equation (10) emulates this behavior:

where Q simulates the impact force and is calculated by Equation (11), and r5 is a random value between 0 and 1. Equation (12) calculates the coefficient vector of the degree of violence in conflicts, where β is a parameter that must be set before the optimization. E simulates the effect of violence on the solution's dimensions: if rand ≥ 0.5, E is a vector of normally distributed random values whose length equals the problem's dimension; if rand < 0.5, E is a single normally distributed random value. Here, rand is a random value between 0 and 1.
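Likewise, the competition operator of Equations (10)-(13) can be sketched as follows, using our reading of the original GTO formulation [41]: GX(t+1) = Xsilverback − (Xsilverback·Q − X(t)·Q)·A, with Q = 2·r5 − 1 and A = β·E. The value β = 3.0 and the function name `compete_for_females` are assumptions for illustration.

```python
import random


def compete_for_females(x, silverback, beta=3.0, rng=random):
    """Equations (10)-(13): competition for adult females (sketch).

    beta = 3.0 is an assumed placeholder for the user-set parameter beta.
    """
    dim = len(x)
    Q = 2.0 * rng.random() - 1.0  # Equation (11): impact force in [-1, 1]
    if rng.random() >= 0.5:
        E = [rng.gauss(0.0, 1.0) for _ in range(dim)]  # one normal draw per dimension
    else:
        E = [rng.gauss(0.0, 1.0)] * dim  # a single normal draw, shared by all dimensions
    A = [beta * e for e in E]  # Equation (12): coefficient vector of violence
    # Equation (10)
    return [silverback[j] - (silverback[j] * Q - x[j] * Q) * A[j] for j in range(dim)]


if __name__ == "__main__":
    random.seed(7)
    print(compete_for_females([0.0, 0.0, 0.0], [1.0, 1.0, 1.0]))
```

In a full exploitation pass, this operator would be applied when C < W, and the following-the-silverback operator when C ≥ W. Note that a gorilla already at the silverback's position is left unchanged, since the bracketed term vanishes.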

4. The Results and Discussion of Experiment

In this section, 23 classical benchmark functions are used to evaluate the performance of the proposed algorithm. Nine optimization algorithms are selected for comparison, namely the Gorilla Troops Optimizer (GTO) [41], Arithmetic Optimization Algorithm (AOA) [43], Salp Swarm Algorithm (SSA) [2], Whale Optimization Algorithm (WOA) [44], Grey Wolf Optimizer (GWO) [6], Particle Swarm Optimization (PSO) [20], Random-Opposition-Based Learning Grey Wolf Optimizer (ROLGWO) [45], Dynamic Sine–Cosine Algorithm (DSCA) [46] and Hybridizing Sine–Cosine Algorithm with Harmony Search (HSCAHS) [47]. For fairness, the maximum number of iterations and the population size of all algorithms are set to 500 and 30, respectively.

The 23 classical benchmark functions can be divided into three categories: unimodal functions (UM), multimodal functions (MM) and composite functions (CM). The unimodal functions (F1~F7) have only one optimal solution and are commonly used to evaluate the exploitation ability of an algorithm. The multimodal functions (F8~F13) have multiple local optima and can be used to evaluate an algorithm's ability to escape local optima in complex situations. The composite functions (F14~F23) are usually adopted to evaluate the stability of algorithms [48].
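For reference, three members of the classical suite can be written in a few lines. The sketch assumes the numbering commonly used for the 23 classical functions (F1 sphere, F9 Rastrigin, F10 Ackley); the paper's own definitions are not reproduced in this text, so treat the numbering as an assumption. All three have a global minimum of 0 at the origin, but F9 and F10 surround it with many local minima.

```python
import math


def sphere(x):
    """F1 (unimodal): sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


def rastrigin(x):
    """F9 (multimodal): regularly spaced local minima, global minimum 0 at the origin."""
    return sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) + 10.0 for v in x)


def ackley(x):
    """F10 (multimodal): nearly flat outer region with a deep hole at the origin."""
    n = len(x)
    s1 = sum(v * v for v in x) / n
    s2 = sum(math.cos(2.0 * math.pi * v) for v in x) / n
    return -20.0 * math.exp(-0.2 * math.sqrt(s1)) - math.exp(s2) + 20.0 + math.e


if __name__ == "__main__":
    for fn in (sphere, rastrigin, ackley):
        print(fn.__name__, fn([0.0] * 30), fn([1.0] * 30))
```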

In addition, the benchmark functions F1~F30 of CEC2014 and F1~F10 of CEC2020 are used in further experiments [49]. These benchmarks are widely used to evaluate the problem-solving ability of optimization algorithms, in particular whether an algorithm can balance exploration and exploitation and avoid local optima.

4.1. The Experiments on Classical Benchmark

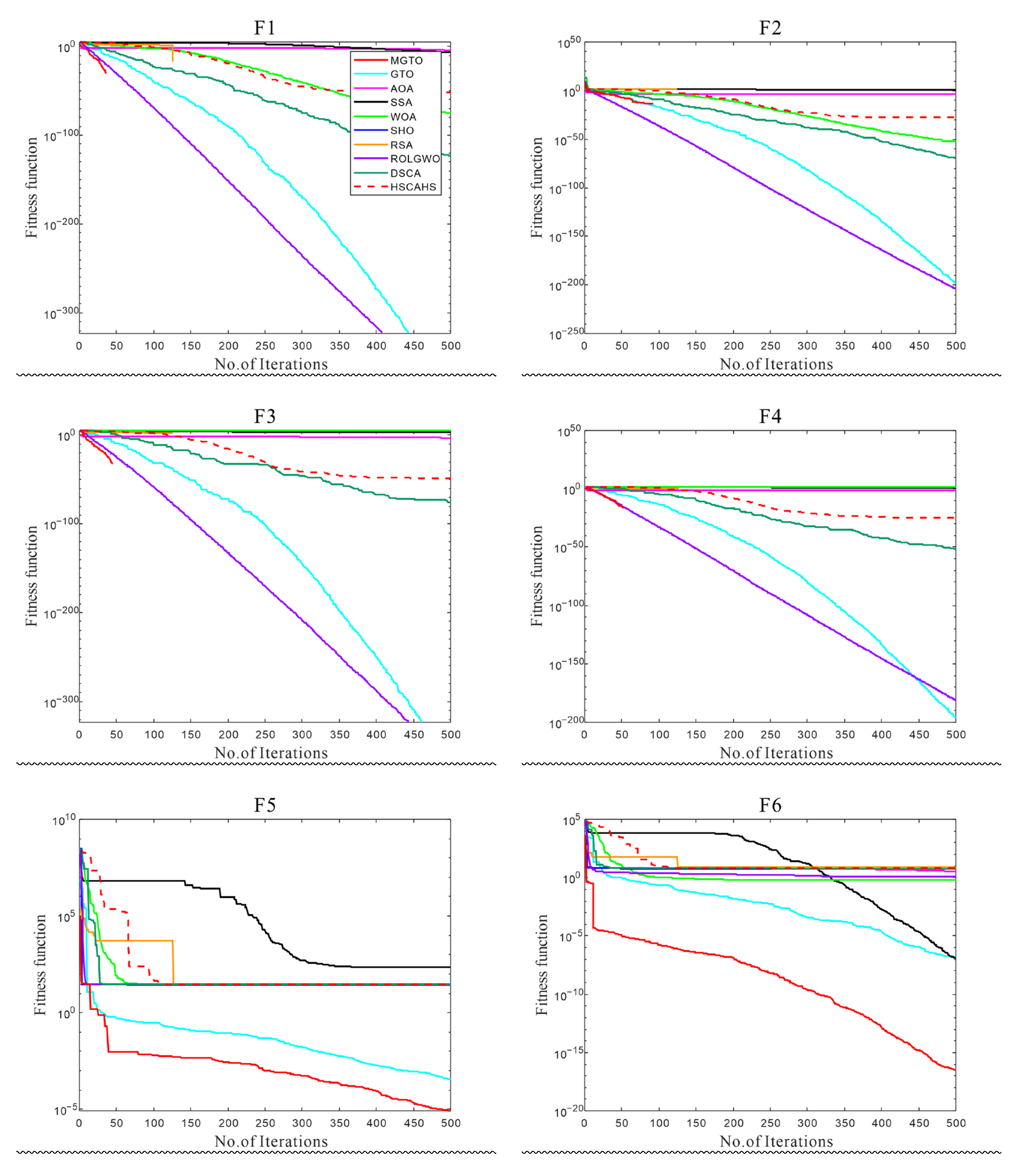

4.1.1. The Convergence Analysis

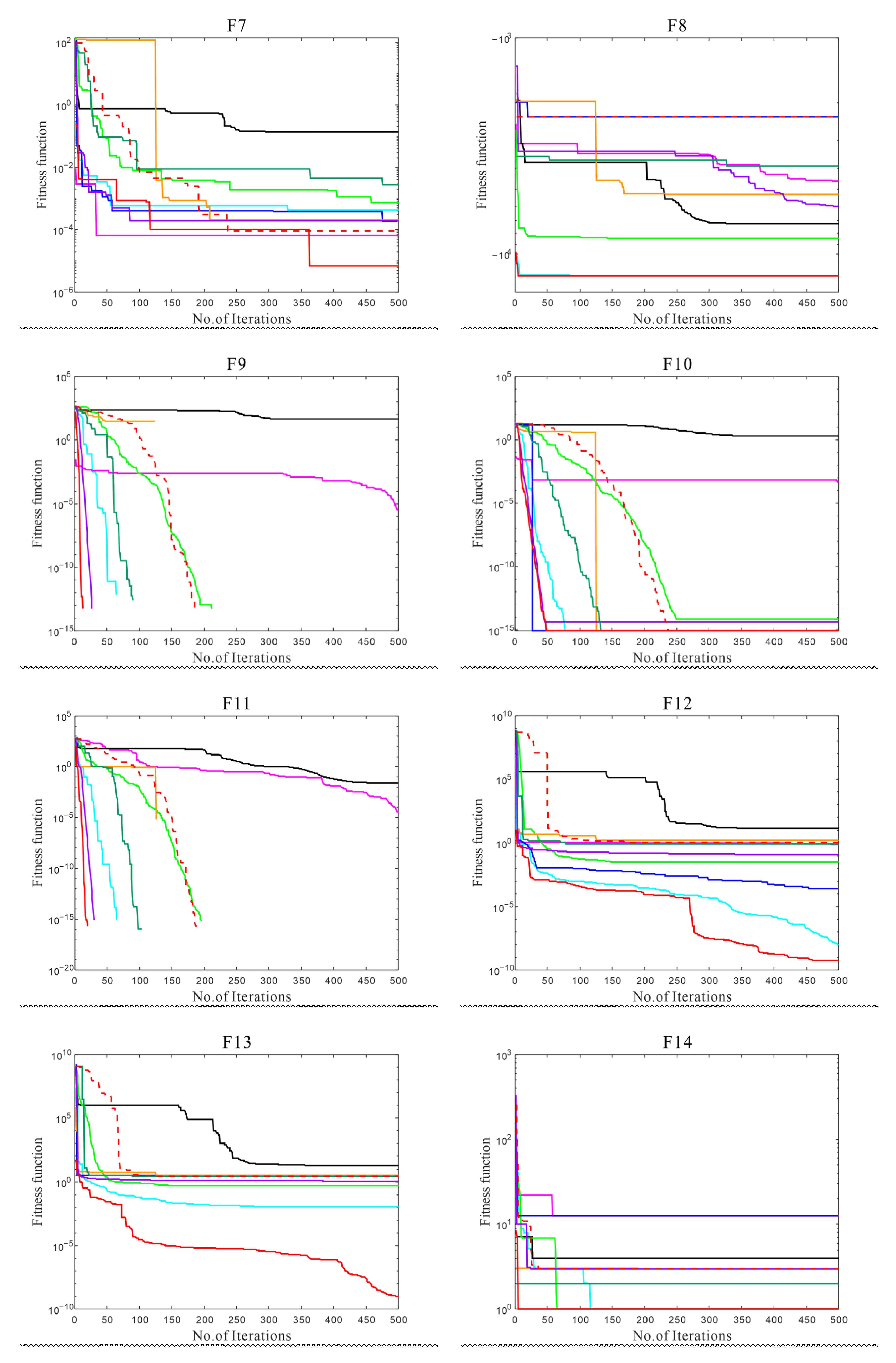

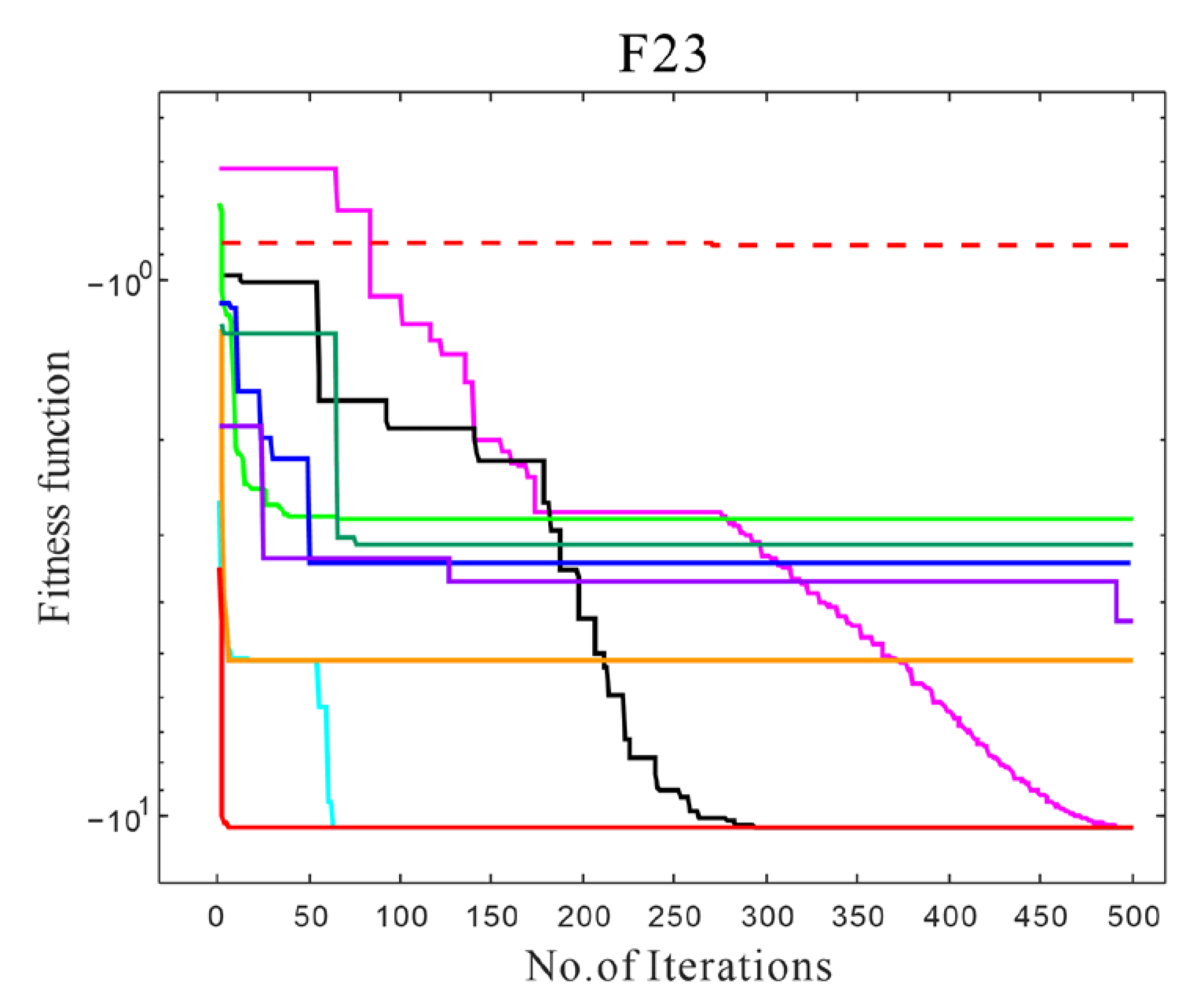

To evaluate the advantages of MGTO on the benchmark functions, MGTO is compared with the traditional GTO, AOA, SSA, WOA, GWO, PSO, ROLGWO, DSCA and HSCAHS. The results in Figure 4 show that MGTO achieves better results more efficiently than the other algorithms. The convergence curves are drawn on a semi-logarithmic scale to make the differences between them clearly visible. Because the logarithm of 0 is undefined, a curve is interrupted once an algorithm reaches 0. On F1~F4, F9 and F11, the curve of the modified algorithm stops partway because it converges to 0, which reflects its high convergence accuracy. In addition, the proposed algorithm is superior to the other algorithms on F1~F13, which suggests that MGTO keeps a good balance between exploration and exploitation when solving complicated problems. On F14~F23, MGTO also shows clear superiority, indicating that it is competitive on composite functions, although on F17 and F18 DSCA and PSO also perform excellently and obtain high-quality results. In summary, MGTO finds better solutions than the other algorithms in most cases, which supports its effectiveness.

4.1.2. The Results of the Classical Benchmark

The results on the 23 classical functions are listed in Table 1. All results are expressed as the average value (Avg) and standard deviation (Std): a lower Avg indicates better convergence performance, and a lower Std indicates better stability. According to Table 1, MGTO performs better in most tests. In particular, MGTO finds the exact optimal value of most functions, whereas the other algorithms cannot. Compared with GTO, the proposed mechanisms improve the ability to obtain the best solution.

For the unimodal functions, MGTO maintains excellent search performance, which clearly demonstrates its strong exploitation ability. Unlike unimodal functions, multimodal functions have many local optima, so they are usually adopted to evaluate an algorithm's ability to explore the search space and escape local optima. As Table 1 shows, the proposed model has only a slight advantage in escaping local optima on these functions, mainly because the basic GTO already provides competitive results. The functions F14~F23 can be used to test the stability and search capability of algorithms. Table 1 shows that MGTO surpasses the traditional GTO on these functions, and its results on several of them are extremely close to the true optimum. These results indicate that MGTO consistently maintains high stability and exploitation ability.

4.2. The Experiments on CEC2014 and CEC2020

In this section, the CEC2014 test functions are used to further evaluate the performance of MGTO. The results of MGTO on the complicated CEC2014 functions are compared with those of nine MAs that are frequently cited in the literature, and the comparison is given in Table 2. Table 2 shows that MGTO provides the best results in 12 out of 30 cases for Avg and in 15 out of 30 cases for Std. The other cases can be summarized as follows: SSA provides the best Avg on F24 and F25; ROLGWO provides the best Avg on F6, F9 and F11 and the best Std on F13; GTO provides the best Std on F12; AOA provides the best Std on F5; DSCA provides the best Std on F6; and HSCAHS provides the best Std on F9, F10, F11 and F16. In these cases, the other algorithms outperform MGTO only slightly. These results indicate that MGTO has good efficiency and stability in solving global optimization problems: it keeps searching the whole space in subsequent iterations, which improves convergence.

Among the CEC2020 benchmark functions, F1~F4 are the shifted and rotated Bent Cigar function, the shifted and rotated Schwefel's function, the shifted and rotated Lunacek bi-Rastrigin function and the expanded Rosenbrock's plus Griewangk's function; F5~F7 are hybrid functions, and F8~F10 are composition functions. The CEC2020 results of MGTO, GTO, AOA, SSA, WOA, GWO, PSO, ROLGWO, DSCA and HSCAHS are given in Table 3. According to Table 3, MGTO provides the best results in 6 out of 10 cases for Avg and 7 out of 10 cases for Std. The other cases can be summarized as follows: ROLGWO provides the best Avg on F3, RSA provides the best Std on F3 and SSA provides the best Std on F9. On F4, all algorithms achieve the same Avg of 1.90E+03. Therefore, the proposed algorithm enhances the performance of GTO and solves the CEC2020 functions better. Overall, MGTO performs better in solving these optimization problems.

4.3. The Non-Parametric Statistic Test

Although the above benchmark results confirm the superiority of MGTO, Wilcoxon's rank-sum test [50,51] at a 5% significance level is used to further assess whether the differences between the proposed algorithm and the other algorithms are statistically significant. The p-values of the test are shown in Table 4, Table 5 and Table 6. A p-value below 0.05 indicates a significant difference between two algorithms. According to Table 4, Table 5 and Table 6, most p-values are below 0.05, so the superiority of MGTO is statistically significant on most benchmark functions. Thus, MGTO is considered to have significant advantages over the other algorithms.
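The rank-sum test itself is simple to compute. The paper does not state which implementation was used (SciPy's `scipy.stats.ranksums` is one common choice); the standard-library sketch below uses the large-sample normal approximation with average ranks for ties and no tie or continuity correction, which is an assumption about the exact variant. The function name `rank_sum_test` is hypothetical.

```python
import math


def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank-sum test via the normal approximation.

    Returns (z, p). Tied values share the average of their ranks; no tie
    or continuity correction is applied to the variance.
    """
    pooled = sorted([(v, 0) for v in a] + [(v, 1) for v in b])
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + j) / 2.0 + 1.0  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        i = j + 1
    n1, n2 = len(a), len(b)
    w = sum(r for r, (_, grp) in zip(ranks, pooled) if grp == 0)  # rank sum of sample a
    mu = n1 * (n1 + n2 + 1) / 2.0
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (w - mu) / sigma
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided: 2 * (1 - Phi(|z|))
    return z, p


if __name__ == "__main__":
    print(rank_sum_test(list(range(1, 31)), list(range(31, 61))))
```

Applied to, for example, the 30 final costs of MGTO and of one competitor on a single function, a p-value below 0.05 would be read as a significant difference at the 5% level.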

5. MGTO for Solving Engineering-Optimization Problems

In this section, seven engineering problems are used to evaluate the performance of MGTO, namely the welded-beam-design, pressure-vessel-design, speed-reducer-design, compression/tension-spring-design, three-bar-truss-design, car-crashworthiness-design and tubular-column-design problems. For each problem, MGTO is run independently 30 times with a maximum of 500 iterations and a population size of 30. MGTO is also compared with other algorithms on each problem; the results are given in the following tables, and the corresponding conclusions are discussed.

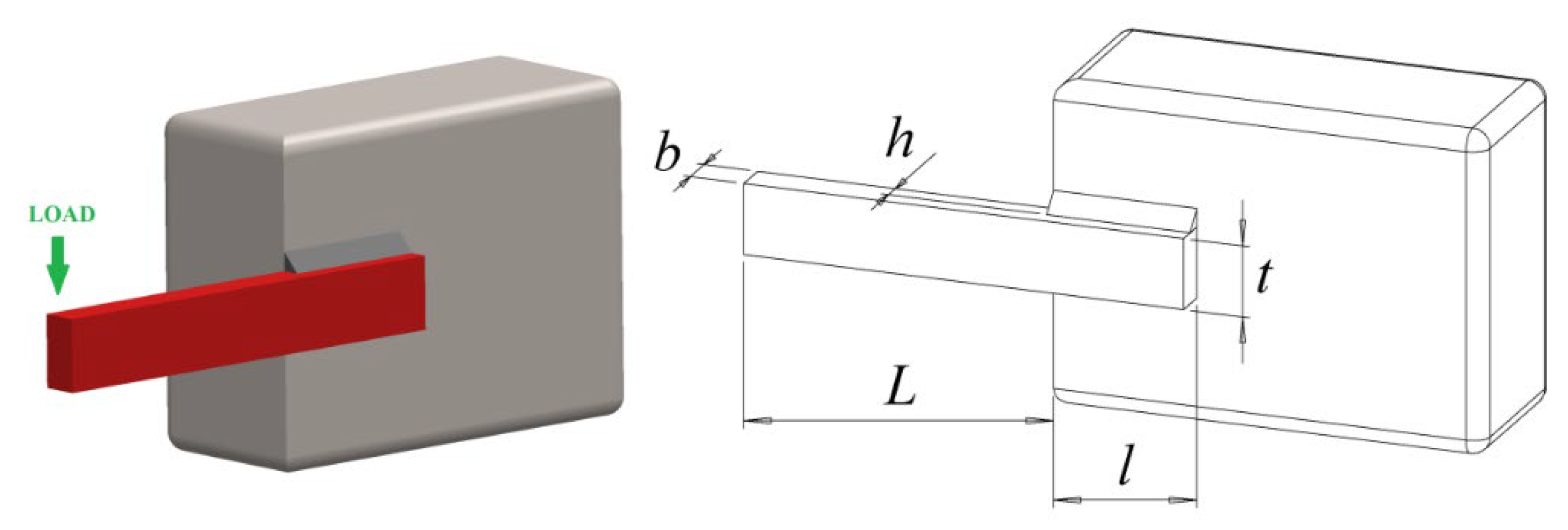

5.1. Welded-Beam Design

The welded-beam-design problem is a common engineering-optimization problem developed by Rao et al. [52]. Figure 5 shows a schematic diagram of a welded beam. The constraints fall into four categories: shear stress, bending stress, buckling load and deflection of the beam, and the cost is minimized subject to these four constraints. The problem has four variables: the thickness of the weld (h), the length of the weld (l), the height of the beam (t) and the thickness of the bar (b).

The mathematical model of the above problem is presented as follows:

Constraints:

where we have the following:

The results of MGTO and the other comparative optimization algorithms are listed in Table 7. According to Table 7, MGTO obtains an effective solution to the welded-beam-design problem compared with the other algorithms.
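Because the mathematical model is not reproduced in this text, the sketch below encodes one version of the welded-beam problem that appears in many metaheuristic papers, with P = 6000 lb, L = 14 in, E = 30 × 10^6 psi, G = 12 × 10^6 psi, τmax = 13,600 psi, σmax = 30,000 psi and δmax = 0.25 in. Several terms, notably J and δ, differ between papers, so these constants and expressions are assumptions rather than the exact model behind Table 7. Only the four constraint categories named above are returned; side constraints such as h ≤ b are omitted, and the function name is hypothetical.

```python
import math

P, L_BEAM = 6000.0, 14.0            # load (lb) and overhang length (in)
E, G = 30e6, 12e6                   # Young's and shear moduli (psi)
TAU_MAX, SIGMA_MAX, DELTA_MAX = 13600.0, 30000.0, 0.25


def welded_beam(x):
    """Cost and the four named constraints (each g <= 0) for x = (h, l, t, b)."""
    h, l, t, b = x
    cost = 1.10471 * h * h * l + 0.04811 * t * b * (14.0 + l)

    # shear stress in the weld (primary tau1 and torsional tau2 components)
    tau1 = P / (math.sqrt(2.0) * h * l)
    M = P * (L_BEAM + l / 2.0)
    R = math.sqrt(l * l / 4.0 + ((h + t) / 2.0) ** 2)
    J = 2.0 * (math.sqrt(2.0) * h * l * (l * l / 12.0 + ((h + t) / 2.0) ** 2))
    tau2 = M * R / J
    tau = math.sqrt(tau1 ** 2 + 2.0 * tau1 * tau2 * l / (2.0 * R) + tau2 ** 2)

    sigma = 6.0 * P * L_BEAM / (b * t * t)             # bending stress
    delta = 4.0 * P * L_BEAM ** 3 / (E * t ** 3 * b)   # end deflection
    p_c = (4.013 * E * math.sqrt(t * t * b ** 6 / 36.0) / L_BEAM ** 2
           * (1.0 - t / (2.0 * L_BEAM) * math.sqrt(E / (4.0 * G))))  # buckling load

    g = [tau - TAU_MAX, sigma - SIGMA_MAX, delta - DELTA_MAX, P - p_c]
    return cost, g
```

A metaheuristic would typically minimize the cost plus a penalty on any positive entries of g; the constraint-handling scheme used for MGTO is not stated in this text.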

5.2. The Pressure-Vessel Problem

The pressure-vessel problem proposed by Kannan and Kramer [53] aims to minimize the cost of a pressure vessel while meeting the pressure requirements. Figure 6 is a schematic diagram of the pressure vessel. The design variables are the thickness of the shell (Ts), the thickness of the head (Th), the inner radius of the vessel (R) and the length of the cylindrical section (L).

From Table 8, the optimal cost obtained by MGTO is 5734.9131, with x = (Ts, Th, R, L) = (0.7424, 0.3702, 40.3196, 200). This cost is lower than those obtained by the other optimization algorithms, including GTO [41], HHO [24], SMA [54], WOA [44], GWO [6], MVO [12] and GA [15], indicating that the proposed model has merits in solving the pressure-vessel problem.
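A sketch of the commonly used pressure-vessel formulation follows, with the cost coefficients and the four constraints as they usually appear in the literature; since the model is not reproduced in this text, treat them as assumptions. In the original statement of the problem, Ts and Th are restricted to multiples of 0.0625 in; this sketch treats all variables as continuous, and the function name is hypothetical.

```python
import math


def pressure_vessel(x):
    """Cost and constraints (each g <= 0) for x = (Ts, Th, R, L)."""
    ts, th, r, length = x
    cost = (0.6224 * ts * r * length + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * length + 19.84 * ts ** 2 * r)
    g = [
        -ts + 0.0193 * r,                   # minimum shell thickness
        -th + 0.00954 * r,                  # minimum head thickness
        -math.pi * r ** 2 * length - 4.0 / 3.0 * math.pi * r ** 3 + 1296000.0,  # volume
        length - 240.0,                     # length limit
    ]
    return cost, g
```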

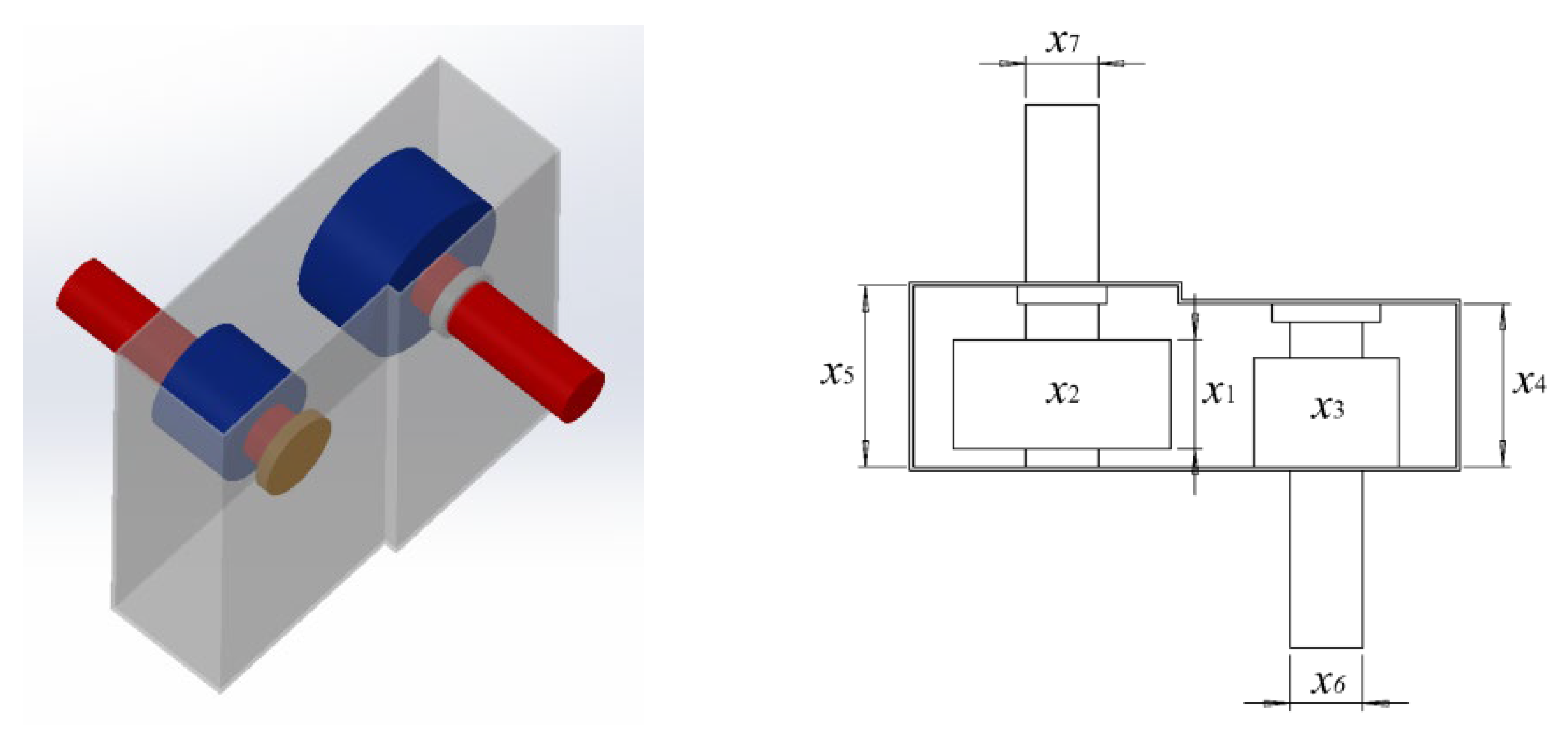

5.3. Speed-Reducer Design

The aim of this problem is to minimize the weight of a speed reducer with respect to seven variables, namely the face width (x1), the module of the teeth (x2), the number of teeth in the pinion (x3, a discrete design variable), the length of the first shaft between the bearings (x4), the length of the second shaft between the bearings (x5), the diameter of the first shaft (x6) and the diameter of the second shaft (x7) [55]. Four kinds of constraints must be satisfied: surface stress, bending stress of the gear teeth, stresses in the shafts and transverse deflection of the shafts. The speed reducer is shown in Figure 7.

In addition, the formulas of this problem are expressed as follows:

The comparative optimization results of the speed-reducer-design problem are shown in Table 9.

Compared with AO [56], PSO [20], AOA [43], MFO [57], GA [15], SCA [58] and MDA [59], MGTO achieves the best optimum weight, 2995.4373. Thus, MGTO has an obvious advantage in solving the speed-reducer-design problem.
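The weight function and the eleven constraints usually stated for the speed-reducer problem can be written as follows. They are reproduced from the common form of the problem in the literature, not from this paper, so the coefficients are assumptions; x3 is treated as continuous here even though it is an integer in the original problem, and the function name is hypothetical.

```python
import math


def speed_reducer(x):
    """Weight and constraints (each g <= 0) for x = (x1, ..., x7)."""
    x1, x2, x3, x4, x5, x6, x7 = x
    weight = (0.7854 * x1 * x2 ** 2 * (3.3333 * x3 ** 2 + 14.9334 * x3 - 43.0934)
              - 1.508 * x1 * (x6 ** 2 + x7 ** 2)
              + 7.4777 * (x6 ** 3 + x7 ** 3)
              + 0.7854 * (x4 * x6 ** 2 + x5 * x7 ** 2))
    g = [
        27.0 / (x1 * x2 ** 2 * x3) - 1.0,              # bending stress of the teeth
        397.5 / (x1 * x2 ** 2 * x3 ** 2) - 1.0,        # surface stress
        1.93 * x4 ** 3 / (x2 * x3 * x6 ** 4) - 1.0,    # transverse deflection, shaft 1
        1.93 * x5 ** 3 / (x2 * x3 * x7 ** 4) - 1.0,    # transverse deflection, shaft 2
        math.sqrt((745.0 * x4 / (x2 * x3)) ** 2 + 16.9e6) / (110.0 * x6 ** 3) - 1.0,  # stress, shaft 1
        math.sqrt((745.0 * x5 / (x2 * x3)) ** 2 + 157.5e6) / (85.0 * x7 ** 3) - 1.0,  # stress, shaft 2
        x2 * x3 / 40.0 - 1.0,                          # geometric limits
        5.0 * x2 / x1 - 1.0,
        x1 / (12.0 * x2) - 1.0,
        (1.5 * x6 + 1.9) / x4 - 1.0,                   # shaft design rules
        (1.1 * x7 + 1.9) / x5 - 1.0,
    ]
    return weight, g
```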

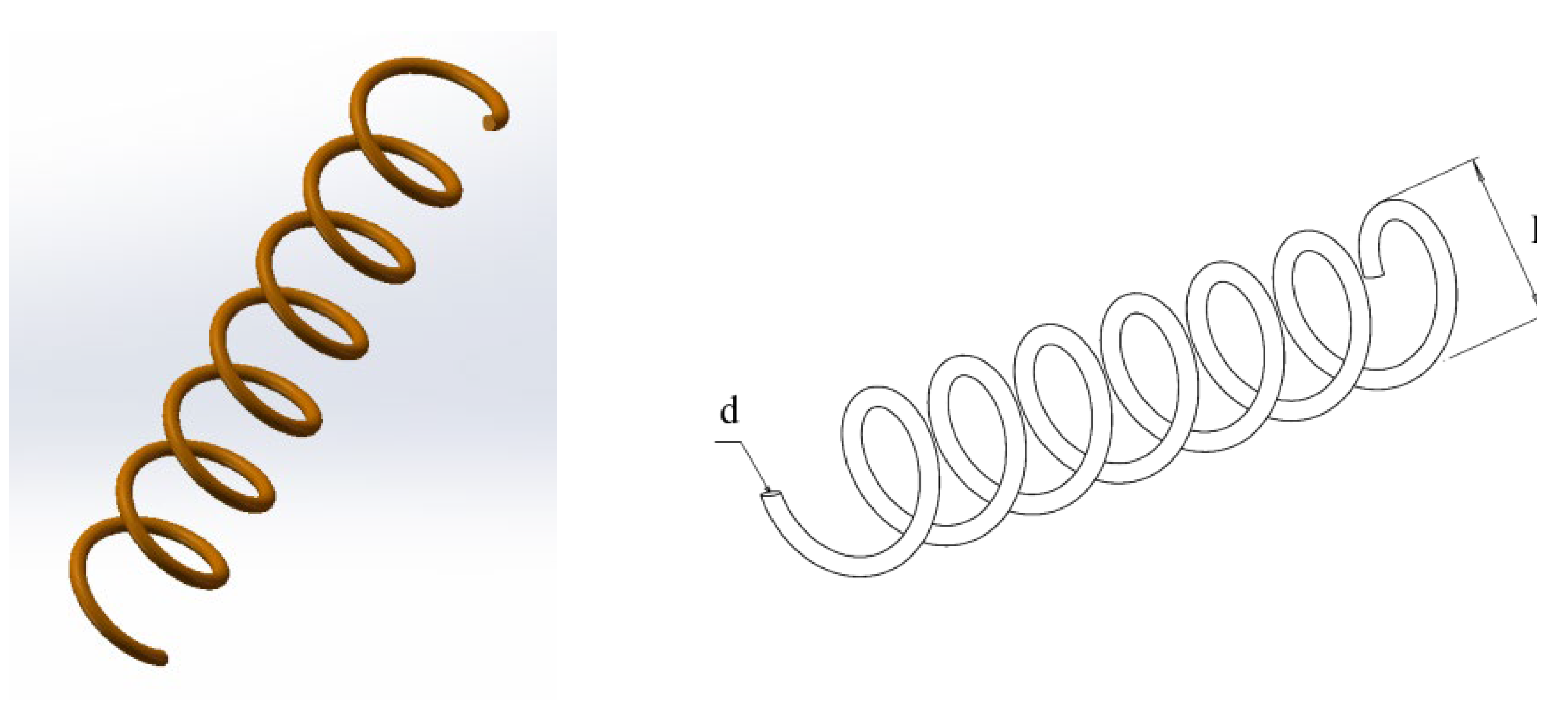

5.4. Compression/Tension-Spring Design

The compression/tension-spring-design problem minimizes the weight of the spring shown in Figure 8 [60]. Three constraints, on frequency, shear stress and deflection, must be satisfied in the design. As shown in Figure 8, there are three variables: the wire diameter (d), the mean coil diameter (D) and the number of active coils (N).

The mathematical model is as follows:

As Table 10 shows, the optimal solution obtained by MGTO is 0.0099, with x = (d, D, N) = (0.05000, 0.3744, 8.5465). According to the results, MGTO obtains the best solution in comparison with the other optimization algorithms.
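A sketch of the usual spring formulation follows. Besides the three constraints named above, the widely used version also includes an outer-diameter limit, included here as the fourth entry; the coefficients are taken from the common form of the problem and are assumptions with respect to this paper, and the function name is hypothetical.

```python
def spring(x):
    """Weight and constraints (each g <= 0) for x = (d, D, N)."""
    d, D, N = x
    weight = (N + 2.0) * D * d ** 2
    g = [
        1.0 - D ** 3 * N / (71785.0 * d ** 4),                  # deflection
        (4.0 * D ** 2 - d * D) / (12566.0 * (D * d ** 3 - d ** 4))
        + 1.0 / (5108.0 * d ** 2) - 1.0,                        # shear stress
        1.0 - 140.45 * d / (D ** 2 * N),                        # surge frequency
        (d + D) / 1.5 - 1.0,                                    # outer diameter
    ]
    return weight, g
```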

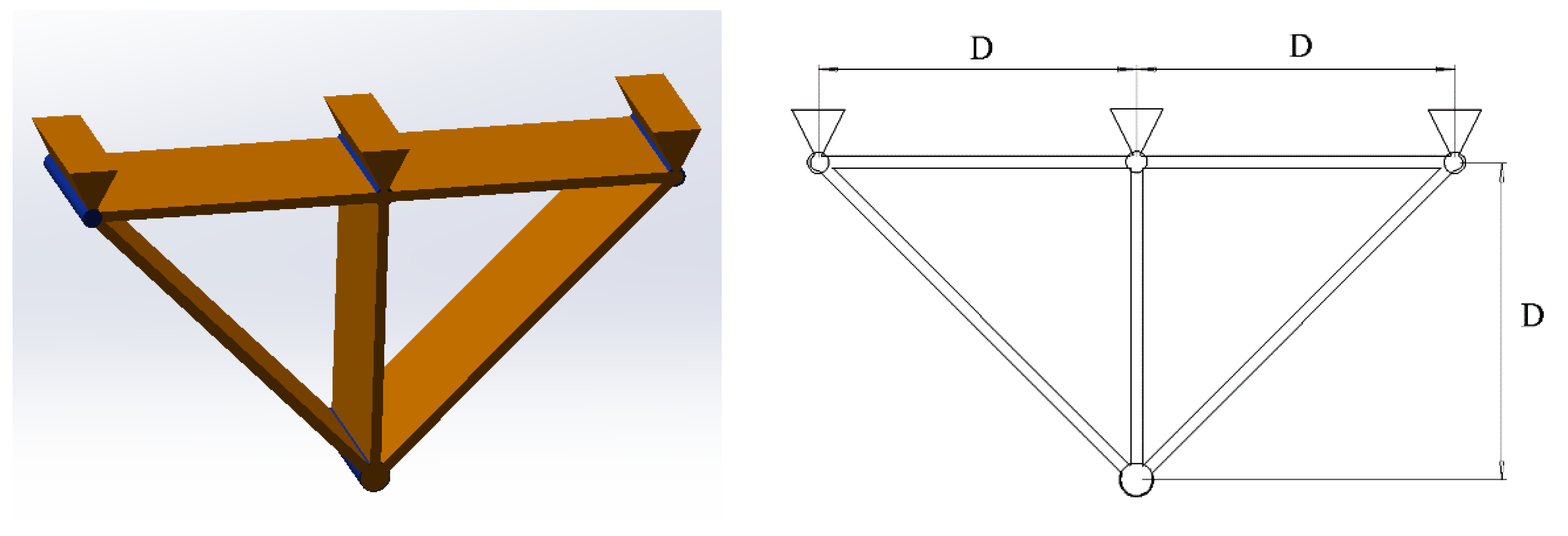

5.5. Three-Bar-Truss Design

The three-bar-truss-design problem [61] is a typical engineering problem used to evaluate the performance of algorithms. Its aim is to minimize the weight of the truss, subject to deflection, stress and buckling constraints on each member, by adjusting the cross-sectional areas A1, A2 and A3. The truss is illustrated in Figure 9.

The problem has a non-linear objective function with three constraints and two decision variables, since symmetry requires A1 = A3. The mathematical model is given as follows:

Variable range:

where we have the following:

The results of the three-bar-truss-design problem are shown in Table 11. MGTO shows significant superiority over the other optimization algorithms on this problem, obtaining the lowest weight with x = (0.7884, 0.4081).
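The usual statement of the three-bar-truss problem, with A1 = A3 by symmetry, a bar length of 100 cm, and P = σ = 2 kN/cm², can be sketched as follows. The constants are the commonly quoted ones and are assumptions here, since the model is not reproduced in this text; the function name is hypothetical.

```python
import math

P, SIGMA, LENGTH = 2.0, 2.0, 100.0  # load and allowable stress (kN/cm^2), length (cm)


def three_bar_truss(x):
    """Weight and stress constraints (each g <= 0) for x = (A1, A2), with A3 = A1."""
    a1, a2 = x
    r2 = math.sqrt(2.0)
    weight = (2.0 * r2 * a1 + a2) * LENGTH
    denom = r2 * a1 ** 2 + 2.0 * a1 * a2
    g = [
        (r2 * a1 + a2) / denom * P - SIGMA,
        a2 / denom * P - SIGMA,
        1.0 / (r2 * a2 + a1) * P - SIGMA,
    ]
    return weight, g
```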

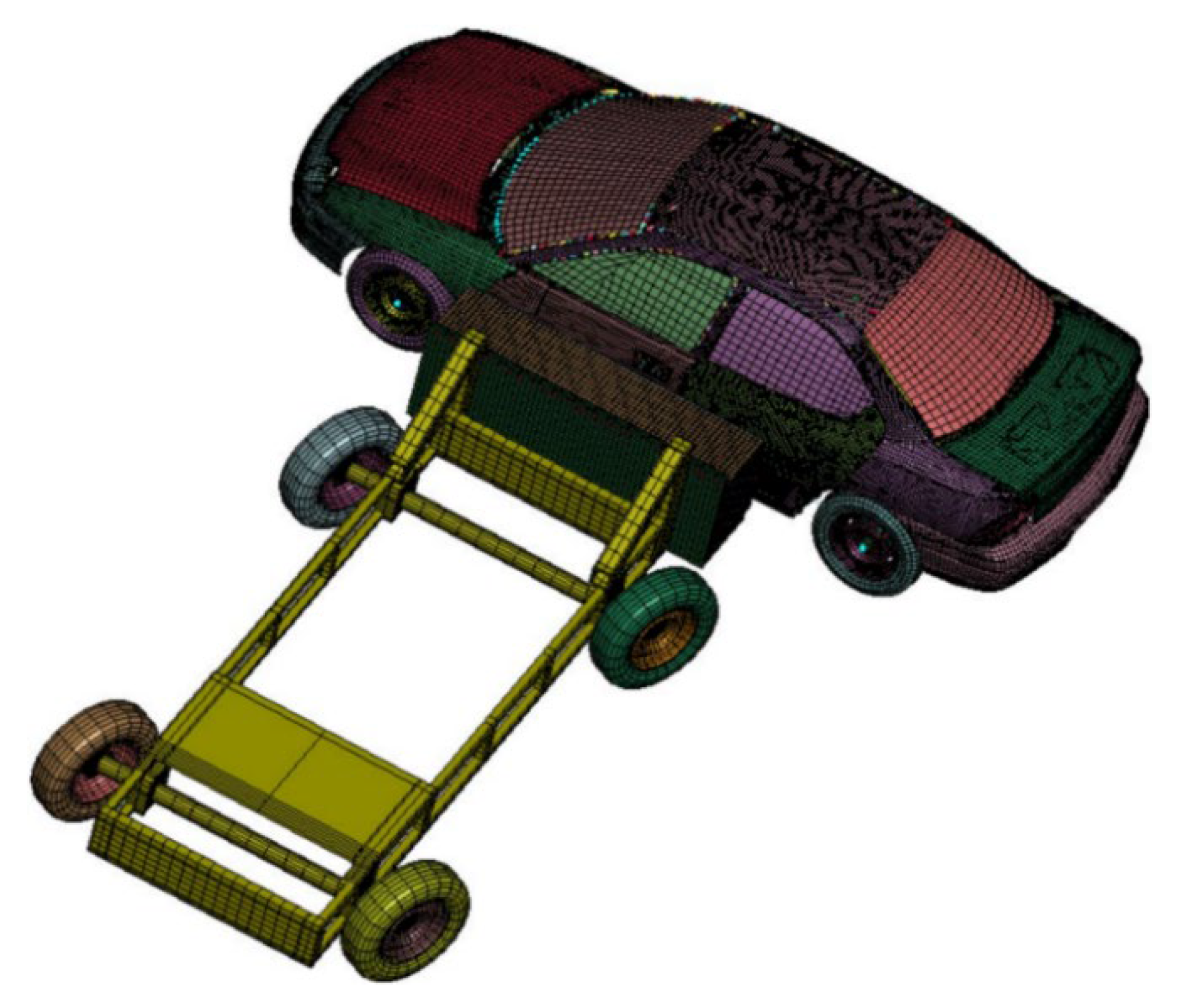

5.6. Car-Crashworthiness Design

The car-crashworthiness-design problem proposed by Gu et al. aims to improve vehicle safety and reduce casualties. The details are shown in Figure 10 [62].

In this problem, eleven design variables are used to minimize the weight: the thicknesses of the inner B-pillar, the B-pillar reinforcement, the inner floor side, the crossbeam, the door beam, the door beltline reinforcement and the roof rail (x1–x7), the materials of the inner B-pillar and the B-pillar reinforcement (x8, x9), and the barrier height and impact position (x10, x11). The mathematical formulation is as follows:

The results of the car-crashworthiness-design problem are listed in Table 12. The optimal weight obtained by MGTO is 23.1894, which indicates that MGTO is an efficient algorithm for this problem.
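Only the weight objective of the car-crashworthiness problem is sketched below, in the linear form commonly quoted for Gu et al.'s model; the ten constraints on the side-impact responses are omitted, and the coefficients are assumptions with respect to this paper. In the usual statement, x1–x7 lie in [0.5, 1.5], x8 and x9 each take one of two material values, and x10 and x11 lie in [−30, 30]. The function name is hypothetical.

```python
def car_weight(x):
    """Weight objective for x = (x1, ..., x11). x6 and x8-x11 enter the
    problem only through the constraints, which this sketch omits."""
    x1, x2, x3, x4, x5, _x6, x7 = x[:7]
    return 1.98 + 4.90 * x1 + 6.67 * x2 + 6.98 * x3 + 4.01 * x4 + 1.78 * x5 + 2.73 * x7
```

Because the objective is linear and increasing in each thickness, the difficulty of the problem lies entirely in the omitted constraints.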

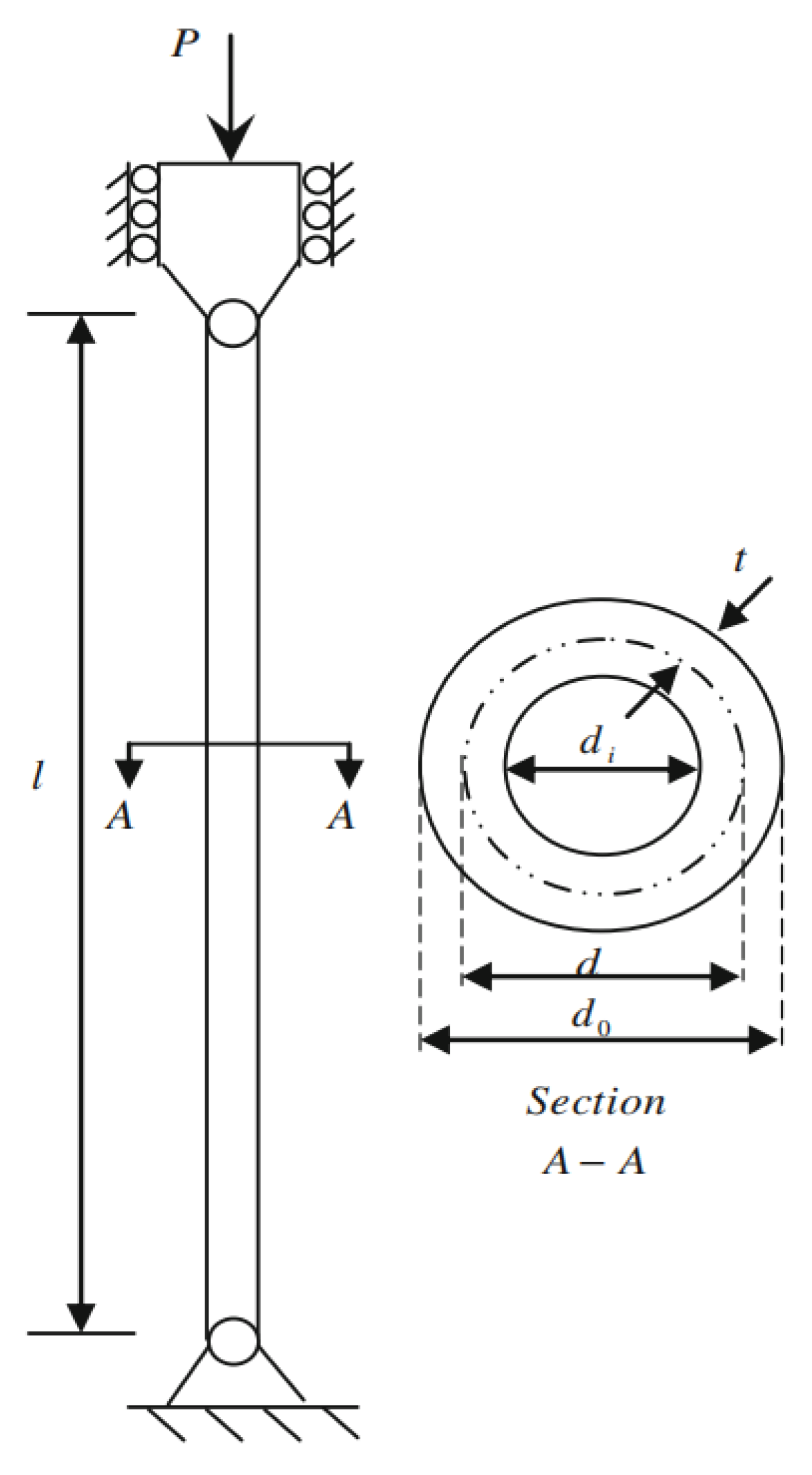

5.7. Tubular-Column Design

As shown in Figure 11, a tubular column is designed to support a compressive load P = 2500 kgf at minimum cost. The problem data include the yield stress, elastic modulus and density of the material.

The objective function accounts for both material and manufacturing costs. The formulas are as follows:

The comparative optimization results of the tubular-column-design problem are shown in Table 13.

The optimal solution obtained by MGTO is 26.5313, with x = (5.4511, 0.2919). The proposed algorithm therefore shows advantages in solving the tubular-column-design problem.
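A sketch of the tubular-column model in its commonly quoted form follows, with yield stress 500 kgf/cm², elastic modulus 0.85 × 10^6 kgf/cm², column length 250 cm, cost coefficients 9.8 and 2, and the bounds 2 ≤ d ≤ 14 cm and 0.2 ≤ t ≤ 0.8 cm written as constraints. These values are assumptions with respect to this paper, since its formulas are not reproduced here; the function name is hypothetical.

```python
import math

P, SIGMA_Y, E, LENGTH = 2500.0, 500.0, 0.85e6, 250.0  # kgf, kgf/cm^2, kgf/cm^2, cm


def tubular_column(x):
    """Cost and constraints (each g <= 0) for x = (d, t): mean diameter and wall thickness (cm)."""
    d, t = x
    cost = 9.8 * d * t + 2.0 * d
    g = [
        P / (math.pi * d * t * SIGMA_Y) - 1.0,                                         # yield stress
        8.0 * P * LENGTH ** 2 / (math.pi ** 3 * E * d * t * (d ** 2 + t ** 2)) - 1.0,  # buckling
        2.0 / d - 1.0, d / 14.0 - 1.0,                                                 # 2 <= d <= 14
        0.2 / t - 1.0, t / 0.8 - 1.0,                                                  # 0.2 <= t <= 0.8
    ]
    return cost, g
```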

In summary, this section demonstrates the advantages of the proposed algorithm. Owing to its strong exploration and exploitation abilities, MGTO outperforms the traditional GTO and other existing algorithms on these problems, which suggests that it can be applied to practical engineering problems.

6. Conclusions and Future Work

In this paper, MGTO was proposed to improve the performance of GTO using three strategies. First, the QIBAS algorithm was used to increase the diversity of the silverback's position. Second, TLBO was introduced into the exploitation phase to reduce the gap between the silverback and the other gorillas. Third, QRBL generates the quasi-reflection position of the silverback to enhance the quality of the optimal position.

For a comprehensive evaluation, MGTO was compared with the basic GTO and eight other state-of-the-art optimization algorithms, namely AOA, SSA, WOA, GWO, PSO, ROLGWO, DSCA and HSCAHS, on 23 classical benchmark functions, 30 CEC2014 benchmark functions and 10 CEC2020 benchmark functions. The statistical analysis showed that MGTO is very competitive and outperforms the other algorithms in exploitation, exploration, local-optimum avoidance and convergence behavior. To further investigate the capability of MGTO on real-world engineering problems, it was compared with other algorithms on seven constrained, complex and challenging problems. The results confirmed the high competency of MGTO in optimizing real-world problems with complicated and unknown search domains.

In future work, we plan to apply MGTO to more real-world problems, such as image segmentation and feature selection. Moreover, the NFL theorem continues to motivate researchers to improve existing algorithms.