Chinese Character Component Deformation Based on AHP

Abstract

1. Introduction

- (1) A feature point matching method is proposed. As a prerequisite for the affine transformation, we designed an effective feature point matching method for Chinese characters, which extracts feature points from the skeleton map of a character and matches them according to their neighborhood information.

- (2) An automatic control point selection technique is proposed. We first determined the factors that affect the selection of control points, then grouped the feature points and used the Analytic Hierarchy Process (AHP) to calculate the weight of each group. The group with the highest weight is used as the optimal set of control points for calculating the affine transformation parameters.
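Contribution (1) extracts feature points from the character skeleton. The exact detection rule is not given in this section. A common choice, assumed in the minimal sketch below, is the crossing-number test on a one-pixel-wide binary skeleton: a skeleton pixel with exactly one 0→1 transition around its 8-neighborhood is a stroke endpoint, and one with three or more is a junction. The function name `skeleton_feature_points` is hypothetical, and the neighborhood-based matching step is not reproduced here.

```python
import numpy as np

# 8-neighbour offsets (row, col) in circular order, starting from north.
RING = [(-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1), (-1, -1)]

def skeleton_feature_points(skel):
    """Return sorted (row, col) lists of endpoints and junctions of a binary skeleton."""
    s = (np.asarray(skel) > 0).astype(np.int32)
    h, w = s.shape
    padded = np.pad(s, 1)  # zero border so edge pixels also have 8 neighbours
    ring = [padded[1 + dr:1 + dr + h, 1 + dc:1 + dc + w] for dr, dc in RING]
    # Crossing number: count 0 -> 1 transitions while walking once around the ring.
    crossings = sum(((ring[k] == 0) & (ring[(k + 1) % 8] == 1)).astype(np.int32)
                    for k in range(8))
    endpoints = sorted(map(tuple, np.argwhere((s == 1) & (crossings == 1)).tolist()))
    junctions = sorted(map(tuple, np.argwhere((s == 1) & (crossings >= 3)).tolist()))
    return endpoints, junctions

# Hypothetical T-shaped skeleton: a horizontal stroke with a vertical stroke below its middle.
skel = np.zeros((5, 5), dtype=np.uint8)
skel[2, :] = 1
skel[2:, 2] = 1
ends, juncs = skeleton_feature_points(skel)
print("endpoints:", ends)   # endpoints: [(2, 0), (2, 4), (4, 2)]
print("junctions:", juncs)  # junctions: [(2, 2)]
```

The crossing number is preferred here over a plain neighbour count, which would mark several pixels around a T-junction as junctions because of diagonal adjacency.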

2. Related Works

2.1. Feature Point Detection and Matching

2.2. Chinese Character Component Deformation

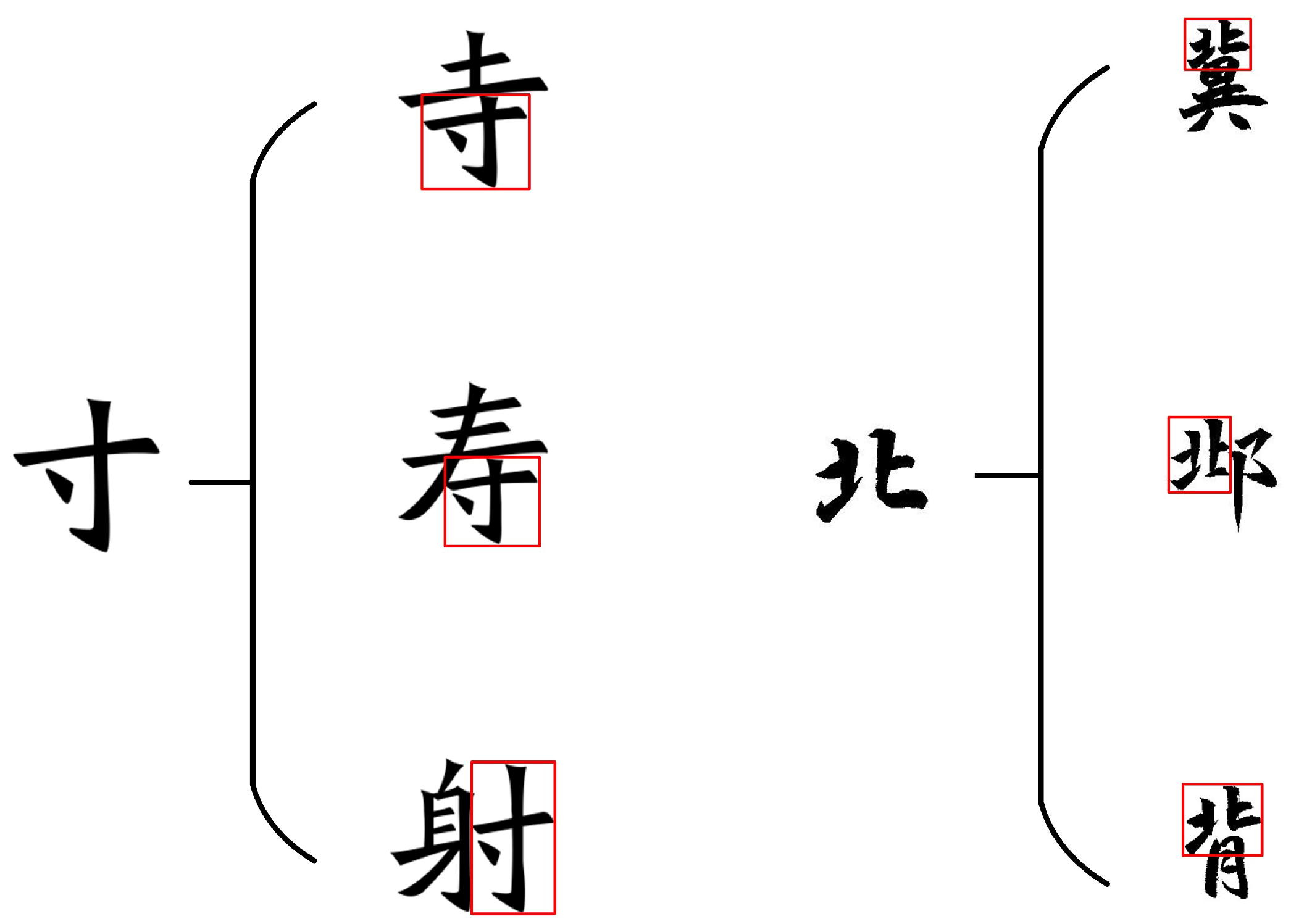

3. Feature Point Matching

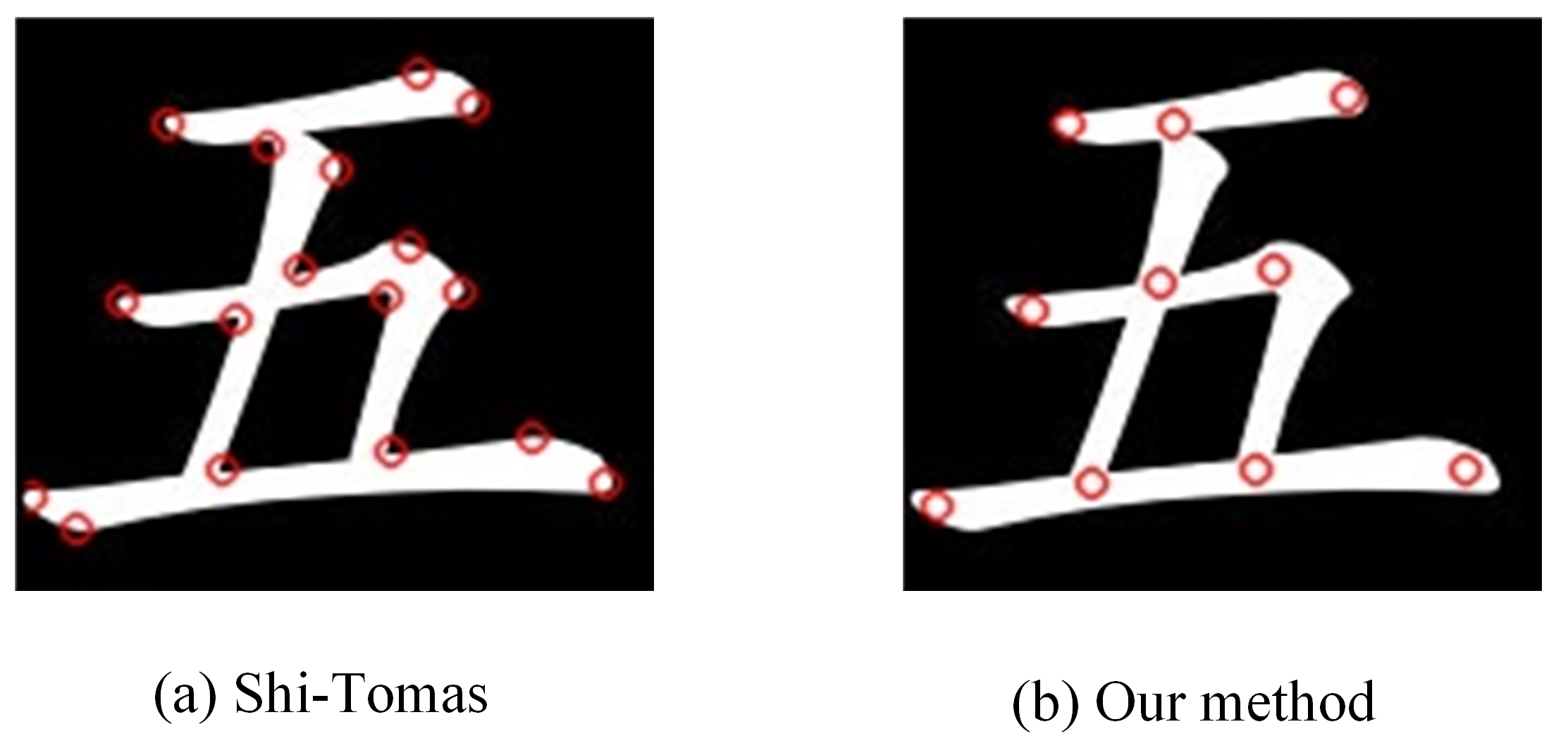

3.1. Feature Point Detection

3.2. Feature Point Matching

4. Control Point Selection and Component Deformation

- (1) Establishing the hierarchical structure model;

- (2) Hierarchical single ranking and consistency test;

- (3) Hierarchical total ranking and consistency test.
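Steps (2) and (3) turn local rankings into a global one. As a minimal sketch, assuming the single-ranking weight vectors have already been computed, the total weight of each scheme (here, each candidate group of feature points) is the criterion-weighted sum of its single-ranking weights, and the consistency ratio of the total ranking is commonly computed as CR = Σ_j w_j·CI_j / Σ_j w_j·RI_j. The function names and all numbers in the example are hypothetical.

```python
import numpy as np

def total_ranking(criterion_w, scheme_w):
    """Total weights of the schemes.

    criterion_w: length-m weights of the criterion layer.
    scheme_w: n x m array; column j holds the single-ranking weights of the
              n schemes under criterion j.
    """
    return np.asarray(scheme_w, float) @ np.asarray(criterion_w, float)

def total_consistency_ratio(criterion_w, ci, ri):
    """CR of the total ranking: sum_j w_j * CI_j / sum_j w_j * RI_j."""
    w = np.asarray(criterion_w, float)
    return float(w @ np.asarray(ci, float) / (w @ np.asarray(ri, float)))

# Hypothetical example: 3 criteria and 3 candidate feature point groups.
criterion_w = [0.5, 0.3, 0.2]
scheme_w = [[0.5, 0.2, 0.3],
            [0.3, 0.5, 0.3],
            [0.2, 0.3, 0.4]]
global_w = total_ranking(criterion_w, scheme_w)
best_group = int(np.argmax(global_w))      # group used as control points
cr = total_consistency_ratio(criterion_w, ci=[0.01, 0.02, 0.005], ri=[0.56] * 3)
print(global_w, best_group, round(cr, 4))
```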

4.1. Hierarchical Structure Model

4.2. Hierarchical Single Ranking

4.2.1. Criterion Layer Weights

4.2.2. Scheme Level Weights

4.2.3. Consistency Test

4.3. Hierarchical Total Ranking

4.4. Component Deformation

Algorithm 1. AHP-based Chinese character component deformation.

Input: the original component image and the reference component image.

Output: the component image obtained by deforming the original component image.
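The deformation step of Algorithm 1 can be sketched as follows. This is a minimal sketch under stated assumptions: the selected control points are given as matched (x, y) pairs from the original to the reference component, the affine transform is fitted by least squares (three non-collinear pairs determine it exactly), and the image is resampled by inverse mapping with nearest-neighbour sampling. The function names `estimate_affine` and `warp_affine` and the interpolation choice are hypothetical, not necessarily the authors'.

```python
import numpy as np

def estimate_affine(src, dst):
    """Least-squares 2x3 affine matrix A such that dst ~= A @ [x, y, 1].

    src, dst: matched (x, y) control points, at least 3 non-collinear pairs.
    """
    src = np.asarray(src, float)
    dst = np.asarray(dst, float)
    X = np.hstack([src, np.ones((len(src), 1))])      # n x 3 design matrix
    params, *_ = np.linalg.lstsq(X, dst, rcond=None)  # 3 x 2 solution
    return params.T

def warp_affine(img, A, out_shape=None):
    """Apply affine A to a 2-D image by inverse mapping and nearest-neighbour sampling."""
    img = np.asarray(img)
    h, w = out_shape if out_shape is not None else img.shape
    inv = np.linalg.inv(np.vstack([A, [0.0, 0.0, 1.0]]))
    ys, xs = np.mgrid[0:h, 0:w]
    dest = np.stack([xs.ravel(), ys.ravel(), np.ones(h * w)])
    sx, sy, _ = inv @ dest                             # source coordinates
    sx = np.rint(sx).astype(int)
    sy = np.rint(sy).astype(int)
    inside = (sx >= 0) & (sx < img.shape[1]) & (sy >= 0) & (sy < img.shape[0])
    out = np.zeros(h * w, dtype=img.dtype)
    out[inside] = img[sy[inside], sx[inside]]          # pixels mapped from outside stay 0
    return out.reshape(h, w)

# Hypothetical control points describing a pure translation by (+2, +3).
A = estimate_affine([(0, 0), (1, 0), (0, 1)], [(2, 3), (3, 3), (2, 4)])
component = np.zeros((5, 5), dtype=np.uint8)
component[0, 0] = 1
deformed = warp_affine(component, A)
print(np.round(A, 6))
print(np.argwhere(deformed))
```

Inverse mapping is used so that every output pixel receives a value, which avoids the holes that forward mapping leaves when a component is enlarged.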

5. Results and Analysis

5.1. Evaluation Metrics

5.2. Results

5.2.1. Results of Our Method

5.2.2. Comparison with MLS
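For reference, the MLS baseline (Schaefer et al., 2006) deforms every point with its own affine map, fitted to the control points with weights that decay with distance. The minimal sketch below implements the affine variant of MLS for point coordinates only. The weight exponent `alpha = 1` and the function name `mls_affine` are assumptions, and the MLS configuration used for Figure 11 is not specified in this section.

```python
import numpy as np

def mls_affine(v, p, q, alpha=1.0, eps=1e-12):
    """Affine moving-least-squares deformation of points v, given control points p -> q.

    v: (k, 2) points to deform; p, q: (n, 2) source and target control points.
    """
    v = np.atleast_2d(np.asarray(v, float))
    p = np.asarray(p, float)
    q = np.asarray(q, float)
    out = np.empty_like(v)
    for k, x in enumerate(v):
        d2 = ((p - x) ** 2).sum(axis=1)
        if np.any(d2 < eps):              # on a control point: interpolate exactly
            out[k] = q[np.argmin(d2)]
            continue
        w = 1.0 / d2 ** alpha             # w_i = 1 / |p_i - v|^(2 * alpha)
        p_star = w @ p / w.sum()          # weighted centroids
        q_star = w @ q / w.sum()
        p_hat, q_hat = p - p_star, q - q_star
        wp = p_hat * w[:, None]
        # Weighted least-squares affine matrix (row-vector convention).
        m = np.linalg.solve(wp.T @ p_hat, wp.T @ q_hat)
        out[k] = (x - p_star) @ m + q_star
    return out

# Hypothetical control points whose targets are an exact affine image of the sources;
# in that case MLS reproduces the same affine map at every point.
p = np.array([[0, 0], [1, 0], [0, 1], [1, 1]], float)
q = 2 * p + np.array([1.0, -1.0])
print(mls_affine([[0.3, 0.7], [1, 1]], p, q))
```

Unlike a single affine transform fitted to all control points, MLS fits a separate map at every point, so it can produce non-uniform local deformation.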

Some of the components generated by MLS have a writing style that differs too much from the original component, and some show excessive local deformation. The fourth row in Figure 11 shows the components obtained using our method, which visually preserve the writing style and structural features of the original components well. The last row shows new Chinese characters composed by combining existing components with components generated by our method; their styles are basically consistent and the components fit together well. Therefore, from a subjective point of view, the components generated by our method are better suited to the Chinese character generation task.

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

- Kaji, S.; Kida, S. Overview of Image-to-Image Translation Using Deep Neural Networks: Denoising, Super-Resolution, Modality-Conversion, and Reconstruction in Medical Imaging. 2019. Available online: https://kyushu-u.pure.elsevier.com/ja/publications/overview-of-image-to-image-translation-by-use-of-deep-neural-netw (accessed on 10 June 2019).

- Su, B.; Liu, X.; Gao, W.; Yang, Y.; Chen, S. A restoration method using dual generate adversarial networks for Chinese ancient characters. Vis. Inform. 2022, 6, 26–34.

- Tian, Y. zi2zi: Master Chinese Calligraphy with Conditional Adversarial Networks. Available online: https://github.com/kaonashi-tyc/zi2zi/ (accessed on 6 April 2017).

- Zhang, J.; Du, J.; Dai, L. Radical analysis network for learning hierarchies of Chinese characters. Pattern Recognit. 2020, 103, 107305.

- Cao, Z.; Lu, J.; Cui, S.; Zhang, C. Zero-shot Handwritten Chinese Character Recognition with hierarchical decomposition embedding. Pattern Recognit. 2020, 107, 107488.

- Ministry of Education of the People’s Republic of China. GF 0014-2009 Specification of Common Modern Chinese Character Components and Component Names; Language & Culture Press: Beijing, China, 2009.

- Feng, W.; Jin, L. Hierarchical Chinese character database based on radical reuse. Comput. Appl. 2006, 3, 714–716. Available online: https://kns.cnki.net/kcms/detail/detail.aspx?FileName=JSJY200603064&DbName=CJFQ2006 (accessed on 1 March 2006).

- Zu, X.; Jin, L. Hierarchical Chinese Character Database Based on Global Affine Transformation; South China University of Technology: Guangzhou, China, 2008.

- Derpanis, K.G. The Harris Corner Detector; York University: Toronto, ON, Canada, 2004.

- Shi, J.; Tomasi, C. Good features to track. In Proceedings of the 1994 IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 21–23 June 1994; pp. 593–600.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded up robust features. In Proceedings of the 9th European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; pp. 404–417.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

- Bian, J.; Lin, W.; Matsushita, Y.; Yeung, S.; Nguyen, T.; Cheng, M. GMS: Grid-Based Motion Statistics for Fast, Ultra-Robust Feature Correspondence. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Liu, M.; Zhan, H.; Liang, X.; Hu, J. Morphing technology for Chinese characters based on skeleton graph matching. J. Beijing Univ. Aeronaut. Astronaut. 2015, 41, 364–368.

- Sun, H.; Tang, Y.M.; Lian, Z.H.; Xiao, J.G. Research on distortionless resizing method for components of Chinese characters. Appl. Res. Comput. 2013, 30, 3155–3158.

- Yao, W.U.; Jiang, J.; Bai, X.; Li, Y. Research on Chinese Characters Described by Track and Point Sets Changing from Regular Script to Semi-Cursive Script. Comput. Eng. Appl. 2019, 30, 232–238+258.

- Lian, Z.; Xiao, J. Automatic Shape Morphing for Chinese characters. In Proceedings of the SIGGRAPH Asia 2012 Technical Briefs, Singapore, 28 November–1 December 2012; pp. 1–4.

- Liu, C.; Lian, Z.; Tang, Y.; Xiao, J.G. Automatical System to Generate High-Quality Chinese Font Libraries Based on Component Assembling. Acta Sci. Nat. Univ. Pekin. 2018, 54, 35–41.

- Hagege, R.; Francos, J.M. Parametric Estimation of Affine Transformations: An Exact Linear Solution. J. Math. Imaging Vis. 2010, 37, 1–16.

- Bentolila, J.; Francos, J.M. Combined Affine Geometric Transformations and Spatially Dependent Radiometric Deformations: A Decoupled Linear Estimation Framework. IEEE Trans. Image Process. 2011, 20, 2886–2895.

- Yao, Y.; Yang, J.; Liang, Z. Generalized centroids with applications for parametric estimation of affine transformations. J. Image Graph. 2016, 21, 1602–1609.

- Schaefer, S.; McPhail, T.; Warren, J. Image deformation using moving least squares. In ACM SIGGRAPH 2006 Papers (SIGGRAPH ’06); Association for Computing Machinery: New York, NY, USA, 2006; pp. 533–540.

- Cinelli, M.; Kadziński, M.; Gonzalez, M. How to support the application of multiple criteria decision analysis? Let us start with a comprehensive taxonomy. Omega 2020, 96, 102261.

- Saaty, T.L. The Analytic Hierarchy Process; McGraw-Hill: New York, NY, USA, 1980.

- Zhang, Y.; Song, J.; Peng, W.; Guo, D.; Song, T. Quantitative Analysis of Chinese Vocabulary Comprehensive Complexity Based on AHP. J. Chin. Inf. Process. 2020, 34, 17–29. Available online: http://jcip.cipsc.org.cn/CN/Y2020/V34/I12/17 (accessed on 20 January 2021).

- Hong, Z. Calculation on High-ranked RI of Analytic Hierarchy Process. Comput. Eng. Appl. 2002, 12, 45–47+150.

| | W | H | N |
|---|---|---|---|
| W | 1 | | |
| H | | 1 | |
| N | | | 1 |

| Degree of Importance | Value |
|---|---|
| Equally Important | 1 |
| Slightly More Important | 3 |
| Strongly More Important | 5 |
| Very Strongly More Important | 7 |
| Extremely More Important | 9 |
| Intermediate Values Between Two Adjacent Judgments | 2, 4, 6, 8 |
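A pairwise comparison matrix only needs its upper triangle: each judgment a_ij is taken from the scale above (or its reciprocal when element j is the more important one), a_ji = 1/a_ij, and a_ii = 1. The minimal sketch below builds such a matrix with exact fractions; the helper name `reciprocal_matrix` is hypothetical, and the example input reproduces the Set A to Set E comparison matrix reported in this article.

```python
from fractions import Fraction

# Allowed judgments: 1..9 on the scale above, or their reciprocals.
SCALE = {Fraction(k) for k in range(1, 10)}

def reciprocal_matrix(n, upper):
    """Build an n x n AHP comparison matrix from {(i, j): a_ij} with i < j."""
    m = [[Fraction(1)] * n for _ in range(n)]   # diagonal (and unset pairs) = 1
    for (i, j), value in upper.items():
        a = Fraction(value)
        if not 0 <= i < j < n:
            raise ValueError(f"expected 0 <= i < j < n, got {(i, j)}")
        if a <= 0 or (a not in SCALE and 1 / a not in SCALE):
            raise ValueError(f"{a} is not on the 1-9 scale")
        m[i][j] = a
        m[j][i] = 1 / a                          # reciprocal judgment
    return m

half, third = Fraction(1, 2), Fraction(1, 3)
upper = {(0, 1): 3, (0, 2): 2, (0, 3): 1, (0, 4): 2,
         (1, 2): half, (1, 3): third, (1, 4): half,
         (2, 3): half, (2, 4): 1,
         (3, 4): 2}
matrix = reciprocal_matrix(5, upper)
for row in matrix:
    print([str(x) for x in row])
```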

| | Set A | Set B | Set C | Set D | Set E |
|---|---|---|---|---|---|
| Set A | 1 | 3 | 2 | 1 | 2 |
| Set B | 1/3 | 1 | 1/2 | 1/3 | 1/2 |
| Set C | 1/2 | 2 | 1 | 1/2 | 1 |
| Set D | 1 | 3 | 2 | 1 | 2 |
| Set E | 1/2 | 2 | 1 | 1/2 | 1 |
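The single-ranking weights of Sets A to E are the normalized principal eigenvector of this matrix. A minimal power-iteration sketch is shown below; the function name `principal_weights` is hypothetical, and `numpy.linalg.eig` would serve equally well. Because the rows of Set A and Set D are identical, as are those of Set C and Set E, each of those pairs receives equal weight.

```python
import numpy as np

# Pairwise comparison matrix of Sets A-E, copied from the table above.
SETS = np.array([
    [1,   3, 2,   1,   2  ],
    [1/3, 1, 1/2, 1/3, 1/2],
    [1/2, 2, 1,   1/2, 1  ],
    [1,   3, 2,   1,   2  ],
    [1/2, 2, 1,   1/2, 1  ],
])

def principal_weights(m, tol=1e-12, max_iter=1000):
    """Power iteration: normalised principal eigenvector and lambda_max of a positive matrix."""
    m = np.asarray(m, float)
    w = np.full(m.shape[0], 1.0 / m.shape[0])
    for _ in range(max_iter):
        nxt = m @ w
        nxt /= nxt.sum()                       # keep the weights summing to 1
        converged = np.abs(nxt - w).max() < tol
        w = nxt
        if converged:
            break
    lambda_max = float(np.mean(m @ w / w))     # all ratios agree at convergence
    return w, lambda_max

weights, lambda_max = principal_weights(SETS)
for name, wt in zip("ABCDE", weights):
    print(f"Set {name}: {wt:.4f}")
print(f"lambda_max = {lambda_max:.4f}")
```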

| Matrix Order | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| RI | 0 | 0 | 0.56 | 0.89 | 1.12 | 1.26 | 1.36 | 1.41 | 1.46 | 1.49 | 1.52 | 1.54 | 1.56 | 1.58 | 1.59 |
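The consistency test divides the consistency index CI = (λmax - n)/(n - 1) by the RI value of the same matrix order from the table above, giving CR = CI/RI; by the usual AHP convention a matrix is accepted when CR < 0.1. The minimal sketch below assumes λmax is taken as the largest real eigenvalue computed with NumPy; the function name `consistency_ratio` is hypothetical.

```python
import numpy as np

# RI values for matrix orders 1..15, copied from the table above.
RI = [0, 0, 0.56, 0.89, 1.12, 1.26, 1.36, 1.41, 1.46, 1.49,
      1.52, 1.54, 1.56, 1.58, 1.59]

def consistency_ratio(matrix):
    """Return (lambda_max, CI, CR) for a positive reciprocal comparison matrix."""
    m = np.asarray(matrix, float)
    n = m.shape[0]
    lam = float(np.max(np.linalg.eigvals(m).real))
    if n <= 2:
        return lam, 0.0, 0.0   # orders 1 and 2 are always consistent (RI = 0)
    ci = (lam - n) / (n - 1)
    return lam, ci, ci / RI[n - 1]

# Sets A-E comparison matrix from this article.
sets = [[1, 3, 2, 1, 2],
        [1/3, 1, 1/2, 1/3, 1/2],
        [1/2, 2, 1, 1/2, 1],
        [1, 3, 2, 1, 2],
        [1/2, 2, 1, 1/2, 1]]
lam, ci, cr = consistency_ratio(sets)
print(f"lambda_max={lam:.4f}, CI={ci:.4f}, CR={cr:.4f}, accepted={cr < 0.1}")
```

A perfectly consistent matrix (a_ik = a_ij·a_jk for all i, j, k) has λmax = n and therefore CR = 0.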

| Components | Times | Mean SSIM Value |
|---|---|---|
| | 7 | 76.78% |
| | 5 | 74.58% |
| | 5 | 75.23% |
| | 4 | 67.12% |
| | 4 | 78.37% |
| | 3 | 74.01% |
| | 3 | 82.80% |
| | 2 | 65.98% |
| | 5 | 81.77% |
| | 5 | 77.36% |
| | 3 | 71.80% |
| | 4 | 73.57% |
| | 2 | 76.78% |
| | 4 | 78.49% |
| | 3 | 69.51% |
| | 3 | 78.32% |
| | 3 | 72.39% |
| | 3 | 78.16% |
| | 3 | 80.28% |
| | 3 | 79.17% |
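SSIM compares the luminance, contrast, and structure of two images. The sketch below is a simplified global form that treats the whole image as a single window; the standard formulation averages the index over local Gaussian windows, and the exact SSIM settings behind the table above are not stated in this section, so the sketch will not reproduce those values. The constants K1 = 0.01 and K2 = 0.03 are the usual defaults; the function name `global_ssim` is hypothetical.

```python
import numpy as np

def global_ssim(x, y, data_range=255.0, k1=0.01, k2=0.03):
    """Single-window SSIM between two equally sized grayscale images."""
    x = np.asarray(x, float)
    y = np.asarray(y, float)
    c1 = (k1 * data_range) ** 2   # stabilises the luminance term
    c2 = (k2 * data_range) ** 2   # stabilises the contrast/structure term
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    return float(((2 * mx * my + c1) * (2 * cov + c2)) /
                 ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2)))

# Hypothetical 8x8 glyph patches: a checkerboard, itself, and its inverse.
board = (np.indices((8, 8)).sum(axis=0) % 2) * 255
print(global_ssim(board, board))        # identical images
print(global_ssim(board, 255 - board))  # contrast-inverted image
```

The tables report SSIM as a percentage, i.e. the index multiplied by 100.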

| Font | Number of Components | Average SSIM of MLS | Average SSIM of Our Method |
|---|---|---|---|
| Kaiti | 70 | 54.18% | 73.07% |
| SimHei | 51 | 60.17% | 78.67% |
| Fangsong | 55 | 58.70% | 74.75% |
| Lishu | 43 | 62.69% | 72.27% |
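The per-font gains can be checked directly from the table. The sketch below computes the difference in percentage points for each font and a pooled mean over all 219 components, assuming each per-font figure is an unweighted average over that font's components.

```python
# Values copied from the table above: (components, MLS SSIM %, our SSIM %).
RESULTS = {
    "Kaiti": (70, 54.18, 73.07),
    "SimHei": (51, 60.17, 78.67),
    "Fangsong": (55, 58.70, 74.75),
    "Lishu": (43, 62.69, 72.27),
}

gain = {font: ours - mls for font, (_, mls, ours) in RESULTS.items()}
total = sum(n for n, _, _ in RESULTS.values())
pooled_mls = sum(n * mls for n, mls, _ in RESULTS.values()) / total
pooled_ours = sum(n * ours for n, _, ours in RESULTS.values()) / total

for font, g in gain.items():
    print(f"{font}: +{g:.2f} percentage points")
print(f"pooled over {total} components: MLS {pooled_mls:.2f}%, ours {pooled_ours:.2f}%")
```

The gain is largest for Kaiti (18.89 points) and smallest for Lishu (9.58 points); pooled over all components, the average rises from about 58.38% to about 74.64%.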


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, T.; Yang, F.; Gao, X. Chinese Character Component Deformation Based on AHP. Appl. Sci. 2022, 12, 10059. https://doi.org/10.3390/app121910059