1. Introduction

With the rapid development of Internet of Things technology and the growing number of connected vehicles, the traditional Vehicular Ad-Hoc Network (VANET) is gradually being integrated into the Internet of Vehicles (IoV). The IoV is a new paradigm that combines vehicular self-organizing networks with vehicle telematics to connect vehicles, people, and things [1]. It is also a very important area of Intelligent Transportation Systems (ITSs), as it covers technologies and applications such as intelligent transportation, cloud computing, vehicle information services, logistics and transportation services [2,3], modern wireless technologies, Internet access, and communication. However, because of the high latency of traditional cloud computing services and the high overhead that mobile applications impose on vehicles, traditional computing services increasingly fail to meet user requirements [4]. Mobile Edge Computing (MEC) [5] therefore emerged; by deploying edge servers close to users, it provides low-latency, low-overhead computing services for mobile applications and vehicular network systems. An important research direction in MEC is computation offloading, which mainly comprises offloading decisions and resource allocation aimed at minimizing delay and energy consumption.

The studies in [6,7] focus mainly on minimizing delay. Zakaryia et al. [6] outlined a formal model whose goal is to minimize the delay of the offloaded task, taking into account the transmission time and the load conditions on the cloud server. They also proposed a mobile task offloading algorithm based on a queuing network and a genetic algorithm, which achieved better results than particle swarm optimization (PSO). Guo et al. [7] studied computation offloading in a mobile edge computing system with energy-harvesting (EH) devices, with the goal of minimizing delay. The problem was formulated as an EH computation offloading game, and the properties of the game were analyzed theoretically, including the optimal offloading response, harvested energy, transmission power, and local computation rate.

The studies in [8,9] focus mainly on minimizing energy consumption. John et al. [8] proposed an MEC-assisted, energy-saving task offloading scheme based on a cooperative MEC framework, together with a new heuristic hybrid algorithm to solve the resulting optimization problem. Zhao et al. [9] studied a stochastic optimization problem involving dynamic offloading and resource scheduling among local devices, base stations, and back-end clouds. The goal is to minimize the energy and computing resource consumption of energy harvesting (EH) devices in MEC systems while meeting the quality-of-service requirements of IoT devices. To solve it, the stochastic problem is transformed into a deterministic one, and an online dynamic offloading and resource scheduling algorithm is proposed based on Lyapunov optimization theory.

The studies in [10,11,12] jointly optimize delay and energy consumption. Fang et al. [10] studied a task offloading strategy for a multi-device, multi-server system. To meet the task requirements of different users, a double deep Q network (double DQN) algorithm is proposed to select the execution location of tasks generated on mobile devices and to allocate computing resources. Yan et al. [11] considered the potentially uneven spatial distribution of mobile devices in MEC networks with multiple wireless edge gateways, allowing an edge gateway to further offload tasks to nearby edge gateways. A joint optimization method for device-level and edge-level task offloading based on deep Q-learning is proposed, which achieves a good balance between task delay and task energy consumption. Wang Xiaowei et al. [12] constructed a three-layer MEC network architecture in which mobile devices either perform computation locally or offload it to edge computing nodes and cloud servers, depending on task conditions. The binary particle swarm optimization (BPSO) algorithm was then used to solve the optimization objective and obtain the optimal offloading strategy.

The above analysis shows that, when the system is optimized globally, good results can be achieved by considering delay and energy consumption jointly. Furthermore, heuristic algorithms [13,14,15] have been applied successfully to mobile edge task offloading. Li et al. [13] proposed a computation offloading strategy based on an improved particle swarm optimization algorithm to solve the delay minimization problem, introducing a cloud server and adding penalty functions to balance delay and energy consumption. Sheuli et al. [14] adopted a genetic algorithm (GA) based optimization technique to identify the optimal task offloading solution; compared with other offloading strategies on standard data sets, it achieved good results. Zhang et al. [15] proposed an improved artificial bee colony algorithm (OMABC) to offload computing tasks. These heuristic algorithms are effective for task offloading to some extent, but a better heuristic algorithm is still needed to solve the optimization problem.

The above studies are summarized in Table 1, where √ indicates that a study addresses the given aspect and ✕ indicates that it does not.

In summary, most of the above studies on computation offloading strategies either optimize only delay or only energy consumption, or do not consider the dependencies between tasks. Others do not consider cooperative offloading among vehicles, MEC servers, and cloud servers. In addition, to avoid the premature convergence of traditional heuristic algorithms and to improve their global search capability, better heuristics are needed to solve the optimization problem. Bald Eagle Search (BES) optimization [16] is a new meta-heuristic algorithm proposed by the Malaysian scholar Alsattar in 2020. It has strong global search ability and can effectively solve a variety of complex numerical optimization problems. Since task offloading in mobile edge computing can be regarded as an optimization problem, this paper explores task offloading based on the BES algorithm.

Considering the limitations of the above studies together, the main contributions of this paper are as follows:

A collaborative computing framework for task offloading is designed, in which tasks can be executed locally, on an RSU (Road Side Unit) equipped with an MEC server, or in the cloud, and the offloading decision problem is transformed into a constrained optimization problem. Based on the relationships between tasks, a task offloading priority matrix is designed to account for the order of task execution;

The bald eagle search optimization algorithm is improved (IBES): the Tent chaos map is introduced to increase the diversity of the initial population, and the Levy Flight mechanism and an adaptive weight are introduced to enhance local search and global convergence;

In simulation, the IBES algorithm is compared with the Local algorithm, the MEC algorithm, the particle swarm optimization (PSO) algorithm, and the BES algorithm. The results show that the IBES algorithm achieves better offloading performance.

2. System Model

2.1. Network Model

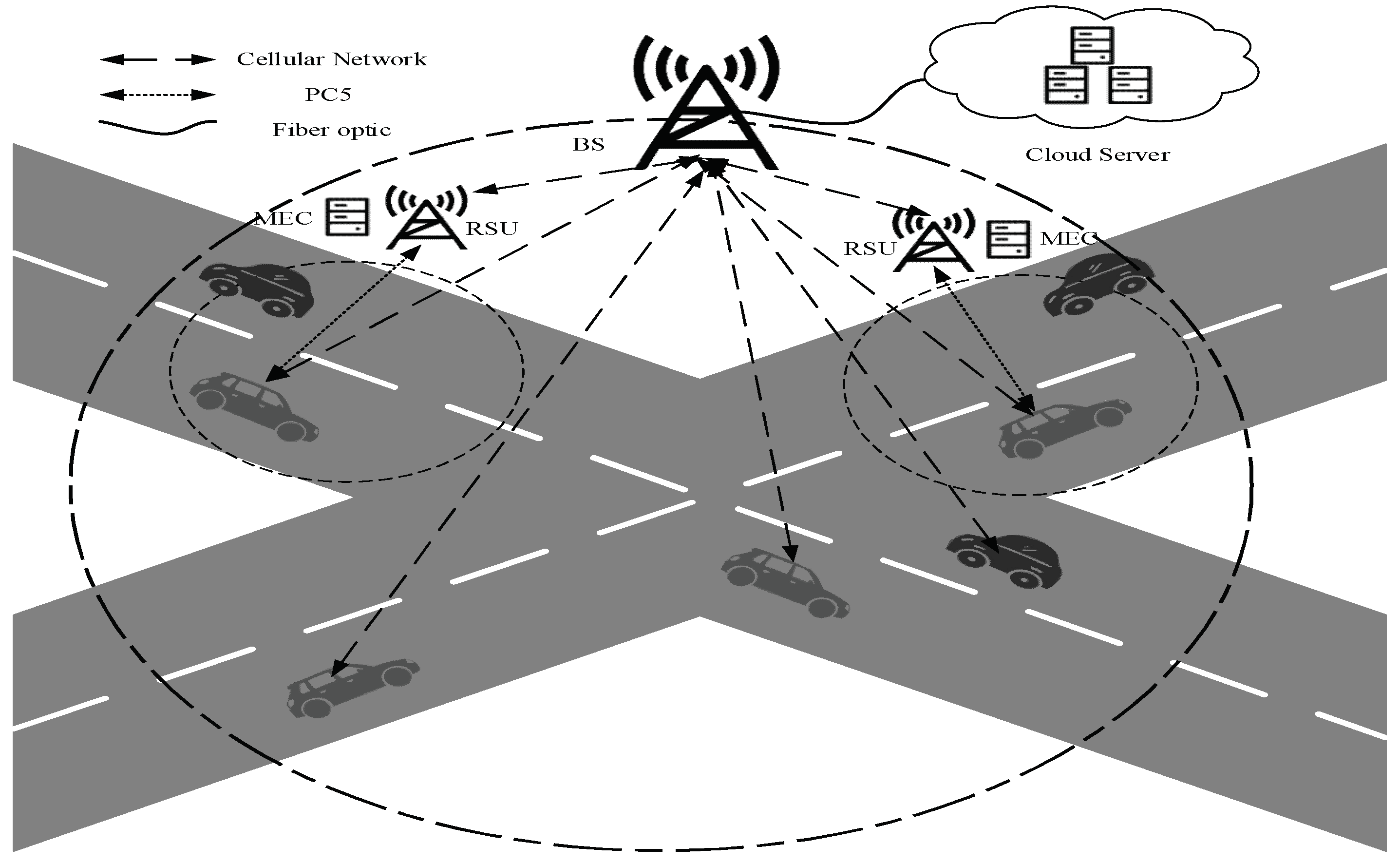

The network model of this paper is shown in Figure 1. It consists of a vehicle-mounted computing end, an edge computing end, and a cloud computing end. Task offloading proceeds as follows: first, the vehicle generates the task data to be offloaded; second, the offloading decision module collects the execution requirements of the tasks and the resource usage of the offloading platforms, and obtains the task execution plan through the offloading decision algorithm; finally, each task is dispatched to the local vehicle, the RSU equipped with an MEC server, or the cloud. After the offloading decision, tasks that do not need to be offloaded are computed locally, whereas tasks that need to be offloaded have their data sent to the MEC server or the cloud through the offloading scheduling module, and the results are returned to the vehicle terminal once the computation is complete.

During task execution, a task may depend on other tasks, so the priority of task offloading should be considered. Therefore, a task offloading priority matrix is introduced, each entry of which represents the priority relationship between a pair of tasks.

Since the number of tasks generated by a vehicle is not fixed and the relationships between tasks are not predetermined, the matrix can be customized according to the actual number of tasks and their relationships. Consider a priority matrix for four tasks as an example: depending on the value of the corresponding entry, task 1 has priority over task 2, there is no dependency between task 2 and task 3, and task 4 has priority over task 3.
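As an illustration, the priority relationships described above can be turned into an execution order with a topological sort. The sketch below assumes a hypothetical encoding that is not taken from the paper: `priority[i][j] == 1` means task i+1 must run before task j+1, and `0` means the two tasks are unrelated. The function name `execution_order` is likewise illustrative.

```python
from collections import deque


def execution_order(priority):
    """Return 1-based task numbers in an order that respects the matrix.

    Assumed encoding (hypothetical): priority[i][j] == 1 means task i+1
    has priority over task j+1; 0 means the two tasks are unrelated.
    """
    n = len(priority)
    # Count how many tasks must finish before each task can start.
    indegree = [sum(priority[i][j] == 1 for i in range(n)) for j in range(n)]
    ready = deque(j for j in range(n) if indegree[j] == 0)
    order = []
    while ready:
        i = ready.popleft()
        order.append(i + 1)
        for j in range(n):
            if priority[i][j] == 1:
                indegree[j] -= 1
                if indegree[j] == 0:
                    ready.append(j)
    if len(order) != n:
        raise ValueError("cyclic priorities: no valid execution order")
    return order


# Four tasks: task 1 before task 2, task 4 before task 3,
# and no relationship between task 2 and task 3.
P = [
    [0, 1, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 1, 0],
]
print(execution_order(P))  # prints [1, 4, 2, 3]
```

Any order that places task 1 before task 2 and task 4 before task 3 would be equally valid; the queue-based traversal simply picks one of them deterministically.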

2.2. Communication Model

Assume that all vehicles travel at a constant speed. Each vehicle has one computational task, characterized by the size of the input data used for the computation, the computing resources required to complete the task, and the maximum delay constraint of the task. Each vehicle has its own computing resources, and the MEC server likewise has a given amount of computing resources.

Tasks are executed locally or offloaded to the MEC server or the cloud server through collaborative computation offloading. The communication model is described below.

(1) Offloading to the MEC server:

According to Shannon's theorem, the upload and download data rate between the moving vehicle and the MEC server is determined by the V2R (Vehicle to Road Side Unit) channel bandwidth, the transmit power between the vehicle and the RSU on the channel, the channel gain from the moving vehicle to the RSU on the channel, and the white noise power.
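A minimal sketch of this rate computation, assuming the standard Shannon capacity form, rate = bandwidth × log2(1 + power × gain / noise). The parameter names and the numeric values are illustrative assumptions, not the paper's notation or simulation settings.

```python
import math


def shannon_rate(bandwidth_hz, tx_power, channel_gain, noise_power):
    """Achievable data rate in bit/s: B * log2(1 + p * h / noise)."""
    snr = tx_power * channel_gain / noise_power
    return bandwidth_hz * math.log2(1.0 + snr)


# Hypothetical normalized values: 10 MHz channel with an SNR of 3,
# so log2(1 + 3) = 2 bits per second per hertz.
print(shannon_rate(10e6, tx_power=3.0, channel_gain=1.0, noise_power=1.0))  # prints 20000000.0
```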

(2) Offloading to the cloud server: When a task is offloaded to the cloud server, it is first transmitted to the base station (BS) and then to the cloud server.

The upload and download data rate between the moving vehicle and the BS is determined by the channel bandwidth, the transmit power between the vehicle and the BS on the channel, and the channel gain from the moving vehicle to the BS on the channel.

The vehicle in the scenario travels in one direction at a constant speed. Because the vehicle moves, its distance from the center of the RSU coverage area varies with time. This variation depends on the vehicle speed, the distance between the vehicle's line of travel and the RSU, and the coverage range of the RSU.

Since tasks can be computed locally, on the MEC server, or on the cloud server, it is important to partition the tasks and determine the platform to which each is offloaded. This paper defines an offloading decision variable for each vehicle, whose three possible values indicate that the task is executed locally, on the MEC server, or on the cloud server, respectively.

2.3. Calculation Model

2.3.1. Local Computing Model

When a task is processed locally in the vehicle, the delay is the processing delay of the task on the CPU. The local computing delay is defined as the number of CPU cycles required to complete the task divided by the computing power of the local vehicle.

For local computing, the energy consumption of the connected vehicle is calculated from the energy consumed per CPU cycle. The local energy consumption is therefore defined in terms of the energy consumption coefficient and the device power of the moving vehicle.
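The local model can be sketched as follows. The delay is the required CPU cycles divided by the vehicle's CPU frequency. For the energy, this sketch assumes the simple form "device power multiplied by execution time"; the paper's own expression also involves an energy consumption coefficient and may differ. All names and values are hypothetical.

```python
def local_delay(cpu_cycles, cpu_hz):
    """Processing delay in seconds for a task executed on the vehicle."""
    return cpu_cycles / cpu_hz


def local_energy(device_power_w, cpu_cycles, cpu_hz):
    """Energy in joules, assuming energy = device power * execution time."""
    return device_power_w * local_delay(cpu_cycles, cpu_hz)


# Hypothetical task: 1e9 cycles on a 1 GHz vehicle CPU drawing 0.5 W.
print(local_delay(1e9, 1e9))        # prints 1.0
print(local_energy(0.5, 1e9, 1e9))  # prints 0.5
```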

2.3.2. MEC Calculation Model

When a task is offloaded to the MEC server, which is co-located with the RSU, its data is first transmitted from the vehicle to the MEC server through V2R communication; the time this takes equals the transmission delay of the task to the RSU. After the MEC server completes the computation, the result is transmitted back to the vehicle. The delay is therefore divided into a transmission delay, an execution delay, and a return delay. The execution delay depends on the computing resources that the MEC server allocates to the vehicle for task offloading, and the return delay depends on the output data volume factor, which relates the output data volume to the input data volume.
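The three delay components can be sketched as below, under the assumptions that the same V2R rate applies to upload and download and that the output size equals the output factor times the input size. Function and parameter names are illustrative and not the paper's notation.

```python
def mec_delays(input_bits, cpu_cycles, rate_bps, mec_hz, output_factor):
    """Return (transmission, execution, return) delays in seconds."""
    t_up = input_bits / rate_bps                    # vehicle -> RSU/MEC
    t_exec = cpu_cycles / mec_hz                    # computation on the MEC server
    t_back = output_factor * input_bits / rate_bps  # result -> vehicle
    return t_up, t_exec, t_back


# Hypothetical task: 8 Mbit of input, 2e9 cycles, 16 Mbit/s link,
# 4 GHz allocated on the MEC server, output a quarter the size of the input.
parts = mec_delays(8e6, 2e9, 16e6, 4e9, 0.25)
print(parts)       # prints (0.5, 0.5, 0.125)
print(sum(parts))  # prints 1.125
```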

The total delay and the total energy consumption of offloading a task from the mobile vehicle to the MEC server follow from these components together with the power with which the mobile vehicle uploads the task and the device power of the mobile edge computing server.

2.3.3. Cloud Server Computing Model

The tasks of the mobile vehicle are transferred to the cloud server over optical fiber [17]. The delay of offloading a task to the cloud server can therefore be divided into three parts: the computation delay of the task in the cloud server, the transmission delay of the task to the RSU, and the return delay of the computation result from the RSU to the mobile vehicle. In addition, tasks experience an average waiting delay on the fiber-optic link. These components determine the total delay and the total energy consumption of offloading a task from the mobile vehicle to the cloud server.

The corresponding expressions involve the computing resources provided by the cloud server for mobile vehicles, the device power of the cloud server, and the transmission power of the RSU.

To sum up, the total time delay of the task calculation can be derived as Equation (16):

Similarly, the total energy consumption of the task computation is Equation (17):
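One way to read these totals is that each task contributes the delay and energy of the single platform chosen for it. The sketch below assumes that the system totals are plain sums over vehicles; the dictionary layout, the platform labels, and the numbers are hypothetical.

```python
PLATFORMS = ("local", "mec", "cloud")


def system_totals(decisions, delays, energies):
    """Sum the delay and energy of the platform chosen for each vehicle.

    decisions: one platform label per vehicle.
    delays, energies: one dict per vehicle mapping platform -> value.
    """
    total_delay = 0.0
    total_energy = 0.0
    for choice, delay, energy in zip(decisions, delays, energies):
        if choice not in PLATFORMS:
            raise ValueError(f"unknown platform: {choice!r}")
        total_delay += delay[choice]
        total_energy += energy[choice]
    return total_delay, total_energy


vehicle_delays = [
    {"local": 2.0, "mec": 1.0, "cloud": 1.5},
    {"local": 3.0, "mec": 3.5, "cloud": 4.0},
]
vehicle_energies = [
    {"local": 0.5, "mec": 0.25, "cloud": 0.75},
    {"local": 1.0, "mec": 0.5, "cloud": 1.5},
]
print(system_totals(["mec", "local"], vehicle_delays, vehicle_energies))  # prints (4.0, 1.25)
```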

2.4. Problem Expression

The objective of this paper is to minimize the delay and energy consumption of the whole system, that is, to minimize the total system cost. The task offloading of the vehicular network is evaluated by simulation. For complex tasks, delay and energy consumption are combined through a linear weight factor, which yields a single expression for the total system cost. When the weight factor is 0 or 1, the total offloading cost reduces to either delay alone or energy consumption alone. In this paper, the total cost of the collaborative offloading system is the weighted sum of the delay and energy consumption of the whole task offloading system, so that different weights can be assigned according to the actual needs of task offloading.

In this paper, task offloading and resource allocation are formulated as an optimization problem whose aim is to minimize the total cost in Equation (18). The decision variables are the computation offloading policy and the computing resource allocation. The formulation also involves the distance from the vehicle's current location to the point where it leaves the coverage of the MEC server. The constraints state that only one offloading platform can be selected for each task; that the time each vehicle takes to complete its task must exceed neither the maximum tolerable delay nor the vehicle's travel time within the MEC coverage area; and that the computing resources allocated by the MEC server cannot exceed its total computing resources.

The fitness function, Equation (20), is the reciprocal of Equation (18). A higher fitness value means that the total cost of the selected offloading scheme is smaller, i.e., that its delay and energy consumption are lower, and hence that the offloading scheme is better.
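The cost, the delay constraint, and the fitness described above can be sketched as follows. Which of the two terms the weight multiplies is an assumption made here (the weight is applied to delay), as are all function names and numbers. Delay and energy are added directly, as in a weighted-sum objective, so in practice they would be expressed in comparable or normalized units.

```python
def total_cost(delay, energy, weight):
    """Weighted sum; assumes `weight` scales delay and (1 - weight) scales energy."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    return weight * delay + (1.0 - weight) * energy


def is_feasible(completion_time, max_delay, dwell_time):
    """A task must finish within its deadline and before leaving MEC coverage."""
    return completion_time <= min(max_delay, dwell_time)


def fitness(delay, energy, weight):
    """Reciprocal of the total cost: higher fitness means lower cost."""
    return 1.0 / total_cost(delay, energy, weight)


print(total_cost(4.0, 1.0, 0.5))                        # prints 2.5
print(fitness(4.0, 1.0, 0.5))                           # prints 0.4
print(is_feasible(1.2, max_delay=2.0, dwell_time=1.0))  # prints False
```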

3. Solution Method Based on Improved BES Algorithm

3.1. Bald Eagle Search Optimization Algorithm

BES is a new meta-heuristic optimization algorithm that imitates the hunting behavior of bald eagles. Bald eagles feed mainly on protein-rich food, chiefly fish. The first step of the hunt is to move to a specific area, which serves as the search space; the bald eagle chooses a space between the land surface and deep water. Once it reaches the chosen area, the search begins. The bald eagle has excellent eyesight, which enables it to spot fish in the water from great distances in the air. Once it has identified its target, it descends gradually to catch the fish. According to these stages of the hunt, the BES algorithm is divided into three parts: the select stage, the search stage, and the swooping stage. The quality of a bald eagle's position is evaluated by the fitness function.

- (1) Select stage:

The bald eagle randomly selects the area to be searched and determines the best location for hunting by judging the amount of prey, so that it can search for prey within this space. The position update of the bald eagle at this stage is given by Equation (21). It involves a parameter that controls the change in position, with a value in (1.5, 2); a random number in (0, 1); the search space currently selected by the bald eagle based on the best position; the average distribution of bald eagles after the previous search; and the position of the individual bald eagle being updated. In this stage, the candidate solutions are improved significantly according to the average position and the best position.
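A minimal sketch of the select stage, assuming the update rule commonly given for BES: new position = best position + alpha × r × (mean position − current position), with one random number r per eagle. The list-based representation and the function name are illustrative. In a full implementation a new position would normally be kept only if it improves the fitness.

```python
import random


def select_stage(positions, best, alpha=1.8, rng=random):
    """Propose new positions: P_best + alpha * r * (P_mean - P_i)."""
    n, dim = len(positions), len(best)
    mean = [sum(p[d] for p in positions) / n for d in range(dim)]
    proposals = []
    for p in positions:
        r = rng.random()  # one random number in [0, 1) per eagle
        proposals.append(
            [best[d] + alpha * r * (mean[d] - p[d]) for d in range(dim)]
        )
    return proposals


eagles = [[0.0, 4.0], [2.0, 0.0], [4.0, 2.0]]
print(select_stage(eagles, best=[2.0, 0.0], rng=random.Random(0)))
```

The value `alpha=1.8` is simply one point inside the range (1.5, 2) stated above.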

- (2) Search stage:

During the search stage, the bald eagle searches for prey within the selected search space and moves in different directions along a spiral to speed up the search. The best position for the dive is expressed mathematically by Equation (22), which gives the next updated position of each bald eagle. The spiral trajectory is controlled by two parameters with value ranges [5, 10] and [0.5, 2], respectively. The update uses the polar angle and the polar diameter of the spiral equation, a random number in (0, 1), and the position of the bald eagle in polar coordinates, whose components take values in [-1, 1] and change the shape of the spiral. At this stage, the position moves around the center. As the two spiral parameters change, BES diversifies its search to avoid falling into local optima and to find more precise solutions.
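The spiral search can be sketched as follows, assuming the form usually given for BES: each eagle moves relative to its neighbour and to the mean position, weighted by normalized polar coordinates x and y in [-1, 1]. The wrap-around from the last eagle to the first and the guard against an all-zero coordinate list are choices made for this sketch; names are illustrative.

```python
import math
import random


def search_stage(positions, a=10.0, R=1.5, rng=random):
    """Propose P_i + y_i * (P_i - P_next) + x_i * (P_i - P_mean)."""
    n, dim = len(positions), len(positions[0])
    mean = [sum(p[d] for p in positions) / n for d in range(dim)]
    theta = [a * math.pi * rng.random() for _ in range(n)]   # polar angle
    radius = [theta[i] + R * rng.random() for i in range(n)]  # polar diameter
    xr = [radius[i] * math.sin(theta[i]) for i in range(n)]
    yr = [radius[i] * math.cos(theta[i]) for i in range(n)]
    # Normalize to [-1, 1]; fall back to 1.0 if every value is zero.
    x_max = max(abs(v) for v in xr) or 1.0
    y_max = max(abs(v) for v in yr) or 1.0
    proposals = []
    for i, p in enumerate(positions):
        nxt = positions[(i + 1) % n]  # neighbour, wrapping around (assumption)
        x, y = xr[i] / x_max, yr[i] / y_max
        proposals.append(
            [p[d] + y * (p[d] - nxt[d]) + x * (p[d] - mean[d]) for d in range(dim)]
        )
    return proposals


eagles = [[0.0, 4.0], [2.0, 0.0], [4.0, 2.0]]
print(search_stage(eagles, rng=random.Random(0)))
```

The defaults `a=10.0` and `R=1.5` are single points inside the ranges [5, 10] and [0.5, 2] given above.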

- (3) Swooping stage:

Bald eagles move in different shapes while diving. A polar-coordinate equation is used to describe their movement during the dive, and the average solution helps diversify the search toward the optimal solution.

The position update of the bald eagle during the swoop uses two coefficients that increase the intensity of its movement toward the best point and the central point.
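For reference, the swoop update is commonly written in the BES literature in the following form. The notation here is an assumption, since the paper's own symbols are not recoverable from the text: $P_i$ is the position of eagle $i$, $P_{\mathrm{best}}$ and $P_{\mathrm{mean}}$ are the best and mean positions, $a$ is the spiral parameter, and $c_1, c_2 \in [1, 2]$ are the two intensity coefficients.

```latex
\begin{aligned}
P_{i,\mathrm{new}} &= \mathrm{rand}\cdot P_{\mathrm{best}}
  + x_1(i)\,\bigl(P_i - c_1 P_{\mathrm{mean}}\bigr)
  + y_1(i)\,\bigl(P_i - c_2 P_{\mathrm{best}}\bigr),\\
x_1(i) &= \frac{xr(i)}{\max\lvert xr\rvert}, \qquad
y_1(i) = \frac{yr(i)}{\max\lvert yr\rvert},\\
xr(i) &= r(i)\,\sinh\theta(i), \qquad
yr(i) = r(i)\,\cosh\theta(i),\\
\theta(i) &= a\,\pi\cdot\mathrm{rand}, \qquad
r(i) = \theta(i).
\end{aligned}
```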

3.2. Improvement of Bald Eagle Search Optimization Algorithm

3.2.1. Tent Chaotic Mapping

Compared with the random initialization of the population used in other algorithms, chaotic mapping offers good ergodicity and randomness, i.e., it can generate more diverse populations. Past studies [18] have shown that good population initialization can speed up convergence and improve the performance of the system. Choosing a suitable chaotic map for population initialization is therefore a key issue at this stage. Like other evolutionary algorithms, the BES algorithm suffers from premature convergence. Chaos is widespread in nonlinear systems; a chaotic system is deterministic yet exhibits random-like dynamics, and it is highly sensitive to its initial conditions and parameters. A chaotic search strategy can therefore be applied to the BES algorithm to improve its ability to find the global optimum. The Tent chaos map has a uniform invariant density on the interval [0, 1], and it shows clear advantages and a higher iteration speed compared with the Logistic chaos map [19]. In this paper, the Tent chaos map is used to generate chaotic sequences that increase the initial population diversity of the BES algorithm. The Tent chaos mapping expression is as follows:

The mathematical expression after the Bernoulli shift transformation is:
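A minimal sketch of Tent-map population initialization. The classic Tent map doubles x below 0.5 and maps x to 2(1 − x) above it, which is equivalent to the Bernoulli shift form x → (2x) mod 1 on the folded interval. With a factor of exactly 2, however, a binary floating-point sequence collapses to zero after a few dozen iterations, so this sketch uses a factor of 1.99; that value, the seeding, and the function names are assumptions made here and are not taken from the paper.

```python
import random


def tent_map(x, mu=1.99):
    """One Tent-map step on (0, 1); mu = 2 gives the classic map."""
    return mu * x if x < 0.5 else mu * (1.0 - x)


def tent_population(n, dim, lower, upper, seed=0, mu=1.99):
    """Build n individuals whose coordinates follow one chaotic sequence."""
    rng = random.Random(seed)
    x = rng.uniform(0.01, 0.99)  # random starting point of the sequence
    population = []
    for _ in range(n):
        individual = []
        for d in range(dim):
            x = tent_map(x, mu)
            # Scale the chaotic value from (0, 1) to the search bounds.
            individual.append(lower[d] + x * (upper[d] - lower[d]))
        population.append(individual)
    return population


for eagle in tent_population(3, 2, lower=[0.0, 0.0], upper=[1.0, 10.0]):
    print(eagle)
```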

3.2.2. Adaptive Weight

In the BES algorithm, an adaptive step weight function is introduced to control the position update of the bald eagle. When the bald eagle is in the stage of selecting the search space, searching with a larger step size in the early iterations enlarges the search scope, whereas a smaller step size in the later iterations narrows the search range and improves the accuracy of local optimization. That is, the weight should be large in the early stage and small in the later stage, so an adaptive weight function is introduced. Its expression involves a parameter restricted to a given value range and the maximum number of iterations. With this weight, the improved position update changes from Equation (21) to Equation (36).

3.2.3. Levy Flight Mechanism

Levy Flight [20] increases the diversity of the search to speed up the optimization process, which helps the algorithm explore the search space effectively and avoid falling into local minima.

The foraging of most animals also shows the characteristics of Levy Flight: most foraging time is spent around known food sources, but occasionally a long flight is needed to find other sources. The BES algorithm performs well on continuous search space problems, but its optimization ability can still be improved. To address this, the Levy Flight mechanism is combined with the BES algorithm to improve its overall optimization efficiency. Levy Flight increases the diversity of the bald eagle population, speeds up convergence, and makes it easier to escape local optima.

The Levy Flight mechanism is combined with the BES algorithm by adjusting the search agents with a Levy factor, whose calculation involves a random number in (0, 1).

The Levy function is inserted into the third step, the swooping stage. In the revised position update, two coefficients are constants and a random number is drawn from (0, 1).
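The paper's own Levy expression is not recoverable from the text. As a stand-in, the sketch below uses Mantegna's algorithm, a common way to draw Levy-distributed step lengths; it may differ from the authors' formula. The exponent `beta=1.5`, the `scale` factor, and the way the step perturbs a position are all assumptions for illustration.

```python
import math
import random


def levy_sigma(beta=1.5):
    """Scale of the numerator Gaussian in Mantegna's algorithm."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)


def levy_step(beta=1.5, rng=random):
    """Draw one heavy-tailed step: u / |v|^(1/beta)."""
    u = rng.gauss(0.0, levy_sigma(beta))
    v = rng.gauss(0.0, 1.0)
    while v == 0.0:  # avoid division by zero in the (very unlikely) exact-zero case
        v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / beta)


def levy_perturb(position, best, scale=0.01, rng=random):
    """Hypothetical use: jump relative to the distance from the best position."""
    return [x + scale * levy_step(rng=rng) * (x - b) for x, b in zip(position, best)]


print(levy_perturb([1.0, 2.0], best=[0.0, 0.0], rng=random.Random(0)))
```

Most draws are small, which keeps the search local, while occasional large draws produce the long jumps that help the population leave a local optimum.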

3.3. Solution Steps

According to the above improvement of the BES algorithm, the following specific steps are obtained:

- (1) Formulate the constraints according to the total cost of the cooperative offloading system, and convert the cost into a fitness function.

- (2) Initialize the parameters, and use the Tent chaos map to initialize the bald eagle population.

- (3) Calculate the fitness value of each bald eagle in the population.

- (4) Use the adaptive weight function to control the position update of the bald eagles in the select stage and find the globally best position.

- (5) Find the best and worst fitness values among the current individuals, as well as the corresponding positions.

- (6) Compute the fitness of the new population generated with the Levy Flight mechanism, compare it with that of the previous population, and update the best individual position and fitness value of the bald eagle population.

- (7) Check whether the termination condition is met. If so, stop; otherwise, go to Step (3).
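The steps above can be sketched as a generic loop. This is a skeleton under stated assumptions rather than the authors' implementation: each stage is a callable that proposes new positions given the population and the current best, a proposal is kept only if it improves the fitness, and the termination condition is a fixed iteration count. The `toy_stage` and the cost function are placeholders standing in for the actual stage updates and the offloading cost.

```python
import random


def optimize(fitness, population, stages, max_iter=100):
    """Maximize `fitness`, keeping a stage's proposal only when it improves."""
    population = [list(p) for p in population]
    scores = [fitness(p) for p in population]                # step (3)
    for _ in range(max_iter):                                # step (7)
        for stage in stages:                                 # steps (4) and (6)
            i_best = max(range(len(scores)), key=scores.__getitem__)  # step (5)
            proposals = stage(population, population[i_best])
            for i, candidate in enumerate(proposals):
                score = fitness(candidate)
                if score > scores[i]:
                    population[i], scores[i] = candidate, score
    i_best = max(range(len(scores)), key=scores.__getitem__)
    return population[i_best], scores[i_best]


_rng = random.Random(1)


def toy_stage(population, best):
    """Placeholder update: move each individual a random fraction toward the best."""
    return [[x + _rng.random() * (b - x) for x, b in zip(p, best)] for p in population]


def toy_cost(p):
    """Hypothetical total cost with its minimum at the origin."""
    return p[0] ** 2 + 1.0


start = [[4.0], [-3.0], [2.5]]
best, score = optimize(lambda p: 1.0 / toy_cost(p), start, [toy_stage], max_iter=20)
print(best, score)
```

Because proposals are accepted only on improvement, the best fitness never decreases from one iteration to the next, which is what makes convergence curves of this kind monotone.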

3.4. Complexity Analysis

Suppose the population size is , the dimension of individual position is , set the optimal bald eagle position time as , and the initialization stage time using tent chaotic mapping as , then the time complexity of this stage is: ; After entering the iteration, assume that the time to calculate the fitness value for each bald eagle is and the time to calculate the fitness value of the objective function is , then the time complexity of this stage is: ; The time to update the bald eagle position using the adaptive weight function to find the global optimal position is , and the time to update the optimal individual position and fitness value of the bald eagle population using the Levy Flight mechanism to find the new population individual adaptation to compare with the previous individuals is , then the time complexity of this stage is: .

From the above, the total time complexity of the improved IBES algorithm is: .

Space is influenced by the population size and dimension , then the spatial complexity can be expressed as: .

4. Simulation Results and Analysis

In this section, the IBES algorithm is simulated and compared with the Local, MEC, BES, and PSO algorithms.

Local algorithm [21]: All tasks are processed on the local vehicle.

MEC algorithm [22]: All tasks are offloaded to RSUs equipped with an MEC server for processing.

PSO algorithm [23]: An offloading algorithm based on particle swarm optimization.

BES algorithm: A computation offloading algorithm based on bald eagle search optimization.

The simulated scenario is a highway intersection with two lanes, each 3.75 m wide. The mobile vehicles are randomly distributed on the road and travel at speeds between 30 and 50 km/h. The scenario contains 40 vehicle terminals, 10 edge servers, and one cloud server, and all edge servers are assumed to have the same computing power. The simulation is performed in MATLAB R2020a, and communication between vehicles in the vehicular network scenario follows the IEEE 802.11p protocol, with some adjustments for the simulation environment. The model is simplified by considering only Gaussian white noise. The specific simulation parameters are shown in Table 2.

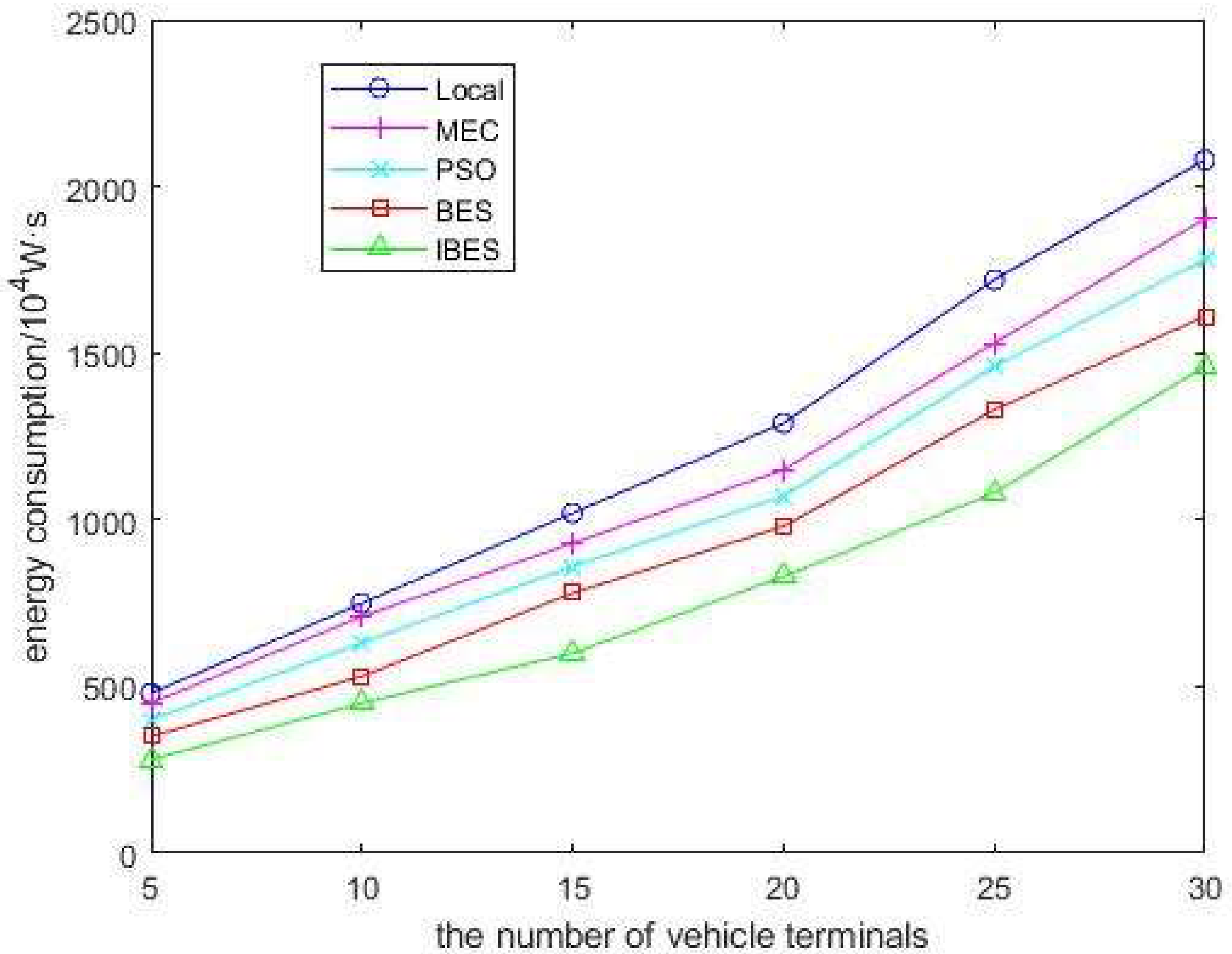

4.1. Impact of the Number of Vehicle Terminals on the System

Figure 2 and Figure 3 show the influence of the number of vehicle terminals on the total delay and the energy consumption of the system, respectively. As can be seen from Figure 2, the total delay of every scheme increases with the number of vehicle terminals, but the total delay of IBES is always the smallest. As the number of terminals increases, the computing resources required to process the tasks also increase. When the number of vehicle terminals exceeds 20, the delay of the MEC scheme increases rapidly and exceeds that of the Local scheme: the MEC scheme has no reasonably designed task offloading sequence, so tasks have to wait, and the delay grows higher and higher. When the number of vehicle terminals is 30, the delay of the proposed scheme is 51.08%, 55.47%, 36.87%, and 18.16% lower than that of the Local, MEC, PSO, and BES algorithms, respectively.

Figure 3 shows that the system energy consumption also increases with the number of vehicle terminals, but the energy consumption of IBES is the smallest. The energy consumption of the PSO, BES, and IBES schemes is lower than that of the MEC scheme because they can select an offloading platform according to the amount of data each task requires, and the IBES scheme has the lowest energy consumption owing to its improved algorithm and the added task priority matrix. When the number of vehicle terminals is 30, the energy consumption of the proposed scheme is 29.81%, 23.28%, 17.98%, and 9.32% lower than that of the Local, MEC, PSO, and BES algorithms, respectively.

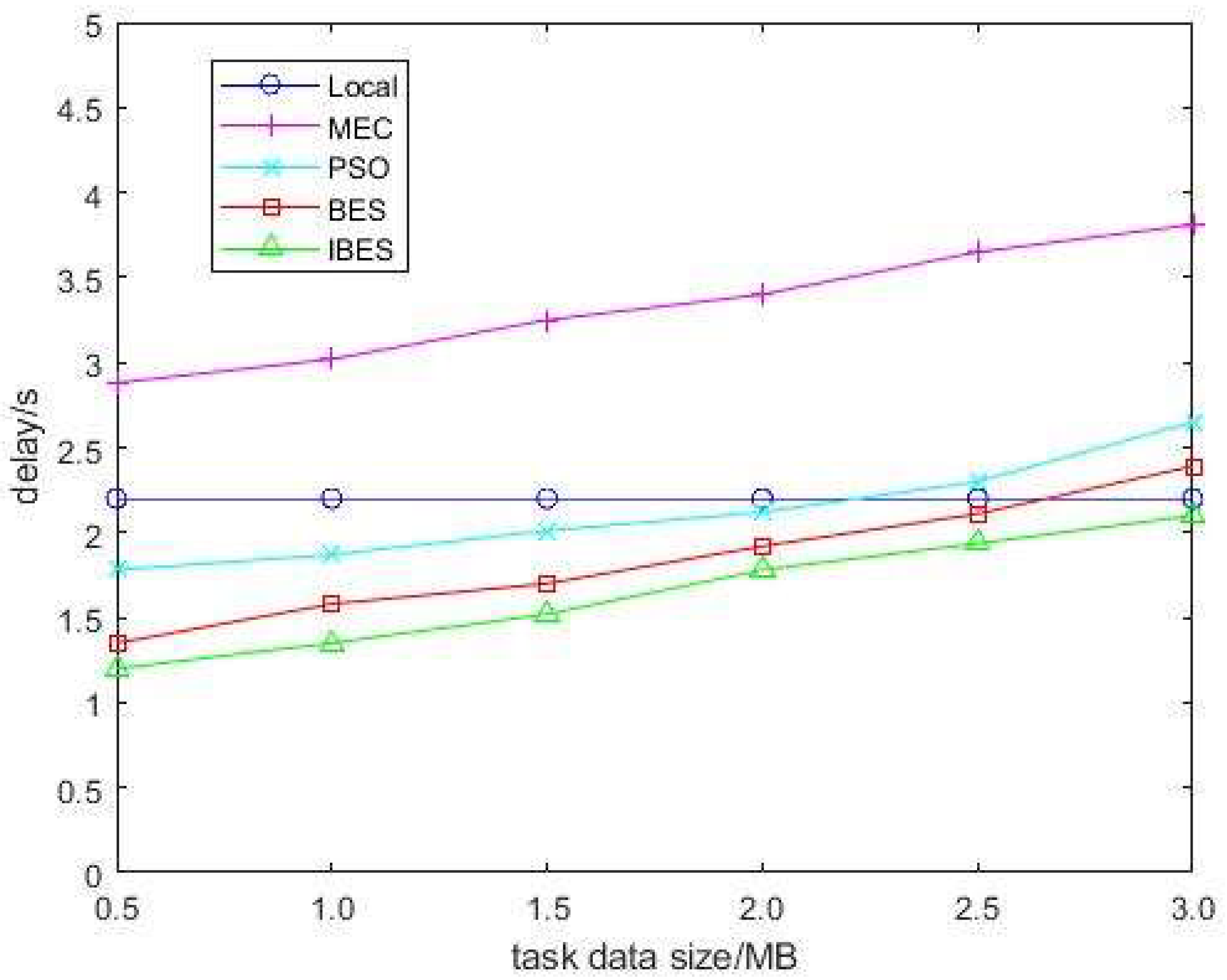

4.2. Impact of Task Size on the System

Figure 4 and Figure 5 show the influence of the task input data size on system delay and energy consumption, respectively. As shown in Figure 4, the delay of the schemes generally increases with the task input data size. Since local computing involves no task transfer or offloading, its delay remains unchanged. However, as the amount of offloaded data grows, tasks in the MEC scheme compete for communication resources and edge node computing resources, so its delay becomes higher than that of local computing. The IBES scheme can quickly process tasks that require a large amount of input data. When the task data size is 3.0 MB, the delay of the proposed scheme is 4.55%, 44.89%, 20.75%, and 12.14% lower than that of the Local, MEC, PSO, and BES algorithms, respectively.

Figure 5 shows the impact of the task data size on system energy consumption. As the task data size increases, the energy consumption of the five schemes increases, but the energy consumption of IBES is much smaller than that of the other schemes. Because local computing involves no data transmission, its energy consumption changes steadily as the amount of task input data increases. The energy consumption of the other schemes rises gradually, but IBES performs better than the rest. Therefore, for large data inputs, the energy consumption of the proposed scheme is far lower than that of the other schemes. When the task data size is 3.0 MB, the energy consumption of the proposed scheme is 37.79%, 26.31%, 14.38%, and 16.76% lower than that of the Local, MEC, PSO, and BES algorithms, respectively.

4.3. Influence of Iteration Times on Total System Cost

Figure 6 compares the total system cost over the iterations. In this section, the maximum number of iterations is set to 100, the number of tasks to 50, and the weight factor to 0.5. Since the Local and MEC algorithms simply execute all tasks locally or on the MEC server, the number of iterations has no influence on their results. The simulation results show that the total system cost of the PSO, BES, and IBES algorithms levels off at 65, 52, and 38 iterations, respectively. Compared with the PSO and BES algorithms, the total system cost of IBES is 33.07% and 22.73% lower, respectively. IBES therefore both converges in fewer iterations and reaches a lower total system cost than the PSO and BES algorithms.

5. Conclusions

In this paper, we design a collaborative computing framework for task offloading in which tasks can be executed locally, on an RSU equipped with an MEC server, or in the cloud. We take the total cost of task offloading, namely delay and energy consumption, as the optimization objective and design an improved bald eagle search optimization algorithm (IBES) to perform task offloading. IBES introduces Tent chaotic mapping, the Levy Flight mechanism, and adaptive weights into the bald eagle search optimization algorithm to increase the initial population diversity and to enhance local search and global convergence. Simulation results show that the IBES algorithm achieves better offloading performance than the Local, MEC, PSO, and BES algorithms, and that its total system cost is 33.07% and 22.73% lower than that of the PSO and BES algorithms, respectively. In future work, task offloading for edge computing in vehicular networks will be studied further, taking vehicle mobility and the task offloading rate into account.