Abstract

Tele-conference systems are widely used as a communication medium between remote sites. With continued innovation in mixed reality (MR), a three-dimensional teleported avatar, in which a remote participant is teleported into a local environment, could overcome the limitations of video-based tele-conference systems and become an effective future tele-conference system that allows natural movement and interaction in the same location. However, technical difficulties must be resolved before the teleported avatar can be controlled in a way that adapts to the environmental differences between sites and to the user’s situation. This paper presents a novel method for automatically aligning the gaze of the teleported avatar with the participant at the local site in MR collaborative environments. We ran comparative validation experiments to measure the spatial accuracy of the gaze and to evaluate the user’s communication efficiency with our method. In a quantitative experiment, the gaze matching error in various environments was found to form a mirror-symmetrical U-shape, and the need for a gaze matching gain was also recognized. Additionally, our experimental study showed that participants felt greater co-presence during communication than in an idle situation without conversation.

1. Introduction

In the days ahead, tele-conference systems will be used more and more frequently for general communication and for business meetings among workers in remote environments [1]. Currently, video-based (or 2D) tele-conference systems are still limited in that they offer only 2D upper-body imagery and inconsistent gaze directions [2,3]. In recent years, mixed reality (MR) systems, which merge the real and virtual worlds and let them interact, have been widely applied in tele-conference, education, entertainment, and healthcare applications [4,5]. In a tele-conference situation, MR systems based on a three-dimensional (3D) teleported avatar that moves and interacts naturally in the same location have provided higher co-presence, the sense of being together with other people in the same space, than two-dimensional (2D) streamed videos [6]. With this technology, the teleported avatar of the remote participant is present in the local real environment [7]. Figure 1 shows a 3D MR tele-conference condition for collaboration at the local site with the teleported avatar of the remote participant. In this line of thinking, MR-based 3D tele-conference technology can empower remote participants to collaborate through interaction, rather than simple conversation [8]. However, there is currently a lack of research on how to communicate with the teleported avatar in practical deployments. In particular, the environmental differences between sites must be resolved for the avatar to interact with local participants [1]. For example, imagine a situation where the participant is located at a specific spot in the local site. The teleported avatar of another participant at the remote site should sit or stand at a specific location in the local site. Due to environmental differences, such as furniture configuration and room size, it is difficult to match the gaze between the teleported avatar and the local participant.

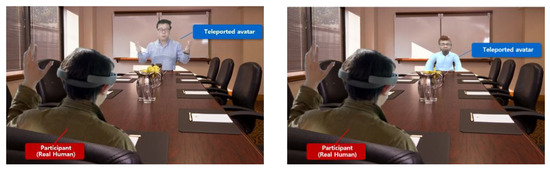

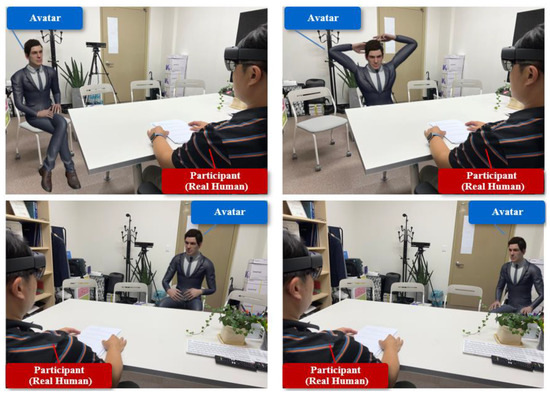

Figure 1.

3D MR tele-conference system for collaborating at a local site with a teleported avatar of the remote participant. The MR tele-conference system can use various types of teleported avatars; for example, a realistic avatar captured from the remote participant (left), and a pre-built character-like avatar created with a 3D modeling toolkit (right).

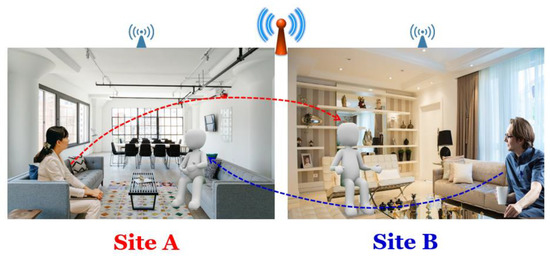

Figure 2 shows an example of gaze alignment with the teleported avatar in the MR tele-conference system. The participant at site A can communicate and interact with the virtual teleported avatar of the participant at site B. In this case, the gazes of the participant and the avatar should meet naturally during the conversation, adapting to the different configuration of the other site.

Figure 2.

An example of gaze alignment with teleported avatars in the MR tele-conference system: Although the user at site A in the left image is looking in a different direction, the teleported avatar from site B should match the user’s gaze at site A. Typically, the participant in the MR system wears a glasses-type MR headset to view the virtual object (or teleported avatar) in the real world. In this figure, participants are shown without MR headsets in order to illustrate the gaze alignment concept.

To overcome this problem, this paper proposes a novel method of automatic gaze alignment of the teleported avatar for the MR collaborative system. Our method provides natural and spatially correct interaction with the teleported avatar in the local space and improves the communication experience for participants by matching gaze direction. Additionally, we measured the spatial accuracy of gaze alignment and, as a qualitative experiment, evaluated and analyzed the co-presence felt by the participant with the teleported avatar according to the degree of gaze accuracy.

The remainder of our paper is structured as follows. Section 2 describes work related to our research. Section 3 gives a system overview, and Section 4 gives the detailed implementation of the proposed method. Section 5 presents the experimental evaluation of the gaze matching accuracy of the teleported avatar and of its qualitative effectiveness, and reports the main results. Finally, in Section 6, we summarize the paper and conclude with directions for future work.

2. Related Works

Here, we outline three areas of research that are directly related to the main topic of our work (i.e., realistic tele-conference systems, MR-teleported avatars, and adaptive control in collaborative environments).

2.1. Realistic Tele-Conference Systems

One of the earliest pioneering studies on realistic tele-conference systems was “the office of the future”, which applied tele-conference systems through seamless spatial merging of multiple remote environments [9]. The basic idea of this research was to use rendered images of real surfaces as spatially immersive display spaces to view simultaneously captured remote sites. Recently, with the continued advances in 3D environment sensing technologies, this type of realistic tele-conference system has become much more feasible [2]. Thalmann et al. introduced a method to configure a collaborative system with various types of implementation, such as a human-like social robot and an autonomous virtual avatar [10]. Additionally, many previous researchers were concerned with immersive displays to visualize collaboration situations within a perceived shared space between remote sites, for example, a life-sized transparent 3D display [11], an optical see-through head-worn display [1], an environment projection of the remote users [12], and an auto-stereoscopic 3D display [13]. In our study, we were interested in environment differences and gaze control of the teleported avatar at the local site. To our knowledge, no comprehensive research has yet been done in this area.

2.2. Mixed Reality (MR) Teleported Avatars

Recently, with the development of real-time reconstruction technologies to create 3D models, such as live 3D avatars that resemble an actual user, video-based tele-conference systems have been evolving to support photorealistic 3D interactive avatars [1]. Beck et al. investigated a remote collaborative system in which the participants were captured using depth cameras and represented as realistic avatars in a shared environment [6]. More recently, Escolano et al. introduced Holoportation, a multi-camera technology for reconstructing a teleported avatar that allowed precise 3D-reconstructed models of participants to be transmitted to remote sites in real-time [14]. Shapiro et al. investigated a rapid capture system that can generate a scanned 3D model with texture blending using a depth camera [15]. Similarly, Facebook showcased a future social network service that uses cartoon-like characters in an MR world and captures the participant’s gestures and facial expressions, so that participants in different places can interact [2]. Feng et al. proposed an auto-rigging method for a teleported avatar that creates the avatar’s motion by rigging skeletal animation to represent a 3D character model [16].

Another related issue is the use of life-sized virtual avatars for communication. Jo et al. investigated the use of life-sized teleported avatars for communication in the MR space [2]. Display technologies capable of visualizing a life-sized virtual avatar, such as head-mounted MR devices, large monitors rotated to portrait orientation, and projection displays for large images, have become quite mature [8]. Kim et al. introduced an MR virtual human that can walk naturally in the real, physical space [17]. In their research, they used an optical see-through head-mounted display (HMD) to see the virtual human. The optical see-through HMD works on the principle of providing a spatially direct view of the physical world with a computer-generated object such as a virtual human [18].

2.3. Adaptive Control in Collaborative Environments

As previously mentioned, a natural representation that resolves the environmental differences of the shared MR space is needed to increase the effectiveness and quality of tele-conference systems [1]. Many researchers have been striving to align different physical layouts and adapt the teleported avatar to remote environments. Lehment et al. proposed an automatic alignment method that merges two remote rooms into a shared workspace [19]. Jo et al. focused on adapting the position and motion of the teleported avatar to the local MR space according to scene matching with the corresponding information [1]. Interestingly, their results showed that adapting to the differences between remote environments significantly improved the experience and communication effects for participants in MR tele-conference environments.

More recently, a consistent gaze direction has been recognized as one of the most important elements of effective face-to-face communication [20]. Pan et al. studied the effects of gaze direction with respect to the varying influence of display types, such as flat and spherical displays [21]. Erickson et al. investigated the effects of gaze visualization of a virtual human partner (or teleported avatar) with depth information; for example, the impact of depth gaze errors on collaboration, and the use of a gaze visualization method such as a ray or a cursor [22]. They found that the addition of a 3D cursor enhanced participants’ performance and achieved the highest preference rating.

To our knowledge, no comprehensive work has been done on gaze alignment of teleported avatars across different shared remote environments. However, we applied approaches from previous works, for example, scene matching and evaluation methods, to assess quality for future MR tele-conference systems.

3. System Overview and Approach

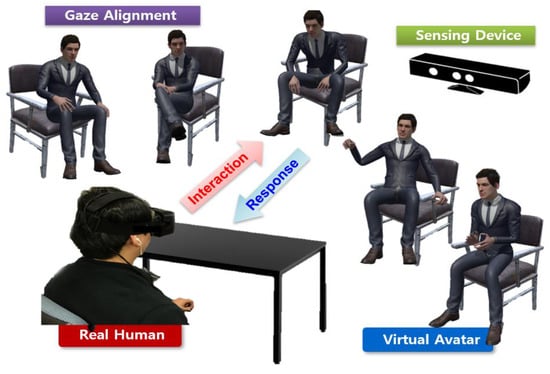

Figure 3 illustrates our system configuration and examples of the adaptive control of gaze alignment of the teleported avatar between tele-conference systems. As already mentioned, the gaze direction of the teleported avatar created from the remote participant needs to be adapted depending on the position of the real participant at the local site. Local environments contain various real objects on which the teleported avatar may be positioned, and we assumed that the avatar would be placed where such a real object was. After the position of the avatar is determined, the teleported avatar is made to match the gaze of the participant depending on the avatar’s position during the conversation, regardless of the gaze direction of the remote real participant. As shown in Figure 3, if there are five chairs at the local site, the place where the avatar can sit will be one of five candidates. The local participant wore an MR see-through headset, and a sensing device was installed to detect the position of the participant.

Figure 3.

The system configuration of our adaptive control for gaze alignment of the teleported avatar. A participant is wearing an MR see-through headset, and a sensing device is used for detecting the gaze direction of the participant. At this time, the virtual teleported avatar is made to match the gaze of the participant depending on the avatar’s position, associated with available real objects on which the avatar can sit during the conversation.

For automatic gaze alignment of the teleported avatar, we used an approach that organizes the real objects using a reference direction vector and an adaptive control vector (see Figure 4). The reference direction vector is defined by the position of the sensor and the position of the participant in the MR tele-conference environment. The adaptive control vector is defined by the position of the participant and the position of the teleported avatar. This can be applied at the remote site in the same way as at the local site.

Figure 4.

The gaze alignment approach of the teleported avatar using a reference direction in the left image and an adaptive control vector in the right image. The reference direction vector (left) is defined by the position of the sensor, Ps, and the position of the participant in the MR tele-conference environment, Pp. Here, Pp1, Pp2, and Pp3 are the candidate positions where the participant can sit. The adaptive control vector (right) is defined by the position of the participant, Pp, and the position of the teleported avatar, Pa. Here, Pa1, Pa2, Pa3, and Pa4 are the candidate positions where the teleported avatar can sit.

Figure 4 shows one sensor position, four candidate positions for the teleported avatar, and three candidate positions for the participant. The gaze direction is calculated from the reference direction and the adaptive control vector associated with the positions of the participant and the avatar. With this approach, gaze matching adapted to the local site can be applied using the gaze information of the tele-conference participant in different environments. Thus, our method can achieve natural gaze alignment and spatially correct interactions with the teleported avatar for an improved communication experience in the MR collaborative system. In the next section, we present the details of our implementation.
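As an illustration of the two vectors in Figure 4, the following Python sketch computes a reference direction from Ps to Pp and an adaptive control vector from Pp to Pa, and then derives the yaw the avatar would need in order to face the participant. The 2D floor-plane coordinates (x to the right, z forward), the orientation chosen for each vector, and all function names are assumptions made for illustration; this is not the implementation used in the paper.

```python
import math

def sub(a, b):
    """Component-wise difference a - b of two (x, z) floor-plane points."""
    return (a[0] - b[0], a[1] - b[1])

def reference_direction(p_sensor, p_participant):
    """Assumed orientation: vector from the sensor Ps to the participant Pp."""
    return sub(p_participant, p_sensor)

def adaptive_control_vector(p_participant, p_avatar):
    """Assumed orientation: vector from the participant Pp to the avatar Pa."""
    return sub(p_avatar, p_participant)

def yaw_deg(v):
    """Heading of v in degrees, measured from +z and positive toward +x."""
    return math.degrees(math.atan2(v[0], v[1]))

def avatar_gaze_yaw(p_participant, p_avatar):
    """Yaw that points the avatar at the participant (reverse of the control vector)."""
    c = adaptive_control_vector(p_participant, p_avatar)
    return yaw_deg((-c[0], -c[1]))

def signed_angle_deg(u, v):
    """Signed angle from u to v, wrapped to the interval [-180, 180)."""
    a = yaw_deg(v) - yaw_deg(u)
    return (a + 180.0) % 360.0 - 180.0

if __name__ == "__main__":
    ps, pp, pa = (0.0, 0.0), (0.0, 2.0), (1.0, 2.0)  # hypothetical positions in meters
    ref = reference_direction(ps, pp)
    ctl = adaptive_control_vector(pp, pa)
    print("angle between reference and control:", signed_angle_deg(ref, ctl))
    print("avatar gaze yaw:", avatar_gaze_yaw(pp, pa))
```

Because each candidate seat in Figure 4 is just another point, the same functions can be evaluated for every (Pp, Pa) pair without changing the code.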

4. Implementation

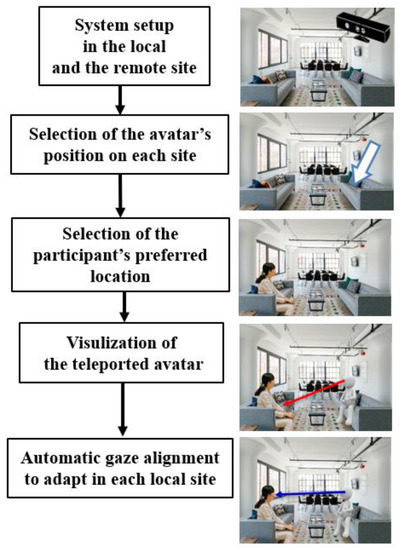

Figure 5 describes our automatic gaze alignment process for the teleported avatar in MR tele-conference systems. To adapt the gaze of the teleported avatar to the local site, we first installed a sensing device, a depth camera (Kinect [23]), at each local site, and for the 3D MR display, each participant wore a see-through head-mounted display (MS HoloLens 2 [24]). Then, in an offline process, the participant chose where the teleported avatar would be placed at each site. Next, the participant determined the preferred position where he/she wanted to sit for the MR tele-conference. The participant’s transformation information was extracted from the installed depth camera, and the participant’s gaze information was estimated for the corresponding teleported avatar. In our case, we used a rigged character as the avatar, which allowed its gaze to be moved.

Figure 5.

Automatic gaze alignment process of the teleported avatar.

Our implementation ran on 64-bit Windows 11 Pro with Unity3D (Unity Technologies [25]), which applied the adaptive gaze control of the avatar. We used the Unity3D C# scripting language to create the gaze control of the avatar with a Mixamo character [26]. Additionally, the motion datasets of Mixamo were configured so that the avatar acted similarly to a real person. In order to provide a better tele-conference experience, the teleported avatar should express behavior that resembles that of the remote participant. Future work should aim to achieve a more accurate full-body motion expression of the teleported avatar; in this paper, we focused primarily on the gaze motion.

Figure 6 shows examples of the results of automatic gaze alignment adapted to the positions of the participant and the avatar. Here, the avatar’s position was designated manually off-line, and the avatar’s gaze was adjusted to the participant’s position as estimated from the depth camera. The participant may freely move and sit in a desired place. According to our implementation results, the avatar’s gaze behavior looks natural as it adapts to the position of the participant. In the next section, we present experiments to validate our results in terms of gaze matching accuracy and co-presence with the teleported avatar.

Figure 6.

The results of the MR avatar’s gaze adjusted with the participant’s position. The participant’s position was found by the installed depth camera in the local environment. Except for the gaze motion of the avatar, animation of the avatar was played using motion datasets.

5. Experiments and Results

In this paper, we developed a teleported avatar in the MR tele-conference system and focused on gaze alignment of the avatar. We ran two comparative experiments to measure spatial accuracy in terms of gaze direction and evaluate the participant’s efficiency (e.g., co-presence), as judged by the correct gaze of the avatar in a communication situation.

5.1. Experiment 1: Spatial Accuracy of Gaze

First, we assessed the quantitative accuracy of gaze alignment to evaluate the effectiveness of our approach. We measured the gaze error at various positions of the avatar, changing the angle at which the avatar was positioned within the sensing area of the sensor. The Kinect V2 used in our experiment has a sweet spot and an angle of view that define the optimal area for the range of movements in people’s interactions [27]. The horizontal angle of view of the Kinect V2 is 70 degrees, and the sweet spot ranges from 0.8 m to 3.5 m [28].
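To make the sensing area concrete, the following Python sketch checks whether a floor-plane position falls inside the 70-degree horizontal angle and the 0.8 m to 3.5 m range quoted above. It assumes that the sensor sits at the origin facing the +z axis and that the range is measured as straight-line distance on the floor plane; both are simplifications for illustration, and the function and constant names are hypothetical.

```python
import math

KINECT_V2_HFOV_DEG = 70.0        # horizontal angle of view stated in the text
KINECT_V2_RANGE_M = (0.8, 3.5)   # sweet-spot range stated in the text

def in_sensing_area(x, z, hfov_deg=KINECT_V2_HFOV_DEG, range_m=KINECT_V2_RANGE_M):
    """Return True if the point (x, z) lies inside the assumed sensing wedge.

    Assumptions: sensor at the origin, optical axis along +z, and distance
    measured on the floor plane rather than along the depth axis.
    """
    if z <= 0.0:
        return False  # behind or beside the sensor
    distance = math.hypot(x, z)
    angle = math.degrees(math.atan2(x, z))  # 0 degrees is straight ahead
    near, far = range_m
    return abs(angle) <= hfov_deg / 2.0 and near <= distance <= far

if __name__ == "__main__":
    for point in [(0.0, 2.0), (1.0, 2.0), (2.0, 2.0), (0.0, 0.5), (0.0, 4.0)]:
        print(point, in_sensing_area(*point))
```

A check of this kind could be used to filter candidate seats before gaze alignment is attempted.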

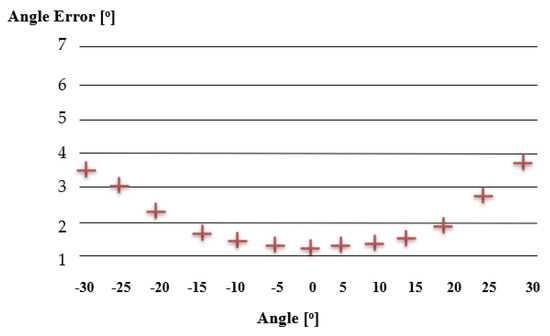

Figure 7 shows the results of gaze alignment error at various angles. This error was caused by the influence of the sensor in generating the depth map. A similar validation result was obtained in the work of Tolgyessy et al. [27]. Interestingly, our test showed that the error followed a mirror-symmetrical, U-shaped parabola. Our calculation method for adaptive gaze alignment, based on the reference direction and the adaptive control vector, did not affect the error (see Figure 4). Accordingly, if a rotational gain is applied to compensate for the sensor error, the gaze is expected to be matched accurately [29].

Figure 7.

The results of gaze alignment error using the Kinect V2. The horizontal axis is the difference of various angles used in the experiment, while the vertical axis indicates the angle error of gaze alignment.
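A mirror-symmetrical U-shaped error curve can be summarized by a parabola of the form e(θ) = aθ² + c. The Python sketch below fits that model by ordinary least squares and predicts the error at a given angle. The sample values are synthetic placeholders generated from known coefficients, not the measurements in Figure 7, and the symmetric-parabola model and function names are assumptions made for illustration.

```python
def fit_symmetric_parabola(angles_deg, errors_deg):
    """Least-squares fit of e = a * theta**2 + c (parabola symmetric about 0)."""
    xs = [t * t for t in angles_deg]  # regress the error on theta squared
    n = len(xs)
    mean_x = sum(xs) / n
    mean_e = sum(errors_deg) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0.0:
        raise ValueError("need at least two distinct absolute angles")
    sxe = sum((x - mean_x) * (e - mean_e) for x, e in zip(xs, errors_deg))
    a = sxe / sxx
    c = mean_e - a * mean_x
    return a, c

def predicted_error(angle_deg, a, c):
    """Error predicted by the fitted parabola at the given angle."""
    return a * angle_deg ** 2 + c

if __name__ == "__main__":
    # Synthetic placeholder data only; NOT the values measured in the paper.
    angles = [-30.0, -20.0, -10.0, 0.0, 10.0, 20.0, 30.0]
    errors = [0.002 * t * t + 1.0 for t in angles]
    a, c = fit_symmetric_parabola(angles, errors)
    print("a =", a, "c =", c)
    print("predicted error at 15 degrees:", predicted_error(15.0, a, c))
```

A fitted curve of this kind is one possible starting point for an angle-dependent correction such as the rotational gain mentioned in the text.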

5.2. Experiment 2: Qualitative Co-Presence Comparison

To evaluate the level of co-presence that participants felt with the teleported avatar in relation to gaze accuracy, we investigated how participants responded to two conditions (gaze alignment of the avatar on or off) and two different scenarios (idle or communication with the avatar), as indicated in Table 1. In the experiment, the participants were informed of the purpose of our study and given instructions on how to perform the experiment. We presented a scenario to the participants, similar to that in previous studies related to interaction experiments with virtual avatars. Specifically, we adopted a greeting scenario in a university as proposed by Kim and Jo [30]. Most of the participants were undergraduate students; in the communication scenario, the participants played the role of students while the virtual avatar played the role of a teacher. To measure co-presence effectively, the teleported avatar was operated by a pre-determined control method in the local environment. Twenty-six paid participants with an average age of 25 years took part in the within-subject experiment, and the four cases were presented to each participant in a random order. If the participant did not say or do anything in the communication scenario, the virtual avatar spoke and acted under the control of an operator behind a curtain, in the manner of Wizard-of-Oz testing [31].

Table 1.

The four conditions for experiments: the configured factors consisted of gaze alignment (gaze alignment on or off) and interaction scenario types (idle vs. communication).

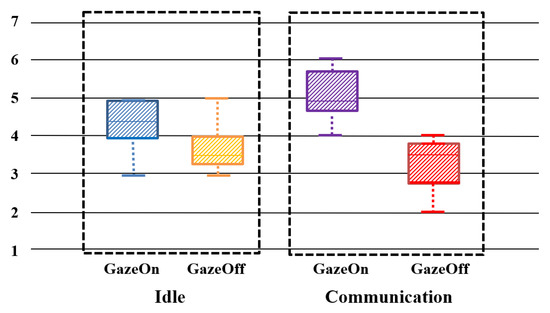
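The 2 × 2 design in Table 1 and its randomized presentation can be sketched in a few lines of Python. The condition labels, the per-participant seeding scheme, and the function names below are hypothetical; the paper states only that the four cases were presented in a random order.

```python
import itertools
import random

GAZE_ALIGNMENT = ("gaze_on", "gaze_off")   # factor 1: gaze alignment
SCENARIO = ("idle", "communication")       # factor 2: interaction scenario

def all_conditions():
    """Return the four (gaze alignment, scenario) combinations."""
    return list(itertools.product(GAZE_ALIGNMENT, SCENARIO))

def presentation_order(participant_id, base_seed=0):
    """Shuffle the four conditions reproducibly for one participant.

    Seeding with the participant ID is an assumption that makes each
    order repeatable; it is not described in the paper.
    """
    rng = random.Random(base_seed * 100003 + participant_id)
    order = all_conditions()
    rng.shuffle(order)
    return order

if __name__ == "__main__":
    for pid in range(1, 4):
        print(pid, presentation_order(pid))
```

Full counterbalancing (for example, a Latin square) is an alternative when order effects are a concern.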

After carrying out the task, the participant’s co-presence was measured by a co-presence questionnaire with a seven-point Likert scale [32]. We defined co-presence as the participant perceiving someone to be in the same space [33]. Figure 8 shows the questionnaire results on participants’ experience with respect to the gaze accuracy of the avatar. We compared the co-presence scores of the different situations pairwise using two-sample t-tests (i.e., gaze alignment on versus off in the idle situation, and gaze alignment on versus off in the communication situation). In the idle situation, the two-sample t-test revealed a significant effect of gaze alignment on the level of co-presence (t(44) = 5, p < 0.05). In the communication situation, we likewise found a significant effect of gaze alignment on the level of co-presence (t(44) = 8.04, p < 0.05). In the case of communication, our experimental study showed that participants felt a greater co-presence than in the idle situation.

Figure 8.

The survey results of user experience: gaze alignment versus without gaze alignment in an idle interaction between the participant and the avatar, and gaze alignment versus without gaze alignment in a communication interaction situation.
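For readers unfamiliar with the statistic reported above, the following Python sketch computes a two-sample t-statistic and its degrees of freedom from two lists of questionnaire scores. It assumes the pooled-variance (Student) form, which the paper does not specify, and the scores are invented placeholders rather than the study’s data.

```python
import math
from statistics import mean, variance

def pooled_t_test(sample_a, sample_b):
    """Return (t, df) for a two-sample t-test with pooled variance.

    Assumes independent samples with equal population variances;
    df = n_a + n_b - 2.
    """
    n_a, n_b = len(sample_a), len(sample_b)
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * variance(sample_a)
                  + (n_b - 1) * variance(sample_b)) / df
    std_err = math.sqrt(pooled_var * (1.0 / n_a + 1.0 / n_b))
    t = (mean(sample_a) - mean(sample_b)) / std_err
    return t, df

if __name__ == "__main__":
    # Placeholder seven-point Likert scores; NOT the data collected in the study.
    gaze_on = [5, 6, 7, 6, 5, 6]
    gaze_off = [3, 4, 3, 4, 3, 4]
    t, df = pooled_t_test(gaze_on, gaze_off)
    print(f"t({df}) = {t:.2f}")
```

The p-value would then be read from the t-distribution with the returned degrees of freedom, for example with a statistics package.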

5.3. Limitations

Many aspects of our implementation need to be improved to increase its practical applicability. Figure 9 shows examples of results for which the gaze alignment between the participant and the avatar needs to be made more automatic. This situation shows that cases beyond the sensing capability of the sensor must be handled, and a hybrid sensing method will be needed. Additionally, we plan to further extend the details of the avatar’s motions, such as facial expressions and responses to the situation, for a more natural interaction with the participant and an improved experience. Furthermore, we will improve the system to support more remote sites and more participants.

Figure 9.

An example of our results that need to be made more automatic for gaze alignment.

6. Conclusions and Future Works

Tele-conference systems are an important medium for communication across remote sites. Recently, future 3D MR tele-conference systems based on a teleported avatar have begun to be actively studied, and there have been many attempts at practical deployment in the field to increase the sense of being together in the same space for remote people. Ongoing research into these systems is necessary to resolve the environment differences, such as room size and spatial configuration of objects in real spaces, between the local and remote sites. Additionally, the teleported avatar needs established behavior for adapting to the other site. In this paper, we were interested in gaze alignment of the teleported avatar, and presented a novel method for adjusting and matching gaze information of the teleported avatar between remote sites in 3D MR tele-conference systems. Further, we ran comparative validation experiments to measure the spatial adaptation accuracy of the gaze alignment of the teleported avatar and to evaluate the participant’s communication efficiency in terms of co-presence with the teleported avatar, based on the degree of gaze accuracy.

Many elements of our system still require improvement before it is practically applicable. The various approaches need to be made more automatic to be usable in real life, including the handling of sensing limitations and realistic gaze animation. For future research, we also plan to extend our system and will continue to explore multiple remote sites in order to process multiple teleported avatars entering the local space at the same time. Finally, a comprehensive evaluation, such as measuring usability via several interaction scenarios with the teleported avatar, will be needed.

Author Contributions

J.-H.C. implemented the prototype and conducted qualitative experiments; D.J. designed the study conceptualization and mainly performed statistical analysis of the results and contributed to the writing of our paper. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the 2022 Research Fund of the University of Ulsan.

Institutional Review Board Statement

The participants’ information used in the experiment did not include personal information. Ethical review and approval were not required for the study because all participants were adults and participated of their own free will.

Informed Consent Statement

Written informed consent was obtained for the user interviews to publish this paper.

Data Availability Statement

The data presented in our study are available on request from the corresponding author.

Acknowledgments

We express sincere gratitude to the users who participated in our qualitative experiments. We also thank the reviewers for their valuable contributions.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Jo, D.; Kim, K.-H.; Kim, G.J. Spacetime: Adaptive control of the teleported avatar for improved AR tele-conference experience. Comput. Animat. Virtual Worlds 2015, 26, 259–269.

2. Jo, D.; Kim, K.-H.; Kim, G.J. Effects of avatar and background types on users’ co-presence and trust for mixed reality-based teleconference systems. In Proceedings of the 30th Conference on Computer Animation and Social Agents (CASA), Seoul, Korea, 22–24 May 2017.

3. Desilva, L.; Tahara, M.; Aizawa, K.; Hatori, M. A multiple person eye contact (MPEC) teleconferencing system. In Proceedings of the International Conference on Image Processing (ICIP), Washington, DC, USA, 23–26 October 1995.

4. Gu, J.-Y.; Lee, J.-G. Augmented reality technology-based dental radiography simulator for preclinical training and education on dental anatomy. J. Inf. Commun. Converg. Eng. 2019, 17, 274–278.

5. Rokhsaritalemi, S.; Niaraki, A.; Choi, S. A review on mixed reality: Current trends, challenges, and prospects. Appl. Sci. 2020, 10, 636.

6. Beck, S.; Kunert, A.; Kulik, A.; Froehlich, B. Immersive group-to-group telepresence. IEEE Trans. Vis. Comput. Graph. 2013, 19, 616–625.

7. Zhao, S. Toward a taxonomy of copresence. Presence Teleoperators Virtual Environ. 2003, 12, 445–455.

8. Maimone, A.; Yang, X.; Dierk, N.; State, A.; Dou, M.; Fuchs, H. General-purpose telepresence with head-worn optical see-through displays and projector-based lighting. In Proceedings of the IEEE Virtual Reality, Orlando, FL, USA, 16–20 March 2013.

9. Raskar, R.; Welch, G.; Cutts, M.; Lake, A.; Stesin, L.; Fuchs, H. The office of the future: A unified approach to image-based modeling and spatially immersive displays. In Proceedings of the SIGGRAPH, Orlando, FL, USA, 19–24 July 1998.

10. Thalmann, N.M.; Yumak, Z.; Beck, A. Autonomous virtual humans and social robots in telepresence. In Proceedings of the 16th International Workshop on Multimedia Signal Processing (MMSP), Jakarta, Indonesia, 22–24 September 2014.

11. Pluess, C.; Ranieri, N.; Bazin, J.-C.; Martin, T.; Laffont, P.-Y.; Popa, T.; Gross, M. An immersive bidirectional system for life-size 3D communication. In Proceedings of the 29th Computer Animation and Social Agents (CASA), Geneva, Switzerland, 23–25 May 2016.

12. Pejsa, T.; Kantor, J.; Benko, H.; Ofek, E.; Wilson, A. Room2Room: Enabling life-size telepresence in a projected augmented reality environment. In Proceedings of the 19th ACM Conference on Computer-Supported Cooperative Work and Social Computing (CSCW), San Francisco, CA, USA, 27 February–2 March 2016.

13. Jones, A.; Lang, M.; Fyffe, G.; Yu, X.; Busch, J.; Mcdowall, I.; Debevec, P. Achieving eye contact in a one-to-many 3D video teleconferencing system. ACM Trans. Graph. 2009, 28, 64.

14. Escolano, S.-O.; Rhemann, C.; Fanello, S.; Chang, W.; Kowdle, A.; Degtyarev, Y.; Kim, D.; Davidson, P.; Khamis, S.; Dou, M.; et al. Holoportation: Virtual 3D teleportation in real-time. In Proceedings of the ACM UIST, 29th Annual Symposium on User Interface Software and Technology, Tokyo, Japan, 16–19 October 2016.

15. Feng, A.; Shapiro, A.; Ruizhe, W.; Bolas, M.; Medioni, G.; Suma, E. Rapid avatar capture and simulation using commodity depth sensors. In Proceedings of the SIGGRAPH, Vancouver, BC, Canada, 10–14 August 2014.

16. Feng, A.; Casas, D.; Shapiro, A. Avatar reshaping and automatic rigging using a deformable model. In Proceedings of the 8th ACM SIGGRAPH Conference on Motion in Games (MIG), Paris, France, 16–18 November 2015.

17. Kim, K.; Boelling, L.; Haesler, S.; Bailenson, J.N.; Bruder, G.; Welch, G.F. Does a Digital Assistant Need a Body? The Influence of Visual Embodiment and Social Behavior on the Perception of Intelligent Virtual Agents in AR. In Proceedings of the 17th IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Munich, Germany, 16–20 October 2018.

18. Rolland, J.P.; Henri, F. Optical versus video see-through head-mounted displays in medical visualization. Presence Teleoperators Virtual Environ. 2000, 9, 287–309.

19. Lehment, N.; Merget, D.; Rigoll, G. Creating automatically aligned consensus realities for AR videoconferencing. In Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Munich, Germany, 10–12 September 2014.

20. Taylor, M.; Itier, R.; Allison, T.; Edmonds, G. Direction of gaze effects on early face processing: Eyes-only versus full faces. Cogn. Brain Res. 2001, 10, 333–340.

21. Pan, Y.; Steptoe, W.; Steed, A. Comparing flat and spherical displays in a trust scenario in avatar-mediated interaction. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Toronto, Canada, 26 April–1 May 2014.

22. Erickson, A.; Norouzi, N.; Kim, K.; Bruder, G.; Welch, G. Effects of depth information on visual target identification task performance in shared gaze environments. IEEE Trans. Vis. Comput. Graph. 2020, 26, 1934–1944.

23. Zeng, W. Microsoft Kinect sensor and its effect. IEEE Multimed. 2012, 19, 4–10.

24. Soares, I.; Sousa, R.; Petry, M.; Moreira, A. Accuracy and repeatability tests on HoloLens 2 and HTC Vive. Multimodal Technol. Interact. 2021, 5, 47.

25. Xianwen, Z. Behavior tree design of intelligent behavior of non-player character (NPC) based on Unity3D. J. Intell. Fuzzy Syst. 2019, 37, 6071–6079.

26. Choi, C. A case study of short animation production using third party program in university animation curriculum. Int. J. Internet Broadcast. Commun. 2021, 13, 97–102.

27. Tolgyessy, M.; Dekan, M.; Chovanec, L.; Hubinsky, P. Evaluation of the Azure Kinect and its comparison to Kinect V1 and Kinect V2. Sensors 2021, 21, 413.

28. Kurillo, G.; Hemingway, E.; Cheng, M.; Cheng, L. Evaluating the accuracy of the Azure Kinect and Kinect V2. Sensors 2022, 22, 2469.

29. Paludan, A.; Elbaek, J.; Mortensen, M.; Zobbe, M. Disguising rotational gain for redirected walking in virtual reality: Effect of visual density. In Proceedings of the IEEE Virtual Reality, Greenville, SC, USA, 19–23 March 2016.

30. Kim, D.; Jo, D. Effects on co-presence of a virtual human: A comparison of display and interaction types. Electronics 2022, 11, 367.

31. Shin, K.-S.; Kim, H.; Jo, D. Exploring the effects of the virtual human and physicality on co-presence and emotional response. J. Korea Soc. Comput. Inf. 2019, 24, 67–71.

32. Joshi, A.; Kale, S.; Chandel, S.; Pal, D. Likert scale: Explored and explained. Curr. J. Appl. Sci. Technol. 2015, 7, 396–403.

33. Slater, M. Measuring presence: A response to the Witmer and Singer presence questionnaire. Presence Teleoperators Virtual Environ. 1999, 8, 560–565.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).