Segmentation of Liver Tumor in CT Scan Using ResU-Net

Abstract

1. Introduction

2. Related Work

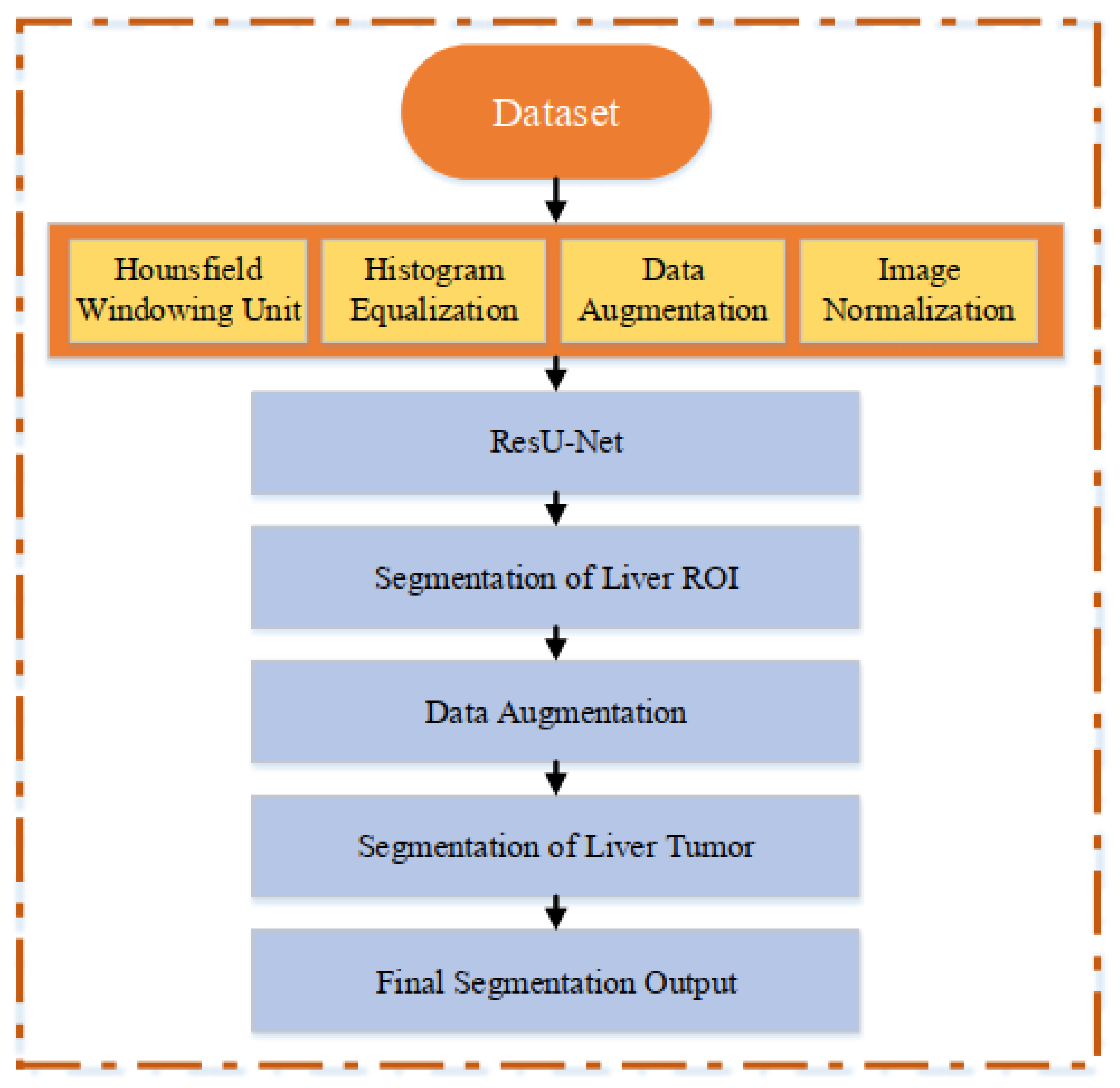

3. Material and Method

3.1. Liver CT Scan Dataset

3.2. Image Pre-Processing

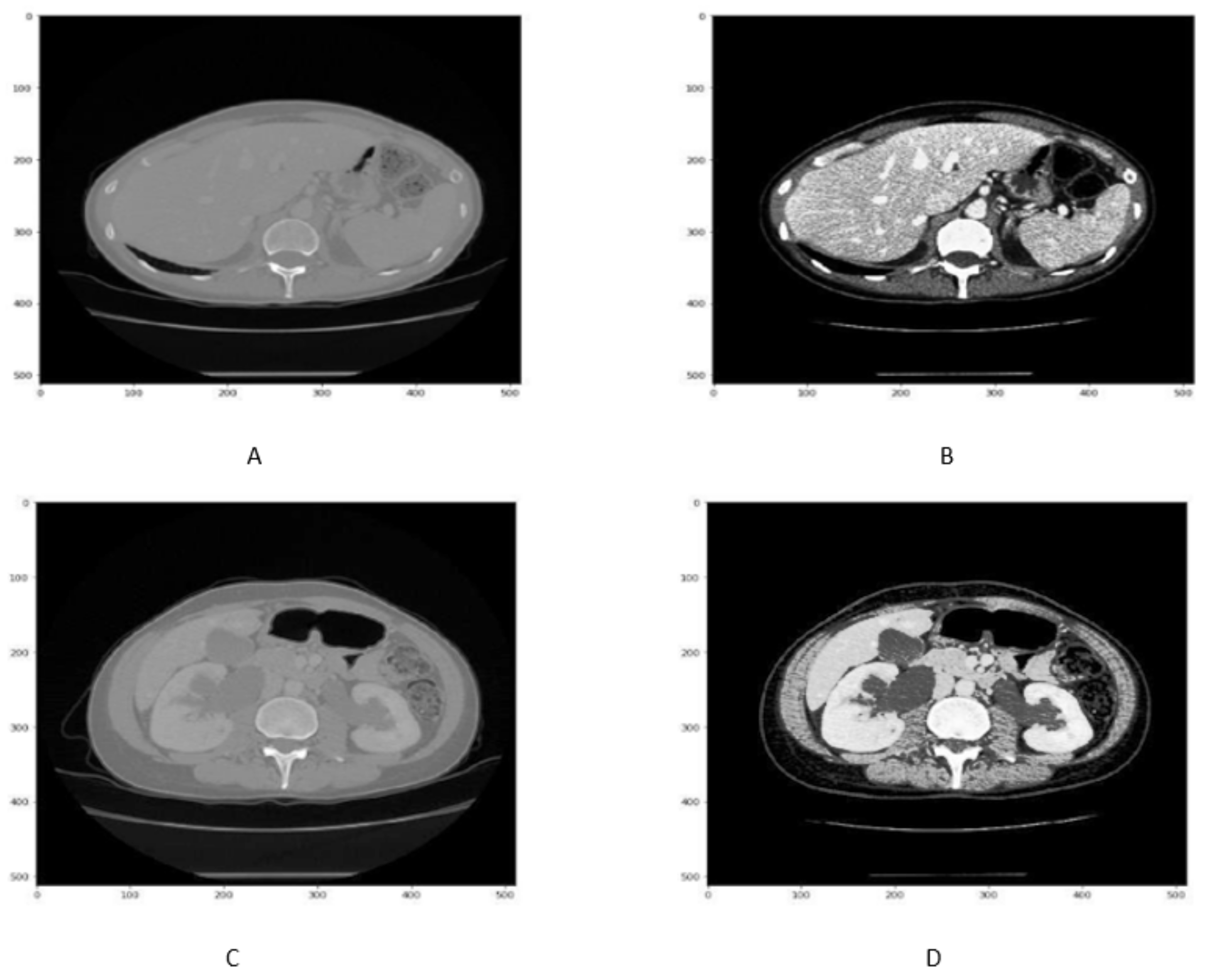

3.2.1. Hounsfield Windowing
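As an illustration of what Hounsfield windowing typically involves, the sketch below clips a slice of HU values to a window and rescales it to [0, 1]. The function name `apply_hu_window` and the window bounds of −100 to 400 HU are assumptions chosen for illustration, not values taken from this paper; the toy slice uses nominal HU values such as those in the HU table (liver around 40 to 60, water 0, air −1000). In practice an array library would normally replace the nested lists.

```python
def apply_hu_window(slice_hu, lower=-100.0, upper=400.0):
    """Clip a 2-D slice of HU values to [lower, upper] and rescale to [0, 1].

    The default bounds are illustrative assumptions, not the paper's settings.
    """
    if upper <= lower:
        raise ValueError("upper must be greater than lower")
    span = upper - lower
    return [
        [(min(max(v, lower), upper) - lower) / span for v in row]
        for row in slice_hu
    ]


# Toy slice: air, fat, water on the first row; liver, bone, window edge on the second.
toy_slice = [[-1000, -100, 0], [50, 1000, 400]]
windowed = apply_hu_window(toy_slice)
```

Everything below the window (air) collapses to 0 and everything above it (bone) to 1, so the available intensity range is spent on soft tissue.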

3.2.2. Histogram Equalization
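A minimal sketch of global histogram equalization on an 8-bit grayscale slice follows. Whether the paper uses this global form or an adaptive variant is not stated here, so this should be read as the textbook technique under that assumption; `equalize_histogram` is a hypothetical helper name.

```python
def equalize_histogram(image, levels=256):
    """Global histogram equalization for a 2-D list of ints in [0, levels - 1]."""
    # Count how often each intensity level occurs.
    hist = [0] * levels
    for row in image:
        for v in row:
            hist[v] += 1

    # Cumulative distribution of intensities.
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)

    total = cdf[-1]
    cdf_min = next((c for c in cdf if c > 0), 0)
    if total == cdf_min:
        # Empty or constant image: there is nothing to spread out.
        return [row[:] for row in image]

    # Lookup table that makes the output CDF approximately linear.
    lut = [
        max(0, round((c - cdf_min) / (total - cdf_min) * (levels - 1)))
        for c in cdf
    ]
    return [[lut[v] for v in row] for row in image]


toy = [[52, 52, 60], [60, 60, 200]]
equalized = equalize_histogram(toy)
```

The three occupied levels are stretched across the full 0 to 255 range, which is the contrast gain the technique is meant to provide.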

3.3. Data Augmentation

3.3.1. Reflection

3.3.2. Rotation
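The reflection and rotation augmentations named in Sections 3.3.1 and 3.3.2 can be sketched as below. The key point for segmentation is that the same geometric transform must be applied to the CT slice and to its label mask. The helper names and the 90-degree rotation step are assumptions for illustration; the rotation angles used in the paper are not given here.

```python
def flip_horizontal(grid):
    """Mirror a 2-D grid left to right."""
    return [row[::-1] for row in grid]


def flip_vertical(grid):
    """Mirror a 2-D grid top to bottom."""
    return [row[:] for row in grid[::-1]]


def rotate_90_clockwise(grid):
    """Rotate a 2-D grid by 90 degrees clockwise."""
    return [list(col) for col in zip(*grid[::-1])]


def augment_pair(image, mask, transform):
    """Apply one transform to both the image and its mask so they stay aligned."""
    return transform(image), transform(mask)


image = [[1, 2], [3, 4]]
mask = [[0, 1], [0, 0]]
rot_image, rot_mask = augment_pair(image, mask, rotate_90_clockwise)
```

After the rotation, the single foreground pixel of the mask still sits on the pixel that held the value 2 in the image.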

3.4. Image Normalization

3.5. ResU-Net Architecture for Liver CT Scan Segmentation

3.6. Loss Function

3.7. Evaluation Metric

3.7.1. Dice Similarity Coefficient (DSC)
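For reference, the Dice similarity coefficient is commonly defined as twice the overlap between prediction and ground truth divided by the sum of their sizes. The sketch below computes it for flattened binary masks; the function name and the choice to return 1.0 when both masks are empty are assumptions, not details from the paper.

```python
def dice_coefficient(pred, truth):
    """DSC = 2 * |P and T| / (|P| + |T|) for flat binary masks of 0s and 1s."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(p * t for p, t in zip(pred, truth))
    size_sum = sum(pred) + sum(truth)
    if size_sum == 0:
        # Both masks empty: treated here as perfect agreement (an assumption).
        return 1.0
    return 2.0 * intersection / size_sum


pred = [1, 1, 0, 0, 1]
truth = [1, 0, 0, 1, 1]
dsc = dice_coefficient(pred, truth)  # 2 * 2 / (3 + 3)
```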

3.7.2. Accuracy

3.7.3. Specificity

3.7.4. Precision
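Accuracy, specificity, and precision (Sections 3.7.2 to 3.7.4) are all ratios of the pixel-wise confusion counts. A minimal sketch using the standard definitions is given below; the helper names are hypothetical, and returning 0.0 for an undefined ratio is a convention chosen here rather than one stated in the paper.

```python
def confusion_counts(pred, truth):
    """Return (tp, fp, tn, fn) for flat binary masks of 0s and 1s."""
    tp = fp = tn = fn = 0
    for p, t in zip(pred, truth):
        if p and t:
            tp += 1
        elif p and not t:
            fp += 1
        elif t:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def pixel_metrics(pred, truth):
    """Accuracy, specificity, and precision from the confusion counts."""
    tp, fp, tn, fn = confusion_counts(pred, truth)
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "specificity": tn / (tn + fp) if (tn + fp) else 0.0,
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
    }


metrics = pixel_metrics([1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 1, 0])
```

Because background pixels dominate a CT slice, accuracy and specificity tend to be high even for weak segmentations, which is one reason overlap measures are reported alongside them.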

3.7.5. Relative Volume Difference (RVD)

3.7.6. Volume Overlap Error (VOE)
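The two volume-based metrics from Sections 3.7.5 and 3.7.6 can be sketched as follows, assuming the usual definitions: VOE is one minus the Jaccard index, and RVD is the signed difference between predicted and reference volume relative to the reference. The function names are hypothetical, and both functions return fractions; multiplying by 100 gives the percentage form that some works report.

```python
def volume_overlap_error(pred, truth):
    """VOE = 1 - |P and T| / |P or T| for flat binary masks of 0s and 1s."""
    intersection = sum(p * t for p, t in zip(pred, truth))
    union = sum(1 for p, t in zip(pred, truth) if p or t)
    if union == 0:
        # Both masks empty: no overlap error (an assumption).
        return 0.0
    return 1.0 - intersection / union


def relative_volume_difference(pred, truth):
    """RVD = (|P| - |T|) / |T|; positive values indicate over-segmentation."""
    truth_volume = sum(truth)
    if truth_volume == 0:
        raise ValueError("RVD is undefined for an empty reference mask")
    return (sum(pred) - truth_volume) / truth_volume


pred = [1, 1, 1, 0, 1]
truth = [1, 0, 0, 1, 1]
voe = volume_overlap_error(pred, truth)        # 1 - 2/5
rvd = relative_volume_difference(pred, truth)  # (4 - 3) / 3
```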

4. Result and Discussion

4.1. Experimental Set-Up

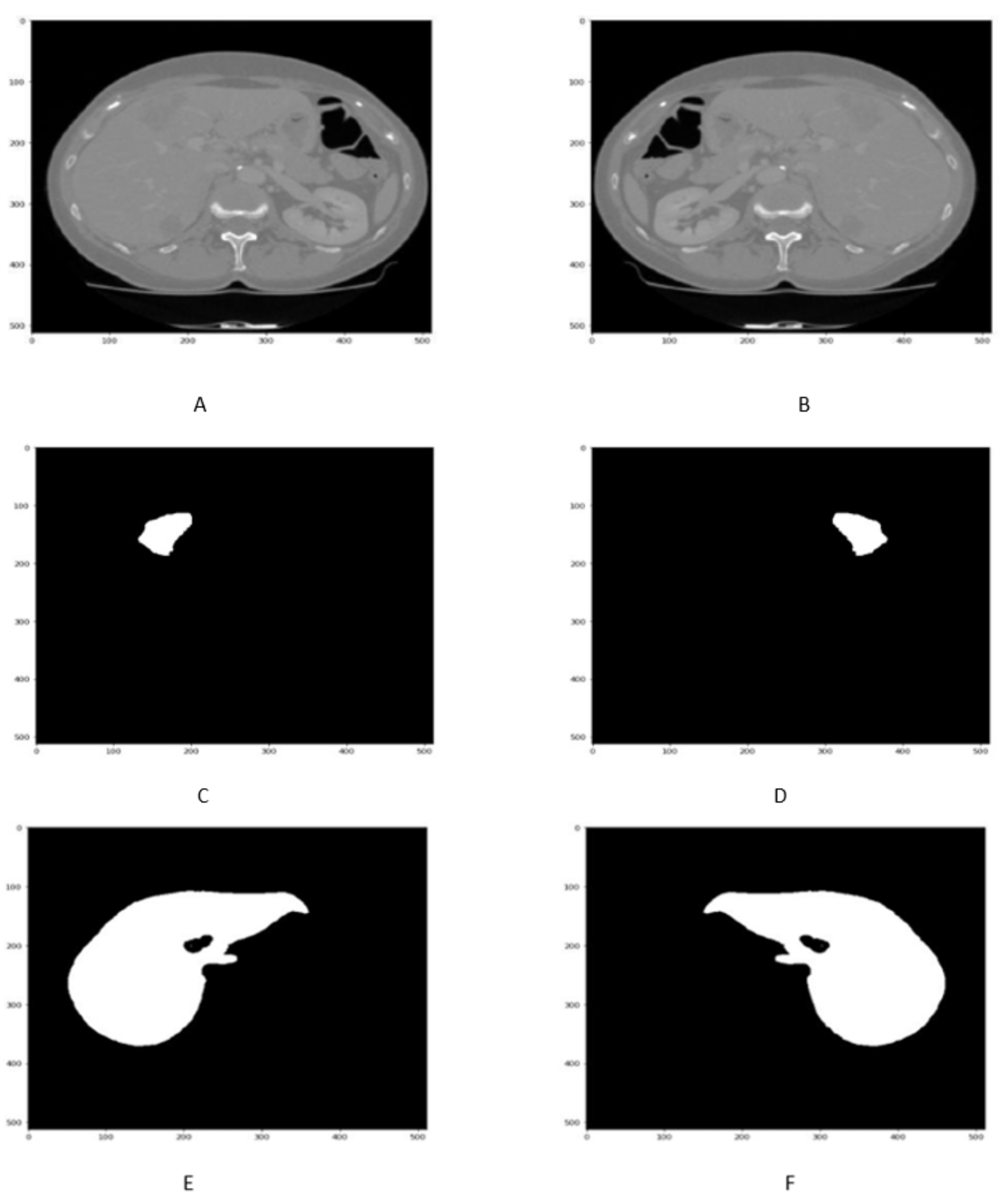

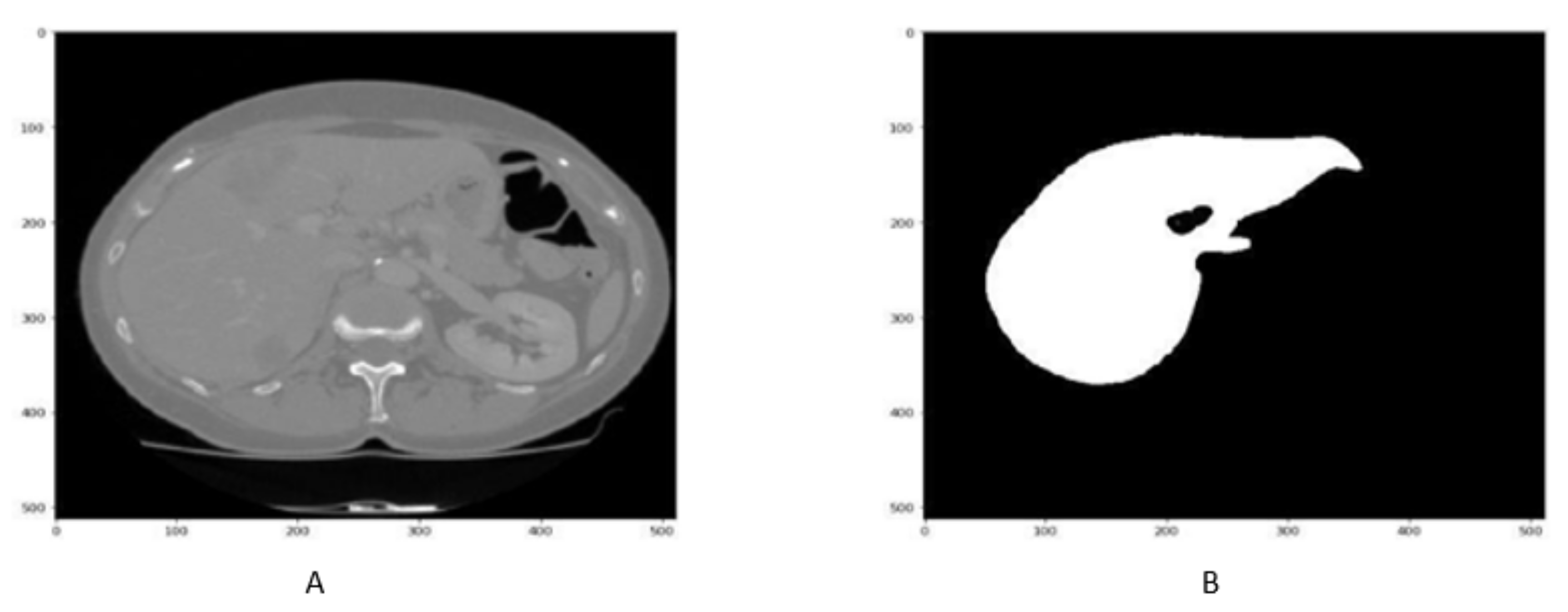

4.2. Liver Segmentation Results

4.3. Tumor Segmentation Results

4.4. Comparative Analysis

4.4.1. Segmentation of Liver

4.4.2. Segmentation of Liver Tumor

5. Conclusions

Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Torre, L.A.; Bray, F.; Siegel, R.L.; Ferlay, J.; Lortet-Tieulent, J.; Jemal, A. Global cancer statistics, 2012. CA A Cancer J. Clin. 2015, 65, 87–108.

- Bray, F.; Ferlay, J.; Soerjomataram, I.; Siegel, R.L.; Torre, L.A.; Jemal, A. Global cancer statistics 2018: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA A Cancer J. Clin. 2018, 68, 394–424.

- Bilic, P.; Christ, P.F.; Vorontsov, E.; Chlebus, G.; Chen, H.; Dou, Q.; Fu, C.W.; Han, X.; Heng, P.A.; Hesser, J.; et al. The liver tumor segmentation benchmark (LiTS). arXiv 2019, arXiv:1901.04056.

- Lee, H.; Kim, M.; Do, S. Practical window setting optimization for medical image deep learning. arXiv 2018, arXiv:1812.00572.

- Li, D.; Liu, L.; Chen, J.; Li, H.; Yin, Y. A multistep liver segmentation strategy by combining level set based method with texture analysis for CT images. In Proceedings of the 2014 International Conference on Orange Technologies, Xi’an, China, 20–23 September 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 109–112.

- Xu, Z.; Burke, R.P.; Lee, C.P.; Baucom, R.B.; Poulose, B.K.; Abramson, R.G.; Landman, B.A. Efficient multi-atlas abdominal segmentation on clinically acquired CT with SIMPLE context learning. Med. Image Anal. 2015, 24, 18–27.

- Song, X.; Cheng, M.; Wang, B.; Huang, S.; Huang, X.; Yang, J. Adaptive fast marching method for automatic liver segmentation from CT images. Med. Phys. 2013, 40, 091917.

- Maklad, A.S.; Matsuhiro, M.; Suzuki, H.; Kawata, Y.; Niki, N.; Satake, M.; Moriyama, N.; Utsunomiya, T.; Shimada, M. Blood vessel-based liver segmentation using the portal phase of an abdominal CT dataset. Med. Phys. 2013, 40, 113501.

- Peng, J.; Dong, F.; Chen, Y.; Kong, D. A region-appearance-based adaptive variational model for 3D liver segmentation. Med. Phys. 2014, 41, 043502.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25.

- Khan, Z.; Yahya, N.; Alsaih, K.; Al-Hiyali, M.I.; Meriaudeau, F. Recent Automatic Segmentation Algorithms of MRI Prostate Regions: A Review. IEEE Access 2021, 9, 97878–97905.

- Zhou, T.; Li, L.; Bredell, G.; Li, J.; Konukoglu, E. Quality-aware memory network for interactive volumetric image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Strasbourg, France, 27 September–1 October 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 560–570.

- Christ, P.F.; Ettlinger, F.; Grün, F.; Elshaera, M.E.A.; Lipkova, J.; Schlecht, S.; Ahmaddy, F.; Tatavarty, S.; Bickel, M.; Bilic, P.; et al. Automatic liver and tumor segmentation of CT and MRI volumes using cascaded fully convolutional neural networks. arXiv 2017, arXiv:1702.05970.

- Li, W.; Jia, F.; Hu, Q. Automatic segmentation of liver tumor in CT images with deep convolutional neural networks. J. Comput. Commun. 2015, 3, 146.

- Hu, P.; Wu, F.; Peng, J.; Liang, P.; Kong, D. Automatic 3D liver segmentation based on deep learning and globally optimized surface evolution. Phys. Med. Biol. 2016, 61, 8676.

- Li, X.; Chen, H.; Qi, X.; Dou, Q.; Fu, C.W.; Heng, P.A. H-DenseUNet: Hybrid densely connected UNet for liver and tumor segmentation from CT volumes. IEEE Trans. Med. Imaging 2018, 37, 2663–2674.

- Jiang, H.; Shi, T.; Bai, Z.; Huang, L. AHCNet: An application of attention mechanism and hybrid connection for liver tumor segmentation in CT volumes. IEEE Access 2019, 7, 24898–24909.

- Chen, Y.; Wang, K.; Liao, X.; Qian, Y.; Wang, Q.; Yuan, Z.; Heng, P.A. Channel-Unet: A spatial channel-wise convolutional neural network for liver and tumors segmentation. Front. Genet. 2019, 10, 1110.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

- Xiong, H.; Liu, S.; Sharan, R.V.; Coiera, E.; Berkovsky, S. Weak label based Bayesian U-Net for optic disc segmentation in fundus images. Artif. Intell. Med. 2022, 126, 102261.

- Karthik, R.; Radhakrishnan, M.; Rajalakshmi, R.; Raymann, J. Delineation of ischemic lesion from brain MRI using attention gated fully convolutional network. Biomed. Eng. Lett. 2021, 11, 3–13.

- Manjunath, R.; Kwadiki, K. Automatic liver and tumour segmentation from CT images using Deep learning algorithm. Results Control Optim. 2022, 6, 100087.

- Amin, J.; Anjum, M.A.; Sharif, M.; Kadry, S.; Nadeem, A.; Ahmad, S.F. Liver Tumor Localization Based on YOLOv3 and 3D-Semantic Segmentation Using Deep Neural Networks. Diagnostics 2022, 12, 823.

- Jin, Q.; Meng, Z.; Sun, C.; Cui, H.; Su, R. RA-UNet: A hybrid deep attention-aware network to extract liver and tumor in CT scans. Front. Bioeng. Biotechnol. 2020, 1471.

- Ayalew, Y.A.; Fante, K.A.; Mohammed, M.A. Modified U-Net for liver cancer segmentation from computed tomography images with a new class balancing method. BMC Biomed. Eng. 2021, 3, 4.

- Almotairi, S.; Kareem, G.; Aouf, M.; Almutairi, B.; Salem, M.A.M. Liver tumor segmentation in CT scans using modified SegNet. Sensors 2020, 20, 1516.

- Soler, L.; Hostettler, A.; Agnus, V.; Charnoz, A.; Fasquel, J.; Moreau, J.; Osswald, A.; Bouhadjar, M.; Marescaux, J. 3D Image Reconstruction for Comparison of Algorithm Database: A Patient-Specific Anatomical and Medical Image Database; IRCAD: Strasbourg, France, 2010.

- Goshtasby, A.; Satter, M. An adaptive window mechanism for image smoothing. Comput. Vis. Image Underst. 2008, 111, 155–169.

- Khalifa, N.E.; Loey, M.; Mirjalili, S. A comprehensive survey of recent trends in deep learning for digital images augmentation. Artif. Intell. Rev. 2021, 55, 2351–2377.

- Zhou, X.Y.; Yang, G.Z. Normalization in training U-Net for 2-D biomedical semantic segmentation. IEEE Robot. Autom. Lett. 2019, 4, 1792–1799.

- Christ, P.F.; Elshaer, M.E.A.; Ettlinger, F.; Tatavarty, S.; Bickel, M.; Bilic, P.; Rempfler, M.; Armbruster, M.; Hofmann, F.; D’Anastasi, M.; et al. Automatic liver and lesion segmentation in CT using cascaded fully convolutional neural networks and 3D conditional random fields. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Athens, Greece, 17–21 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 415–423.

- Litjens, G.; Toth, R.; van de Ven, W.; Hoeks, C.; Kerkstra, S.; van Ginneken, B.; Vincent, G.; Guillard, G.; Birbeck, N.; Zhang, J.; et al. Evaluation of prostate segmentation algorithms for MRI: The PROMISE12 challenge. Med. Image Anal. 2014, 18, 359–373.

- Khan, Z.; Yahya, N.; Alsaih, K.; Meriaudeau, F. Zonal segmentation of prostate T2W-MRI using atrous convolutional neural network. In Proceedings of the 2019 IEEE Student Conference on Research and Development (SCOReD), Bandar Seri Iskandar, Malaysia, 15–17 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 95–99.

- Crum, W.R.; Camara, O.; Hill, D.L. Generalized overlap measures for evaluation and validation in medical image analysis. IEEE Trans. Med. Imaging 2006, 25, 1451–1461.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Rafiei, S.; Nasr-Esfahani, E.; Najarian, K.; Karimi, N.; Samavi, S.; Soroushmehr, S.R. Liver segmentation in CT images using three dimensional to two dimensional fully convolutional network. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 2067–2071.

- Han, X. Automatic liver lesion segmentation using a deep convolutional neural network method. arXiv 2017, arXiv:1704.07239.

- Wu, W.; Wu, S.; Zhou, Z.; Zhang, R.; Zhang, Y. 3D liver tumor segmentation in CT images using improved fuzzy C-means and graph cuts. BioMed Res. Int. 2017, 2017, 5207685.

- Sun, C.; Guo, S.; Zhang, H.; Li, J.; Chen, M.; Ma, S.; Jin, L.; Liu, X.; Li, X.; Qian, X. Automatic segmentation of liver tumors from multiphase contrast-enhanced CT images based on FCNs. Artif. Intell. Med. 2017, 83, 58–66.

| Body organ or substance | HU value |
|---|---|
| Bone | 1000 |
| Liver | 40 to 60 |
| White Matter | 46 |
| Grey Matter | 43 |
| Blood | 40 |
| Muscle | 10 to 40 |
| Kidney | 30 |
| Cerebrospinal | 15 |
| Water | 0 |
| Fat | −50 to −100 |
| Air | −1000 |

| S.No | Evaluation Metric | ResU-Net |
|---|---|---|
| 1 | DSC | 0.9781 |
| 2 | Accuracy | 0.991 |
| 3 | Precision | 0.982 |
| 4 | Specificity | 0.961 |
| 5 | VOE | 0.13 |
| 6 | RVD | 0.018 |

| S.No | Evaluation Metric | ResU-Net |
|---|---|---|
| 1 | DSC | 0.893 |
| 2 | Accuracy | 0.97 |
| 3 | Precision | 0.950 |
| 4 | Specificity | 0.957 |
| 5 | VOE | 13.15 |
| 6 | RVD | 7.23 |

| Approach | DSC | Accuracy | Precision | Specificity | VOE | RVD |
|---|---|---|---|---|---|---|
| Christ et al. [31] | 0.94 | - | - | - | 10.7 | −1.4 |
| Rafiei et al. [36] | 0.935 | - | - | - | 56.47 | - |
| Li et al. [14] | 0.80 | - | 0.826 | - | 29.04 | - |
| Qiangguo Jin et al. [24] | 0.971 | - | - | - | 0.074 | 0.002 |
| Sultan Almotairi et al. [26] | - | 0.988 | - | - | - | - |
| Yodit Abebe Ayalew et al. [25] | 0.961 | 0.993 | - | - | - | - |
| Proposed ResU-Net | 0.9781 | 0.991 | 0.982 | 0.9618 | 0.13 | 0.018 |

| Approach | DSC | Accuracy | Precision | Specificity | VOE | RVD |
|---|---|---|---|---|---|---|
| Christ et al. [13] | 0.823 | - | - | - | - | - |
| Sun et al. [39] | - | - | - | - | 15.6 | 5.8 |
| Wu et al. [38] | 0.83 | - | - | - | 29.04 | −2.20 |
| Qiangguo Jin et al. [24] | 0.595 | - | - | - | 0.380 | −0.152 |
| Han et al. [37] | 0.67 | - | - | - | - | 0.40 |
| Yodit Abebe Ayalew et al. [25] | 0.74 | 0.995 | - | - | - | - |
| Proposed ResU-Net | 0.893 | 0.97 | 0.950 | 0.957 | 13.15 | 7.23 |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sabir, M.W.; Khan, Z.; Saad, N.M.; Khan, D.M.; Al-Khasawneh, M.A.; Perveen, K.; Qayyum, A.; Azhar Ali, S.S. Segmentation of Liver Tumor in CT Scan Using ResU-Net. Appl. Sci. 2022, 12, 8650. https://doi.org/10.3390/app12178650