On Managing Knowledge for MAPE-K Loops in Self-Adaptive Robotics Using a Graph-Based Runtime Model

Abstract

1. Introduction

2. Related Work

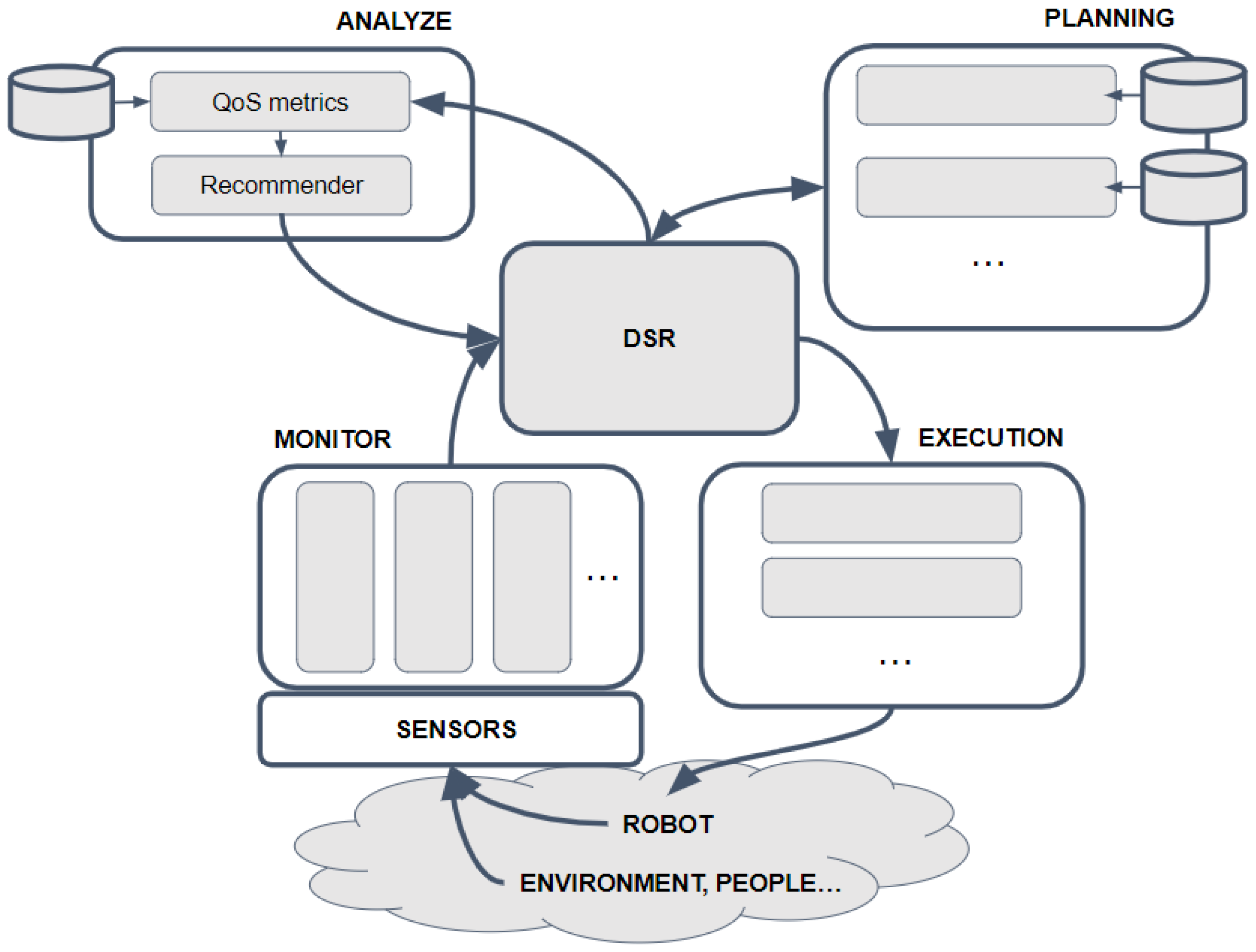

3. Extending the MAPE Scheme: The DSR

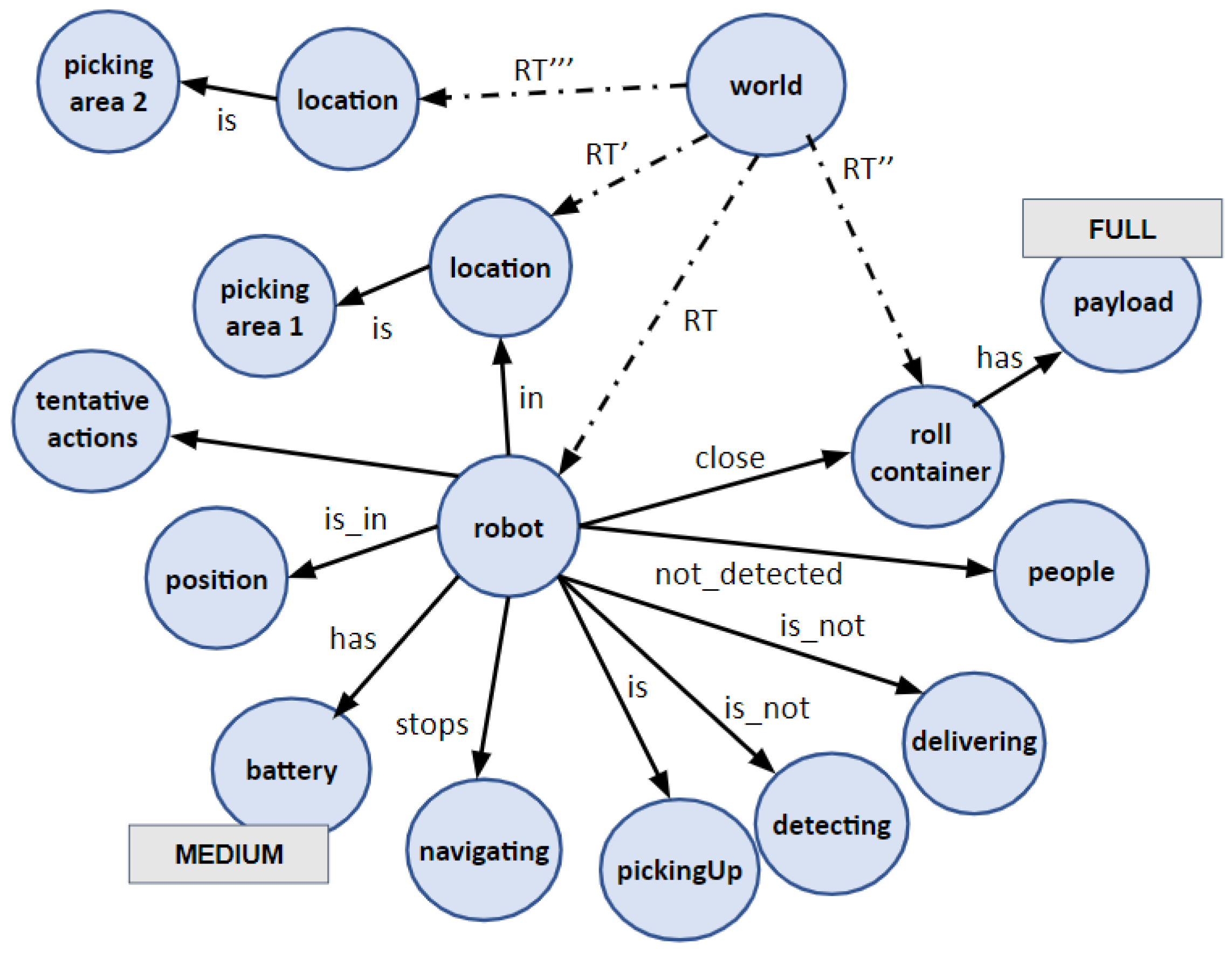

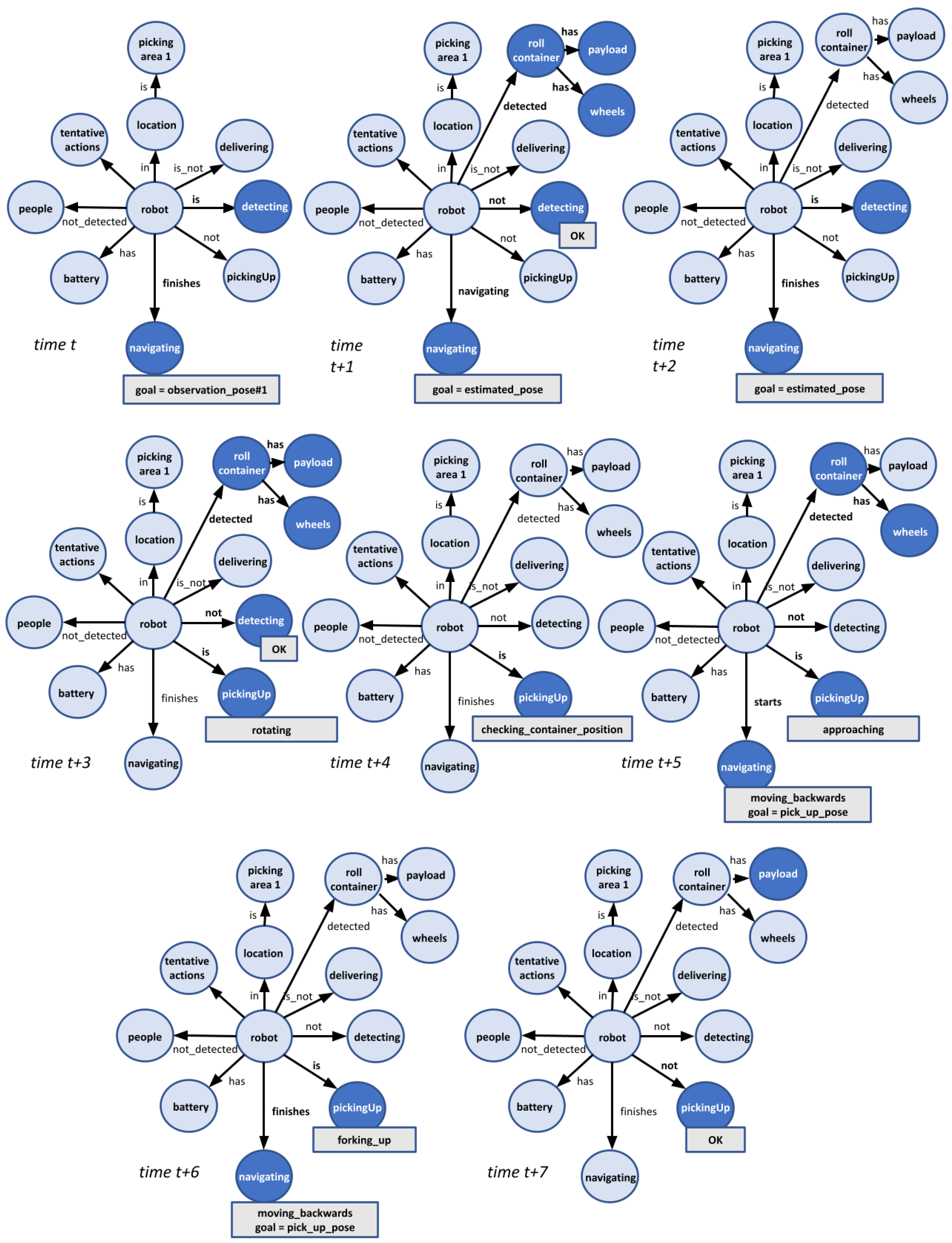

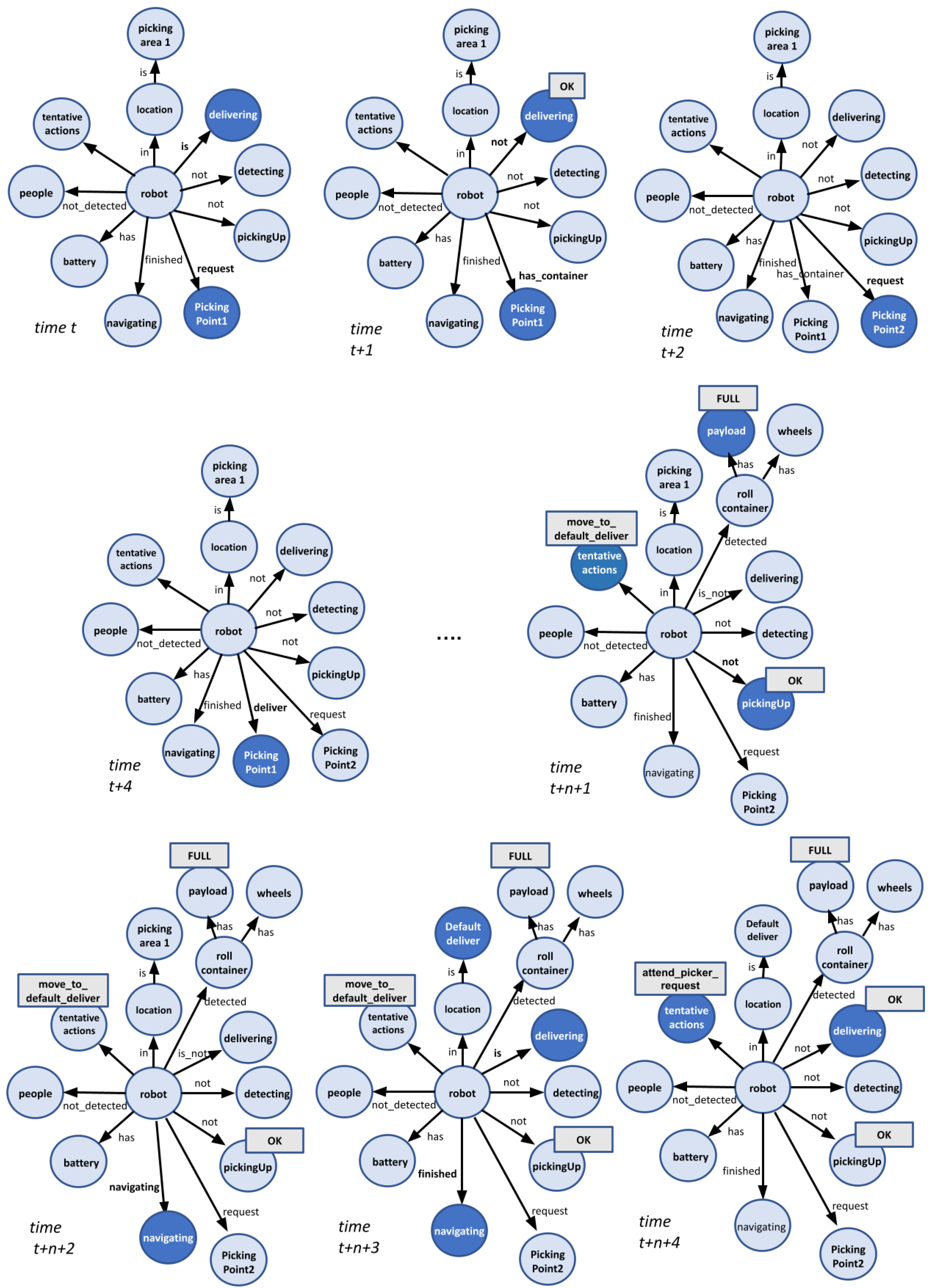

3.1. The Deep State Representation

3.2. The DSR as a Runtime Model

3.3. The DSR as the Place for Coordinating MAPE Loops

4. The Proposed MAPE-K Architecture

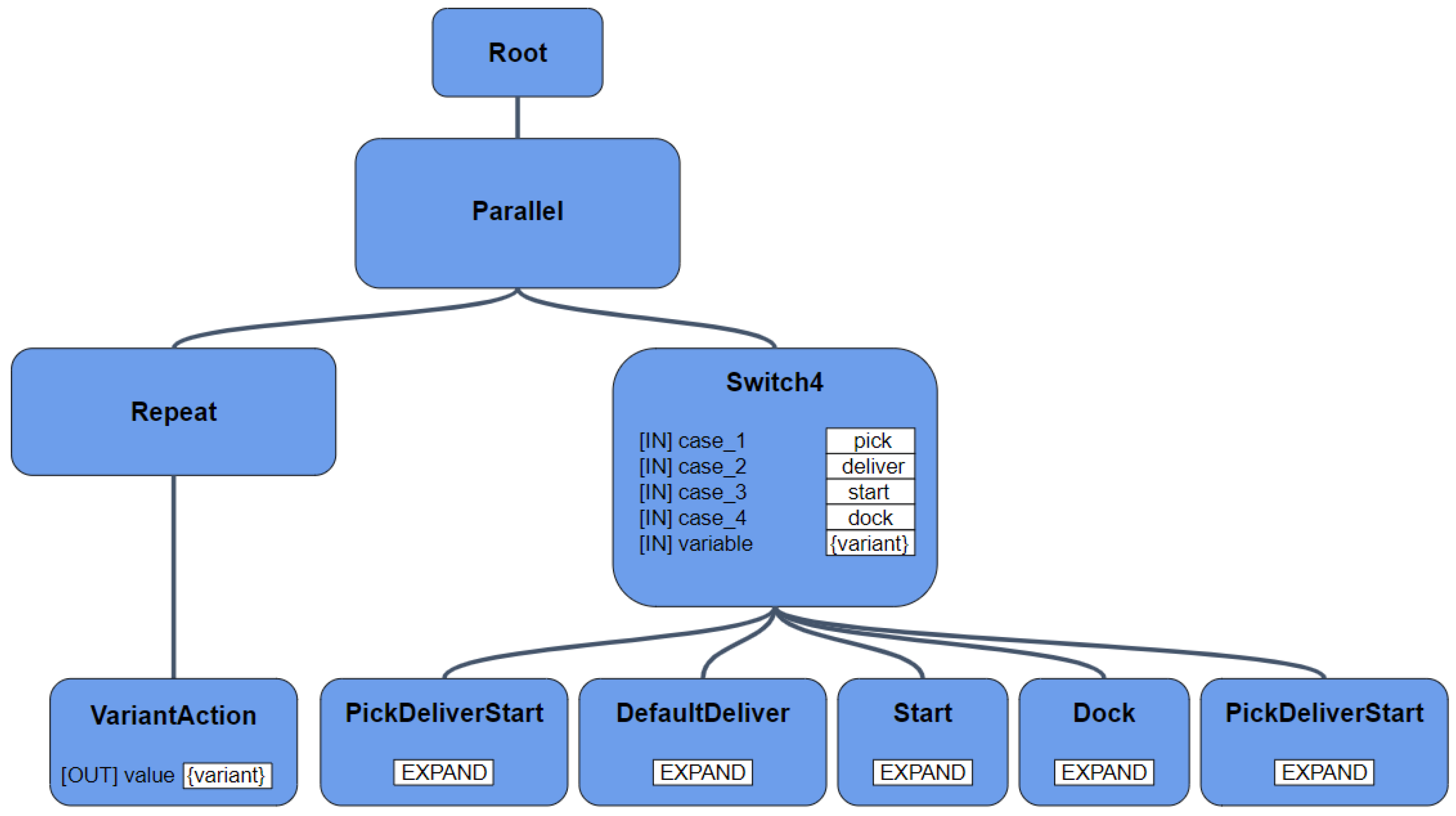

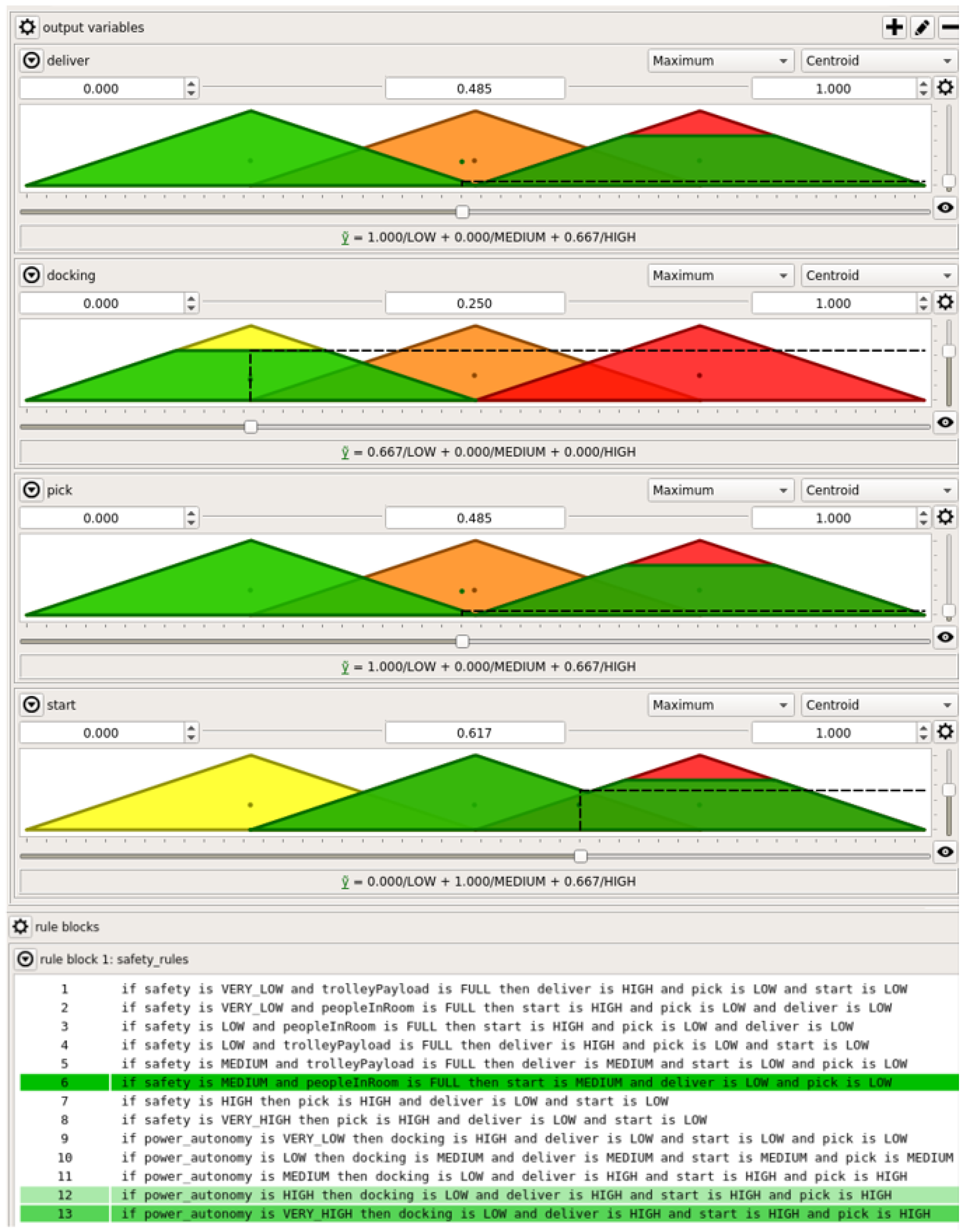

5. Implementation

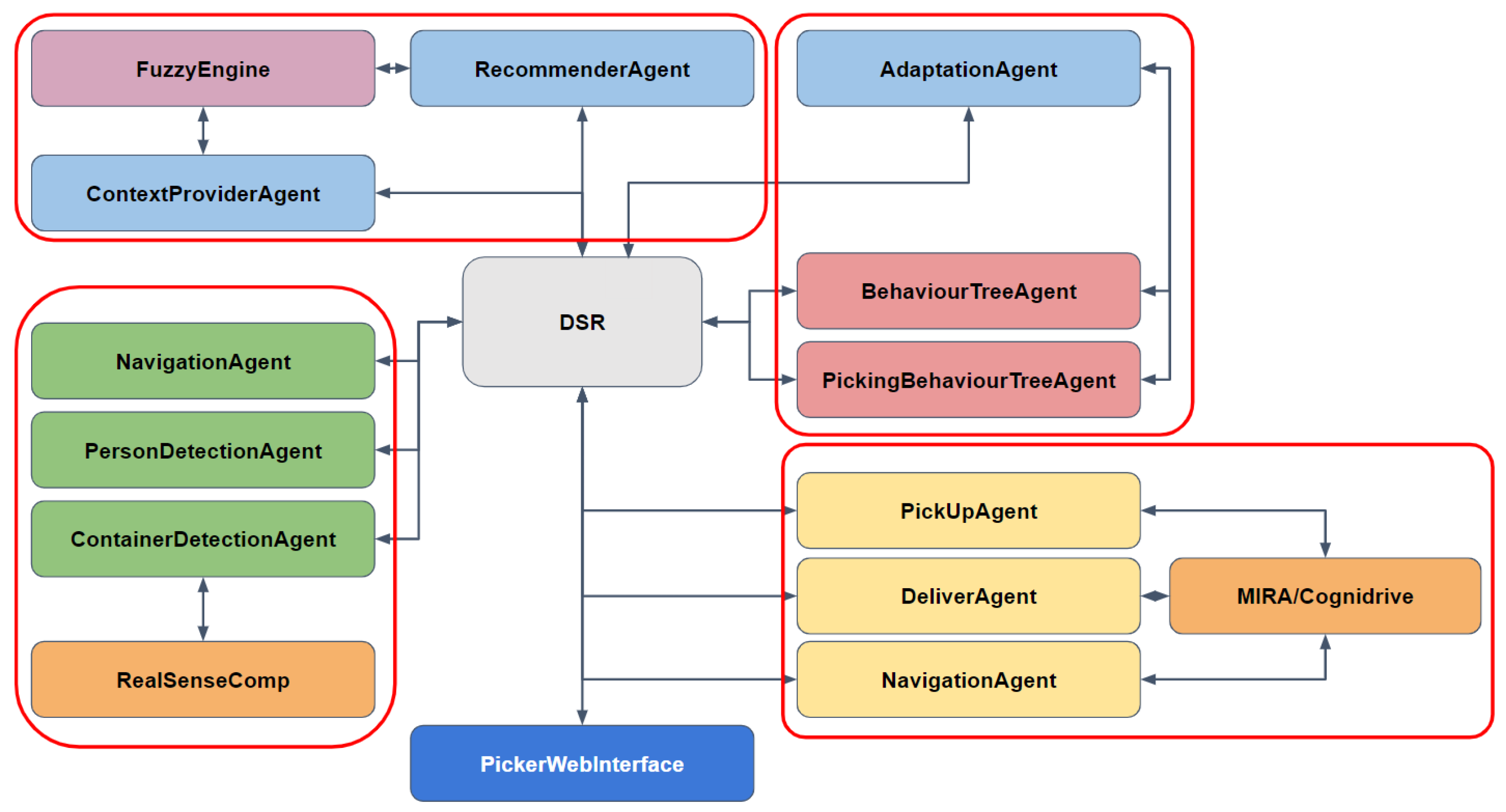

5.1. The CARY Robot

5.2. Software Architecture

6. Experimental Results

7. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Romero-Garcés, A.; Hidalgo-Paniagua, A.; González-García, M.; Bandera, A. On Managing Knowledge for MAPE-K Loops in Self-Adaptive Robotics Using a Graph-Based Runtime Model. Appl. Sci. 2022, 12, 8583. https://doi.org/10.3390/app12178583
