Abstract

Graph Convolutional Networks (GCNs) are widely used in text classification and have been applied effectively to tasks with a rich relational structure. However, because the adjacency matrix that GCN builds is sparse, GCN cannot make full use of context-dependent information in text classification and is weak at capturing local information. Bidirectional Encoder Representations from Transformers (BERT) can capture contextual information within sentences or documents, but it is limited in capturing global (corpus-level) information about the vocabulary, which is precisely the strength of GCN. This paper therefore proposes an improved model to address these problems. The original GCN builds text graphs from word co-occurrence relationships alone; these word connections are not rich enough and capture context dependencies poorly, so we additionally introduce a semantic dictionary and dependency relations. Although this strengthens the model's ability to capture contextual dependencies, the graph still cannot capture word order. We therefore introduce BERT and a Bidirectional Long Short-Term Memory (BiLSTM) network to learn deeper text features and improve classification. Experimental results show that our model outperforms previously reported results on four text classification datasets.

1. Introduction

Text classification is a fundamental task in natural language processing and an active research topic in the field. It is used to manage texts effectively and plays an important role in applications such as information retrieval, public opinion analysis, topic classification, spam filtering, and opinion mining. Traditional text classification models are mainly based on machine learning algorithms such as Support Vector Machines (SVM) [1] and naive Bayes [2]. These algorithms are relatively mature and achieve good results, but they also have limitations, including the high dimensionality and sparsity of text data and an insufficient representation of semantic features. Deep learning methods can extract text features that carry richer semantic information, and they greatly reduce the cost of manual intervention. Existing text classification models based on deep neural networks include typical architectures (e.g., Convolutional Neural Networks (CNN) [3], Recurrent Neural Networks (RNN) [4], and Capsule Networks [5]), their successful variants (e.g., Long Short-Term Memory (LSTM) networks [6], Gated Recurrent Units (GRU) [7], and Convolutional Recurrent Neural Networks (CRNN) [8]), and various attention-based models [9] (e.g., BERT [10]). These models capture semantic and syntactic information in local, continuous word sequences well, but most of them may ignore semantic relations between non-adjacent words. Moreover, they are limited in their ability to capture global features [11], such as global word co-occurrence and global syntactic structure, even though the global dependencies among all words can also guide classification. Graph Convolutional Networks (GCNs) [12] have been a research hotspot in recent years.
A GCN performs graph convolution on a constructed topological graph to obtain features and thereby classify text. Many variants of GCN [13,14,15,16] have been proposed and explored for classification. Although GCN is gradually becoming a good choice for graph-based text classification, current research still has shortcomings that cannot be ignored.

In recent studies on text classification, researchers have represented text as a graph and used Graph Convolutional Networks to capture the structural information of the text as well as discontinuous and long-distance dependencies between words. The most representative works are the Graph Convolutional Network (GCN) [12] and its variant Text GCN [13]. These methods represent words as nodes and aggregate each node's neighborhood information through an adjacency matrix, thereby integrating, to some extent, the global context of domain-specific language. When building text graphs, most graph convolutional network models rely on word co-occurrence and on the inclusion relationship between documents and words. As a result, the graph lacks richer word-to-word relationship information and captures long-distance dependencies between words in a sentence insufficiently. Word co-occurrence is generally measured with normalized point-wise mutual information (NPMI), which gives the weight between two word nodes. However, NPMI depends on the corpus: if some words appear in the corpus with low probability, their NPMI values can be very small, and the two words may be judged to be only weakly related or unrelated.

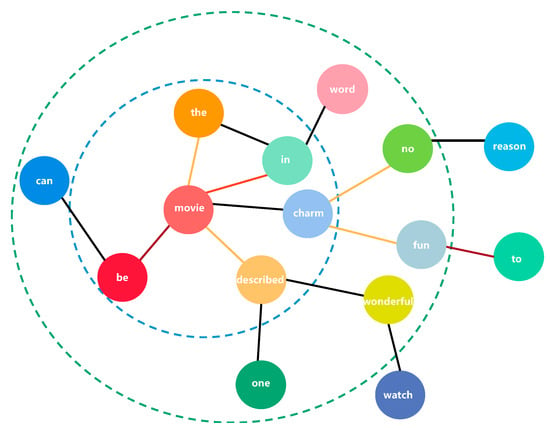

In addition, graph convolution over a text graph built from word co-occurrence relationships and a semantic dictionary can only capture relationships between non-contiguous entities in the text; it cannot simultaneously capture short-distance and long-distance dependencies between words in a sentence. For example, Figure 1 shows a text graph constructed from a corpus using word co-occurrence relations and a semantic dictionary, where “This movie can be described in one word: wonderful.” is a sentence in the corpus. Taking “wonderful” as the center, the blue circle represents the aggregation of first-order neighbor information, and the green circle represents the aggregation of second-order neighbor information. In this sentence, “wonderful” modifies “movie”, and this relation is very useful for understanding the text as a whole. However, the word nodes “movie” and “wonderful” in Figure 1 are not directly connected, even though a dependency should exist between them. Because a graph convolutional network aggregates only the information of direct neighbors, one GCN layer can capture only short-distance dependencies between words. Long-range dependencies such as the one between “movie” and “wonderful” could be captured by adding GCN layers. However, current research shows that multi-layer graph convolutional networks for text classification incur high space complexity, and stacking more layers also makes node features converge to similar values (over-smoothing). To overcome this problem, we introduce dependency relations when building the text graph. Dependency syntax is concerned with the relationships between words (sentence components) in a sentence and is not affected by the physical positions of those components.
By introducing dependencies, both short-range and long-range dependencies can be exploited, while also providing syntactic constraints and partially reducing the number of GCN layers needed.

Figure 1.

Text Graph.

However, because of the nature of graphs, graph convolutional networks do not effectively capture word order when learning word features, even though word order is highly informative, and part of the contextual semantics of the text is lost. For example, in “You have a lot of hard work.” and “You have to work hard.”, the meaning of “work” changes with its position in the sentence. A graph built from the words of these two sentences cannot reflect word order, so it cannot represent this change, which is clearly important for understanding the sentences. In addition, a graph convolutional network cannot distinguish polysemy. For example, in “She’s grown into quite an individual.” and “So this individual came up.”, the word “individual” expresses different meanings depending on context: in the first sentence it means “a person with a distinctive personality”, and in the second it means “a strange person”. If these senses are not distinguished, understanding of the text, and therefore classification, may suffer. Using multi-layer multi-head self-attention and positional embeddings, BERT mainly focuses on local contiguous word sequences that provide local contextual information. Because it is pre-trained on a large amount of text from different domains, BERT can infer well which meaning a polysemous word expresses in its current context. However, BERT may have difficulty capturing global information about the vocabulary.

To address the problems above, this paper proposes an improved model (IMGCN) for text classification. Building on Vocabulary Graph Convolution (VGCN) [15], we introduce a semantic dictionary (WordNet) and dependency relations, and further learn from the features of GCN and BERT through a residual two-layer BiLSTM with an attention mechanism [17]. Building a dependency graph lets the GCN capture long-range dependencies between words in a sentence more efficiently, and WordNet provides additional useful connections between words. Meanwhile, the BERT model extracts local feature information from the text, and the global vocabulary information obtained by GCN is combined with the contextual information obtained by pre-trained BERT. Because text has a word-sentence-document hierarchy, we use a hierarchical BiLSTM to extract the combined features level by level, capturing word-, sentence-, and document-level information to obtain more comprehensive text features. During word-to-sentence feature extraction, an attention mechanism generates sentence embeddings, which addresses the problem that key words receive no special attention in text classification. We also change how the context vector of the attention mechanism is initialized: the bidirectional word hidden features are combined and used as its initial value to guide the learning of the attention mechanism. Finally, residual connections alleviate the degradation problem that occurs when multiple network layers are stacked and let the model learn residuals to obtain new features more effectively.

Experiments on four benchmark datasets demonstrate that IMGCN effectively improves on current GCN-based methods. The main contributions of this paper are as follows:

- Dependency relations and a semantic dictionary are fully integrated into IMGCN. They enrich the connections between words and capture long-range dependencies between words in a sentence, while providing syntactic constraints and partially reducing the number of GCN layers needed.

- Graph features and sequence features are combined, and a hierarchical BiLSTM extracts the input features level by level, capturing word-level and sentence-level information respectively to obtain more comprehensive text features.

2. Related Work

Traditional text classification methods are mainly based on feature engineering, such as the bag-of-words model [18] and n-grams. Later studies [19,20] converted text into graphs and performed feature engineering on the graphs. However, these methods cannot automatically learn embedding representations of nodes. Compared with traditional methods, deep learning methods acquire features automatically and can learn the deep semantic information of text. Among deep learning models, Kim 2014 [3] used a simple convolutional neural network (CNN) for text classification. Tang et al. 2015 [21] proposed a gated recurrent neural network. Yang et al. 2016 [17] proposed hierarchical attention networks to model and classify documents. Wang et al. 2016 [22] introduced the attention mechanism into LSTM. Dong et al. 2020 [23] combined Bidirectional Encoder Representations from Transformers (BERT) [10] with a self-interaction attention mechanism. Most attention-based deep neural networks focus on local continuous word sequences, which provide local context information, but their ability to capture the global characteristics of the relevant information may be insufficient.

In recent years, many studies have tried to apply convolution operations to graph data. Kipf and Welling 2017 [12] proposed Graph Convolutional Networks (GCNs), which achieved good results on node classification tasks. Velickovic et al. 2018 [24] proposed the Graph Attention Network (GAT), which uses the attention mechanism [25] to assign weights to the neighborhood information of nodes and performs better than GCN, which does not learn such weights. Later, Yao et al. 2019 [13] proposed the TextGCN model, which applied Graph Convolutional Networks to text classification for the first time. It models the corpus as a graph whose nodes are words and documents and achieves good results. Cavallari et al. 2019 [26] introduced a new setting for graph embedding that embeds communities instead of individual nodes.

Many studies now combine GCN with other models. Ye et al. 2020 [14] fed the word and document nodes trained by GCN, together with BERT’s word vectors, into a BiLSTM classifier, where the input to GCN is a one-hot vector. Lu et al. 2020 [15] integrated a vocabulary graph embedding module with BERT and achieved good results on several public datasets, where GCN uses BERT’s word vectors. In our method, GCN also uses BERT’s word vectors, but in contrast we additionally use dependencies when building the text graphs: one graph focuses on dependencies between words, and the other focuses on word co-occurrence. We combine GCN and BERT with a two-layer BiLSTM with attention, where one layer focuses on the word sequence and the other on the sentence sequence, and we add a residual operation after the BiLSTM to learn residuals.

3. Proposed Method

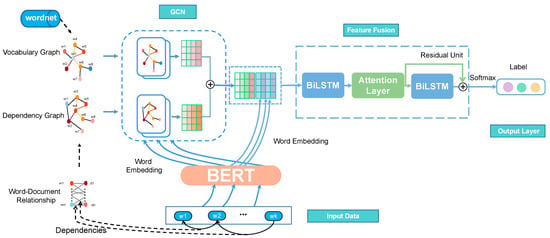

Building on in-depth research into neural-network-based text classification, this paper proposes IMGCN. The IMGCN pipeline consists of text graph construction, graph convolution, feature fusion, and final prediction. The architecture of IMGCN is shown in Figure 2: the colored solid lines show the output of each individual model, and the dashed lines enclose the modules composed of individual models. We construct two text graphs by establishing edges between word nodes. The vocabulary graph is built from word co-occurrence relations and a semantic dictionary, and the dependency graph is built from document-word inclusion and dependency relations. For the vocabulary graph, we use normalized point-wise mutual information (NPMI) [27] and the semantic dictionary to assign association weights to the edges between words. For the dependency graph, a dependency-based TF-IDF method assigns association weights to the edges between words and documents, and a matrix transformation maps them to word-word relations. BERT word embeddings are then used as the input vectors of the graph convolutional network, and the two text graphs serve as its adjacency matrices. The two graphs are fed separately into a two-layer graph convolutional network, and the two hidden output vectors are summed. The summed word vectors are fused with the feature vectors of pre-trained BERT and fed into the first BiLSTM layer, which extracts semantic dependency information between words within a single sentence. To further distinguish the importance of words, we add an attention mechanism to the first BiLSTM layer that assigns a different weight to each word.
The outputs of the first BiLSTM layer, weighted by these word weights and summed, form an overall representation of the current sentence. Here, the bidirectional word hidden features are combined to form the context vector of the attention mechanism and guide its learning. The resulting sentence representations are fed into the second BiLSTM layer to extract semantic dependency information between sentences within the text. The learned sentence representation and the features obtained by the contextual attention layer are combined through a residual operation to form an overall text representation. Finally, this representation is fed into the Softmax classification layer to obtain the category of the text.

Figure 2.

The architecture of IMGCN.

3.1. Text Graph

3.1.1. Vocabulary Graph

In this paper, we first use a semantic dictionary (WordNet) and normalized point-wise mutual information (NPMI) to construct a text graph. When text graphs are built from word co-occurrence relationships, NPMI, a common measure of word association, is generally used to calculate the edge weight between two word nodes. However, NPMI depends on the corpus: if some words appear in the corpus with low probability, their NPMI values can be small, and the two words may be judged to be weakly related or unrelated. For example, the word “abundant” has a very low probability of appearing in the corpus. Let P(i, j) denote the co-occurrence probability of words i and j. When the co-occurrence probability of “abundant” and another word is calculated, both P(abundant, other word) and P(abundant) are very small, and the co-occurrence probability may even be 0. This yields a very small NPMI value, so “abundant” may ultimately be judged to have little or no semantic relevance to other words.

In addition, words are connected in many ways, and the most direct connection is synonymy. For example, “abundant” and “plentiful” have very similar meanings and are interchangeable in most cases. If two words are synonyms, a text graph built only from word co-occurrence may miss this relationship. Exploiting synonymy enriches the relationship information between words and thereby improves the accuracy of the edge weights. A semantic dictionary describes the different semantic relations between words, so we construct the text graph jointly from word co-occurrence relations and a semantic dictionary.

We use WordNet as the semantic dictionary. WordNet describes a network of semantic relationships between words and lists all possible senses of every content word in the language. It organizes words by semantic and lexical relations, where semantic relations express the degree of association between the senses of two words. Nouns, verbs, adjectives, and adverbs are each organized into networks of synonym sets (synsets); each synset represents a basic semantic concept, and synsets are linked by various relations. Because a word can have multiple senses, the senses of the two words must be compared. We therefore first use WordNet to expand each word into its synonym set, then use the WordNet-based Wu-Palmer (Wup) method to calculate the semantic similarity between the words of the two synonym sets, and take the average of the similarity scores as the final similarity of the two words. The larger the value, the more semantically similar the two words are. The Wup method [28], proposed by Wu and Palmer, is a path-based similarity measure: it computes similarity from the relative positions of the two concepts' synonym sets in the hypernym tree.

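For two words i and j, we therefore first calculate the edge weight using NPMI. If the NPMI value is not positive, the WordNet-based similarity is used instead. This minimizes the problems caused by the low frequency of some words in the corpus and adds more word relationship information. Formally, the edge weight between node i and node j is defined as:

$$
A_{ij} =
\begin{cases}
\mathrm{NPMI}(i, j), & \mathrm{NPMI}(i, j) > 0 \\
\mathrm{Sim}(i, j), & \mathrm{NPMI}(i, j) \le 0 \text{ and } \mathrm{Sim}(i, j) \ge \theta \\
0, & \text{otherwise}
\end{cases}
\tag{1}
$$

where $\theta$ is the lower bound of the similarity range within which an edge is created.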

The NPMI value of a pair of words i, j is calculated as:

$$
\mathrm{NPMI}(i, j) = -\frac{1}{\log p(i, j)} \log \frac{p(i, j)}{p(i)\,p(j)}, \qquad
p(i, j) = \frac{\#W(i, j)}{\#W}, \qquad
p(i) = \frac{\#W(i)}{\#W}
\tag{2}
$$

where $\#W(i)$ is the number of sliding windows containing word i, $\#W(i, j)$ is the number of sliding windows containing both word i and word j, and $\#W$ is the total number of sliding windows. We use a large window to capture longer-range dependencies. NPMI takes values in $[-1, 1]$. A positive NPMI value indicates high semantic relevance between two words, while a negative value indicates little or no semantic relevance. Therefore, we create an edge only between word pairs with positive NPMI values.
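The sliding-window NPMI computation can be sketched in a few lines of Python. This is a minimal sketch, not the authors' implementation: the helper names (`sliding_windows`, `window_counts`, `npmi`), the window size in the usage lines, and the convention of returning -1 for pairs that never co-occur are assumptions made for illustration.

```python
import math
from collections import Counter
from itertools import combinations


def sliding_windows(tokens, size):
    """Split a token list into overlapping windows; a short document forms one window."""
    if len(tokens) <= size:
        return [tokens]
    return [tokens[k:k + size] for k in range(len(tokens) - size + 1)]


def window_counts(docs, size):
    """Count #W(i), #W(i, j) and #W over all sliding windows of all documents."""
    word_count, pair_count, total = Counter(), Counter(), 0
    for tokens in docs:
        for window in sliding_windows(tokens, size):
            total += 1
            unique = sorted(set(window))  # count each word at most once per window
            word_count.update(unique)
            pair_count.update(combinations(unique, 2))  # pairs stored in sorted order
    return word_count, pair_count, total


def npmi(i, j, word_count, pair_count, total):
    """NPMI(i, j) = log(p(i,j) / (p(i) p(j))) / -log p(i,j), in [-1, 1]."""
    n_ij = pair_count[tuple(sorted((i, j)))]
    if n_ij == 0:
        return -1.0  # assumed convention: words that never co-occur get the minimum
    p_ij = n_ij / total
    if p_ij == 1.0:
        return 1.0  # the two words appear together in every window
    p_i, p_j = word_count[i] / total, word_count[j] / total
    return math.log(p_ij / (p_i * p_j)) / -math.log(p_ij)


docs = [["good", "movie", "great", "plot"]]
wc, pc, n = window_counts(docs, size=2)
print(round(npmi("good", "movie", wc, pc, n), 3))  # 0.369
print(npmi("good", "plot", wc, pc, n))  # -1.0
```

Only pairs with a positive result would receive an edge from this step; the remaining pairs fall through to the WordNet-based similarity.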

When the NPMI value of a pair of words i, j is negative, their semantic similarity is calculated as:

$$
\mathrm{Sim}(i, j) = \frac{1}{n_i n_j - a} \sum_{s \in S_i} \sum_{t \in S_j} \mathrm{sim}_{\mathrm{wup}}(s, t), \qquad
\mathrm{sim}_{\mathrm{wup}}(c_1, c_2) = \frac{2N_3}{N_1 + N_2 + 2N_3}
\tag{3}
$$

where $\mathrm{sim}_{\mathrm{wup}}(c_1, c_2)$ is the Wu-Palmer similarity of two words in the synonym lexicon. Let $c_3$ be the deepest common parent concept of $c_1$ and $c_2$; $N_1$ is the number of nodes on the path from $c_1$ to $c_3$, $N_2$ is the number of nodes on the path from $c_2$ to $c_3$, and $N_3$ is the number of nodes on the path from $c_3$ to the root of the concept hierarchy tree. $S_i$ is the synonym set of word i and $n_i$ is the number of words in $S_i$; $S_j$ is the synonym set of word j and $n_j$ is the number of words in $S_j$; $a$ is the number of word pairs with zero semantic similarity. $\mathrm{Sim}(i, j)$ takes values in $[0, 1]$. We create an edge between two words only if $\mathrm{Sim}(i, j)$ falls within a certain range; experiments show that performance is better when this range is between 0.5 and 1.
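The similarity computation and the fallback edge rule described above can be illustrated on a toy hypernym tree. This is a sketch under stated assumptions: the tiny taxonomy in `PARENT`, the helper names, and the threshold value 0.5 are hypothetical stand-ins; a real system would query WordNet (for example through NLTK's `wordnet` corpus reader, whose synsets provide a `wup_similarity` method), which is not shown here. Depth is counted in nodes from the root (the root has depth 1), so the Wu-Palmer score is computed as twice the depth of the deepest common ancestor divided by the sum of the two concepts' depths.

```python
# Hypothetical toy taxonomy (child -> parent); "entity" and "idea" are roots.
PARENT = {
    "object": "entity", "food": "object", "fruit": "food",
    "apple": "fruit", "pear": "fruit", "tool": "object", "hammer": "tool",
}


def ancestors(concept):
    """Return [concept, parent, ..., root]."""
    path = [concept]
    while path[-1] in PARENT:
        path.append(PARENT[path[-1]])
    return path


def depth(concept):
    return len(ancestors(concept))  # the root has depth 1


def wup(c1, c2):
    """Wu-Palmer: 2 * depth(lcs) / (depth(c1) + depth(c2)); 0 if there is no common ancestor."""
    shared = set(ancestors(c2))
    lcs = next((a for a in ancestors(c1) if a in shared), None)  # deepest common ancestor
    if lcs is None:
        return 0.0
    return 2 * depth(lcs) / (depth(c1) + depth(c2))


def synset_similarity(syn_i, syn_j):
    """Average Wu-Palmer score over all pairs, excluding the `a` pairs that score 0."""
    scores = [wup(s, t) for s in syn_i for t in syn_j]
    nonzero = [x for x in scores if x > 0]
    return sum(nonzero) / len(nonzero) if nonzero else 0.0


def edge_weight(npmi_value, sim_value, threshold=0.5):
    """Use NPMI when positive; otherwise fall back to the WordNet similarity."""
    if npmi_value > 0:
        return npmi_value
    if sim_value >= threshold:  # hypothetical lower bound of the similarity range
        return sim_value
    return 0.0


print(wup("apple", "pear"))  # 0.8
print(synset_similarity(["apple"], ["pear", "idea"]))  # 0.8 (the zero-score pair is excluded)
print(edge_weight(-0.2, synset_similarity(["apple"], ["pear"])))  # 0.8
```

Excluding zero-score pairs from the denominator mirrors the subtraction of $a$; pairs from unrelated hierarchies would otherwise drag the average down.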

3.1.2. Dependency Graph

With the two relations above, a graph convolutional network can capture short-range dependencies between words in a sentence but cannot capture long-range dependencies at the same time. We therefore introduce dependency relations to construct a second text graph. To capture the inclusion relationship between documents and words while also introducing dependencies between words, we combine the two relationships. Edge weights between document nodes and word nodes are usually calculated with TF-IDF (term frequency-inverse document frequency). Term frequency (TF) is the number of times a word appears in a document, and inverse document frequency (IDF) reflects the general importance of the word across the corpus. However, TF-IDF does not take textual semantics into account, even though semantics are useful for understanding text content. To overcome this problem, we follow [29], introduce dependencies, and use an improved edge-weight calculation to better understand and optimize text features.

Dependency syntax is concerned with the relationships between the words of a sentence and is not affected by the physical positions of the components [30]. Because different dependency relations in a sentence differ in importance and relevance, we first determine the importance of a word to its sentence, its text, and even its category according to the dependency relation between the word and the predicate and the word's meaning. Then, according to the importance of the different components to the sentence, sentence components are divided into eight levels.

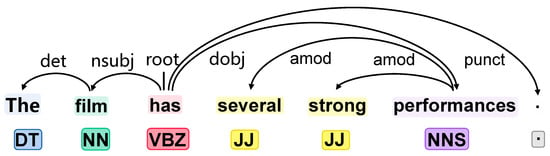

The result of dependency analysis is usually represented as a labeled directed graph. For example, in Figure 3, “root” points to the central word “has”. An example of a relation between a head word and its dependent is the pair “has” and “performances”: the analysis shows that the relation between these two words is a direct object (dobj) relation.

Figure 3.

Directed graph of dependency analysis.

After the text features in the dataset are classified according to their dependency relations, this paper uses the following dependency-based TF-IDF weight calculation method [29]. The specific steps are as follows:

For word i, we count the number of times it appears in the text and denote this count by n. Then, based on dependency parsing with the Stanford Parser, we determine the sentence component to which each occurrence of word i belongs. Following [29], the m-th occurrence of word i in the text is assigned to one of the eight levels and given the corresponding weight. The calculation is shown in Formula (4):

The weighted frequency of word i in the text is then calculated by Formula (5), and the improved dependency-based weight of word i is given by Formula (6). Here, s is the total number of words in the text containing word i, $p_i$ is the number of texts containing word i, D is the total number of texts in the dataset, and λ ∈ [0, 1] is a parameter that adjusts the weight gap between feature levels.
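The exact weighting in Formulas (4) to (6) is taken from [29], so the sketch below only illustrates the general shape of a dependency-weighted TF-IDF. The level-to-weight mapping `level_weight` (level 1 most important, level 8 least, with λ scaling the gap) and the IDF form log(D / p_i) are hypothetical choices, not the weighting of [29]. With λ = 0 every occurrence gets weight 1 and the score reduces to ordinary TF-IDF, (n / s) · log(D / p_i).

```python
import math

NUM_LEVELS = 8  # sentence components are divided into eight levels


def level_weight(level, lam):
    """Hypothetical weight for an occurrence at `level` (1 = most important, 8 = least).

    lam in [0, 1] scales the gap between levels; lam = 0 gives every level weight 1.
    """
    if not 1 <= level <= NUM_LEVELS:
        raise ValueError("level must be in 1..8")
    return 1.0 + lam * (NUM_LEVELS - level) / (NUM_LEVELS - 1)


def dependency_tfidf(occurrence_levels, s, p_i, D, lam=0.5):
    """Dependency-weighted TF-IDF of word i in one text.

    occurrence_levels: level of each of the n occurrences of word i in the text
    s: total number of words in the text
    p_i: number of texts containing word i
    D: total number of texts in the dataset
    """
    weighted_tf = sum(level_weight(k, lam) for k in occurrence_levels) / s
    idf = math.log(D / p_i)
    return weighted_tf * idf


# Word i occurs twice in a 20-word text: once at level 1 and once at level 6.
print(dependency_tfidf([1, 6], s=20, p_i=5, D=100))
```

Occurrences in more important sentence components raise the word's weight, which is the qualitative effect the dependency-based method is meant to have.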

We calculate the weights of the edges between word nodes and document nodes in this way and obtain the adjacency matrix A, as shown in Formula (7). A matrix transformation then maps the word-document relationships to word-word relationships that carry document and dependency information, yielding the second text graph.

where $A \in \mathbb{R}^{n \times m}$, n is the number of document nodes, and m is the number of word nodes.
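The matrix transformation is not specified above. One common way to turn an n×m document-word matrix into an m×m word-word graph is the product AᵀA, whose entry (p, q) sums, over all documents, the product of the two words' weights. The sketch below assumes that choice, so treat it as an illustration rather than the exact IMGCN transformation; the matrix values are made up.

```python
def transpose(matrix):
    return [list(col) for col in zip(*matrix)]


def matmul(a, b):
    """Plain-Python matrix product of a (r x k) and b (k x c)."""
    b_cols = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in b_cols] for row in a]


def word_word_graph(doc_word):
    """Assumed mapping: G = A^T A, so G[p][q] = sum_d A[d][p] * A[d][q] (m x m, symmetric)."""
    return matmul(transpose(doc_word), doc_word)


# n = 2 documents, m = 3 words; entries stand in for dependency-based TF-IDF weights.
A = [
    [0.5, 0.0, 0.2],
    [0.1, 0.4, 0.0],
]
for row in word_word_graph(A):
    print([round(v, 2) for v in row])
```

Two words become connected exactly when some document gives both of them a non-zero weight, which is how document and dependency information is carried into the word-word graph under this assumption.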

3.2. Vocabulary GCN

A general graph convolutional network is a multi-layer (usually two-layer) neural network that performs convolution directly on a graph and updates each node’s embedding from the information of its neighbors. Its graph nodes are “task entities”, such as the documents to be classified. All entities, including those in the training, validation, and test sets, must be present in the graph so that no node representation is missing in downstream tasks. This limits the use of graph convolutional networks in the many prediction tasks where test data are not seen during training. We therefore use Vocabulary Graph Convolution (VGCN), whose graph is built over the vocabulary rather than over entities taken from the training set, so that convolution mainly operates on related words. For a single document, the single-layer graph convolution is shown in Formula (8):

where the adjacency matrix of the vocabulary graph is used to extract the part of the vocabulary related to the input matrix, combining the words in the input sentence with the related words in the vocabulary. W holds the weights of the hidden state vector for a single document.

The corresponding two-layer GCN with the LeakyReLU activation function is:

where m is the mini-batch size, v is the vocabulary size, h is the hidden layer size, and c is the number of classes or the sentence embedding size. W holds the weights of the hidden state vector for a single document. Each row of the input matrix is a vector of document features, given by the BERT word embeddings. The two-layer convolution then combines the words in the input sentence with the related words in the vocabulary.

Specifically, we apply two layers of graph convolution to each of the two text graphs generated in the previous section and then add the two resulting hidden vectors.

The original GCN uses ReLU as its activation function. In this paper, we use the non-linear LeakyReLU function instead, which mitigates the vanishing-gradient problem for negative inputs and speeds up training. We also use L2 regularization to reduce overfitting. The LeakyReLU function is:

$$
\mathrm{LeakyReLU}(x) =
\begin{cases}
x, & x > 0 \\
\alpha x, & x \le 0
\end{cases}
\tag{10}
$$

where $\alpha$ is a small positive slope.
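A minimal sketch of the two-layer vocabulary graph convolution, assuming a VGCN-style form LeakyReLU(X Â W₁) W₂ with a symmetrically normalized adjacency Â = D^{-1/2}(A + I)D^{-1/2}. The normalization, the slope α = 0.01, the toy shapes and values, and all function names are assumptions for illustration; a real implementation would use a tensor library and learned weights. The outputs for the two text graphs are summed, as described above.

```python
import math


def matmul(a, b):
    """Plain-Python product of a (r x k) and b (k x c)."""
    return [[sum(a[r][t] * b[t][c] for t in range(len(b))) for c in range(len(b[0]))]
            for r in range(len(a))]


def normalize_adjacency(adj):
    """Assumed normalization: D^-1/2 (A + I) D^-1/2, with D the degree matrix of A + I."""
    v = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(v)] for i in range(v)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(v)] for i in range(v)]


def leaky_relu(matrix, alpha=0.01):
    return [[x if x > 0 else alpha * x for x in row] for row in matrix]


def vgcn(x, a_norm, w1, w2, alpha=0.01):
    """Two-layer vocabulary GCN: LeakyReLU(X A W1) W2.

    x: (m x v) input rows, a_norm: (v x v) graph, w1: (v x h), w2: (h x c).
    """
    hidden = leaky_relu(matmul(matmul(x, a_norm), w1), alpha)
    return matmul(hidden, w2)


def add(a, b):
    return [[p + q for p, q in zip(ra, rb)] for ra, rb in zip(a, b)]


# Toy example: v = 2 vocabulary words, h = 2 hidden units, c = 1 output.
x = [[1.0, -2.0]]
vocab_graph = normalize_adjacency([[0.0, 1.0], [1.0, 0.0]])
dep_graph = normalize_adjacency([[0.0, 0.0], [0.0, 0.0]])
w1 = [[1.0, 0.0], [0.0, 1.0]]
w2 = [[1.0], [1.0]]
out = add(vgcn(x, vocab_graph, w1, w2), vgcn(x, dep_graph, w1, w2))
print(out)
```

The negative pre-activations in the vocabulary-graph branch still contribute a small gradient through the α slope, which is the behavior LeakyReLU is chosen for.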

3.3. Feature Fusion and Classification

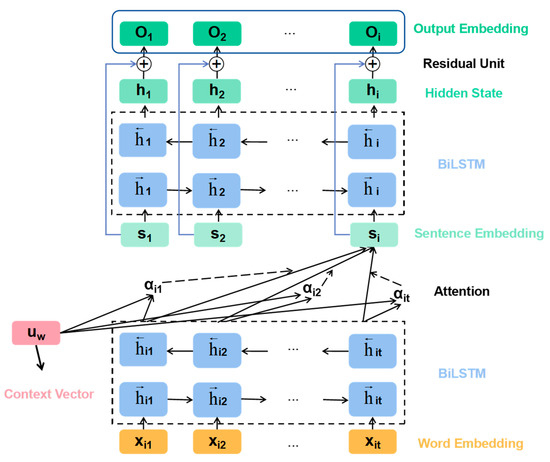

Because words are represented as nodes and each node aggregates its neighborhood information through the adjacency matrix, the sequential structure of the text is ignored and contextual semantic information is lost. We therefore combine the global vocabulary information obtained by GCN with the contextual information obtained by pre-trained BERT, so that word order and context are taken into account while global vocabulary information is exploited. To dig deeper into the semantics, a sequence model such as LSTM could fuse the two kinds of information, but an LSTM captures features in only one direction, either front-to-back or back-to-front. When people read, they infer the meaning of a word from both the preceding and the following text, and a BiLSTM, which captures features in both directions, matches this pattern better. Since text is hierarchical (a text consists of sentences, and sentences consist of words), we use a two-layer BiLSTM to learn word features and sentence features respectively. Because feature words differ in importance to the text and the BiLSTM outputs do not distinguish this importance, we add an attention mechanism to the hidden outputs. We change how the context vector of the attention mechanism is initialized, combining the bidirectional word hidden features as its initial value to guide the learning of the attention mechanism. To prevent the deeper network from degrading the classification results, we also introduce a residual operation. The structure of the feature fusion module is shown in Figure 4.

Figure 4.

Residual two-layer BiLSTM structure with attention.

The module consists of a semantic feature connection layer, a word-level feature extraction layer, a contextual attention layer, and a sentence-level feature extraction layer. First, GCN and the pre-trained BERT model convert the word sequence of the text into two different word vector representations. Second, the two representations are fused and fed into the first BiLSTM layer to extract semantic dependency information between words within a single sentence; an attention layer added on top of this BiLSTM constructs an overall representation of the current sentence, using the combined bidirectional word hidden features as its context vector. The resulting sentence representations are then fed into the second BiLSTM layer to extract semantic dependency information between sentences within the text. The learned sentence representation and the representation obtained by the contextual attention layer are combined through a residual operation to form an overall text representation. Finally, this representation is fed into the Softmax classification layer to obtain the category of the text.
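The attention pooling and residual combination described above can be sketched as follows. This is a simplified, hypothetical sketch: the learned projection before the tanh is omitted, the context vector is initialized by concatenating the final forward state with the first-step backward state of the BiLSTM (one possible reading of "combining the bidirectional word hidden features"), and the residual operation is taken to be element-wise addition. None of these details are confirmed by the text, and the hidden-state values are made up.

```python
import math


def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def init_context(hidden_states):
    """Assumed initialization: final forward half + first-step backward half.

    Each hidden state is [forward ; backward], as in Formula (15).
    """
    half = len(hidden_states[0]) // 2
    return hidden_states[-1][:half] + hidden_states[0][half:]


def attention_pool(hidden_states, context):
    """u_t = tanh(h_t) (projection omitted), alpha_t = softmax(u_t . context), s = sum alpha_t h_t."""
    scores = [sum(math.tanh(h) * c for h, c in zip(h_t, context)) for h_t in hidden_states]
    weights = softmax(scores)
    dim = len(hidden_states[0])
    sentence = [sum(w * h_t[k] for w, h_t in zip(weights, hidden_states)) for k in range(dim)]
    return sentence, weights


def residual(a, b):
    """Residual combination, taken here as element-wise addition."""
    return [x + y for x, y in zip(a, b)]


# Three words, hidden size 4 (2 forward + 2 backward units).
H = [[0.1, 0.2, 0.9, -0.3],
     [0.5, -0.1, 0.4, 0.0],
     [0.8, 0.3, -0.2, 0.1]]
sentence_vec, alphas = attention_pool(H, init_context(H))
print([round(a, 3) for a in alphas])
```

In a trained model the context vector would only start from this initialization and then be updated by backpropagation.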

3.3.1. Semantic Feature Connection Layer

We do not classify the GCN output features directly; instead, the hidden feature representation generated by GCN is used for subsequent learning. Since this representation does not adequately capture contextual information and does not distinguish the word vectors of polysemous words, we introduce pre-trained BERT. BERT encodes text with a bidirectional Transformer, which characterizes the specific meaning of each word in context and distinguishes different senses according to the surrounding semantic relations. The pre-trained BERT model produces the word vector representation W of each text, as shown in Formula (11):

$$
W_i = [\,w_{i,1}, w_{i,2}, \ldots, w_{i,n}\,]
\tag{11}
$$

where $W_i$ is the vector matrix of the i-th text, $w_{i,j}$ is the feature vector of the j-th word in the i-th text, and n is the maximum sentence length.

Then, the embedded representation output after the second layer graph convolution operation in the GCN model is spliced with the word vector W generated by the pre-trained BERT to obtain a multi-feature vector X, as shown in Formula (12):
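A minimal NumPy sketch of the splicing step in Formula (12). It assumes that the GCN yields one embedding per vocabulary word, which is looked up for every token of the text, and that BERT tokens align one-to-one with those words; the function name `splice_features` and the BERT width 768 (BERT-base) are illustrative assumptions, while the graph embedding size 16 and the sequence length 200 are taken from Section 4.2.

```python
import numpy as np

def splice_features(gcn_vocab_emb, token_vocab_ids, bert_token_vecs):
    """Concatenate graph and BERT features for each token position (cf. Formula (12)).

    gcn_vocab_emb:   (V, d_g) per-vocabulary-word embeddings from the 2nd GCN layer (assumed layout)
    token_vocab_ids: (n,) vocabulary index of each of the n tokens in one text
    bert_token_vecs: (n, d_b) BERT vectors w_i1..w_in for the same n tokens
    returns X:       (n, d_g + d_b)
    """
    graph_feats = gcn_vocab_emb[token_vocab_ids]      # look up the graph embedding of each token
    if graph_feats.shape[0] != bert_token_vecs.shape[0]:
        raise ValueError("token counts differ between the GCN and BERT inputs")
    return np.concatenate([graph_feats, bert_token_vecs], axis=-1)

rng = np.random.default_rng(0)
V, n, d_g, d_b = 50, 200, 16, 768   # toy vocabulary size; 16 and 200 from Sec. 4.2; 768 assumes BERT-base
X = splice_features(rng.normal(size=(V, d_g)),
                    rng.integers(0, V, size=n),
                    rng.normal(size=(n, d_b)))
print(X.shape)  # (200, 784)
```

Concatenation keeps the two sources in separate feature columns, so the subsequent BiLSTM can learn how much to rely on each.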

3.3.2. Word-Level Feature Extraction Layer

The semantic feature connection layer is followed by the word-level feature extraction layer, a BiLSTM that extracts the semantic dependency information between words within a single sentence. A BiLSTM consists of a forward and a backward LSTM. The LSTMs running in the two directions capture sequence information and contextual features, and combining their outputs provides both past and future context. The input of this BiLSTM layer is the concatenated feature $X$ produced by the semantic feature connection layer.

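Suppose a text has $L$ sentences, and the $i$-th sentence ($i \in [1, L]$) contains $T$ words; $x_{it}$ denotes the word vector of the $t$-th word in the $i$-th sentence, where $t \in [1, T]$. The forward LSTM layer reads the $i$-th sentence from $x_{i1}$ to $x_{iT}$ and computes the forward hidden state $\overrightarrow{h}_{it}$; the backward LSTM layer reads it from $x_{iT}$ to $x_{i1}$ and computes the backward hidden state $\overleftarrow{h}_{it}$, as shown in Equations (13) and (14):

$$\overrightarrow{h}_{it} = \overrightarrow{\mathrm{LSTM}}(x_{it}), \quad t \in [1, T] \tag{13}$$

$$\overleftarrow{h}_{it} = \overleftarrow{\mathrm{LSTM}}(x_{it}), \quad t \in [T, 1] \tag{14}$$

Finally, the hidden state obtained by the forward LSTM layer is concatenated with the hidden state obtained by the backward LSTM layer to obtain the final hidden state of the bidirectional LSTM, as shown in Formula (15):

$$h_{it} = [\,\overrightarrow{h}_{it} \,;\, \overleftarrow{h}_{it}\,] \tag{15}$$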
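The following NumPy sketch shows how Equations (13)-(15) fit together: one LSTM reads the sentence left to right, a second reads it right to left, and the backward outputs are re-aligned to word positions before concatenation. The gate layout, the random weights, and the sizes (`d_h = 64`, a sentence of 7 words) are hypothetical; the paper does not specify the LSTM internals, and a real model would use a trained library implementation.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

class LSTM:
    """Minimal single-direction LSTM with standard gates; random weights, for illustration only."""
    def __init__(self, d_in, d_h, rng):
        self.d_h = d_h
        # one stacked matrix for the input, forget, output gates and the candidate cell
        self.W = rng.normal(scale=0.1, size=(4 * d_h, d_in + d_h))
        self.b = np.zeros(4 * d_h)

    def run(self, xs):
        h = np.zeros(self.d_h)
        c = np.zeros(self.d_h)
        outs = []
        for x in xs:
            z = self.W @ np.concatenate([x, h]) + self.b
            i, f, o, g = np.split(z, 4)
            c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
            h = sigmoid(o) * np.tanh(c)
            outs.append(h)
        return np.stack(outs)                          # (T, d_h)

def bilstm(xs, fwd, bwd):
    """Eqs. (13)-(15): read x_1..x_T forward and x_T..x_1 backward, then concatenate per word."""
    h_fwd = fwd.run(xs)                                # forward hidden states
    h_bwd = bwd.run(xs[::-1])[::-1]                    # backward states, re-aligned to position t
    return np.concatenate([h_fwd, h_bwd], axis=-1)     # h_t, shape (T, 2 * d_h)

rng = np.random.default_rng(0)
T, d_in, d_h = 7, 784, 64     # sentence length, spliced feature size, hidden size (hypothetical)
xs = rng.normal(size=(T, d_in))
H = bilstm(xs, LSTM(d_in, d_h, rng), LSTM(d_in, d_h, rng))
print(H.shape)  # (7, 128)
```

Reversing the backward outputs is the detail that is easy to miss: without it, the forward state of word $t$ would be paired with the backward state of word $T - t + 1$.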

3.3.3. Contextual Attention Layer

Because the words in a sentence contribute differently to its meaning, and an attention mechanism can focus on important information while ignoring unimportant information, we use an improved attention mechanism to weight the word representations and aggregate them into a sentence feature representation. The hidden output of the word-level feature layer is the input of the contextual attention layer, and the attention weights are computed as shown in Equations (16) and (17):

$$u_{it} = \tanh(W_w h_{it} + b_w) \tag{16}$$

$$\alpha_{it} = \frac{\exp(u_{it}^{\top} u_w)}{\sum_{t} \exp(u_{it}^{\top} u_w)} \tag{17}$$

where $W_w$ is the weight matrix, $b_w$ is the bias term, and $\alpha_{it}$ is the normalized importance weight. $u_w$ is the attention context vector, which carries information that guides the attention model toward informative words in the input sequence and therefore plays an important role in the attention mechanism. Prior work either ignores the context vector or initializes it randomly, which greatly weakens the role of context. We therefore use the combination of the word hidden features learned by the LSTMs in the two directions as the context vector $u_w$. It contains contextual information about the words in the sentence and guides the learning of the attention mechanism.

Next, the word importance weights computed by Formula (17) are used to take a weighted sum of the hidden outputs of the word-level feature layer. This aggregates the word-level feature vectors into a sentence-level feature vector $s_i$ that represents the current sentence, as shown in Formula (18):

$$s_i = \sum_{t} \alpha_{it} h_{it} \tag{18}$$
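A NumPy sketch of Equations (16)-(18) in the hierarchical-attention style. How the paper builds the context vector $u_w$ from the bidirectional hidden features is not spelled out, so `context_vector` below (the last forward state joined with the first backward state, i.e. the two states that have each read the whole sentence) is a hypothetical choice. Weights and sizes are placeholders, and the projection keeps the width $2d_h$ so that $u_{it}$ and $u_w$ can be multiplied.

```python
import numpy as np

def context_vector(H, d_h):
    """Hypothetical u_w: final forward state joined with the first-position backward state."""
    return np.concatenate([H[-1, :d_h], H[0, d_h:]])

def attention_pool(H, W_w, b_w, u_w):
    """Eqs. (16)-(18): score each word, softmax-normalize, take the weighted sum."""
    U = np.tanh(H @ W_w.T + b_w)          # u_it, shape (T, 2 * d_h)
    scores = U @ u_w                      # u_it^T u_w, shape (T,)
    scores = scores - scores.max()        # numerical stability; leaves the softmax unchanged
    alpha = np.exp(scores) / np.exp(scores).sum()
    s = alpha @ H                         # s_i = sum_t alpha_it * h_it
    return s, alpha

rng = np.random.default_rng(1)
T, d_h = 7, 64
H = rng.normal(size=(T, 2 * d_h))         # stand-in for the word-level BiLSTM output
W_w = rng.normal(scale=0.1, size=(2 * d_h, 2 * d_h))
b_w = np.zeros(2 * d_h)
s, alpha = attention_pool(H, W_w, b_w, context_vector(H, d_h))
print(s.shape, round(float(alpha.sum()), 6))  # (128,) 1.0
```

Because $u_w$ is computed from the sentence itself, each sentence gets its own query, in contrast to a single learned (or randomly initialized) global vector.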

3.3.4. Sentence-Level Feature Extraction Layer

Because a text contains multiple sentences and there are semantic dependencies between them, the main task of the sentence-level feature extraction layer is to extract the semantic dependency information between the sentences of a text, using sentences as units. The sentence vectors $s_i$ obtained by the contextual attention layer are fed into a BiLSTM layer for sentence sequence learning:

$$\overrightarrow{h}_i = \overrightarrow{\mathrm{LSTM}}(s_i), \quad i \in [1, L] \tag{19}$$

$$\overleftarrow{h}_i = \overleftarrow{\mathrm{LSTM}}(s_i), \quad i \in [L, 1] \tag{20}$$

$$h_i = [\,\overrightarrow{h}_i \,;\, \overleftarrow{h}_i\,] \tag{21}$$

where $\overrightarrow{h}_i$ is the hidden state obtained by the forward LSTM layer from the forward input, $\overleftarrow{h}_i$ is the hidden state obtained by the backward LSTM layer from the reversed input, and $h_i$ is the concatenation of the two.

Increasing the number of network layers can improve a model's accuracy, but deep networks suffer from vanishing gradients and network degradation: beyond a certain depth, accuracy starts to decline again and the fitting ability worsens. In deep neural networks, residual connections effectively suppress this degradation and help avoid the sub-optimal solutions that deeper networks tend to reach. We therefore introduce a residual connection between the two adjacent layers, combining the sentence feature representation learned by the sentence-level feature extraction layer with the sentence feature representation output by the contextual attention layer. Letting the model learn the residual yields a higher-level feature representation and a better classification result, as shown in Formula (22):

$$v_i = h_i + s_i \tag{22}$$

where $h_i$ is the sentence-level BiLSTM output for the $i$-th sentence and $s_i$ is the corresponding sentence vector from the contextual attention layer.

Finally, the result is fed into the Softmax layer for classification to obtain the final category of the text.
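Formula (22) and the final Softmax step can be sketched as follows. The residual addition requires the attention output and the sentence-level BiLSTM output to have the same width. The paper does not state how the per-sentence vectors are reduced to a single text vector before the Softmax layer, so the mean pooling below is an assumption, as are the names `classify`, `W_c`, `b_c` and all sizes.

```python
import numpy as np

def softmax(z):
    z = z - z.max()                       # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()

def classify(sent_attn, sent_bilstm, W_c, b_c):
    """Residual fusion of sentence features (Formula (22)), then a Softmax classifier.

    sent_attn:   (L, D) sentence vectors s_i from the contextual attention layer
    sent_bilstm: (L, D) sentence-level BiLSTM outputs h_i; D must match for the addition
    W_c, b_c:    hypothetical classifier weights (C, D) and bias (C,)
    """
    if sent_attn.shape != sent_bilstm.shape:
        raise ValueError("residual branches must have the same shape")
    fused = sent_bilstm + sent_attn       # residual connection, one row per sentence
    doc = fused.mean(axis=0)              # ASSUMPTION: mean-pool sentences into one text vector
    probs = softmax(W_c @ doc + b_c)      # class probabilities
    return int(np.argmax(probs)), probs

rng = np.random.default_rng(2)
L, D, C = 3, 128, 2                       # sentences, feature size, classes (hypothetical)
label, probs = classify(rng.normal(size=(L, D)), rng.normal(size=(L, D)),
                        rng.normal(scale=0.1, size=(C, D)), np.zeros(C))
print(label, probs.round(3))
```

In training, the Softmax output would be paired with a cross-entropy loss; only the forward computation is shown here.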

4. Discussion

In this section, we conduct experiments on four publicly available datasets and analyze the experimental results of different models. We then perform ablation studies to further examine the effectiveness of each component.

4.1. Datasets

For a comprehensive comparison with other state-of-the-art methods, we evaluate the proposed method on four datasets commonly used in prior work: SST-2 [31], MR [32], CoLA [33], and R8 [13]. The four datasets cover reviews, grammatical acceptability, and news.

SST-2 (Stanford Sentiment Treebank) is a binary single-sentence classification task consisting of sentences extracted from movie reviews together with human annotations of their sentiment. We use the public version, which has 6920 training samples, 872 validation samples, and 1821 test samples. In total, there are 4963 positive and 4650 negative reviews, and the average review length is 19.3 words.

MR is a sentiment classification dataset of movie reviews. We use the public version from [34], with 6392 training samples, 710 validation samples, and 3554 test samples. The average review length is 21.0 words.

CoLA is a grammatical acceptability dataset released by New York University. The task is to judge whether a given sentence is grammatically correct, which makes it a single-sentence binary classification task. We use the public version with 8551 training samples, 1043 validation samples, and 1062 test samples, with a total of 6744 positive and 2850 negative samples. The average length is 7.7 words.

R8 is a subset of the Reuters-21578 dataset. It includes eight categories, divided into 5485 training documents and 2189 test documents. We randomly select 20% of the training set as the validation set. The average length is 65.72 words.
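The R8 validation split described above can be reproduced along these lines. The paper does not report a random seed or the exact procedure, so `split_validation` and `seed=0` are illustrative assumptions; with 5485 training documents, holding out 20% gives 1097 validation and 4388 training documents.

```python
import random

def split_validation(items, val_fraction_pct=20, seed=0):
    """Randomly hold out val_fraction_pct percent of the training items as a validation set."""
    rng = random.Random(seed)             # arbitrary seed; the paper does not report one
    idx = list(range(len(items)))
    rng.shuffle(idx)
    n_val = len(items) * val_fraction_pct // 100   # integer arithmetic avoids float rounding
    val = [items[i] for i in idx[:n_val]]
    train = [items[i] for i in idx[n_val:]]
    return train, val

train, val = split_validation(list(range(5485)))   # stand-ins for the 5485 R8 training documents
print(len(train), len(val))  # 4388 1097
```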

4.2. Implementation Details

The WordNet threshold is set to 0.5 for all datasets. When computing NPMI, each entire sentence is used as the text window for building the vocabulary graph, and the NPMI threshold is set to 0.2 for all datasets to filter out spurious relationships between words. In the IMGCN model, the graph embedding output size is set to 16 and the hidden dimension of the graph embedding to 128. We use the pre-trained BERT model released by Google and set the maximum sequence length to 200. The dropout rate is 0.2, the model is trained for 15 epochs, and the batch size is 16. The learning rate is $1 \times 10^{-5}$ for SST-2 and $8 \times 10^{-5}$ for the other datasets. The weight decay is 0.0001 for CoLA and 0.001 for the other datasets.
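The NPMI filter can be sketched in plain Python. Following Bouma [27], NPMI(i, j) = log(p(i, j) / (p(i) p(j))) / (-log p(i, j)), with each probability estimated as the fraction of windows (here, whole sentences) that contain the word or pair. Counting each word at most once per sentence, and assigning NPMI = 1 to a pair that occurs in every window, are assumptions of this sketch; only pairs above the 0.2 threshold become edges, and the WordNet-based edges are not shown.

```python
import math
from collections import Counter
from itertools import combinations

def npmi_edges(sentences, threshold=0.2):
    """Return {(word_a, word_b): npmi} for word pairs whose NPMI exceeds `threshold`.

    Each sentence is one co-occurrence window; a word is counted at most once per window.
    """
    n_windows = len(sentences)
    word_count = Counter()
    pair_count = Counter()
    for tokens in sentences:
        vocab = sorted(set(tokens))
        word_count.update(vocab)
        pair_count.update(combinations(vocab, 2))   # unordered pairs stored as (a, b) with a < b
    edges = {}
    for (a, b), c_ab in pair_count.items():
        p_ab = c_ab / n_windows
        if p_ab == 1.0:
            npmi = 1.0   # the pair occurs in every window: complete co-occurrence
        else:
            p_a = word_count[a] / n_windows
            p_b = word_count[b] / n_windows
            npmi = math.log(p_ab / (p_a * p_b)) / -math.log(p_ab)
        if npmi > threshold:
            edges[(a, b)] = npmi
    return edges

sentences = [["good", "movie"], ["good", "film"], ["bad", "movie"], ["good", "movie", "plot"]]
for pair, score in sorted(npmi_edges(sentences).items()):
    print(pair, round(score, 3))
```

In this toy corpus, "good" and "movie" co-occur in two of four sentences, but both words are frequent, so their NPMI is negative (about -0.17) and no edge is added; the four rarer pairs each score about 0.21 and pass the threshold.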

4.3. Main Experimental Results

To verify the performance of the proposed model, we compare it with the classic Bi-LSTM [35], Text-GCN [13], and BERT [10] models, and with three improved GCN methods: VGCN [15], VGCN-BERT [15], and STGCN + BERT + BiLSTM [14].

- Bi-LSTM: Bidirectional LSTM is widely used for text classification. Pre-trained BERT word embeddings are used as the input to the Bi-LSTM model.

- Text-GCN: A text classification method that models text as a graph. Words and documents are treated as nodes; document-word edges are based on the occurrence of words in documents, and word-word edges are based on global word co-occurrence information. It improves on many strong baseline models.

- VGCN: This model uses only VGCN, with the pre-trained BERT word embeddings as input. The output of VGCN is fed into a fully connected layer with the Softmax function to produce classification scores. This model uses only the global information of the vocabulary graph.

- BERT: We use the base version of pre-trained BERT (BERT-base-uncased).

- VGCN-BERT: A graph convolutional network is built on a vocabulary graph constructed from word co-occurrence information, and the resulting graph embedding is fed together with the word embeddings into the self-attention encoder of BERT.

- STGCN + BERT + BiLSTM: A topic model extracts short-text topic information, and a short-text graph is constructed from word co-occurrence and document-word relations. The word and document nodes trained by the GCN, together with vectors generated by BERT's hidden layer, are fed into a BiLSTM classifier for short-text classification.

To evaluate the model comprehensively, we report accuracy together with the weighted average F1-score and the macro F1-score. Table 1 presents these metrics on the test sets. The experimental results on the four benchmark datasets show that IMGCN generally outperforms the baseline models, which supports its effectiveness and robustness for text classification. In the table, "—" indicates that no result is available, and bold marks the best result. The macro F1-score is the arithmetic mean of the per-class F1-scores and gives equal weight to every class, whereas the weighted average F1-score weights each class's F1-score by its number of samples. On SST-2 and MR the two scores are very close, and because we round to two decimal places, the macro and weighted average F1-scores appear identical on these two datasets.
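The difference between the two F1 averages is easy to see on toy labels. The functions below are a plain-Python sketch of the standard definitions (they should agree with, for example, scikit-learn's `f1_score` with `average='macro'` and `average='weighted'`); the labels are made up for illustration.

```python
from collections import Counter

def f1_per_class(y_true, y_pred, label):
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == label and p != label)
    if tp == 0:
        return 0.0
    precision, recall = tp / (tp + fp), tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

def macro_and_weighted_f1(y_true, y_pred):
    support = Counter(y_true)                       # number of true samples per class
    labels = sorted(support)
    f1 = {c: f1_per_class(y_true, y_pred, c) for c in labels}
    macro = sum(f1.values()) / len(labels)          # every class weighs the same
    weighted = sum(f1[c] * support[c] for c in labels) / len(y_true)  # weighted by class size
    return macro, weighted

balanced = macro_and_weighted_f1([0, 0, 1, 1], [0, 1, 1, 1])
imbalanced = macro_and_weighted_f1([0, 0, 0, 0, 0, 0, 1, 1], [0, 0, 0, 0, 0, 1, 1, 0])
print([round(v, 4) for v in balanced])    # [0.7333, 0.7333]
print([round(v, 4) for v in imbalanced])  # [0.6667, 0.75]
```

With equal class sizes the two averages coincide; with unequal sizes the weighted average is pulled toward the majority class. The near balance of SST-2 (4963 positive vs. 4650 negative) is consistent with the two scores agreeing at two decimal places.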

Table 1.

Accuracy, Weighted average F1-Score and (Macro F1-score) on the test sets.

As Table 1 shows, IMGCN achieves better classification results than the baseline models, which demonstrates the effectiveness of the proposed model. The Bi-LSTM model performs comparably to VGCN and Text-GCN, which rely on the vocabulary graph. Compared with VGCN and BERT, our model achieves an improvement, indicating that introducing dependencies, the semantic dictionary, BERT, Bi-LSTM, and residual attention enriches the contextual semantic information and local feature information available to the GCN and yields better classification performance than the other models. Moreover, IMGCN consistently outperforms VGCN-BERT. Together, these results indicate that each component of the model contributes to its performance.

4.4. Ablation Study

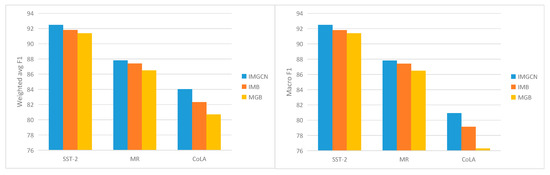

To further study the contributions of the individual components of IMGCN, we conducted ablation experiments. Because IMGCN and its three variant models perform similarly on R8, Table 2 reports ablation results only on the three public datasets SST-2, MR, and CoLA, using the weighted average F1-score and the macro F1-score. The results for IMGCN and the three variants show that the three components used in this paper each improve the performance of IMGCN. MGB builds the graph using only pointwise mutual information and concatenates the learned graph features with the local information obtained by BERT. IMB extends MGB by additionally constructing the graph from dependency syntax and a semantic dictionary. IMGCN further extends IMB by fusing the graph features and the local features obtained by BERT through a hierarchical BiLSTM.

Table 2.

Experimental results of ablation study.

As shown in Table 2, adding each module improves performance relative to the variant without it, indicating that the modules complement one another. Different modules also contribute differently on different datasets.

As shown in Figure 5, IMB improves on MGB: its F1-scores on all three datasets are higher than those of MGB. Although the gap is small, this indicates that introducing dependency syntax and a semantic dictionary enriches the word embedding information of the graph. Compared with IMB, IMGCN improves the F1-scores on SST-2, MR, and CoLA to varying degrees. This shows that fusing global and local information through a learned model gives better classification ability than simple concatenation, which supports the effectiveness of this module.

Figure 5.

Histogram of experimental results of ablation study.

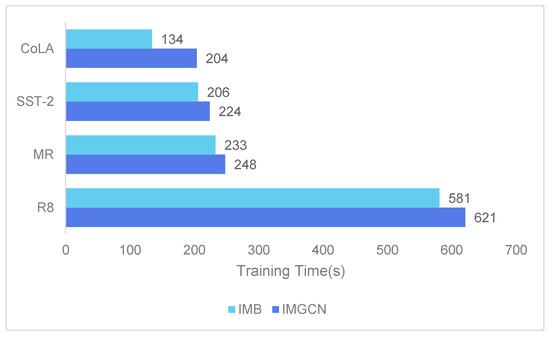

After the feature representations obtained by the GCN and the pre-trained BERT are combined, further learning with the feature fusion module yields better classification than directly concatenating the two sets of features, but the larger model takes longer to train. We therefore compare, on the four datasets, the training time of directly concatenating the two sets of features with that of combining them through the feature fusion module, as shown in Figure 6.

Figure 6.

Average training time per epoch for different models on different datasets.

In Figure 6, the IMB model directly concatenates the output features of the GCN with the pre-trained BERT word vectors for classification, whereas the IMGCN model extracts hierarchical features from the combination of the two. As Figure 6 shows, training time increases as the model becomes more complex: compared with IMB, each epoch of IMGCN takes 70 s, 18 s, 15 s, and 40 s longer on CoLA, SST-2, MR, and R8, respectively. Since the best results are achieved after about 15 epochs of training, the overall training time of IMGCN increases by roughly 4 to 17 min relative to IMB, while on CoLA its classification accuracy and weighted average F1-score increase by 1.92% and 2.32%, respectively. Given this improvement in classification performance, the additional training time is acceptable, which justifies further fusing and learning the features obtained by the GCN and the pre-trained BERT with contextual attention and the hierarchical model.
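The overhead estimate can be checked with simple arithmetic. Reading the reported per-epoch figures as the additional time of IMGCN over IMB (which is what the stated 4 to 17 min total implies) and multiplying by the roughly 15 training epochs gives the totals below; the variable names are illustrative.

```python
EPOCHS = 15  # best results are reported after about 15 epochs
extra_s_per_epoch = {"CoLA": 70, "SST-2": 18, "MR": 15, "R8": 40}  # IMGCN minus IMB, in seconds

extra_min = {name: s * EPOCHS / 60 for name, s in extra_s_per_epoch.items()}
for name, minutes in extra_min.items():
    print(f"{name}: +{minutes:.2f} min")
# CoLA: +17.50 min
# SST-2: +4.50 min
# MR: +3.75 min
# R8: +10.00 min
```

The range from 3.75 min (MR) to 17.5 min (CoLA) matches the "about 4 to 17 min" quoted in the text.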

5. Conclusions

In this research, we propose IMGCN, a model that uses dependency relationships to capture contextual dependencies and a semantic dictionary to add useful connections between words, addressing shortcomings of graph convolutional networks in text representation. A two-layer BiLSTM combines the local feature information obtained by BERT with the global information obtained by the GCN to mine deeper features of the text, and an attention mechanism focuses on keyword features. Residual connections alleviate the degradation problem that arises when many network layers are stacked, and letting the model learn the residual helps it obtain new features and improves text classification. Experiments on four datasets show improvements over the baseline models.

In future work, we will further explore dependency relations and construct graph features with richer semantic information to improve the performance of the model. In addition, this paper does not analyze the model's complexity or how to reduce it, which is a direction for further research.

Author Contributions

Conceptualization, B.X., C.Z., X.W. and W.Z.; Methodology, B.X.; Software, B.X.; Validation, B.X.; Writing—original draft preparation, B.X.; Writing—review and editing, B.X. and C.Z.; Visualization, B.X.; Project administration, B.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Joachims, T. Text Categorization with Support Vector Machines: Learning with Many Relevant Features. In Proceedings of the ECML, Chemnitz, Germany, 21–23 April 1998.

- Alhajj, R.; Gao, H.; Li, X.; Li, J.; Zaiane, O.R. Advanced Data Mining and Applications. In Proceedings of the Third International Conference (ADMA 2007), Harbin, China, 6–8 August 2007.

- Kim, Y. Convolutional Neural Networks for Sentence Classification. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014.

- Zhang, H.; Xiao, L.; Wang, Y.; Jin, Y. A Generalized Recurrent Neural Architecture for Text Classification with Multi-Task Learning. arXiv 2017, arXiv:1707.0289.

- Zhao, W.; Peng, H.; Eger, S.; Cambria, E.; Yang, M. Towards Scalable and Reliable Capsule Networks for Challenging NLP Applications. arXiv 2019, arXiv:1906.0282.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Cho, K.; Merrienboer, B.V.; Gülçehre, Ç.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning Phrase Representations using RNN Encoder–Decoder for Statistical Machine Translation. arXiv 2014, arXiv:1406.1078.

- Wang, R.; Li, Z.; Cao, J.; Chen, T.; Wang, L. Convolutional Recurrent Neural Networks for Text Classification. In Proceedings of the 2019 International Joint Conference on Neural Networks, Budapest, Hungary, 14–19 July 2019.

- Vaswani, A.; Shazeer, N.M.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All you Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Devlin, J.; Chang, M.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2018, arXiv:1810.04805.

- Battaglia, P.W.; Hamrick, J.B.; Bapst, V.; Sanchez-Gonzalez, A.; Zambaldi, V.F.; Malinowski, M.; Tacchetti, A.; Raposo, D.; Santoro, A.; Faulkner, R.; et al. Relational inductive biases, deep learning, and graph networks. arXiv 2018, arXiv:1806.01261.

- Kipf, T.; Welling, M. Semi-Supervised Classification with Graph Convolutional Networks. arXiv 2017, arXiv:1609.02907.

- Yao, L.; Mao, C.; Luo, Y. Graph Convolutional Networks for Text Classification. arXiv 2019, arXiv:1809.05679.

- Zhenbo, B.; Shiyou, Z.; Hongjun, P.; Yuanhong, W.; Hua, Y. A Survey of Preprocessing Methods for Marine Ship Target Detection Based on Video Surveillance. In Proceedings of the 2021 7th International Conference on Computing and Artificial Intelligence, Tianjin, China, 23–26 April 2021.

- Lu, Z.; Du, P.; Nie, J. VGCN-BERT: Augmenting BERT with Graph Embedding for Text Classification. Adv. Inf. Retr. 2020, 12035, 369–382.

- Xue, B.; Zhu, C.; Wang, X.; Zhu, W. An Integration Model for Text Classification using Graph Convolutional Network and BERT. J. Phys. Conf. Ser. 2021, 2137, 012052.

- Yang, Z.; Yang, D.; Dyer, C.; He, X.; Smola, A.; Hovy, E.H. Hierarchical Attention Networks for Document Classification. In Proceedings of the NAACL-HLT 2016, San Diego, CA, USA, 12–17 June 2016.

- Harris, Z.S. Distributional structure. Word 1954, 10, 146–162.

- Rousseau, F.; Kiagias, E.; Vazirgiannis, M. Text Categorization as a Graph Classification Problem. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing, Beijing, China, 26–31 July 2015.

- Luo, Y.; Uzuner, Ö.; Szolovits, P. Bridging semantics and syntax with graph algorithms-state-of-the-art of extracting biomedical relations. Brief. Bioinform. 2017, 18, 160–178.

- Tang, D.; Qin, B.; Liu, T. Document Modeling with Gated Recurrent Neural Network for Sentiment Classification. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015.

- Wang, Y.; Huang, M.; Zhu, X.; Zhao, L. Attention-based LSTM for Aspect-level Sentiment Classification. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, Austin, TX, USA, 1–5 November 2016.

- Dong, Y.; Liu, P.; Zhu, Z.; Wang, Q.; Zhang, Q. A Fusion Model-Based Label Embedding and Self-Interaction Attention for Text Classification. IEEE Access 2020, 8, 30548–30559.

- Velickovic, P.; Cucurull, G.; Casanova, A.; Romero, A.; Liò, P.; Bengio, Y. Graph Attention Networks. arXiv 2018, arXiv:1710.10903.

- Xu, K.; Ba, J.; Kiros, R.; Cho, K.; Courville, A.C.; Salakhutdinov, R.; Zemel, R.S.; Bengio, Y. Show, Attend and Tell: Neural Image Caption Generation with Visual Attention. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015.

- Cavallari, S.; Cambria, E.; Cai, H.; Chang, K.C.; Zheng, V.W. Embedding Both Finite and Infinite Communities on Graphs. IEEE Comput. Intell. Mag. 2019, 14, 39–50.

- Bouma, G. Normalized (pointwise) mutual information in collocation extraction. Proc. GSCL 2009, 30, 31–40.

- Wu, Z.; Palmer, M. Verb Semantics and Lexical Selection. In Proceedings of the 32nd Annual Meeting on Association for Computational Linguistics, Las Cruces, NM, USA, 27–30 June 1994.

- Tang, J.; Qu, M.; Mei, Q. PTE: Predictive Text Embedding through Large-scale Heterogeneous Text Networks. In Proceedings of the 21st ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Sydney, Australia, 10–13 August 2015.

- Tesnière, L. Eléments de syntaxe structurale, 1959 Paris, Klincksieck. Can. J. Linguist./Rev. Can. Linguist. 1960, 6, 67–69.

- Socher, R.; Perelygin, A.; Wu, J.; Chuang, J.; Manning, C.D.; Ng, A.; Potts, C. Recursive Deep Models for Semantic Compositionality Over a Sentiment Treebank. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, Seattle, WA, USA, 18–21 October 2013.

- Pang, B.; Lee, L. Seeing Stars: Exploiting Class Relationships for Sentiment Categorization with Respect to Rating Scales. In Proceedings of the 43rd Annual Meeting of the Association for Computational Linguistics (ACL’05), Ann Arbor, MI, USA, 25–30 June 2005.

- Warstadt, A.; Singh, A.; Bowman, S.R. Neural Network Acceptability Judgments. Trans. Assoc. Comput. Linguist. 2019, 7, 625–641.

- Zhu, X.; Xu, Q.; Chen, Y.; Chen, H.; Wu, T. A Novel Class-Center Vector Model for Text Classification Using Dependencies and a Semantic Dictionary. IEEE Access 2020, 8, 24990–25000.

- Graves, A.; Mohamed, A.; Hinton, G.E. Speech recognition with deep recurrent neural networks. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 6645–6649.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).