Robust Estimation in Continuous–Discrete Cubature Kalman Filters for Bearings-Only Tracking

Abstract

1. Introduction

2. Continuous–Discrete System Model and Square-Root Continuous–Discrete Cubature Kalman Filter Algorithm for Target Tracking

2.1. Continuous–Discrete System Model

2.2. Square-Root Continuous–Discrete Cubature Kalman Filter Algorithm

Algorithm 1: SRCD-CKF.

Time update

- Step 1. Initialize the state expectation and the covariance matrix.
- Step 2.1. Decompose the covariance matrix.
- Step 2.2. Calculate the state cubature points.
- Step 2.3. Propagate the state cubature points.
- Step 2.4. Calculate the predicted state.
- Step 2.5. Calculate the predicted square-root covariance.

Measurement update

- Step 3.1. Calculate the state cubature points.
- Step 3.2. Propagate the cubature points through the measurement function.
- Step 3.3. Calculate the predicted measurement.
- Step 3.4. Construct the weighted, centered measurement matrix.
- Step 3.5. Calculate the innovation covariance matrix.
- Step 3.6. Construct the weighted, centered state matrix.
- Step 3.7. Calculate the cross-covariance matrix.
- Step 3.8. Calculate the continuous–discrete cubature gain.
- Step 3.9. Calculate the state estimate.
- Step 3.10. Update the square-root covariance matrix.
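The time-update steps (Steps 2.1–2.5) can be sketched in Python with NumPy. This is a minimal sketch under stated assumptions, not the authors' implementation: it assumes the standard third-degree spherical–radial cubature rule with 2n equally weighted points, a QR-based triangularization `tria`, and a single Euler sub-step of length `delta` for the drift `f` (a simplification of the higher-order Itô–Taylor integration often used in continuous–discrete CKFs). The names `f`, `s_q` (a square root of the process-noise intensity) and `delta` are hypothetical. In a full filter the sub-step would be repeated across the sampling interval.

```python
import numpy as np


def tria(a):
    """Lower-triangular S with S @ S.T == a @ a.T, obtained from a QR of a.T."""
    _, r = np.linalg.qr(a.T)
    return r.T


def cubature_points(x, s):
    """2n cubature points x + S * sqrt(n) * [I, -I], one point per column."""
    n = x.size
    xi = np.sqrt(n) * np.hstack([np.eye(n), -np.eye(n)])
    return x[:, None] + s @ xi


def time_update_substep(x, s, f, s_q, delta):
    """One Euler sub-step of a square-root continuous-discrete cubature time update.

    s is the square-root factor of the covariance (Step 2.1), so P = s @ s.T.
    """
    m = 2 * x.size
    X = cubature_points(x, s)                                              # Step 2.2
    X_prop = X + delta * np.column_stack([f(X[:, i]) for i in range(m)])   # Step 2.3
    x_pred = X_prop.mean(axis=1)                                           # Step 2.4
    X_c = (X_prop - x_pred[:, None]) / np.sqrt(m)                          # weighted, centered points
    s_pred = tria(np.hstack([X_c, np.sqrt(delta) * s_q]))                  # Step 2.5
    return x_pred, s_pred
```

For a linear drift f(x) = Ax, the sketch reproduces the predicted covariance (I + δA)P(I + δA)ᵀ + δQ exactly, which makes a convenient sanity check.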
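The measurement-update steps (Steps 3.1–3.10) can be sketched in the same way. This sketch assumes the standard square-root cubature measurement update with additive measurement noise whose covariance has the square root `s_r`; `h` is a hypothetical measurement function returning a 1-D array. The gain is computed with a dense solve against S_zz S_zzᵀ instead of two triangular solves, which is algebraically equivalent but less economical. For bearing measurements, the innovation z − ẑ would also need wrapping to (−π, π], which this sketch omits.

```python
import numpy as np


def tria(a):
    """Lower-triangular S with S @ S.T == a @ a.T, obtained from a QR of a.T."""
    _, r = np.linalg.qr(a.T)
    return r.T


def cubature_points(x, s):
    """2n cubature points x + S * sqrt(n) * [I, -I], one point per column."""
    n = x.size
    xi = np.sqrt(n) * np.hstack([np.eye(n), -np.eye(n)])
    return x[:, None] + s @ xi


def measurement_update(x_pred, s_pred, z, h, s_r):
    """Square-root cubature measurement update with additive noise R = s_r @ s_r.T."""
    m = 2 * x_pred.size
    X = cubature_points(x_pred, s_pred)                       # Step 3.1
    Z = np.column_stack([h(X[:, i]) for i in range(m)])       # Step 3.2
    z_pred = Z.mean(axis=1)                                   # Step 3.3
    Z_c = (Z - z_pred[:, None]) / np.sqrt(m)                  # Step 3.4
    s_zz = tria(np.hstack([Z_c, s_r]))                        # Step 3.5
    X_c = (X - x_pred[:, None]) / np.sqrt(m)                  # Step 3.6
    p_xz = X_c @ Z_c.T                                        # Step 3.7
    k = np.linalg.solve(s_zz @ s_zz.T, p_xz.T).T              # Step 3.8: K = P_xz P_zz^-1
    x_upd = x_pred + k @ (z - z_pred)                         # Step 3.9
    s_upd = tria(np.hstack([X_c - k @ Z_c, k @ s_r]))         # Step 3.10
    return x_upd, s_upd
```

With a linear measurement function h(x) = Hx, the result coincides with the ordinary Kalman update, which is how the sketch can be checked.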

3. Robust Square-Root Continuous–Discrete Cubature Kalman Filter Algorithms

3.1. Robust SRCD-CKF with Huber’s Method

Algorithm 2: HRSRCD-CKF.

- Step 1. Repeat Step 1 of the SRCD-CKF algorithm.
- Steps 2.1–3.7. Identical to Steps 2.1–3.7 of the SRCD-CKF algorithm.
- Step 3.8. Redefine the innovation covariance matrix.
- Steps 3.9–3.11. Identical to Steps 3.8–3.10 of the SRCD-CKF algorithm.
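The exact Huber-based redefinition used in Step 3.8 is not reproduced in this text. One common construction in Huber-type Kalman filters, assumed here purely for illustration, whitens the innovation with S_zz, computes Huber weights with the conventional threshold γ = 1.345, and inflates the innovation covariance to S_zz Ψ⁻¹ S_zzᵀ, where Ψ is the diagonal weight matrix. The function names and the threshold value are assumptions, not the paper's definitions.

```python
import numpy as np


def huber_weights(e, gamma=1.345):
    """Huber weights psi(e)/e: 1 inside the threshold, gamma/|e| outside."""
    a = np.abs(e)
    # np.maximum keeps the unused branch finite when a is zero
    return np.where(a <= gamma, 1.0, gamma / np.maximum(a, gamma))


def huber_innovation_sqrt_cov(s_zz, innovation, gamma=1.345):
    """A square root of S_zz Psi^-1 S_zz^T with Psi = diag(Huber weights).

    The returned factor is not triangular; apply a QR triangularization if needed.
    """
    e = np.linalg.solve(s_zz, innovation)       # whitened innovation
    w = huber_weights(e, gamma)
    return s_zz @ np.diag(1.0 / np.sqrt(w))     # large residuals -> inflated covariance
```

Components whose whitened residual stays below γ keep their nominal covariance, while outlying components are down-weighted, which reduces their influence on the gain.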

Algorithm 3: MRSRCD-CKF.

- Step 1. Repeat Step 1 of the SRCD-CKF algorithm.
- Steps 2.1–3.7. Identical to Steps 2.1–3.7 of the SRCD-CKF algorithm.
- Steps 3.8–3.11. Identical to Steps 3.8–3.11 of the HRSRCD-CKF algorithm, and is calculated by Equation (16).

3.2. RSRCD-MCCKF Algorithm Based on Maximum Correntropy Criterion

Algorithm 4: RSRCD-MCCKF.

- Step 1. Repeat Step 1 of the SRCD-CKF algorithm.
- Steps 2.1–3.7. Identical to Steps 2.1–3.7 of the SRCD-CKF algorithm.
- Step 3.8. Calculate using Equations (21), (22), and (31).
- Step 3.9. Obtain the new Kalman filter gain using Equation (29).
- Step 3.10. Estimate the state and update the error covariance matrix using Equations (28) and (30).
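Equations (21), (22) and (28)–(31) are not reproduced in this text, so the following is only an illustration of how a maximum correntropy criterion can enter the gain. It assumes a commonly used scalar-kernel form: a Gaussian kernel with bandwidth `sigma` maps the Mahalanobis norm of the innovation to a weight λ in (0, 1], and the measurement noise covariance is inflated to R/λ before the gain is formed. The state-error kernel term that appears in some formulations equals 1 when the state equals its prediction and is omitted. All names are hypothetical.

```python
import numpy as np


def correntropy_weight(innovation, r, sigma):
    """Gaussian kernel exp(-d^2 / (2 sigma^2)) of the innovation's Mahalanobis norm d."""
    d2 = float(innovation @ np.linalg.solve(r, innovation))
    return np.exp(-d2 / (2.0 * sigma ** 2))


def mc_gain(p_xz, p_zz_pred, r, innovation, sigma, floor=1e-8):
    """Gain K = P_xz (P_zz_pred + R / lam)^-1 with correntropy weight lam.

    p_zz_pred is the predicted measurement covariance WITHOUT R (e.g. Z_c @ Z_c.T).
    """
    lam = max(correntropy_weight(innovation, r, sigma), floor)  # floor avoids R / 0
    p_zz = p_zz_pred + r / lam
    return np.linalg.solve(p_zz, p_xz.T).T, lam
```

A large bandwidth drives λ toward 1 and recovers the ordinary cubature gain, whereas a very small bandwidth can suppress almost every measurement, so the kernel parameter has to be tuned.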

3.3. VBSRCD-CKF Algorithm Based on Variational Bayes Criterion

Algorithm 5: VBSRCD-CKF.

- Step 1. Perform Step 1 of the SRCD-CKF algorithm, additionally initializing the parameters of the inverse-Wishart (IW) distribution of the measurement noise covariance.

- Steps 2.1–2.5. Identical to Steps 2.1–2.5 of the SRCD-CKF algorithm.

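- Step 2.6. Calculate the predicted parameters of the IW distribution of the measurement noise covariance: $\nu_{k|k-1} = \rho(\nu_{k-1} - d - 1) + d + 1$ and $V_{k|k-1} = B V_{k-1} B^{T}$, where $\rho$ is a scale factor with $0 < \rho \le 1$ and $B$ is a matrix with $0 < |B| \le 1$; a reasonable choice is $B = \sqrt{\rho}\, I$, where $I$ is the identity matrix and $d$ is the dimension of the measurement.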

- Step 3.1. Before calculating the state cubature points, first let , , and .

- Steps 3.2–3.8. Identical to Steps 3.1–3.7 of the SRCD-CKF algorithm.

- Step 3.9. For j = 1, …, M, iterate the following steps, where M is the number of iterations.

- Step 3.9.1. Calculate the measurement noise covariance matrix.

- Step 3.9.2. Update the innovation covariance matrix.

- Step 3.9.3. Calculate the continuous–discrete filter gain.

- Step 3.9.4. Calculate the state estimate and update the covariance.

- Step 3.9.5. Calculate the updated parameters of the IW distribution of the measurement noise covariance.

- Step 3.10. When j = M, output , , and .
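Step 2.6 can be sketched as the forgetting step of the variational-Bayes adaptive Kalman filter of Särkkä and Nummenmaa, which this sketch assumes is the intended form: ν⁻ = ρ(ν − d − 1) + d + 1 and V⁻ = B V Bᵀ with B = √ρ I. The function and argument names are hypothetical.

```python
import numpy as np


def iw_predict(nu, v, rho):
    """Forgetting step for the inverse-Wishart parameters (nu, V) of R (assumed form)."""
    if not 0.0 < rho <= 1.0:
        raise ValueError("rho must satisfy 0 < rho <= 1")
    d = v.shape[0]
    b = np.sqrt(rho) * np.eye(d)            # the choice B = sqrt(rho) I
    nu_pred = rho * (nu - d - 1) + d + 1
    v_pred = b @ v @ b.T
    return nu_pred, v_pred
```

With B = √ρ I, the IW mean V/(ν − d − 1) is unchanged while ν shrinks, so the forgetting step widens the distribution of R without shifting its center; ρ = 1 leaves the parameters untouched.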
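The iteration in Steps 3.1–3.10 can be sketched as a variational-Bayes loop in the style of Särkkä and Hartikainen. The following choices are assumptions, not taken from the paper: the noise estimate in each pass is R = V/ν (the inverse of E[R⁻¹] under the IW parametrization used here), ν is incremented by one once before the loop, and V is refreshed with the cubature approximation of E[(z − h(x))(z − h(x))ᵀ] under the current posterior. Quantities that do not depend on R are computed once from the prior. All names are hypothetical.

```python
import numpy as np


def tria(a):
    """Lower-triangular S with S @ S.T == a @ a.T, obtained from a QR of a.T."""
    _, r = np.linalg.qr(a.T)
    return r.T


def cubature_points(x, s):
    n = x.size
    xi = np.sqrt(n) * np.hstack([np.eye(n), -np.eye(n)])
    return x[:, None] + s @ xi


def vb_measurement_update(x_pred, s_pred, z, h, nu_pred, v_pred, iters=5):
    if iters < 1:
        raise ValueError("iters must be at least 1")
    m = 2 * x_pred.size
    # R-independent quantities from the predicted cubature points
    X = cubature_points(x_pred, s_pred)
    Z = np.column_stack([h(X[:, i]) for i in range(m)])
    z_pred = Z.mean(axis=1)
    Z_c = (Z - z_pred[:, None]) / np.sqrt(m)
    X_c = (X - x_pred[:, None]) / np.sqrt(m)
    p_xz = X_c @ Z_c.T
    nu = nu_pred + 1.0                       # one measurement adds one degree of freedom
    v = v_pred.copy()
    for _ in range(iters):                   # j = 1, ..., M
        s_r = np.linalg.cholesky(v / nu)     # current noise estimate R = V / nu
        s_zz = tria(np.hstack([Z_c, s_r]))
        k = np.linalg.solve(s_zz @ s_zz.T, p_xz.T).T
        x_upd = x_pred + k @ (z - z_pred)
        s_upd = tria(np.hstack([X_c - k @ Z_c, k @ s_r]))
        # Cubature estimate of E[(z - h(x))(z - h(x))^T] under the current posterior
        Xu = cubature_points(x_upd, s_upd)
        res = z[:, None] - np.column_stack([h(Xu[:, i]) for i in range(m)])
        v = v_pred + res @ res.T / m
    return x_upd, s_upd, nu, v
```

An outlying measurement enlarges the residual term, which raises the estimated R and in turn shrinks the gain in the next pass; this feedback is what makes the loop adaptive.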

4. Numerical Simulation

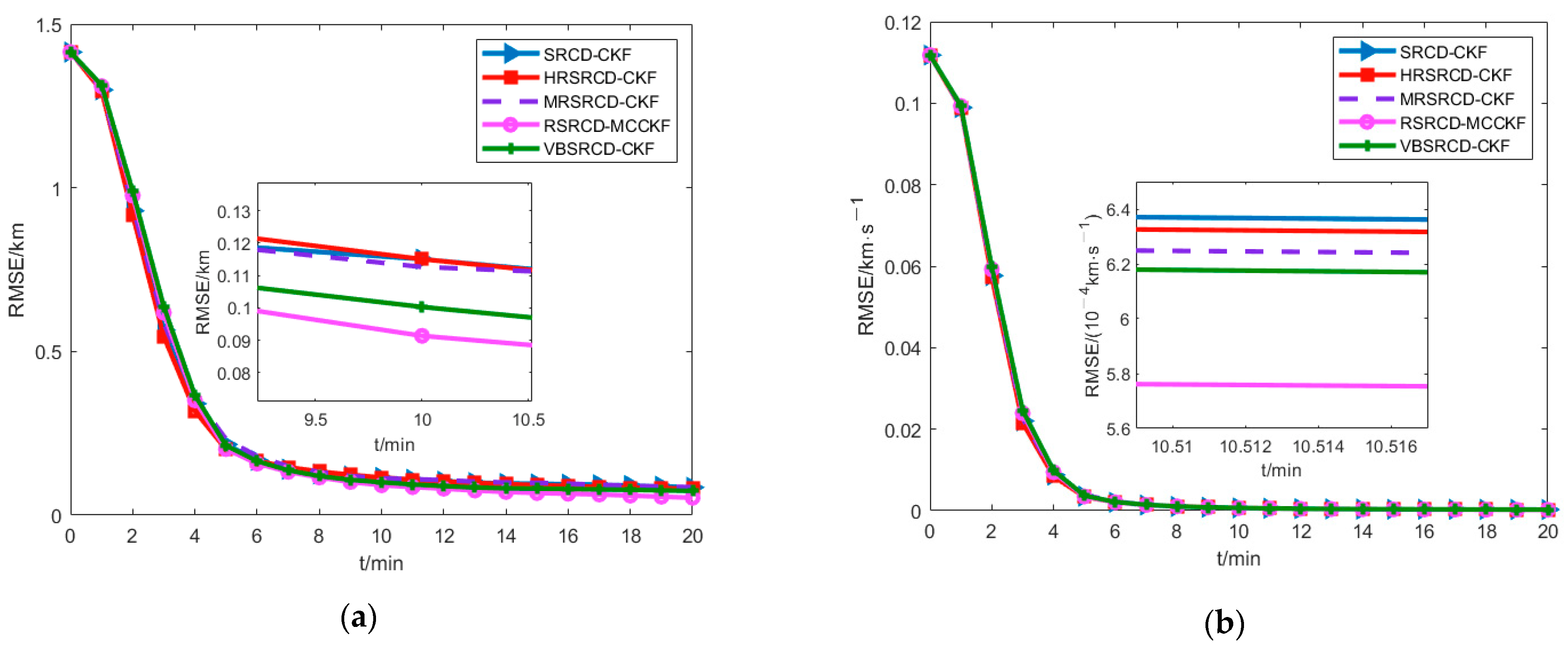

4.1. Gaussian Noise

4.2. Gaussian Mixture Noise

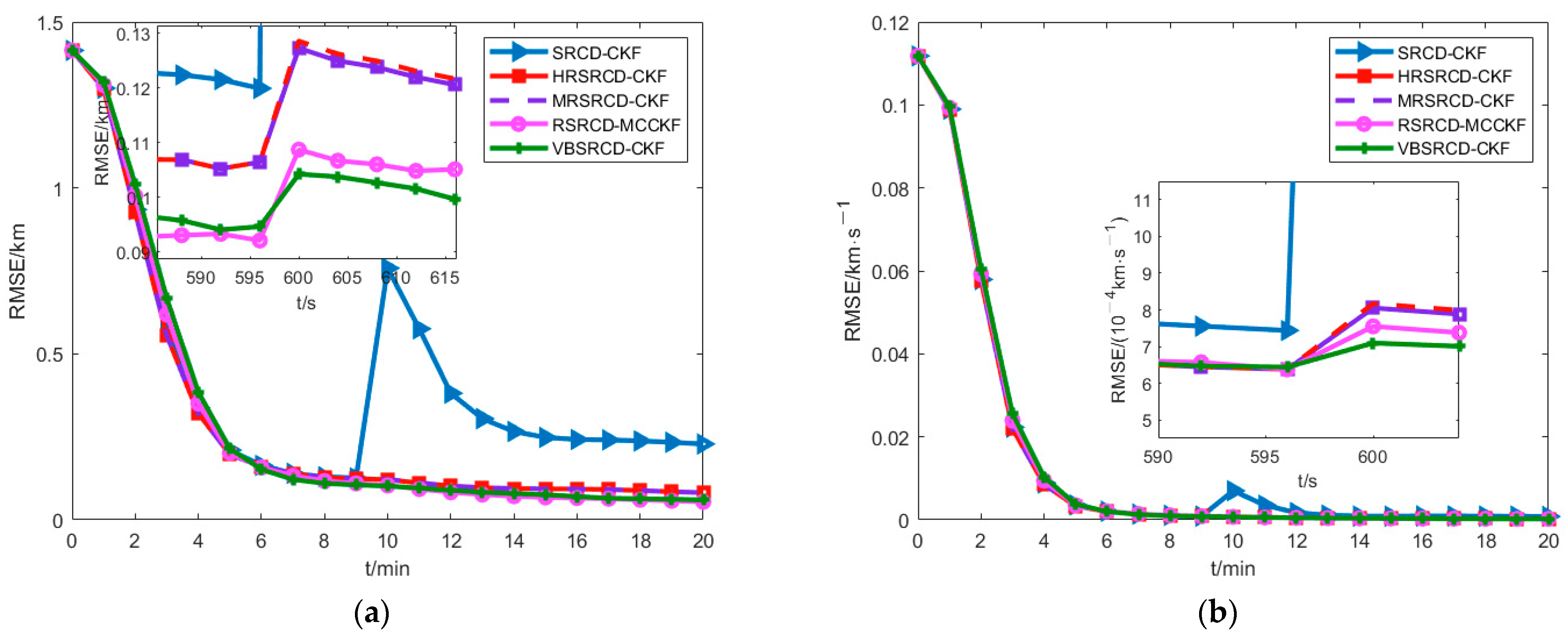

4.3. Gaussian Noise Together with Shot Noise

4.4. Computational Complexity

5. Conclusions

(1) The effectiveness of the proposed algorithms is evaluated in a long-range passive target tracking scenario, and the simulation results show that they adapt better to different noise environments when solving continuous–discrete robust estimation problems. Moreover, although this paper addresses the slow tracking problem in long-range passive target tracking, the proposed algorithms can also be applied in other navigation domains.

(2) Huber's estimation is a common data-processing tool with a wide range of applications. The RSRCD-MCCKF and VBSRCD-CKF algorithms proposed in this paper perform better than Huber's estimator, offering alternative tools for robust estimation.

(3) The simulation results show that the filtering performance of the non-robust algorithm (SRCD-CKF) is very poor. Therefore, when the system's data are contaminated by outliers, an appropriate robust estimation method is indispensable.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Algorithms | | |
|---|---|---|
| RSRCD-MCCKF () | — | — |
| RSRCD-MCCKF () | 0.0528 | 0.000205 |
| RSRCD-MCCKF () | 0.0449 | 0.000188 |
| RSRCD-MCCKF () | 0.0482 | 0.000179 |
| RSRCD-MCCKF () | 0.0645 | 0.000211 |
| RSRCD-MCCKF () | 0.0771 | 0.000239 |
| SRCD-CKF | 0.0784 | 0.000247 |

| Algorithms | | |
|---|---|---|
| RSRCD-MCCKF () | — | 0.0349 |
| RSRCD-MCCKF () | 1.0829 | 0.0043 |
| RSRCD-MCCKF () | 0.4716 | 0.0014 |
| RSRCD-MCCKF () | 0.4813 | 0.0014 |
| RSRCD-MCCKF () | 0.5375 | 0.0016 |
| SRCD-CKF | 0.6679 | 0.0019 |

| Algorithms | Relative Computation Time (Scenario 4.1) | Relative Computation Time (Scenario 4.3) |
|---|---|---|
| SRCD-CKF | 1 | 1 |
| HRSRCD-CKF | 1.029 | 1.034 |
| MRSRCD-CKF | 1.005 | 1.018 |
| RSRCD-MCCKF | 1.076 | 1.080 |
| VBSRCD-CKF | 1.068 | 1.071 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, H.; Chen, S.; Wu, H.; He, R. Robust Estimation in Continuous–Discrete Cubature Kalman Filters for Bearings-Only Tracking. Appl. Sci. 2022, 12, 8167. https://doi.org/10.3390/app12168167

Hu H, Chen S, Wu H, He R. Robust Estimation in Continuous–Discrete Cubature Kalman Filters for Bearings-Only Tracking. Applied Sciences. 2022; 12(16):8167. https://doi.org/10.3390/app12168167

Chicago/Turabian Style: Hu, Haoran, Shuxin Chen, Hao Wu, and Renke He. 2022. "Robust Estimation in Continuous–Discrete Cubature Kalman Filters for Bearings-Only Tracking" Applied Sciences 12, no. 16: 8167. https://doi.org/10.3390/app12168167

APA Style: Hu, H., Chen, S., Wu, H., & He, R. (2022). Robust Estimation in Continuous–Discrete Cubature Kalman Filters for Bearings-Only Tracking. Applied Sciences, 12(16), 8167. https://doi.org/10.3390/app12168167