1. Introduction

Trust and reputation estimation is a typical daily social behavior. Before purchasing particular items, rational customers accumulate information to determine the product’s quality, and the decision-maker relies heavily on the overall trustworthiness of the product provider. The same mechanism fits multi-agent systems (MASs), especially MASs with deceptive agents in a distributed environment.

Numerous protocols have been used to address the trustworthiness issue in MASs. Generally, four kinds of models are commonly applied to trusted systems. Logical models describe how one agent trusts another according to mathematical logic. Social-cognitive models decide agents’ trustworthiness in a way inspired by human psychology. Organizational models maintain trust through personal relationships in the system, whereas numerical models understand trust from a mathematical probability perspective [1,2]. Each of the four models has advantages for trust estimation.

In the numerical models, an agent’s trustworthiness is represented by a real number signifying the probability that an interaction with it succeeds. The service provider with a high degree of trust is more likely to be selected. To assess the honesty of an agent, trust models need to collect information concerning possible cooperation. In general, trust estimation draws mainly on two types of information: direct trust, which is based on experience from the agent’s own direct interactions [3,4], and indirect reputation, which is based on the testimony presented by third-party agents [2,5]. In addition, social-cognitive information and organizational knowledge are other essential aspects [6,7,8]. However, information collected from third-party agents inevitably involves significant uncertainty; therefore, it must carry a rational degree of confidence to be serviceable.

To distinguish reliable information collected from third-party agents, some models assume that the majority of agents are dependable [2]. Others identify dishonest agents through personal direct experience [9], or filter out unreliable recommendations and discount their evidence by assigning adaptive weights [9,10,11,12]. Although some of these models have excellent security features, most assume that service providers and witnesses behave statically. That is to say, service providers are all assumed to be trusted and to maintain their honest behavior consistently; what is more, the testimony provided by third parties is assumed to be always reliable. However, there is no guarantee that service providers and witnesses always behave statically. They might switch from genuine to malicious or the other way around. Thus, this general assumption is insufficient given the dynamic behavior of agents in MASs. Furthermore, most systems that we reviewed consider only reliability, certainty, or consistency, each as a separate model. They do not offer an integrated model covering all of these aspects for trustworthiness estimation.

In summary, agents, both resource providers and third-party agents, can change their performance in MASs. In the numerical models, much information is collected to evaluate direct trust and indirect reputation. However, much uncertainty stems from incompleteness, randomness, fading, and so forth in direct trust, whereas the information collected from third-party agents must carry a rational degree of confidence to be serviceable. This paper proposes an adaptive trust model for MASs in which agents’ behaviors might differ over time. Agents in this model can be service providers as well as witnesses who give testimony for trust estimation. The Dempster-Shafer theory of evidence is adopted to capture direct trust. Dynamic adaptive weights that depend on the quality of the collected information, the witness’s credibility, and the certainty are used to characterize confidence for modifying the indirect reputation. The ultimate trust combines both direct trust and indirect reputation. Our model gives customer agents a measure for deciding which agent to interact with.

The rest of the paper is organized as follows. Some related work is introduced in Section 2. The primary tool, the Dempster-Shafer theory of evidence, is discussed in Section 3. Section 4 explains the proposed trust model in detail. Comparisons and conclusions are given in the last section.

2. Related Work

Trust, a subjective judgment, refers in most of our reviewed articles to confidence in some aspect of a relationship [4]. It was first introduced in computer science as a measure of an agent in 1994 [13]. Trust can be classified into three types [14,15]. The first type refers to truth and belief, which means trusting the behavior or quality of another object. The second type implies that a declaration is correct. Finally, “trust as commerce” indicates the ability or intention of a buyer to purchase in the future. In this paper, we adopt the first type; that is to say, trust is interpreted as believing in the behavior and quality of an entity.

The literature offers plentiful approaches to trust estimation in MASs [2,15,16,17,18,19]. In general, trust in an agent should be based on evidence consisting of positive and negative experiences with it [15,20,21]. Jøsang and Ismail capture trust in MASs with beta probability density functions that rely on the numbers of negative and positive interactions [2]; the probability expectation is used to express the trust value. The study in [22] systematically examines when it is beneficial to combine direct trust and indirect reputation. TRAVOS [9] models trustworthiness probabilistically through a beta distribution computed from the outcomes of all the interactions a truster has observed, and it uses the probability of successful interaction between two agents to capture the value of trustworthiness. Fortino et al. studied trust and reputation in the Internet of Things [23]. In addition, some references evaluate trustworthiness by taking advantage of recent performance: the models in [12,24] derive trust from both recent performance and historical evaluations. However, trust evaluation might encounter uncertainty arising from cognitive impairment, inefficient evidence, randomness, and incomplete information [25,26]. Wang and Singh use evidence theory to represent uncertainty for trust estimation [27,28,29]. Zuo and Liu [30] adopt a three-value opinion (belief, disbelief, uncertainty) in their opinion-based model for trust estimation. However, these models are questionable for trust estimation in a MAS with deceptive agents involved. Moreover, most of them define uncertainty through medium evaluations, whereas, as explained, uncertainty stems from cognitive impairment, inefficient evidence, randomness, and incomplete information [25,26]. Therefore, a more exhaustive exploration of uncertainty needs to be carried out.

It is natural and reasonable to query for third-party information whenever direct experience is insufficient. However, it is risky to make a final decision relying entirely and directly on the gathered information in a distributed MAS, especially with deceptive agents involved. Thus, we need to ensure that the accumulated information is reliable before it is used. The concept of confidence is therefore adopted to capture the reliability of gathered information [9,12,15,20,24,28,31]. We define confidence as the probability that the trust is applicable, supported by evidence, consistency, credibility, and certainty. However, most of these models take only some of these aspects into consideration, each as a separate trust model in MASs.

First, some trust models assume that most third-party agents are reliable [2]. Thus, opinions that are consistent with the majority can be regarded as reliable [2,32]. Others identify unreliable agents by comparing their reports with direct experience [9,28]. In this paper, we define this property as consistency. Consistency expresses the differences or similarities between a piece of evidence and other evidence. It is helpful especially for agents whose trust and certainty are unknown. However, most of our reviewed articles did not pay much attention to consistency for trust estimation in MASs.

Credibility is widely applied in distributed MASs to capture confidence. In most circumstances, credibility can be interpreted as a weight indicating how reliable the agent is [12]. It is updated according to the provided information and the interactive feedback [12,24,28,29]. However, in [12,28], the credibility of third-party agents decreases gradually; that is to say, the truster will eventually no longer believe agents with which it has had sufficient interactions. In [29], the Dempster-Shafer theory of evidence is used to reach trust, and the credibility of third-party agents increases if the feedback is a success and decreases otherwise. In dynamic MASs, however, information that turns out to be false might still have come from a reliable witness, because the provider’s performance may have changed. Thus, updating agents’ credibility through current interactions alone can be questionable and is not enough.

Certainty is “a measure of confidence that an agent may place in the trust information” [15]. Measuring certainty can pick out reliable information efficiently [33]. As previously stated, trust always involves uncertainty caused by a lack of certainty, a lack of correct and complete information, or randomness [34]. Thus, the degree of certainty with which information is provided may affect the confidence placed in that agent. For instance, agent A always trusts agent B, yet agent B is uncertain about its information regarding some event; agent A would then hesitate over the information collected from agent B. In [31], the trust model uses fuzzy sets and assigns certainty to each evaluation. In [15], the model analyzes certainty to capture confidence. Most trust models assume that certainty and trust are independent. However, we consider trust as the rate of successful interaction. Thus, uncertainty comes from the conflict between successful and failed interactions, and certainty should depend on evidence.

In summary, the influential factors for trust estimation in MASs and their explanations are listed in Table 1. From our perspective, uncertainty, evidence consistency, witness credibility, certainty, and the motivation of witnesses are the influential factors for trust estimation in MASs. In what follows, we review some recent trust estimation models that are related to confidence estimation.

FIRE is an integrated trust and reputation model for open MASs built on interaction trust (IT), role-based trust (RT), witness trust (WT), and certified trust (CT) [35]. IT is direct trust calculated from direct experience; RT, WT, and CT concern witness experience. FIRE performs well. However, the model does not stress uncertainty and assumes that all agents provide accurate information. In addition, many static parameters must be set in the model, which limits its application [24,36]. SecuredTrust is a dynamic trust computation model in MASs [32]. The model addresses many factors, including differences and similarities. However, its feedback credibility, generated from similarity, is used to capture the degree of accuracy of the feedback information, which is the same as consistency; certainty and personal credibility are not well stressed. At the same time, an incentive mechanism should be used to encourage agents to be reliable. The actor–critic model selects reliable witnesses by reinforcement learning [37]. It dynamically selects credible witnesses as well as the parameters associated with the direct and indirect trust evidence based on interactive feedback. Nevertheless, when only one known witness is available for the indirect reputation, bootstrapping errors occur [38]. What is more, a sound trust estimation model for a dynamic system needs to capture uncertainty: by analyzing uncertainty, we can obtain a degree of certainty and pick out reliable agents efficiently. An adaptive trust model for mobile agent systems has also been established. It combines direct trust and indirect reputation, assesses the credibility of witnesses, and reasonably considers uncertainty when generating direct trust. However, witnesses’ certainty is also necessary for a trust model, especially a dynamic one.

Despite the advantages presented by the models noted above, these trust models are still imperfect and can be extended. This paper proposes an evidence theory-based trust model. It first improves the inappropriate witness credibility updating approach of previous articles. When capturing direct trust, the model manages uncertainty from the perspective of cognitive impairment, inefficient evidence, randomness, and incomplete information [25,26]. What is more, consistency, credibility, and certainty are combined to achieve confidence. An encouragement mechanism is used to motivate agents to provide reliable information.

3. Dempster-Shafer Theory of Evidence

The Dempster-Shafer theory of evidence, proposed by Dempster and Shafer to handle uncertainty [39,40], has a wide range of applications in risk assessment, medical diagnosis, and target recognition [41,42,43]. It is a typical method of uncertain information fusion because of its flexibility in uncertainty modeling [44]. Some preliminaries are introduced below.

Definition 1. The frame of discernment and BPAs.

Evidence theory is defined on the frame of discernment, denoted by $\Theta = \{\theta_1, \theta_2, \ldots, \theta_n\}$, which consists of $n$ mutually exclusive and collectively exhaustive elements; the set of all subsets of $\Theta$ is the power set, which is represented by $2^{\Theta}$. Mathematically, a basic probability assignment (BPA, also known as a mass function) is a mapping $m: 2^{\Theta} \to [0, 1]$ with the following conditions satisfied:

$$m(\emptyset) = 0, \qquad \sum_{A \subseteq \Theta} m(A) = 1, \tag{1}$$

where $\emptyset$ is the empty set. A subset $A \subseteq \Theta$ such that $m(A) > 0$ is called a focal element, and the set of all focal elements is called the core. The other two functions (belief function and plausibility function) are defined as follows.

Definition 2. Belief function.

The belief function $Bel$ of a BPA $m$ is defined as follows:

$$Bel(A) = \sum_{B \subseteq A} m(B). \tag{2}$$

Definition 3. Plausibility function.

The plausibility function $Pl$ of a BPA $m$ is defined as follows:

$$Pl(A) = \sum_{B \cap A \neq \emptyset} m(B). \tag{3}$$
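The belief and plausibility functions of Definitions 2 and 3 can be illustrated with a short sketch. It assumes the standard forms $Bel(A) = \sum_{B \subseteq A} m(B)$ and $Pl(A) = \sum_{B \cap A \neq \emptyset} m(B)$; the dictionary representation of a BPA, the function names, and the numeric masses are hypothetical choices made for illustration and are not taken from the paper.

```python
from typing import Dict, FrozenSet

# A BPA is sketched as a mapping from focal sets to masses (hypothetical representation).
BPA = Dict[FrozenSet[str], float]


def is_valid_bpa(m: BPA, tol: float = 1e-9) -> bool:
    """Check the two BPA conditions: m(empty set) = 0 and the masses sum to 1."""
    no_mass_on_empty = m.get(frozenset(), 0.0) == 0.0
    return no_mass_on_empty and abs(sum(m.values()) - 1.0) < tol


def bel(m: BPA, a: FrozenSet[str]) -> float:
    """Belief of A: total mass of the non-empty focal sets contained in A."""
    return sum(mass for b, mass in m.items() if b and b <= a)


def pl(m: BPA, a: FrozenSet[str]) -> float:
    """Plausibility of A: total mass of the focal sets that intersect A."""
    return sum(mass for b, mass in m.items() if b & a)


# Illustrative masses only; they are not taken from the paper.
cat, dog = frozenset({"cat"}), frozenset({"dog"})
m = {cat: 0.6, dog: 0.1, cat | dog: 0.3}
print(is_valid_bpa(m), bel(m, cat), pl(m, cat))
```

For any set, the belief never exceeds the plausibility; the gap between the two is the mass that has not been committed either for or against the set.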

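In the Dempster-Shafer theory of evidence, Dempster’s combination rule can be used to combine multiple pieces of evidence to reach the final evaluation. Given two BPAs $m_1$ and $m_2$, Dempster’s combination rule, denoted by $m = m_1 \oplus m_2$, fuses them as follows.

Definition 4. Dempster’s combination rule.

$$m(A) = \begin{cases} \dfrac{1}{1 - K} \sum_{B \cap C = A} m_1(B)\, m_2(C), & A \neq \emptyset, \\ 0, & A = \emptyset, \end{cases} \tag{4}$$

with $K = \sum_{B \cap C = \emptyset} m_1(B)\, m_2(C)$, where $A$, $B$, and $C$ are elements of $2^{\Theta}$, the power set of the frame of discernment $\Theta$. The normalization constant $K$ shows the degree of conflict between the two BPAs. If $K = 1$, the two pieces of evidence totally conflict, and Dempster’s combination rule is not applicable. If $K = 0$, the two pieces of evidence are non-conflicting.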
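As a minimal sketch of Definition 4, the following code combines two BPAs with the standard form of Dempster’s rule: products of masses are accumulated on the intersections of focal sets, the mass falling on the empty set is the conflict $K$, and the rest is normalized by $1 - K$. The dictionary representation, the labels `T` and `N`, and the numeric masses are assumptions made for illustration.

```python
from itertools import product
from typing import Dict, FrozenSet

BPA = Dict[FrozenSet[str], float]


def dempster_combine(m1: BPA, m2: BPA) -> BPA:
    """Fuse two BPAs with Dempster's rule; raise if they totally conflict."""
    combined: BPA = {}
    conflict = 0.0  # K: mass that would land on the empty set
    for (b, mass_b), (c, mass_c) in product(m1.items(), m2.items()):
        a = b & c
        if a:
            combined[a] = combined.get(a, 0.0) + mass_b * mass_c
        else:
            conflict += mass_b * mass_c
    if abs(1.0 - conflict) < 1e-12:
        raise ValueError("total conflict (K = 1): Dempster's rule is not applicable")
    return {a: mass / (1.0 - conflict) for a, mass in combined.items()}


# Illustrative masses only; they are not taken from the paper.
T, N = frozenset({"T"}), frozenset({"N"})
m1 = {T: 0.6, N: 0.1, T | N: 0.3}
m2 = {T: 0.5, N: 0.2, T | N: 0.3}
fused = dempster_combine(m1, m2)
print(fused)
```

With these inputs the conflict is $K = 0.6 \cdot 0.2 + 0.1 \cdot 0.5 = 0.17$, so every surviving product is divided by $0.83$ and the fused masses again sum to 1.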

Definition 5. Evidence distance.

With the wide application of evidence theory, the study of the distance between pieces of evidence has attracted increasing interest. A distance measure can represent the dissimilarity between two BPAs, and several definitions of distance in evidence theory have been proposed, such as Jousselme’s distance [45], Wen’s cosine similarity [46], and Smets’ transferable belief model (TBM) global distance measure [47]. Jousselme’s distance [45] is one of the most rational and popular definitions; it is based on Cuzzolin’s geometric interpretation of evidence theory [48], in which the power set of the frame of discernment spans a vector space and a BPA is treated as a particular vector in that space [45]. Jousselme’s distance is defined as

$$d_{BPA}(m_1, m_2) = \sqrt{\frac{1}{2}\,(\vec{m}_1 - \vec{m}_2)^{T} D\, (\vec{m}_1 - \vec{m}_2)}, \tag{5}$$

with $m_1$, $m_2$ being two BPAs under the frame of discernment $\Theta$ and $D$ being a $2^n \times 2^n$ matrix. The element of $D$ is defined as $D(A, B) = \frac{|A \cap B|}{|A \cup B|}$, $A, B \in 2^{\Theta}$, and $|\cdot|$ represents cardinality. Jousselme’s distance is an efficient tool to quantify the dissimilarity of two BPAs.
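The following sketch computes Jousselme’s distance in its standard form, $d(m_1, m_2) = \sqrt{\tfrac{1}{2}(\vec{m}_1 - \vec{m}_2)^{T} D (\vec{m}_1 - \vec{m}_2)}$ with $D(A, B) = |A \cap B| / |A \cup B|$. Only the sets that carry mass in at least one of the two BPAs are enumerated, since all other coordinates of the difference vector are zero. The representation and the example BPAs are hypothetical.

```python
from math import sqrt
from typing import Dict, FrozenSet

BPA = Dict[FrozenSet[str], float]


def jousselme_distance(m1: BPA, m2: BPA) -> float:
    """Jousselme's distance between two BPAs defined on the same frame."""
    # Sets with zero mass in both BPAs contribute nothing, so they are skipped.
    sets = [a for a in set(m1) | set(m2) if a]
    diff = [m1.get(a, 0.0) - m2.get(a, 0.0) for a in sets]
    quad = 0.0
    for i, a in enumerate(sets):
        for j, b in enumerate(sets):
            jaccard = len(a & b) / len(a | b)  # element D(A, B)
            quad += diff[i] * diff[j] * jaccard
    # max() guards against tiny negative values caused by rounding.
    return sqrt(max(0.0, 0.5 * quad))


# Illustrative BPAs only; they are not taken from the paper.
cat, dog = frozenset({"cat"}), frozenset({"dog"})
sure_cat = {cat: 1.0}
sure_dog = {dog: 1.0}
unknown = {cat | dog: 1.0}
print(jousselme_distance(sure_cat, sure_dog))
print(jousselme_distance(sure_cat, unknown))
```

Two categorical but opposite opinions are at the maximum distance, whereas a categorical opinion and total ignorance are closer, because the sets {cat} and {cat, dog} overlap.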

Definition 6. Entropy of Dempster-Shafer theory.

The concept of entropy comes from physics, where it is a measure of uncertainty and disorder [49,50]. The Shannon entropy has been widely accepted for uncertainty measurement (or information measurement) in information theory [51]. However, the uncertainty estimation of BPAs in the Dempster-Shafer theory of evidence is still not settled. Since the first definition of entropy for a BPA in evidence theory was given by Höhle in 1982 [52], many definitions of entropy for the Dempster-Shafer theory have been proposed, for instance, by Smets [53], Yager [54], Nguyen [55], Dubois and Prade [56], Deng [57], and so on. However, these definitions are still imperfect. For example, Deng entropy has some limitations when the propositions intersect. Thus, several methodologies have been applied to modify Deng entropy [58,59]. Recently, Jiroušek and Shenoy proposed a new definition, which satisfies five of the six listed properties of entropy [60]. Whether there exists a definition that satisfies all six properties is still an open issue, but the entropy proposed in [60] is acceptable and could be regarded as near-perfect. As a result, we use the definition of entropy of the Dempster-Shafer theory in [60] for uncertainty estimation in this paper. For the frame of discernment $\Theta$, where $A \in 2^{\Theta}$ is a focal element, the entropy of the BPA $m$ is defined as follows:

$$H(m) = \sum_{x \in \Theta} Pl\_P_m(x) \log_2 \frac{1}{Pl\_P_m(x)} + \sum_{A \in 2^{\Theta}} m(A) \log_2 |A|, \tag{6}$$

where the first part is employed to measure conflict [60,61], and $Pl\_P_m$ is defined as the plausibility transform [60,62], where

$$Pl\_P_m(x) = \frac{Pl(\{x\})}{\sum_{y \in \Theta} Pl(\{y\})}. \tag{7}$$

The second part in Equation (6) can be regarded as the non-specificity of the BPA $m$ [56]. That is to say, the uncertainty of the Dempster-Shafer theory of evidence can be captured from two aspects, namely the conflict between exclusive elements in the set $\Theta$ and the non-specificity uncertainty. Both components are on the scale $[0, \log_2 |\Theta|]$; thus, the maximum entropy of a BPA is $2 \log_2 |\Theta|$.
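A minimal sketch of this entropy, assuming the Jiroušek and Shenoy form from [60] as commonly stated: the Shannon entropy of the plausibility transform (the conflict part) plus the mass-weighted $\log_2$ of the focal-set sizes (the non-specificity part). The function names, the dictionary representation, and the example BPAs are hypothetical.

```python
from math import log2
from typing import Dict, FrozenSet

BPA = Dict[FrozenSet[str], float]


def plausibility_transform(m: BPA, frame: FrozenSet[str]) -> Dict[str, float]:
    """Normalize the plausibilities of the singletons into a probability function."""
    pl = {x: sum(mass for a, mass in m.items() if x in a) for x in frame}
    total = sum(pl.values())
    return {x: value / total for x, value in pl.items()}


def ds_entropy(m: BPA, frame: FrozenSet[str]) -> float:
    """Conflict part plus non-specificity part of the entropy of a BPA."""
    probs = plausibility_transform(m, frame)
    conflict = sum(p * log2(1.0 / p) for p in probs.values() if p > 0.0)
    non_specificity = sum(mass * log2(len(a)) for a, mass in m.items() if a)
    return conflict + non_specificity


# Illustrative BPAs only; they are not taken from the paper.
frame = frozenset({"cat", "dog"})
certain = {frozenset({"cat"}): 1.0}   # no conflict, no non-specificity
vacuous = {frame: 1.0}                # total ignorance
print(ds_entropy(certain, frame), ds_entropy(vacuous, frame))
```

On a two-element frame the vacuous BPA reaches the upper bound $2 \log_2 2 = 2$, while a categorical BPA has entropy 0.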

Example 1. An example of the Dempster-Shafer theory of evidence is given as follows. Suppose an animal stands far away from us, perhaps a cat or a dog. Then the frame of discernment is presented as , and three passers-by show their opinions. Their opinions are shown as

- 1.

, ,

- 2.

, ,

- 3.

, ,

In terms of evidence distance, we have the following calculation process:

. Similarly, ,

. In a similar way, and

None of the three passers-by is one hundred percent sure what the animal is. Nevertheless, we may study their respective degrees of uncertainty. Let us take the first witness as an example.

. In the same manner,

Thus, H() In the same way, H( and H( As we can see, the testimony given by the third passer-by is the most vague and uncertain.

4. Proposed Trust Model

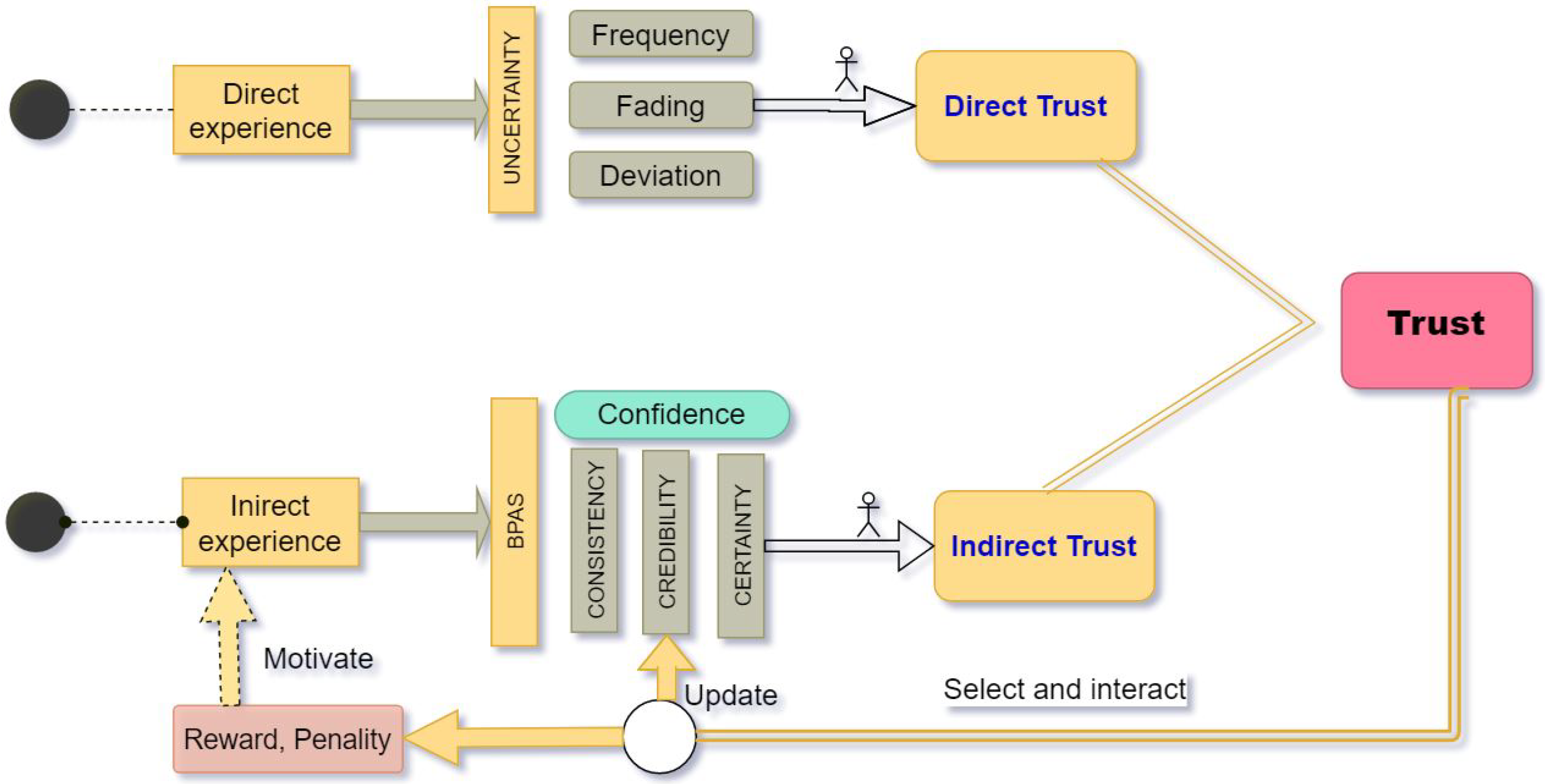

This section presents the trust model for trust estimation in MASs, where agents’ behaviors might differ over time. The proposed method relies on direct trust learned from direct experience and on indirect reputation obtained by integrating the indirect experience provided by third-party agents (witnesses). We study the confidence of witnesses and then combine direct trust and indirect reputation. In more detail, the direct satisfaction statement based on interactive feedback is illustrated first, with recent interactions made more prominent by assigning them higher weights; in this way, the proposed method suits dynamic trust estimation. The uncertainty that stems from randomness, incomplete information, and inefficient interactions is then addressed, and the Dempster-Shafer theory of evidence is employed to represent direct trust. We then study the confidence of individual pieces of evidence to determine indirect reputation. Subsequently, direct trust and indirect reputation are combined to reach the resource provider’s trustworthiness, and the agent with the highest trust value is selected for interaction. After the interaction, the credibility of witnesses is updated using the interactive feedback and the average evaluation. Finally, credits are reassigned to witnesses, with which they are able to purchase information for trustee evaluations. In this way, information providers are motivated to present accurate information. The detailed process is shown in Figure 1, and some related parameters are listed in Table 2.

4.1. Direct Trust

Direct trust is a performance prediction for the next interaction of the selected agent based on previous interaction feedback. The obtained interactive feedback could be binary, i.e., a success or a failure, or any real number in the range

indicating that the received service is satisfying. This real number can also be interpreted as the trustworthiness of the information or service provider. For the sake of clarity, the service requester is represented by service client agent

, the service provider is modeled as service provider agent

, and those who present information to help evaluate the performance are represented as witness agent

. As is shown in

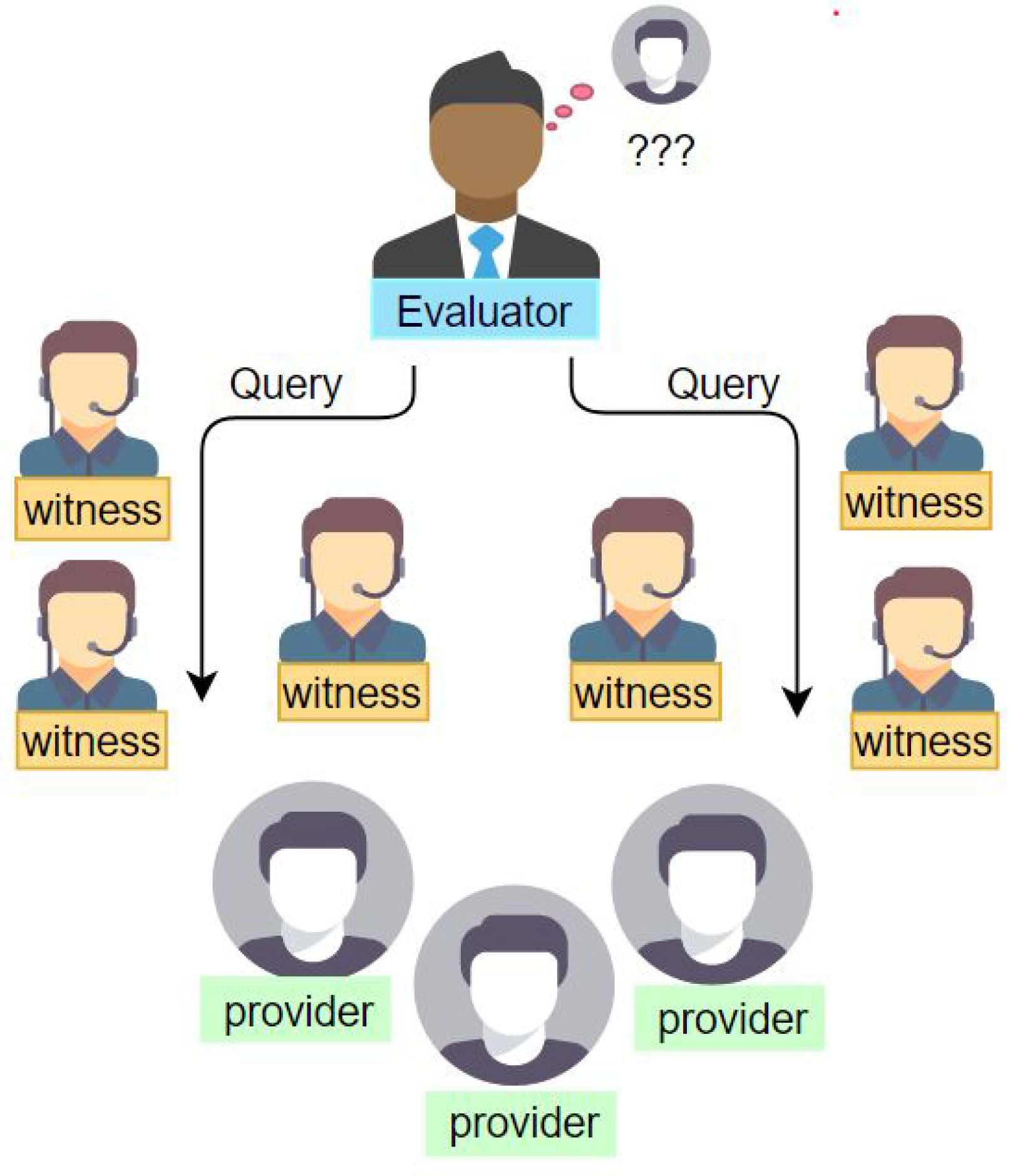

Figure 2, the client agent (evaluator)

estimates the trustworthiness of the resource providers

according to its direct experience and the indirect reputation provided by the witness

.

After interactions, each service client updates the corresponding satisfaction degree for each service provider according to its current degree of compensation and the feedback outcome. Previous studies average all the evaluations to obtain the final degree of satisfaction. In [27], an evidence theory-based trust model, two parameters are employed to classify evaluations into trust, uncertainty, and distrust, and medium evaluations are treated as uncertainty. This can work, but we argue that recent interactions in dynamic MASs should significantly affect the final assessment, and that many causes can result in uncertainty, including conflicting evaluations as well as medium ones. As in [2,9], assigning higher weights to the most recent interactions seems reasonable. From our perspective, uncertainty caused by randomness, incomplete information, and the environment is inevitable and should be taken into account in dynamic MASs. Moreover, clients cannot be expected to have sufficient interactions with one fixed agent at all times. Therefore, uncertainty caused by the number and frequency of interactions and by the deviation between current trust and performance is used to generate direct trust.

Let

T mean that the service client agent considers the service provider agent to be trustworthy. Accordingly,

indicates that the service provider agent is considered untrustworthy. Thus, the frame of discernment is represented by

. When

needs to appraise the performance of

,

and

are employed to represent the satisfaction before the

tth interaction and of the

tth interaction, respectively. The satisfaction is updated as follows:

As noted above,

indicates the current interactive feedback, which could be any number in the range of

, namely,

In Equation (8), it is challenging to decide an appropriate value of the weight. In [2], a forgetting factor is adopted; however, that assignment is tailored to the beta reputation system. In [24], the weight is related to the number and frequency of interactions. Das et al. hold that the weight should depend on the accumulated deviation [32]. From our perspective, the weight should mainly be determined by the current performance deviation. That is to say, we pay more attention to an agent’s previous performance if it acts stably. If an agent frequently behaves differently from its actual character in order to hide its performance, the latest evaluation should play a more important role in the final estimation. Usually, the weight is close to 0, for instance,

, indicating that most agents consistently react, which is rational and intuitive in practical life. In this paper, we define

as follows:

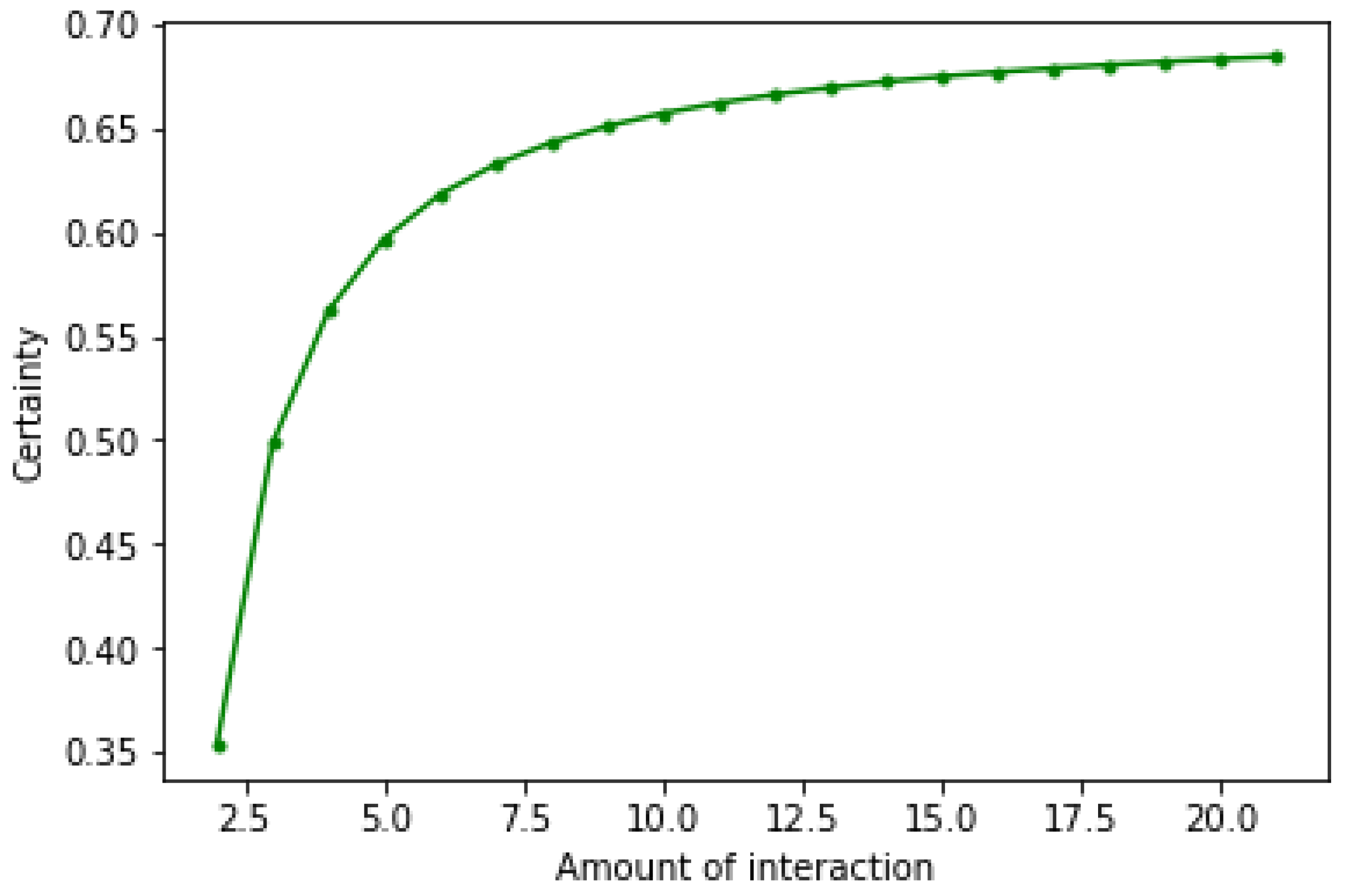

Much uncertainty is involved when generating the evidence due to incomplete information, the interactive frequency, and randomness. A frequency factor

is defined to show the uncertainty of

direct experience in the last period, and it is defined as

where

shows the amount of interaction between

and

in the last period considered. The value of

is influenced by the interaction type and the willingness to trust others; it is usually close to 1. As displayed in Equation (11), the uncertainty caused by the amount of interaction, incompleteness, and randomness is described, and it decreases to 0.2 when two agents have at least eight interactions. As a result, the generated BPAs can be represented as follows:

Then , the direct experience can also be rewritten as .

4.2. Indirect Reputation

In the last subsection, we discussed how to measure direct trust from interactive feedback. The inevitable uncertainty caused by low interactive frequency, randomness, or incomplete information is addressed during trust estimation. In this subsection, we focus on indirect reputation management. In some studies, agents use only direct trust if they have direct experience and request indirect reputation only otherwise. From our perspective, we have to make full use of both direct trust and indirect reputation for trust estimation, especially in dynamic and distributed MASs. However, it is challenging to rely on the received information directly, for the following reasons:

Some agents might be dishonest;

A group of agents can conspire to be deceptive;

Some agents might have incorrect or insufficient information;

Agents may be uncertain about their provided information;

Agents might be used for deception.

Accordingly, the trust evaluator needs to have confidence in each piece of the collected information. We, therefore, determine the indirect reputation from the following aspects.

The estimated trust from the witness’ experience: This aspect could be modeled with the same method as direct trust. The framework of the Dempster-Shafer theory of evidence is adopted to capture its direct trust from the perspective of the information provider (witness).

Evidence consistency: As explained, both witnesses and service providers might present misleading information. For instance, the client may trust a witness, yet that witness may not have sufficient testimony about the service provider, or may present false information deliberately because of a conflict of interest. Under those circumstances, the quality of the received information matters greatly. The conflict is used to identify the differences between pieces of evidence, and, conversely, the similarities between received pieces of evidence can be used to judge the quality of the provided information.

The credibility of witnesses: This factor is measured by a real number in the range $[0, 1]$. We initialize it to a fixed number indicating how much the witness can be trusted. Subsequently, it is updated interaction by interaction using the interactive feedback. A credibility close to 1 shows that the witness is reliable. Otherwise, the witness is of low trustworthiness, and interaction should be avoided. In this way, a group of agents no longer has the opportunity to conspire to deceive.

Certainty of a witness: This factor plays a large role in interaction-based trust estimation among MASs. For instance, agent A trusts agent B completely. However, it is questionable to trust agent B if B is uncertain of its evidence to agent C. In this paper, we believe that certainty is dependent on evidence, and we refine certainty from the received evidence from the entropy perspective. We assume that trusted, witness-provided, good-quality, high-certainty evidence makes a greater contribution to trust estimation.

We use

to present the direct trust of the service provider agent

from the perspective of the witness

. As asserted in the previous part, agents should have rational confidence in the received information. In our opinion, the usefulness of evidence varies with its confidence: low-confidence evidence has a smaller effect on the final decision, whereas evidence with high confidence plays a more critical role in the final decision-making. We assume that the confidence of the received information

is represented by

, then the received information is revised by the degree of confidence as follows:

where

. Here,

shows the trust evaluation of the agent

from the service client agent

’ perspective according to the information provided by the witness agent

. As defined, the discounted parts are interpreted as uncertainty. This is logical: if the provided information is not convincing, more of it is assigned to uncertainty, and more information is then required to eliminate that uncertainty. After modification, Dempster’s combination rule is used to merge all the evidence.
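The revision step described above, in which the discounted part of a witness report is reinterpreted as uncertainty, resembles classical Shafer discounting. The sketch below assumes that form: every mass is scaled by the confidence, and the remainder is moved to the whole frame. Whether the paper’s own equation is term-by-term identical is an assumption, and the labels `T` and `notT`, the function name, and the numeric values are hypothetical.

```python
from typing import Dict, FrozenSet

BPA = Dict[FrozenSet[str], float]


def discount(m: BPA, confidence: float, frame: FrozenSet[str]) -> BPA:
    """Scale a witness BPA by a confidence in [0, 1]; the rest becomes uncertainty."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must lie in [0, 1]")
    revised = {a: confidence * mass for a, mass in m.items() if a != frame}
    # The discounted share (1 - confidence) is reassigned to the whole frame.
    revised[frame] = confidence * m.get(frame, 0.0) + (1.0 - confidence)
    return revised


# Illustrative values only; they are not taken from the paper.
T, NOT_T = frozenset({"T"}), frozenset({"notT"})
FRAME = T | NOT_T
report = {T: 0.8, NOT_T: 0.1, FRAME: 0.1}
revised = discount(report, confidence=0.5, frame=FRAME)
print(revised)
```

With a confidence of 0.5, half of the committed mass is kept and the uncertainty rises from 0.1 to 0.55; a confidence of 1 leaves the report unchanged, and a confidence of 0 turns it into total ignorance.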

However, it is not easy to decide an appropriate value of confidence, especially in a dynamic environment. In [28], weights are assigned to witnesses to represent their credibility. Certainty is also adopted to capture confidence [15]. However, considering these aspects separately is not enough to decide confidence. From our perspective, we have to assess the consistency of the obtained information, as agents may not have had enough interactions. At the same time, even if an agent has interacted with the target many times, the target’s performance might vary. In addition, in the reviewed work, we rarely find that consistency, credibility, and certainty have been integrated to determine confidence. As a result, to provide a more complete trust estimation model, consistency, credibility, and certainty are studied together for confidence estimation in this paper.

4.2.1. Evidence Consistency

In practical life, distance indicates how far two objects are from each other. The distance between two BPAs shows the conflict or difference between them. A shorter distance means that the two BPAs are more likely to support the same assessment of the target (service provider). For trust estimation models in MASs, we adopt the average distance to capture the consistency of a piece of evidence. Assume that the demander received BPAs from

L different witnesses

, each of which is represented by

. As a result, the consistency (the difference) between these pieces of evidence is explored below. First, the average distance of

is represented by

, which is defined as

where

indicates the distance of

and

, and

represents the average distance to the evidence provided by the witness

, which also expresses its conflict or difference in the group of

L pieces of evidence. The distance between two pieces of evidence falls in the range $[0, 1]$ in this paper. Since similarity is the opposite of difference, we can express the similarity by the following operation:

where

represents the consistency towards the service provider

from the perspective of the witness

in the group of

L witnesses.
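The consistency computation can be sketched as follows. The sketch assumes that the average distance of a witness is the mean of its Jousselme distances to the other witnesses and that consistency is one minus that average; the exact normalization used in the paper’s equations may differ. The function names, the labels `T` and `N`, and the three witness reports are hypothetical.

```python
from math import sqrt
from typing import Dict, FrozenSet, List

BPA = Dict[FrozenSet[str], float]


def jousselme_distance(m1: BPA, m2: BPA) -> float:
    """Jousselme's distance between two BPAs (a value in [0, 1])."""
    sets = [a for a in set(m1) | set(m2) if a]
    diff = [m1.get(a, 0.0) - m2.get(a, 0.0) for a in sets]
    quad = sum(
        diff[i] * diff[j] * len(a & b) / len(a | b)
        for i, a in enumerate(sets)
        for j, b in enumerate(sets)
    )
    return sqrt(max(0.0, 0.5 * quad))


def consistency(bpas: List[BPA]) -> List[float]:
    """One score per witness: 1 minus its mean distance to the other witnesses."""
    if len(bpas) < 2:
        raise ValueError("at least two pieces of evidence are needed")
    scores = []
    for i, mi in enumerate(bpas):
        others = [jousselme_distance(mi, mj) for j, mj in enumerate(bpas) if j != i]
        scores.append(1.0 - sum(others) / len(others))
    return scores


# Illustrative reports only: two witnesses roughly agree, the third contradicts them.
T, N = frozenset({"T"}), frozenset({"N"})
w1 = {T: 0.8, T | N: 0.2}
w2 = {T: 0.7, T | N: 0.3}
w3 = {N: 0.9, T | N: 0.1}
scores = consistency([w1, w2, w3])
print(scores)
```

The dissenting third report receives the lowest score, so it would contribute least once consistency is folded into the confidence of each witness.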

4.2.2. Credibility of Individual Witnesses

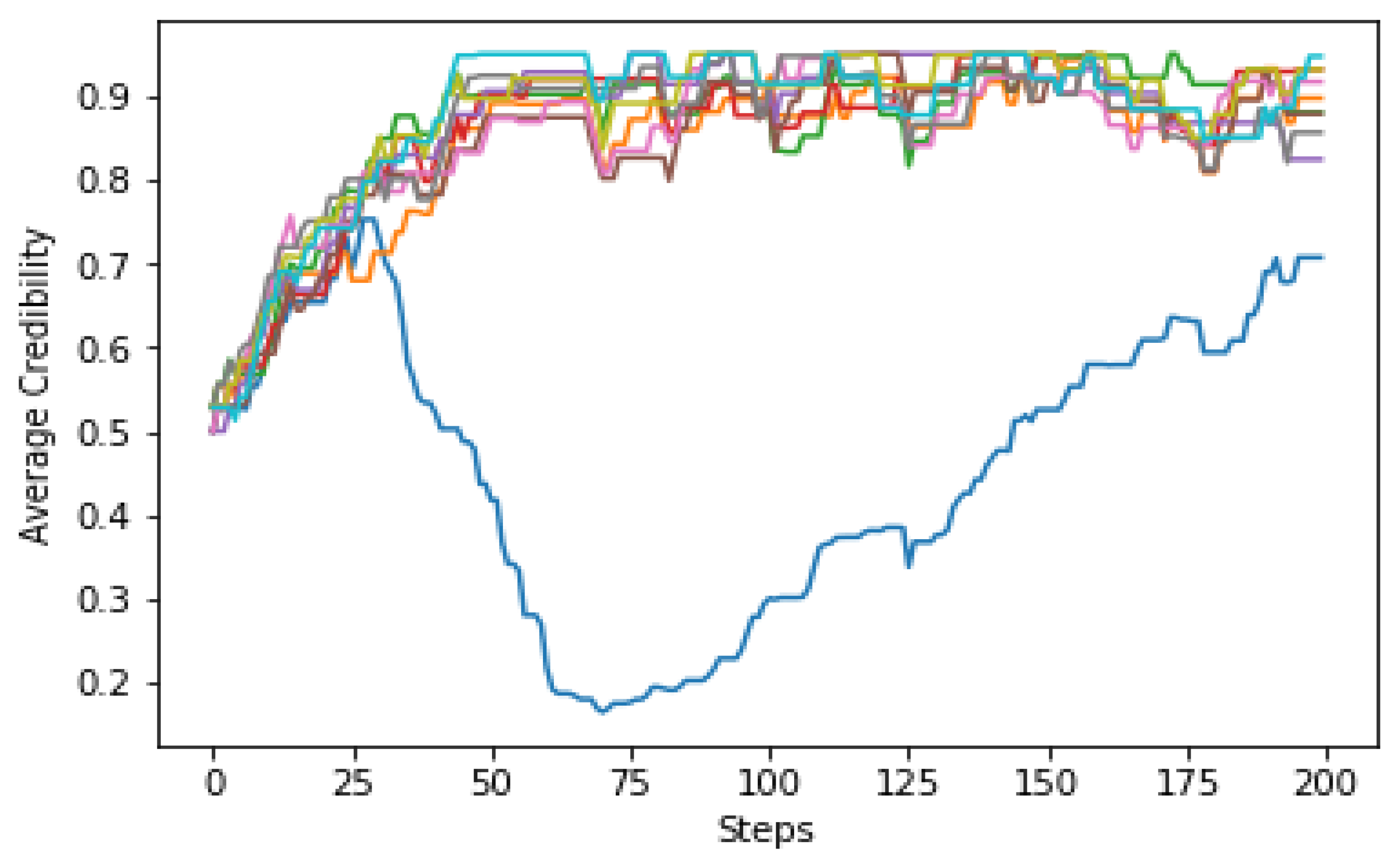

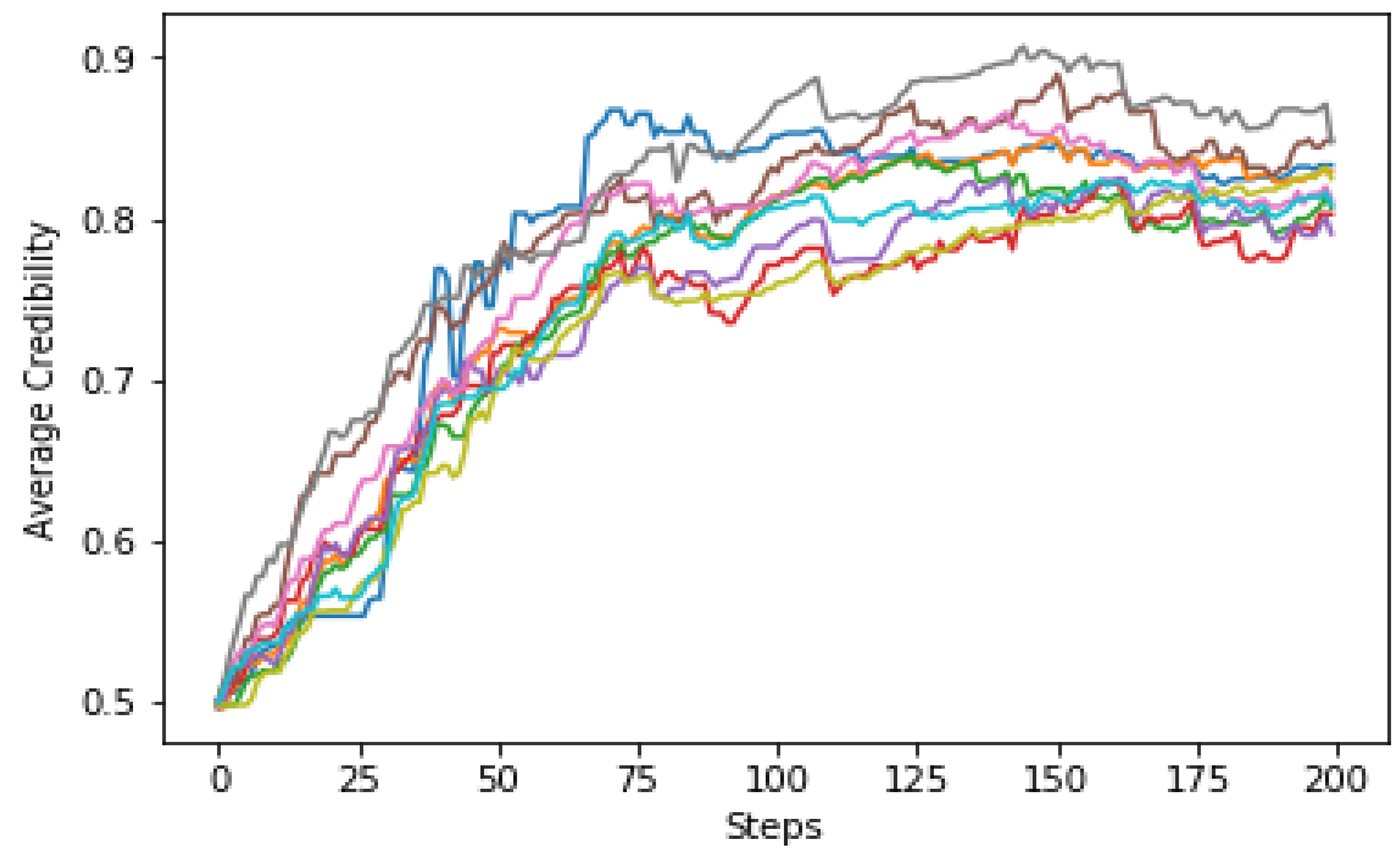

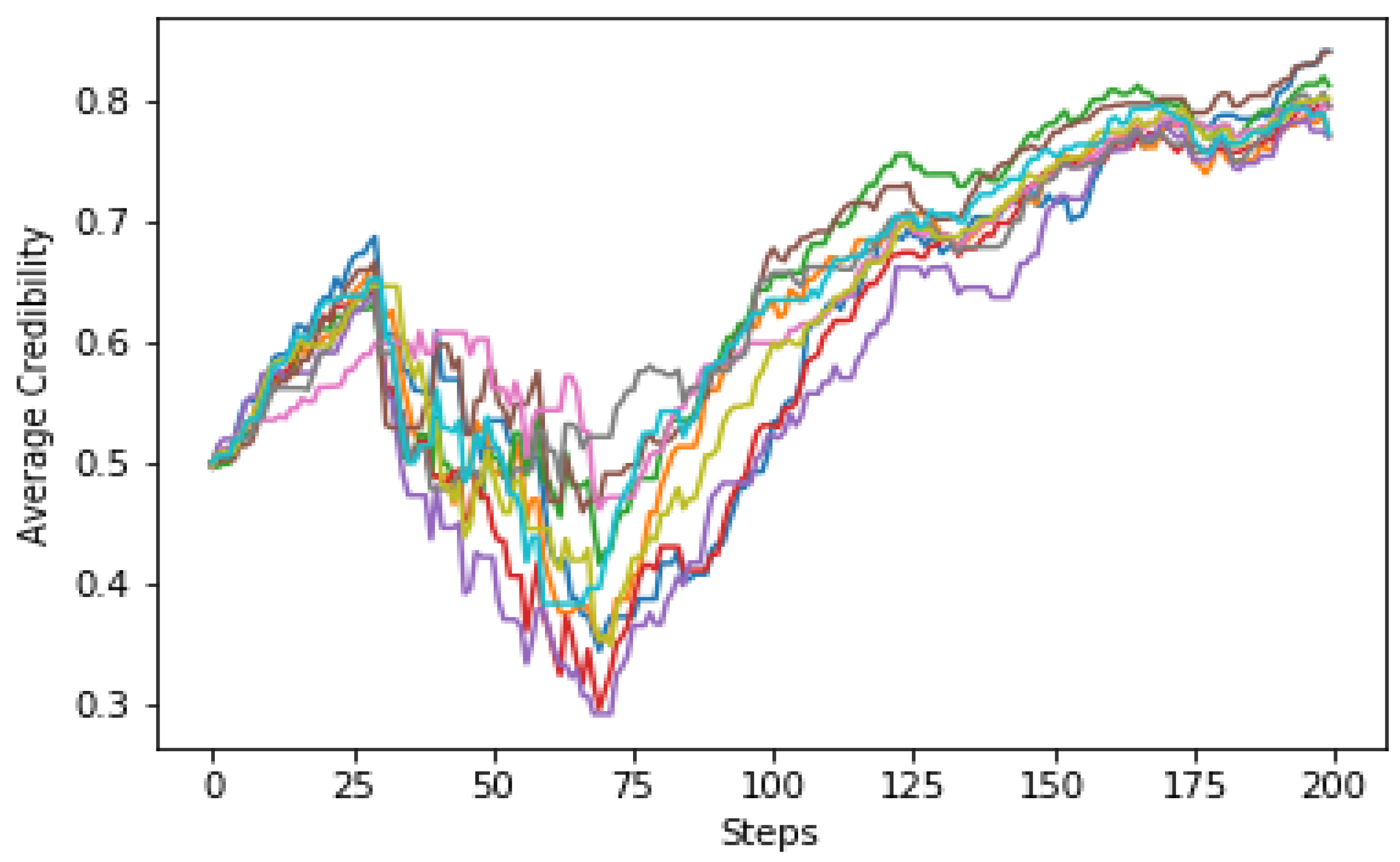

In this subsection, a detailed analysis of the credibility of individual witnesses in MASs is carried out. A group of witnesses provides testimony to the resource client when the client asks them to evaluate the trustworthiness of a resource provider. From the client’s perspective, whether or not to rely on a witness is a key question. Thus, the agent’s credibility is adopted to indicate how reliable the witness is. In this paper, we constrain the witness credibility to the range $[0, 1]$, and the value is initialized to 0.5 if no cooperation has occurred. As interactions proceed, the credibility is updated according to the interactive feedback.

It is necessary to explain how credibility is updated. Among previous trust estimation models based on the Dempster-Shafer theory, the model in [63] only ever decreases a witness's credibility once the witness has been found lying. This model works under the assumption that all agents behave in a constant way. In addition, never forgiving an agent or giving it a second chance seems irrational. In the literature [29], credibility is learned mainly from two aspects, namely, positive feedback and negative feedback. That is to say, all agents are rewarded when positive feedback is received, whereas all agents are punished when negative feedback is received. However, some witnesses may have held the opposite attitude when positive feedback arrives, and the same applies to negative feedback. Thus, the credibility of these witnesses needs to be updated separately. Moreover, it is sometimes questionable to update the value according to the current interactive feedback alone, especially in dynamic systems. Individual reliability has a great influence on indirect reputation. We consider the credibility

of the witness

from the perspective of

when generating indirect reputation. More details about learning from experience to update personal credibility are discussed in Section 4.4.

4.2.3. Model Certainty

The witness’s certainty also counts for trust estimation in MASs. Uncertainty caused by a lack of confidence and of complete information must be taken into account when generating BPAs. Thus, certainty is modeled independently of the evidence (BPAs). From our perspective, certainty is shaped by two factors.

First, certainty decreases as the extent of conflict in the evidence increases (we manage certainty by the ratio of positive and negative observations, namely, trust and distrust). Therefore, evidence certainty decreases as positive and negative interactions become more evenly balanced.

Second, certainty decreases as uncertain information increases. That is to say, evidence certainty increases if the mass assigned to uncertainty decreases.

At the same time, we want the value of certainty to fall in a bounded range. For these reasons, the entropy of the Dempster-Shafer theory of evidence is an ideal tool for uncertainty measurement.

Many definitions of entropy have been proposed for the space of the Dempster-Shafer theory of evidence, for instance, Deng entropy [

57] and Dubois and Prade’s definition [

56]. In this paper, we adopt the entropy definition presented by Radim and Prakash [

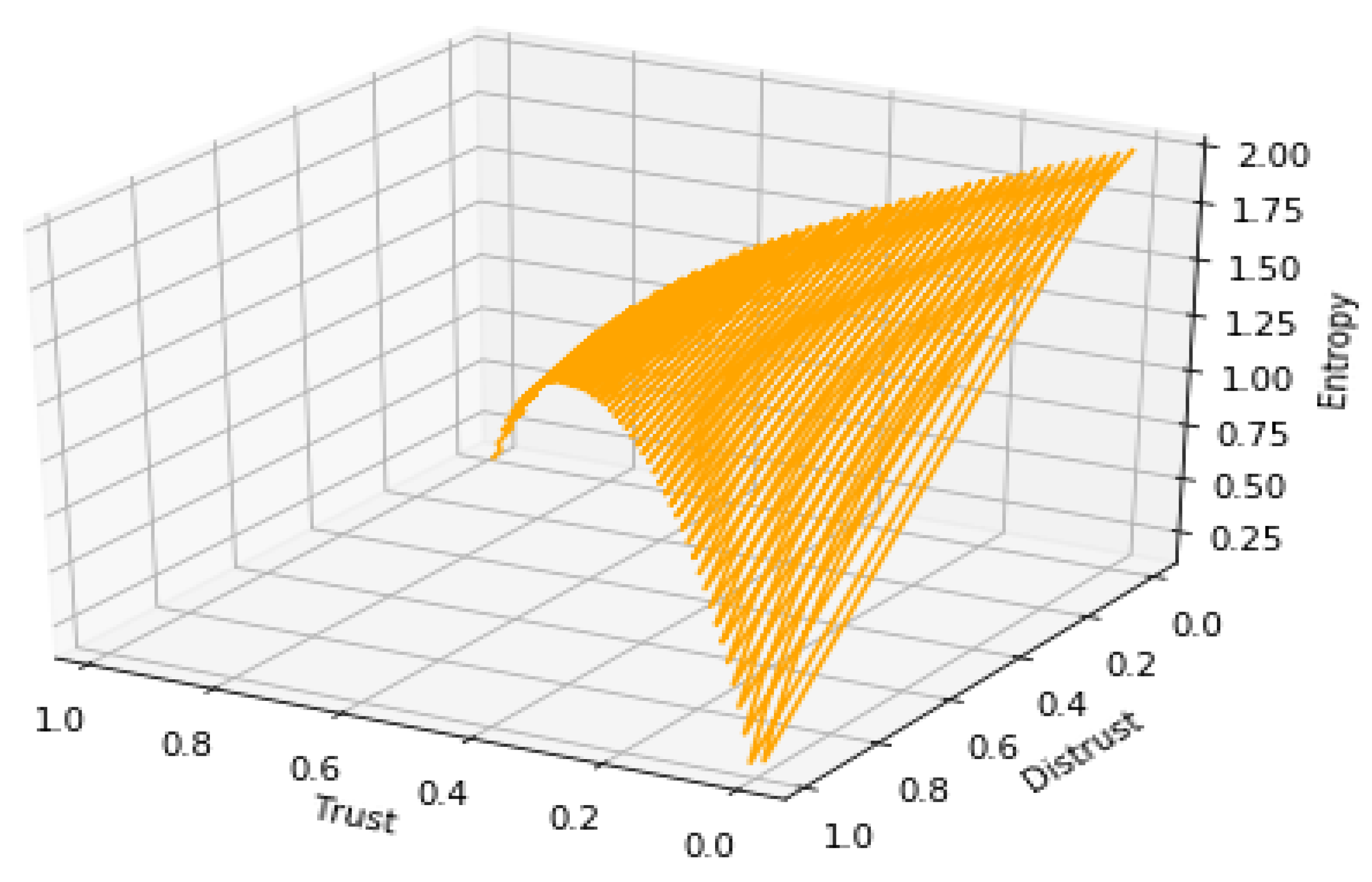

60]. The main reasons are that five of the six listed properties are satisfied, which could be regarded as near-perfect in some perspectives. Moreover, the entropy given by Radim and Prakash is designed from two components. The first component is designed to measure conflicts in the BPAs, and the second is used to measure non-specificity in BPAs, which fits for the main reasons of uncertainty in this paper. As explained, we believe that uncertainty yields from the conflict of positive and negative interaction, and a lack of information could also result in uncertainty. For the frame of discernment defined in this paper, i.e.,

, we have

,

and

, which is under the conditions of

,

, and

. The relation between the entropy [

60] is presented in

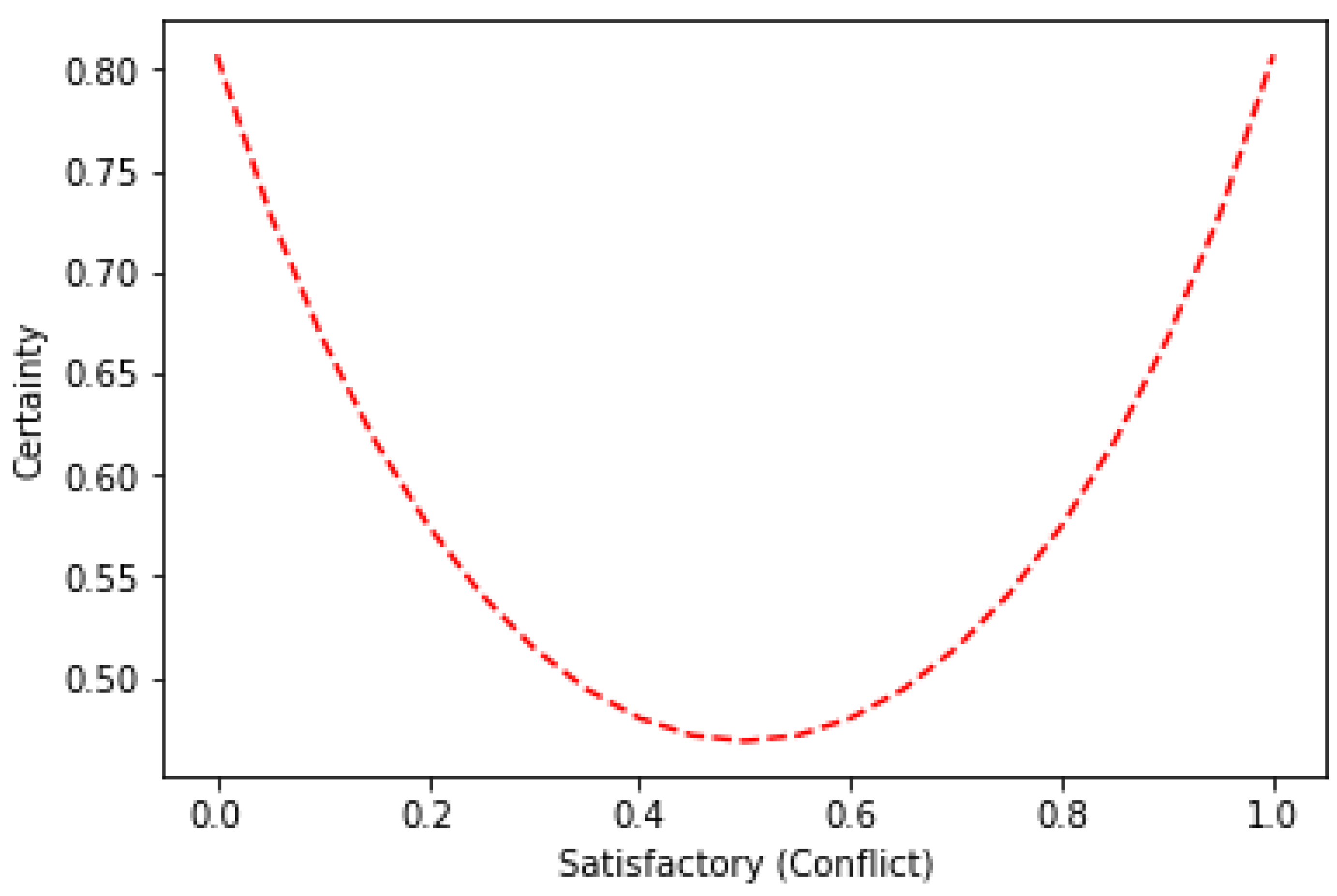

Figure 3.

As is shown, the uncertainty is in the range of

. Thus, we define the certainty of the information

,

For example, if a BPA is given as (1, 0, 0) or (0, 1, 0), then the witness is one hundred percent confident in the evidence. However, the witness is one hundred percent uncertain about its judgment if the evidence is (0, 0, 1).
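A minimal sketch of this certainty measure follows, using the Jiroušek and Shenoy entropy on a two-element frame. The BPA layout (trust, distrust, uncertainty), the function names, and in particular the normalization of certainty as one minus the entropy divided by its maximum of 2 bits are assumptions made for illustration; the paper's exact certainty equation may differ.

```python
import math


def js_entropy(bpa):
    """Jirousek-Shenoy entropy (in bits) of a BPA on a two-element frame.

    bpa is assumed to be (m(T), m(not T), m({T, not T})).
    """
    m_t, m_f, m_u = bpa
    # Plausibility of each singleton, then the normalized plausibility transform.
    pl_t, pl_f = m_t + m_u, m_f + m_u
    total = pl_t + pl_f  # equals 1 + m_u for a valid BPA, so never zero
    conflict = 0.0
    for pl in (pl_t, pl_f):
        p = pl / total
        if p > 0.0:
            conflict -= p * math.log2(p)
    # Non-specificity: sum of m(A) * log2|A|; only the two-element set contributes.
    nonspecificity = m_u * math.log2(2)
    return conflict + nonspecificity


def certainty(bpa):
    """Assumed normalization: 1 - H / 2, since H is at most 2 bits on this frame."""
    return 1.0 - js_entropy(bpa) / 2.0
```

Under these assumptions, the two fully committed BPAs give a certainty of 1, the vacuous BPA (0, 0, 1) gives 0, and a fully conflicting BPA such as (0.5, 0.5, 0) sits halfway between.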

4.2.4. Model Indirect Reputation

In this subsection, we explain how to learn the final indirect reputation. As explained, indirect reputation consists of two aspects, namely, the quality evaluation, which is based on performance, and the confidence of the witness. Three sub-factors determine the value of confidence, i.e., the consistency of the provided information (Equation (15)), the credibility of agents (Section 4.2.2), and the certainty (Equation (16)). These three sub-factors are used to revise the generated evidence and

in Equation (

13). Here, we use

,

, and

to represent the value of confidence, the consistency, the credibility, and the certainty for pretreatment of the

tth interaction, respectively. The detailed definition is given as follows:

where

is the smaller of 0 and the difference of confidence obtained from consistency and credibility. In more detail, the confidence in a piece of evidence provided by a witness is directly proportional to the credibility of the witness and to the quality and certainty of the evidence. In terms of certainty, by this definition, the confidence

of the evidence provided by the witness

decreases with the certainty

if its credibility

and quality

are below the average level, which is calculated by

. Otherwise, the confidence is determined only by the credibility and quality. That is to say, the resource client agent is more confident in good-quality indirect experience provided by credible agents, as presented in Figure 4. Experiences that originate from unreliable agents are discounted because they are no longer reliable. After this modification, agents’ indirect reputations are fused by Dempster’s combination rule, shown in Equation (4).
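The discount-then-fuse step can be sketched as follows. This is an illustration under stated assumptions, not the paper's revision rule: the BPA layout (trust, distrust, uncertainty) is assumed, the confidence value is taken as a given input in [0, 1], and classical Shafer discounting stands in for the revision that the paper defines in Equation (13). Only Dempster's combination rule itself is standard. Function names are hypothetical.

```python
from functools import reduce

VACUOUS = (0.0, 0.0, 1.0)  # total ignorance; neutral element of Dempster's rule


def discount(bpa, confidence):
    """Shafer discounting (a stand-in): scale committed mass, move the rest to uncertainty."""
    m_t, m_f, _ = bpa
    return (confidence * m_t, confidence * m_f, 1.0 - confidence * (m_t + m_f))


def dempster(m1, m2):
    """Dempster's combination rule on a two-element frame."""
    t1, f1, u1 = m1
    t2, f2, u2 = m2
    conflict = t1 * f2 + f1 * t2  # mass assigned to the empty intersection
    if conflict >= 1.0:
        raise ValueError("total conflict: the two BPAs cannot be combined")
    norm = 1.0 - conflict
    return (
        (t1 * t2 + t1 * u2 + u1 * t2) / norm,
        (f1 * f2 + f1 * u2 + u1 * f2) / norm,
        (u1 * u2) / norm,
    )


def fuse_testimonies(bpas, confidences):
    """Discount each witness's BPA by its confidence, then fuse all of them."""
    discounted = [discount(m, c) for m, c in zip(bpas, confidences)]
    return reduce(dempster, discounted, VACUOUS)
```

A witness with confidence 0 contributes the vacuous BPA and therefore has no influence on the fused result, which matches the intent that testimony from unreliable agents is discounted.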

4.3. Model Overall Trust

In this section, we demonstrate how to model the overall value of trust. The overall evaluation of the service provider

from the perspective of the service client

derives from two parts, namely direct trust and indirect reputation. In general, a client trusts itself more than the information provided by third-party agents; at the very least, agents do not deceive themselves. However, a client agent should rely more on others if the system is unstable. Thus, the pretreatment of direct trust and indirect reputation proposed in [64] is adopted; Equation (18) shows the detailed process. In the equation,

indicates the weight of direct trust, and

corresponds to the indirect reputation. The value of

depends entirely on the stability of the MAS; a small

indicates that agents’ performance changes often. Generally,

, we use

to represent the pretreated BPA, then we have,

After the pretreatment, Dempster’s combination rule is applied to combine

one time, as stated in [64]. The client agent thereby obtains the final evaluation of the service provider agent. Subsequently, the resource client selects one of the most reliable service providers to interact with. The feedback outcome is obtained after the interaction and is either a binary result (success or failure) or an evaluation score in the range

. In the following section, we describe how personal credibility is updated.
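The pretreatment and combination of direct trust and indirect reputation can be sketched as below. The weighted-average form of the pretreatment (weight w for direct trust and 1 - w for indirect reputation) is an assumption inferred from the description of the weights; the paper's Equation (18) may differ in detail. The BPA layout (trust, distrust, uncertainty) and all names are hypothetical.

```python
def pretreat(direct, indirect, w):
    """Assumed pretreatment: weighted average of the direct and indirect BPAs."""
    if not 0.0 <= w <= 1.0:
        raise ValueError("w must lie in [0, 1]")
    return tuple(w * d + (1.0 - w) * r for d, r in zip(direct, indirect))


def dempster(m1, m2):
    """Dempster's combination rule on a two-element frame."""
    t1, f1, u1 = m1
    t2, f2, u2 = m2
    norm = 1.0 - (t1 * f2 + f1 * t2)
    if norm <= 0.0:
        raise ValueError("total conflict: the two BPAs cannot be combined")
    return (
        (t1 * t2 + t1 * u2 + u1 * t2) / norm,
        (f1 * f2 + f1 * u2 + u1 * f2) / norm,
        (u1 * u2) / norm,
    )


def overall_trust(direct, indirect, w):
    """Combine the pretreated BPA with itself once (two sources, one combination)."""
    averaged = pretreat(direct, indirect, w)
    return dempster(averaged, averaged)
```

With w close to 1 the result is dominated by the client's own experience, while a small w lets third-party testimony dominate, which suits systems where agents' behavior changes often.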

4.4. Update Credibility

We first have to distinguish the viewpoint of the witness agent

on whether it stands with the service provider agent

before updating the corresponding credibility. The evaluation of

from the perspective of

is represented by (

, where

indicates that

believes that

is reliable and

represents that the resource provider is untrustworthy. The expression

is used to show the uncertainty caused by delay, interactive frequency, and incomplete information. It is essential to highlight that uncertainty does not mean knowing nothing; it can be reassigned to trust or distrust according to Equation (7), which is represented as follows:

A value greater than 0.5 indicates that the witness considers the service provider reliable. Otherwise, the witness implies that the service provider is untrustworthy. Hence, an agent’s credibility needs to be updated according to the difference between the information it provided and the interactive feedback outcome. Generally, the interactive outcome is a success or a failure if a binary evaluation system is employed; otherwise, it is an evaluation score. As emphasized, agents in MASs might act unstably. Thus, incorrect information is probably caused by one of two conditions: the witness is dishonest, or the service provider intentionally presents a different quality of service. As a result, a client needs to investigate the consistency to update the credibility, and the following four cases are defined to conduct the operation.
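The stance check with the 0.5 threshold described above can be sketched as follows. The even split of the uncertain mass between trust and distrust (a pignistic-style transformation) is an assumption about how the uncertainty is reassigned; the BPA layout (trust, distrust, uncertainty) and the function names are hypothetical.

```python
def trust_probability(bpa):
    """Reassign the uncertain mass, assumed here to be split evenly, and return the trust share."""
    m_t, _, m_u = bpa
    return m_t + m_u / 2.0


def supports_provider(bpa):
    """A witness is read as backing the provider when the trust share exceeds 0.5."""
    return trust_probability(bpa) > 0.5
```

For example, a testimony of (0.4, 0.3, 0.3) yields a trust share of 0.55 and is read as support, whereas the vacuous testimony (0, 0, 1) yields exactly 0.5 and is not.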

The expression

is the feedback difference, which is calculated from two aspects. The first aspect is the difference between the witness's testimony and the actual feedback outcome. For instance, the evaluation of service provider

given by witness

is represented by

and the first aspect is represented by

where

indicates the differences between the provided testimony and the feedback. It could also be interpreted as a consequence of relying on the received information.

The second aspect is the conflict of the evidence in the group of

L pieces of evidence. From our perspective, it is necessary to emphasize these differences, especially in distributed MASs with deceptive agents, where agents may deliberately act to hide their actual attributes. Thus, the second aspect is similar to the definition in Equation (15), which can be illustrated as follows:

In summary,

is updated with the equation as follows, where

indicates the weight of the difference between its evaluation and the feedback. In general,

if agents do not change their behaviors frequently.

4.5. Incentives

One of the significant challenges in MASs involving deceptive agents is motivating agents to provide accurate information. In this paper, we draw on a credit mechanism to achieve this. Each agent in the MAS starts with a fixed number of credits, regardless of whether it acts as a resource client, a witness, or a resource provider; the credits are administered and displayed on a blackboard. However, credits only matter when an agent acts as a resource client or a witness. A witness is only allowed to contribute its testimony if the resource client has credits. In turn, a witness may gain or lose credits according to the interactive feedback of the resource client. As a resource client, an agent can no longer solicit testimonies from others once it has run out of credits through its behavior as a witness. Of course, it can regain the ability to request information by presenting convincing information as a witness and accumulating credits.

We suppose that gaining or losing credits is driven by interactive feedback. The specific amount is determined by the agent's current credit and by the confidence value, which in turn depends on credibility and consistency. To explain the credit mechanism in detail, we associate a credit value with each agent. We constrain this value to a bounded range and initialize it to 1. An agent can demand information only if its credit is above a preset threshold. After the interaction, the credits of all witnesses are updated as follows:

If the interactive feedback matches the testimony, then

If the interactive feedback does not match the testimony, then

It is necessary to explain what “match” means here: the witness supported the resource provider and the feedback was a success, or the witness opposed the provider and the feedback was a failure. We set two bounds because a witness should not be able to accumulate or lose too many credits in a dynamic MAS. A high-credit agent loses credits rapidly if it keeps lying. Another advantage of our trust estimation model is that a separate “second chance” mechanism is not required. As soon as a dishonest agent reaches the minimum credit level, it can no longer demand information for trust estimation. Thus, to maximize its own social welfare, it has to provide honest information to regain credit.
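The following sketch illustrates the general shape of such a bounded credit scheme. It is purely hypothetical: the update formulas, the step size `rate`, the bounds 0 and 2, and the threshold 0.1 are placeholder assumptions chosen for illustration and are not the paper's equations or parameter values. The sketch only reproduces the qualitative properties described in the text: credits move with feedback, the amount depends on the current credit and the confidence, the result is clamped between two bounds, and high-credit agents lose more when they lie.

```python
def update_credit(credit, confidence, matched, rate=0.25, lower=0.0, upper=2.0):
    """Hypothetical credit update for a witness after one interaction.

    credit     -- current credit of the witness (initialized to 1 in the text)
    confidence -- confidence in the testimony, assumed to lie in [0, 1]
    matched    -- True if the feedback agreed with the witness's testimony
    """
    if matched:
        new_credit = credit + rate * confidence
    else:
        # The penalty grows with the current credit, so a high-credit liar
        # loses credits faster than a low-credit one (assumed form).
        new_credit = credit - rate * confidence * (1.0 + credit)
    # Clamp to the two bounds so credits cannot grow or shrink without limit.
    return min(upper, max(lower, new_credit))


def can_request(credit, threshold=0.1):
    """An agent may ask for testimony only while its credit exceeds the threshold."""
    return credit > threshold
```

Under these placeholder values, an agent that repeatedly gives mismatching testimony falls to the lower bound within a few interactions and must then earn credits back as an honest witness before it can request testimony again.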