Knowledge Graph Recommendation Model Based on Adversarial Training

Abstract

1. Introduction

- ⬤

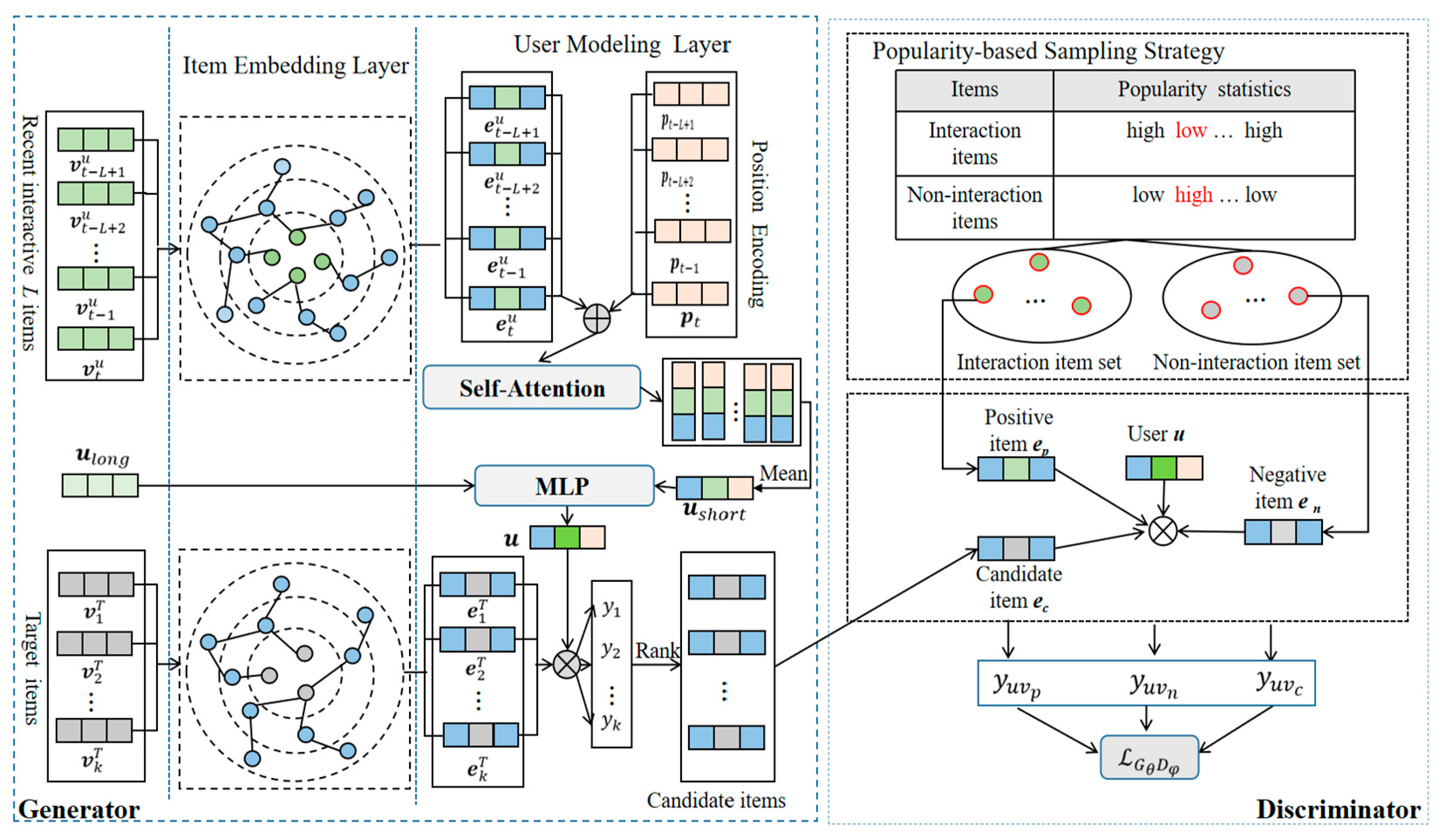

- In this paper, adversarial training is introduced into the KG recommendation model. Therefore, the knowledge graph aggregation weight can be dynamically and adaptively adjusted to obtain more reasonable user features and item features.

- ⬤

- The generator employs a long- and short-term interest model to discover the latent features of users and generates a high-quality set of candidate items. The discriminator uses a popularity-based sampling strategy to obtain more accurate positive items and negative items. It can more accurately identify candidate items by comparing the user’s scores of positive items, negative items, and candidate items. The generator and discriminator continuously improve their performance through adversarial training.

- ⬤

- We present a comparison with multiple knowledge graph recommendation models and multiple adversarial training recommendation models on five public datasets: MovieLens-1M, MovieLens-10M, MovieLens-20M, Book-Crossing, and Last. FM. Thus, the effectiveness of the ATKGRM proposed in this paper is verified.
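
The popularity-based sampling mentioned for the discriminator can be illustrated with a minimal sketch. The exact strategy is not specified in this outline, so the version below is only an assumption: negatives are drawn without replacement from items the user has not interacted with, weighted by how often each item appears in the interaction log. The function name `sample_negatives` and the toy data are hypothetical.

```python
import random
from collections import Counter

def sample_negatives(user_items, all_interactions, num_neg, seed=0):
    """Draw negatives for one user, weighting unseen items by popularity.

    Assumption (not from the paper): popularity = interaction count.
    """
    rng = random.Random(seed)
    popularity = Counter(item for _, item in all_interactions)
    # Only items the user has never interacted with can be negatives.
    candidates = [item for item in popularity if item not in user_items]
    weights = [popularity[item] for item in candidates]
    negatives = []
    # Sample without replacement: remove each drawn item from the pool.
    while candidates and len(negatives) < num_neg:
        idx = rng.choices(range(len(candidates)), weights=weights, k=1)[0]
        negatives.append(candidates.pop(idx))
        weights.pop(idx)
    return negatives

# Toy interaction log of (user, item) pairs.
interactions = [("u1", "a"), ("u2", "a"), ("u3", "a"),
                ("u2", "b"), ("u3", "c"), ("u1", "d")]
negs = sample_negatives({"a", "d"}, interactions, num_neg=2)
print(negs)
```

Popular items that a user skipped are arguably more informative negatives than obscure ones, which is one common motivation for weighting by popularity.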

2. Related Works

2.1. Collaborative Filtering Recommendation Model

2.2. Knowledge Graph Recommendation Model

2.3. Adversarial Training in Recommendation Systems

3. Our Model

3.1. Model Overview

3.2. The Generator

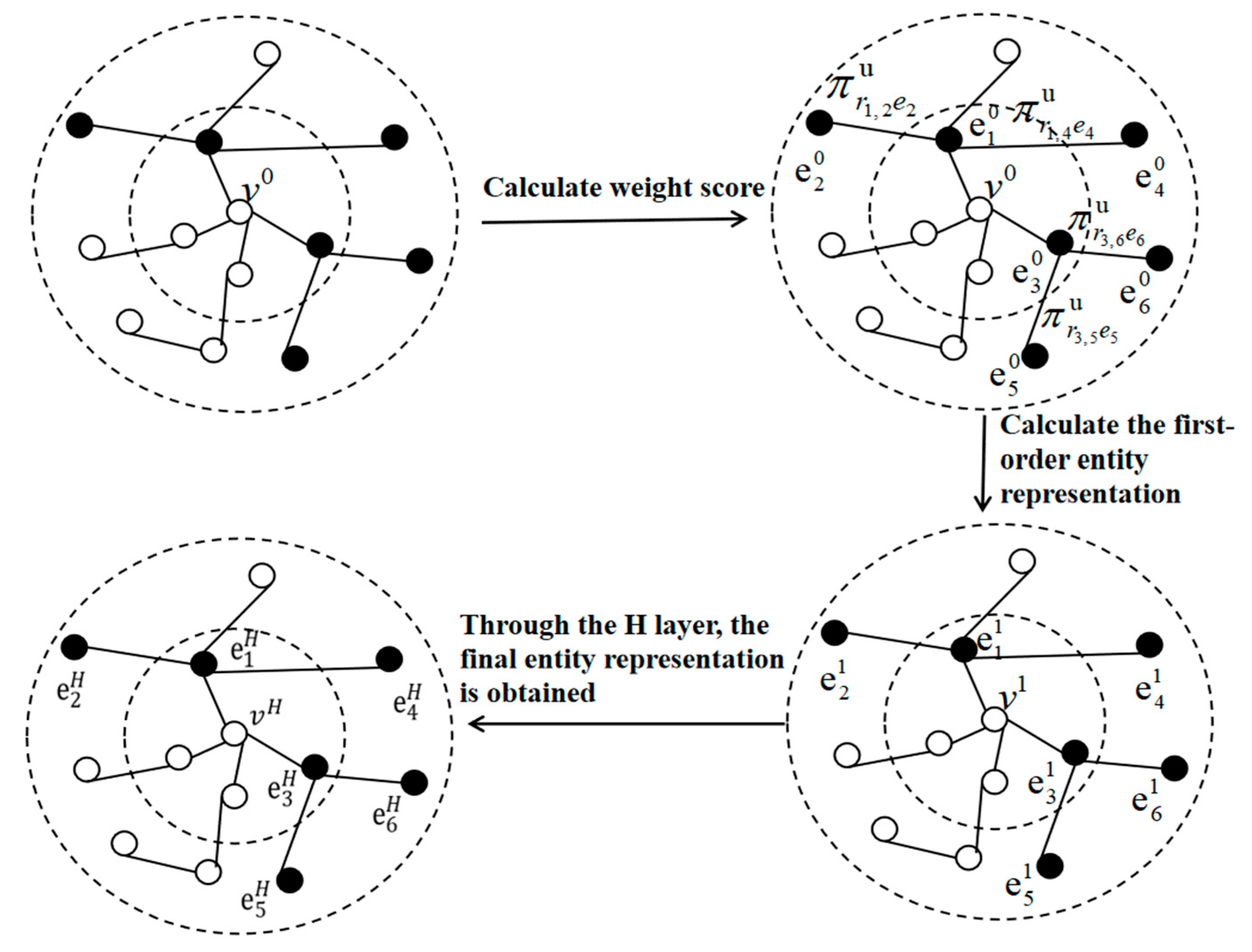

3.2.1. Item Embedding Layer

3.2.2. User Modeling Layer

3.2.3. Generate Candidate Items

3.3. The Discriminator

3.4. Adversarial Training

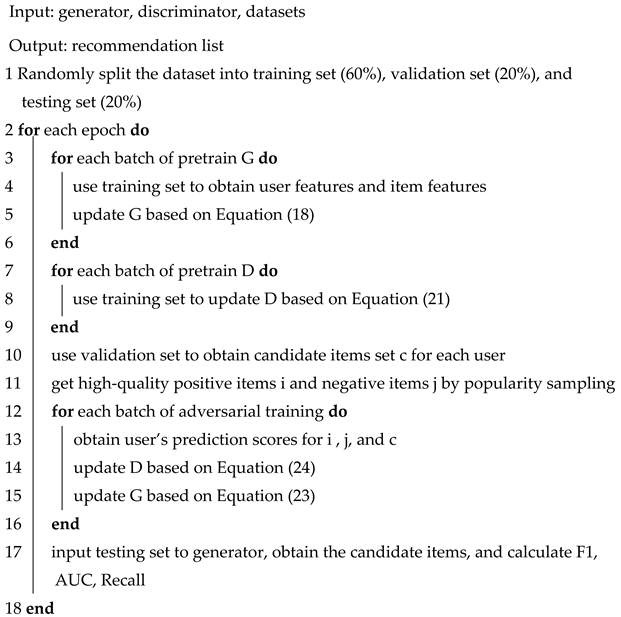

Algorithm 1. ATKGRM Adversarial Training Algorithm

4. Experiments

4.1. Experimental Settings

4.1.1. Dataset

4.1.2. Evaluation Metrics

4.1.3. Parameter Settings

4.2. Performance Comparison

4.2.1. Performance Comparison with the Collaborative Filtering Model

- CF-NADE [5] shows how to adapt NADE to CF tasks, encouraging the model to share parameters between different ratings;

- NCAE [6] can effectively capture the subtle hidden relationships between interactions via a nonlinear matrix factorization process;

- NESVD [7] proposes a general neural embedding initialization framework, where a low-complexity probabilistic autoencoder neural network initializes the features of the user and item.

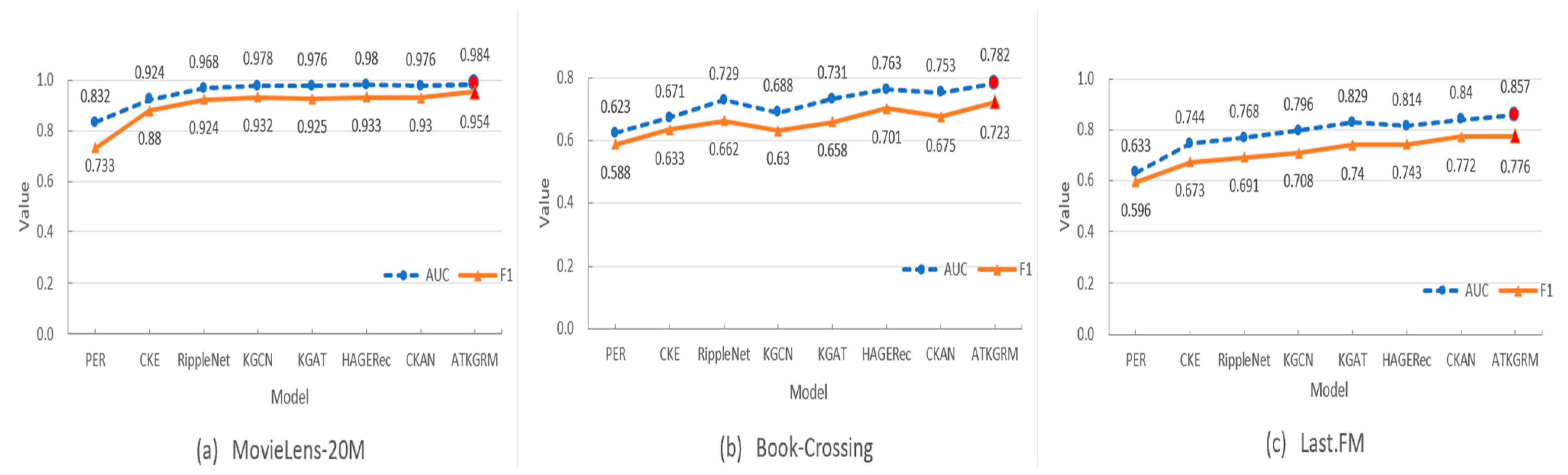

4.2.2. Performance Comparison of the Knowledge Graph Recommendation Model

- PER [21] extracts meta-path information from a knowledge graph to capture the connectivity between items and users along diverse types of relationship paths;

- RippleNet [23] uses multi-hop neighborhood information to expand a user’s potential interests by iteratively and autonomously following the links in the KG;

- KGCN [24] uses a graph convolutional network to automatically capture semantic information and high-order structure by sampling a fixed number of neighbors as the receptive field of candidate items;

- KGAT [28] combines the user–item interaction graph with the KG into a collaborative knowledge graph; during propagation, an attention module differentiates the significance of neighboring entities in the collaborative knowledge graph;

- HAGERec [29] uses a hierarchical attention graph convolutional network to process KGs and explore the potential preferences of users; to make full use of semantic information, a bi-directional information propagation strategy is used to obtain the vectors of items and users;

- CKAN [30] uses a knowledge-aware attention module to differentiate the contributions of diverse neighboring entities when processing KG and collaborative information.
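
Several of the baselines above weight neighboring entities with an attention module. The following is a generic sketch of that idea, not the formulation of KGAT, HAGERec, or CKAN, each of which defines its own scoring function. Here the score is assumed to be a plain dot product between a query vector and each neighbor embedding, normalized with a softmax; all names are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def aggregate_neighbors(query, neighbors):
    """Attention-weighted sum of neighbor vectors.

    Assumption: attention score = dot(query, neighbor).
    """
    scores = [sum(q * n for q, n in zip(query, vec)) for vec in neighbors]
    weights = softmax(scores)
    dim = len(query)
    pooled = [sum(w * vec[k] for w, vec in zip(weights, neighbors))
              for k in range(dim)]
    return weights, pooled

# Three neighbors; the first is most aligned with the query.
weights, pooled = aggregate_neighbors(
    [1.0, 0.0],
    [[2.0, 0.0], [0.0, 2.0], [-1.0, 1.0]],
)
print(weights, pooled)
```

Neighbors that align with the query receive larger weights, so they dominate the pooled representation.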

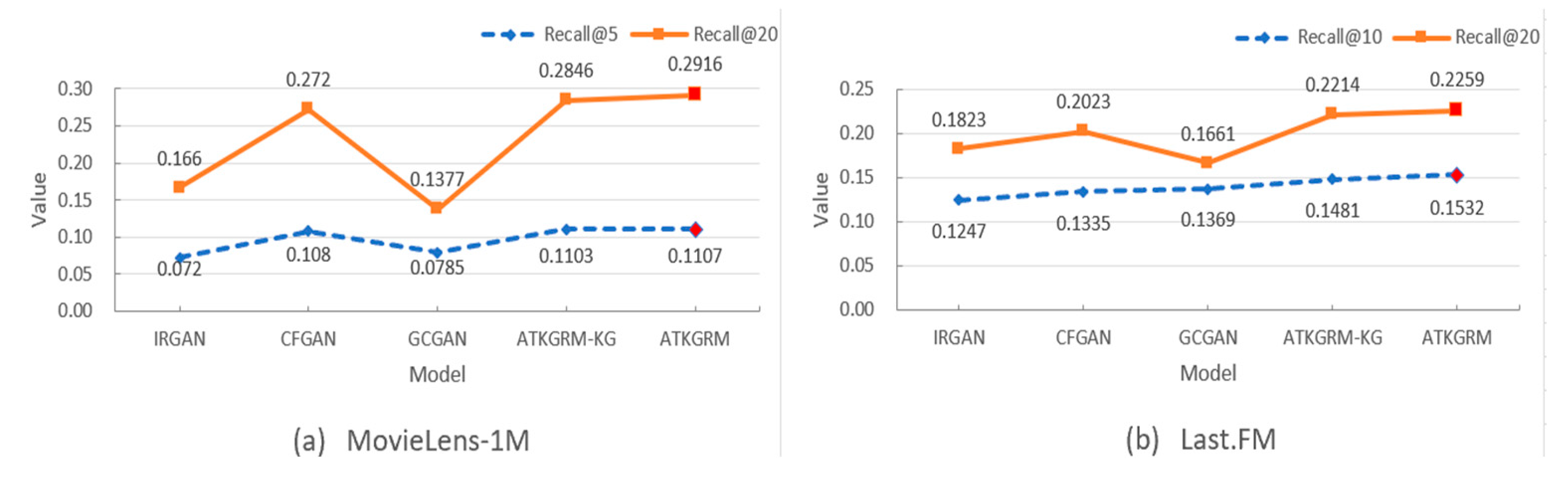

4.2.3. Performance Comparison of Adversarial Training Recommendation Models

- IRGAN [33] is a pioneer in the field of recommendation using adversarial training. The discriminator in IRGAN is used to distinguish whether the items from the generator conform to the user’s real preference distribution.

- CFGAN [34] is the first model to use a generator to generate a continuous vector instead of a discrete item index.

- GCGAN [35] combines GAN and graph convolution and can effectively learn the main information by propagating feature values through a graph structure.

4.2.4. Ablation Experiment

4.3. Study of ATKGRM

4.3.1. Selection of the Number of User’s Recent Interactive Items L

4.3.2. Selection of Iteration Layers H in Knowledge Graph

4.4. Complexity Analysis

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Wang, X.; Wang, D.; Xu, C.; He, X.; Cao, Y.; Chua, T.S. Explainable reasoning over knowledge graphs for recommendation. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 5329–5336.

2. Sang, L.; Xu, M.; Qian, S.; Wu, X. Knowledge graph enhanced neural collaborative recommendation. Expert Syst. Appl. 2021, 164, 113992.

3. Chen, X.; Duan, Y.; Houthooft, R.; Schulman, J.; Sutskever, I.; Abbeel, P. InfoGAN: Interpretable representation learning by information maximizing generative adversarial nets. In Proceedings of the 30th Conference on Neural Information Processing Systems, Barcelona, Spain, 1 June 2016; pp. 2172–2180.

4. Bousmalis, K.; Silberman, N.; Dohan, D.; Erhan, D.; Krishnan, D. Unsupervised pixel-level domain adaptation with generative adversarial networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 1 July 2017; pp. 95–104.

5. Zheng, Y.; Tang, B.; Ding, W.; Zhou, H. A neural autoregressive approach to collaborative filtering. arXiv 2016, arXiv:1605.09477.

6. Li, Q.; Zheng, X.; Wu, X. Neural collaborative autoencoder. arXiv 2017, arXiv:1712.09043.

7. Huang, T.; Zhao, R.; Bi, L.; Zhang, D.; Lu, C. Neural embedding singular value decomposition for collaborative filtering. IEEE Trans. Neural Netw. Learn. Syst. 2021, 9, 1–9.

8. Bordes, A.; Usunier, N.; Garcia-Duran, A.; Weston, J.; Yakhnenko, O. Translating embeddings for modeling multi-relational data. In Proceedings of the Advances in Neural Information Processing Systems (NIPS 2013), Lake Tahoe, NV, USA, 8 May 2013; pp. 2787–2795.

9. Wang, Z.; Zhang, J.; Feng, J.; Chen, Z. Knowledge graph embedding by translating on hyperplanes. In Proceedings of the AAAI Conference on Artificial Intelligence, Québec City, QC, Canada, 27–31 July 2014; pp. 1112–1119.

10. Lin, Y.; Liu, Z.; Sun, M.; Liu, Y.; Zhu, X. Learning entity and relation embedding for knowledge graph completion. In Proceedings of the Twenty-Ninth AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015; pp. 2181–2187.

11. Pan, H.; Qin, Z. Collaborative filtering algorithm based on knowledge graph meta-path. Mod. Comput. 2021, 27, 17–23.

12. Chen, Y.; Feng, W.; Huang, M.; Feng, S. Behavior path collaborative filtering recommendation algorithm based on knowledge graph. Comput. Sci. 2021, 48, 176–183.

13. Zhang, F.; Li, R.; Xu, K.; Xu, H. Similarity-based heterogeneous graph attention network for knowledge-enhanced recommendation. In Proceedings of the International Conference on Knowledge Science 2021, Bolzano, Italy, 16–18 September 2021; pp. 488–499.

14. Li, X.; Yang, X.; Yu, J.; Qian, Y.; Zheng, J. Two terminal recommendation algorithms based on knowledge graph convolution network. Comput. Sci. Explor. 2022, 16, 176–184.

15. Wang, H.; Zhang, F.; Zhang, M.; Leskovec, J.; Zhao, M.; Li, W.; Wang, Z. Knowledge-aware graph neural networks with label smoothness regularization for recommender systems. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 968–977.

16. Wang, H.; Zhang, F.; Zhao, M.; Li, W.; Xie, X.; Guo, M. Multi-task feature learning for knowledge graph enhanced recommendation. In Proceedings of the World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; pp. 2000–2010.

17. Ji, G.; He, S.; Xu, L.; Liu, K.; Zhao, J. Knowledge graph embedding via dynamic mapping matrix. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing, Beijing, China, 26–31 July 2015; pp. 687–696.

18. Yang, B.; Yih, W.T.; He, X.; Gao, J.; Deng, L. Embedding entities and relations for learning and inference in knowledge bases. arXiv 2014, arXiv:1412.6575.

19. Nickel, M.; Tresp, V.; Kriegel, H.P. A three-way model for collective learning on multi-relational data. In Proceedings of the 28th International Conference on Machine Learning, Washington, DC, USA, 28 June–2 July 2011; pp. 809–816.

20. Trouillon, T.; Welbl, J.; Riedel, S.; Gaussier, É.; Bouchard, G. Complex embeddings for simple link prediction. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 2071–2080.

21. Yu, X.; Ren, X.; Sun, Y.; Gu, Q.; Sturt, B.; Khandelwal, U.; Norick, B.; Han, J. Personalized entity recommendation: A heterogeneous information network approach. In Proceedings of the 7th ACM International Conference on Web Search and Data Mining, New York, NY, USA, 24–28 February 2014; pp. 283–292.

22. Zhao, H.; Yao, Q.; Li, J.; Song, Y.; Lee, D.L. Meta-graph based recommendation fusion over heterogeneous information networks. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13–17 August 2017; pp. 635–644.

23. Wang, H.; Zhang, F.; Wang, J.; Zhao, M.; Li, W.; Xie, X.; Guo, M. RippleNet: Propagating user preferences on the knowledge graph for recommender systems. In Proceedings of the 27th ACM International Conference on Information and Knowledge Management, Torino, Italy, 22–26 October 2018; pp. 417–426.

24. Wang, H.; Zhao, M.; Xie, X.; Li, W.; Guo, M. Knowledge graph convolutional networks for recommender systems. In Proceedings of the World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; pp. 3307–3313.

25. Gu, J.; She, S.; Fan, S.; Zhang, S. Recommendation based on convolution network of users’ long- and short-term interests and knowledge graph. Comput. Eng. Sci. 2021, 43, 511–517.

26. Xu, B.; Cen, K.; Huang, J.; Shen, H.; Cheng, X. A survey of graph convolution neural networks. J. Comput. Sci. 2020, 43, 755–780.

27. Choi, J.; Ko, T.; Choi, Y.; Byun, H.; Kim, C.K. Dynamic graph convolutional networks with attention mechanism for rumor detection on social media. PLoS ONE 2021, 16, e0256039.

28. Wang, X.; He, X.; Cao, Y.; Liu, M.; Chua, T.S. KGAT: Knowledge graph attention network for recommendation. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 950–958.

29. Yang, Z.; Dong, S. HAGERec: Hierarchical attention graph convolutional network incorporating knowledge graph for explainable recommendation. Knowl.-Based Syst. 2020, 204, 106194.

30. Wang, Z.; Lin, G.; Tan, H.; Chen, Q.; Liu, X. CKAN: Collaborative knowledge-aware attentive network for recommender systems. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Xi’an, China, 25–30 July 2020; pp. 219–228.

31. Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. In Proceedings of the 27th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

32. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

33. Wang, J.; Yu, L.; Zhang, W.; Gong, Y.; Xu, Y.; Wang, B.; Zhang, P.; Zhang, D. IRGAN: A minimax game for unifying generative and discriminative information retrieval models. In Proceedings of the 40th International ACM SIGIR Conference on Research and Development in Information Retrieval, Tokyo, Japan, 7–11 August 2017; pp. 515–524.

34. Chae, D.; Kang, J.; Kim, S.; Lee, J. CFGAN: A generic collaborative filtering framework based on generative adversarial networks. In Proceedings of the 27th ACM International Conference on Information and Knowledge Management, Torino, Italy, 22–26 October 2018; pp. 137–146.

35. Takato, S.; Shin, K.; Hajime, N. Recommendation System Based on Generative Adversarial Network with Graph Convolutional Layers. JACIII 2021, 25, 389–396.

36. Zhang, F.; Yuan, N.J.; Lian, D.; Xie, X.; Ma, W.Y. Collaborative knowledge base embedding for recommender systems. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 353–362.

| Dataset | Users | Items | Interactions | Relations | Triplets |
|---|---|---|---|---|---|
| MovieLens-1M | 6036 | 2347 | 753,772 | 12 | 20,195 |
| MovieLens-10M | 67,788 | 8704 | 9,945,875 | -- | -- |
| MovieLens-20M | 138,159 | 16,954 | 13,501,622 | 32 | 499,474 |
| Book-Crossing | 19,676 | 20,003 | 172,576 | 18 | 60,787 |
| Last.FM | 1872 | 3846 | 42,346 | 60 | 15,518 |

| Dataset | N | d | H | L | | Batch | lr |
|---|---|---|---|---|---|---|---|
| MovieLens-1M | 4 | 32 | 1 | 5 | 2 × 10⁻⁵ | 256 | 5 × 10⁻⁴ |
| MovieLens-10M | -- | 32 | -- | 5 | 2 × 10⁻⁵ | 256 | 2 × 10⁻⁴ |
| MovieLens-20M | 4 | 32 | 2 | 7 | 10⁻⁷ | 65,536 | 2 × 10⁻² |
| Book-Crossing | 4 | 32 | 1 | 5 | 2 × 10⁻⁵ | 256 | 2 × 10⁻⁴ |
| Last.FM | 4 | 32 | 1 | 5 | 10⁻⁴ | 128 | 5 × 10⁻⁴ |

| Model | MovieLens-1M (RMSE) | MovieLens-10M (RMSE) |
|---|---|---|
| CF-NADE | 0.829 | 0.771 |
| NCAE | -- | 0.767 |
| NESVD | 0.826 | 0.762 |
| ATKGRM-KG | 0.511 | 0.441 |
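
The values in this comparison match the NESVD entries listed under RMSE in the parameter-count table, so they are read here as root-mean-square errors (lower is better). As a reminder of how that metric is computed, here is a minimal sketch with made-up ratings; the function name and the sample data are illustrative only.

```python
import math

def rmse(predicted, actual):
    """Root-mean-square error between two equal-length rating lists."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_error = sum((p - a) ** 2 for p, a in zip(predicted, actual))
    return math.sqrt(squared_error / len(actual))

# Hypothetical predicted vs. true ratings for three user-item pairs.
score = rmse([3.5, 4.0, 2.0], [4.0, 4.0, 1.0])
print(round(score, 4))
```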

| Model | MovieLens-20M AUC | MovieLens-20M F1 | Book-Crossing AUC | Book-Crossing F1 | Last.FM AUC | Last.FM F1 |
|---|---|---|---|---|---|---|
| PER | 0.832 | 0.733 | 0.623 | 0.588 | 0.633 | 0.596 |
| CKE | 0.924 | 0.880 | 0.671 | 0.633 | 0.744 | 0.673 |
| RippleNet | 0.968 | 0.924 | 0.729 | 0.662 | 0.768 | 0.691 |
| KGCN | 0.978 | 0.932 | 0.688 | 0.630 | 0.796 | 0.708 |
| KGAT | 0.976 | 0.925 | 0.731 | 0.658 | 0.829 | 0.740 |
| HAGERec | 0.980 | 0.933 | 0.763 | 0.701 | 0.814 | 0.743 |
| CKAN | 0.976 | 0.930 | 0.753 | 0.675 | 0.840 | 0.772 |
| ATKGRM | 0.984 | 0.954 | 0.782 | 0.723 | 0.857 | 0.776 |
| Improvement | 0.4% | 2.3% | 2.5% | 3.1% | 2.0% | 0.5% |
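
The last row of this table is consistent with a relative gain of ATKGRM over the strongest baseline in each column. The source does not state the formula, so the sketch below is an assumption that happens to reproduce the reported percentages from the table values; the variable and function names are illustrative.

```python
# Scores copied from the table above. Column order:
# ML-20M AUC, ML-20M F1, Book-Crossing AUC, Book-Crossing F1,
# Last.FM AUC, Last.FM F1.
BASELINES = {
    "PER":       [0.832, 0.733, 0.623, 0.588, 0.633, 0.596],
    "CKE":       [0.924, 0.880, 0.671, 0.633, 0.744, 0.673],
    "RippleNet": [0.968, 0.924, 0.729, 0.662, 0.768, 0.691],
    "KGCN":      [0.978, 0.932, 0.688, 0.630, 0.796, 0.708],
    "KGAT":      [0.976, 0.925, 0.731, 0.658, 0.829, 0.740],
    "HAGERec":   [0.980, 0.933, 0.763, 0.701, 0.814, 0.743],
    "CKAN":      [0.976, 0.930, 0.753, 0.675, 0.840, 0.772],
}
ATKGRM = [0.984, 0.954, 0.782, 0.723, 0.857, 0.776]

def relative_improvement(ours, baselines):
    """Percentage gain over the best baseline in each column.

    Assumed formula: (ours - best) / best * 100, rounded to 0.1.
    """
    gains = []
    for col, score in enumerate(ours):
        best = max(row[col] for row in baselines.values())
        gains.append(round((score - best) / best * 100, 1))
    return gains

improvements = relative_improvement(ATKGRM, BASELINES)
print(improvements)
```

Under this reading, the smallest margins (MovieLens-20M AUC and Last.FM F1) are the columns where the best baseline was already closest to ATKGRM.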

| Model | MovieLens-1M Recall@5 | MovieLens-1M Recall@20 | Last.FM Recall@10 | Last.FM Recall@20 |
|---|---|---|---|---|
| IRGAN | 0.072 | 0.166 | 0.1247 | 0.1823 |
| CFGAN | 0.108 | 0.272 | 0.1335 | 0.2023 |
| GCGAN | 0.0785 | 0.1377 | 0.1369 | 0.1661 |
| ATKGRM-KG | 0.1103 | 0.2846 | 0.1481 | 0.2214 |
| ATKGRM | 0.1107 | 0.2916 | 0.1532 | 0.2259 |
| Improvement | 2.5% | 7.2% | 11.9% | 11.7% |
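
Recall@K, the metric in this table, is commonly defined as the fraction of a user's held-out relevant items that appear among the top K recommendations. The sketch below uses that standard definition; the paper's exact evaluation protocol (for example, how test items are held out) is not given here, and the names and toy data are hypothetical.

```python
def recall_at_k(ranked_items, relevant_items, k):
    """Fraction of relevant items found in the top-k of a ranked list."""
    if not relevant_items:
        return 0.0
    top_k = ranked_items[:k]
    hits = sum(1 for item in top_k if item in relevant_items)
    return hits / len(relevant_items)

# One user's ranked recommendations and held-out relevant items.
ranking = ["a", "b", "c", "d", "e"]
relevant = {"b", "e", "z"}
recall_3 = recall_at_k(ranking, relevant, k=3)
recall_5 = recall_at_k(ranking, relevant, k=5)
print(recall_3, recall_5)
```

A dataset-level score is then typically the average of this value over all test users.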

| Model | MovieLens-20M AUC | MovieLens-20M F1 | Book-Crossing AUC | Book-Crossing F1 | Last.FM AUC | Last.FM F1 |
|---|---|---|---|---|---|---|
| ATKGRM-AT | 0.981 | 0.946 | 0.750 | 0.687 | 0.821 | 0.746 |
| ATKGRM-P | 0.983 | 0.949 | 0.768 | 0.705 | 0.845 | 0.762 |
| ATKGRM | 0.984 | 0.954 | 0.782 | 0.723 | 0.857 | 0.776 |

| L | MovieLens-20M AUC | MovieLens-20M F1 | Book-Crossing AUC | Book-Crossing F1 | Last.FM AUC | Last.FM F1 |
|---|---|---|---|---|---|---|
| 3 | 0.983 | 0.954 | 0.773 | 0.701 | 0.853 | 0.776 |
| 5 | 0.982 | 0.954 | 0.782 | 0.723 | 0.857 | 0.776 |
| 7 | 0.984 | 0.954 | 0.778 | 0.717 | 0.851 | 0.768 |
| 9 | 0.983 | 0.853 | 0.775 | 0.708 | 0.847 | 0.769 |
| 11 | 0.983 | 0.847 | 0.766 | 0.704 | 0.851 | 0.762 |

| H | MovieLens-20M AUC | MovieLens-20M F1 | Book-Crossing AUC | Book-Crossing F1 | Last.FM AUC | Last.FM F1 |
|---|---|---|---|---|---|---|
| 1 | 0.981 | 0.946 | 0.782 | 0.723 | 0.857 | 0.776 |
| 2 | 0.984 | 0.954 | 0.773 | 0.715 | 0.850 | 0.773 |
| 3 | 0.983 | 0.952 | 0.769 | 0.708 | 0.844 | 0.769 |
| 4 | 0.980 | 0.948 | 0.764 | 0.701 | 0.840 | 0.760 |

| Model | MovieLens-1M Parameters | MovieLens-1M RMSE | MovieLens-10M Parameters | MovieLens-10M RMSE |
|---|---|---|---|---|
| NESVD | 4.75 M | 0.826 | 39.3 M | 0.762 |
| KGCN | 3.4 M | 0.517 | 20 M | 0.446 |
| GCGAN | 30 M | 0.599 | 52 M | 0.525 |
| ATKGRM | 16 M | 0.511 | 49 M | 0.441 |

| H | D = 10% | D = 30% | D = 50% | D = 100% |
|---|---|---|---|---|
| 1 | 31.3 | 46.6 | 61.6 | 98 |
| 2 | 135.6 | 198.3 | 260.3 | 394 |
| 3 | 1031 | 1295.6 | 1535 | 1734 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, S.; Zhang, N.; Fan, S.; Gu, J.; Li, J. Knowledge Graph Recommendation Model Based on Adversarial Training. Appl. Sci. 2022, 12, 7434. https://doi.org/10.3390/app12157434