HTTP Adaptive Streaming Framework with Online Reinforcement Learning

Abstract

1. Introduction

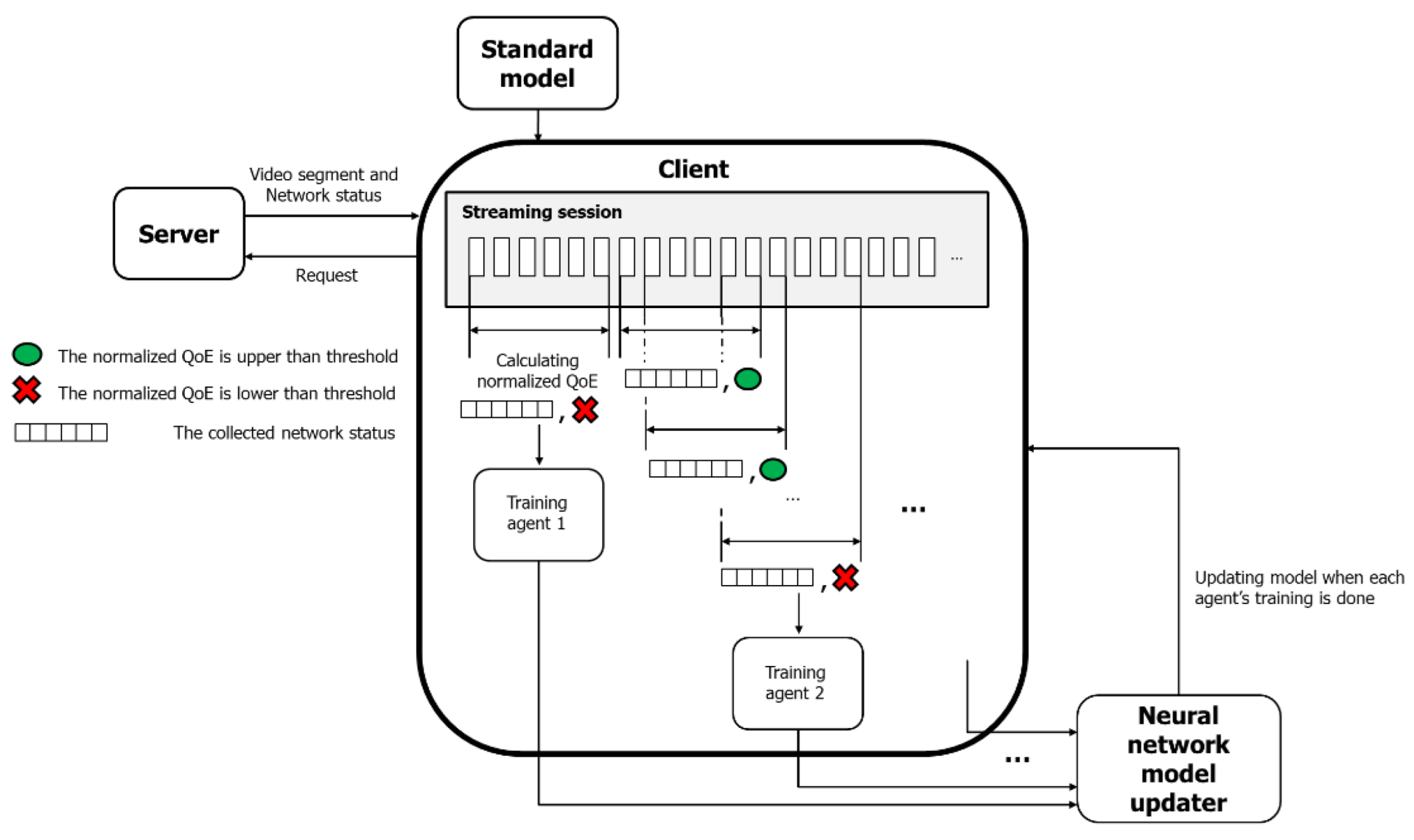

- We propose an HTTP adaptive streaming framework with online reinforcement learning. When a decrease in QoE is detected, the client updates the ABR model while continuing to stream video, so that the model adapts to changes in the client’s network conditions.

- Using a network classification scheme, both the network traces in the training dataset and the current user’s network environment are classified according to their characteristics. Based on the classified network data, the proposed scheme adapts the ABR model to time-varying network conditions.

- Any learning-based ABR algorithm can be extended with the proposed scheme, regardless of its learning method.
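The first contribution updates the ABR model online once a QoE decrease is confirmed. A minimal sketch of such a trigger, under stated assumptions: per-chunk QoE values are already normalized, and the class name `QoEDropMonitor`, the sliding-window length, and the drop ratio are hypothetical illustrations rather than the paper's actual detection rule.

```python
from collections import deque
from statistics import mean
from typing import Deque, Optional


class QoEDropMonitor:
    """Hypothetical trigger for online ABR updates: reports a confirmed QoE
    decrease when the mean of the last `window` chunk QoE values falls below
    `drop_ratio` times a reference mean."""

    def __init__(self, window: int = 5, drop_ratio: float = 0.8) -> None:
        # Both defaults are illustrative assumptions, not values from the paper.
        self.window = window
        self.drop_ratio = drop_ratio
        self.baseline: Optional[float] = None
        self.recent: Deque[float] = deque(maxlen=window)

    def observe(self, qoe: float) -> bool:
        """Record one chunk's normalized QoE; return True when the ABR model
        should be updated online (streaming itself continues either way)."""
        self.recent.append(qoe)
        if len(self.recent) < self.window:
            return False  # not enough samples to judge yet
        current = mean(self.recent)
        if self.baseline is None:
            self.baseline = current  # first full window becomes the reference
            return False
        if current < self.drop_ratio * self.baseline:
            # Confirmed decrease: clear state so a fresh reference is
            # measured after the model update.
            self.baseline = None
            self.recent.clear()
            return True
        return False
```

For example, with `window=3` and `drop_ratio=0.8`, the per-chunk sequence 1.0, 1.0, 1.0, 0.9, 0.6, 0.5 sets a reference mean of 1.0 and triggers an update only at the last chunk, when the window mean falls to about 0.67. Using a window mean rather than a single chunk avoids retraining on one transient dip.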

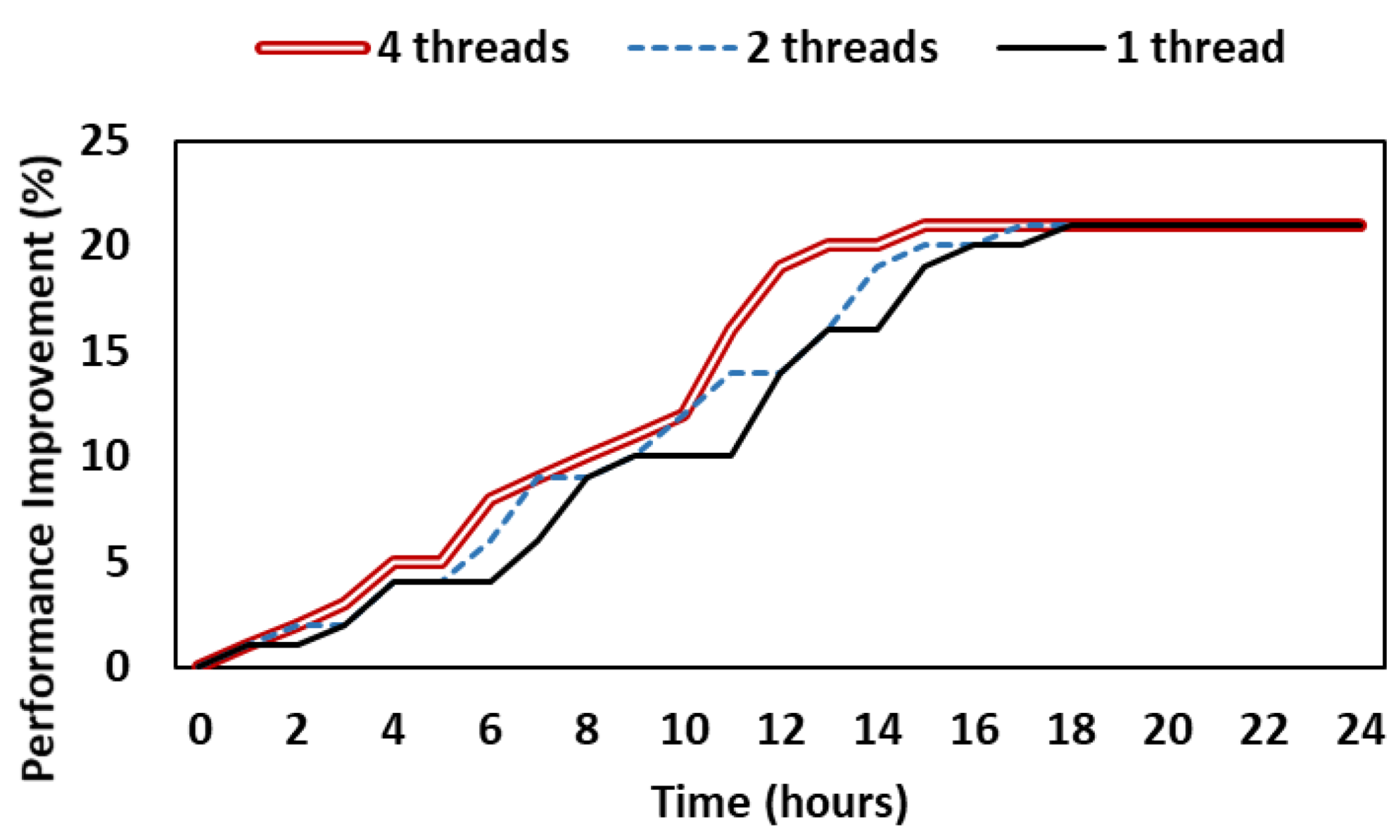

- The neural network model is trained with a state-of-the-art RL algorithm so that the ABR model adapts to the network environment as it changes during video streaming. Because multiple agents are created and run in parallel, training is asynchronous and therefore fast.

- The proposed scheme was compared with existing schemes through simulation-based experiments. The results show that it outperforms them in terms of overall client QoE.
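The parallel, asynchronous training described in the contributions above resembles asynchronous advantage actor-critic (A3C, Mnih et al., 2016). The toy sketch below shows only the data flow: several agents run concurrently, each computes discounted returns G_t = r_t + γ·G_{t+1} (bootstrapped from a critic value) and advantages A_t = G_t − V(s_t) for its own rollout, and a central learner consumes the results in whatever order they arrive. γ = 0.99 follows the parameter table; the rollouts, the function names, and the use of Python threads (a real trainer would more likely use processes) are assumptions, and no gradients are computed.

```python
import queue
import threading
from typing import Dict, List, Tuple

GAMMA = 0.99  # discount factor listed in the parameter table

Rollout = Tuple[List[float], List[float], float]  # (rewards, critic values, bootstrap value)


def n_step_returns(rewards: List[float], bootstrap: float, gamma: float = GAMMA) -> List[float]:
    """Discounted returns G_t = r_t + gamma * G_{t+1}, with G_T = bootstrap (V of last state)."""
    returns: List[float] = []
    g = bootstrap
    for r in reversed(rewards):
        g = r + gamma * g
        returns.append(g)
    returns.reverse()
    return returns


def agent(agent_id: int, rollout: Rollout, out: "queue.Queue[Tuple[int, List[float]]]") -> None:
    """One hypothetical agent: compute advantages for its own rollout and
    send them to the central learner through a shared queue."""
    rewards, values, bootstrap = rollout
    returns = n_step_returns(rewards, bootstrap)
    advantages = [g - v for g, v in zip(returns, values)]
    out.put((agent_id, advantages))


def run_agents(rollouts: List[Rollout]) -> Dict[int, List[float]]:
    """Start all agents concurrently; the central learner takes each result
    as soon as it arrives (arrival order is not fixed)."""
    out: "queue.Queue[Tuple[int, List[float]]]" = queue.Queue()
    threads = [threading.Thread(target=agent, args=(i, ro, out)) for i, ro in enumerate(rollouts)]
    for t in threads:
        t.start()
    collected: Dict[int, List[float]] = {}
    for _ in threads:
        agent_id, adv = out.get(timeout=10.0)  # blocks until some agent finishes
        collected[agent_id] = adv  # a real learner would apply an update here
    for t in threads:
        t.join()
    return collected
```

For instance, an agent with rewards [0.0, 2.0], critic values [1.0, 1.0], and bootstrap value 1.0 yields returns [2.9601, 2.99] and advantages [1.9601, 1.99].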

2. Related Work

2.1. DASH-Based Bitrate Adaptation

2.2. Model-Based ABR Algorithms

2.3. Learning-Based ABR Algorithms

3. Framework Design

3.1. Basic Assumptions

3.2. MDP Problem Formulation

3.2.1. State Space

3.2.2. Action Space

3.2.3. Reward Space

3.3. Neural Network Model

3.4. Operation of Framework

3.4.1. Framework Workflow

Algorithm 1. HTTP Adaptive Streaming with Online Reinforcement Learning

3.4.2. QoE Normalization

3.4.3. Network Classification

4. Performance Evaluation

4.1. Implementation

4.2. Experimental Settings

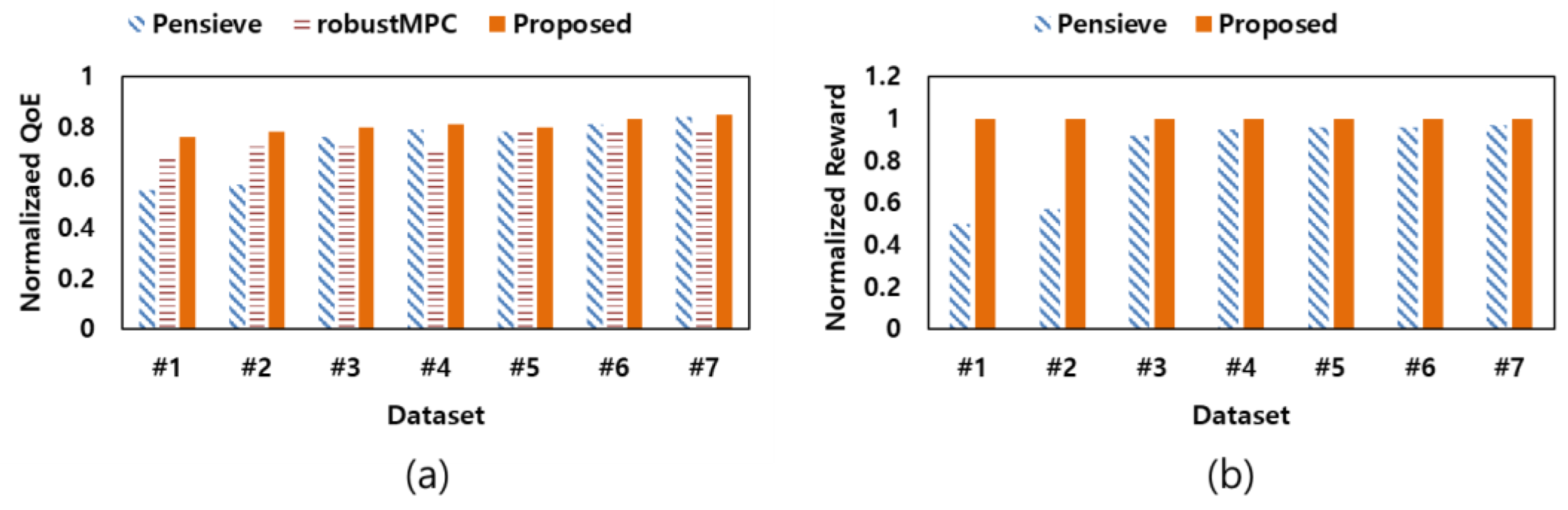

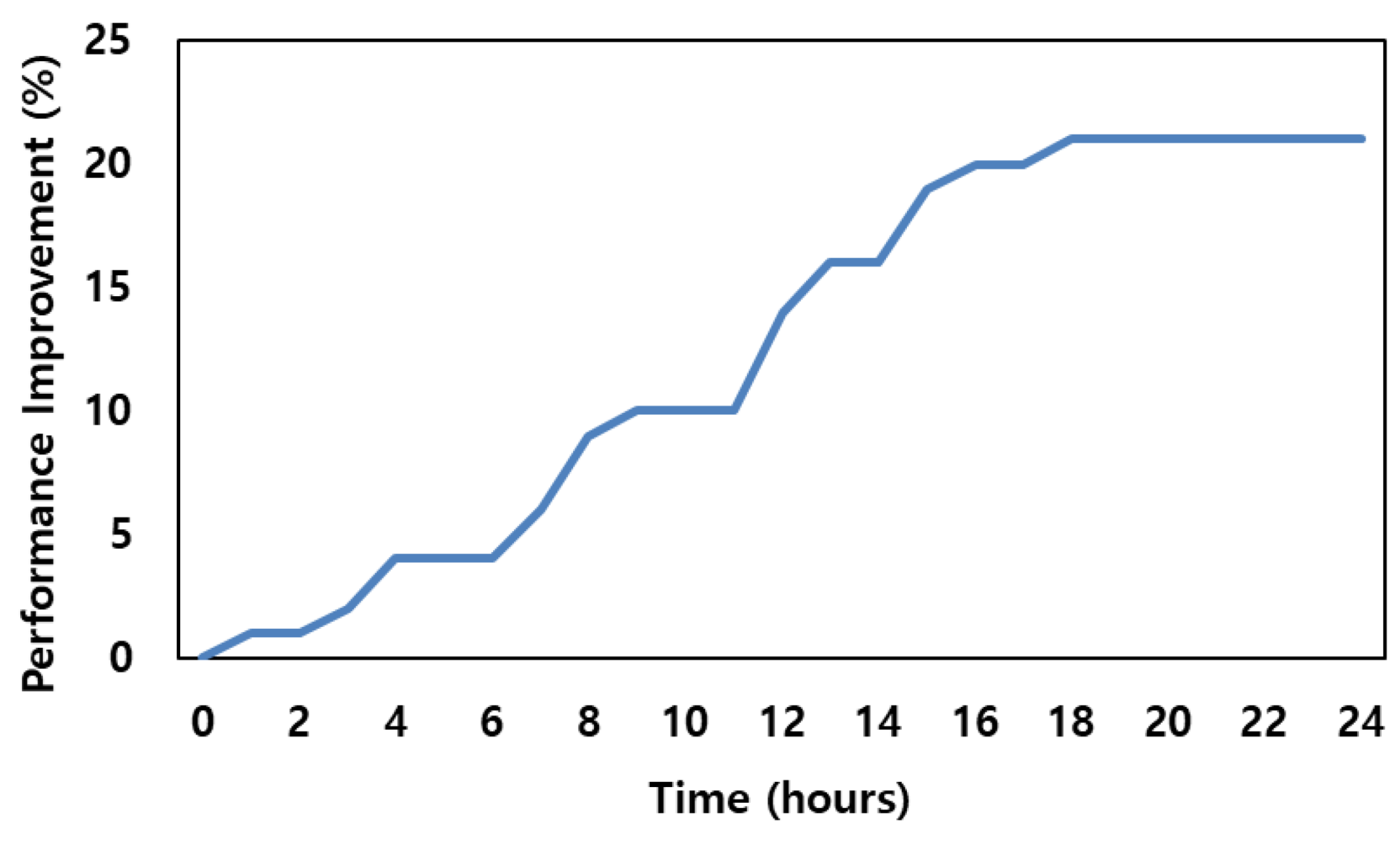

4.3. Results

4.4. Discussions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameter | Description | Value |
|---|---|---|
| | Discount factor | 0.99 |
| λ, ρ | Weight parameters used in the QoE function | 1.0, 4.3 |
| | Learning rate for the actor-critic network | 10⁻⁴, 10⁻³ |
| β | Entropy weight | 1 to 0.1 (over 10⁶ iterations) |
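The table gives λ = 1.0 and ρ = 4.3 as QoE weights and an entropy weight β annealed from 1 to 0.1, but the QoE function and the annealing schedule are not part of this extract. The sketch below assumes the common linear QoE form used by Pensieve, QoE = Σ q(R_n) − ρ·Σ T_n − λ·Σ |q(R_{n+1}) − q(R_n)|, with q(R) = R in Mbps, ρ penalizing rebuffering time T_n and λ penalizing quality switches. That assignment of roles, and the linear shape of the β decay, are assumptions rather than the paper's confirmed definitions.

```python
from typing import Sequence

LAMBDA = 1.0  # assumed role: weight on quality switches (smoothness)
RHO = 4.3     # assumed role: weight on rebuffering time


def qoe(bitrates_mbps: Sequence[float], rebuffer_s: Sequence[float],
        lam: float = LAMBDA, rho: float = RHO) -> float:
    """Assumed linear session QoE with q(R) = R:
    total quality - rho * total rebuffering - lam * total switching."""
    if len(bitrates_mbps) != len(rebuffer_s):
        raise ValueError("need one rebuffering time per chunk")
    quality = sum(bitrates_mbps)
    rebuffering = sum(rebuffer_s)
    switching = sum(abs(b - a) for a, b in zip(bitrates_mbps, bitrates_mbps[1:]))
    return quality - rho * rebuffering - lam * switching


def entropy_weight(step: int, start: float = 1.0, end: float = 0.1,
                   decay_steps: int = 10**6) -> float:
    """Entropy weight beta, annealed from `start` to `end` over `decay_steps`
    training iterations (linear decay is an assumption)."""
    if step >= decay_steps:
        return end
    frac = max(step, 0) / decay_steps
    return start + (end - start) * frac
```

As a worked example, chunks at 1.0, 2.0, and 2.0 Mbps with 0.5 s of rebuffering on the second chunk give QoE = 5.0 − 4.3 × 0.5 − 1.0 × 1.0 = 1.85.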

| Trace dataset characteristic | #1 | #2 | #3 | #4 | #5 | #6 | #7 |
|---|---|---|---|---|---|---|---|
| Mean throughput (Mbps) | 0.98 | 1.57 | 2.62 | 3.23 | 4.75 | 5.79 | 6.52 |
| Max throughput (Mbps) | 3.45 | 3.38 | 6.54 | 8.97 | 8.23 | 9.65 | 11.53 |
| Min throughput (Mbps) | 0.09 | 0.23 | 0.21 | 0.38 | 0.26 | 0.36 | 0.39 |
| Coefficient of variation | 0.76 | 0.44 | 0.56 | 0.72 | 0.35 | 0.39 | 0.37 |
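This extract does not describe how the network classification in Section 3.4.3 assigns a user's network to a class. One minimal sketch, under assumptions: summarize recent throughput samples by their mean and coefficient of variation (population standard deviation divided by the mean), then choose the trace class from the table above whose mean throughput and coefficient of variation are closest after scaling each feature by its range across the classes. The feature choice, the scaling, and the names `TRACE_CLASSES`, `features`, and `classify` are hypothetical.

```python
from statistics import mean, pstdev
from typing import Dict, Sequence, Tuple

# (mean throughput in Mbps, coefficient of variation) per trace class, from the table above
TRACE_CLASSES: Dict[int, Tuple[float, float]] = {
    1: (0.98, 0.76),
    2: (1.57, 0.44),
    3: (2.62, 0.56),
    4: (3.23, 0.72),
    5: (4.75, 0.35),
    6: (5.79, 0.39),
    7: (6.52, 0.37),
}


def features(throughput_mbps: Sequence[float]) -> Tuple[float, float]:
    """Mean throughput and coefficient of variation of the observed samples."""
    mu = mean(throughput_mbps)
    if mu <= 0:
        raise ValueError("mean throughput must be positive")
    return mu, pstdev(throughput_mbps) / mu


def classify(throughput_mbps: Sequence[float],
             classes: Dict[int, Tuple[float, float]] = TRACE_CLASSES) -> int:
    """Hypothetical nearest-class assignment on range-scaled (mean, CV) features."""
    mu, cv = features(throughput_mbps)
    mus = [c[0] for c in classes.values()]
    cvs = [c[1] for c in classes.values()]
    mu_range = max(mus) - min(mus)
    cv_range = max(cvs) - min(cvs)

    def dist(c: Tuple[float, float]) -> float:
        # Squared Euclidean distance after scaling, so neither feature dominates
        return ((mu - c[0]) / mu_range) ** 2 + ((cv - c[1]) / cv_range) ** 2

    return min(classes, key=lambda k: dist(classes[k]))
```

For example, the samples 3.0875 and 6.4125 Mbps (mean 4.75 Mbps, coefficient of variation 0.35) map to trace class #5, while 0.2 and 1.76 Mbps (mean 0.98 Mbps, coefficient of variation about 0.80) map to class #1.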

| Scheme | Characteristics |
|---|---|
| robustMPC | (1) Model predictive control-based adaptation; (2) traditional video adaptation scheme |
| Pensieve | (1) Reinforcement learning (server-side); (2) QoE model with perceptual quality; (3) QoE degradation in specific networks |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kang, J.; Chung, K. HTTP Adaptive Streaming Framework with Online Reinforcement Learning. Appl. Sci. 2022, 12, 7423. https://doi.org/10.3390/app12157423


Chicago/Turabian Style: Kang, Jeongho, and Kwangsue Chung. 2022. "HTTP Adaptive Streaming Framework with Online Reinforcement Learning" Applied Sciences 12, no. 15: 7423. https://doi.org/10.3390/app12157423

APA Style: Kang, J., & Chung, K. (2022). HTTP Adaptive Streaming Framework with Online Reinforcement Learning. Applied Sciences, 12(15), 7423. https://doi.org/10.3390/app12157423