Sex Recognition through ECG Signals aiming toward Smartphone Authentication

Abstract

1. Introduction

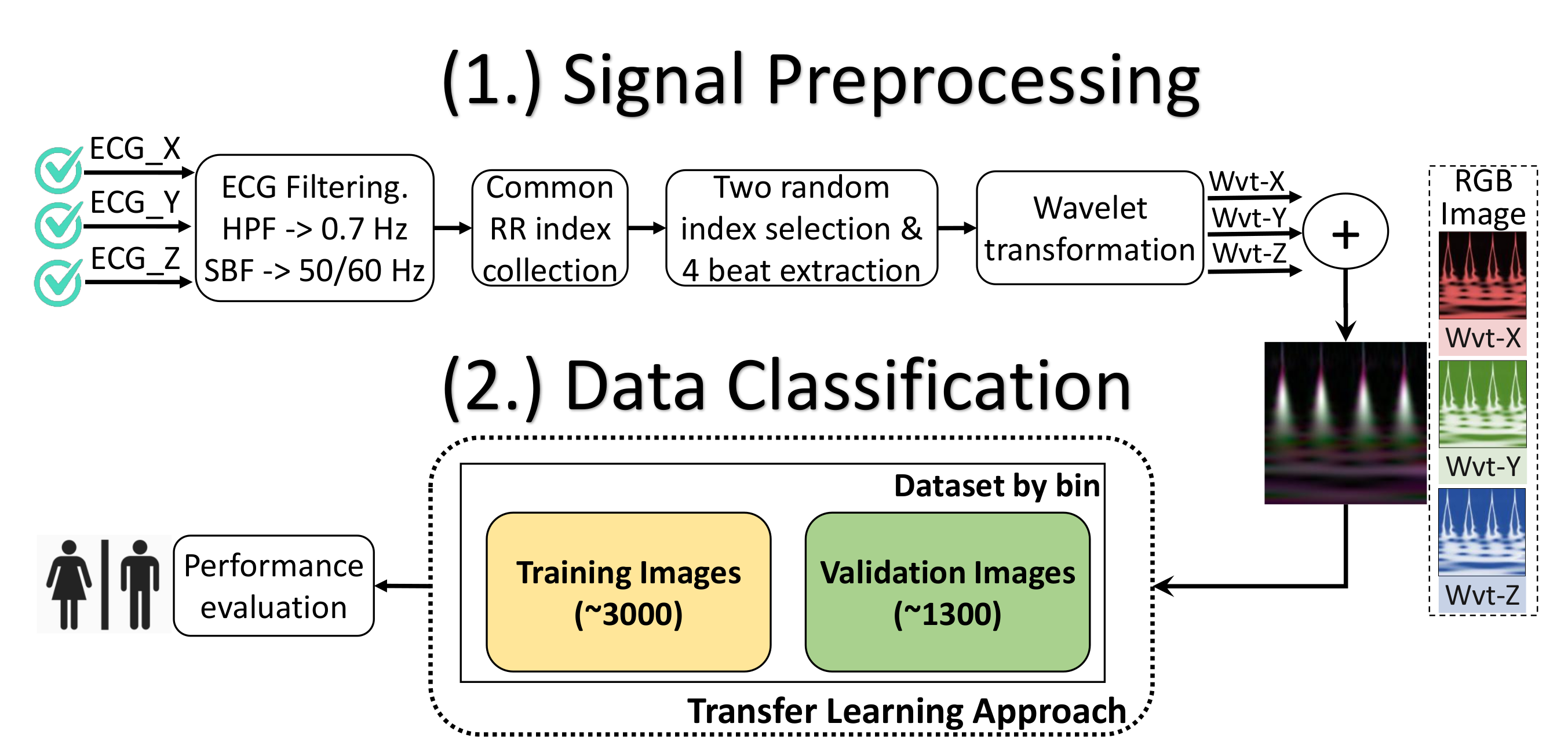

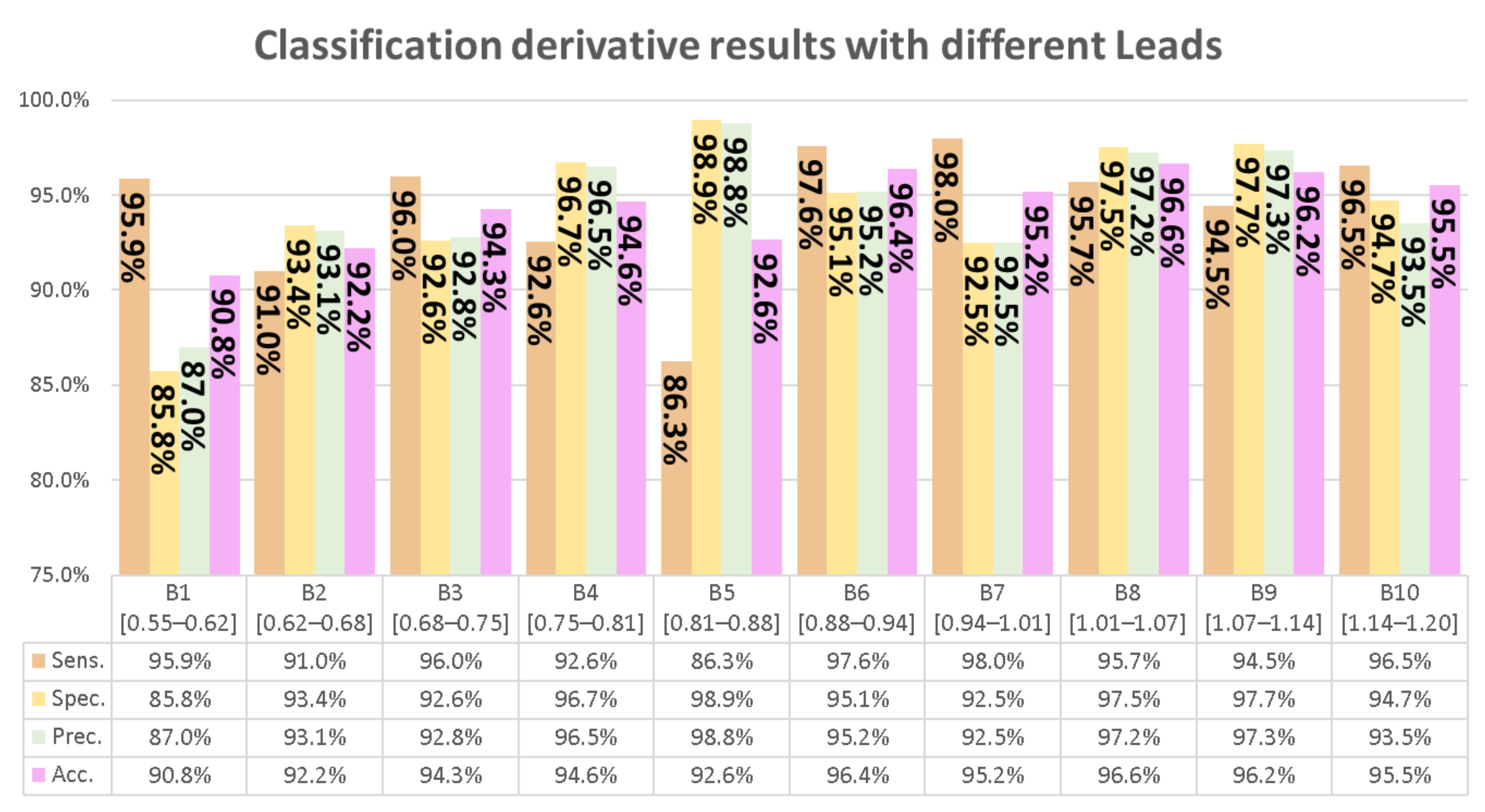

- We implement a novel system that uses heart rate as the feature for classifier selection. It reaches an overall accuracy of 94.4% ± 2.0%, with peaks above 96% in some bins, while collecting only four heartbeats per sample.

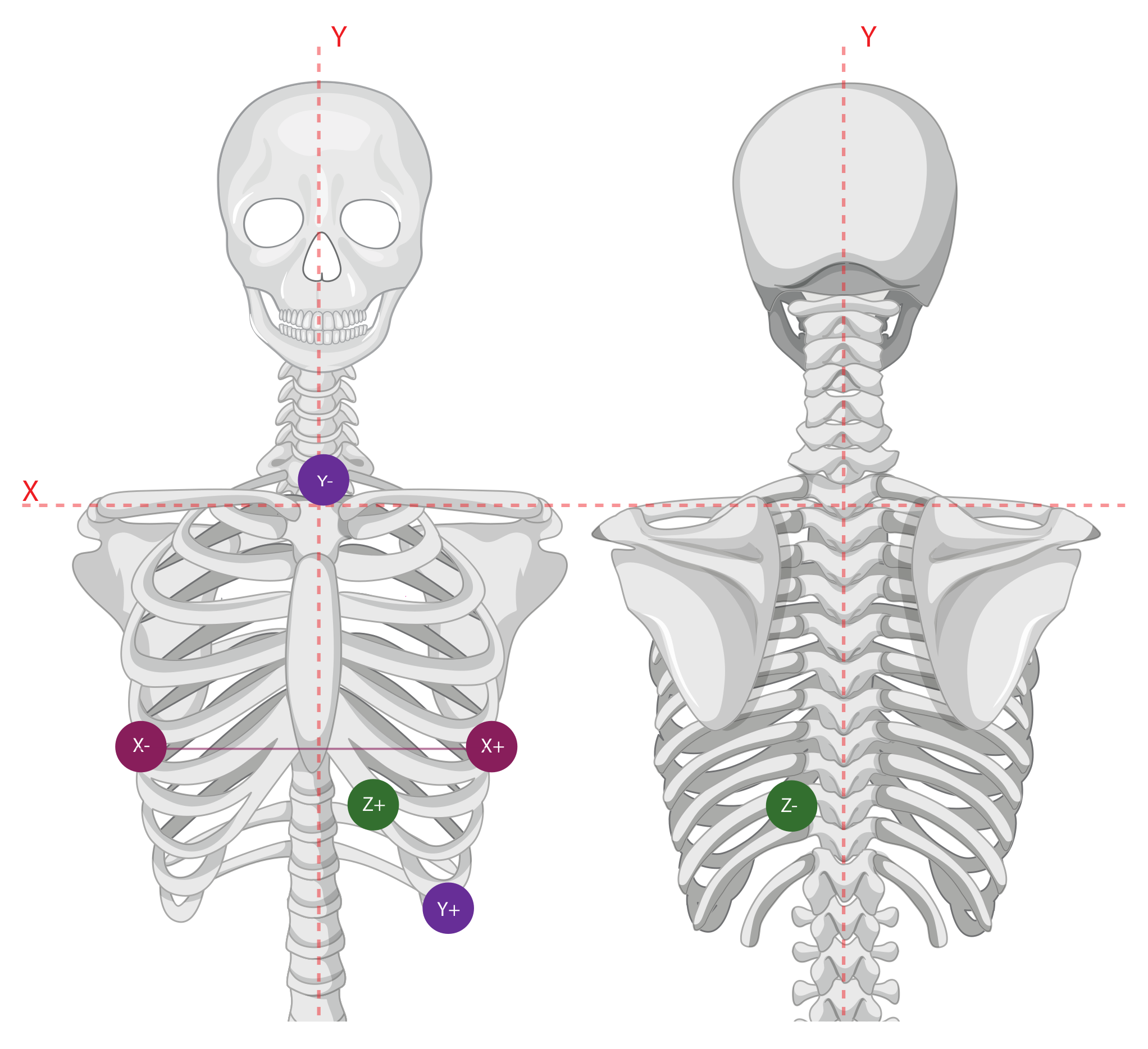

- Our approach does not use the 12-lead configuration common in related studies. Instead, we use a pseudo-orthogonal lead configuration with three signals.

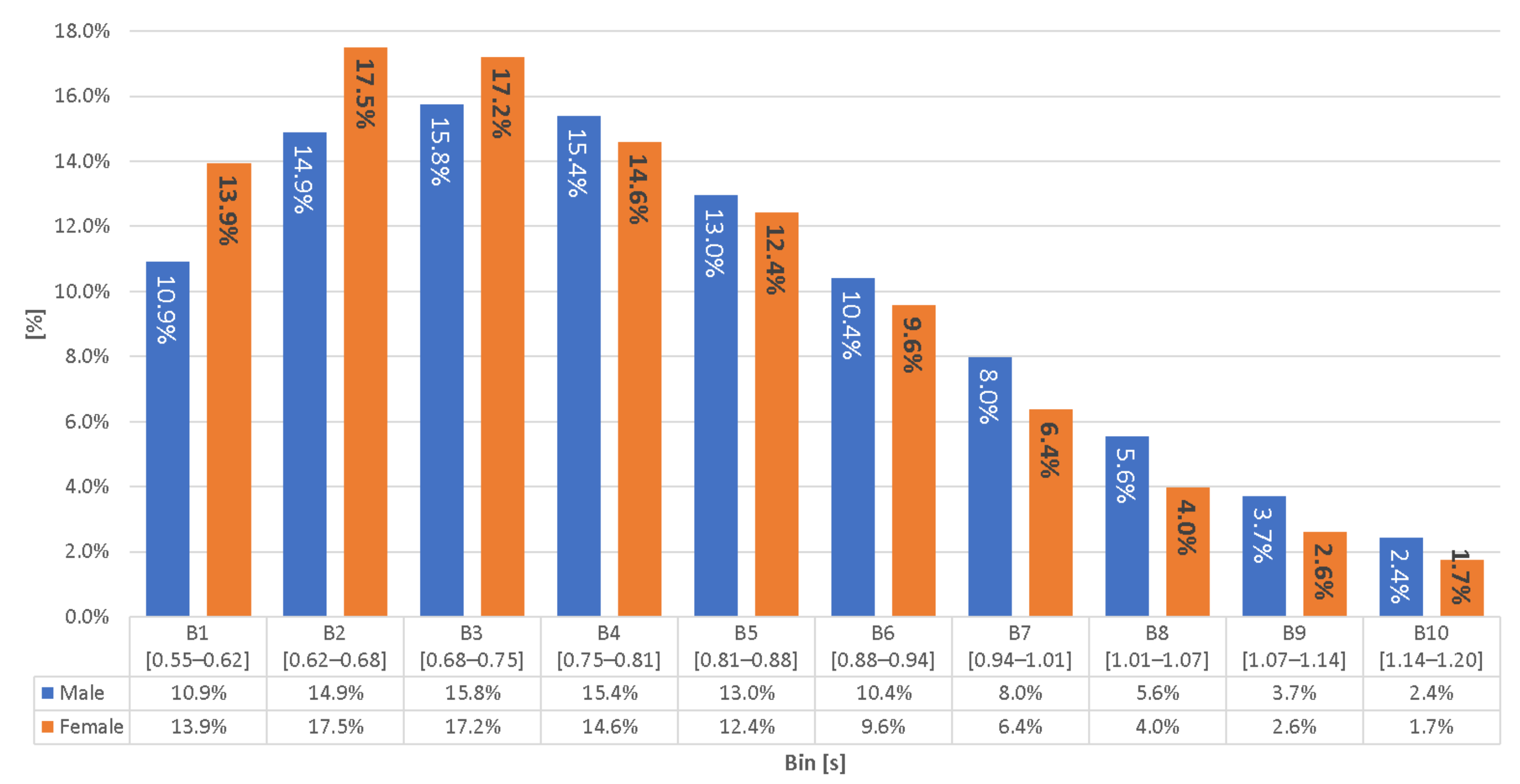

- We propose an RR-interval discrimination step to feed the Deep Convolutional Neural Network (CNN) classifier. It uses fewer training samples than related work while achieving comparable results.

- To exploit all signal waveforms, we propose an RGB strategy that represents the three orthogonal lead signals in a single sample through a wavelet transform.

- Our experiment classifies the person’s sex without controlling the person’s posture.
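
The heart-rate-based classifier selection in the first contribution can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: R-peak indices are assumed to be already detected, a sample is taken as a window of four consecutive R peaks, and the mean heart rate is computed from the RR intervals inside that window. The names `HR_BIN_EDGES`, `segment_four_beats`, `mean_heart_rate`, `hr_bin` and `select_classifier` are hypothetical, and the bin edges are placeholders because the actual B1 to B10 limits are not given in this section. The 200 Hz rate matches the Fs listed for our recordings in the comparison table.

```python
from bisect import bisect_right
from typing import Callable, List, Sequence

FS_HZ = 200           # sampling rate listed for our recordings (Fs column)
BEATS_PER_SAMPLE = 4  # four heartbeats (R peaks) per sample

# Hypothetical heart-rate bin edges in bpm: 9 edges define 10 bins (B1..B10).
# Placeholders only; these are NOT the limits used in the paper.
HR_BIN_EDGES = [60, 65, 70, 75, 80, 85, 90, 95, 100]


def segment_four_beats(r_peaks: Sequence[int]) -> List[List[int]]:
    """Split R-peak sample indices into non-overlapping windows of four peaks."""
    last_start = len(r_peaks) - BEATS_PER_SAMPLE
    return [list(r_peaks[i:i + BEATS_PER_SAMPLE])
            for i in range(0, last_start + 1, BEATS_PER_SAMPLE)]


def mean_heart_rate(window: Sequence[int], fs: int = FS_HZ) -> float:
    """Mean heart rate in bpm from the RR intervals inside one window."""
    # Consecutive RR intervals telescope, so their mean is (last - first) / (n - 1).
    mean_rr_samples = (window[-1] - window[0]) / (len(window) - 1)
    return 60.0 * fs / mean_rr_samples


def hr_bin(hr_bpm: float) -> int:
    """0-based bin index: 0 -> B1, ..., 9 -> B10."""
    return bisect_right(HR_BIN_EDGES, hr_bpm)


def select_classifier(window: Sequence[int],
                      classifiers: Sequence[Callable]) -> Callable:
    """Pick the per-bin classifier using the window's mean heart rate."""
    return classifiers[hr_bin(mean_heart_rate(window))]


if __name__ == "__main__":
    r_peaks = [0, 192, 384, 576, 700, 840, 980, 1120]  # synthetic R-peak indices
    # Dummy per-bin "classifiers" that only report which bin model was chosen.
    models = [lambda x, b=b: f"B{b + 1} model" for b in range(len(HR_BIN_EDGES) + 1)]
    for w in segment_four_beats(r_peaks):
        print(round(mean_heart_rate(w), 1), select_classifier(w, models)(w))
```

With these synthetic peaks the script prints `62.5 B2 model` and `85.7 B7 model`. Keeping one trained model per heart-rate bin means each classifier only sees beats of similar duration, which is one plausible reason per-bin accuracy varies across B1 to B10.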

2. Related Work

3. Materials and Methods

3.1. Database Features

3.2. Methodology

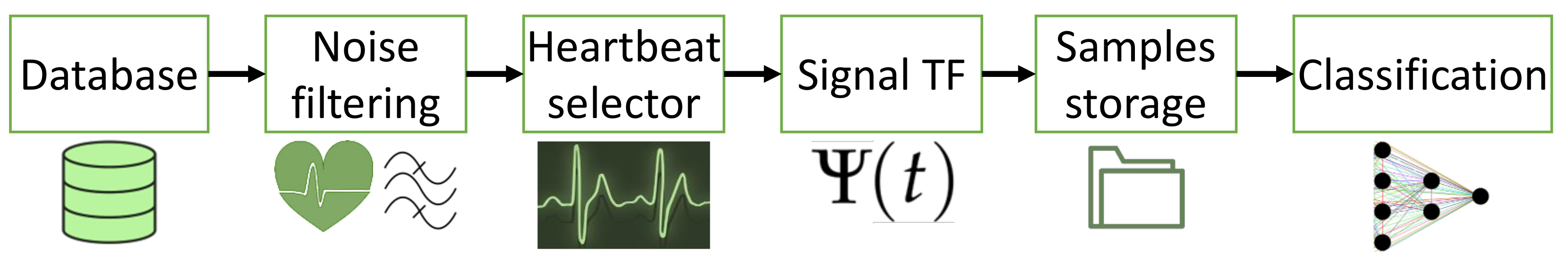
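
One way to realize the RGB strategy from the contributions list is to compute a wavelet scalogram for each pseudo-orthogonal lead and place the X, Y and Z scalograms in the red, green and blue channels of a single image. The sketch below assumes a complex Morlet wavelet (`w0 = 5`), integer scales, and per-lead min-max scaling to 8 bits; the wavelet family, scales, normalization and the helper names (`morlet`, `scalogram`, `to_uint8`, `leads_to_rgb`) are illustrative assumptions, not the paper's settings. It uses only NumPy.

```python
import numpy as np


def morlet(n_points: int, scale: float, w0: float = 5.0) -> np.ndarray:
    """Complex Morlet wavelet sampled on n_points, dilated by `scale` (assumed family)."""
    t = (np.arange(n_points) - (n_points - 1) / 2) / scale
    return np.exp(1j * w0 * t) * np.exp(-0.5 * t ** 2) / np.sqrt(scale)


def scalogram(signal: np.ndarray, scales: np.ndarray) -> np.ndarray:
    """Magnitude of the CWT of one lead: rows are scales, columns are time samples."""
    rows = np.empty((len(scales), len(signal)))
    for i, s in enumerate(scales):
        # Odd kernel covering about +/-5 scale units, never longer than the signal.
        n = min(len(signal), int(10 * s) | 1)
        rows[i] = np.abs(np.convolve(signal, morlet(n, s), mode="same"))
    return rows


def to_uint8(m: np.ndarray) -> np.ndarray:
    """Min-max scale one scalogram to 0..255."""
    lo, hi = m.min(), m.max()
    if hi == lo:
        return np.zeros(m.shape, dtype=np.uint8)
    return np.round(255 * (m - lo) / (hi - lo)).astype(np.uint8)


def leads_to_rgb(x, y, z, scales) -> np.ndarray:
    """Stack X, Y, Z scalograms as R, G, B channels: shape (n_scales, n_samples, 3)."""
    return np.stack([to_uint8(scalogram(np.asarray(lead, float), scales))
                     for lead in (x, y, z)], axis=-1)


if __name__ == "__main__":
    fs = 200                    # Hz
    t = np.arange(4 * fs) / fs  # 4 s of synthetic data
    x = np.sin(2 * np.pi * 1.2 * t)
    y = np.cos(2 * np.pi * 1.2 * t)
    z = np.sin(2 * np.pi * 2.4 * t)
    img = leads_to_rgb(x, y, z, np.arange(1, 33))
    print(img.shape, img.dtype)  # (32, 800, 3) uint8
```

Scaling each lead separately lets every channel use the full 0 to 255 range; a shared scale across leads would instead preserve their relative amplitudes. Resizing the image to the CNN input size, if needed, would be a separate step.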

4. Architecture

5. Results

Toward ECG Sex Recognition for Smartphone Authentication?

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADFECGDB | Abdominal and direct fetal ECG database |
| AF | Atrial fibrillation |
| ECG | Electrocardiogram |
| CNN | Convolutional Neural Network |
| DNN | Deep Neural Network |
| TF | Transformation |
| HPF | High Pass Filter |
| LBBB | Left Bundle Branch Block |
| KNN | k-nearest neighbors algorithm |
| LSTM | Long short-term memory |
| LVH | Left ventricular hypertrophy |
| MFF | Multimodal Feature Fusion |
| MIF | Multimodal Image Fusion |
| NSR | Normal Sinus Rhythm |
| PAC | Premature atrial contractions |
| PCLR | Patient Contrastive Learning of Representations |
| PTB-XL | Physikalisch-Technische Bundesanstalt |
| PVC | Premature ventricular contractions |
| RBBB | Right Bundle Branch Block |
| SBP | Stop Band Filter |
| SCP-ECG | Standard communications protocol for computer-assisted electrocardiography |
| SimCLR | Simple Framework for Contrastive Learning |
| SPAR | Symmetric Projection Attractor Reconstruction |
| SVEB | Supraventricular ectopic beat |
| SVTA | Supraventricular Tachyarrhythmia |
| VEB | Ventricular ectopic beat |

References

- Hsu, Y.L.; Wang, J.S.; Chiang, W.C.; Hung, C.H. Automatic ECG-Based Emotion Recognition in Music Listening. IEEE Trans. Affect. Comput. 2020, 11, 85–99.

- Attia, Z.I.; Friedman, P.A.; Noseworthy, P.A.; Lopez-Jimenez, F.; Ladewig, D.J.; Satam, G.; Pellikka, P.A.; Munger, T.M.; Asirvatham, S.J.; Scott, C.G.; et al. Age and Sex Estimation Using Artificial Intelligence From Standard 12-Lead ECGs. Circ. Arrhythmia Electrophysiol. 2019, 12, e007284.

- Cabra, J.L.; Mendez, D.; Trujillo, L.C. Wide Machine Learning Algorithms Evaluation Applied to ECG Authentication and Gender Recognition. In Proceedings of the 2nd International Conference on Biometric Engineering and Applications (ICBEA), Amsterdam, The Netherlands, 16–18 May 2018; pp. 58–64.

- Siegersma, K.; Van De Leur, R.; Onland-Moret, N.C.; Van Es, R.; Den Ruijter, H.M. Misclassification of sex by deep neural networks reveals novel ECG characteristics that explain a higher risk of mortality in women and in men. Eur. Heart J. 2021, 42, 3162.

- Nguyen, D.T.; Kim, K.W.; Hong, H.G.; Koo, J.H.; Kim, M.C.; Park, K.R. Gender Recognition from Human-Body Images Using Visible-Light and Thermal Camera Videos Based on a Convolutional Neural Network for Image Feature Extraction. Sensors 2017, 17, 637.

- Ghildiyal, A.; Sharma, S.; Verma, I.; Marhatta, U. Age and Gender Predictions using Artificial Intelligence Algorithm. In Proceedings of the 3rd International Conference on Intelligent Sustainable Systems (ICISS’20), Thoothukudi, India, 3–5 December 2020; pp. 371–375.

- Lee, M.; Lee, J.H.; Kim, D.H. Gender recognition using optimal gait feature based on recursive feature elimination in normal walking. Expert Syst. Appl. 2022, 189, 116040.

- Alkhawaldeh, R.S. DGR: Gender Recognition of Human Speech Using One-Dimensional Conventional Neural Network. Sci. Program. 2019, 2019, 7213717.

- Ikae, C.; Savoy, J. Gender identification on Twitter. J. Assoc. Inf. Sci. Technol. 2021, 73, 58–69.

- Tsimperidis, I.; Yucel, C.; Katos, V. Age and Gender as Cyber Attribution Features in Keystroke Dynamic-Based User Classification Processes. Electronics 2021, 10, 835.

- Bayer. Las Enfermedades Cardiovasculares son la Primera Causa de Muerte en Colombia y el Mundo [Cardiovascular Diseases Are the Leading Cause of Death in Colombia and the World]. 2020. Available online: https://www.bayer.com/es/co/las-enfermedades-cardiovasculares-son-la-primera-causa-de-muerte-en-colombia-y-el-mundo (accessed on 28 April 2022).

- Centers for Disease Control and Prevention. Heart Disease Facts. 2022. Available online: https://www.cdc.gov/heartdisease/facts.htm (accessed on 28 April 2022).

- Publishing, H.H. The Heart Attack Gender Gap. 2016. Available online: https://www.health.harvard.edu/heart-health/the-heart-attack-gender-gap (accessed on 28 April 2022).

- Health, H. Throughout Life, Heart Attacks are Twice as Common in Men than Women–Harvard Health. 2016. Available online: https://www.health.harvard.edu/heart-health/throughout-life-heart-attacks-are-twice-as-common-in-men-than-women (accessed on 28 April 2022).

- Albrektsen, G.; Heuch, I.; Løchen, M.L.; Thelle, D.S.; Wilsgaard, T.; Njølstad, I.; Bønaa, K.H. Lifelong Gender Gap in Risk of Incident Myocardial Infarction: The Tromsø Study. JAMA Intern. Med. 2016, 176, 1673–1679.

- Cho, L. Women or Men—Who Has a Higher Risk of Heart Attack? 2020. Available online: https://health.clevelandclinic.org/women-men-higher-risk-heart-attack/ (accessed on 28 April 2022).

- Mieszczanska, H.; Pietrasik, G.; Piotrowicz, K.; McNitt, S.; Moss, A.J.; Zareba, W. Gender Related Differences in Electrocardiographic Parameters and Their Association with Cardiac Events in Patients After Myocardial Infarction. Am. J. Cardiol. 2008, 101, 20–24.

- Nakagawa, M.; Ooie, T.; Ou, B.; Ichinose, M.; Yonemochi, H.; Saikawa, T. Gender differences in the dynamics of terminal T wave intervals. Pacing Clin. Electrophysiol. 2004, 27, 769–774.

- Ergin, S.; Uysal, A.K.; Gunal, E.S.; Gunal, S.; Gulmezoglu, M.B. ECG based biometric authentication using ensemble of features. In Proceedings of the 9th Iberian Conference on Information Systems and Technologies (CISTI’14), Barcelona, Spain, 18–21 June 2014; pp. 1274–1279.

- Pinto, J.R.; Cardoso, J.S.; Lourenço, A. Evolution, Current Challenges, and Future Possibilities in ECG Biometrics. IEEE Access 2018, 6, 34746–34776.

- Jain, A.K.; Nandakumar, K.; Lu, X.; Park, U. Integrating Faces, Fingerprints, and Soft Biometric Traits for User Recognition. In Proceedings of the 2nd Biometric Authentication ECCV International Workshop (BioAW’04), Prague, Czech Republic, 15 May 2004; Springer: Berlin/Heidelberg, Germany, 2004; pp. 259–269.

- Ceci, M.; Japkowicz, N.; Liu, J.; Papadopoulos, G.A.; Raś, Z.W. Foundations of Intelligent Systems. In Proceedings of the 24th International Symposium, ISMIS 2018, Limassol, Cyprus, 29–31 October 2018; Springer: Cham, Switzerland, 2018; pp. 120–129.

- Roy, A.; Memon, N.; Ross, A. MasterPrint: Exploring the Vulnerability of Partial Fingerprint-Based Authentication Systems. IEEE Trans. Inf. Forensics Secur. 2017, 12, 2013–2025.

- Winder, D. Hackers Claim ‘Any’ Smartphone Fingerprint Lock Can be Broken in 20 Minutes. Available online: https://www.forbes.com/sites/daveywinder/2019/11/02/smartphone-security-alert-as-hackers-claim-any-fingerprint-lock-broken-in-20-minutes/?sh=2ca0734f6853 (accessed on 15 June 2022).

- Mott, N. Hacking Fingerprints Is Actually Pretty Easy—And Cheap. 2021. Available online: https://www.pcmag.com/news/hacking-fingerprints-is-actually-pretty-easy-and-cheap (accessed on 15 June 2022).

- Winder, D. Apple’s iPhone FaceID Hacked In Less Than 120 Seconds. 2019. Available online: https://www.forbes.com/sites/daveywinder/2019/08/10/apples-iphone-faceid-hacked-in-less-than-120-seconds/?sh=349627621bc3 (accessed on 15 June 2022).

- Pato, J.; Millett, L. Biometric Recognition: Challenges and Opportunities, 1st ed.; The National Academies Press: Washington, DC, USA, 2010; p. 165.

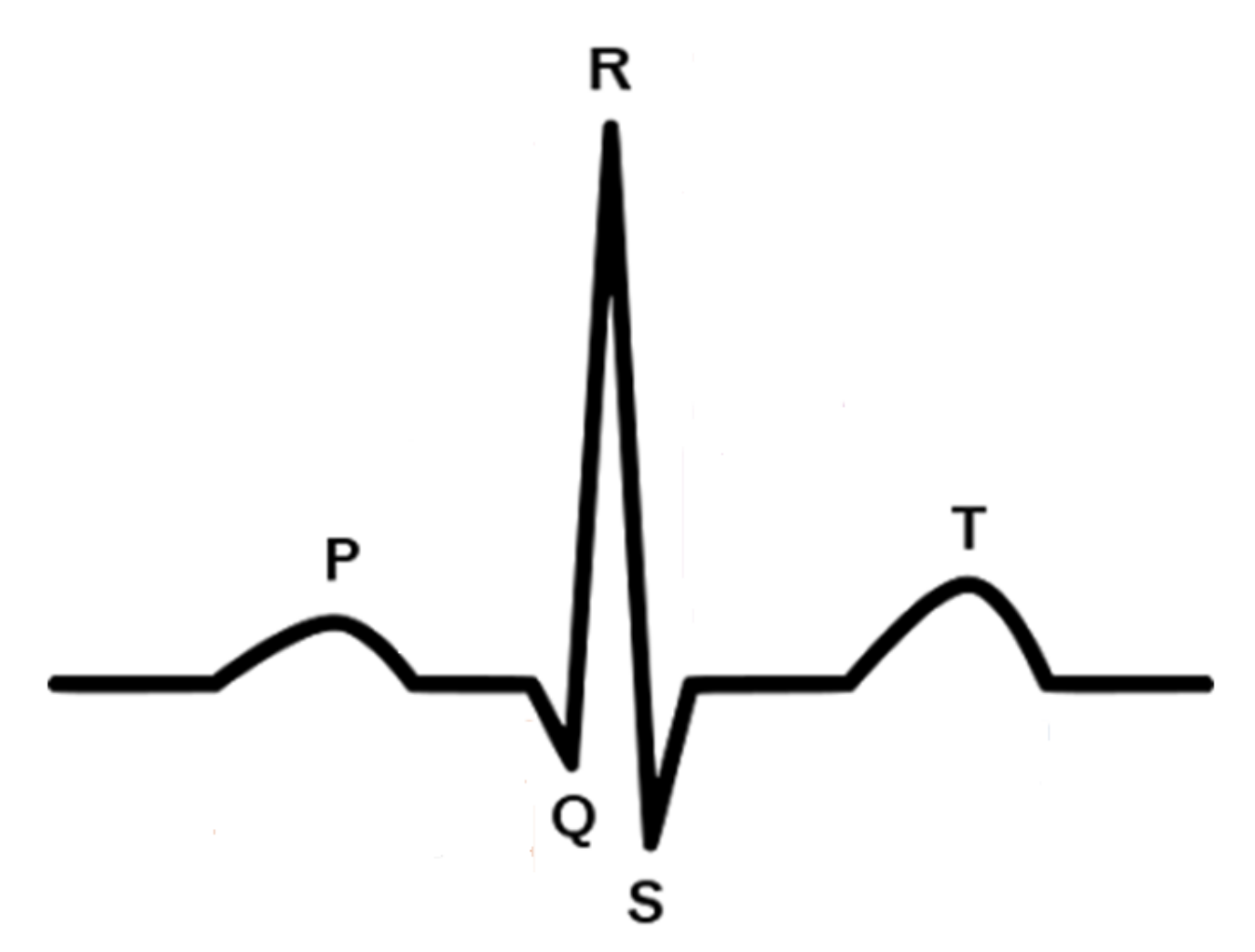

- Atkielski, A. Schematic Diagram of Normal Sinus Rhythm for a Human Heart as Seen on ECG. 2009. Available online: https://commons.wikimedia.org/wiki/File:SinusRhythmLabels-es.svg (accessed on 25 June 2022).

- İzci, E.; Değirmenci, M.; Özdemir, M.A.; Akan, A. ECG Arrhythmia Detection with Deep Learning. In Proceedings of the 28th IEEE Signal Processing and Communications Applications (SIU), Gaziantep, Turkey, 5–7 October 2020; pp. 1541–1544.

- Essa, E.; Xie, X. An Ensemble of Deep Learning-Based Multi-Model for ECG Heartbeats Arrhythmia Classification. IEEE Access 2021, 9, 103452–103464.

- Ahmad, Z.; Tabassum, A.; Guan, L.; Khan, N.M. ECG Heartbeat Classification Using Multimodal Fusion. IEEE Access 2021, 9, 100615–100626.

- Niu, J.; Tang, Y.; Sun, Z.; Zhang, W. Inter-Patient ECG Classification With Symbolic Representations and Multi-Perspective Convolutional Neural Networks. IEEE J. Biomed. Health Inform. 2020, 24, 1321–1332.

- Ebrahimi, Z.; Loni, M.; Daneshtalab, M.; Gharehbaghi, A. A review on deep learning methods for ECG arrhythmia classification. Expert Syst. Appl. X 2020, 7, 100033.

- Bahrami, M.; Forouzanfar, M. Sleep Apnea Detection From Single-Lead ECG: A Comprehensive Analysis of Machine Learning and Deep Learning Algorithms. IEEE Trans. Instrum. Meas. 2022, 71, 1–11.

- Lee, K.J.; Lee, B. End-to-End Deep Learning Architecture for Separating Maternal and Fetal ECGs Using W-Net. IEEE Access 2022, 10, 39782–39788.

- Li, H.; Deng, J.; Feng, P.; Pu, C.; Arachchige, D.D.K.; Cheng, Q. Short-Term Nacelle Orientation Forecasting Using Bilinear Transformation and ICEEMDAN Framework. Front. Energy Res. 2021, 9, 780928.

- Li, H.; Deng, J.; Yuan, S.; Feng, P.; Arachchige, D.D.K. Monitoring and Identifying Wind Turbine Generator Bearing Faults Using Deep Belief Network and EWMA Control Charts. Front. Energy Res. 2021, 9, 799039.

- Gajare, A.; Dey, H. MATLAB-based ECG R-peak Detection and Signal Classification using Deep Learning Approach. In Proceedings of the 3rd IEEE Bombay Section Signature Conference (IBSSC), Gwalior, India, 18–20 November 2021; pp. 157–162.

- Cai, W.; Hu, D. QRS Complex Detection Using Novel Deep Learning Neural Networks. IEEE Access 2020, 8, 97082–97089.

- Pokaprakarn, T.; Kitzmiller, R.R.; Moorman, R.; Lake, D.E.; Krishnamurthy, A.K.; Kosorok, M.R. Sequence to Sequence ECG Cardiac Rhythm Classification Using Convolutional Recurrent Neural Networks. IEEE J. Biomed. Health Inform. 2022, 26, 572–580.

- Abdeldayem, S.S.; Bourlai, T. A Novel Approach for ECG-Based Human Identification Using Spectral Correlation and Deep Learning. IEEE Trans. Biom. Behav. Identity Sci. 2020, 2, 1–14.

- Uwaechia, A.N.; Ramli, D.A. A Comprehensive Survey on ECG Signals as New Biometric Modality for Human Authentication: Recent Advances and Future Challenges. IEEE Access 2021, 9, 2169–3536.

- Strodthoff, N.; Wagner, P.; Schaeffter, T.; Samek, W. Deep Learning for ECG Analysis: Benchmarks and Insights from PTB-XL. IEEE J. Biomed. Health Inform. 2021, 25, 1519–1528.

- Siegersma, K.R.; van de Leur, R.R.; Onland-Moret, N.C.; Leon, D.A.; Diez-Benavente, E.; Rozendaal, L.; Bots, M.L.; Coronel, R.; Appelman, Y.; Hofstra, L.; et al. Deep neural networks reveal novel sex-specific electrocardiographic features relevant for mortality risk. Eur. Heart J.-Digit. Health 2022, 3, 1–10.

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A Simple Framework for Contrastive Learning of Visual Representations. In Proceedings of the 37th International Conference on Machine Learning (ICML’20), Vienna, Austria, 12–18 July 2020; pp. 1597–1607.

- Diamant, N.; Reinertsen, E.; Song, S.; Aguirre, A.D.; Stultz, C.M.; Batra, P. Patient contrastive learning: A performant, expressive, and practical approach to electrocardiogram modeling. PLoS Comput. Biol. 2022, 18, e1009862.

- Lyle, J.V.; Nandi, M.; Aston, P.J. Symmetric Projection Attractor Reconstruction: Sex Differences in the ECG. Front. Cardiovasc. Med. 2021, 8, 1–17.

- brgfx. Telemetric and ECG Holter Warehouse Project. Available online: http://thew-project.org/Database/E-HOL-03-0202-003.html (accessed on 18 June 2022).

- brgfx. Vector de Cuerpo Humano Creado por Brgfx - www.freepik.es [Human Body Vector Created by Brgfx]. 2021. Available online: https://www.freepik.es/vectores/cuerpo-humano (accessed on 18 June 2022).

- Padsalgikar, A. Plastics in Medical Devices for Cardiovascular Applications. A Volume in Plastics Design Library, 1st ed.; Elsevier: Kidlington, UK, 2017; p. 115.

- Arteaga-Falconi, J.S.; Osman, H.A.; Saddik, A.E. ECG Authentication for Mobile Devices. IEEE Trans. Instrum. Meas. 2016, 65, 591–600.

- Wiggers, K. AliveCor Raises $65 Million to Detect Heart Problems with AI. 2020. Available online: https://venturebeat.com/2020/11/16/alivecor-raises-65-million-to-detect-heart-problems-with-ai/ (accessed on 28 October 2021).

- CardioID. Every Heart Has a Beat, But the Way We Use it is Unique! 2016. Available online: https://www.cardio-id.com/ (accessed on 28 October 2021).

- Nymi. Nymi Workplace Wearables. 2021. Available online: https://www.nymi.com/nymi-band (accessed on 28 October 2021).

| Ref. | Acc. [%] | Lead | Sample Length [s] | Tech. | Fs [Hz] | Position | Male–Female [%] | Tr. Samples | Ts. Samples | Year |
|---|---|---|---|---|---|---|---|---|---|---|
| [2] | 90.4 | 12 | 10 | CNN | 500 | Supine | 52–48 | ∼500 k | ∼275 k | 2019 |
| [4] | 92.2 | 12 | N/A | DNN | N/A | N/A | 50.5–49.5 | ∼131 k | ∼68.5 k | 2021 |
| [47] | DB1: 91.3; DB2: 86.3 | 12 | 10 | SPAR & KNN | DB1: 1000; DB2: 500 | Resting | DB1: 60–40; DB2: 46–54 | DB1: N = 0.104 k; DB2: N = 8.9 k (total) | N/A | 2021 |
| [43] | 84.9 | 12 | 10 | CNN (xresnet1d101) | 100 | Resting | 52–48 | N = ∼22 k; 10-fold: 8 folds | 2 folds | 2021 |
| [44] | int. valid.: 89; ext. valid.: 81 & 82 | 12 | 10 | DNN | 250 or 500 | Resting | Tr.: N/A; int. valid.: N/A; ext. valid.: 42.6–57.4 | ∼132 k | int. valid.: 68.5 k; ext. valid.: 7.7 k | 2022 |
| [46] | F-score: 87 | 12 | 10 | PCLR & contrastive learning | 250 or 500 | Resting | N/A | N = ∼3229 k; 90% | 10% | 2022 |
| Own | 94.4 | 6 |  | CNN | 200 | Random | 51–49 | ∼3 k | ∼1.3 k | 2022 |

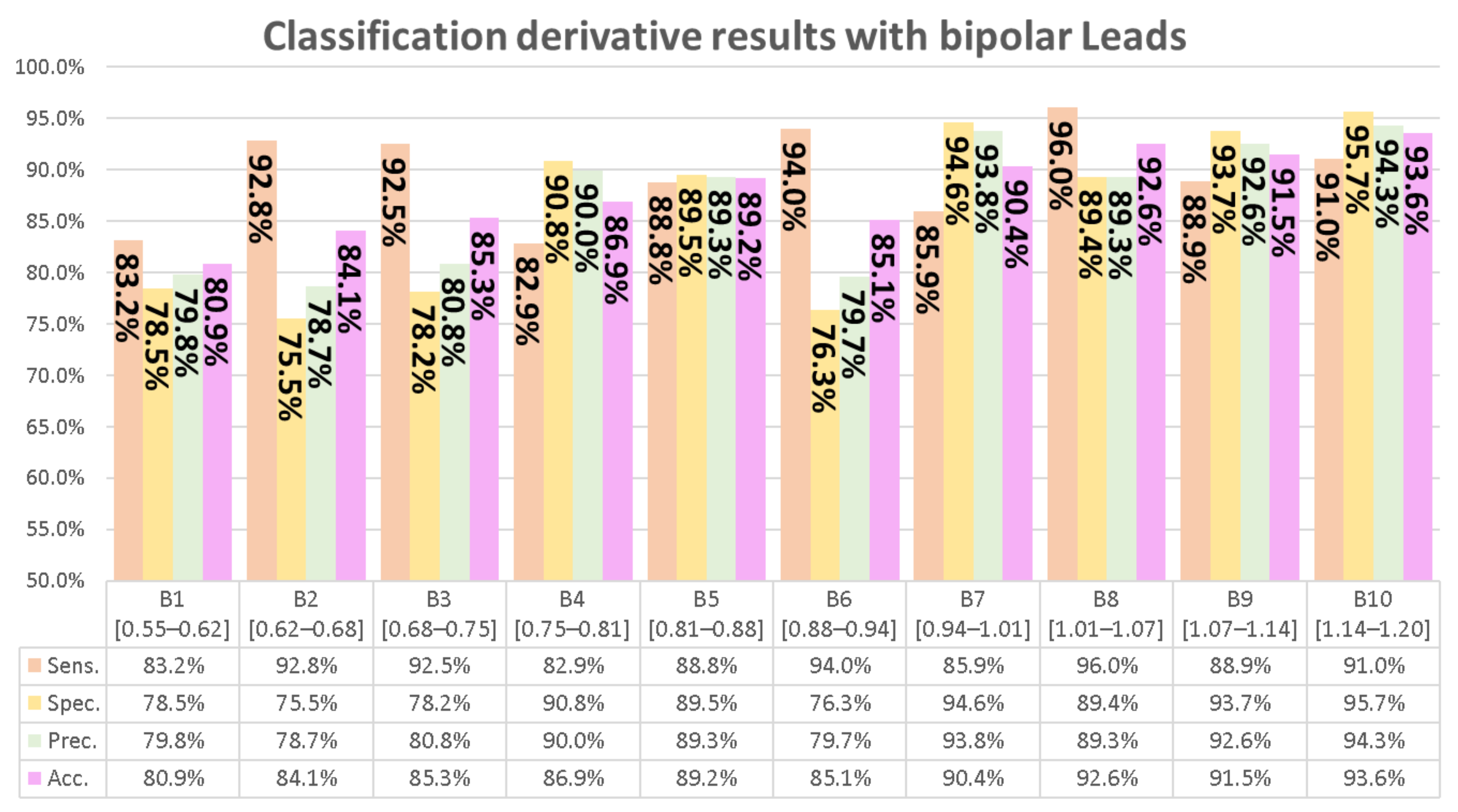

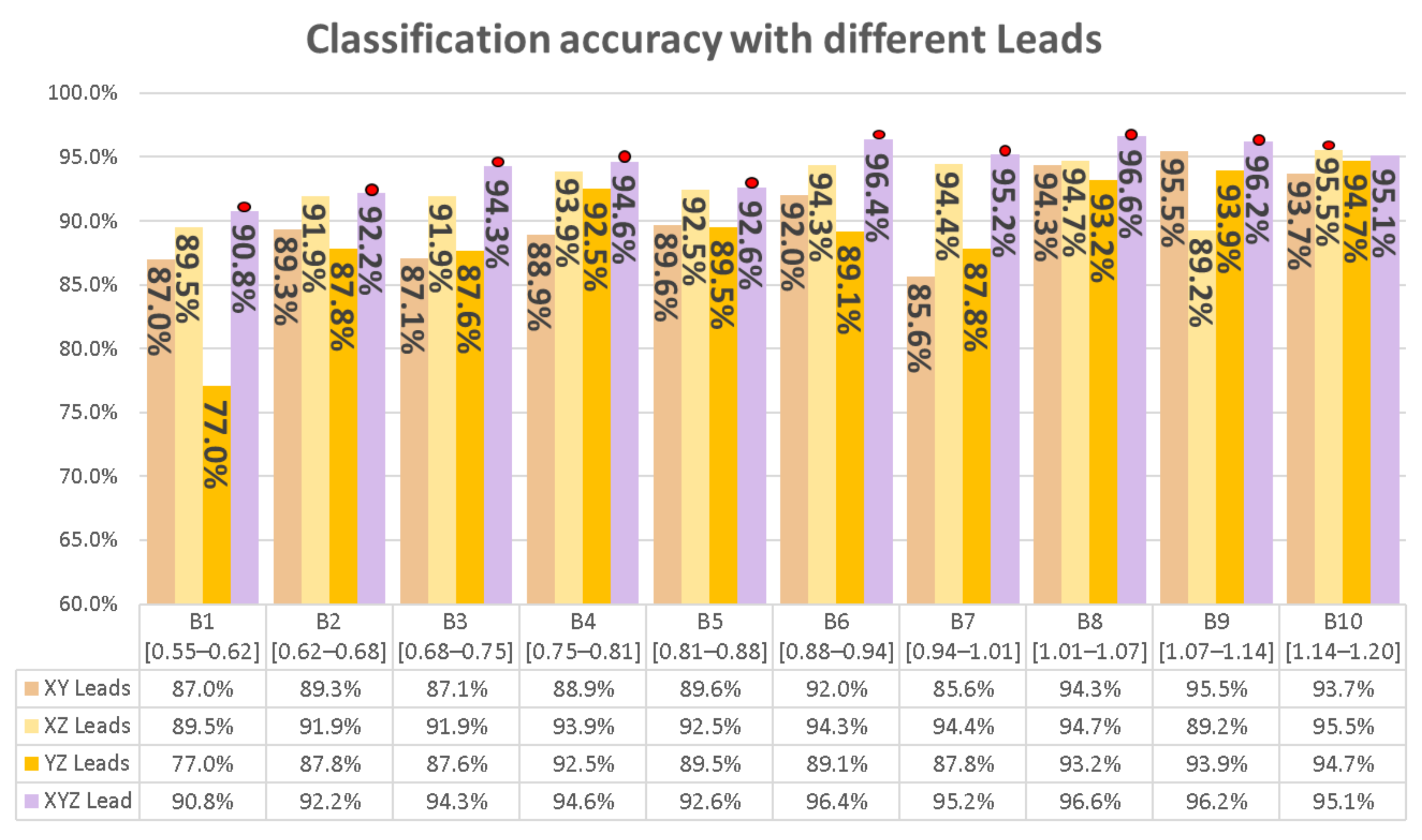

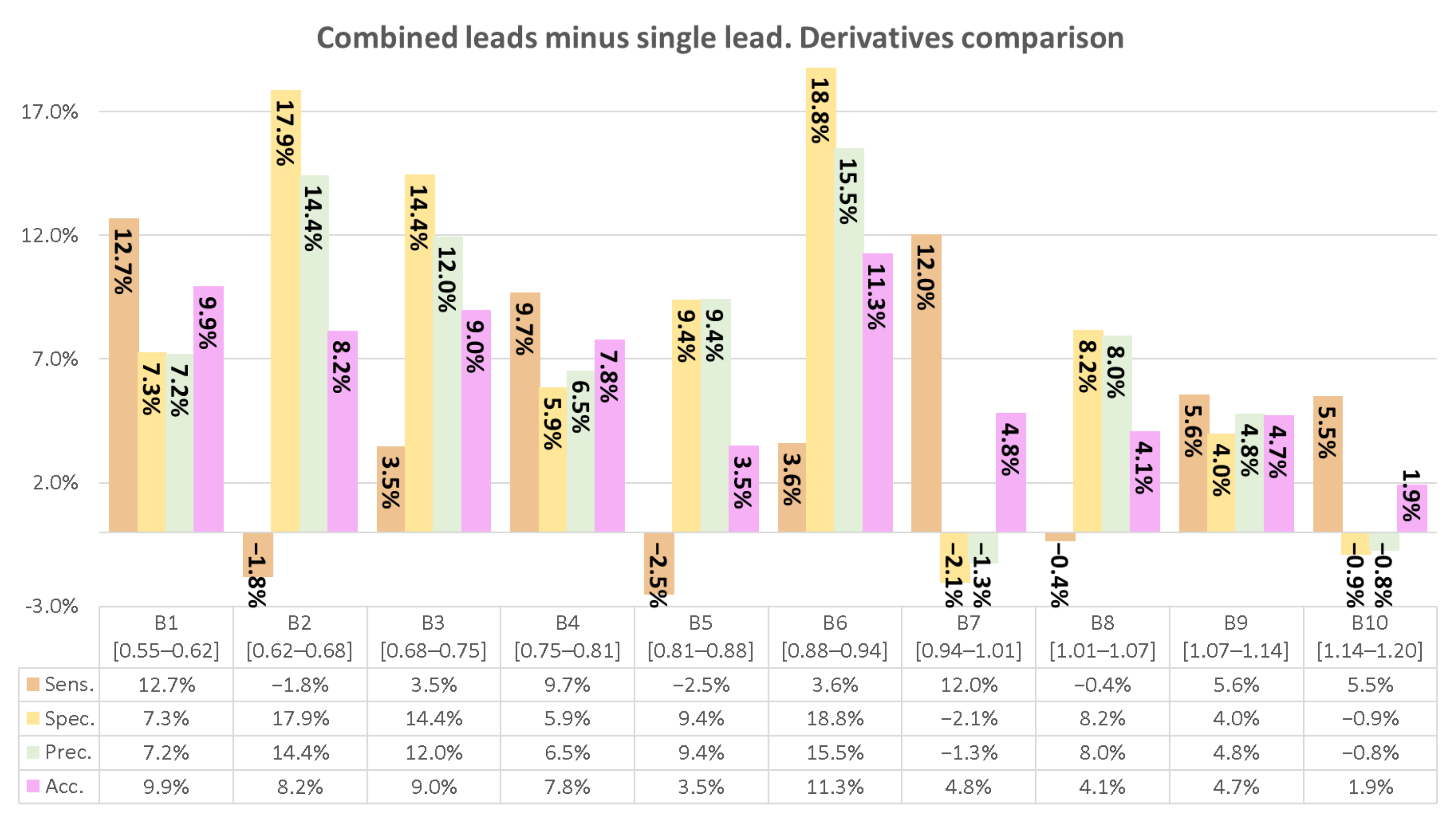

| CMD | Lead | B1 | B2 | B3 | B4 | B5 | B6 | B7 | B8 | B9 | B10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Acc. | X | 0.809 | 0.841 | 0.853 | 0.864 | 0.892 | 0.813 | 0.904 | 0.927 | 0.915 | 0.936 |
| | Y | 0.743 | 0.802 | 0.811 | 0.811 | 0.852 | 0.851 | 0.890 | 0.892 | 0.883 | 0.924 |
| | Z | 0.803 | 0.826 | 0.830 | 0.869 | 0.889 | 0.821 | 0.866 | 0.926 | 0.910 | 0.924 |
| | XY | 0.870 | 0.893 | 0.871 | 0.889 | 0.896 | 0.920 | 0.856 | 0.943 | 0.955 | 0.937 |
| | YZ | 0.771 | 0.878 | 0.876 | 0.925 | 0.895 | 0.891 | 0.878 | 0.932 | 0.939 | 0.947 |
| | XZ | 0.777 | 0.864 | 0.856 | 0.875 | 0.878 | 0.903 | 0.924 | 0.916 | 0.890 | 0.894 |
| | XYZ | 0.908 | 0.922 | 0.943 | 0.947 | 0.926 | 0.964 | 0.952 | 0.966 | 0.962 | 0.951 |
| AUC | X | 0.892 | 0.937 | 0.940 | 0.943 | 0.957 | 0.955 | 0.970 | 0.972 | 0.966 | 0.978 |
| | Y | 0.848 | 0.877 | 0.887 | 0.896 | 0.925 | 0.936 | 0.960 | 0.957 | 0.950 | 0.976 |
| | Z | 0.914 | 0.921 | 0.935 | 0.939 | 0.947 | 0.953 | 0.966 | 0.984 | 0.977 | 0.978 |
| | XY | 0.939 | 0.959 | 0.966 | 0.973 | 0.977 | 0.975 | 0.944 | 0.985 | 0.993 | 0.988 |
| | YZ | 0.917 | 0.948 | 0.963 | 0.975 | 0.976 | 0.983 | 0.986 | 0.983 | 0.984 | 0.988 |
| | XZ | 0.923 | 0.939 | 0.939 | 0.951 | 0.960 | 0.956 | 0.978 | 0.964 | 0.955 | 0.950 |
| | XYZ | 0.973 | 0.981 | 0.986 | 0.988 | 0.989 | 0.992 | 0.991 | 0.995 | 0.994 | 0.990 |
| Prec. | X | 0.798 | 0.787 | 0.808 | 0.855 | 0.893 | 0.737 | 0.938 | 0.929 | 0.926 | 0.943 |
| | Y | 0.822 | 0.798 | 0.788 | 0.785 | 0.862 | 0.797 | 0.859 | 0.860 | 0.866 | 0.955 |
| | Z | 0.756 | 0.769 | 0.767 | 0.900 | 0.861 | 0.742 | 0.944 | 0.893 | 0.946 | 0.881 |
| | XY | 0.842 | 0.930 | 0.949 | 0.933 | 0.962 | 0.902 | 0.960 | 0.936 | 0.971 | 0.958 |
| | YZ | 0.705 | 0.873 | 0.816 | 0.896 | 0.842 | 0.828 | 0.805 | 0.934 | 0.949 | 0.957 |
| | XZ | 0.912 | 0.887 | 0.857 | 0.822 | 0.839 | 0.938 | 0.914 | 0.887 | 0.907 | 0.871 |
| | XYZ | 0.870 | 0.931 | 0.928 | 0.965 | 0.988 | 0.952 | 0.925 | 0.973 | 0.974 | 0.933 |
| Sens. | X | 0.832 | 0.928 | 0.925 | 0.876 | 0.888 | 0.968 | 0.859 | 0.917 | 0.889 | 0.910 |
| | Y | 0.626 | 0.803 | 0.850 | 0.855 | 0.836 | 0.940 | 0.928 | 0.925 | 0.887 | 0.869 |
| | Z | 0.895 | 0.925 | 0.946 | 0.829 | 0.926 | 0.979 | 0.771 | 0.960 | 0.857 | 0.958 |
| | XY | 0.913 | 0.848 | 0.782 | 0.836 | 0.824 | 0.943 | 0.736 | 0.948 | 0.930 | 0.896 |
| | YZ | 0.937 | 0.882 | 0.970 | 0.961 | 0.972 | 0.986 | 0.990 | 0.922 | 0.919 | 0.921 |
| | XZ | 0.619 | 0.831 | 0.852 | 0.955 | 0.935 | 0.863 | 0.932 | 0.947 | 0.854 | 0.894 |
| | XYZ | 0.959 | 0.910 | 0.960 | 0.926 | 0.863 | 0.976 | 0.980 | 0.957 | 0.945 | 0.958 |
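
The overall accuracy quoted in the introduction can be checked against the XYZ accuracy row of this table. The short script below (standard library only) averages the ten bin accuracies and lists the bins above 96%. It assumes the reported spread is the sample standard deviation (n - 1 denominator) across the ten bins; that is our reading of how the figure was computed, not a statement from the paper.

```python
from statistics import mean, stdev

# Accuracy of the XYZ configuration in bins B1..B10, copied from the table above.
xyz_acc = [0.908, 0.922, 0.943, 0.947, 0.926, 0.964, 0.952, 0.966, 0.962, 0.951]

avg = mean(xyz_acc)
sd = stdev(xyz_acc)  # sample standard deviation (n - 1 denominator)
peak_bins = [f"B{i + 1}" for i, acc in enumerate(xyz_acc) if acc > 0.96]

print(f"{100 * avg:.1f}% ± {100 * sd:.1f}%, bins above 96%: {peak_bins}")
```

This prints `94.4% ± 2.0%, bins above 96%: ['B6', 'B8', 'B9']`. Using `statistics.pstdev` (population standard deviation) instead would give 1.9%, so the ±2.0% figure is consistent with the sample form.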

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cabra Lopez, J.-L.; Parra, C.; Gomez, L.; Trujillo, L. Sex Recognition through ECG Signals aiming toward Smartphone Authentication. Appl. Sci. 2022, 12, 6573. https://doi.org/10.3390/app12136573
