Abstract

In the era of data deluge, Big Data offers numerous opportunities but also poses significant challenges to conventional data processing and analysis methods. MapReduce has become a prominent parallel and distributed programming model for efficiently handling such massive datasets. One of the most fundamental and widely used operations in MapReduce is the join. Joins become considerably more complex and expensive on skewed data, in which some join keys appear far more frequently than others. The reduce tasks that process these keys finish later than the others, so much of the benefit of parallel computation is lost. Several studies have addressed skew joins, but none has presented an adequate and systematic comparison in the Spark environment. Existing work offers only experimental tests, so mathematical models on which skew-join algorithms can be compared are still lacking. This study is, therefore, designed to provide the theoretical and practical basis for evaluating skew-join strategies for large-scale datasets with MapReduce and Spark, both analytically with cost models and practically with experiments. The objectives of the study are, first, to present the implementation of prominent skew-join algorithms in Spark; second, to evaluate the algorithms by using cost models and experiments; and third, to show the advantages and disadvantages of each one and to recommend strategies for the better use of skew joins in Spark.

1. Introduction

Big Data is a term that has been mentioned in many recent studies. People generate terabytes of data every hour, which makes it challenging to store and handle data in traditional ways. Therefore, Google designed the MapReduce processing model [1] for parallel and distributed processing of large-scale datasets. One of the major limitations of the MapReduce model is its handling of data skew [2]. A typical case is data skew in join operations, which combine two or more relations or datasets that share common attributes into a new relation [3]. The join operation is used in many applications, such as the construction of search engines and other data-intensive applications [4]. Take the example of joining two datasets R and L, each of which has a join key column. In MapReduce, Mappers read input data from the two datasets and distribute intermediate data to Reducers based on the join keys. Data with the same join key are sent to the same Reducer to create the join results. Assume that dataset R is skewed such that the join key with a value of 1 occurs many times. In this case, the Reducer that handles this key receives more data than the others and finishes later. If there is a large amount of skewed data, overload and congestion will appear at that computing node and make the whole computational system inefficient. This is a real challenge for join operations in MapReduce.

Several algorithms have been proposed for skewed join operations in MapReduce, such as hash-based partition [5], range-based partition [5], multi-dimensional range partition (MDRP) [6], MRFA-Join [7], and randomized partition [8]. Chen et al. [9] introduced LIBRA, a sampling and partitioning algorithm for handling high-frequency join keys in reduce tasks. Bruno et al. [10] discussed challenges in large-scale distributed join operations and introduced novel execution strategies that robustly handle data skew. They used different partitioning schemes and join graph topologies for high-frequency key values. Zhou et al. [11] proposed an efficient key-select algorithm to find skew key tuples and defined a lightweight tuple migration strategy to solve data-skew problems. Their FastJoin system improved the performance in terms of latency and throughput. Zhang and Ross [12] presented an index structure to reorder data so that popular items were concentrated in the cache hierarchy. They analyzed the cache behavior and efficiently processed database queries in the presence of skew. Meena et al. [13] presented an approach for handling data skew in a character-based string-similarity join in MapReduce and evaluated it against three other skew-handling algorithms. Jenifer and Bharathi [14] gave a brief survey on the solutions for data skew in MapReduce. Nawale and Deshpande [15] studied various methodologies and techniques used to mitigate data skew and partition skew. Myung et al. [6] proposed multi-dimensional range partitioning to overcome the limitations of traditional algorithms. Although data skew in MapReduce has thus been studied, no study has presented an adequate and systematic comparison of data-skew handling for join operations in Spark.

In this paper, we present and compare three algorithms for partitioning data, i.e., hash-based partition, range-based partition, and multi-dimensional range partition. This comparison helps users to choose suitable solutions for processing join operations on large-scale datasets. We build cost models for the algorithms, which provide a scientific basis for the comparison, and we conduct experiments on a Spark cluster with different skew ratios. The rest of this paper is organized as follows. Section 2 presents the background related to the large-scale data processing model and platform. Section 3 describes in detail the solutions for data skew in join operations on large datasets. Section 4 presents an evaluation with the cost models and the experiments conducted on the Spark cluster. Section 5 concludes the paper.

2. Background

2.1. Join Operations in the MapReduce Model

MapReduce [1] is a parallel and distributed large-scale data processing model. A MapReduce program can run on clusters of up to thousands of computing nodes. Introduced in 2004, MapReduce has been widely used in the field of Big Data, since it allows users to focus on the design of data processing operations without having to manage the parallel or distributed nature of the model [3]. MapReduce is implemented through two basic functions, Map and Reduce, which are also two consecutive stages in data processing. The Map function receives input data and converts them into intermediate data (key–value pairs), and the Reduce function accepts the intermediate data and performs calculations on them.

Hadoop (http://hadoop.apache.org (accessed on 8 May 2022)) has become one of the most popular Big Data processing platforms of the last decade [16]. Hadoop is an open-source implementation of the MapReduce model. Hadoop breaks data down into many small chunks and runs an application on the chunks stored at the computing nodes of the system. Each time it performs a task, Hadoop has to reload the data from the disk, which is costly and is considered a “penalty” [17]. As a result, Hadoop handles join operations poorly because of their high I/O and communication costs [2]. In recent years, the outstanding features of Apache Spark [17] have helped it to become the next generation of Big Data processing platforms [6].

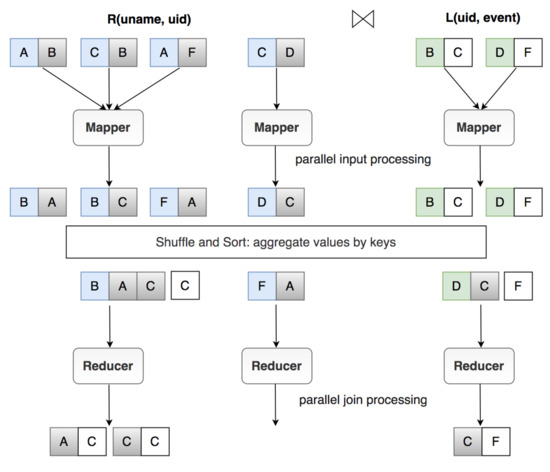

The join operation is a basic but data-intensive operation that consumes much processing time [3]. This section discusses the process and gives a concrete example of a join operation using the MapReduce model. Consider two datasets, R (user data) and L (log data). To list each username with the corresponding events that the user accessed, we join R and L on their common join key. In large-scale data applications, such as social networks, this query can require joining trillions of records. Therefore, the parallel and distributed processing model in MapReduce is a good solution to this problem. As shown in Figure 1, the JobTracker of MapReduce creates three Mappers to process three partitions of the input data simultaneously. The first Mapper computes the first partition, consisting of three records of dataset R. The second Mapper processes one record of R, and the third Mapper processes two records of L. The Mappers transform the records of R and L into intermediate data keyed by the join key.

Figure 1. Join operation in MapReduce.

The data after transformation are called intermediate data, and they are sent to the Reducers. Intermediate data with the same join key are sent to the same Reducer. There, the Reduce function is called once for each key with the list of values for that key. Finally, each record of R is matched with the records of L that have the same join key to produce the join results. Figure 1 shows that there are three join results from the Reducers.

- Reducer 1 for join key B:

- Reducer 2 for join key F:

- Reducer 3 for join key D:
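The Map, shuffle, and Reduce steps described above can be illustrated with a minimal sketch in plain Python (not the Hadoop or Spark API). The records below are hypothetical, since the rows of Figure 1 are not reproduced in the text; only the join keys B, F, and D are taken from it, and the function names are illustrative.

```python
from collections import defaultdict

# Hypothetical records; the actual rows of Figure 1 are not reproduced here.
users = [("B", "alice"), ("F", "bob"), ("D", "carol")]      # R: (join key, username)
logs = [("B", "login"), ("D", "search"), ("F", "logout")]   # L: (join key, event)


def map_phase(records, tag):
    """Emit (join_key, (tag, value)) pairs, as a Mapper would."""
    return [(key, (tag, value)) for key, value in records]


def shuffle(pairs):
    """Group the intermediate pairs by join key (one group per Reduce call)."""
    groups = defaultdict(list)
    for key, tagged in pairs:
        groups[key].append(tagged)
    return groups


def reduce_phase(key, tagged_values):
    """Pair every R value with every L value that shares the join key."""
    r_values = [v for tag, v in tagged_values if tag == "R"]
    l_values = [v for tag, v in tagged_values if tag == "L"]
    return [(key, r, l) for r in r_values for l in l_values]


intermediate = map_phase(users, "R") + map_phase(logs, "L")
join_results = sorted(
    row
    for key, values in shuffle(intermediate).items()
    for row in reduce_phase(key, values)
)
```

Because every pair with the same key lands in the same group, a key that occurs very often produces one very large group, which is the source of the skew problem discussed later.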

2.2. Apache Spark

Apache Spark (http://spark.apache.org (accessed on 8 May 2022)) is an open-source cluster computing framework that was originally developed in 2009 by AMPLab at the University of California, Berkeley. Spark has continued to be developed by the Apache Software Foundation since 2013. Given a task that is too large to be handled on a server, Spark allows us to divide this task into more manageable tasks. Then, Spark will run these small tasks in memory on a cluster of computing nodes. Apache Spark has three salient features.

- Speed: Spark is reported to be up to 100 times faster than Hadoop when running in memory and up to 10 times faster when running on disk [17].

- Support for multiple programming languages: Spark provides built-in APIs in the Java, Scala, and Python languages.

- Advanced analysis: Spark not only supports MapReduce models, but also supports SQL queries, streaming data, machine learning, and graph algorithms.

2.2.1. Resilient Distributed Datasets

Resilient distributed datasets (RDDs) are the underlying data structure and an outstanding feature of Spark. They are a type of distributed collection that can be temporarily stored in RAM with a high fault tolerance and the capability of parallel computation. Each RDD is divided into multiple logical partitions, which can be computed on different nodes of a cluster. In this research, we use the Scala language because Apache Spark is built mainly in Scala and therefore offers the best speed and support for it. RDDs basically support two main types of operations.

- Transformation: Through a transformation, a new RDD is created from an existing RDD. All transformations are “lazy” operations, meaning that they are not performed immediately; Spark only records the steps to be taken as pending operations. This process can be understood as a job-planning process. The recorded operations are performed only when an Action is called.

- Action: An Action performs all transformations related to it. By default, each RDD will be recalculated if an Action calls it. However, RDDs can also be cached in RAM or on a disk using the persist command for later use. The Action will return the results to the driver after performing a series of computations on the RDDs.

Because each RDD is recalculated by default, reusing an RDD many times would be time-consuming. Therefore, Spark supports a mechanism called persist (or cache). When we ask Spark to persist an RDD, the nodes that hold its partitions store them in memory, so each partition is computed only once. If a persisted partition is lost, Spark recalculates the missing parts when necessary.

2.2.2. Examples of Spark Functions

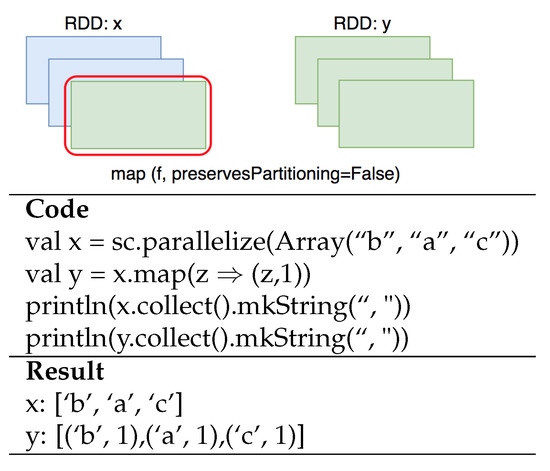

Map (a transformation) returns a new RDD by passing each input element through a function. An example of a map function is presented in Figure 2, in which each element of x is mapped together with the value 1 to create a new RDD y.

Figure 2. Map function.

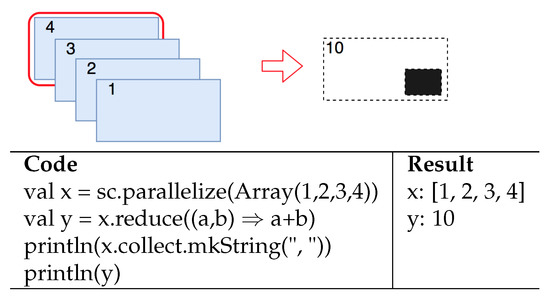

Reduction (an action) aggregates all elements of the original RDD by applying a user function in pairs with the elements and returns the results to the driver. An example of a reduction function is presented in Figure 3, in which the RDD x is reduced to the sum of all its elements.

Figure 3. Reduction function.
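The difference between a lazy transformation and an action can be illustrated with a small analogy in plain Python (this is not the Spark API). The input values are hypothetical, and pairing each element with 1 is an assumption about what Figure 2 shows.

```python
from functools import reduce

x = [1, 2, 3]  # hypothetical input collection

# "Transformation": Python's map is lazy, so nothing is computed on this line.
y = map(lambda element: (element, 1), x)

# Consuming the iterator forces the computation, much as an Action would.
pairs = list(y)

# "Action": reduce combines the elements in pairs and returns a single value.
total = reduce(lambda a, b: a + b, x)
```

In Spark, the corresponding calls on an RDD would be its map and reduce operations; the sketch only mirrors their evaluation order.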

3. Data-Skew-Handling Algorithms

3.1. Skew Join

Data skew is a problem in which the data distribution is uneven or asymmetric. In a database, some attribute values often appear with a much greater frequency than others [18], and this is called data skew. In distributed parallel computation systems, join operations on such attributes take longer than they do on evenly distributed data. A join operation consists of several steps, including data loading, projection and selection based on the query, partitioning of the data, and joining of the datasets. Skew at any of these steps limits the efficiency of join operations in parallel computation.

There are several types of data skew based on the stage in which the skew problem occurs, namely tuple placement skew, selectivity skew, redistribution skew, and join product skew [19]. The initial placement of the datasets in the partitions may cause tuple placement skew. Selectivity skew occurs when the selectivity of selection predicates differs between nodes. Redistribution skew is caused by an uneven amount of data on the partitions after the redistribution scheme is applied. Join product skew is the result of differing join selectivity on each node. In this paper, we do not consider tuple placement skew, since MapReduce creates split files regardless of the size of the original file. Selectivity skew is also ignored because it does not have any significant impact on the performance, and we do not use projections and selections in our program. Join product skew cannot be avoided, since it is created after joining two datasets together. Redistribution skew is the most important type of data skew, as it affects the distribution of the workload between nodes, and it is caused by an improper redistribution mechanism. Hence, we cover redistribution skew in our research.

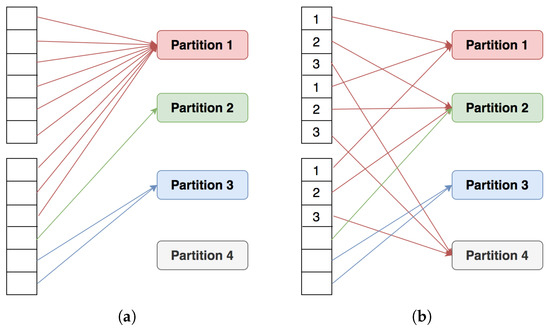

Join computations based on the MapReduce model go through two phases, Map and Reduce. Mappers read the data and convert them into intermediate data in the form of key–value pairs, with the key being the join key and the value being the row containing the join key. After generating key–value pairs, Mappers shuffle the intermediate data to Reducers according to the rule that pairs with the same key go to the same Reducer. Data skew may appear at this stage if the input data contain one or more frequent join keys (Figure 4a). This inevitably leads to data imbalances between computing nodes. Data containing these frequent join keys are processed by only one or a handful of computing nodes, while the rest of the computing nodes are idle. Thus, skew occurs when one or more computing nodes have to process a much larger number of records than other computing nodes in a system [20]. As a result, some nodes encounter bottlenecks or delays, and the remaining nodes sit idle and waste resources. In parallel computing, the execution time of a join operation is determined by the longest-running task. Therefore, if the data are skewed, much of the benefit of parallel computation is lost [2].

Figure 4. Data partitioning example. (a) Skew partitioning. (b) Not skewed.

A solution is to identify the skewed data and distribute them differently so as to avoid or reduce the skew effect before the computation begins. With skewed data, heavily weighted partitions appear. Spark assigns one task per partition, and each worker processes one task at a time; thus, heavy partitions degrade the performance. The main idea is therefore to avoid heavily weighted partitions. For example, we can add more information to the skewed join key so that its records can be distributed into different partitions (Figure 4b).

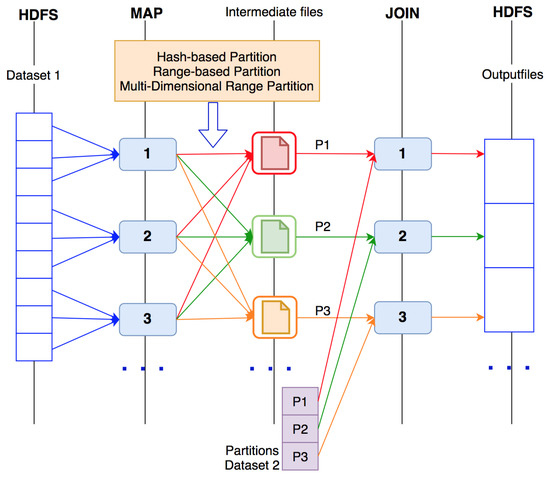

In Spark, data are divided into partitions on many different nodes in a cluster. Thus, it is difficult to avoid data shuffling between nodes with join operations in Spark. This shuffling process will slow down the processing speed and program performance. Therefore, reasonable partitioning of data before the join computation can improve the performance and reduce the effect of shuffling of data. Figure 5 presents the data flow of the three algorithms.

Figure 5. Data flow of the three join algorithms.

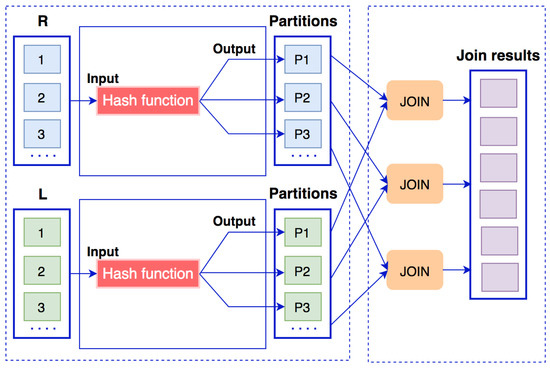

3.2. Hash-Based Partition

In hash-based partition (HBP) [5], after the input data are mapped, the partitioning step redistributes the workload to the nodes based on the join keys. Because all records with the same skewed join key go to the same computing node, hash-based partition is not a good solution for skewed data [21].

Suppose that we have two datasets R and L, where the attribute k is the join key. The data flow of the hash-based partition algorithm is presented in Figure 6. The join operation between the two datasets R and L using the hash-based partition algorithm goes through two phases.

Figure 6. Data flow of a hash-based partition algorithm.

- Partitioning phase: The k attribute of each record of dataset R is passed to a hash function given by the formula R.k mod p, where p is the number of partitions. The result of the hash function is the number of the partition to which the record is sent. The same is done with dataset L.

- Join phase: Each partition receives the list of data with the same join key k. Here, we can use any of the join operations, such as join, rightOuterJoin, or leftOuterJoin.

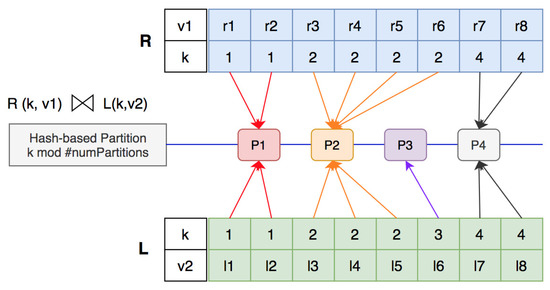

Consider the two example datasets R and L shown in Figure 7. With hash-based partition, one partition can receive far more records than the others. In this example, one partition receives four records from dataset R and three records from dataset L with the same join key. Thus, this partition produces 12 join results, which is a larger number than in the other partitions. In contrast, another partition receives only one record and does not generate any join results. The execution time of a join computation depends on the completion of the last Reducer. If the number of identical join keys is too large, then, even if the number of partitions is large, the data with the same join key will gather on only a few partitions. This results in some partitions being too big and others having no data, which easily causes out-of-memory errors or slows down the processing speed. Therefore, processing skewed data is a very important issue for join operations.

Figure 7. Join computation with hash-based partition.
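The imbalance caused by hash-based partition can be checked with a minimal sketch in plain Python. The join keys and the number of partitions below are hypothetical and are not the contents of Figure 7; only the counts of the frequent key (four records in R and three in L) follow the example in the text.

```python
from collections import Counter

NUM_PARTITIONS = 4  # hypothetical number of partitions


def hash_partition(join_key, num_partitions):
    """Partition number of a record: join key modulo the number of partitions."""
    return join_key % num_partitions


def join_output_per_partition(r_keys, l_keys, num_partitions):
    """Number of join results that each partition has to produce."""
    r_count, l_count = Counter(r_keys), Counter(l_keys)
    load = [0] * num_partitions
    for key, n_r in r_count.items():
        # Each R record with this key matches every L record with the same key.
        load[hash_partition(key, num_partitions)] += n_r * l_count[key]
    return load


# Hypothetical join keys: key 2 is frequent in both datasets.
r_keys = [1, 2, 2, 2, 2, 3, 4, 5]
l_keys = [2, 2, 2, 3, 4, 6]
load = join_output_per_partition(r_keys, l_keys, NUM_PARTITIONS)
```

With these inputs, the partition that receives key 2 produces 12 of the 14 join results, while one partition produces none, which mirrors the behavior described above.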

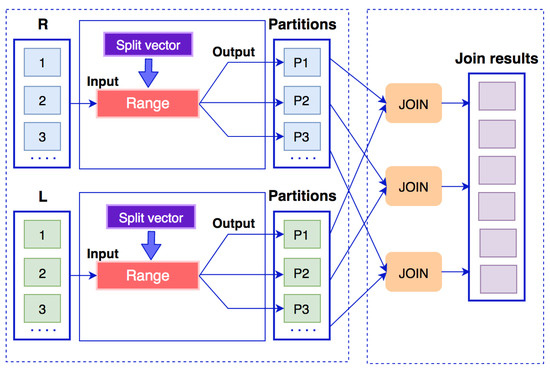

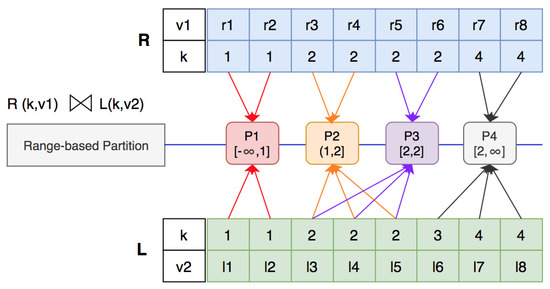

3.3. Range-Based Partition

Range-based partition (RBP) has been proposed as a solution for skewed data instead of hash-based partition [5]. The idea of the algorithm is to divide the mapped data into sub-ranges. Each computing node then processes a sub-range instead of individual join key values, which reduces the burden on the computing nodes when data are skewed. The data flow of the range-based partition algorithm is presented in Figure 8. A split vector is created to define the boundaries of the sub-ranges. For n partitions, the elements of the split vector cut the join-key domain into n consecutive sub-ranges: data with join keys below the first boundary go to partition 1, data with join keys between the first and second boundaries go to partition 2, and so on, until data with join keys above the last boundary go to partition n. However, the partitions may still be skewed after this partitioning step.

Figure 8. Data flow of a range-based partition algorithm.

In this method, the first step is to create a split vector from the two input datasets by counting the occurrences of the join keys in each dataset. The dataset that has the most skewed join keys is selected to build the split vector. We use the “fragment–replicate” technique [22] before the join computation. When a mapper reads input records and maps them into RDD key–value pairs, a key–value pair whose join key belongs to more than one partition is either fragmented or replicated. We fragment the key–value pairs of the dataset with the most skewed join keys and replicate the key–value pairs of the other dataset.

The following steps need to be performed to partition key–value pairs in the dataset with more skewed join keys (a build relation):

- A split vector is created from the input dataset and this vector is stored in the HDFS;

- Each node in the cluster will read the split vector from the HDFS and create a range map, and each sub-range will be assigned to the corresponding partition;

- Based on this range map, when a mapper reads input records and maps into RDD key–value pairs, if the join key belongs to only one sub-range, the data will be partitioned into the corresponding partition; if the join key belongs to more than one sub-range, the data will be randomly assigned to one of the partitions corresponding to the sub-ranges (fragment).

For a dataset with fewer skewed join keys, we use the split vector created above to partition key–value pairs (a probing relation). If the join key belongs to only one sub-range, the data will be assigned to the corresponding partition. Conversely, if the join key belongs to more than one sub-range, the data will be distributed to all partitions corresponding to the sub-range (replicate). The algorithm used to partition the datasets is presented in Algorithms 1 and 2.

| Algorithm 1 Range-based partition algorithm for dataset R—a build relation |

Input: An input record Input: Split vector Input: A list of partitions P Begin

End. |

| Algorithm 2 Range-based partition algorithm for dataset L—a probing relation |

Input: An input record Input: Split vector Input: A list of partitions P Begin

End. |
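The fragment and replicate rules described above can be sketched in plain Python as follows. This is a minimal illustration rather than a reproduction of Algorithms 1 and 2: the sub-ranges are hypothetical closed intervals that are assumed to cover the whole key domain, and the function names are illustrative. A join key that is repeated as a boundary (here, key 2) falls into more than one sub-range.

```python
import random

# Hypothetical closed sub-ranges (low, high), one per partition.
SUB_RANGES = [(0, 2), (2, 2), (3, 4), (5, 9)]


def matching_partitions(join_key, sub_ranges=SUB_RANGES):
    """Indices of all partitions whose sub-range contains the join key."""
    return [i for i, (low, high) in enumerate(sub_ranges) if low <= join_key <= high]


def partition_build(join_key, rng):
    """Build relation (fragment): one randomly chosen matching partition."""
    return [rng.choice(matching_partitions(join_key))]


def partition_probe(join_key):
    """Probing relation (replicate): every matching partition."""
    return matching_partitions(join_key)


# Four build records and three probing records, all with the skewed key 2.
rng = random.Random(0)
r_targets = [partition_build(2, rng)[0] for _ in range(4)]
l_targets = [p for _ in range(3) for p in partition_probe(2)]
output_per_partition = {
    p: r_targets.count(p) * l_targets.count(p) for p in range(len(SUB_RANGES))
}
```

Because every probing record is present in each partition that may hold a matching build record, the 12 join results are still all produced, but they are spread over two partitions instead of one.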

The partitions will have a list of data with a join key k belonging to the same sub-range. Here, we use a join algorithm to create the join results (presented in Algorithm 3).

| Algorithm 3 Join two datasets |

Input: (partitionID, ) Begin

End. |

Given an example of two datasets and , as presented above, we create a split vector from dataset R. The elements in the split vector will be chosen from with the formula , in which is the number of records of dataset R. In the example (Figure 9), we have and ; thus, the and elements in will be chosen to have the split vector . From the split vector, we create four sub-ranges, i.e., .

Figure 9. Join computation with range-based partition.

According to the “fragment–replicate” technique, the four records of R with the skewed join key are fragmented across two partitions, and the three matching records of L are replicated in both of these partitions. As a result, we have two partitions, each of which produces a join result of six records instead of one partition producing 12, as in the hash-based partition algorithm. The range-based partition algorithm also allows data to be partitioned into more than two partitions. With the “fragment–replicate” technique, we can thus divide the workload among the partitions when generating the join results.

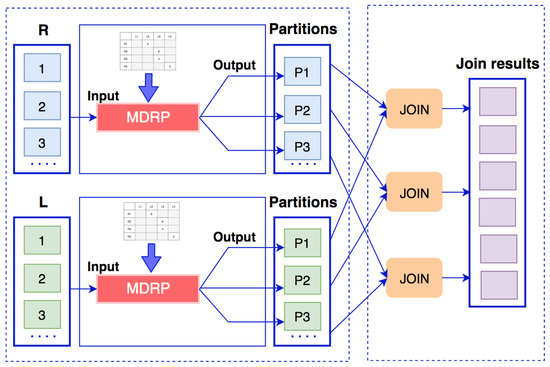

3.4. Multi-Dimensional Range Partition

Multi-dimensional range partition (MDRP) is an algorithm that combines a range partition and a random partition [6]. Mappers read input data from the datasets and create split vectors to divide the data into sub-ranges. The data will be put into a partitioning matrix corresponding to the sub-range values of the two datasets. Input data are distributed to the reducers based on this partitioning matrix, in which cells containing more data in the matrix will be subdivided and assigned to two or more reducers. The data flow of multi-dimensional range partition algorithm is presented in Figure 10.

Figure 10. Data flow of the multi-dimensional range partition algorithm.

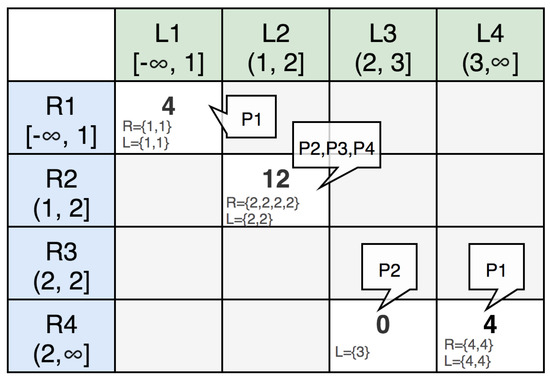

3.4.1. Partitioning Matrix

The algorithm considers two datasets R and L with a join key of k. Suppose that there are p partitions, and the input datasets will be divided into p sub-ranges, i.e., and . Creating sub-ranges of the two datasets is similar to creating sub-ranges with the range-based partition algorithm. For dataset R, the sub-range will include the entire domain of the join key k from to , . Two special cases and will include sub-ranges and , respectively.

As in the previous example, we have and with partitions. We get two split vectors and from the two datasets R and L. In the partitioning matrix M, as shown in Figure 11, the ith row represents the sub-range and the jth column represents the sub-range . The cell is classified into one of the following two groups: candidate and non-candidate. Candidates are cells that produce join results and non-candidates are cells that do not generate join results.

Figure 11. Mapping between cells and partitions.

Each candidate cell has a value representing the workload to be processed for that cell. In the MDRP algorithm, this workload is the product of the number of records of R and the number of records of L that belong to the cell's sub-range. In the partitioning matrix M, one cell has a workload of 4 × 3 = 12 because we have four records whose join key is 2 in dataset R and three records with a join key of 2 in dataset L, which belong to the same sub-range. Similarly, two other cells each have a workload of 2 × 2 = 4 because, for each of them, we have two records in dataset R and two records in dataset L that have join keys belonging to the same sub-range. The partitioning matrix helps to ensure that the total number of cells and the total workload in each partition are relatively equal.

3.4.2. Identifying and Dividing Heavy Cells

Heavy cells in the partitioning matrix M are cells that satisfy the conditions in Equation (1). That is, if the ratio of the workload of the cell to the total workload of the partitioning matrix M is greater than or equal to , this cell is called a heavy cell. The ratio is the optimal workload ratio for each partition. Therefore, if the partitioning matrix contains heavy cells, it will not be possible to balance the workload between the partitions.

As in the example, we have one heavy cell . The total workload in the example is 20 and the optimal workload ratio is . The cell has workload ; thus, it is a heavy cell . To ensure load balancing between the partitions, the heavy cell has to be divided into cells with smaller workloads. A quantity is defined as the optimal workload, as in Equation (2). After this value is defined, the heavy cells are split into d cells, with the conditions shown in Equation (3).

We have . Therefore, the heavy cell will be split into three non-heavy cells, since (). The heavy cells are split into several non-heavy cells that are partitioned into different partitions.
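The identification and splitting of heavy cells can be sketched in plain Python as follows. Since Equations (1) to (3) are not reproduced in the text, the sketch rests on three assumptions that are consistent with the worked example: a cell is heavy when its share of the total workload is at least 1/p, the optimal workload is the total workload divided by p, and a heavy cell is split into the smallest number d of equal parts whose workload does not exceed the optimal workload. The function name and the equal split are illustrative only.

```python
import math


def split_heavy_cells(workloads, num_partitions):
    """Return the cell workloads after every heavy cell has been split."""
    total = sum(workloads)
    optimal = total / num_partitions  # assumed form of the optimal workload
    result = []
    for w in workloads:
        if w / total >= 1 / num_partitions:  # assumed heavy-cell condition
            d = math.ceil(w / optimal)       # number of parts for this cell
            base, extra = divmod(w, d)
            # Spread the workload as evenly as possible over the d parts.
            result.extend([base + 1] * extra + [base] * (d - extra))
        else:
            result.append(w)
    return result


# Workloads of the example: one cell of 12 and two cells of 4, four partitions.
cells = split_heavy_cells([12, 4, 4], num_partitions=4)
```

With a total workload of 20 and four partitions, the assumed optimal workload is 5, so the cell with a workload of 12 is split into three cells with a workload of 4 each, which agrees with the three non-heavy cells mentioned above.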

3.4.3. Partitioning the Non-Heavy Cells

- We have a list of non-heavy cells denoted by .

- Each consists of a sub-range , and its workload is denoted by . For example, a non-heavy cell is denoted by .

- These non-heavy cells are partitioned into different partitions .

- A list of non-heavy cells assigned to partition is denoted as .

- The number of non-heavy cells of is denoted as .

- The total workload of partition is defined by .

We use the assign algorithm to distribute the non-heavy cells to the partitions. First, the non-heavy cells in C are sorted in descending order of workload . For each non-heavy cell , we choose partition so that has the minimum number of non-heavy cells and minimum total workload. The algorithm is shown in Algorithm 4.

| Algorithm 4 Assignment algorithm |

Input:, list of non-heavy cells sorted in descending order of Output:, partitions of the non-heavy cells

getNextPartition Output:, the partition selected for the non-heavy cells

|

For example, we have a list of non-heavy cells sorted in descending order of workload, , , and four partitions . The three cells are because the original cell is chopped into three cells. Initializing with and , we have:

The result of assigning the non-heavy cells into partitions is shown in Figure 11.

3.4.4. Multi-Dimensional Range Partition

The MDRP algorithm is shown in Algorithm 5. The “fragment–replicate” technique is used for the join operation. Assume that a mapper receives a record with the join key of dataset R; then, this record will belong to a cell . However, this cell is divided into three partitions , , and , since , , and . So, we should use a fragment or a replicate for record . By counting the number of records in with join key , if the number of records of for dataset R is greater than that for dataset L, then will be fragmented (randomly selected partition), and the records in dataset L will be replicated (replicated to both partitions).

| Algorithm 5 Multi-dimensional range partition algorithm |

Input: an input tuple Input: a partitioning matrix M Begin

End. |

4. Evaluation

4.1. Cost Model

Join computation cost is the total cost of several stages, including pre-processing, data reading, map processing, communication between nodes, reduction processing, and data storage. The parameters used in the cost model are shown in Table 1. The general cost model for the join computation of two datasets is described in Equation (4).

where:

Table 1. Parameters used in the cost model.

- (there is no preprocessing task of the three algorithms)

Some values ( and ) are different in the three algorithms.

4.1.1. Hash-Based Partition

In this algorithm, records with the same key will come to the same reducer due to the hash function. Therefore, in this study, the skewed data are the records with a join key of 1, which will be processed by reducer 1.

- (*)

4.1.2. Range-Based Partition

A range-based partition processes skewed data by randomly distributing skew records to the reducers and duplicating records with the same key in the remaining dataset.

- (**)

The value of is equal to the number of segments with duplicate keys.

- If , then ;

- If and , the amount of intermediate data generated is negligible;

- If , the intermediate data generated are significant, but the hash-based partition algorithm cannot be performed because the nodes processing the skewed data will be overloaded or even stop working. That is, in this case, a range-based partition is more efficient than a hash-based partition. Therefore, we only need to consider the cases of moderately skewed ratios and to compare the costs of the algorithms based on the performance of the reducers.

4.1.3. Multi-Dimensional Range Partition

A multi-dimensional range partition tends to divide data evenly, including the skew records in the datasets for the reducers.

- We compared the three algorithms based on the performance of the reducers as follows:

- (***)

4.1.4. Analysis

Since skew-join records occur often in reducer 1 and other skew keys are insignificant, reducer 1 determines the execution time of the algorithms. We compared the costs of join computation between the three algorithms.

- From (*) and (**),.

- From (*) and (***),.

- From (**) and (***),.

Therefore, we have Equation (5).

A range-based partition performs join operations with skewed data more efficiently than a hash-based partition thanks to the fragment–replicate mechanism. A multi-dimensional range partition is more efficient than a range-based partition thanks to the thorough skew processing of both datasets.

4.2. Experiments

4.2.1. Cluster Description

We conducted experiments on a computer cluster with 14 nodes (one master and 13 slaves) at the Mobile Network and Big Data Laboratory of the College of Information and Communication Technology, Can Tho University. Each computer had four Intel Core i5 3.2 GHz CPUs, 4 GB of RAM, a 500 GB HDD, and the 64-bit Ubuntu 14.04 LTS operating system. The following software versions were used: Java 1.8, Hadoop 2.7.1, and Spark 2.0.

4.2.2. Data Description

We generated experimental datasets with a scalar skew distribution. The term “scalar skew” was introduced by Christopher Walton and his colleagues [19] when developing a taxonomy of skew effects. Scalar skew distribution was later used by other researchers for skew handling [6,23,24,25]. In this work, we generated six datasets, each of which had 100,000,000 records. The idea of scalar skew distribution is that, among the 100,000,000 records, the skewed join key with a value of 1 appears in some fixed number of records. The remaining records contain join key values drawn randomly from 2 to 100,000,000. This design makes the experiments easy to interpret and lets us keep the output size constant while varying the amount of skew. The datasets were stored as plain text with three comma-separated fields: the primary key, the join key, and other text (pk, jk, others). The six datasets had different skew ratios for the experiments. The details of the datasets are presented in Table 2.

Table 2. Dataset description.
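The scalar skew layout described above can be sketched at a reduced scale as follows. This is a minimal illustration, not the generator used for the experiments: the function names, the random placement of the skewed records, the seed, and the content of the `others` field are all assumptions made here.

```python
import random


def scalar_skew_records(n_records, n_skewed, seed=0):
    """Yield (pk, jk, others) tuples with scalar skew.

    Exactly `n_skewed` records carry join key 1; every other record draws
    its join key uniformly from 2..n_records.
    """
    rng = random.Random(seed)
    # Assumption: the skewed records are scattered at random positions.
    skewed_pks = set(rng.sample(range(1, n_records + 1), n_skewed))
    for pk in range(1, n_records + 1):
        jk = 1 if pk in skewed_pks else rng.randint(2, n_records)
        yield pk, jk, f"text-{pk}"  # placeholder payload (hypothetical)


def to_line(record):
    """Format one record as the comma-separated line pk,jk,others."""
    pk, jk, others = record
    return f"{pk},{jk},{others}"


# Scaled-down example: 1000 records, 100 of which carry the skewed key.
sample = list(scalar_skew_records(n_records=1_000, n_skewed=100))
skewed_count = sum(1 for _, jk, _ in sample if jk == 1)
print(to_line(sample[0]), skewed_count)
```

Because the number of skewed records is fixed rather than drawn from a distribution, the number of join results produced by key 1 can be controlled exactly when two such datasets are joined.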

4.2.3. Evaluation Method

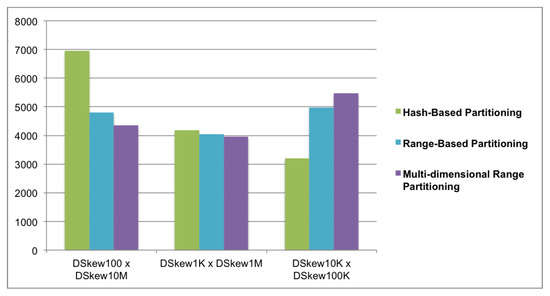

We ran three algorithms (hash-based partition, range-based partition, and multi-dimensional range partition) in three test cases. Each algorithm was run three times per test case, and the algorithms were compared on their average execution times. The join selectivity is the number of output records divided by the number of records in the cross product of the input relations. In this work, we kept the output size at 1,000,000,000 records for all three test cases.

- Test 1 (high skew ratio): DSkew100 ⋈ DSkew10M = 100 ∗ 10,000,000 = 1,000,000,000

- Test 2 (average skew ratio): DSkew1K ⋈ DSkew1M = 1000 ∗ 1,000,000 = 1,000,000,000

- Test 3 (low skew ratio): DSkew10K ⋈ DSkew100K = 10,000 ∗ 100,000 = 1,000,000,000
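The arithmetic behind the three test cases can be checked with a short script. It is a sketch under stated assumptions: the skewed-record counts for Test 1 are inferred from the dataset names, only the results produced by the skewed join key 1 are counted, and the function names are introduced here for illustration.

```python
N_RECORDS = 100_000_000  # records per dataset, as stated in Section 4.2.2

# Records carrying the skewed join key 1 on each side of the join.
# Assumption: DSkew100 holds 100 such records, DSkew10M holds 10,000,000, etc.
TESTS = {
    "Test 1": (100, 10_000_000),
    "Test 2": (1_000, 1_000_000),
    "Test 3": (10_000, 100_000),
}


def skew_output_size(left_skewed, right_skewed):
    """Every skewed record on one side matches every skewed record on the other."""
    return left_skewed * right_skewed


def selectivity(output_size, n_left=N_RECORDS, n_right=N_RECORDS):
    """Output records divided by the size of the cross product of the inputs."""
    return output_size / (n_left * n_right)


results = {name: skew_output_size(a, b) for name, (a, b) in TESTS.items()}
for name, size in results.items():
    print(name, size, selectivity(size))
```

All three products equal 1,000,000,000, so the skewed key contributes the same number of output records in every test while its distribution across the two inputs changes.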

4.2.4. Analysis of the Results

We examined the performance of the algorithms in the join tests with different ratios of skewed join keys. The execution times of the join tests are shown in Figure 12. In the first join test, with a high skew ratio (Test 1), the multi-dimensional range partition performed better than the others. The hash-based partition algorithm was the worst: it was 1.59 times slower than the multi-dimensional range partition and 1.44 times slower than the range-based partition. In the second join test, with an average skew ratio (Test 2), the multi-dimensional range partition was slightly better than the other two algorithms, but the performance of the three algorithms was almost equivalent. In the last join test, with a low skew ratio (Test 3), the hash-based partition performed better than the others. The multi-dimensional range partition was the worst in this case: it was 1.7 times slower than the hash-based partition. The range-based partition fell between the other two algorithms in all three test cases. The experimental results were consistent with Formula (5) and the cost models presented above, which assume that the skewed join key dominates the load of reducer 1.

Figure 12. Execution time of the three algorithms (seconds).

We now consider the advantages and disadvantages of each algorithm. The hash-based partition is easy to implement and commonly used in join computation. However, it is not suited to join operations with a high skew ratio: tuples with the same join key are hashed to the same reducer, which leads to seriously imbalanced tasks. In the experiments, its performance worsened as the skew ratio of the datasets increased and improved as the skew ratio decreased.
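The imbalance caused by hashing a skewed key can be illustrated with a small simulation. This is a sketch only: the modulo of the integer key stands in for a real hash partitioner, and the record counts and the number of reducers are hypothetical values chosen for the example.

```python
from collections import Counter


def hash_partition_loads(join_keys, n_reducers):
    """Count the records each reducer receives when keys are hashed by modulo."""
    loads = Counter({reducer: 0 for reducer in range(n_reducers)})
    for jk in join_keys:
        # Stand-in hash function: all records with the same key go to one reducer.
        loads[jk % n_reducers] += 1
    return loads


N_REDUCERS = 13  # hypothetical: one reducer per worker node

# 3000 records with the skewed key 1, plus 7000 records with distinct keys.
join_keys = [1] * 3_000 + list(range(2, 7_002))

loads = hash_partition_loads(join_keys, N_REDUCERS)
mean_load = sum(loads.values()) / N_REDUCERS
heaviest, heaviest_load = loads.most_common(1)[0]
print(f"heaviest reducer: {heaviest}, load: {heaviest_load}, mean: {mean_load:.1f}")
```

The reducer that owns key 1 receives all 3000 skewed records in addition to its share of the remaining keys, so its load is several times the mean regardless of how many reducers are added.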

In the range-based partition, the tuples with the most skewed data are selected for range determination. Sub-ranges can be determined at a small cost with this method, so it has been widely used to deal with data-skew problems. It is more efficient than the hash-based partition algorithm on skewed data, but it is not a good choice for datasets with a low skew ratio. A further limitation is that the determination of the sub-ranges does not take the size of the join results into account, so join product skew can arise.
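The routing step of a range-based partition with a fragment–replicate mechanism can be sketched as below. The conventions are assumptions made for this illustration and are not taken from the implementation evaluated here: partition i covers the keys greater than the previous split value and up to its own split value, a key equal to a repeated split value spans all partitions bounded by that value, and the skewed relation is the one that is fragmented.

```python
import bisect
import random
from collections import Counter


def candidate_partitions(key, split_vector):
    """Return the partitions that may hold `key` for a sorted split vector."""
    lo = bisect.bisect_left(split_vector, key)
    hi = bisect.bisect_right(split_vector, key)
    # A repeated split value means the key spans several sub-ranges.
    return list(range(lo, hi)) if hi > lo else [lo]


def route(key, split_vector, replicate, rng):
    """Replicate a tuple to every candidate partition, or fragment it to one."""
    parts = candidate_partitions(key, split_vector)
    return parts if replicate else [rng.choice(parts)]


rng = random.Random(42)
split_vector = [1, 1, 1, 50]   # key 1 is heavy: it bounds three sub-ranges
r_keys = [1] * 9 + [7, 60]     # skewed relation, fragmented
l_keys = [1] * 2 + [7, 60]     # other relation, replicated

r_load, l_load = Counter(), Counter()
for k in r_keys:
    for p in route(k, split_vector, replicate=False, rng=rng):
        r_load[(p, k)] += 1
for k in l_keys:
    for p in route(k, split_vector, replicate=True, rng=rng):
        l_load[(p, k)] += 1

# Each partition joins only its local tuples; sum the matches over all partitions.
pairs = sum(count * l_load[pk] for pk, count in r_load.items())
print(pairs)
```

Because each fragmented tuple lands in exactly one partition and meets a full copy of the matching tuples there, every result pair is produced once, while the work for the heavy key is spread over several reducers. The cost is the extra copies of the replicated side, which is wasted effort when the skew ratio is low.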

The multi-dimensional range partition overcomes this limitation of the range-based partition by using a partitioning matrix instead of a split vector. The cross products of the sub-ranges, represented by cells in the partitioning matrix, can be estimated in advance so that the join results of a single cell do not become oversized. With this algorithm, the greater the skew ratio of the datasets, the greater the performance improvement. Conversely, datasets with fewer skewed join keys pay a relatively higher cost for creating the partitioning matrix, recalculating heavy cells in the matrix, and assigning cells to reducers.
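The idea of a partitioning matrix can be sketched in a simplified form. The code below is an illustration under several assumptions rather than the evaluated implementation: each sub-range is given as a hypothetical (low, high, count) triple, the output of a cell is estimated crudely as the product of the two counts, heavy cells are not split further, and cells are assigned with a greedy heaviest-first heuristic.

```python
import heapq


def candidate_cells(r_ranges, l_ranges):
    """Yield (i, j, estimated_output) for every pair of overlapping sub-ranges."""
    for i, (r_lo, r_hi, r_count) in enumerate(r_ranges):
        for j, (l_lo, l_hi, l_count) in enumerate(l_ranges):
            if max(r_lo, l_lo) <= min(r_hi, l_hi):
                # Crude upper bound: the full cross product of the two sub-ranges.
                yield i, j, r_count * l_count


def assign_cells(cells, n_reducers):
    """Greedy assignment: heaviest cell first, always to the least-loaded reducer."""
    heap = [(0, reducer) for reducer in range(n_reducers)]
    heapq.heapify(heap)
    assignment = {}
    for i, j, weight in sorted(cells, key=lambda c: c[2], reverse=True):
        load, reducer = heapq.heappop(heap)
        assignment[(i, j)] = reducer
        heapq.heappush(heap, (load + weight, reducer))
    loads = [0] * n_reducers
    for load, reducer in heap:
        loads[reducer] = load
    return assignment, loads


# Key 1 is heavy in both inputs: three sub-ranges in R and two in L.
r_ranges = [(1, 1, 300), (1, 1, 300), (1, 1, 300), (2, 100, 100)]
l_ranges = [(1, 1, 500), (1, 1, 500), (2, 100, 100)]

cells = list(candidate_cells(r_ranges, l_ranges))
assignment, loads = assign_cells(cells, n_reducers=3)
print(len(cells), loads)
```

In this example, the heavy key is split along both dimensions into six cells of equal estimated size, so no reducer is assigned more than about a third of the 900,000 results that a single reducer would produce under hashing. Building and balancing the matrix is extra work, which matches the observation above that the method pays off mainly when the skew ratio is high.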

5. Conclusions

MapReduce implementations are used to perform many operations on very large datasets, including join operations, and MapReduce has become a prominent parallel and distributed programming model for efficiently handling massive datasets. The major obstacle to join processing in this model is data skew. If the data are significantly skewed, with some join keys appearing much more frequently than others, the reduce tasks for these join keys finish later than the others, and the benefits of parallelism are largely lost. Several algorithms have been proposed to solve the skew-join problem, and several surveys of these solutions have been published, but an adequate and systematic comparison in the Spark environment has not yet been presented. This work was therefore designed to compare several skew-join algorithms using both mathematical models and experiments. We evaluated the hash-based partition, range-based partition, and multi-dimensional range partition algorithms, implemented in the MapReduce style on the Spark framework, and analyzed the advantages and disadvantages of each. The cost model provides a theoretical basis for evaluating and comparing the skew-join algorithms, and the experiments were conducted in Spark, a newer generation of Big Data processing framework. Together, the cost models and the experimental results give a comparison of the three algorithms, which is a useful contribution because join operations are commonly used in Big Data environments.

This work only evaluated three algorithms. More skew-join algorithms need to be investigated and evaluated to obtain a fuller overview of the problem of skewed data processing. In addition, Apache Spark 3 introduced dynamic skew handling in sort–merge join operations by splitting and replicating skewed partitions. It would be interesting to compare the data-skew handling built into Spark with user-defined data-skew handling based on other algorithms.

Author Contributions

Conceptualization, T.-C.P. and A.-C.P.; methodology, A.-C.P., H.-P.C. and T.-C.P.; software, T.-C.P. and T.-N.T.; validation, A.-C.P. and H.-P.C.; formal analysis, A.-C.P. and T.-C.P.; investigation, A.-C.P. and T.-C.P.; resources, A.-C.P. and T.-C.P.; data curation, T.-N.T. and H.-P.C.; writing—original draft preparation, T.-C.P. and T.-N.T.; writing—review and editing, H.-P.C. and A.-C.P.; visualization, T.-N.T. and H.-P.C.; supervision, T.-C.P.; project administration, A.-C.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are available on request by contacting the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| RDD | Resilient Distributed Dataset |
| HDFS | Hadoop Distributed File System |
| HBP | Hash-Based Partition |
| RBP | Range-Based Partition |
| MDRP | Multi-Dimensional Range Partition |

References

1. Dean, J.; Ghemawat, S. MapReduce: Simplified data processing on large clusters. Commun. ACM 2008, 51, 107–113.

2. Phan, T.C.; d’Orazio, L.; Rigaux, P. A Theoretical and Experimental Comparison of Filter-Based Equijoins in MapReduce. In Transactions on Large-Scale Data- and Knowledge-Centered Systems XXV; Springer: Berlin/Heidelberg, Germany, 2016; pp. 33–70.

3. Zikopoulos, P.; Eaton, C. Understanding Big Data: Analytics for Enterprise Class Hadoop and Streaming Data, 1st ed.; McGraw-Hill Osborne Media: New York, NY, USA, 2011.

4. Afrati, F.N.; Ullman, J.D. Optimizing Joins in a Map-Reduce Environment. In Proceedings of the 13th International Conference on Extending Database Technology, Lausanne, Switzerland, 22–26 March 2010; Association for Computing Machinery: New York, NY, USA, 2010; pp. 99–110.

5. DeWitt, D.J.; Naughton, J.F.; Schneider, D.A.; Seshadri, S. Practical Skew Handling in Parallel Joins. In Proceedings of the 18th International Conference on Very Large Data Bases, Vancouver, BC, Canada, 23–27 August 1992; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 1992; pp. 27–40.

6. Myung, J.; Shim, J.; Yeon, J.; Lee, S.G. Handling data skew in join algorithms using MapReduce. Expert Syst. Appl. 2016, 51, 286–299.

7. Hassan, M.A.H.; Bamha, M.; Loulergue, F. Handling Data-skew Effects in Join Operations Using MapReduce. Procedia Comput. Sci. 2014, 29, 145–158.

8. Okcan, A.; Riedewald, M. Processing Theta-Joins Using MapReduce. In Proceedings of the 2011 ACM SIGMOD International Conference on Management of Data, Athens, Greece, 12–16 June 2011; Association for Computing Machinery: New York, NY, USA, 2011; pp. 949–960.

9. Chen, Q.; Yao, J.; Xiao, Z. LIBRA: Lightweight Data Skew Mitigation in MapReduce. IEEE Trans. Parallel Distrib. Syst. 2015, 26, 2520–2533.

10. Bruno, N.; Kwon, Y.; Wu, M.C. Advanced join strategies for large-scale distributed computation. Proc. VLDB Endow. 2014, 7, 1484–1495.

11. Zhou, S.; Zhang, F.; Chen, H.; Jin, H.; Zhou, B.B. Fastjoin: A skewness-aware distributed stream join system. In Proceedings of the 2019 IEEE International Parallel and Distributed Processing Symposium (IPDPS), Rio de Janeiro, Brazil, 20–24 May 2019; IEEE: Hoboken, NJ, USA, 2019; pp. 1042–1052.

12. Zhang, W.; Ross, K.A. Exploiting data skew for improved query performance. IEEE Trans. Knowl. Data Eng. 2020, 34, 2176–2189.

13. Meena, K.; Tayal, D.K.; Castillo, O.; Jain, A. Handling data-skewness in character based string similarity join using Hadoop. Appl. Comput. Inform. 2020, 18, 22–24.

14. Jenifer, M.; Bharathi, B. Survey on the solution of data skew in big data. In Proceedings of the 2016 Online International Conference on Green Engineering and Technologies (IC-GET), Coimbatore, India, 19 November 2016; IEEE: Hoboken, NJ, USA, 2016; pp. 1–4.

15. Nawale, V.A.; Deshpande, P. Survey on load balancing and data skew mitigation in MapReduce application. Int. J. Comput. Eng. Technol. 2015, 6, 32–41.

16. Zaharia, M.; Chowdhury, M.; Franklin, M.J.; Shenker, S.; Stoica, I. Spark: Cluster Computing with Working Sets. In Proceedings of the 2nd USENIX Conference on Hot Topics in Cloud Computing, Boston, MA, USA, 21–25 June 2010; USENIX Association: Berkeley, CA, USA, 2010; p. 10.

17. Singh, D.; Reddy, C. A survey on platforms for big data analytics. J. Big Data 2014, 2, 8.

18. Lynch, C.A. Selectivity Estimation and Query Optimization in Large Databases with Highly Skewed Distribution of Column Values. In Proceedings of the 14th International Conference on Very Large Data Bases, San Francisco, CA, USA, 29 August–1 September 1988; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 1988; pp. 240–251.

19. Walton, C.B.; Dale, A.G.; Jenevein, R.M. A Taxonomy and Performance Model of Data Skew Effects in Parallel Joins. In Proceedings of the 17th International Conference on Very Large Data Bases, Barcelona, Catalonia, Spain, 3–6 September 1991; Lohman, G.M., Sernadas, A., Camps, R., Eds.; Morgan Kaufmann: Burlington, MA, USA, 1991; pp. 537–548.

20. Kwon, Y.; Ren, K.; Balazinska, M.; Howe, B.; Rolia, J. Managing Skew in Hadoop. IEEE Data Eng. Bull. 2013, 36, 24–33.

21. Atta, F. Implementation and Analysis of Join Algorithms to Handle Skew for the Hadoop Map/Reduce Framework. Master’s Thesis, School of Informatics, University of Edinburgh, Edinburgh, UK, 2010.

22. Epstein, R.; Stonebraker, M.; Wong, E. Distributed Query Processing in a Relational Data Base System. In Proceedings of the 1978 ACM SIGMOD International Conference on Management of Data, Austin, TX, USA, 31 May–2 June 1978; Association for Computing Machinery: New York, NY, USA, 1978; pp. 169–180.

23. DeWitt, D.J.; Naughton, J.F.; Schneider, D.A.; Seshadri, S. Practical Skew Handling in Parallel Joins; Technical Report; University of Wisconsin-Madison Department of Computer Sciences: Madison, WI, USA, 1992.

24. Harada, L.; Kitsuregawa, M. Dynamic join product skew handling for hash-joins in shared-nothing database systems. In Proceedings of the 4th International Conference on Database Systems for Advanced Applications (DASFAA), Singapore, 11–13 April 1995; Volume 5, pp. 246–255.

25. Atta, F.; Viglas, S.D.; Niazi, S. SAND Join—A skew handling join algorithm for Google’s MapReduce framework. In Proceedings of the 2011 IEEE 14th International Multitopic Conference, Karachi, Pakistan, 22–24 December 2011; IEEE: Hoboken, NJ, USA, 2011; pp. 170–175.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).