Smart Factory Using Virtual Reality and Online Multi-User: Towards a Metaverse for Experimental Frameworks

Abstract

1. Introduction

2. Background and Related Works

2.1. Fourth Industrial Revolution

2.2. Smart Factory

2.3. Virtual Reality and Virtual Worlds

2.4. Metaverses

2.5. Summary

3. The Unreal Engine Experiment Framework

3.1. Basics

- Game classes—The VR system implements the basic functions it needs to work, including level transitions, pawn navigation, settings, and other essential data. The most important game classes are the following. The game mode declares all game classes except the game instance. The game instance can maintain its state even after a level change, which makes it useful for storing persistent data; it is therefore the best place to store language, graphics, and audio settings. The game instance also stores the data assets of the current level. The player controller spawns, owns, and navigates the pawn, and handles the level transition logic and pausing. The player state manages and provides information about each player and is therefore especially useful in multi-user mode.

- Level setup—Each level of the framework is encapsulated in a general map that consolidates all of its maps and handles the loading of the level. In addition, the framework relies on several essential actors that must be present in every level to function correctly; these must be placed and configured in each new level.

- Navigation—The framework’s navigation elements are connected to the teleportation system, which is very important in VR because conventional movement in VR is prone to cause motion sickness. Teleportation navigation elements primarily serve to restrict the areas to which a player can teleport. In VR, teleport targets are flat actors with a teleport component, in which the permitted interaction methods are selected.

- Changing levels—The framework provides a series of mechanisms to load or change levels without problems. For this purpose, it includes a fully configured intro level containing an intro screen, a player position, a map info actor, and a sky sphere. The transition object is automatically created and filled with the content specified in the level data asset, and it is opened every time a new level is loaded. As shown in Figure 1, the VR system consists of a series of components that allow virtual reality to work properly for immersive experiences.
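To make the division of responsibilities above concrete, the following minimal Python sketch (not Unreal Engine code; all class and field names are hypothetical) models a game instance that survives level changes while per-level state is discarded, and a transition object that is filled from the level data asset each time a level opens.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class LevelDataAsset:
    """Hypothetical per-level content, standing in for a level data asset."""
    name: str
    intro_title: str
    intro_text: str


@dataclass
class Level:
    """Per-level state: rebuilt from scratch every time a level is opened."""
    data: LevelDataAsset
    actors: List[str] = field(default_factory=list)


@dataclass
class GameInstance:
    """Created once per run and never reset, so it holds persistent settings."""
    language: str = "en"
    graphics_quality: str = "high"
    audio_volume: float = 1.0
    current_level_data: Optional[LevelDataAsset] = None


class Game:
    def __init__(self) -> None:
        self.instance = GameInstance()
        self.level: Optional[Level] = None

    def open_level(self, data: LevelDataAsset) -> dict:
        # Replacing the level drops its actors; the game instance is untouched.
        self.level = Level(data)
        self.instance.current_level_data = data
        # The transition object is filled from the level data asset on every load.
        return {
            "title": data.intro_title,
            "text": data.intro_text,
            "language": self.instance.language,
        }
```

For example, a language chosen in the first level (`game.instance.language = "es"`) is still set after `open_level` loads a second level, whereas any actors added to `game.level.actors` in the first level are gone.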

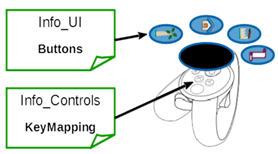
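The navigation rule described in Section 3.1, where teleport targets are restricted to designated areas, can be sketched as follows. This is a simplified Python model, assuming each teleport area is a flat, axis-aligned rectangle on the floor; the names `TeleportArea` and `resolve_teleport` are hypothetical and not part of the framework.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple

Point = Tuple[float, float]  # (x, y) position on the floor plane


@dataclass(frozen=True)
class TeleportArea:
    """Hypothetical flat, axis-aligned region where teleporting is allowed."""
    min_x: float
    min_y: float
    max_x: float
    max_y: float

    def contains(self, p: Point) -> bool:
        x, y = p
        return self.min_x <= x <= self.max_x and self.min_y <= y <= self.max_y


def resolve_teleport(current: Point, target: Point,
                     areas: Iterable[TeleportArea]) -> Point:
    """Move to the target only if it lies inside an allowed area; otherwise stay put."""
    if any(area.contains(target) for area in areas):
        return target
    return current
```

Keeping the player in place when the target is invalid, rather than moving them to the nearest valid point, is only one possible design choice; the framework may handle invalid targets differently.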

3.2. Controllers

3.3. The Component System

- Interaction components—Manage the interaction between the player and the actors in an application, including selection, capture, and others.

- State components—Apply, initiate, and reproduce state changes for the owning actor.

- Snapping components: Cover all the requirements necessary to allow actors to snap into place after being released by a player.

- Multi-user components—Consolidate a set of useful multi-user management components.

- UI components—Cover the design, visualization, and content of the user interface elements.

- Pawn components—They provide the pawn with functions such as controls.

- Miscellaneous components—They comprise a set of useful components that run in the background to enable advanced functionality.
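The component system can be illustrated with a minimal Python sketch of composition: an actor owns a list of components and forwards events to them, so behaviors such as snapping or state tracking are added without subclassing the actor. The class names, the `on_released` event, and the 0.2 m snap threshold are illustrative assumptions, not the framework's API.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


class Component:
    """Base class: components react to events forwarded by their owning actor."""
    def on_released(self, actor: "Actor") -> None:
        pass


class Actor:
    def __init__(self, name: str, position: Vec3) -> None:
        self.name = name
        self.position = position
        self.components: List[Component] = []

    def add_component(self, component: Component) -> Component:
        self.components.append(component)
        return component

    def release(self) -> None:
        # Called when a player lets go of the actor; every component is notified.
        for component in self.components:
            component.on_released(self)


class SnappingComponent(Component):
    """Moves the actor onto the nearest snap point if it was released close enough."""
    def __init__(self, snap_points: List[Vec3], snap_distance: float = 0.2) -> None:
        self.snap_points = snap_points
        self.snap_distance = snap_distance  # hypothetical threshold in meters

    def on_released(self, actor: Actor) -> None:
        if not self.snap_points:
            return
        nearest = min(self.snap_points, key=lambda p: math.dist(actor.position, p))
        if math.dist(actor.position, nearest) <= self.snap_distance:
            actor.position = nearest


class StateComponent(Component):
    """Records state changes of the owning actor so they can be reproduced later."""
    def __init__(self) -> None:
        self.history: List[Tuple[str, Vec3]] = []

    def on_released(self, actor: Actor) -> None:
        self.history.append(("released", actor.position))
```

Components run in the order in which they were added, so in this sketch a state component added after a snapping component records the already-snapped position.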

3.4. Environments

- VR—This pawn is automatically chosen when an HMD is present at the beginning of the experience and generates motion controllers to move and interact with the virtual reality experience.

- Desktop—The desktop pawn serves as the default environment and provides functionalities to move and interact with the experience using the mouse and keyboard.

- Mobile—The mobile pawn is automatically chosen when the operating system is iOS or Android and provides functionalities to move and interact with the experience through the touch screen.
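The pawn selection rules above can be expressed as a small decision function. This Python sketch assumes that the HMD check takes precedence over the platform check, so that a standalone headset running an Android-based operating system still receives the VR pawn; that ordering, and the names `PawnType` and `choose_pawn`, are assumptions for illustration.

```python
from enum import Enum


class PawnType(Enum):
    VR = "vr"
    MOBILE = "mobile"
    DESKTOP = "desktop"


MOBILE_PLATFORMS = {"ios", "android"}


def choose_pawn(hmd_connected: bool, platform: str) -> PawnType:
    """Pick the pawn at startup: VR if an HMD is present, mobile on iOS/Android, else desktop."""
    if hmd_connected:
        return PawnType.VR
    if platform.lower() in MOBILE_PLATFORMS:
        return PawnType.MOBILE
    return PawnType.DESKTOP  # the desktop pawn is the default environment
```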

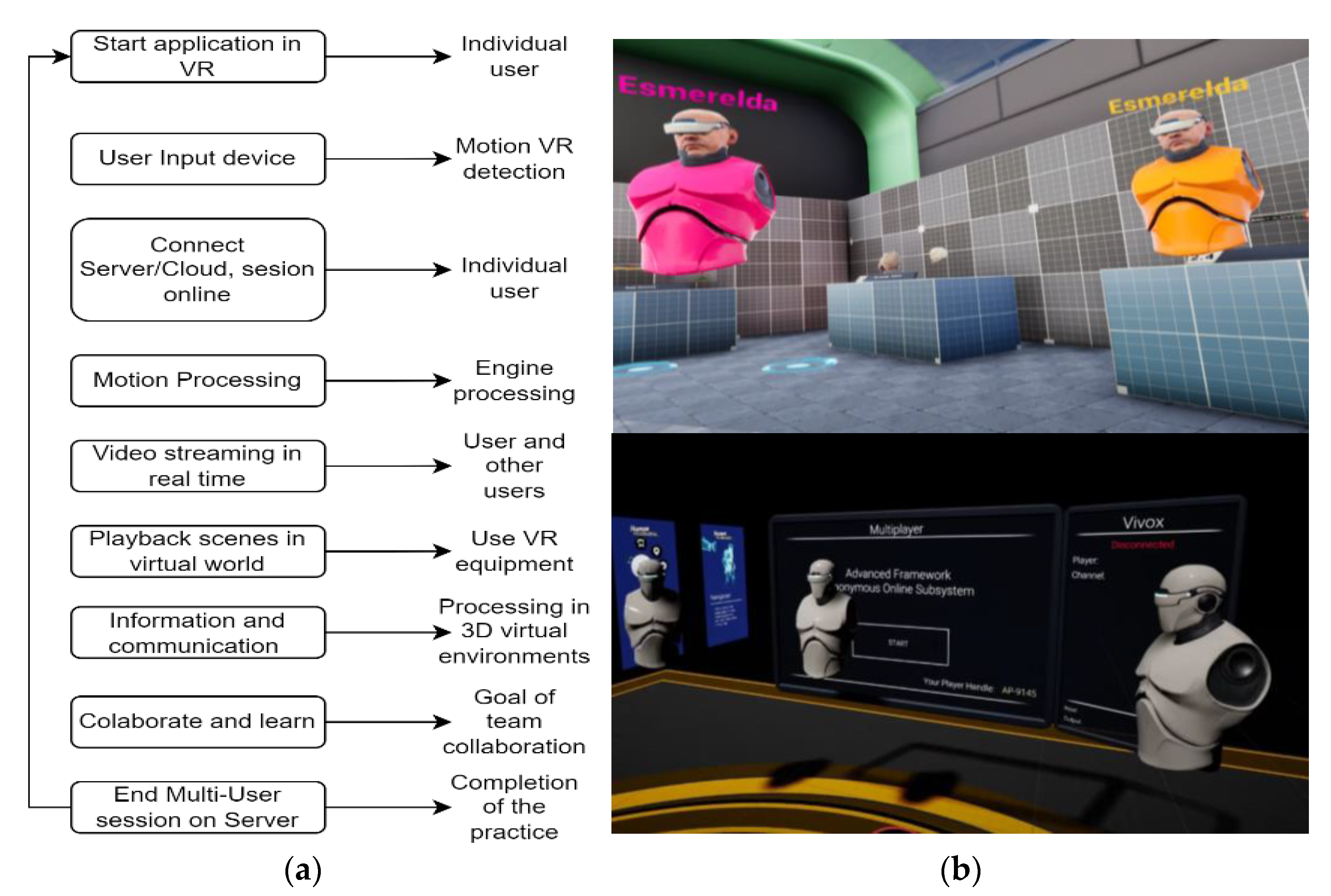

3.5. Multi-User

- The host and server—Two external plugins, integrated into the core programming, provide the multi-user functionality: the EOS Core plugin, which exposes Epic Online Services (EOS) to the project, and the Vivox Core plugin, which connects the project to the Vivox voice chat system so that several users connected in real time can talk to each other.

- Replication—All actors whose status, position, or other properties are relevant in multi-user mode must be replicated to keep all clients synchronized.

- Preparations and configuration—For the application to work online, the external plugins must be configured and registered in the Epic Games Developer Portal through the following steps: creating an Epic Games developer account, creating a product, setting up Epic Account Services, setting up P2P, and checking the product details. The project must then be configured to create a multi-user application correctly using the framework, the plugins, and the Epic Online Services add-on.

- Testing—If the settings above were followed correctly, the multi-user mode is ready to be tested with different users anywhere in the world. When starting in multi-user mode, the following options are available:

- Organize a session—The session is automatically created and added to the session list.

- Refresh session list—Refresh the session list if a session is hosted and not displayed.

- Join a session—The player pawn is automatically spawned at one of the starting positions of the created session.

- All clients must use the same engine build.

- For local connections, all computers and HMDs must be on the same LAN; for online sessions, users can connect from anywhere in the world, with Internet access as the only requirement.

- All clients must start with exactly the same content (typically synced from source control).
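The session workflow and requirements listed above can be sketched with an in-memory model. This is a hypothetical Python illustration, not the EOS or Unreal Engine session API: organizing a session registers it and joins the host, refreshing returns the current session list, and joining checks that the client's engine build and content version match the host's before spawning the pawn at the next starting position.

```python
import itertools
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class ClientInfo:
    user: str
    engine_build: str      # all clients must use the same engine build
    content_version: str   # e.g. a source-control revision of the project content


@dataclass
class Session:
    host: ClientInfo
    start_positions: List[Vec3]
    players: Dict[str, Vec3] = field(default_factory=dict)


class SessionService:
    """Hypothetical in-memory stand-in for an online session backend."""

    def __init__(self) -> None:
        self._sessions: Dict[str, Session] = {}
        self._ids = itertools.count(1)

    def organize(self, host: ClientInfo, start_positions: List[Vec3]) -> str:
        if not start_positions:
            raise ValueError("a session needs at least one starting position")
        session_id = f"session-{next(self._ids)}"
        self._sessions[session_id] = Session(host, list(start_positions))
        self.join(session_id, host)  # the host joins its own session
        return session_id

    def refresh(self) -> List[str]:
        return list(self._sessions)

    def join(self, session_id: str, client: ClientInfo) -> Vec3:
        session = self._sessions[session_id]
        if client.engine_build != session.host.engine_build:
            raise ValueError("engine build does not match the host")
        if client.content_version != session.host.content_version:
            raise ValueError("content does not match the host")
        # Cycle through the starting positions in order of arrival.
        index = len(session.players) % len(session.start_positions)
        position = session.start_positions[index]
        session.players[client.user] = position
        return position
```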

3.6. User Interfaces and Data

- Widgets—Widgets represent each visual part of the application with images, text, buttons, interactions, and customization adapted to the virtual scenarios.

- Data—Data assets are a predefined set of variables used to store, organize, and access various kinds of information. They therefore play a role similar to that of data tables, with the advantage of being more intuitive to manage. The framework provides the following types:


- Data tables—The data tables provided for this purpose are called I18n data tables and are organized in rows. Each row is assigned a key that, together with the current language setting, unambiguously identifies the cell of the data table containing the text that the widget ultimately displays.


- Structs—The framework uses structs to standardize translatable text, communication between components, and other functions. Many structs are used only internally and are generated automatically; others, however, are used mainly in component configuration.


- Enums—Enumerations provide a modifiable list of keys that can change functions or be displayed in various parts of the application. Most enumerations are best explained in their inherent environment, such as the component or user interface or another element in which they are used.
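The I18n lookup described above, where a row key combined with the current language setting identifies exactly one cell, can be sketched as a nested dictionary. The table contents, the key names, and the fallback to English when a translation is missing are illustrative assumptions, not behavior documented for the framework.

```python
from typing import Dict

# Hypothetical I18n data table: each row key maps to one cell per language.
I18nTable = Dict[str, Dict[str, str]]

I18N_TABLE: I18nTable = {
    "menu.start": {"en": "Start", "es": "Iniciar"},
    "menu.quit": {"en": "Quit", "es": "Salir"},
}


def localized_text(table: I18nTable, key: str, language: str,
                   fallback_language: str = "en") -> str:
    """Return the text a widget should display for `key` in `language`."""
    row = table[key]  # an unknown key raises KeyError instead of showing wrong text
    if language in row:
        return row[language]
    return row[fallback_language]  # assumed fallback when a translation is missing
```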

4. Method

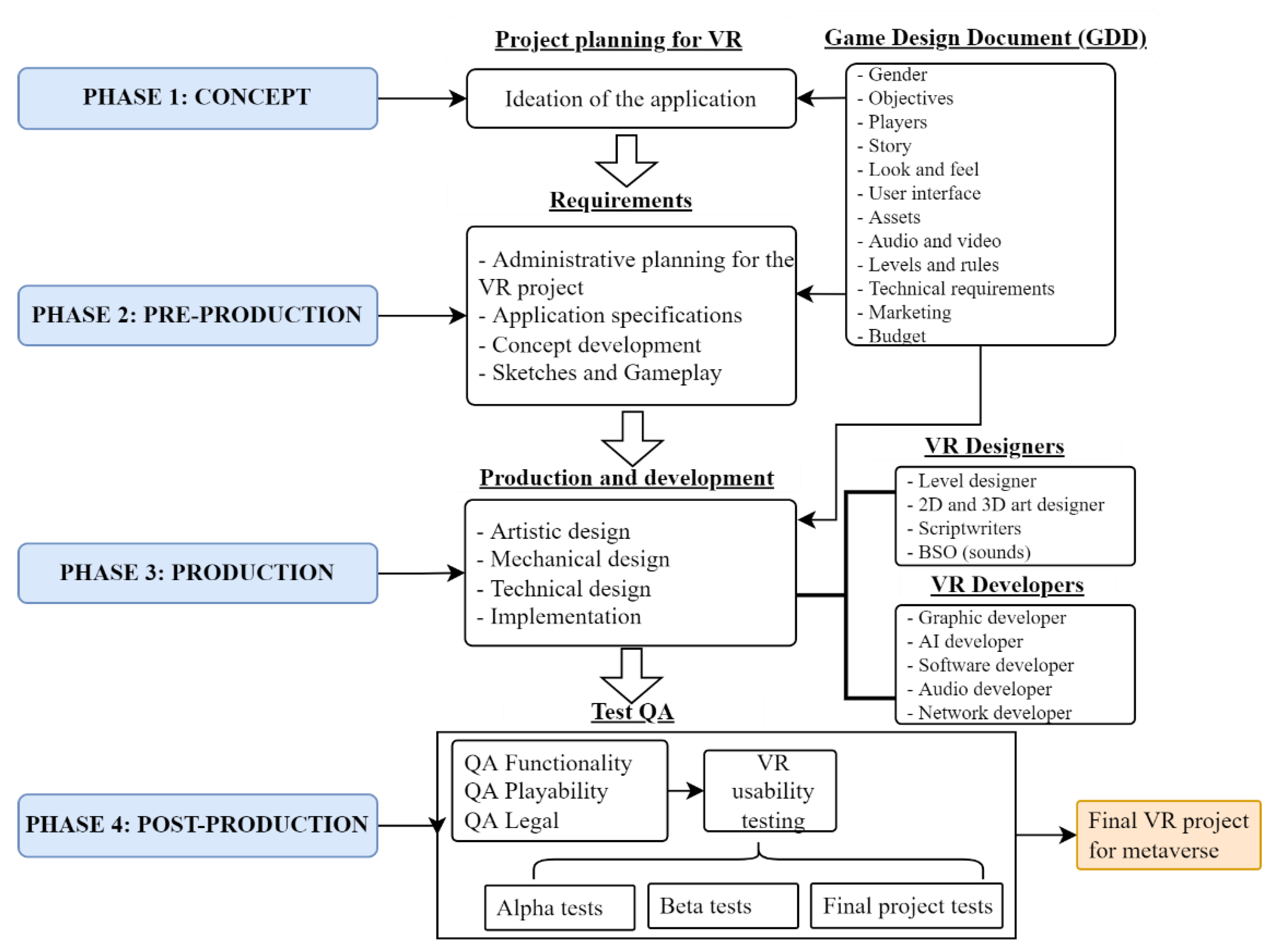

4.1. VR Development Methodology

- Phase 1—Concept. In this phase, we propose the project’s initial idea and carry out the supporting planning for the Game Design Document (GDD), which records the technical highlights of the project.

- Phase 2—Pre-production. We identify the requirements and resources needed to start the project and plan its specifications and monitoring. In this phase, we also begin developing the concept, preliminary sketches, and gameplay design, and we draft the GDD to contain the most relevant aspects.

- Phase 3—Production. In this phase, the development of the framework for the creation of the VR system is carried out. The time and resources needed for the development will be considered depending on the size of the project and specific needs according to the sector.

- Phase 4—Post-production. The application is tested and operated in successive versions: alpha, beta, and the finished project. For metaverse testing, the multi-user mode is tested over an Internet connection from different locations.

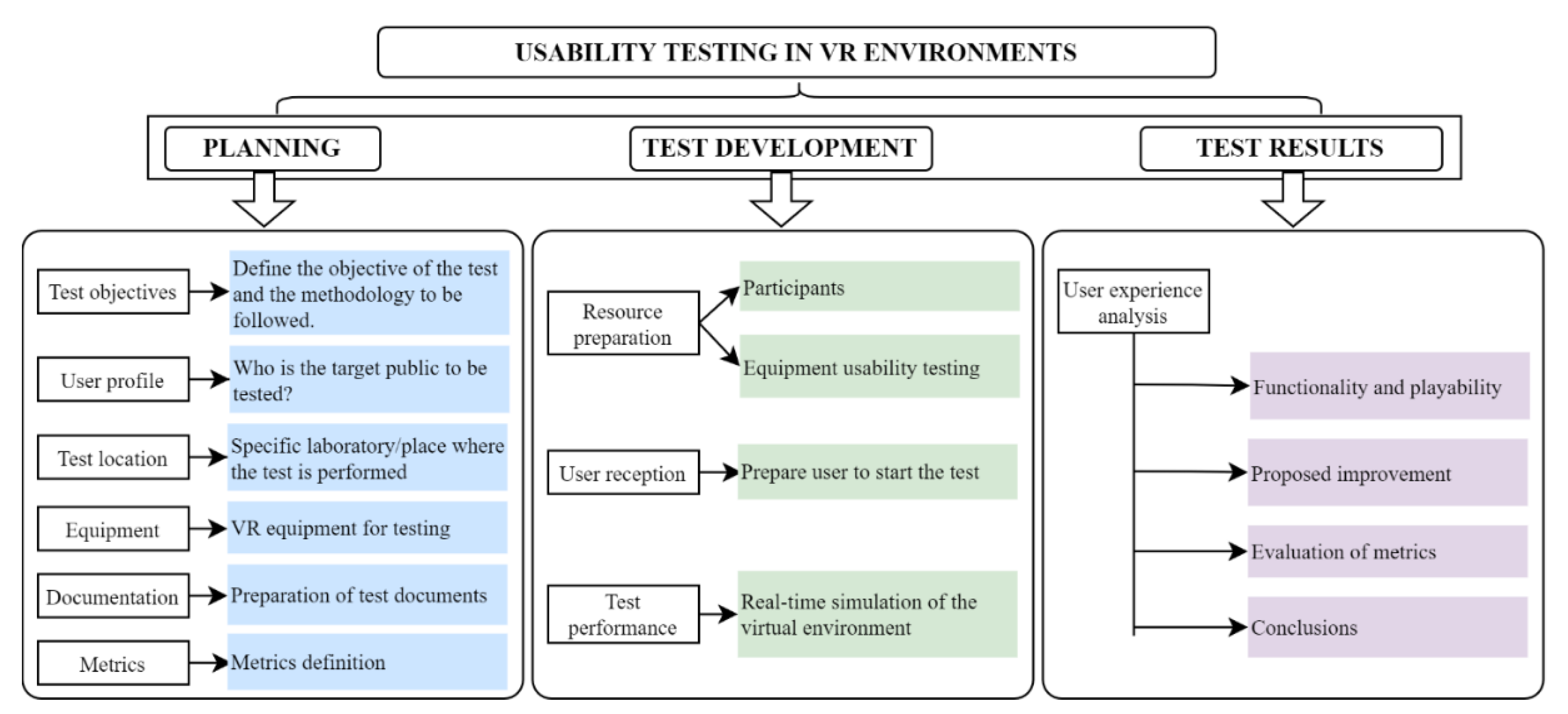

4.2. Usability Testing Methodology

5. Case Study

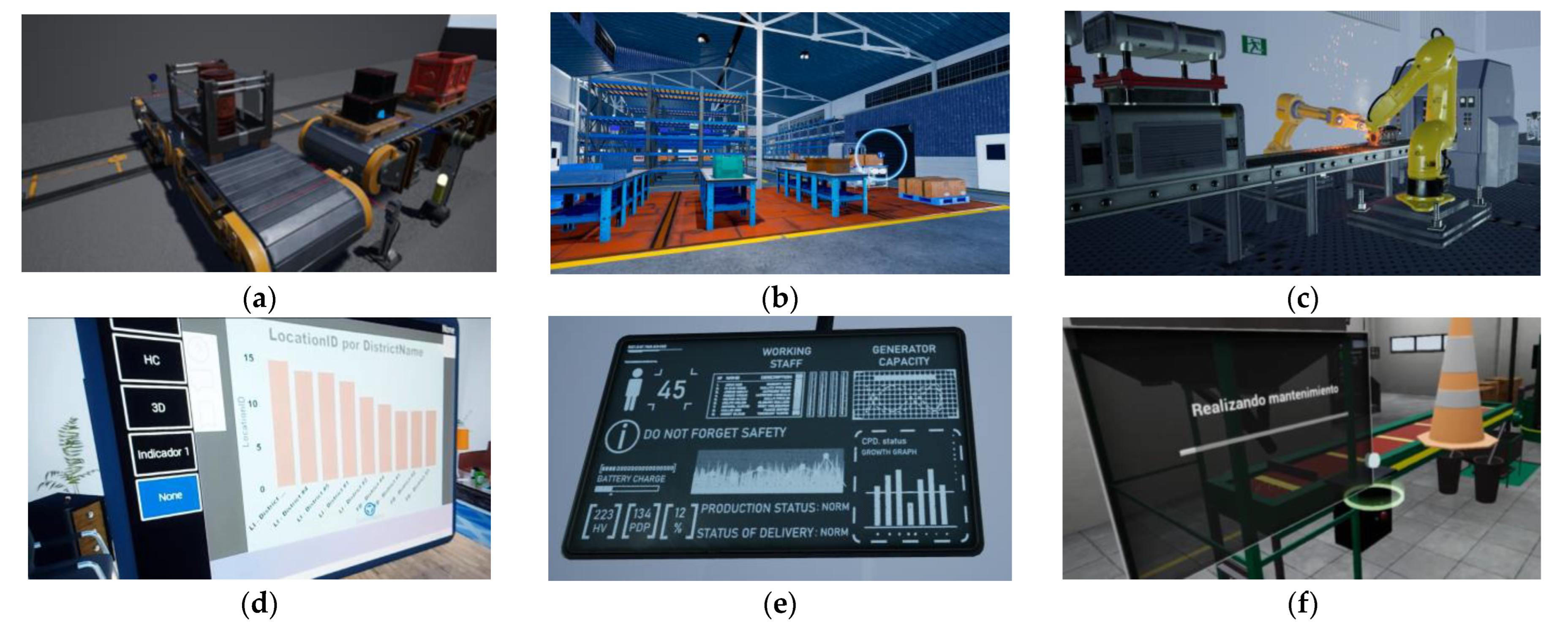

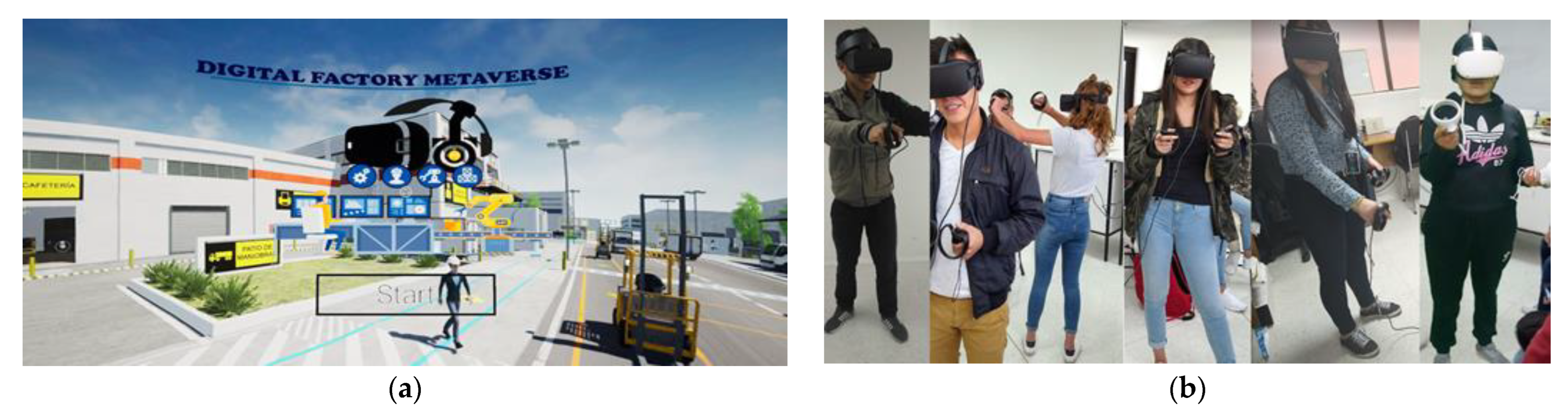

5.1. Digital Factory Metaverse

5.2. Experimental Design

5.2.1. Participants

- Group 1—eight participants, none of whom had used VR devices before.

- Group 2—six participants with previous experience of video games and virtual worlds although not with VR.

- Group 3—six participants who had some previous experience with managing virtual worlds and using VR equipment.

5.2.2. Apparatus

5.2.3. Procedure

6. Results

6.1. Effective Team Collaboration in the 3D Virtual Environment

6.1.1. 3D Visual Environment/Metaverse

- Presence—The environment developed in the case study can stimulate users’ immersion because it engages their senses through the perceptions generated by the brain. In addition, it can encourage every participant to take part through visual cues, sounds, texts, and relevant simulations that help them process, in real time, the information given in the virtual scenario.

- Realism—We focused on a virtual environment with high-quality, realistic textures that allow users to believe the virtual environment is real. For this reason, the development process used the UE4 graphics engine to integrate images, assets, and components that support navigation in which objects appear hyper-realistic, as in real life.

- Interactivity—The interactivity of the VR application lets users move and navigate with the controllers through the entire virtual environment in real time.

6.1.2. Multi-User Mode of the Metaverse Application

6.2. Small-Scale Metaverse Application: Smart Factory

6.3. Usability Testing

6.3.1. Metrics Definition

6.3.2. Functionality and Playability

6.3.3. Proposed Improvement

- P1—Compared to traditional virtual technology, workgroup users using VR in the virtual world may experience a higher level of (a) presence, (b) realism, and (c) interactivity.

- P2—After using VR, users also experience a higher level of (a) social presence and (b) control over their self-expression with their avatars.

- P3—Users of the virtual world have a higher level of information processing.

- P4—Users of the virtual world have a higher level of communication support at the event.

- P5—VR virtual environments developed with a graphics engine such as UE4 demonstrate a high level of realism compared to traditional virtual scenarios.

- P6—The 3D virtual world can function as a small-scale metaverse. Most users experienced near-realistic training, with training efficiency comparable to that of a real environment.

- P7—VR technology and virtual worlds can help institutions and companies save costs related to transportation logistics, the purchase of supplies and materials, and risk prevention in hazardous environments.

- P1—The choice of VR device is critical. Students who tested with Oculus Rift headsets and Touch controllers experienced dizziness after half an hour of use, whereas users with Meta Quest 2 headsets did not, which is mainly due to the display resolution and the integrated motion-tracking sensors.

- P2—The avatars in the current version of the application are preliminary, and several users said they wanted to customize their avatars.

- P3—The application’s functionality may be limited in terms of graphics and playability on low-end computers.

- P4—Although most users were tested in the laboratory, users are expected to be able to access the VR equipment from their homes in the future.

- P5—Navigation and interaction with the content worked at a preliminary level on first use. New students who were starting with the VR world found it difficult to become accustomed to the controls and interactions within the application. However, users who had already tried VR or video games learned very quickly and were very satisfied during the tests. With more practice, users are expected to get used to the controls without difficulty.

6.3.4. Evaluation of Metrics

6.4. Discussion

7. Contribution to Research

8. Practical Implications

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Brookes, J.; Warburton, M.; Alghadier, M.; Mon-Williams, M.; Mushtaq, F. Studying human behavior with virtual reality: The Unity Experiment Framework. Behav. Res. 2020, 52, 455–463.

- Park, S.M.; Kim, Y.G. A Metaverse: Taxonomy, Components, Applications, and Open Challenges. IEEE Access 2022, 10, 4209–4251.

- Fillatreau, P.; Fourquet, J.Y.; Le Bolloc’H, R.; Cailhol, S.; Datas, A.; Puel, B. Using virtual reality and 3D industrial numerical models for immersive interactive checklists. Comput. Ind. 2013, 64, 1253–1262.

- He, Z.; Rosenberg, K.T.; Perlin, K. Exploring configuration of mixed reality spaces for communication. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–6.

- Beier, G.; Ullrich, A.; Niehoff, S.; Reißig, M.; Habich, M. Industry 4.0: How it is defined from a sociotechnical perspective and how much sustainability it includes—A literature review. J. Clean. Prod. 2020, 259, 120856.

- Juřík, V.; Herman, L.; Kubíček, P.; Stachoň, Z.; Šašinka, Č. Cognitive aspects of collaboration in 3D virtual environments. In Proceedings of the International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences, Prague, Czech Republic, 12–19 July 2016; Volume 41, pp. 663–670.

- Connect 2021: Nuestra Visión del Metaverso. 2022. Available online: https://about.fb.com/ltam/news/2021/10/connect-2021-nuestra-vision-del-metaverso/ (accessed on 25 January 2022).

- Carruth, D.W. Virtual reality for education and workforce training. In Proceedings of the 2017 15th International Conference on Emerging eLearning Technologies and Applications (ICETA), Stary Smokovec, Slovakia, 26–27 October 2017; pp. 1–6.

- Santos, K.; Loures, E.; Piechnicki, F.; Canciglieri, O. Opportunities assessment of product development process in Industry 4.0. Procedia Manuf. 2017, 11, 1358–1365.

- Landherr, M.; Schneider, U.; Bauernhansl, T. The Application Center Industrie 4.0—Industry-driven manufacturing, research and development. Procedia CIRP 2016, 57, 26–31.

- Rupp, M.; Schneckenburger, M.; Merkel, M.; Börret, R.; Harrison, D. Industry 4.0: A Technological-Oriented Definition Based on Bibliometric Analysis and Literature Review. J. Open Innov. Technol. Mark. Complex. 2021, 7, 68.

- Quiroga-Parra, D.J.; Torrent-Sellens, J.; Murcia-Zorrilla, C.P. Las tecnologías de la información en América Latina, su incidencia en la productividad: Un análisis comparado con países desarrollados. Dyna 2017, 84, 281–290.

- Xu, L.D.; Xu, E.L.; Li, L. Industry 4.0: State of the art and future trends. Int. J. Prod. Res. 2018, 56, 2941–2962.

- Zhong, R.Y.; Xu, X.; Klotz, E.; Newman, S.T. Intelligent Manufacturing in the Context of Industry 4.0: A Review. Engineering 2017, 3, 616–630.

- Lu, Y. Industry 4.0: A survey on technologies, applications and open research issues. J. Ind. Inf. Integr. 2017, 6, 1–10.

- Zaimovic, T. Setting speed-limit on Industry 4.0—An outlook of power-mix and grid capacity challenge. Procedia Comput. Sci. 2019, 158, 107–115.

- Roldán, J.J.; Crespo, E.; Martín-Barrio, A.; Peña-Tapia, E.; Barrientos, A. A training system for Industry 4.0 operators in complex assemblies based on virtual reality and process mining. Robot. Comput. Integr. Manuf. 2019, 59, 305–316.

- Ottogalli, K.; Rosquete, D.; Amundarain, A.; Aguinaga, I.; Borro, D. Flexible framework to model Industry 4.0 processes for virtual simulators. Appl. Sci. 2019, 9, 4983.

- Liagkou, V.; Salmas, D.; Stylios, C. Realizing virtual reality learning environment for industry 4.0. Procedia CIRP 2019, 79, 712–717.

- Büchi, G.; Cugno, M.; Castagnoli, R. Smart factory performance and Industry 4.0. Technol. Forecast. Soc. Chang. 2020, 150, 119790.

- Shi, Z.; Xie, Y.; Xue, W.; Chen, Y.; Fu, L.; Xu, X. Smart factory in Industry 4.0. Syst. Res. Behav. Sci. 2020, 37, 607–617.

- Jones, M.D.; Hutcheson, S.; Camba, J.D. Past, present, and future barriers to digital transformation in manufacturing: A review. J. Manuf. Syst. 2021, 60, 936–948.

- Żywicki, K.; Zawadzki, P.; Górski, F. Virtual reality production training system in the scope of intelligent factory. In Proceedings of the International Conference on Intelligent Systems in Production Engineering and Maintenance, Wroclaw, Poland, 17–18 September 2017; Springer: Cham, Switzerland, 2017; pp. 450–458.

- Wittenberg, C.; Bauer, B.; Stache, N. A smart factory in a laboratory size for developing and testing innovative human-machine interaction concepts. In Proceedings of the International Conference on Applied Human Factors and Ergonomics, Washington, DC, USA, 25–29 July 2019; Springer: Cham, Switzerland, 2019; pp. 160–166.

- Damiani, L.; Demartini, M.; Guizzi, G.; Revetria, R.; Tonelli, F. Augmented and virtual reality applications in industrial systems: A qualitative review towards the industry 4.0 era. IFAC-PapersOnLine 2018, 51, 624–630.

- Radhakrishnan, U.; Koumaditis, K.; Chinello, F. A systematic review of immersive virtual reality for industrial skills training. Behav. Inf. Technol. 2021, 40, 1310–1339.

- Radianti, J.; Majchrzak, T.A.; Fromm, J.; Wohlgenannt, I. A systematic review of immersive virtual reality applications for higher education: Design elements, lessons learned, and research agenda. Comput. Educ. 2020, 147, 103778.

- Jensen, L.; Konradsen, F. A review of the use of virtual reality head-mounted displays in education and training. Educ. Inf. Technol. 2018, 23, 1515–1529.

- Ozcinar, C.; Smolic, A. Visual attention in omnidirectional video for virtual reality applications. In Proceedings of the 2018 Tenth International Conference on Quality of Multimedia Experience (QoMEX), Cagliari, Italy, 29 May–1 June 2018; pp. 1–6.

- Sutcliffe, A.G.; Poullis, C.; Gregoriades, A.; Katsouri, I.; Tzanavari, A.; Herakleous, K. Reflecting on the design process for virtual reality applications. Int. J. Hum. Comput. Interact. 2019, 35, 168–179.

- Scavarelli, A.; Arya, A.; Teather, R.J. Virtual reality and augmented reality in social learning spaces: A literature review. Virtual Real. 2021, 25, 257–277.

- Guo, Z.; Zhou, D.; Chen, J.; Geng, J.; Lv, C.; Zeng, S. Using virtual reality to support the product’s maintainability design: Immersive maintainability verification and evaluation system. Comput. Ind. 2018, 101, 41–50.

- Lee, L.H.; Braud, T.; Zhou, P.; Wang, L.; Xu, D.; Lin, Z.; Kumar, A.; Bermejo, C.; Hui, P. All one needs to know about metaverse: A complete survey on technological singularity, virtual ecosystem, and research agenda. arXiv 2021, arXiv:2110.05352.

- Ning, H.; Wang, H.; Lin, Y.; Wang, W.; Dhelim, S.; Farha, F.; Ding, J.; Daneshmand, M. A survey on metaverse: The state-of-the-art, technologies, applications, and challenges. arXiv 2021, arXiv:2111.09673.

- Seok, W.H. Analysis of Metaverse Business Model and Ecosystem. Electron. Telecommun. Trends 2021, 36, 81–91.

- Song, S.W.; Chung, D.H. Explication and Rational Conceptualization of Metaverse. Informatiz. Policy 2021, 28, 3–22.

- Duan, H.; Li, J.; Fan, S.; Lin, Z.; Wu, X.; Cai, W. Metaverse for social good: A university campus prototype. In Proceedings of the 29th ACM International Conference on Multimedia, Virtual Event, 20–24 October 2021; pp. 153–161.

- Anacona, J.D.; Millán, E.E.; Gómez, C.A. Application of metaverses and the virtual reality in teaching. Entre Cienc. Ing. 2019, 13, 59–67.

- Suzuki, S.N.; Kanematsu, H.; Barry, D.M.; Ogawa, N.; Yajima, K.; Nakahira, K.T.; Yoshitake, M. Virtual Experiments in Metaverse and their Applications to Collaborative Projects: The framework and its significance. Procedia Comput. Sci. 2020, 176, 2125–2132.

- Chen, C.J.; Toh, S.C.; Fauzy, W.M. The Theoretical Framework for Designing Desktop Virtual Reality-Based Learning Environments. J. Interact. Learn. Res. 2004, 15, 147–167.

- Steffen, J.H.; Gaskin, J.E.; Meservy, T.O.; Jenkins, J.L.; Wolman, I. Framework of Affordances for Virtual Reality and Augmented Reality. J. Manag. Inf. Syst. 2019, 36, 683–729.

- Kim, W.S. Edge Computing Server Deployment Technique for Cloud VR-based Multi-User Metaverse Content. J. Korea Multimed. Soc. 2021, 24, 1090–1100.

- He, Z.; Du, R.; Perlin, K. CollaboVR: A Reconfigurable Framework for Creative Collaboration in Virtual Reality. In Proceedings of the 2020 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Porto de Galinhas, Brazil, 9–13 November 2020; pp. 542–554.

- Chen, X.; Wang, M.; Wu, Q. Research and development of virtual reality game based on unreal engine 4. In Proceedings of the 2017 4th International Conference on Systems and Informatics (ICSAI), Hangzhou, China, 11–13 November 2017; pp. 1388–1393.

- Unreal Engine: The Most Powerful Real-Time 3D Creation Tool. 2021. Available online: https://www.unrealengine.com/en-US/ (accessed on 25 January 2022).

- Urrutia, G.A.M.; López, C.E.N.; Martínez, L.F.F.; Corral, M.A.R. Procesos de desarrollo para videojuegos. Cult. Cient. Tecnol. 2015, 37, 25–39. Available online: https://148.210.21.18/ojs/index.php/culcyt/article/view/299 (accessed on 25 January 2022).

- Gómez Sánchez, M. Test de usabilidad en entornos de Realidad Virtual. No Solo Usabilidad 2018, 17. Available online: https://www.nosolousabilidad.com/articulos/test_usabilidad_realidad_virtual.htm (accessed on 25 January 2022).

- Schmeil, A. Designing collaboration experiences for 3D virtual worlds. Comput. Sci. 2012, 226, 209. Available online: https://www.semanticscholar.org/paper/Designing-collaboration-experiences-for-3D-virtual-Schmeil/8f0c4910636c8cfc1a6033c1e23467aafa1e301d (accessed on 25 January 2022).

- Lee, H.; Woo, D.; Yu, S. Virtual Reality Metaverse System Supplementing Remote Education Methods: Based on Aircraft Maintenance Simulation. Appl. Sci. 2022, 12, 2667.

- Rebollo, C.; Gasch, C.; Remolar, I.; Delgado, D. Learning First Aid with a Video Game. Appl. Sci. 2021, 11, 11633.

- Kim, H.K.; Park, J.; Choi, Y.; Choe, M. Virtual reality sickness questionnaire (VRSQ): Motion sickness measurement index in a virtual reality environment. Appl. Ergon. 2018, 69, 66–73.

- Alpala, L.O.; Alemany, M.D.M.E.; Peluffo-Ordoñez, D.H.; Bolaños, F.; Rosero, A.M.; Torres, J.C. Methodology for the design and simulation of industrial facilities and production systems based on a modular approach in an “Industry 4.0” context. DYNA 2018, 85, 243–252.

| Controllers | Function |
|---|---|
| Motion controllers | Motion controllers represent the physical controllers in VR experiences. Each controller consists of a motion controller and several motion components. |
| Laser motion controller | It equips the motion controller with a laser (implemented as a motion component) as the primary means of interaction with other players. The laser motion component attaches a laser pointer to the controller, which emits a laser trace and lets the player interact remotely. |
| Hands motion controller | It makes the motion controller appear as a skeletal mesh of a hand. It primarily serves as the base class for all hand motion controllers. |
| Grab motion component | It allows the motion controller to grab or grasp actors. When a VR hand attempts to grab or grasp an actor, it searches the actor’s mesh for sockets and selects the socket that best matches the motion controller. |
| Radial menu | The radial menu motion component generates a circular set of buttons that can be selected with the thumb of the same hand or with the laser of the other hand. The radial menu is a VR-only user interface. |
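The grab and radial menu behaviors in the controllers table can be sketched as two small functions. This Python sketch assumes that the "most appropriate" socket is simply the one closest to the hand, and that the radial menu maps the thumbstick direction to equal angular sectors numbered clockwise from the top while ignoring small deflections; both criteria and all names are assumptions for illustration.

```python
import math
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]


def nearest_socket(hand_position: Vec3, sockets: Dict[str, Vec3]) -> Optional[str]:
    """Return the name of the socket closest to the hand, or None if the mesh has none."""
    if not sockets:
        return None
    return min(sockets, key=lambda name: math.dist(hand_position, sockets[name]))


def radial_menu_index(thumb_x: float, thumb_y: float, n_buttons: int,
                      dead_zone: float = 0.3) -> Optional[int]:
    """Map a thumbstick deflection to one of n_buttons sectors, clockwise from the top."""
    if n_buttons < 1:
        raise ValueError("the radial menu needs at least one button")
    if math.hypot(thumb_x, thumb_y) < dead_zone:
        return None  # thumb resting near the center: nothing is selected
    # atan2(x, y) is the angle measured from "up" (+y) towards "right" (+x).
    angle = math.atan2(thumb_x, thumb_y) % (2 * math.pi)
    sector = 2 * math.pi / n_buttons
    # Offset by half a sector so that button 0 is centered on "up".
    return int(((angle + sector / 2) % (2 * math.pi)) // sector)
```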

| Planning | | Test Development | | Test Results | |
|---|---|---|---|---|---|
| Test goals | Performing online mutual playback tests for a smart factory production plant | Resource preparation | Participants: prior support and training were provided to each user. Participants were divided into 3 groups, which took part in different sessions of approximately 2 h each. | User experience analysis | Functionality and playability |
| User profile | Engineering students, 20 participants in total | | Equipment: a specialized VR laboratory with a complete equipment kit (6 sets in total) was available for testing. Users residing at home could use their personal computers. | Improvement strategy | |
| Test setting | Laboratory and users’ places of residence | User reception | Users were trained as a group on the use of the controls, the multi-user mode, and the immersive VR experience prior to the test. | Metrics evaluation | |
| Equipment | Specialized VR equipment as detailed in Section 5.2.2. | Testing | Each user had a VR device connected to the Internet, and the application was run through a session created by the main tutor. Each user within the groups experienced real-time immersion in the virtual world to work on team tasks. | Conclusions | |
| Documentation | Usability document | | | | |
| Metrics | Metrics | | | | |

| Technologies | Type of Specific Applications Integrated in the Factory |
|---|---|
| Radio frequency identification (RFID) | Electronic sensors and actuators, RFID integrated into machines. |
| Cyber-physical systems (CPS) | Smart factory connected in real time with all processes. |
| Big data and data analytics (BD) | Management and analysis of big production data. |
| Cloud computing | External data storage in the so-called cloud, with fast response capability. |
| Human–machine interface (HMI) | Interfaces and monitors in the process. |
| Manufacturing execution system (MES) | Control of the main processes through a real-time connection. |
| Computer-aided maintenance management (CMMS) | Maintenance of machinery and equipment. |
| Collaborative platforms | Integration of different platforms in real time. |
| Augmented reality, virtual reality and simulation | Use of VR and simulation for training and education with users. |
| Artificial intelligence (AI) | People, machines, processes, and transport systems are controlled with AI. |
| Digital twin | Virtual representation of the real factory’s installations, machines, and products. |
| Collaborative robotics | Integration of different types of robots for repetitive tasks. |

| Metric | Description |
|---|---|
| User Experience | |
| Learning | Assess how quickly the user learns to operate the device and how this process can be improved. |
| Help | Evaluate when the user requires help in handling the device and the most effective way to provide it without influencing their actions. |
| Application functionality | Interactivity, walkthroughs, multimedia, and object simulations. |
| 3D virtual worlds with VR vs. other technologies | User experience with different 3D simulation technologies. |
| Visualization of 2D and 3D graphics in the application | The user’s perception of the quality of the graphics will be assessed. |
| Navigation and interaction | Since VR is still new to most users, the user’s experience with navigation and interaction in the application will be evaluated. |
| Content developed for smart factory | The user’s immersive experience in the virtual world scenario will be assessed against reality. |
| Preparation and Control of Devices | |
| Test location | Laboratory or user’s home. |
| Critical errors | Related to planning, VR equipment, space, and staff logistics. |
| Handling of controls | The learning and appropriate use of the controls for the different functions of the application will be assessed. |
| Use of VR equipment | The comfort, handling, and frame rate (FPS) of all VR equipment will be assessed. |
| Multi-user | |
| Communication | The most effective form of communication between users, inside and outside the application, will be assessed. |
| Team collaboration | Effective collaboration to perform tasks as a team will be assessed. |
| Multi-user connectivity | Stable Internet connectivity for multimedia playback and online multi-user connection. |
| Avatars | User’s perception of the avatar within the virtual world. |
| Immersion Effects | |
| General discomfort | The participant presents general body discomfort during and after the test. |
| Vertigo | Sensation perceived by the participant due to slight movements and heights inside the VR application. |
| Sweating | The participant perspires on the forehead, hands, and other parts of the body during the test. |
| Nausea | Sensation of feeling like vomiting during or after the test. |
| Fatigue | Fatigue of parts of the body due to standing for a period of time. |
| Stomach awareness | Sensation of dizziness or discomfort in the stomach during or after the test. |
| Difficulty focusing | The participant has difficulty concentrating on the test. |
| Blurred vision | The participant’s vision is too blurred to adequately see the graphics through the VR device lenses. |

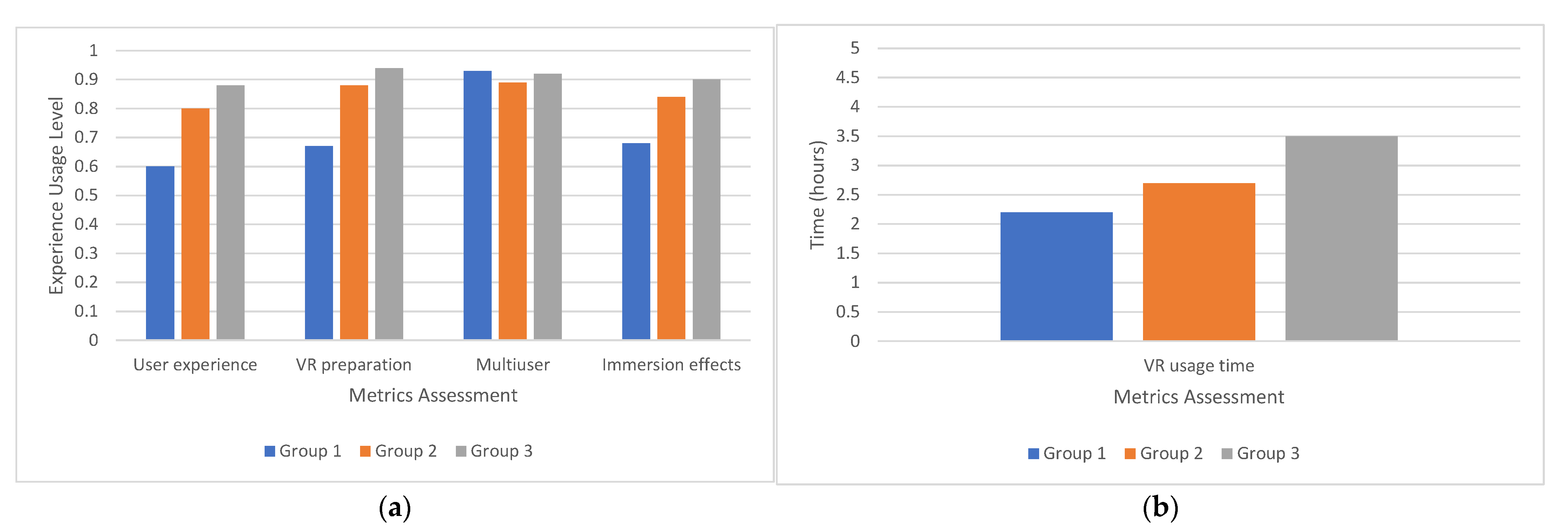

| Metric | Results |
|---|---|
| User experience | The first metric evaluated is the user experience with VR, which shows whether participants with previous experience of VR and video games were more satisfied with the gameplay than the other groups (Figure 8a). The evaluation included 20 participants. The most notable finding is that 88% of the male participants responded positively in terms of user experience and playability, compared with 70% of the female participants. On average, men spent 2.8 h using VR and women 2.4 h. Regarding expectations, all participants stated that they would use VR again for training and coaching. |
| Preparation and control of devices | The second metric, preparation and control of the devices and start-up of the application, shows that users with previous experience needed less preparation and learning time, whereas for the first group the preparation was entirely new and they adapted over several sessions with the VR application (Figure 8a). The evaluation included 20 participants. The most notable finding is that 95% of men and 89% of women were able to adapt quickly to preparing and controlling the VR devices. For participants using VR devices for the first time, learning took longer; however, the second time they used the devices, everything went faster and without complications. |
| Multi-user | For metric 3 (multi-user), group experience, communication, collaboration, connectivity, and avatars were evaluated; all students rated their experience favorably (Figure 8a). The most notable finding is that 93% of men and 90% of women had a good experience with the multi-user mode in terms of both communication and group collaboration. The multi-user mode proved very practical for group practice in the metaverse, and all participants stated that during the test session they perceived a realism reminiscent of the real world. |
| Immersion effect | The immersion-effect metrics were defined using some of the items of the Virtual Reality Sickness Questionnaire (VRSQ) presented in [51] as a reference. Because our study focuses on proposing a VR system framework for an experimental metaverse, we considered the questionnaire items closest to the general evaluation proposed in this research: general discomfort, vertigo, sweating, nausea, fatigue, stomach awareness, difficulty focusing, and blurred vision. This fourth metric evaluated the side effects that VR may cause after use. The evaluation involved 20 participants. The analysis showed that most participants in the third group did not present dizziness or serious side effects; by contrast, the first group, which had no experience with VR equipment or video games, presented slight dizziness and eye fatigue, which is also related to how long they used the VR goggles (Figure 8a,b). Some side effects caused by VR devices may disappear with frequent use, as observed in the more experienced participants who had been using these devices for a long time. |
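The immersion-effect items defined in the metrics table could be aggregated per group as follows. This Python sketch assumes each item is rated on a 0 (none) to 3 (severe) scale, as in sickness questionnaires such as the VRSQ [51], and normalizes the sum to a 0 to 100 score; it is not the official VRSQ scoring, which groups its own item set into oculomotor and disorientation subscales, and it uses no data from this study.

```python
from statistics import mean
from typing import Dict, List

# Items from the immersion-effects metrics; the 0-3 rating scale is an assumption.
ITEMS = ("general_discomfort", "vertigo", "sweating", "nausea", "fatigue",
         "stomach_awareness", "difficulty_focusing", "blurred_vision")
MAX_RATING = 3  # 0 = none, 1 = slight, 2 = moderate, 3 = severe

Ratings = Dict[str, int]


def sickness_score(ratings: Ratings) -> float:
    """Normalize one participant's item ratings to a 0-100 score."""
    for item in ITEMS:
        if not 0 <= ratings[item] <= MAX_RATING:
            raise ValueError(f"rating for {item} must be between 0 and {MAX_RATING}")
    return 100 * sum(ratings[item] for item in ITEMS) / (MAX_RATING * len(ITEMS))


def symptom_incidence(group: List[Ratings]) -> Dict[str, float]:
    """Percentage of participants in a group who reported each symptom (rating > 0)."""
    return {item: 100 * sum(1 for r in group if r[item] > 0) / len(group)
            for item in ITEMS}


def group_mean_score(group: List[Ratings]) -> float:
    """Mean normalized score over all participants in a group."""
    return mean(sickness_score(r) for r in group)
```

Applied to each group's ratings separately, `symptom_incidence` would yield per-group percentages in the same form as those reported for the other metrics.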

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alpala, L.O.; Quiroga-Parra, D.J.; Torres, J.C.; Peluffo-Ordóñez, D.H. Smart Factory Using Virtual Reality and Online Multi-User: Towards a Metaverse for Experimental Frameworks. Appl. Sci. 2022, 12, 6258. https://doi.org/10.3390/app12126258

Alpala LO, Quiroga-Parra DJ, Torres JC, Peluffo-Ordóñez DH. Smart Factory Using Virtual Reality and Online Multi-User: Towards a Metaverse for Experimental Frameworks. Applied Sciences. 2022; 12(12):6258. https://doi.org/10.3390/app12126258

Chicago/Turabian Style: Alpala, Luis Omar, Darío J. Quiroga-Parra, Juan Carlos Torres, and Diego H. Peluffo-Ordóñez. 2022. "Smart Factory Using Virtual Reality and Online Multi-User: Towards a Metaverse for Experimental Frameworks" Applied Sciences 12, no. 12: 6258. https://doi.org/10.3390/app12126258