1. Introduction

As a significant part of smart city construction, an intelligent transportation system (ITS) acts as a powerful tool to enhance the quality of city transportation. Benefiting from the rapid development of the Internet of Things [

1], the ITS can provide key information to aid in managing traffic through the connection between traffic sensors and the Internet. For instance, traffic police can inspect illegal parking and allocate traffic resources more efficiently with the deployment of automatic license plate recognition (LPR) infrastructure [

2] or identify potentially illegal or dangerous driving behaviors by assessing driving style [

3]. Moreover, with the vigorous development of mobile applications and intelligent vehicle terminals, every driver can easily obtain information about traffic congestion location predictions [

4] and recommended driving routes. In addition, the traditional service industry relying on the road network has also been benefited in a variety of ways, such as the birth of crowdsourced logistics in the express industry, which could coordinate taxi passenger and express delivery services [

5], and the location planning of business districts in the urban layout [

6]. Therefore, ITS benefits not only traffic managers but also all participants including drivers, pedestrians, and so on. For the purpose of strengthening the foundation of ITSs, more researchers have funneled study into the field of traffic flow prediction.

Based on different methods, traffic flow prediction analyzes and generalizes the traffic characteristics of both common and special areas (e.g., schools and hospitals) in both general and special periods (e.g., during congestion or after accidents) and generates accurate predictions of future traffic states [

7]. Current traffic prediction methods fall into two types: model-driven methods and data-driven methods [

8,

9,

10]. However, the former methods have the disadvantage of being unable to handle cases affected by special factors or events such as weather conditions and traffic accidents.

Thus, in order to take special situations into account, current studies mainly adopt data-driven deep learning methods, which are capable of analyzing multi-dimensional data. Lint et al. [

11] modeled time series with a recurrent neural network (RNN). However, the RNN has the defects of gradient disappearance and long-term dependence, and therefore Ma et al. [

12] used a long short-term memory (LSTM) method to conduct a predictive analysis of the data collected from microwave sensors. By making use of a gate recurrent unit (GRU), an effective variant of LSTM, Fu et al. [

13] improved the speed forecast effectiveness. The above three studies only focused on either the temporal dependence or spatial dependence; they did not consider both comprehensively. Although Yao et al. [

14] focused on temporal–spatial correlation, and the proposed spatial–temporal dynamic network (STDN) model combined the dynamic change in spatial correlation with the periodicity of time correlation, Yao’s study had the same disadvantage as the previous research, i.e., lanes at an intersection were considered as a whole and their differences were not distinguished [

15].

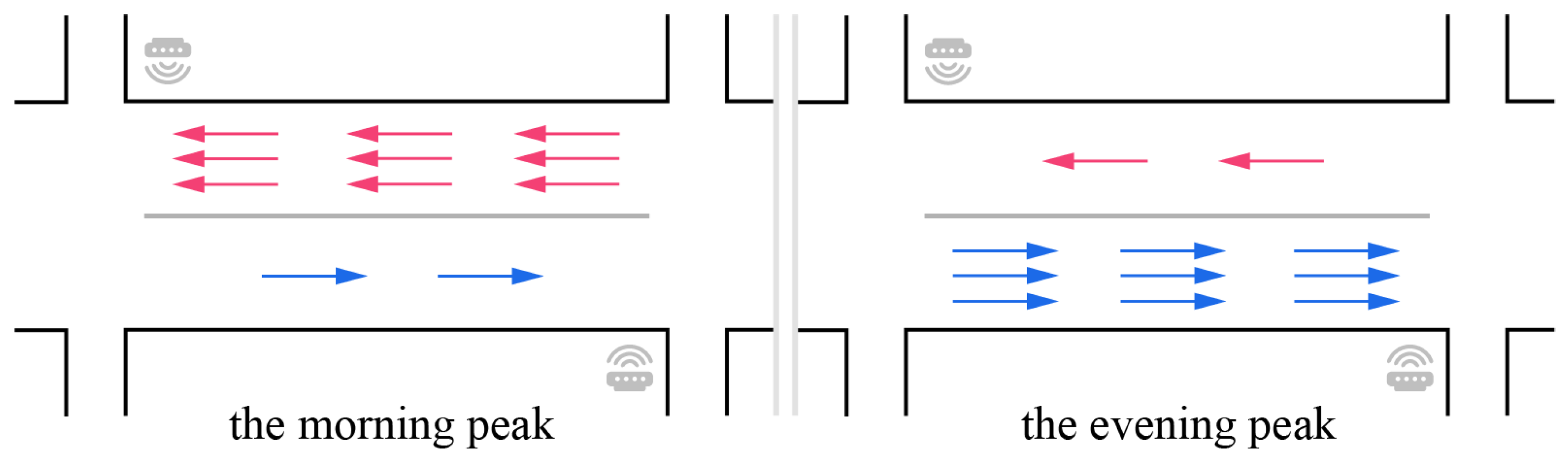

At different intersections and in different flow directions, owing to the high mobility of vehicles, lanes often show diverse traffic patterns [

15,

16]. As shown in

Figure 1, in the morning, represented by red arrows, the traffic flow directed from right to left has a larger scale, and the opposite is true in the evening peak. In view of the ever-increasing development of smart cities, traffic flow prediction technology should detect dynamic changes in lanes more quickly and efficiently, with multiple trajectory data. Accordingly, from a data-driven perspective, a technology of traffic prediction at the lane level emerges and becomes one of the most vital parts of an ITS.

The lane-level traffic prediction method has attracted the attention of researchers mainly because it can be applied to a variety of complex dynamic prediction scenarios. Based on the improvement of the existing prediction method, it could play a huge role in the future in applications such as unmanned driving, lane-level positioning [

17], and cloud video surveillance traffic detection [

18].

In order to focus on the temporal and spatial characteristics of traffic flow, Gu et al. [

19] used an entropy-based gray correlation method to analyze the correlation between lanes and an LSTM-GRU model to derive the spatial–temporal information matrix which is iterated to output the speed prediction. Ke et al. [

20] proposed an innovative two-stream multi-channel convolutional neural network (TMCNN) model, based on the view that different lanes at an intersection can be equated with diverse channels in an image. By introducing a theory of lane-changing behavior, Xie et al. [

21] established a model for lane-changing and its implementation based on a deep belief network (DBN) and LSTM. In addition, Lu et al. [

22] and Ma et al. [

23] utilized a convolutional LSTM network (ConvlTM) model to process temporal–spatial information.

All the above authors carried out in-depth studies on the temporal and spatial characteristics of traffic flow, but excessive emphasis on these characteristics will inevitably lead to the neglect of other heterogeneous data; for example, some time-varying characteristics are not taken into account. Consequently, in our work, considering static and dynamic road network structures, we propose a heterogeneous graph convolution model that takes advantage of the road occupancy rate, minimum visibility, and other data to minimize the deviations in prediction results caused by a single data source.

Inspired by some of the latest research on dynamic graphs [

24,

25], we propose generating dynamic graphs in an internal graph convolution network to determine the correlation between lanes. Since the impact of weather conditions and road occupancy on flow prediction cannot be ignored, we fuse other heterogeneous data in an external data fusion network. In addition, in order to mine the time-dependence of traffic flow, we deploy a codec network with a temporal mechanism.

In summary, regarding lane-level prediction, this study mainly demonstrates innovation and progress in the following three respects:

We propose a heterogeneous graph convolution model based on dynamic graph generation. In order to discover the spatial dependence of dynamic graphs, a graph convolutional network (GCN) is introduced into this model, and a temporal attention mechanism is applied to further study the time-dependence.

We analyze other heterogeneous data to obtain more potential information that has not been previously considered. That is, by incorporating multivariate data such as occupancy and minimum visibility into heterogeneous data fusion networks, we can reduce the loss of accuracy.

We conduct extensive experiments on two real-world datasets, and the results show that our proposed model can increase the precision of lane-level traffic state prediction.

2. Methodology

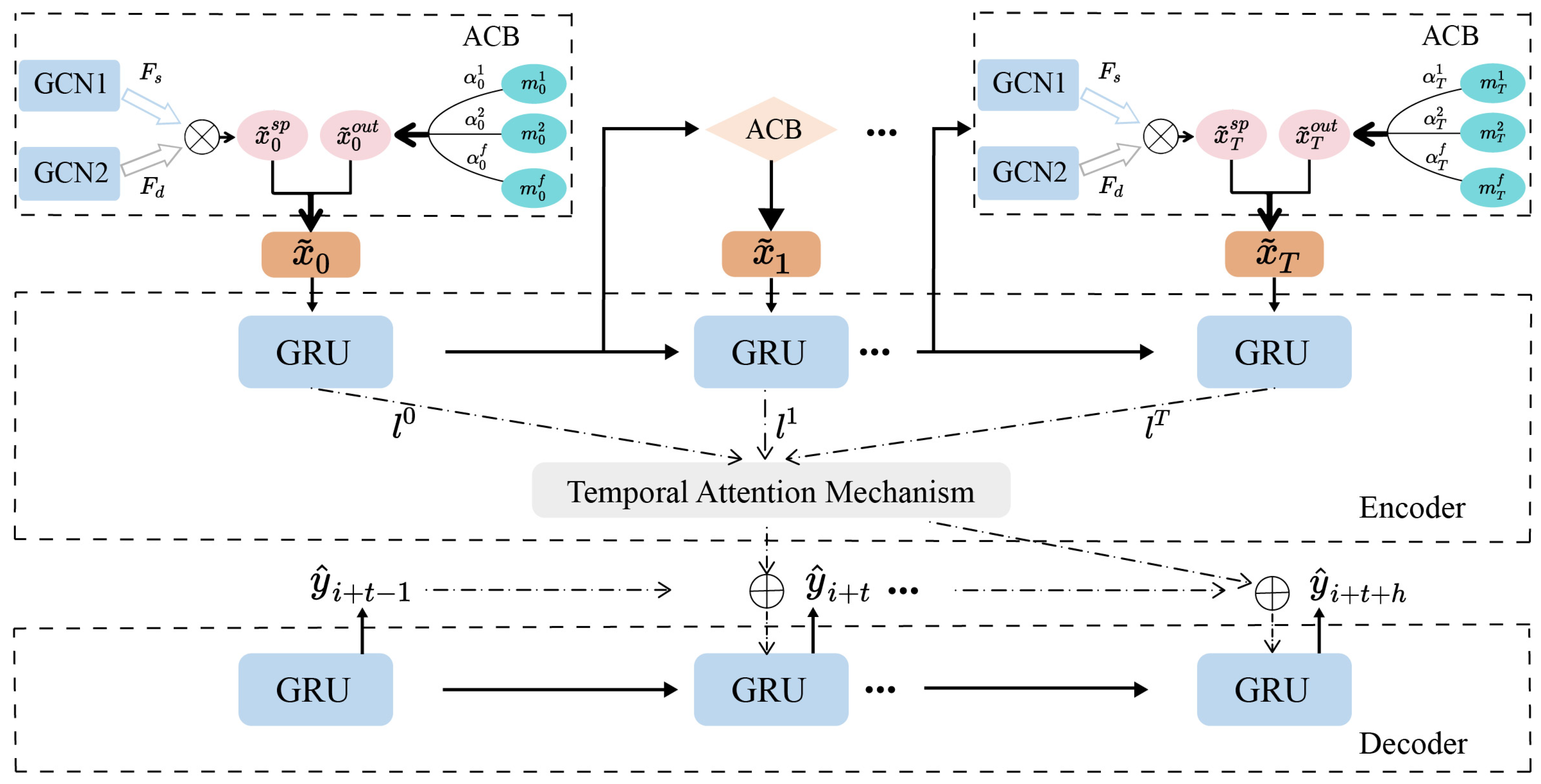

Figure 2 exhibits the model architecture of the heterogeneous graph convolutional network. The network consists of three main components: (1) an internal graph convolution network based on dynamic graphs; (2) an external heterogeneous data fusion network; and (3) a codec network based on an attention mechanism. The internal convolution network is integrated with the external heterogeneous data fusion network, and they are represented as an association convolution block (ACB). Here,

represents the output of the internal graph convolution network and

represents the final result of the external heterogeneous data fusion network.

The process shown in the whole frame starts from the internal convolution network of the ACB, i.e., the left part of the ACB shown in

Figure 2. The participating lanes are divided into two categories according to their relationship with the target lane: (1) for lanes at different intersections, the internal convolution network combines a dynamic graph matrix and a static adjacency matrix and (2) for lanes at the same intersection, only a dynamic graph matrix is generated, with no static adjacency matrix. Graph convolutional operations are performed according to the above conditions, and the outputs are fused into the final internal graph convolution result.

Regarding the external fusion network of the ACB, by adopting an attention mechanism, reasonable weights are allocated to the various parameters and then the results are fused with the convolution result of the internal graph to produce the outputs of the ACB.

In the last part of the whole framework, the encoder assigns the temporal weights to the hidden layers in the GRU units by utilizing the attention mechanism. After obtaining the context vector constructed in the encoder, the decoder outputs the final prediction result through iterative processing.

2.1. Internal Graph Convolution Network

Since traffic information can be regarded as graph signals, many researchers apply graph neural networks in traffic flow prediction to capture spatial characteristics in road networks. In this paper, we utilize the graph convolution network based on a spectral domain to mine the deep spatial dependency in static adjacency graphs and dynamic graphs.

First, according to the road topology, we define an undirected and weighted graph

, where

is the set of nodes,

N is the number of nodes, and

E is the set of links, representing the connectivity between nodes. The weights and static adjacency matrix of

G are calculated as follows:

where

denotes the distance between

i and

j.

is the static adjacency matrix, which has only two types of values: zero represents the fact that two nodes do not correlate and a non-zero value represents the correlation degree (i.e., the weight) between two nodes. Thus,

means that the smaller the distance between two nodes, the greater the correlation degree.

We apply Chebyshev polynomials to greatly reduce the operation convolution time:

where

is the coefficient of the Chebyshev polynomials and

is the maximum eigenvalue of the Laplace matrix. The recursive form of Chebyshev polynomials can be expressed as

, where

and

.

Graph convolution networks based on Chebyshev polynomials help to improve the efficiency but cannot sense dynamic changes in traffic patterns through the static adjacency matrix in Equation (

1). Taking the change in traffic flow in the morning and evening peaks as an example, during the early peak, the traffic flow on main roads between the suburbs and cities tends to increase sharply, and the traffic direction is from the suburbs to the cities. In contrast, during the evening peak, the number of vehicles driving from cities to the suburbs shows a growth trend. The static adjacency matrix only focuses on the influence of the distance between two nodes and ignores the dynamics caused by the change in vehicle flow at different times.

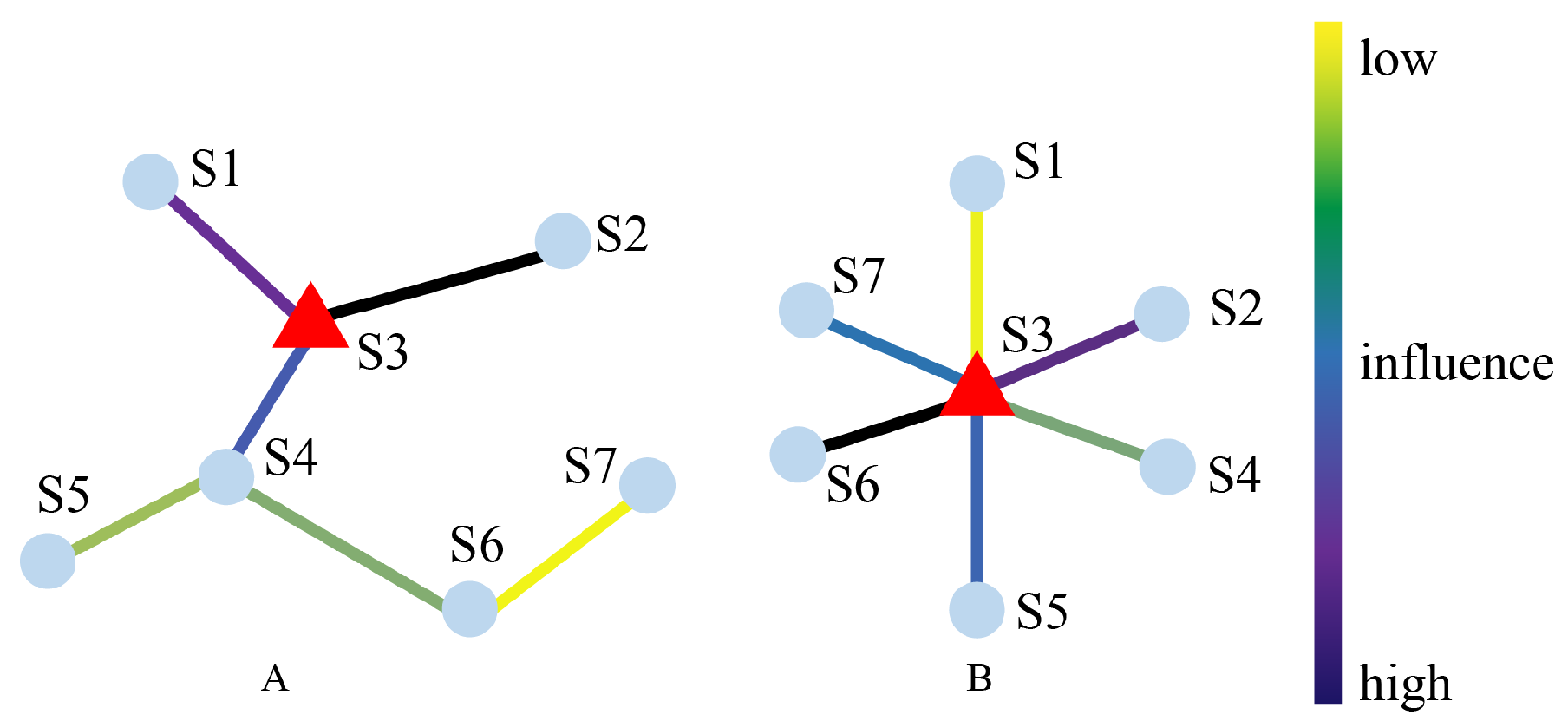

Figure 3 shows the correlation degree between nodes changing over time, where different colors denote different correlation degrees.

Apart from ignoring temporal variability, Equation (

1) also overlooks the impact of similar traffic patterns between nodes. As shown in

Figure 4, S3 is closest to S1, S2, and S4, and in consequence these links have higher weights in subfigure A. We assume that after the analysis of traffic patterns, the pattern of S3 is the most similar to that S6, i.e., their traffic flows are related during the morning and evening peaks, and congestion at S6 will spread to S3 in a short time. Then, we are able to draw the dynamic graph in subfigure B. Although S3 is far away from S6, they have the highest link weight.

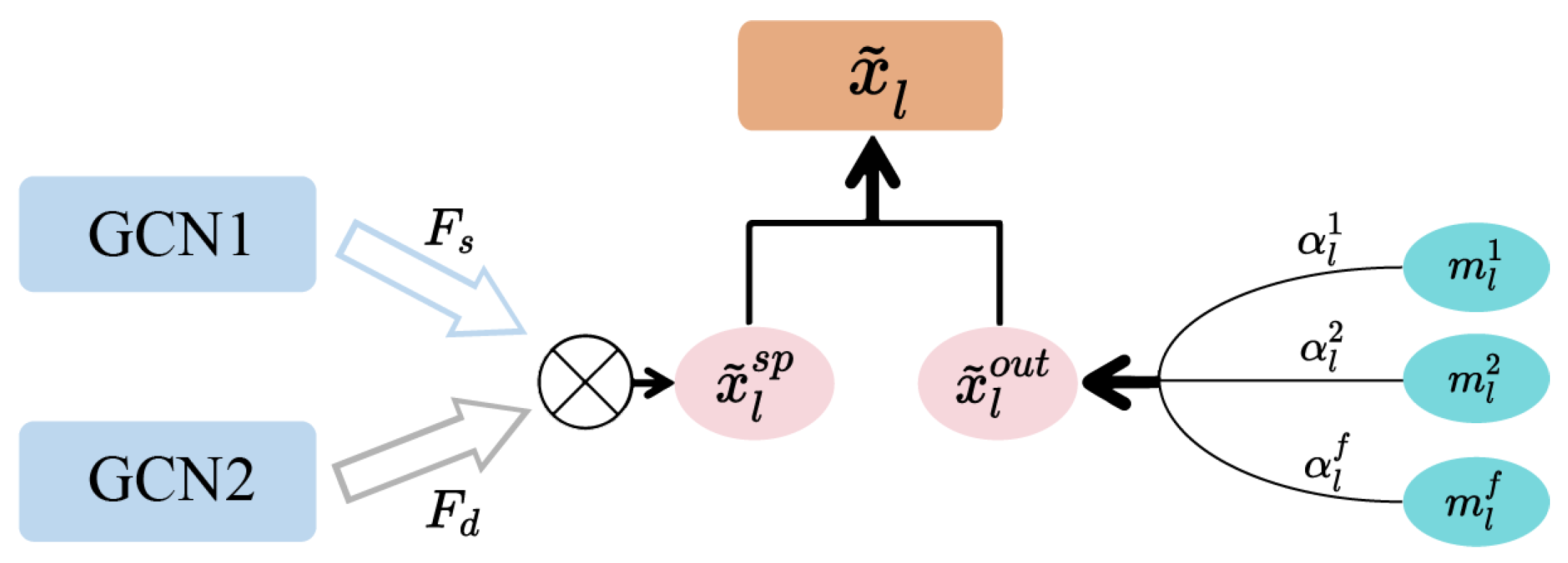

Considering the dynamic changes with time and traffic patterns, we innovatively propose the internal graph convolutional network based on dynamic graph generation.

Figure 5 shows the architecture of the network.

Before model training, in terms of the adjacency relationship with the target lane, we divide all participating lanes into same-intersection adjacency lanes

and different-intersection adjacency lanes

. Traffic flow parameters have the ability to reflect the congestion degree of the road network and the probability of accidents occurring. Therefore, we select the traffic flow as the metric and calculate the correlation degree of each node, using the Pearson correlation coefficient to generate the dynamic graph:

Equation (

3) shows the formula for the Pearson correlation coefficient, where

and

are the parameters of lane

i and lane

j at moment

l, respectively, and

Z is the amount of data for each lane.

in Equation (

4) is the dynamic graph matrix of the same-intersection lanes and

is the correlation degree between lane

i and lane

j at the same intersection. Similarly, we can derive the dynamic graph matrix

for different-intersection lanes.

When performing a graph convolution operation on same-intersection lanes, we utilize the dynamic matrix

to generate a convolution kernel

and convolute

at moment

l:

When performing a graph convolution operation on different-intersection lanes, we utilize the convolutional kernels

and

generated by the dynamic matrix

and the static adjacency matrix, respectively, to convolute

:

where

W is the trainable weight matrix and ⊙ denotes the element-wise product.

The final result of the internal graph convolution network is calculated by the fusion of

and

at moment l:

where

and

are convolution kernels and

and

c are biases corresponding to the kernels.

2.2. External Heterogeneous Data Fusion Network

Through previous experiments, it was found that one of the main reasons for the low accuracy of traffic flow prediction results is the fact that multi-source heterogeneous data are left out of consideration and data of only one type are acquired. According to the speed–flow equation for traffic, the nonlinear relationship between speed, flow, and road occupancy rate is so complex that we cannot confirm one variable by any other single variable. For instance, when at a flat peak, the speed of the vehicle is high but the road occupancy is low, so the flow is quite low. However, in traffic congestion, though the occupancy rate is high, the speed stays at a low level and the flow remains at a small value. Therefore, the current traffic flow condition cannot be analyzed according to only one aspect of the traffic status, as the forecast result will have a large initial difference from the actual data.

Furthermore, weather is a significant factor that affects the traffic conditions to a great extent. Whether the weather is sunny or not affects the driving safety factor, which leads to higher vehicle speeds when the weather is sunny, and the saturation flow of the lane can be higher at this time. In the case of bad weather such as snowstorms and fog, the driver takes the initiative to slow down due to the low visibility, and reaching the saturation level is unlikely.

The above shows that there are many determinants of traffic conditions. Considering only single-source traffic flow parameters leads to the traffic conditions being oversimplified and the complexity being ignored. To be rigorous, the model requires vehicle speed, road occupancy, minimum visibility, and other data as a supplement.

We apply an external heterogeneous data fusion network to solve the problem of analysis error caused by a lack of information.

Figure 6 shows the architecture of the network. The neural network adopts an attention mechanism and reasonably allocates the weights of various heterogeneous characteristic parameters.

Attention scores are calculated as:

where

,

, and

are trainable weight matrices,

is the bias,

is the result of connecting hidden layers with cell state layers in the GRU units of the decoder,

is the

p-th heterogeneous characteristic parameter at moment

l, and

is the allocated weight. The final result

is calculated as:

2.3. Codec Network Based on Attention Mechanism

As shown in

Figure 7, the final result of the ACB is fused with the internal and external networks:

where

and

are trainable weight matrices and

and

are biases corresponding to the matrices.

In spite of the deep spatial dependency between nodes in the result of the ACB, temporal dependency must be further mined to improve prediction accuracy. In traffic flow prediction, extensive experimental studies have proved that LSTM and GRU models have the advantage of effectively processing time series by collecting historical information and referring to previous scenarios. Based on this conclusion, in order to ensure our model obtains this advantage, we construct the codec network with the GRU. Due to the limited storage space, the length of the context vectors is fixed, which restricts the expression ability of the codec network.

For the benefit of finding long-term dependencies in sequences, we combine the codec network with a temporal attention mechanism. The temporal attention mechanism allocates reasonable weights to each period efficiently via self-learning and parallel computing. Temporal attention weights are calculated as follows:

where

,

, and

are trainable weight matrices,

is the result of connecting hidden layers with cell state layers in the previous GRU units of the decoder,

is the output of the ACB at moment

l, and

is the bias. Then, the context vectors are calculated as:

where Equation (

15) derives the output

of the GRU units at moment

l,

denotes the GRU units of the encoder,

is the output of the previous GRU unit, and

is the output of the ACB at moment

l. Equation (

16) derives the context vector

C, and

is the temporal weight.

Then, the context vectors are decoded as follows:

where

denotes the GRU unit of the encoder,

is the previous hidden layer of the decoder,

is the current hidden layer of the decoder,

and

are trainable matrices,

is the bias, and

is the final prediction result.

3. Experiments and Results

3.1. Experimental Data

Aiming at conducting a dynamic analysis, we performed prediction tests on heterogeneous data, including traffic flow data and weather information as follows:

Traffic data: PEMSD4 and PEMSD8 are two real-world datasets from the Caltrans Performance Measurement System (PeMS). The detailed information is shown in

Table 1.

Weather information: We collected weather data for the San Francisco Bay area and for San Bernardino during the periods shown, selected the visibility metric, and then integrated the data into the test as a special factor.

In order to facilitate the test in the internal convolution network of the ACB, we preprocessed the data in advance. First, we classified the data according to whether they referred to the same intersection or the same lane. Then, we obtained the total traffic flow, average occupancy rate of lanes, and average speed every five minutes. According to the road speed limit and actual situations, we removed unreasonable data, smoothed burr points, and utilized the sliding window method with fixed length to estimate missing data. To verify the comparison of the accuracy of traffic flow prediction, we split each dataset and selected 47 days as the training set and 15 days as the test set.

3.2. Prediction Task

In the preprocessing of the dataset, the traffic flow and weather data were recorded every five minutes. Our prediction task was to use the first five records to predict the sixth datum. That is, the traffic flow datum for the next five minutes was predicted based on the information from the first sixty minutes of data. Finally, our proposed lane-level traffic flow prediction algorithm was evaluated by comparing the real data with the predicted data.

3.3. Experimental Setting

To demonstrate the superiority of the proposed model, we compared it with seven other algorithms, including parametric methods, non-parametric methods, and deep learning methods:

HA (historical average): this method is based on statistics and calculates the average value of traffic flow parameters to derive the prediction results.

SVM (support vector machines): this is a supervised learning method and classifies data by binary methods. The optimal hyperplane can maximize the distance between multiple categories.

ARIMA (autoregressive integrated moving average): this is a time series prediction method, composed of an autoregressive block and a moving average block.

GRU (gate recurrent unit): this model is a variant of LSTM that removes forgetting gates and only consists of update gates and reset gates. It requires fewer parameters and converges more easily.

T-GCN [

26]: this model is a time series neural network with a GRU-GCN structure, where the GCN deals with spatial dependency and the GRU deals with temporal dependency.

ST-AFN [

27]: this model represents our previous work and mainly has a temporal–spatial attention mechanism construction. The network consists of a speed processing network, a spatial encoder, and a temporal decoder.

ASTGCN [

28]: this model is graph convolution network that divides a temporal sequence into three parts, i.e., recent, daily-periodic and weekly-periodic dependencies. Prediction results are derived by fusing the outputs of the three parts.

All experiments were run on the same computer, where the OS was Ubuntu 18.04, the memory capacity was 64 GB, the CPU was an Intel Xeon Silver, and the GPU was an NVIDIA Quadro M4000. We used Pytorch 1.6.0 and set a series of hyperparameters as follows: 0.0001 for the learning rate; 128 for the batch size; 64 for the size of the hidden layers and cell states in the GRU; and 12 for the step length for historical data. Moreover, we selected the mean absolute error (MAE), the root mean squared error (RMSE), and the mean absolute percentage error (MAPE) to evaluate the prediction performance.

3.4. Performance Comparison

First, we compared the accuracy of our proposed algorithm with seven other prediction algorithms. Then, a comparison experiment was conducted considering the difference in traffic flow characteristics between weekdays and weekends. After verifying the effectiveness of our proposed algorithm, ablation experiments were conducted in order to investigate whether the internal graph convolutional network or the external heterogeneous data fusion network in the algorithm played a more important role. Finally, the time consumption of our algorithm was compared with three other algorithms.

3.4.1. Overall Comparison

Table 2 shows the results of the overall performance comparison. The smaller the values of MAE, RMSE, and MAPE, the better the prediction effect. Although the HA method based on statistics showed higher efficiency than the other methods and the evaluation indicators were not all larger than the others, this simple algorithm has an inability to process complex, changeable, real-time traffic flow data. The ARIMA method focuses on the regularity and stability of traffic flow data in the time dimension, and therefore it performed better than the traditional machine learning method SVM, but the accuracy was still low. The performance of GRU was superior to ARIMA, but both of them lack spatial dependency, which is exactly what T-GCN has to help it perform better. Although ST-AFN and ASTGCN both take the spatio-temporal characteristics of the data into account, the latter method is more accurate for constructing a multi-branched structure. The results show that the proposed heterogeneous graph convolution network performs best overall and that that MAE, RMSE, and MAPE reached 16.1654, 24.656, and 12.413% in PeMSD4 and 15.486, 22.513, and 13.641% in PeMSD8, respectively.

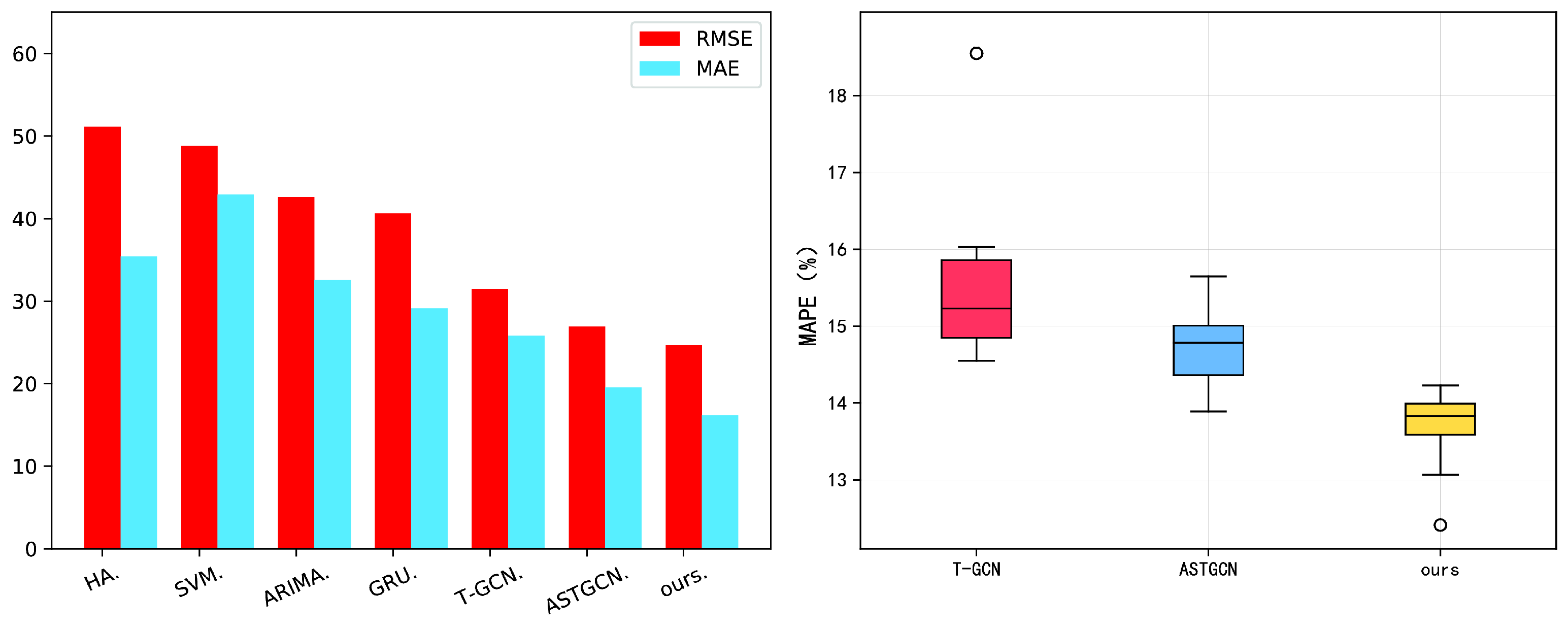

In order to visualize the difference between the test performances of different methods, we constructed a bar chart and box chart according to

Table 2, as shown in

Figure 8. Through detailed analysis and graphical presentation of the experimental results, it can be determined that the the new proposed algorithm outperformed the other seven methods in the overall performance of the prediction task.

3.4.2. Comparison of Weekends and Workdays

As a result of people’s different travel intentions, the fluctuation range of traffic flow is more obvious on weekdays than on weekends. The main difference is that traffic flow increases more rapidly during the morning and evening peaks on weekdays. In order to compare the differences between algorithms regarding the prediction for these two periods, we divided the prediction results into working days and non-working days for the statistics.

Table 3 shows the metrics of T-GCN, ASTGCN, and the heterogeneous graph convolution network for workdays and weekends. The prediction results of all these three algorithms for non-working days were better than those for working days. Among them, our proposed model reached the optimal prediction results, and the accuracy for non-working days was higher than that for working days.

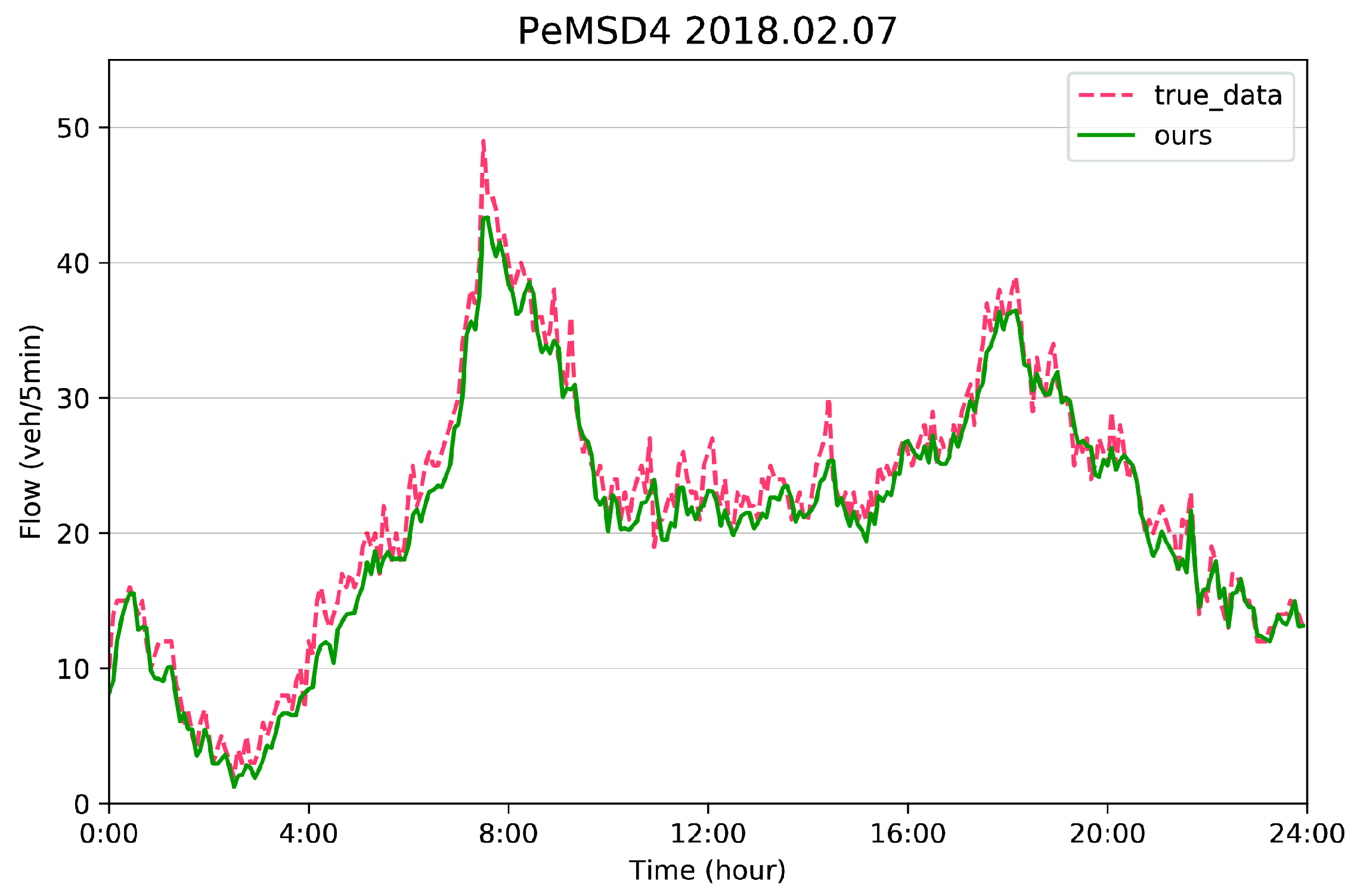

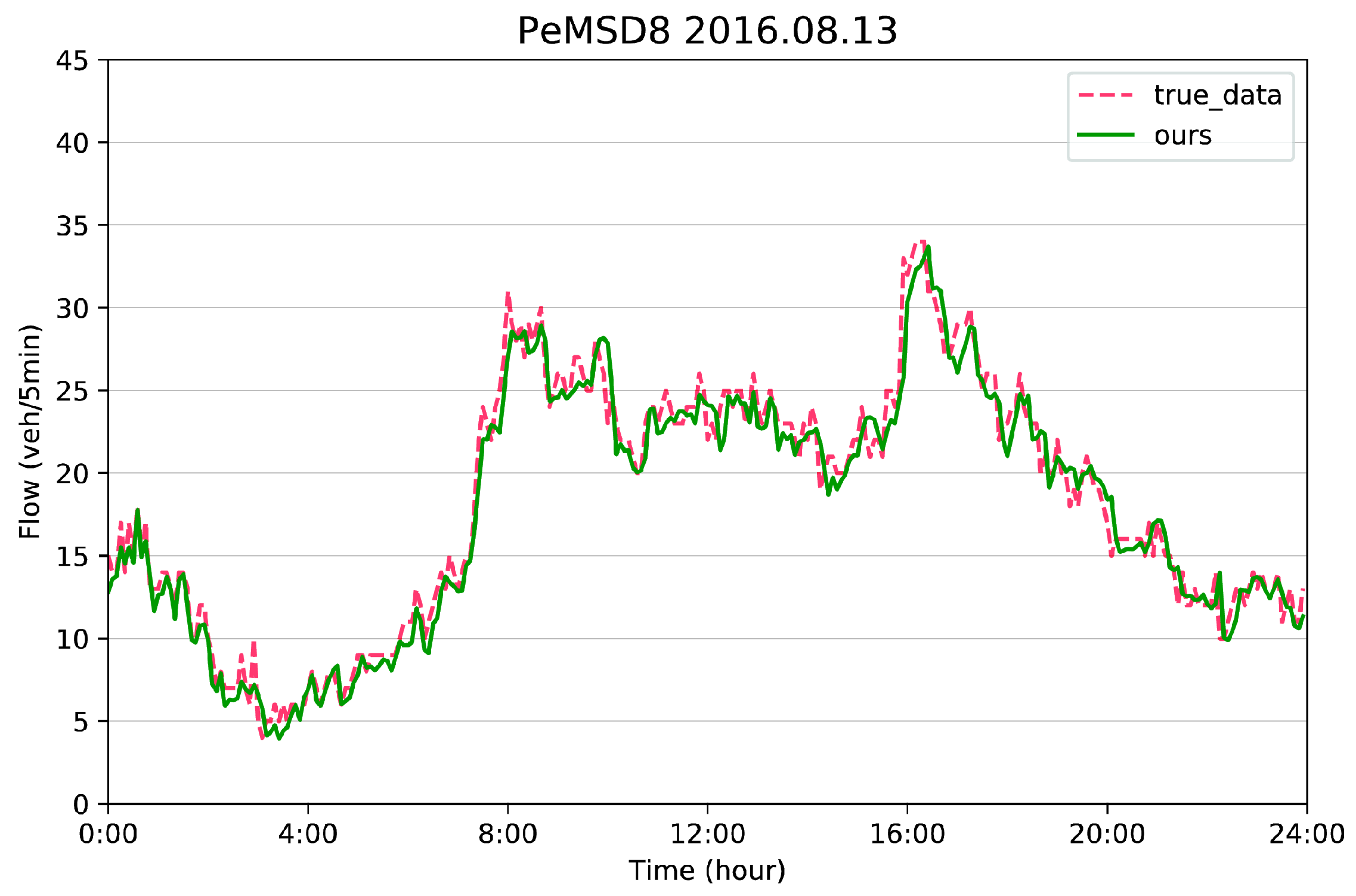

In order to better reveal the prediction accuracy and distinction of the heterogeneous graph convolution model on different types of days, we selected a working day from PeMSD4 and a non-working day from PeMSD8 for in-depth analysis. As shown in

Figure 9 and

Figure 10, the heterogeneous graph convolution model obtained good traffic flow prediction results. It can be seen from the curve overlap degree of

Figure 9 and

Figure 10 that the prediction effect for non-working days was slightly better than that for working days. The prediction effect in the morning and evening peaks was not good enough, which means that stability of traffic conditions also affects the final prediction results, i.e., the prediction accuracy is high when the traffic conditions are relatively stable but the gap between the prediction result and the real value becomes large when fluctuation occurs in the traffic flow. In addition, it can be observed that the prediction error at noon was large, as the fluctuations and interleaving of the curves are quite different. Furthermore, the model has the defects of failing to predict the maximum flow value in the morning and evening peaks.

3.4.3. Ablation Comparison

To determine whether the internal graph convolutional network or the external heterogeneous data fusion network played a key role in the heterogeneous graph convolution network in improving the accuracy, we conducted ablation experiments by removing a certain portion of the proposed network. The experimental results are shown in

Table 4, where Ours* is the prediction result of the static graph convolution network without the internal graph convolution network and Ours** is the prediction result without the external heterogeneous data fusion network. Since the accuracies of Ours** and Ours* are similar and much lower than for the full structure, this indicates that both structures play an important role in the whole framework, and they are both indispensable in improving the accuracy.

3.4.4. Training Cost Comparison

As well as focusing on the accuracy of the algorithms, we compared the training costs as an indicator of the computational efficiency of T-GCN, ASTGCN, ST-AFN, and the heterogeneous graph convolution network. As shown in

Table 5, the ST-AFN method showed the fastest training speed due to its efficient attention mechanism, and ASTGCN, with a multi-branched network, took the most time. Since the network structure of T-GCN is relatively simple, it also performed well with respect to time cost, though it was slightly inferior to ST-AFN. Regarding the heterogeneous graph convolution network, the performance was also good in terms of time consumption, and not too much time was expended.