Abstract

After a typhoon, rapid damage assessment speeds up the dispatch of house repair and disaster insurance services. This study applied a deep learning method to aerial photos of Chiba Prefecture taken after Typhoon Faxai in 2019 to automatically detect and evaluate roof damage. The study comprised three parts: training a deep learning model, detecting roof damage using the trained model, and classifying the level of roof damage. The detection targets were the roof outline, blue tarps, and completely destroyed roofs. The roofs were divided into three categories: without damage, with blue tarps, and completely destroyed. The F value obtained using the proposed method was higher than those obtained using other methods. In addition, roof damage can be further divided into five levels, from level 0 to level 4. Finally, the spatial distribution of the roof damage was analyzed using ArcGIS tools. The proposed method is expected to serve as a reference for real-time detection of roof damage after a typhoon.

1. Introduction

1.1. Purpose and Significance

Field investigation reports following past typhoons [1,2] indicate that houses, particularly low-rise wooden houses, are significantly affected by strong winds and often suffer serious damage. After a typhoon, quick damage assessment of residents’ houses can facilitate the quick dispatch of assistance for house repair and the confirmation of disaster insurance for residents. Therefore, in March 2017, the Japan Cabinet Office revised “the guidelines for the identification and classification of residential house damage after disasters” [3] to improve the efficiency of field investigation of houses damaged by disasters. In addition, local governments requested amendments to the “disaster relief act” and “act on support for reconstructing livelihoods of disaster victims” to propose investigation methods and evaluation criteria suitable for strong wind disasters. Recently, Typhoon Faxai, in 2019, caused serious damage to houses in Japan. Consequently, the effective and rapid detection and classification of the damage has become an urgent topic in Japan.

In Japan, the assessment of house damage following a typhoon is mostly conducted by local governments and disaster research groups through field investigations [4]. Thus, the result of the evaluation is released only after a few days. Such field investigations are conducted not only after typhoons but also after earthquakes. For example, according to the “investigation on the situation of certificates issuing for disaster in case of large-scale disasters-centered on the Kumamoto earthquake in 2016” [5] conducted by the Ministry of general affairs Japan, the issuing of disaster certificates was delayed by more than three months after the Kumamoto earthquake occurred.

From 10 to 13 November 2019, a field investigation was conducted on the residential houses damaged by Typhoon Faxai in 2019, with Tateyama city, Kyonan town, and Minamiboso city in the south of Chiba Prefecture as the investigation area. The statistical result of the field investigation is presented in Table 1. More than half of the houses in the survey area were damaged, and roof damage occurred in more than 80% of the damaged houses, far exceeding the damage proportion of other components of the house. Thus, the damage condition of a house can be inferred from the damage condition of its roof.

Table 1.

Statistics of field investigation after typhoon Faxai in 2019.

1.2. Previous Research

After the 1960s, the use of aerial photographs in field investigations after typhoons, earthquakes, tsunamis, and other disasters gained traction. Aerial photographs provide local information with rich surface texture and spectral characteristics. Rapid and effective extraction of house damage has been one of the most important research topics in the field of aerial photo processing. For example, Miura et al. [6] successfully measured the damaged area of houses in the 1995 Hanshin earthquake using aerial photos.

Three types of methods have been used to extract roof damage from aerial photos following a disaster.

The first method is visual inspection (1970–2000). Damage extraction is primarily performed manually by architects, emergency risk assessors, and other civil engineering experts. For example, Noda et al. [7] conducted a field investigation using a small aircraft after Typhoon Jebi in 2018. Taking the south of Osaka Prefecture and the north of Wakayama Prefecture as the survey areas, they investigated the distribution of blue tarps covering residents’ roofs. The results indicated a linear relationship between the number of blue tarps and the number of damaged houses, such that the number of damaged houses can be estimated from the number of blue tarps. Suzuki et al. [8] successfully detected houses with blue tarps covering the roof, using aerial photos taken after tornadoes in Tsukuba and following the criteria for damage identification issued by the Japan Cabinet Office. However, although this method offers high accuracy, it often requires several days.

The second method is image analysis (2000–2015), which judges house damage by applying thresholds to the pixel values, spectrum, brightness, and texture of aerial photos. When a threshold is exceeded, roof damage is concluded to have occurred. For example, Kono et al. [9] used aerial photos taken after Typhoon Jebi in 2018 to assess the roof damage of residential houses according to whether the roofs were covered with blue tarps, and used the results to infer the damage rate of the roofs. In addition, Kono et al. [10] established a method of identifying damage to residential roofs over a wide area using aerial photos acquired after Typhoon Faxai in 2019; the results were compared with visual inspection results, and the accuracy of the method was confirmed. Naito et al. [11] used aerial photos taken immediately after the Kumamoto earthquake, together with DSM analysis and texture analysis, to extract the roofs covered with blue tarps. However, this method does not provide clear guidance on threshold setting.

The third method is deep learning (2015 and beyond) [12], wherein a single house is considered as the extraction object. For example, Miura et al. [13] used aerial photos of the 1995 southern Hyogo and 2016 Kumamoto earthquakes to train a deep learning model. The model automatically classifies houses into three damage grades: collapsed houses, non-collapsed houses, and houses covered with blue tarps. Further, Miura et al. verified the accuracy of the model at Kyonan town, Chiba Prefecture, after Typhoon Faxai in 2019. Huang et al. [14] presented a deep learning method that incorporates the autoregressive (AR) time series model with two-step artificial neural networks (ANNs) to identify damage under temperature variations. Numerical results indicate that the proposed approach can successfully recognize, locate, and quantify structural damage using output-only vibration and temperature data regardless of other influencing factors.

As mentioned above, detecting roof damage from the distribution of blue tarps covering roofs is widely used after disasters such as typhoons and earthquakes in Japan. Moreover, the above research shows that the loss of residential roofs increases with the blue tarp area.

However, the current detection methods for roof damage in aerial photos suffer from two primary shortcomings. First, it is impossible to calculate the proportion of roof damage. Currently, the existing basic outline of houses provided by the Japan Institute of land and geography is used. However, new construction, reconstruction, and expansion of houses, as well as houses completely destroyed by the typhoon, cause great changes in the roof outline. Therefore, the roof outline must be measured in real time. Second, roofs that are completely destroyed and not covered with blue tarps cannot be detected. If a house is seriously affected by a typhoon, for example, if it collapses or is damaged by a secondary fire, it is impossible to continue living in it. Consequently, many residents move temporarily to a safe place, and although the house is seriously damaged, there is no need to cover it with blue tarps. Therefore, this type of house damage has been omitted.

Thus, it is incorrect to count only the area of blue tarps, and roofs that are completely destroyed must be detected separately. The sum of the area of blue tarps and the area of completely destroyed roofs must be considered as the total damaged roof area in the investigation area. In addition, previous deep learning models can classify only two or three levels of roof damage, for example, damaged or undamaged roofs. Moreover, a specific classification basis for calculating the area of damaged roofs is lacking.

Therefore, in this study, a deep learning model with a segmentation function was used to detect roof damage after a typhoon. According to the characteristics of roof damage after a typhoon in Japan, this study provides a solution for the rapid detection of roof damage after a typhoon. The innovation of this study is in providing an overall solution for fast and accurate detection and classification of roof damage. Compared with other methods, this method has superior performance in speed and accuracy. The rest of this paper consists of six sections: Research Methods, Materials, Training and Detection using Deep Learning, Classification of Roof Damage Level, Discussion which includes a comparison with other classification methods, and Conclusions.

2. Research Methods

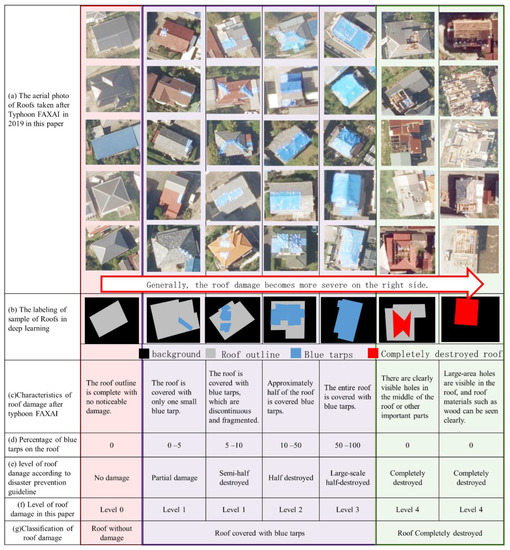

Figure 1 shows the specific appearance of roof damage after a typhoon. In Figure 1a, the roof is clearly divided into three parts. The left part with the pink background represents the roof without damage, the middle part with the purple background is the roof covered with blue tarps (the coverage area of blue tarps increases from left to right), and the right part with the green background is the roof completely destroyed (the function of the roof was completely lost; thus, covering it with blue tarps was unnecessary). Thus, the degree of roof damage increases in the direction of the red arrow in Figure 1. In Figure 1f, according to “the guideline for disaster prevention” [3] by the Japan Cabinet Office, the level of roof damage after a typhoon is divided into five levels: levels 0–4. The roof without damage is level 0. The roof covered with blue tarps is divided into levels 1–3 according to the proportion of blue tarps on the roof. Finally, the roof completely destroyed is classified as level 4. The specific distinction is shown in Figure 1.

Figure 1.

Appearance and level of roof damage in Typhoon Faxai in 2019. The left part with the pink background represents the roof without damage, the middle part with the purple background is the roof covered with blue tarps, and the right part with the green background is the roof completely destroyed. The degree of roof damage increases in the direction of the red arrow.
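The five-level scheme of Figure 1 can be sketched as a small function. This is only an illustration, not the authors' implementation: the function name `roof_damage_level` and the two cut points `t12` and `t23` that separate levels 1, 2, and 3 are hypothetical, because the text does not state those boundaries. Only the rules for level 0 (no blue tarps) and level 4 (completely destroyed) follow the description directly.

```python
def roof_damage_level(tarp_ratio, destroyed, t12, t23):
    """Return a damage level (0-4) for one roof.

    tarp_ratio: blue-tarp area divided by roof area, in [0, 1].
    destroyed:  True if the roof was detected as completely destroyed.
    t12, t23:   hypothetical cut points between levels 1/2 and 2/3
                (not given in the text; supplied by the caller).
    """
    if not 0.0 <= tarp_ratio <= 1.0:
        raise ValueError("tarp_ratio must lie in [0, 1]")
    if not 0.0 < t12 < t23 < 1.0:
        raise ValueError("cut points must satisfy 0 < t12 < t23 < 1")
    if destroyed:
        return 4          # completely destroyed roof
    if tarp_ratio == 0.0:
        return 0          # roof without damage
    if tarp_ratio < t12:
        return 1
    if tarp_ratio < t23:
        return 2
    return 3
```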

In this study, it was found that dividing roofs that are neither covered with blue tarps nor completely destroyed, but have holes, into two types can greatly improve the classification accuracy. The first type is a roof with a small hole (less than approximately 5% of the roof area). Such a hole does not affect the normal life of residents; thus, the roof can be marked as “roof without damage”. The second type is a roof with huge holes (more than 80% of the roof area), which can be marked as “roof completely destroyed”. Such holes are not covered with blue tarps because the houses are completely unfit for living or the residents had moved elsewhere by the time of the field investigation [15]. This classification method may lead to detection omissions, such as classifying an actual “roof with damage” as “roof without damage”; however, it benefits the overall accuracy of “roof completely destroyed”.

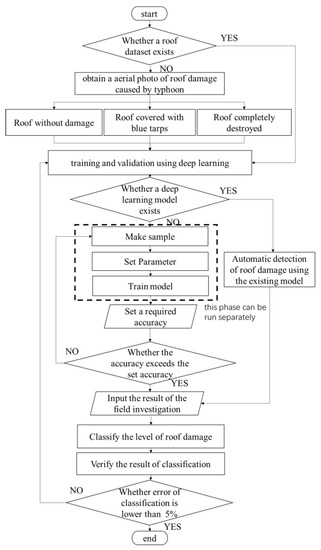

This study was mainly divided into three parts. First, images of roofs were obtained from the aerial photos taken after Typhoon Faxai. Thereafter, the obtained images were used to create samples for training a deep learning model. Finally, the model was used to automatically detect three types of objects (blue tarps, roof outlines, and roofs completely destroyed), and the level of roof damage was classified by combining the three types of objects (Figure 2).

Figure 2.

Flowchart of detection and classification of damaged roofs.

3. Materials

3.1. Used Aerial Photos

When the damaged area is relatively large, satellite images are generally used to capture roof damage. In contrast, for disasters of limited extent, photos obtained from aircraft offer better image resolution than satellite images [10]. This study purchased the aerial photos of Typhoon Faxai in 2019 taken by International Airlines Company from September 19 to 20 in 2019. The area included parts of Minamiboso and Tateyama cities. All aerial photos were acquired using a DMC digital camera for aerial survey built by Z/I Imaging Company [16]. Simple orthophotos, with terrain skew and camera lens distortion corrected, were obtained. They were composed of 8-bit blue, green, and red bands. Finally, the aerial photos were resampled to 0.1 m/pixel. The GSD for a scanned aerial image can be calculated using Equation (1):

$$G = \frac{H \times d}{f} \tag{1}$$

where G, H, f, and d represent the ground sampling distance (GSD), flying height above ground, focal length, and CCD pixel size, respectively, which are 0.1 m, 1000 m, 120 mm, and 12 μm, respectively, in this study.
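As a quick numerical check, the values quoted above can be substituted into the usual relation G = H × d / f, which is assumed here because it reproduces the stated 0.1 m GSD. The function name `ground_sampling_distance` is illustrative only.

```python
def ground_sampling_distance(flying_height_m, focal_length_m, pixel_size_m):
    """Ground sampling distance in metres per pixel: G = H * d / f."""
    if focal_length_m <= 0:
        raise ValueError("focal length must be positive")
    return flying_height_m * pixel_size_m / focal_length_m

# Values from the text: H = 1000 m, f = 120 mm, d = 12 micrometres.
gsd = ground_sampling_distance(1000.0, 0.120, 12e-6)
pixel_area_m2 = gsd ** 2  # ground footprint of one pixel

print(f"GSD = {gsd:.3f} m/pixel, pixel footprint = {pixel_area_m2:.4f} m^2")
```

At this resolution each pixel covers about 0.01 m², so a detected region of n pixels corresponds to roughly 0.01 × n m² on the ground.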

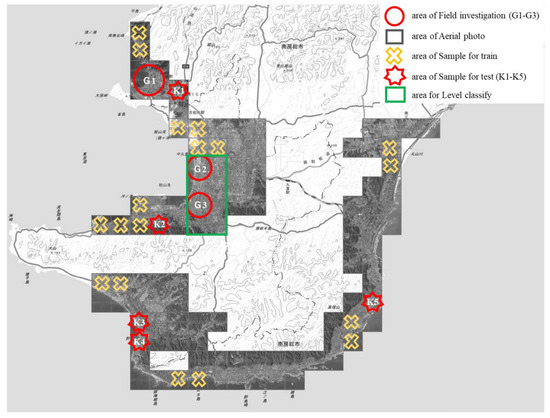

Figure 3 shows the data coverage in the south of Chiba Prefecture. The areas marked with red circles are the field investigation areas (G1 is a part of Minamiboso city, and G2 and G3 are parts of Tateyama city). The black square is the area photographed from the aircraft; the photos were purchased with scientific research funds. The areas marked with yellow crosses are the manually labeled samples used for training the deep learning model (18 areas). The areas marked with red seven-point stars, K1–K5, were used for testing the accuracy of the trained model.

Figure 3.

Aerial photo used (southern part of Chiba prefecture). G1 is a part of Minamiboso city, and G2 and G3 are parts of Tateyama city. K1–K5 with red seven-point star is the area used for testing the accuracy of the trained model.

3.2. Labeling and Cropping Aerial Photos

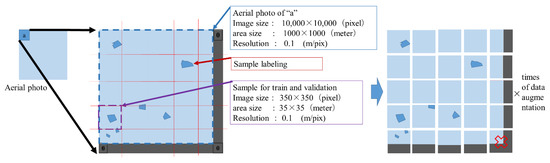

The upper left corner block “a” (Figure 4) was assumed as a piece of the aerial photo in Figure 3, and used as an example to illustrate the method in this study.

Figure 4.

Schematic of labeling and cropping on the aerial photo.

The size of this aerial photo is 10,000 × 10,000 pixels. Further, the largest area in the manual labeling data is the roof profile with 260 × 240 pixels, whereas the smallest is the blue tarps with 20 × 20 pixels.

First, three types of objects (roof outline, blue tarps, and roof completely destroyed) were manually labeled on the aerial photos, and the labels were used to create training samples for deep learning. The labeling was mainly performed via visual inspection, with an error rate of less than 5%. The operators were professionals in architecture and civil engineering from Chiba University. To avoid mixing the training and validation data, which can affect the validation accuracy, the labeling area was mainly set along the coastline, where there were more damaged roofs. In the training sample areas in Figure 3, 25,205 objects were labeled for the training described in Section 4.2. In addition, 3269 objects were labeled in the K1–K5 areas of Figure 3 to test the accuracy of the trained model. This study compared the detection results of the model in the G1–G3 areas of Figure 3 with those obtained from the field investigation after Typhoon Faxai in 2019. The statistics of the labeling data are presented in Table 2.

Table 2.

Statistics of the labeling data in Figure 3.

3.3. Export Data

First, the above areas were cropped into pieces of 350 × 350 pixels (Figure 5a). A piece of this size can include more than two roofs, so it reflects the overall characteristics of the roof and of the surrounding environment that influence the neural network. A size that is too small results in the roof outline not being well detected, whereas a size that is too large drastically reduces the detection accuracy of blue tarps. Thereafter, a 150-pixel-wide area with 0 value was added around the original aerial photo “a”, and the aerial photo was divided into 29 × 29 training samples. Among them, samples in which 0-value pixels accounted for the majority were deleted (Figure 4); this deletion is optional.

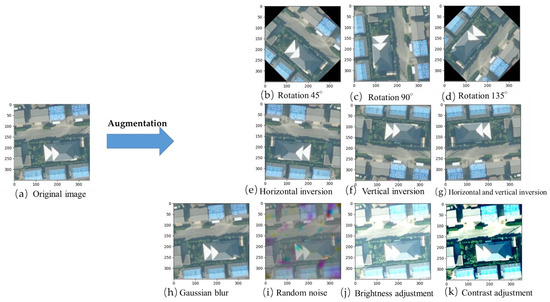

Figure 5.

Sample augmentation of a training sample.

In the subsequent stage, sample augmentation was conducted. Each cropped image was transformed as follows; Figure 5 shows the augmentation of one of these images. There are three rotation transformations (b–d), three mirror transformations (e–g), and four color space transformations (h–k), for a total of 10 transformations. In the color space transformations, Gaussian noise (h), random noise (i), brightness (j), and contrast (k) were generated via mapping functions. Equation (2) is the formula for calculating the number of training samples created from aerial photos. Consequently, 8800 samples were obtained from “a” in Figure 4, whereas 151,200 training images were obtained from the 18 regions of Figure 3 (the training sample regions indicated by the yellow crosses).
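The ten transformations can be sketched in plain Python on a tile stored as rows of (B, G, R) tuples. This is a minimal illustration under stated assumptions rather than the authors' code: the third mirror is taken to be a reflection about the main diagonal, and the noise level, brightness offset, and contrast gain are hypothetical values.

```python
import random

def rotate90(img):
    """Rotate a row-major image 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]

def mirror_lr(img):
    """Flip left-right."""
    return [row[::-1] for row in img]

def mirror_ud(img):
    """Flip up-down."""
    return [list(row) for row in img[::-1]]

def transpose(img):
    """Mirror about the main diagonal (assumed third mirror)."""
    return [list(col) for col in zip(*img)]

def map_channels(img, fn):
    """Apply fn to every channel value and clip the result to 0-255."""
    return [[tuple(max(0, min(255, round(fn(c)))) for c in px) for px in row]
            for row in img]

def augment(img, rng, sigma=10.0, amplitude=20, offset=30, gain=1.2):
    """Return 10 variants of one tile (parameter values are hypothetical)."""
    r90 = rotate90(img)
    r180 = rotate90(r90)
    r270 = rotate90(r180)
    return [
        r90, r180, r270,                                  # three rotations
        mirror_lr(img), mirror_ud(img), transpose(img),   # three mirrors
        map_channels(img, lambda c: c + rng.gauss(0.0, sigma)),   # Gaussian noise
        map_channels(img, lambda c: c + rng.randint(-amplitude, amplitude)),  # uniform noise
        map_channels(img, lambda c: c + offset),          # brightness shift
        map_channels(img, lambda c: 128 + gain * (c - 128)),  # contrast stretch
    ]

tile = [[(10, 20, 30), (40, 50, 60)],
        [(70, 80, 90), (100, 110, 120)]]
variants = augment(tile, random.Random(0))
print(len(variants))
```

In practice an array library would be used for speed; nested lists are used here only to keep the sketch free of dependencies.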

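$$N_t = \left( \frac{W_k + W_t}{W_s} \times \frac{H_k + H_t}{H_s} - N_n \right) \times N_k \tag{2}$$

where $N_t$ is the number of training samples, $W_k$ is the width of the aerial photo (pixel), $W_t$ is the width of the added 0-value area (pixel), $W_s$ is the cropping width (pixel), $H_k$ is the height of the aerial photo (pixel), $H_t$ is the height of the added 0-value area (pixel), $H_s$ is the cropping height (pixel), $N_n$ is the number of discarded samples, and $N_k$ is the number of data augmentation variants.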
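The sample count can be checked with a short calculation. The sketch assumes the count has the form (tiles across × tiles down − discarded tiles) × augmentation variants, which is one reading of the variable definitions above; the function names are illustrative. Only the tile grid is evaluated, because the number of discarded tiles for photo “a” is not stated in the text.

```python
def tile_grid(wk, hk, wt, ht, ws, hs):
    """Tiles across and down after adding the 0-value margin."""
    return (wk + wt) // ws, (hk + ht) // hs

def training_sample_count(wk, hk, wt, ht, ws, hs, n_discarded, n_variants):
    """Assumed form: (cols * rows - discarded) * variants."""
    cols, rows = tile_grid(wk, hk, wt, ht, ws, hs)
    return (cols * rows - n_discarded) * n_variants

# Photo "a": 10,000 x 10,000 pixels, 150-pixel margin, 350 x 350 tiles.
cols, rows = tile_grid(10_000, 10_000, 150, 150, 350, 350)
print(cols, rows, cols * rows)  # 29 29 841
```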

During the training stage, the number of training iterations can be calculated using Equation (3). Taking sets A and D in Table 3 as examples, set A required 17,010 iterations with a validation ratio of 10% and a batch size of 8 (eight samples trained simultaneously), and set D required 37,800 iterations with a validation ratio of 20%, a batch size of 64 (64 samples trained simultaneously), and 20 epochs. The number of epochs was set arbitrarily in this study. More training iterations generally improve the result but lengthen the training time. Furthermore, to inspect the performance of the model, the observation of the results was limited to 6000 iterations in this study.

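$$T = \frac{N_t \times (1 - P) \times E}{B} \tag{3}$$

where $T$ is the number of training iterations, $N_t$ is the number of training samples, $P$ is the validation ratio, $E$ is the number of training epochs, and $B$ is the batch size.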
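The two iteration counts quoted for sets A and D are consistent with T = N_t × (1 − P) × E / B applied to the 151,200 training images. The check below assumes that form; the epoch count of 1 for set A is not stated in the text and is inferred only because it reproduces the quoted 17,010 iterations.

```python
def training_iterations(n_samples, val_ratio, epochs, batch_size):
    """Number of weight updates: training samples per epoch / batch size * epochs."""
    n_train = n_samples * (1.0 - val_ratio)
    return round(n_train * epochs / batch_size)

# Set A: 10% validation, batch size 8, one epoch (assumed).
print(training_iterations(151_200, 0.10, 1, 8))    # 17010
# Set D: 20% validation, batch size 64, 20 epochs (from the text).
print(training_iterations(151_200, 0.20, 20, 64))  # 37800
```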

Table 3.

Parameters for the model training.

4. Training and Detection Using Deep Learning

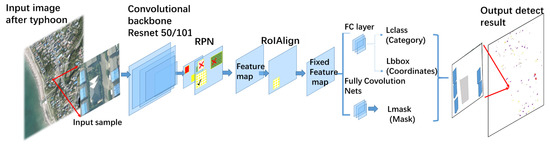

This study employed the neural network Mask R-CNN [17] (CNN is the abbreviation of convolutional neural network). It can automatically detect different objects with high precision and calculate the area and outline of the objects. Mask R-CNN builds on R-CNN [18], FPN [19], Fast R-CNN [20], and Faster R-CNN [21]. It adds a fully convolutional module to the two existing final modules (object detection and classification) and is used for object detection, classification, and segmentation (classifying each pixel separately).

A schematic of Mask R-CNN [17] is shown in Figure 6. Taking blue tarps as an example: (1) In the ResNet stage, the input roof image was convolved to obtain feature maps. (2) In the RPN stage, the candidate regions (regions of interest, ROIs) considered to be blue tarps were selected, and consequently, the location of blue tarps was obtained. (3) In the ROI alignment stage, all candidate regions of blue tarps were unified to a fixed size (7 × 7). (4) In the stages of $L_{class}$, $L_{Bbox}$, and $L_{mask}$, the candidate regions were sent to the neural network to output the results of object classification, detection, and segmentation.

Figure 6.

Framework of the deep learning.

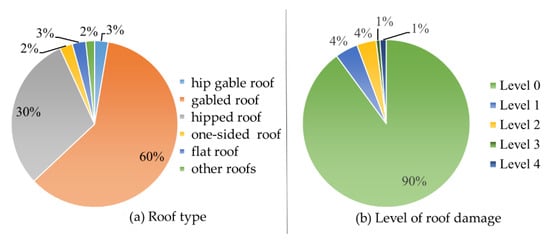

4.1. Making Sample

Figure 7 shows a pie chart detailing each roof type and the level of roof damage in the sample. The left side is counted according to the type of roof, and the right side is counted according to the level of roof damage. Further, the training sample includes levels 0–4 in Figure 1a, as well as all roof types identified in the field investigation [4].

Figure 7.

Composition of training sample. (a) Proportion of roof types in training data. (b) Proportion of level of roof damage in training data.

In deep learning, the parameters of the model can be understood as weights composed of a series of values. If a type has a large number of samples, its weight increases, which increases the probability of detection. In contrast, if the number of samples is small, the probability of a missed detection is high. In this study, attempts were made to include different types of roof samples, such that the parameters of the model were balanced and there were fewer cases in which a certain type of roof was not detected. In addition to blue tarps located in the center of the roof or on the eaves, blue tarps hanging on the edges and corners were also labeled as supplementary samples; these are relatively small and are easily overlooked.

4.2. Setting Parameters

When training the neural network, the initial learning rate, number of training iterations, batch size, and other parameters (Note 1–Note 3) must be set. How the learning rate is adjusted is an important factor in training a good deep learning model.

The result and the trained model are affected by various parameters, such as the learning rate, the proportion of validation data, and the batch size. Currently, no standard exists for obtaining the best set of parameters. In this study, experiments were conducted repeatedly using different learning rates and other parameters, and the most appropriate parameters were selected.

The training and validation samples were split at a ratio of 8:2 or 9:1. Further, Python and PyTorch [22] were used to build the training framework, and the training environment was a workstation with 64 GB of memory, 64-bit Windows 10 Pro for Workstations, and a Quadro RTX 5000 graphics card. An entire training task required 2.5 h.

4.3. Training Model

To eliminate the possible interference of cars and trees, a pre-trained model was used, transferring parameters that had been trained on another task to improve the training efficiency. This study employed a model trained on the COCO dataset [23] as the pre-trained model to improve the training effect.

4.3.1. Loss Function in Training

The loss function used in training comprises classification, detection, and segmentation losses (Equation (4)). The classification loss was calculated as the cross entropy between the real and predicted values of the object class. The detection loss was calculated using the smooth-L1 formula on the real and predicted values of the four vertex coordinates of the rectangular box. The segmentation loss was calculated as the binary cross entropy between the real and predicted values of the mask. In each training iteration, the loss values were summed to obtain the final loss value. The loss is dimensionless. The training quality of the model is judged later by observing the decline of the loss curves.

$$L = L_{class} + L_{Bbox} + L_{mask} \tag{4}$$

where $L$ is the training loss, $L_{class}$ is the classification loss, $L_{Bbox}$ is the detection loss, and $L_{mask}$ is the segmentation loss.
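The three loss terms and their sum can be illustrated on toy inputs. This is a dependency-free sketch of the standard definitions (cross entropy, smooth L1 with a threshold of 1, and mean binary cross entropy), not the PyTorch implementation used in the study; all function names are illustrative.

```python
import math

def cross_entropy(probs, true_index):
    """Classification loss: negative log-probability of the true class."""
    return -math.log(probs[true_index])

def smooth_l1(pred, target):
    """Detection loss summed over box coordinates (threshold of 1)."""
    total = 0.0
    for p, t in zip(pred, target):
        d = abs(p - t)
        total += 0.5 * d * d if d < 1.0 else d - 0.5
    return total

def binary_cross_entropy(pred_mask, true_mask, eps=1e-7):
    """Segmentation loss: mean per-pixel binary cross entropy."""
    total, count = 0.0, 0
    for prow, trow in zip(pred_mask, true_mask):
        for p, t in zip(prow, trow):
            p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
            total -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
            count += 1
    return total / count

def total_loss(l_class, l_bbox, l_mask):
    """Final loss is the plain sum of the three terms."""
    return l_class + l_bbox + l_mask

l_class = cross_entropy([0.7, 0.2, 0.1], 0)
l_bbox = smooth_l1([0.0, 0.0, 1.0, 1.0], [0.5, 0.0, 1.0, 3.0])
l_mask = binary_cross_entropy([[0.9, 0.2], [0.8, 0.1]], [[1, 0], [1, 0]])
print(round(total_loss(l_class, l_bbox, l_mask), 4))
```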

4.3.2. Optimization Algorithm

Owing to the large number of samples, the amount of calculation also increases. However, the loss value can be reduced efficiently using optimization algorithms such as SGD, WOA [24], and MFO [25]. This study adopted the stochastic gradient descent (SGD) method as the optimization algorithm. The weight of the model was adjusted using Equation (5). Further, the learning rate was adjusted using Equation (6) (inverse time decay method), such that the learning rate decreases in inverse proportion to the current number of training iterations. The factor γ was set to 0.95.

$$W_{i+1} = W_i - R_{i+1} \nabla L \tag{5}$$

where $W_{i+1}$ is the updated weight at iteration $i+1$, $R_{i+1}$ is the updated learning rate, $W_i$ is the weight at iteration $i$, and $\nabla L$ is the gradient of the loss value.

$$R_{i+1} = \frac{R_0}{1 + \gamma \, i} \tag{6}$$

where $R_{i+1}$ is the updated learning rate used in Equation (5), $R_0$ is the initial learning rate (Note 3), and $\gamma$ is the decay factor, which is set to 0.95.
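The weight update and the learning-rate schedule can be sketched on a toy problem. The schedule uses the common inverse time decay form R0 / (1 + γ · i), which is assumed here; γ = 0.95 comes from the text, whereas the initial rate of 0.1 and the quadratic loss L(w) = w² are hypothetical choices for illustration.

```python
def inverse_time_decay(r0, gamma, step):
    """Learning rate after `step` updates: r0 / (1 + gamma * step)."""
    return r0 / (1.0 + gamma * step)

def sgd_step(weights, grads, lr):
    """One gradient-descent update: w <- w - lr * grad."""
    return [w - lr * g for w, g in zip(weights, grads)]

# Toy loss L(w) = w^2 with gradient 2w (hypothetical example).
weights = [1.0]
r0, gamma = 0.1, 0.95  # r0 is an assumed value; gamma is from the text
for step in range(50):
    lr = inverse_time_decay(r0, gamma, step)
    grads = [2.0 * w for w in weights]
    weights = sgd_step(weights, grads, lr)

print(weights[0])  # smaller in magnitude than the starting value 1.0
```

Because the rate shrinks as the step count grows, early updates move the weights quickly and later updates make only small corrections.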

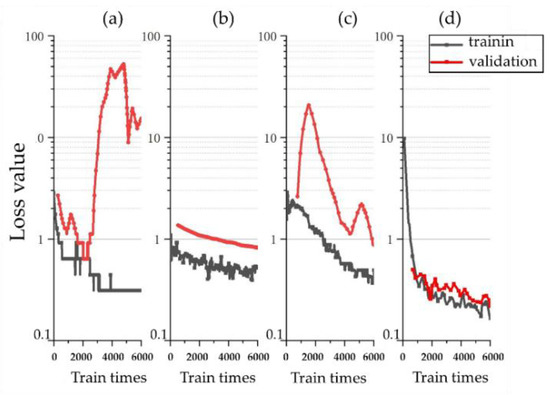

4.3.3. Training Process

Figure 8a shows the results of training with set A of Table 3. Initially, the loss value decreased rapidly. However, the loss value fluctuated suddenly and rebounded sharply at around the 2500th training iteration. This indicates a problem with the parameters, resulting in overfitting. Figure 8b shows the results using set B of Table 3. The loss value decreases gradually, and the parameters are considered good enough to be used for detection. Further, Figure 8c shows the results for the parameters of set C of Table 3. The loss curve changes greatly in the initial stage and is stable in the later stage; however, the validation curve has two peaks. Finally, Figure 8d shows the results using set D of Table 3. The parameters can be considered appropriate: the training and validation loss curves decrease rapidly in the initial stage and tend to be stable in the final stage.

Figure 8.

Loss value in the training and validation. (a) Training and validation loss using set A of Table 3. (b) Training and validation loss using set B. (c) Training and validation loss using set C. (d) Training and validation loss using set D.

Parameters must be determined before model training and cannot be updated manually during training. Currently, there is no consensus on the selection of appropriate parameters. However, judgment conditions for selection can be set according to the behavior of the loss curve and the user’s preference [26]. The judgment condition used in this study was that the loss value fell below 0.2 within 6000 iterations without an obvious rebound. According to this benchmark [26], set D was the best parameter set, although set B can also be used according to personal preference.

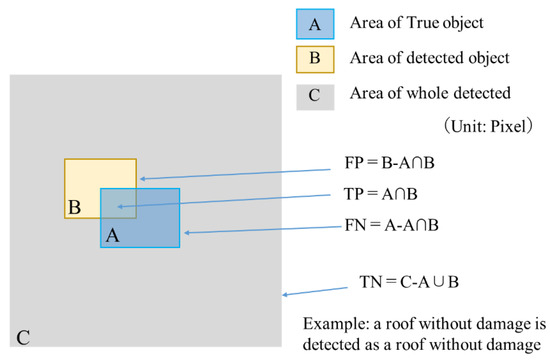

4.4. Validation in Training

In this study, a detection was considered correct (T) when the overlap between the detected and actual targets was greater than 0.5, and incorrect (F) when the overlap was less than 0.5. Figure 9 shows a calculation diagram of TP, FN, FP, and TN. Taking the roof outline as an example, TP (true positive) is the situation in which an actual roof was detected as a roof. FN (false negative) is the situation in which an actual roof was not detected as a roof (missed detection). FP (false positive) is the situation in which a roof was detected where there is no roof. TN (true negative) is the situation in which there is no actual roof and none was detected. K in Equation (7) is the total number of roof outlines, blue tarps, and roofs completely destroyed. No universal accuracy requirement exists for deep learning models; it depends on actual needs. The required accuracy of target segmentation is approximately 72% [27]. Because this study aimed to measure the specific area of roof damage, at least 90% accuracy was required in the training stage. After the parameters were adjusted, the learning accuracy was approximately 98%. Further, the mIoU (mean value of IoU) is usually used as an evaluation indicator. The calculation formula is shown in Equation (7).

Figure 9.

The calculation of TP, TN, FP, FN.
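The overlap test and the mIoU can be sketched for binary masks. The code assumes the common definition IoU = TP / (TP + FP + FN), averaged over the evaluated targets; the exact form of the paper's mIoU equation is not reproduced here, and the function names are illustrative.

```python
def iou(pred, truth):
    """Intersection over union of two binary masks of equal size."""
    tp = fp = fn = 0
    for prow, trow in zip(pred, truth):
        for p, t in zip(prow, trow):
            if p and t:
                tp += 1      # detected and actually present
            elif p:
                fp += 1      # detected but not present
            elif t:
                fn += 1      # present but missed
    union = tp + fp + fn
    return tp / union if union else 0.0  # two empty masks: defined as 0 here

def is_correct(pred, truth, threshold=0.5):
    """A detection counts as correct when the overlap exceeds 0.5."""
    return iou(pred, truth) > threshold

def mean_iou(pairs):
    """Average IoU over (prediction, ground truth) mask pairs."""
    return sum(iou(p, t) for p, t in pairs) / len(pairs)

pred = [[1, 1], [0, 0]]
truth = [[1, 0], [1, 0]]
print(iou(pred, truth), is_correct(pred, truth))
```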

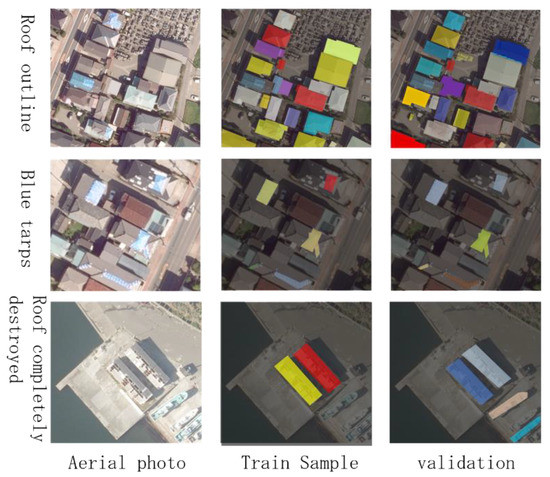

Figure 10 shows a result of the validation in training. For the roof outline, certain adjacent houses were mistaken for a single object. The result for blue tarps is the best. For roofs completely destroyed, certain ships were mistakenly detected as roofs completely destroyed. A comparison between the ground truth and the validation results shows that the target regions are accurate and satisfy the required segmentation accuracy. The mIoU of roof outline, blue tarps, and roofs completely destroyed was more than 98%.

Figure 10.

The validation in the training.

4.5. Test after Training

To test the model after training, the K1–K5 area was selected as the test area (Figure 3), and a confusion matrix [28] was used for evaluation. In the confusion matrix, the detection accuracy was calculated pixel by pixel. The result is shown in Table 4, where the values are in pixels. The pixel statistics were computed separately for each of the three detection targets because their pixels overlap. For example, blue tarps always lie on a roof; thus, the roof pixels always include the blue tarp pixels. Each row of Table 4 represents the results of one detection target, and the total of each row is $10^8$ pixels.

Table 4.

Results of each detection target.

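In addition, five indices were calculated from the values of the confusion matrix: accuracy, precision, recall, specificity, and F value, which are expressed in Equations (8)–(12), respectively. The evaluation results are shown in Table 5.

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + FP + FN + TN} \tag{8}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{9}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{10}$$

$$\mathrm{Specificity} = \frac{TN}{TN + FP} \tag{11}$$

$$F = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{12}$$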

Table 5.

Evaluation results of each detection target.
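The five indices follow directly from the four confusion-matrix counts. The sketch below uses the standard definitions; the pixel counts in the example are hypothetical and chosen only to show how a very large TN inflates accuracy while the F value is unaffected.

```python
def _ratio(num, den):
    """Safe division that returns 0.0 for an empty denominator."""
    return num / den if den else 0.0

def evaluate(tp, fp, fn, tn):
    """Accuracy, precision, recall, specificity, and F value from pixel counts."""
    precision = _ratio(tp, tp + fp)
    recall = _ratio(tp, tp + fn)
    return {
        "accuracy": _ratio(tp + tn, tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "specificity": _ratio(tn, tn + fp),
        "f_value": _ratio(2 * precision * recall, precision + recall),
    }

# Hypothetical counts: few object pixels, many background pixels.
scores = evaluate(tp=90, fp=10, fn=10, tn=9890)
for name, value in scores.items():
    print(f"{name}: {value:.3f}")
```

With these counts the accuracy is 0.998 although precision and recall are both 0.9, which illustrates why the F value is the more informative summary when background pixels dominate.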

Blue tarps have the highest F value, reaching 0.90, because a large number of blue tarp samples were labeled in Section 4.1. In addition, the characteristics of blue tarps are simple; they vary little other than in shape and angle, which makes them easy to detect. The F value of the roof outline was 0.89 with an accuracy of 0.97. This is probably because using a model pre-trained on the COCO dataset excluded many other interfering objects. Finally, the F value of roofs completely destroyed was 0.84, which is relatively low, because the number of samples of roofs completely destroyed was small: after the typhoon, fewer roofs were completely destroyed than were covered with blue tarps. This study used the data augmentation method in Figure 5 to increase the 384 samples of roofs completely destroyed to 2304. However, in the actual detection, the error rate was still high. For example, four houses were missed, while five objects were falsely detected (four agricultural greenhouses and one abandoned house). Owing to the large area of forest and sea in the study area, many pixels do not belong to any object, and the TN value of each detection target was very large. Therefore, the accuracy is higher than the actual perceived performance, and it is appropriate to use the F value for the final evaluation.

4.6. Comparison with Field Investigation

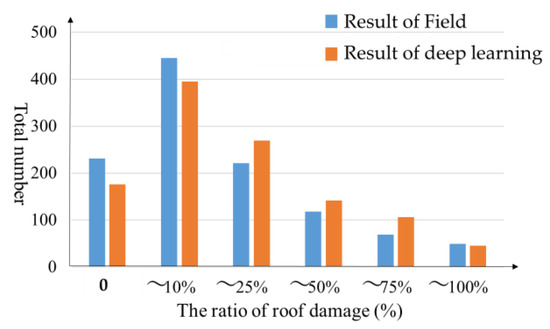

In Figure 11, the automatic detection results obtained using deep learning are compared with the results of the field investigation [4]. The number and proportion of damaged roofs obtained using deep learning are almost consistent with those of the field investigation.

Figure 11.

Comparison with field investigation in the proportion of roof damage.

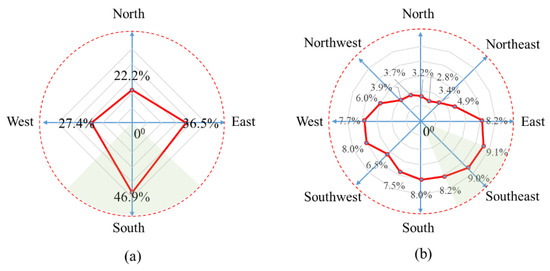

In addition, the statistics on the direction of roof damage from the field investigation [4] are shown in Figure 12a; the south side of the roof suffered the most damage. In the field investigation, the investigators inspected each roof visually while standing in one direction. For example, if a roof was found to be damaged in four directions, it was counted as damaged in the south, east, west, and north. For further discussion, this paper refines the calculation of the damage direction using the deep learning results. The position of the center of gravity of the damaged area on each roof is assigned to one of 16 directions, and the distribution of these centers is counted. The results are shown in Figure 12b. They differ slightly from those of the field investigation, with the southeast suffering the most damage.

Figure 12.

Comparison with field investigation in the location of roof damage. (a) Location of the roof damage in the field investigation. (b) Location of the roof damage using deep learning.
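One possible way to assign a damaged area to one of 16 directions is sketched below. It is an illustration under stated assumptions, not the procedure used in this study: the direction is taken from the roof centroid to the centroid of the damaged area, image north is assumed to be up (x increases eastward, y increases downward), and the names `DIRECTIONS_16` and `damage_direction` are hypothetical.

```python
import math
from collections import Counter

DIRECTIONS_16 = ["N", "NNE", "NE", "ENE", "E", "ESE", "SE", "SSE",
                 "S", "SSW", "SW", "WSW", "W", "WNW", "NW", "NNW"]


def damage_direction(roof_centroid, damage_centroid):
    """Compass sector of the damage centroid as seen from the roof centroid."""
    dx = damage_centroid[0] - roof_centroid[0]  # positive toward east
    dy = roof_centroid[1] - damage_centroid[1]  # positive toward north (image y flipped)
    if dx == 0 and dy == 0:
        return None  # centroids coincide, no direction can be assigned
    bearing = math.degrees(math.atan2(dx, dy)) % 360.0  # clockwise from north
    sector = int((bearing + 11.25) // 22.5) % 16        # 22.5 degrees per sector
    return DIRECTIONS_16[sector]


# Hypothetical (roof centroid, damage centroid) pairs in pixel coordinates.
pairs = [((50, 50), (60, 60)), ((50, 50), (50, 40)), ((50, 50), (58, 61))]
counts = Counter(damage_direction(roof, damage) for roof, damage in pairs)
print(counts)
```

Counting the sectors over all damaged roofs gives a rose-type distribution comparable in form to Figure 12b.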

Finally, the trained deep learning model was used to detect roof damage in the south of Chiba prefecture (Figure 3). The PC was the same as that used for the previous training. It was confirmed that the detection can be completed within 10 min without upgrading the hardware. Within the range of the aerial photos in this study, the trained model automatically detected 48,315 roofs, of which 4838 were damaged, a rate of approximately 10%.

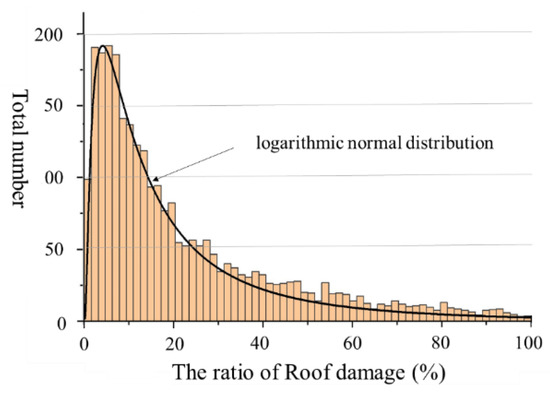

Figure 13 shows the fitted curve for the damaged roofs detected by the deep learning model. The horizontal axis is the proportion of the damaged area in the entire roof area, and the black curve is the fitted probability density, which follows a lognormal distribution. Roofs with a damage ratio of 1–10% account for 44.3%, those with a damage ratio of 10–30% account for 35.4%, and those with a damage ratio of 80–100% account for 3.6%. Thus, most roofs were partially damaged, and the proportion of completely destroyed roofs was very small.

Figure 13.

Statistics of detected roof damage.
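As a hedged illustration of how such statistics can be produced, the sketch below fits a lognormal distribution by taking the mean and standard deviation of the logarithms of the damage ratios (the maximum-likelihood estimates) and computes the share of roofs in a ratio bin. The fitting procedure actually used for Figure 13 is not stated here, so this is only one plausible approach; the sample `ratios` and all function names are hypothetical.

```python
import math
import statistics


def fit_lognormal(ratios):
    """Estimate (mu, sigma) of a lognormal from positive damage ratios."""
    logs = [math.log(r) for r in ratios if r > 0]
    return statistics.mean(logs), statistics.pstdev(logs)


def lognormal_pdf(x, mu, sigma):
    """Probability density of the fitted lognormal at x > 0."""
    z = (math.log(x) - mu) / sigma
    return math.exp(-0.5 * z * z) / (x * sigma * math.sqrt(2.0 * math.pi))


def share_in_bin(ratios, low, high):
    """Fraction of roofs whose damage ratio lies in (low, high]."""
    return sum(1 for r in ratios if low < r <= high) / len(ratios)


# Hypothetical damage ratios (damaged area / roof area), not data from this study.
ratios = [0.02, 0.05, 0.08, 0.15, 0.25, 0.40, 0.60, 0.90]
mu, sigma = fit_lognormal(ratios)
print(round(mu, 3), round(sigma, 3), share_in_bin(ratios, 0.01, 0.10))
```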

5. Classification of Roof Damage Level

The ultimate goal was to use the tested model to classify the level of roof damage.

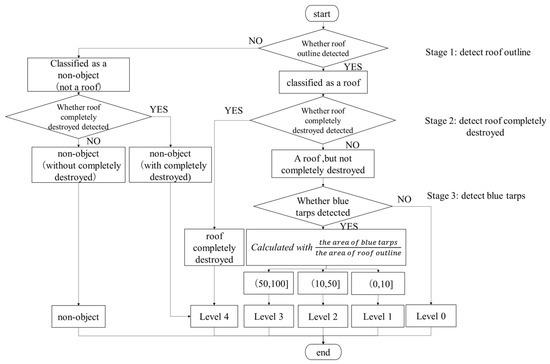

Figure 14 shows the classification flowchart, which is roughly divided into three parts. First, the roof outline is detected; if no outline is detected, the object is determined to be Non-roof. Next, if a completely destroyed roof is detected, it is directly classified as Level 4, whether or not blue tarps are also detected. Finally, if blue tarps and a roof outline are detected simultaneously in an area, the proportion of blue tarps in this area is calculated using the formula provided in Figure 14, and Level 1, 2, or 3 is assigned according to this proportion. If only the roof outline is detected and no blue tarps are detected, the roof is classified as Level 0.

Figure 14.

Flowchart of classification of the level of roof damage.
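The decision order described for Figure 14 can be sketched as follows. This is a minimal illustration, not the implementation used in this study: the proportion is assumed to be the blue tarp area divided by the roof outline area, and the `thresholds` separating Levels 1, 2, and 3 are hypothetical placeholders, because the actual boundaries are given only in Figure 14.

```python
def classify_roof(roof_area, tarp_area=0.0, destroyed=False,
                  thresholds=(0.10, 0.30)):
    """Return the damage level (0-4) or "non-roof" for one detected area.

    thresholds: hypothetical proportion boundaries between Levels 1/2 and 2/3.
    """
    if roof_area <= 0:          # no roof outline detected
        return "non-roof"
    if destroyed:               # completely destroyed roof overrides blue tarps
        return 4
    if tarp_area <= 0:          # outline only, no blue tarps
        return 0
    proportion = tarp_area / roof_area
    if proportion < thresholds[0]:
        return 1
    if proportion < thresholds[1]:
        return 2
    return 3


# Hypothetical areas in square metres.
print(classify_roof(roof_area=100.0, tarp_area=20.0))                  # level 2
print(classify_roof(roof_area=100.0, tarp_area=50.0, destroyed=True))  # level 4
```

Checking the completely destroyed case before the blue tarp proportion reproduces the rule that such roofs are Level 4 regardless of any tarps detected on them.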

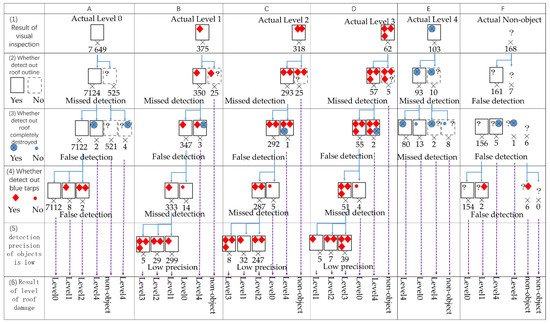

Figure 15 shows the statistics for each classification stage. The first row gives the counts obtained by visual inspection of the area enclosed by the green box in Figure 3: 7649, 375, 318, 62, and 104 roofs of levels 0, 1, 2, 3, and 4, respectively, as well as several non-roofs. In the detection of roof outlines in the second row, 7117 houses were correctly detected, 590 houses were missed (most of them, 525 in total, were undamaged roofs), and 161 objects were wrongly detected (mainly automobiles, agricultural greenhouses, pools, etc.). In the detection of completely destroyed roofs in the third row, 82 roofs were correctly detected, 21 were missed, and 18 were wrongly detected (including outdoor warehouses). In the detection of blue tarps in the fourth row, the omissions and false detections are displayed below each block. In row 5, owing to the limited detection accuracy of blue tarps and roof outlines, certain objects were detected successfully but with a considerable position deviation, which resulted in wrong classification. For example, in column B of row 5, because the detected area of the blue tarps is larger than the actual area, 33 roofs of level 1 are wrongly classified (5 and 29 roofs are wrongly classified as level 3 and level 2, respectively). Row 6 presents the final results. For example, in column C, of the 318 roofs of level 2, 247 were correctly classified as level 2, 5 were wrongly classified as level 0, 32 as level 1, 8 as level 3, and 1 as level 4, and 25 were classified as non-roof.

Figure 15.

Statistical chart for the classification of the level of roof damage. Columns A–E show the process of level classification from actual levels 0–4 to each classification level. Column F shows the process of level classification from actual non-objects to each classification level.

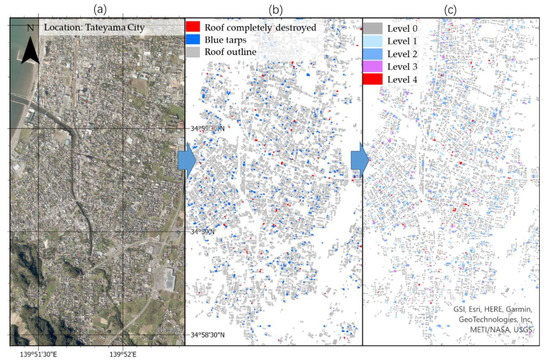

Other detailed statistical results are shown in Table 6. Figure 16 maps each detected object and the level of roof damage.

Table 6.

(a) Evaluation of test results using confusion matrix. (b) The accuracy and F value of the confusion matrix.

Figure 16.

Results of the detection and classification for the green areas in Figure 3. (a) Aerial photo in Tateyama city. (b) Each detected object using deep learning. (c) The level of roof damage obtained using automatic classification.

In Table 6, the accuracy and F value of the classification are calculated separately for each damage level. The averages of the per-level accuracy and F value were then taken as the mean accuracy and F value of the classification, respectively. The overall accuracy was 0.97, and the overall F value was approximately 0.81. The accuracy is high because level 0 accounts for the great majority of roofs. When the number of samples differs greatly among levels, the accuracy rate is therefore not a good index. Consequently, the comparison with other methods in Section 6 is based mainly on the F value.
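The per-level calculation and averaging can be sketched as follows, assuming a one-vs-rest treatment of each level in a multi-class confusion matrix. The matrix below is hypothetical and much smaller than Table 6, and the function names are illustrative only.

```python
def per_class_scores(matrix):
    """matrix[i][j]: number of samples of actual class i predicted as class j.

    Returns a list of (accuracy, f_value) pairs, one per class (one-vs-rest).
    """
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    scores = []
    for k in range(n):
        tp = matrix[k][k]
        fn = sum(matrix[k]) - tp
        fp = sum(matrix[i][k] for i in range(n)) - tp
        tn = total - tp - fn - fp
        accuracy = (tp + tn) / total
        f_value = 2 * tp / (2 * tp + fp + fn) if tp else 0.0
        scores.append((accuracy, f_value))
    return scores


def macro_average(scores):
    """Mean accuracy and mean F value over all classes."""
    n = len(scores)
    return (sum(a for a, _ in scores) / n, sum(f for _, f in scores) / n)


# Hypothetical 3-class matrix dominated by the first class.
matrix = [[90, 5, 5],
          [4, 5, 1],
          [3, 1, 6]]
scores = per_class_scores(matrix)
mean_accuracy, mean_f = macro_average(scores)
print(round(mean_accuracy, 3), round(mean_f, 3))
```

In this imbalanced example the mean accuracy is clearly higher than the mean F value, because the true negatives contributed by the dominant class enter the accuracy of every minority class but not its F value.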

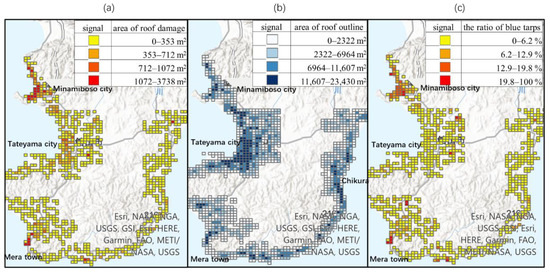

To inspect the spatial distribution of blue tarps after Typhoon Faxai, a 250 m mesh based on the standard of the Statistics Bureau of Japan [29] was used to render the distribution map, and the areas of blue tarps and roof outlines and the ratio of blue tarps were calculated for each mesh. Figure 17 shows the distribution map. Panel (a) is the total area of the detected roof damage in each mesh (m²). Most of the blue tarps were distributed in Minamiboso City, the central part, near the Mera area, and Tateyama City. Panel (b) is the total area of the detected roof outline (m²). Roof outlines are mainly distributed along the coastline of Tateyama City, the Mera area, and the Chikura area. Panel (c) is the distribution of the ratio of roof damage as a percentage. Equation (13) is used to calculate the rate of roof damage in each mesh. In Tateyama City, areas with a high ratio evidently exist inland rather than along the coastline.

$$p_{roof} = \frac{S_b + S_c}{S_f} \times 100 \qquad (13)$$

where $p_{roof}$ is the rate of roof damage (percentage), $S_b$ is the area of blue tarps (m²), $S_c$ is the area of completely destroyed roofs (m²), and $S_f$ is the area of the roof outline (m²).

Figure 17.

Distribution of roof damage in southern Chiba Prefecture. (a) Total area of the detected roof damage in each mesh. (b) Total area of the detected roof outline. (c) Distribution of the ratio of roof damage.
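A per-mesh aggregation of this kind can be sketched as follows. The sketch makes two assumptions that should be read as such: the rate is taken as 100 × (S_b + S_c) / S_f, which follows from the symbol definitions given for Equation (13), and the mesh is simplified to a square 250 m grid in projected metre coordinates rather than the regional mesh code scheme of the Statistics Bureau of Japan. All names and sample detections are hypothetical.

```python
from collections import defaultdict

MESH_SIZE_M = 250.0  # edge length of one mesh cell in metres


def mesh_index(x, y, size=MESH_SIZE_M):
    """Column and row of the mesh cell containing the point (x, y)."""
    return int(x // size), int(y // size)


def damage_rate_by_mesh(detections):
    """detections: iterable of (x, y, kind, area_m2),
    with kind in {"tarp", "destroyed", "roof"}.

    Returns {mesh cell: damage rate in percent} for cells containing roofs.
    """
    sums = defaultdict(lambda: {"tarp": 0.0, "destroyed": 0.0, "roof": 0.0})
    for x, y, kind, area in detections:
        sums[mesh_index(x, y)][kind] += area
    rates = {}
    for cell, s in sums.items():
        if s["roof"] > 0:  # skip cells without any detected roof outline
            rates[cell] = 100.0 * (s["tarp"] + s["destroyed"]) / s["roof"]
    return rates


# Hypothetical detections: centroid coordinates (m), object kind, area (m2).
detections = [
    (10.0, 10.0, "roof", 200.0),
    (20.0, 30.0, "tarp", 30.0),
    (240.0, 100.0, "destroyed", 20.0),
    (260.0, 10.0, "roof", 100.0),
]
rates = damage_rate_by_mesh(detections)
print(rates)
```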

6. Discussion

A comparison with other roof damage detection methods showed that the F value of the proposed classification method is favorable. The comparison used aerial photos of the entire south of Chiba Prefecture after Typhoon Faxai in 2019 as the test area. The image analysis methods follow Liu et al. [30] and Kono et al. [10], and the CNN method follows Miura et al. [13]. Table 7 shows the classification methods and evaluation indices compared.

Table 7.

Comparison with other classification methods.

In addition to the accuracy indices (accuracy and F value), the time required for analysis and the universality of each method were investigated.

First, although visual inspection offers high accuracy, it is time consuming. The author initially extracted damaged roofs from aerial photos of the southern area of Chiba prefecture through visual inspection, which required more than 2 days. Next, in the image analysis method, the spectral characteristics of blue tarps were studied, and different parameters were used to extract blue tarps. This method also requires time and lacks universality. In the CNN-based method, training is time consuming, whereas the time taken for automatic detection is comparatively negligible. For example, using a workstation with 64 GB RAM, 64-bit Windows 10 Pro for Workstations, and a Quadro RTX 5000 graphics card as the training environment, approximately 3 h are required to train on a 10,000 × 10,000 area, whereas detecting roof damage in the same area with the trained model takes only 245 s, and classifying the damage level from the detected results takes only a further 10 s. However, a specific comparison of the time consumption of the various methods is difficult, because the algorithms and computer hardware differ and, in particular, the time needed to prepare the dataset and to determine the best method cannot be calculated clearly.

The accessibility of this study refers to the convenience with which later researchers can use it. Regarding the updatability of the model parameters, the training in this study can be regarded as initial training, and subsequent users can take the model as initial parameters for secondary and further training. As training continues, the accuracy of the model parameters gradually improves. The model can thus provide initial parameters for other researchers’ automatic detection research. Therefore, the method in this paper can be considered accessible.

Nevertheless, fully automatic extraction of damaged roofs from aerial photos remains difficult, primarily owing to three factors. (1) Because roofs are made of different materials, detection omissions or errors occur when extracting geometric or structural features. (2) The roofs of general public buildings have regular shapes, whereas the roofs of residential houses are complex and variable in shape (such as oval). (3) Suburban homes are often covered by dense trees, and lower houses are often covered by the shadows of taller buildings, which makes the extraction of complete roofs a challenge.

7. Conclusions

In recent years, frequent typhoon disasters have caused great damage to houses, and the rapid detection of damaged roofs has become a challenge. Using aerial photos, this study took the residential roofs in the southern part of Chiba Prefecture after Typhoon Faxai in 2019 as the research target and described the sequence and principles of the detection method in detail, including sample preparation, parameter adjustment, and model training and validation. Subsequently, the detection results obtained using the model were compared with the results of the field investigation after Typhoon Faxai, which demonstrated the feasibility of the method. In addition, the advantages and disadvantages of the method relative to the field investigation were summarized.

Compared with the traditional field investigation and other image analysis methods, the deep learning method in this study can greatly shorten the damage detection time, and the F value of the damage classification is more than 0.8. Furthermore, this study provides a research template for further research on the detection of roof damage after a typhoon. In the future, this method is expected to be extended to other targets, such as detecting damage to walls and other parts of houses after a typhoon.

Note (1) batch size: the number of samples contained in each training subset is called the batch size. For example, when a dataset of 2000 roofs is divided into 100 subsets, the batch size is 20.

Note (2) backbone: short for backbone network; its scale and type vary with the network. The backbones of the model in this paper are ResNet-50 and ResNet-101, where the number indicates the depth of the network (number of layers).

Note (3) learning rate: the learning rate is a parameter that adjusts the size of parameter changes in the optimization of deep learning. An excessive learning rate prevents the training from converging to good results, and too small a learning rate prolongs the training time.
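The batch size arithmetic in Note (1) and the influence of the learning rate in Note (3) can be illustrated with a toy example. The sketch below applies plain gradient descent to the one-dimensional function f(w) = w², which is an assumption chosen for clarity and is unrelated to the network trained in this study; the function name and the three learning rates are hypothetical.

```python
def gradient_descent(lr, steps=50, start=10.0):
    """Minimise f(w) = w**2 (gradient 2w) and return the final |w|."""
    w = start
    for _ in range(steps):
        w -= lr * 2.0 * w  # one update step scaled by the learning rate
    return abs(w)


# Note (1): 2000 roofs divided into 100 subsets gives a batch size of 20.
batch_size = 2000 // 100

too_small = gradient_descent(lr=0.001)  # barely moves within 50 steps
moderate = gradient_descent(lr=0.1)     # approaches the minimum at w = 0
too_large = gradient_descent(lr=1.1)    # overshoots and diverges

print(batch_size, too_small, moderate, too_large)
```

With the same number of steps, the small rate is still far from the minimum, the moderate rate is close to it, and the excessive rate ends further away than it started.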

Author Contributions

Conceptualization, J.X.; methodology, T.T.; validation, F.Z.; formal analysis, W.L.; investigation, J.X. and T.T.; resources, W.L. and T.T.; data curation, T.T.; writing—original draft preparation, J.X.; writing—review and editing, J.X.; funding acquisition, T.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by JSPS Grant-in-Aid for Special Purposes (2019) G19K24677, a field investigation on the effects of prolonged power outages caused by Typhoon Faxai (Yoshihisa Maruyama).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

Part of this paper was supported by a field investigation after Typhoon Faxai (Yoshihisa Maruyama). Thanks to everyone who accompanied us in the field investigation after Typhoon Faxai in 2019.

Conflicts of Interest

The authors declare no conflict of interest.

References

- National Institute for Land and Infrastructure Management (NILIM); Building Research Institute (BRI). Disaster report: Field Survey report on damage to buildings due to strong winds caused by typhoon Faxai in 2019 (Summary). Build. Disaster Prev. 2019, 503, 57–66.

- National Institute for Land and Infrastructure Management (NILIM). Field Investigation Report on Damage of Buildings by Strong Wind Accompanied with Typhoon No.21. Available online: http://www.nilim.go.jp/lab/bbg/saigai/ (accessed on 14 November 2018).

- Cabinet Office (Disaster Prevention): Disaster Guidelines for Disaster Victims 2021. Available online: http://www.bousai.go.jp/taisaku//pdf/r303shishin_all.pdf (accessed on 18 March 2021).

- Kazuyoshi, N.; Yuya, K.; Takashi, T.; Eriko, T.; Hiroshi, N. Survey report on damage and recovery after Typhoon Faxai in 2019: Part1-Part4. Analysis of roof damage with aerial photo image. In Summaries of Technical Papers of Annual Meeting, Architectural Institute of Japan; Chiba University: Chiba, Japan, 2020; pp. 46–85.

- Ministry of Internal Affairs and Communications, Japan. Result Report: Fact-Finding Survey on the Issuance of Disaster Certificates in the Event of a Large-Scale Disaster, Focusing on the 2016 Kumamoto Earthquakes. Available online: https://www.soumu.go.jp/main_content/000528758.pdf (accessed on 18 March 2021).

- Mitomi, H.; Matsuoka, M.; Yamazaki, F.; Taniguchi, H.; Ogawa, Y. Determination of the areas with building damage due to the 1995 Kobe earthquake using airborne MSS images. IEEE Int. Geosci. Remote Sens. Symp. 2002, 5, 2871–2873.

- Noda, M.; Tomokiyo, E.; Takeuchi, T. Aerial survey of wind damage by T1821 in South Osaka and North Wakayama. Summ. Tech. Pap. Annu. Meet. Jpn. Assoc. Wind. Eng. 2019, 1, 105–106.

- Suzuki, K.; Hanada, D.; Yamazaki, F. Building damage inspection of the 2012 Tsukuba Tornado from field survey and aerial photographs. Inst. Soc. Saf. Sci. 2013, 21, 9–16.

- Kono, Y.; Kazuyoshi, N.; Takashi, T.; Eriko, T.; Hiroshi, N. Housing damage caused by Typhoon Jebi 2018 Part 1 Estimation of roof damage rate based on comparison of satellite images taken before and after the typhoon. Archit. Inst. Jpn. 2020, 1, 72–85.

- Kono, Y.; Nishijima, K. Survey report on damage and recovery after Typhoon Faxai in 2019: Part4: Analysis of roof damage with aerial photo image. Archit. Inst. Jpn. 2019, 2, 89–99.

- Naito, S.; Monma, N.; Yamada, T.; Shimomura, H.; Mochizuki, K.; Honda, Y.; Nakamura, H.; Fujiwara, H.; Shoji, G. Building damage detection of the Kumamoto earthquake utilizing image analyzing methods with aerial photographs. J. Jpn. Soc. Civ. Eng. 2019, 75, 218–237.

- Kurihara, S. The Theory of the Future of Life, Industry and Society from the Viewpoint of the Practical Use of AI Revolution with Human Being; NTS Press: Tokyo, Japan, 2019; pp. 49–113. (In Japanese)

- Miura, H.; Aridome, T.; Matsuoka, M. Deep learning-based identification of collapsed, non-collapsed and blue tarp-covered buildings from post-disaster aerial photo. Remote Sens. 2020, 12, 1924.

- Minshui, H.; Wei, Z.; Jianfeng, G.; Yongzhi, L. Damage identification of a steel frame based on integration of time series and neural network under varying temperatures. Adv. Civ. Eng. 2020, 1, 1–15.

- Ministry of Internal Affairs and Communications, Japan: About the Damage Situation Caused by Typhoon Faxai in 2019. Available online: https://www.soumu.go.jp/r01_taifudai15gokanrenjoho/ (accessed on 18 March 2021).

- Hinz, A. The Z/I Imaging digital aerial camera system. In Photogrammetric Week 1999; Fritsch, D., Spiller, R., Eds.; Wichmann Verlag: Heidelberg, Germany, 1999.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Region-based convolutional networks for accurate object detection and segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 142–158.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch, an imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 2019, 32, 8026–8037.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Computer Vision – ECCV 2014; Lecture Notes in Computer Science; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer: Cham, Switzerland, 2014; pp. 740–755.

- Minshui, H.; Cheng, X.; Zhu, Z.; Luo, J.; Gu, J. A novel two-stage structural damage identification method based on superposition of modal flexibility curvature and whale optimization algorithm. Int. J. Struct. Stab. Dyn. 2021, 21, 2150169.

- Minshui, H.; Cheng, X.; Lei, Y. Structural damage identification based on substructure method and improved whale optimization algorithm. J. Civ. Struct. Health Monit. 2021, 11, 351–380.

- Chida, H.; Takahashi, N. Study on image diagnosis of timber houses damaged by earthquake using deep learning. Archit. Inst. Jpn. J. Struct. Constr. Eng. 2020, 85, 529–538.

- Garcia-Garcia, A.; Orts-Escolano, S.; Oprea, S.; Villena-Martinez, V.; Garcia-Rodriguez, J. A review on deep learning techniques applied to semantic segmentation. arXiv 2017, arXiv:1704.06857.

- Kanai, K.; Yamane, T.; Ishiguro, S.; Chun, P.-J. Automatic detection of slope failure regions using semantic segmentation. Intell. Inform. Infra. 2020, 1, 421–428.

- Statistics Bureau of Japan. About Regional Mesh Statistics. Available online: https://www.stat.go.jp/data/mesh (accessed on 24 December 2020).

- Liu, W.; Maruyama, Y. Damage estimation of buildings’ roofs due to the 2019 typhoon Faxai using the post-event aerial photographs. J. Jpn. Soc. Civ. Eng. 2020, 76, 166–176.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).