1. Introduction

Student course evaluation surveys serve as one of the key student feedback channels for quality assurance and continuous enhancement of teaching and learning at the institutional level. Research shows that students’ engagement in learning is a critical indicator of learning productivity, satisfaction, and academic success. Ref. [1] suggests that student engagement is a key indicator of the quality of students’ learning experience. Student engagement is defined by [2] as the “effort to study a subject, practice, obtain feedback, analysis, and solve problems”. The work by [3] classifies student engagement into behavioral, emotional, cognitive, and agentic levels. In other words, student engagement is closely associated with students’ class participation, contribution, and ownership of their learning.

Previous empirical analysis at a leading performing arts university in Asia shows that students’ overall ratings of Outcome Achievement, Teacher Rating, and Student Effort contribute positively to Course Satisfaction. Meanwhile, Course Design, Teaching Practice, and Student Effort contribute positively to students’ ratings of Outcome Achievement, Teacher Rating, and Course Satisfaction. Course Design has a significant impact on Course Satisfaction, whereas Teaching Practice drives wide variation in Teacher Rating, especially in performing arts education. These findings are consistent with the extant literature, which holds that fostering students’ active engagement in learning is central to student satisfaction, learning productivity, and academic success.

Building on these insights, this study (1) applies the data warehouse multi-dimensional analysis approach to analyze course student evaluation data, a key aspect of quality assurance in higher education; and (2) applies a newly developed analytical framework that categorizes courses into domains (Student Effort, Outcome Achievement, Course Rating, and Teacher Rating) to help program leaders and senior-management policymakers address domain-specific course issues. In particular, this diagnostic tool provides a multi-dimensional lens for classifying courses into domains, each paired with a metaphor and targeted enhancement strategies for its domain-specific issues.

2. Literature Review

Student evaluations of courses and teaching, a key aspect of teaching and learning quality assurance at tertiary institutions, have become increasingly important and are recognized as one of the ways in which teacher effectiveness can be identified and improved. Higher education institutions use these evaluations not only to support accreditation procedures and provide measures of accountability but also to gather data on teaching quality, effectiveness, and enhancement.

A student satisfaction study conducted at a Romanian university by [4] presents important aspects of the student satisfaction survey in three dimensions (teaching and learning activities, the institutional material base, and the support services offered by the institution), and also shows that the evaluation is a suitable measure of effectiveness [5]. Studies of various scales have measured academics’ performance based on student evaluations and indicate that the evaluation plays a significant role in academics’ promotion or dismissal [6,7,8].

Furthermore, the works by [9,10] explore legal issues surrounding the dismissal of unproductive lecturers who do not meet the required evaluation expectations during periods of restructuring in the US or Australian education systems.

To elicit university students’ course-related expectations of elective music courses, [11] conducted descriptive research at Ankara University, collecting questionnaire data from 552 students. The study investigates: (1) reasons for choosing the music course; (2) expectations of the course objectives; (3) expectations of the course content; (4) expectations of the teaching process; and (5) expectations of the evaluation process.

Interpretative Phenomenological Analysis (IPA) is used in music education research by [12] to investigate personal meaning and lived experience in the music classroom. The study examines musical identity, curriculum detail, pedagogy, and technology, and explores how this analytical approach works in research conducted by music educators.

The author of [13] explores the potential of a synchronous online piano teaching internship as a service-learning project for graduate pedagogy interns. The work suggests that educators shift the teaching focus from teacher-centered to student-centered (from one-way to two-way interaction), which makes feedback from students more important. The study shows evidence of effective learning and an engaging classroom, and indicates that hearing from learners helps manifest learning outcomes through improved teaching.

The work by [14] investigates the impact of the pandemic on Ethno World, JM-International’s programme for folk, world, and traditional music. The research aims to find out how artistic mentors have responded to the shift in teaching and learning from face-to-face to an online environment. A range of questions is used, including: How do artistic mentors perceive online teaching and learning practices during COVID-19? How has the shift to an online music teaching and learning environment affected their understanding of the teaching and learning principles of Ethno? The study reveals both pros and cons of online learning.

The works of [3,15] reveal that students are motivated to engage in learning when learning activities and social conditions satisfy their basic needs for competence (feeling competent), autonomy (feeling in control), and relatedness (establishing emotional bonds with others). In teaching and learning contexts, students experience autonomy to the extent that their learning activities foster a sense of choice, psychological freedom, and an internal locus of control. As such, student engagement is affected by course design (curriculum development), the learning environment (social climate), and social agents (teachers and peers), according to [1,16]. Studies suggest that supportive teaching practices are positively associated with student engagement, including practices that: (a) target higher-order cognitive skills; (b) incorporate active learning activities; (c) involve collaborative investigation; and (d) incorporate social learning activities, such as observation, guided inquiry, and interaction with peers, experts, and teachers [2,17,18,19]. Furthermore, from the program design and management point of view, a combination of effective course design and engaging teaching is key to student satisfaction, which leads to higher achievement of learning goals.

Several newer models for evaluating teaching, along with a pathway toward more accurate assessment, are proposed by [20] to standardize evaluation protocols for performing arts teacher preparation programs. However, analyzing the massive amount of data and presenting the aggregated results efficiently remain extremely difficult in the evaluation of performing arts teachers.

With the rapid development of information technology, researchers have applied various online analytical processing technologies and visualization methodologies in tertiary education, especially in teaching- and learning-related fields. Researchers [21] conduct a systematic review of learning analytics visualizations and conclude that while there is considerable work in the field, there “is a lack of studies that both employ sophisticated visualizations and engage deeply with educational theories” (p. 129). At the program level, scholars [22] show how learning analytics can inform curriculum review through the analysis of data such as students’ grades and subject satisfaction scores to identify areas for enhancement and improvement. The work by [23] presents the use of a data warehouse (DW) to analyze the behavior of the users (students) of an e-learning platform in order to make decisions about their assessment. The DW is used to process and analyze data generated by students while navigating the e-learning platform. Ref. [24] uses the Online Analytical Processing (OLAP) technique to generate valuable reports, which are then used to improve the e-learning platform and support learning evaluation.

Given current online analytical processing technology and growing demands from program leaders and policymakers at the senior management level, an efficient solution is needed. In this study, the researchers present a multi-dimensional analysis methodology and a novel analytical framework that categorizes courses into domains to address domain-specific course issues. The framework yields simple, effective visualizations that help administrators and policymakers quickly identify issues, adjust policies, and take the actions needed to improve and enhance teaching.

3. Data and Methodology

3.1. Source Data

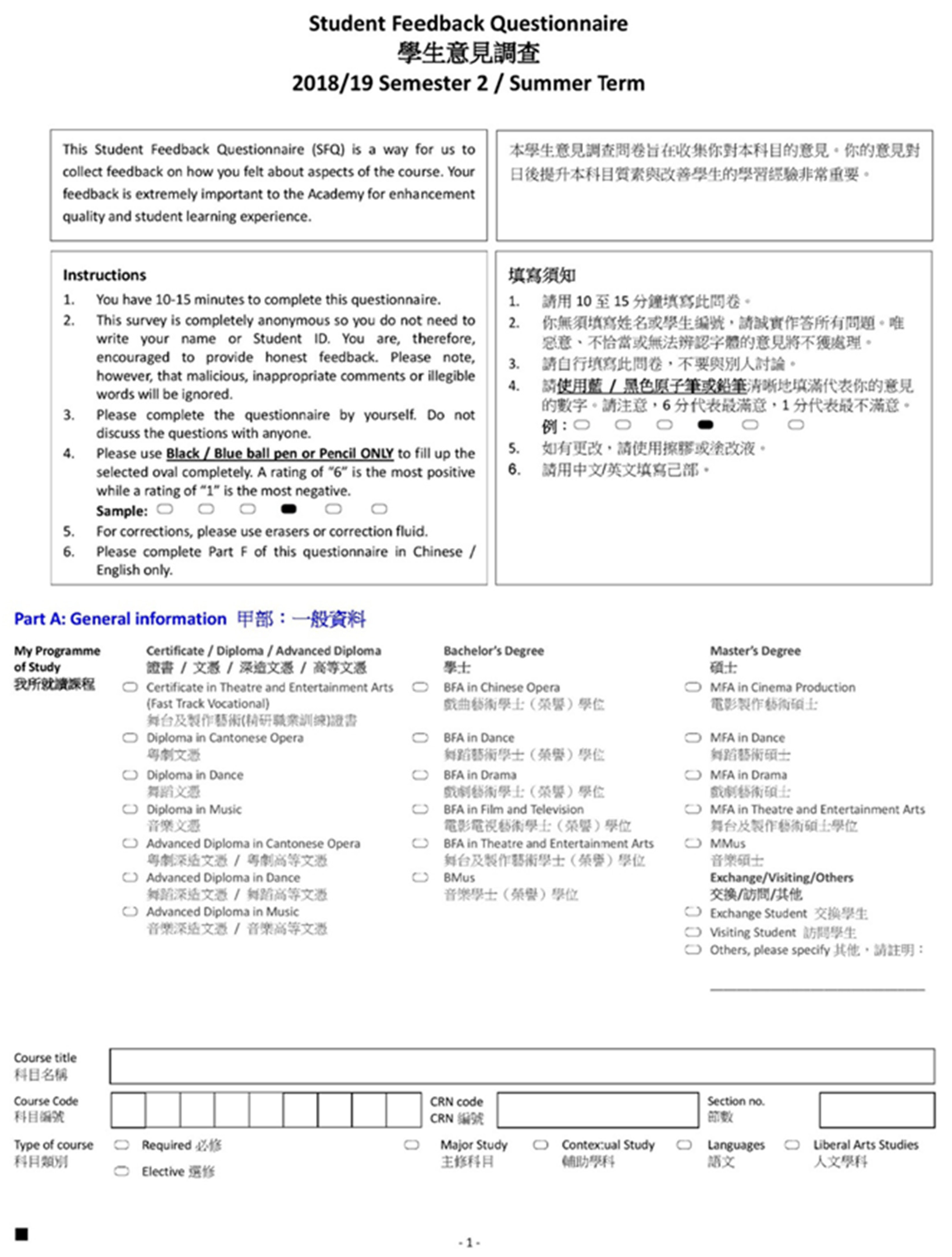

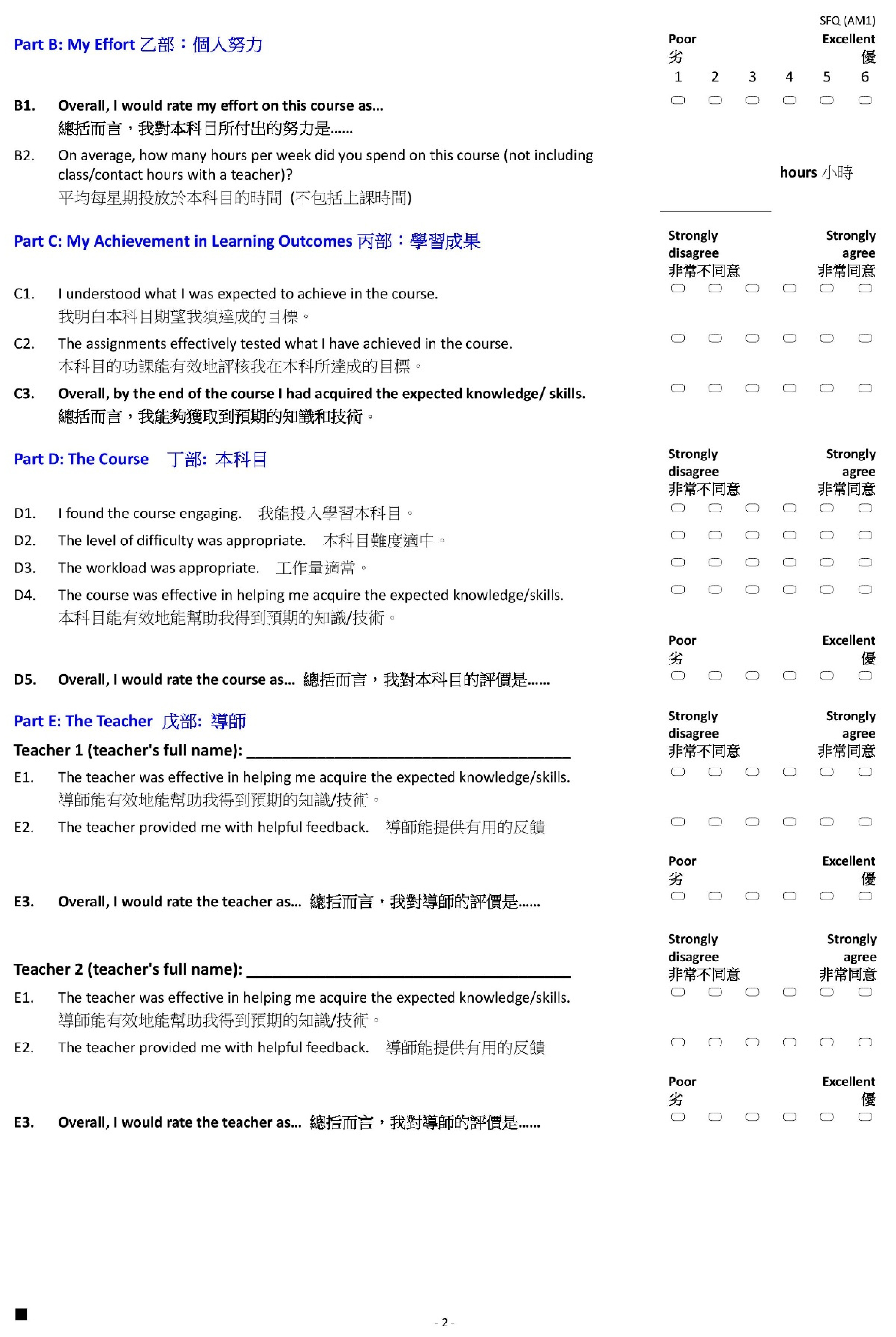

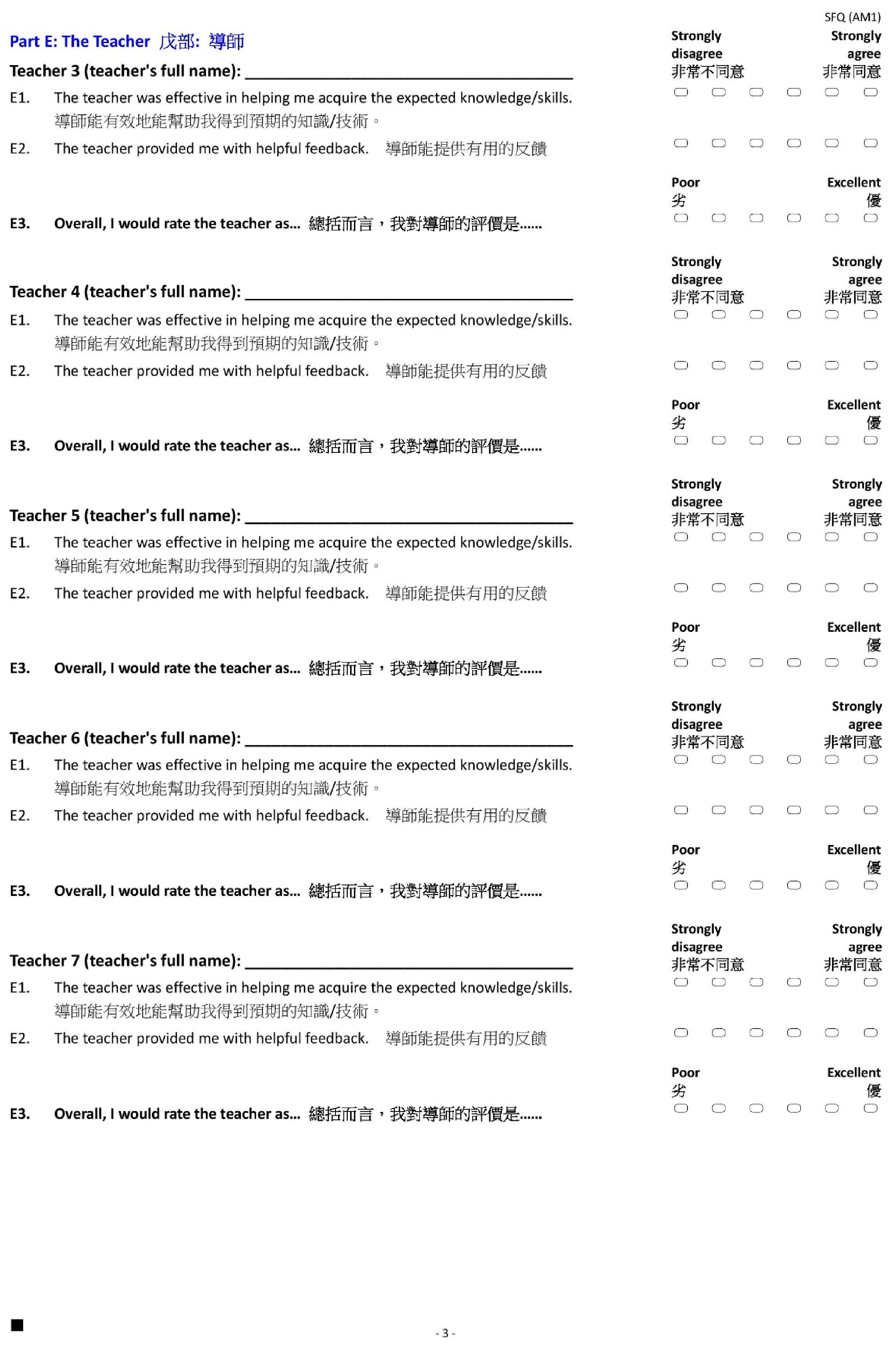

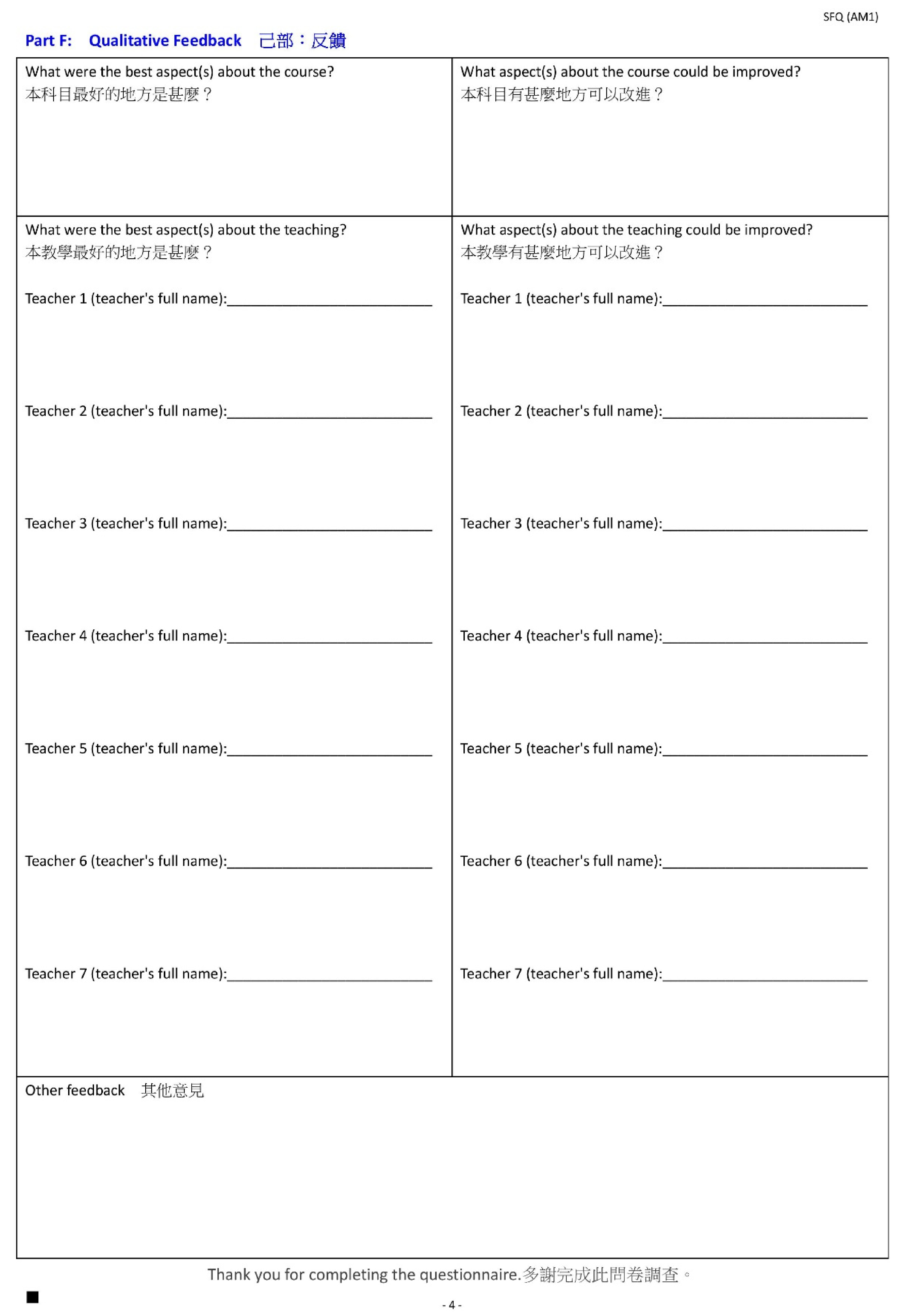

This study focuses on a leading performing arts university in Asia that is committed to maintaining the highest program and teaching standards. Student surveys are conducted regularly at the institutional level to gather feedback on faculty performance. The centralized Student Feedback Questionnaire (“SFQ”) survey is one of the instruments of the institutional evaluation system for quality assurance and continuous teaching enhancement.

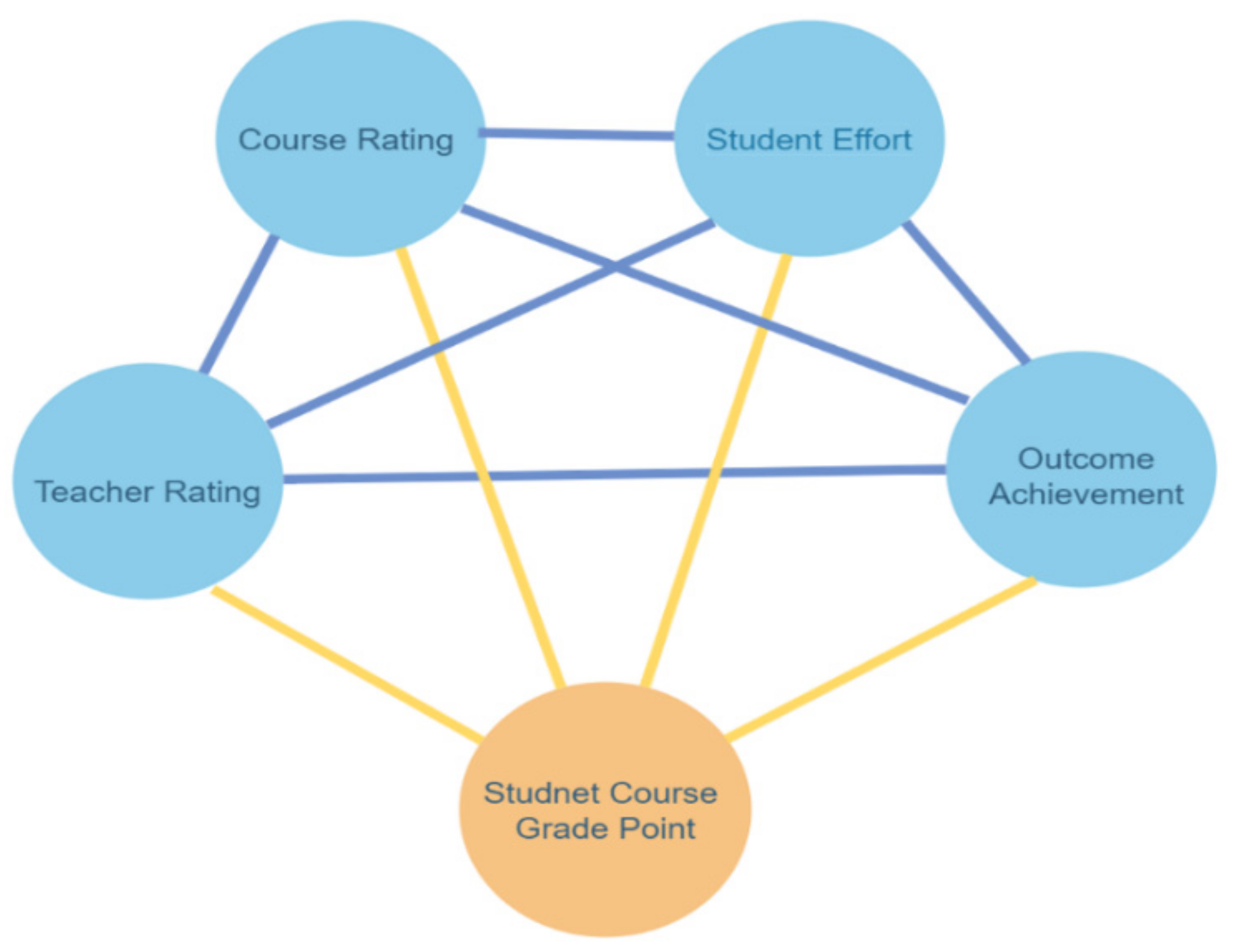

The questionnaire covers four dimensions: Student Effort, Outcome Achievement, Course Rating, and Teacher Rating. The mapping between dimensions and questions is shown in Table 1.

The detailed questionnaire is listed in Appendix A. As standard practice, two rounds of the SFQ survey were conducted for AY 2018/19, one covering courses delivered in the Spring Semester and the other covering courses delivered in the Fall Semester (summarized in Table 2).

Performing arts education tends to have small classes, such as one-on-one or one-to-few teaching; for example, soprano coaching or dance choreography. SFQ scores for these small classes are usually high because of the close relationship between teachers and students. For data accuracy, this study excluded all small classes.
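The exclusion step can be sketched as a simple filter applied before any averaging. This is a minimal illustration under stated assumptions: the study does not say how "small" is defined, so the cut-off `MIN_CLASS_SIZE = 5`, the `CourseSection` record, and the course codes are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical cut-off: the study does not state how a "small class" is defined.
MIN_CLASS_SIZE = 5

@dataclass
class CourseSection:
    course_code: str   # hypothetical course code
    enrolled: int      # number of students in the section
    mean_sfq: float    # mean SFQ score for the section

def exclude_small_classes(sections, min_size=MIN_CLASS_SIZE):
    """Keep only sections whose enrolment reaches min_size."""
    return [s for s in sections if s.enrolled >= min_size]

sections = [
    CourseSection("VOC101", 1, 4.9),   # one-on-one soprano coaching
    CourseSection("DAN210", 3, 4.8),   # one-to-few choreography class
    CourseSection("MUS150", 40, 3.9),  # large lecture course
]
kept = exclude_small_classes(sections)
print([s.course_code for s in kept])  # ['MUS150']
```

Keeping the cut-off as a parameter makes it easy to test how sensitive the aggregate ratings are to the definition of "small".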

3.2. Scopes and Multi-Dimensional Data Model

For quality management, after requirements were collected from senior management, the system was designed to analyze the relationships among the four SFQ categories and students’ course grade data (Figure 1).

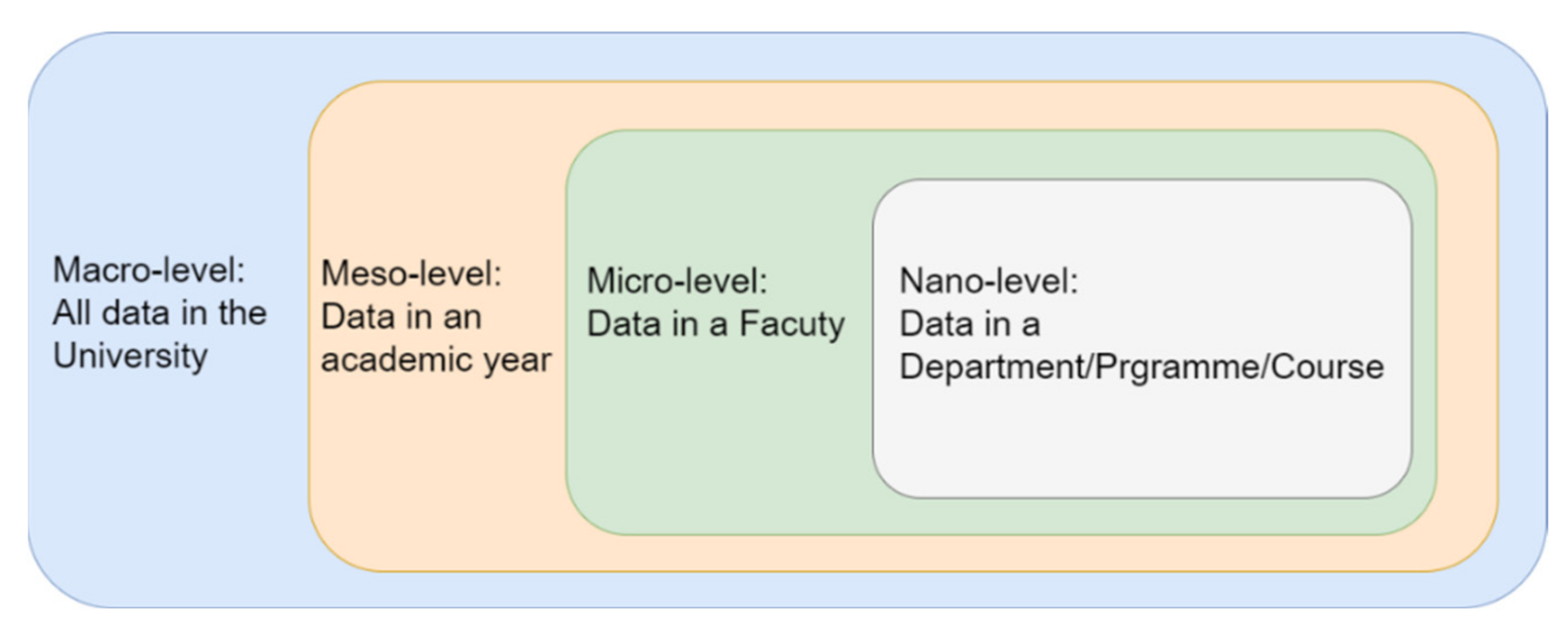

In this study, as shown in Figure 2, the analytics are classified into four levels: the “Nano-level” refers to information/data within a course, program, or department; the “Micro-level” covers many courses in a school/faculty; the “Meso-level” covers many courses in many faculties in a specific academic year; and finally, the “Macro-level” covers many faculties over many years across the institution.
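One way to read the four levels is as progressively coarser grouping keys over the same rating records. The sketch below assumes that mapping (Nano by year, faculty, and course; Micro by year and faculty; Meso by year; Macro over everything); the field names and records are hypothetical, not the study's schema.

```python
from collections import defaultdict
from statistics import mean

# Assumed mapping of analytics levels to grouping keys (an interpretation of Figure 2).
LEVEL_KEYS = {
    "nano": ("year", "faculty", "course"),
    "micro": ("year", "faculty"),
    "meso": ("year",),
    "macro": (),  # empty key: one group for the whole institution
}

def aggregate(records, level, measure="rating"):
    """Average `measure` over the groups defined by the level's grouping keys."""
    keys = LEVEL_KEYS[level]
    groups = defaultdict(list)
    for r in records:
        groups[tuple(r[k] for k in keys)].append(r[measure])
    return {group: round(mean(values), 2) for group, values in groups.items()}

# Hypothetical course-level ratings.
records = [
    {"year": "2018/19", "faculty": "Music", "course": "MUS150", "rating": 4.0},
    {"year": "2018/19", "faculty": "Music", "course": "MUS220", "rating": 3.0},
    {"year": "2018/19", "faculty": "Dance", "course": "DAN210", "rating": 5.0},
    {"year": "2019/20", "faculty": "Dance", "course": "DAN210", "rating": 4.0},
]

for level in LEVEL_KEYS:
    print(level, aggregate(records, level))
```

Each group here is an unweighted mean of its records; weighting by respondent count would be a reasonable alternative when section sizes differ widely.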

The student Course Grade Data and Course Rating Data are included in the multi-dimensional model. The dimensions are the perspectives or entities with respect to which the institution keeps records. For example, the data warehouse keeps records of the Course Rating Data along the dimensions of time, course, and survey question (Figure 3). These dimensions make it possible to track the data from each perspective. Each dimension has an associated table, called a dimension table, which describes the dimension further.
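A star schema is a common way to realize such a model: one fact table holding measures and foreign keys, surrounded by dimension tables. The sketch below uses Python's built-in `sqlite3` as a stand-in for SQL Server; the table names, column names, and sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical star schema: three dimension tables and one fact table.
conn.executescript("""
CREATE TABLE dim_time (time_id INTEGER PRIMARY KEY, academic_year TEXT, semester TEXT);
CREATE TABLE dim_course (course_id INTEGER PRIMARY KEY, course_code TEXT, school TEXT);
CREATE TABLE dim_question (question_id INTEGER PRIMARY KEY, question_text TEXT, category TEXT);
CREATE TABLE fact_course_rating (
    time_id INTEGER REFERENCES dim_time(time_id),
    course_id INTEGER REFERENCES dim_course(course_id),
    question_id INTEGER REFERENCES dim_question(question_id),
    rating_sum REAL,        -- additive measure: sum of Likert scores
    response_count INTEGER  -- additive measure: number of responses
);
""")
conn.executemany("INSERT INTO dim_time VALUES (?, ?, ?)",
                 [(1, "2018/19", "Fall"), (2, "2018/19", "Spring")])
conn.executemany("INSERT INTO dim_course VALUES (?, ?, ?)",
                 [(1, "MUS150", "Music"), (2, "DAN210", "Dance")])
conn.executemany("INSERT INTO dim_question VALUES (?, ?, ?)",
                 [(1, "Q1", "Student Effort"), (2, "Q2", "Teacher Rating")])
conn.executemany("INSERT INTO fact_course_rating VALUES (?, ?, ?, ?, ?)", [
    (1, 1, 1, 80.0, 20),
    (1, 1, 2, 90.0, 20),
    (2, 2, 1, 45.0, 10),
    (2, 2, 2, 40.0, 10),
])

# Join the fact table to two dimensions and average by semester and SFQ category.
rows = conn.execute("""
SELECT t.semester, q.category, SUM(f.rating_sum) / SUM(f.response_count) AS mean_rating
FROM fact_course_rating AS f
JOIN dim_time AS t ON f.time_id = t.time_id
JOIN dim_question AS q ON f.question_id = q.question_id
GROUP BY t.semester, q.category
ORDER BY t.semester, q.category
""").fetchall()
for row in rows:
    print(row)
```

Storing the sum of scores and the response count, rather than a precomputed mean, keeps the measures additive, so averages stay correct when they are rolled up across courses or semesters.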

3.3. Data Warehouse Architecture

At semi-annual Academic Board Meetings, reports generated by this system are presented to senior management and high-profile faculty members (deans, directors, and program leaders). The reports convey complicated data and interpretations through visual graphs with simple lines, dots, and key points. Senior management members are pleased with the presentations and graphs and can take swift and effective policy actions. To support a broader understanding, the researchers break the system down below so that others interested in a similar approach can apply it.

To realize the multi-dimensional model in Figure 3, a data warehouse (also known as an enterprise data warehouse) is used: a system for reporting and data analysis that is a core component of business intelligence [25]. The data warehouse performs sophisticated data analysis for all kinds of users, especially senior management and decision-makers. It allows developers to create customized, complex queries that retrieve information from multiple data sources. It offers a systematic approach for people who want simple technology to access data for decision-making, especially when business users need to extract key information quickly from a huge amount of data in a given format, e.g., reports, grids, or charts.

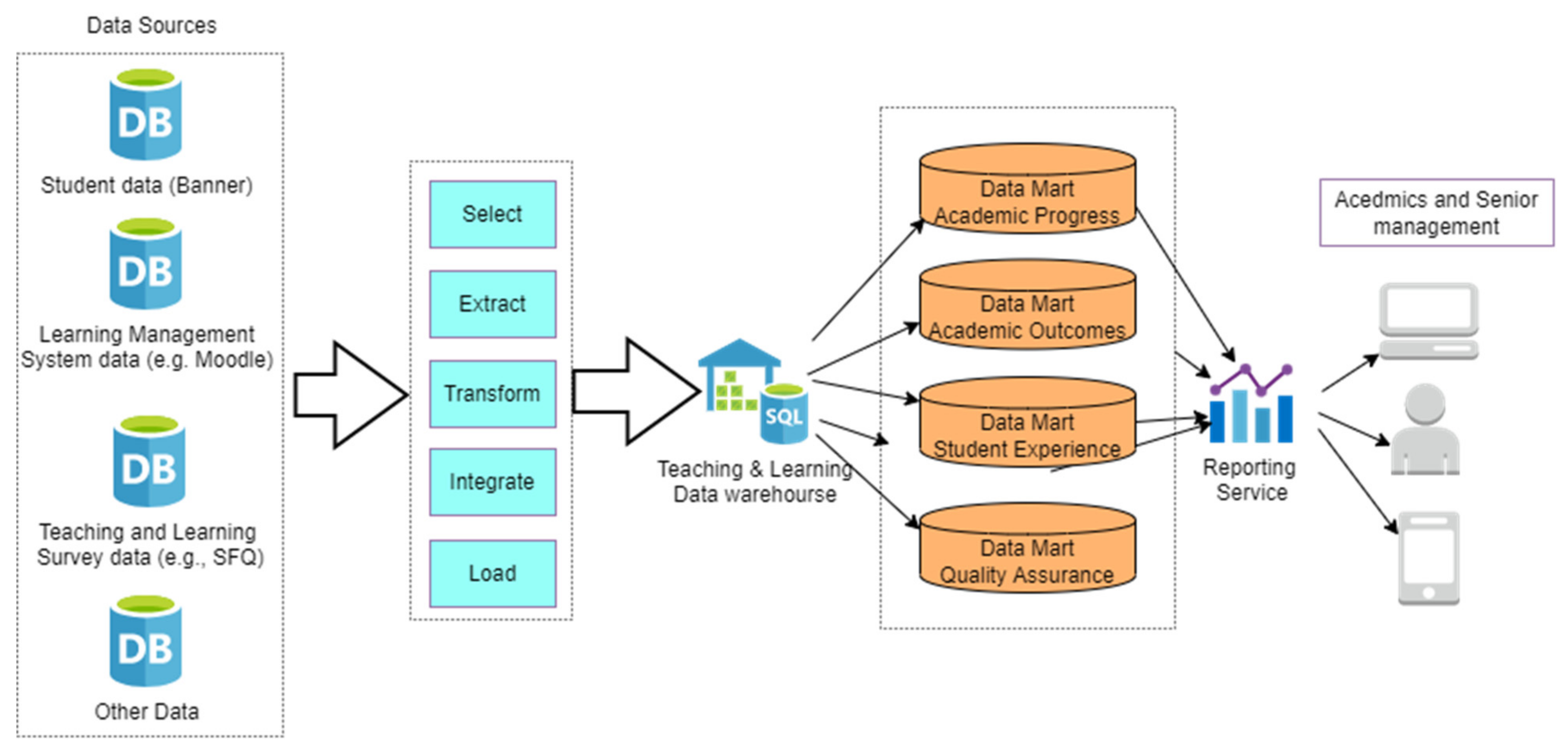

Figure 4 demonstrates the data flow of the survey data in the data warehouse.

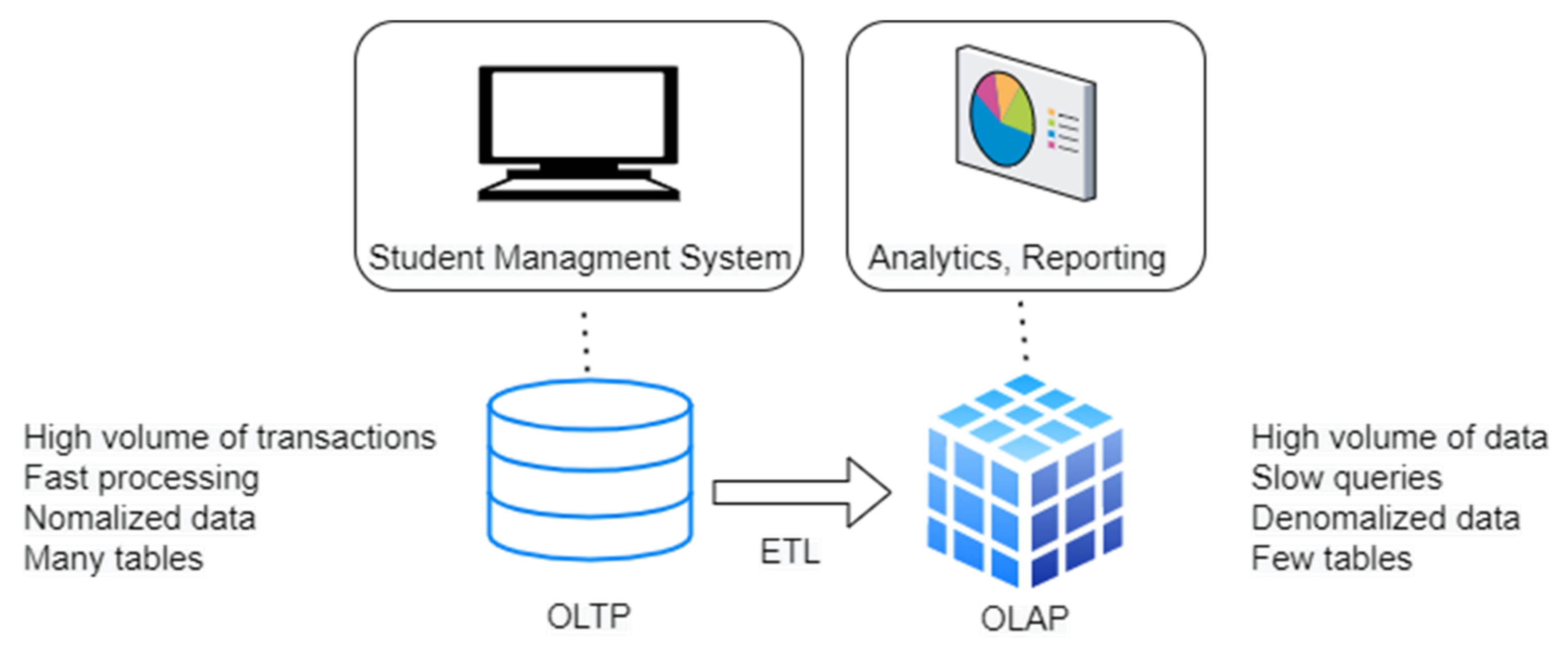

Compared with traditional operational databases, data warehouses are optimized for analytic access patterns. Two processing approaches are relevant here. Online transaction processing (OLTP), which underpins traditional operational databases, is a category of data processing focused on transaction-oriented tasks [26]. Online analytical processing (OLAP) is an approach to answering multi-dimensional analytical (MDA) queries swiftly and is the approach behind Business Intelligence (BI) applications. Given the technical complexity, and for a broader understanding, the researchers illustrate how this works with a few diagrams. The difference between OLAP and OLTP is described in Figure 5.

ETL stands for extract, transform, and load. In this study, the ETL tool, SQL Server Integration Services (SSIS), extracts data every night from different source database systems (e.g., student information, survey data), transforms the data in a staging area (format, structure), and then loads it into the final stage of the data warehouse system. This step is important not only because it feeds the detailed reports for semi-annual board meetings but also because it provides the most up-to-date information for senior faculty leaders, such as the dean of a faculty. For example, when the current semester was suspended due to the fifth wave of the COVID-19 pandemic, the academy called an emergency academic board meeting. To make a sound, evidence-based decision, the data warehouse system’s ability to generate the latest report proved extremely valuable. As shown in Figure 6, the raw data are aggregated from the operational databases by ETL and then loaded into the data warehouse, which divides them into data marts for the reporting service.
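The nightly extract-transform-load cycle can be illustrated in a few functions. This is a minimal Python sketch, not SSIS: the source rows, the five-point Likert coding, and the `fact_response` table are assumptions for illustration, and scheduling the nightly run is left to a job scheduler (not shown).

```python
import sqlite3

# Hypothetical survey export standing in for one source system; field names are illustrative.
SURVEY_SOURCE = [
    {"course": " mus150 ", "question": "Q1", "answer": "Agree"},
    {"course": "MUS150", "question": "Q1", "answer": "Strongly agree"},
    {"course": "MUS150", "question": "Q1", "answer": ""},  # blank answer, dropped in transform
]

# Assumed 5-point Likert coding.
LIKERT = {"strongly disagree": 1, "disagree": 2, "neutral": 3, "agree": 4, "strongly agree": 5}

def extract():
    """Pull raw rows from the source system (here, an in-memory list)."""
    return list(SURVEY_SOURCE)

def transform(raw_rows):
    """Standardize course codes, map Likert labels to scores, and drop unusable rows."""
    staged = []
    for row in raw_rows:
        score = LIKERT.get(row["answer"].strip().lower())
        if score is None:
            continue
        staged.append((row["course"].strip().upper(), row["question"], score))
    return staged

def load(conn, staged_rows):
    """Append cleaned rows to the warehouse fact table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS fact_response (course_code TEXT, question TEXT, score INTEGER)"
    )
    conn.executemany("INSERT INTO fact_response VALUES (?, ?, ?)", staged_rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(conn, transform(extract()))  # one nightly run
result = conn.execute(
    "SELECT course_code, AVG(score), COUNT(*) FROM fact_response GROUP BY course_code"
).fetchall()
print(result)  # [('MUS150', 4.5, 2)]
```

Cleaning in the transform step (trimming and upper-casing course codes here) is what lets rows from different source systems join on the same keys once loaded.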

After the ETL process, a data cube based on the multi-dimensional data model(s) is generated in the data warehouse. The cube allows data to be viewed and modeled in multiple dimensions; the fact table contains the measures derived from the raw data and foreign keys to the related dimension tables in the data warehouse.
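A data cube pre-aggregates the fact measures over every combination of dimensions, so any roll-up can be answered without rescanning the raw data. SQLite has no `GROUP BY CUBE`, so the sketch below computes the same cells in plain Python; the dimensions, measures, and rows are hypothetical.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical fact rows: three dimensions plus additive measures (score sum and count).
facts = [
    {"year": "2018/19", "school": "Music", "category": "Teacher Rating", "rating_sum": 90.0, "n": 20},
    {"year": "2018/19", "school": "Dance", "category": "Teacher Rating", "rating_sum": 40.0, "n": 10},
    {"year": "2018/19", "school": "Music", "category": "Student Effort", "rating_sum": 80.0, "n": 20},
]
DIMENSIONS = ("year", "school", "category")

def build_cube(rows, dims=DIMENSIONS):
    """Aggregate every subset of dims, as SQL's CUBE operator does; '*' marks a rolled-up dimension."""
    cells = defaultdict(lambda: [0.0, 0])  # key -> [rating_sum, n]
    for r in rows:
        for k in range(len(dims) + 1):
            for subset in combinations(dims, k):
                key = tuple(r[d] if d in subset else "*" for d in dims)
                cells[key][0] += r["rating_sum"]
                cells[key][1] += r["n"]
    # Convert the additive measures to a mean rating per cell.
    return {key: total / count for key, (total, count) in cells.items()}

cube = build_cube(facts)
print(cube[("2018/19", "*", "Teacher Rating")])  # (90 + 40) / 30
print(cube[("*", "Music", "*")])                 # (90 + 80) / 40
```

A production warehouse would usually let the OLAP engine maintain these aggregations; the point here is only the shape of the cube, one cell per combination of dimension values and roll-ups.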

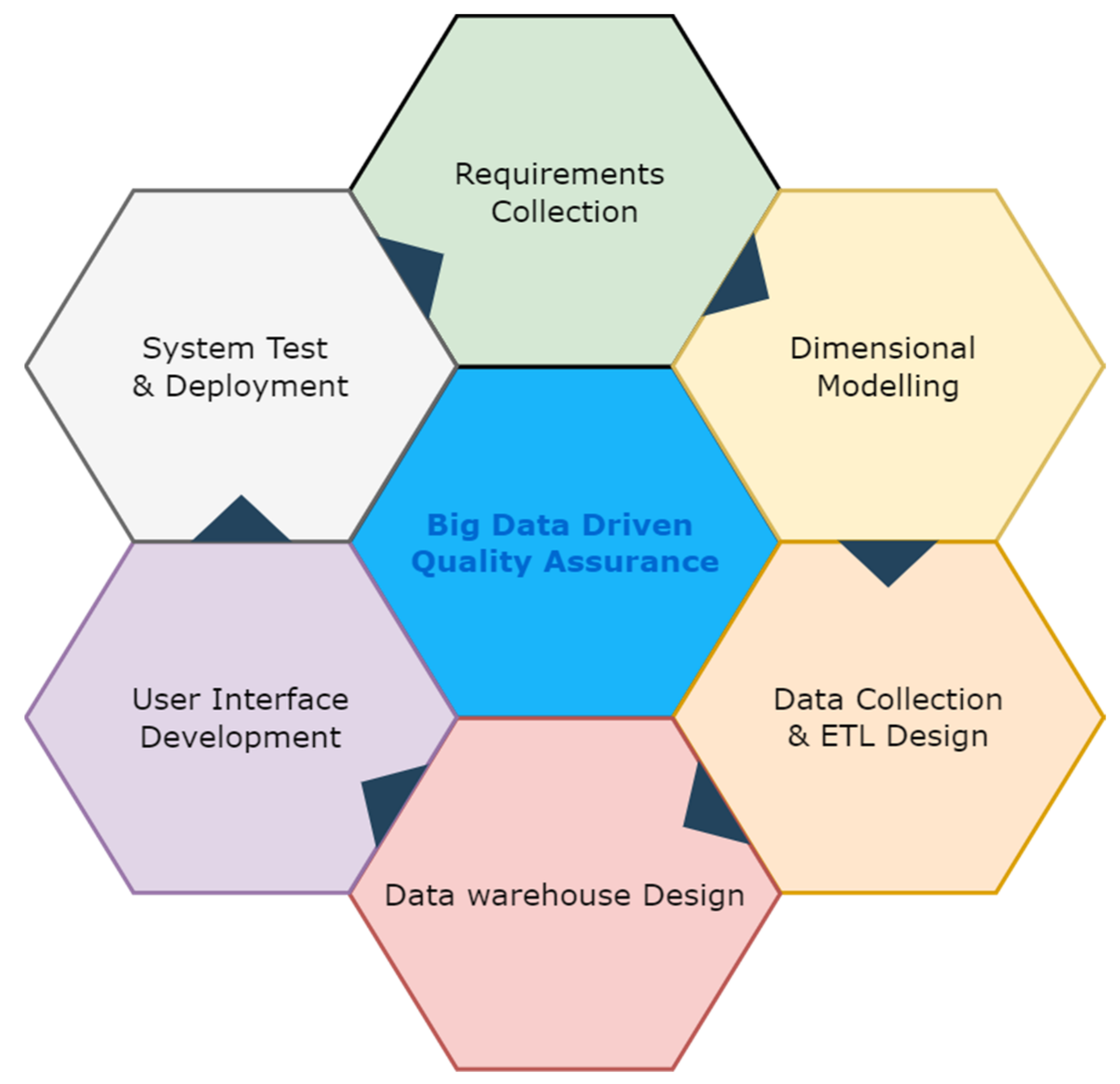

In this study, the development lifecycle (Figure 7) is an iterative approach based on six steps:

(1) Identify the system requirements and associated value with the program leaders and senior management. A broad spectrum of user requirements is collected from various academic departments/units for the desired aspects and reports. Meanwhile, the data sources (owner, availability, constraints, quality) are identified.

(2) A high-performing multi-dimensional model is designed and built in the database (SQL Server in this study) according to the user requirements collected in step (1).

(3) In liaison with the various data source owners, an IT team designs the database schema and the ETL program to extract, transform, and load the source data into the data warehouse system.

(4) Based on user requirements, the data marts and OLAP cubes, with complex measures and calculations, are built in the data warehouse.

(5) A dynamic, interactive user interface is developed in Power BI so that end-users can explore the data via mobile devices, PCs, or other applications (e.g., Excel, Power Apps).

(6) The developed solution is tested with end-users and deployed to the Power BI cloud.

3.4. User Interface

This data warehouse makes course evaluation data easy to collect, visualize, and interrogate for course coordinators and senior administrators. With the following user interfaces, relevant staff can take swift and necessary action.

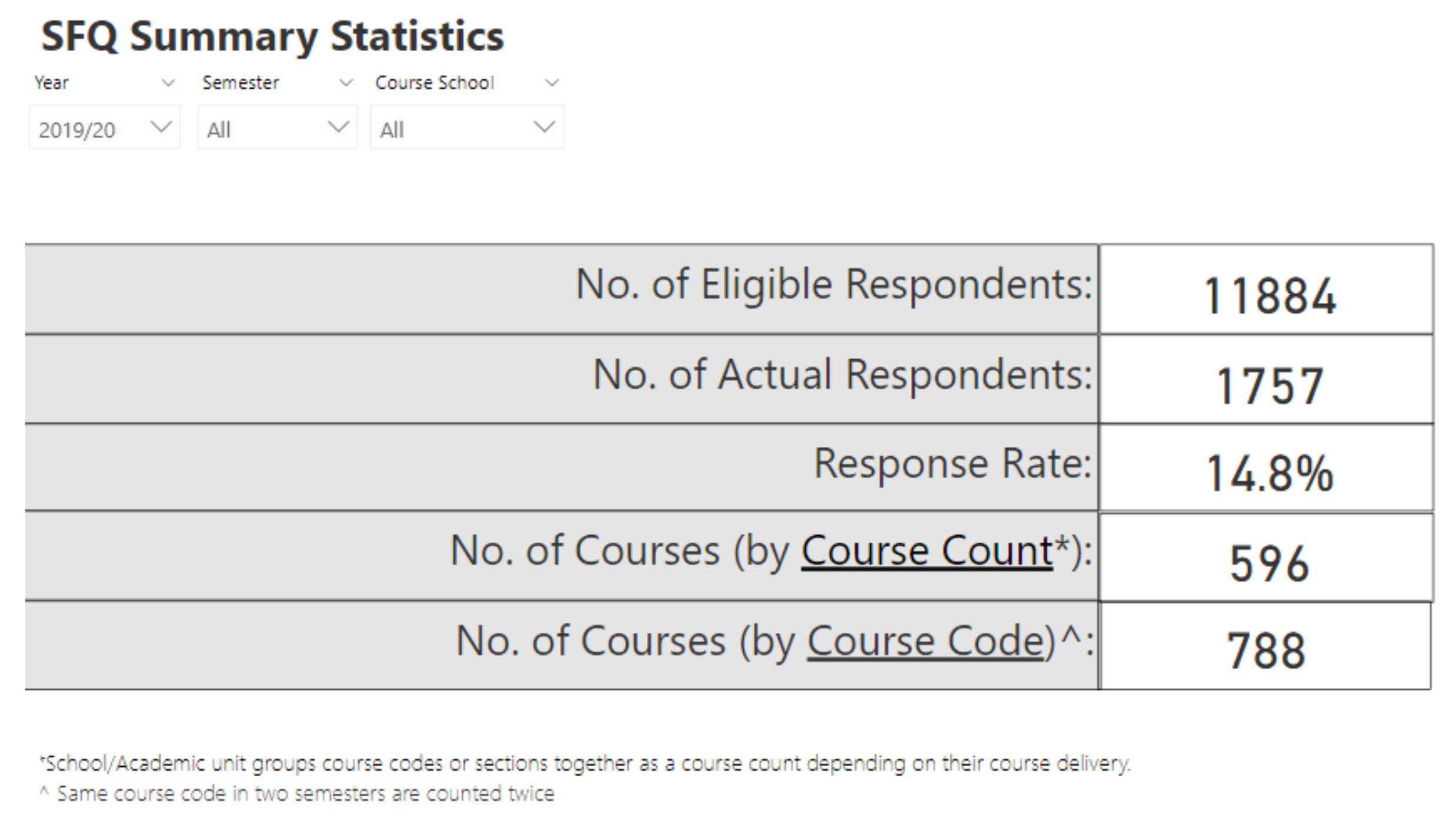

3.4.1. Summary Statistics

A summary statistics page presents the number of Eligible Respondents, the number of Actual Respondents, the Response Rate, and the number of Courses for teachers (Figure 8).
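Assuming the conventional definition (Response Rate = Actual Respondents / Eligible Respondents), the summary figures could be computed as below. The `CourseSurvey` record and the numbers are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class CourseSurvey:
    course_code: str  # hypothetical course code
    eligible: int     # students eligible to complete the SFQ
    actual: int       # students who actually responded

def summary(surveys):
    """Summary statistics with a pooled response rate (total actual / total eligible)."""
    eligible = sum(s.eligible for s in surveys)
    actual = sum(s.actual for s in surveys)
    return {
        "courses": len(surveys),
        "eligible_respondents": eligible,
        "actual_respondents": actual,
        "response_rate": round(100 * actual / eligible, 1) if eligible else 0.0,
    }

surveys = [CourseSurvey("MUS150", 40, 26), CourseSurvey("DAN210", 20, 12)]
print(summary(surveys))
# {'courses': 2, 'eligible_respondents': 60, 'actual_respondents': 38, 'response_rate': 63.3}
```

The pooled rate (38 of 60, 63.3%) weights courses by size; averaging the per-course rates (65% and 60%) would give 62.5% instead, so a dashboard should state which of the two it shows.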

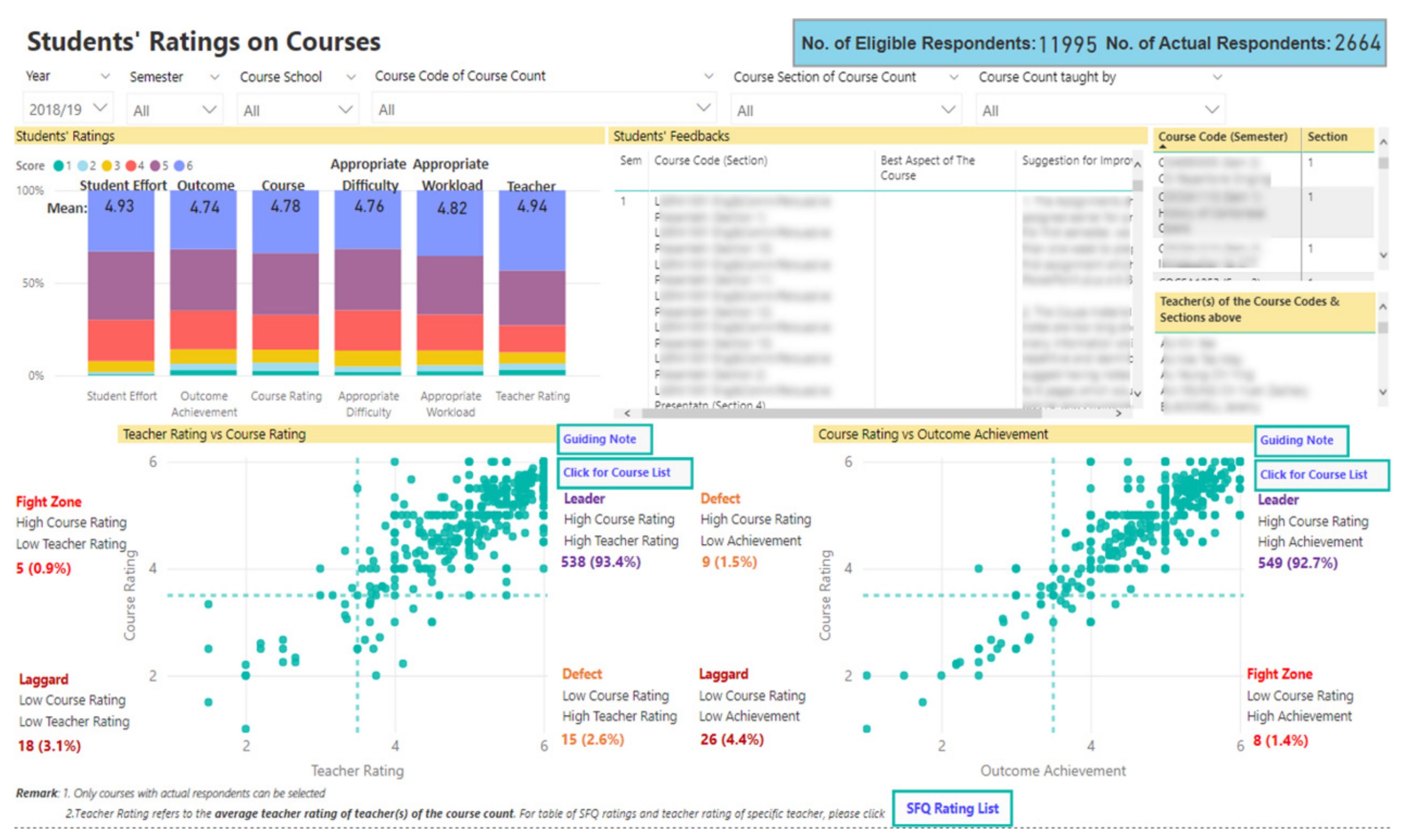

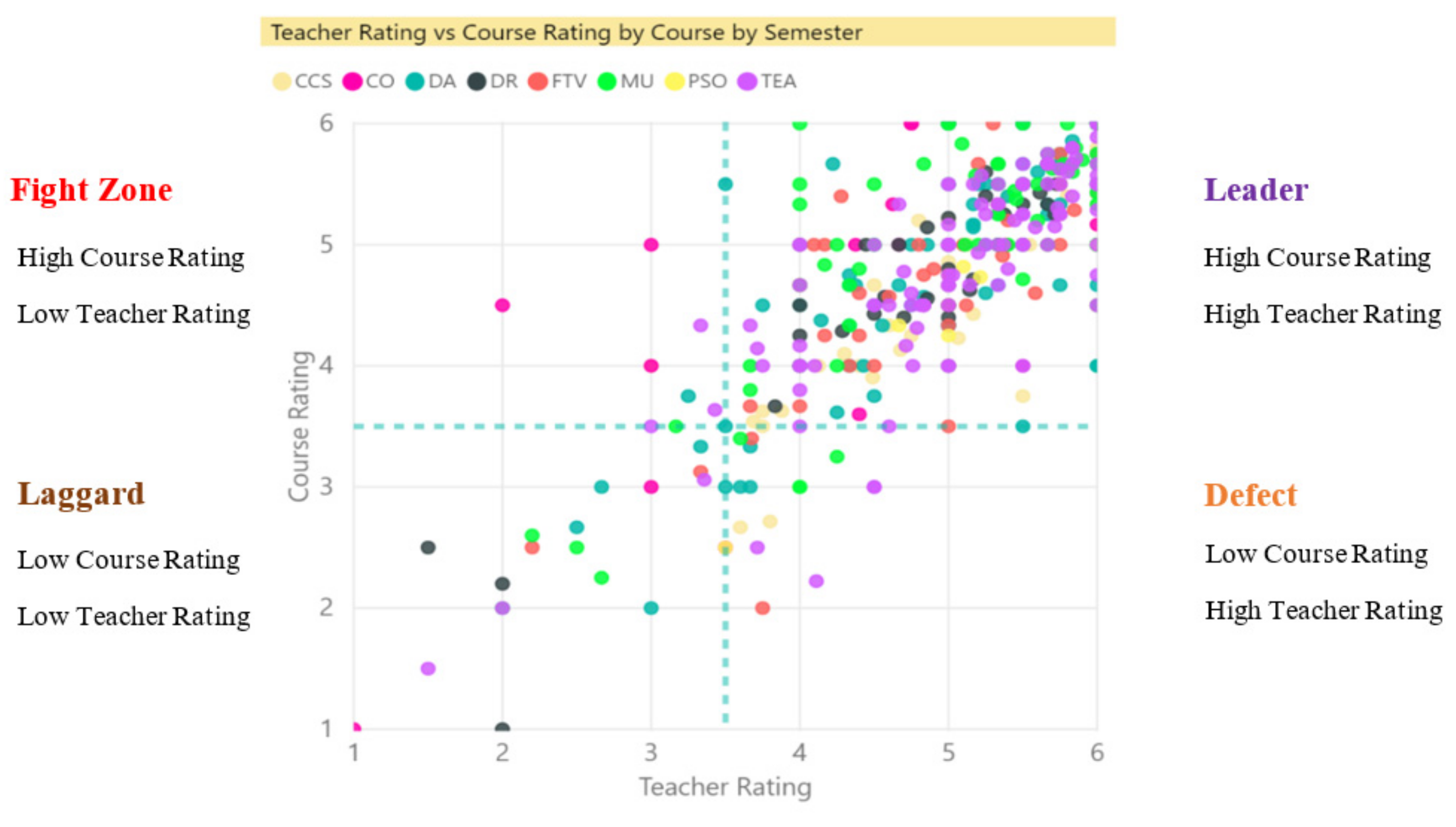

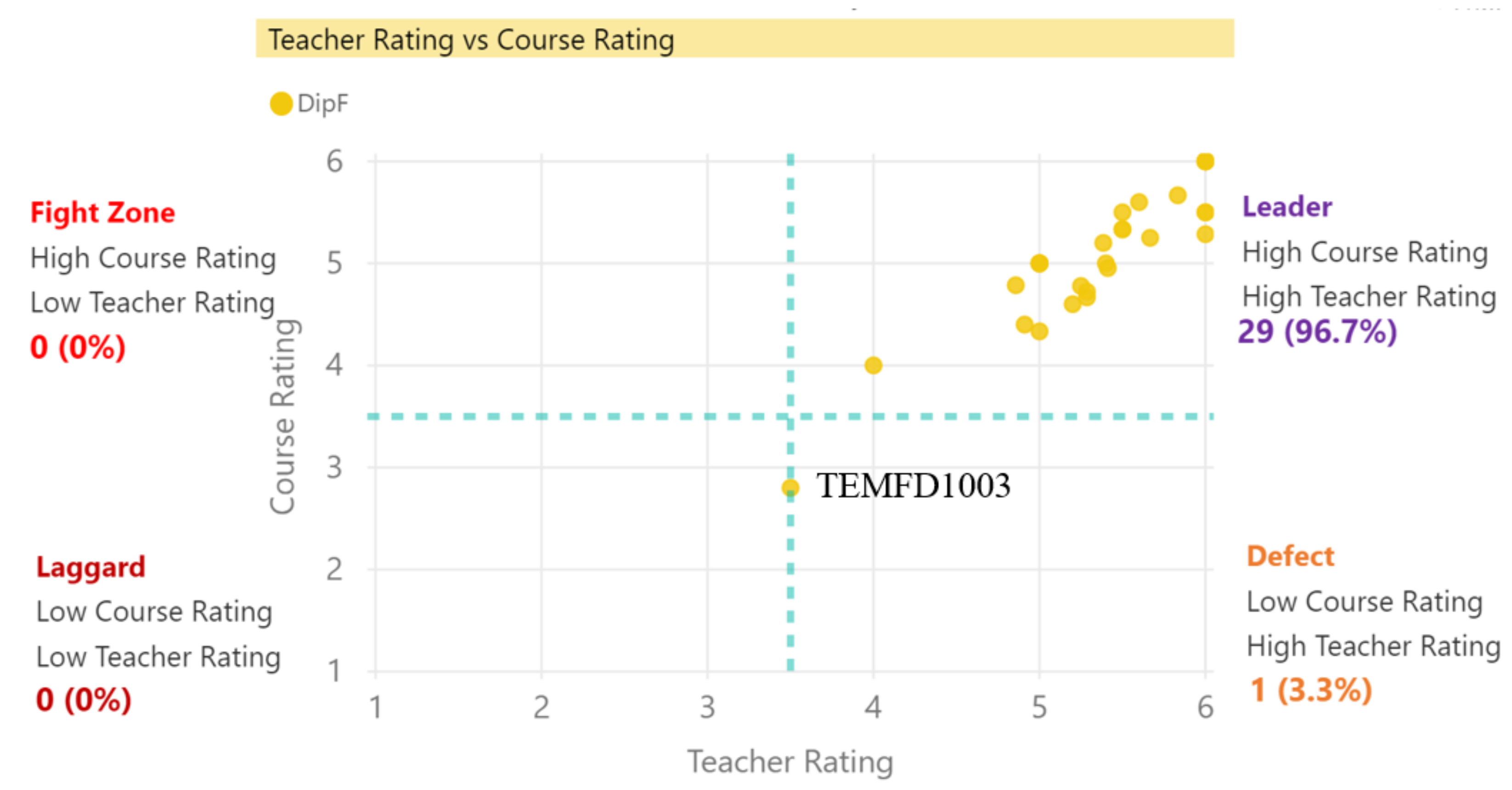

3.4.2. Students’ Ratings on Courses

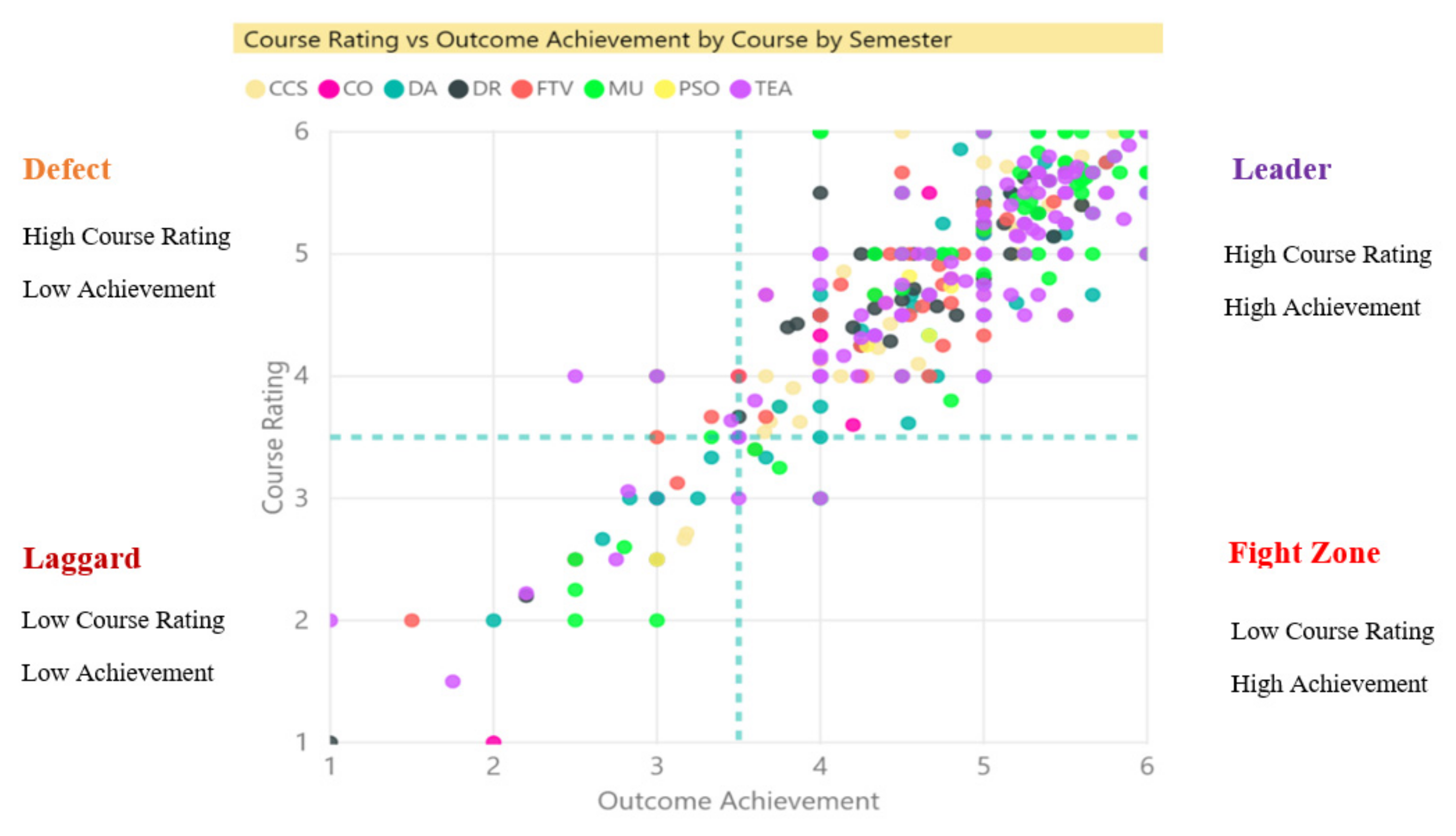

Students’ ratings on courses, their feedback, Teacher Rating vs. Course Rating, and Course Rating vs. Outcome Achievement are presented in this dashboard (Figure 9). This integrated view gives administrators quick access to all the critical data in one place for deeper understanding and more robust analysis. Users can view the rating data for different academic terms and schools by selecting the “Year”, “Semester”, and “Course School” slicers. The “Course Section of Course Count”, “Course Code”, and “Course Count taught by” slicers let users filter the data by the section number of a course, by a specific course, and by course teacher, to gain deeper insight into a particular course. Users can also click on the visuals to filter the data in the report, which supports swift and effective action where necessary.
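Slicers in Power BI are configured interactively rather than in code, but the filtering logic they apply can be sketched as follows. The field names and rows are hypothetical; each slicer is modeled as a set of selected values, with `None` standing for "select all".

```python
# Hypothetical report rows; field names loosely mirror the slicers described above.
ROWS = [
    {"year": "2018/19", "semester": "Fall", "school": "Music", "course_code": "MUS150", "course_rating": 4.2},
    {"year": "2018/19", "semester": "Spring", "school": "Music", "course_code": "MUS220", "course_rating": 3.6},
    {"year": "2018/19", "semester": "Spring", "school": "Dance", "course_code": "DAN210", "course_rating": 4.5},
]

def apply_slicers(rows, **selections):
    """Keep rows that match every slicer; a slicer set to None means 'select all'."""
    return [
        row for row in rows
        if all(chosen is None or row[field] in chosen for field, chosen in selections.items())
    ]

spring = apply_slicers(ROWS, year={"2018/19"}, semester={"Spring"}, school=None)
print([r["course_code"] for r in spring])  # ['MUS220', 'DAN210']

# Clicking a visual behaves like one more selection applied on top of the slicers.
dance_spring = apply_slicers(spring, school={"Dance"})
print([r["course_code"] for r in dance_spring])  # ['DAN210']
```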

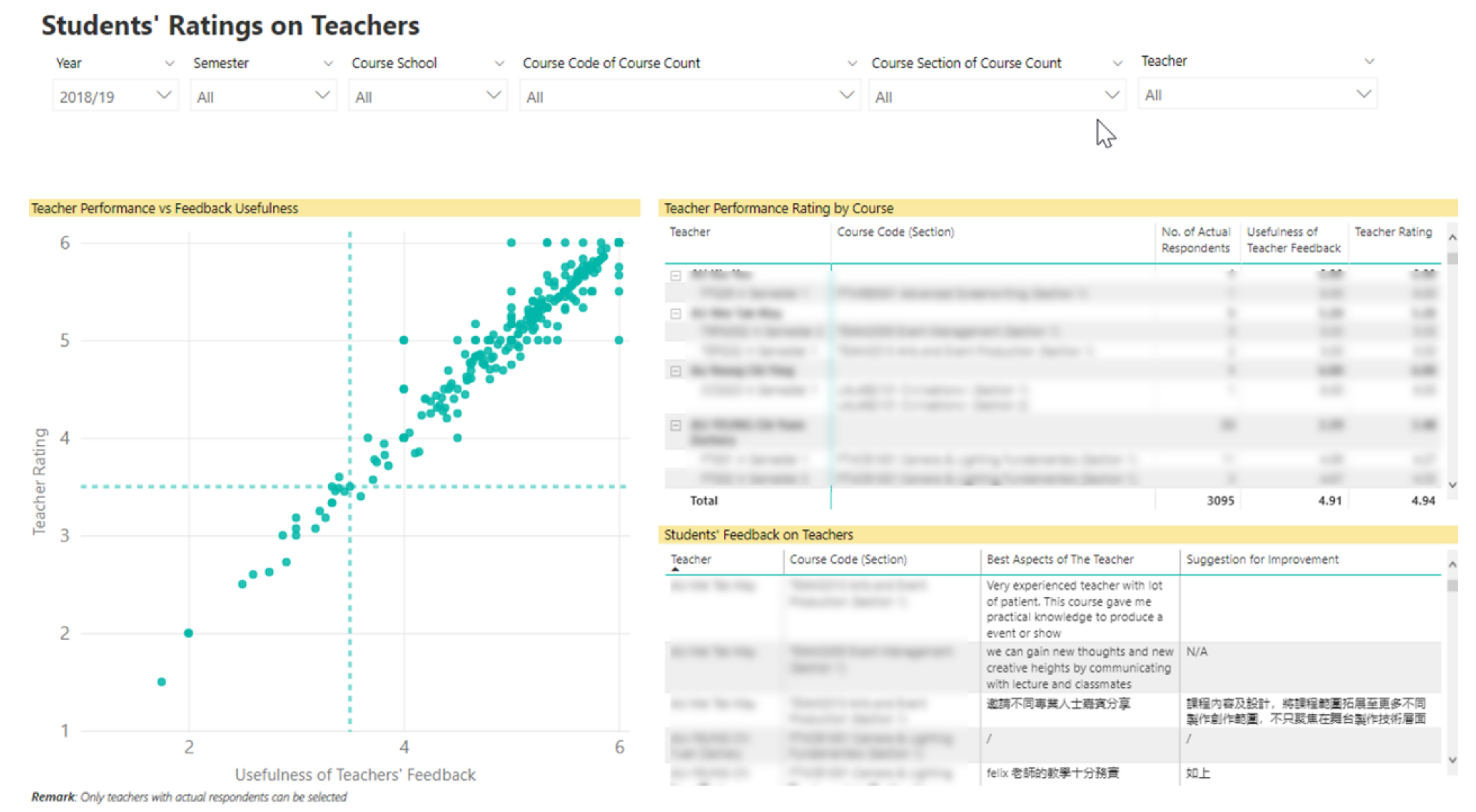

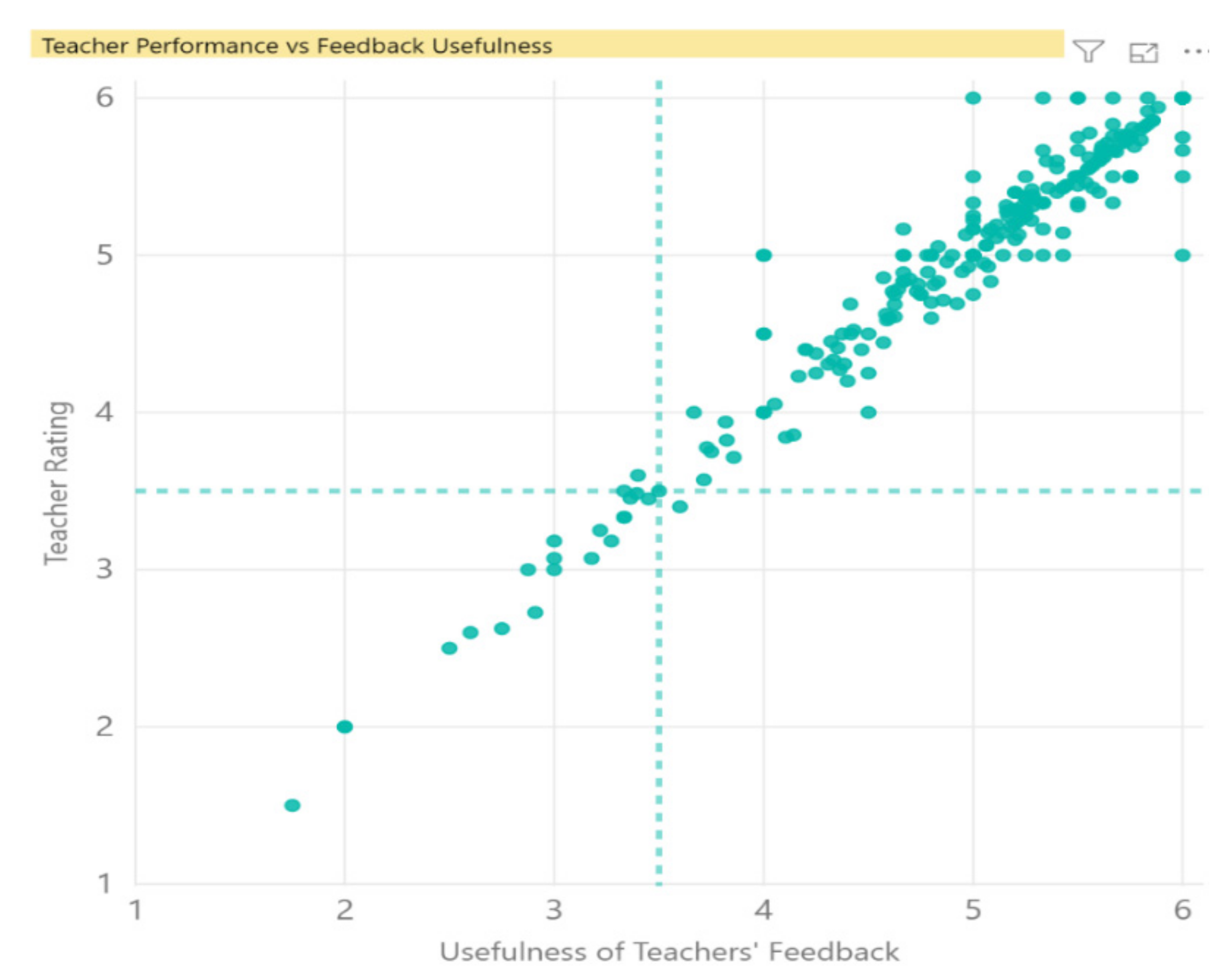

3.4.3. Students’ Ratings on Teachers

Teacher Ratings vs. feedback usefulness, together with students’ feedback, are presented in this dashboard (Figure 10), which highlights critical information (ratings and feedback). Through color coding, summary tables, and detailed sub-score reports, administrators can quickly identify trends and use the data to plan differentiated interventions and enrichment activities.
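Color coding of this kind can be expressed as a mapping from each score to a band. The cut-offs below (green at 4.0 or above, amber at 3.5 or above, red otherwise, on a 5-point scale) and the teacher labels are hypothetical; the study does not publish its bands.

```python
# Hypothetical color bands on a 5-point scale, checked from highest lower bound down.
BANDS = [(4.0, "green"), (3.5, "amber"), (float("-inf"), "red")]

def color_for(score):
    """Return the first band whose lower bound the score reaches."""
    for lower_bound, color in BANDS:
        if score >= lower_bound:
            return color

ratings = {"T1": 4.6, "T2": 3.7, "T3": 3.1}  # illustrative Teacher Ratings
print({teacher: color_for(score) for teacher, score in ratings.items()})
# {'T1': 'green', 'T2': 'amber', 'T3': 'red'}
```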

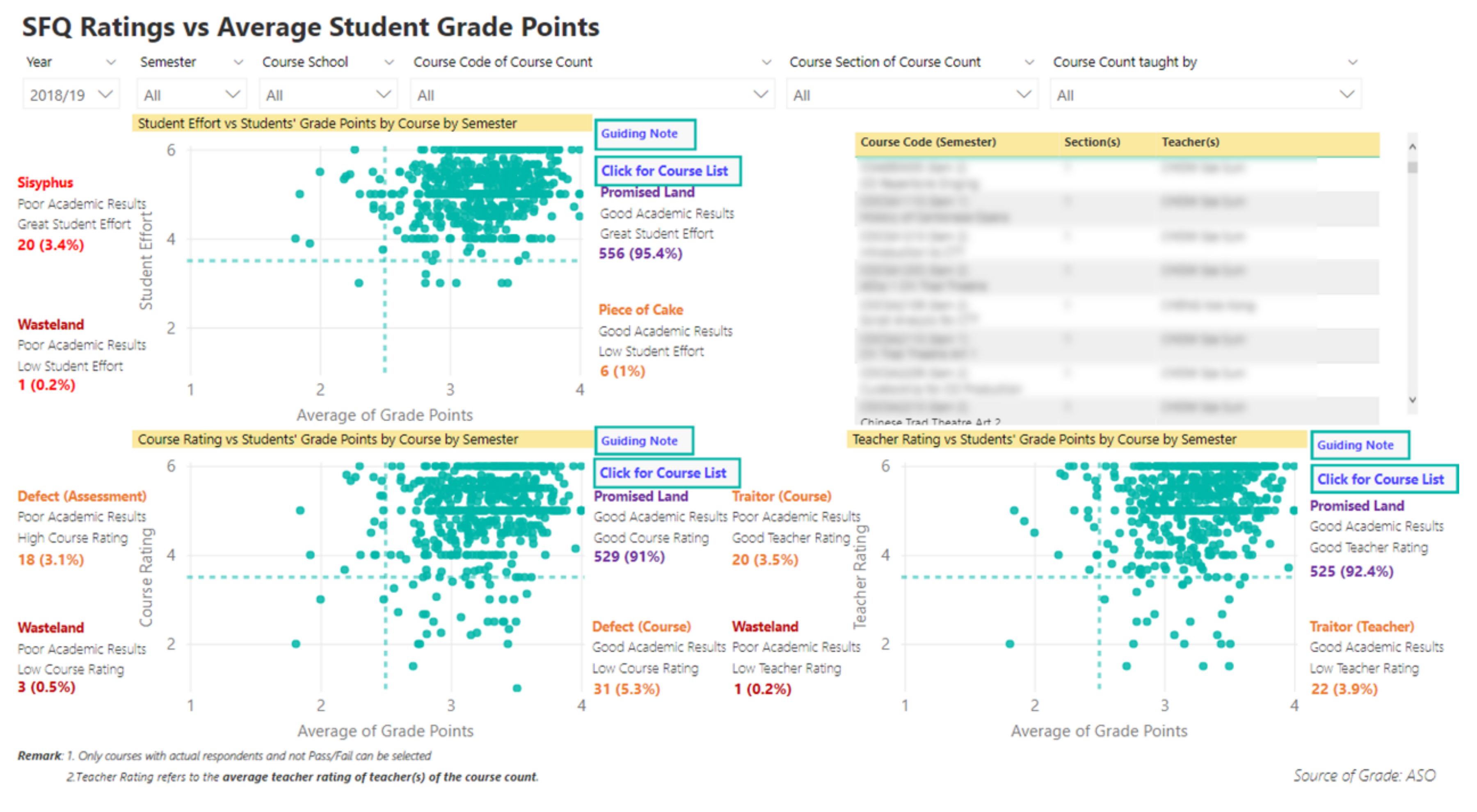

3.4.4. SFQ Ratings vs. Average Student Grade Points

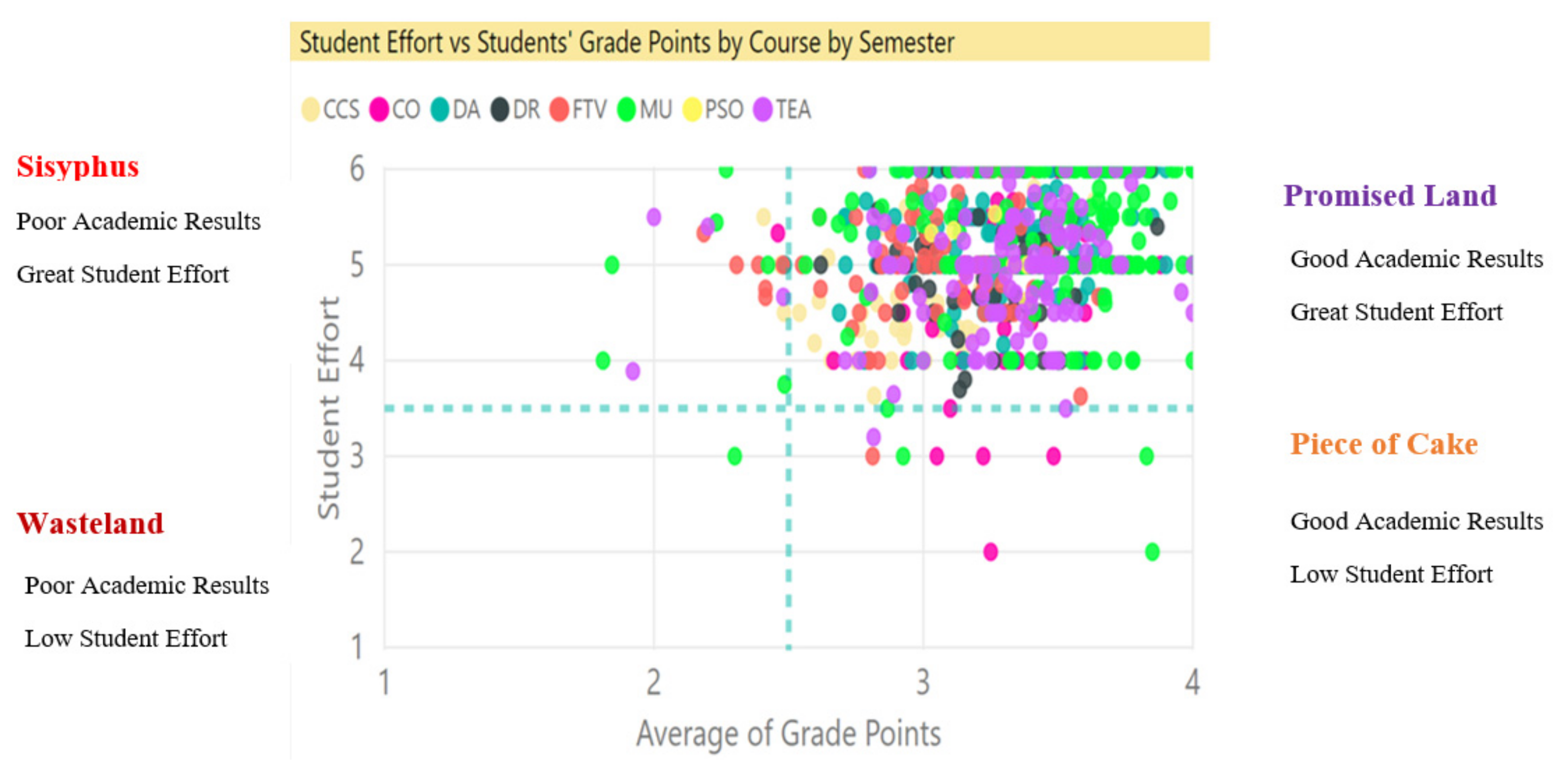

This dashboard presents Student Effort vs. Students’ Grade, Course Rating vs. Students’ Grade, and Teacher Rating vs. Students’ Grade, each by course and by semester (Figure 11).
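Scatter plots like these can be summarized with a correlation coefficient. The study does not report one, so the sketch below is only an illustration on made-up course-level means, computing Pearson's r directly from its definition.

```python
from math import sqrt
from statistics import mean

# Illustrative course-level means, not data from the study.
student_effort = [3.5, 4.0, 4.2, 4.6]  # mean SFQ Student Effort score per course
grade_points = [2.8, 3.1, 3.3, 3.6]    # mean grade point per course

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

r = pearson(student_effort, grade_points)
print(round(r, 3))
```

On Python 3.10 or later, `statistics.correlation` returns the same coefficient. A strong course-level correlation would not by itself show that effort causes higher grades.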

5. Conclusions and Discussion

This study applies multi-dimensional lenses to analyze the large volume of course evaluation data, a key aspect of teaching and learning quality assurance in higher education. Based on the data warehouse system, a novel analytical framework is proposed for categorizing courses into domains (Student Effort, Outcome Achievement, Course Rating, and Teacher Rating) to address domain-specific course issues, which helps senior administrators and program leaders quickly identify courses that need closer scrutiny. This streamlined system helps ascertain whether course enhancement measures are warranted and in which areas.

This novel analytical framework provides efficient assessment and leads to effective, solution-based actions. It enables policymakers and senior management to see the big picture and gain insight from a large volume of student course and teacher evaluation data, covering teaching and learning activities, assessment, and performance quality, among other domains. For instance, applying this framework generated recommendations to pair teachers in the “Promised Land” domain with those struggling in the “Wasteland” and “Traitor” domains in a peer mentoring program. Furthermore, the scales of the four domains in the five matrices of the framework need to be customized for different course types (required/elective and online/face-to-face).
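The domain classification and the mentoring recommendation can be sketched as a two-by-two matrix over two ratings followed by a simple pairing rule. The study's own metaphors (such as “Promised Land”, “Wasteland”, and “Traitor”) and their thresholds are not reproduced here, so the neutral quadrant labels, the 4.0 cut-offs, the choice of Course Rating vs. Teacher Rating as axes, and the round-robin pairing are all hypothetical.

```python
# Hypothetical cut-offs; the paper says domain scales must be customized per course type.
def classify(course_rating, teacher_rating, course_cut=4.0, teacher_cut=4.0):
    """Place a course in one quadrant of a Course Rating vs. Teacher Rating matrix."""
    high_course = course_rating >= course_cut
    high_teacher = teacher_rating >= teacher_cut
    if high_course and high_teacher:
        return "high-high"
    if high_course:
        return "high-low"  # course rated well, teacher rated low
    if high_teacher:
        return "low-high"  # teacher rated well, course rated low
    return "low-low"

# Illustrative data: course code -> (teacher, Course Rating, Teacher Rating).
COURSES = {
    "MUS150": ("T1", 4.5, 4.6),
    "MUS220": ("T2", 3.2, 3.0),
    "DAN210": ("T3", 4.3, 3.4),
    "THE110": ("T4", 3.5, 4.2),
}

def mentor_pairs(courses, domains, mentor_domain="high-high", mentee_domains=("low-low", "high-low")):
    """Pair teachers from the strongest quadrant with teachers from struggling ones, round-robin."""
    mentors = [courses[c][0] for c, d in domains.items() if d == mentor_domain]
    mentees = [courses[c][0] for c, d in domains.items() if d in mentee_domains]
    if not mentors:
        return []
    return [(mentors[i % len(mentors)], mentee) for i, mentee in enumerate(mentees)]

domains = {code: classify(cr, tr) for code, (_, cr, tr) in COURSES.items()}
pairs = mentor_pairs(COURSES, domains)
print(domains)
print(pairs)  # [('T1', 'T2'), ('T1', 'T3')]
```

Because the domain scales must be customized per course type, the cut-offs are parameters rather than constants, so separate thresholds could be passed for required, elective, online, or face-to-face courses.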

Overall, this study suggests that more performing arts institutions should use this novel analytical framework as a diagnostic tool to oversee their course portfolios efficiently and effectively, identify courses that merit special attention, and take enhancement measures accordingly. In addition, policymakers and senior management can use the framework to adjust education policies quickly and to develop concrete, multi-faceted feedback and professional development strategies. Based on this framework, they can also offer constructive advice to academic programs and associated staff during program and teacher performance reviews.

Through color coding, summary tables, and detailed sub-score reports, this framework creates an efficient workflow to identify areas of concern, devise solution-based enhancement strategies, provide evidence-based advice, monitor program and staff effectiveness, and promote teaching excellence. In addition, the framework could readily be implemented in other disciplines and subject areas at other tertiary institutions for similar benefits.