SLLB-DEVS: An Approach for DEVS Based Modeling of Semiconductor Lithography Load Balance

Abstract

1. Introduction

2. Methodology

2.1. The Legacy Load Balancer Model

2.2. Load Balancer Policy

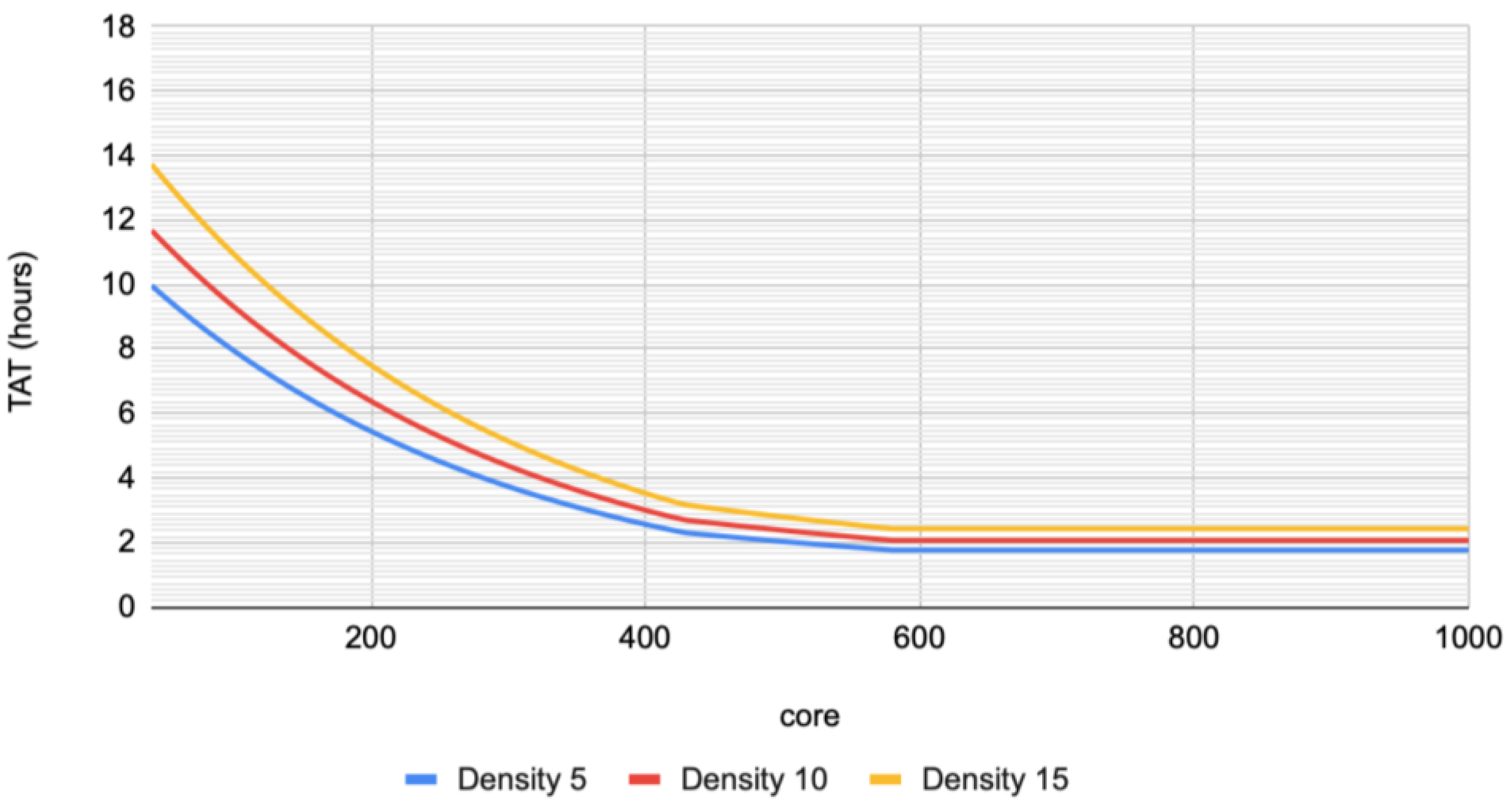

- The scalability problem: Most computation workloads are processed in parallel on multiple computing nodes for speed. However, performance does not increase linearly with the number of computing nodes: beyond the saturation core point, adding nodes yields little further gain, and that point is easily reached. An E-beam job has the same limitation as any other computation workload, so allocating computing nodes above the saturation core point wastes computing resources [16].

- The overspecification problem: If the load balancer operates ineffectively, the architecture designer tends to compensate by provisioning more hardware power than the necessary performance calls for. This has the advantage of providing a quicker response than the user expects. However, the actual usage time of the system is then very low compared to the total time, which incurs unnecessary costs.
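The two problems above can be made concrete with a small sketch. It caps a job's core allocation at its saturation core point and computes the fraction of time a system is actually in use. The function names, the saturation point of 500 cores, and the sample numbers are illustrative assumptions, not values from the study.

```python
def allocate_cores(requested: int, idle: int, saturation_point: int) -> int:
    """Grant cores to a job without exceeding its saturation core point.

    Hypothetical helper: cores beyond `saturation_point` are assumed to add
    no speedup, so granting them would only waste resources.
    """
    if min(requested, idle, saturation_point) < 0:
        raise ValueError("core counts must be non-negative")
    return min(requested, idle, saturation_point)


def utilization(busy_hours: float, total_hours: float) -> float:
    """Fraction of the total time during which the system was in use."""
    if total_hours <= 0:
        raise ValueError("total_hours must be positive")
    return busy_hours / total_hours


# Illustrative numbers only (assumptions, not measurements).
granted = allocate_cores(requested=2000, idle=1500, saturation_point=500)
# Cores that a balancer unaware of the saturation point would have granted in excess.
wasted = min(2000, 1500) - granted
# An overspecified system that is busy 6 hours out of 24.
low_use = utilization(busy_hours=6.0, total_hours=24.0)
```

In this example the job receives 500 cores instead of 1500, and the overspecified system is busy for only a quarter of the day.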

2.2.1. The Saturation Point Policy

2.2.2. The Mission Time Policy

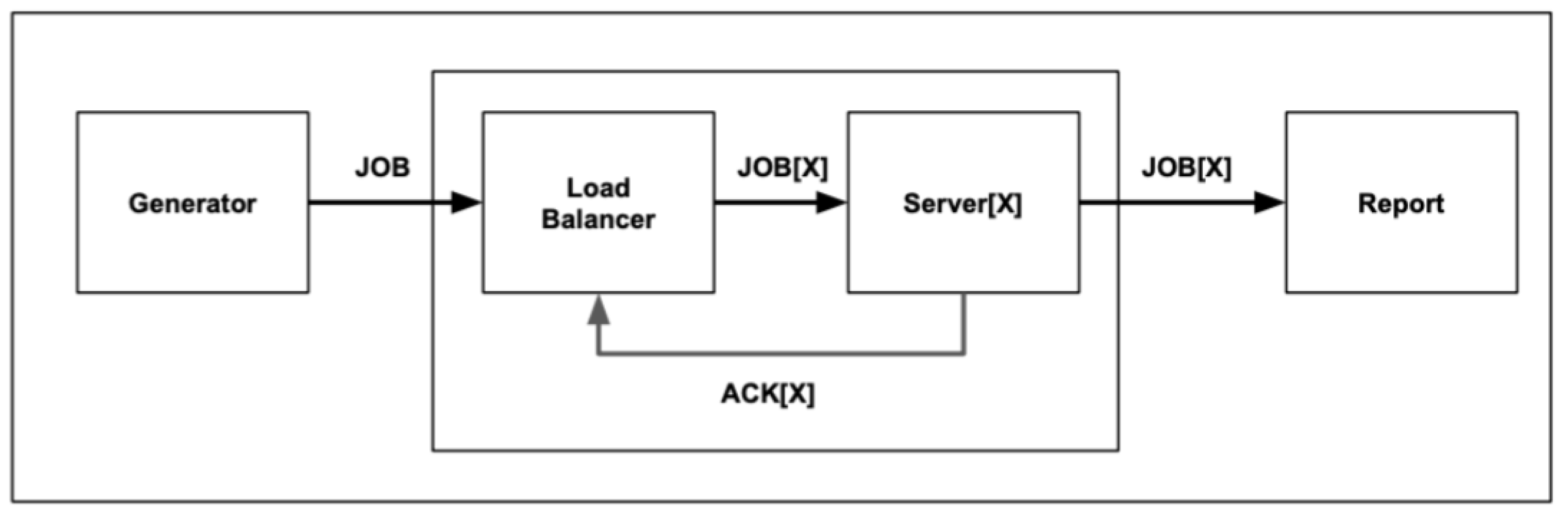

2.3. Default DEVS Model Design

- The generator: The generator creates E-beam jobs according to the distribution of jobs generated in the E-beam cluster. Each E-beam job carries a density attribute whose value follows a Gaussian distribution. The load balancing simulation experiment applies an empirical model in which higher-density jobs require a higher TAT; drawing each job's density from a Gaussian distribution facilitates the delay analysis in the experiment.

- The load balancer: The load balancer queues incoming jobs and monitors which servers are idle. It then allocates each queued job to an appropriate server.

- The server: The server performs the actual processing of the incoming job. The processing time follows a Gaussian distribution that depends on the number of allocated cores and the density of the job. When the job is finished and the server becomes idle, it reports its current status to the load balancer.

- The report: The report collects the finished jobs and gathers statistical information such as the TAT, the wait time, and the time to job completion across all assigned servers.
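The interplay of the four components above can be sketched as a small event-driven simulation. This is a minimal illustration in plain Python rather than a DEVS framework, and it is not the authors' implementation: the class and function names, the FIFO dispatch rule, the arrival interval, and the formula for the mean processing time are all assumptions chosen for the example.

```python
import heapq
import random
from dataclasses import dataclass


@dataclass
class Job:
    job_id: int
    density: float
    arrival: float
    start: float = 0.0
    finish: float = 0.0


def generate_jobs(n, rng, mean_density=40.0, sd_density=10.0, gap=1.0):
    """Generator: one job every `gap` hours, density drawn from a Gaussian."""
    return [Job(i, max(1.0, rng.gauss(mean_density, sd_density)), i * gap)
            for i in range(n)]


def service_time(job, cores, rng):
    """Server: Gaussian processing time. The mean grows with density and
    shrinks with allocated cores (a hypothetical empirical model)."""
    mean = job.density * 10.0 / cores
    return max(0.01, rng.gauss(mean, 0.1 * mean))


def simulate(jobs, n_servers=2, cores_per_server=100, seed=0):
    """Load balancer: FIFO queue, dispatching to whichever server is idle."""
    rng = random.Random(seed)
    # Event tuples: (time, kind, job_id, server); kind 0 = arrival, 1 = completion.
    events = [(j.arrival, 0, j.job_id, None) for j in jobs]
    heapq.heapify(events)
    by_id = {j.job_id: j for j in jobs}
    queue, idle, done = [], list(range(n_servers)), []
    while events:
        now, kind, job_id, server = heapq.heappop(events)
        job = by_id[job_id]
        if kind == 0:
            queue.append(job)
        else:
            # The server reports that it is idle again.
            job.finish = now
            done.append(job)
            idle.append(server)
        while queue and idle:
            # Allocate queued jobs to idle servers.
            nxt, srv = queue.pop(0), idle.pop(0)
            nxt.start = now
            end = now + service_time(nxt, cores_per_server, rng)
            heapq.heappush(events, (end, 1, nxt.job_id, srv))
    return done


def report(done):
    """Report: wait time and turnaround time (TAT) statistics."""
    waits = [j.start - j.arrival for j in done]
    tats = [j.finish - j.arrival for j in done]
    return {
        "jobs": len(done),
        "mean_wait": sum(waits) / len(waits),
        "mean_tat": sum(tats) / len(tats),
        "makespan": max(j.finish for j in done),
    }


done = simulate(generate_jobs(20, random.Random(42)))
stats = report(done)
```

With the assumed parameters, jobs arrive faster than two servers can process them, so the queue grows and the mean wait time becomes visible in `stats`.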

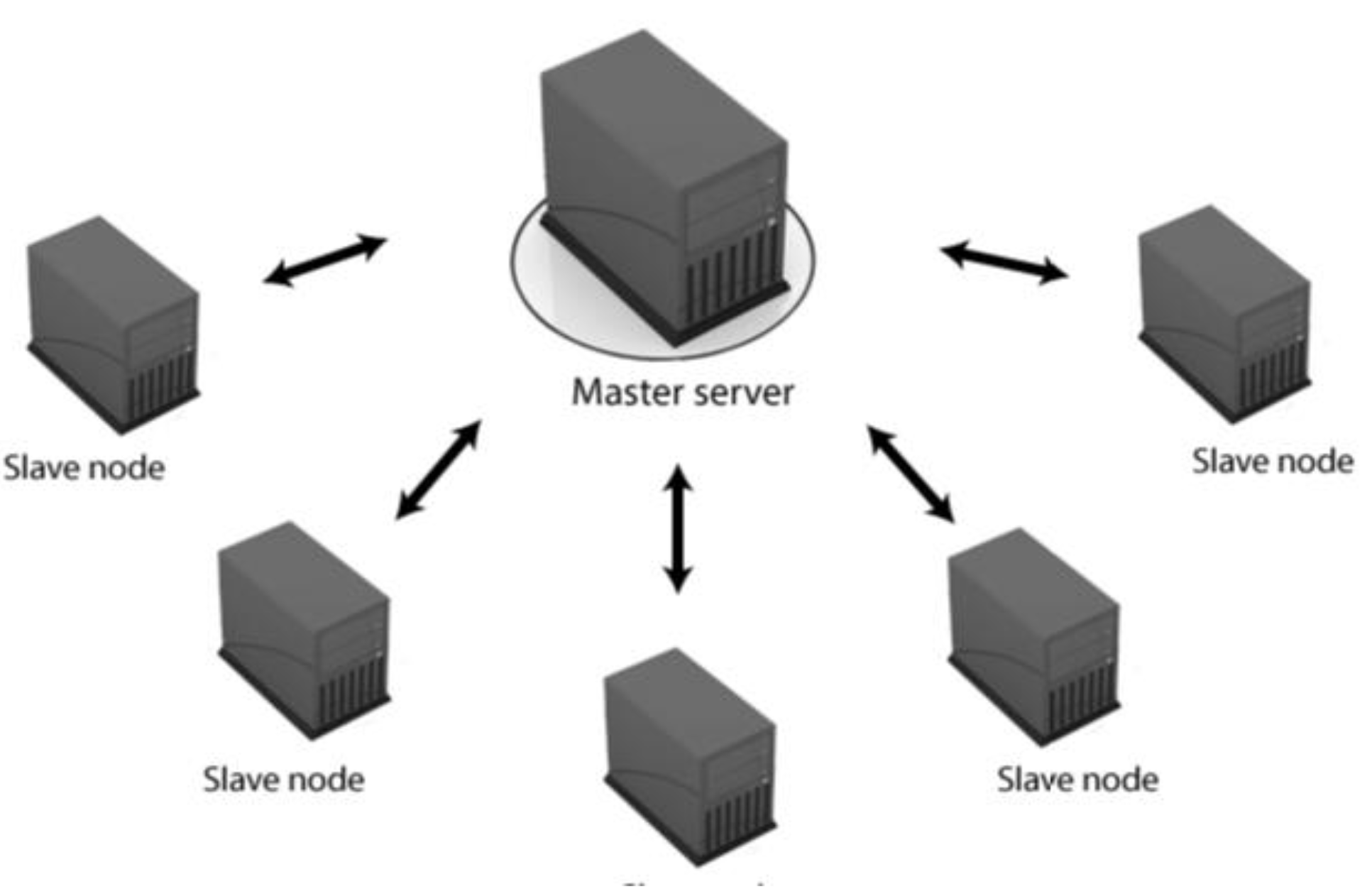

2.4. The Simulation Architecture

- First, various tasks with different characteristics are created and submitted to the load balancer.

- The load balancer schedules a work order based on the current work, the idle resources of the server cluster, and the saturation point model.

- When the idle resources of the server cluster satisfy the scheduling condition, the next task is dispatched to the server cluster.

- The server cluster simulates the corresponding task and records the result in the form of a database or file.

- The result is analyzed using an analyzing tool.
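The last two steps above, recording results to a file and analyzing them, might look like the following sketch. The column names, the sample rows, and the use of CSV are assumptions made for illustration; an in-memory buffer stands in for the result file.

```python
import csv
import io
import statistics

FIELDS = ["job_id", "wait_hours", "tat_hours"]  # hypothetical result schema


def record_results(rows, handle):
    """Write one row per finished job to a CSV file-like object."""
    writer = csv.DictWriter(handle, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)


def analyze(handle):
    """Read the recorded results back and summarize them."""
    rows = list(csv.DictReader(handle))
    tats = [float(r["tat_hours"]) for r in rows]
    waits = [float(r["wait_hours"]) for r in rows]
    return {
        "jobs": len(rows),
        "mean_tat": statistics.mean(tats),
        "max_wait": max(waits),
    }


# Made-up sample results, not data from the experiments.
sample = [
    {"job_id": 1, "wait_hours": 0.0, "tat_hours": 3.0},
    {"job_id": 2, "wait_hours": 1.5, "tat_hours": 6.0},
    {"job_id": 3, "wait_hours": 2.5, "tat_hours": 9.0},
]
buffer = io.StringIO()
record_results(sample, buffer)
buffer.seek(0)
summary = analyze(buffer)
```

Keeping the recording and analysis steps separate means the same result file can be examined later with a different analysis tool.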

3. Results and Discussion

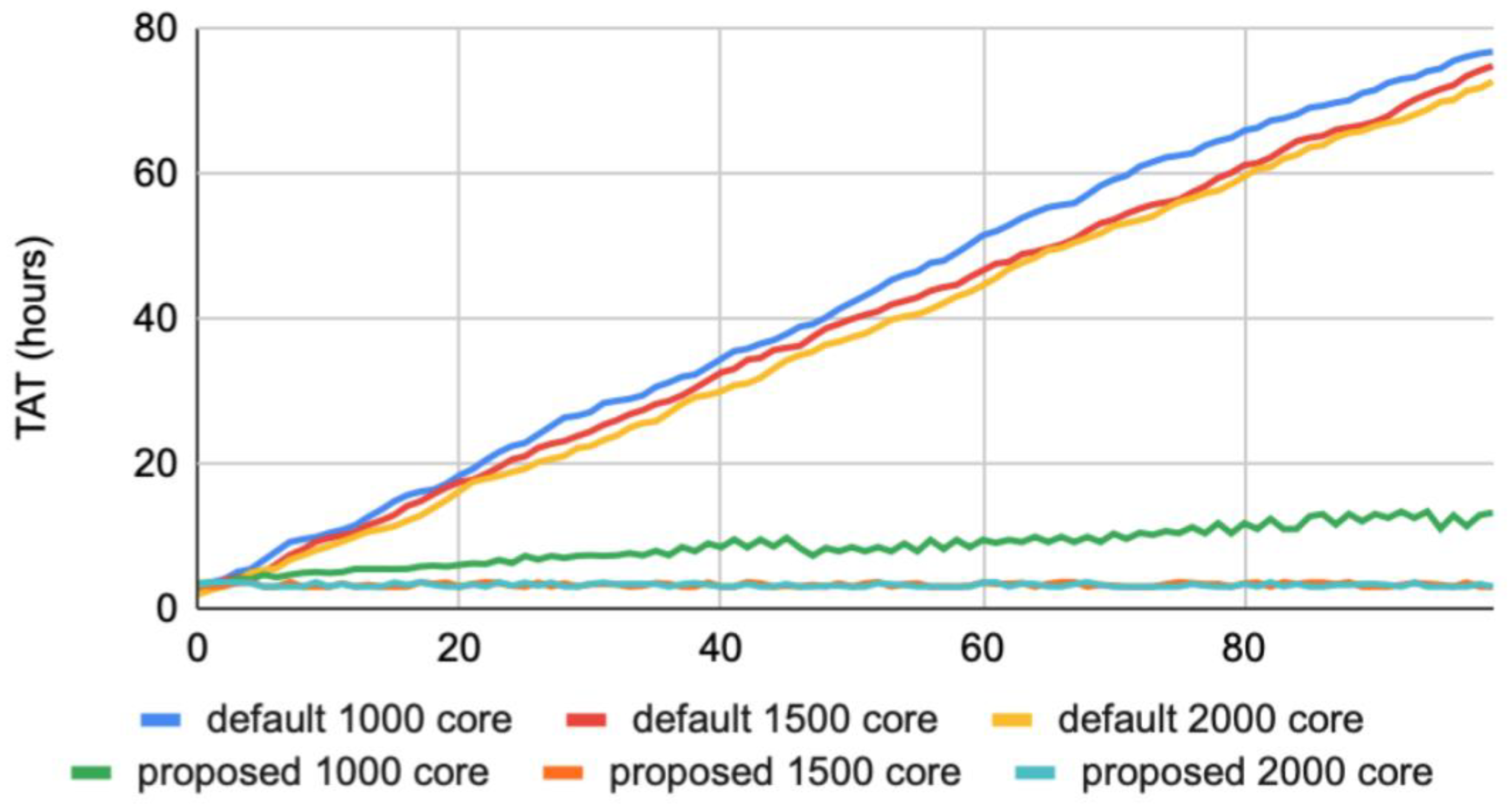

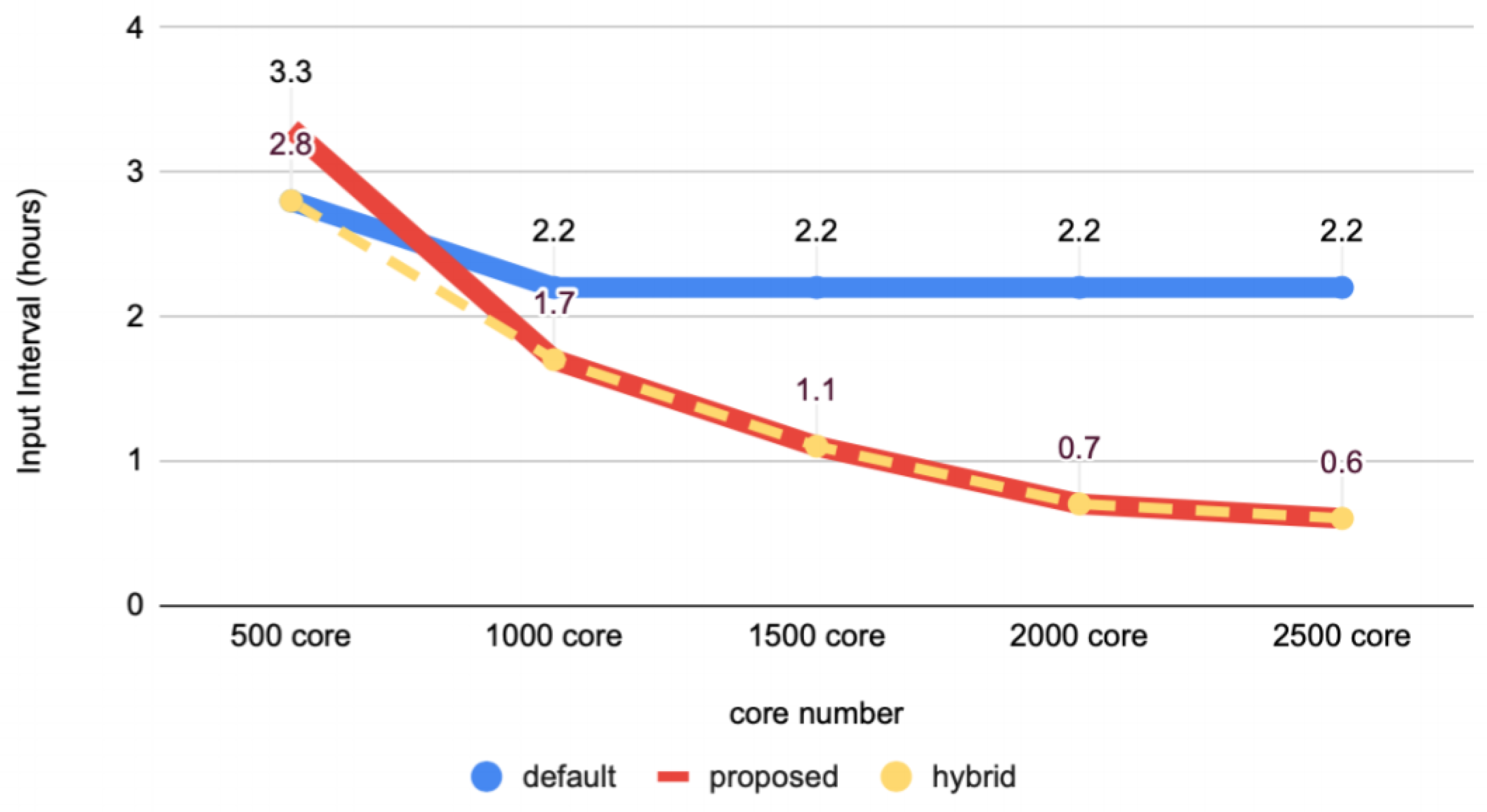

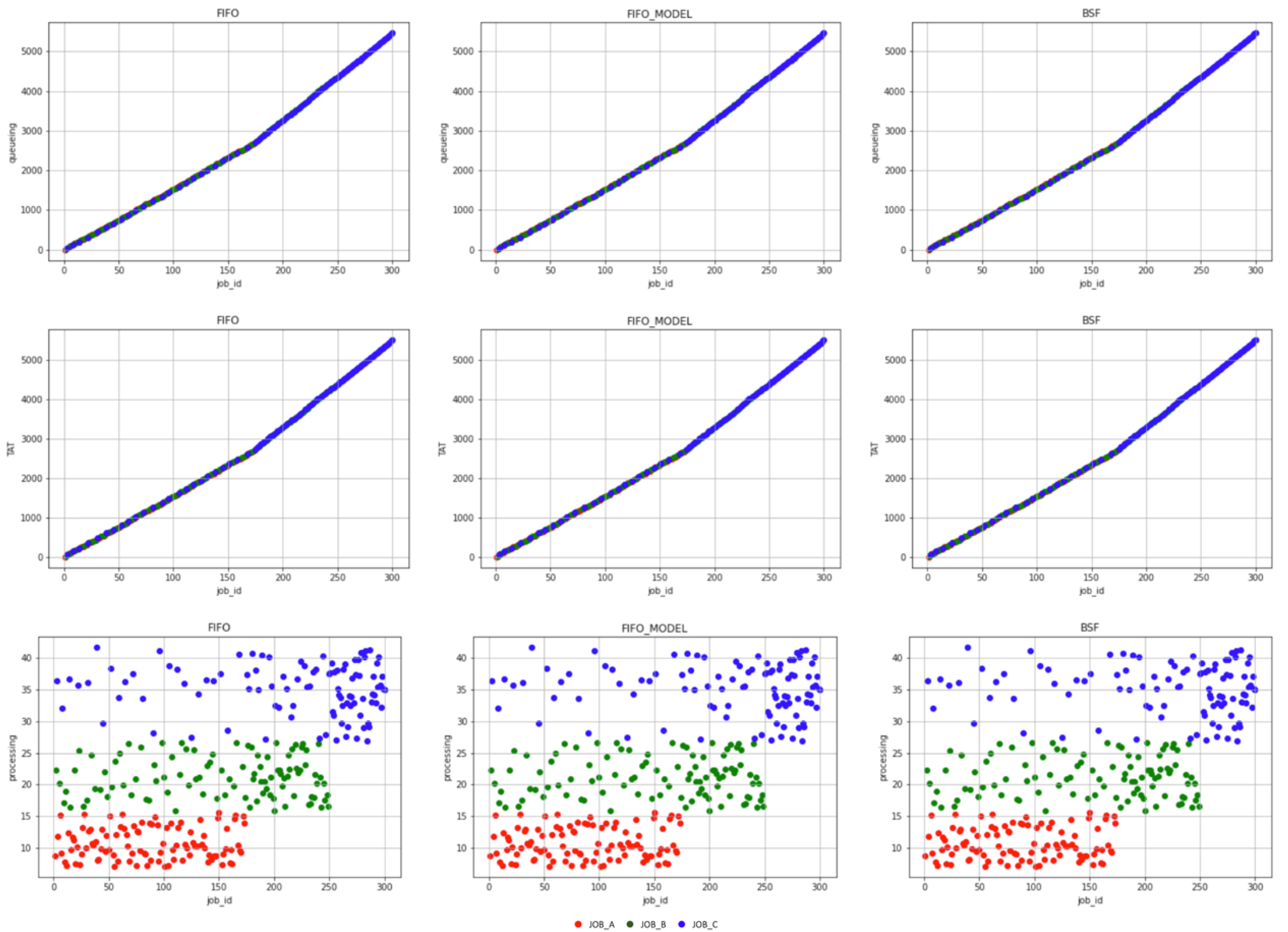

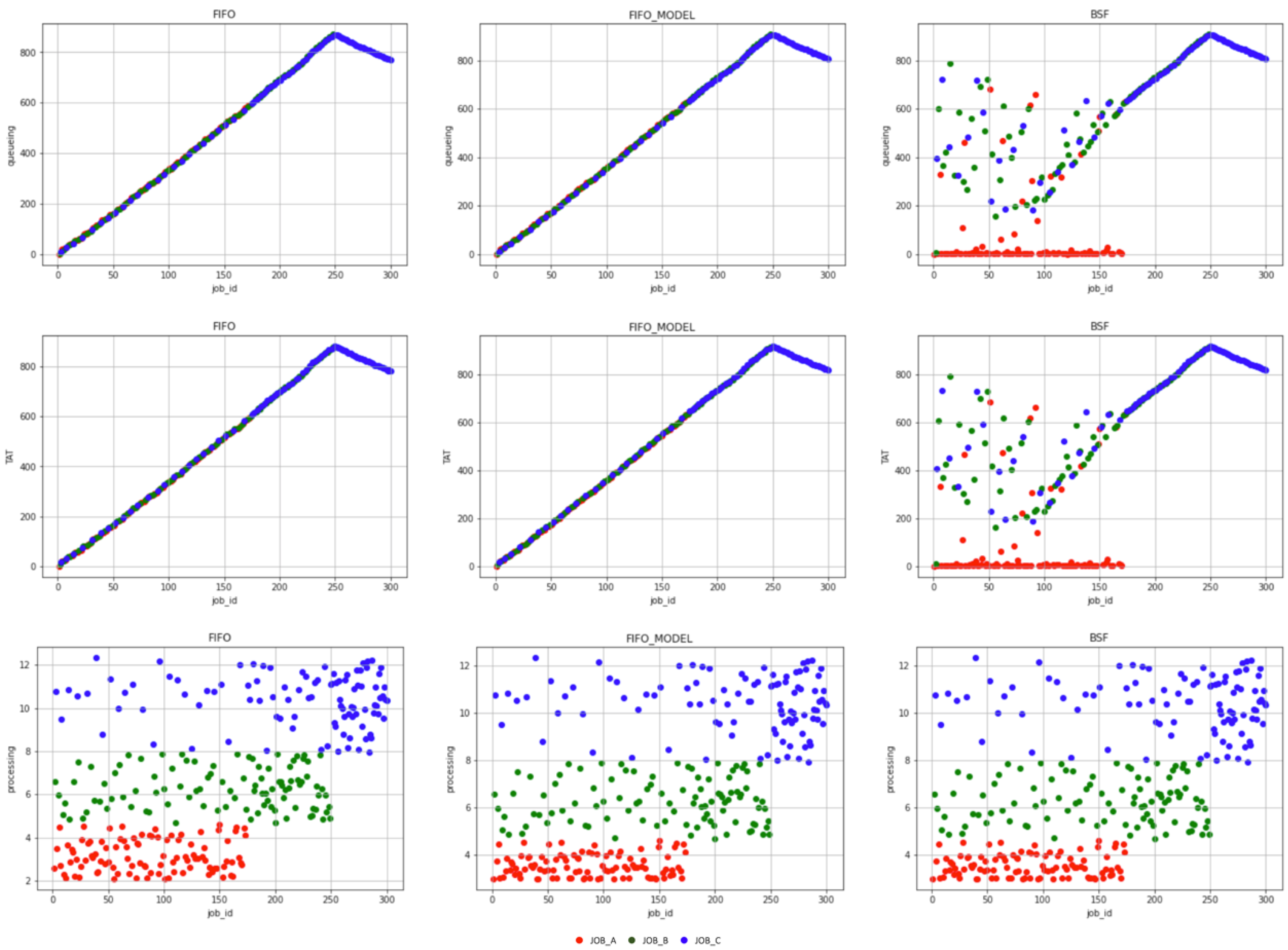

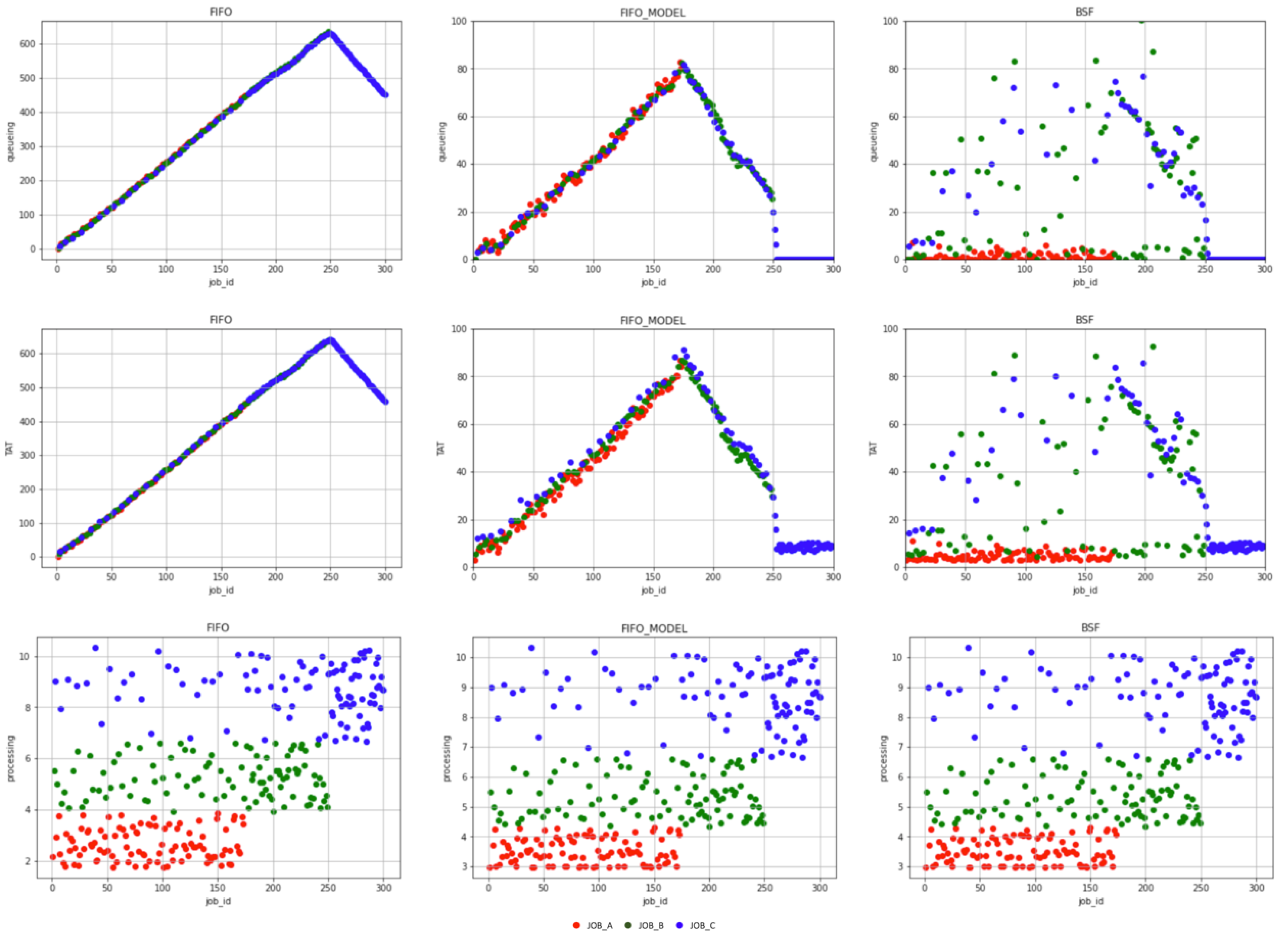

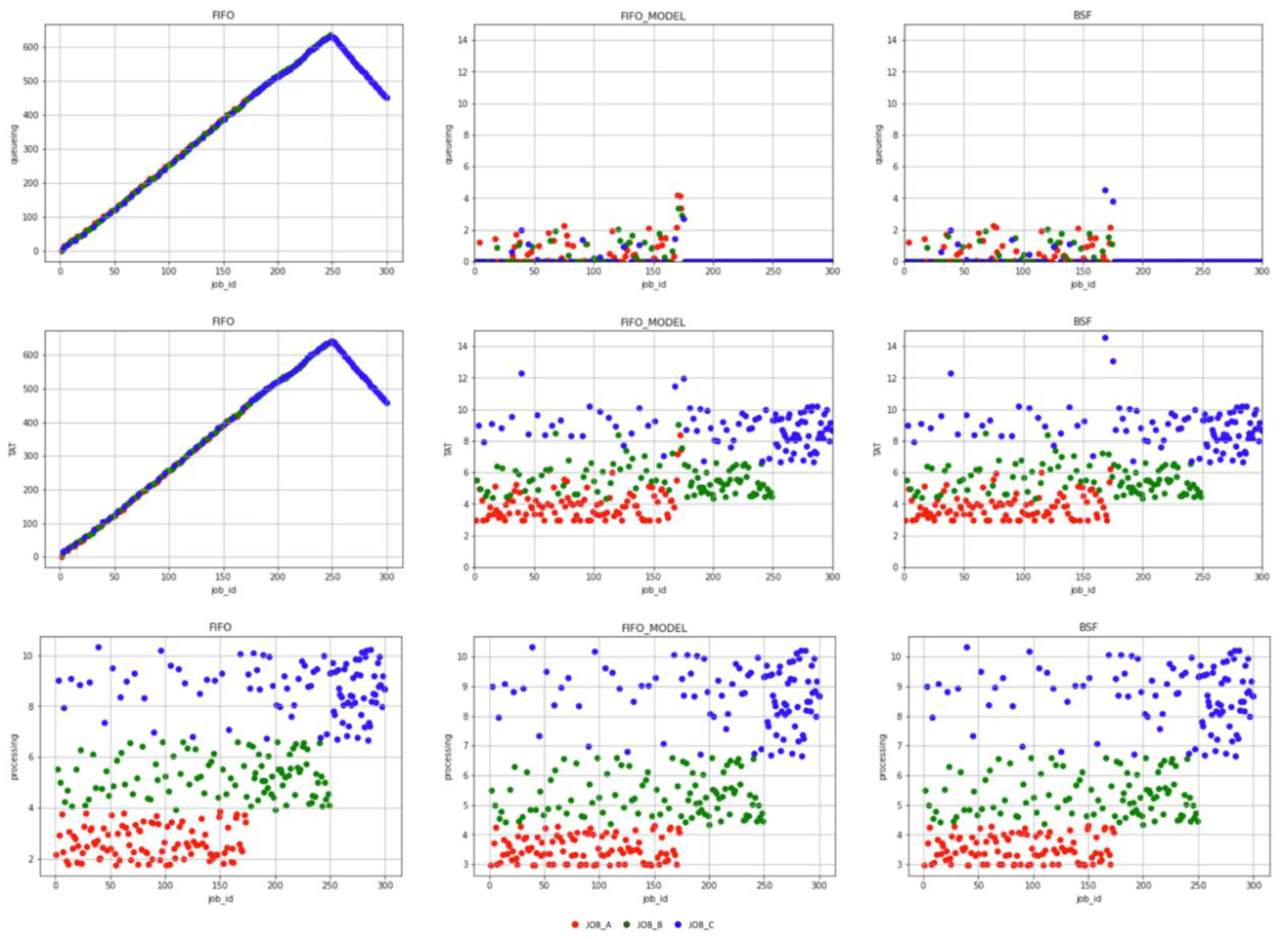

3.1. The Default DEVS Model Simulation

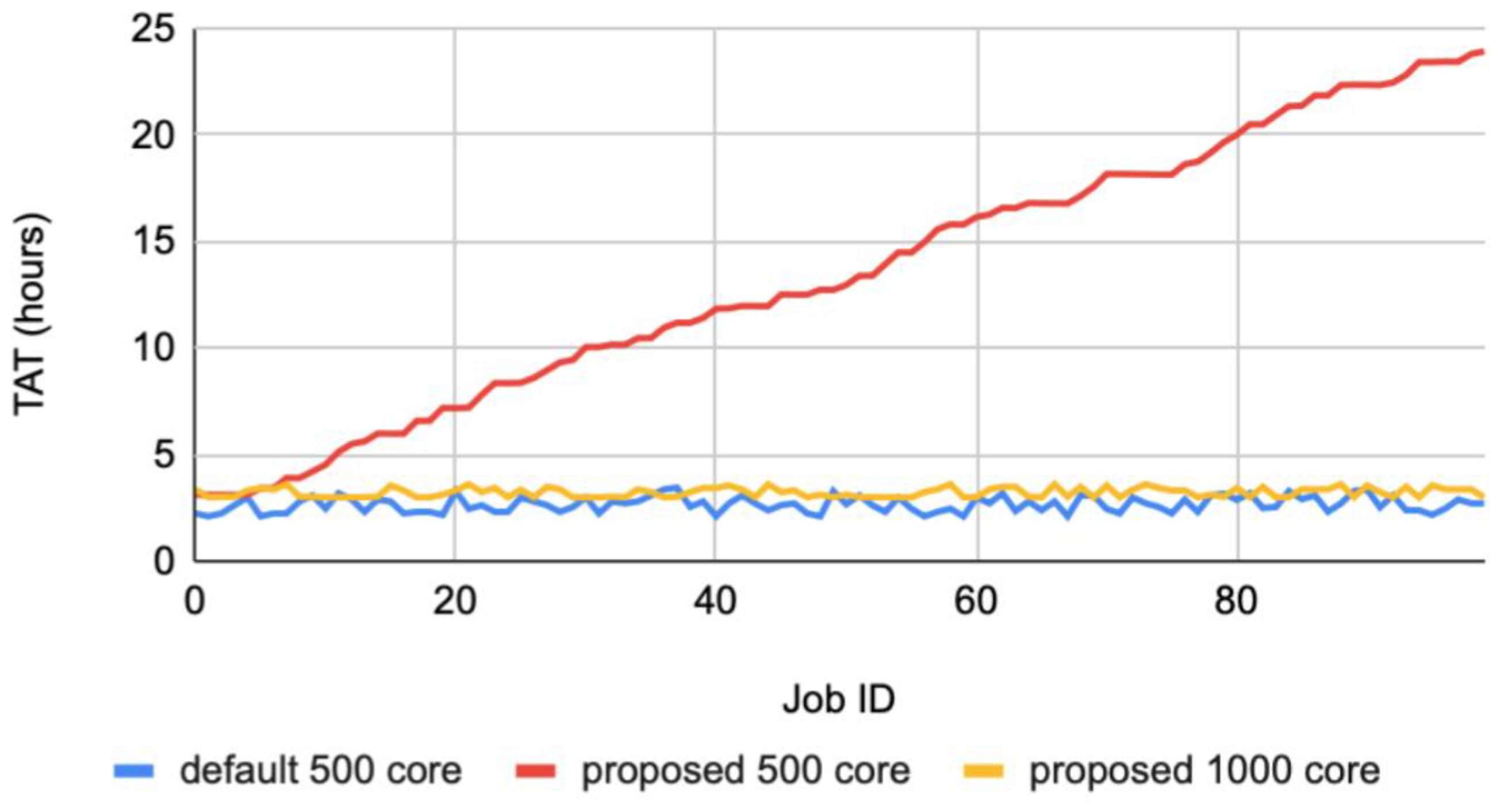

3.2. Multi-Input DEVS Model Simulation

- Core 100

- Core 500

- Core 1500

- Core 2000

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Bjorkholm, J. EUV Lithography-The Successor to Optical Lithography? Intel Technol. J. 2001, 3, 98.

- Stulen, R.H.; Sweeney, D.W. Extreme Ultraviolet Lithography. IEEE J. Quantum Electron. 1999, 35, 694–699.

- Lee, J.J.; Jang, W.S. Nanoscale 3d shape patterning technology. Phys. High Technol. J. Korean Phys. Soc. 2004, 13, 14–19.

- Kim, J.; Lee, J.S.; Han, Y.S. Fault Detection Prediction Using a Deep Belief Network-Based Multi-Classifier in the Semiconductor Manufacturing Process. Int. J. Softw. Eng. Knowl. Eng. 2019, 29, 1125–1139.

- Ji, M.K. The Method of Run Time Prediction Simulation-Based Load Balancing. Master’s Thesis, Sungkyunkwan University, Suwon, Korea, 2015.

- Cortes, A.; Ripoll, A.; Senar, M.; Luque, E. Performance Comparison of Dynamic Load Balancing Strategies for Distributed Computing. In Proceedings of the 32nd Annual Hawaii International Conference on Systems Sciences. 1999. HICSS-32. Abstracts and CD-ROM of Full Papers, Maui, HI, USA, 5–8 January 1999; pp. 170–177.

- Alakeel, A. A Guide to Dynamic Load Balancing in Distributed Computer Systems. Int. J. Comput. Sci. Netw. Secur. (IJCSNS) 2009, 10, 153–160.

- Zeigler, B.P. Multifaceted Modeling and Discrete Event Simulation; Academic Press: Orland, CA, USA, 1984.

- Park, S.C.; Chang, M.H. Hardware-in-the-loop simulation for a production system. Int. J. Prod. Res. 2011, 50, 2321–2330.

- Chang, D.S.; Cho, K.H.; Cheon, S.; Lee, S.J.; Park, S.C. Effective Simulation Modeling Formalism for Autonomous Control Systems. J. Korean Soc. Qual. Manag. 2018, 46, 973–982.

- Kwon, S.; Sung, C.; Song, H.; Kim, T.G. Integrated Hybrid Modeling Methodology and Simulation Engine Design Based on HDEVS Formalism. J. Korea Soc. Simul. 2013.

- Inostrosa-Psijas, A.; Wainer, G.; Gil-Costa, V.; Marin, M. DEVS modeling of large scale Web Search Engines. In Proceedings of the Winter Simulation Conference 2014, Savannah, GA, USA, 7–10 December 2014; pp. 3060–3071.

- Alshareef, A.; Sarjoughian, H.S.; Zarrin, B. An Approach for Activity-based DEVS Model Specification. In Proceedings of the 2016 Symposium on Theory of Modeling and Simulation (TMS-DEVS), Pasadena, CA, USA, 3–6 April 2016; pp. 1–8.

- Han, Y.S.; Kim, J.M.; Lee, C. DEVS-based semiconductor process scheduling using the multifacetted system modelling and discrete event simulation. In Proceedings of the 2014 Winter Simulation Conference. General Modeling Methodologies I, Savannah Georgia, Savannah, GA, USA, 7–10 December 2014.

- Song, H.S.; Lee, J.Y.; Kim, T.G. DEVS-based Modeling Simulation for Semiconductor Manufacturing Using an Simulation-based Adaptive Real-time Job Control Framework. J. Korea Soc. Simul. 2010, 19, 45–54.

- Ray, S.; Das, A. Execution Analysis of Load Balancing Algorithms in Cloud Computing Environment. Int. J. Cloud Comput. Serv. Archit. 2012, 2, 1–13.

| Type | TAT (Hours) | Min Density | Max Density | Count |
|---|---|---|---|---|
| JOB_A | 3 | 5 | 30 | 100 |
| JOB_B | 6 | 30 | 50 | 100 |
| JOB_C | 12 | 50 | 70 | 100 |
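As an illustration of how the job types in the table above could feed a generator, the sketch below encodes the table as data and draws a Gaussian density for every job, clipped to the range of its type. The names `JOB_TYPES`, `EbeamJob`, and `make_jobs` are hypothetical, and the choice of mean (the midpoint of the range) and standard deviation (one sixth of the range) is an assumption, since the parameters of the distribution are not given here.

```python
import random
from dataclasses import dataclass

# Values copied from the table; the dictionary layout itself is an assumption.
JOB_TYPES = {
    "JOB_A": {"tat_hours": 3, "min_density": 5, "max_density": 30, "count": 100},
    "JOB_B": {"tat_hours": 6, "min_density": 30, "max_density": 50, "count": 100},
    "JOB_C": {"tat_hours": 12, "min_density": 50, "max_density": 70, "count": 100},
}


@dataclass(frozen=True)
class EbeamJob:
    job_type: str
    tat_hours: int
    density: float


def make_jobs(job_types, seed=0):
    """Create every job in the table with a Gaussian density inside its range."""
    rng = random.Random(seed)
    jobs = []
    for name, spec in job_types.items():
        lo, hi = spec["min_density"], spec["max_density"]
        # Assumed parameters: mean at the midpoint, range spans about 3 sd each way.
        mean, sd = (lo + hi) / 2, (hi - lo) / 6
        for _ in range(spec["count"]):
            density = min(hi, max(lo, rng.gauss(mean, sd)))
            jobs.append(EbeamJob(name, spec["tat_hours"], density))
    return jobs


jobs = make_jobs(JOB_TYPES)
```

Clipping keeps the rare outlying samples inside the stated density range, at the cost of a small pile-up at the boundaries.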

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Han, Y.S.; Kwon, B.S.; Nam, C.S.; Lee, J.S. SLLB-DEVS: An Approach for DEVS Based Modeling of Semiconductor Lithography Load Balance. Appl. Sci. 2021, 11, 4235. https://doi.org/10.3390/app11094235
