1. Introduction

Robotic exoskeletons are wearable devices that can enhance physical performance and provide movement assistance. Lower-limb robotic exoskeletons can benefit people with motor impairment in the lower extremities, as they can assist the gait and facilitate rehabilitation [1]. The combination of lower-limb robotic exoskeletons with brain–machine interfaces (BMI), which are systems that decode neural activity to drive output devices, offers a new method to provide motor support. Thus, patients could walk while being assisted by an exoskeleton that is controlled by their brain activity.

In the literature, there are different BMI control paradigms for lower-limb exoskeletons based on brain changes. The most common ones are steady-state visually evoked potentials [2], which are based on visual stimuli; motion-related cortical potentials (MRCP) [3,4,5,6], which are produced between 1500 and 500 ms before the execution of the movement; and event-related desynchronization/synchronization (ERD/ERS), which is considered to indicate the activation and subsequent recovery of the motor cortex during preparation and completion of a movement [7,8,9]. BMI based on ERD/ERS are usually employed to detect motion intention [3,6,10]. Similar ERD/ERS patterns are produced during motor imagery (MI), which consists of the imagination of a movement [11,12,13]. When performing MI, in contrast to external stimuli, brain changes are induced voluntarily and internally by the subject. BMI based on MI have the objective of identifying different MI tasks or differentiating between MI and an idle state [5,14,15,16]. The work of [16] combined MI with eye blinks as a control criterion.

The main limitation of MI is that patients have to maintain it for long periods in order to make the external device perform any action. However, contrary to instantaneous brain changes, such as MRCP or motion intention, continuous cognitive involvement of a patient during the assisted motion can induce mechanisms of neuroplasticity. Neuroplasticity is the ability of the brain to reorganize its structure and promote rehabilitation [17]. Performing sustained brain tasks can be challenging, as it requires high focus from the user during the whole experiment and any external influence could easily disturb it. Previous studies have tried to evaluate the level of attention of a subject during the control of the external device [18], and some of them have considered it as a control paradigm for a lower-limb BMI [15]. BMI systems need a training phase in which the model is calibrated for each subject before it is tested with new data. In [5,14,15,16], during the training phase, participants alternated periods of MI with idle state and the output device was only moving during MI. Nevertheless, since BMI focus on sensorimotor rhythms, it is difficult to ensure that the classifier is not decoding the actual motion instead of motor imagery.

In our previous work [19], we designed a lower-limb MI BMI to control a treadmill and it was tested with able-bodied subjects. The BMI combined the paradigm of MI with another one that measured the level of attention that users had during MI tasks. In the test phase, i.e., when the output device was commanded by the BMI, the treadmill was only activated when the attention measured was higher than a certain threshold, reducing the number of false triggers. In order to ensure that motion artifacts did not affect the BMI classifier model, the training phase consisted of two types of trials: full standing and full motion trials. The mental tasks to perform were the same for both types, alternating periods of MI with idle state. Both types of trials allowed the creation of two different classifier models to be applied depending on the status of the subject: gait and stand.

In this study, the BMI designed in [19] was adapted for the control of the gait of a lower-limb exoskeleton and it was evaluated with able-bodied subjects. The combination of this BMI with a lower-limb exoskeleton is a promising and intuitive assistive approach for people with motor impairment. In addition, it could potentially benefit people with cortical damage (e.g., after a stroke) as a therapeutic approach for the recovery of lost motor function. Participants were trained over 2–5 days to assess the effect of practice on the performance. Each day’s session was divided into two parts: the training and test phases. During training, subjects performed trials in which the exoskeleton was walking the entire time and trials in which it was standing. In the test phase, the exoskeleton provided real-time feedback in a closed-loop control scenario. This is a preliminary step in the development of a BMI that will reinforce rehabilitation and/or assist the gait for patients with neurological damage.

2. Materials and Methods

2.1. Participants

Two subjects participated in the study (mean age 23.5 ± 3.5 years). They did not report any known disease and had no movement impairment. They did not have any previous experience with BMI. They were informed about the experiments and signed an informed consent form in accordance with the Declaration of Helsinki. All procedures were approved by the Responsible Research Office of Miguel Hernández University of Elche.

2.2. Equipment

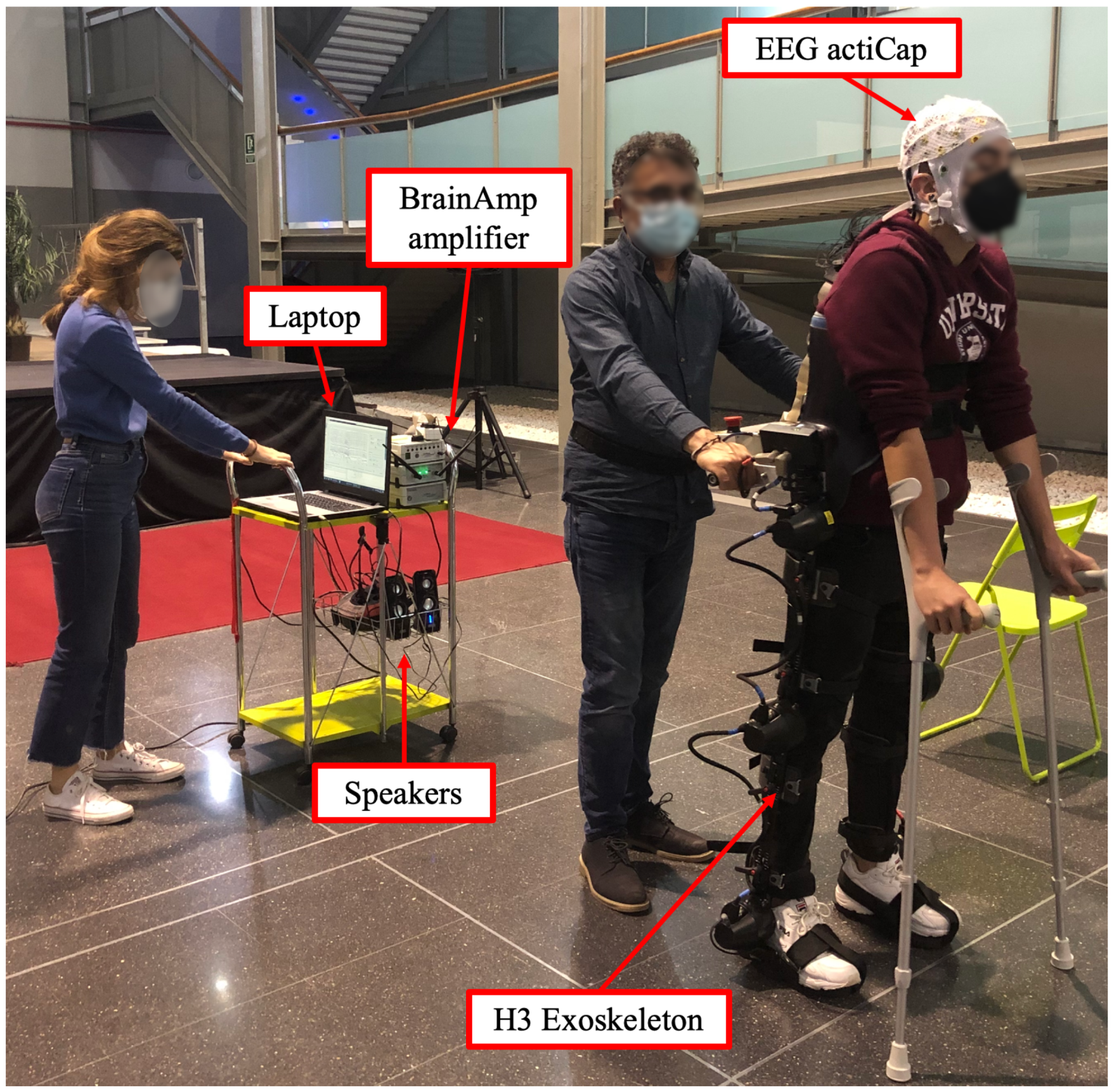

Brain activity was recorded with electroencephalography (EEG) using a 32-electrode actiCAP system (Brain Products GmbH, Germany). The 27 channels selected for acquisition were: F3, FZ, FC1, FCZ, C1, CZ, CP1, CPZ, FC5, FC3, C5, C3, CP5, CP3, P3, PZ, F4, FC2, FC4, FC6, C2, C4, CP2, CP4, C6, CP6, P4. They were placed following the international 10-10 system. Four electrodes were located next to the eyes to record electrooculography (EOG), and the ground and reference electrodes were located on the right and left ear lobes, respectively. Each channel signal was amplified with a BrainVision BrainAmp amplifier (Brain Products GmbH, Germany). Finally, signals were transmitted wirelessly to the BrainVision recorder software (Brain Products GmbH, Germany).

An H3 exoskeleton (Technaid, Madrid, Spain) was employed to assist the movement, and participants used crutches as support. Start/stop gait commands were sent via Bluetooth. The experimental setup can be seen in Figure 1.

2.3. Experimental Design

Each participant completed several sessions and each session was divided into two parts. The first part consisted of the training phase, in which the exoskeleton was in open-loop control: it was remotely controlled by the laptop with predefined commands based on the mental tasks to be registered, not by the output of the BMI classifier. Afterwards, the second part of the session allowed assessment of the BMI performance during closed-loop control of the exoskeleton. Commands issued by the BMI were sent to the exoskeleton in real time based on the decoding of the brain activity obtained as output of the BMI classifier, so subjects received real-time feedback on their performance.

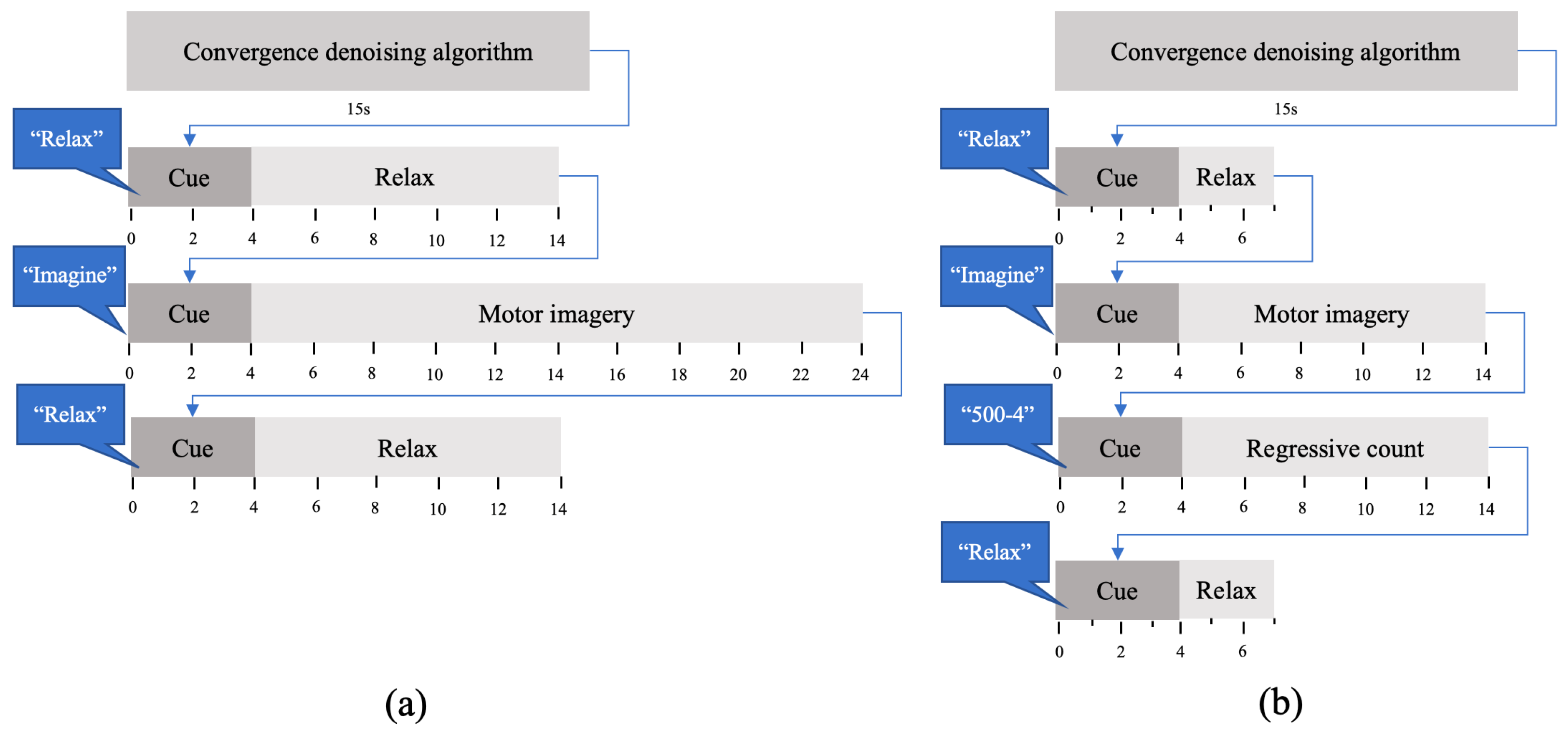

2.3.1. Training Phase

In the first part of each session, subjects performed 20 trials. Each trial consisted of a sequence of three mental tasks: MI of the gait, idle state and regressive count. For idle state, participants were asked to be as relaxed as possible. The regressive count was randomly changed every trial and consisted of a number between 300 and 1000 and a subtrahend between 1 and 9. For example, if they were given the count 500-4, they had to compute the series of subtractions 496, 492, 488... until the next task began. This task aims to focus the subject on a demanding mental task very different from MI in order to obtain data with a low level of attention to gait. The protocol can be seen in Figure 2a. A voice message indicated the beginning of each task: ‘Relax’, ‘Imagine’, ‘500-5’. The message for the regressive count indicated a different mathematical operation each time. In order to avoid evoked potentials, the 4 s period after auditory cues was not considered for further analysis.
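The regressive count task can be illustrated with a short sketch. The ranges (300 to 1000 for the starting number, 1 to 9 for the subtrahend) come from the text; the function names and the fixed random seed are assumptions made only for illustration.

```python
import random


def regressive_count(start, subtrahend, steps):
    """Return the first `steps` values of the series start - k * subtrahend."""
    return [start - k * subtrahend for k in range(1, steps + 1)]


def random_count_task(rng):
    """Draw a starting number in [300, 1000] and a subtrahend in [1, 9]."""
    return rng.randint(300, 1000), rng.randint(1, 9)


rng = random.Random(0)  # fixed seed only so the sketch is reproducible
start, subtrahend = random_count_task(rng)
example = regressive_count(500, 4, 3)
print(example)  # [496, 492, 488], the example given in the text
```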

During the session, subjects used crutches to maintain stability. In addition, a member of the research staff softly held the exoskeleton to prevent any possible loss of balance or fall. Ten of the training trials were performed in a full no-motion status and the other ten in a full motion status assisted by the exoskeleton. These trials were employed to train two different BMI classifiers: StandClassifiers (with non-motion trials) and GaitClassifiers (with full motion trials).

2.3.2. Test Phase

In the second part of each session, the BMI was tested in closed-loop control with the two groups of classifiers obtained with the data of the training phase (StandClassifiers, GaitClassifiers). Subjects performed five trials, whose protocol can be seen in Figure 2b. The transition between tasks was indicated with voice messages for the ‘Relax’ and ‘Imagine’ tasks. Note that no ‘Regressive count’ task was included: the level of attention to gait was computed with the classifiers obtained from training, so there was no need to include a low-attention task in the test trials.

2.4. Brain Machine Interface

The presented BMI had the following steps: data acquisition, pre-processing, feature extraction, classification, exoskeleton control and evaluation.

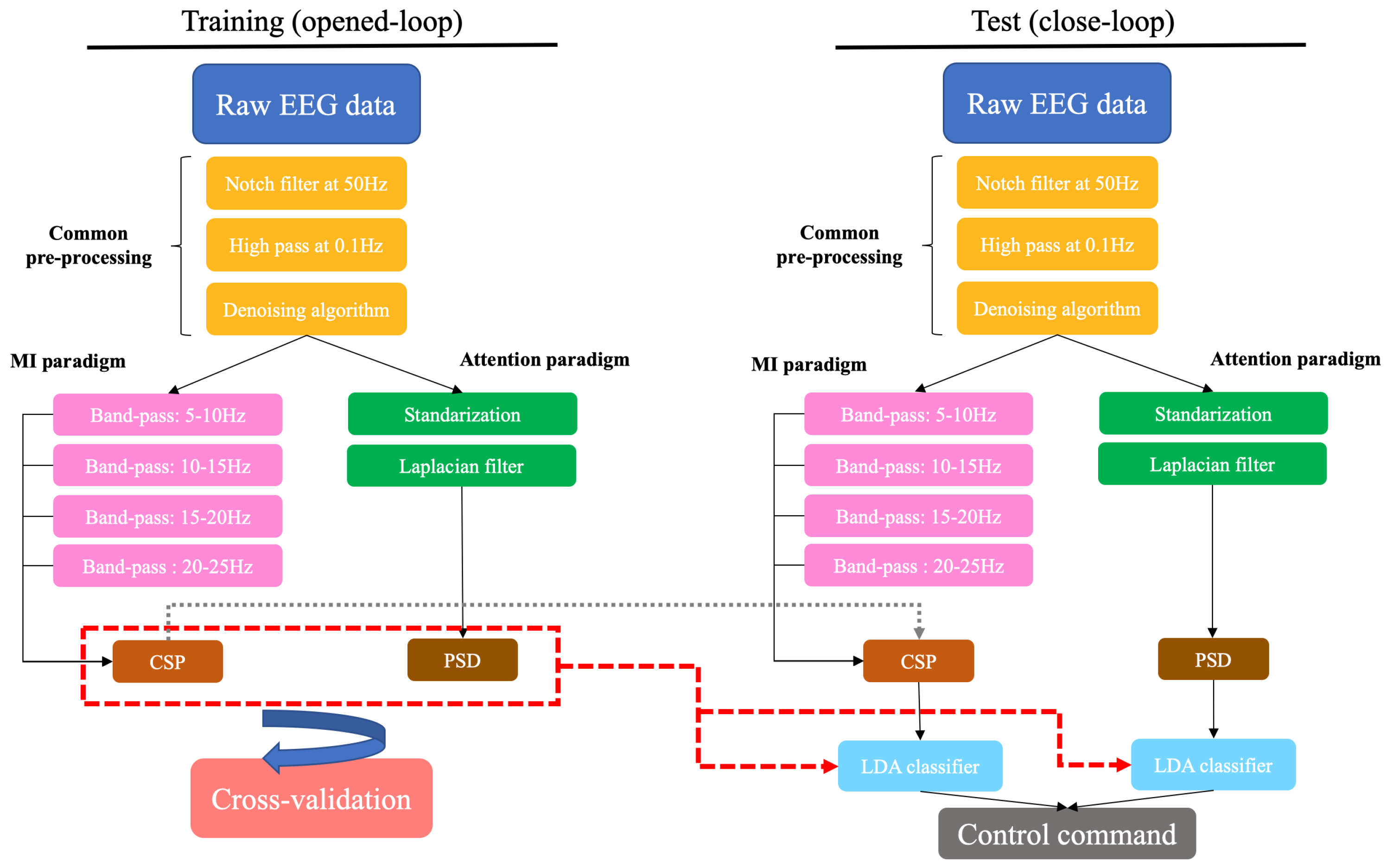

As indicated before, this BMI was based on two paradigms: MI and attention. The first one was based on the distinction between MI of the gait and an idle state, so only data associated with these brain tasks (relax and motor imagery) were considered to train the classifiers. The attention paradigm measured the level of attention to gait. Therefore, it had the objective of differentiating between the attention of the subject during MI and the attention during irrelevant tasks. For this paradigm, all brain tasks from training trials were considered (relax, motor imagery and regressive count). While the attention to the gait was assumed to be high during MI tasks, it was assumed to be low during regressive count and idle state. The schema of the BMI can be seen in Figure 3.

2.4.1. Data Acquisition

EEG signals were recorded at a sampling frequency of 200 Hz. Then, epochs of 1 s with 0.5 s of shifting were extracted and processed.
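The epoching step can be sketched as a sliding window. The sampling frequency, the epoch length and the shift are taken from the text; the array layout (channels × samples), the function name and the synthetic signal are assumptions for illustration.

```python
import numpy as np

FS = 200        # sampling frequency in Hz (from the text)
WIN_S = 1.0     # epoch length in seconds (from the text)
SHIFT_S = 0.5   # shift between consecutive epochs in seconds (from the text)


def extract_epochs(eeg, fs=FS, win_s=WIN_S, shift_s=SHIFT_S):
    """Split a (channels, samples) array into overlapping epochs.

    Returns an array of shape (n_epochs, channels, samples_per_epoch).
    """
    win = int(round(win_s * fs))
    shift = int(round(shift_s * fs))
    starts = range(0, eeg.shape[1] - win + 1, shift)
    return np.stack([eeg[:, s:s + win] for s in starts])


# 10 s of synthetic 27-channel data, only to show the resulting shapes.
eeg = np.random.default_rng(0).standard_normal((27, 10 * FS))
epochs = extract_epochs(eeg)
print(epochs.shape)  # (19, 27, 200)
```

With these values each epoch holds 200 samples and consecutive epochs overlap by 100 samples, so a new epoch becomes available every 0.5 s.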

2.4.2. Pre-Processing

The pre-processing stage started with two frequency filters: a notch filter at 50 Hz to remove the contribution of the power line and a high-pass filter at 0.1 Hz. In order to reduce motion artifacts, electrode wires were fixed with clamps and a medical mesh. The movement of jaw muscles can generate signal artifacts, so subjects were asked not to swallow or chew while they were performing MI or the regressive count, or while they were in an idle state.

A denoising algorithm was applied to mitigate the presence of eye artifacts and signal drifts [5]. This algorithm estimates the contribution of the EOG and a constant parameter to the EEG signal and removes it. Afterwards, there were two different pre-processing lines, one for each paradigm.

For the MI paradigm, a filter bank comprising multiple band-pass filters was applied to the data after the denoising algorithm. Four band-pass filters were employed to obtain data associated with alpha and beta rhythms.

Regarding the attention paradigm, EEG signals from each channel were first standardized following the process presented in [20]. For each channel, the maximum visual threshold (MVT) was computed as the mean of the 6 highest values of the signal. This value was iteratively updated for each epoch and it was used to standardize the data: the signal of each channel was normalized taking into consideration the maximum visual thresholds of all the EEG channels. Subsequently, the surface Laplacian filter was used to reduce spatial noise and enhance the local activity of each electrode [21].
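A minimal sketch of the standardization step follows. It assumes that each sample is divided by the mean of the per-channel maximum visual thresholds; this specific form is an assumption, and [20] gives the exact definition. The function names are illustrative, and the iterative update across epochs is left out.

```python
import numpy as np


def max_visual_threshold(epoch, n_peaks=6):
    """Per-channel threshold: mean of the n_peaks highest values of each channel."""
    top = np.sort(epoch, axis=1)[:, -n_peaks:]
    return top.mean(axis=1)


def standardize(epoch, n_peaks=6):
    """Divide every channel by the mean threshold over all channels.

    ASSUMPTION: division by the cross-channel mean is one plausible reading
    of 'taking into consideration the threshold of all the EEG channels'.
    """
    mvt = max_visual_threshold(epoch, n_peaks)
    return epoch / mvt.mean()


# Toy epoch with 2 channels and 20 samples, only to show the arithmetic.
epoch = np.arange(40, dtype=float).reshape(2, 20)
print(max_visual_threshold(epoch))  # thresholds 16.5 and 36.5
standardized = standardize(epoch)
```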

2.4.3. Feature Extraction

The following step of the BMI has the objective of computing the characteristics of the EEG during each brain task that could be discriminating.

For the MI paradigm, common spatial patterns (CSP) [22] are computed for each frequency band. CSP estimates a spatial transformation that maximizes the discriminability between two brain patterns. If $X$ is the EEG signal with dimensions $N \times T$, where $N$ is the number of channels and $T$ the number of samples, the CSP algorithm estimates a matrix of spatial filters $W$ that discriminates between two classes, $X_1$ and $X_2$. Firstly, the normalized covariance matrices are computed for each class as

$$R_1 = \frac{X_1 X_1^{T}}{\operatorname{trace}(X_1 X_1^{T})}, \qquad R_2 = \frac{X_2 X_2^{T}}{\operatorname{trace}(X_2 X_2^{T})}.$$

These matrices are computed for each trial, and $\bar{R}_1$ and $\bar{R}_2$ are calculated by averaging over all trials of the same class. The averaged covariance matrices are combined into the composite spatial covariance matrix, which can be factorized as

$$R = \bar{R}_1 + \bar{R}_2 = U_0 \Lambda U_0^{T},$$

where $U_0$ is a matrix of eigenvectors and $\Lambda$ is the diagonal matrix of eigenvalues. With the whitening transformation $P = \Lambda^{-1/2} U_0^{T}$, the averaged covariance matrices are transformed as

$$S_1 = P \bar{R}_1 P^{T}, \qquad S_2 = P \bar{R}_2 P^{T}.$$

$S_1$ and $S_2$ have common eigenvectors, and the sum of both matrices of eigenvalues is the identity matrix:

$$S_1 = U \Sigma_1 U^{T}, \qquad S_2 = U \Sigma_2 U^{T}, \qquad \Sigma_1 + \Sigma_2 = I.$$

The projection matrix is obtained as

$$W = U^{T} P, \qquad Z = W X.$$

$Z$ is the projection of the original EEG signal $X$ into another space. Columns of $W^{-1}$ are the spatial patterns.

Although $Z$ has $N \times T$ dimensions, the first and last rows are the components that can be better discriminated in terms of their variance. Therefore, for feature extraction, only the $m$ first and last components of $Z$ are considered. $Z_p$, with $p = 1, \dots, 2m$, is this subset of $Z$, and the variances of each component are computed and normalized with the logarithm as

$$f_p = \log\left(\frac{\operatorname{var}(Z_p)}{\sum_{i=1}^{2m} \operatorname{var}(Z_i)}\right).$$

$f$ is the vector of features and has dimension $2m$. $m$ was set to 4 and, since 4 band-pass filters were employed in the pre-processing phase, the total feature dimension is $2m \times 4 = 32$.
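The CSP steps described above (normalized covariance, whitening of the composite covariance, joint diagonalization and log-variance features) can be sketched with NumPy. This follows the standard CSP formulation and is not necessarily the authors' implementation. The function names and the synthetic data are assumptions; only $m = 4$ is taken from the text.

```python
import numpy as np


def normalized_cov(trial):
    """Normalized spatial covariance of one (channels, samples) trial."""
    c = trial @ trial.T
    return c / np.trace(c)


def csp_filters(trials_a, trials_b):
    """Return the CSP projection matrix W; each row is one spatial filter."""
    r_a = np.mean([normalized_cov(t) for t in trials_a], axis=0)
    r_b = np.mean([normalized_cov(t) for t in trials_b], axis=0)
    # Eigendecomposition of the composite covariance and whitening matrix P.
    evals, evecs = np.linalg.eigh(r_a + r_b)
    p = np.diag(evals ** -0.5) @ evecs.T
    # The whitened class covariances share eigenvectors U.
    lam, u = np.linalg.eigh(p @ r_a @ p.T)
    u = u[:, np.argsort(lam)[::-1]]  # descending class-a eigenvalue
    return u.T @ p


def csp_features(trial, w, m=4):
    """Log of the normalized variance of the m first and m last components."""
    z = w @ trial
    z = np.vstack([z[:m], z[-m:]])
    var = z.var(axis=1)
    return np.log(var / var.sum())


# Synthetic two-class data: class a is strong on channel 0, class b on channel 9.
rng = np.random.default_rng(0)


def make_trial(strong_channel):
    x = rng.standard_normal((10, 200))
    x[strong_channel] *= 5.0
    return x


trials_a = [make_trial(0) for _ in range(10)]
trials_b = [make_trial(9) for _ in range(10)]
w = csp_filters(trials_a, trials_b)
feats_a = csp_features(trials_a[0], w)  # 2m = 8 features for one band
feats_b = csp_features(trials_b[0], w)
```

Repeating the feature computation for each of the four frequency bands and concatenating the results gives the 32 features per epoch.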

For the attention paradigm, power spectral estimation by the Maximum Entropy Method (MEM) was used to obtain features associated with each task. The signal of each electrode was modeled as an autoregressive process in which the known autocorrelation coefficients were calculated and the unknown coefficients were estimated by maximizing the spectral entropy [23]. The autocorrelation coefficients were then used to compute a power spectrum that was consistent with the analyzed signal fragment while remaining noncommittal regarding unseen data. Finally, only the power of the frequencies in the gamma band was considered [15].
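As an illustration, maximum entropy spectral estimation is often implemented with Burg's algorithm, which fits an autoregressive model and evaluates its spectrum. The sketch below takes that route; the text does not state which estimator, model order or gamma band limits were used, so the order of 16, the 30 to 55 Hz band and all function names are assumptions.

```python
import numpy as np


def burg_ar(x, order):
    """Estimate AR coefficients a (with a[0] = 1) and noise power (Burg)."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    f, b = x[1:].copy(), x[:-1].copy()  # forward / backward prediction errors
    a = np.array([1.0])
    power = np.mean(x ** 2)
    for _ in range(order):
        k = -2.0 * np.dot(f, b) / (np.dot(f, f) + np.dot(b, b))  # reflection coefficient
        a_ext = np.append(a, 0.0)
        a = a_ext + k * a_ext[::-1]      # Levinson-Durbin coefficient update
        f, b = (f + k * b)[1:], (b + k * f)[:-1]
        power *= 1.0 - k ** 2
    return a, power


def mem_psd(x, fs, order, freqs):
    """Power spectral density of the fitted AR model at the given frequencies."""
    a, power = burg_ar(x, order)
    lags = np.arange(len(a))
    h = np.exp(-2j * np.pi * np.outer(freqs, lags) / fs) @ a
    return power / (fs * np.abs(h) ** 2)


# Synthetic 1 s epoch at 200 Hz: a 40 Hz oscillation plus weak noise.
fs = 200
t = np.arange(fs) / fs
rng = np.random.default_rng(0)
x = np.sin(2 * np.pi * 40 * t) + 0.1 * rng.standard_normal(fs)

freqs = np.arange(1.0, 100.0, 0.5)
psd = mem_psd(x, fs, order=16, freqs=freqs)
peak_hz = freqs[np.argmax(psd)]

# ASSUMPTION: gamma band taken as 30-55 Hz purely for illustration.
gamma_mask = (freqs >= 30.0) & (freqs <= 55.0)
gamma_power = psd[gamma_mask].sum() * 0.5  # 0.5 Hz frequency step
```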

2.4.4. Classification

Training trials of each session were evaluated using leave-one-out cross-validation. Each trial was used once as the test set and the remaining trials formed the training set. This process was performed independently for trials in which subjects were standing (10 trials) and trials in which they were in motion (10 trials). Linear Discriminant Analysis (LDA) [24] classifiers were created depending on the subject status, full standing trials (StandClassifiers) and full motion trials (GaitClassifiers), each one with two different models based on the decoding paradigm: MI and attention. As stated above, whereas LDA classifiers of the MI paradigm were only trained with data from MI and idle state, LDA classifiers of the attention paradigm were trained with data from all brain tasks (idle, regressive count, MI).
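The leave-one-trial-out evaluation can be sketched with a minimal two-class LDA written in NumPy. The equal class priors, the function names and the synthetic features are assumptions for illustration; a library implementation of LDA could be used instead.

```python
import numpy as np


def fit_lda(x, y):
    """Two-class LDA with pooled covariance and equal priors (assumption)."""
    x0, x1 = x[y == 0], x[y == 1]
    mu0, mu1 = x0.mean(axis=0), x1.mean(axis=0)
    cov = (np.cov(x0, rowvar=False) * (len(x0) - 1)
           + np.cov(x1, rowvar=False) * (len(x1) - 1)) / (len(x) - 2)
    w = np.linalg.solve(cov, mu1 - mu0)
    b = -0.5 * w @ (mu0 + mu1)
    return w, b


def predict_lda(x, w, b):
    """Return 1 where the discriminant is positive, else 0."""
    return (x @ w + b > 0).astype(int)


def leave_one_trial_out(features, labels, trial_ids):
    """Accuracy on each held-out trial; all its epochs are left out together."""
    accs = []
    for t in np.unique(trial_ids):
        test = trial_ids == t
        w, b = fit_lda(features[~test], labels[~test])
        accs.append(np.mean(predict_lda(features[test], w, b) == labels[test]))
    return np.array(accs)


# Synthetic data: 10 trials, 10 epochs per class and trial, 4 features.
rng = np.random.default_rng(0)
n_trials, per_class, n_feat = 10, 10, 4
labels = np.tile(np.repeat([0, 1], per_class), n_trials)
trial_ids = np.repeat(np.arange(n_trials), 2 * per_class)
features = rng.standard_normal((labels.size, n_feat)) + 1.5 * labels[:, None]

accs = leave_one_trial_out(features, labels, trial_ids)
print(f"{100 * accs.mean():.1f} ± {100 * accs.std():.1f} %")
```

Holding out whole trials, rather than single epochs, keeps overlapping epochs of the same trial from appearing in both the training and the test set.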

Concerning the test phase, the developed BMI was designed as a state machine system in which a group of classifiers was chosen based on the status of the exoskeleton. This way, if the subject is in a standing position, the MI and attention classifiers of the full standing trials (StandClassifiers) are used to decide if the exoskeleton keeps standing or starts moving, but if the subject is moving, the MI and attention classifiers obtained by the full motion trials (GaitClassifiers) are used to continue walking or to stop. Predictions from both paradigms were combined to decode control commands. Its design can be seen in Figure 4. In summary, in each test trial, subjects started standing with the exoskeleton and StandClassifiers were employed. The system could decode stop or walk commands based on the prediction of their MI and attention classifiers. When a walk command was sent to the exoskeleton, it started the gait and the system was changed to Gait state. Consequently, GaitClassifiers were employed afterwards. Again, the system could decode stop or walk commands, but when a stop command was issued, the exoskeleton stopped the gait and the system changed to Stand state again.
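The state machine described above can be sketched as follows. The two decoders stand in for the StandClassifiers and GaitClassifiers groups; their toy thresholds, the class names and the scalar inputs are hypothetical and serve only to exercise the transitions.

```python
from enum import Enum


class State(Enum):
    STAND = "stand"
    GAIT = "gait"


class Command(Enum):
    STOP = "stop"
    WALK = "walk"


class ExoStateMachine:
    """Select the decoder that matches the current exoskeleton state."""

    def __init__(self, stand_decoder, gait_decoder):
        self.decoders = {State.STAND: stand_decoder, State.GAIT: gait_decoder}
        self.state = State.STAND  # every test trial starts standing

    def step(self, epoch):
        command = self.decoders[self.state](epoch)
        if self.state is State.STAND and command is Command.WALK:
            self.state = State.GAIT    # the exoskeleton starts the gait
        elif self.state is State.GAIT and command is Command.STOP:
            self.state = State.STAND   # the exoskeleton stops the gait
        return command


# Hypothetical decoders: a single number stands in for the decoded evidence.
def stand_decoder(value):
    return Command.WALK if value > 0.5 else Command.STOP


def gait_decoder(value):
    return Command.STOP if value < 0.2 else Command.WALK


machine = ExoStateMachine(stand_decoder, gait_decoder)
history = []
for value in [0.1, 0.9, 0.4, 0.1]:
    command = machine.step(value)
    history.append((command, machine.state))
```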

2.4.5. Exoskeleton Control

In the test phase, the exoskeleton was controlled by BMI decoded commands. MI classifiers could predict two classes, 0 for idle state and 1 for MI, and attention classifiers could predict a 0 for low attention to gait and a 1 for high attention. These predictions were averaged every 10 s, which resulted in MI and attention indices that ranged from 0 to 1. Control commands were selected based on the following rules:

2.5. Evaluation

The accuracy of training trials was defined as the percentage of correctly classified epochs during each brain task. This metric was computed separately for trials in which participants were moving and trials in which they were static. Furthermore, the performance of closed-loop trials was assessed with the following indices:

%MI and %Att: percentage of epochs of data correctly classified for each paradigm.

%Commands: percentage of epochs of data with correct control commands.

Accuracy commands: percentage of correct commands issued.

True positive ratio (TPR): percentage of MI periods in which a walking event is executed. There is only an event of MI per trial, so this value can only be 0 or 100% per trial.

False positives (FP) and false positives per minute (FP/min): moving commands issued during rest periods.

Transition events were not considered for the computation of evaluation metrics.
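The closed-loop indices can be sketched from per-epoch sequences of cued task and issued command. Several details are assumptions: a false positive is counted as the onset of a walk command during a rest period, each epoch is taken to represent 0.5 s, and the exclusion of transition events is not modeled.

```python
def closed_loop_metrics(tasks, commands, epoch_step_s=0.5):
    """Compute %Commands, TPR, FP and FP/min for one trial.

    tasks: per-epoch cue, 'MI' or 'rest'.
    commands: per-epoch command, 'walk' or 'stop'.
    """
    pairs = list(zip(tasks, commands))
    correct = sum((t == "MI") == (c == "walk") for t, c in pairs)
    pct_commands = 100.0 * correct / len(pairs)
    # One MI event per trial, so TPR is either 0 or 100.
    tpr = 100.0 if any(t == "MI" and c == "walk" for t, c in pairs) else 0.0
    # ASSUMPTION: a false positive is a walk onset that happens during rest.
    fp, previous = 0, "stop"
    for t, c in pairs:
        if t == "rest" and c == "walk" and previous != "walk":
            fp += 1
        previous = c
    rest_minutes = sum(t == "rest" for t in tasks) * epoch_step_s / 60.0
    fp_per_min = fp / rest_minutes if rest_minutes else 0.0
    return {"pct_commands": pct_commands, "tpr": tpr, "fp": fp, "fp_per_min": fp_per_min}


# Toy trial: rest, then MI, then rest (4 epochs each).
tasks = ["rest"] * 4 + ["MI"] * 4 + ["rest"] * 4
commands = ["stop", "walk", "stop", "stop",
            "stop", "walk", "walk", "walk",
            "walk", "stop", "stop", "stop"]
metrics = closed_loop_metrics(tasks, commands)
```

In this toy trial 9 of the 12 epochs carry the correct command, the MI event triggers the gait, and one walk onset falls in a rest period.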

3. Results

During training, participants wore the exoskeleton in open-loop control. Each subject completed several sessions, and in each of them they completed 20 trials: 10 trials standing still and 10 trials walking. Results from subjects S1 and S2 are shown in Table 1 and Table 2, respectively. It must be noted that they did not have the same amount of practice, since they participated in a different number of sessions. Two different BMI paradigms were evaluated. For the MI paradigm, in the last session, S1 reached an average accuracy of 72.77 ± 6.61% with a difference of around 6% between the two conditions, standing and walking, and S2 achieved an average accuracy of 64.11 ± 9.98% with a difference of 20% between the two conditions. With respect to the attention paradigm, S1 obtained an accuracy of 65.06 ± 6.44% with a difference of 8%, and S2 achieved 65.83 ± 4.43% with a 10% difference. Across both subjects, the average last-session accuracy of the MI and attention paradigms was 68.44 ± 8.46% and 65.45 ± 5.53%, respectively.
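The averages quoted above can be checked against the last-session values in Table 1 and Table 2. The reported ± values are numerically consistent with taking the root mean square of the two standard deviations being combined; this is an inference from the numbers, not a procedure stated in the text.

```python
import math

# (mean, SD) of last-session accuracy for the stand and gait conditions,
# copied from Table 1 (S1, session 2) and Table 2 (S2, session 5).
last_session = {
    ("S1", "MI"): [(69.64, 7.62), (75.89, 5.41)],
    ("S1", "Att"): [(60.83, 7.58), (69.29, 5.04)],
    ("S2", "MI"): [(74.11, 6.14), (54.11, 12.71)],
    ("S2", "Att"): [(60.83, 5.48), (70.83, 3.04)],
}


def pool(pairs):
    """Average the means and combine the SDs by root mean square (assumption)."""
    mean = sum(m for m, _ in pairs) / len(pairs)
    sd = math.sqrt(sum(s ** 2 for _, s in pairs) / len(pairs))
    return mean, sd


per_subject = {key: pool(values) for key, values in last_session.items()}
overall_mi = pool([per_subject[("S1", "MI")], per_subject[("S2", "MI")]])
overall_att = pool([per_subject[("S1", "Att")], per_subject[("S2", "Att")]])

for key, (mean, sd) in per_subject.items():
    print(key, f"{mean:.2f} ± {sd:.2f}")
print("MI overall", f"{overall_mi[0]:.2f} ± {overall_mi[1]:.2f}")
print("Att overall", f"{overall_att[0]:.2f} ± {overall_att[1]:.2f}")
```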

Figure 5 and

Figure 6 show the spatial patterns of S1 and S2 in their last session. Moreover, in order to provide a comparison under the same conditions,

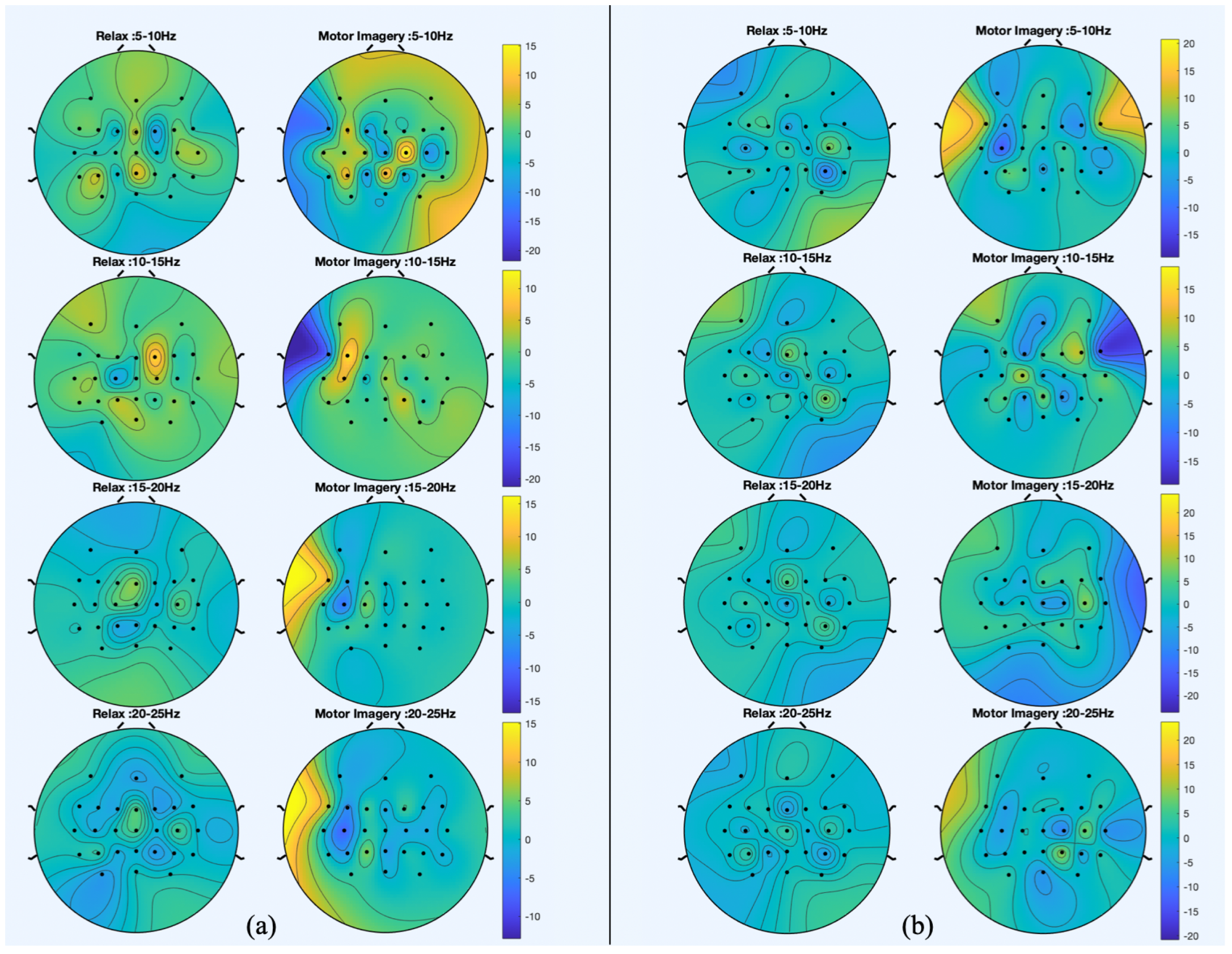

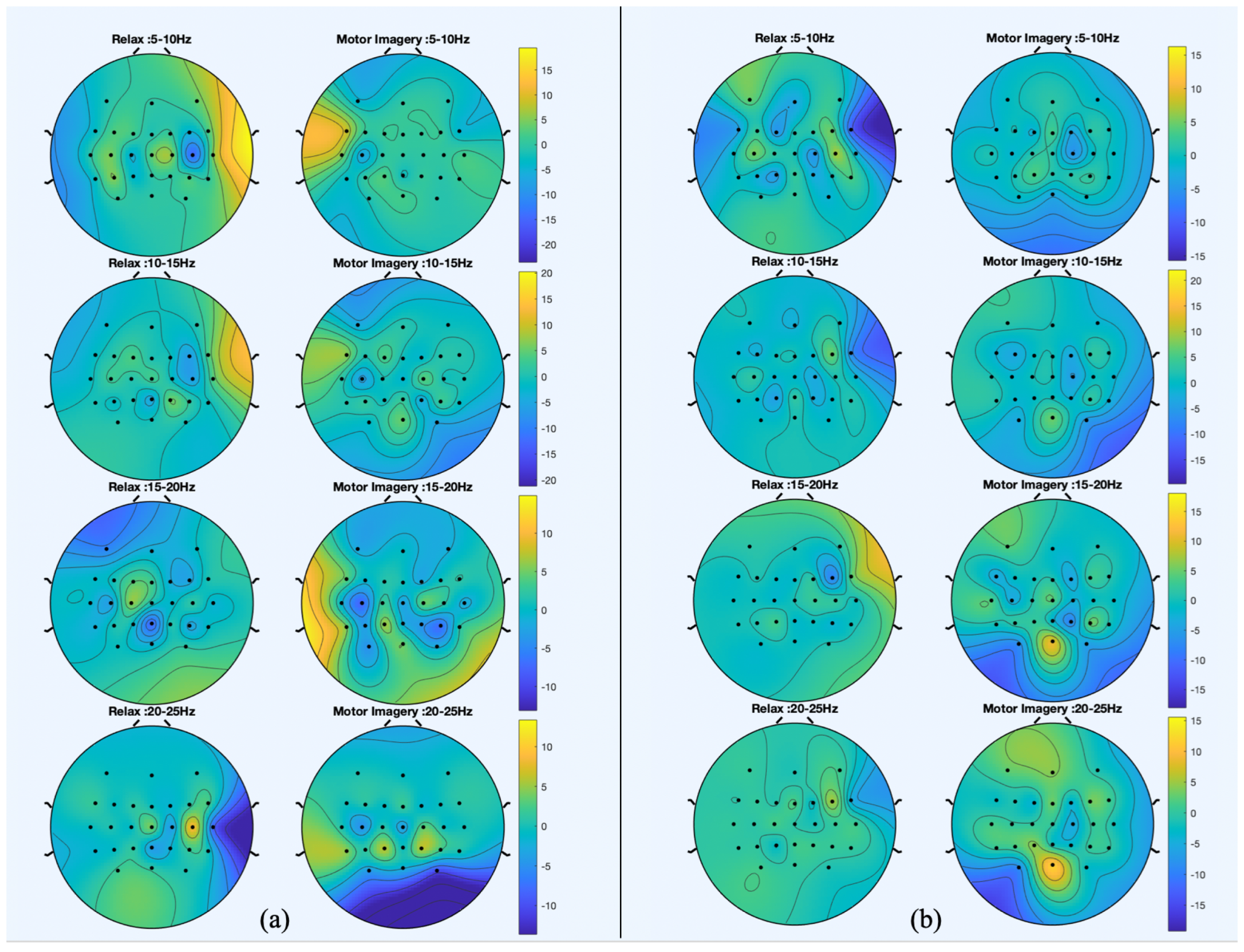

Figure 7 shows the spatial patterns of S3 in the second session. The spatial patterns estimated during trials without movement show that for S1 and S2, electrode FCz seems to have a relevant role in the discrimination of idle state. During MI events, the most significant electrodes for both subjects are peripheral as FC5. However, results from S2 show that in the 5–10 Hz band, C2 and CPz are relevant to the MI of gait. Regarding trials in which participants are walking, the distribution of relevant areas seems scattered for idle state and for MI; peripheral electrodes are also highlighted.

When comparing the spatial patterns of S2 in two different sessions, the main similarities can be found in the stand trials. CPz and Cz are highlighted for the relax class and electrode FC5 seems to be significant for the MI class.

3.1. Training Phase

Table 1. Results from training, subject S1. Trials with open-loop control of the exoskeleton.

| Condition | Paradigm | Session 1 | Session 2 |
|---|---|---|---|
| Stand | %MI | 59.29 ± 10.51 | 69.64 ± 7.62 |
| | %Att | 57.38 ± 9.27 | 60.83 ± 7.58 |
| Gait | %MI | 58.93 ± 11.60 | 75.89 ± 5.41 |
| | %Att | 65.83 ± 8.57 | 69.29 ± 5.04 |

Table 2. Results from training, subject S2. Trials with open-loop control of the exoskeleton.

| Condition | Paradigm | Session 1 | Session 2 | Session 3 | Session 4 | Session 5 |
|---|---|---|---|---|---|---|
| Stand | %MI | 53.32 ± 8.59 | 69.64 ± 8.70 | 65.54 ± 5.12 | 64.20 ± 11.45 | 74.11 ± 6.14 |
| | %Att | 63.95 ± 4.44 | 62.57 ± 8.77 | 58.21 ± 8.76 | 59.52 ± 8.44 | 60.83 ± 5.48 |
| Gait | %MI | 50.26 ± 7.54 | 54.17 ± 8.75 | 62.50 ± 8.91 | 59.82 ± 10.11 | 54.11 ± 12.71 |
| | %Att | 61.05 ± 5.13 | 63.36 ± 2.84 | 61.55 ± 7.21 | 65.71 ± 6.82 | 70.83 ± 3.04 |

Figure 5. Spatial patterns for the session of S1 that best discriminate between motor imagery (MI) and idle state. (a) The spatial patterns from trials in which the participant was standing still and (b) the spatial patterns from trials in which they were walking with the exoskeleton.

Figure 6. Spatial patterns for the session of S2 that best discriminate between motor imagery (MI) and idle state. (a) The spatial patterns from trials in which the participant was standing still and (b) the spatial patterns from trials in which they were walking with the exoskeleton.

Figure 7. Spatial patterns for the fifth session of S2 that best discriminate between motor imagery (MI) and idle state. (a) The spatial patterns from trials in which the participant was standing still and (b) the spatial patterns from trials in which they were walking with the exoskeleton.

3.2. Test Phase

In the test phase, the exoskeleton was controlled by the commands decoded by the BMI, whose classifiers had been trained with the training trials. Table 3, Table 4 and Table 5 summarize the results from closed-loop trials. TPR is 100% in the majority of trials, which means that the exoskeleton was activated at least once during the MI event. The number of false positive activations during idle state ranged from 0 to 2. Regarding %Commands, it improved by 13% from the first to the last session of S2, although the performance in each session was not always superior to that of the previous one. In the last session, the average %Commands for both subjects was 64.50 ± 10.66%.

4. Discussion

Contrary to the findings of our previous work on a BMI-controlled treadmill [19], we found significant differences between open-loop trials in which subjects were standing and trials in which they were walking. It is important to note that walking assisted by an exoskeleton is a more complex task than walking on a treadmill, so subjects must concentrate on it. Consequently, it is more difficult for them to perform other brain tasks such as MI or regressive count. In addition, when comparing the results from closed-loop trials, the average percentage of epochs with correct commands was 64.5% with the exoskeleton and 75.6% with the treadmill. A possible explanation for this contrast could also be related to the complexity of the movement with the exoskeleton.

On the other hand, the attention paradigm showed worse performance than the MI paradigm in open-loop trials, which is consistent with the findings of our previous work [19]. However, in line with our previous work with an exoskeleton [15], this difference is not as evident in closed-loop trials. Therefore, future BMI designs could rely more on the attention paradigm for the activation of the exoskeleton.

While results from the MI paradigm showed an increasing trend throughout sessions, this pattern is not as evident for the attention paradigm. Our results for the MI paradigm are in consonance with the conclusions from [25]. Performing MI is not an intuitive activity for novice participants, and practice could promote the modulation of brain activity patterns and enhance them. Nevertheless, with regard to the attention of the user, the performance does not seem to improve with practice. Attention is something that people train on a daily basis, so this could explain why a few sessions cannot further improve it.

There are not many investigations in the literature that developed BMI based on lower-limb MI without other external stimuli [2] and they are usually based on motion intention [3,6,10]. In addition, the works of [4,26] employed upper-limb MI to control a lower-limb exoskeleton. Reference [26] showed a percentage of correct commands issued every 4.5 s of 66% and [4] of 80.16%, but the BMI was only employed to start the gait and not to stop it. These values can be compared with the %Commands of the present paper. Although superior results are achieved with upper-limb MI, this paradigm cannot be applied to promote neuroplasticity.

In [16], a BMI was presented that employed a combination of MI with eye blinking as a control paradigm, and an accuracy of 86.7% was reported. However, although control mechanisms that employ eye movements have proven to be precise, they lack application from the rehabilitation point of view. In addition, the work of [14] presented a BMI that only controlled the start and maintenance of the gait of a lower-limb exoskeleton and they obtained an average accuracy of 74.4%. In our previous research [15] that also combined the MI and attention paradigms to control an exoskeleton, the percentage of epochs with correct commands issued was 56.77%. Slightly superior results were achieved with the current BMI algorithm.

5. Conclusions

The current research presents a BMI system based on MI and attention paradigms that has been tested to control a lower-limb exoskeleton. Participants performed 2–5 sessions to assess the effect of practice on the performance. Each session was divided into two parts: the training and test phases. First, participants completed trials in which they had to perform certain brain tasks and the exoskeleton was controlled remotely by the laptop with predefined commands. During half of the trials, the exoskeleton was walking, and during the other half, it was completely static. Therefore, contrary to previous works, the brain tasks to discriminate happened under the same conditions. Moreover, this setup can reduce the effect of artifacts on the predictions. The average performance in the last session was 68.44 ± 8.46% for the MI paradigm and 65.45 ± 5.53% for the attention paradigm. The second part of each session consisted of closed-loop controlled trials in which the exoskeleton was commanded by the predictions of the BMI. The BMI worked as a state machine that used different classifiers depending on whether the exoskeleton was static or moving. Training trials were used to train the classifiers corresponding to each state of the state machine. The BMI took a decision every 0.5 s and the average percentage of correct commands chosen was 64.50 ± 10.66% for the last session of both subjects.

Participants did not have any motor impairment, but since the main objective of the system is to promote neurorehabilitation and neuroplasticity, future research will focus on people with motor disabilities.