Self-Supervised Transfer Learning from Natural Images for Sound Classification

Abstract

1. Introduction

- We demonstrate that networks pre-trained on natural images can improve performance on audio-related tasks, provided that a suitable pre-training scheme is used.

- Networks pre-trained through self-supervised learning have similar effects on the performance of audio tasks as those pre-trained through supervised methods.

- When the self-supervised transfer learning scheme was validated on general sound classification datasets (ESC-50, GTZAN, and UrbanSound8K), the classification accuracy improved significantly.

2. Related Works

2.1. Transfer Learning on Natural Image Domain

2.2. Transfer Learning on Audio Domain

2.3. Self-Supervised Learning

3. Method

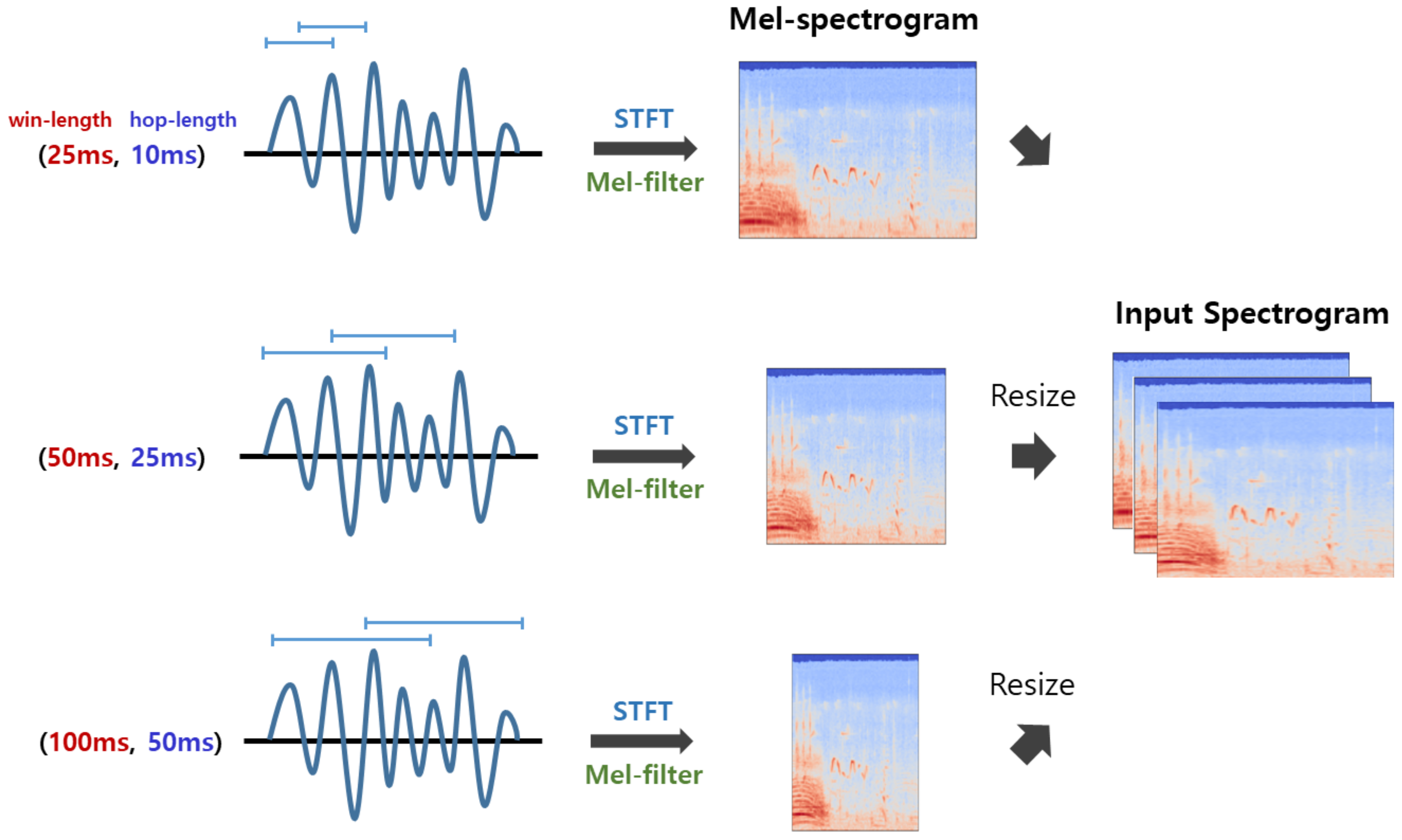

3.1. Data Pre-Processing

3.2. Deep Convolutional Neural Network for Sound Event Detection

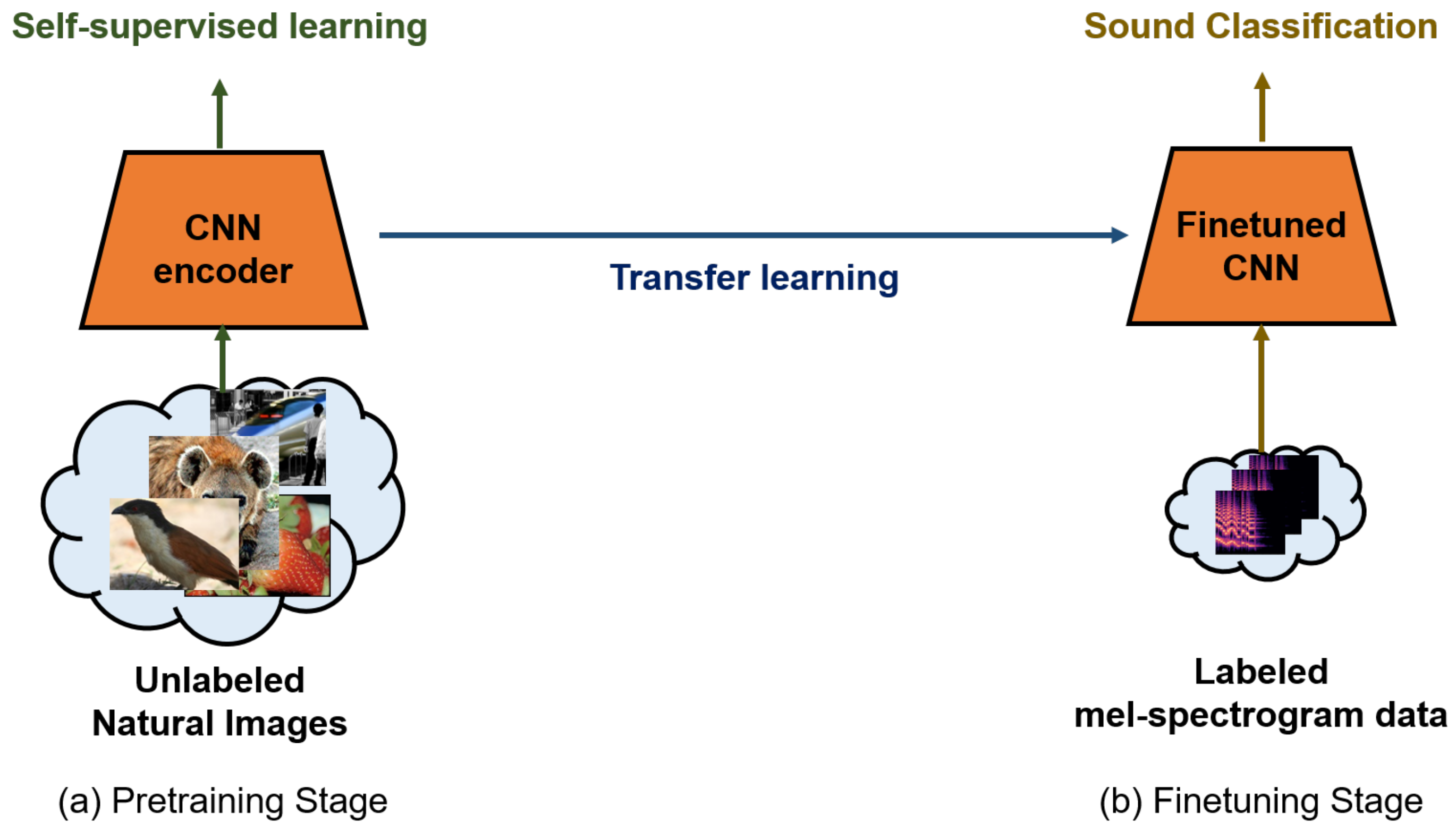

3.3. Self-Supervised Learning for Pre-Training

3.4. Transfer Learning from Natural Images to Audio Domain

3.5. t-Distributed Stochastic Neighbor Embedding Analysis

4. Experiments

4.1. Datasets

- (1) ESC-50 [20]: This dataset comprises 2000 audio clips (5 s each) labeled with 50 classes of environmental sound, such as door knock, dog, and rain. Each class has 40 audio clips, and each clip was sampled at 44.1 kHz.

- (2) UrbanSound8K [22]: This dataset comprises 8732 audio clips of urban sound labeled with 10 classes: air conditioner, car horn, children playing, dog bark, drilling, engine idling, gun shot, jackhammer, siren, and street music. Each class has 800–1000 clips, and the clips were sampled at rates of 16–44.1 kHz. To use the audio samples as input for deep learning, we fixed their length to 4 s by resizing.

- (3) GTZAN [21]: This dataset comprises 1000 music clips labeled with 10 music genre classes. Each class has 100 clips; each clip is 30 s long and was sampled at 22.05 kHz.
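The fixed 4 s length used for UrbanSound8K can be illustrated with a short sketch. The text does not say how the resizing was done, so the version below assumes the simplest option: truncate clips that are too long and zero-pad clips that are too short. The function name `fix_length` and its parameters are hypothetical and are not taken from the paper.

```python
import numpy as np


def fix_length(waveform: np.ndarray, sample_rate: int, seconds: float = 4.0) -> np.ndarray:
    """Return a copy of a mono waveform that is exactly `seconds` long.

    Assumption: truncation plus trailing zero-padding. The paper only says
    the clips were "resized" to 4 s, so this is one plausible reading.
    """
    target = int(round(sample_rate * seconds))
    if waveform.shape[0] >= target:
        # Clip is long enough: keep the first `target` samples.
        return waveform[:target].copy()
    # Clip is too short: copy it into a zero buffer of the target length.
    padded = np.zeros(target, dtype=waveform.dtype)
    padded[: waveform.shape[0]] = waveform
    return padded


if __name__ == "__main__":
    short_clip = np.ones(22050, dtype=np.float32)      # 1 s at 22.05 kHz
    long_clip = np.ones(44100 * 6, dtype=np.float32)   # 6 s at 44.1 kHz
    print(fix_length(short_clip, 22050).shape)
    print(fix_length(long_clip, 44100).shape)
```

Because the clips have different sampling rates, the target length is computed per clip from its own sample rate. A pipeline that needs a single input size would also have to resample to a common rate, which this sketch leaves out.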

4.2. Experimental Settings

4.3. Evaluation Results on GTZAN, ESC-50, and UrbanSound8K

4.4. Linear Evaluation of Self-Supervised Learning Model in Audio Domain

4.5. t-SNE Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Sample Availability

References

1. Schuller, B.; Rigoll, G.; Lang, M. Hidden Markov model-based speech emotion recognition. In Proceedings of the 2003 IEEE International Conference on Acoustics, Speech, and Signal Processing, Hong Kong, China, 6–10 April 2003; Volume 2, p. II-1.

2. Nwe, T.L.; Foo, S.W.; De Silva, L.C. Speech emotion recognition using hidden Markov models. Speech Commun. 2003, 41, 603–623.

3. Sohn, J.; Kim, N.S.; Sung, W. A statistical model-based voice activity detection. IEEE Signal Process. Lett. 1999, 6, 1–3.

4. Chang, J.H.; Kim, N.S.; Mitra, S.K. Voice activity detection based on multiple statistical models. IEEE Trans. Signal Process. 2006, 54, 1965–1976.

5. Sehgal, A.; Kehtarnavaz, N. A convolutional neural network smartphone app for real-time voice activity detection. IEEE Access 2018, 6, 9017–9026.

6. Chang, S.Y.; Li, B.; Simko, G.; Sainath, T.N.; Tripathi, A.; van den Oord, A.; Vinyals, O. Temporal modeling using dilated convolution and gating for voice-activity-detection. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 5549–5553.

7. Ozer, I.; Ozer, Z.; Findik, O. Noise robust sound event classification with convolutional neural network. Neurocomputing 2018, 272, 505–512.

8. Fonseca, E.; Plakal, M.; Ellis, D.P.; Font, F.; Favory, X.; Serra, X. Learning sound event classifiers from web audio with noisy labels. In Proceedings of the ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 21–25.

9. Dong, X.; Yin, B.; Cong, Y.; Du, Z.; Huang, X. Environment sound event classification with a two-stream convolutional neural network. IEEE Access 2020, 8, 125714–125721.

10. Mirsamadi, S.; Barsoum, E.; Zhang, C. Automatic speech emotion recognition using recurrent neural networks with local attention. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 2227–2231.

11. Zhao, J.; Mao, X.; Chen, L. Speech emotion recognition using deep 1D & 2D CNN LSTM networks. Biomed. Signal Process. Control 2019, 47, 312–323.

12. Yoon, S.; Byun, S.; Jung, K. Multimodal speech emotion recognition using audio and text. In Proceedings of the 2018 IEEE Spoken Language Technology Workshop (SLT), Athens, Greece, 18–21 December 2018; pp. 112–118.

13. Yoshimura, T.; Hayashi, T.; Takeda, K.; Watanabe, S. End-to-end automatic speech recognition integrated with ctc-based voice activity detection. In Proceedings of the ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 6999–7003.

14. Palanisamy, K.; Singhania, D.; Yao, A. Rethinking cnn models for audio classification. arXiv 2020, arXiv:2007.11154.

15. Tajbakhsh, N.; Shin, J.Y.; Gurudu, S.R.; Hurst, R.T.; Kendall, C.B.; Gotway, M.B.; Liang, J. Convolutional neural networks for medical image analysis: Full training or fine tuning? IEEE Trans. Med. Imaging 2016, 35, 1299–1312.

16. Marmanis, D.; Datcu, M.; Esch, T.; Stilla, U. Deep learning earth observation classification using ImageNet pretrained networks. IEEE Geosci. Remote Sens. Lett. 2015, 13, 105–109.

17. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

18. Guérin, J.; Gibaru, O.; Thiery, S.; Nyiri, E. CNN features are also great at unsupervised classification. arXiv 2017, arXiv:1707.01700.

19. Guérin, J.; Boots, B. Improving image clustering with multiple pretrained cnn feature extractors. arXiv 2018, arXiv:1807.07760.

20. Piczak, K.J. ESC: Dataset for Environmental Sound Classification. In Proceedings of the 23rd ACM International Conference on Multimedia, Brisbane, Australia, 26–30 October 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 1015–1018.

21. Tzanetakis, G. GTZAN Dataset. Available online: http://marsyas.info/downloads/datasets.html (accessed on 18 February 2021).

22. Salamon, J.; Jacoby, C.; Bello, J.P. A dataset and taxonomy for urban sound research. In Proceedings of the 22nd ACM international conference on Multimedia, Mountain View, CA, USA, 18–19 June 2014; pp. 1041–1044.

23. Hadsell, R.; Chopra, S.; LeCun, Y. Dimensionality reduction by learning an invariant mapping. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), New York, NY, USA, 17–22 June 2006; Volume 2, pp. 1735–1742.

24. Dosovitskiy, A.; Springenberg, J.T.; Riedmiller, M.; Brox, T. Discriminative Unsupervised Feature Learning with Convolutional Neural Networks. arXiv 2014, arXiv:1406.6909v1.

25. Oord, A.V.D.; Li, Y.; Vinyals, O. Representation Learning with Contrastive Predictive Coding. arXiv 2018, arXiv:1807.03748.

26. Bachman, P.; Hjelm, R.D.; Buchwalter, W. Learning Representations by Maximizing Mutual Information Across Views. In Advances in Neural Information Processing Systems; Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2019; Volume 32.

27. Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A Simple Framework for Contrastive Learning of Visual Representations. arXiv 2020, arXiv:cs.LG/2002.05709.

28. Chen, T.; Kornblith, S.; Swersky, K.; Norouzi, M.; Hinton, G. Big Self-Supervised Models are Strong Semi-Supervised Learners. arXiv 2020, arXiv:cs.LG/2006.10029.

29. Choi, K.; Fazekas, G.; Sandler, M.; Cho, K. Transfer learning for music classification and regression tasks. In Proceedings of the 18th International Society for Music Information Retrieval Conference, Suzhou, China, 23–27 October 2017; pp. 141–149.

30. Kong, Q.; Cao, Y.; Iqbal, T.; Wang, Y.; Wang, W.; Plumbley, M.D. Panns: Large-scale pretrained audio neural networks for audio pattern recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 2020, 28, 2880–2894.

31. Lee, J.; Nam, J. Multi-Level and Multi-Scale Feature Aggregation Using Pretrained Convolutional Neural Networks for Music Auto-Tagging. IEEE Signal Process. Lett. 2017, 24, 1208–1212.

32. Doersch, C.; Gupta, A.; Efros, A.A. Unsupervised visual representation learning by context prediction. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1422–1430.

33. Zhang, R.; Isola, P.; Efros, A.A. Colorful image colorization. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 649–666.

34. Noroozi, M.; Favaro, P. Unsupervised learning of visual representations by solving jigsaw puzzles. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 69–84.

35. Gidaris, S.; Singh, P.; Komodakis, N. Unsupervised representation learning by predicting image rotations. arXiv 2018, arXiv:1803.07728.

36. Koch, G.R. Siamese Neural Networks for One-Shot Image Recognition. In Proceedings of the ICML Deep Learning Workshop, Lille, France, 6–11 July 2015.

37. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

38. Shin, S.; Lee, Y.; Kim, S.; Choi, S.; Gwan Kim, J.; Lee, K. Rapid and Non-Destructive Spectroscopic Method for Classifying Beef Freshness using a Deep Spectral Network Fused with Myoglobin Information. Food Chem. 2021, 352, 129329.

39. Van der Maaten, L.; Hinton, G. Visualizing Data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

| Model | GTZAN accuracy (%) | ESC-50 accuracy (%) | UrbanSound8K accuracy (%) |
|---|---|---|---|
| Vanilla | 86.50 | 78.25 | 76.11 |
| Pre-trained (supervised) [14] | 90.50 | 90.55 | 83.34 |
| Pre-trained (self-supervised) (Ours) | 90.50 | 90.15 | 83.30 |

| Model | GTZAN accuracy (%) | ESC-50 accuracy (%) | UrbanSound8K accuracy (%) |
|---|---|---|---|
| Vanilla | 86.50 | 78.25 | 76.11 |
| Linear evaluation | 77.00 | 63.40 | 74.24 |
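To make the size of the differences in the two accuracy tables easier to read, the sketch below computes each model's gain over the vanilla baseline in percentage points. The accuracy values are copied from the tables; the dictionary layout and the helper name `gain_over_vanilla` are illustrative assumptions, not part of the original work.

```python
# Accuracy (%) values copied from the two tables above.
ACCURACY = {
    "Vanilla": {"GTZAN": 86.50, "ESC-50": 78.25, "UrbanSound8K": 76.11},
    "Pre-trained (supervised)": {"GTZAN": 90.50, "ESC-50": 90.55, "UrbanSound8K": 83.34},
    "Pre-trained (self-supervised)": {"GTZAN": 90.50, "ESC-50": 90.15, "UrbanSound8K": 83.30},
    "Linear evaluation": {"GTZAN": 77.00, "ESC-50": 63.40, "UrbanSound8K": 74.24},
}


def gain_over_vanilla(model: str) -> dict:
    """Return the accuracy difference (percentage points) against the vanilla model."""
    baseline = ACCURACY["Vanilla"]
    return {
        dataset: round(ACCURACY[model][dataset] - baseline[dataset], 2)
        for dataset in baseline
    }


if __name__ == "__main__":
    for name in ACCURACY:
        if name != "Vanilla":
            print(name, gain_over_vanilla(name))
```

On these numbers, the self-supervised model gains 4.00, 11.90, and 7.19 points over the vanilla network on GTZAN, ESC-50, and UrbanSound8K, respectively, and stays within 0.4 points of the supervised model on every dataset. The linear evaluation falls below the vanilla baseline on all three.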


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Shin, S.; Kim, J.; Yu, Y.; Lee, S.; Lee, K. Self-Supervised Transfer Learning from Natural Images for Sound Classification. Appl. Sci. 2021, 11, 3043. https://doi.org/10.3390/app11073043
