Smart Rings vs. Smartwatches: Utilizing Motion Sensors for Gesture Recognition

Abstract

1. Introduction

1.1. Overview

1.2. Motivation

1.3. Scope and Focus

1.4. Goals and Research Focus

2. Related Work

2.1. Finger-Mounted Systems

2.2. Wrist-Mounted Systems

2.3. Discussion

3. Approach and Methodology

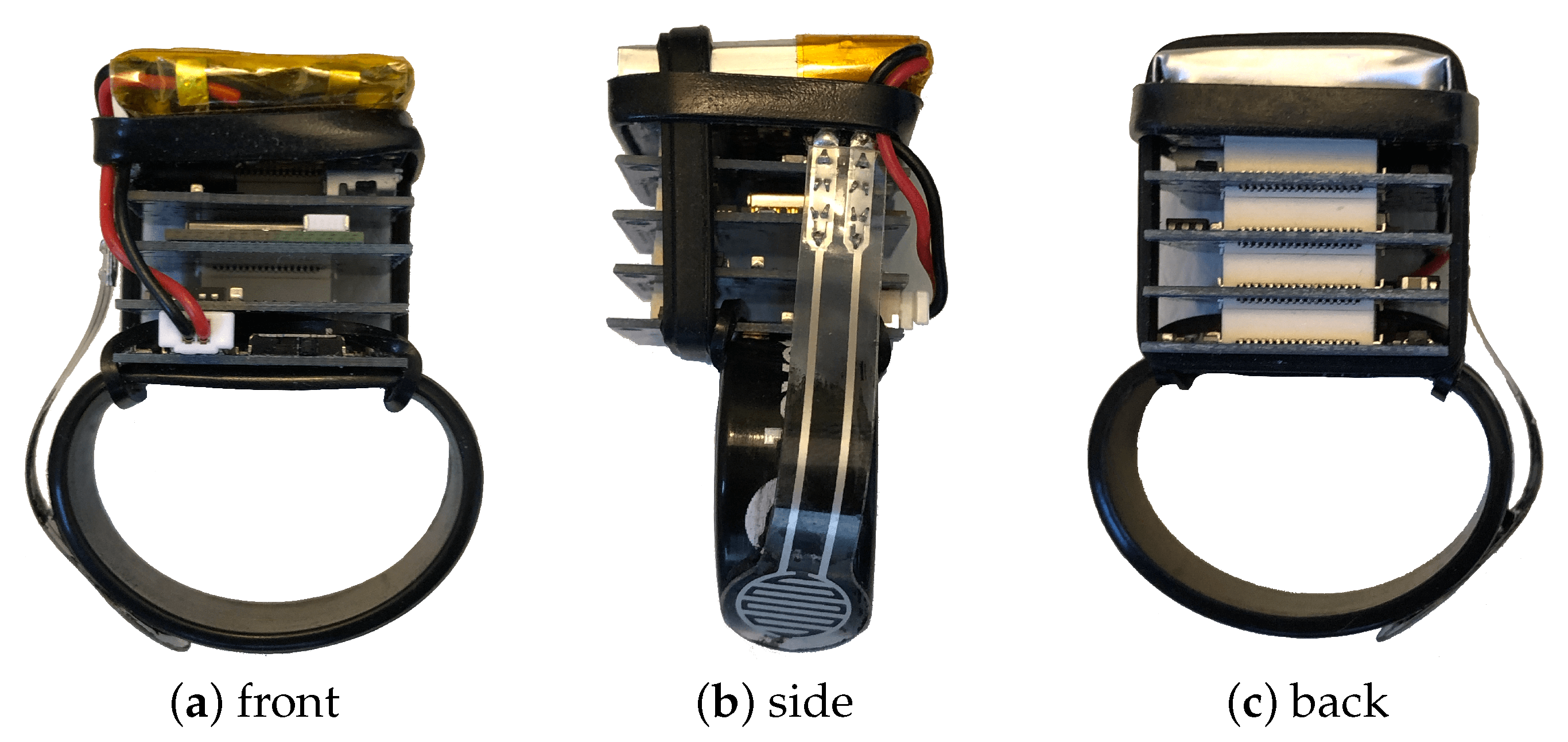

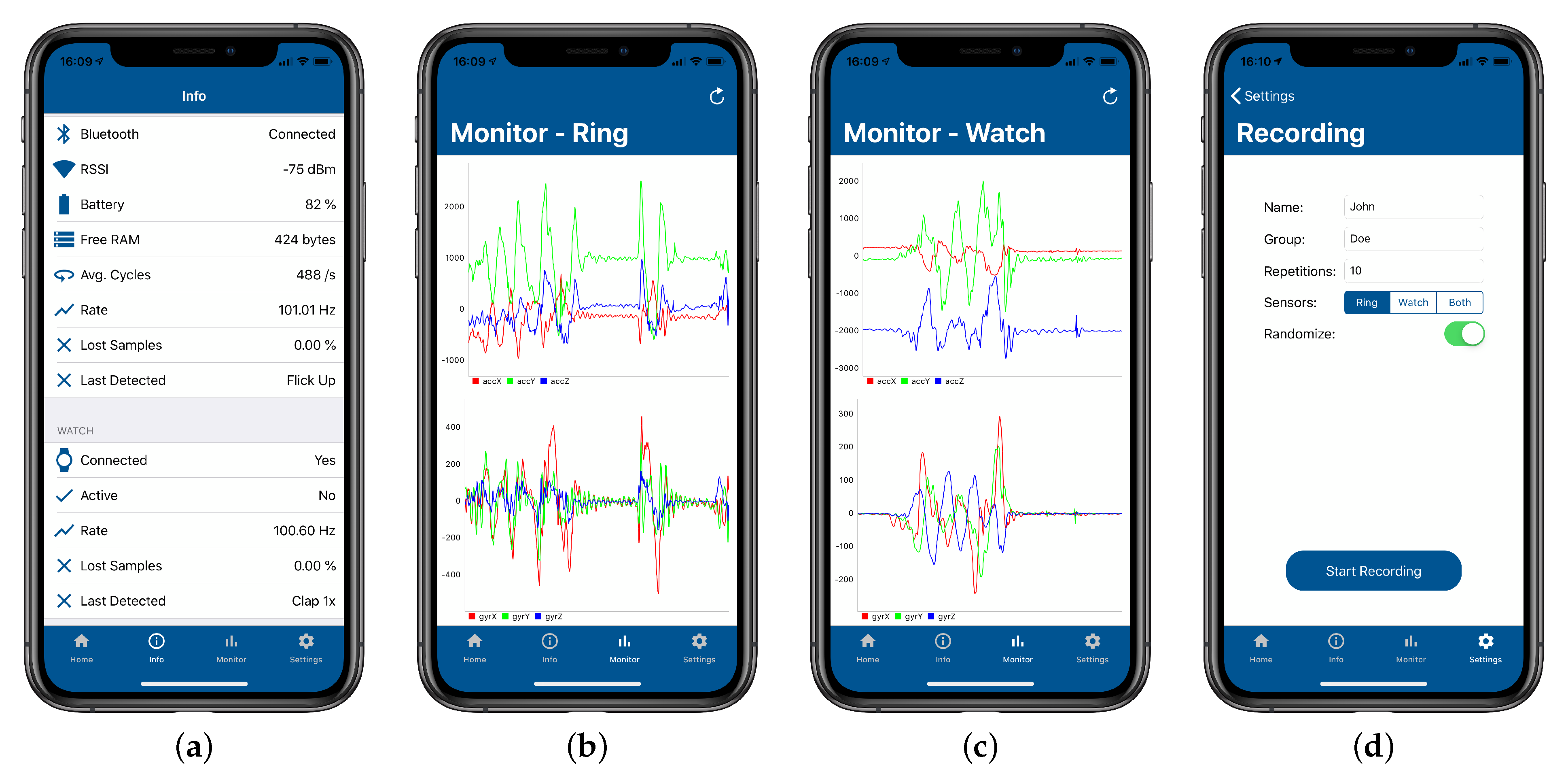

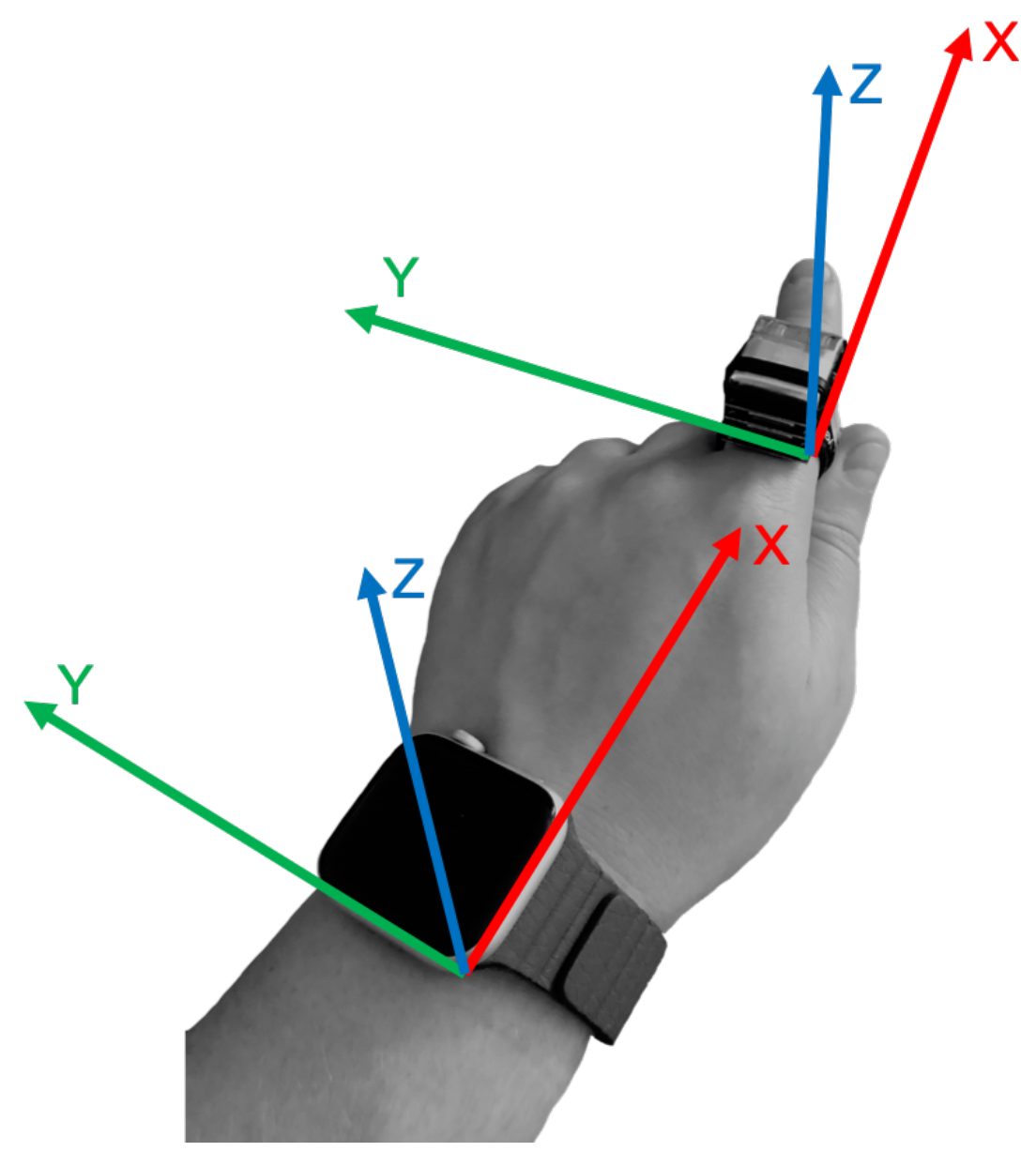

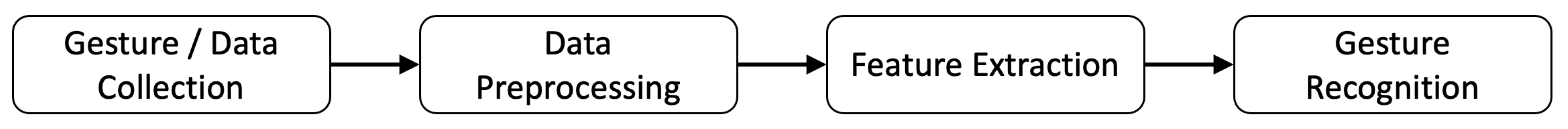

3.1. System Overview

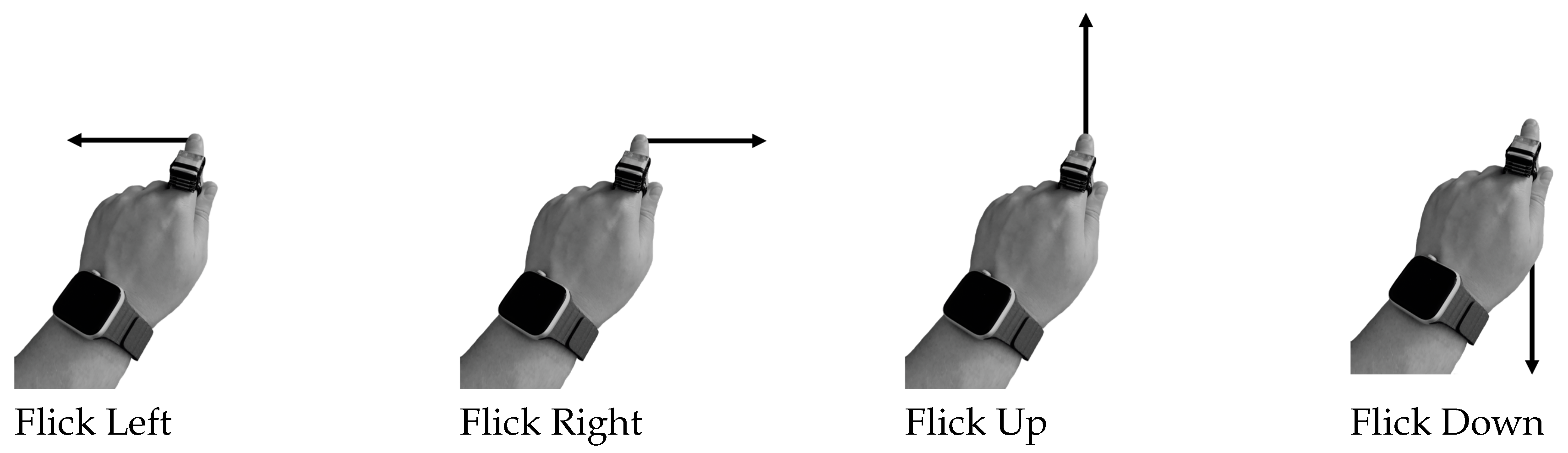

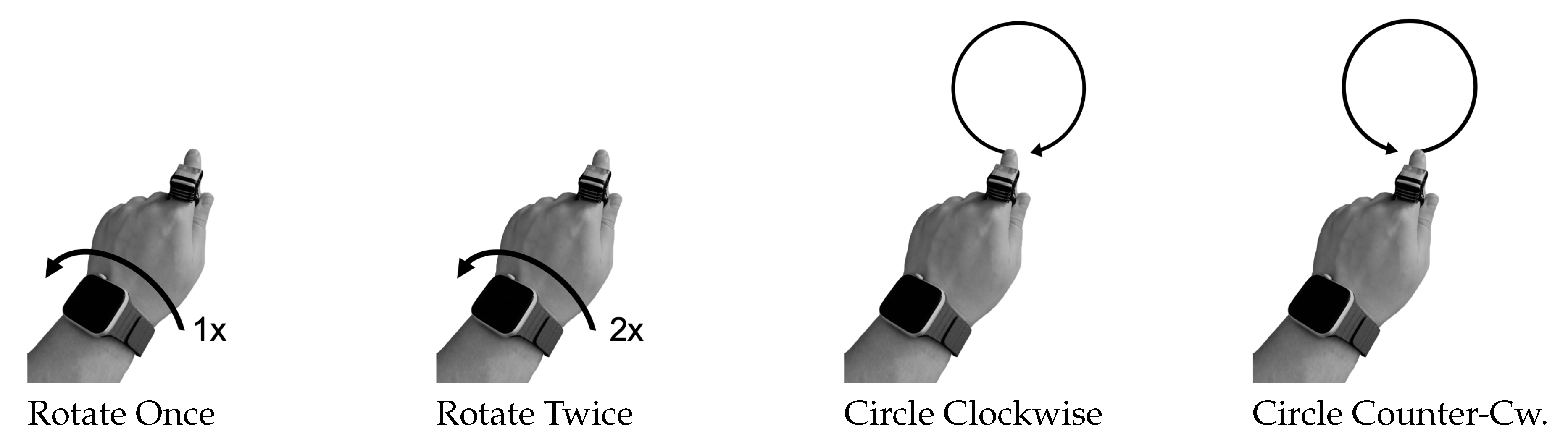

3.2. Relevant Gestures

3.3. Algorithmic Methodology

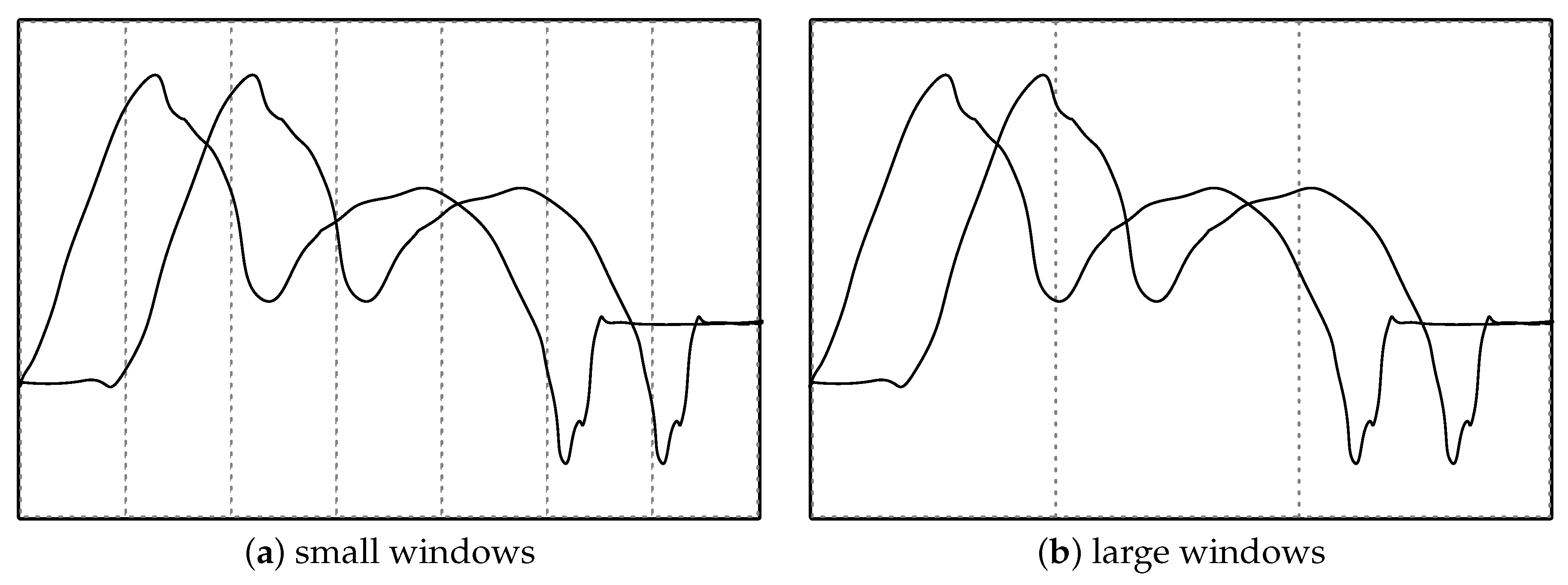

3.3.1. Data Analysis and Preprocessing

3.3.2. Feature Derivation and Selection

3.3.3. Machine Learning Models

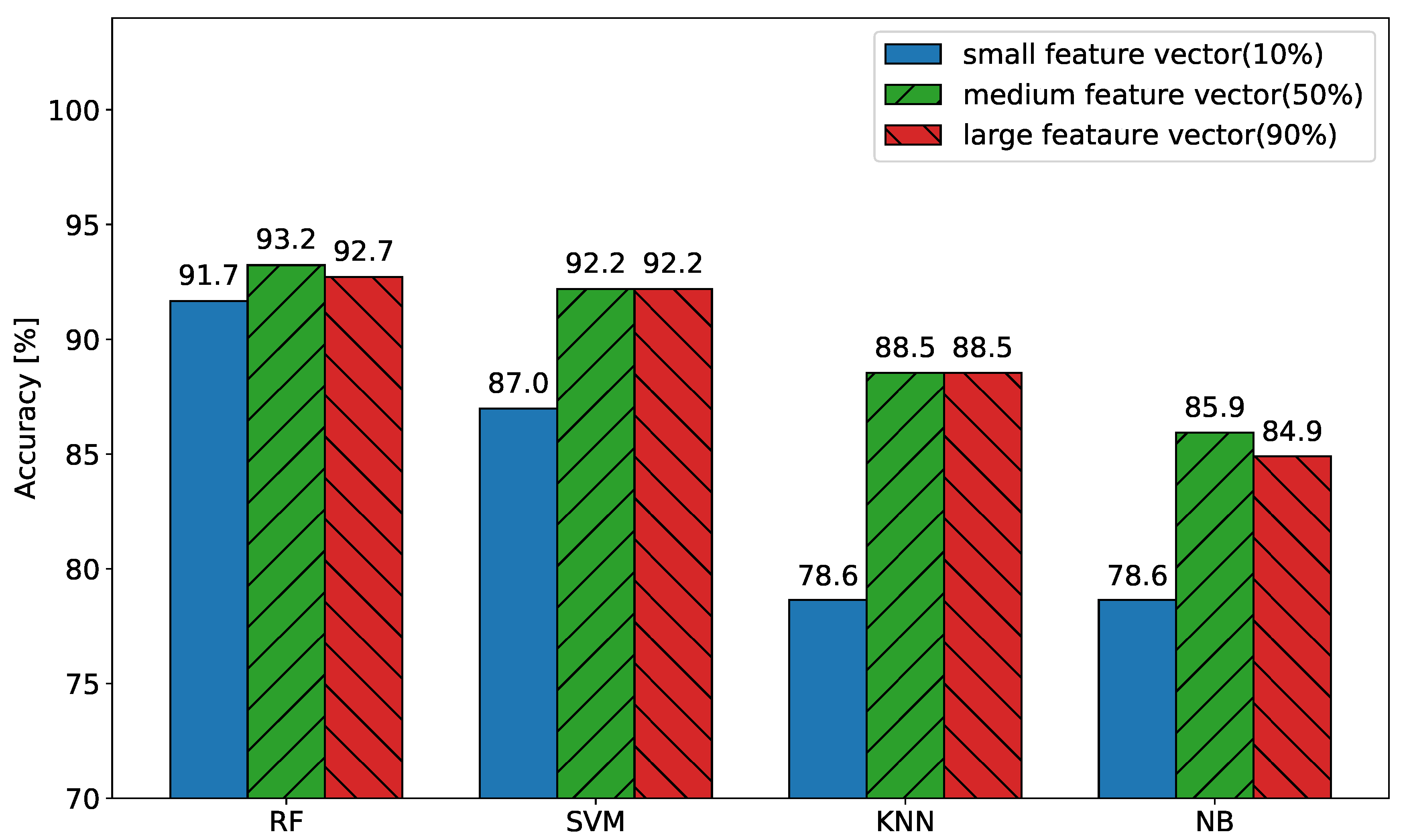

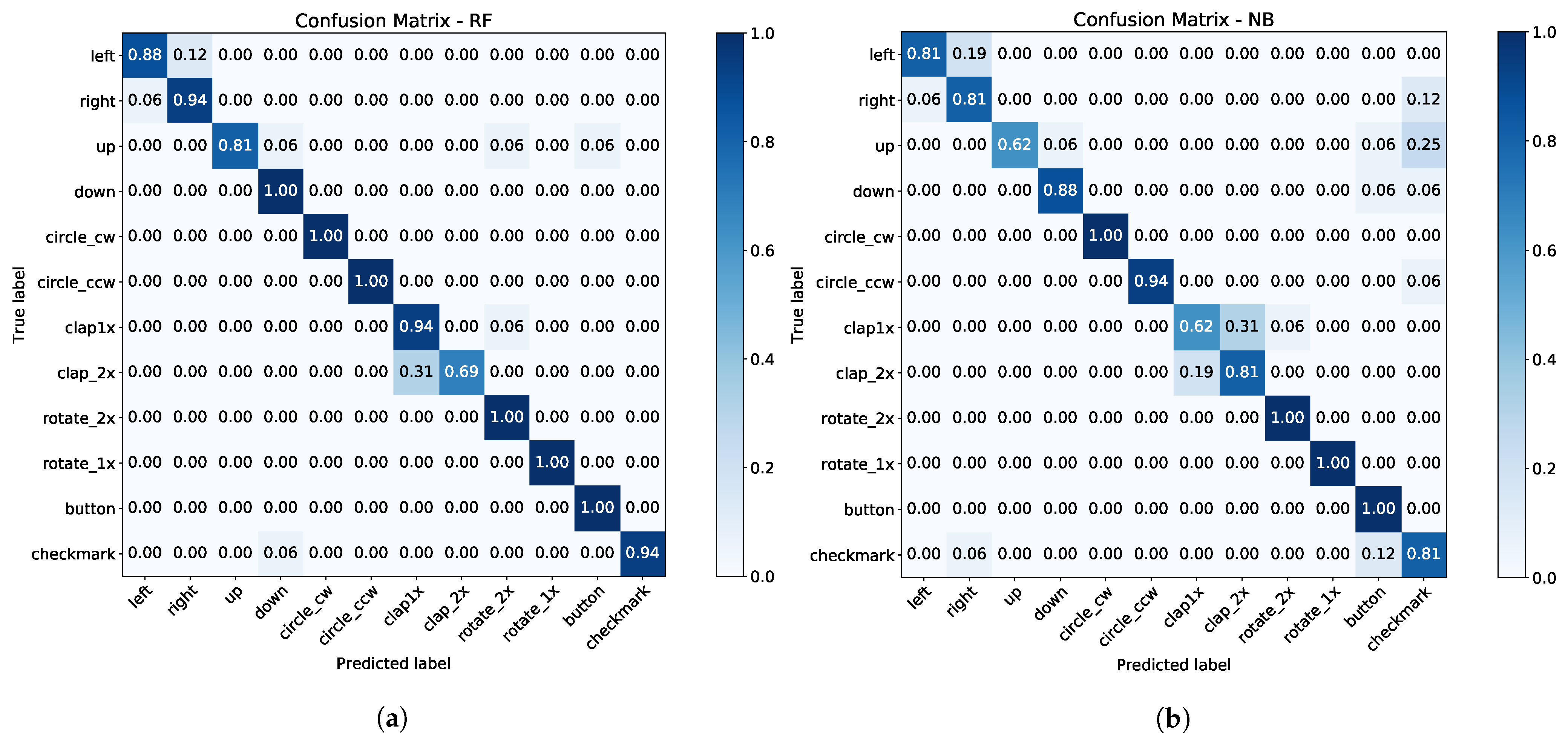

- Random forest (RF) [27]: an ensemble of multiple decision trees; it can be tuned with various hyperparameters such as the number of trees, the depth of the trees, and the number of samples per leaf.

- Radial support vector machine (SVM) [28]: based on hyperplanes that separate the different classes in a multi-dimensional feature space; the radial basis function kernel allows non-linear class boundaries.

- k-nearest neighbor (KNN) [29]: based on a distance measure between a feature vector and its k nearest feature vectors (i.e., the neighbors), whose labels determine the predicted class.

- Gaussian naive Bayes (NB) [30]: based on Bayes' theorem for predicting class probabilities, with each feature modeled as a Gaussian distribution per class.
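The distance-based vote of KNN and the Bayes-rule scoring of Gaussian NB described in the list above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the implementation used in the study: the toy feature vectors, the gesture labels `tap` and `swipe`, and the function names `knn_predict`, `gnb_fit`, and `gnb_predict` are all hypothetical.

```python
import math
from collections import Counter, defaultdict

# Hypothetical toy data: each sample is a 2-D feature vector
# (for example, two statistics of a motion-sensor window) plus a gesture label.
TRAIN = [
    ((0.1, 0.2), "tap"), ((0.2, 0.1), "tap"), ((0.0, 0.3), "tap"),
    ((1.0, 1.1), "swipe"), ((0.9, 1.2), "swipe"), ((1.1, 0.9), "swipe"),
]


def knn_predict(train, x, k=3):
    """Majority vote among the k training vectors closest to x (Euclidean)."""
    nearest = sorted(train, key=lambda sample: math.dist(sample[0], x))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


def gnb_fit(train, eps=1e-9):
    """Estimate the prior, feature means, and feature variances per class."""
    by_class = defaultdict(list)
    for features, label in train:
        by_class[label].append(features)
    model = {}
    for label, rows in by_class.items():
        n = len(rows)
        columns = list(zip(*rows))
        means = [sum(col) / n for col in columns]
        # eps keeps the variance strictly positive for constant features.
        variances = [
            sum((v - m) ** 2 for v in col) / n + eps
            for col, m in zip(columns, means)
        ]
        model[label] = (n / len(train), means, variances)
    return model


def gnb_predict(model, x):
    """Return the class with the highest log posterior under Bayes' theorem."""
    def log_posterior(params):
        prior, means, variances = params
        log_likelihood = sum(
            -0.5 * math.log(2 * math.pi * var) - (xi - m) ** 2 / (2 * var)
            for xi, m, var in zip(x, means, variances)
        )
        return math.log(prior) + log_likelihood

    return max(model, key=lambda label: log_posterior(model[label]))


if __name__ == "__main__":
    model = gnb_fit(TRAIN)
    for query in [(0.15, 0.15), (1.0, 1.0)]:
        print(query, knn_predict(TRAIN, query), gnb_predict(model, query))
```

Both functions take the same feature vectors, so swapping one classifier for the other only changes the prediction call. A real pipeline would more likely rely on an established machine learning library than on hand-written classifiers.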

3.3.4. Experiment and Data Recording

4. Evaluation and Results

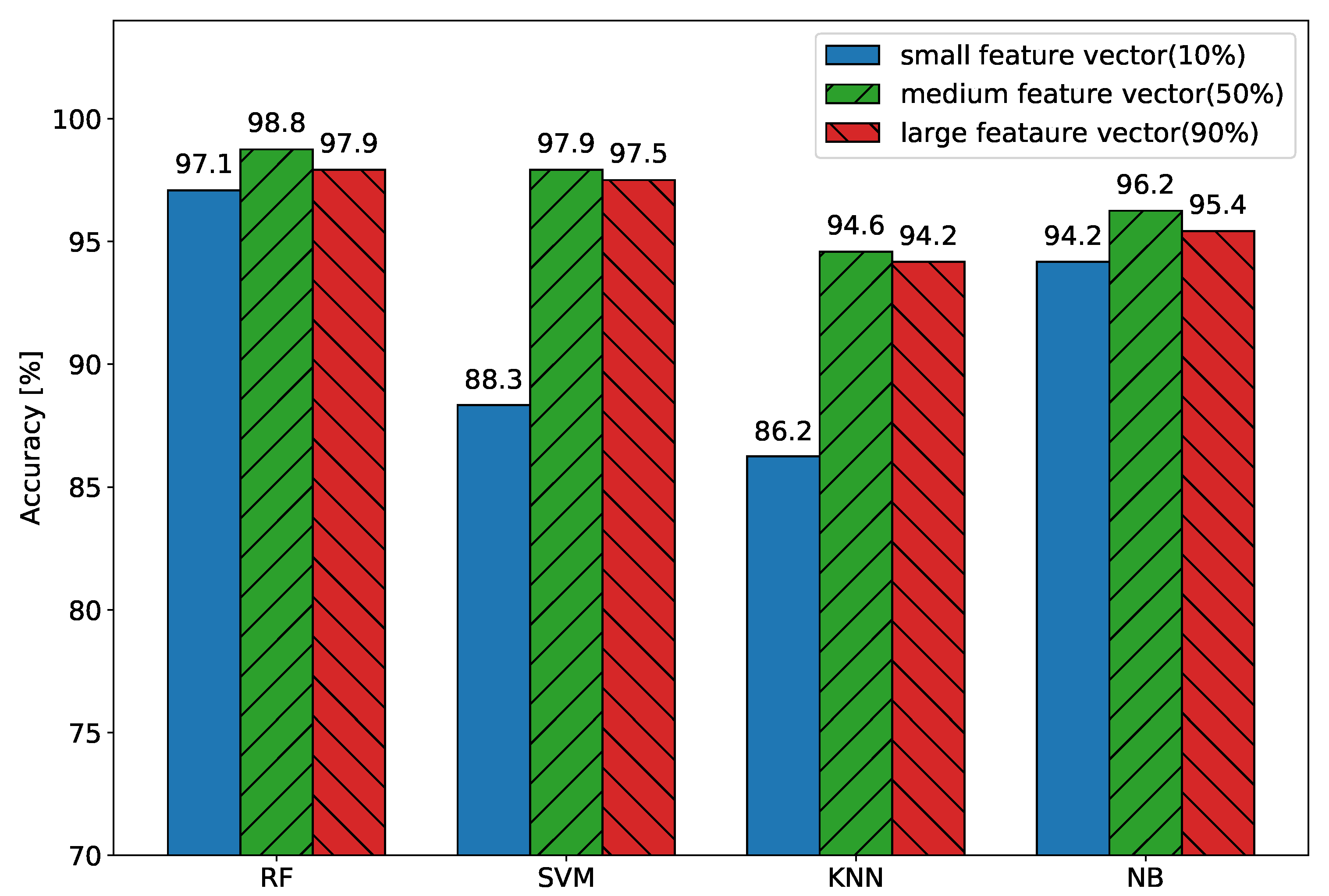

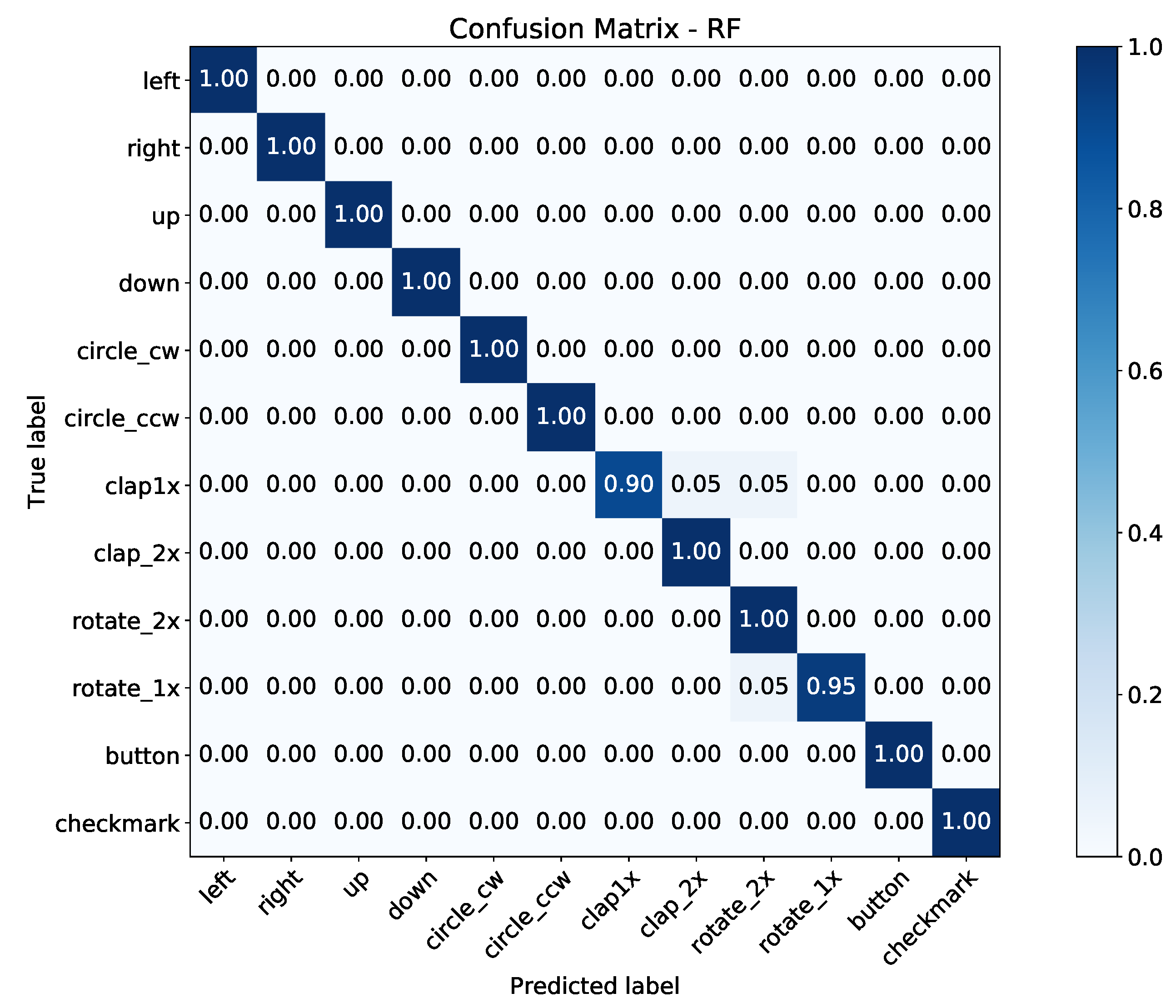

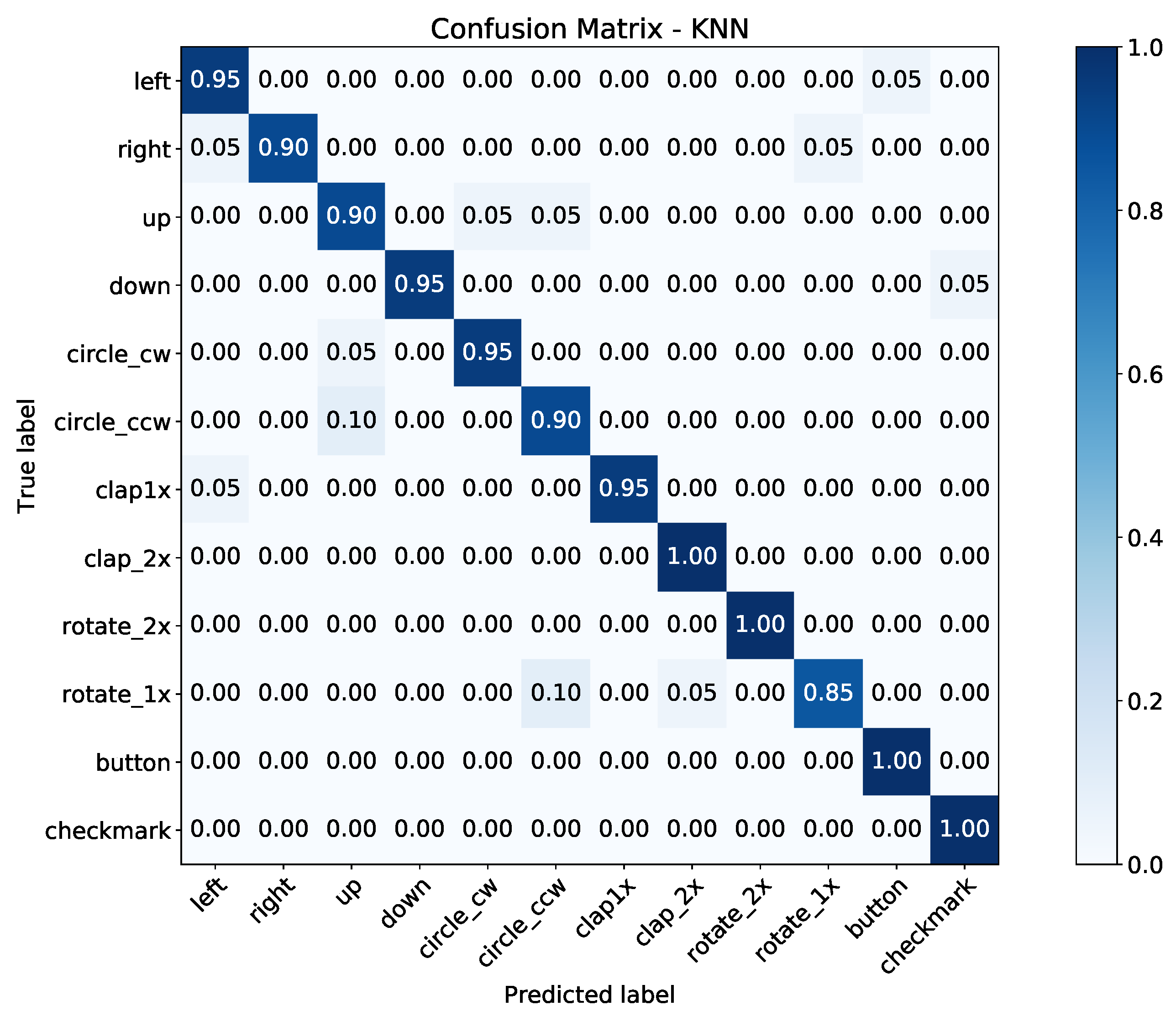

4.1. Watch Session Results

4.1.1. Smartwatch Observations

4.1.2. Smart Ring Observations

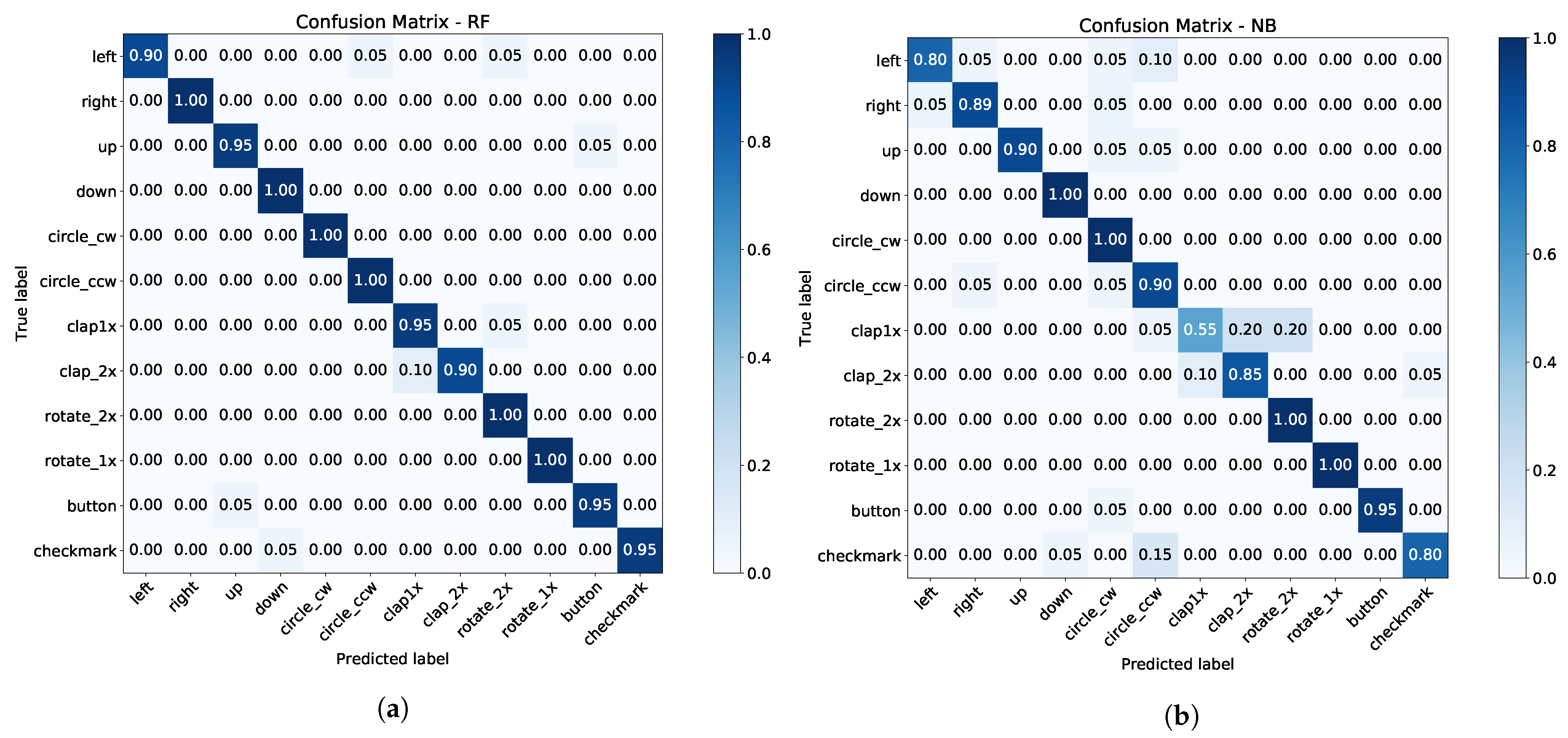

4.2. Ring Session Results

Smart Ring Observations

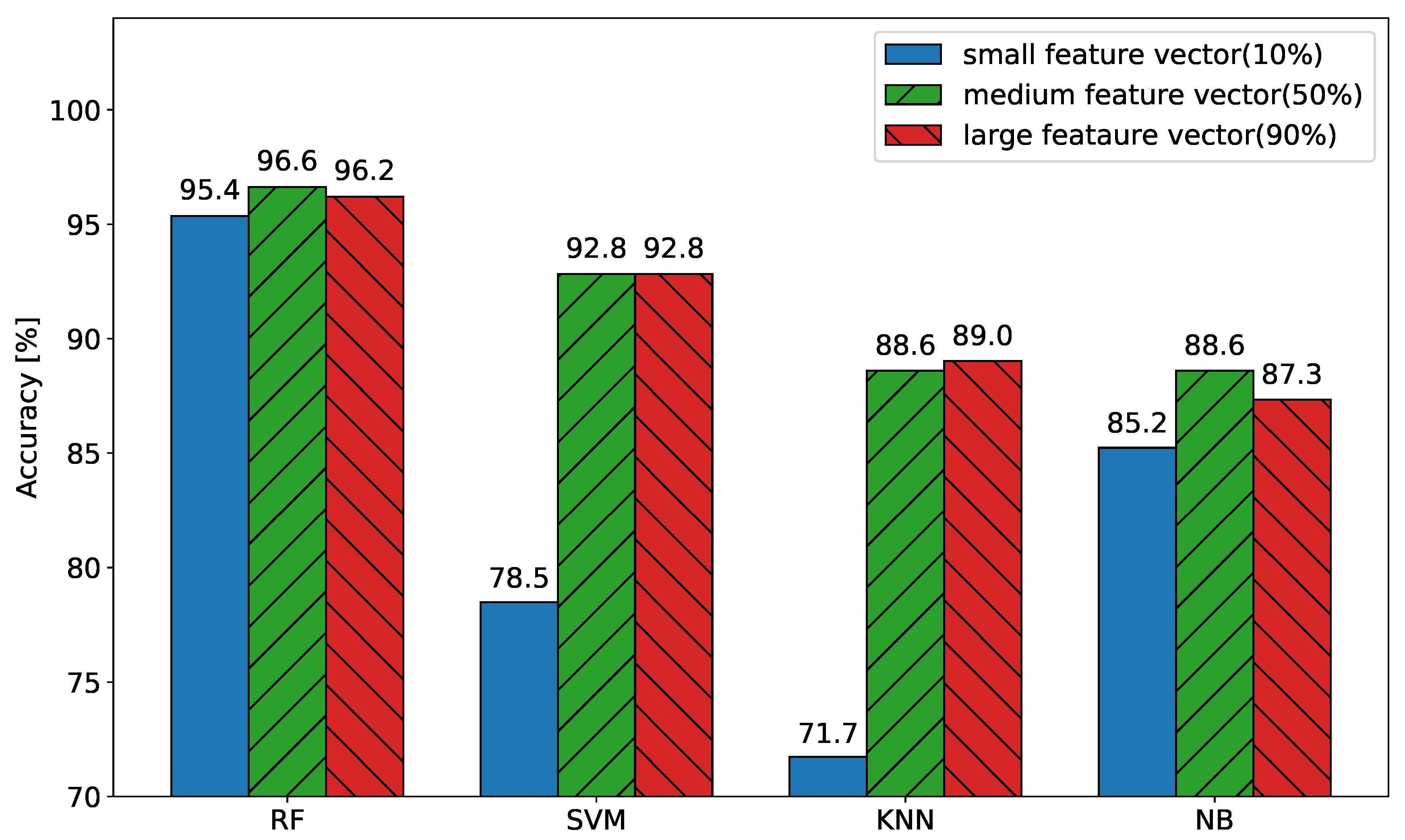

4.3. Evaluation

4.3.1. Activation Mechanism–Independent

4.3.2. Activation Mechanism–Dependent

4.4. Summary

5. Summary and Outlook

5.1. Conclusions

5.2. Outlook

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

- Weiser, M. The computer for the 21st Century. IEEE Pervasive Comput. 2002, 1, 19–25.

- Honan, M. Apple Unveils iPhone. Macworld, 9 January 2007.

- Kurz, M.; Hölzl, G.; Ferscha, A. Dynamic adaptation of opportunistic sensor configurations for continuous and accurate activity recognition. In Proceedings of the Fourth International Conference on Adaptive and Self-Adaptive Systems and Applications (ADAPTIVE2012), Nice, France, 22–27 July 2012.

- Roggen, D.; Calatroni, A.; Rossi, M.; Holleczek, T.; Förster, K.; Tröster, G.; Lukowicz, P.; Bannach, D.; Pirkl, G.; Wagner, F.; et al. Walk-through the OPPORTUNITY dataset for activity recognition in sensor rich environments. In Proceedings of the 8th International Conference on Pervasive Computing (Pervasive 2010), Helsinki, Finland, 17–20 May 2010.

- Roggen, D.; Calatroni, A.; Rossi, M.; Holleczek, T.; Förster, K.; Tröster, G.; Lukowicz, P.; Bannach, D.; Pirkl, G.; Ferscha, A.; et al. Collecting complex activity datasets in highly rich networked sensor environments. In Proceedings of the 2010 Seventh International Conference on Networked Sensing Systems (INSS), Kassel, Germany, 15–18 June 2010; pp. 233–240.

- Rupprecht, F.A.; Ebert, A.; Schneider, A.; Hamann, B. Virtual Reality Meets Smartwatch: Intuitive, Natural, and Multi-Modal Interaction. In Proceedings of the 2017 CHI Conference Extended Abstracts on Human Factors in Computing Systems; ACM: New York, NY, USA, 2017; pp. 2884–2890.

- Bui, T.D.; Nguyen, L.T. Recognizing postures in Vietnamese sign language with MEMS accelerometers. IEEE Sens. J. 2007, 7, 707–712.

- Zhou, S.; Dong, Z.; Li, W.J.; Kwong, C.P. Hand-written character recognition using MEMS motion sensing technology. In IEEE/ASME International Conference on Advanced Intelligent Mechatronics; AIM: Hong Kong, China, 2008; pp. 1418–1423.

- Porzi, L.; Messelodi, S.; Modena, C.M.; Ricci, E. A smart watch-based gesture recognition system for assisting people with visual impairments. In Proceedings of the 3rd ACM International Workshop on Interactive Multimedia on Mobile & Portable Devices; ACM: New York, NY, USA, 2013; pp. 19–24.

- Card, S.K.; Mackinlay, J.D.; Robertson, G.G. A morphological analysis of the design space of input devices. ACM Trans. Inf. Syst. 2002, 9, 99–122.

- Roshandel, M.; Munjal, A.; Moghadam, P.; Tajik, S.; Ketabdar, H. Multi-sensor based gestures recognition with a smart finger ring. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Kurosu, M., Ed.; Springer International Publishing: Cham, Switzerland, 2014; Volume 8511, pp. 316–324.

- Xie, R.; Sun, X.; Xia, X.; Cao, J. Similarity matching-based extensible hand gesture recognition. IEEE Sens. J. 2015, 15, 3475–3483.

- Jing, L.; Zhou, Y.; Cheng, Z.; Wang, J. A recognition method for one-stroke finger gestures using a MEMS 3D accelerometer. IEICE Trans. Inf. Syst. 2011, E94-D, 1062–1072.

- Zhu, C.; Sheng, W. Wearable sensor-based hand gesture and daily activity recognition for robot-assisted living. IEEE Trans. Syst. Man Cybernet. Part A Syst. Hum. 2011, 41, 569–573.

- Mace, D.; Gao, W.; Coskun, A. Accelerometer-based hand gesture recognition using feature weighted naïve bayesian classifiers and dynamic time warping. In Proceedings of the Companion Publication of the 2013 International Conference on Intelligent User Interfaces Companion; ACM: New York, NY, USA, 2013; p. 83.

- Xu, C.; Pathak, P.H.; Mohapatra, P. Finger-writing with Smartwatch. In Proceedings of the 16th International Workshop on Mobile Computing Systems and Applications—HotMobile ’15; ACM: New York, NY, USA, 2015; pp. 9–14.

- Wen, H.; Ramos Rojas, J.; Dey, A.K. Serendipity: Finger Gesture Recognition Using an Off-the-Shelf Smartwatch. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; pp. 3847–3851.

- Seneviratne, S.; Hu, Y.; Nguyen, T.; Lan, G.; Khalifa, S.; Thilakarathna, K.; Hassan, M.; Seneviratne, A. A survey of wearable devices and challenges. IEEE Commun. Surv. Tutor. 2017, 19, 2573–2620.

- TinyCircuits. Product Page. Available online: https://tinycircuits.com (accessed on 24 February 2021).

- Kopetz, H. Real-Time Systems: Design Principles for Distributed Embedded Applications; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2011.

- Shin, K.G.; Ramanathan, P. Real-time computing: A new discipline of computer science and engineering. Proc. IEEE 1994, 82, 6–24.

- Gheran, B.F.; Vanderdonckt, J.; Vatavu, R.D. Gestures for Smart Rings: Empirical Results, Insights, and Design Implications. In Proceedings of the 2018 Designing Interactive Systems Conference; ACM: New York, NY, USA, 2018; pp. 623–635.

- Gheran, B.F.; Vatavu, R.D.; Vanderdonckt, J. Ring x2: Designing Gestures for Smart Rings Using Temporal Calculus. In Proceedings of the 2018 ACM Conference Companion Publication on Designing Interactive Systems; ACM: New York, NY, USA, 2018; pp. 117–122.

- Kodratoff, Y. Introduction to Machine Learning; Elsevier: Amsterdam, The Netherlands, 2014.

- Kozachenko, L.F.; Leonenko, N.N. Sample estimate of the entropy of a random vector. Probl. Peredachi Inf. 1987, 23, 9–16.

- Kim, M.; Cho, J.; Lee, S.; Jung, Y. IMU sensor-based hand gesture recognition for human-machine interfaces. Sensors 2019, 19, 3827.

- Liaw, A.; Wiener, M. Classification and Regression by randomForest. R News 2002, 2, 18–22.

- Burges, C.J. A Tutorial on Support Vector Machines for Pattern Recognition. Data Min. Knowl. Discov. 1998, 167, 121–167.

- Cunningham, P.; Delany, S.J. k-Nearest Neighbour Classifiers. Mul. Classif. Syst. 2007, 34, 1–17.

- Rish, I. An empirical study of the naive Bayes classifier. In Proceedings of the International Joint Conferences on Artificial Intelligence 2001 Workshop on Empirical Methods in Artificial Intelligence, Seattle, WA, USA, 4–10 August 2001; Volume 3, pp. 41–46.

| Publication | Mounting Location | No. of Gestures | No. of Samples | Sensors | Model | Accuracy |
|---|---|---|---|---|---|---|
| Roshandel et al. [11] | Finger | 9 | 120 | A,G | MLP | 97.80% |
| Xie et al. [12] | Finger | 8 | 70 | A | SM | 98.90% |
| Jing et al. [13] | Finger | 12 | 100 | A | DT | 86.90% |
| Zhu et al. [14] | Finger | 5 | 150 | A,G | HMM | 82.30% |
| Mace et al. [15] | Wrist | 4 | 25 | A | DTW | 95.00% |
| Porzi et al. [9] | Wrist | 8 | 225 | A | SVM | 93.33% |
| Xu et al. [16] | Wrist | 14 | 10 | A,G | NB | 98.57% |
| Wen et al. [17] | Wrist | 5 | 800 | A,G | KNN | 87.00% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kurz, M.; Gstoettner, R.; Sonnleitner, E. Smart Rings vs. Smartwatches: Utilizing Motion Sensors for Gesture Recognition. Appl. Sci. 2021, 11, 2015. https://doi.org/10.3390/app11052015